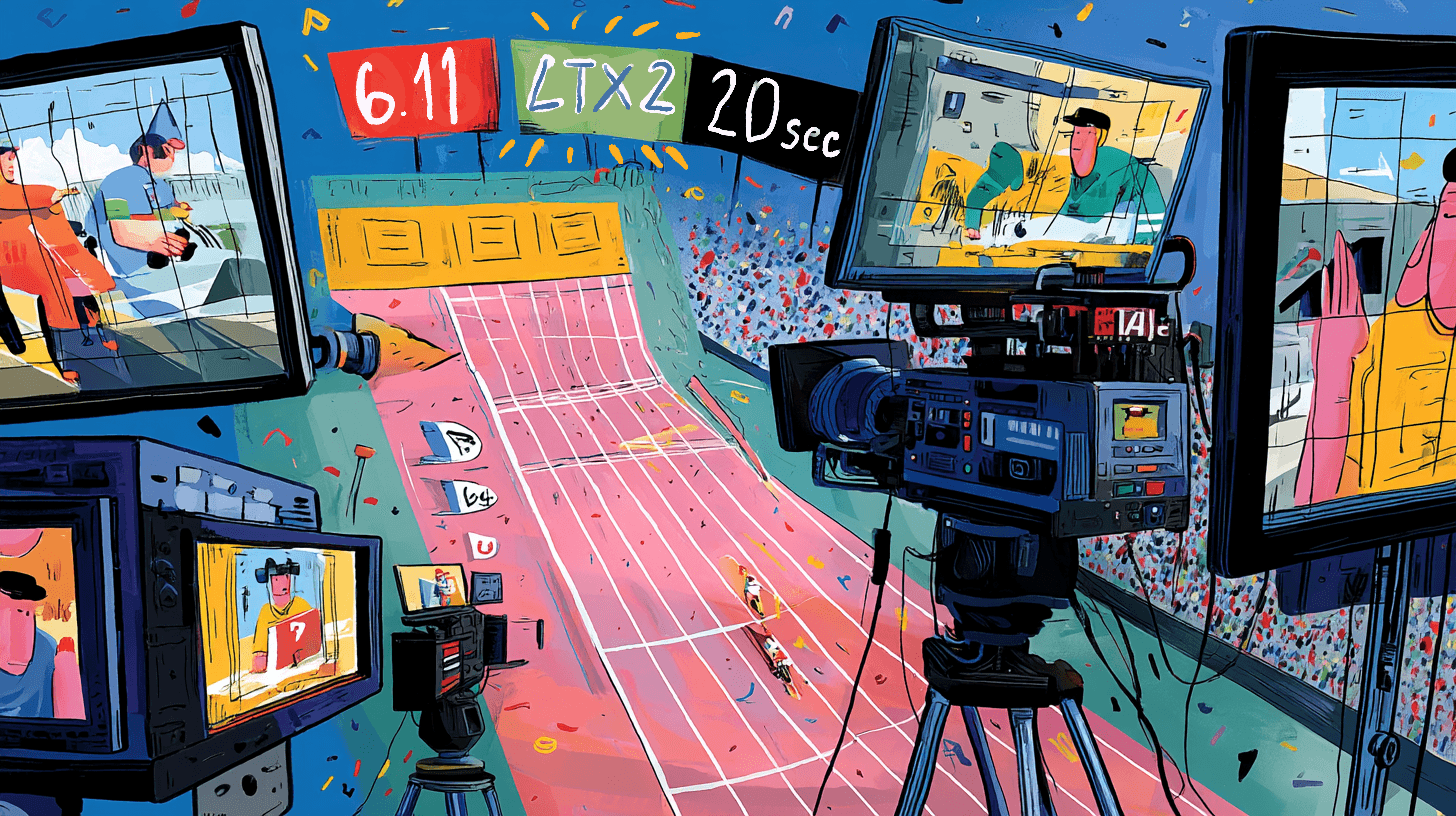

LTX‑2 reaches #3 in Image‑to‑Video and #4 in Text‑to‑Video; 20‑second runs stay smooth


Executive Summary

Lightricks’ LTX‑2 has climbed to #3 in Image‑to‑Video and #4 in Text‑to‑Video on the Artificial Analysis leaderboard, and creators are backing the ranking with real clips. A 20‑second continuous take in Fast mode holds motion and texture without jitter, which is exactly what filmmakers need for single‑take scenes and stylized sequences.

What sets this apart: it is not a lab promo reel. Testers are sharing unpolished, production‑oriented attempts, and the team has published a practical prompting guide that details shot setup and phrasing so you can steer the camera language without rewriting scenes. LTX‑2 Pro has also landed on Replicate, which means you can queue side‑by‑side runs and check motion consistency, detail retention, and style carryover without extra setup. If you have been torn between the two market leaders, this looks like a credible third option worth a weekend trial.

Tactical tip: pair LTX‑2 runs with a one‑pass upscaler such as SeedVR2 on Replicate to get clean 4K masters once your takes are locked. Popcorn optional.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

LTX‑2 storms the video leaderboards

LTX‑2 breaks into the global top tier (#3 I2V, #4 T2V). Creators report smooth motion and 4K/50fps/20s outputs, a signal that indie teams can produce studio‑grade shots without mega‑lab budgets.

Cross‑account story today: LTX‑2 climbed to #3 Image‑to‑Video and #4 Text‑to‑Video on Artificial Analysis, with creators sharing smooth, production‑leaning tests. This section focuses on rankings, real‑world clips, and why it matters for filmmakers.


🎬 LTX‑2 storms the video leaderboards


LTX‑2 hits #3 Image‑to‑Video and #4 Text‑to‑Video on Artificial Analysis

Lightricks’ LTX‑2 climbed the Artificial Analysis boards to #3 for Image‑to‑Video and #4 for Text‑to‑Video, with creators amplifying the results and the team leaning into a small‑but‑effective narrative (leaderboard post, ranking screenshot, underdog thread). Following up on the earlier prompting guide highlights, the team also shared a concise how‑to for shot setup and phrasing (prompting guide).

• Image‑to‑Video: #3 position on Video Arena’s chart (leaderboard post).

• Text‑to‑Video: #4 position among flagship models, close behind the leaders (leaderboard post).

For filmmakers, this signals a credible third option alongside the usual giants. The takeaway is simple: test your go‑to prompts against LTX‑2 now and see if the motion‑to‑detail ratio holds up for your pipeline (underdog thread).

Creators push LTX‑2 with 20‑second shots and smooth detail

Real‑world clips keep landing: a 20‑second continuous piece from LTX‑2 Fast shows stable motion and atmosphere, and multiple testers report smoothness with fine detail preserved in action (20‑second clip, creator test, creator follow‑up, music video test). One tester even shares a quick giggle‑worthy result while pointing to an easy way to try the model (replicate link).

These aren’t lab picks. They’re the kind of unpolished tries you’d expect from a busy team validating a new model against real scenes. If you shoot stylized pieces or want longer, single‑take motion, this is worth an afternoon bake‑off against your current stack.

LTX‑2 Pro lands on Replicate for quick trials

You can now kick the tires on LTX‑2 Pro via Replicate, making it trivial to queue tests without local setup or an internal server sprint (replicate link). That lowers the friction for teams who just want to check motion consistency, texture retention, or style carryover on real storyboards before investing in deeper integration.

🌀 One‑click character swap, dubbing, and backgrounds

Higgsfield Recast puts full‑body replacement, gesture fidelity, voice cloning, multi‑language dubbing, and background swaps into a single click—practical for creators scaling persona‑driven video. Excludes LTX leaderboard (see feature).

Higgsfield launches Recast: 1‑click character swap, voice cloning and multi‑language dubbing

Higgsfield unveiled Recast, a creator‑facing tool that turns a single performance into many personas with 1‑click full‑body replacement, gesture‑faithful motion, voice cloning, multilingual dubbing, and background swaps (launch thread). A time‑boxed promo offers 211 free credits for early users, with the app already live for sign‑ups and generation (free credits call, Recast app).

- Record once, become any character: 30+ presets from dinosaurs to anime, plus background transformation for quick scene changes (presets note).

- Pitch to creators: claims of “perfect movement replication” and a “10×” content income angle target persona‑driven channels, dubbing, and globalization workflows (launch thread).

- Access: Public web app with guided flow for character selection, voice cloning, language pick, and background edits, positioned for short‑form and ads use cases (Recast app).

🧩 Blueprinted pipelines and node workspaces

Today’s workflow news centers on Leonardo’s ready‑made Blueprints, Krea’s all‑in‑one Nodes (codes circulating), and Runware’s Start→End Frame timelapse chain—plus Seedream 4.0’s story‑driven consistency. Excludes LTX rankings (feature).

Leonardo launches Blueprints with 75% off; cross‑style identity holds in tests

Leonardo rolled out Blueprints, ready‑made creative workflows that bundle model choice, prompts, and tuned settings, with a limited 75% token discount and an API plus community sharing on the way (Launch thread). You can explore the catalog now (Blueprints page). A creator stress‑tested identity consistency by running the same character through the Urban Glare, Brutalist B&W, and Dreamy Polaroid Blueprints; the face stayed recognizable while the look changed (Creator demo).

For teams, this reduces prompt drift and “which model/settings” guesswork. Start with a Blueprint that matches the deliverable, then iterate style without breaking continuity.

Krea Nodes debuts an all‑in‑one node workspace; invite codes circulate

Krea introduced Nodes, a single interface that brings its tools into a node‑based canvas so you can compose full pipelines without hopping apps (Launch teaser). Early access is rolling out and invite codes have appeared in replies, hinting at a growing test wave (Invite codes). A shared screenshot shows the node graph UI with style controls and model selection inline (UI preview).

One note for builders: template workflows are already surfacing from creators, so expect a gallery of reusable graphs as access widens (Template mention).

Runware chains Seedream→Riverflow→Seedance to timelapse a scene from day to night

Runware demonstrated a Start→End Frame pipeline: generate a starting image in Seedream 4.0, switch it to daytime with Riverflow 1.1, then animate the transition in Seedance 1.0 Pro using a fixed‑camera timelapse prompt (Workflow thread). They shared exact steps and the "Static camera, timelapse from day to night" prompt, plus a link to the featured models for replication (Step details, Runware models).

This pattern is practical for product shots and establishing shots: lock composition, define start and end looks, then let the video model interpolate the arc.

Seedream 4.0 spotlights story‑driven sequences that keep one protagonist consistent

BytePlus highlighted Seedream 4.0’s story‑driven image generation that maintains a single character’s identity across multiple scenes, which is useful for campaigns, mini‑comics, and brand narratives where continuity matters (Feature brief).

If your pipeline juggles several tools, this lets you anchor on one protagonist and vary environments and moods without constant re‑prompting or ID loss.

🎥 Prompted cinematography and camera moves

Cinematography controls move mainstream: creators dissect Veo‑3.1 Camera Control with shot‑by‑shot prompts and lens specs for dynamic sequences. Excludes any LTX leaderboard content (covered as the feature).

Full creator test puts Veo‑3.1 Camera Control through its paces

A new breakdown runs Veo‑3.1’s Camera Control end to end, showing how shot‑by‑shot prompts translate into directed moves and timing in real footage (Video deep dive). The demo pairs on‑screen prompts with results so you can see how phrasing changes the move, tempo, and framing. It follows up on the earlier Timestamp prompting item, which outlined the control scheme and cue structure.

For teams storyboarding social clips and ads, this is the missing link: you can iterate on the exact camera behavior without rewriting the whole scene. Watch the chaptered walkthrough for practical prompt patterns and failure modes, then adapt to your next shot list (YouTube breakdown).

Gemini/Veo prompt spells out 8‑sec chase with start–mid–end camera cues

A creator shared a detailed Veo‑3.1 Fast prompt for an 8‑second mountain‑road overtake, specifying camera beats: start as a low bumper‑tracking shot, mid as a rapid pull‑out with a left arc to pass, and end as a forward lock‑on ahead of the new lead car (Camera prompt). It also locks in an 85mm look, shallow DoF, golden‑hour volumetrics, and strong radial motion blur to keep the cars sharp against speed lines.

The point is: you can encode a mini shot list inside the prompt and get consistent motion language. Use this structure to iterate action beats (start/mid/end), then swap lenses, angles, and exposure to taste.
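One way to make the start/mid/end structure reusable is to keep the beats and the look as data and assemble the prompt from them. The sketch below is only an illustration: the `CameraBeat` and `ShotSpec` classes, the `build_prompt` helper, and the exact sentence template are hypothetical and are not taken from the creator's prompt or from any Veo tooling.

```python
from dataclasses import dataclass, replace


@dataclass
class CameraBeat:
    """One timed camera instruction inside a single shot (hypothetical type)."""
    label: str  # e.g. "start", "mid", "end"
    move: str   # plain-language description of the camera behavior


@dataclass
class ShotSpec:
    """Hypothetical container for a shot list encoded in one prompt."""
    subject: str
    duration_s: int
    beats: list[CameraBeat]
    look: list[str]  # lens, depth of field, lighting, blur, and so on


def build_prompt(shot: ShotSpec) -> str:
    """Join subject, ordered camera beats, and look settings into one prompt."""
    beat_text = " ".join(f"{b.label.capitalize()}: {b.move}." for b in shot.beats)
    look_text = ", ".join(shot.look)
    return f"{shot.duration_s}-second shot of {shot.subject}. {beat_text} Look: {look_text}."


# Beats and look values paraphrase the chase prompt described above.
chase = ShotSpec(
    subject="a mountain-road overtake",
    duration_s=8,
    beats=[
        CameraBeat("start", "low bumper-tracking shot"),
        CameraBeat("mid", "rapid pull-out with a left arc to pass"),
        CameraBeat("end", "forward lock-on ahead of the new lead car"),
    ],
    look=["85mm lens", "shallow depth of field", "golden-hour volumetrics",
          "strong radial motion blur"],
)

# Swap only the look while keeping the same action beats.
wide_variant = replace(chase, look=["24mm lens", "deep focus", "overcast light"])

print(build_prompt(chase))
print(build_prompt(wide_variant))
```

Keeping the beats separate from the look makes it cheap to hold the action constant while testing lenses, angles, and exposure one change at a time.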

📈 Reasoning models and leaks: Kimi K2, GPT‑5.1 hints

Mostly benchmarks and access notes relevant to creative tooling: Kimi K2 Thinking posts strong agentic scores, appears in Kimi Chat UI and anycoder; separate code strings hint at GPT‑5.1 Thinking. No overlap with the feature.

Kimi K2 Thinking hits 44.9% HLE; 256K context and 300 tool calls

Moonshot AI’s K2 Thinking model is now out with strong agentic scores: 44.9% on Humanity’s Last Exam and 60.2% on BrowseComp, plus a 256K context window and support for ~200–300 sequential tool calls. That’s meaningful for research, coding, and multi‑step creative tasks where persistence matters (benchmarks chart). Following up on the earlier parser hint, this is the first broad, quantified look at K2’s capabilities in the wild.

Community threads reiterate the same numbers and emphasize reasoning, search, and coding wins versus top closed models, with some posts calling out open availability of the weights (benchmarks recap). For creative teams testing long chains (story research, shot lists, build scripts), those tool‑call limits and context size are the practical knobs to watch.
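To make the tool‑call limit concrete, here is a minimal sketch of a chain runner that stops at a fixed call budget. It does not call Kimi or any real API: `run_chain`, `shot_list_planner`, and the budget constant are hypothetical names, and the planner is a stand‑in for whatever decides the next tool call in your own stack.

```python
from typing import Callable, Optional

# Conservative end of the ~200-300 sequential tool calls quoted above.
MAX_TOOL_CALLS = 200


def run_chain(
    next_step: Callable[[list[str]], Optional[str]],
    max_calls: int = MAX_TOOL_CALLS,
) -> tuple[list[str], bool]:
    """Run steps until the planner returns None or the call budget is spent.

    Returns the collected results and a flag that is True when the planner
    signalled completion and False when the budget cut the chain off.
    """
    results: list[str] = []
    for _ in range(max_calls):
        step = next_step(results)
        if step is None:
            return results, True
        results.append(step)
    return results, False


def shot_list_planner(done: list[str]) -> Optional[str]:
    """Hypothetical planner: one 'tool call' per shot until five shots exist."""
    return f"shot {len(done) + 1}" if len(done) < 5 else None


full, finished = run_chain(shot_list_planner)
short, finished_short = run_chain(shot_list_planner, max_calls=3)
print(len(full), finished)
print(len(short), finished_short)
```

Returning the completion flag alongside the results lets an eval set count how often a long task runs out of budget rather than finishing on its own.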

Kimi Chat adds 'Thinking' mode and K2 selector for end users

Kimi Chat now exposes a visible “Thinking” toggle and a K2 model selector in the chat UI, signaling end‑user access to K2’s longer, step‑by‑step reasoning mode without extra setup (UI screenshot). For creatives, that means you can flip into deliberate mode when a brief or shot plan needs more internal steps, then switch back for faster replies.

The UI hint pairs cleanly with the benchmarked tool‑calling behavior, so expect slower but more thorough chains when the toggle is on; keep it off for quicker ideation.

ChatGPT code references 'gpt‑5‑1‑thinking'; community expects imminent tests

New UI strings referencing “gpt‑5‑1‑thinking” appeared in ChatGPT code, hinting at a forthcoming deliberate mode variant and sparking timing chatter around Gemini 3 (code string). Another snippet shows both “gpt‑5‑thinking” and “gpt‑5‑1‑thinking,” suggesting a family of deliberate models rather than a one‑off (additional snippet).

If confirmed, expect longer internal chains by default and potentially higher tool‑use limits. Plan a quick eval set for briefs, research, and storyboard tasks to see if the thinking effort slider yields fewer rewrites.

anycoder adds Kimi K2 Thinking to its model menu for coding flows

Kimi K2 Thinking now shows up as a selectable model in anycoder, making it easier to test K2 on repo edits, small agents, and codegen tasks alongside your usual stack (model picker). The anycoder space is live for hands‑on trials today (Hugging Face space).

If you’re iterating multi‑file changes or longer reasoning passes on briefs, this is a low‑friction way to compare K2’s “thinking” behavior against your current default.

🛰️ Assistants on Android and smarter multi‑step help

Perplexity Comet rolls to Android beta while its Assistant adds persistence and permissioned browser actions—useful for creative research and task chains. Separate from RAG/File Search and LTX feature.

Comet Assistant adds permissioned browsing and claims 23% better multi‑step

Perplexity says its Assistant now persists better on complex, multi‑step tasks (+23% in internal tests), can juggle multiple tabs, and always asks for user approval before any browser action. These are useful guardrails for creative workflows that fetch, compare, and compile references at scale (Feature brief).

Perplexity Comet hits Android beta; creators start testing the assistant

Perplexity’s Comet app is appearing for Android beta testers, with onboarding screens circulating and first impressions landing today (Android beta screen, Beta rollout note). Early users report the assistant mode feels fast, responsive, and accurate for daily use, which matters for on‑the‑go research, shot lists, and reference gathering (Early feedback).

🧱 3D from anything: heads, worlds, and kinematics

3D news skewed practical: Tencent’s WorldMirror code for video→3D worlds and ANY→ANY outputs; PercHead single‑image 3D‑consistent heads; Kinematify paper on high‑DoF articulated object synthesis.

Tencent releases Hunyuan World 1.1 training code for video‑to‑3D worlds

Tencent Hunyuan published the training stack for World 1.1 (WorldMirror), pitching “your video to 3D worlds in 1 second” and an ANY→ANY pipeline that targets 3DGS, depth, cameras, normals, and point clouds. That’s a practical on‑ramp for creators who want fast scene capture from existing footage and export to common 3D intermediates. See the announcement for demo and code pointers (release note).

Kinematify proposes open‑vocabulary, high‑DoF articulated 3D with contact‑aware joints

A new paper, Kinematify, outlines a pipeline for inferring articulated structures from images/text in an open‑vocabulary way, combining part‑aware 3D reasoning with distance‑weighted, contact‑aware joint inference and MCTS‑driven kinematic search. For rigging props, robots, or complex gadgets from sparse inputs, this could cut manual setup time dramatically. An overview and diagram are available (paper overview), with the full write‑up on Hugging Face (paper page).

🎨 Ready‑to‑use styles, srefs, and edit tricks

A packed day of shareable looks: stencil‑style prompt packs, Midjourney V7 recipes, a children’s‑book sref, 3D streetwear character looks, and a Qwen LoRA test for camera‑angle control on memes.

Stencil‑style prompt pack lands with mono palette + accent color recipe

Azed_ai shared a ready‑to‑use stencil prompt that standardizes a bold street‑art look: monochrome base (black/white) plus one accent color, high contrast, sharp shadows, and a minimalist backdrop (Prompt recipe).

It’s plug‑and‑play for posters, stickers, or covers, and the examples span portraits and props so you can swap subjects without losing the graphic look.

Children’s‑book illustration sref (--sref 358265320) for warm folk aesthetics

Artedeingenio published a Midjourney style reference that yields hand‑made, childlike drawings with flat colors and a cozy, contemporary‑folk feel; it’s pitched as akin to modern illustrators while prioritizing charm over realism (Style reference).

Use it to keep a consistent picture‑book voice across characters, animals, and scenes with a limited palette and naive line.

Qwen Image Edit LoRA shows controllable camera angles on the meme classic

Cfryant demoed the Qwen Image Edit Camera Angle Control LoRA by re‑framing the “distracted boyfriend” meme into overhead and alternate perspectives while preserving identity and scene beats (Camera LoRA demo).

This is a handy edit trick for concept boards and continuity—iterate camera viewpoints from one still instead of rebuilding the scene.

3D streetwear character look: bold color blocks, chunky sneakers, toy‑like sheen

Azed_ai surfaced a 3D character style set with color‑blocked jackets, mohawks, oversized sneakers, and clean plasticity that reads well as a mascot or collectible character line (3D style set).

If you’re building brand characters or social stickers, this pack gives you a cohesive, high‑saturation 3D look you can vary by outfit and pose.

📂 Turnkey File Search RAG for teams

Google DeepMind’s Gemini API File Search lands as a managed RAG layer—automatic chunking, embeddings, citations, and multi‑format support—useful for creative briefs, scripts, and asset libraries.

Gemini API File Search launches managed RAG for creative teams

Google DeepMind rolled out File Search inside the Gemini API: a fully managed RAG layer that handles storage, chunking, embeddings, and retrieval automatically and returns inline citations. Pricing is simple: $0.15 per 1M tokens for the initial embeddings, with storage and query‑time embeddings free (feature recap).

For content teams, this means you can index briefs, scripts, pitch decks, call sheets, and brand guides across PDF, DOCX, TXT, JSON, and code, then ask grounded questions with clickable citations. Early users like Phaser Studio’s Beam report tasks dropping from hours to seconds, and it’s available now in Google AI Studio with demos and docs to try today (feature recap).

• Batch your asset library into File Search and validate prompt answers via citations before rollout.

• Start with low‑risk corpuses (style guides, brand rules) to gauge retrieval quality and token burn.

• Track costs by pre‑embedding large collections once, then lean on free query‑time retrieval as usage grows.
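For cost tracking, the quoted price reduces to simple arithmetic. The sketch below uses only the $0.15 per 1M tokens figure stated above; the library names and token counts are made‑up illustrative values, and it does not call the Gemini API.

```python
# USD per 1M tokens for initial embeddings, as quoted in the text above.
INDEXING_PRICE_PER_MILLION_TOKENS = 0.15


def estimate_indexing_cost(total_tokens: int) -> float:
    """One-time embedding cost in USD for indexing `total_tokens` tokens."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * INDEXING_PRICE_PER_MILLION_TOKENS


# Hypothetical asset library: token counts are illustrative, not measured.
library = {
    "brand_guides": 400_000,
    "scripts": 2_000_000,
    "pitch_decks": 1_600_000,
}

for name, tokens in library.items():
    print(f"{name}: ${estimate_indexing_cost(tokens):.2f}")

total = estimate_indexing_cost(sum(library.values()))
print(f"total: ${total:.2f}")
```

Under these assumed counts the whole 4M‑token library costs about $0.60 to index once, which is why starting with a small, low‑risk corpus is mostly about checking retrieval quality rather than controlling spend.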

🔧 Sharper finals: one‑pass video/image restoration

Post tools today center on Replicate’s SeedVR2: fast, one‑pass restoration for upscaling soft AI clips to clean 4K with temporal consistency.

SeedVR2 lands on Replicate: one‑pass 4K video/image restoration

Replicate now hosts ByteDance‑Seed’s SeedVR2‑3B, a one‑pass restoration model that sharpens soft clips and images up to clean 4K with strong temporal consistency, ready to fix AI‑generated footage in seconds (replicate launch). The model page outlines single‑shot diffusion, video and image inputs, and adaptive detail recovery (model card). Following up on the earlier fal upscaler item (4K/60 fps pricing), this adds a no‑pipeline, upload‑and‑go option that’s ideal for polishing final edits without multi‑step passes.

🛍️ Voice agents for shopping and showcases

ElevenLabs pairs agents with Shopify Storefront MCP for conversational commerce—relevant to brand storytellers and marketers building voice‑led storefronts.

ElevenLabs ships voice shopping agent with Shopify Storefront MCP, code on GitHub

ElevenLabs is showing a working voice-driven shopping flow that talks to a Shopify storefront via Storefront MCP. It’s a full conversational agent that can browse, describe, and help choose products in real time (Agents demo).

The team also published a public repo you can clone. It includes a Next.js UI, the agent pieces, and an MCP UI server/client so you can wire your own catalog and intents without rebuilding plumbing (GitHub repo). The hosted demo is live if you want to feel the interaction before integrating it into your stack (Storefront demo).

So what? Voice-led storefronts move faster than tapping through menus. This lets brand teams and marketers prototype narrative shopping experiences (guided discovery, comparison, upsells) that feel like a smart retail associate. It’s also a cleaner way to test scripted "launch day" flows or seasonal campaigns where tone and pacing matter.

- Clone the repo and point it at a Shopify dev store. Start with a narrow collection to validate intent mapping.

- Define structured intents (e.g., find, compare, add-to-cart). Keep tool calls deterministic and log every action.

- Script your brand voice with short response templates first, then layer richer descriptions and cross-sells.

- Localize early: test two voices and two languages across the same flow to catch content gaps.
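The structured‑intent step in the checklist above can be sketched as a small dispatch table with logging. This is an illustration under stated assumptions, not the ElevenLabs repo's code: the in‑memory `CATALOG`, the handler functions, and `handle` are all hypothetical stand‑ins for real Storefront calls.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("storefront-agent")

# Hypothetical in-memory catalog standing in for a real storefront.
CATALOG = {
    "tee-black": {"title": "Black Tee", "price": 25.0},
    "tee-white": {"title": "White Tee", "price": 22.0},
}
cart: list[str] = []


def find(query: str) -> list[str]:
    """Return sorted product ids whose title contains the query."""
    q = query.lower()
    return sorted(pid for pid, p in CATALOG.items() if q in p["title"].lower())


def compare(a: str, b: str) -> str:
    """Return the id of the cheaper product; ties go to the first argument."""
    return a if CATALOG[a]["price"] <= CATALOG[b]["price"] else b


def add_to_cart(product_id: str) -> list[str]:
    """Append a known product id to the cart and return a copy of the cart."""
    if product_id not in CATALOG:
        raise KeyError(product_id)
    cart.append(product_id)
    return list(cart)


# Fixed mapping from intent name to handler keeps tool calls deterministic.
INTENTS: dict[str, Callable] = {
    "find": find,
    "compare": compare,
    "add-to-cart": add_to_cart,
}


def handle(intent: str, **params):
    """Route one structured intent to its handler and log the action."""
    if intent not in INTENTS:
        log.warning("rejected unknown intent: %s", intent)
        raise ValueError(f"unknown intent: {intent}")
    result = INTENTS[intent](**params)
    log.info("intent=%s params=%s result=%s", intent, params, result)
    return result


matches = handle("find", query="tee")
cheaper = handle("compare", a=matches[0], b=matches[1])
handle("add-to-cart", product_id=cheaper)
```

Rejecting anything outside the fixed intent table, and logging every routed call, gives you the transcript trail needed for QA before widening the agent's permissions.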

Deployment impact: You’ll need API keys and a private app on Shopify. Keep the agent’s permissions scoped to product, variant, and cart endpoints; avoid write access to discounts or inventory until you’ve proven guardrails. Use staged environments and capture transcripts for QA.

Design notes: Short sentences and quick confirmations reduce user fatigue. Ask before cart actions. Summaries after comparisons help close. Treat silence as a signal—offer to send a summary link. If your catalog photography varies, pre-generate canonical descriptions so the agent doesn’t waffle.

This signals a practical turn for voice commerce: not a concept video, but code you can run, adapt, and test with real shoppers. If you sell complex SKUs or bundles, this is worth a weekend spike.

🏆 Challenges, screenings, and community reels

Community momentum: Comfy’s cloud‑themed video challenge, OpenArt MVA clips on Times Square, Runware’s Riverflow winner, and WAN 2.5’s multisensory highlight reel.

OpenArt MVA entries hit Times Square screens; submissions open until Nov 16

OpenArt says creator submissions for its Music Video Awards are now playing on Times Square displays, while inviting new entries ahead of the Nov 16 cutoff (Times Square clip). This follows the earlier $50k prize item, a 12‑day reminder about the $50,000 pool and active call for entries; submit via the program hub (submission page).

Comfy Challenge #9 opens: “Cloudcrafted” theme, $100 prize and merch

ComfyUI launched Challenge #9 themed around clouds, with $100 to the top entry, special merch for the Top 3, and one‑month Comfy Cloud access for 20 random participants; submissions close Nov 10 at 7 PM PT (challenge brief). Full details, rules and the submission form are on the official page (challenge page).

WAN 2.5 “When Image Finds Its Sound” highlight reel spotlights multisensory storytelling

Alibaba’s WAN team cut a showcase reel from its release event, featuring creators demonstrating WAN 2.5‑Preview’s image‑to‑sound storytelling and multi‑modal control, targeted at filmmakers and animators testing audio‑visual sync workflows (highlight reel). Try the preview from the official site (WAN homepage).

Runware names Riverflow competition winner and teases the next round

Runware congratulated the latest Riverflow contest winner, noting strong entries and hinting the next competition is coming soon. That is a useful signal if you’re building a portfolio with Seedream/Riverflow/Seedance pipelines (winner post).

💼 Creative AI business and strategy moves

Operational and strategy signals affecting creative stacks: fal acquires Remade AI; Microsoft forms a human‑centered SI team; OpenAI clarifies revenue/capex posture; valuation debate heats up.

Report: AWS and OpenAI agree to a $38B multi‑year AI infrastructure deal

A new claim suggests AWS and OpenAI have signed a $38B multi‑year partnership for AI infrastructure (Deal claim), following up on the earlier item about 500k chips added for agent workloads. If confirmed, this would materially expand model capacity and reduce wait times for high‑throughput creative tasks like long‑form video gen and batch renders.

Sam Altman: OpenAI projects >$20B 2025 run rate, no government datacenter guarantees

Sam Altman said OpenAI is not seeking government datacenter guarantees and clarified planned spend tied to research horizons and compute shortages, while projecting 2025 annualized revenue above $20B and noting $1.4T infrastructure commitments over eight years (Altman revenue note). For creative stacks built on GPT‑class models, the message is more supply coming but without public backstops, meaning market pricing and partner capacity will drive availability.

fal acquires Remade AI to accelerate generative media infrastructure

fal announced it has acquired YC‑backed Remade AI, a team known for open video LoRAs and an intelligent creative canvas, to speed up its developer‑focused infrastructure for generative media (team welcome). The company says the team will help build the “next generation of creative infrastructure” for studios and app builders, with more tooling for video creators and developers available via its platform (blog post).

For creative teams, this signals faster iteration on production‑ready video tooling and a likely expansion of LoRA ecosystems inside fal‑hosted workflows.

OpenAI’s ~$157B valuation stokes “too big to fail” worries amid Microsoft/Nvidia ties

Commentary flagged that OpenAI’s ~$157B valuation, with deep Microsoft and Nvidia interlocks, raises “too big to fail” risk even as the company remains unprofitable and targets ~$11.6B revenue in 2025 (Valuation concerns). If you ship on their APIs, budget for potential price changes or regional throttles tied to partner capacity, and keep a plan B for critical creative pipelines.

Microsoft AI forms “humanist superintelligence” team for bounded, human‑centered apps

Microsoft AI outlined a new team focused on “humanist superintelligence,” aiming at controllable, human‑centered systems for companionship, healthcare support, and clean energy, while emphasizing it’s not in an AGI race and can pursue work independently of OpenAI (team plan). The framing puts constraints and safety up front, which matters for creative tools that will embed persistent agents into daily workflows.

Watch how this translates into product surfaces creatives actually touch—voice companions in editors, safer autonomous assists in design apps, and clearer permissioning for agent actions.
