Qwen3‑Omni 30B open weights – 211 ms latency; 65K‑token reasoning


Executive Summary

Alibaba’s Qwen3‑Omni‑30B lands with open weights, real‑time speech, and crisp latency: 211 ms to first packet, 30‑minute audio understanding, and tool‑calling baked in. It arrives alongside FP8 Qwen3‑Next‑80B variants for faster serving and DeepSeek‑V3.1‑Terminus, whose upgrades nudge agent scores upward with only slight dips elsewhere. Non‑AI but relevant for AI: OpenAI and NVIDIA signed an LOI for 10 GW of systems, with up to $100B NVIDIA investment starting 2H 2026. Why it matters for AI: a 10 GW ramp cuts compute scarcity for GPT‑5+ training and large‑scale inference.

In numbers:

- 30B params; Apache‑2.0 weights; 211 ms first‑packet; 30‑minute audio comprehension

- Reasoning context up to 65,536 tokens; longest chains reach 32,768 tokens

- Multilingual IO: 119 text, 19 speech inputs, 10 speech outputs; tool calling included

- Qwen3‑Next‑80B FP8 variants; 3 runtimes supported: Transformers, vLLM, SGLang

- DeepSeek‑V3.1‑Terminus: BrowseComp 30.0→38.5; Terminal‑bench 31.3→36.7; SWE Verified 66.0→68.4

- SGLang deterministic mode: ~34.35% slowdown; per‑request seeding stabilizes temperature>0 runs

Also:

- Meta ARE+Gaia2: 1,120 tasks across 12 apps; verifiable write actions and deadlines

- OpenAI×NVIDIA LOI: 10 GW phased; analysts model ~$35B orders per $10B invested

Feature Spotlight

Feature: OpenAI × NVIDIA 10 GW buildout

OpenAI and NVIDIA plan a phased 10‑GW, millions‑of‑GPUs build with up to $100B NVIDIA investment—resetting AI compute supply, costs and roadmap co‑design starting 2026.

Non‑AI but relevant for AI: Massive datacenter capacity pact dominates today’s chatter. Multiple posts detail a letter of intent for 10 GW of NVIDIA systems for OpenAI, phased from 2H26, with up to $100B NVIDIA investment and co‑optimization. Excludes model drops and evals covered below.


OpenAI and NVIDIA sign LOI for 10 GW AI datacenters, up to $100B NVIDIA investment

Non‑AI but relevant for AI: OpenAI and NVIDIA announced a letter of intent to deploy at least 10 gigawatts of NVIDIA systems (millions of GPUs) for OpenAI’s next‑gen AI infrastructure, with NVIDIA intending to invest up to $100B as each gigawatt comes online; first phase targets 2H 2026 on the Vera Rubin platform OpenAI blog link, OpenAI blog post, OpenAI president post.

- Co‑optimized stack: OpenAI software and NVIDIA hardware/compilers/interconnects will be aligned for training, inference, and data flows OpenAI blog link.

- Phased rollout: initial 1 GW in 2H 2026, followed by additional sites; complements existing partners (Microsoft, Oracle, SoftBank/Stargate consortium) rather than replaces them OpenAI blog link.

- Scale context: 10 GW is roughly 2× Microsoft’s reported 5 GW “Stargate” plan and implies multiple nation‑scale sites project size, scale context.

- Power reality: commenters peg 10 GW as enough for ~7.5M homes; siting and grid capacity become critical constraints scale equivalence, siting question.

- Not yet final: the announcement is an LOI; terms and timing can change as deployments are secured deal caveat.

Why it matters for AI: Massive 10 GW build lowers compute scarcity for training GPT‑5+ class models and global inference.

NVIDIA’s vendor‑financing play locks in OpenAI GPU demand; 10 GW implies grid‑scale siting

Non‑AI but relevant for AI: Beyond the LOI, NVIDIA is effectively backstopping OpenAI’s compute ramp: WSJ details a vendor‑financing pattern where NVIDIA’s investment reduces capital costs while securing long‑term GPU orders (analysts model ~$35B orders per $10B invested) WSJ analysis. NVIDIA’s stock jumped ~4% on the news, reflecting confidence in multi‑year demand stock chart.

- Mechanism: milestone‑based funding as each 1 GW deploys, tying capital to live capacity; OpenAI gains cheaper access vs. high‑rate “neo‑cloud” financing deal caveat, WSJ analysis.

- Comparables: roughly double prior disclosed builds such as the 5 GW Stargate plan; underscores a shift from spot GPU rental to co‑planned, vertically optimized clusters project size.

- Unit economics: prior guidance pegs 1 GW campus at $50–60B TCO, ~$35B of which is NVIDIA systems—implying huge pull‑through for GPUs/networking Huang unit costs.

- Grid constraints: 10 GW needs multi‑site siting, high‑voltage interconnects, and energy procurement on utility/national scales; execution risk is in power and timelines siting question, scale context.
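The headline figures can be sanity‑checked with back‑of‑envelope arithmetic. The sketch below uses only numbers quoted in this feature (10 GW, ~7.5M homes, $50–60B TCO and ~$35B of NVIDIA systems per GW, ~$35B of orders per $10B invested, up to $100B invested); treating them as scaling linearly across all 10 GW is an assumption, not a disclosed term of the LOI.

```python
# Back-of-envelope check of the quoted figures.
# Assumption: every gigawatt costs the same and the ratios scale linearly.
TOTAL_GW = 10
HOMES_POWERED = 7.5e6             # commenters' "~7.5M homes" equivalence
TCO_PER_GW_USD = (50e9, 60e9)     # prior guidance: $50-60B per 1 GW campus
NVIDIA_SYSTEMS_PER_GW_USD = 35e9  # ~$35B of each GW is NVIDIA systems
ORDERS_PER_INVESTED = 35 / 10     # analysts: ~$35B orders per $10B invested
MAX_INVESTMENT_USD = 100e9        # "up to $100B" NVIDIA investment

# Average draw per home implied by the homes comparison (1 GW = 1e6 kW).
kw_per_home = TOTAL_GW * 1e6 / HOMES_POWERED

# Total build cost range and NVIDIA's share across all 10 GW.
tco_low, tco_high = (TOTAL_GW * c for c in TCO_PER_GW_USD)
nvidia_systems_total = TOTAL_GW * NVIDIA_SYSTEMS_PER_GW_USD

# Orders implied by the analysts' ratio if the full $100B is invested.
implied_orders = MAX_INVESTMENT_USD * ORDERS_PER_INVESTED

print(f"~{kw_per_home:.2f} kW average per home")
print(f"TCO: ${tco_low / 1e9:.0f}B-${tco_high / 1e9:.0f}B")
print(f"NVIDIA systems: ~${nvidia_systems_total / 1e9:.0f}B")
print(f"Implied orders: ~${implied_orders / 1e9:.0f}B")
```

Both routes (per‑GW systems spend and the orders‑per‑dollar ratio) land near $350B of NVIDIA hardware, so the quoted figures are at least internally consistent; real phasing and pricing could move either number.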

Why it matters for AI: Cheaper, locked‑in GPU supply stabilizes model training roadmaps and lowers per‑token inference costs at scale.

🧪 New models: Qwen omni drop + DeepSeek update

Mostly model drops and updates today: Alibaba’s Qwen3‑Omni (30B open weights) unifies text+image+audio+video; FP8 Qwen3‑Next 80B variants; and DeepSeek‑V3.1‑Terminus incremental agent/search gains. Excludes infra feature above.

Qwen3‑Omni 30B open weights unify text, image, audio and video with 211 ms latency

Alibaba open‑sourced Qwen3‑Omni‑30B‑A3B (Instruct, Thinking) plus a low‑hallucination Captioner, bringing end‑to‑end omni‑modality (text, image, audio, video), tool‑calling, and real‑time speech with ~211 ms first‑packet latency and 30‑minute audio understanding release thread. The release follows earlier Transformers PR support for a "talker" path.

- Open components and access: 30B Instruct/Thinking weights on Apache‑2.0; higher‑tier Omni‑Flash available via API and demos release thread, GitHub repo, HF models, HF demo.

- Performance scope: SOTA on 22/36 audio and AV benchmarks; multilingual IO (119 text, 19 speech in, 10 speech out) per the announcement release thread.

- Context and reasoning: Up to 65,536‑token thinking context, longest reasoning chains 32,768 tokens; non‑thinking up to 49,152 tokens overview thread.

- Try it locally or hosted: Instruct weights are ~70 GB before quantization; you can test in the hosted chat as well blog post, chat link.

- API docs and tool‑use: Built‑in tool calling and system‑prompt customization documented for app integration API docs.
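To make the context limits concrete, here is a hypothetical pre‑flight check an app might run before sending a request. The three limits are the ones quoted above; treating each limit as covering prompt plus generated tokens, and counting thinking tokens inside `max_new_tokens`, are assumptions. `fits_budget` is an illustrative helper, not part of any Qwen SDK.

```python
from typing import Optional

# Limits quoted for Qwen3-Omni.
THINKING_CONTEXT = 65_536
MAX_REASONING_CHAIN = 32_768
NON_THINKING_CONTEXT = 49_152


def fits_budget(prompt_tokens: int, max_new_tokens: int,
                thinking_budget: Optional[int] = None) -> bool:
    """Hypothetical check: does a request stay inside the quoted limits?

    Assumes the limit covers prompt + generated tokens, and that thinking
    tokens are counted inside max_new_tokens.
    """
    if thinking_budget is None:  # non-thinking (Instruct-style) request
        return prompt_tokens + max_new_tokens <= NON_THINKING_CONTEXT
    if thinking_budget > MAX_REASONING_CHAIN or thinking_budget > max_new_tokens:
        return False
    return prompt_tokens + max_new_tokens <= THINKING_CONTEXT


print(fits_budget(40_000, 8_000))                           # non-thinking
print(fits_budget(20_000, 40_000, thinking_budget=32_768))  # thinking
```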

DeepSeek‑V3.1‑Terminus cleans language, upgrades Code/Search Agents; modest benchmark gains

DeepSeek shipped V3.1‑Terminus with cleaner multilingual handling (fewer CN/EN mix‑ups, no stray chars) and stronger Code and Search Agents, positioning it as the likely final V3.x before V4 release thread. Benchmarks show small but tangible lifts across agentic suites while keeping base capabilities intact HF page.

- Agentic improvements: BrowseComp 30.0 → 38.5, SimpleQA 93.4 → 96.8, SWE Verified 66.0 → 68.4, Terminal‑bench 31.3 → 36.7; some neutral/slight dips elsewhere (e.g., Codeforces) benchmarks chart.

- Access: Available in the app, web, API; open weights on Hugging Face with updated search agent template and demo code HF page.

- Scope: Same core model structure as V3, focused on stability, language consistency, and reliability under tool use release thread.
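"Modest" is easier to judge once absolute and relative gains sit side by side. The sketch below uses only the four before/after scores quoted above.

```python
# V3.1 -> V3.1-Terminus scores quoted above.
SCORES = {
    "BrowseComp": (30.0, 38.5),
    "SimpleQA": (93.4, 96.8),
    "SWE Verified": (66.0, 68.4),
    "Terminal-bench": (31.3, 36.7),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in points, relative gain in percent), rounded."""
    delta = after - before
    return round(delta, 1), round(100 * delta / before, 1)


for name, (before, after) in SCORES.items():
    pts, rel = gains(before, after)
    print(f"{name}: +{pts} pts ({rel:+}% relative)")
```

In relative terms BrowseComp and Terminal‑bench move most, which fits the release's focus on the Search and Code Agents; SimpleQA and SWE Verified gain only a few percent.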

Qwen3‑Next 80B adds FP8 Instruct/Thinking variants for faster inference

Alibaba released FP8 variants of Qwen3‑Next‑80B‑A3B (Instruct and Thinking), compatible with Transformers, vLLM, and SGLang for lower‑latency, lower‑memory serving release note.

- Availability: Models listed on Hugging Face and ModelScope collections HF collection, ModelScope page.

- Drop‑in serving: FP8 precision aims to accelerate generation without major code changes in common runtimes release note.
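A rough way to see why FP8 matters for serving: weight memory scales with bytes per parameter. The sketch below counts weights only (no KV cache, activations, or FP8 scale factors) and uses 1 GB = 1e9 bytes. The "A3B" suffix indicates ~3B parameters active per token, but all 80B still have to be resident in memory.

```python
# Approximate bytes per parameter for common serving precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_gb(params_billion: float, dtype: str) -> float:
    """Approximate weight memory in GB (weights only, 1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[dtype]


for dtype in ("bf16", "fp8"):
    print(f"Qwen3-Next-80B {dtype}: ~{weight_gb(80, dtype):.0f} GB of weights")
```

Halving weight memory from ~160 GB to ~80 GB frees room for KV cache or lets the model fit on fewer GPUs, which is where most of the latency and memory savings come from.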

🧰 Agentic coding UX, email agents and DSPy tooling

Busy, practitioner‑oriented day: Perplexity ships an autonomous Email Assistant; Cline posts prompt tactics; Cursor teases SDK; RepoPrompt/Factory CLI patterns; DSPy + MLflow tracing guides. Excludes coding evals (covered below).

Perplexity launches autonomous Email Assistant for Gmail and Outlook

Perplexity rolled out an Email Assistant that schedules meetings, drafts replies in your tone, organizes inboxes, and sends calendar invites for Max subscribers on Gmail and Outlook launch thread, scheduling demo, organizing view, product page.

- Adds itself to threads to negotiate times, check availability, and issue invites automatically scheduling demo.

- Auto‑drafts responses that mirror your style; prioritizes emails to focus attention product page, organizing view, product page.

- Available now to Perplexity Max users; supports both Gmail and Outlook accounts launch thread.

Cursor teases SDK and shows contextual command bar, following multi‑agent beta

Cursor is planning an SDK so teams can wire its agent and features into their own workflows, while showcasing a contextual command bar and a more compact UI to fit more context on screen SDK poll, command bar api, compact view. This follows its multi‑agent window beta, which added parallel agent workflows multi‑agent beta.

- SDK ask: developers want programmable access to the agent and features for custom pipelines SDK poll.

- Command bar registers per‑component commands and hotkeys, surfacing the right actions by screen/scope; code shared for reuse command bar api, impl link.

- Quality‑of‑life: compact layout increases visible context; @‑menu can inject branch/metadata into prompts compact view, context insert.
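The per‑component registration pattern behind a contextual command bar can be sketched in a few lines. This is a hypothetical illustration of the general idea (components register commands under a scope; the bar shows only commands for scopes on screen and resolves hotkeys innermost‑first), not Cursor's shared code; every class and method name here is invented.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Command:
    title: str
    hotkey: str
    run: Callable[[], str]


@dataclass
class CommandBar:
    # scope name -> commands registered by the component owning that scope
    registry: dict[str, list[Command]] = field(default_factory=dict)

    def register(self, scope: str, command: Command) -> None:
        self.registry.setdefault(scope, []).append(command)

    def visible(self, active_scopes: list[str]) -> list[str]:
        """Titles of commands for the scopes currently on screen."""
        return [c.title for s in active_scopes for c in self.registry.get(s, [])]

    def dispatch(self, hotkey: str, active_scopes: list[str]) -> Optional[str]:
        """Run the first matching command, searching the innermost scope first."""
        for scope in reversed(active_scopes):
            for cmd in self.registry.get(scope, []):
                if cmd.hotkey == hotkey:
                    return cmd.run()
        return None


bar = CommandBar()
bar.register("global", Command("New chat", "mod+n", lambda: "new-chat"))
bar.register("diff-view", Command("Accept all changes", "mod+enter", lambda: "accept"))
print(bar.visible(["global", "diff-view"]))
print(bar.dispatch("mod+enter", ["global", "diff-view"]))
```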

Cline shares prompt patterns for coding agents and hosts training at Nerdearla

Cline published a concise playbook to turn vague bug reports into precise agent instructions using zero‑shot, one‑shot and chain‑of‑thought prompting, and announced two AI development sessions at Nerdearla prompt guide, full guide, conference post.

- Zero‑shot for common patterns, one‑shot for style/format examples, CoT for long‑horizon fixes techniques thread, zero‑shot example, one‑shot example, cot example, blog post.

- Workshop (Sept 25): prompting, plan/act workflows, human‑in‑the‑loop; talk (Sept 26): decoding complex OSS codebases in VS Code with Cline workshop details, talk details, free registration.
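The three techniques differ mainly in how much structure the prompt carries. The templates below are a hypothetical sketch of that difference applied to a bug report; the wording is illustrative and not taken from Cline's guide.

```python
def zero_shot(bug: str) -> str:
    # Common, well-known patterns: a direct instruction is usually enough.
    return f"Fix the following bug. Follow the project's existing conventions.\n\nBug: {bug}"


def one_shot(bug: str, example_bug: str, example_fix: str) -> str:
    # Style/format matters: show one worked example the agent should imitate.
    return (
        "Here is an example bug report and the fix we want, in our preferred format.\n\n"
        f"Bug: {example_bug}\nFix: {example_fix}\n\n"
        f"Now fix this bug in the same format.\n\nBug: {bug}\nFix:"
    )


def chain_of_thought(bug: str) -> str:
    # Long-horizon fixes: ask for explicit intermediate reasoning before edits.
    return (
        f"Bug: {bug}\n\n"
        "Before editing any code, reason step by step:\n"
        "1. Reproduce the failure and state observed vs expected behavior.\n"
        "2. Locate the files and functions involved.\n"
        "3. Propose a fix and list the tests that should pass afterwards.\n"
        "Then implement the fix."
    )


print(chain_of_thought("Login button does nothing on Safari"))
```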

DSPy adds one‑line MLflow tracing for agent observability

A practical DSPy × MLflow recipe shows how to spin up a local MLflow server and enable instrumentation of agent runs with a single call (mlflow.dspy.autolog()), giving structured traces beyond ad‑hoc printouts how‑to thread.

- Start a local tracking server (mlflow server --host 127.0.0.1 --port 8080), set the URI, then call mlflow.dspy.autolog() in your DSPy app guide link, DSPy tutorial.

- Compared to inspect_history, MLflow logs multiple questions, metadata, and non‑LLM steps for real‑world debugging at scale guide link.

- Works with notebooks (DataSpell, PyCharm) and managed tracking servers; MLflow quickstart linked for setup details mlflow quickstart, MLflow quickstart.

Add a mandatory 'Verification' pass to agentic coding workflows

Community playbooks emphasize adding a fourth step—Verification—after research, planning, and implementation, forcing agents to run tests and re‑enter the loop if anything fails workflow card.

- Verification requires tool‑run checks, test execution, and bug fixes before marking tasks complete workflow card.

- Pattern is being codified in AGENTS.md/CLAUDE.md playbooks and shared for reuse across agents author reply, playbooks commit.
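The four‑step loop can be expressed as a small control‑flow skeleton. This is a hypothetical sketch: the callables stand in for whatever research, planning, implementation, and test tooling an agent actually uses, and `max_rounds` is an illustrative cap. The key property is that completion is decided by tool‑run tests, with failures fed back into the next implementation round.

```python
from typing import Callable


def run_task(
    research: Callable[[], str],
    plan: Callable[[str], str],
    implement: Callable[[str, list[str]], None],
    run_tests: Callable[[], list[str]],
    max_rounds: int = 3,
) -> bool:
    """Research -> plan -> implement -> verify; loop back while tests fail."""
    context = research()
    steps = plan(context)
    failures: list[str] = []
    for _ in range(max_rounds):
        implement(steps, failures)  # previous failures go back to the agent
        failures = run_tests()      # verification: tool-run checks, not self-report
        if not failures:
            return True             # only now may the task be marked complete
    return False                    # give up and escalate to a human


# Usage with stand-ins: the first test run fails, the second passes.
attempts = []
results = iter([["test_login failed"], []])
ok = run_task(
    research=lambda: "repo notes",
    plan=lambda ctx: "edit auth.py",
    implement=lambda steps, failures: attempts.append(list(failures)),
    run_tests=lambda: next(results),
)
print(ok, attempts)
```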

🎨 Creative media: stronger editing and animation control

Mostly image/video tooling: Qwen‑Image‑Edit‑2509 adds multi‑image editing and ControlNet with identity consistency; Wan Animate eye‑gaze fix in Comfy; Seedream 4 portrait showcases; consumer voice‑guided edit apps. Excludes Qwen3‑Omni core which is in model releases.

Qwen‑Image‑Edit‑2509 adds multi‑image editing, ControlNet and identity‑safe text/face/product edits

Alibaba’s latest Qwen‑Image‑Edit‑2509 brings multi‑image composition and built‑in ControlNet while preserving identity for faces, products, and even on‑image text. It’s open‑sourced with demos already live. feature brief

- Multi‑image workflows (person + product/scene/person) with consistency across poses and styles. Try it in the hosted demo. demo space HF demo space

- ControlNet inputs supported: depth maps, edge maps, keypoints for precise geometry/layout control. feature brief

- Text edits go beyond content changes to font, color, and material; strong face/product identity retention in before‑after runs. demo space

- Community apps are already shipping on top (e.g., Anycoder), with devs reporting noticeably improved consistency over earlier monthly builds. app example developer note

Genspark launches voice‑controlled photo editor powered by Realtime voice + Nano Banana

Instead of tapping sliders, you talk: Genspark Photo Genius lets users restyle portraits, swap backgrounds, adjust poses, and fix shots with voice, blending OpenAI Realtime voice and a Nano Banana image stack. feature thread

- Features include makeup/hair/outfit styling, instant background swaps, pose tweaks, and “rescue my photo” fixes. feature thread

- Available now on iOS and Android; requires the latest app update. App Store Google Play

- The product blog outlines the voice loop, edit intents, and image pipeline. product blog

ComfyUI Wan Animate gets eye‑gaze fully fixed via new preprocessing nodes

Eye contact finally looks right in Wan Animate. A small ComfyUI pipeline tweak—preprocessing nodes dropped into the graph—locks gaze alignment across frames for more natural character animations. update note

- The fix ships as pre‑processing nodes you add before animation; users report stable, natural gaze across outputs. update note

- ComfyUI community confirms the gaze issue is “fully fixed” for Wan Animate with this setup. comfy update

Seedream 4 wows with moody B/W portrait set circulating among creators

Creators are showcasing Seedream 4’s photorealistic chops with striking black‑and‑white portrait sets—consistent tonality, crisp skin texture, and cinematic dynamic range. portrait set

- The set highlights editorial vibes (weathered textures, rain, smoke, motion blur) without melting fine details. portrait set

- Prompt collections circulating alongside help others reproduce the look and adapt it to 2.5D or stylized settings. prompt examples

📊 Agent/coding evals and live leaderboards

New agents benchmark infrastructure (Meta ARE + Gaia2) is the main fresh item; also SWE‑Bench Pro refresh threads and new code‑centric evals (CompileBench/CodingBench), a real‑world preference board (SEAL Showdown), and a GPQA audit. Excludes compute feature.

Meta open‑sources ARE + Gaia2: 1,120 async, time‑critical agent tasks go live

Agents now face a smartphone‑like world with deadlines, notifications and tool failures, not static puzzles. Early results show no single winner: GPT‑5‑high leads tougher scenarios but falters under time pressure, while Claude‑4‑Sonnet trades accuracy for speed and Kimi‑K2 dominates low budgets overview thread, benchmark details, paper links, score callouts.

- Scope: 1,120 scenarios across 12 apps (chat, calendar, shopping, email, etc.) with verifiable write actions and DAG‑defined rules benchmark details, simulator brief.

- Token/calls economics: GPT‑5‑high averages ~20 calls using ~100k tokens; Sonnet ~22.5 calls with <10k tokens per call score callouts.

- Key finding: strong reasoning can hurt timeliness (“inverse scaling”)—instant modes help; multi‑agent setups aid weaker systems but give mixed gains for the strongest paper links.

- Resources: full paper and HF demo are public Meta research, demo, with a community leaderboard too Gaia2 and ARE.
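"Verifiable write actions" plus dependency rules and deadlines suggests a checker along these lines. This is a hypothetical sketch, not Gaia2's verifier: it assumes a trace of `(action, timestamp)` pairs, a DAG mapping each required action to its prerequisites, and optional per‑action deadlines. Only the first occurrence of each action counts.

```python
from typing import Optional


def verify_writes(
    trace: list[tuple[str, float]],
    required: dict[str, set[str]],
    deadlines: Optional[dict[str, float]] = None,
) -> bool:
    """Check required write actions: present, after prerequisites, before deadline."""
    first_seen: dict[str, tuple[int, float]] = {}
    for i, (action, t) in enumerate(trace):
        first_seen.setdefault(action, (i, t))
    deadlines = deadlines or {}
    for action, prereqs in required.items():
        if action not in first_seen:
            return False                      # required write never happened
        idx, t = first_seen[action]
        if action in deadlines and t > deadlines[action]:
            return False                      # happened, but too late
        for p in prereqs:
            if p not in first_seen or first_seen[p][0] > idx:
                return False                  # DAG edge violated
    return True


rules = {"read_email": set(), "create_event": {"read_email"}, "send_reply": {"create_event"}}
good = [("read_email", 1.0), ("create_event", 2.0), ("send_reply", 3.0)]
print(verify_writes(good, rules, deadlines={"send_reply": 5.0}))
```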

SWE‑Bench Pro community scores land: GPT‑5 ≈23%, enterprise tasks stress agents

Public and commercial boards confirm how far real repo work is from saturated benchmarks, following SWE‑Bench Pro's initial launch. GPT‑5 posts ~23% Pass@1 on the public set as tasks span 1,865 issues across 41 repos with long, multi‑file diffs; Claude Opus 4.1 is close behind on the private board overview thread, paper link, scoreboard image.

- Task profile: human‑verified, long‑horizon fixes average ~107 LOC across ~4 files; hidden sets prevent contamination overview thread, paper link.

- Leaderboards: browse both sets and the open GitHub artifacts to replicate public leaderboard, private leaderboard, GitHub repo.

- Takeaway: “70%+ on SWE‑Bench Verified” doesn’t translate—agent planning, tool use, and repo context still bottleneck success paper link.

SEAL Showdown launches: live, real‑world chat leaderboard across 100+ countries

Instead of lab suites, Showdown ranks models on opt‑in user conversations across 70 languages and 200+ domains, slicing by age, profession and use case launch, what’s different. Early read: GPT‑5 Chat leads overall, Claude shines in writing/reasoning, and “more thinking” isn’t always better early findings.

- Explore the data and methods: live board and technical report are public live leaderboard, tech report.

- Why it’s useful: captures preference shifts across regions and tasks, and exposes failure modes hidden by static, English‑heavy benchmarks approach brief.

CompileBench debuts: can agents resurrect 22‑year‑old code under dependency hell?

A new benchmark shifts focus from unit tests to build systems and legacy toolchains—think ancient compilers, flags and broken deps. Early commentary notes its leaderboard aligns better with real‑world “vibes” than SWE‑Bench for compile‑centric work benchmark page, benchmark link, blog post.

- Design: measure if agents can diagnose and fix build failures across crusty repos (not just patch logic).

- Why it matters: compilation and environment triage are prerequisite skills for any practical coding agent; scores highlight gaps in tool use and OS literacy.

Audit: labs’ self‑reported GPQA Diamond scores match third‑party runs within error bars

A skeptical check finds no systematic inflation: for each lab’s top model, independent evaluations align with the self‑reports inside 90% confidence intervals findings, method, result. Full write‑up and benchmarking hub are open for inspection data insight, benchmarking hub.

- Implication: while benchmark saturation is real, “grading their own homework” isn’t obviously skewing GPQA; discrepancies are small and statistically insignificant.
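One way to phrase "matches within error bars" is: does the self‑reported score fall inside a 90% interval around the independent result? The sketch below swaps in a Wilson score interval (the audit's exact method may differ) and assumes a single run over GPQA Diamond's 198 questions; the example scores are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(correct: int, n: int, confidence: float = 0.90) -> tuple[float, float]:
    """Wilson score interval for an accuracy of correct/n."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = correct / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


def consistent(self_reported: float, correct: int, n: int = 198) -> bool:
    """True if the lab's number lies inside the independent run's interval."""
    lo, hi = wilson_interval(correct, n)
    return lo <= self_reported <= hi


# Hypothetical: an independent run scores 150/198 (~75.8%).
print(wilson_interval(150, 198))
print(consistent(0.78, 150), consistent(0.85, 150))
```

With only 198 questions the 90% interval spans roughly ±5 points, which is why small gaps between self‑reports and third‑party runs are statistically unremarkable.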

⚙️ Deterministic inference and runtime plumbing

Mostly systems pieces today: SGLang adds batch‑invariant attention/sampling for deterministic rollouts (on‑policy RL/debugging); OpenAI Responses API thread on preserving reasoning state across turns (design rationale). Excludes infra costs and hardware below.

OpenAI’s Responses API keeps encrypted reasoning state across turns

OpenAI detailed why the Responses API is the new default for agentic apps: it preserves a model’s internal (encrypted) reasoning state across calls and allows safe continuation, avoiding raw CoT exposure while enabling persistent workflows OpenAI blog, dev resources.

- Reasoning traces are stored internally (client‑hidden) and can be resumed via previous_response_id or reasoning items; raw chain‑of‑thought is never surfaced to clients OpenAI blog, screenshot.

- Design goal: “keep the notebook open” so multi‑turn reasoning doesn’t reset between requests, unlike Chat Completions, which drops state between calls key quote.

- Migration resources include a Responses API guide, troubleshooting for GPT‑5 behavior, and a Codex CLI helper for upgrading app stacks dev resources.

- Intended for hosted tools, multimodal steps, and long‑running agents where persistence, safety, and auditability of state matter OpenAI blog, blog link.
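The two continuation styles can be shown as raw request bodies for `POST /v1/responses`. This sketch only builds the payloads; sending them needs the official SDK or an HTTP client plus an API key. The model name and the `resp_123` id are placeholders, and the prompts are hypothetical.

```python
import json

MODEL = "gpt-5"  # placeholder: any reasoning model available to your account


def first_request(prompt: str) -> dict:
    return {"model": MODEL, "input": prompt, "reasoning": {"effort": "medium"}}


def follow_up(previous_response_id: str, prompt: str) -> dict:
    # Stateful: chain to the stored response so the server reuses its
    # hidden reasoning state; the client never sees raw chain-of-thought.
    return {"model": MODEL, "input": prompt, "previous_response_id": previous_response_id}


def stateless_follow_up(prior_output_items: list, prompt: str) -> dict:
    # Stateless: nothing stored server-side; the client passes back prior
    # output items, including encrypted reasoning items, as input.
    return {
        "model": MODEL,
        "store": False,
        "include": ["reasoning.encrypted_content"],
        "input": prior_output_items + [{"role": "user", "content": prompt}],
    }


print(json.dumps(follow_up("resp_123", "Now summarize the plan."), indent=2))
```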

SGLang ships deterministic inference with batch‑invariant attention and sampling

SGLang now produces deterministic outputs regardless of batching, at an average ~34% slowdown; this follows its FP4‑on‑AMD work, where LMSYS tuned the stack for speed. The new mode integrates batch‑invariant kernels and per‑request seeding to make RL rollouts and debugging reproducible at scale blog post, feature thread.

- Batch‑invariant attention and sampling ops plug into the high‑throughput engine; compatible with chunked prefill, CUDA graphs, radix cache, and non‑greedy sampling feature thread, blog post.

- Deterministic across variable batch sizes (avoids floating‑point reduction drift); exposes a per‑request seed so temperature>0 runs are repeatable blog post.

- Reported average slowdown ~34.35% vs baseline (lower than prior ~61.5% approaches) while preserving throughput features blog post.

- Target use cases: on‑policy RL, eval reproducibility, and easier bug isolation in agent systems feature thread.

- Acknowledges upstream batch‑invariant kernels from Thinking Machines and collaboration with FlashInfer; the announcement also thanks the sponsors who powered large‑scale tests sponsor thanks.
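Why batch size can change outputs at all: floating‑point addition is not associative, so a kernel that picks its reduction split based on batch size can sum the same row in a different order. The toy below makes that concrete in pure Python. The chunking heuristic is hypothetical and this is not SGLang's kernel code; it only shows the principle that a fixed reduction order plus a per‑request RNG makes results independent of the batch.

```python
import random


def chunked_sum(values, chunk):
    """Sum in fixed-size chunks, then add the partial sums left to right."""
    partials = []
    for start in range(0, len(values), chunk):
        acc = 0.0
        for v in values[start:start + chunk]:
            acc += v
        partials.append(acc)
    total = 0.0
    for p in partials:
        total += p
    return total


def chunk_for_batch(batch_size):
    # Hypothetical heuristic: small batches split the reduction more finely
    # to keep the GPU busy, so the reduction order depends on the batch.
    return 2 if batch_size < 8 else 4


def row_sum(values, batch_size, batch_invariant):
    chunk = 4 if batch_invariant else chunk_for_batch(batch_size)
    return chunked_sum(values, chunk)


def sample_token(probs, request_seed):
    # One RNG per request: the draw depends only on this request's seed and
    # probabilities, not on which other requests share the batch.
    rng = random.Random(request_seed)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


row = [1.0, 1e16, -1e16, 1.0]
print(row_sum(row, 1, False), row_sum(row, 32, False))  # 0.0 1.0
print(row_sum(row, 1, True), row_sum(row, 32, True))    # 1.0 1.0
```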

🗣️ Speech systems and realtime TTS

A heavier speech day than usual: Qwen3‑TTS‑Flash debuts with bilingual stability, 17 expressive voices × 10 languages, 97 ms first packet; plus smaller voice product sightings. Excludes Qwen3‑Omni overall which is in model releases.

Qwen3‑TTS‑Flash debuts with 97 ms first‑packet and SOTA multilingual stability

Alibaba’s Qwen3‑TTS‑Flash launches with 17 expressive voices across 10 languages, nine+ Chinese dialects, and a 97 ms first‑packet latency, posting state‑of‑the‑art content consistency on several multilingual tests announcement.

- Benchmarks charted against ElevenLabs and MiniMax highlight strong CN/EN stability and competitive speaker similarity across Italian, French, Russian, Japanese, and Korean announcement.

- Live demo and samples available via Hugging Face Space and a detailed engineering blog; a YouTube walkthrough shows realtime output and controls HF demo Qwen blog post video demo.

- API documentation is up for developers to integrate low‑latency streaming TTS in apps and agents API docs API docs.

Grok ‘Imagine with speech’ arrives on the web

Grok’s Imagine with Speech—previously mobile‑only—is now live on the web app, following the earlier web rollout toggle sighting feature card.

- Users can enable speech playback directly in the browser alongside conversation memory controls feature card.

Voice‑controlled photo editor lands in Genspark app

Genspark launches Photo Genius, a voice‑controlled image editor that pairs OpenAI Realtime voice tech with Nano Banana image models to edit photos hands‑free feature thread.

- Supports makeup and styling tweaks, background swaps, pose adjustments, and recovery of bad shots; iOS and Android builds are live store links product blog.

Microsoft to pilot 40 VASA‑1 voice avatars in Portraits Labs

Microsoft will debut Portraits Labs, an experiment offering 40 VASA‑1‑powered 3D avatars you can converse with in voice mode feature note.

- The trial emphasizes natural voice interactions via animated characters, expanding beyond text chat use cases feature note.

ElevenLabs shares open‑source web voice dictation component

ElevenLabs’ developer team previews an open‑source “voice dictator” widget that can be dropped into any web app as part of the ElevenLabs UI component registry component teaser.

- Aimed at faster adoption of audio and agent UI patterns, the registry packages reusable building blocks for voice capture and interaction component teaser.

🛡️ Guardrails and governance (frontier and app‑level)

New safety frameworks and tools: Google DeepMind rolls out its latest Frontier Safety Framework; DynaGuard guardian model targets dynamic policies; a UN‑linked ‘Red Lines’ initiative resurfaces; plus Responses API note on hidden reasoning. Excludes evals feature items.

Google DeepMind rolls out updated Frontier Safety Framework

Google DeepMind says it is implementing its latest Frontier Safety Framework, describing it as its most comprehensive approach yet to staying ahead of emerging risks. The update emphasizes organization‑wide processes for risk identification, monitoring, and response. DeepMind post Google blog post

- Focus areas called out include proactive risk discovery, internal controls, and iteration as capabilities evolve. DeepMind post

- The framework is positioned as the lab’s baseline for frontier‑model governance rather than a one‑off policy. Google blog post

OpenAI Responses API encrypts reasoning and enables safe continuation across turns

OpenAI’s Responses API preserves models’ internal reasoning state but keeps it encrypted and hidden from clients, while allowing continued interactions via previous_response_id—aimed at safer, less leak‑prone agent workflows. OpenAI blog policy excerpt screenshot

- Design avoids exposing raw chain‑of‑thought (CoT) yet maintains context for multi‑turn tasks. policy excerpt

- Continuation handles are provided for safe progress without surfacing intermediate reasoning tokens. OpenAI blog

- Implications: improved privacy/guardrails for apps and more consistent eval setups that don’t depend on visible CoT traces. OpenAI blog

DynaGuard, trained on 40k policies, enforces custom rules with PASS/FAIL verdicts and explanations

Trained on 40,000 unique policies, DynaGuard evaluates LLM conversations against user‑defined rules and returns a PASS/FAIL decision with optional fast or detailed rationales. This follows up on an earlier broader safety‑stack overview of guardian models. It ingests both the policy and the dialogue, and the authors report stronger generalization to unseen policies than GPT‑4o‑mini. model overview feature thread

- Inputs: policy text plus a synthetic or real conversation for that policy; outputs: PASS/FAIL. model overview

- Two explanation modes: short label or step‑by‑step trace pinpointing the violated rule(s). model overview

- Claims better handling of dynamic, custom policies vs other guardian baselines, including GPT‑4o‑mini. model overview
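An app wrapping a guardian model like this needs two pieces of glue: packing the policy and dialogue into one input, and turning the output back into a verdict. The sketch below assumes a hypothetical plain‑text format (label first, optional explanation after); DynaGuard's actual prompt template and output format may differ, so check the model card before relying on either.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Verdict:
    passed: bool
    explanation: Optional[str]


def build_guard_input(policy: str, dialogue: list[tuple[str, str]]) -> str:
    """Hypothetical input format: policy text, then the conversation turns."""
    turns = "\n".join(f"{role}: {text}" for role, text in dialogue)
    return f"POLICY:\n{policy}\n\nCONVERSATION:\n{turns}\n\nAnswer PASS or FAIL, then explain."


def parse_verdict(output: str) -> Verdict:
    """Take the first PASS/FAIL label; anything after it is the explanation."""
    match = re.search(r"\b(PASS|FAIL)\b", output)
    if match is None:
        raise ValueError("no PASS/FAIL label found")
    rest = output[match.end():].lstrip(" :.-\n").strip()
    return Verdict(passed=match.group(1) == "PASS", explanation=rest or None)


prompt = build_guard_input(
    "1. Never share a customer's phone number.",
    [("user", "What's Alice's number?"), ("agent", "It's 555-0100.")],
)
print(parse_verdict("FAIL: rule 1 forbids sharing phone numbers."))
```

Failing loudly on a missing label, rather than defaulting to PASS, keeps a malformed guard output from silently approving a conversation.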

UN‑linked ‘Red Lines for AI’ seeks binding 2026 ban on dangerous AI uses

A ‘Red Lines for AI’ initiative surfaced alongside UN General Assembly programming, urging a binding international agreement by 2026 to prohibit high‑risk AI behaviors and deployments. More than 200 leaders and several Nobel laureates are cited as backers. initiative summary UN session video

- Proposed bans include nuclear launch control, mass surveillance, and impersonation of humans at scale. initiative summary

- Implementation ideas include enforced human approvals, strict change control, default‑off for dangerous functions, and content provenance to fight identity abuse. initiative summary

- Calls for mandatory pre‑deployment evaluations, incident reporting, and tested shutdown plans for powerful systems. initiative summary

🗂️ Retrieval for agents: multimodal and agentic stacks

Fresh research and product posts on retrieval: MetaEmbed (flexible late interaction, Matryoshka multi‑vector) and HORNET (agent‑first retrieval). Also product telemetry like retroactive scoring on logs.

MetaEmbed introduces flexible late‑interaction retrieval and sets SOTA on MMEB and ViDoRe

Meta Superintelligence Labs unveiled MetaEmbed, a multimodal retrieval framework that appends learnable Meta Tokens and uses "Matryoshka" multi‑vector training to trade off accuracy vs efficiency at test time. It reports state‑of‑the‑art results on MMEB and ViDoRe and stays robust when scaled up to 32B models paper overview, ArXiv paper.

- Flexible late interaction: a fixed set of Meta Tokens yields compact, adjustable multi‑vector embeddings at inference paper overview.

- Matryoshka training enables coarse‑to‑fine retrieval without retraining, scaling quality by varying vectors used arXiv link.

- SOTA on MMEB/ViDoRe with competitive efficiency; designed to scale up while preserving test‑time control ArXiv paper.
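Late‑interaction scoring with an adjustable vector budget can be sketched with the generic ColBERT‑style MaxSim rule: each query vector takes its best dot product over the document's vectors, and the per‑query maxima are summed. Keeping only the first `k` vectors on each side mimics a Matryoshka‑style budget, assuming vectors are ordered coarse‑to‑fine. MetaEmbed's exact scoring and vector ordering may differ.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def maxsim(query_vecs, doc_vecs):
    """Late interaction: each query vector takes its best-matching doc vector."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


def score_with_budget(query_vecs, doc_vecs, k):
    """Matryoshka-style budget: keep only the first k vectors on each side."""
    return maxsim(query_vecs[:k], doc_vecs[:k])


query = [[1.0, 0.0], [0.0, 1.0]]
doc = [[1.0, 0.0], [0.5, 0.5]]
print(score_with_budget(query, doc, 1))  # cheap, coarse score
print(score_with_budget(query, doc, 2))  # full multi-vector score
```

A small `k` gives a cheap first‑pass ranking; a larger `k` rescores the shortlist with more vectors, all from the same stored embeddings.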

Hierarchical Retrieval recipe boosts long‑distance recall from 19% to 76% with dual encoders

New work formalizes when dual encoders (DEs) can solve hierarchical retrieval and introduces a pretrain→finetune recipe that specifically targets far‑ancestor matches, lifting WordNet long‑distance recall from 19% to 76% paper summary, ArXiv paper.

- Theory: DEs are feasible if embedding dimension scales with hierarchy depth/log(#docs) method overview.

- Practice: finetuning on long‑distance pairs fixes the "lost‑in‑the‑long‑distance" failure mode while preserving nearby accuracy ArXiv paper.

- Also improves product retrieval in a shopping dataset, indicating applicability beyond WordNet method overview.
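The finetuning stage hinges on having enough far‑ancestor training pairs. A minimal sketch of mining them from a parent map is below; the toy WordNet‑like hierarchy and the `min_distance` threshold are illustrative, and the paper's actual pair‑sampling scheme may differ.

```python
def ancestors_with_distance(node, parent):
    """Yield (ancestor, distance) pairs walking up a child -> parent map."""
    dist = 0
    while node in parent:
        node = parent[node]
        dist += 1
        yield node, dist


def long_distance_pairs(parent, min_distance):
    """(query, ancestor) pairs whose hierarchy distance is at least min_distance."""
    pairs = []
    for node in parent:
        for anc, dist in ancestors_with_distance(node, parent):
            if dist >= min_distance:
                pairs.append((node, anc))
    return pairs


# Toy hierarchy: beagle -> dog -> canine -> mammal -> animal.
parent = {"beagle": "dog", "dog": "canine", "canine": "mammal", "mammal": "animal"}
print(long_distance_pairs(parent, 3))
```

These are exactly the pairs a DE trained mostly on nearby matches tends to miss, so oversampling them during finetuning targets the "lost‑in‑the‑long‑distance" failure directly.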

HORNET debuts agent‑first retrieval engine for long, iterative AI queries

HORNET is a purpose‑built retrieval system for agents, optimized for long, structured queries and iterative retrieval loops rather than human keyword search. It emphasizes predictable APIs, high recall, and running near your data (VPC/on‑prem) for agent workflows project page, project page.

- Designed for agentic patterns: supports iterative loops, toolchains, and multi‑agent planning without legacy search assumptions project page.

- Deployment where the data lives: VPC/on‑prem options and model‑agnostic design to slot into existing stacks project page.

- Toolbox over a single trick: aims to cover single‑agent reasoning to multi‑agent systems with predictable APIs project page.

Braintrust Data adds retroactive scoring on past logs to catch regressions faster

Braintrust Data now lets teams apply evaluators to historical production logs, including bulk‑applying a scorer to the last 50 entries in a view, to surface missed issues and regressions without redeploys feature update.

- Per‑log or bulk retro‑scoring accelerates incident triage and model/version comparisons feature update.

- Works directly from your existing log views to minimize friction and increase coverage feature update.
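Conceptually, retroactive scoring is just running an evaluator over stored logs instead of live traffic. The sketch below is a generic illustration, not Braintrust's SDK: logs are plain dicts, `scorer` is any function returning a score in [0, 1], and the empty‑output check is a made‑up example evaluator.

```python
from typing import Callable


def retro_score(logs: list[dict], scorer: Callable[[dict], float], last_n: int = 50) -> list[dict]:
    """Apply a scorer to the most recent last_n logs; production is untouched."""
    return [{**log, "score": scorer(log)} for log in logs[-last_n:]]


def flag_regressions(scored: list[dict], threshold: float) -> list[str]:
    """IDs of logs scoring below the threshold."""
    return [log["id"] for log in scored if log["score"] < threshold]


# Hypothetical logs: one response came back empty.
logs = [{"id": f"log-{i}", "output": "" if i == 55 else "ok"} for i in range(60)]
scored = retro_score(logs, lambda log: 0.0 if log["output"] == "" else 1.0)
print(len(scored), flag_regressions(scored, 0.5))
```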

🔌 Racks, GPUs and talks: Blackwell and NVL72

Mostly capacity/system SKUs and briefings: Together AI offering NVIDIA GB300 NVL72 racks (72 Blackwell Ultra GPUs/36 Grace CPUs); Blackwell deep‑dive event with NVIDIA’s Ian Buck teased. Excludes the 10‑GW feature above.

Together AI opens access to NVIDIA GB300 NVL72 racks (72 Blackwell Ultra GPUs)

72 Blackwell Ultra GPUs, 36 Grace CPUs, 21 TB HBM3e, and 130 TB/s NVLink—Together AI is inviting early access to NVIDIA’s GB300 NVL72 rack for large‑scale reasoning and inference product brief NVIDIA GB300 page.

- Single 72‑GPU NVLink domain and 800 Gb/s per‑GPU networking via ConnectX‑8 SuperNIC enable tight, rack‑scale collective ops NVIDIA GB300 page.

- Claimed efficiency: up to 10× higher TPS per user and 5× higher TPS per megawatt vs Hopper, yielding ~50× higher AI‑factory output NVIDIA GB300 page.

- FP4 support for dense reasoning; up to ~1,400 PFLOPS (with sparsity), 21 TB HBM with 576 TB/s bandwidth, and 130 TB/s NVLink fabric NVIDIA GB300 page.

- Early access sign‑up is open; the rack is positioned for low‑latency, high‑throughput Blackwell deployments across training‑adjacent inference and long‑context reasoning access note NVIDIA GB300 page.
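Dividing the rack‑level figures by 72 GPUs gives a per‑GPU view, and the "~50×" claim is simply the product of the two quoted multipliers. The totals above are rounded (e.g., ~21 TB), so the per‑GPU numbers are approximate; the HBM result lands close to the 288 GB per GPU NVIDIA lists for Blackwell Ultra.

```python
GPUS = 72
HBM_TB = 21              # total HBM3e per rack (rounded)
HBM_BW_TBPS = 576        # aggregate HBM bandwidth
NVLINK_TBPS = 130        # aggregate NVLink fabric bandwidth
FP4_SPARSE_PFLOPS = 1_400

per_gpu = {
    "HBM (GB)": HBM_TB * 1_000 / GPUS,
    "HBM bandwidth (TB/s)": HBM_BW_TBPS / GPUS,
    "NVLink (TB/s)": NVLINK_TBPS / GPUS,
    "FP4 sparse (PFLOPS)": FP4_SPARSE_PFLOPS / GPUS,
}
for name, value in per_gpu.items():
    print(f"{name}: {value:.1f}")

# "~50x AI-factory output" = 10x TPS per user * 5x TPS per megawatt.
factory_gain = 10 * 5
print(f"Claimed factory gain: {factory_gain}x")
```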

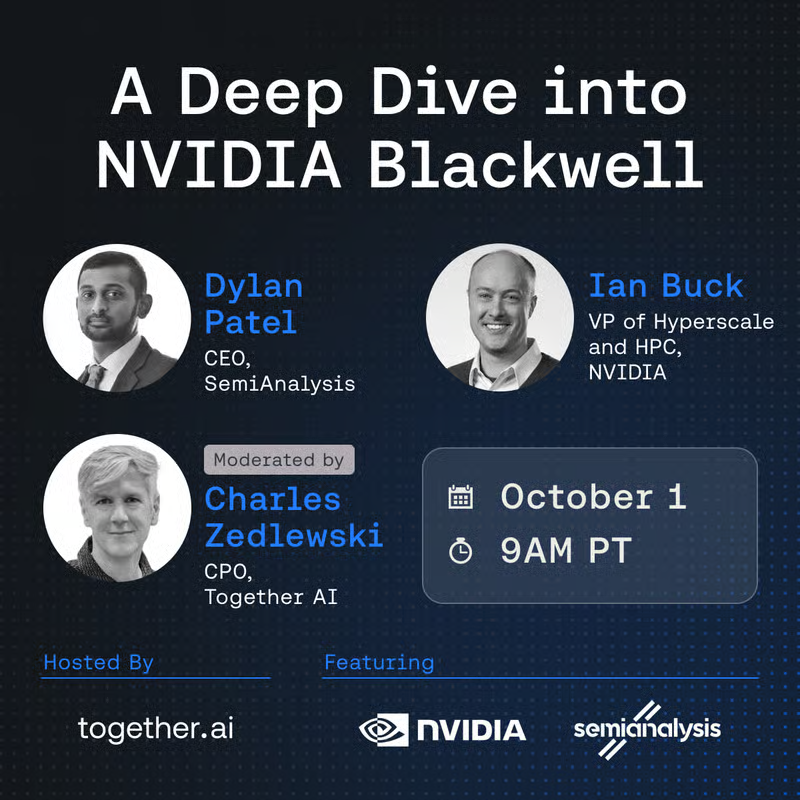

Blackwell deep dive: NVIDIA’s Ian Buck and SemiAnalysis’ Dylan Patel to unpack architecture and GPU cloud needs

NVIDIA’s Ian Buck and SemiAnalysis’ Dylan Patel will host a live deep dive on Blackwell—covering architecture, training/inference optimizations, and GPU cloud requirements; registration is open webinar invite event RSVP.

- Agenda includes how Blackwell works, perf/power optimizations for reasoning, and implications for cloud cluster design webinar invite.

- The session targets practitioners planning Blackwell migrations, with Q&A on workload sizing and deployment tradeoffs RSVP details event RSVP.

💼 Enterprise rollouts and pricing moves

A couple of notable enterprise/product updates: Perplexity Email Assistant GA for Max; ChatGPT Go plan lands in Indonesia; Oracle–Meta AI cloud talks rumor resurfaces. Excludes the OpenAI/NVIDIA feature.

Report: Oracle in talks with Meta on potential $20B multiyear AI cloud deal

Oracle is negotiating a potential $20 billion multiyear cloud agreement to supply compute for Meta’s AI training and inference workloads, though terms remain fluid, according to a report summarized in today’s chatter deal report.

- Deal would cement Oracle as a major AI infrastructure provider for Meta; discussions are ongoing and could change deal report.

Perplexity launches Email Assistant for Max users on Gmail and Outlook

Perplexity rolled out an Email Assistant that auto‑schedules meetings, drafts replies, and prioritizes messages, available now for Perplexity Max subscribers on Gmail and Outlook launch thread. It can propose times, send calendar invites, and tidy inboxes with labeling workflows scheduling demo, organization blurb, with setup available via the new assistant hub get started page.

- Handles scheduling inside the thread, checks availability, and issues invites scheduling demo.

- Auto‑drafts responses matching user tone and style, and organizes inbox views organization blurb, get started page.

- Launch targets Perplexity Max; works across Gmail and Outlook accounts launch thread.

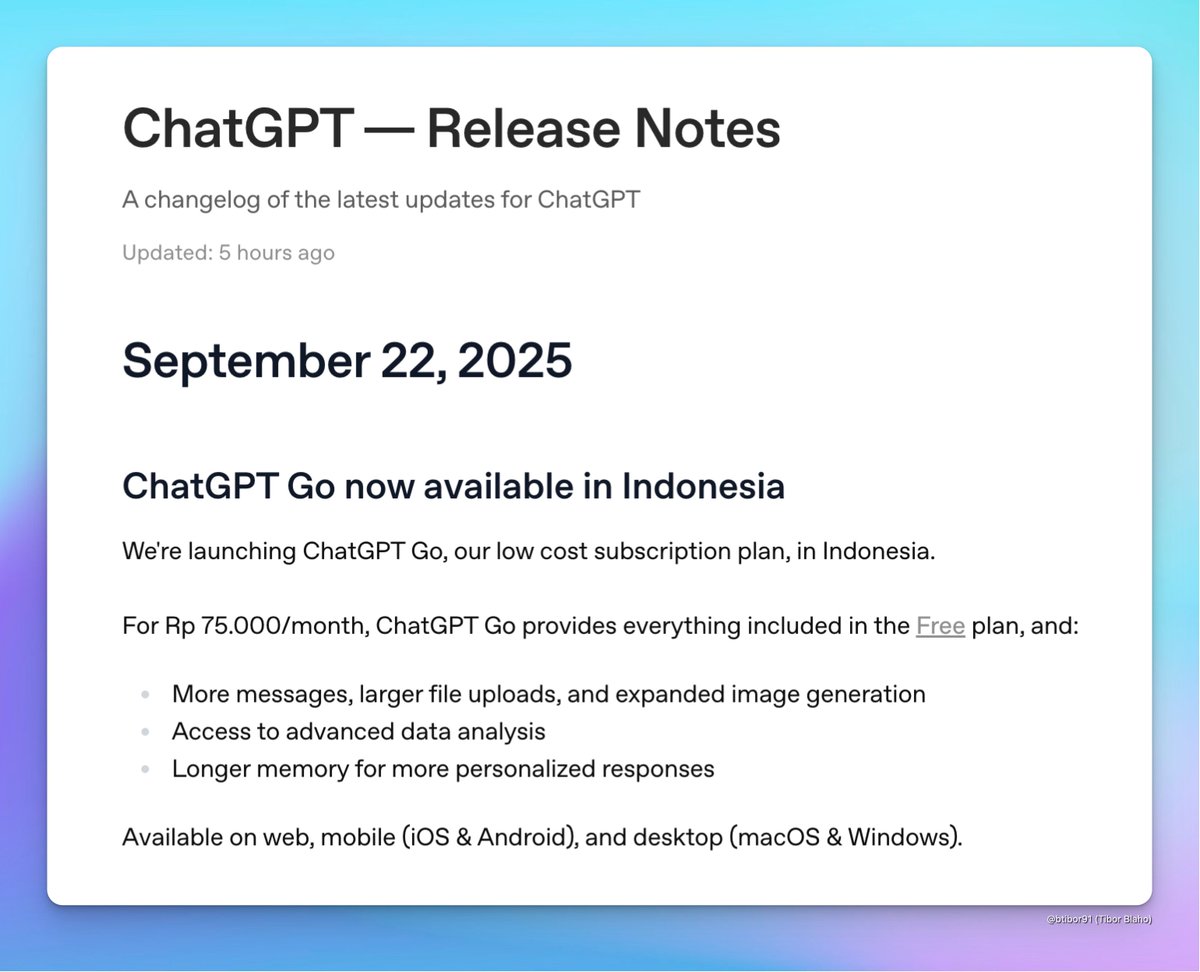

ChatGPT Go debuts in Indonesia at Rp 75,000/month with bigger limits

OpenAI made ChatGPT Go officially available in Indonesia at Rp 75,000 per month, adding more messages, larger file uploads, expanded image generation, advanced data analysis, and longer memory across web, mobile, and desktop apps release note, OpenAI help center.

- Available on iOS, Android, macOS, Windows, and web per the release notes OpenAI help center.

- Positioned as an entry plan with boosted quotas compared to free usage release note.

🦾 Humanoids and resilience demos

A few high‑engagement robotics clips: Unitree G1 ‘anti‑gravity’ stability demos and AheadForm lifelike facial actuation with self‑supervised control. Light day beyond these.

Unitree G1’s ‘Anti‑Gravity’ mode boosts stability in latest resilience demo

Unitree highlighted a new G1 capability dubbed “Anti‑Gravity,” aimed at markedly improving posture recovery and stability during disturbances Unitree update, echoed in another official post Unitree post. Practitioners note the cadence of rapid skill drops and emphasize that resilience, not just balance, is what matters in the field weekly progress, resilience take.

- Stability is “greatly improved” in the Anti‑Gravity mode according to the company Unitree update.

- Community reactions focus on real‑world robustness over choreographed balance resilience take.

- Frequent G1 updates continue to raise the bar for low‑cost humanoid agility weekly progress.

AheadForm pairs self‑supervised control with high‑DOF actuation for lifelike faces

AheadForm (China) showcased a humanoid face rig capable of nuanced, human‑like expressions by combining self‑supervised AI with wide‑range bionic actuation demo thread, follow‑up. The clip underscores how far expressive mimicry has come, signaling fast progress on affect and social cues for embodied agents.

- Self‑supervised algorithms + high‑DOF bionic actuation drive expression fidelity demo thread.

- The demo’s realism suggests tighter human‑robot interaction loops for service and social robotics follow‑up.