Higgsfield Video Face Swap запускает промо на 200 кредитов на 9 часов — команды стремятся к $100 тыс.

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Гигсфилд включил Video Face Swap, и это сразу же полезно: вставляйте своё лицо в любой клип, сохранив освещение, движение, фон и звук. На запуск они раздают 200 бесплатных кредитов в течение 9‑часового окна, а $100K Global Teams Challenge действует до 30 ноября — превратите тесты в готовые работы.

Чем новее по сравнению с планом изображения во вторник: реальное видеоуправление идентичностью на скорости производства. Создатели уже используют цепочку Face Swap для главных крупным планом, Veo 3.1 Fast для движения, затем однокликовый Recast, чтобы зафиксировать полное телопроизведение, лупу Lipsync и многоязычные голоса с более чем 30 наборами предустановок. Ранние демонстрации в реальных условиях выглядят стабильными на повседневном видеоматериале, что важнее блестящему промо‑ролику (приятно видеть, как пыль от мухи и плохое освещение в помещении ведут себя).

Итог: можно подбирать таланты без повторных съёмок, локализовать диалоги без студии дубляжа и сохранять непрерывность между кадрами за один проход. Если у вас есть музыкальное видео или короткометражка, это недорогая неделя, чтобы выстроить согласованную «библию персонажа» и выпустить готовый материал. В качестве опции: открытая функция StepFun Step‑Audio‑EditX (3B) даёт редактируемые вдохи и тон, что плавно встраивается в этот конвейер.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

Nano Banana 2 pops up across tools

Google’s Nano Banana 2 surfaces in previews (Media IO) and Kling Lab tests; creators share results and note quick takedowns—signaling an imminent, more photoreal baseline for image work feeding video workflows.

Creators spotted Google’s Nano Banana 2 image model in the wild (preview flags, quick removals), with integrations showing up in Media IO and Kling Lab. Multiple demos hint at a near‑term drop and higher realism for image→video pipelines.

Jump to Nano Banana 2 pops up across tools topicsTable of Contents

🍌 Nano Banana 2 pops up across tools

Creators spotted Google’s Nano Banana 2 image model in the wild (preview flags, quick removals), with integrations showing up in Media IO and Kling Lab. Multiple demos hint at a near‑term drop and higher realism for image→video pipelines.

Nano Banana 2 briefly appears in Media IO, then vanishes

Creators spotted “Nano Banana 2 (Preview)” live in Media IO’s Text‑to‑Image picker, generated samples, and then saw the option pulled shortly after UI screenshot. Multiple posts confirm it was accessible for a brief window before removal removal note.

For teams building image→video pipelines, this signals near‑term availability and a realism bump worth planning around.

Kling Lab adds Nano Banana 2 to node workflows

Kling Lab now exposes “nano banana” as a selectable node, letting creators slot Google’s new image model into node‑based I2V/V2V flows integration note. Early tests show style transforms like recoloring and line‑art character looks running through the graph creator test.

This matters for filmmakers and designers who want NB2’s look while keeping a node pipeline for repeatability and hand‑offs.

Creators say Nano Banana 2 passes clock and wine‑glass test

Community reports claim NB2 clears the well‑known consistency check with analog clocks and a filled wine glass—often a failure case for earlier models Turkish note. If this holds in broader testing, it’s a concrete reliability upgrade for product shots and continuity‑sensitive storyboards.

Creators share Nano Banana 2 outputs and drop it into animation flows

During the preview window, creators posted NB2‑credited images (e.g., split product gag) that match the style/quality seen in the picker period creator sample. Others already chained NB2 stills into Veo‑driven music‑video shots via Higgsfield Face Swap/Recast, hinting at practical image→video handoff recipes workflow thread.

If you storyboard in stills, this is a good week to save prompts and frames for side‑by‑side tests when access returns.

Sightings fuel talk Nano Banana 2 could land before Gemini 3

With Media IO’s brief NB2 preview and quick rollback, creators speculate the model may ship ahead of (or alongside) Gemini 3.0 preview sighting. Some posts hype NB2’s image fidelity versus current leaders, though evidence remains anecdotal for now speculation image.

If timing pans out, teams can sequence tests of NB2 stills as inputs to Veo/Kling runs without waiting on broader Gemini updates.

🎥 Edit the camera after you shoot (Veo/Flow)

Veo/Flow gained post‑gen camera tools: change angles, orbit/dolly, and even insert elements directly into generated clips. Multiple demos show practical reframes and object additions. Excludes Nano Banana 2 (covered as feature).

Flow adds post‑gen Camera Adjustment; Ultra can reframe Veo clips now

Flow by Google now exposes post‑gen Camera Adjustment in its editor. Ultra subscribers can tweak position, orbit, and dolly on Veo clips, with access free for a limited time Feature brief.

Following up on camera control tests from yesterday, creators are showing practical reframes. A soccer example shows clear “Before/After” crops that hold detail and motion Before/after reframes. A separate demo toggles wide, close, and top‑down angles from the same generated mountain shot using the UI icons Turkish demo.

Why this matters: you can salvage takes, punch in for coverage, and build multiple shot sizes from one render. That cuts reshoots. It also gives editors something closer to real camera language.

- Try tight “punch‑ins” on dialogue to create B‑cam coverage.

- Use top‑down/drone presets to add geography without re‑generating the scene.

- Batch reframes for social cut‑downs (9:16, 1:1) from one master.

Flow’s Insert lets you add objects into finished Veo videos

Flow’s new Insert tool lets you draw a region and place elements directly into a generated video. In a live demo, a glowing sword is added near a martial artist, turning a plain move into a stylized beat Insert walkthrough.

The point is: you can fix prop continuity, add VFX accents, or punch up a gag without re‑prompting the whole shot. This keeps your visual language consistent while you iterate.

- Isolate the action area, then Insert props or light streaks for emphasis.

- Route subtle overlays first; heavier objects need tighter masks and shorter crops.

- Export variants to test timing before locking the final.

👤 Face & full‑body recast for production

Higgsfield’s Video Face Swap went live and creators are chaining it with Recast for full‑body swaps, lipsync, and background/voice changes—turning quick performances into castable characters.

Higgsfield Video Face Swap launches with 200‑credit 9‑hour promo; uptake spikes

Higgsfield opened a 9‑hour, 200‑credit giveaway for its new Video Face Swap, following up on Recast launch full‑body swaps and dubbing. The tool maps your face onto any video while preserving lighting, motion, background, and audio launch thread, and the team notes DMs are “busier than caffeine,” signaling strong engagement DMs uptick.

Creators get a fast way to cast themselves or talent into shots without reshoots. The post also points users to active creation, with a separate $100K teams challenge running this month Challenge page.

How creators chain Face Swap + Recast + Veo for consistent music videos

A practical thread shows a production workflow: design band members, use Video Face Swap to lock close‑ups, animate with Veo 3.1 Fast, then one‑click Recast to replace actors and keep identity across shots (plus lipsync snippets from the actual lyrics) workflow demo. This turns quick stills into coherent sequences with consistent faces and performance beats.

FaceSwap Video in the wild: creator demo and direct app link

Early user posts show the feature working on everyday footage, with clean transitions as faces morph while camera and lighting remain intact creator demo. If you want to try it right now, the app page outlines the flow (upload face + target video, generate, download) Face Swap app.

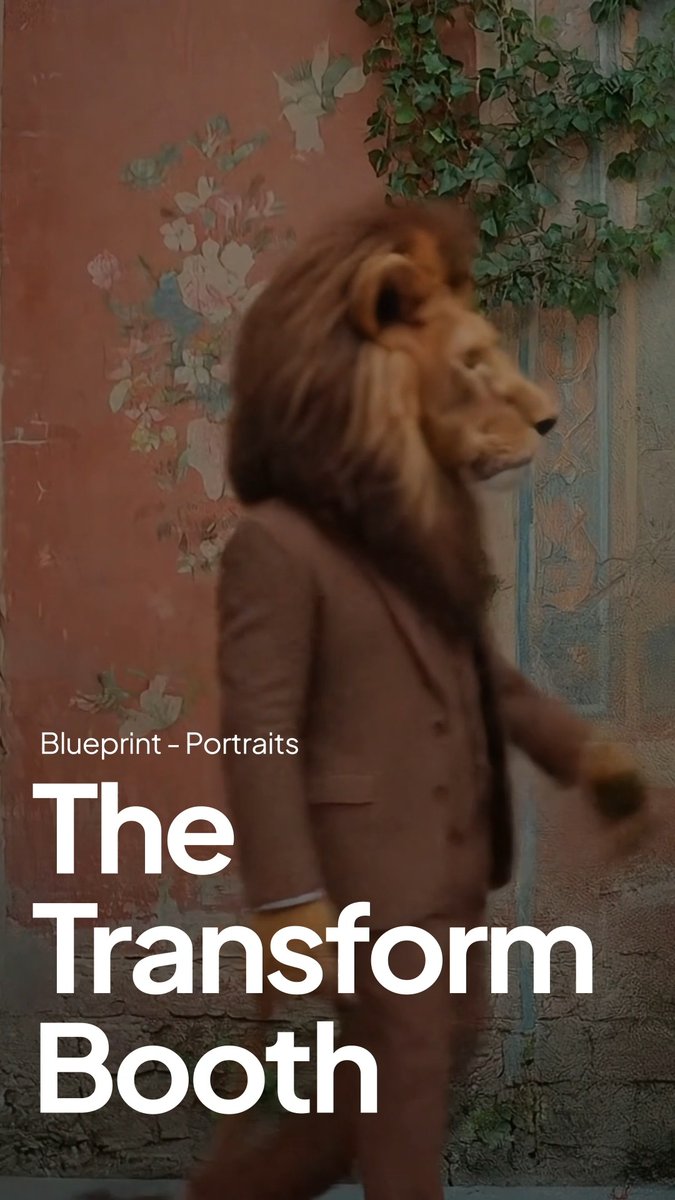

🧭 Blueprints for multi‑angle scenes & portraits

Leonardo Blueprints continue to land in creator workflows—turning a single still into 45+ shot variations and generating studio portraits from one photo (discounted tokens). Excludes Nano Banana 2 (feature).

Blueprints pull 45+ camera angles from one still (PoV Change)

Leonardo’s Blueprints Point‑of‑View Change turns one still into a sequence of 45+ distinct shots, preserving identity and scene continuity. A creator shows rapid angle cuts—close‑ups to wides—in minutes, useful for previz, shorts, and music videos angle montage.

The breakdown: start with a high‑def still (e.g., Lucid Origin), animate a baseline clip, then feed the last frame back into Blueprints for alternate angles—keeping prompts compact while styles stay consistent how‑to thread. You can try it directly in Leonardo’s app while the templates roll out broadly Leonardo app.

Blueprints do studio portraits from one photo, 75% off tokens

Leonardo is pitching “one photo is all it takes”: Blueprints can generate studio‑quality portrait sets from a single upload, with tokens discounted 75% for a limited time pricing promo. That helps teams spin up consistent headshots or character sheets fast.

The promo reel cycles through varied lenses and backdrops without juggling multiple references, which keeps a lookbook coherent across shots. You can jump in from the main site while the discount is live Leonardo site.

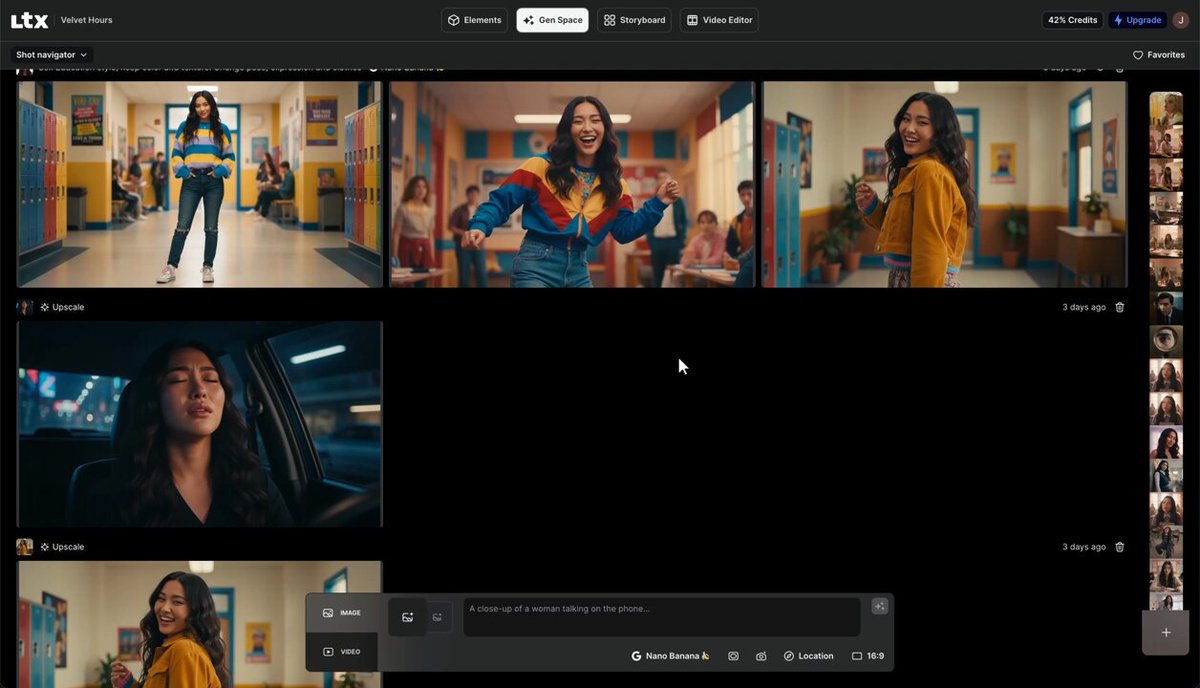

🧩 Keep characters consistent with LTX Elements

LTX Studio’s Elements workflows show how to lock identity across shots without constant re‑uploads—step‑by‑step videos, presets, and storyboard integration. Excludes Nano Banana 2 (feature).

LTX Elements Step 1: Save a close‑up as a reusable character

LTX Studio shows how to lock identity from the start: generate or upload a well‑lit close‑up, then Save as Element and name it for reuse across shots. This establishes a consistent face so you don’t re‑upload references for every scene Step 1 video.

LTX Elements Step 2: Tag the character and add a concise prompt

Next, tag your saved character as an Element and add a short, descriptive prompt so the system knows who this is in every shot. This reduces prompt bloat while preserving identity continuity across scenes Step 2 video.

LTX Elements Step 3: Stack shot presets for repeatable framing

Use shot presets to define angle and framing, then layer weather and lighting parameters for consistent results shot‑to‑shot. This makes composition repeatable without rewriting long prompts Step 3 video.

LTX Elements Step 4: Build continuity in Storyboard before generation

Plan pacing and continuity in Storyboard, then generate with your saved Elements plugged into each shot. It’s a pre‑production pass that reduces reshoots and keeps character identity stable Step 4 video.

LTX‑2 holds #3 video model spot on Artificial Analysis

LTX‑2 is now ranked the #3 video model on the Artificial Analysis Video Arena, reinforcing the viability of the Elements‑led workflow for production use Arena ranking. This follows Arena ranks, where LTX‑2 showed #3 in image‑to‑video and #4 in text‑to‑video.

LTX Elements pro tips: Wardrobe as Elements, organize assets, pair with Storyboard

LTX Studio shares practical ways to stay consistent at scale: save wardrobe as separate Elements for fast outfit swaps, organize/rename assets in the Elements tab, and combine with Storyboard for seamless flow Pro tips video. Try Elements inside LTX Studio here LTX Studio site.

⚡ Grok Imagine’s animation glow‑up

Creators report a step‑change in Grok Imagine for motion and style: from mograph crystals to neo‑noir, water‑ripple magic, and 80s anime romance. Excludes Nano Banana 2 (feature).

Creators say Grok Imagine’s latest build is “new favorite” for animation

Multiple creators report a clear quality jump in Grok Imagine’s motion and style, calling it their “new favorite video model” and a “night and day difference” from recent months favorite comment, quality remark. Sample reels span anime action and stylized clips, pointing to better frame coherence and timing for shorts, music videos, and promos anime set.

For production teams, the takeaway is simple: Grok now looks viable for fast-turn expressive pieces without heavy post.

Neo‑noir graphic style holds up in motion under neon rain

The neo‑noir graphic walk test shows Grok Imagine keeping bold silhouettes, inky shadows, and neon streaks intact across frames neo-noir demo. Stylized looks often fall apart in motion; this one stays legible for bumpers and interstitials.

Use this for comic‑book interludes, lyric videos, or moody scene transitions where vibe consistency matters.

Text→image→video flow looks smoother after the latest update

Creators show a “text to image to video” pipeline with Grok that feels tighter on coherence and pacing, calling the latest update “awesome” text‑image‑video clip. It’s a fast path from concept to motion without hopping tools.

Use it for quick drafts of idents or narrative beats before committing to longer passes.

Water ripples and reflections look more physically plausible in Grok

A water‑surface sequence highlights improved resistance, ripple propagation, and highlight roll‑off around a glowing disk—details that typically break in AI video water ripple clip. The clip reads as one continuous surface, not a frame‑by‑frame guess.

For music visuals and ambience loops, this reduces post fixes to hide shimmer or warping.

80s anime romance shots land with soft lighting and stable close‑ups

An OVA‑style test leans into gentle embraces, pink neon, and starry backdrops; faces stay stable across cuts, which is where many anime runs fail 80s romance clip. Other creator reels echo Grok’s recent animation gains for stylized motion anime set.

If you storyboard emotional beats, Grok now looks credible for character‑driven shorts.

Grok Imagine nails mograph crystal animations with clean lighting and material response

A new motion-graphics demo shows crystalline forms with convincing refraction, glow and timing—“everything you see was created with Grok Imagine AI” mograph demo. This matters for title cards and brand idents where light behavior sells the look.

If you make openers or stingers, it’s worth stress‑testing Grok on light‑driven materials.

Anime character look work: Alucard test stays crisp in motion

A short Alucard clip stays on‑model through a push from close‑up to full‑body, with controlled line weight and gradients alucard clip. That consistency opens the door to short anime bumpers without constant re‑generations.

Try it to validate whether your character bible survives camera changes.

Image‑in, no‑prompt tests still produce varied, usable motion

A montage run seeded by static images—with no added prompt—yields multiple stylized clips, suggesting stronger defaults for exploratory passes no‑prompt montage. This helps when you want motion ideas first, then refine prompts second.

It’s a handy ideation trick for mood boards and quick pitch comps.

Process realism: Grok handles pizza prep macro shots cleanly

A “pizza prep” sequence shows tight hand‑work, dough deformation, and flour residue that remain coherent frame‑to‑frame pizza prep clip. That’s useful for food creators and ad mockups where tactile motion sells the scene.

If you do how‑to reels, this is a good baseline test for texture stability.

✂️ Faster, cheaper video editing engines

fal highlights Decart’s Lucy‑Edit getting 2× faster at half the price (open source) and creators praise Kling’s motion fidelity for fight beats. Excludes Nano Banana 2 (feature).

Decart Lucy‑Edit doubles speed and halves price, now open source

Decart’s Lucy‑Edit video editor just got a significant upgrade: 2× faster edits, half the price, and an open‑source release, with a direct Pro endpoint available via fal for production use upgrade details Pro model page open source note. For small teams and solo creators, this is a straight cost–time win on everyday cutdowns, fixes, and reframes.

The point is: you can now batch more client asks per hour without switching tools or paying more. If you bounced off earlier latency or cost, this clears both blockers in one go.

Creators say Kling 2.5 leads for convincing fight‑scene motion

After hands‑on comparisons, creators highlight Kling 2.5 for the most natural martial‑arts motion among current I2V/V2V tools—clean arcs, weight, and expressiveness that hold up in close shots motion comment. If your reels rely on stunt‑style beats, this is the engine to try first before layering post.

Here’s the catch: quality wins don’t always equal perfect controls, so plan a quick pass for timing trims and impact accents.

🗣️ Real‑time avatars and expressive TTS

New options for dialogue, dubbing, and character presence: HeyGen’s LiveAvatar enables live face‑to‑face AI, and StepFun open‑sources an LLM‑grade audio editor for emotion‑rich speech. Excludes Nano Banana 2 (feature).

HeyGen launches LiveAvatar for real‑time face‑to‑face AI conversations

HeyGen introduced LiveAvatar, a hyper‑real, interactive avatar system that talks back in real time with synced facial motion and gestures, aimed at creators and enterprises building live dialog, support, and talent workflows launch thread.

The pitch highlights expressive presence rather than canned reads, with early power users like Reid Hoffman testing live use cases launch thread. For teams doing live explainers, virtual hosts, or multilingual office hours, this reduces booth time and unlocks “always‑on” talent without a green room.

StepFun open‑sources Step‑Audio‑EditX (3B) for expressive, editable speech

StepFun released Step‑Audio‑EditX under Apache 2.0: a 3B‑parameter model that does zero‑shot TTS, multilingual voice editing, and control over 10 paralinguistic cues (breaths, laughs, whisper, sigh) via text prompts—deployable on a single GPU feature list. It’s positioned as the first open LLM‑grade audio editor that can iterate emotion and style, not just read lines project intro.

For filmmakers and voice leads, this means you can retime or change tone without re‑recording, keep character continuity across languages, and prototype alternate reads inside your pipeline.

Higgsfield Recast adds full‑body swap with voice cloning and multilingual dubbing

Higgsfield is pushing character presence beyond faces: Recast now performs one‑click full‑body swaps with gesture tracking, lip‑sync, voice cloning, and dubbing across multiple languages, with 30+ character presets for fast creative tests feature explainer. Creators are already stacking Face Swap for identity, Recast for performance and voice, then animating in Veo—speeding up music videos and short narratives creator tutorial.

If you need consistent on‑camera personas and localized dialogue without a studio day, this glues identity, motion, and speech into one editable pass.

🎨 Today’s style drops and prompt recipes

Fresh looks for illustrators and art directors: fluid anime prompts, pencil‑sketch sref, pop‑up diorama book style, and MJ V7 collages. Excludes Nano Banana 2 (feature).

Fluid anime: a reusable watercolor prompt for 2D scenes

Azed shares a complete “fluid anime” text prompt that yields soft watercolor linework, airy brush textures, and gentle two‑color blends for dreamlike 2D shots Prompt recipe. The post includes multiple examples and ALT prompts to riff on background, palette, and subject.

Children’s‑book diorama sref (--sref 3809639342) for pop‑up looks

A new Midjourney sref combines narrative children’s illustration with a 3D paper‑stage diorama feel—ideal for storybooks, stop‑motion vibes, or pop‑up spreads Style reference. Expect layered cardstock, cut‑edge shadows, and warm, toy‑scale lighting.

Midjourney pencil sketch sref (--sref 3127145650) lands

Artedeingenio publishes a pencil‑sketch style reference for Midjourney that produces clean graphite portraits with visible strokes, fine shading, and prop‑styled pencils in frame Style reference. It’s a fast way to get consistent hand‑drawn looks across characters.

MJ V7 collage look: sref 3848635586 + stylize/chaos recipe

Azed posts a Midjourney V7 collage recipe using --sref 3848635586 with --stylize and --chaos params to get soft painterly editorial panels in coordinated palettes Collage recipe. It’s a handy preset for moodboards or multi‑panel art direction.

“QT your mask” sparks geometric mask portrait designs

The mini‑prompt invites creators to post masked characters; standouts feature angular folded‑fabric and sculptural forms that double as silhouette anchors in portraits Mini challenge. Community QTs show riffs from metallic braincaps to sequined headpieces Community reply.

Neo‑noir graphic style proves animation‑ready in Grok Imagine

Following up on Neo‑noir style, today’s clip shows the same stark comic look holding up in motion—rain, neon, and silhouette reads remain crisp in Grok Imagine Video demo. This makes the sref a solid pick for moody interludes and logo beats.

🤖 Assistants that actually do the work

Mobile and generalist helpers for creators: Perplexity Comet on Android controls the browser on command; Runable pitches an all‑purpose agent with 10k free credits. Excludes Nano Banana 2 (feature).

Perplexity Comet on Android now drives the mobile browser

Perplexity’s Comet Assistant can now navigate and act inside the Android browser on command, with invites rolling out to select users Invite note. Following Android beta tests, today’s clips show Comet executing voice‑driven search and page actions on phone and tablet.

Why it matters: this moves mobile assistants from “answer engines” to doers—useful for creators on the go who need quick research, link gathering, and task hand‑offs without touching the screen.

Runable debuts a general agent with 10k free credits

Runable announced a general AI agent that builds slides, sites, reports, podcasts, images, and videos, with 10,000 free credits offered for shares and early‑access signups Launch details.

For small creator teams, this is a single entry point to spin up multi‑format assets without bouncing across tools; the question is whether it handles real briefs with source links, revisions, and brand style constraints.

📺 Brands lean into AI storytelling

Progressive runs an AI‑animals spot in Monday Night Football, Fiat Toro pairs real footage with AI worlds, and Coca‑Cola revives its 1995 holiday ad via AI pipelines—case studies for agency teams. Excludes Nano Banana 2 (feature).

Coca‑Cola revives 1995 holiday ad with AI

Coca‑Cola’s creative lead says the brand re‑made its classic “Holidays Are Coming” in < 1 month using AI pipelines alongside partners like Silverside AI, framing it as an “open‑source” model of co‑creation across teams creative model note.

For agency producers, the number matters: a year‑long cycle cut to weeks means you can ship seasonal work on time with modern AI workflows while keeping legacy look‑and‑feel.

Corona Cero posts 440% lift from AI‑informed Olympic spot

AB InBev’s Corona Cero built “For Every Golden Moment” in three weeks, blending AI language analysis with behavioral psychology; the campaign drove a 440% sales increase and is expanding for Winter 2026 case study.

Why it matters: AI can surface universal motivators (esteem, belonging, shared success) to guide story beats, while human teams craft the emotion—useful for briefs that need measurable lift fast.

Bayer tests agentic ad stack, gains 6% new sales

Bayer integrated a custom Chalice AI algorithm inside Snowflake to keep control over first‑party data and drive media decisions, reporting a 6% lift in Claritin sales among new customers case study.

For brand engineers, this signals a shift toward composable, privacy‑aware ad stacks where AI agents plan and buy against secure data rather than shipping it to third‑party black boxes.

Fiat Toro mixes AI universes with real footage

Fiat Brazil launched “New Fiat Toro. Makes any prompt look real.”—pairing AI‑generated worlds with live‑action truck shots, spanning TV, OOH, influencer pushes and football broadcasts on Globo campaign details.

The takeaway for creatives: use AI scenes as a creative language, not a crutch—slotting them beside live footage to sell a tangible product without losing authenticity.

Progressive runs AI-animals spot on Monday Night Football

Progressive aired a 30‑second “Drive Like an Animal” ad featuring AI‑generated lion, hyenas, sloth and deer personas, with a disclosure that all animal and vehicle imagery was created using AI; Flo reappears as an AI llama campaign recap.

For brand teams, this shows broadcast‑grade acceptance of AI visuals when clearly labeled, and a workable hybrid approach that keeps a familiar brand voice while testing new production methods.

🏆 Reasoning model leaderboard watch

Writers and directors watch the benches: polaris‑alpha (rumored GPT‑5.1) tops creative‑writing Elo; Kimi K2 trends on HF; GPT‑5.1 timing rumors resurface. Excludes Nano Banana 2 (feature).

Polaris‑alpha tops Creative Writing; Kimi K2 trends on HF

The Creative Writing v3 leaderboard now shows “polaris‑alpha” in first with Elo ≈1747.9 (rubric score 83.00), ahead of Claude Sonnet and o3; the post suggests it may map to GPT‑5.1, while Kimi K2 Thinking also appears on the chart with Elo ≈1599 Benchmarks chart. Following up on K2 metrics strong agentic scores, Kimi K2 is also the #1 trending model on Hugging Face, signaling fast open‑source adoption among builders HF trending.

For creatives, this hints at sharper long‑form style control and pacing from frontier models, with open‑source options closing the gap. Treat Elo as directional (pairwise, evolving), but the separation at the top is notable for narrative tasks and script polish.

🎬 Calls, challenges, and big screens

Opportunities to ship work: OpenArt’s Music Video Awards put community reels on Times Square; Higgsfield’s $100K Global Teams Challenge continues this month. Excludes Nano Banana 2 (feature).

Higgsfield $100K Global Teams Challenge opens

Higgsfield kicked off a month‑long Global Teams Challenge with a $100,000 prize pool, accepting team submissions through Nov 30 and awarding 26 teams across a grand prize, five categories, and 20 Higgsfield Choice awards. The rules page outlines judging on creativity, teamwork, technical quality, and audience engagement, with Team Leads responsible for prize distribution Challenge thread and Challenge rules.

For AI creators, this is a concrete brief plus real budget—use their video tools (Face Swap, Recast, lipsync) to ship polished pieces fast and rally collaborators.

OpenArt MVA Times Square showcase ongoing; submit by Nov 16

OpenArt says creator reels are still rolling on Times Square screens, and submissions for the Music Video Awards (theme: Emotions) remain open until Nov 16—following up on Times Square placement earlier this week. The program page confirms timing and criteria; winners get prominent billboards plus community spotlight Times Square reel and Program page.

If you have a cut nearly ready, lock the song and narrative, export a tight 20–60s canvas, and submit in the next few days to catch the window.

🏗️ Megascale AI datacenters and footprint

Threads detail massive AI builds (e.g., “Stargate Abilene” scale, GW power, water usage) and climate impacts vs grid. Useful context for creatives planning long‑run cloud costs/availability. Excludes Nano Banana 2 (feature).

OpenAI’s $32B ‘Stargate Abilene’ and a 20–30 GW US AI grid by 2027

A detailed buildout note pegs OpenAI’s “Stargate Abilene” at roughly $32B, with >250× GPT‑4 compute and Seattle‑scale power, while US AI datacenter load could hit 20–30 GW by late‑2027 buildout thread. It cites 17.4B gallons of AI water use in 2023, siting bias to the US Midwest/South for power and permitting, and near‑term reliance on gas turbines with diesel backup.

Why this matters: creatives will feel it in render queues, burst capacity, and pricing. Expect region‑specific availability and energy‑indexed costs as platforms chase stable power and cooling.

- Route heavy jobs to regions with surplus renewables and cooler climates.

- Plan for scheduling and retries; avoid single‑region dependence during peaks.

- Track vendors’ water and energy disclosures; some will add “green” routing toggles.

AI data centers’ footprint: 8,930 tons CO₂ per model and water strain

A new explainer for non‑infra folks frames the externalities: training a single large model can emit up to 8,930 tons of CO₂, with total AI infra spend projected up to $7.9T by 2030; it flags electricity and water stress in already constrained regions and suggests mitigations like verifiable renewables, colder‑climate siting, and smaller, efficient models carbon footprint post.

For studios and freelancers, clients will ask for sustainability posture. Expect labels, region flags, and “green lane” presets in tools.

- Prefer colder, hydro/wind‑rich regions for long renders.

- Document your project’s region choices when pitching enterprise work.

Nvidia’s Jensen Huang flags energy subsidies as China advantage in AI

Nvidia’s CEO told the FT that China’s subsidized power and looser regulation let it stand up AI datacenters faster, while stressing China is only “nanoseconds” behind the US and the US can still win by pushing harder and securing developers FT interview. The subtext is simple: energy price and permitting speed now gate capacity as much as GPUs.

Creators should hedge with multi‑region pipelines. If US states tighten rules or prices spike, cross‑region failover will keep delivery dates intact.

🧪 R&D to watch: camera logic and transparency

Fresh papers/tools relevant to creators: reference‑based camera cloning for I2V/V2V, limited but scaling introspection in LLMs, and DeepMind’s AlphaEvolve approach to discovery. Excludes Nano Banana 2 (feature).

AlphaEvolve uses evolutionary code search to discover new math results

Google DeepMind, working with Terence Tao, introduced AlphaEvolve—an evolutionary discovery agent that writes and tests code to search vast spaces and produce novel constructions. In trials across 67 optimization and geometry problems, it rediscovered known results and found new ones (e.g., finite‑field Kakeya in 3D–5D, an improved 3D sofa shape with verified volume >1.81), then routed candidates into proof tooling for verification project overview.

For creators and toolmakers, this shows a path to agentic “search‑then‑build” loops: instead of prompting one shot, let an agent evolve many candidate pipelines (prompts, parameters, compositors) and select by metrics—useful for camera plans, edit graphs, or animation rigs.

Claude’s self‑reporting on injected thoughts works ~20% at best

Anthropic researchers report that Claude Opus 4.x can sometimes notice when specific “concept” activations are injected into its hidden states, but only in about 20% of cases under optimal settings. The team also finds post‑training improves this introspection and that models can distinguish injected thoughts from input text, yet failures remain the norm—so treat self‑reports as hints, not ground truth study recap.

Why this matters for creatives: reliability governs whether you can trust agent logs, safety flags, or “reasoning traces” inside production assistants. The takeaway is simple. Keep human review in the loop for editorial, safety, and crediting workflows, and log prompts/outputs rather than relying on model‑declared intent.

⚖️ Trust, consent, and AI visuals

Public trust in AI imagery remains shaky in news contexts, and a report alleges xAI required staff biometrics to build a virtual girlfriend. Practical guardrails matter for client work. Excludes Nano Banana 2 (feature).

WSJ: xAI allegedly used staff biometrics to train “Ani” companion

Internal docs cited by WSJ reportedly asked xAI employees to grant a perpetual license to their faces and voices to help train “Ani,” a Grok companion included in the $30/month SuperGrok tier. For creatives and studios, this lands squarely on consent, releases, and data-rights workflows when training or fine‑tuning identity models. allegations thread

Study: Audiences accept AI art in news graphics, reject it as evidence

A Digital Journalism study (N=25 across four focus groups) found people are okay with AI imagery for illustration, charts, and satire—but push back on AI visuals as proof in political/conflict reporting; a “labeling paradox” remains as many can’t reliably tell real from synthetic. If you publish mixed‑media stories, maintain clear labels and provenance notes. study summary

Progressive airs AI‑generated animals ad with on‑screen disclosure

Progressive’s 30‑second “Drive Like an Animal” spot features AI‑generated creatures and opens with an explicit AI disclosure, then brings back mascot Flo as an AI llama. For brand teams, this is a live example of disclosure formatting in broadcast contexts and a bar for client approvals. campaign recap

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught