Gemini Enterprise launches with no‑code agents – 1.3 quadrillion tokens, 50% AI‑written code

Executive Summary

Google Cloud rolled out Gemini Enterprise, a unified agent platform that puts a single chat front‑end on top of a no‑code workbench for building and orchestrating workplace agents. It matters because this isn’t another chatbot; it’s a way to wire real business logic into tools people already use. Google says it now processes 1.3 quadrillion tokens per month across its surfaces, and roughly 50% of Google’s code is AI‑written then reviewed and accepted by engineers — a signal the company trusts its own stack at scale.

Early demos show practical workflows: a meeting‑scheduling agent that negotiates calendars and a Marketing Media Agent that pulls assets, drafts copy, and routes approvals. The pitch is breadth of integrations — Google Workspace, Microsoft 365, Salesforce, SAP — plus prebuilt and custom agents that can chain actions across those systems without custom glue code. The “Gemini at Work” livestream backs it up with end‑to‑end runs rather than slideware, which is exactly what IT leaders want to see before rolling agents into production.

Meanwhile, ignore the Veo 3.1 rumor mill until Google DeepMind or Gemini posts official notes; today’s real news is enterprise agents moving from deckware to deployment.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

Veo 3.1 rumor check: exclusivity, lengths, pricing

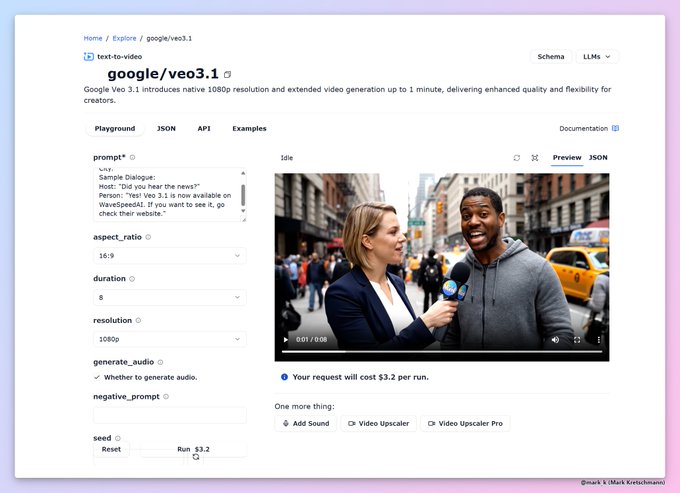

Creators debunked Veo 3.1 hype: “exclusive” access, 30–60s claims, and a $3.2/8s screenshot. Google’s Logan Kilpatrick said “not true,” and suspect pages vanished—don’t plan shoots until details are official.

🚨 Veo 3.1 rumor check: exclusivity, lengths, pricing

High‑volume cross‑account scrutiny of Veo 3.1 claims today—exclusivity, 30–60s lengths, and pricing screenshots—met with debunks and removed pages. Creator takeaway: wait for official sources before planning deliverables.

Logan Kilpatrick denies Vadoo’s Veo 3.1 feature claims

Google’s Logan Kilpatrick publicly refuted a viral Vadoo AI post listing Veo 3.1 features (30‑second videos, native audio, 1080p), stating “This is not true” Debunk reply. That aligns with creator cautions of “No Veo‑3.1 Today” until official word lands Creator roundup, following up on Prompt blueprints which covered actual Veo‑3 Fast guidance.

Hold deliverables that depend on 3.1‑specific specs until Google DeepMind or Gemini surfaces official release notes.

Community pushes back on “Veo 3.1 exclusively on Vadoo” boast

A Vadoo AI teaser claimed Veo 3.1 is “coming soon — exclusively on Vadoo AI,” prompting immediate skepticism from creators who doubted a single‑platform exclusive for a Google model Exclusivity claim. Others noted that multiple hosts are hinting at access, which contradicts any exclusive arrangement Follow‑up note.

Creator takeaway: treat exclusivity banners as marketing until Google names official partners.

Pulled page showed $3.20 for an 8‑second Veo 3.1 run before 404

Screenshots circulated of a third‑party console pricing “Veo 3.1” at $3.20 per 8‑second, 1080p run, but the page later returned a 404, casting doubt on the quote’s validity Pricing screenshot.

Until pricing is published by Google or named resellers, don’t lock budgets to leaked per‑run costs.

Higgsfield page mislabeled Veo 3.1 as Open Sora with a 2025 start date

A Higgsfield webpage described “Veo 3.1” as “the next major version of Open Sora” and listed “Users can start generate Veo 3.1 starting on September 1, 2025,” drawing criticism for mislabeling the Google model and posting an incongruent timeline Higgsfield page. The call‑out was amplified with a follow‑up pointing to the mismatch Follow‑up mention.

If you see “Veo 3.1” paired with non‑Google branding or future‑dated availability, assume placeholder content.

Platforms tout 30–60s Veo 3.1 clips, but no official confirmation

Within 24 hours, multiple generators hinted that Veo 3.1 will produce 30–60 second videos, while others cited an 8‑second default; Google has confirmed none of these durations Length claims thread. A separate creator asserted 8s defaults as part of an unverified “Veo 3.1” demo Made with claim.

Planning tip: assume today’s shipped Veo durations (e.g., Veo‑3 Fast) until Google publishes 3.1 specs.

Unverified “made with Veo 3.1” posts surface with app screenshots

Creators shared videos labeled “made with Veo 3.1” plus UI screenshots as “proof,” but their provenance is unclear and they arrived ahead of any official release notice Made with claim. A separate “app screenshot” was posted to legitimize the claim, but its details remain unverifiable App screenshot.

Treat 3.1 badges and UI grabs as speculative until Google or Gemini documents features and access.

🎬 Sora 2 prompt kits and preset workflows

Fresh creator‑ready prompt frameworks and presets centered on Sora 2, from Freepik’s multi‑format recipes to Higgsfield’s viral, UNLIMITED preset packs and community promos. Excludes Veo 3.1 rumor (feature).

Freepik publishes seven Sora 2 prompt templates with working examples

Freepik rolled out a creator-ready set of Sora 2 prompt frameworks covering marketing spots, UGC, cinematic intros, anime scenes, CCTV cuts, horror trailers, documentaries, and ASMR—with clips that actually match the prompts. They also share a reusable structure to adapt across formats (“A [type of video] showcasing [subject]… Include [audio/VO]…”) so creatives can iterate fast Examples thread, and apply it consistently to each genre example Structure tip.
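The reusable structure quoted above lends itself to a small fill-in helper. The sketch below is illustrative only: the function name, the slot names, and the sample values are assumptions, and it covers just the visible slots of the quoted structure, not the parts elided by “…” in the original.

```python
def build_prompt(video_type: str, subject: str, audio: str) -> str:
    """Fill the visible slots of the quoted structure.

    Hypothetical helper: only the three slots shown in the quote are
    modeled; anything elided in the original structure is left out.
    """
    return f"A {video_type} showcasing {subject}. Include {audio}."


# Sample values are made up for illustration, not taken from Freepik's thread.
formats = [
    ("cinematic intro", "a ceramic mug on a windowsill", "soft piano"),
    ("horror trailer", "an abandoned lighthouse", "a whispered voice-over"),
]

prompts = [build_prompt(*slots) for slots in formats]
for prompt in prompts:
    print(prompt)
```

Keeping the slots as plain arguments makes it easy to swap one genre for another while the sentence frame stays fixed, which is the point of the shared structure.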

Higgsfield Trends adds 25+ cinematic Sora 2 presets with limited‑time credits promo

Higgsfield is pushing 25+ cinematic Sora 2 presets organized by use case and social format, paired with a 9‑hour “share 4 replies + RT” offer for 250 credits via DM, with the presets pitched as UNLIMITED to use for now Preset promo. Following up on unlimited access, the site hub centralizes the presets and access tiers for creators looking to batch‑produce shorts and reels Higgsfield homepage.

10 Sora 2 prompts with outputs and alt‑text recipes

A creator thread packages ten “viral‑style” Sora 2 prompts—each with a finished clip and the exact prompt in ALT text—spanning slapstick fails, conspiracies, cooking mishaps, and gym stunts. It also explains how to get unlimited Sora 2/Pro access through Higgsfield if you want to replicate the results at scale Examples thread, with an affiliate on‑ramp for Ultimate/Creator plans Higgsfield promo page.

SJinn Agent ships an ‘unlimited duration’ Sora 2 template with image/video inputs

SJinn is promoting an ‘Unlimited duration Sora 2’ template where you feed an image or video plus a duration to generate longer sequences—handy for extending shots or chaining beats inside one run Unlimited duration note, with a ready‑to‑use workflow page for creators to try now Sora 2 template.

WaveSpeedAI offers Sora 2 text‑to‑video runs with simple setup

WaveSpeedAI is hosting Sora 2 generation as a straightforward text‑to‑video flow pitched as “no code, no complicated prompts,” with synchronized audio and a clean run UI for quick tests or social‑ready clips Sora 2 model page.

📼 Grok Imagine nails anime, horror OVA, and toons

Creators highlight Grok Imagine’s speed and stylistic control—especially for 80s/90s OVA‑style anime, horror moods, and even inline dialogue‑driven scenes. Excludes Veo 3.1 rumor (feature).

Creators say Grok Imagine outshines Sora 2 for anime, horror OVA, and toons

Artists are gravitating to Grok Imagine for stylized animation, calling it more compelling than Sora 2 for late‑80s/90s OVA anime, horror vibes, and even Saturday‑morning‑style cartoons Creator comparison, Anime endorsement, Horror praise, Cartoon praise. One creator also praised its ability to add psychological nuance to dark scenes (a broken‑mirror moment) Depth comment.

New Grok video model is producing ‘wild videos’ in seconds

Grok’s latest model is described as “crazy fast,” with people generating complex videos in seconds and customizing scene, style, and voice on the fly Speed and controls, following up on upscale synergy that lifted realism. Community sentiment continues to trend positive Grok video praise.

Inline dialogue in Grok prompts enables voiced micro‑scenes

Creators highlight that you can embed dialogue directly in Grok prompts to generate charming, voice‑acted scenes without extra tooling Dialogue tip. They also report per‑shot control over scene, style, and voice while keeping generation speed high Speed and controls.

OVA-era anime sref 4292182277 supercharges Grok workflows

Looking to nail the golden‑age OVA look? @Artedeingenio shares Midjourney --sref 4292182277 (late‑80s/90s dark OVA with Wicked City/Akira influences) used on a Grok animation that went viral—he says it “works amazingly well with Grok Imagine” Style reference.

Found‑footage and liminal ‘poolrooms’ tests take off in Grok

Filmmakers are exploring Grok’s gritty aesthetics via found‑footage experiments and liminal ‘poolrooms’ looks, pushing texture, lighting, and camera realism in short tests Poolrooms test, Found‑footage test.

Image‑conditioned Grok runs show strong fidelity for designers

Passing a still image into Grok Imagine yields faithful, stylistically consistent motion built around that frame, useful for storyboard‑to‑shot workflows Image reference. See an example result on Grok’s gallery Grok post.

Medieval anime sref 2007995603 lands for Grok stylization

A second style recipe drops—Midjourney --sref 2007995603—for epic medieval anime with painterly shading reminiscent of Vinland Saga/Castlevania; the author notes it pairs cleanly with Grok Imagine for cohesive motion Medieval style ref.

🎥 Beyond Sora: Wan 2.5, Kling 2.5, Hailuo, Veo‑3 Fast

Non‑Sora video engines showed strong motion and fidelity tests today—Wan 2.5 detail retention, Kling 2.5 action prompts, Hailuo story shorts, and a Veo‑3 Fast storyboard on Gemini. Excludes Veo 3.1 rumor (feature).

ComfyUI’s WAN Alpha workflow lands for layered RGBA video with a 2‑minute guide

ComfyUI kicked off a short‑form tutorial series with WAN Alpha, showing creators how to build layered, RGBA video comps directly in node graphs Tutorial thread. The how‑to video is live for quick onboarding YouTube tutorial, alongside official setup docs from the WAN Alpha repo GitHub repo and a one‑click import workflow JSON for immediate use Workflow JSON. The team confirms the full pipeline is usable in ComfyUI today Pipeline status.

Kling 2.5 promptcraft: wet‑road dolly‑in crash with debris and heavy rain

A fresh Kling 2.5 prompt demonstrates an intense, rapid dolly‑in on a red sports car losing traction on a slick mountain road—metal crunch, glass shatter, heavy rain, and headlights on wet asphalt all specified for cinematic fidelity Crash prompt. Following up on trench shot that showed stabilized tracking, this setup gives action directors a blueprint for choreographing chaos with weather, debris, and impact timing baked into the text. A repost is boosting circulation among prompt‑hunters Prompt repost.

Kling 2.5‑Turbo Pro pushes cliff‑edge MTB sequence with designed audio on Haimeta

Creators are stress‑testing Kling 2.5‑Turbo Pro with a high‑stakes MTB ride along a razor‑thin alpine ridge, calling out precise moment beats (near‑vertical chute at 3s, cliff‑edge skid at 6s) and layered sound design from breathing to braking to a final gasp MTB prompt. The prompt’s granular timing notes suggest Kling can respect temporal structure well enough for trailer‑style micro‑arcs without post‑edits.
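One way to keep timing notes like these organized is to hold the beats as data and render them into prompt text. This is a minimal sketch under stated assumptions: the `Beat` class, the `render_timed_prompt` function, and the “At Ns:” line format are hypothetical, and nothing here is confirmed as a format Kling itself expects. The beat timings and audio cues are the ones named in the paragraph above.

```python
from dataclasses import dataclass


@dataclass
class Beat:
    at_seconds: int  # when the moment should land in the clip
    action: str      # what happens at that moment


def render_timed_prompt(scene: str, beats: list[Beat], audio: list[str]) -> str:
    """Render a scene line, time-ordered beats, and an audio line as plain text."""
    lines = [scene]
    for beat in sorted(beats, key=lambda b: b.at_seconds):
        lines.append(f"At {beat.at_seconds}s: {beat.action}")
    if audio:
        lines.append("Audio: " + ", ".join(audio))
    return "\n".join(lines)


# Beats are deliberately listed out of order to show the sort.
beats = [Beat(6, "cliff-edge skid"), Beat(3, "near-vertical chute")]
prompt = render_timed_prompt(
    "MTB ride along a razor-thin alpine ridge.",
    beats,
    ["breathing", "braking", "final gasp"],
)
print(prompt)
```

Sorting by timestamp means beats can be added in any order while the rendered prompt always reads chronologically.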

Veo‑3 Fast on Gemini Flow gets shot‑by‑shot spec prompts with audio cues

A creator shared a tightly specified Veo‑3 Fast prompt in GeminiApp Flow for an 8‑second, hyper‑real elemental scene—single continuous shot, slow push‑in, emissive fire lighting, GI notes, and a staged audio build with diegetic whooshes and a cello tone Gemini Flow spec. While results weren’t attached, the spec shows how Veo‑3 Fast can be directed like a micro‑storyboard, pairing camera grammar with sound design inside the text.

Wan 2.5 preserves tiny mirror reflections in high‑speed car shots

Alibaba’s Wan 2.5 is drawing creator praise for realism under motion—one test highlights convincing speed plus a live, changing rear‑view‑mirror reflection that sells the shot Rearview detail. Another clip underscores nuanced fur and cape motion in a spooky‑cute vignette, pointing to strong cloth and secondary animation modeling Cloth and fur. For filmmakers, those micro‑signals reduce post fixes and help practical‑looking car POVs land without comp work.

Hailuo Story Week spotlights daydream‑to‑eye‑roll micro‑storytelling

OpenArt’s Hailuo Story Week surfaces a compact narrative: a boy’s dreamy crush culminates in a comedic eye‑roll, showing how Hailuo can carry beats and payoff in seconds Story short. For storytellers, it’s a simple proof that character intent and reaction read cleanly enough for social‑length shorts without hand‑keyframed animation.

Side‑by‑side: WAN 2.5 vs Veo 3 vs Sora 2 on one prompt

WaveSpeedAI showcases a same‑prompt comparison across WAN 2.5, Veo 3, and Sora 2, giving creatives a clean look at motion, texture, and audio differences in a single pass Model showdown. For deeper dives, the platform lists model pages with options like aspect ratios, durations, and synced audio for WAN 2.5 WAN 2.5 page and Sora 2 Sora 2 page, helping teams choose engines by price‑to‑quality tradeoffs.

🧠 Ray3’s directable facial performance

Luma’s Ray3 thread focuses on character expressions—from subtle eye shifts to panic and tears—with cinema‑grade light/geometry fidelity through multiple named shorts. Excludes Veo 3.1 rumor (feature).

Ray3 showcases directable facial performance across eight cinema‑grade shorts

An eight‑short Ray3 thread spotlights directable eye shifts, breath, and tears with steady identity and film‑grade light/geometry fidelity feature thread, following up on face demo lifelike close‑up. For filmmakers and storytellers, these tests suggest close‑up performances without mocap that hold together across cuts and lighting.

Clips include Fearful Traveler for micro eye darts and tension Fearful Traveler clip, Panicked Mountain Grip for panic and breathing nuance Panicked Mountain Grip, Crying Child for believable tear formation and flow Crying Child clip, Captivated Astronaut for gaze tracking under helmet lighting Captivated Astronaut, Contemplative Alien for subtle curiosity beats Contemplative Alien, Exhausted Breathing for fatigue micro‑motions Exhausted Breathing, and Smile at Golden Hour for warm skin shading and expression Smile at Golden Hour.

🖌️ Style refs and prompt packs for visuals

A day rich in visual recipes: bioluminescent coral‑tech pack, Midjourney v7 pop‑art params, moody workshop srefs, and neon‑noir portrait vibes—useful for illustrators and art directors. Excludes Veo 3.1 rumor (feature).

Bioluminescent Coral‑Tech prompt pack: glowing coral/anemone style for portraits and props

Azed shares a versatile “Bioluminescent Coral‑Tech” recipe—translucent forms, internal glow currents, and anemone textures—suited for objects, masks, hands, and skulls in black‑backdrop hero shots Prompt thread.

The pack’s ALT examples show consistent materials language (pearlescent shells, branching veins) that art directors can re‑use across brand sets.

Epic medieval anime sref (--sref 2007995603) lands with painterly armor and cathedral light

A new Midjourney style reference (2007995603) brings Vinland Saga/Castlevania energy—ornate plate, cross emblems, and painterly shading for heroic fantasy Style ref drop, following up on Style release that focused on shōnen‑anime energy.

Strong fit for title sequences, key art, and character sheets that need period grit without losing anime clarity.

Late‑’80s/’90s OVA anime style ref (--sref 4292182277) with clear lineage

Artedeingenio details an OVA‑era anime sref (4292182277) with influences from Wicked City, Vampire Hunter D, Demon City Shinjuku, Cyber City Oedo 808, Angel Cop, Golgo 13, and Akira—ideal for darker adult animation vibes Style references.

Creators note the style pairs well with Grok Imagine for on‑model character motion.

Moody workshop sref 2955977768: the “Young Inventor” lookbook

James Yeung showcases a cohesive workshop series driven by sref 2955977768—goggles, sparks, oily surfaces, and volumetric haze for steampunk‑adjacent frames Series gallery.

Great for kit‑bashing maker spaces or retro‑tech narratives with consistent palette and lighting.

Midjourney v7 pop‑art collage shares exact params and style ref

Azed posts a pop‑art collage look with the complete parameter block for reproducibility: --chaos 17 --ar 3:4 --exp 15 --sref 2425425327 --sw 500 --stylize 500 Params post.

The sref/chaos combo yields ink‑smear accents, newspaper textures, and neon overlays—useful for poster comps and title cards.
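For anyone reusing that parameter block across several prompts, it can be kept as data and rendered on demand. The sketch below is a convenience wrapper, not a Midjourney API: the function names and the sample subject text are assumptions, while the flag names and values are copied from the block quoted above.

```python
def render_params(params: dict) -> str:
    """Render flag/value pairs as '--flag value', preserving insertion order."""
    return " ".join(f"--{name} {value}" for name, value in params.items())


def with_params(subject: str, params: dict) -> str:
    """Append the rendered parameter block to a prompt subject."""
    return f"{subject} {render_params(params)}"


# Values taken from the shared parameter block.
pop_art = {
    "chaos": 17,
    "ar": "3:4",
    "exp": 15,
    "sref": 2425425327,
    "sw": 500,
    "stylize": 500,
}

suffix = render_params(pop_art)
print(suffix)

# The subject text here is a made-up example.
print(with_params("pop-art collage portrait", pop_art))
```

Holding the flags in one dictionary keeps a poster series visually consistent, since every prompt shares the same style reference and weights.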

Neonnoir × Caravaggio portrait recipe brings dramatic edge‑light and deep blacks

Bri Guy’s “Neonnoir + Caravaggio” study pushes chiaroscuro into contemporary neon, yielding punchy lips/skin against negative space—an easy plug‑in mood board for character posters Style study.

Use warm key plus hard rim, then let the background collapse to black for instant theatricality.

“QT your tears” surreal face: cloud‑tears into melt‑lines as a compositing motif

Azed’s surreal portrait swaps teardrops for cloudscapes that dissolve into vertical glitch‑lines—handy as a repeatable motif for music visuals and title interludes Concept image.

Blend sky elements in facial planes, then drive a directional line‑dissolve for emotional transitions.

🧩 ComfyUI: WAN Alpha layered video workflow

Hands‑on pipeline content for layered RGBA video: quick tutorial, full YouTube walkthrough, official GitHub, and an import‑ready JSON workflow. Excludes Veo 3.1 rumor (feature).

ComfyUI debuts WAN Alpha layered RGBA video workflow with tutorial, GitHub, and one‑click JSON

ComfyUI rolled out WAN Alpha for layered RGBA video, pairing a fast “Get Comfy With Comfy” tutorial with import‑ready assets and official repo support so creators can build composited shots end‑to‑end inside ComfyUI. The quickstart emphasizes a 2‑minute path to first results and notes the full pipeline is available now Tutorial thread, YouTube tutorial, Pipeline availability.

- Official support and setup land via WeChatCV’s repo with an “official ComfyUI version” section for WAN Alpha GitHub repo.

- Comfy‑Org published the Get_Comfy_With_Comfy_Wan_Alpha.json workflow for one‑click import into your node graph Workflow JSON.

📱 Creator apps, model testbeds, and bots

Consumer‑ready creation tools expanded: Krea’s new iOS app, fal’s Sandbox comparisons and Discord bot, plus a marketer‑friendly Text‑to‑Video utility. Excludes Veo 3.1 rumor (feature).

fal debuts Sandbox to run the same input across many models and compare speed and cost

fal introduced Sandbox, a side‑by‑side model testbed where creators can feed one input through multiple models (text‑to‑image, image‑to‑video, etc.) and directly compare quality, latency, and price per run Sandbox launch. Public share pages automatically bundle model details, prompt, and per‑run cost for easy A/B reviews and portfolio proofs shared runs.

Krea launches iOS app with presets, editor, video models, and synced Assets

Krea rolled out a free iOS app that brings its desktop creation flow to mobile, including style presets, a prompt box, an image editor, access to all Krea video models, and an Assets page to manage generations iOS release. The team also cautions users to install the legit listing (developer shown as “Krea AI”) amid App Store clones, and linked the official App Store page for safe download App Store page.

ComfyUI posts WAN Alpha quick‑start for layered RGBA video, with official repo and workflow

ComfyUI kicked off a short “Get Comfy With Comfy – WAN Alpha” series showing how to build layered, RGBA video pipelines in minutes tutorial thread. Creators can pull the official integration and a ready‑to‑import workflow directly from the WAN‑Alpha repository and docs GitHub repo.

fal launches Discord bot with 5 free daily gens for video and image

The new fal bot on Discord gives creators five free generations per day, offering text‑to‑video and image‑to‑video with native audio via the open‑source Ovi model, plus text‑to‑image powered by Hunyuan Image 3.0 Discord bot launch. Join link is open for anyone to start generating inside the community server Discord invite.

SJinn template advertises unlimited‑duration Sora 2 runs with image/video inputs

SJinn surfaced a template that accepts image and video inputs plus a duration field to generate Sora 2 videos beyond typical length constraints, with a public template link for immediate trials template page link. This extends the growing third‑party Sora ecosystem, following up on Higgsfield rollout of unlimited access plans; SJinn’s tweet also claims “Unlimited duration Sora2” support support note.

Pictory pushes script/blog → video automation for marketers

Pictory highlighted its text‑to‑video tool that converts scripts, blogs, and product copy into edited videos with voice and music in minutes—aimed at social and product marketing teams product post. Full feature details and onboarding are outlined on the product page product page.

🎟️ Creator meetups, panels, and showcases

Live community touchpoints from SF Tech Week to festival panels and weekly shows; good for networking and trend‑spotting. Excludes Veo 3.1 rumor (feature).

SF Tech Week AI Brunch packs the house; Kling, Together, Runware share roadmaps

Runware’s AI Brunch drew a full room mid‑event with talks from Kling, TogetherCompute, and Runware, giving creators hands‑on views into workflows and tools Event photos. Following up on SF Tech Week panel (spatial design + AI), this brunch kept the week’s momentum with practical demos and networking.

AI × Creativity at Techweek: Hedra’s panel maps where generative tools are heading

Hedra Labs convened a Techweek panel with @mjlbach, @burkenstocks, and @venturetwins to discuss the near‑term trajectory of generative tools for creators, from style control to production pipelines Panel photos.

ElevenLabs Summit adds Marc Andreessen and Jennifer Li to Nov 11 lineup

The Nov 11 ElevenLabs Summit, focused on voice‑first interfaces, expanded its speaker list with Marc Andreessen and Jennifer Li, signaling strong crossover interest for voice creatives and audio toolmakers Speaker additions. Registration and agenda details are live for the invite‑only event Summit site.

Infinity Festival’s AI Matters screening series brings creators and technologists on stage

Infinity Festival 2025 hosted its "AI Matters Screening Series," with panelists sharing real‑world production lessons and tool stacks for film and media teams Panel photo. For filmmakers, the session offered current practices rather than hype.

Agentic AI Hack Night at Shack15 offers $35k in prizes and an open invite

Tomorrow’s Agentic AI Hack Night at Shack15 is open‑door with $35k in prizes, giving builder‑creators a chance to prototype agent‑powered media tools and pitch in person Hack night invite.

Augmented Conversation hosts Friday 12pm ET chat with AI Massive’s Maxescu

The Augmented Conversation series returns Friday at 12pm ET with guest @maxescu (AI Massive), aimed at creatives exploring practical gen‑AI workflows in content and partnerships Talk announcement.

Builder to demo Fusion today, putting prototyping and iteration directly in designers’ hands

Builder is hosting a live Fusion demo today—showcasing how designers can prototype, validate, and iterate in one flow, useful for teams shipping interactive creative sites and campaign pages Demo invite.

OpenArt × Hailuo Story Week highlights a tender short by @_urbanmotionn

OpenArt’s Story Week with Hailuo surfaced a new short by @_urbanmotionn—a compact vignette about a boy’s daydream that ends on a perfectly timed eye‑roll—showing how small prompts can yield character beats Short film post.

Wondercraft teases Wonda launch with ‘Vibe creation’ merch for next week’s event

Wondercraft previewed new "Vibe creation." caps and said they’ll be handing them out at their Wonda launch event next week—an easy in‑person touchpoint for podcasters and audio storytellers to connect Merch teaser.

🎙️ Voice agents, lipsync, and audio pipelines

Voice‑first news for creators: ElevenLabs Agents adoption and summit speaker adds, plus a promising off‑angle/low‑light lipsync test. Excludes Veo 3.1 rumor (feature).

Blackbox AI adopts ElevenLabs Agents for voice-driven coding at 30M scale

Blackbox AI is using ElevenLabs Agents to power Logger and Robocoder, voice-driven coding assistants now serving over 30 million developers Product announcement, following up on UI kit open-source UI blocks enabling agent UIs. Full details outline incident response and app building via natural dialogue in the official post ElevenLabs blog.

- Logger: 24/7 autonomous monitoring, on-call explanations, and real-time fixes Product announcement.

- Robocoder: Conversational full‑stack app creation without API keys, including AI features ElevenLabs blog.

ElevenLabs Summit adds Andreessen and Li to Nov 11 voice-first lineup

ElevenLabs announced Marc Andreessen and Jennifer Li as new speakers for its Nov 11 San Francisco Summit focused on voice‑first interfaces, with registration now open Speaker announcement and event details on the site Summit site.

fal launches free Discord bot with text‑to‑video and native audio (5 daily gens)

fal’s new Discord bot brings creators free daily runs for text‑to‑video and image‑to‑video with native audio via the open‑source Ovi model, plus text‑to‑image via Hunyuan Image 3.0; members get 5 free generations per day Discord bot launch, with access via the invite link Discord invite.

SyncLabs lipsync‑2‑pro handles off‑angle, low‑light faces convincingly

Creator tests show SyncLabs’ lipsync‑2‑pro producing natural‑feeling lip sync even when subjects aren’t facing camera and under low lighting; visuals were made in Leonardo (Lucid Origin) and Kling 2.5 Turbo, with voices from ElevenLabs Lipsync test.

🏢 Gemini Enterprise and agentic workplace

Enterprise‑grade agent stacks made news—Gemini Enterprise features, live demos, massive token/usage stats, and a 'Gemini at Work' livestream. Excludes Veo 3.1 rumor (feature).

Google Cloud unveils Gemini Enterprise: unified chat, no‑code agents, and deep SaaS integrations

Google Cloud announced Gemini Enterprise, a full‑stack agent platform that brings a single chat interface, a no‑code workbench for building/orchestrating agents, prebuilt/custom agents, and integrations across Google Workspace, Microsoft 365, Salesforce and SAP Feature rundown, with details in the official write‑up Google blog post. Early demos highlighted a meeting‑scheduling agent and a Marketing Media Agent, underscoring practical workflow automation hooks across data sources (h/t Jeff Dean) Agent demo note, Marketing agent clip.

Google cites 1.3 quadrillion monthly tokens and ~50% code generated by AI

Google shared internal scale metrics: more than 1.3 quadrillion tokens processed monthly across its surfaces and roughly 50% of Google’s code now written by AI then reviewed/accepted by engineers (attributed to Sundar Pichai) Token slide, Code stat.

Gemini at Work livestream spotlights workplace agents and real demos

The Gemini at Work livestream went live, showcasing how Gemini Enterprise’s agents operate across everyday business tasks, with creators pointing to the event hub for replays and materials Livestream start, Event page.
