Grok Imagine 0.9 turns 1 image into 6 videos – directors get precise camera control

Executive Summary

Grok Imagine 0.9 just vaulted from “cool demo” to a tool filmmakers can actually steer. Across creator feeds, it’s nailing split‑screen sequences without panel bleed, pulling off mirror tricks that usually crumble, and obeying top‑down blocking like a seasoned DP. The headline moment: one still produced six distinct videos by changing only the prompt, and a single‑line “reverse push through lilies” move rendered like a storyboarded macro shot. That’s the kind of direction fidelity people have been begging for.

Under the hood, the model’s instruction‑following shows up in the boring but essential ways: image‑to‑video runs keep subject trajectory and facing consistent, reflections stay coherent, and native audio lands with SFX or narration so rough cuts don’t need an immediate trip to the DAW. Fresh clips—think a turtle glide and neon‑soaked city nights—look steadier, hinting at better temporal coherence. Compared with this week’s Sora 2 buzz, Grok’s angle is hands‑on control and looser guardrails; creators say “Safe GPT blocks 99%,” while Grok lets them iterate without euphemism gymnastics.

If you want to pressure‑test this pipeline, Hyperbolic’s $1.99/hr H200 promo this weekend is a cheap window to batch longer gens, upscales, and lipsync passes before rates snap back.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

Grok Imagine 0.9: filmmaker‑grade control

Grok Imagine 0.9 lands with precise, filmmaker‑style control—split screens, reflections, top‑down camera moves, and promptable shot grammar—making image‑to‑video with native audio a practical directing tool for creators.

🎬 Grok Imagine 0.9: filmmaker‑grade control

Cross‑account surge: creators show Grok Imagine’s precise direction—split screens, reflections, top‑down moves, and prompt‑driven camera language with image‑to‑video and native audio. This is today’s dominant creative thread.

One still image, six different videos: Grok’s instruction‑following shines

Using the same starting image, a creator produced six distinct videos with prompt‑only changes, underscoring Grok Imagine’s fine‑grained, filmmaker‑style control: fluid motion plus directional notes that actually stick (Six videos thread). This builds on prior coverage (Image prompt fidelity), where image‑conditioned runs held reference details convincingly.

DP‑style “reverse push through lilies” shot executed from a single prompt

A macro‑diopter prompt, “Reverse push through lilies; stems blur past, resolving on model’s lips,” renders like a DP‑planned move, signaling that Grok understands camera language rather than just object lists (Stylized prompt demo).

Directors steer top‑down camera moves and blocking directly in Grok Imagine

Explicit camera direction, such as top‑down angles, motion paths, and blocking, translates reliably, letting filmmakers craft dynamic setups without resorting to trial‑and‑error prompt spam (Top‑down shot note).

Image‑to‑video runs preserve subject path and direction

Image‑conditioned Grok clips maintain the subject’s trajectory and facing direction from a start image, which is key to continuity when animating still frames into short sequences (Start image demo).

Native audio lands: “Grok Imagine speaks” in generated clips

Creators note Grok Imagine outputs arriving with built‑in audio (SFX, narration), reducing post work for quick drafts and social cuts (Feature overview).

Split-screen prompting in Grok Imagine keeps panels separate and animates each independently

Creators report that adding “split screen” to Grok Imagine prompts prevents panel merging and lets each panel animate on its own, making multi-panel storytelling far easier to control (Split screen tip).

Grok Imagine 0.9 realism rounds: wildlife glide and neon city mood tests

Fresh clips attributed to Grok Imagine 0.9 show a sea turtle gliding with smooth motion and moody night‑city aesthetics, suggesting stronger realism and temporal coherence in everyday scenes (Turtle glide demo, Night city share).

Grok Imagine nails mirrors and reflections for stylized shots

Reflection-heavy compositions are holding up well in Grok Imagine tests, enabling clean mirror tricks and reflective surfaces that typically trip up models (Reflections test).

🎞️ Sora 2: creator short films, app listing, celebs

Excludes Grok (feature). Today’s Sora 2 focus is creator films and access: a polished short making rounds, Google Play pre‑reg app sighting, and celeb presence driving attention.

Sora 2 Pro short “FROSTBITE” turns heads with fully text‑to‑video workflow

A polished short film, FROSTBITE, was released as 100% text‑to‑video in Sora 2 Pro and shared in 4K, drawing strong peer praise for cinematic quality (Film release, YouTube 4K). Creators call it “Netflix‑worthy” and say it could change how movies are made (Netflix-worthy claim), with additional endorsements urging skeptics to watch (Sora film praise). The filmmaker also shared editing takeaways and plans for photoreal image‑to‑video once available (Editing notes).

OpenAI’s Sora Android listing shows pre‑registration and USK 12+ rating

Sora by OpenAI has appeared on Google Play with a visible pre‑registration button and a USK 12+ content rating in the listing screenshot (Play listing sighting), following up on yesterday’s coverage (pre‑reg opens).

Shaq’s arrival on Sora signals growing celebrity interest

Creators claim @SHAQ has joined Sora and are circulating a profile link, sparking collaboration pitches and buzz that could accelerate mainstream adoption of AI video (Celebrity arrival claim, Profile claim). Outreach is already underway as creators invite him into Sora‑made projects (Creator outreach, Tag follow-up).

🚀 Beyond Sora: Veo, WAN, and Gemini flows

Separate from Grok (feature) and Sora (above). Fresh promptcraft and specs for Veo and WAN show alternative pipelines for ad‑style spots and action shots.

Veo 3 prompt: ultra‑low FPV marble run with per‑shot direction

A detailed Veo 3 recipe walks through an FPV glass‑marble race across a DIY course, specifying camera hugs, whip‑pans, slow‑downs through funnels, midday sun glints, and crisp glass‑on‑plastic SFX to keep motion readable and thrilling (Prompt spec). Creatives can lift this structure to storyboard kinetic tabletop or toy‑scale spots with matching sound design.

Veo‑3 Fast on Gemini: 8‑second Nike unboxing with magical realism

A compact Veo‑3 Fast flow inside Gemini lays out an 8‑second “magical unboxing” of classic high‑tops with a soft glow, volumetric smoke, shallow DOF, and a slow push‑in, timed to a lo‑fi beat plus subtle whooshes and hums for product lift (Shot timeline). The shot‑by‑shot timeline makes it a ready template for premium product reveals.

Veo‑3 Fast: rally drift spot with contrast camera movement

Another Veo‑3 Fast spec pushes a gritty rally corner: low‑angle tracking approach, dolly‑arc power‑slide, extreme slow‑mo 360 orbit at the apex (gravel sprays, suspension compresses), then a snap back to real‑time and a dust‑held tagline (Flow spec). The built‑in contrast mix (engine roar → bass‑drop slow‑mo → whip‑pan exit) helps cut dynamic 8‑second ads.

WAN 2.0 on Haimeta: high‑speed MTB turn with slow‑mo cut

A WAN 2.0 prompt on Haimeta captures a biker railing a desert berm, blending a wide opener, a tight zoom on suspension compression, and a high‑speed dust plume before a final whoosh, paired with gritty tire‑on‑sand audio and peaking electronic rock (Prompt recipe). It’s a clean pattern for action sports reels with a hero slow‑mo beat.

🧊 Animation performance: image→3D and lipsync

Combined animation/3D and performance tooling. New ComfyUI node turns images into 3D; lipsync realism steps up with SyncLabs and community demo reels.

SyncLabs Lipsync‑2‑PRO steps up realism and supports uploaded audio (even singing)

Early users report a visible jump in lip realism with Lipsync‑2‑PRO, along with support for user‑uploaded audio (including vocals), so creators can drive convincing dialogue without re‑recording (tester praise, works with singing). For character work, trailers, and music videos, this closes a crucial gap between stylized animation and believable performance.

ComfyUI adds Rodin Gen‑2 node to turn any image into a 3D model

ComfyUI now exposes a Rodin Gen‑2 node that converts a single image into a navigable 3D asset directly in your node graph, which is useful for previz, turntables, and blocking without leaving the pipeline (node announcement). This lowers the barrier for concept‑to‑asset prototyping inside existing ComfyUI workflows.

ComfyUI curates best community lipsync demos after WAN livestream

ComfyUI published a “Best Community Lipsync Demos” thread that spotlights top pipelines and reference clips (demo thread), following up on the multi‑language demos from the WAN livestream. The roundup includes WAN InfiniteTalk examples made during the stream, giving creators concrete starting points and settings to replicate results (wan examples).

🖌️ Illustration styles and photo looks

Mostly visual prompt recipes and style refs: monochrome sketches with a red accent, Midjourney v7 params, LovArt’s Nobel look, and Leonardo’s film emulation cue string.

Vintage analogue film look via a single “magic” prompt line in Leonardo

Creators highlight a reliable cue string to force convincing analog film aesthetics: “IMG_7754.JPG, low resolution, technicolor, pull processed, clipped highlights, chromatic aberration, 35mm film, filmic grain, medium format,” with advice to skip extra quality modifiers and vary the filename numbers for alternates (Prompt string).

Useful when you need a cohesive film stock feel across a set without re‑tuning every prompt.
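The "vary the filename numbers" advice can be scripted. The following is a minimal sketch, assuming the number in the filename is the only part that should change; the helper name `film_prompt_variants` and the four-digit number range are illustrative choices, not part of the shared recipe.

```python
import random

# Cue string quoted in the item above; only the filename number is varied.
CUE_TEMPLATE = (
    "IMG_{number:04d}.JPG, low resolution, technicolor, pull processed, "
    "clipped highlights, chromatic aberration, 35mm film, filmic grain, "
    "medium format"
)


def film_prompt_variants(count, seed=0):
    """Return `count` cue strings that differ only in the filename number.

    Hypothetical helper: the 1-9999 range is an assumption for illustration.
    """
    rng = random.Random(seed)  # seeded so a batch can be reproduced
    numbers = rng.sample(range(1, 10000), count)  # distinct numbers
    return [CUE_TEMPLATE.format(number=n) for n in numbers]


variants = film_prompt_variants(4)
for prompt in variants:
    print(prompt)
```

Each printed line can be pasted as its own prompt; keeping the seed fixed makes it easy to regenerate the same set later.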

Gemini “Nano Banana” prompt delivers knitted voxel portraits with Starry Night backdrops

A recipe circulating for Gemini produces charming 3D voxel characters built from fuzzy yarn loops, paired with a knitted ‘Starry Night’ background; call out loop‑knit detail on hair, clothing, and accessories to sell the tactile look (Prompt recipe).

Great for playful brand avatars and collectible poster sets where craft texture is the point.

Monochrome Accent Realism: pencil sketches with a single bold red stroke

Azed_ai shares a concise, reusable prompt formula for hyper‑real B/W pencil sketches punctuated by one red accent, with ALT examples ready to copy (Prompt recipe).

Community riffs show it adapts cleanly across subjects, from dogs to dragons, while keeping the restrained gallery‑print aesthetic (Dragon example).

ImagineArt1.0 earns praise for photo‑grade skin tones, lighting, and textures

A creator spotlights ImagineArt1.0’s accuracy on skin tone, speculars, and fabric detail in a poolside fashion portrait; the shared schema‑style prompt blocks out pose, wardrobe, accessories, and action for repeatable direction (Model sample).

If you need consistent campaign looks, mirroring this structured prompt format helps lock continuity shot‑to‑shot.

LovArt rolls out a Nobel Prize–style collage aesthetic pack

LovArt promotes a preset that recreates the Nobel committee’s sophisticated collage style, giving designers a quick path to stately posters and social tiles without manual compositing (Style pack thread).

Minimal “KEYCHAIN” prompt template for clean product‑style renders

CharasPowerAI’s PromptShare outlines a two‑element keychain composition (symbols/objects on a ring) suited for merch mockups and icon pairings; specify materials (polished metal, rubber) and negative space to keep the silhouette crisp (Prompt share).

🧰 Creator toolchains, APIs, and multi‑model tests

Platform and API signals for creatives: ChatGPT DMs UX leaks, OpenAI Codex GA slide, rumored new model menus, fal’s multi‑model sandbox, and Kimi’s vendor verifier update.

Model picker screenshot teases Sora 2, GPT‑5‑Codex, realtime and image minis

A model menu screenshot lists GPT‑5 variants (pro/mini/nano), GPT‑5‑Codex, gpt‑image‑1‑mini, and gpt‑realtime‑mini, alongside Sora 2 and Sora 2 Pro, hinting at a coming API surface spanning code, image, realtime audio, and advanced video (Menu screenshot). While unconfirmed, this aligns with a broader push toward multi‑modal app stacks for creative teams.

OpenAI Codex moves to GA, signaling production‑ready code agents

A conference slide reading “Codex [Now GA]” signals OpenAI’s coding stack has exited research preview, a key threshold for teams formalizing agentic code review, refactor, and inline IDE co‑pilot workflows (Stage slide). Expect broader integration in creative pipelines where engineers and technical artists script tools, automate renders, or wire up post workflows.

ChatGPT Messages UI leaks hint at friend DMs and shared chats

Screenshots suggest “ChatGPT Messages” (DMs) with profile setup and a note that your image is visible to people you message, hinting at person‑to‑person and possibly multi‑user chats inside ChatGPT (Settings screen). Another teaser frames it as making ChatGPT the “everything app,” implying social sharing or co‑chat features could be near (Feature tease).

- Availability: one report says Apps in ChatGPT aren’t working in the EU yet, underscoring staggered feature rollouts (EU availability note).

ComfyUI adds Rodin Gen‑2 node to convert any image into 3D

A new Rodin Gen‑2 node in ComfyUI turns any input image into a 3D model, dropping straight into existing node graphs for asset creation and previs without leaving your pipeline (Node announcement). For designers and film teams, this accelerates prop/blockout workflows and look‑dev iterations inside ComfyUI.

Kimi K2 Vendor Verifier now visualizes tool‑call precision across providers

MoonshotAI updated its K2 Vendor Verifier to chart tool‑call accuracy across K2 API resellers, comparing thousands of calls against the official endpoint to surface precision gaps that affect agent reliability (Update note). The repo includes sample data, a CLI to run your own checks, and filters for testing OpenRouter vendors (GitHub repo).
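To make the idea of comparing vendor tool calls against a reference endpoint concrete, here is a minimal sketch. It is not the Vendor Verifier's code, CLI, or metric: the `ToolCall` type, the exact-match rule, and the sample data are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    """Hypothetical record of one tool call emitted by a model."""
    name: str
    arguments: tuple  # sorted (key, value) pairs so calls compare by value


def make_call(name, **arguments):
    return ToolCall(name, tuple(sorted(arguments.items())))


def agreement_rate(reference, vendor):
    """Fraction of requests where the vendor's call equals the reference call.

    `None` stands for "no tool call was made" on that request.
    """
    if len(reference) != len(vendor):
        raise ValueError("need one vendor result per reference result")
    if not reference:
        return 0.0
    matches = sum(1 for ref, ven in zip(reference, vendor) if ref == ven)
    return matches / len(reference)


# Made-up results for three identical requests sent to both endpoints.
reference = [
    make_call("search", query="h200 price"),
    None,
    make_call("render", frames=48),
]
vendor_a = [
    make_call("search", query="h200 price"),
    None,
    make_call("render", frames=24),  # same tool, different argument
]

rate = agreement_rate(reference, vendor_a)
print(f"agreement: {rate:.0%}")
```

Exact matching is the strictest possible rule; a real check might score the tool name and the arguments separately, or validate arguments against a schema instead.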

Lipsync‑2‑PRO now works with uploaded audio and singing

Creators confirm SyncLabs’ lipsync‑2‑pro handles uploaded audio, and even singing, broadening use beyond text‑to‑speech into performance‑driven dubbing (Uploaded audio claim). This follows earlier demos showing strong realism in off‑angle and low‑light footage (lipsync demo), with more testers reporting convincing results (Early access note).

ComfyUI curates best community lipsync demos and pipelines

A ComfyUI thread rounds up the best community lipsync demos, giving creators a quick path to reliable pipelines for dialogue‑heavy shorts, ads, or music videos (Demos thread). It’s a handy companion to newer lipsync engines and wrappers, helping teams compare outputs before standardizing nodes in their toolchain.

Pollo AI launches Halloween effects collection for one‑click spooky looks

Pollo AI added a Halloween Special section with creepy and fun presets you can apply to videos from inside the app, which is useful for themed shorts, promos, or quick social posts (Launch post), with a live gallery and CTA to try them now (Effects gallery).

📈 Benchmarks, forecasts, and compute signals

Mostly eval releases and ecosystem metrics: IOAA contest scores for GPT‑5/Gemini, superforecaster vs LLM leaderboard update, and Epoch’s compute estimate for GPT‑5.

GPT‑5 and Gemini 2.5 post gold‑medal IOAA scores; GPT‑5 tops data analysis

New IOAA exam analysis shows GPT‑5 scoring 88.5% on data analysis vs Gemini 2.5 Pro’s 75.7%, while both achieved gold‑medal‑level theory results (Gemini 85.6%, GPT‑5 84.2%) (Benchmarks chart), following up on the FrontierMath result, where Gemini’s deep‑think math gains were highlighted. For creators, these science‑heavy wins signal stronger reliability on physics‑ and astronomy‑flavored briefs, simulations, and data‑driven story cues.

Epoch estimates GPT‑5 trained with less compute than GPT‑4.5 via post‑training improvements

Epoch AI’s new estimate charts GPT‑5 as using more compute than GPT‑4 but less than GPT‑4.5, citing an approach that builds on a ~100B‑element base model and >30T bits of post‑run information to push capability (Compute estimate). For production budgets, this hints at efficiency gains: better reasoning at plateauing training FLOPs could translate into cheaper, more capable creative inference tiers.

Superforecasters still lead ForecastBench; LLMs surpass public baseline, parity eyed by late 2026

ForecastBench’s latest leaderboard keeps the superforecaster median at #1 while top LLMs now outperform the public baseline (median public placed at #22), with trendlines suggesting ~0.016 annual Brier improvement and potential parity by late 2026 (Leaderboard update). If that trajectory holds, AI‑assisted planning for content launches and release timing could soon rival expert crowd forecasts.
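For readers unfamiliar with the metric, a Brier score is the mean squared error between forecast probabilities and what actually happened (lower is better). The sketch below computes it and does a straight-line extrapolation of how long a gap would take to close at the ~0.016 per year rate quoted above. The model and expert scores used in the example are made-up placeholders, not leaderboard values, and a linear trend is an assumption.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between probabilities and 0/1 outcomes."""
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need equal-length, non-empty sequences")
    squared_errors = [(p - o) ** 2 for p, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)


def years_to_parity(model_score, expert_score, annual_improvement):
    """Years until the model's score reaches the expert's, assuming the
    model improves linearly and the expert score stays fixed."""
    gap = model_score - expert_score
    if gap <= 0:
        return 0.0
    return gap / annual_improvement


# Three forecasts: 90% (happened), 20% (did not happen), 60% (happened).
example = brier_score([0.9, 0.2, 0.6], [1, 0, 1])

# Placeholder scores for illustration only.
years = years_to_parity(model_score=0.120, expert_score=0.088,
                        annual_improvement=0.016)
print(f"Brier: {example:.3f}, years to parity: {years:.1f}")
```

Because the extrapolation divides a small gap by a small rate, modest changes in either number move the parity date by months, which is worth keeping in mind when reading trendline claims.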

Hyperbolic offers on‑demand H200s at $1.99/hr for the weekend

Compute costs dip as Hyperbolic runs a weekend promo pricing H200 GPUs at $1.99/hour (Pricing update). For indie filmmakers and music/video toolsmiths, that’s a timely window to batch upscale, run longer video gens, or prototype agentic pipelines without blowing budget.
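A quick way to budget a batch at the promo rate is to multiply GPU count, hours, and the hourly price. This is a minimal sketch: the job size in the example (8 GPUs for 6 hours) is hypothetical, and storage, egress, or other fees are not modeled.

```python
PROMO_RATE_USD_PER_GPU_HOUR = 1.99  # weekend promo rate quoted above


def batch_cost(gpu_count, hours, rate=PROMO_RATE_USD_PER_GPU_HOUR):
    """Cost in USD of running `gpu_count` GPUs for `hours` hours each."""
    if gpu_count < 0 or hours < 0:
        raise ValueError("gpu_count and hours must be non-negative")
    return gpu_count * hours * rate


# Hypothetical job: 8 GPUs for 6 hours each.
cost = batch_cost(gpu_count=8, hours=6)
print(f"${cost:.2f}")
```

Passing a different `rate` makes it easy to compare the same job against a provider's regular price.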

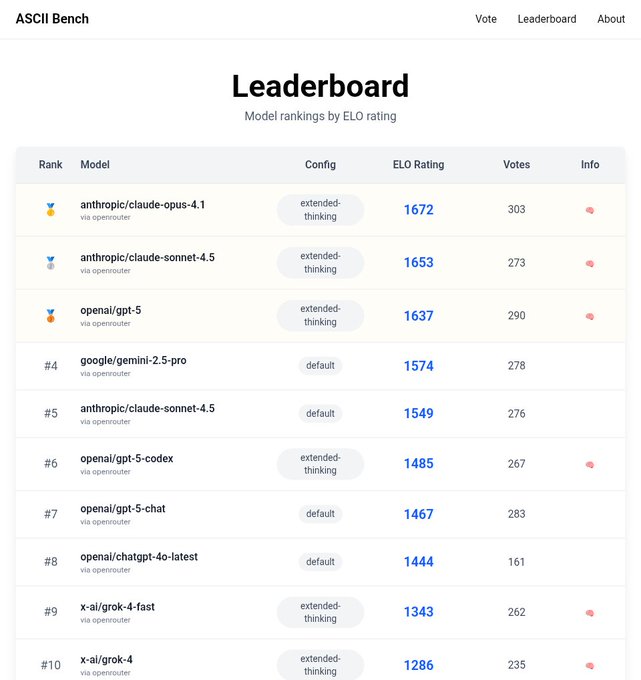

Claude Opus 4.1 tops ASCII Bench with 1672 ELO

A fresh ASCII Bench leaderboard shows anthropic/claude‑opus‑4.1 in the top slot at 1672 ELO (extended‑thinking config), edging Claude Sonnet 4.5 (Leaderboard screenshot). While niche, these structured‑reasoning contests remain a useful proxy for long‑form planning: story outlines, prompt pipelines, and multi‑step scene breakdowns.
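To read what a rating gap means, the standard Elo formula converts a difference in ratings into an expected head-to-head win rate. The sketch below assumes ASCII Bench uses the conventional formula with a 400-point scale, which the leaderboard post does not confirm; the runner-up rating of 1650 is a placeholder, since the item above does not state Sonnet 4.5's score.

```python
def elo_expected_score(rating_a, rating_b):
    """Expected score (roughly, win probability) of A against B under the
    standard Elo model with a 400-point scale."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


top_rating = 1672        # from the leaderboard item above
runner_up_rating = 1650  # placeholder, not a reported value

p_top = elo_expected_score(top_rating, runner_up_rating)
p_runner_up = elo_expected_score(runner_up_rating, top_rating)
print(f"top model expected score: {p_top:.3f}")
```

Under this model, equal ratings give exactly 0.5, and a lead of a few dozen points corresponds to only a slight edge in pairwise comparisons.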

💬 Creator economy pulse and culture wars

Community signals: payout opacity and blocked currencies, ongoing AI art backlash vs adoption, content‑filter memes, legal jabs, and ethical concerns about posthumous video gen.

Creators in blocked‑currency regions can’t access X payouts

Monetized creators report deposits they can’t withdraw due to currency restrictions, highlighting a structural barrier that undermines the AI creator economy outside major markets (Blocked payout note). For filmmakers and designers building global audiences, the inability to actually capture earnings stalls reinvestment into compute, music rights, and production tools.

X payouts feel random after 17M‑view surge delivers similar pay

A creator says 17M impressions over two weeks yielded roughly the same revenue as a prior 1.5M‑impression period, speculating that engagement rate is weighted heavily and makes payouts feel arbitrary (Payout rant). For AI creatives optimizing threads and video posts, this uncertainty complicates planning around virality, scheduling, and ad‑friendly formats.

Creators contrast Grok’s permissive mode with “Safe GPT” over‑blocking

A side‑by‑side claim that “anything is possible” in Grok Imagine while “Safe GPT blocks 99% of the prompt” highlights a widening creative‑freedom gap in mainstream tooling (Guardrails contrast). For agencies and solo editors, that delta affects turnaround time, prompt iteration, and whether a platform can handle edgy briefs without endless rewrites.

Creators lampoon Sora content filters with euphemism toy memes

Meme posts like “Hairy Potter: Magic on the Green” and “Tragicarp” mock how content filters force absurd rewordings to get harmless ideas past safety checks (Hairy Potter meme, Tragicarp meme), following up on earlier coverage of guardrails overblocking benign prompts. The satire underscores real workflow friction for editors trying to ship brand‑safe but playful briefs.

Sora 2’s ability to depict the dead sparks ethical pushback

“It’s creepy that Sora 2 lets you make videos of dead people,” one creator says, reviving questions about consent, estates, and distribution ethics for posthumous likeness and voice (Ethics concern). Expect tighter client brief language (rights, releases) and platform guardrails as AI video hits mainstream narratives.

AI anime backlash meets growing audience acceptance

An AI artist vows to post even more anime amid criticism, arguing most viewers now appreciate the quality and that resistance is concentrated among a vocal minority (Anime backlash post). Others praise the aesthetic outright, reinforcing a shift where stylistic wins are converting skeptics in the feed (Anime praise).

Viral subpoena screenshot fuels anti‑OpenAI sentiment among creators

A circulating image of a subpoena in an OpenAI case is used as a dunk meme (“OpenAI Sucks”), feeding ongoing culture‑war vibes that blur legal news with creator frustration over access, pricing, and policies (Subpoena screenshot). For freelancers who depend on these tools, brand trust, and the memes that shape it, can influence which models they choose for client work.

📱 Consumer effects and marketer tools

Generative media for everyday creators: Pollo AI’s Halloween effects and Pictory’s text‑to‑video for marketers with branding and screen‑recording helpers.

Pollo AI opens Halloween Special effects hub for spooky, shareable looks

Pollo AI rolled out a seasonal Halloween Special page with a catalog of creepy, cool, and fun video effects and a clear CTA to try them in‑app, timed for creators chasing holiday engagement spikes (Halloween effects page), with a second promo pointing back to the effect library (try effects link). For short‑form marketers and everyday editors, this is an easy way to ship themed clips without custom VFX or motion templates.

Pictory spotlights text‑to‑video for product demos with branding and screen recording

Pictory is pitching a turnkey path from scripts to product videos, calling out built‑in screen recording and brand controls to keep outputs on‑message, which is useful for solo marketers and teams scaling content (marketing pitch), with a companion explainer on why text‑to‑video is accelerating in 2025 (Pictory blog post). This builds on prior coverage of their automation focus (workflow automation) while adding specific tooling for on‑brand assets and demo capture.

🏛️ Industry moves and policy signals

Light but notable: leadership and expansion moves that shape creative AI availability and competition across regions.

Anthropic to open Bengaluru office after Modi meeting

Anthropic is deepening its India presence: CEO Dario Amodei met Prime Minister Narendra Modi and the company plans to open a Bengaluru office by early 2026 (India meeting).

For creators in the region, this signals local hiring and closer policy dialogue, which could translate into earlier access to tools, partnerships, and support across India’s fast‑growing creative AI market.

Andrew Tulloch joining Meta after earlier $1.5B rumor

WSJ‑referenced chatter says Andrew Tulloch, previously rumored to have turned down a $1.5B offer, is now joining Meta, an aggressive talent move with implications for Meta’s creative AI stack across video, image, and music tooling (WSJ note, WSJ link).

For filmmakers and designers, Meta’s intensified hiring could accelerate model and product updates (e.g., Emu‑family imaging, generative video, and audio tooling) that feed Instagram, Threads, and VR/AR content ecosystems.

Hyperbolic offers H200s at $1.99/hr on-demand

GPU provider Hyperbolic is running a weekend promo for on‑demand NVIDIA H200 instances at $1.99 per hour, undercutting typical Hopper‑class pricing and lowering the bar for short, intensive bursts of rendering, upscaling, or finetuning (Pricing promo).

Studios and solo creators can opportunistically slot high‑compute tasks (e.g., 4K upscales, diffusion control‑net batches, style‑transfer passes) into cheaper windows, potentially reshaping weekly post pipelines.
