Veo 3.1 tops community arenas with 175k+ votes – free for one week in Gemini

Executive Summary

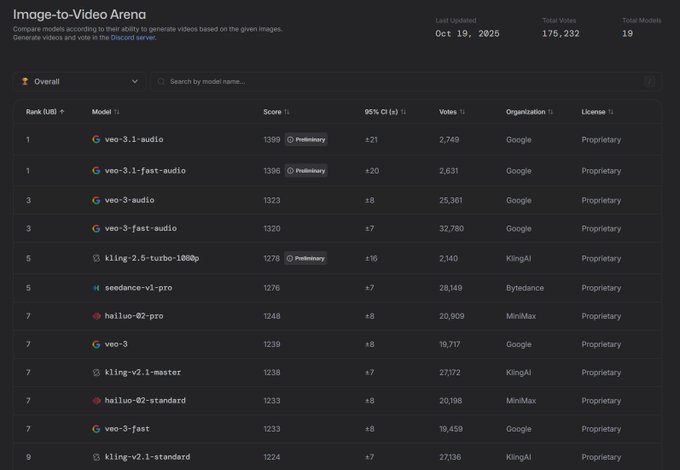

Google’s Veo 3.1 just grabbed the top spots across community video arenas. In the Image‑to‑Video Arena snapshot, which counts 175k+ head‑to‑head votes, the audio‑enabled variants hold first and second with preliminary scores of 1,399 and 1,396. Creators also report a one‑week free window inside Gemini, which means you can run image‑to‑video and text‑to‑video tests in volume without touching budget. If you’ve been waiting to pressure‑test audio sync and motion fidelity before committing spend, this is the week to do it.

What stands out is breadth, not a one‑off win. Veo 3.1 leads both image‑to‑video and text‑to‑video boards, and filmmakers are posting real outputs, like a “Galata Timelapse” cut built with Nano Banana + Veo 3.1. Prompt blueprints are spreading fast, translating director notes into repeatable moves: 8‑second set‑pieces like a cathedral “Ballerina of Light” with a subtle 15° crane arc, or a wide tracking Cybertruck roll across a Mars‑like plain, are producing consistent motion and lighting without babysitting the model.

If you’re stitching scenes, the newly open‑sourced HoloCine engine is a timely complement — minute‑long, cut‑driven sequences can give your Veo shots a narrative spine while you benchmark the free Gemini window.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

LTX‑2 credit blast: 24‑hour giveaway + 50% off

A 24‑hour promo of 800 free credits plus 50% off LTX‑2 generations cuts the cost of testing native 4K video with lip sync, which makes it a good fit for pilots, reels, and client proofs before budgets reset.

🎬 LTX‑2 credit blast: 24‑hour giveaway + 50% off

Time‑boxed promo: creators get 800 free credits via DM for LTX‑2 and all generations are 50% off. Includes in‑app wayfinding and early user reactions. This section is the only one covering LTX‑2 today.

LTX‑2 24‑hour blast: 800 free credits and 50% off generations

For the next 24 hours, LTX‑2 users get 800 free credits via DM and 50% off all LTX‑2 generations (Promo announcement). Following up on the 4K launch, the deal lowers the barrier to testing synchronized audio and video at scale. You’ll need an LTX account to redeem; the call to action is live now (Start generating), and full details are on the product page (LTX landing page).

Early creator uptake: short pieces and strong 4K first impressions

Creators are already posting with the tag: compact pieces like “stranded” and “the arrival” hint at quick concept‑to‑screen trials (Creator short, Creator short). One tester called out “4K video… cinematic bass sound… this is crazy,” reflecting early sentiment around the synced audio pipeline; LTX acknowledged the share in‑thread (User reaction, LTX acknowledgement).

Where to find LTX‑2 and redeem DM credits in the app

LTX posted a quick locator showing where LTX‑2 lives in the platform UI and where the giveaway credits appear in your account once the DM lands (In‑app guidance). The reminder also notes that an LTX account is required to redeem the promo (Account reminder), with the primary entry point linked for new signups (LTX landing page).

🍿 Popcorn storyboards: zero‑drift character lock

Higgsfield Popcorn pitches 8‑frame, exact‑face consistency for pre‑viz; community posts show applied ad use and credits giveaway. New today: creator field tests and a 200‑credit DM promo.

Higgsfield Popcorn touts “1 face, 8 frames, 0 drift,” drops 200‑credit promo for 9 hours

Popcorn is leaning into zero‑drift storyboards (“1 face, 8 frames, 0 drift”) and is offering 200 credits via DM if you follow, retweet, and reply within a 9‑hour window (Promo and claim). This follows the earlier 225‑credit promo that spotlighted its 8‑frame pre‑viz pitch.

Creators are reporting solid field results: one notes that their character ‘Nova’ stayed perfectly consistent across shots generated from a single still, with more tests planned (Creator note). Another applied Popcorn to a snowy car‑ad scene and shared the outcome, underscoring practical pre‑viz value for ad workflows (Car ad test).

🏁 Veo 3.1: free week and arena leaderboards

Fresh momentum: Veo 3.1 tops community arenas and surfaces a 1‑week free window, with creators posting real clips via Gemini. Excludes LTX‑2 (covered as feature).

Veo 3.1 tops community video arenas; 175k+ votes put it first

Google’s Veo 3.1 sits at the top of community leaderboards, with the Image‑to‑Video Arena showing 175k+ votes and both veo‑3.1‑audio and veo‑3.1‑fast‑audio in first and second place, with scores of 1399 and 1396 marked preliminary (leaderboard post).

- Image‑to‑Video: Veo 3.1 variants hold #1 and #2 among 19 models with 175,232 total votes in the snapshot (leaderboard post).

- Text‑to‑Video: The same post notes Veo 3.1 leads that board as well, underscoring cross‑mode strength for creators (leaderboard post).
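
To put the 3‑point gap between the two preliminary scores in perspective, here is a minimal sketch. It assumes the arena scores behave like Elo ratings on the conventional 400‑point logistic scale; the leaderboard post does not state the scoring method, so the function and its `scale` parameter are illustrative assumptions only.

```python
def expected_win_probability(score_a: float, score_b: float, scale: float = 400.0) -> float:
    """Elo-style expected win rate of A over B (assumed scoring model, not confirmed by the source)."""
    return 1.0 / (1.0 + 10 ** ((score_b - score_a) / scale))


# Preliminary scores quoted in the leaderboard post.
p = expected_win_probability(1399, 1396)
print(f"veo-3.1-audio vs veo-3.1-fast-audio expected win rate: {p:.3f}")
```

Under that assumption the two variants are close to a coin flip against each other (about 50.4%), which fits the "preliminary" label on both scores.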

Veo 3.1 is free for one week via Gemini

Creators flag a one‑week free window for Veo 3.1, encouraging rapid hands‑on testing inside Gemini before committing budgets (access note). Early field use includes a “Galata Timelapse” built with Nano Banana + Veo 3.1, signaling quick uptake by filmmakers and editors exploring look development (creator demo).

Creators share Veo 3.1 Fast prompt blueprints for 8s cinematic shots

Prompt‑level recipes for Veo 3.1 Fast on Gemini are circulating, detailing camera paths, lighting, and timing, which makes them useful for replicable results and team handoffs. They follow up on the on‑stage workflow where Nano Banana + Veo 3.1 prototyping was showcased. Examples include an 8‑second cathedral “Ballerina of Light” with god rays and a subtle 15° crane arc (prompt blueprint), and an 8‑second wide‑angle tracking shot of a Cybertruck across a Mars‑like landscape with sharp stainless reflections (prompt blueprint).
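
For team handoffs, a blueprint like the ones above can be kept as structured data and rendered into a prompt string. The sketch below is one hypothetical way to do that: the `ShotBlueprint` class, its field names, and the output wording are assumptions for illustration and do not reproduce the creators' actual prompts or any Gemini API.

```python
from dataclasses import dataclass


@dataclass
class ShotBlueprint:
    """Hypothetical container for one shot's director notes."""
    subject: str
    setting: str
    camera: str
    lighting: str
    duration_s: int = 8  # the shared blueprints target 8-second shots

    def to_prompt(self) -> str:
        # Render the notes in a fixed order so shots stay comparable across a team.
        return (
            f"{self.duration_s}-second shot of {self.subject}. "
            f"Setting: {self.setting}. Camera: {self.camera}. Lighting: {self.lighting}."
        )


ballerina = ShotBlueprint(
    subject="a ballerina of light",
    setting="cathedral interior",
    camera="subtle 15-degree crane arc",
    lighting="god rays",
)
print(ballerina.to_prompt())
```

Keeping camera and lighting as separate fields makes it easy to vary one element at a time when comparing takes.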

🎥 Grok Imagine in practice: emotion, zooms, SFX

Hands‑on Grok I2V work: natural talking‑head emotion, creative zoom‑outs, and moody shorts built from stills. New today are tutorials and SFX‑heavy vignettes.

Grok Imagine delivers natural talking‑head emotion; raw clips and simple tutorial shared

A creator showcases image‑to‑video talking heads with subtle expressions and lifelike micro‑movements, achieved with a minimal prompt in Grok Imagine (talking head test). They also shared unedited outputs and a quick tutorial so others can replicate the setup end‑to‑end (raw clips), with steps linked for easy follow‑along (tutorial steps). Early community reactions note steady improvements in realism over time (creator reaction).

From still to cosmos: ‘Eye of the Universe’ animated in Grok, expanding stills‑to‑video use

A creator turns a Midjourney still into a cosmic animation, ‘Eye of the Universe’, with Grok Imagine, underscoring a growing stills‑to‑motion workflow for fast concept reels (cosmic animation). This builds on community interest in image‑to‑animation runs, following up on the city‑lights animation where still photos were similarly animated into moody sequences.

Gothic romance and monster beauty: mood‑driven Grok shorts keep landing

Two atmospheric reels, ‘night angel’ and a tender ‘monster beauty’ study, show Grok Imagine handling gothic/romantic tone with consistent style across shots, often paired with Midjourney for look development before animation (night angel clip, monster beauty clip). Creators note the tool is improving steadily for this aesthetic lane (creator note).

When to zoom out: Grok’s camera language tested on a ‘dead astronaut’ scene

A side‑by‑side creative study explores how standard vs extreme zoom‑outs change narrative impact in Grok Imagine, using the same composition to expose framing trade‑offs and reveal more world‑building over time (zoom‑out example). It’s a practical cue for selecting camera moves that serve composition, not just motion for motion’s sake.

Promptless storytelling: ‘A timeless farewell’ short built entirely in Grok Imagine

A moody vignette of two souls parting, with cinematic SFX, was produced in Grok Imagine without an explicit prompt, highlighting how the tool’s defaults can carry tone and pacing for evocative shorts (short film note). This suggests a low‑friction path for quick story beats when speed or experimentation matters more than granular control.

🌊 Hailuo 2.3 early access: motion and MMAudio tests

Ongoing early‑access wave (new today: more physics and camera tests). Posts highlight motion stability, action shots, and MMAudio tags. Excludes LTX‑2 feature.

Hailuo 2.3 holds backgrounds under rapid, FPV‑style camera moves

A Japanese demo shows a bike jump through rubble with fast camera motion while the environment stays coherent, described as “motion stability close to live‑action” (JP motion test). For directors and previs, this suggests fewer artifacts during aggressive moves like whip pans and tracking sweeps.

Car‑crushing test hints at stronger rigid‑body feel and sliding dynamics in 2.3

An early‑access ‘crushing cars’ run highlights intense slide behavior and believable mass transfer, useful for action previs and VFX blocking (Car crush test). It follows up on earlier action physics gains, where smoke, fire, and destruction looked more convincing.

Cinematic previews and a seven‑example thread showcase 2.3 text‑to‑video

A short titled “Rituals” and an early‑tester thread with seven examples spotlight stable compositions and dramatic lighting from pure prompts (Short film clip, Example thread). These packs help filmmakers gauge coverage options and lighting continuity before storyboarding.

Testers tag Hailuo 2.3 with MMAudio and praise output quality

Creators flag “amazing quality” and label clips with MMAudio, signaling audio‑enabled runs or pipeline pairings surfacing in early access (Quality callout). For storytellers, synchronized soundtracks alongside strong motion control reduce post steps.

More early‑access prompt recipes and JP clips surface for Hailuo 2.3

New prompt formulas (e.g., “Handheld, Arc Right Cyberpunk Black Market Showdown”) and additional Japanese early‑access shares keep rolling in, broadening reference for camera paths and scene density (Prompt recipe, JP clip share).

🎨 Brand systems in hours with Firefly Boards

A full brand workflow inside Adobe Firefly Boards: moodboard→logo→color/variations→mockups→social→promo video, with Nano Banana assists. New today: detailed prompts and SVG tip.

Firefly Boards thread shows end‑to‑end brand system in hours

Adobe ambassador Amira (@azed_ai) lays out a practical Boards → logo → color → mockups → social → promo video workflow, aiming to compress full brand builds to under two hours (Workflow thread). Step 1 recommends spinning up a Boards moodboard, then generating 1:1 logos (Ideogram 3.0 suggested) and iterating inside Firefly (Logo step), with a direct entry point for newcomers via Adobe’s site (Adobe Firefly page).

The thread targets working designers and small teams who need a guided path from concept to platform‑ready assets without leaving the Adobe stack.

Colorways, background removal, and PNG→SVG accelerate logo iteration

Step 2 moves to variations on black and quick colorways with Nano Banana, followed by a useful tip: convert PNG logos to SVG using Adobe Express for clean vector editing in Illustrator (Color step, Background removal). The converter link is included for a one‑click handoff to Illustrator (PNG to SVG converter).

These touches cut friction between ideation and production—especially when packaging multiple color systems and transparent assets for real‑world use.

One‑click brand trials: stationery and packaging mockups from prompts

With a chosen mark, the thread demonstrates Nano Banana prompts to place branding across digital mockups (business cards, packaging, banners) so teams can eyeball legibility and hierarchy before final comps (Mockups overview). A detailed stationery flat‑lay prompt (letterhead, cards, envelope, pen, notepad, sticker seals) is provided to speed approvals (Stationery prompt).

Seeing type, spacing, and material cues in context helps catch issues early (e.g., thin strokes, contrast on off‑white stock).

Product and UI mockups: cat bed tag, mobile app UI, and merch bundle recipes

Three additional prompt recipes broaden brand context: a stitched fabric tag on a Scandinavian cat bed (texture‑aware), a mobile booking app UI with defined accent colors, and a coordinated merch trio of tote, mug, and cap (Cat bed prompt, App UI prompt, Merch prompt).

These are handy drop‑ins for pitch decks and quick lifestyle visuals without hunting for stock or building 3D scenes.

Animate Boards images into promo videos via Veo 3.1, Ray 3, or Runway 4

To close the loop, the guide shows how to turn Boards visuals into short promotional videos by selecting a partner model (e.g., Veo 3.1, Ray 3, Runway 4) and animating a chosen image, which is useful for quick teasers and campaign openers (Promo video tip).

This keeps ideation, brand assets, and launch‑ready motion all within a single creative flow.

Social kits inside Boards: posts, headers, and covers in minutes

Step 4 places the final logo into social templates (posts, banners, profile photos, and covers) so teams can preview cross‑platform looks in one place (Social templates).

For brand managers, this is a fast sanity check on safe areas, scale, and color contrast across networks before exporting a final pack.

🛠️ Runway: Video References and chained Workflows

Runway adds Video References to lock style (character, lighting, environment) and teases chaining actions via Workflows. New today: creator tutorial for branded content.

Runway ‘Video References’ locks character, lighting and environment; branded content tutorial

Runway’s new Video References workflow lets creators upload stills to lock character identity, lighting, and environment across scenes, tightening continuity for branded content and film projects. A creator shared a step‑by‑step walkthrough and results showing consistent, cinematic outputs using the feature (Creator tutorial).

🎞️ Open‑source multi‑shot: HoloCine scene engine

From clips to scenes: HoloCine outputs minute‑long, multi‑shot sequences with cuts and proper cinematography. New today: open‑source blog + deep‑dive link.

HoloCine open-sources multi-shot scene engine

HoloCine has open‑sourced its scene engine and published a deep dive, enabling up to one‑minute sequences with multiple shots, cuts, and consistent characters in a single pass (release overview). This follows the multi‑shot narrative first teased yesterday. The blog details dual attention tricks for inter‑shot coherence and practical directing controls for shot‑reverse‑shot and proper cinematography (project blog post).

- Minute‑long scenes with cuts and shot‑reverse‑shot, maintaining character/style continuity across shots.

- Architecture highlights: window cross‑attention (per‑shot alignment) and sparse inter‑shot self‑attention (compute scaling) for coherent multi‑shot generation.
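
The two attention ideas named above can be pictured as boolean masks. The sketch below is a toy illustration under stated assumptions, not HoloCine's implementation: it assumes each video token attends only to the text tokens of its own shot (window cross‑attention), and that self‑attention is dense inside a shot while tokens from other shots are visible only through a few leading "summary" tokens (one possible sparse inter‑shot pattern). All function names and the `summary_tokens` parameter are hypothetical.

```python
def shot_ids(tokens_per_shot):
    """Expand per-shot token counts into one shot index per token."""
    return [shot for shot, count in enumerate(tokens_per_shot) for _ in range(count)]


def window_cross_attention_mask(video_per_shot, text_per_shot):
    """mask[v][t] is True when video token v and text token t belong to the same shot."""
    video, text = shot_ids(video_per_shot), shot_ids(text_per_shot)
    return [[v == t for t in text] for v in video]


def sparse_self_attention_mask(video_per_shot, summary_tokens=1):
    """Dense attention within a shot; other shots are seen only via their first tokens."""
    ids = shot_ids(video_per_shot)
    pos = [i for count in video_per_shot for i in range(count)]  # position inside own shot
    n = len(ids)
    return [[ids[q] == ids[k] or pos[k] < summary_tokens for k in range(n)] for q in range(n)]


# Two shots: 3 and 2 video tokens, each with 2 text tokens (toy sizes).
cross = window_cross_attention_mask([3, 2], [2, 2])
sparse = sparse_self_attention_mask([3, 2])
kept = sum(map(sum, sparse))
print(f"{kept} of {len(sparse) ** 2} query-key pairs kept")
```

The point of the sparse pattern is that the number of kept pairs grows much more slowly than the full token count squared as shots are added, which is the compute‑scaling benefit the blog attributes to inter‑shot sparsity.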

⚡ Creator infra: faster loads + remixable app gallery

Pipeline upgrades for builders: fal’s FlashPack accelerates PyTorch model loading; Google AI Studio adds an app gallery to remix and extend apps.

Fal’s FlashPack speeds PyTorch model loads by 3–6×

Fal unveiled FlashPack, a checkpoint format and loader that flattens tensors into a contiguous stream, memory‑maps reads, and overlaps CPU/GPU work via CUDA streams to cut model load times by 3–6× (Release thread, fal blog post). The GitHub release notes cite up to ~25 Gbps disk‑to‑GPU throughput, simple pack/load APIs, and mixins for Diffusers/Transformers, plus one‑command conversion of existing checkpoints and no GDS requirement (GitHub release, GitHub repo).
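
The "flatten, then memory‑map" idea can be shown with the standard library alone. This is a minimal sketch of the general technique, assuming float32 weights; it is not FlashPack's API or on‑disk format, the `pack` and `load` helpers are hypothetical, and the CUDA‑stream overlap is left out entirely.

```python
import mmap
import os
import tempfile
from array import array


def pack(tensors, path):
    """Write every tensor back-to-back as float32; return name -> (byte offset, element count)."""
    index, offset = {}, 0
    with open(path, "wb") as f:
        for name, values in tensors.items():
            buf = array("f", values)
            f.write(buf.tobytes())
            index[name] = (offset, len(buf))
            offset += len(buf) * buf.itemsize
    return index


def load(path, index):
    """Memory-map the file once and slice each tensor out by its recorded offset."""
    out = {}
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        for name, (offset, count) in index.items():
            values = array("f")
            values.frombytes(mm[offset:offset + count * values.itemsize])
            out[name] = values
    return out


# Toy round trip with two tiny "tensors".
with tempfile.TemporaryDirectory() as tmp:
    checkpoint = os.path.join(tmp, "weights.bin")
    index = pack({"w": [0.5, 1.0, 2.0], "b": [-1.5]}, checkpoint)
    restored = load(checkpoint, index)
    print(index, list(restored["w"]), list(restored["b"]))
```

A single contiguous file means one sequential read path instead of many small per‑tensor reads, which is the property a loader can then pipeline against host‑to‑GPU copies.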

Google AI Studio adds remixable app gallery

Google AI Studio now surfaces an app gallery you can fork and remix, letting builders start from working apps and keep iterating instead of starting from scratch, which is useful for rapid creative tool prototyping and workflows (Rollout note).

🧪 Control & physics evals across video models

Independent tests on prompt control and physical plausibility. New today: a cross‑model ‘generate nothing’ probe and a Sora 2 physics teaser via Leonardo. Excludes Grok/Runway specifics covered elsewhere.

Cross-model test asks: can video models generate nothing?

A creator ran a controlled “generate nothing” prompt across six leading video models to probe baseline obedience and prompt control. Several systems still hallucinate content or refuse empty scenes, highlighting gaps in negative control, which matters for compositing workflows and precise timing. Full methodology and prompts are outlined in the thread (methodology post), with a supplementary Grok Imagine sample illustrating edge‑case compliance behavior (bonus Grok clip).

Sora 2 teases improved physics: rigid bodies, real rebounds, fewer teleports

Leonardo spotlights Sora 2’s physical modeling (rigid‑body behavior, realistic rebounds, and fewer mid‑shot “teleports”) to curb crashy artifacts when prompts get ambitious (physics tease). Earlier user tests, covered under character consistency, emphasized visual coherence; this speaks to the motion plausibility that underpins action beats, with access via Leonardo for hands‑on checks (product page).

🔊 WAN 2.5 native audio: sync status check

Creators test WAN 2.5’s built‑in audio. New today: visuals praised, but slight audio latency during transitions noted, with link to try the collection.

WAN 2.5 native audio draws praise on visuals, but slight sync lag appears in transitions

Early creator tests report smooth, cinematic motion and convincing facial acting, while the new built‑in audio trails by a fraction during scene transitions and fast moves (test notes). The latency is small but noticeable, with suggestions that prompt tuning or light post can mask it; sync is not yet frame‑accurate in rapid cuts (latency note, sync caveat).

- Access: You can try WAN through WaveSpeedAI’s collection to evaluate current audio‑video alignment yourself (WaveSpeedAI collection).

🎵 AI music: covers in the wild and a new rival rumor

Music‑side movement for creators: fresh AI covers and chatter that OpenAI is building a music model to rival Suno/Udio. New today: Motown ‘Toxicity’ and cover‑stacking technique.

Rumor: OpenAI is building a music generator to rival Suno/Udio

OpenAI is reportedly developing a music model that accepts text and audio prompts, positioning it against Suno and Udio and expanding beyond Sora’s video efforts (rumor note). For musicians and cover creators, a first‑party OpenAI entrant could shift workflows (prompt fidelity, rights tooling, distribution) and reprice access across major platforms.

Motown-style ‘Toxicity’ AI cover drops from Radiomandanga

One new drop lands: a Motown‑style cover of System of a Down’s “Toxicity” (Motown cover drop), following up on the cover channel’s 500k views and 8k subs. The release keeps the channel’s rapid cadence of high‑concept stylistic flips; sample the catalog on the YouTube channel.

Technique: “cover the cover” loop to push tone in AI remakes

A practical trick is making the rounds: iteratively re‑cover your own AI cover while nudging the style prompt to darken mood or timbre until it lands where you want (technique tip). Creators joke it’s “cover, cover and cover until it’s dark enough,” a playful reminder that stacked generations can converge on a target sound faster than one‑shot prompting (creator quip).

🖼️ Still looks: MJ V7 recipes and neo‑noir srefs

Image‑gen inspiration for illustrators and designers: a cinematic neo‑noir anime sref, an MJ v7 param recipe, and 1980s OVA prompt packs. New today: community riffs on zodiac/constellation UI.

Cinematic neo‑noir anime style ref --sref 304866741 (MJ V7)

A new Midjourney V7 style reference, --sref 304866741, delivers a cinematic neo‑noir anime look with stylized realism and pulp‑vampiric accents, following up on the Neo art‑deco coverage by expanding the palette toward darker, graphic‑brushwork vibes. See examples and descriptors in the share for how clean lines meet dramatic lighting and hand‑illustrated texture (Style ref thread).

For quick trials, keep V7’s stylize in a mid range and pair with hard‑key lighting for freckles, crowns, and weapon close‑ups like the posted frames.

Futuristic Zodiac UI prompt sparks a community riff (MJ V7)

Azed_AI’s “Futuristic Zodiac Illustrations” prompt (720×1280 vertical, constellation wireframes, radiant energy lines) catalyzes a shared look for app‑style cards in MJ V7, with strong consistency across colorways and motion‑suggesting light trails (Prompt share).

- Pisces interpretations lean into circular flow and deep‑blue cosmic fields (Pisces example).

- Aries and Taurus variants show warm ember palettes and grounded, star‑linked anatomy (Aries example, Cancer and Taurus).

- Libra renders split stylistically between elegant scales and playful, surreal justice motifs (Libra styles).

- Off‑zodiac riffs extend the UI language to cosmic oracles and pop‑hero silhouettes, proving the template’s range (Cosmic oracle riff, Superhero variant).

MJ V7 collage recipe: --sref 2192758684 with chaos 6, stylize 500

A practical Midjourney V7 setup lands a cohesive watercolor‑storybook grid: --sref 2192758684 --chaos 6 --ar 3:4 --sw 300 --stylize 500. The share shows a six‑panel spread (portraits, winter scene, kimono, artisan) that holds character and palette across varied subjects (Params example).

Use this as a starting rig for editorial series; tweak stylize ±200 and chaos ±2 to nudge variance without losing the through‑line.
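
The suggested ±200 stylize and ±2 chaos tweaks can be enumerated as a small grid. The sketch below only builds parameter strings to paste after your own prompt text; the `BASE` dictionary mirrors the shared recipe, while the helper names are hypothetical and nothing here calls Midjourney.

```python
from itertools import product

# Flags copied from the shared recipe.
BASE = {"sref": 2192758684, "chaos": 6, "ar": "3:4", "sw": 300, "stylize": 500}


def build_flags(params):
    """Render a parameter dict as a Midjourney-style flag string."""
    return " ".join(f"--{name} {value}" for name, value in params.items())


def sweep(base, stylize_delta=200, chaos_delta=2):
    """Yield flag strings for every stylize/chaos combination around the base values."""
    for ds, dc in product((-stylize_delta, 0, stylize_delta), (-chaos_delta, 0, chaos_delta)):
        yield build_flags({**base, "stylize": base["stylize"] + ds, "chaos": base["chaos"] + dc})


variants = list(sweep(BASE))
for flags in variants:
    print(flags)
```

Nine variants is small enough to review side by side, which keeps the comparison about variance and not about the style reference itself.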

🗣️ Community pulse: bets, memes, and AI content fatigue

Today’s discourse beats: market bets on model supremacy, an OpenAI monetization meme, and concern over obvious AI‑written blogs. Pure sentiment—no product overlaps.

Markets tilt toward Google: Polymarket gives 77% odds for best AI model by end of 2025

Prediction traders are heavily backing Google to lead AI model quality by year‑end 2025, with a 77% share on Polymarket, reshaping creator expectations around tooling and workflows (Polymarket snapshot).

- Snapshot also shows OpenAI 13%, xAI 8%, and Anthropic 1.5%, underscoring a growing narrative around Gemini’s momentum for production use.

“You can just tell”: creators call out obvious AI‑written blogs and predict a flood of more

A pointed take argues AI‑generated articles on news and blog sites are increasingly easy to spot, and likely to surge, fueling content fatigue and trust problems for audiences consuming creative industry coverage (Blog fatigue take).

Meme captures creator skepticism: OpenAI mocked for chasing ad revenue and NSFW

A widely shared lane‑change meme frames OpenAI as veering toward ad revenue and NSFW content, distilling creator unease about platform monetization and cultural direction in a single gag (Meme post).

While tongue‑in‑cheek, the sentiment signals growing anxiety that business goals could eclipse product focus for creative workflows.
