ByteDance Seedance 1.0 Pro Fast: 3× faster, 60% cheaper, live on fal

Executive Summary

ByteDance just put speed and budget back on the table. Seedance 1.0 Pro Fast is rolling out on fal, Replicate, Runware, and Freepik with 3× faster renders at roughly 60% less than Pro. Replicate posts concrete latencies: a 5s 480p shot returns in ~15s end‑to‑end and 720p in ~30s, while Freepik clocks a 3s cut in about 7s. If you iterate on storyboards, action beats, or social spots, that’s the difference between ‘try again’ and ‘move on.’

Crucially, the fast mode keeps the studio‑grade motion control people actually use: first‑frame control, consistent characters, multi‑aspect outputs, and smoother motion. It undercuts not just Pro but even Seedance Lite on price, so you can afford more takes without quality collapse. fal shipped day‑0 text‑to‑video and image‑to‑video endpoints for quick A/Bs on identical prompts; Runware added an API‑ready slot; Freepik folded it straight into their creator suite. Early testers call it a rare sweet spot of fidelity, latency, and cost that makes mid‑cycle revisions painless.

Following last week’s multi‑shot sequencing push, Pro Fast looks like the “default” mode for production teams who care about throughput as much as look — and it’s live everywhere you already work.

Feature Spotlight

Seedance 1 Pro Fast: speed/cost win for video

Seedance 1.0 Pro Fast arrives day‑0 across major hubs with 3× faster inference and ~60% lower cost than Pro, delivering 2–12s clips (3s in ~7s), T2V/I2V, first‑frame control, and multi‑aspect support—prime for rapid iteration.

Cross‑platform rollout hits today’s feed: ByteDance’s Seedance 1.0 Pro Fast lands on fal, Replicate, Runware, and Freepik with sizable speed/cost gains. This is the big utility story for filmmakers and editors.

Seedance 1.0 Pro Fast: 3× faster and ~60% cheaper rolls out across major platforms

ByteDance’s Seedance 1.0 Pro Fast lands with 3× faster renders and ~60% lower cost than Pro, and is now live on ModelArk, Replicate, Runware, Freepik, and fal (BytePlus release). Following up on multi-shot sequencing, this update targets production speed and budget while keeping studio‑grade motion control (BytePlus release).

Availability is confirmed by platform posts and pages: fal’s day‑0 endpoints (fal announcement), Replicate’s hosted model (Replicate model page), Runware’s model hub (Runware models), and Freepik’s feature rundown (Freepik details). Creatives get optimized inference, smoother motion, and consistent storytelling at a fraction of the prior cost (BytePlus release).

Freepik adds Pro Fast with 3s ≈ 7s renders and first‑frame control

Freepik integrated Seedance 1.0 Pro Fast with rapid 2–12s generations; a 3s clip renders in about 7s, with Text‑to‑Video, Image‑to‑Video, first‑frame control, and multiple aspect ratios (Feature details). The team calls it the fastest, most flexible AI video model in their suite (Speed overview).

Community partners are already pushing trials and examples, signaling creator momentum around the new mode (Partner examples), while teaser posts nudge speed‑hungry users to test it (Freepik tease).

Replicate posts concrete latencies: 5s 480p ≈ 15s; 720p ≈ 30s, cheaper than Lite

Replicate highlights Seedance 1.0 Pro Fast with specific speed/price context: a 5s 480p video takes ~15s end‑to‑end and 720p takes ~30s, while pricing undercuts both Seedance Pro and even Seedance Lite (Latency and pricing). Hands‑on is available immediately via the hosted runner (Replicate model).
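To put the quoted latencies in iteration terms, here is a minimal back‑of‑envelope sketch. It uses only the approximate figures reported in this feature and assumes the 720p number also refers to a 5s clip, which the post implies but does not state outright. The function names are illustrative, not part of any platform API.

```python
# Approximate latencies as reported by the platforms: (label, clip seconds, wall-clock seconds).
# Assumption: the Replicate 720p figure is for a 5s clip, like the 480p one.
REPORTED = [
    ("Replicate 480p", 5.0, 15.0),
    ("Replicate 720p", 5.0, 30.0),
    ("Freepik", 3.0, 7.0),
]


def wait_per_clip_second(clip_seconds: float, wall_seconds: float) -> float:
    """Seconds of waiting per second of generated video."""
    if clip_seconds <= 0:
        raise ValueError("clip_seconds must be positive")
    return wall_seconds / clip_seconds


def takes_per_hour(wall_seconds: float) -> int:
    """Sequential takes that fit in one hour, ignoring prompt-editing time."""
    if wall_seconds <= 0:
        raise ValueError("wall_seconds must be positive")
    return int(3600 // wall_seconds)


for label, clip, wall in REPORTED:
    ratio = wait_per_clip_second(clip, wall)
    print(f"{label}: {ratio:.2f}s wait per clip-second, {takes_per_hour(wall)} takes/hour")
```

These are upper bounds on throughput for one sequential queue; real sessions also spend time on prompt edits and review.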

fal ships day‑0 Text‑to‑Video and Image‑to‑Video endpoints for Pro Fast

fal rolled out Seedance 1.0 Pro Fast on day‑0 with both Text‑to‑Video and Image‑to‑Video endpoints, enabling instant trials from the browser (Day‑0 availability). Direct run links are live for creators who want to compare quality, speed, and motion control on identical prompts (Text to video; Image to video), with a follow‑up “try it today” push (Try it today).

Runware adds Pro Fast day‑0, touts smoother motion and stronger consistency

Runware integrated Seedance 1.0 Pro Fast on day‑0, emphasizing faster generations at lower price while improving motion smoothness and consistency for production workflows (Runware launch). The model is available in their API‑ready catalog for quick evaluation and deployment (Runware models), with a direct launch link shared for immediate use (Launch link).

Early testers call Pro Fast a rare sweet spot of quality, speed, and cost

Creators experimenting with Seedance 1.0 Pro Fast report a strong balance between fidelity, render speed, and price, the trifecta that matters in iterative storyboarding and quick cuts (Creator verdict). The signal is amplified by platform boosts, nudging more hands‑on trials across the community (Replicate boost).

🌀 Hailuo 2.3 early access keeps impressing

More testers post 2.3 clips and call out motion feel and consistency; Hailuo teases a limited ‘API FREE’ window. Excludes Seedance coverage (see feature).

Hailuo 2.3 teases limited‑time free API access

Hailuo signaled “Coming soon & API FREE (limited time),” hinting at a launch window where creatives can try 2.3’s text‑ and image‑to‑video capabilities without cost API teaser. For filmmakers and designers on the fence, this lowers the barrier to evaluate motion quality, character consistency, and throughput in real workflows.

Rapid camera moves still preserve character and object locks in 2.3

A fresh stress test shows Hailuo 2.3 keeping every character and prop consistent even under fast camera motion, following up on group consistency across crowds Fast camera test. That stability under speed matters for whip‑pans, chase beats, and complex coverage without cutaways.

Aerial sweep of Al‑Azhar Mosque shows Hailuo 2.3’s cinematic motion control

A new early‑access demo glides over Cairo’s Al‑Azhar Mosque with stable parallax and atmospheric detail, showcasing 2.3’s ability to carry long, graceful camera moves without warping Aerial demo. This kind of controlled drift and scale change is what story‑first directors need for establishing shots and location reveals.

Arabic testers praise Hailuo 2.3’s FPV immersion and movement feel

An Arabic early‑access thread calls out how convincing first‑person flight and hand‑held energy feel in 2.3, a frequent pain point for prior models FPV praise. For action‑heavy shorts and music videos, that sense of embodied motion is a clear creative unlock.

Early‑access clips point to cinematic feel and stable text‑to‑video

More creator tests underscore 2.3’s mood, motion, and consistency, with both I2V and T2V flows producing clean coverage.

- Friday‑night rain vignette cut on 2.3 Early Access shows tone and camera rhythm holding up end‑to‑end Early access clip.

- Text‑to‑video results get positive marks for cohesion and look, suggesting fewer retries to get a usable take Text-to-video praise.

I2V montage battle (samurai vs mech) highlights action coherence in 2.3

An image‑to‑video prompt “create a montage battle between samurai and mech” generated clear blocking and readable combat beats in Hailuo 2.3 (I2V battle). For stylized trailers and kinetic edits, the model’s temporal cohesion helps sequences play without jitter or geometry drift.

Live creative jam pairs Hailuo with Higgsfield Popcorn at GMC

Onstage at the Generative Media Conference, teams showcased a Hailuo × Higgsfield Popcorn pipeline—storyboard‑to‑shot flows built live for a packed room Stage photos. For producers, it signals practical crossover between tight‑control storyboards and 2.3’s motion engine in a single session.

🎞️ LTX‑2’s style dial: film, UGC, and time travel

Today’s LTXStudio threads focus on style breadth—high‑end film, stylized animation, live broadcast, docs, and time‑period looks—plus a try‑now promo. Excludes Seedance (feature).

LTX‑2 dials in high‑end film language with clean lighting, soft lenses, and dynamic camera moves

LTXStudio showcases how LTX‑2 can reproduce the tone and texture of premium cinema—matching lighting, lens feel, and motion grammar for sequences that read like real film dailies High‑end film examples. This matters for directors seeking cinematic cohesion from text or references without sacrificing shot‑to‑shot consistency.

Stylized animation dial: anime, clay, puppetry, and hand‑drawn—with character identity locked

LTX‑2 adapts to frame‑based styles—anime, stop‑motion, clay, puppetry, and hand‑drawn—while preserving character, environment, and camera consistency across angles Animation examples. For animation teams, this means a single lookbook can drive numerous shots while maintaining a unified visual identity.

Time‑travel looks land with 50% off generations in LTX Studio

LTXStudio spotlights a time‑period dial—1940s film to 2000s camcorder—that reconstructs era‑specific video texture and broadcast grammar, and notes all LTX‑2 generations are currently 50% off Try now offer, following up on initial launch. Creators can dive in immediately via the studio page LTX Studio page.

Live broadcast replication: talk‑show, interview, and mock news aesthetics on cue

The live‑production preset demonstrates studio lighting, desk blocking, seated talent, and clean coverage patterns that read like talk shows and news formats Broadcast look. It enables fast creation of show openers, panel segments, or satirical news bits without stage builds.

UGC and social looks: LTX‑2 matches raw, mobile‑first realism for influencer‑style content

The LTXStudio thread highlights LTX‑2’s ability to generate convincing UGC, from handheld cadence to platform‑native framing and compression, enabling authentic social edits, influencer parodies, and reality‑bending inserts that feel shot on phone UGC examples. Creators can exploit this for brand work and viral shorts without reshoots.

Nature and documentary mode: patient camera language with textured, realistic detail

LTX‑2 renders documentary pacing—slow pans, lingering wildlife beats, and observational sequences—while preserving texture that holds up frame by frame Nature doc look. This is useful for establishing shots, narrative inserts, and docu‑style branded stories.

🕸️ Runway Workflows roll out to all plans

Runway says node‑based Workflows are now rolling out across all plans, with a new Runway Academy episode to get started. Excludes Seedance (feature).

Runway Workflows roll out to all plans with a new Academy walkthrough

Runway says Workflows are now rolling out across all subscription tiers, paired with a Runway Academy episode to help creators start “building the tools that work for you” rollout note. For directors, designers, and editors, this enables chaining generative steps and edits inside Runway to speed up shot-to-shot pipelines without app‑hopping.

Runway’s weekly recap spotlights Workflows, model fine‑tuning, and Ads Apps

Runway’s “This Week” roundup highlights the Workflows rollout alongside model fine‑tuning and the new Apps for Advertising suite recap thread, following up on Ads apps launch. The update frames an end‑to‑end stack for creatives—build automations in Workflows, refine models, then repurpose assets for campaigns—see the Academy starter for Workflows to dive in Academy episode.

🎵 AI music videos: OpenArt OMVA + SFX tools

OpenArt’s step‑by‑step flow and $50k OMVA contest headline music‑video tooling; Mirelo v1.5 adds synced SFX to silent clips; creators share real‑world channel growth. Excludes Seedance (feature).

OpenArt Music Video Awards: $50k prizes and Times Square placement, entries open now

OpenArt’s OMVA is live with more than $50,000 in prizes and a chance for winning videos to appear in NYC’s Times Square, accepting submissions Oct 15–Nov 16. Entry details and rules are on the official page Contest announcement, with the program hub here Contest page. Community momentum includes a new "Stellar’s Choice Awards" ambassador spotlight encouraging bold animated entries Ambassador call, and a creator invite that summarizes how to enter and what’s eligible Creator invite thread.

OpenArt’s AI Music Video workflow: four modes, then one-click “Create Full Video”

A creator walkthrough details OpenArt’s end‑to‑end flow for turning any song into a finished music video: sign up, pick a mode (Singing, Narrative, Visualizer, or Lyrics), upload your track and artist face, set aspect ratio, models, and resolution, then hit “Create Full Video.” The how‑to thread and the OpenArt home page show the entry point (How-to thread; OpenArt home), with specific mode examples for Singing (Singing example), Visualizer (Visualizer example), and Lyrics (Lyrics video example). A follow‑up thread bundles all steps and results in one place for easy replication (Contest CTA recap).

Case study: AI cover channel hits 500k views and 8k subs in 10 days, monetized

A creator reports a Spanish AI cover channel surged from 0 to 8,000 subscribers in 10 days, reaching 500,000 views and 14,000 watch hours—now fully monetized—with all content produced using AI tools. The Linkin Park “In the End” flamenco cover is showcased as part of the results Channel growth post, with the linked video illustrating the format and aesthetic YouTube video.

Mirelo v1.5 adds synced SFX to silent clips on Replicate at ~$0.01/sec/sample

Mirelo SFX v1.5 generates synchronized sound effects for silent videos (up to ~10s), with improved audio quality and timing, multiple samples per run, and simple prompt guidance, priced around $0.01 per second per sample. Try it on Replicate via the model page shared in today’s posts (Model page), with direct access here (Replicate model).
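As a rough budgeting aid, the quoted rate multiplies out as clip length × samples × price. The sketch below assumes billing is linear in both seconds and samples and takes the ~$0.01 figure at face value; actual Replicate billing may round or differ, and `mirelo_cost` is an illustrative helper, not a platform function.

```python
from decimal import Decimal

# Approximate rate quoted in the post: about $0.01 per second per sample.
PRICE_PER_SECOND_PER_SAMPLE = Decimal("0.01")


def mirelo_cost(clip_seconds: float, samples: int) -> Decimal:
    """Estimated cost in USD for one run, assuming linear pricing."""
    if clip_seconds <= 0 or samples < 1:
        raise ValueError("clip_seconds must be positive and samples at least 1")
    # Convert via str so float inputs like 7.5 do not carry binary rounding noise.
    return PRICE_PER_SECOND_PER_SAMPLE * Decimal(str(clip_seconds)) * samples


# Four alternative SFX samples for a 10-second silent clip.
print(f"${mirelo_cost(10, 4)}")
```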

🌌 Grok Imagine: anime moods and dialog tests

Creators lean into Grok’s cinematic mood and anime stylings; first tests of Grok Video with dialogue show convincing facial sync and camera flow. Excludes Seedance (feature).

Early Grok Video dialogue test shows natural lip‑sync and camera flow

A first hands-on shows Grok Video handling spoken dialogue with convincing facial sync, expressions, and smooth camera movement Grok video test, following up on in‑app upscale for Grok Imagine the day prior. For creators, this hints at viable talking‑head scenes, dubbed shorts, and avatar hosts without manual keyframing.

Creators turn to Grok for eerie, horror‑anime atmospheres

Multiple tests lean into Grok’s unsettling side, with uncanny compositions and mood‑first animation that read like modern horror anime Eerie scene prompt and a broader thread on techniques to sustain dread and tension Horror anime thread. For storytellers, Grok’s lighting and pacing cues make quick ideation of scary beats practical.

Dark‑fantasy heroine test: backlit, polearm‑wielding character holds style

A rotating, backlit anime heroine with an ornate polearm demonstrates Grok’s consistency on hair light, costume detail, and glow without smearing Dark fantasy character, reinforcing broader claims that this anime look “just works” in the model Anime style result. Great for character reveals and key art motion passes.

Grok nails 90s OVA romance vibe on a train

A 90s OVA‑style vignette—two strangers sharing a fleeting moment on a train—shows Grok’s anime aesthetic landing the soft palettes, filmic grain, and framing language that define the era Anime romance test. This is a strong reference style for narrative shorts and music‑video cutaways.

Image‑to‑animation: moody city‑lights sequences from stills in Grok

Animating a favorite still into a neon, rain‑soaked city vignette shows Grok’s strength at preserving composition and color while adding motion City lights clip. It’s a lightweight path for reels, lyric videos, and ambience loops where vibe continuity matters.

Simple star‑gazing prompt yields magical top‑down framing in Grok

A minimal prompt—“top down shot of the girl pointing at the stars”—produces a lyrical, storybook feel with strong directional light and negative space Star prompt result. This kind of prompt economy is useful for boards, transitions, and title cards.

🗣️ Avatars & motion graphics: lip‑sync to 25s

Avatar/performance tools trend: OmniHuman 1.5 for expressive lip‑sync from stills, HeyGen motion graphics from text, and Sora 2 usage posts. Excludes Seedance (feature).

ElevenLabs + Decart animate real‑time talking avatars for voice agents

A pipeline combining ElevenLabs’ speech stream with Decart’s live facial animation yields real‑time video avatars—useful for support agents, presenters, and interactive signage Integration note. A live stage demo showed rapid style switches and immediate visual response to prompts, underscoring production‑ready latency Stage demo.

For creators, this enables interactive hosts and live performances without pre‑rendered lip‑sync passes.

OmniHuman 1.5 turns a single image into an expressive, lip‑synced video avatar

BytePlus says OmniHuman 1.5 can reanimate any static image with accurate lip‑sync, emotions, and gestures directly driven by audio, spanning use cases from anime characters to pet imagery and creative vlogs Feature brief. Following up on text control, which highlighted scene and camera direction, this update spotlights performance realism from a single still—useful for character vignettes, voiceover explainers, and social promos without a shoot.

Sora 2 hits 25‑second generations on Vadoo AI, opening room for fuller beats

Vadoo AI now runs Sora 2 up to 25 seconds per clip, giving editors enough runway for complete lines of dialogue, music hooks, or micro‑scenes before stitching Platform update. For storytellers, that extra duration helps with pacing and emotional delivery without excessive upscaling or re‑timing.

Creators highlight Sora 2’s strong character consistency across angles and lighting

Leonardo showcases Sora 2 sequences that maintain face identity, lighting, and fine detail across multiple angles, a key requirement for avatar continuity and branded spokescharacters Usage example, with more about its platform at Leonardo AI site. This kind of cross‑shot fidelity reduces cleanup and shot‑matching in post.

Grok Video dialogue demo draws praise for lip‑sync, expressions, and camera flow

Early user tests report natural facial acting, synchronized dialogue, and coherent camera movement in Grok Video, suggesting usable out‑of‑the‑box performances for shorts and social spots Dialogue demo. While not production‑final, the feel of live delivery shortens the gap between script and on‑camera draft reads for iterative story work.

HeyGen teases “explain it, animate it” custom motion graphics from plain text

HeyGen’s latest feature promises motion graphics generated from natural‑language descriptions in minutes, lowering the bar for title cards, kinetic type, and animated explainers without After Effects Feature teaser. For creators, this points to faster iteration on graphic packages and social cutdowns with consistent branding.

🧩 ComfyUI: reactive video, consistency, hiring

ComfyUI showcases audio‑reactive video and character‑consistency workflows, celebrates a GitHub Top‑100 milestone, and opens a senior design role. Excludes Seedance (feature).

Audio‑reactive video built in ComfyUI hints at live, music‑synced visuals

A new audio‑reactive workflow shows ComfyUI driving visuals that pulse and morph in sync with sound, underscoring how strange and expressive gen‑media can get for concerts, VJ sets, and music videos Audio reactive demo.

Character Consistency in ComfyUI: a practical workflow for multi‑shot stories

A concise guide demonstrates how to maintain character identity across shots in ComfyUI—stabilizing faces, outfits, and angles—so filmmakers and animators can keep continuity through edits and camera changes Consistency workflow.

ComfyUI is hiring a Senior Product Designer to shape creative tools

ComfyUI opened a Senior Product Designer role focused on end‑to‑end UX for artist‑centric, node‑based workflows, an opportunity to influence the next generation of AI creative tooling used by filmmakers and designers (Hiring post). Full role details are listed in the posting (job description).

Community momentum: “This team is CRACKED” as ComfyUI teases deeper control

Creators are praising ComfyUI’s velocity while the team hints at “ultimate controllability,” signaling faster iteration and more precise direction tools for stylists and directors building complex pipelines Community praise, and a teaser points to coming behind‑the‑scenes reveals Control teaser.

📽️ Previs: Popcorn storyboards + HDR control

Higgsfield Popcorn adoption continues for 8‑frame, high‑consistency boards; a workflow thread shows prompt extraction and multi‑model batching. Luma Ray3 HDR adds fine color/detail control. Excludes Seedance (feature).

Higgsfield Popcorn pushes 8‑frame “pro” storyboards, adds 225‑credit promo

Higgsfield is pushing Popcorn as a professional AI storyboarding tool, offering 8 cinematic frames with locked consistency and directorial control, along with 225 credits for RT + reply to kickstart trials (Storyboard promo). Creators are calling it the new standard and describing practical edit flows (lock subject vs lock world), with real‑world use to ideate a story from a single image and finish in Kling + Suno + CapCut (Creator pitch; Creator claim; Creator case study).

On‑stage demos at the Generative Media Conference underline momentum and creator interest as Popcorn pairs with downstream video models in live workflows Conference demo.

Popcorn → Weavy pipeline fans out prompts to multi‑model storyboard batches

A new automation thread shows how to turn Popcorn outputs into one‑click, side‑by‑side boards across multiple image models, following up on Creator guide that covered hands‑on Popcorn sequencing. It strings together Popcorn’s Chrome extension and Higgsfield Soul for prompt extraction, builds a reusable prompt formula in GPT, then triggers a Weavy workflow to batch‑generate comparable frames in parallel Workflow steps.

• The result is an 8‑model comparison grid for fast look‑dev and consistency checks before committing to a video pass Results board.

Luma Ray3 HDR brings fine highlight/shadow control to Dream Machine

Luma’s Ray3 HDR mode promises dynamic control over color with preserved micro‑detail, maintaining nuance across highlights and shadows—useful for previs color direction before final grade HDR announcement.

🏟️ Onstage: Generative Media Conference (GMC)

Live coverage spans real‑time video gen demos, e‑commerce creative talks, panels on studios/investing, and a Katzenberg cameo. Excludes Seedance launch details (covered as feature).

Live creative jam: Higgsfield × Hailuo take storyboards to screen at GMC

Following up on event kickoff promising a live jam, Higgsfield and Hailuo ran an on‑stage creative session showing storyboard‑to‑video flow; a companion MiniMax slide cited 4.5bn+ daily text tokens, 1.6mn+ daily videos, and 370k+ daily audio hours as scale context Creative jam.

This workflow demo reinforces how locked‑look boards can now feed directly into consistent, camera‑aware motion for ads and shorts.

BytePlus demos Seedream 4.0, OmniHuman 1.5, and Seedance Pro on stage

BytePlus’s GM presented fresh model capabilities across worldbuilding (Seedream 4.0), audio‑driven avatar acting (OmniHuman 1.5), and cinematic video (Seedance Pro) BytePlus demos.

For creators, the suite suggests end‑to‑end pipelines: concepting, performance, and cut‑ready motion from a single vendor stack.

GMC opens to a packed house with a “year in review” slide of 200+, 14 models, 2M+

The Generative Media Conference kicked off with a full room and a “This year in review…” slide highlighting 200+, 14 models, and 2M+ as topline metrics—signaling rapid ecosystem growth for creative tooling Kickoff room shot.

For AI creatives, the scale hints at wider model choice and faster iteration loops across production workflows.

Panel: The Rise of AI‑Native Studios explores new production playbooks

A multi‑creator panel dug into how AI‑native studios organize teams, maintain visual identity, and ship multi‑shot narratives without traditional overhead Studios panel.

Takeaway for storytellers: consistent casting, style locks, and asset reuse are becoming the backbone of small‑team, big‑scope production.

Shopify outlines generative media for e‑commerce creatives

Shopify’s product lead walked through generative media for commerce, covering creative constraints, product visualization, and campaign asset pipelines tailored to retail needs Shopify session.

Brand filmmakers and designers get a clearer map for stitching AI video, imagery, and copy into conversion‑driven storefronts.

Jeffrey Katzenberg joins GMC for a big‑studio perspective on gen media

Jeffrey Katzenberg appeared on stage, underscoring how mainstream entertainment is engaging with generative workflows and creator tools Katzenberg on stage.

His presence signals accelerating crossover between Hollywood development cycles and AI‑native production.

On‑stage workflow: Nano Banana, Veo 3.1, and Genie 3 for rapid prototyping

A live talk highlighted chaining Google’s Nano Banana, Veo 3.1, and Genie 3 to go from still mood frames to moving sequences and interactive worlds in minutes Paige stage talk.

For solo creators, the recipe shows how to bootstrap a pilot: establish style in stills, then extend motion and interactivity without leaving the toolchain.

Panel: The Next Frontier of Models spotlights creator‑centric capabilities

Researchers and builders unpacked model directions most relevant to creatives—style faithfulness, camera control, long‑form coherence, and live latency Models panel.

Expect near‑term gains in multi‑shot consistency and director‑style conditioning to reshape storyboarding and editing.

Panel: Top SF investors discuss where gen media capital is flowing

Investors from a16z, Kindred Ventures, Bessemer, and Meritech shared how they evaluate creative‑tooling startups and the traction signals that matter for model‑powered apps Investing panel.

Signal for founders: real usage (not just model novelty) and defensible workflows are driving term sheets.

Foster + Partners shares how generative media is reshaping architecture visuals

Associate Partner Sherif Tarabishy discussed applying generative media to architectural storytelling—from design intent previews to marketing‑ready motion Architecture talk.

Design studios can expect faster client iterations and clearer narratives around form, light, and materiality.

📑 Papers: ultra‑hi‑res diffusion and values RL

Today’s research links include ultra‑high‑res diffusion via DyPE, reinforcement learning with explicit human values (RLEV), and community examples in Gaussian splatting. Excludes Seedance (feature).

DyPE enables 16MP ultra‑high‑res diffusion without retraining

Dynamic Position Extrapolation (DyPE) lets pre‑trained diffusion transformers synthesize ultra‑high‑resolution images, up to 16 million pixels, by dynamically tuning positional encodings during sampling, with no extra training needed (paper mention). Details on method and results are on the paper page (paper page).

- The approach balances low‑frequency layout early and high‑frequency detail later, improving fidelity at large scales per the authors’ summary paper page.

RLEV aligns LLMs to explicit human values, improving value‑weighted accuracy

Reinforcement Learning with Explicit Human Values (RLEV) optimizes models for value‑weighted correctness and learns when to stop (value‑sensitive termination), reporting higher alignment on important questions versus standard RL baselines paper abstract.

- The method injects per‑question value signals so training prioritizes impact‑weighted outcomes, a useful framing for safety‑critical creative tooling and assistants paper abstract.
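To make “value‑weighted” concrete, here is a minimal sketch of one plausible reading: each question carries a value, and a correct answer earns that value instead of a flat 1. This definition is an assumption for illustration and is not taken from the paper; the function name and the sample values are hypothetical.

```python
from typing import Sequence


def value_weighted_accuracy(values: Sequence[float], correct: Sequence[bool]) -> float:
    """Share of total question value earned by correct answers (assumed definition)."""
    if len(values) != len(correct):
        raise ValueError("values and correct must have the same length")
    total = sum(values)
    if total <= 0:
        raise ValueError("total value must be positive")
    earned = sum(v for v, ok in zip(values, correct) if ok)
    return earned / total


# Two low-value questions answered correctly, one high-value question missed.
values = [1, 1, 8]
correct = [True, True, False]
plain = sum(correct) / len(correct)
weighted = value_weighted_accuracy(values, correct)
print(f"plain accuracy: {plain:.2f}, value-weighted: {weighted:.2f}")
```

Under this reading, a model can look fine on plain accuracy while missing the questions that matter most, which is the gap the per‑question value signal is meant to close.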

Gaussian splatting demos highlight rapid quality leaps in real‑time 3D capture

Community reels circulating today underscore how fast Gaussian splatting quality and realism are advancing, with creators reacting to increasingly cinematic, view‑consistent scenes built from sparse captures Community examples.

🎨 Still looks: srefs, MJ v7, minimal lines

A rich mix of still‑image recipes: minimalistic line‑art prompt packs, MJ v7 parameter sets, neo–art‑deco fashion‑noir srefs, anime style refs, and gritty neon moodboards. Excludes Seedance (feature).

New MJ v7 sref 7380578 recipe yields cohesive 3:4 collage

A fresh Midjourney v7 parameter set — --chaos 10 --ar 3:4 --sref 7380578 --sw 500 --stylize 500 — delivers a unified graphic collage across multiple panels MJ v7 collage, following up on MJ v7 recipe where a different sref produced similarly cohesive 3:4 sets.

The combo balances style lock (sref, sw) with variation (chaos), useful for deck-ready visual systems.
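For anyone batching variations, the parameter set can be assembled programmatically. This is a small sketch: the flags and values come from the post, while the helper name and the subject text are placeholders, and nothing here calls Midjourney itself.

```python
def build_mj_prompt(
    subject: str,
    *,
    chaos: int = 10,
    ar: str = "3:4",
    sref: str = "7380578",
    sw: int = 500,
    stylize: int = 500,
) -> str:
    """Join a subject with the Midjourney flags quoted in the post."""
    subject = subject.strip()
    if not subject:
        raise ValueError("subject must not be empty")
    flags = [
        f"--chaos {chaos}",      # variation between panels
        f"--ar {ar}",            # aspect ratio
        f"--sref {sref}",        # style reference code
        f"--sw {sw}",            # style weight
        f"--stylize {stylize}",  # overall stylization strength
    ]
    return " ".join([subject, *flags])


# Placeholder subject; swap in your own description.
print(build_mj_prompt("graphic collage of city rooftops"))
```

Raising `chaos` while holding `sref` and `sw` fixed is the quickest way to probe how much variation the style lock tolerates.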

Editorial 4‑panel grid prompt for consistent model, outfit and lighting

A detailed Nano Banana prompt designs a 2×2 editorial sheet with one model, locked outfit, lighting, and mood, while varying pose and framing — perfect for fashion boards and lookbooks Editorial grid prompt.

The pose-by-panel structure (TL/TR/BL/BR) helps maintain continuity across expressions and body language.

Minimalistic line art recipe: flat 2D outlines, no shadows

A compact prompt formula for minimalistic line art nails a modern abstract look: 2D flat vector, outline-only, no shadows, simplified shapes, and clean curves, with color and background specified per subject Line art prompt.

It’s a plug-and-play template for brand icons, posters, or merch where consistent contour style matters.

Neo–art‑deco style ref --sref 2122433650 nails fashion‑noir look

A style reference captured via --sref 2122433650 evokes Nagel/Gruau editorial minimalism: elongated figures, sharp gazes, flat inks, and restrained palette — ideal for fashion noir and poster work Style ref thread.

Creators note it shines on character-centric frames (e.g., Vampirella) where pose and silhouette carry the scene.

Anime style ref --sref 2671898589 with moody cinematic shots

From a personal archive, --sref 2671898589 yields atmospheric anime looks: moonlit harbors, smoky rehearsal rooms, and dramatic low-angle character beats, all with consistent linework and color mood Anime sref samples.

Handy for maintaining a series look across stills, covers, and key art without re‑tuning prompts.

Gritty, muted‑neon moodboard with prompt code

A ready-made aesthetic seed — “--p hzqhpt4” — produces a fuzzy, grainy, teal‑red neon vibe across scenes (street signage, headlights, portraits) that reads like 35mm night stills Moodboard thread.

Use it as a base ‘look LUT’ for series cohesion; layer subject prompts on top to stay in the world.

Concept prompt idea: kneepad ergonomic keyboards, first‑person POV

A playful still-image concept — first‑person view of ergonomic keyboards embedded in kneepads — shows how a single quirky prompt can drive industrial‑design riffs and memeable visuals Kneepads prompt.

Useful as a creativity warm‑up or speculative product sheet to test composition and lighting on wearable tech.

🧠 Agentic tools: MCP servers & web agents

Dev tooling for agentic creatives: a custom fal MCP server callable from popular IDEs/CLIs, HyperBuild to wire agents to live web data, and an Agent Builder token‑lifecycle question. Excludes Seedance (feature).

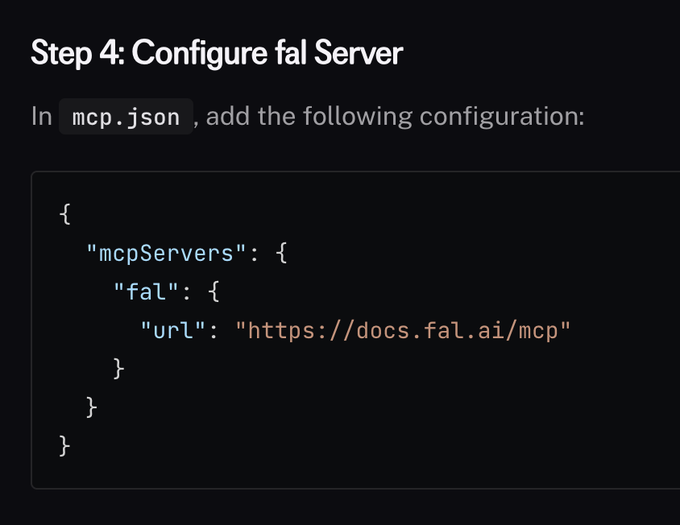

fal ships a custom MCP server callable from Cursor, Gemini CLI, Claude Code and more

fal now exposes a custom Model Context Protocol (MCP) server that you can invoke from popular dev surfaces like Cursor, Gemini CLI, Claude Code, roocode, and cline—making it much easier for creatives to wire agent workflows into familiar IDEs without custom glue code MCP server note. For teams prototyping agentic media tools, this lowers integration friction across editors and terminals where they already work.

HyperBuild open-sources a visual web agent builder

HyperBuild debuts as an open-source, visual way to design agents that use real-time web data—describe behavior in natural language, attach actions, connect data sources, and orchestrate everything in a drag‑and‑drop interface powered by Hyperbrowser Tool thread.

- Useful for creative ops like research, content pulls, and campaign automations where agents must fetch, act, and summarize on live pages.

Agent Builder users flag OAuth token refresh gotchas for MCP tools

Practitioners testing OpenAI’s Agent Builder surfaced a common pain: short‑lived OAuth access tokens (e.g., Gmail, HubSpot) used by MCP tools need a reliable refresh/rotation pattern across runs, or agents break mid‑workflow Agent builder question. Expect to pair refresh tokens and secure storage with server‑side exchanges; until first‑party guidance lands, treat connectors as ephemeral and design for failure‑safe retries.
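One way to picture the refresh-and-retry pattern is the sketch below. It is not Agent Builder's API or any provider's SDK: `TokenManager`, `call_with_retry`, `Unauthorized`, and the injected `exchange` callable are all hypothetical names, and `exchange` stands in for a server-side call that trades a stored refresh token for a new access token and its lifetime in seconds.

```python
import time
from dataclasses import dataclass
from typing import Callable, Tuple


class Unauthorized(Exception):
    """Raised by a request when the provider rejects the access token."""


@dataclass
class TokenManager:
    refresh_token: str
    # Hypothetical server-side exchange: refresh token -> (access token, lifetime in s).
    exchange: Callable[[str], Tuple[str, float]]
    clock: Callable[[], float] = time.monotonic
    skew: float = 60.0  # refresh this many seconds before expiry
    _access_token: str = ""
    _expires_at: float = 0.0

    def get(self) -> str:
        """Return a cached access token, refreshing it when missing or near expiry."""
        if not self._access_token or self.clock() >= self._expires_at - self.skew:
            self._access_token, lifetime = self.exchange(self.refresh_token)
            self._expires_at = self.clock() + lifetime
        return self._access_token

    def invalidate(self) -> None:
        """Drop the cached token so the next get() forces a refresh."""
        self._access_token = ""


def call_with_retry(tokens: TokenManager, request: Callable[[str], str],
                    max_attempts: int = 2) -> str:
    """Run request(token); on Unauthorized, refresh and retry up to max_attempts."""
    for attempt in range(max_attempts):
        try:
            return request(tokens.get())
        except Unauthorized:
            tokens.invalidate()
            if attempt == max_attempts - 1:
                raise
    raise RuntimeError("max_attempts must be at least 1")
```

The refresh token never leaves the manager, and a rejected token triggers exactly one forced refresh before the error is surfaced, so a workflow fails loudly instead of looping. Providers that rotate refresh tokens on each exchange would also need the new refresh token persisted, which this sketch leaves out.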

🏛️ Industry & policy pulse for creators

Rights and platform signals: UMG details AI partnerships and artist‑consent stance; posts surface concerns about ChatGPT memory‑based ads and lawsuit data requests. Excludes Seedance (feature).

UMG details AI strategy, inks platform deals, and reaffirms artist‑consent licensing

Universal Music Group circulated an internal memo outlining a “responsible AI” push anchored by licensing deals with major platforms and tools, while reiterating it will not license without explicit artist consent memo summary.

- Partnerships highlighted: YouTube, TikTok, Meta, BandLab, SoundLabs, KDDI, ProRata, KLAY, SoundPatrol (monetized, controlled ecosystems for creators) memo summary.

- Product signal: UMG is experimenting with agentic AI for fan interactions and music discovery, positioning new revenue flows while guarding likeness and catalog rights memo summary.

Reddit reportedly sues Perplexity over training data use, escalating licensing pressure

A news roundup flags a lawsuit by Reddit against Perplexity over AI training data use, adding momentum to paid licensing and stricter terms around user‑generated content for model training roundup list.

- For creative teams, the case signals rising costs and compliance checks around dataset provenance, especially for commercial deployments and enterprise clients roundup list.

YouTube adds likeness detection to help remove AI content using one’s image

A weekly roundup notes YouTube launched a likeness‑detection pathway for takedowns of AI‑generated content, a policy shift that strengthens recourse for creators whose image is used without consent weekly roundup.

- Practical impact: clearer reporting flows can deter deepfake misuse and speed removals, especially for on‑camera educators, performers, and brands weekly roundup.

OpenAI discovery request sparks privacy concerns in ChatGPT suicide lawsuit

A report screenshot says OpenAI sought a memorial attendee list in discovery for an ongoing ChatGPT suicide case, prompting questions about scope and sensitivity of data demanded in litigation involving AI tools lawsuit report.

- For creators and agencies, the flashpoint underscores why minimizing personally identifiable information in prompts, logs, and shared projects matters if disputes escalate to legal discovery lawsuit report.

Post claims OpenAI will test memory‑based ads in ChatGPT; creators debate targeting tradeoffs

A widely shared post asserts OpenAI is planning to serve ads inside ChatGPT that leverage user Memory, potentially starting with Pulse before broader rollout, raising privacy and UX concerns for creative workflows ads claim.

- If true, memory‑targeted placements could improve ad relevance but complicate consent and client confidentiality for agencies and studios using ChatGPT in production ads claim.
