Sora 2 obeys pro camera prompts – 15s slow‑mo, 2‑view sketch‑locked faces

Executive Summary

Sora 2 just graduated from pretty plates to something you can direct. Following Friday’s watermark‑free web exports, today’s shift is control: creators are publishing reproducible prompt grammar, and the model is actually listening. Diesol shared the exact scene language behind his FROSTBITE blizzard chase — 50mm close‑ups, 24mm tracking, Dutch roll, handheld, overhead drone — and another run nailed a clean 15s slow‑motion shot by stating frame‑rate and camera semantics in‑prompt, no external tools.

Identity consistency — the bane of text‑to‑video — has a practical workaround now. Convert a face to a dual‑view line sketch in Nano Banana and feed that as Sora’s reference; directors report stable characters across shots without tripping photoreal rules. Meanwhile, an anime OP built in ImagineArt with Sora 2 and scored in Suno shows the model’s grip on stylized action, and a circulating yodel demo hints that native audio can carry long takes when you need single‑pass edits. Access is smoothing out too, with WaveSpeedAI offering straightforward Sora 2 Pro text‑to‑video runs for teams iterating on shot grammar.

Caveat: guardrails still twitch — even calendar flips and “kittens in a bouncy castle” get blocked — and the MPA is publicly pressuring OpenAI, so expect stricter content‑matching ahead.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

Sora 2 as a director’s tool: shorts, slow‑mo, consistency

Sora 2 goes creator‑grade: filmmakers post full shorts and pure‑prompt slow‑mo with DP‑style camera language, plus a sketch‑reference workflow to keep characters consistent across multi‑shot sequences.

Cross‑account surge of Sora 2 Pro creator films and prompt‑level cinematography. Threads share exact camera grammar and a practical sketch‑reference hack for consistent characters across shots.

🎬 Sora 2 as a director’s tool: shorts, slow‑mo, consistency

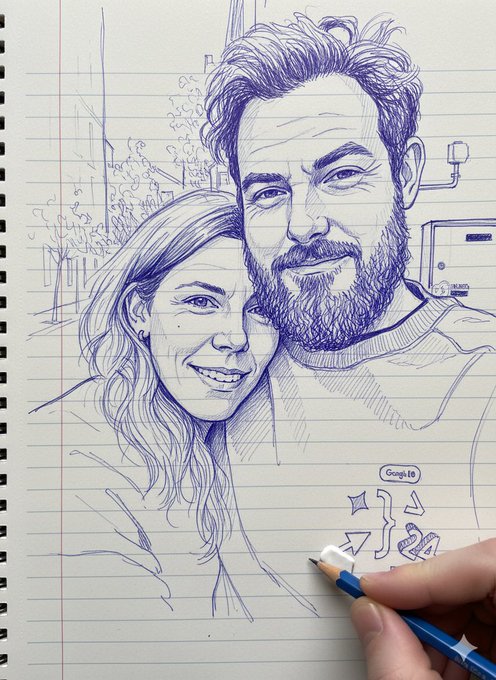

Sketch‑reference hack nails Sora character consistency in “The Runner”

Rainisto’s “The Runner (Part 1)” blends Sora 2 with Veo 3, but the standout is a practical workaround for consistent characters: convert a photoreal face into a dual‑view line sketch in Nano Banana, then feed that sketch as Sora’s reference to lock identity across shots Sketch hack, Short release, Sora reference note, with additional production notes on cut flow and color grading Notes thread.

The trick preserves features without violating photoreal reference rules, and it scales—create one sheet per lead and a combined sheet for multi‑character scenes.

Diesol publishes the scene prompt behind Sora 2 short “FROSTBITE”

Diesol shared the exact text-to-video prompt language he used for a key blizzard chase scene: lens choices (50mm close, 24mm tracking), camera moves (Dutch roll, handheld, overhead drone), and sensory beats. That turns his viral short into a reproducible directing recipe and follows up on the earlier FROSTBITE film post, which showcased a fully Sora 2 workflow. See the full scene excerpt for timing, blocking, and cuts in his write‑up Prompt example, with the original film post referenced here Short film post.
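
The shot grammar described above can be kept as structured data and rendered into prompt text, which makes it easier to rerun a scene with one variable changed. The following is a minimal Python sketch under stated assumptions, not Diesol's actual prompt and not a Sora API: the `Shot` class, the `render_scene` helper, the lens/move pairings, and the beat text are hypothetical placeholders. Only the lens and camera-move terms come from the write-up.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    lens: str     # focal length term, e.g. "50mm"
    framing: str  # e.g. "close-up", "tracking shot"
    move: str     # camera move term, e.g. "handheld", "Dutch roll"
    beat: str     # what happens in the shot (placeholder text, not from the source)


def render_scene(shots: list[Shot]) -> str:
    """Render a shot list as one numbered line of prompt text per shot."""
    lines = []
    for i, s in enumerate(shots, start=1):
        lines.append(f"Shot {i}: {s.lens} {s.framing}, {s.move}. {s.beat}")
    return "\n".join(lines)


# Hypothetical scene: the pairings and beats below are illustrative only.
scene = [
    Shot("50mm", "close-up", "handheld", "Breath fogs in the cold."),
    Shot("24mm", "tracking shot", "Dutch roll", "A figure runs through the blizzard."),
    Shot("24mm", "wide shot", "overhead drone", "Footprints trail across the snow."),
]

prompt = render_scene(scene)
print(prompt)
```

Keeping each shot as a record means a single field (say, the lens) can be swapped between runs while the rest of the scene text stays identical.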

15s slow‑motion shot generated purely in Sora using camera and frame‑rate grammar

A creator demonstrated a full 15‑second slow‑motion sequence authored entirely via Sora prompt language—specifying technical camera terms and frame‑rate semantics—no outside tools required. It’s a clean proof that Sora can obey pro‑level cinematography cues end‑to‑end in a single pass Slow-mo example.

Anime opening “Flameblade” made with Sora 2 via ImagineArt, scored in Suno

Director heydin_ai produced an original anime OP end‑to‑end by running Sora 2 inside ImagineArt’s pipeline (image‑to‑video), pairing it with a Suno music track. The result shows Sora’s control over dynamic motion and camera movement in stylized action sequences Method details, with the piece shared here Anime opening.

Creators push narrative shorts in Sora 2, from Harlem memories to teenage romance

Today’s drop included multiple narrative experiments: a concept short exploring 1960s Harlem stories and family memory work Concept short, and a lyrical romance vignette about first love’s intensity Romance short. The breadth—documentary‑tinged tone poems to sentiment‑driven fiction—highlights Sora’s viability for varied storytelling genres without leaving the text‑to‑video lane.

WaveSpeedAI offers a straight path to run Sora 2 Pro text‑to‑video

For creators looking to route prompts without wrangling infrastructure, WaveSpeedAI is advertising Sora 2 Pro text‑to‑video runs, with examples like a soft‑lit library romance clip circulating alongside a direct model page link Library scene, WaveSpeed Sora 2 Pro. This is useful for teams that want predictable access while experimenting with shot grammar and longer takes.

Sora 2 handles long yodel vocals in new demo

A circulating clip shows Sora 2 sustaining a traditional yodel with believable phrasing over a long take, underscoring native audio’s usefulness for single‑pass edits and mood pieces. While not a formal benchmark, it signals practical headroom for musical and VO‑driven shorts Yodel clip.

⚖️ IP, moderation, and guardrails this week

Policy and safety news affecting creatives—copyright pushback and content refusals. Excludes Sora 2’s creative wins (covered as the feature).

MPA calls out Sora 2 over alleged infringement, urges OpenAI to act

The Motion Picture Association publicly criticized OpenAI’s Sora 2 for enabling infringing derivatives of members’ films and series, pressing OpenAI to proactively prevent misuse. For creators, policy shifts or tighter filters could follow if platforms respond to studio pressure. MPA statement

- Expect heightened content-matching and takedown sensitivity on high‑profile IP as platforms try to defuse legal risk.

Even “kittens in a bouncy castle” trips Sora 2 content violation

A benign prompt, “kittens bouncing in a bouncy castle,” triggered a Sora 2 “Content Violation,” underscoring continued over‑blocking of harmless ideas. It follows the earlier overblocking reports, in which multiple innocuous prompts were refused. Friction like this pushes filmmakers toward prompt workarounds or alternative tools with clearer policies. Violation screenshot

Zelda Williams asks fans to stop AI videos of her father

Zelda Williams urged fans to stop sending AI-generated depictions of Robin Williams, calling them disrespectful and over‑processed—an ethical line many creators will face when portraying the deceased. This sentiment may influence platform rules around posthumous likeness use and consent. News headline

Sora 2 blocks a simple calendar‑flip shot for “similarity to third‑party content”

A creator’s prompt for an aesthetic office calendar flipping from December 2024 to October 2025 was blocked by Sora 2’s similarity guardrail, highlighting how generic scenes can be flagged as derivative. Creatives may need to rephrase mundane tropes or add distinctive elements to avoid automatic refusals. Guardrail screenshot

🎥 Veo‑3 Fast on Gemini: per‑shot cinematography specs

Crisp, reproducible Veo prompts with explicit camera moves and lighting on Gemini flows. Excludes Sora 2 (covered as feature).

Veo 3.1 sample clips show up inside Google Vids, likely Fast 720p

Creators spotted “Veo 3.1” sample clips embedded in Google Vids—believed to be the Fast 720p tier—following up on early access claims and proxy caveats; side‑by‑side comparisons against Veo 3 are mentioned in the thread Veo 3.1 samples.

Veo‑3 Fast prompt: F‑16 banking turn with vapor cones and HDR contrast

A fully specified Veo‑3 Fast prompt lays out a four‑shot F‑16 sequence—HDR contrast and rim lighting, a Dutch‑roll camera during a high‑G bank with condensation vapor, and a rack focus from wing vapor to the pilot—ready to paste into Gemini flows F16 prompt spec.

Creators say Veo 3 is still SOTA; pair Nano Banana edits with LTX Studio

A workflow thread argues Veo 3 remains state‑of‑the‑art and shows how to combine Nano Banana for tight edits with LTX Studio for stills→video story flows—useful for consistent characters, cinematic angles, and integrating extra elements before sending shots through Veo LTX Studio thread.

Matte black Challenger desert drift with mid‑ground explosion, shot‑by‑shot spec

For vehicle VFX, a ground‑level rear‑wheel tracking shot glides into a profile reveal of a matte‑black Hellcat drifting across the desert, with heat shimmer and a timed mid‑ground explosion ejecting debris in slow‑mo; focus, motion, and framing are spelled out per shot for Veo‑3 Fast on Gemini Challenger drift spec.

Tandem skydive over Palm‑style coast with continuous orbit and harsh split light

This Veo‑3 Fast shot plan emphasizes harsh midday split lighting, a 180° orbital rotation around the skydivers for geometric context, a vertical CRANE DOWN through clouds with volumetric lighting, and a soft canopy‑open denouement—structured for reproducible results on Gemini Skydive prompt spec.

🌌 Grok Imagine: cinematic tricks and failure modes

Practical direction tips and tests for Grok’s video model—reflections, seasonal transitions, moody angles—and where physics breaks.

Grok nails water reflections and wet hair for haunting mood

Following up on reflections handling, creators now highlight lifelike water reflections and convincingly wet hair that sell a somber, Ophelia‑like vibe Water reflection clip. For water scenes, lean into low angles near the surface and let specular highlights carry the emotion.

Prompt hack: seasons cue smooth time transitions in Grok Imagine

Mentioning a change of seasons (for example, spring to winter) in your prompt reliably yields a beautiful, felt passage‑of‑time transition in Grok animations Season transition tip. Use it to bridge scenes, signal story beats, or compress time without jump cuts.

Failure mode: hourglasses expose Grok’s non‑conserving physics

Creators report Grok struggles to conserve granular mass in hourglasses—the top pile doesn’t shrink in sync with the bottom growing, even with careful prompting Hourglass limitation. As a workaround, pick metaphors that don’t require strict volume conservation, or previsualize with a different engine and cut around continuity issues.

Low Dutch angles and shadow play land well in Grok

Low Dutch angles in Grok produce strong atmosphere for night skies and neon—great for tension and scale Low angle example. A companion “dance with your shadow” short shows how minimal blocking plus moody lighting can carry a scene without complex action Shadow dance clip. For tragic romance tones, similar framing sustains the fragile, haunted feel Romanticism clip.

🧩 Beyond Sora: WAN, Kling, Seedance & platform showcases

Community runs on other video engines and contests—ComfyUI+WAN pipelines, Seedance prompts, and Kling finalist highlights.

WAN 2.2 Animate stress‑tested end‑to‑end inside ComfyUI

Creators are pushing Alibaba’s WAN 2.2 Animate fully inside ComfyUI graphs and reporting strong motion control and stability Stress test, following up on the extended‑lipsync ComfyUI+WAN pipelines covered earlier. A separate demo shows GTA5 GIFs upscaled into near‑photoreal clips using WAN 2.2 with ComfyUI and Cset, underscoring a maturing, node‑based video workflow for stylization and temporal coherence GTA5 upscale.

Kling AI picks 10 finalists from ~5,000 entries; winners Oct 18 (UTC+8)

Kling AI named 10 finalists in its NextGen Creative Contest from nearly 5,000 submissions, with winners set to be announced on Oct 18 (UTC+8). The roundup highlights narrative, execution, creativity and significance as the judging pillars, signaling growing momentum around Kling’s role in AI‑powered storytelling Finalists post, and Congrats thread.

Pollo AI leans into multi‑model: Vidu Q2 and WAN 2.5 creator clips

Pollo AI continues positioning as a multi‑model video hub, with creators showcasing a detailed Vidu Q2 “futuristic tiger” piece and active runs on WAN 2.5. The breadth of engines—and frequent community demos—make it a practical sandbox for testing styles, motion fidelity, and native audio across models Model demo, and WAN 2.5 invite.

Imagen4 + Kling + ElevenLabs: fictional corporate PV pipeline demoed

A creator shared a “fictional enterprise PV” workflow combining Google Imagen4 for images, Kling AI for video generation, and ElevenLabs for voice—an end‑to‑end recipe for brand‑style promos without touching Sora. It’s a clear, reproducible template for motion, VO, and pacing across tools Workflow thread.

Seedance‑1‑pro prompt drop: cinematic war scene with whip‑pan and tracking

A detailed Seedance‑1‑pro recipe was shared for a gritty war sequence: fast whip‑pan from a tense close‑up to battlefield explosions, with dust, smoke, and chaotic forward motion—designed for instant reruns on Replicate. It’s a useful prompt scaffold for directors to test camera grammar (whip‑pans, orbits, rack focus) in a single shot Prompt share.

🛠️ Creator toolchains: offline agents, GitHub ties, upscalers

Practical tooling for production—offline AI agents, stream editing, repo integrations, and upscalers to slot into pipelines.

Libra brings a fully offline AI agent to Mac workflows

GreenBit AI’s Libra runs entirely on‑device (no internet), tackling multi‑step tasks like equity research, structured academic templates, and real‑world planning with a private, multi‑agent context engine First-hand review, Feature thread. The vendor pitches adaptive context management and orchestration for creators who need speed and privacy, with a direct download and overview on the site Libra homepage.

Grok Tasks is getting native GitHub integration

A settings screenshot shows GitHub listed under Integrations with a Connect button, noting that “currently only Grok Tasks can chat with or call external connections,” hinting at repo‑aware chats and actions for creative dev teams Settings screenshot.

Expect tighter loops for script/asset reviews, PR summaries, and automated chores across creative codebases.

DecartStream teases real‑time, no‑install livestream editing

DecartAI previewed DecartStream, promising instant, in‑browser live‑stream editing with no installs or setup—positioned for creators who want real‑time transformations during broadcasts Product teaser. If the latency and stability hold up at scale, this could remove a whole class of scene‑switching and post‑cut friction for streamers.

BasedLabs adds Topaz Video Upscaler to creator pipelines

BasedLabsAI now supports Topaz Video Upscaler, giving creators a high‑quality upscale stage inside their workflow rather than round‑tripping to a separate app Feature blurb. This slots neatly after image/video generation to lift delivery resolution and perceived detail without re‑rendering content.

🖼️ Stylized stills: synthwave packs, ink clones, MJ v7

Image prompt kits and reproducible params for illustrators and designers—synthwave looks, notebook ink portraits, and Midjourney v7 sets.

Midjourney v7 params: sref + high stylize pack for 3:4 frames

A compact MJ v7 recipe lands with exact knobs: --chaos 17 --ar 3:4 --sref 1089077810 --sw 500 --stylize 500, demonstrated via a multi‑panel collage Collage params.

This follows the earlier gothic collage, where v7 params first proved impactful. The set balances controlled variance (--chaos 17) with strong style adherence (--sw 500) and a consistent 3:4 canvas for editorial‑friendly layouts.
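
To reuse the pack across subjects, the flags can be stored once and appended to any prompt. This is a small Python sketch for assembling the text only. The flag names and values are the ones quoted above, while the `PACK` dictionary, the helper names, and the example subject are hypothetical. It does not call Midjourney.

```python
# Flags and values as quoted in the recipe above.
PACK = {"chaos": 17, "ar": "3:4", "sref": 1089077810, "sw": 500, "stylize": 500}


def mj_suffix(params: dict) -> str:
    """Join parameters into a '--name value' suffix, preserving insertion order."""
    return " ".join(f"--{name} {value}" for name, value in params.items())


def build_prompt(subject: str, params: dict = PACK) -> str:
    """Append the parameter suffix to a subject description."""
    return f"{subject} {mj_suffix(params)}"


# Hypothetical subject, for illustration only.
example = build_prompt("portrait of a lighthouse keeper")
print(example)
```

Holding the suffix constant and changing only the subject is what keeps the style reference and canvas consistent from image to image.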

Notebook ink clones: blue‑pen portraits that match your reference

A photo‑faithful “notebook ink” recipe circulates: generate a line drawing identical to the uploaded face (features, proportions, and expression), in blue/white ink on grid paper, with a drawing hand and eraser props for realism Prompt details. A second example shows a clean before/after with the pen‑in‑frame cue reinforcing the sketch illusion Before after.

Great for stylized bios and posters; keep likeness permissions in mind if you’re cloning real people.

Synthwave Cinematics: a ready-to-use neon grid recipe for retro city stills

Azed_ai shares a reusable “Synthwave Cinematics” prompt that nails the 80s neon look: glowing grid foreground, retro skyline, magenta/electric‑blue palette, VHS scan lines, palm silhouettes, and a big sun on the horizon Prompt recipe.

Useful as a base style for album art, posters, or hero banners—swap the subject to iterate while keeping lighting, color harmony, and composition consistent.

Neon wireframe Joker composites kick off a community remix

A “QT your joker” call showcases a striking look: a neon wireframe figure filled with a landscape plate, glowing orange/blue edges, and theatrical pose—perfect fodder for remix threads and style swaps Community thread.

Think of it as a compositing exercise: silhouette choice + interior texture + rim‑glow treatment yields fast, dramatic posters.

Pixar‑style 3D toon on a toilet: clean, symmetric image prompt

A playful prompt idea specifies a Pixar‑style 3D character seated in a bright minimalist bathroom, with black cap/white tee/black jeans, calm pose, and soft ambient lighting; symmetrical framing and --ar 3:2 --s 250 keep the render tidy for thumbnails or social tiles Prompt idea.

🎵 Music, voice, and lipsync tools

Audio creation workflows for films and shorts—vocal blending, singing lipsync tests, and quirky Sora audio demos. Excludes Sora’s filmmaking feature.

Udio adds Voice Blending and Playground

Udio rolled out a Voice Blending tool plus a new Playground mode so creators can mix vocals from multiple songs and generate tracks by pairing chosen voices with styles directly under Create → Voice Control Feature overview. The Playground offers a lightweight space for fast, fun exploration before committing to full arrangements, after what the team says was extensive internal testing Feature overview.

Lipsync‑2‑PRO holds up on elongated vocals

A fresh singing test shows SyncLabs’ lipsync‑2‑pro tracking slow, drawn‑out phrases with respectable, if imperfect, mouth‑shape alignment Singing test. This follows the earlier update that added uploaded‑audio support and boosted realism, positioning the model as a practical option for music videos and sung dialogue.

Sora 2 generates a convincing long yodel

A circulating demo captures Sora 2 producing an impressively long traditional yodel with coherent phrasing and timbre tied to the on‑screen performer Yodel clip. For teams weighing in‑model vocals versus DAW pipelines, it’s a signal that native audio is maturing; see additional audio notes on the WaveSpeed run page WaveSpeed Sora 2 page.

💸 Monetization: stock sites, payouts, and tool stacks

Where the money meets the workflow—stock platform strategy, payout roadblocks, and real creator subscription stacks.

AI character spend is surging—BytePlus reports +64% YoY

BytePlus reports global spend on AI characters is up 64% year over year, underscoring a fast‑growing monetization lane for social AI creators Trend announcement.

The company posted a 7‑minute primer and free training series to help teams build retention and revenue around virtual characters YouTube explainer.

X payout access still blocked by currency; creators push for escalation

Creators are still unable to withdraw X revenue in unsupported currencies; today, heydin_ai directly asked Nikita Bier to intervene on azed_ai’s blocked payout Escalation request, following up on Blocked payouts noted yesterday. The continued friction makes income fragile for creators outside supported regions; diversify revenue via stock marketplaces and direct support while this resolves.

Turn AI video renders into stock income on Envato and Pexels

A creator highlights a straightforward revenue play: upload AI‑generated videos to stock platforms like Envato and Pexels and earn per download; treat every render as a reusable asset Stock income tip.

- Quality polish helps acceptance; some creators add Topaz Video Upscaler inside BasedLabs for cleaner masters Upscaler add.

A lean monthly AI subscription stack creators actually pay for

ai_for_success shared a lean monthly AI tool budget and asked peers to compare stacks Subscription breakdown.

- Gemini Pro $20; Cursor Pro $20; Sider AI Plus $25 (yearly); Perplexity Pro $20; Grok included with X; $40–50 monthly API for Claude/OpenAI.

- Takeaway: treating each listed price as a monthly figure, the fixed subscriptions sum to about $85/month, and the stack lands near $125–$135/month once the $40–50 API spend is included; budget against expected stock sales or client revenue.
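
The range quoted in the list can be checked with a few lines of arithmetic. The sketch below assumes every listed price is a monthly figure, including the Sider AI Plus entry marked "(yearly)", whose billing basis is ambiguous in the source. It also counts Grok as $0 because it is described as included with X. The variable names are illustrative.

```python
# Fixed subscriptions as listed (USD, assumed monthly).
fixed = {
    "Gemini Pro": 20,
    "Cursor Pro": 20,
    "Sider AI Plus": 25,   # marked "(yearly)" in the source; treated as monthly here
    "Perplexity Pro": 20,
}

# Variable API spend for Claude/OpenAI, as a low/high range.
api_low, api_high = 40, 50

fixed_total = sum(fixed.values())
total_low = fixed_total + api_low
total_high = fixed_total + api_high

print(f"fixed: ${fixed_total}/month")
print(f"with API: ${total_low}-${total_high}/month")
```

Under these assumptions the $125–$135 figure is reached only with the API spend included; the subscriptions alone come to $85.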

📲 Templates & consumer apps for quick wins

Lightweight, fun creation flows—Gemini Canvas mashups, script‑to‑video, 30s video toys, and AI character trend primers.

BytePlus: Global spend on AI characters up 64% year over year

BytePlus highlights surging creator demand for AI characters, noting a 64% YoY increase in global spend and offering a 7‑minute explainer plus a free training series for app teams and creators Trend video, with the full primer on YouTube YouTube explainer.

Udio launches Voice Blending and Playground for mix‑and‑match vocals

Udio rolled out Voice Blending—mix vocals from multiple tracks—and a new Playground that lets you generate songs by choosing voice and style, both surfaced under Create → Voice Control for faster ideation Feature summary.

DecartStream promises no‑install, real‑time livestream editing for creators

DecartAI teased DecartStream: instant, on‑the‑fly transforms for livestreams with no downloads or setup—aimed at streamers who want quick visual changes during broadcast Product teaser.

Gemini Canvas app puts your photo on TV in seconds

A free Gemini Canvas template from Ozan Sıhay lets anyone drop a selfie into TV program frames and share the result—no installs, just upload a TV shot and your photo, pick the show, and export App demo. Try it via the shared Canvas link Gemini share.

Free Gemini Canvas poster maker ships as a shareable template

A second free Canvas app (by Serkan Girgin) generates stylized posters from your inputs—quick layouts and export without leaving the browser App screenshots. Launch it directly from the shared Canvas link Gemini share.

📅 Creator events and calls

Meetups and calls worth a look—immersive showcases, conference booths, and programming leads seeking creator submissions.

AIMusicVideo opens on‑timeline promo slots for creators

AIMusicVideo’s Director of Programming is actively soliciting submissions and offering on‑timeline promotion, a timely avenue for musicians, editors, and directors to showcase AI‑assisted music videos to a receptive audience Open call thread.

PixVerse shows up at VidSummit with booth and workshops

PixVerse highlighted its presence at VidSummit with booth activity and workshop sessions geared toward creators scaling AI video from idea to distribution; onsite signage and schedules point attendees to download links and RSVPs Booth photos.

BytePlus launches free virtual training series on AI character strategy

BytePlus shared a 7‑minute explainer on AI character trends and invited creators to a free virtual training series focused on engagement, monetization, and app growth using AI characters Series invite YouTube overview.

Kling AI names 10 finalists from ~5,000 entries; winners Oct 18

Kling AI announced 10 finalists in its NextGen Creative Contest from nearly 5,000 global submissions, with winners set to be revealed on Oct 18 (UTC+8)—a high‑visibility showcase for AI filmmakers and motion designers Finalists announcement.

📈 Adoption & capability signals (light day)

Usage pulses and capability notes relevant to creative tooling: Gemini traffic, agent horizons, and a sparse MoE update.

Gemini traffic jumps 46% MoM in September as creatives await 3.0

Similarweb data shared by creators shows Gemini.google.com up 46.24% month over month in Sep 2025, far outpacing peers like ChatGPT (+0.98%) and Perplexity (+14.35%), as anticipation builds for Gemini 3.0 Flash/Pro “in a few weeks” Traffic chart. This follows the earlier CLI usage report, in which Google cited 1M devs and ~1,300T monthly tokens.

Agent time horizons double ~every 7 months; Claude near 1‑hour tasks

METR’s charted 50% success time horizon for AI agents (the task length, measured in human working time, that an agent completes with 50% reliability) has doubled roughly every 202 days over six years, with Claude Sonnet landing around an hour. That suggests practical multi‑scene, longer edit tasks will keep moving on‑agent for creators Chart post.
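
The headline's "every 7 months" and the 202‑day figure are the same claim in different units, and the doubling rule can be written as h(t) = h0 · 2^(t / 202) with t in days. The Python sketch below shows that conversion and a naive extrapolation. It assumes the trend simply continues and starts from a 1‑hour horizon (the "around an hour" figure above); the function and constant names are illustrative, and the output is not a forecast from METR.

```python
DOUBLING_DAYS = 202            # doubling period quoted above
DAYS_PER_MONTH = 365.25 / 12   # average calendar month length


def horizon_after(days: float, start_hours: float = 1.0) -> float:
    """Task horizon in hours after `days`, if doubling continues unchanged."""
    return start_hours * 2 ** (days / DOUBLING_DAYS)


doubling_months = DOUBLING_DAYS / DAYS_PER_MONTH
print(f"doubling period: {doubling_months:.2f} months")

# One and two doubling periods out from a 1-hour starting point.
for d in (DOUBLING_DAYS, 2 * DOUBLING_DAYS):
    print(f"after {d} days: {horizon_after(d):.1f} hours")
```

The doubling period works out to a little over six and a half months, which the headline rounds to seven.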

Leak points to Oct 22 target for Gemini 3.0

A creator cites an October 22 due date for Gemini 3.0, adding a concrete near‑term window to weeks of speculation and setting expectations for visual model updates sought by filmmakers and designers Date leak claim. Community mood meanwhile nudges DeepMind to “do something,” underscoring pent‑up demand among creatives Waiting meme.

RND1‑Base (30B sparse MoE, 3B active) posts 57.2% MMLU, 72.1% GSM8K

Radical Numerics unveils RND1‑Base, a 30B‑parameter sparse MoE diffusion language model (3B active) converted from Qwen3‑30B‑A3B, claiming 57.2% MMLU, 72.1% GSM8K, and 51.3% MBPP after 500B tokens via simple continual pretraining with bidirectional masks and massive batches—an efficiency signal relevant to on‑device and budget pipelines for creatives Model slide.
