LongCat ‑ Video 13.6B generates 15‑minute 720p clips – open‑source cuts compute 90%

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Meituan just open‑sourced LongCat‑Video, a 13.6B‑param generator that finally treats long‑form like a first‑class citizen. One model handles text→video, image→video, and continuation, and it renders coherent footage in minutes. The headline: single‑pass runs stretch to roughly 15 minutes of continuous story, not clip stitching.

Under the hood, hierarchical temporal modeling, coarse‑to‑fine rendering, Block Sparse Attention, and multi‑reward RLHF do the heavy lifting. Independent analyses report a 90%+ compute cut versus naïve approaches, and the project ships with commercial licensing — which means indie shorts, music videos, and previz teams can standardize on an open stack without waiting on closed APIs. Early testers say quality beats Wan 2.2 and inches toward Veo 3, with output suitable for boards and social cuts at 720p/30fps. The real trick is consistency: characters, props, and motion cues stay on‑model across long arcs in a single temporal pass, which is exactly what directors need to iterate scenes, not just shots.

If the community dials in faces and motion timing, closed models lose their monopoly on length. And with Sora 2 Pro halving web gen costs this week, the price‑performance curve for high‑end AI video is bending fast in creators’ favor.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

15‑minute open‑source video (LongCat‑Video)

Open‑source LongCat‑Video (13.6B) generates up to 15 minutes of coherent 720p/30fps video with native continuation and multi‑reward RLHF—bringing long‑form, consistent storytelling to creators without closed‑model lock‑in.

Cross‑account buzz: Meituan’s LongCat‑Video promises minutes‑long, coherent generation with text→video, image→video, and continuation in one model—big implications for indie films, music videos, and previz.

Jump to 15‑minute open‑source video (LongCat‑Video) topicsTable of Contents

🎬 15‑minute open‑source video (LongCat‑Video)

Cross‑account buzz: Meituan’s LongCat‑Video promises minutes‑long, coherent generation with text→video, image→video, and continuation in one model—big implications for indie films, music videos, and previz.

Meituan’s LongCat‑Video debuts: minutes‑long coherent T2V/I2V/continuation at 720p/30fps

Meituan introduced LongCat‑Video (13.6B params), a unified model for text‑to‑video, image‑to‑video, and video continuation that can generate minutes‑long coherent clips in “minutes” at 720p/30fps. It pairs coarse‑to‑fine rendering with Block Sparse Attention and multi‑reward RLHF, with creators noting it outperforms Wan 2.2 and nearly matches Veo 3 on quality Launch thread, with more details and galleries on the official site Project page. This open‑source release targets indie filmmaking, music videos, and previz with long‑form consistency across shots Launch recap.

15‑minute unified video generation with >90% compute cut, open‑source for commercial use

Independent analysis highlights LongCat‑Video’s hierarchical temporal modeling that preserves local smoothness and global story consistency for up to ~15‑minute sequences—this isn’t clip stitching but one coherent temporal pass. Efficiency comes from coarse‑to‑fine rendering and Block Sparse Attention, reportedly cutting compute by over 90%, and the model ships open‑source with commercial licensing, broadening adoption for long‑form shorts, music videos, and previz Creator analysis, with a deeper breakdown here Model analysis.

🧩 Directed worlds with LTX Elements

LTX rolls out Elements—tag, save, and reuse characters, props, and locations with voice assignment and frame‑accurate consistency; plus 20s LTX‑2 one‑take shots with synced audio. Excludes LongCat‑Video feature.

LTX Elements ships: reusable characters, props and locations with voice and shot‑to‑shot consistency

LTXStudio introduced Elements, a system to tag, save, and reuse characters, props, environments, and wardrobe—optionally with assigned voices—so they keep the same look and scale across your boards and edits, and it’s available to all users today release thread, availability note, with details on the workflow at the official page product page.

- Save and reuse flow: generate or upload, Save as Element, name it, assign a voice for characters, then tag it in shots to maintain continuity how to steps.

- Continuity and control: Elements preserve scale/appearance in Storyboard and support add/remove/blend compositing for precise scene tweaks storyboard continuity, compositing tips.

- In‑app motion: pair Elements with LTX‑2 Fast/Pro to animate stills with native lipsync and expressions without leaving your project animation inside LTX.

LTX‑2 delivers 20‑second one‑takes with synchronized audio; API Playground is live

LTX‑2 now generates a single 20‑second cinematic take with synchronized audio from one prompt, and you can try it immediately in the API Playground with a public demo and guide—advancing from yesterday’s 18‑second oner that showcased continuous shots from a still how‑to guide, sample prompt, API playground.

- Demo scene: a frog yoga class plays out in one shot—camera pan, chants, and comedic beat are paced by a single prompt, illustrating timing‑aware A/V sync sample prompt.

🎛️ All‑in‑one creative hubs

Platforms consolidate top video models into single workspaces to speed pipelines and collaboration. Excludes LongCat‑Video feature.

Adobe Firefly Boards lets creators try top AI models inside one canvas

Adobe is pitching Firefly Boards as a creative "god mode" that pulls multiple leading AI models into a single workspace for side‑by‑side exploration and iteration, positioned for MAX‑era makers working across image and video feature thread. A broader Creative Cloud promo window (Oct 28–Dec 1) is also driving hands‑on time across Adobe’s AI stack, which can amplify the value of a multi‑model board free access window.

fal adds Reve Fast Edit & Remix with 4‑image references at $0.01/image

Inference hub fal rolled out Reve Fast Edit & Remix, promising realistic, localized edits that preserve identity and scene coherence, with up to four reference images to compose the target look for just ~$0.01 per image model launch. The team shared before/after examples that swap moods and skies while keeping geometry and reflections intact—useful for lookbooks, product swaps, and social remixes sample gallery.

Sora 2 goes live on Pollo AI, expanding its creator hub

Pollo AI added Sora 2, giving its community direct access to OpenAI’s latest video model inside a broader creative hub that already funnels challenges and rewards to makers availability note, following up on CPP sponsorship that detailed creator funding/events. For AI filmmakers, this centralizes a top‑tier generator alongside existing workflows and community activation in one place.

Vidu App launches Creative version with co‑creation modes and style library

Vidu released its Creative version focused on an “effortless” workflow for making AI video, adding an in‑app library of subjects/styles and interactive co‑creation modes to streamline concept‑to‑cut for solo creators and teams release note. Expect faster look development and fewer round‑trips between tools for stylized shorts and branded explainers.

🖼️ Precision image edits at scale

New image editors focus on realism, consistency, and cheap per‑image costs for product, brand, and design work. Excludes LongCat‑Video feature.

Fal launches Reve Fast Edit & Remix at ~$0.01 per image with up to four reference images

Fal rolled out Reve Fast Edit & Remix for precision image editing that keeps scene consistency while letting you composite up to four reference images—priced around $0.01 per image, which targets large‑scale product and brand workflows Model launch. A follow‑up tease underscores selective edits (“edit what matters, keep everything else untouched”), reinforcing an object‑aware pipeline designed for realistic, localized changes Feature brief.

Adobe previews Firefly 5 layered image editing for contextual, object‑aware adjustments

A sneak peek at Firefly’s next image model highlights layered image editing and contextual object awareness, indicating deeper control for compositing and selective refinements without collateral changes—positioning Firefly as a precision editor for brand assets and product shots Preview note.

Runware’s Riverflow 1.1 shows production‑ready product edits and a promptable Playground

Riverflow 1.1 demonstrates clean, commercial‑grade image edits (e.g., a studio perfume shot adapted seamlessly to a beach at sunrise) that preserve labels, materials, and lighting continuity—useful for catalogs and social refreshes at scale Feature demo. The new Playground exposes promptable tasks like turning armor into gold, converting a character into a truck form, or placing a cream jar on a water lily, signaling controllable, object‑level transformations for brand storytelling and CPG scenes Task examples and Transformer edit; try it directly via the hosted interface Runware playground.

💳 Access and credits: cheaper gens, promo boosts

Lower gen costs and credit promos widen access for creators across tools. Excludes LongCat‑Video feature.

Sora 2 Pro on web cuts generation cost by 50%

OpenAI is halving the web generation cost for Sora 2 Pro, with the team saying they’ll re‑evaluate pricing after the initial reduction pricing update. This meaningfully lowers the barrier for filmmakers and motion designers experimenting with high‑end AI video.

fal unveils Reve Fast Edit & Remix at ~$0.01 per image

fal’s new Reve Fast Edit & Remix promises realistic, consistent image edits for roughly one cent per image and supports up to four reference images for composite control—useful for design and product shots at scale model pricing. A follow‑up shows precise before/after scene edits that preserve composition while changing sky, mood, or subject before/after demo.

ChatGPT Go free for one year in India, then ₹399/month

OpenAI is offering Indian users 12 months free of ChatGPT Go, after which pricing is listed at ₹399/month per the promo modal (cancel anytime, promo terms apply) offer screenshot. For writers and story teams, this lowers the cost of ideation, outline drafting, and asset prep.

PixVerse launches Remix and offers 300 credits for a retweet

PixVerse rolled out a one‑tap Remix that swaps subjects in any feed clip and is dangling 300 free credits to anyone who retweets, delivered via DMs feature and promo. For creators, this is both a new rapid-edit tool and a quick way to stock up credits.

Higgsfield debuts YouTube channel with 200 free credits (12‑hour code)

Higgsfield launched a YouTube channel showcasing Sketch‑to‑Video—turning kids’ drawings into animated stories—and is giving 200 free credits via a code in the video description for the next 12 hours channel launch, with the demo linked for immediate viewing YouTube showcase.

Invideo opens Creative Ambassador cohort with early access and credits

Invideo is recruiting its first Creative Ambassador cohort, offering early access, credits, and a chance to co‑build features with the team—an on‑ramp for power users to scale content pipelines affordably cohort invite.

🎥 Creator premieres, shows, and community moments

Notable content drops and community events: collective shorts, livestreams, and meetups that shape creative trends. Excludes LongCat‑Video feature.

‘Enter The Closet’ premieres: 23+ artists co-create an AI short, now in 4K

A community-made AI short film, Enter The Closet, debuted with contributions from 23+ artists using an arsenal of tools (Veo 3.1, Sora 2 Pro, Hailuo 2.3, Nano Banana, Firefly, Luma, Kling, ElevenLabs, Topaz). Watch it in 4K and scan the full credits and tool list to study real multi-model workflows film thread and YouTube 4K.

The project’s shoutouts and follow-up posts show a coordinated creator hive-mind, signaling how collaborative pipelines are evolving across the AI film community creator repost.

GLIF launches “The AI Slop Review” livestream; Episode 1 airs Nov 4 with 1B‑view guest

GLIF is debuting a new livestream series, The AI Slop Review, to unpack trends, memes, and standout works in AI video; Episode 1 features creator Bennett Waisbren (first AI creator to hit 1B views) and goes live Nov 4 at 1 PM PST show announcement and YouTube livestream. For AI filmmakers and editors, this is a useful pulse check on what resonates at scale.

Freepik hosts in‑person AI Partners Meetup to align creators and platform roadmap

Freepik opened its HQ for an AI Partners Meetup, bringing together creators and product leaders for a roundtable on workflow needs and what’s next for the platform’s creative stack event recap. The photo stream captures community energy and signals tighter feedback loops between tool builders and power users community photos.

Speakers noted the value of in‑person ideation for shaping future features and collaborations roundtable shots.

Hailuo at Upscale Conf Málaga: hands‑on workshop Nov 4 and keynote Nov 5

Hailuo AI will run a filmmaking workshop (Nov 4, 16:35) on prompt‑to‑perception video craft and deliver a keynote (Nov 5, 13:15) on Hailuo 2.3 and Audio 2.6’s impact on motion and collaboration agenda details.

- Workshop: Jorge Caballero and Anna Giralt Gris (Artefacto Films)

- Keynote: Meron Yao (Hailuo community lead)

For AI directors and producers, it’s a rare chance to see studio‑grade motion techniques taught live.

OpenArt Music Video tool spreads: creators test full videos from any song in minutes

OpenArt’s new Music Video capability is circulating, promising an end‑to‑end video cut from a single song in minutes—an enticing workflow for musicians and editors seeking rapid concept pieces tool drop. Early trials suggest fast cuts suitable for social and iterative refinement.

Higgsfield launches Sketch‑to‑Video YouTube channel with 200 free credits promo

Higgsfield opened a YouTube channel centered on Sketch‑to‑Video—turning kids’ drawings into animated stories—and is offering 200 free credits via a code in the video description (12‑hour window) channel launch and YouTube demo. For educators and family creators, it’s a timely on‑ramp to narrative animation with hand‑drawn inputs.

Vidu‑powered short ‘Memento Mori’ lands with cinematic tone in the Q2 era

Creator Dinda shared Memento Mori, a poetic sci‑fi short powered by Vidu Q2 that explores mortality and love through a mother‑astronaut’s dreamlike ordeal and awakening short film post. It’s a compact showcase of Q2‑era aesthetics and pacing for AI storytellers looking to study narrative restraint.

🎚️ Udio’s 48‑hour download scramble (now live)

Follow‑up to prior notice: the downloads window is open with format limits and no fingerprinting—musicians rush to archive catalogs. Excludes LongCat‑Video feature.

Udio opens 48-hour download window; creators race to save non-fingerprinted catalogs

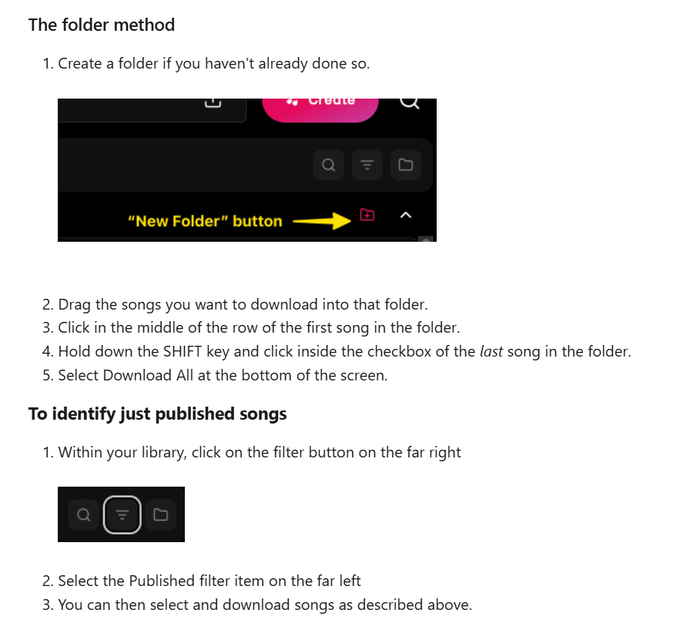

Udio has flipped on its 48-hour downloads window (11am ET Nov 3 → 10:59am ET Nov 5), following window details (WAV/MP3 limits) and confirming urgency for musicians archiving back catalogs. The community notes files from before Oct 29 aren’t fingerprinted, subscribers can pull WAVs and stems, free users get MP3 and video, and bulk grabs work via folders—with rumors of a bulk downloader still unconfirmed. See timing specifics and allowances in window timing, the mobilization thread with Udio’s Reddit link in reddit announcement, the format and “no fingerprinting” callouts in download details, and the creator workload signal (“two nights dedicated to downloads”) in creator note.

- Free vs. paid: MP3/video for free users; WAV + stems for subscribers download details.

- Bulk options: Folder-based bulk MP3; stems/WAV bulk unclear; third‑party bulk rumor circulating window timing.

- No second window promised: “No plans” to reopen, so this 48h window is critical download details.

- Account control: Bulk delete (50 at a time) or full account delete offered download details.

🎨 Fresh style kits and srefs (MJ & more)

A day of practical style shares—Midjourney v7 recipes, cozy vector clipart, gothic anime, and surreal doodle looks. Excludes LongCat‑Video feature.

Midjourney v7 recipe: chaos 22 + sref 2690871695 delivers crisp 3:4 drama

A fresh MJ v7 setup — --chaos 22 --ar 3:4 --sref 2690871695 --sw 500 --stylize 500 — is producing striking, high‑contrast frames across varied subjects, following v7 recipe that spotlighted a different sref/chaos mix. See the collage and parameters in the creator’s share recipe post.

Cinematic 1980s anime look via sref 3413445974

An MJ style reference --sref 3413445974 channels 1980s gothic fantasy anime (Vampire Hunter D, Lodoss War vibes), with guidance on motion grammar if animated: slow pans, wind, gaze, and light over pure action style breakdown.

Horror ‘production still’ prompt template for cinematic symmetry

A versatile prompt pattern — “[Subject], symmetry, production still, horror, soft lighting, cinematic, [Color]-core, 35mm, visible film grain, highly detailed” — yields moody, symmetrical frames that read like on‑set unit photography prompt template.

Naïve surreal doodle style sref 136643823 blends photo and sketch

This childlike expressionist sketch sref fuses naïve illustration with digital collage, often mixing real photographs with drawn elements for playful, slightly melancholic results—great for quirky editorial or poster looks style share.

Winter Whimsy: a reusable vector watercolor prompt for seasonal clipart

A compact, fill‑in‑the‑blanks prompt template yields clean, flat vector watercolor clipart (subjects, accessories, settings, solid backgrounds) with crisp edge separation and clipping paths—ideal for packs and marketplaces prompt blueprint.

Clean cartoon look with srefs 2844626811 and 2431575232

Paired MJ srefs (2844626811, 2431575232) drive a tidy cartoon aesthetic across everyday scenes—think minimal lines, soft palettes, and approachable character design sref examples.

Zero‑prompt silhouette sref for striking B/W figures

A black‑and‑white, high‑texture silhouette sref invites zero‑prompt experimentation—drop it in to get graphic, frost‑like figure studies that pop on white backgrounds sref share.

Midjourney’s reflections are getting uncannily good

Creators are calling out how well MJ now handles reflections—useful for water, glass, and mirror‑driven compositions without extensive post creator note.

🏗️ Big AI contracts and compute alignment

One major infra deal shapes creative AI’s supply side; may influence model access/pricing downstream. Excludes LongCat‑Video feature.

OpenAI signs $38B, seven‑year AWS deal to run advanced AI workloads

OpenAI announced a $38 billion, seven‑year strategic partnership with AWS to run its advanced AI workloads on AWS infrastructure, with operations starting immediately deal graphic. The scale and term signal a multi‑cloud, multi‑supplier posture that could stabilize capacity and pricing for high‑demand creative models used by filmmakers, designers, and musicians.

- What’s in scope: “Advanced AI workloads” on AWS over seven years, implying meaningful new burst capacity for training and inference deal graphic.

- Why creatives should care: more capacity and redundancy typically translate into shorter queues and potential price relief; creators are already seeing cuts like Sora 2 Pro’s 50% gen‑cost reduction on web pricing update.

- Reading the room: community quips about OpenAI partnering with every GPU supplier underscore an aggressive supply‑aggregation strategy to meet demand GPU partner quip.

🗣️ Digital humans and ensemble dialogue

Enterprise‑leaning tools bring multi‑character dialogue and identity‑aware performance into one frame. Excludes LongCat‑Video feature.

OmniHuman 1.5 adds synchronized multi‑character dialogue with automatic voice routing

BytePlus’ OmniHuman 1.5 enables ensemble performances in a single frame by intelligently routing separate audio tracks to the correct character, aligning gestures, glances, and lines for natural group dialogue Feature brief. Teams can engage sales for pilots or deployment via the official channel Contact page.

BytePlus bundles Seedream 4.0, Seedance 1.0, and OmniHuman 1.5 for enterprise digital presenters

BytePlus is positioning an end‑to‑end creation stack—Seedream 4.0 (visual ideas), Seedance 1.0 (motion), and OmniHuman 1.5 (dialogue‑ready digital humans)—to ship explainers, training sims, and interactive presenters faster with brand‑consistent quality Suite overview. Prospects are directed to sales for scoping and pricing Contact sales.

⚖️ Policy and trust signals

Copyright and policy clarity affecting how creators publish and advise audiences. Excludes LongCat‑Video feature.

Judge allows authors’ copyright claims vs OpenAI; ChatGPT summaries could infringe

A federal judge reportedly denied OpenAI’s bid to dismiss authors’ core copyright claims, signaling that ChatGPT outputs such as book summaries may constitute infringement if they substitute for the originals court ruling. For creatives and studios using LLMs to generate synopses, outlines, or promotional copy, this ups the need for rights checks and licensing when works are still in‑copyright.

Udio’s 48‑hour downloads open with old ToS coverage and no fingerprinting, users say

The downloads window is live for works created before Oct 29, with users reporting that retrieved files fall under the old ToS and are not fingerprinted; Udio also outlines a folder method for bulk MP3 grabs and suggests no immediate plan for another window downloads live. The window runs Nov 3, 11:00 ET to Nov 5, 10:59 ET per a posted note window timing, with communities sharing the Reddit announcement to mobilize archival pulls reddit link, following up on 48‑hour downloads.

No, ChatGPT didn’t stop health advice; OpenAI policy unchanged on professional guidance

A viral claim that ChatGPT would stop giving health advice is false; OpenAI’s usage policy remains that AI is not a replacement for licensed professionals, and tailored medical/legal advice requires appropriate professional involvement policy screenshot. Creators can continue publishing health explainers and general guidance, but should keep disclaimers and avoid impersonating licensed care.

Creators call for call watermarking as Sonic‑3 hits 90 ms human‑like voice latency

As real‑time voice models approach human cadence—Sonic‑3 is cited at ~90 ms with natural laughter—creators are urging watermarking for phone calls to deter impersonation and set audience expectations watermark call. Voice actors, podcasters, and brands should consider audible cues, disclosure scripts, and platform labels until standard call‑level provenance emerges.

🧠 Research watch for creative AI

Mostly model/agent methods relevant to multimodal creation and safety; practical to track for future tools. Excludes LongCat‑Video feature.

Online RL fine-tunes flow-based VLA models to 97.6% on LIBERO

π_RL introduces online reinforcement learning for flow‑based Vision‑Language‑Action models, pushing LIBERO success up to 97.6% while improving ManiSkill performance—promising for physically grounded creative agents and robots. See the overview in Paper thread and details in Paper page.

- Two training paths: Flow‑Noise (denoising as MDP with learnable noise) and Flow‑SDE (ODE→SDE for exploration), both designed for parallel simulation fine‑tuning Paper page.

Visual backdoors against embodied MLLMs hit up to 80% ASR

BEAT shows how object‑based visual triggers can backdoor embodied MLLM agents with up to 80% attack success while keeping benign task performance intact—an urgent safety note for tool‑using creative assistants and on‑device agents. Overview in Paper brief, with methods and metrics in Paper page.

Continuous autoregressive LMs compress tokens to vectors with 99.9% fidelity

CALM replaces next‑token with next‑vector prediction via a high‑fidelity autoencoder, reconstructing text with >99.9% accuracy while reducing generation steps—useful for faster story tools, longer drafts, and interactive creative assistants. See the overview Paper page and discussion Author discussion.

Fine‑grained quant study: MXINT8 beats FP at 8‑bit; NVINT4 shines with Hadamard

A comprehensive comparison of low‑bit formats finds a crossover: coarse‑grain favors floating‑point, but at fine‑grain, integer formats (MXINT8) lead on accuracy and efficiency; with Hadamard rotation, NVINT4 can surpass 4‑bit FP. The authors also fix gradient bias via symmetric clipping—good news for on‑device creative apps. Summary in Paper thread and deep dive in Paper page.

- MXINT8: near‑lossless training and hardware‑efficient inference at 8‑bit fine‑grain.

- 4‑bit: FP often ahead, but NVINT4 + rotation exceeds FP on key tasks Author discussion.

OS‑Sentinel combines formal checks and VLM context to police mobile agents

OS‑Sentinel pairs formal verification with VLM‑based contextual assessment to catch unsafe actions in mobile GUI agents across realistic workflows—relevant for creative‑suite copilots that automate app sequences. See the paper overview Paper card and a companion summary in Paper summary.

Plug‑and‑play RoPE variants boost VLM consistency across tasks

Revisiting multimodal positional encoding, the authors propose Multi‑Head RoPE and MRoPE‑Interleave—simple, drop‑in designs that preserve textual priors, use full frequency bands, and improve layout coherence—delivering consistent lifts on general and fine‑grained VLM benchmarks. This lands well for image/video tools that juggle dense spatial text prompts, following up on Modalities grid mapping big‑tech modality coverage. Read the study Paper page and author Q&A in Author discussion.

NeuroAda cuts PEFT memory by selectively adapting neurons with bypass links

NeuroAda blends selective adaptation with bypass connections to unlock strong parameter‑efficient fine‑tuning with far fewer trainables and lower memory—handy for shipping style/character adapters inside constrained creative stacks. Paper snapshot in Paper page.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught