Google Gemini 3 Pro pairs with Nano Banana 2 – mobile Canvas sightings begin


Executive Summary

Google’s next creative stack looks closer to landing. Fresh Gemini app strings say “Try 3 Pro to create images with the newer version of Nano Banana,” and multiple creators report Gemini 3 showing up in the mobile app’s Canvas while the web UI stays unchanged. If 3 Pro really rides with Nano Banana 2, image gen moves into a surface many teams already use—fewer app hops, faster comps.

What’s new since Friday’s Vids‑panel leak tying Nano Banana Pro to Gemini 3 Pro: the pairing now appears inside the core Gemini app, not a separate video tool, and an early on‑device portrait shows long‑prompt fidelity to lighting, styling, and jewelry cues. That hints at better attribute adherence for fashion‑grade directions instead of collapsing into generic looks. Reports suggest fresh TPU capacity is in play (yes, the memes are back), but treat rollout as region‑staggered until Google says otherwise.

Practical takeaway: queue a small A/B script and compare mobile Canvas outputs against your current image‑to‑image pipeline the moment the 3 Pro switch appears. The upside is time—tighter prompt control where you already work and fewer round‑trips to third‑party apps.
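One way to run that A/B pass is a blind side-by-side tally. The sketch below is only an illustration: the labels `canvas` and `current` are hypothetical names for the two pipelines, and the votes come from a human reviewer, not from any Gemini API.

```python
import random
from collections import Counter

PIPELINES = ["canvas", "current"]  # hypothetical labels, not product names


def blind_pairs(prompts, seed=0):
    """Yield (prompt, left, right) with the pipeline order shuffled per prompt,
    so the reviewer cannot tell which side is which."""
    rng = random.Random(seed)
    for prompt in prompts:
        sides = PIPELINES[:]
        rng.shuffle(sides)
        yield prompt, sides[0], sides[1]


def tally(votes):
    """Count reviewer picks ('canvas', 'current', or 'tie') and return the
    share of decided comparisons won by 'canvas' (None if none were decided)."""
    counts = Counter(votes)
    decided = counts["canvas"] + counts["current"]
    win_rate = counts["canvas"] / decided if decided else None
    return counts, win_rate


if __name__ == "__main__":
    counts, win_rate = tally(["canvas", "current", "canvas", "tie"])
    print(counts, win_rate)
```

Keeping ties out of the win rate avoids rewarding either side for prompts where both outputs were equally usable.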

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

Gemini 3 + Nano Banana 2 watch

Gemini 3 Pro + Nano Banana 2 appear to be rolling out (code strings + mobile Canvas sightings). If confirmed, Google’s creative stack could shift mobile-first image/video workflows for millions of creators.


🚀 Gemini 3 + Nano Banana 2 watch

Cross‑account signals show Google’s next creative stack landing: code strings tie Gemini 3 Pro to a newer Nano Banana, mobile Canvas sightings, and early creator tests. Bigger than vibes—this impacts everyday image/video flows.

App strings hint Gemini 3 Pro pairs with a newer Nano Banana for image gen

New UI text found in the Gemini app says “Try 3 Pro to create images with the newer version of Nano Banana,” implying a coordinated drop of Gemini 3 Pro with Nano Banana 2 for creatives (Code strings). This builds on earlier reporting that tied Nano Banana Pro to Gemini 3 Pro in Google’s video stack (Vids leak).

If accurate, expect image quality and control to step up inside Gemini surfaces where creators already work, reducing the need to bounce between third‑party apps.

Creators spot Gemini 3 in the Gemini mobile app’s Canvas, not on the web

Multiple posts say Gemini 3 is appearing inside the Canvas feature on the Gemini mobile apps while the web UI remains unchanged (Mobile sighting). For designers and storytellers, that points to a phone‑first rollout path, which means early testing will skew to on‑device image flows and quick comps rather than desktop pipelines.

Early ‘Nano Banana in GeminiApp’ portrait shows long‑prompt fidelity

A creator shared a highly detailed Nano Banana portrait generated inside the Gemini app, crediting prompt sensitivity down to lighting, styling, and jewelry details (Portrait prompt). For art directors, this hints the new model can track dense, fashion‑grade descriptors without collapsing into generic looks.

It’s one sample, but the attribute adherence and DOF cues look closer to pro photo direction than prior Nano Banana runs.

Hype builds: Bard→Gemini 3.0 memes, a poll, and “TPU goes brrrr”

Creator chatter around Gemini 3.0 is spiking: a viral Bard→Gemini 3.0 meme is making the rounds (Meme post), a poll is gauging excitement directly in‑feed (Excitement poll), and posts hint the next Nano Banana will ride fresh TPU capacity (TPU comment). Another quip notes “everyone is waiting for Gemini 3.0 and Nano‑Banana 2” (Waiting post).

Signal for teams: plan prompt tests and side‑by‑sides the moment the mobile Canvas or 3 Pro switches light up in your region.

🎬 Faster gens, tighter motion (video)

Today skewed to speed and control: PixVerse V5 accelerates 1080p; Kling 2.5 Start/End Frames stabilize cuts; creators compare Grok vs Midjourney video. Excludes Google’s Gemini/Nano Banana rollout (feature).

Kling 2.5 Start/End Frames: cleaner joins and less color drift in creator tests

Creators report that Kling 2.5’s Start/End Frames now hold joins more tightly and avoid the old “wild color shifts.” One shared workflow even chains Freepik’s new camera‑angle space, Seedream, and Nano Banana, with only light speed‑ramping needed to hide seams (Creator workflow). This is a practical control win if you stitch many shots.

Following up on Start–End frames, which showed cleaner continuity, we now see added evidence across settings: a clean city time‑slice demo (Start/end frame demo) and an anime‑meets‑live‑action test that keeps characters grounded to the plate (Anime‑live blend). These are small, steady improvements. They matter for editor time.

Who should care: Short‑form storytellers and social teams stitching multiple sequences. Less stabilization and color‑matching in post means more time for narrative beats.

PixVerse V5 Fast hits 1080p in under 43s with up to 40% speed boost

PixVerse rolled out V5 Fast with a claimed 40% generation speedup and 1080p renders in under 43 seconds. A 72‑hour promo grants 300 credits if you follow/retweet/reply. This matters if you batch social spots or iterate many takes per prompt. Time adds up fast. See the launch details in Release thread.

Creators already echo the speed feel with a quick follow‑up post, though there are no new controls here; this is a throughput bump, not a features drop (Follow‑up promo). The point is: faster loops = more shots reviewed per hour.

Deployment impact: Expect the same quality tier as prior V5, just faster. If your pipeline depends on a fixed frame budget, re‑test your batching and rate limits today. That’s where the real gains show up.
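To put the speed claim in batching terms, here is a rough throughput calculation. It assumes the 43-second figure is an upper bound per 1080p render and shows both readings of "40% faster", since the source does not say whether that means 40% less time or 40% more throughput.

```python
def renders_per_hour(seconds_per_render):
    """Sequential renders that fit in one hour at a fixed time per render."""
    return 3600 / seconds_per_render


FAST_SECONDS = 43   # claimed upper bound for V5 Fast at 1080p
SPEEDUP = 0.40      # claimed speed boost; its exact meaning is an assumption

# Reading A: 40% less time per render, so old = new / (1 - 0.40)
old_seconds_a = FAST_SECONDS / (1 - SPEEDUP)
# Reading B: 40% more throughput, so old = new * (1 + 0.40)
old_seconds_b = FAST_SECONDS * (1 + SPEEDUP)

if __name__ == "__main__":
    print(f"V5 Fast: {renders_per_hour(FAST_SECONDS):.1f} renders/hour")
    print(f"Prior (reading A): {renders_per_hour(old_seconds_a):.1f} renders/hour")
    print(f"Prior (reading B): {renders_per_hour(old_seconds_b):.1f} renders/hour")
```

Either way, the gain only shows up if rate limits and queueing let you submit renders back to back, which is why re-testing batch sizes matters.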

Hailuo 2.3 reels show steady motion and usable start/end control

New Hailuo 2.3 snippets emphasize grounded motion from simple inputs: a gritty step‑by‑step walk in a dim setting (~6 seconds; Gritty walk test), a clean rice‑pour setup (~10 seconds; Rice pour test), and a musical performance staged with start/end framing (Start/end musical take).

The takeaway: Hailuo is becoming a steady option for grounded, tactile motion when you don’t want hyper‑stylized warping. If you storyboard with start/end frames, you can anchor beats without heavy post.

Who should care: Short commercials and mood reels that rely on realistic kinetics and object interactions rather than maximalist effects.

Pollo 2.0 adds long‑video avatars with tight lip‑sync and smoother motion

Pollo 2.0’s long‑video mode is in the wild, with creators showing ~30‑second lip‑sync tests and praising refined camera paths and smoother motion flow (30s lip‑sync test, Feature praise). Another demo uses two reference images plus auto‑audio, pointing to quicker avatar setup for talking clips (Two‑image ref video).

Why it matters: Longer, stable lip‑sync reduces cut points for reels, explainers, and music‑driven posts. If you run language variants, keep an eye on phoneme accuracy across takes before locking a workflow.

Creators run Grok video vs Midjourney video side‑by‑side

Side‑by‑side tests pit Grok video against Midjourney on similar briefs, highlighting differences in motion feel and render style rather than raw speed claims. These are informal comps, but they help set expectations if you’re choosing a lane for a series look (Compare thread).

So what? If you aim for stylized motion with fewer artifacts, watch how each model handles camera energy and micro‑detail under movement. Consistency across consecutive shots often matters more than one pretty frame.

NVIDIA ChronoEdit‑14B Diffusers LoRA speeds style/grade swaps in‑sequence

NVIDIA published ChronoEdit‑14B‑Diffusers‑Paint‑Brush‑LoRA with a demo that rapidly flips through cinematic grades and looks on the same portrait. A follow‑up tease shows “edit as you draw,” hinting at more on‑canvas timing control (LoRA announcement, Feature tease). For quick creative direction passes, this trims minutes off each iteration.

Use case: Fast look‑dev on sequences before you spend time polishing. Lock vibe first, then chase artifacts. See the model card (Model card).

🛠️ Production video models: Pollo 2.0, Hailuo 2.3

Pollo 2.0 clips highlight long‑video mode, lip‑sync and camera paths; Hailuo 2.3 reels show gritty realism and Start/End usage. Contest logistics are separated under Events.

Pollo 2.0 adds long‑video mode, tighter lip‑sync and smoother camera paths

Creators are reporting a new long‑video mode for Pollo’s AI avatar, plus clearly improved lip‑sync and steadier camera paths in short trials (Long‑video mode, Lip‑sync test, Camera path notes). Several posts also show two‑image reference video generation, auto audio, and reliable Japanese‑language prompting, which lowers setup time for music and social ads (Two‑image ref demo, Prompt notes).

For video teams, this trims the usual mocap/keyframing overhead on talking heads and performances, and makes it more viable to previsualize or ship 30‑second spots on a single pass (Lip‑sync test).

Hailuo 2.3 gets real: Start/End shots and gritty motion tests

Hailuo 2.3 continues to impress on controllable motion: creators are using Start/End Frames to plan performance shots like a "Bring Him Home" piano cut (Start/End performance), while separate look tests show grounded stepping on slick stones (Gritty stepping clip) and crisp close‑ups from simple I2V prompts such as a rice pour (Rice pour demo).

So what? You can stitch consistent beats for micro‑dramas and product films without fighting drift, then fill gaps with tight inserts for texture (Start/End performance).

A practical Hailuo 2.3 continuity workflow spreads: shots → stills → references

A creator thread lays out a simple continuity loop for Hailuo 2.3: generate the first shots via text‑to‑video (First step guide), export still frames, then feed those frames back as visual references for the next prompts (Frame export tip); finish with a music cue and a quick edit in CapCut (Music and edit). The author says most shots landed on the first try, which is a good signal for pre‑viz and fast horror shorts.

The point is: you can lock look and pacing early, then iterate scene by scene without re‑inventing styling each time (Frame export tip).

🎨 Reusable style kits and prompt recipes

A strong day for shareable looks: new MJ style refs (dark comic; Ghibli/Ni no Kuni), a V7 collage recipe, and a flat‑illustration template. These drop straight into art direction pipelines.

Midjourney style ref 4289069384 delivers dark‑fantasy comic look

A new Midjourney style reference (--sref 4289069384) lands for a realistic dark‑fantasy comic aesthetic with aggressive inks, painterly volume, and gothic drama. It reads moody and heroic. Good for covers, key art, and character one‑sheets (Style ref thread).

The examples show rain‑drenched portraits, moonlit villains, and embattled warriors, suggesting consistent lighting and line weight across prompts. Keep this sref handy when you need high‑contrast narrative beats without over‑tuning every prompt.

MJ V7 collage recipe: sref 2837577475 with chaos 7 and sw 300

A compact V7 recipe yields striking red/black collage panels: --chaos 7 --ar 3:4 --sref 2837577475 --sw 300 --stylize 500. Think bold sun disks, silhouettes, and vertical type blocks, which are great for posters and title cards (Recipe post). A second run confirms repeatability across grids (Second sample).

This extends the current wave of reusable looks, following up on the Folded metallic recipe, which spread a different metallic collage vibe. Keep the chaos at 7 to preserve variation without losing the graphic system.
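If you reuse the collage recipe across many subjects, a small helper keeps the flags consistent. This is a minimal sketch: the parameter values are the ones quoted in the recipe, while the function name and the example subject are hypothetical.

```python
def collage_prompt(subject, *, chaos=7, ar="3:4", sref=2837577475, sw=300, stylize=500):
    """Append the quoted recipe flags to a subject description.

    Defaults mirror the shared recipe; override them only when you
    deliberately want to leave the original look.
    """
    flags = (
        f"--chaos {chaos} --ar {ar} --sref {sref} "
        f"--sw {sw} --stylize {stylize}"
    )
    return f"{subject.strip()} {flags}"


if __name__ == "__main__":
    # Hypothetical subject, shown only to illustrate the output shape.
    print(collage_prompt("festival poster with a bold sun disk"))
```

Keyword-only arguments make accidental reordering of the numeric flags harder when you batch prompts from a spreadsheet or script.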

Shareable Ghibli × Ni no Kuni character prompts (ALT) for MJ

A four‑portrait set shares clean, reuse‑ready Midjourney prompts in the ALT text for a Ghibli/Ni no Kuni watercolor look: hand‑drawn lines, warm palettes, and simple cel shading. Most use 1:1 framing and include the --raw flag for gentler processing (Prompt set).

Drop these into cast sheets, profile tiles, or IP explorations. The phrasing balances wardrobe cues, mood, and linework notes, so the style transfers without prompt bloat.

Flat‑illustration prompt template nails clean 3:2 front‑facing art

A fill‑in‑the‑blanks template standardizes flat, front‑facing illustrations: “A flat design illustration of a [subject] … plain [background color] … palette of [color1] and [color2] … clean shapes, balanced spacing.” ALT examples (grandma, koala, Mario, plant) use --ar 3:2 for consistent layout (Template explainer).

Use it to batch brand icons, thumbnails, and social cards. Swap subjects and palettes; keep the composition and spacing language to maintain a cohesive set.
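Batch filling the template can be sketched as a simple slot replacement. The template string below reproduces only the fragments quoted above and keeps the "…" gaps as they are, so you would paste the full wording from the original post before real use; the colors in the example are hypothetical.

```python
# Quoted fragments only; the "…" marks wording that is elided in the source.
TEMPLATE = (
    "A flat design illustration of a [subject] … plain [background color] … "
    "palette of [color1] and [color2] … clean shapes, balanced spacing."
)


def fill_template(template, **slots):
    """Replace [slot] markers with values; underscores in keyword names
    stand in for spaces (background_color -> [background color])."""
    prompt = template
    for name, value in slots.items():
        prompt = prompt.replace(f"[{name.replace('_', ' ')}]", value)
    return f"{prompt} --ar 3:2"


if __name__ == "__main__":
    print(
        fill_template(
            TEMPLATE,
            subject="koala",
            background_color="cream",  # hypothetical palette choices
            color1="teal",
            color2="orange",
        )
    )
```

Looping this over a list of subjects while holding the palette fixed is what keeps a set visually cohesive.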

🪄 Edit with words: NL scene edits & LoRAs

Photo/video edits get simpler: lighting‑control and paint‑brush LoRAs, Seedream’s text edits, Meta AI’s glossy images, and Google Photos’ “Help me edit.” Excludes the Gemini 3/Nano Banana rollout (covered as feature).

NVIDIA ships ChronoEdit-14B “paint‑brush” LoRA for rapid, in‑place style edits

NVIDIA released ChronoEdit‑14B Diffusers “Paint‑Brush” LoRA, showing instant grade and look swaps and teasing an “edit as you draw” workflow. The Hugging Face model card is live with a short demo. See the launch clip and model details for usage (Release clip, Hugging Face model).

Why it matters: this is a direct speed play for look‑dev and corrections, swapping lighting, color science, and style without round‑tripping to a full compositor. A follow‑up note highlights “edit as you draw,” hinting at frame‑by‑frame paint targeting on top of LoRA control (Edit as you draw).

Seedream 4.0 shows true “edit with words”: lights on, add objects, done

Seedream 4.0 demonstrates natural‑language scene edits: type “Turn on the lights” to brighten a room or “Add a cute dog” to insert a subject, with no layers or selections required. It’s a clean example of text‑directed environment changes for set fixes and props (Feature demo).

The point is: you can now prototype production notes as sentences, then refine. This shortens the loop between art direction and tangible frames.

Google Photos “Help me edit” rolls out typed fixes like removing sunglasses

Google Photos’ “Help me edit” shows a live example of removing sunglasses via a one‑line prompt, with the broader Google rollout bringing natural‑language editing to more regions and platforms. It’s aimed at everyday corrections, fast (Feature brief).

Why you care: small photo fixes now move from slider wrangling to intent statements, which speeds social assets and family archives alike.

Qwen‑Edit‑2509 Multi‑Angle Lighting LoRA lands on Hugging Face with live Space

A new Qwen‑Edit‑2509 Multi‑Angle Lighting LoRA dropped, with a quick demo cycling through portrait lighting setups and a companion Space to try it in‑browser. Creators can relight shots by prompt instead of re‑shoots (Model and demo, Hugging Face model, Hugging Face space).

For art leads and photographers, this cuts reshoot time and preserves mood continuity across sets without manual masks or rotoscoping.

Meta AI image generator demo shows a faster, glossier “MJ‑ish” finish

A screen capture of Meta AI image generation flips a “cat astronaut” into a “cat wizard” with a polished, photoreal look that creators compare to Midjourney’s finish. It suggests Meta’s in‑chat tool is viable for quick, high‑sheen variations (Phone demo).

For teams already living in Messenger/IG, this can cover fast comps or moodboards without context‑switching to standalone apps.

📣 Paste a link, get an ad (plus BFCM perks)

Ad automation and creator deals dominated: Higgsfield’s URL→ad builder, Leonardo’s instant brand kit from a logo, and Pictory’s BFCM bundles for branded video pipelines.

Higgsfield’s Click to Ad turns any product URL into a ready-to-run video

Higgsfield’s builder now scans a product page and auto-assembles a promo using brand colors, logo, name/description, and up to eight images, with ten templates to choose from. Following up on the initial launch, creators can also manually swap assets before rendering, so brief‑to‑first‑cut takes seconds rather than hours.

Higgsfield posts BF pricing: Sora $3.25, Veo $1.80, Kling $0.39 per clip

A creator shared Higgsfield’s Black Friday table: $39/mo plan (60% off), Sora 2 Pro 8s at $3.25, Veo 3.1 8s at $1.80, and Kling 2.5 10s at $0.39. These are useful unit economics for ad testing at volume. The promo also flags “unlimited image models,” adding headroom for visual variations (Deal details).
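Because the quoted clips differ in length, per-second cost is the fairer comparison. The sketch below uses only the prices and durations listed above; the $100 test budget is a hypothetical figure, and the math ignores the monthly plan fee.

```python
# (price per clip in USD, clip length in seconds), as quoted in the shared table
PRICES = {
    "Sora 2 Pro": (3.25, 8),
    "Veo 3.1": (1.80, 8),
    "Kling 2.5": (0.39, 10),
}


def cost_per_second(price, seconds):
    """Unit cost of generated footage in USD per second."""
    return price / seconds


def clips_for_budget(budget, price):
    """Whole clips affordable for a given budget at a per-clip price."""
    return int(budget // price)


if __name__ == "__main__":
    budget = 100  # hypothetical test budget in USD
    for model, (price, seconds) in PRICES.items():
        print(
            f"{model}: ${cost_per_second(price, seconds):.3f}/s, "
            f"{clips_for_budget(budget, price)} clips for ${budget}"
        )
```

On these numbers the per-second gap between the cheapest and most expensive option is roughly tenfold, which matters more than the headline per-clip price when you test many variants.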

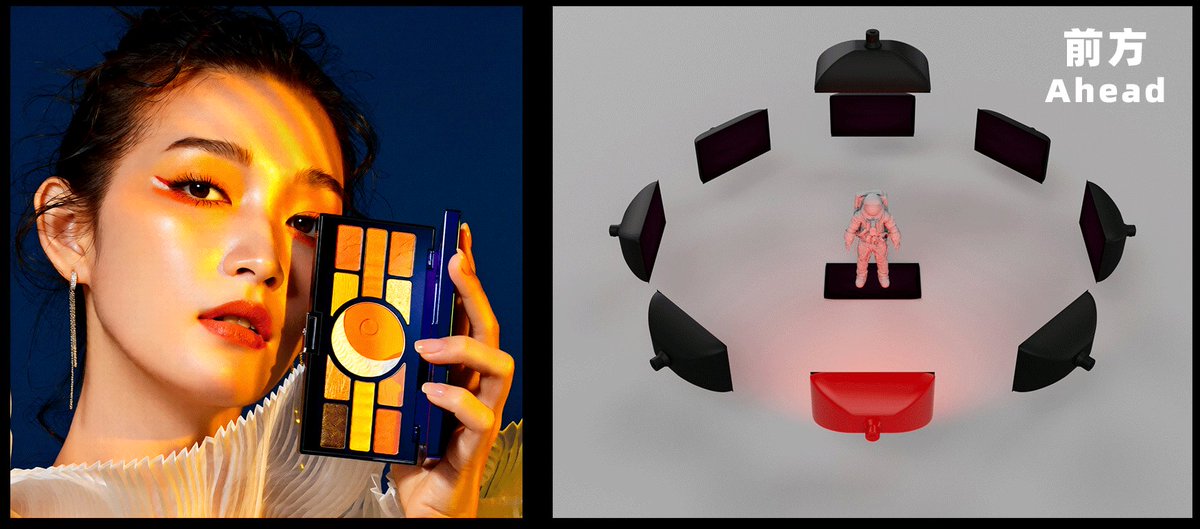

Leonardo’s Instant Brand Kit blueprint builds a brand pack from a logo

Leonardo introduced an Instant Brand Kit Blueprint that generates a starter identity from one logo and a single‑sentence summary, delivering cohesive palettes, type choices, and assets that slot into ads and mocks. This complements Leonardo’s ad blueprints and gives small teams a fast on‑ramp to consistent branding without a designer on call.

📺 Platforms, IP, and the UGC pivot

Disney+ plans to let subscribers generate short clips with official characters inside a closed sandbox; analysis notes Epic tie‑ins and a $1B 2026 content push. Excludes Google’s feature news.

Disney+ will let subscribers generate AI clips with official IP; $1B content push in 2026

Disney is preparing Disney+ to support short AI‑generated videos made by subscribers using official characters, while allocating $1B for 2026 content expansion, according to CEO Bob Iger (Coverage screenshot). Following up on the earlier closed‑sandbox report, this UGC will live inside a tightly controlled environment with brand‑safe guardrails and expected tie‑ins to Epic’s ecosystem to drive engagement beyond passive streaming (Analysis thread, Sandbox angle). For creatives, this signals new, licensed playgrounds to produce fan‑made clips that can be shared within Disney’s walls; no export rights have been hinted at yet.

The point is: Disney wants participation but on its terms. Expect preset styles, usage caps, and approval flows rather than freeform generation. If you build tools, think share‑into‑Disney+ flows, brand‑consistent templates, and ways to help fans storyboard within fixed worlds.

📊 Benchmarks: GPT‑5.* tops creative leaderboards

Fresh leaderboard snapshots show GPT‑5.1 variants overtaking Claude on design‑centric evals. Mostly model ranking chatter; no new datasets today.

GPT‑5.1 variants overtake Claude on Design Arena; top‑10 on Yupp

OpenAI’s GPT‑5.* stack now leads the design‑focused Design Arena: GPT‑5.1 (High) shows 1374 Elo, with other 5.* runs clustered above Claude 3.7 Sonnet (1317) and Opus 4 (1315) (Design Arena chart). Separately, Yupp’s live board notes GPT‑5.1 variants already stabilizing in its top‑10 as rankings settle (Yupp ranks note).

For creative teams, this signals a near‑term shift in taste‑driven scoring toward GPT‑5.1 presets; watch which instruction levels (“High/Medium/Minimal”) keep their edge as more votes land.
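To read those rating gaps as head-to-head odds, the standard Elo expected-score formula is a reasonable first approximation. Whether Design Arena uses exactly this formula and the 400-point scale is an assumption; only the ratings themselves come from the chart.

```python
def elo_expected(rating_a, rating_b, scale=400):
    """Standard Elo expected score for A against B.

    The 400-point scale is the conventional chess value and is assumed here;
    the leaderboard's own scoring rules may differ.
    """
    return 1 / (1 + 10 ** ((rating_b - rating_a) / scale))


if __name__ == "__main__":
    gpt_high, sonnet, opus = 1374, 1317, 1315  # ratings quoted above
    print(f"GPT-5.1 (High) vs Claude 3.7 Sonnet: {elo_expected(gpt_high, sonnet):.3f}")
    print(f"GPT-5.1 (High) vs Opus 4: {elo_expected(gpt_high, opus):.3f}")
```

Under that assumption a gap of 57 to 59 points works out to roughly a 58% preference rate, a lead that is real but far from a sweep.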

🧪 New model drops and theory to watch

Light but relevant R&D: MetaCLIP 2 recipe on HF, new “Sherlock” models in anycoder with huge context, a spatial‑intelligence op‑ed, and a Qwen‑VL eval space.

“Sherlock” Think Alpha and Dash Alpha hit anycoder with 1.8M context

Anycoder added two frontier multimodal models—Sherlock Think Alpha (reasoning) and Sherlock Dash Alpha (speed)—with a stated 1.8M token context and a focus on tool calling and agent flows. This matters for long project threads and multi‑file work where you need tools orchestrated by the model rather than manual glue code. See the picker screenshot in Model list.

Similar naming also appeared on OpenRouter as a "Grok 4.20 stealth" reference, suggesting broader testing beyond one app (OpenRouter mention).

MetaCLIP 2 training recipe lands on Hugging Face

Meta’s updated CLIP recipe, MetaCLIP 2, is now available on Hugging Face with a worldwide training approach. For image–text search, tagging, and dataset bootstrapping, this gives teams a transparent, reproducible baseline rather than a black‑box model. See the drop in HF announcement.

So what? If you build style or asset libraries, a robust open CLIP improves retrieval and consistency across large, messy corpora.

NVIDIA posts ChronoEdit‑14B Diffusers Paint‑Brush LoRA for rapid style/grade edits

NVIDIA released a "Paint‑Brush LoRA" for ChronoEdit‑14B in Diffusers that can sweep through dramatic look changes and color grades in seconds; a follow‑up tease shows "edit as you draw" interactions. This is practical for lookdev and client‑driven alt passes without re‑generating source footage. See the reel in Model demo and the card at Hugging Face model.

Follow the extra tease here: Feature tease.

OpenAI says a stronger IMO‑level math model is coming in the next few months

OpenAI researcher Jerry Tworek said a “much better version” of their IMO gold‑medal model will be released in the coming months. Stronger symbolic/mathematical reasoning ripples into animation control logic, simulation prompts, and tool‑augmented story engines. See the quote card in Quote update.

Retrofitted recurrence proposal aims to make LMs think deeper

A highlighted paper proposes “Retrofitted Recurrence,” a method to add iterative reasoning depth to existing LMs without full re‑training. For prompt engineers and toolchain builders, this is a potential route to longer chains of thought with tighter latency and memory budgets than full self‑play finetunes. See the overview in Paper highlight.

Fei‑Fei Li: spatial intelligence is the next AI frontier for creators

Fei‑Fei Li argues that AI must move from words to worlds—models that understand 3D space, physics, and action—to unlock reliable filmmaking, game design, robotics, and education tools. For creative teams, this frames why today’s video models drift and why world models matter for continuity and direction. Read the op‑ed summary in Op‑ed thread.

This lands after Memory House, a playable world‑model demo, showed single‑image rooms turned walkable; the through‑line is consistent 3D scenes as a prerequisite for dependable story beats.

Hands‑on: Qwen3‑VL comparison space for semantic object detection

A new evaluation space compares Qwen3‑VL variants on semantic object detection, letting you probe which build handles layout, small objects, and occlusions best. That’s useful for shot planning, compositing, and prompt‑conditioned edits where spatial grounding fails easily. Try it from the share in Eval space.

Qwen‑Edit‑2509 Multi‑Angle Lighting LoRA drops with demo app

A new LoRA for Qwen‑Edit‑2509 targets multi‑angle relighting, showcased with a portrait cycling through distinct setups. For posters, thumbnails, and continuity fixes, it’s a fast way to test lighting intent before committing to a full render or re‑shoot. Watch the clip in LoRA demo, and grab the model and space at Model card and HF space.

🌈 Showreels to spark ideas (Grok focus)

A steady stream of short inspiration: Grok Imagine nails OVA monsters, pastoral Ghibli‑style motion, macro creatures, and fashion collages—useful as lookbooks and motion tests.

Grok Imagine nails 80s OVA monster shot with glassy water detail

Today’s monster emergence clip shows Grok’s 80s OVA look holding up under motion; check the reflection, droplets, and zoom‑out roar beat (OVA monster clip). A companion reel stacks more OVA‑style cuts across horror and sci‑fi, useful as a mini lookbook for tone tests (OVA genres reel).

Ghibli‑style pastoral walk lands clean, with gentle camera pan

A soft, Studio Ghibli‑like sequence (girl, wind‑swept field, house on a hill) demonstrates Grok’s ability to keep character scale and horizon lines consistent during a slow pan. Great for mood reels and children’s storyboards (Ghibli scene).

Horror micro‑film shows Grok’s mood, and why fewer words help

A creepy wall‑crawling shot came after ~25 takes, with the creator noting Grok’s prompt sensitivity and that a minimal line like “She screams for help” guided the best result. That is a good insight for thriller beat tests (Horror workflow).

Editorial fashion: multi‑angle sets and bold color pop threads

Creators are sharing Grok fashion stills in cohesive sets, with multi‑angle coverage and b&w vs color passes that read like a compact lookbook for art direction tests (Fashion thread), and follow‑ups focused on polished editorials (Editorial set) and saturated palettes (Color pop set).

Macro “opal spider” shows Grok’s micro‑subject control and gradients

The opal‑sheen spider clip spotlights crisp leg articulation against a dark gradient, a handy test for macro focus, color play, and background separation in creature inserts (Macro creature demo).

Side‑by‑side Grok vs Midjourney video clips make quick visual audit

A short comparison reel puts similar briefs next to each other, useful to spot differences in motion continuity, texture fidelity, and lighting stability before you commit to a pipeline (Comparison reel).

Concept frame: high‑saturation crimson room with faceless silhouette

This single still leans on bold color blocking and negative space. It is useful as a quick mood card for identity/anonymity themes, and a reminder that Grok can carry minimalist frames too (Concept still).

Logo/ID motion: “GROK” text morphs into neural brain icon

A tight branding sting, in which the wordmark resolves, dissolves, and re‑forms as a brain, shows Grok can handle clean typographic transitions for intros and bumpers (Brand sting).

🎙️ Voices and music: fast tracks for creators

From celeb voice rights to DIY narration: ElevenLabs secures high‑profile voice replicas, a Pictory how‑to for TTS, and an AI flamenco cover—useful for films, trailers, and social clips.

ElevenLabs signs McConaughey and Caine for licensed AI voice replicas

ElevenLabs has secured rights to create AI voice replicas for Matthew McConaughey and Michael Caine, with McConaughey also investing. That’s a clear signal that high‑profile, rights‑cleared voices are moving from novelty to standard supply for ads, trailers, and games (Voice deals summary).

Why it matters: licensed voices reduce legal risk and approval cycles for branded work. Expect tighter usage terms, but far easier procurement compared with bespoke celebrity sessions. For creative teams, this expands the palette for narration and character VO without chasing one‑off contracts.

Pictory posts step‑by‑step TTS guide with auto‑synced scene timing

Pictory Academy published a practical walkthrough for generating AI voiceovers from text and auto‑syncing them to scenes, with no mic needed and timing handled for you (How‑to guide); the full tutorial is in the Pictory guide. If you need language range, Pictory’s ElevenLabs integration offers voices in up to 29 languages on paid tiers (Pricing page).

This helps solo creators and small teams add clean narration to promos, explainers, and reels in minutes. It also standardizes timing, so you can iterate fast on script or visuals without re‑recording.

Pollo 2.0 adds long‑form lip‑sync avatars and auto‑generated voice

Pollo’s new 2.0 model is being field‑tested for longer avatar videos with noticeably tighter lip‑sync, and it can auto‑generate the voice track directly from prompts (Lip‑sync test, Auto voice demo). A separate creator rundown praises improved motion paths and overall smoothness for short clips (Feature roundup).

For short ads, lyric videos, and character VO, this reduces the pipeline to script → video in one pass. Expect some drift on tougher passages, but the sync quality looks good enough for social‑first work.

The Offspring’s “Self Esteem” gets a full‑length flamenco AI cover

A creator posted a nearly four‑minute AI performance that reimagines The Offspring’s “Self Esteem” as a flamenco guitar piece, showing how convincingly style transfer can carry a rock classic into a new genre (Full performance).

This is a clean case study for soundtrack alternatives, covers, and mood variations in trailers or micro‑dramas. Rights still apply if you publish or monetize, but as a production sketch tool it’s already useful.

🏆 Calls, contests, and deadlines

Opportunities for filmmakers and storytellers: OpenArt’s music video deadline (Times Square prize), Hailuo’s horror contest with credits, plus a Google ADK community call.

OpenArt Music Video Awards deadline tonight; Times Square prize

OpenArt’s Music Video Awards close at 11:59 PM PST today, with winning entries slated to appear on a Times Square billboard (Deadline reminder). One last nudge to submit is going out this afternoon (Final reminder). You can jump straight to the program page to submit and review the rules in the official brief (Program page).

Hailuo offers 1‑month PRO to 5 creators today; horror film contest ongoing

Hailuo will grant a free 1‑month PRO plan to 5 creators. Today is the last day to apply by sharing your work, even if it wasn’t made with Hailuo (Pro plan call). This runs alongside the Hailuo Horror Film Contest (Nov 7–30; 20,000‑credit top prizes). Following up on the earlier Hailuo contest coverage, there are fresh creator how‑tos and submission steps you can copy (Join steps thread), plus an official submission portal for entries (Contest site).

Google ADK Community Call returns with DevX upgrades focus

Google’s Agent Development Kit (ADK) Community Call is “back again,” highlighting DevX upgrades and a focus on building. It is an open invite for teams shipping agent workflows to hear what’s new and ask questions live (Community call).

Lisbon Loras issues open call to select 5 creators for year‑end event

Lisbon Loras announced an open call to select five creators to present work at its final 2025 event, an opportunity for AI artists to showcase projects to a live audience (Open call details).

🗣️ Culture wars and creator takes

Discourse itself was the story: accusations around an anti‑Coke AI‑ad video, threads on productivity vs “real art,” and tongue‑in‑cheek ‘luddies’ memes. Open‑source pride posts mixed in.

Creator says anti‑Coke AI‑ad video added fake fingers to smear it

A filmmaker alleges a YouTube critic doctored a thumbnail to make Coca‑Cola’s AI Christmas spot look worse, circling a hand with unnaturally long, separated fingers. The post frames it as bad‑faith criticism aimed at fueling the “AI slop” narrative (Accusation post).

If true, the incident shows how quickly discourse can be skewed by manipulated artifacts. For brand and agency teams testing gen‑AI work, this is a reminder to keep raw frames and process notes handy when backlash questions authenticity.

“Hours flaming vs hours creating” debate; AI likened to Rubens’ workshop

Two riffs consolidated the weekend’s meme: who actually spends more time making art, the AI artist or the AI critic? One post tosses a “10 hours creating vs 8 hours flaming” riddle (Time riddle), while a longer essay argues AI tools are the new workshop apprentices, citing Rubens delegating most of the canvas and reserving the “master touch” (Workshop essay).

For creatives, the point lands: ship more, iterate faster, and treat models as production staff—not competitors—to get professional results in hours, not months.

“Open source will win” rallying cry lands with builders

A succinct “open source will win” post captured the mood among tool‑chain tinkerers (Open‑source stance). The sentiment pairs with steady public drops like CodeRabbit’s AI‑native git worktree CLI for agent‑assisted dev flows, released under Apache‑2.0 (Git tool repo).

For teams, this is a cultural signal: creators value transparent stacks and portable workflows, and will reward projects that ship usable OSS alongside the hot demos.

‘Luddies’ meme wave pokes fun at AI haters

Light‑hearted clips mocking “luddies” flooded feeds, contrasting hand‑drawn toil with polished AI outputs and dramatizing rage‑replies as slapstick (Meme clip, Meme clip 2). The tone is teasing, not toxic, and it’s clearly resonating with creators tired of flame‑wars.

Expect more meme‑ified culture‑jabs as generative tools feel increasingly everyday.

“AI films will look real soon—and critics will still say slop”

A creator predicts AI‑made films will soon be indistinguishable to the eye, yet detractors will keep calling them “slop” (Indistinguishability take). It’s half joke, half warning about goal‑posts moving as fidelity rises.

For filmmakers, the takeaway is pragmatic: focus on story and pacing. Audiences forgive seams when they care about the narrative; they don’t forgive boredom.

“Don’t fight pretraining”: prompt‑craft take making rounds

“Going against what the model was pre‑trained on is almost always a losing strategy,” one founder vented, capturing a familiar pain for prompt‑designers who over‑specify or misalign instructions (Prompting advice).

So what? Work with model priors. Constrain tasks to where the model’s latent knowledge helps, not hurts, and test minimal prompts before adding control handles.

Creator breakdown: Coca‑Cola’s AI playbook favors early, messy testing

A thread recapping a Coca‑Cola marketing talk argues the brand stays culturally relevant by grabbing alpha/beta AI access, running 20+ concurrent experiments, and partnering with indie creators and labs rather than waiting for perfect tools (Keynote recap).

For marketers and studio leads, the advice is actionable: treat AI like a test bench, measure dwell time and consented UGC, and ship pilots instead of decks.
