Lightricks LTX‑2 open-weights 20s 4K AV model – RTX gains 3× speed

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Lightricks is turning LTX‑2 into an open AV stack: full weights, a distilled variant, LoRAs, and multimodal trainer ship together, targeting ~20s 4K synchronized audio‑video in a single pass; ComfyUI adds Day‑0 node graphs with canny/depth/pose control and keyframing, while NVIDIA‑tuned NVFP4/NVFP8 checkpoints report ~60% lower VRAM use and up to 3× speedups on RTX GPUs. CEO Zeev Farbman frames the move as a response to calls for “DeepSeek moments” in creative video—shifting LTX‑2 from hosted endpoints to something teams can audit, fine‑tune, and run locally; early community clips emphasize identity persistence, strong lip‑sync, and fp8 “turbo” image‑to‑video flows on consumer cards.

• Kling and peers: Runware adds 30s one‑take Motion Control; OpenArt showcases Voice Control for cross‑scene avatars; PixVerse’s MIMIC and BytePlus Seedance 1.5 Pro lean into motion‑clone and millisecond lip‑sync.

• Hardware, worlds, policy: Tencent’s HY‑World 1.5 opens its world‑model stack with a 5B Lite; NVIDIA’s Rubin NVL72 hits 3.6 EFLOPS inference; Hollywood’s 2026 AI calendar bunches DOJ, SAG‑AFTRA, Oscars, and contract‑expiry fights.

Open AV and world models plus Rubin pods widen who can run video and spatial workloads.

Feature Spotlight

LTX‑2 goes truly open: local AV video for creators

Open weights + trainer and NVIDIA‑optimized ComfyUI make LTX‑2 the first practical, open, local AV video model—4K, keyframes, and synced audio—putting studio‑grade control on creators’ RTX PCs.

Cross‑account story. Lightricks’ LTX‑2 ships open weights + full trainer, Day‑0 ComfyUI support, and NVIDIA‑optimized checkpoints—native, synchronized audio+video at 4K with keyframe/control nodes. Big, practical leap for indie film/music video workflows.

Jump to LTX‑2 goes truly open: local AV video for creators topicsTable of Contents

🎬 LTX‑2 goes truly open: local AV video for creators

Cross‑account story. Lightricks’ LTX‑2 ships open weights + full trainer, Day‑0 ComfyUI support, and NVIDIA‑optimized checkpoints—native, synchronized audio+video at 4K with keyframe/control nodes. Big, practical leap for indie film/music video workflows.

Lightricks open-sources LTX-2 audio-video model with full trainer

LTX-2 (Lightricks): Lightricks has released LTX-2 as a truly open-source audio‑video generation model, including full weights, a distilled variant, controllable LoRAs, and a complete multimodal training stack—following up on synced audio, where fal first exposed LTX‑2 via hosted endpoints. open source thread The model targets up to ~20 seconds of synchronized motion, dialogue, sound effects, and music in a single pass, with support for native 4K and up to 50fps in downstream pipelines. performance thread

• Full-stack release: Lightricks is shipping full model weights plus a distilled version, camera/structure/conditioning LoRAs, a multimodal trainer, benchmarks, and evaluation scripts, as detailed in the open release breakdown. (open source thread, release contents) • Native AV generation: The model generates tightly synchronized audio and video together (rather than stitching), with multi‑keyframe control and fine‑grained conditioning built in rather than added post‑hoc. (av capabilities, performance thread) • Openness rationale: CEO Zeev Farbman frames this as a response to calls for "DeepSeek moments" in AI and argues that creative AV models must be open, inspectable, and runnable on local hardware to evolve with real production constraints. (openness Q&A, deepseek answer) • Docs and access: Lightricks points creators and developers to the LTX‑2 model page and documentation, which consolidate downloads, trainer instructions, and examples of extending the camera and structure controls. (community message, model page) The launch shifts LTX‑2 from a closed API experience into something teams can audit, fine‑tune, and slot directly into their own creative pipelines.

ComfyUI and NVIDIA ship optimized local LTX-2 pipelines for RTX GPUs

LTX-2 in ComfyUI (ComfyUI/NVIDIA/Lightricks): ComfyUI now exposes LTX‑2 as a native, Day‑0 pipeline with canny/depth/pose video‑to‑video control, keyframe‑driven generation, built‑in upscaling, and prompt enhancement, while NVIDIA and ComfyUI add NVFP4/NVFP8 checkpoints that cut VRAM use by ~60% and speed up local RTX workflows up to 3×. (comfyui launch, nvfp4 nvfp8 note) NVIDIA is simultaneously pitching RTX AI PCs as capable of "fast" LTX‑2 video generation with an optimized ComfyUI graph and tutorial content for text‑to‑video starters. (rtx ai pcs, nvidia how-to)

• Control surface for creators: ComfyUI’s node graph supports canny, depth, and pose conditioning for video‑to‑video, keyframe‑driven shots, and native upscaling plus prompt enhancement in a single LTX‑2 pass, aiming at precise camera and motion control for creative work. comfyui launch • Performance and specs: ComfyUI highlights up to 20 seconds of synchronized audio‑video, native 4K resolution, and up to 50fps output, while also claiming roughly 50% less compute and up to 6× faster generations than comparable models in their tests. performance thread • RTX‑focused quantization: New NVFP4 and NVFP8 LTX‑2 checkpoints are tuned for RTX consumer GPUs; ComfyUI reports up to 3× faster runs with about 60% lower VRAM usage when using NVFP4 versus full‑precision weights. nvfp4 nvfp8 note • Local→cloud hybrid pitch: The team describes LTX‑2 as running efficiently on consumer‑grade GPUs while also fitting cleanly into local‑to‑cloud hybrid pipelines, so teams can prototype on desktops and scale or batch jobs remotely as needed. performance thread Taken together, LTX‑2 plus ComfyUI and NVIDIA’s quantized checkpoints position high‑quality, controlled AV generation as something that can live on an individual creator’s RTX PC instead of only behind cloud APIs.

Early LTX-2 demos show strong identity, lip-sync, and fast I2V

LTX-2 creator adoption (community): Early testers are posting LTX‑2 clips that emphasize identity preservation, facial nuance, and fast image‑to‑video runs, with several calling the open release a major step for independent creators. (ostris demo, kimmonismus reaction) Feedback centers on character stability in 4K, responsive prompt following, and the practicality of fp8 "turbo" variants on strong GPUs.

• Perceived quality: One creator notes that "facial mimics, prompt following, identity preservation are all really good, even in 4K" when using LTX‑2 on complex shots. creator feedback • Speed and fp8 modes: Another tester describes an "fp8 turbo" image‑to‑video configuration as "So fast. So amazing!", underscoring that quantized checkpoints feel viable for real work rather than only benchmarks. fp8 turbo test • Open-source sentiment: A prominent community voice frames the release as "open source video generation just took a massive leap", tying the creative possibilities directly to the fact that weights and training code are available instead of locked in a SaaS tier. kimmonismus reaction • Heavy-hardware experiments: Japanese users report running 1280×720, 121‑frame clips with LTX‑2 I2V on ~100GB VRAM DGX‑class setups, signaling interest from high‑end experimenters pushing resolution and duration. japanese i2v test These early anecdotes are promotional rather than systematic benchmarks, but they suggest that LTX‑2’s open release is already feeding into image‑to‑video, 4K portrait, and character‑driven workflows across the indie creator community.

🎥 Kling 2.6 adoption: Runware support and CES spotlight

Excludes LTX‑2 feature. Today’s updates push Kling 2.6 beyond a trend: Runware adds Motion Control with 30s takes, OpenArt showcases Voice Control consistency, and Kling AI announces a CES creator panel.

OpenArt showcases Kling 2.6 Voice Control for consistent dialogue across clips

Kling 2.6 Voice Control (OpenArt): OpenArt is promoting Kling 2.6 Voice Control as a way to keep the same synthesized voice and character consistent across multiple clips and scenes, emphasizing cross‑scene identity in its short demo voice control clip. The feature runs as a public preset on OpenArt’s video generator, where creators can try voice‑driven performances from a browser UI video generator page.

• Cross‑clip consistency: The demo text stresses "Same voice. Same character. Across clips. Across scenes.", with a ~20‑second sequence showing the same animated persona speaking through several camera setups while retaining timbre and delivery voice control clip.

• Creator workflow surface: OpenArt routes users to a dedicated Kling 2.6 I2V page with presets for character, audio, and motion, positioning this as a tool for avatar‑driven explainers, series intros, or recurring characters rather than one‑off tests video generator page.

For storytellers building episodic content or branded hosts, this indicates Kling is being treated not just as a shot generator but as an identity‑aware dialogue engine inside mainstream creator platforms.

Runware adds Kling VIDEO 2.6 Motion Control with 30s one‑take shots

Kling VIDEO 2.6 Motion Control (Runware): Runware has integrated Kling VIDEO 2.6 Motion Control into its model catalog, letting creators drive character performance from a reference video and render up to 30‑second, single‑take clips with synced motion, expressions, and lip sync, as outlined in the launch copy Runware launch. This gives small teams a way to prototype dance, martial arts, or talking‑head pieces without keyframing, inside the same Runware workflow they already use.

• Shot duration and control: The integration highlights one‑take outputs "up to 30s", with the model following a driver video for global body movement while preserving the target character’s look Runware launch.

• Creator‑oriented stack: By exposing Kling 2.6 alongside other hosted video models, Runware positions it as a plug‑in option for existing pipelines rather than a separate app, and links directly to the Motion Control endpoint for experimentation model page.

For AI filmmakers and motion designers, this adds another accessible surface where Kling’s reference‑driven animation can be tested against existing storyboards and edit timelines.

Kling 2.6 Motion Control keeps spreading through baby dances and glow‑up spells

Motion Control adoption (Kling): Creators continue to push Kling 2.6 Motion Control into everyday meme formats and test shots, extending the trend coverage from Kling trend where it was highlighted as a 2026 staple for CES‑era creators. Kling’s own promo leans into an upload‑photo‑pick‑template flow, showing a baby photo driving a short dance routine, with Motion Control handling the body movement from a template clip baby dance promo.

• Template‑driven virality: Kling’s marketing invites users to "watch your cute baby bust out a cool dance" by pairing a still image with a curated motion video, illustrating how non‑technical parents or creators can generate ~15‑second character dances without posing rigs baby dance promo.

• Precision motion mimic: A Japanese creator testing Motion Control reports that a character image plus a reference video yields motion that follows "その通りに動いてくれた" (moved exactly as intended), calling the accuracy high and remarking that it’s striking this level of control is already available creator test.

• Stylized transformations: Community clips like a "magical transformation spell" where a woman changes outfits mid‑shot demonstrate that the same Motion Control core can support anime‑style glow‑ups and cosplay transitions, not only realistic dance transformation demo.

For AI filmmakers and short‑form storytellers, these examples show Kling 2.6 settling into repeatable recipes—dance templates, transformations, and reference‑driven acting—rather than remaining a one‑off technical showcase.

Kling AI schedules CES 2026 creator panel on GenAI’s impact

CES creator panel (Kling AI): Kling AI announced a CES 2026 Creator Stage panel titled "How GenAI Is Transforming the Creative Industry", set for January 7, 2026, 3:00–3:30 pm PST at LVCC Central Level 1 panel details. Speakers include Emmy‑winning director Jason Zada, Genvid CCO Stephan Bugaj, Higgsfield CEO Alex Mashrabov, and Kling’s Tony Pu, signaling a push to frame Kling 2.6‑style tools as part of a broader pro‑creator stack rather than novelty filters.

The announcement positions Kling alongside partners like Higgsfield and other production veterans, with the session billed as a discussion of how GenAI is changing workflows for commercial and entertainment work rather than a product demo panel details. For filmmakers and designers watching Kling’s trajectory, this is another sign that the company is leaning into industry‑facing messaging at CES rather than focusing only on consumer virality.

🧰 Relight and production prompts: faster look control

A workflow‑heavy day: Higgsfield Relight adds studio‑style lighting control to stills; Luma’s Ray3 Modify shows virtual relighting in video; NB Pro posts cover JSON prompting, vector‑to‑plush pipelines, and collage/grids for consistent looks.

Higgsfield’s Relight turns still images into 3D‑lit “virtual sets”

Relight (Higgsfield AI): Higgsfield introduced Relight, a still‑image lighting tool that lets creators pick light direction, adjust intensity and color temperature, and toggle soft‑to‑hard shadows across six presets, effectively treating a flat render like a simple 3D scene according to the feature rundown in Relight launch; Techhalla’s breakdown shows an arrest photo being reshaped by dragging a virtual key light around the subject to add contrast and color without re‑rendering or reshooting in Relight tutorial. This gives photographers, illustrators, and filmmakers late‑stage control over mood and storytelling beats that used to require new lighting setups.

fofrAI turns Nano Banana JSON prompting into a public guide and app

JSON Prompting (Nano Banana Pro): Following up on earlier JSON prompting experiments for vibe‑locked renders in JSON pipeline intro, fofrAI has packaged their Nano Banana Pro specification into a detailed blog post and an AI Studio app, using a starship‑approaching‑black‑hole shot as the running example in JSON prompting demo. The JSON spec nails down view (third‑person from behind), relative scale between ship, settlement, and black hole, plus lens and flare notes so reruns or variations preserve framing and mood, as shown by the matching still in

, and the full structure is published in the JSON prompting blog.

Vector‑to‑plush: Illustrator + Nano Banana + Weavy yields full merch shots

2D Vector‑to‑Plush (Ror_Fly): Ror_Fly lays out a six‑step workflow where a hand‑drawn Illustrator vector (Cartman) is turned into an 8K plush render with Nano Banana Pro, then pushed through Weavy and a compositor to generate contact sheets, scale diagrams, and lifestyle photos with real dogs in Vector to plush overview. The node graph and resulting grid show how one system prompt enforces material, seams, proportions, and lighting consistency while downstream prompts spin out Mastiff‑cuddling, greyhound‑chasing, and shelf‑display shots at matching scale, as illustrated in

.

Free “Candid Moments” PDF teaches Nano Banana Pro candid photo prompts

Candid Moments Guide (Nano Banana Pro): ai_artworkgen released a free PDF called Mastering Photography with AI: Candid Moments that focuses on prompt structures for NB Pro images that feel like unposed, in‑between shots instead of stiff portraits, as described in Candid guide intro. The thread collects multiple examples and links a consolidated wrap‑up post in Guide wrap thread, and the PDF itself is available via the candid guide.

K‑pop dance spec shows Nano Banana Pro character consistency across a 2×2 grid

K‑pop Grid (Nano Banana Pro): A highly structured prompt for a young K‑pop idol dancer combines wardrobe, camera (Sony A7R IV at 85mm, f/2), studio setting, LED floor strip, and lighting notes with pose and expression details to produce a hyper‑realistic hero shot in K‑pop scene prompt. A companion instruction then asks for a 2×2 grid of 3:4 verticals with different poses and angles while keeping face, outfit, lighting, and overall style locked, producing four consistent frames that could be a mini lookbook or choreography storyboard in

.

Luma’s Ray3 Modify shows pure virtual relighting of interior footage

Ray3 Modify (LumaLabsAI): Building on earlier environment‑swap tests in Dream Machine, Luma’s latest Ray3 Modify clip focuses on virtual relighting alone, fading a modern kitchen from bright daytime to dark, moody and then warm evening looks while keeping camera motion and composition intact, following up on scene swap demo that highlighted broader scene changes. The new example reinforces that Ray3 can be used as a post‑production lighting pass for interiors, not only for changing locations, as the kitchen’s layout and objects stay stable while only light direction and ambiance shift in Ray3 relight clip.

NB Pro scrapbook prompt builds a 9:16 “day in my life” collage

Scrapbook Collage (Nano Banana Pro): IqraSaifiii shares a detailed JSON‑style prompt for a vertical 9:16 social “scrapbook collage” with four overlapping panels, sticker text like “a day in my life”, and mixed‑media graphics on a blurred foliage background, all driven from one subject spec in Scrapbook prompt. The recipe locks down demographics, hair, wardrobe, matcha cup prop, smartphone‑style lens/aesthetic, and lighting (dappled afternoon sun in a garden café), plus per‑panel pose and expression notes, so the four photos read as a cohesive vlog sequence rather than unrelated shots.

3D crystal figurine prompt turns popular characters into glass collectibles

Crystal Figurines (Nano Banana Pro): IqraSaifiii also publishes a prompt pattern that renders characters like Zootopia’s Nick and Judy, Spider‑Man, or Hello Kitty as translucent colored glass figurines with glossy “crystal” material, floating hearts or apples, and pastel pink studio backdrops in Crystal figurine examples. The template leaves slots to insert any subject and preferred decorative elements while keeping material, lighting, and composition consistent so illustrators or merch designers can quickly spin out coherent collectible series.

Creators port Midjourney looks into Nano Banana Pro for cinematic realism

Style Transfer (Nano Banana Pro): WordTrafficker shows a Nano Banana Pro render labeled “Cinematic realistic style render” that matches a Midjourney source style while sharing that they are doing broader style transfers from MJ to NBP in Style transfer note. The posted frame—a dramatic low‑key portrait—demonstrates that once a style vocabulary is translated into NB Pro prompt language, artists can get similar look and feel while benefiting from Nano Banana’s controllable camera and production‑oriented JSON setups in

.

Mixed‑media portrait prompt overlays drawn “glitch frames” on a photo

Glitch Frames Portrait (Nano Banana Pro): Another NB Pro spec from IqraSaifiii defines a tight, golden‑hour headshot of a woman with specific facial features, copper‑lit hair, and chiaroscuro side lighting, then layers in two hand‑drawn oil‑pastel rectangles over one eye and part of the mouth so the sketched features diverge slightly from the underlying photo in Glitch frame recipe. The JSON breaks out subject anatomy, lighting, exact frame placement, and even a red handwritten signature, turning what would be a single still into a repeatable mixed‑media series template people can reuse in Portrait prompt repost.

🎛️ Other gen‑video engines: mimicry, shot control, worlds

Excludes LTX‑2 and Kling. Roundup of non‑feature tools creatives can use today: PixVerse’s MIMIC animates a still from a driving video, Seedance 1.5 Pro emphasizes shot‑level direction + lip‑sync, and HY‑World 1.5 lowers the bar for world generation.

Tencent’s HY-World 1.5 opens its world model stack for creators

HY-World 1.5 (Tencent Hunyuan): Tencent upgrades its HY-World spatial world model with open training code, faster inference, and a new Lite 5B variant aimed at "small-VRAM GPUs," while removing the waitlist for its online app so anyone can try scene generation and interaction in the browser HY-World 1.5 update. This release positions HY-World as an open, community-driven base for 3D world building and "spatial intelligence," rather than a closed demo.

• Open training stack: Tencent shares fully customizable training code so teams can build and fine-tune their own world models on top of HY-World’s recipe, instead of treating it as a black box HY-World 1.5 update.

• Performance and access: A Lite 5B model targets lower-VRAM GPUs, while accelerated inference and a zero-waitlist web app focus on real-time interaction and quick iteration for designers and game or film pre-vis work HY-World 1.5 update.

The combination of open code, lighter checkpoints, and immediate web access moves HY-World into the same conversation as other creator-facing world engines, but with more emphasis on transparency and tinkering.

BytePlus Seedance 1.5 Pro leans into shot-level control and tight lip-sync

Seedance 1.5 Pro (BytePlus): Building on Seedance intro where BytePlus framed Seedance 1.5 Pro as the way to lose "AI demo" vibes, the latest promo stresses millisecond‑precise lip-sync, multi-speaker multilingual dialogue, shot-level directorial control, and end‑to‑end audiovisual alignment for cinematic work on ModelArk Seedance spec spot. The message is that teams no longer have to trade visual quality for speed, with the model pitched as handling both.

• Audio‑video alignment: The spot calls out "millisecond‑precise" lip-sync plus multi-speaker dialogue and sound design that follow the cut, positioning Seedance as a tool for scripted scenes where audio timing and shot rhythm matter as much as the frames themselves Seedance spec spot.

• Director-style controls: BytePlus repeats that creators can describe shot structure and have the model respect those directions, framing it as shot-level control rather than single-prompt clips meant only for demos Seedance spec spot.

• Cultural framing: A separate BytePlusX ad recaps 2025’s "brainrot era" of AI memes, micro‑films, and deepfake battles, tying Seedream 4.5 and related tools into a creator culture where one person often stands in for a whole studio Seedream culture ad.

Together, these posts reinforce Seedance 1.5 Pro as a production‑oriented engine for teams that care about dialogue, edit rhythm, and client‑ready polish, not only eye‑catching one‑offs.

PixVerse’s MIMIC turns one driving video into many animated avatars

MIMIC (PixVerse): Following up on MIMIC launch where PixVerse tied its new motion-clone feature to CES credits, today’s posts focus on how creators can feed a single driving clip into AI Motion Mimicry and have a still image copy movements, expressions, and lip-sync for one‑click avatar spokesperson videos PixVerse feature brief. The feature pitches itself as a way to reuse one performance across many characters without keyframing or manual rigging MIMIC analysis.

• One video, many looks: PixVerse shows a static portrait that precisely follows the head motion, facial expressions, and mouth shapes from an input video, with on-screen copy reinforcing "one video drives your image" as the core mental model MIMIC analysis.

• Avatar and spokesperson focus: Marketing copy frames MIMIC as a tool for AI avatar presenters and reaction clips—"one-click generate AI avatar spokesperson videos"—rather than a general-purpose VFX system, pointing creatives toward explainers, shorts, and social formats PixVerse feature brief.

• Ecosystem signal: CES photos underline that PixVerse is pushing this as part of a broader "video is something you play with" positioning, connecting MIMIC to live creator workflows rather than isolated tests PixVerse CES photos.

For filmmakers and social video teams, MIMIC’s promise is about scaling performances across characters and formats while keeping the original acting pass as the single source of truth.

🖌️ Reusable looks: Midjourney styles and art prompts

Mostly styles and templates for visual art. New MJ sref with iridescent grain, a versatile charcoal sketch prompt, community geisha/dragon QTs, plus a translucent crystal figurine look for character renders.

Charcoal sketch prompt pack offers reusable raw, textured illustration look

Charcoal sketch prompt (Azed_ai): Azed_ai shares a generic "charcoal sketch" prompt template aimed at any subject, emphasizing raw, textured linework, expressive shading, and smudged gradients that leave visible sketchbook marks, with multiple example outputs across dancers, knights, clowns, and musicians in the prompt share.

Because the core description is subject-agnostic, illustrators and filmmakers can reuse it for concept art, mood boards, and title illustrations while swapping only the subject, keeping a consistent gritty, analog look around otherwise fully digital projects.

Midjourney sref 1275745115 nails classic European animation aesthetics

Midjourney sref 1275745115 (Artedeingenio): Another Midjourney style ref, --sref 1275745115, is highlighted for capturing classic European animation and Franco‑Belgian comic sensibilities—clean lines, painterly shading, and 70s–80s auteur animation vibes that feel close to Tintin-era films in the style breakdown.

For storyboarders and indie animators, this gives a single handle to lock a whole project into a cohesive retro-European look across characters, crowd scenes, and dialogue shots without hand-tuning every prompt.

Midjourney sref 6139537108 adds iridescent grain and foam-like forms

Midjourney sref 6139537108 (Azed_ai): A new Midjourney style reference, --sref 6139537108, focuses on soft, foam-like abstract forms with heavy grain, iridescent color shifts, and spectral flares, giving artists a reusable look for dreamy, tactile motion graphics and key art as shown in the style thread and reinforced by the later style repost.

This look is tuned for close-up, texture-first compositions, which can double as backgrounds, title cards, or overlays in music videos and film posters where designers need consistent but non-literal visual language.

Nano Banana Pro prompt turns characters into translucent crystal figurines

Crystal figurine render (IqraSaifiii): A detailed Nano Banana Pro prompt recipe shows how to turn existing IP or OCs into stylized translucent glass figurines—crystal bodies, glossy specular highlights, floating hearts, stars, or apples, and pastel backdrops under bright studio lighting—demonstrated with Zootopia, Spider‑Man, and Hello Kitty in the crystal figure set.

This gives character artists and product designers a repeatable way to previsualize collectible toy lines, limited-edition merch, or in-world props with a single aesthetic that still respects each character’s recognizable silhouette and palette.

“QT your dragon” prompt crystallizes a pastel plush mini-dragon look

Pastel plush dragon (Azed_ai): The "QT your dragon" call results in a now-replicable style for tiny, plush-like dragons—soft white bodies, fuzzy texture, glittered pink accents, and a neutral studio backdrop—that reads like toy photography in the dragon base and is echoed in community replies such as dragon variation.

For merch mockups, kidlit covers, and mascot concepts, this prompt pattern gives artists a consistent cute-creature look they can remap onto different color schemes or accessories without rebuilding the aesthetic.

“QT your geisha” thread spins up a watercolor ink-splash portrait style

Geisha watercolor look (Azed_ai): A "QT your geisha" prompt thread showcases a reusable watercolor/ink-splash style—off-center geisha figure, loose brushwork, parasol, and dripping blues and pinks—that leans into high-contrast silhouettes and painterly splatters as seen in the geisha starter.

This style is well-suited for poster art, chapter cards, or character introductions where creators want an expressive, semi-abstract portrait treatment rather than literal 3D renders.

Mixed-media glitch-frame portrait prompt blends photo and oil-pastel overlays

Glitch-frame portrait (IqraSaifiii): A long-form Nano Banana Pro prompt describes a mixed-media portrait style where hyper-real photography is overlaid with hand-drawn oil pastel boxes over one eye and part of the mouth, plus crosshair lines and a red handwritten signature, lit by hard golden-hour side light for a chiaroscuro effect, as detailed in the art overlay prompt and echoed in the supporting job thread.

This recipe effectively packages an album-cover-ready look—half real, half sketched—so photographers, musicians, and filmmakers can generate series of portraits that all share the same glitch-art framing and light while changing only the subject.

NB Pro scrapbook collage prompt captures Gen Z “day in my life” aesthetic

Scrapbook collage layout (IqraSaifiii): Another Nano Banana Pro prompt defines a vertical 9:16 "social media scrapbook" style: four overlapping photo panels, blurred green foliage background, Gen Z vlog stickers like "a day in my life" and "sunny monday", plus doodled outlines and camera icons around a flannel-wearing subject holding a matcha drink, all specified down to lens, ISO, and sunlight quality in the scrapbook spec.

Because the structure, overlays, and camera settings are baked into the text, creators can drop in new outfits or faces while preserving a consistent vlog-collage brand look for shorts, story covers, or campaign templates.

🧱 From one image to 3D and stylized character reels

Compact 3D beat today: local Sharp UI for single‑image‑to‑3D, an end‑to‑end day‑build from images to FBX animation, and stylized character vids blending MJ, NB Pro, Hailuo, and ElevenLabs.

Local Gradio UI lets Apple’s Sharp turn a single image into 3D on your PC

Sharp Gradio UI (Apple / community): A community dev has built a local Gradio web UI for Apple’s Sharp model so you can generate 3D assets from a single image directly on your own machine, rather than relying on a hosted demo, as highlighted in the Sharp 3D UI mention. This puts single-image‑to‑3D in reach for artists who already have a decent GPU and want to iterate on characters, props, and product mocks without cloud latency or per‑asset fees.

For AI creatives, this means Sharp shifts from research paper to practical tool: drop in a concept render or photo and get a starting 3D mesh that can feed into Blender, Unreal, or game engines—tightening the loop between 2D ideation and 3D production.

One‑day pipeline turns Nano Banana images into FBX animated models via Tripo

Image‑to‑FBX pipeline (Techhalla): Techhalla breaks down a one‑day pipeline where Cursor and Nano Banana Pro concepts are turned into usable FBX animated models via Tripo, then dropped into interactive environments, as shown in the Banana FBX workflow. The demo walks from coding the app with Cursor, to generating stylized banana character references in Nano Banana Pro, to converting a single image into a rigged 3D mesh in Tripo, ending with the character walking around in a simple scene.

• End‑to‑end stack: Cursor handles code for the interactive viewer; Nano Banana Pro provides consistent character art; Tripo performs image‑to‑3D with animation‑ready output; the result is exported as FBX and dropped into a lightweight environment in under a day Banana FBX workflow.

• Why it matters: For indie game devs, motion designers, and small studios, this shows that a single creator can go from concept art to a playable, animated character without traditional modeling or rigging—compressing work that used to take days into a single intense build session.

The clip does not expose polygon counts or deformation quality, so mesh cleanliness and suitability for high‑end production remain open questions, but the pipeline demonstrates that image‑driven 3D character prototyping is now realistic on a tight schedule.

“Riven Black” reel showcases MJ → Nano Banana → Hailuo → ElevenLabs character stack

Riven Black character stack (Heydin_ai): Creator Heydin details the full toolchain behind the “Riven Black” character reel, combining Midjourney for the base look, Nano Banana Pro on Freepik for image development, Hailuo 2.0/2.3 for video, and ElevenLabs for sound design Riven Black clip. The final result is a slow, cinematic 3D‑style turn of a white‑haired, armored character in smoke, fully scored with custom SFX.

• Visual pipeline: A still from Midjourney sets the character design; Nano Banana Pro refines detail and consistency on the key frame; Hailuo 2.x animates that frame into a smooth video shot while preserving the stylized look Riven Black clip.

• Audio layer: ElevenLabs provides the sound effects bed, turning what might have been a silent character turntable into a more finished teaser shot ready for inclusion in a reel or mood piece.

For filmmakers and character designers, the stack shows how to blend separate best‑in‑class tools into a cohesive stylized character moment: static generative art for design, another model for polish, a third for motion, and an AI audio engine for atmosphere.

📖 Prompted shorts: Grok shots and indie experiments

Narrative experiments rather than model releases. Grok Imagine nails a 360° action spin other models missed; poetic anime OVA‑style disintegrations; plus a $300 Zelda‑style teaser built in 5 days with Freepik.

$300, 5‑day Zelda‑style teaser built entirely in Freepik

Zelda‑style teaser (Freepik): Filmmaker PJ Accetturo posts a Legend of Zelda–inspired micro‑trailer built entirely inside Freepik in five days on roughly a $300 budget, contrasting that with Nintendo taking 40 years to greenlight an official movie zelda thread. He frames the piece as a gritty, grounded re‑imagining of Hyrule—Zelda sees her home destroyed and has to act—mapped across beats of Fear → Ruin → Rage → Capture → Confrontation, with the teaser cutting between shots of Link on horseback, Hyrule architecture, glowing swords, explosions, and bold text callouts like “HYRULE LORE” that read like a studio‑scale blockbuster.

For indie filmmakers and trailer editors, this thread functions as a case study that a single creator can now block out a franchise‑level look and feel using stock‑plus‑AI tooling, detailed story beats, and aggressive editing rather than access to a large on‑set crew.

Grok Imagine nails a 360° action spin other models botch

Grok Imagine 360° shot (xAI): Creator cfryant shows Grok Imagine executing a full 360‑degree rotating arena shot around a chrome‑and‑purple robot, keeping limbs, pose, and environment stable as the camera orbits—other “top models” reportedly broke anatomy or continuity on the same specification in his tests 360 spin demo, anatomy reflection . Following up on prompt control, which highlighted Grok’s adherence on complex prompts, this clip underlines that it can maintain coherent geometry during extreme virtual camera moves that push most current video models beyond their implicit 3D understanding.

For action directors, previs artists, and game trailer teams, this positions Grok Imagine as a candidate for hero shots that orbit characters without cutting away to hide model failures.

Grok Imagine powers poetic 80s OVA‑style vampire and flower shorts

OVA vampire shorts (Grok Imagine, xAI): Artist Artedeingenio uses Grok Imagine to stage an 80s anime OVA‑style scene where a vampire steps from deep shadow into the first light of dawn and slowly disintegrates into drifting ash and light particles, with wind‑blown remnants fading to nothing over a few seconds vampire short. A separate clip shows a glowing flower blooming and rotating against a dark background, which he cites as evidence that he is “managing to create really beautiful, poetic animations” in Grok that he says he could hardly match with other apps flower short.

For storytellers experimenting with anime‑influenced shorts, these prompts demonstrate that the model can handle slow, emotionally driven visual beats—not only fast, flashy transitions—while keeping style, motion, and particle effects aligned with a clear narrative idea.

Diesol and peers stress film craft alongside AI tools

AI + film craft mindset (Diesol): Filmmaker Diesol outlines a 2026 philosophy for creatives, arguing that people learn camera work, lighting, editing, screenwriting, sound, and music in parallel with re‑learning how to do “it all in AI,” because “story is the game; tools will continue to update,” which in his view makes hybrid skills the safest bet craft advice. In a separate reflection on how embedded AI already feels in daily life—from ChatGPT prompts to shopping and memes—he pushes the idea that it is “time to make AI work for you,” rather than treat it as a far‑off trend ai now reflection. TheoMediaAI reinforces the perspective by noting that “you’ve got [a camera] in your pocket right now” and that a phone can be “kitted out to become a cinematic powerhouse” when paired with AI post‑workflows phone camera remark, while Diesol punctuates the ongoing experiments with a simple clapperboard emoji as he continues shipping AI‑assisted shorts film emoji.

For writers, directors, and editors experimenting with AI, these posts frame the technology as an additional layer on top of classical craft rather than a replacement for it, and as something already woven into day‑to‑day creative work rather than a future milestone.

Grok Imagine doubles as an anime villain design and music sketchpad

Anime villain reel (Grok Imagine, xAI): Another Artedeingenio post compiles a rapid sequence of stylized anime villain portraits generated in Grok Imagine, each framed as a tight character shot with distinctive costume design, lighting, and mood, overlaid with the app’s “GROK IMAGINE” branding villain compilation. The same creator also praises Grok Imagine as “really good for music and songs” after having it generate an 80s‑style power ballad about a dog finding a tennis ball, complete with on‑screen lyrics and playback music demo.

Taken together, these clips present Grok Imagine as a mixed‑media sketch environment where character designers and writers can iterate on villain looks, tone, and soundtrack ideas inside a single tool rather than jumping between separate image and audio workflows.

Mind Tunnels: Extraction leans on Midjourney for sci‑fi set pieces

Mind Tunnels stills (Diesol): Director‑creator Diesol shares additional AI‑generated shots “from the cutting room floor” of his Mind Tunnels: Extraction project, crediting Midjourney with continuing to “create spectacles like no other” for large‑scale sci‑fi imagery mind tunnels shots, more stills link . The shared work focuses on richly lit, surreal environments and dramatic compositions rather than character animation, signaling that Midjourney is being used here as a concept‑art and keyframe engine feeding a longer pipeline, not as an end‑to‑end video model.

For sci‑fi storytellers, this shows one pattern where AI image tools handle the heavy lifting on vistas and set pieces, while more traditional editing, compositing, or separate video models handle motion and final cuts.

AI‑driven music video teaser mixes live performance and abstract visuals

AI music video WIP (pzf_ai): Creator pzf_ai teases a new music video now in production, sharing a brief clip that opens on a straightforward studio lip‑sync performance before snapping into a bright, abstract, generative environment where the same performer continues singing amid shifting shapes and colors music video tease. The post does not spell out the exact model stack, but his broader feed leans on modern video generators and enhancement tools, so this functions as another example of musicians wrapping full releases in AI‑heavy visual treatments rather than reserving them for lyric clips.

For artists and labels, it highlights how AI visuals are showing up directly in primary music videos, blending recognizably human performances with stylized, model‑driven worlds.

⚖️ Promptcraft integrity and 2026 Hollywood AI calendar

Community debates and policy timelines: a call‑out against prompt plagiarism, a PSA debunking Grok safety‑setting myths, and a timeline of DOJ/SAG‑AFTRA/Oscars milestones that may reshape AI use in film.

Hollywood’s 2026 AI calendar lines up DOJ action, SAG-AFTRA talks, and Oscars test

Hollywood 2026 AI calendar (AI Films/Zaesarius): Commentator Zaesarius lays out a tight 2026 schedule where US regulators, unions, and awards bodies collide over AI in film, highlighting a DOJ AI Task Force move on January 10 targeting state performer‑likeness protections, SAG‑AFTRA negotiations starting February 9 under Sean Astin, a March 15 Oscars test of how AI‑assisted films are treated, and June 30 as the date when major union contracts expire if no new AI language is agreed, as summarized in the timeline tweet and expanded in the Hollywood AI blog. He frames 2026 as a "reset" year where assumptions about AI in performance, post, and authorship either get rewritten in contracts or dug in as lines in the sand.

• Key dates: Jan 10 DOJ AI Task Force action against California‑style performer laws; Feb 9 start of SAG‑AFTRA talks on AI use; Mar 15 Oscars decision on what counts as an AI‑eligible film; Jun 30 expiration of current union agreements if no AI clauses are updated, according to the timeline tweet.

• Issues in play: The blog points to tensions between federal attempts to pre‑empt strong state likeness protections, the need to define AI use and residuals inside union contracts, and pressure on studios to show real ROI on large AI investments like Disney’s reported OpenAI spend, as discussed in the Hollywood AI blog.

For AI‑heavy filmmakers and studios, this calendar sketches when legal risk and cultural acceptance around AI‑generated actors, voices, and shots are likely to be contested in public rather than in isolated deals.

Prompt originality debate flares as Azed AI condemns prompt copying

Prompt ethics and originality (azed_ai): Azed AI publicly pushes back on the trend of copying other people’s prompts, tweaking a few words, and presenting them as original work, arguing that “make your own prompts” is about basic integrity rather than gatekeeping, and that small rephrases do not change authorship, according to the prompt integrity rant. He says this behavior is common rather than rare and adds that writing your own prompts is actually easier than reverse‑engineering and renaming someone else’s ideas.

He reinforces the point by continuing to share free, detailed prompt templates like his "charcoal sketch" recipe for raw, textured drawings, which he offers as inspiration for people to build from instead of clone, as shown in the charcoal prompt share and echoed in the charcoal prompt repost. For AI artists and filmmakers who rely on promptcraft as a creative skill, the thread frames originality and attribution as social norms the community is still negotiating, not solved problems.

Ozan Sihay debunks Grok “bikini safety” settings and calls for better research

Grok safety myths (Ozan Sihay/xAI): Turkish creator Ozan Sihay criticizes a wave of near‑identical tech videos that claim changing specific Grok settings will stop the model from generating bikini versions of user photos, calling these claims entirely false and explaining that the toggles in question only control whether user data may be used to improve the AI, not its safety boundaries, per the grok settings PSA. He says he has seen the same misleading tutorial repeated across “many” creators, and urges them not to publish configuration advice without actually understanding what the options do.

He follows up by mocking the trend as an "Exorcist"‑style scare story, reinforcing that these switches are about training consent, not content filters, in the exorcist comment. For creatives using Grok Imagine or similar tools on personal imagery, the thread underlines how misreading UX copy can create a false sense of safety and shows how quickly configuration myths can spread when multiple influencers recycle the same script.

🎙️ Voices and quick scores for creators

Few but useful items: a real deployment case for voice agents in sales ops and a community nod to Grok’s fast song creation for temp tracks and memes.

ElevenLabs voice agents now power 3M+ minutes of CARS24 sales calls

ElevenLabs Agents (ElevenLabs): CARS24 reports that its AI voice agents now handle over 3 million minutes of customer conversations, assisting 45% of sales and cutting calling costs by 50%, while escalating complex cases to human teams when needed according to the CARS24 metrics.

This deployment is framed as end-to-end assistance—guiding buyers, addressing objections, and routing edge cases—rather than a narrow IVR, and the company links to a longer discussion of the workflow and results in the attached customer interview.

Grok Imagine gets praise for fast, on-style song generation

Grok Imagine music (xAI): A creator highlights Grok Imagine’s ability to generate catchy, on-brief songs—showing an 80s power ballad about a dog and its tennis ball with lyrics appearing in sync as the track plays in the app UI in the music demo.

The post emphasizes how well the model hits style plus topic for quick music and meme content, with the creator saying it is "really good for music and songs" as a practical scoring tool for short-form projects and jokes music demo.

📣 Creator promos and CES tie‑ins

Short‑term offers and presence. Today includes Relight credit giveaways, PixVerse’s CES push and media moments, and ApoB’s 24‑hour credit promo for persona‑driven motion posts.

Higgsfield dangles 220‑credit Relight giveaway to push AI lighting tool

Relight (Higgsfield): Higgsfield is promoting its new Relight image‑relighting tool with a social giveaway that offers 220 credits to users who retweet, reply, follow, and like the launch post, tying a concrete on‑ramp to a pro‑grade feature set credit giveaway. The tool gives creators studio‑style control over light direction, intensity, and color temperature on existing images, with six presets, 3D positioning, and soft‑to‑hard shadow control shown in the launch clip

.

• Creator workflow angle: Independent creators like Techhalla are already using Relight to re‑light news‑style arrest photos by dragging a virtual key light around the frame and showing how shadows update realistically, as detailed in the workflow thread; they then link a step‑by‑step Higgs workflow that walks through going from a base image to a finished relit shot in minutes workflow guide.

• Broader framing: Commentators frame Relight as turning lighting from a capture‑time constraint into a post‑production decision, highlighting backlit portraits, product shots, and stylized color washes as key use cases for the credits‑driven trial ai recap.

The net effect is a classic "engage to earn" promo wrapped around a tool that directly targets cinematographers, photographers, and AI illustrators who care about light as the primary storytelling lever.

PixVerse leans on CES 2026 presence to sell “video you play with” vision

PixVerse at CES 2026 (PixVerse): PixVerse is using its CES 2026 presence to pitch the line that "video is no longer just something you watch, it’s becoming something you play with," expanding on its earlier booth‑gift strategy at the show PixVerse CES. The team posted photos of founders and creators around a poolside media area, an indoor presentation screen with the PixVerse logo, and an outdoor interview setup with camera crews filming in front of a pool backdrop ces recap.

MIMIC feature framing (PixVerse): Alongside the on‑site push, AI commentators spotlight PixVerse’s new MIMIC capability, which lets a single driving video control an image’s body movement, facial expressions, and lip‑sync for spokesperson‑style clips mimic explainer; the demo shows a static portrait coming to life in sync with a reference performance, summarized as "one video drives your image"

.

For filmmakers and social video creators, the combination of CES stage time, live interviews, and highly shareable MIMIC demos positions PixVerse as an AI video brand aimed at play and interactivity rather than pure behind‑the‑scenes tooling.

Apob AI re-ups 1,000‑credit Remotion offer around persona consistency pitch

Remotion (Apob AI): Apob AI is again offering 1,000 credits for 24 hours to users who retweet, reply, follow, and like its Remotion promo, this time framing the tool explicitly as a solution to "the biggest struggle for AI creators"—keeping characters visually consistent across content remotion promo. This builds on the earlier Remotion launch and credit drive that focused on studio‑quality headshots and simple portrait animation ApoB promo.

• Brand persona angle: The new clip shows a stylized 3D avatar speaking with synchronized facial movement and overlays like "Character Consistency Solved" and "Create Once, Deploy Everywhere," positioning Remotion as infrastructure for a single digital persona that can appear across platforms without constantly re‑prompting remotion promo.

• Creator economy framing: The messaging ties the short‑term credit boost directly to long‑horizon creator branding, arguing that one persistent avatar can front a "24/7 content house" while remaining on‑brand, which is a clear pitch to influencers, VTubers, and small studios trying to scale output without fracturing identity.

For storytellers building recurring characters or virtual hosts, the refreshed promo highlights that Apob is as focused on marketing the persona use case as on the underlying face‑animation tech.

Dreamina AI wraps New Year fireworks challenge with 1,000‑credit winner

Fireworks Challenge (Dreamina AI): Dreamina AI announced the winner of its New Year "AI Fireworks" challenge, selecting creator @WindEcho87 for a 1,000‑credit prize and closing a week‑long event that asked users to generate fireworks‑themed images or videos with Dreamina winner announcement. The contest ran from Dec 31, 2025 to Jan 7, 2026, with one winner, results promised a day after the event, and rewards to be distributed within three working days as laid out in the original rules winner announcement.

The promo leaned on guest judge @AllaAisling and New Year timing to encourage experimentation with Dreamina’s image/video capabilities, giving AI artists and motion designers a clear, time‑boxed brief plus a tangible credit reward that can roll back into future projects on the platform.

Producer.ai spotlights community-built Spaces after first challenge winners

Spaces community push (Producer.ai): Producer.ai is following its first Spaces community challenge—where they named winners like grufel, ChaoticGood, scuti0, VOXEFX, and SplusT—with a new spotlight on creator‑built Spaces such as a mini piano and a "Keyboard Hero" rhythm game, treating these as early examples of artists turning AI into playable instruments and tools spaces spotlight.

The team emphasizes that Spaces let artists build interactive instruments, games, and plugins that others can use directly on the platform, and the staff‑pick promotion plus earlier challenge prizes create a loop where builders get visibility while Producer.ai curates a gallery of AI‑powered toys and utilities for other creatives to explore.

🏗️ Studios, hardware, and robotics in creative pipelines

Business/infrastructure items relevant to media makers: Runway shares a customer case, NVIDIA Rubin perf numbers surface, and robotics stacks integrate open components for creator‑friendly demos.

NVIDIA Vera Rubin NVL72 specs land: 3.6 EFLOPS inference, 1.6 PB/s bandwidth

Vera Rubin NVL72 (NVIDIA): New slides from CES detail NVIDIA’s Vera Rubin NVL72 inference system at around 3.6 EFLOPS inference and 2.5 EFLOPS training performance with an internal fabric of 1.6 PB/s bandwidth, plus 54 TB of LPDDR5X and 20.7 TB of HBM across the pod according to the shared spec image in the rubin spec slide.

• Per‑GPU Rubin numbers: The same material calls out a single Rubin GPU at 50 PFLOPS NVFP4 inference, 35 PFLOPS NVFP4 training, 22 TB/s of HBM4 bandwidth, 3.6 TB/s NVLink, and 336B transistors—roughly 2.8× the HBM bandwidth and 5× the NVFP4 inference of Blackwell according to the slide in the rubin spec slide.

• Why creatives care: These numbers frame what "top shelf" compute looks like for next‑gen video and world models—Runway’s Gen‑4.5 and similar systems targeting Rubin-class hardware can reasonably assume multiple EFLOPS of budget for multi-minute, multi-character shots, rather than the tens of PFLOPS available on a single current GPU.

For studios planning 2026+ render farms or cloud deals, Rubin NVL72 effectively sets the ceiling for how heavy an AI-first film or series pipeline can get before compute—not model capacity—becomes the main constraint again.

Boston Dynamics puts Atlas humanoid into production with Gemini Robotics and Hyundai plan

Atlas humanoid (Boston Dynamics): Boston Dynamics is moving its Atlas humanoid into production with specs like 360° torso rotation, a 4‑hour battery, and a 110‑pound lift capacity, in parallel with a partnership where Google DeepMind’s Gemini Robotics models will handle industrial tasks, according to the summary in the atlas product specs.

Following up on Atlas partnership that flagged the Gemini Robotics tie‑in, the new update adds a deployment roadmap: joint research on automotive manufacturing begins this year, with Atlas fleets slated for Hyundai facilities from 2028, giving a concrete timeline for when AI‑driven humanoids might show up alongside humans on factory‑style sets atlas product specs.

For filmmakers, experiential designers, and theme-park style builders, a production Atlas with learned behaviors from Gemini moves robots from one‑off stunt rigs into something that could be rented, scripted, and choreographed like any other performer—backed by a published hardware spec instead of a black‑box prototype.

NVIDIA and Hugging Face fuse open Isaac robotics into LeRobot for creator demos

Isaac × LeRobot (NVIDIA / Hugging Face): NVIDIA Robotics and Hugging Face are integrating NVIDIA’s open Isaac robotics stack directly into the LeRobot library, turning Reachy Mini and similar bots into open, scriptable platforms that can be driven by cloud models and HF tooling, as teased in the isaac lerobot note and amplified in the robotics collab.

• Robots as an "app store": HF’s team describes Reachy Mini as heading toward an "App Store of Robotics," where owners can build and share apps that run on real hardware—early signs are community-made reactions and behaviors surfacing over the holidays, as highlighted in the reachy mini apps.

• Dev surface for creatives: Arm’s demo space and code for Reachy reactions, wired through Hugging Face Spaces and Isaac, show how animators or interaction designers can prototype gestures and performances in Python notebooks or web UIs instead of proprietary vendor tools, then push those to physical robots on stage or on set reachy demo link.

For studios experimenting with physical AI characters—installations, live events, or hybrid film/robotic performances—this Isaac–LeRobot stack points to a future where "rigging" a robot could feel a lot closer to rigging a character in a DCC than wiring a one‑off ROS stack.

Runway spotlights OBSIDIAN studio’s AI-first campaigns for Disney, Nike, Wrangler, Hyundai

OBSIDIAN workflows (Runway): Runway is showcasing how creative studio OBSIDIAN has built full campaign workflows for brands like Disney, Nike, Wrangler, and Hyundai around its video models and tools, with 15+ projects already shipped using this stack according to the customer story in the runway obsidian story and the detailed runway case study.

• Director-led, AI-heavy pipeline: Obsidian runs small (10–15 person) director-led teams that mix live action, traditional post, and Runway generative tools in the same timeline, letting directors iterate on casting, character design, and beats directly in the AI stack rather than handing off long spec docs—see the process breakdown in the runway case study.

• From prepro to post: The case study describes AI use from previsualization (concept frames, design passes) through production-time shot creation and real-time editing, then into post for VFX and polish, so the same AI environment stays present from first pitch to final delivery runway obsidian story.

For filmmakers and studio leads, this positions Runway less as a single tool and more as the backbone of a compact, always-on campaign studio rather than a series of disconnected "AI passes."

Hedra recaps Character-3, Hedra Studio, Live Avatars as it tees up 2026

Hedra platform (Hedra Labs): Avatar platform Hedra is marking 2025 as its biggest year so far, calling out the launch of its Character‑3 model, the Hedra Studio web environment, and its Live Avatars feature as key building blocks for creators, in a short recap reel aimed at the community in the hedra 2025 recap.

The team stresses that these tools already underpin a stream of viral clips, animations, and podcast‑style content from users, and that they are "deep in the office" working on the next wave of capabilities for 2026, without yet giving concrete feature or pricing details hedra 2025 recap.

For small studios and solo creators, this reinforces Hedra’s positioning as a dedicated avatar stack rather than a generic video model—something closer to a standing virtual talent roster plus production UI than a raw API.

Pictory case: doctor grows to 2,000 followers with text-to-video health shorts

Pictory AI Studio (Pictory): Pictory is sharing a case study where Dr. Neejad Chidiak scaled from zero to over 2,000 followers by using its text‑to‑video tools to produce hundreds of short, educational health videos without traditional editing experience, as outlined in the pictory doctor case and the linked pictory case study.

The promo ties this back to Pictory’s broader pitch that scripts, blogs, or rough ideas can flow directly into short-form content that feels "ready for social" through automatic editing, voice, and layout, which the team frames as enough for a solo professional to keep up with audience expectations on platforms that reward near‑daily posting pictory doctor case.

For educators, coaches, and niche experts considering AI-first production, this is another signal that turnkey studios like Pictory are leaning hard on real‑world creator examples rather than pure tech demos to show how far you can push content volume from a laptop.

🧪 Multimodal and control research worth bookmarking

Paper links and demos that could shape tools: unified AR text‑image modeling (NextFlow), small‑model reasoning (Falcon‑H1R), omni‑modal generators (VINO), scene manipulation via RL (Talk2Move), and long‑context inference (RLMs).

NextFlow proposes unified autoregressive model for text–image tokens

NextFlow multimodal transformer: The NextFlow paper proposes a single decoder-only autoregressive model that jointly handles interleaved text and image tokens, trained on 6 trillion discrete tokens to support unified multimodal understanding and generation, as summarized in the paper thread and detailed in the ArXiv paper. They introduce next-scale prediction for images instead of raster scan; the authors report 1024×1024 image generation in around five seconds, positioning this as a candidate backbone for future creative tools that mix long-form text with dense visual content.

Tencent’s HY‑World 1.5 opens code and adds 5B lite world model

HY-World 1.5 world model (Tencent Hunyuan): Tencent’s Hunyuan team announces HY-World 1.5, highlighting open training code for its world model stack, accelerated inference, and a new Lite 5B model designed to fit smaller VRAM GPUs while supporting interactive spatial environments, as outlined in the HY-World update. The release also removes the online waitlist so anyone can try the web app, framing HY-World as an open, community-driven platform for spatial intelligence that could underpin future simulation-heavy creative tools.

Falcon-H1R shows small 7B model can rival larger reasoners

Falcon-H1R small reasoning model: The Falcon-H1R report introduces a 7B-parameter language model that aims to match or beat state-of-the-art reasoning performance from models 2–7× larger by focusing on efficient test-time scaling and a tailored training recipe, according to the paper thread and the accompanying ArXiv paper. The work leans on supervised fine-tuning, reinforcement-learning-based scaling, and careful data curation for reasoning-heavy tasks, suggesting that future creative and coding assistants may not always need frontier-sized models to deliver strong step-by-step reasoning.

LG’s K‑EXAONE MoE model targets 256k‑token multilingual reasoning

K-EXAONE MoE model (LG AI Research): LG AI Research’s K-EXAONE technical report describes a Mixture-of-Experts language model with 236 billion total parameters but only 23 billion active per inference, built for long-context (up to 256,000 tokens) and six-language coverage including Korean, English, Spanish, German, Japanese, and Vietnamese, according to the paper summary and the linked ArXiv report. Benchmark results in the report place K-EXAONE alongside other large open-weight models on reasoning and general understanding tasks, making it a notable candidate foundation for multilingual creative and coding tools that need very long scripts or documents in context.

Talk2Move uses RL to move objects in scenes via text

Talk2Move scene control RL: Talk2Move explores reinforcement learning for "text-instructed object-level geometric transformation in scenes," training agents to move objects inside 3D environments in response to natural-language instructions, as introduced in the paper teaser. The demo shows objects being repositioned and rotated to satisfy commands like moving items around a room, signalling a research path toward finer-grained, instruction-driven scene editing for 3D layouts and potentially video.

VINO unifies visual generation with interleaved omni-modal context

VINO unified visual generator: The VINO project presents a "Unified Visual Generator with Interleaved OmniModal Context" that can insert characters, change styles, and swap identities within a scene while keeping layout and lighting consistent, as shown in the Akita dog, anime, and Goku examples in the VINO examples. The grid illustrates how a single living-room shot can be reused while the subject morphs from a dog to different human and anime characters, highlighting a direction where future tools may treat object insertion, character replacement, and style transfer as one coherent generative operation.

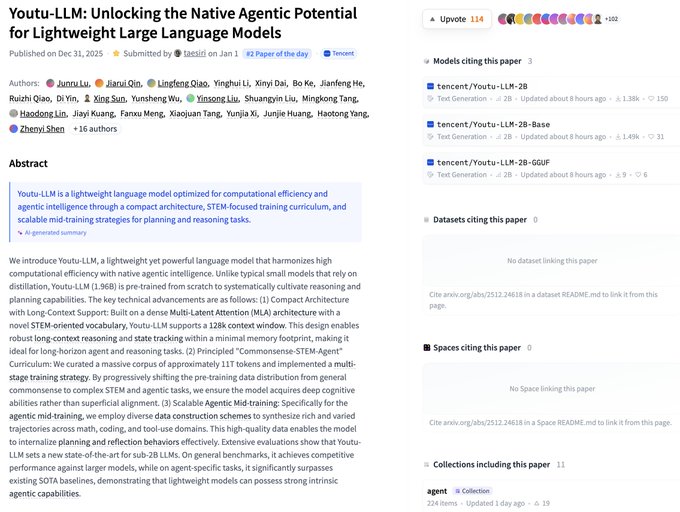

Youtu‑LLM explores agentic intelligence in a 2B‑parameter small model

Youtu-LLM lightweight agentic model (Tencent): The Youtu-LLM paper introduces a 1.96B-parameter model trained from scratch with a Multi-Latent Attention architecture, a 128k-token context window, and a roughly 11-trillion-token curriculum that shifts from commonsense to STEM and agent-style tasks, as summarized in the paper highlight and expanded in the ArXiv paper. The authors emphasize "native agentic potential" via a Commonsense-STEM-Agent curriculum and scalable agentic mid-training, suggesting that small models on consumer hardware could increasingly handle planning and tool-using behavior for creative workflows rather than serving only as slim chat front ends.