Runway Gen‑4.5 adds native audio and 1,247 Elo – early access UI lands

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Runway’s Gen‑4.5 quietly leveled up from benchmark bragging to workflow threat. After last week’s 1,247 Elo flex, the model now shows up in the UI as “coming soon,” and co‑founder Cristóbal Valenzuela confirmed it outputs native audio, which explains those eerily finished demo clips with talking characters and synced ambience. If the shipping build lets you mute or replace that track cleanly, Gen‑4.5 becomes a true one‑pass previz and social‑video engine rather than a silent T2V toy.

On the Kling side, O1 is shifting from hype reel to handbook. A two‑week creative contest (Dec 1–14) dangles up to $1,000 per piece plus fat credit packs, while creators standardize patterns: multi‑reference character swaps in Freepik and Leonardo, Elements tags for recurring props, and multi‑pass edits for wardrobe, backgrounds, and actors. Nano Banana Pro keeps feeding that pipeline with JSON shot briefs and cartoon‑to‑photoreal tricks so you can design once and re‑use across shorts, ads, and memes.

Under the hood, the agent and infra layers are getting more serious. Amazon’s Nova Act trains “normcore” browser agents in RL gyms, Perplexity’s Comet pre‑screens HTML for prompt injection with a fine‑tuned Qwen3‑30B, and ElevenLabs launched a 25‑day partner deal calendar aimed squarely at agent builders.

Meanwhile SemiAnalysis says Google’s TPUv7 undercuts NVIDIA Blackwell TCO by 44% on 9,216‑chip torus pods, even as creators openly mock overcooked “game‑changer” launches—this week’s story is economics and workflows, not slogans.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Top links today

- Higgsfield Cyber Week unlimited AI toolkit

- Runway Gen-4.5 cinematic model overview

- LTXStudio FLUX.2 unlimited access promo

- Generate with Kling O1 on Freepik

- Kling O1 image model on OpenArt

- HunyuanVideo 1.5 on Tensor and contest

- PixVerse V5.5 video API on fal

- Vidu Q2 image generation on fal API

- ComfyUI guide for Z-Image Turbo and Wan 2.2

- Magnific Skin Enhancer upscaler on Freepik

- AI Planet Magazine issue featuring Producer artists

- Luma AI Terminal Velocity Matching research article

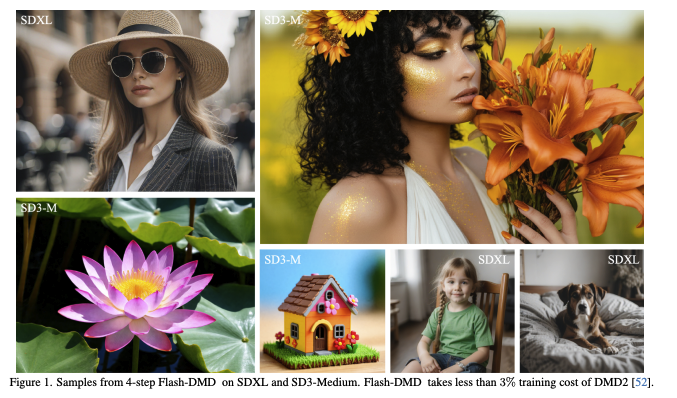

- Flash-DMD few-step image generation paper

- YingVideo-MV music-driven video generation paper

- Video4Spatial context-guided video generation paper

Feature Spotlight

Runway Gen‑4.5 enters early access with native audio

Runway confirms Gen‑4.5 includes audio and starts rolling out to users—unlocking one‑pass picture+sound generation for tighter beats and faster edits.

New today vs yesterday’s leaderboard chatter: creators spotted Gen‑4.5 toggles appearing and Cristóbal Valenzuela confirmed built‑in audio. Reels emphasize prompt adherence and realism. Excludes Kling O1, which is covered separately.

Jump to Runway Gen‑4.5 enters early access with native audio topicsTable of Contents

🎬 Runway Gen‑4.5 enters early access with native audio

New today vs yesterday’s leaderboard chatter: creators spotted Gen‑4.5 toggles appearing and Cristóbal Valenzuela confirmed built‑in audio. Reels emphasize prompt adherence and realism. Excludes Kling O1, which is covered separately.

Runway CEO confirms Gen‑4.5 includes built‑in audio generation

Runway co‑founder Cristóbal Valenzuela quietly confirmed that Gen‑4.5 will generate audio natively, replying "there is" when asked if the model includes audio synthesis after creators noticed talking characters in demo clips. (audio speculation, founder reply)

For filmmakers and editors, this means you’ll likely be able to get synchronized dialogue or ambient sound straight out of Gen‑4.5 instead of compositing everything with separate TTS or sound‑design passes, tightening previs and social‑first workflows where speed matters more than perfect mix quality. The confirmation also explains why some early Gen‑4.5 reels feel eerily complete, and raises new questions about lip‑sync accuracy, language control, and whether you’ll be able to swap or mute the AI track cleanly when you move into final grade and mix.

Gen‑4.5 starts appearing in Runway UI as "coming soon" with new realism reels

Gen‑4.5 is now visible in Runway’s video‑model dropdown as a "coming soon" option, with some users reporting that only internal accounts appear to have it enabled so far. (model dropdown, employee access comment)

Runway is pushing fresh reels that lean into cinematic realism, weighty motion, and strong prompt adherence—like drawings morphing into fully lit scenes and shots that hold consistent camera language—while its research page reiterates a 1,247 Elo score and physics‑accurate dynamics on the Artificial Analysis T2V benchmark. (realism announcement, research blog) Creators are hyped but impatient, sharing jokey sightings of "GEN‑4.5" on stadium scoreboards and predicting "prompt adherence is going to be incredible" once the toggle actually lights up in regular workspaces. (scoreboard tease, creator expectations)

🎥 Kling O1 creator playbook: swaps, edits, and a contest

Continuing after yesterday’s launch coverage, today focuses on how‑tos and community action: character/wardrobe/location swaps, multi‑reference control, and a two‑week Creative Contest with cash/credits. Excludes Runway Gen‑4.5.

Kling O1 Creative Contest offers up to $1,000 and large credit bundles

Kling officially opened its Kling O1 Creative Contest, giving filmmakers and motion designers two weeks to submit O1‑powered videos for cash prizes up to $1,000 per piece plus hefty credit packs. Submissions run Dec 1–14 (PT) with winners announced Dec 25, and entries must carry the #KlingO1 and #KlingO1CreativeContest hashtags, be uploaded to X/TikTok/IG/YouTube, and then registered via Kling’s event page and Google Form. Contest overview

The prize ladder is tuned to pull in both serious and emerging creators: 5× first prizes at 16,000 credits, 10× second at 3,500 credits, 20× third at 1,320 credits, and 30 honourable mentions at 660 credits alongside the headline $1k cash awards. Contest overview Top work can also be featured on Kling’s homepage and official channels, which matters if you’re trying to build a reel or attract clients. The net effect is clear: if you’re already experimenting with O1 for short films, VFX, or edits, there’s now a structured reason to polish a piece and ship it in the next ten days rather than “someday.”

Early tests show where Kling O1 edit shines and where it breaks

Creators are now probing Kling O1’s weak spots, adding nuance to the enthusiastic edit‑workflow coverage from launch day editing workflows. Blizaine’s quick test swapping a robot with a man in a dancing clip shows the core character replacement working from a single reference image, but with subtle uncanny edges that suggest more context images would help. Robot replacement test

WordTrafficker tries a more stylized request—turning a samurai clip background into a bloody Japanese interior—and reports O1 “got it half right,” successfully changing the setting but muddling some details and edges. Samurai background test In a separate stress test, Eugenio Fierro feeds O1 four high‑quality stills of a Jeep Compass and asks for a commercial‑style desert chase with a moving camera: the body mostly holds up, but wheels often warp and some parts flicker in and out across frames, underlining that rigid mechanical objects remain harder than humans and soft environments. Car stress test Combined with multi‑step demos from @manaimovie, the emerging rule of thumb is: O1 is already strong for people, wardrobe, and scene vibes, but complex geometry, gore‑heavy details, and precise product shots still need extra references or compositing help.

Freepik workflow shows how to get cleaner Kling O1 face swaps

On Freepik’s Kling O1 integration, Techhalla shared a concrete recipe for reliable character swaps instead of half‑morphed faces. His tests found that using only a full‑body reference image caused O1 to keep blending back toward the original dancer’s face, but adding a second, close‑up face reference plus explicit text like “he’s wearing gold‑rimmed glasses” locked in identity and styling much more consistently. Freepik workflow

The thread treats Kling O1 as a true multimodal system: you feed it references for structure and style, then use text prompts to clarify small details (accessories, hair shape, expression) or override what’s in the source clip. Prompt and settings example Running on Freepik’s Spaces/AI video tools, his workflow pairs Nano Banana Pro for still character design with Kling O1 for animation, giving storytellers a repeatable pattern: design once in NB Pro, then reuse that character across memes, show intros, or narrative shorts without losing likeness between generations.

Japanese creator maps Kling O1 character, outfit, and location swap workflows

Japanese creator @seiiiiiiiiiiru dropped a mini‑curriculum of Kling O1 Edit demos, walking through practical workflows that many non‑Japanese speakers are already copying frame by frame. They show clean character replacement in existing footage ("人物変更"), then move on to adding and removing props and effects directly from prompts rather than manual masking. Character swap tip

Further clips explain location and background transforms ("場所の変換"), where entire environments or seasons are swapped while motion stays intact, plus an outfit conversion mode that uses up to four reference images to re‑dress a subject across a clip. Location swap explainer Another thread details O1’s Elements system: you group up to four images into a tagged element and then reference those tags in prompts to keep characters, props, or locations on‑model across shots, with the caveat that complex 2D/anime characters remain less stable. Elements feature breakdown A free Japanese Note is on the way summarizing these patterns, but even the raw videos already function as a very usable edit playbook if you’re trying to decide when to lean on tags, when to swap outfits, and when to change the whole scene.

Leonardo and others lean on Kling O1 multi‑reference controls for consistency

Following up on the initial multi‑platform rollout of Kling O1 across Freepik, Leonardo and more platform rollout, creators are now showing how those hosts expose O1’s multi‑reference controls for on‑model characters. In Leonardo, MayorKingAI demonstrates using five different reference images (poses, angles, outfits) to train a composite character that stays consistent as the camera moves, effectively turning O1 into a lightweight virtual production rig for hero shots. Leonardo 5 reference demo Another Leonardo user, @manaimovie, chains multiple O1 edit passes: first swapping costumes, then adding glowing clothing effects, then replacing backgrounds and even the main actor while borrowing motion from a reference clip. Leonardo edit rundown Together these posts move the story beyond “Kling is available here” into concrete patterns: load 3–5 reference stills per character, use platform‑specific UIs to tag them, and separate big edits (outfit, background, performer) into dedicated runs to reduce artifacts.

Techhalla’s 11‑clip roundup showcases Kling O1’s range in its first 24 hours

To help people see what Kling O1 is actually good for, Techhalla pulled together 11 community examples that span from polished shorts to gritty edits, building on O1’s debut as a unified video engine unified engine. The thread opens with “Ashes of Eden,” a fully realized cinematic piece, then jumps to creator Trisha literally time‑traveling herself into archival footage, showing how O1 handles compositing real people into stylized scenes. Eleven examples list

Mid‑thread examples spotlight tram removal from live‑action footage, multiple camera angles synthesized from one source clip, and a “Chihuahuaize anything” gag that remaps subjects into tiny dogs while preserving motion and framing, illustrating O1’s omni‑reference design. Tram removal demo One slot is reserved for a character‑swap example and another for Techhalla’s own consistency+edit breakdown, making the thread a quick visual menu for filmmakers wondering “is this model ready for my use case?” The common pattern: O1 already excels at reimagining existing footage—swapping subjects, props, angles, or styles—more than generating long, plot‑heavy clips from scratch.

Officially boosted tutorial dives into Kling O1 as a "Nano-Banana" for video

Kling amplified Rourke Heath’s long‑form YouTube tutorial, branding Kling O1 the “Nano‑Banana of AI video” and giving creators a more structured on‑ramp than scattered tweet tips. The video promises to walk through core modes—text‑to‑video, reference‑driven generation, and edit workflows—using real projects rather than abstract marketing clips. Tutorial link For filmmakers and motion designers who prefer seeing a full timeline from prompt to export, this kind of guided session fills in gaps left by short social posts: how to pick and prep reference images, when to break a job into multiple O1 passes, and how to combine O1 outputs with traditional editing in tools like Premiere or Resolve. It also reinforces that Kling is leaning into education early, not just hype, which usually correlates with fewer gotchas once you start building client work around a new model.

🖼️ Image craft: NB Pro JSON briefs, Hedra builders, and style refs

Mostly stills and prompt systems: detailed Nano Banana Pro JSON specs in Gemini, Hedra’s Prompt Builder and new one‑tap Templates, Midjourney V7 srefs, plus a reusable “thermal scan” prompt. Video‑only items excluded.

Hedra Prompt Builder turns menus into full scene prompts

Hedra rolled out Prompt Builder, a UI that lets you pick subject, style, camera and location from dropdowns and then auto‑generates a full, detailed scene prompt, with 1,000 credits for the first 500 followers who reply for a code Launch details.

For image and video creators, this is effectively an on‑rails prompt engineer: you can standardize looks across a project, avoid blank‑page syndrome, and hand off prompt construction to non‑technical collaborators while still getting rich, structured directions out of it.

Midjourney V7 sref 718499020 delivers cohesive neon cyberpunk looks

Azed_ai published Midjourney V7 style reference --sref 718499020 (with --chaos 22 --ar 3:4 --sw 500 --stylize 500), producing a tight set of neon‑drenched cyberpunk portraits, robots, bikes, and city scenes with consistent magenta‑purple lighting and bokeh Style ref.

For illustrators and concept artists this sref works as a ready‑made cyberpunk pack: drop it into new prompts to inherit the same lighting, color palette, and texture treatment while swapping characters, props, or locales.

Three‑step Nano Banana trick turns cartoons into believable photoreal

Eugenio Fierro outlines a simple three‑step Nano Banana Pro workflow in FloraFauna AI: upload a 2D cartoon, first ask the model to "turn this into a flat sketch on white paper," then take that sketch and prompt "now turn it into a hyper‑realistic real‑life image" Workflow description.

By inserting the sketch as an intermediate, the model normalizes proportions before adding realism, which helps avoid the distorted anatomy you get when converting stylized anime or chibi characters directly into photoreal humans.

Weavy + Nano Banana Pro workflow auto‑builds 9‑shot character contact sheets

Ror_Fly shared a Weavy workflow that takes one input image of a character and, via a system prompt plus batch Nano Banana Pro calls, generates nine varied shots (angles, depths, compositions), then composites them into a 3×3 contact sheet and optionally upscales the result Workflow explanation.

The setup uses an LLM to draft nine distinct shot prompts, routes them into NB Pro nodes, and feeds everything through a compositor, making it a reusable way to get reference sheets or storyboards from a single hero image Node graph screenshot.

Children’s book sref 2621360780 nails sketchy pastel storybook art

Artedeingenio shared Midjourney style ref --sref 2621360780 as a go‑to for children’s storybook visuals, giving loose, sketchy linework, pastel palettes, and expressive cartoon characters like tiny vampires, warrior princesses, and crowned kids Storybook style ref.

If you’re prototyping picture books or kid‑friendly explainer art, this sref offers a consistent, soft illustration language you can reuse across characters and scenes.

ComfyUI pipeline pairs Z‑Image Turbo with Wan 2.2 for cinematic shots

A new ComfyUI tutorial walks through using Z‑Image Turbo for high‑quality stills and then Wan 2.2 to turn those into cinematic shots, giving creators controllable, filmic imagery with strong consistency between frames Workflow thread.

For visual storytellers this pipeline means you can treat Z‑Image as your concept art engine, then feed the results into Wan for shot exploration, using node graphs rather than ad‑hoc prompts (see ComfyUI guide).

Hedra adds 20 one‑tap Templates for selfie remixes

Hedra also shipped 20 new Templates that turn a single photo into looks like wizard, pop star, action figure, 80s icon, or "luxury king" in one tap, with another 1,000‑credit promo for the first 500 replies Templates promo.

For designers and social creators this is a fast way to prototype character looks or social posts without writing prompts at all—Template choice becomes the main creative control, which you can then layer with more precise tools elsewhere.

Midjourney V7 sref 4740252352 creates painterly motion‑blur aesthetics

Another new Midjourney style ref, --sref 4740252352, yields abstract, motion‑blurred imagery: rainbow dunes, samurai armor, soft‑focus portraits in rainbow sweaters, and big floral bokeh, all with strong vertical streaking and lens‑flare‑like artifacts New style ref.

It’s a good shortcut when you want photos or character art that feel like long‑exposure, dreamy fashion or music photography without hand‑tuning motion or blur parameters on each prompt.

Neo‑retro femme fatale anime style ref 196502936

A separate Midjourney V7 style reference, --sref 196502936, leans into neo‑retro anime with femme fatale vibes—sharp faces, bold jewelry, guns toward camera, and saturated flat backgrounds that feel closer to Niji than default MJ Anime style ref.

This is a useful anchor if you’re building a consistent cast in a comic or motion storyboard and want a stylish, 80s/90s anime‑inspired look without wrangling painterly parameters every time.

Simfluencer agent uses Nano Banana Pro and JSON prompts for cheap UGC tests

Glif’s new "Simfluencer" Product UGC Agent uses Nano Banana Pro plus JSON‑style prompting to generate creator‑style photos from a brand’s product images, so marketers can test different UGC aesthetics without hiring real influencers Agent overview.

Because the agent controls pose, framing, and style via structured fields, it can quickly iterate through many looks and creators, letting you shortlist visual directions before committing budget to real shoots.

🎛️ Multi‑shot and API video: PixVerse, Vidu Q2, Hailuo

Non‑Runway video tooling momentum: PixVerse V5.5 lands on fal and runware with native audio and multi‑shot, Vidu Q2 image endpoints for high‑consistency stills, and Hailuo’s template pack refresh. Excludes Runway and Kling O1 news.

PixVerse V5.5 hits fal and Runware with full multi-shot video API

PixVerse V5.5 is now exposed as a developer-facing API on fal, Runware, and PixVerse’s own platform, taking the one-prompt multi-shot, audio-aware model from UI-only into programmable workflows, following up on PixVerse V5.5 which first introduced multi-shot with native sound. fal has split V5.5 into dedicated Text-to-Video, Image-to-Video, Effects, and Transition endpoints with transparent pricing (for example, a 5s clip ranges from about $0.15 at 360–540p to $0.40 at 1080p, with extra for audio and multi-clip) so teams can budget per mode rather than per "mystery" credit. (fal launch, text to video) Runware added PixVerse V5.5 on day zero, advertising support for text-to-video, image-to-video, first/last frame control and effects, plus the ability to add music, sound effects and dialogue in one pipeline, which is a big deal if you’re already wiring models through their API catalog. (runware summary, runware models) PixVerse itself is also steering developers to its own API platform for full-stack creation, so if you’re building story-generators, ad engines or UGC tools you can now choose between fal’s granular per-resolution billing, Runware’s big model marketplace, or PixVerse’s native stack depending on where the rest of your infra lives. PixVerse platform

fal adds Vidu Q2 Image model for film-grade storyboards and edits

fal has onboarded the Vidu Q2 Image Generation model as a first-class endpoint, giving creators fast text-to-image, reference-to-image, and image-edit flows with what both teams call "film-grade" texture and strong multi-reference consistency, extending the launch we saw in Vidu Q2 image where the model debuted with unlimited stills and fast generation. fal announcement The sample set fal shows spans moody landscapes, classrooms, underwater wildlife, and retro interiors, signaling that Q2 is tuned more for cinematic concept art and storyboards than for ultra-stylized social memes.

For filmmakers and designers, this matters less as a standalone art toy and more as a way to standardize stills generation (characters, sets, props) before handing shots into video models like Vidu’s own Q2 video or third-party tools; because it supports editing and multi-image reference, you can keep a character or room on-model across dozens of keyframes without bouncing between vendors or wrestling with SD checkpoints. Vidu use case model landing page

Hailuo refreshes Agent Templates with JOJO stand, mech and ASMR-style packs

Hailuo AI rolled out a fresh batch of Agent Templates — including "JOJO stand", "Mech Assault", and "Street Head Massage" — under the tagline "one template, infinite possibilities", giving creators pre-baked setups that bundle character style, motion logic and camera treatment into reusable video blueprints. template update

In the demo you see users swipe through templates on mobile, then instantly spin up stylized battle scenes, over-the-top anime poses, or chill massage POV clips from a single tap, which lowers the barrier for non-technical creators who want themed content without prompt engineering every shot. template update For AI filmmakers and short-form storytellers, these packs act like genre presets: drop in your own character or product, tweak a few parameters, and you’ve got a coherent mini-sequence that already matches a specific aesthetic, with deeper customization available on the main Hailuo site if you want to push beyond the defaults. Hailuo template page

🤖 Production agents for repetitive creative ops

Agents aimed at boring but vital workflows: Amazon’s Nova Act for browser tasks with RL ‘gyms’, Comet’s prompt‑injection protections, plus a daily partner deals calendar for agent builders.

Amazon unveils Nova Act, a browser agent platform for “normcore” workflows

Amazon introduced Nova Act, an AWS-hosted agent platform focused on boring but business‑critical browser tasks like multi‑page form filling, staging‑env QA, shopping/booking flows, and large‑scale search extraction, branding them “NormcoreAgents.” Nova Act overview

Agents are trained in safe RL “gym” environments so they learn cause‑and‑effect rather than brittle click replay, which should make them more robust when layouts change. Nova Act overview The dev flow is structured: prototype in a web playground, then develop locally via an SDK/CLI and IDE extension (VS Code, Cursor), then deploy to production on AWS with IAM, CloudWatch logging, and a Bedrock AgentCore runtime. developer details For creatives and design/video teams drowning in repetitive web tooling—uploading assets to CMSes, running manual regression checks on sites, back‑office booking flows—this is one of the first serious attempts to turn those chores into production‑grade agents rather than one‑off scripts.

Perplexity’s Comet browser pre-screens HTML for prompt injection with Qwen3-30B

Perplexity’s Comet browser is leaning hard into safety by scanning raw page HTML with a fine‑tuned Qwen3 30B model to detect prompt injection attempts before a user even triggers an assistant request, using their new BrowseSafe Bench as a yardstick. Comet safety claim Instead of letting arbitrary <!-- system prompt: … --> tricks reach your main model, Comet runs this pre‑filter to flag or strip malicious instructions, which matters a lot once you start letting autonomous research, sourcing, or data‑gathering agents roam the web for you. For AI creatives and production teams experimenting with browser‑based research or content‑sourcing agents, it’s a reminder that you want this sort of guardrail in front of any tool that has account access or can touch internal dashboards.

ElevenLabs starts “25 Days of Agents” deals calendar for agent builders

ElevenLabs kicked off “25 Days of Agents,” an advent‑style promo where each day until December 25 unlocks a new deal from infra and tooling partners like Railway, Cloudflare, Convex, Vercel, n8n, Hugging Face, Lovable, and others, aimed squarely at people building AI agents. agents advent launch

Rewards range from millions of credits and lifetime subscriptions to 1:1 sessions with industry leaders and weekly trips to Upscale Conf San Francisco, with entries earned by posting creations tagged #FreepikAIAdvent and submitting them through an official form. agents advent launch For creatives who are starting to stitch together voice, retrieval, and automation agents into real production pipelines, this lowers the cost of trying multiple stacks—compute, vector stores, orchestration, and browser tools—without a big upfront spend.

Simular 1.0 promises record-and-repeat AI for desktop creative workflows

Azed_ai highlighted Simular 1.0 as an "AI that works on your desktop, not another demo," pitched as something you can teach once and have it repeat your workflow perfectly. Simular 1.0 teaser Details are sparse, but the positioning is clear: instead of cloud‑only agents confined to a browser, Simular aims to sit on your machine and automate the actual apps you use all day. For editors, designers, and social teams, that could mean offloading rote sequences like batch exporting, format conversions, asset renaming, or template‑driven layout tweaks without wiring up full RPA or scripting. It’s early marketing rather than a spec sheet, so teams should expect some rough edges, but it’s a signal that "agents for boring creative ops" are moving from web forms into the desktop tools where people really work.

Glif’s Simfluencer agent auto-generates UGC-style product content with Nano Banana Pro

Glif introduced a “Simfluencer: Product UGC Agent” that uses Google’s Nano Banana Pro image model plus structured JSON prompting to turn plain product photos into varied, influencer‑style UGC content. simfluencer overview The agent takes in brand imagery and then tests different creator aesthetics and framing automatically, which is useful for marketers who want TikTok/Instagram‑ready visuals without hiring multiple creators or running dozens of manual prompt experiments. It’s framed as a low‑cost way to prototype UGC styles and iterate on what might convert before commissioning real shoots, giving small creative teams a sandboxed “fake creator” to explore angles, crops, and moods for each product. workflow link

📚 Papers to bookmark: spatial video, few‑step diffusion, unified reps

A dense slate of gen‑media research: visuospatial video generation, long‑video reasoning, music‑driven video, fast samplers, and unified multimodal encoders. Practical for pipeline R&D.

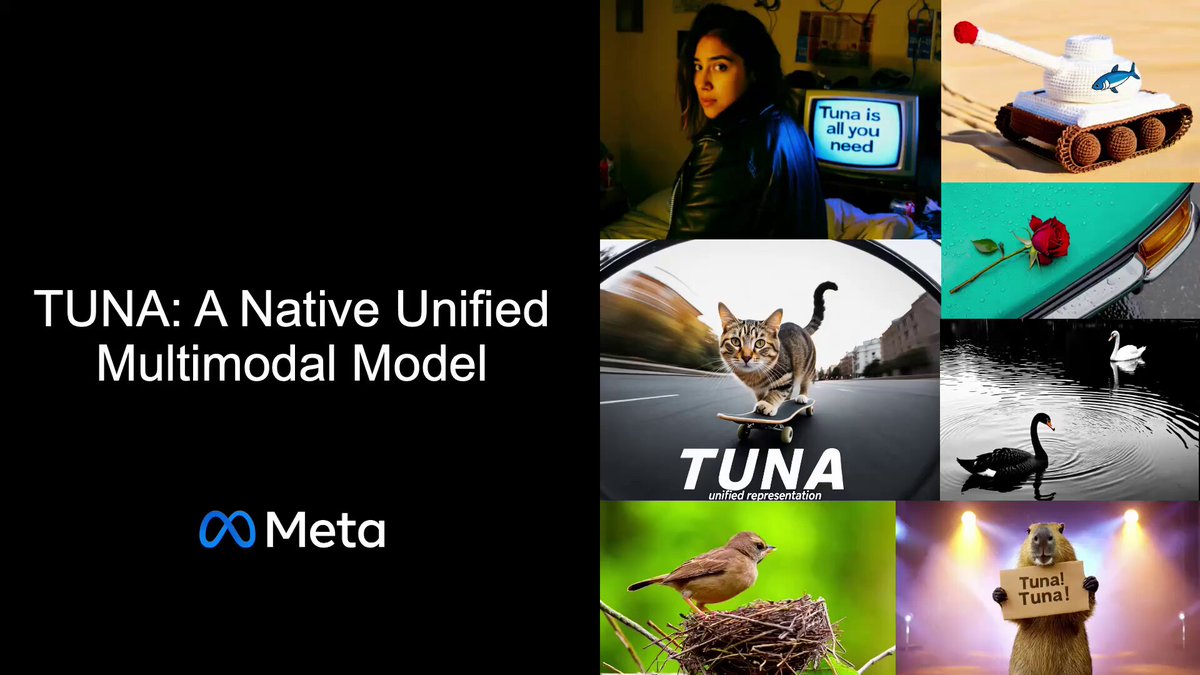

Meta’s TUNA targets unified visual reps for multimodal models

Meta’s TUNA paper proposes a single visual representation that natively serves both image and video tasks inside unified multimodal models, instead of juggling separate encoders and adapters. That’s directly relevant if you want one backbone that can do captioning, grounding, editing, and VQA across frames without custom hacks. TUNA paper mention

For creative pipelines, a strong unified visual tower could mean more consistent behavior when you mix stills, keyframes, and full shots (same notion of “objects” and “space” everywhere), simpler fine‑tuning for house styles, and fewer weird failure modes when models jump between frame and clip understanding.

Flash-DMD promises high-fidelity, few-step diffusion with distillation+RL

Flash‑DMD tackles the “fast but good” problem in diffusion by distilling long denoise schedules down to a handful of steps, then using joint reinforcement learning to keep quality from collapsing. Flash-DMD overview If it holds up, this kind of sampler lets you get near‑full‑quality stills in 1–4 steps, which matters a lot for interactive workflows: live art direction, paint‑over iterations in tools like ComfyUI, or real‑time style tries on lower‑end GPUs and laptops.

LongVT trains MLLMs to “look back” over long videos with tool calls

LongVT proposes a way to make multimodal LLMs actually think over long videos by having them “actively look back” using native tool calls rather than passively reading one long sequence. LongVT thread

For filmmakers and editors building assistants, this is the kind of technique you want behind “summarize this 8‑minute cut,” “find continuity errors,” or “flag off‑brand shots”—it’s a path toward agents that track story beats, objects, and characters across scenes instead of forgetting what happened 30 seconds ago.

Lotus-2 links geometric dense prediction with generative DiT backbones

Lotus‑2 digs into DiT‑based rectified‑flow and shows how to turn a powerful image generator into a geometric dense prediction workhorse (depth, normals, masks) without giving up visual quality. Lotus-2 paper thread For designers and VFX teams this is the kind of backbone that can both render rich frames and output precise geometry cues for relighting, compositing, and 3D proxies—so a single model might feed both your concept art and your technical passes.

Video4Spatial pushes video generation toward explicit 3D spatial reasoning

Video4Spatial argues that real visuospatial intelligence in video models needs explicit spatial context, and presents a context‑guided generation pipeline that reasons about 3D layout rather than only pixels over time. Video4Spatial announcement

For anyone pushing cinematic moves or complex blocking with AI, this line of work is key: it’s how you get consistent rooms, believable camera orbits around subjects, and shots where objects don’t teleport or violate basic 3D structure when you cut.

Apple’s CLaRa-7B-Instruct brings ultra-compressed RAG for QA workflows

Apple quietly shipped CLaRa‑7B‑Instruct, an instruction‑tuned RAG model that operates on continuous latent document embeddings compressed by 16× to 128× while still answering QA prompts well. Hugging Face model card For creatives, this is less about chatbots and more about cheap, local “research brains” over your own assets—screenplays, design docs, brand bibles, cue sheets—where you care about fast retrieval and tight hardware budgets more than flashy model size.

Stabilizing Reinforcement Learning with LLMs targets saner agent training

“Stabilizing Reinforcement Learning with LLMs” lays out a framework and practices for using language models inside RL loops without the whole setup exploding, which has been a recurring pain point in agent research.

For creative agents that operate UIs, call tools, or drive multi‑step workflows in editors, this kind of recipe matters: it’s the difference between brittle demos that overfit a few tasks and training setups where an assistant reliably learns to, say, assemble B‑roll sequences or tag asset libraries over thousands of runs.

VLASH demos future-state-aware, real-time vision–language–action agents

VLASH introduces a “future‑state‑aware” asynchronous inference scheme so vision–language–action agents can run in real time while still planning ahead, rather than pausing the world every frame to think. VLASH demo clip

That’s more interesting to interactive creatives than it sounds: real‑time but slightly foresighted agents are what you’d plug into live puppeteering rigs, camera bots in virtual stages, or tools that watch your timeline and pre‑suggest trims before you ask.

MusicSwarm uses multi-agent “pheromone” swarms for richer AI compositions

MusicSwarm treats composition as a swarm of identical frozen AI agents that exchange ant‑like “pheromone” signals while writing, leading to outputs with more structure and novelty than a single model run.

For producers and sound designers, it’s a glimpse of alternative architectures to the one‑big‑model norm: instead of prompting one LLM‑ish composer over and over, you can coordinate a whole band of small ones that negotiate motifs, dynamics, and sections in a shared space.

YingVideo-MV explores music-driven, multi-stage video generation

YingVideo‑MV is a research system that generates video in several stages conditioned on music, so the visuals follow rhythm, structure, and mood instead of treating the soundtrack as an afterthought.

For music video directors and motion designers, this points toward workflows where you hand the model a track and a loose concept, then get back cuts whose pacing, camera moves, and scene changes already align with verse/chorus/bridge instead of random B‑roll.

🎁 Promos, credits, and contests to grab now

Heavy availability boosters for makers: Freepik’s 24‑day Advent prize pool, FLUX.2 unlimited week, invideo’s 7‑day O1 access, and Hedra credit drops. Excludes Runway news.

Freepik launches 24‑day AI Advent with 1M credits and trip prizes

Freepik kicked off its 24‑day #FreepikAIAdvent campaign, putting 1,000,000 Freepik credits on the table for Day 1 alone (10 winners at 100k credits each) and promising new AI‑creation prizes every day through Christmas, plus Friday trips to Upscale Conf San Francisco advent overview day one details.

To enter, creators need to make something with Freepik’s AI tools, post it on X the same day, tag @Freepik, and include #FreepikAIAdvent, with each valid post counting as one daily entry; weekly and final prizes require also submitting posts via the official form and respecting anti‑spam rules (entry form, terms page ).

LTX Studio makes FLUX.2 Pro and Flex free and unlimited for six days

LTX Studio turned FLUX.2 Pro and Flex generations to $0 with no caps for everyone on Standard and above plans, running this unlimited window until December 8 flux unlimited update. Following ltx sale that halved annual plan prices, this gives video teams a short sprint to test FLUX.2 at full blast without touching their credit balance.

The offer applies to both the higher‑quality Pro and the faster Flex variants, and is pitched as “No caps. No catches. Just create,” which makes it a good week for filmmakers and designers to prototype FLUX‑based storyboards and look‑dev before normal metering resumes unlimited reminder.

ElevenLabs starts 25 Days of Agents deals with top infra partners

ElevenLabs kicked off 25 Days of Agents, an advent‑style campaign where every day until December 25 they unlock a new exclusive deal from AI tooling partners like Railway, Cloudflare, Convex, Vercel, n8n, Hugging Face, Lovable and others, all geared toward people building AI agents agents advent.

The promo bundles limited‑time credits, discounts, and perks across infra, orchestration, and model platforms, and participants sign up once on ElevenLabs’ side to access the daily unlocks, making this a useful December grab‑bag if you’re standing up multi‑service agent stacks and want to test several vendors under lighter cost pressure access page.

invideo offers seven days of free, unlimited Kling O1 video generation

Video toolmaker invideo is offering Kling O1 free and unlimited for seven days, marketed as giving creators a “full VFX studio” built on the new unified video model vfx studio launch. This means a week where you can key green screen, add or remove objects, and run cinematic shots on Kling O1 without worrying about credits, which is ideal for testing how far its editing and compositing tools can stretch a real client‑style brief free week callout.

Hedra hands out 1,000 credits to early users of Prompt Builder and Templates

Hedra is dangling 1,000 credits to the first 500 followers for two separate launches—its new Prompt Builder and a pack of 20 one‑tap Templates—if you reply with the right phrase so they can DM you a code prompt builder launch templates promo.

Prompt Builder lets you pick subject, style, camera, and location, then auto‑builds a full scene prompt, while the Templates turn a single photo into looks like wizard, pop star, or “living painting,” so these early‑bird credits are a low‑friction way for creators to try Hedra’s look‑swapping workflows at some scale before deciding if it belongs in their stack templates page.

Tencent’s HunyuanVideo 1.5 contest on Tensor offers prizes for best clips

Tencent’s Hunyuan team is inviting creators to try the open‑source HunyuanVideo 1.5 model on MosaicML’s Tensor platform and submit their work for a chance to win prizes, turning model testing into a mini festival for AI video makers hunyuan tensor contest. Creators can spin up generations in the browser or via API, then send in their most polished pieces to compete, which is a handy excuse to explore an open T2V model that targets strong 720p motion quality without paying for separate compute up front contest page.

Vidu Q2 offers 40% off plus unlimited images for members until Dec 31

Vidu is reminding creators that its Black Friday 40% off deal—described as the biggest of the year—ends in 48 hours, on top of a separate promo where members get unlimited Q2 image generation until December 31 and new users can claim bonus credits via the code VIDUQ2RTI q2 model promo discount reminder.

If you’ve been eyeing Q2’s fast text‑to‑image and reference‑to‑image tools, this is a stacked entry point: discounted membership pricing, a month of uncapped generations, and extra starter credits that lower the cost of putting Q2 through real production‑style workloads before normal limits return.

PolloAI adds weekly lucky draws and discounts around Kling O1 launch

On top of its Kling O1 integration, PolloAI is running December “Funny Weekly Lucky Draws”, with the first randomly picked creator getting a 1‑month Lite membership plus 300 credits, and broader promos like 50% off credits and Pro memberships with 1,000‑credit bonuses for those who follow, retweet, and reply with the right keyword weekly lucky draw.

These incentives ride alongside Kling’s own official Creative Contest kling contest rules, so if you’re already generating O1 videos it’s low extra effort to route some outputs through PolloAI, potentially stacking membership perks and credits while you experiment.

(Duplicate placeholder)

(This entry should not appear; please ignore.)

(Duplicate placeholder)

(This entry should not appear; please ignore.)

📣 AI influencer stack and faster VO pacing

Monetization and reach helpers: Apob’s consistent‑character UGC agent, Pictory’s ElevenLabs speed control for VO timing, a Producer AI magazine issue and community audio challenge, plus a Kling/Z‑Image livestream.

Apob pitches always-on AI influencers with consistent faces and UGC video

Apob is leaning hard into the “AI influencer agency” pitch: train a consistent digital character, auto-generate photos and ReVideo clips, and monetize through brand deals and digital products without filming yourself. Their latest examples show a creator training a digital twin, swapping their face into trending clips, and claiming they doubled sponsorship income while “not filming a single new frame” Digital twin case.

For AI creators and social marketers, the hook is consistency and scale: Apob says it has solved the usual face-drift problem (“The hardest part of AI influencers used to be keeping the face consistent. Apob solved it.” Consistency pitch), and pairs that with a ReVideo feature that turns static images into short animated scenes for faster content loops ReVideo explainer. This stack targets people who want to spin up or extend a persona—without cameras, studios, or manual editing—using AI-native models as their core "talent roster" instead of human creators.

Glif’s Simfluencer agent uses Nano Banana Pro to auto-generate UGC ads

Glif is pitching a "Simfluencer" Product UGC Agent that takes plain product photos, runs them through Nano Banana Pro, and outputs multiple creator-style shots and captions for testing UGC angles without hiring real influencers Simfluencer overview. The system leans on structured JSON prompting to keep styles, framing, and scenarios consistent while varying creator personas and scenes, so brands can A/B different UGC looks at low cost Workflow link.

For performance marketers and DTC founders, this effectively turns Nano Banana Pro into a synthetic creator lab: you feed it SKUs and preferred aesthetics, then let the agent churn out UGC‑like assets you can trial in ads or organic feeds before committing real budget to human‑made shoots.

Pictory adds ElevenLabs voiceover speed control for tighter edit timing

Pictory has shipped speed control for ElevenLabs voiceovers, letting you adjust narration tempo scene by scene so VO better matches visual pacing inside the editor Speed feature demo.

In the clip, a simple slider changes the ElevenLabs track from 1.0× to 1.5×, which is immediately reflected in the preview timeline, cutting down on the old export–reimport shuffle between separate audio and video tools. For solo creators and small teams, this means you can keep Pictory as the hub for script → edit → VO, then tweak timing in context instead of bouncing back to a DAW—especially handy for short-form content and ad-style videos where every half-second matters. If you’re considering going deeper, their parallel promo reminds that 50% off annual plans plus 2,400 AI credits are still being pushed as a last‑call offer Discount reminder, see detailed tiers in their pricing page pricing page.

Producer AI artists featured in AI Planet Magazine’s music issue

AI Planet Magazine’s new Music Issue spotlights several Producer AI artists, framing the tool as the “core engine behind the artists’ musical identity” across editorial spreads and cover art Magazine feature.

Following up on Producer’s recent audio‑effects challenge with a 1,000 credit prize pool audio challenge, this gives musicians and sound designers a concrete example of how AI‑driven workflows are now being treated as front‑page material rather than back‑office tooling. The layouts show full artist stories, track branding, and visual identities built around AI‑assisted production, which is a useful reference if you’re trying to package your own AI-native music for press, sync pitches, or fan communities.

Glif’s "AI Slop Review" livestream will stress-test Kling O1 and Z-Image

Glif announced The AI Slop Review, a livestream where the crew will “play with Kling O1 & Z-Image like only we can” and invite viewers into a very hands-on, chaotic test session of the new video and image models Livestream teaser.

The show is scheduled for tomorrow at 1pm PST / 10pm CET, with a YouTube link already live so you can set a reminder or catch the replay later Livestream link livestream page. For AI filmmakers and meme‑driven creators, it’s a chance to see real workflows—subject swaps, edits, failures, and fixes—instead of polished promos, and to learn how Kling O1 and Z‑Image actually behave when pushed into social‑content territory.

🧮 Compute economics watch: TPUv7 vs Blackwell

One infra thread with direct downstream impact on creator tools: SemiAnalysis argues TPUv7 matches Blackwell FLOPs/memory but delivers 44% lower TCO via 3D‑torus clusters up to 9,216 chips.

SemiAnalysis says Google TPUv7 undercuts NVIDIA Blackwell TCO by 44%

SemiAnalysis argues that Google’s TPUv7 now matches NVIDIA’s Blackwell on peak FLOPs and memory capacity, but delivers 44% lower total cost of ownership inside Google, mainly thanks to 3D‑torus clusters scaling to 9,216 chips and achieving about 40% model FLOPs utilization vs ~30% on NVIDIA. TPUv7 cost thread For AI creatives and tool builders, that means the infrastructure behind models like Gemini and Claude can get cheaper and more plentiful, which tends to show up downstream as more capable models and more aggressive pricing in the tools you use.

The same analysis notes Anthropic has committed to over 1 GW of TPUv7 capacity, split between buying hardware and renting it on GCP, signaling that at least one top‑tier model lab is shifting a huge share of training and inference off NVIDIA. TPUv7 cost thread SemiAnalysis estimates this yields roughly 52% better TCO per effective PFLOP than comparable GPU setups, and that moves like this have already forced NVIDIA to offer around 30% GPU discounts to keep giants like OpenAI onboard. TPUv7 cost thread Google is also selling TPUv7 to external customers and pushing PyTorch support, a direct shot at the CUDA software moat and a sign that more third‑party AI platforms—including creative tools—will quietly run on TPUs as well.

For people making images, video, and music with AI, the point is that compute cost is the hard floor under every model’s price and capability. If TPUv7 really is cheaper per useful FLOP and sees wide uptake, you should expect denser, more capable models and lower per‑generation costs over the next 12–24 months, plus a more competitive GPU/TPU market instead of everything bottlenecked on a single vendor.

🗣️ Hype fatigue and AI‑art defenses

The discourse itself is the news: creators push back on “game‑changer” hype and JSON‑prompt mystique, while AI artists rebut claims of unoriginality. Product updates are excluded here.

AI artist defends originality of AI-generated art against “copying” claims

An AI artist posted a long rebuttal to the idea that AI images are "not real art" because they reuse human-made data, arguing that all art historically builds on prior work and that "pristine originality doesn’t exist." AI originality rant Following up on insult discourse where creators mocked copy‑paste hate, this thread leans more philosophical, calling art "a copy of a copy" and claiming many AI pieces are more inventive than the "sloppy mashups" critics produce themselves.

For AI creatives, it’s a signal that the community is moving past defensive apologies into a more confident stance: if you’re doing distinctive work with tools like Midjourney or Nano Banana, you don’t have to pretend it sprang from a vacuum—because nothing in art ever has.

AI video creators air fatigue with overhyped “this changes cinema” launches

In a candid thread about recent Higgsfield and fal drops, one filmmaker admits he’s "part of the hype cycle" but says cryptic teasers made it sound like releases would "change cinema forever," while in practice they felt like "two cool releases" in an otherwise normal week. overhype reflection Another creator agrees the term "game changer" is overused, even as they argue Nano Banana Pro plus Kling O1 really does move the needle on visual consistency—before adding that the pace of new models makes the constant hype "exhausting lol." hype cycle reply For working filmmakers, the subtext is clear: expect solid incremental tools, but treat grandiose launch copy with skepticism and judge by what survives in your own pipeline.

Creators push back on JSON prompt hype around Nano Banana Pro

A prominent prompt artist called out the growing belief that wrapping prompts in JSON for Nano Banana Pro is some secret key to better images, saying the "JSON trend" has simply migrated from one model to another and is being treated like magic. json trend comment The point is: structure can help you think, but it’s not a substitute for understanding lighting, style refs, or composition, and over-focusing on the format risks cargo‑culting instead of learning actual craft.

Creators call out lazy AI model coverage that reuses others’ content

One AI creator publicly vents that it "would be great if content creators actually made their own content when talking about a new model," instead of recycling the same clips and examples. original content call It’s a small tweet but it taps a wider frustration: reviews and tutorials that chase views with second‑hand footage don’t help builders, and they blur the line between real expertise and surface‑level hype around tools like Kling O1 or Nano Banana Pro.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught