Nano Banana Pro gets coord‑to‑reality and Adobe unlimited – 14GB video joins


Executive Summary

Nano Banana Pro leveled up again this week in two directions that actually change how you work. Creators are showing it can turn nothing but latitude/longitude and a timestamp into historically coherent photos—Hindenburg, Trinity, Bastille Day, even the 1896 Olympics—so “40.03 N, 74.32 W, 1937‑05‑06 evening” is suddenly a full prompt, not trivia. At the same time, Adobe quietly dropped NB Pro into Photoshop, Firefly, and Firefly Boards as a selectable model with reportedly unlimited use for existing subscribers, so you can spam variations and extensions without watching a credit meter.

That Adobe move pairs nicely with more precise control elsewhere. Photoshop now surfaces Topaz tools in‑app, and Lovart’s Touch Edit—already in use by 100k+ creators—lets you tap a sleeve, logo, or headline and rewrite that region only, instead of re‑rolling the whole frame. The net effect: more time on composition and story, less on “one wrong detail, back to square one.”

On the motion side, Tencent’s HunyuanVideo 1.5 squeezes an 8.3B‑parameter T2V model into ~14 GB GPUs for 720p clips with built‑in 1080p upscaling, while ByteDance’s Vidi2 focuses on the opposite problem—understanding video well enough to find exact moments and subjects in 10–30 minute edits. As Kling teases an “Omni Launch Week,” it’s clear both generation and comprehension layers are racing ahead in parallel.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught


Feature Spotlight

Context-to-reality images from coords + time (NB Pro)

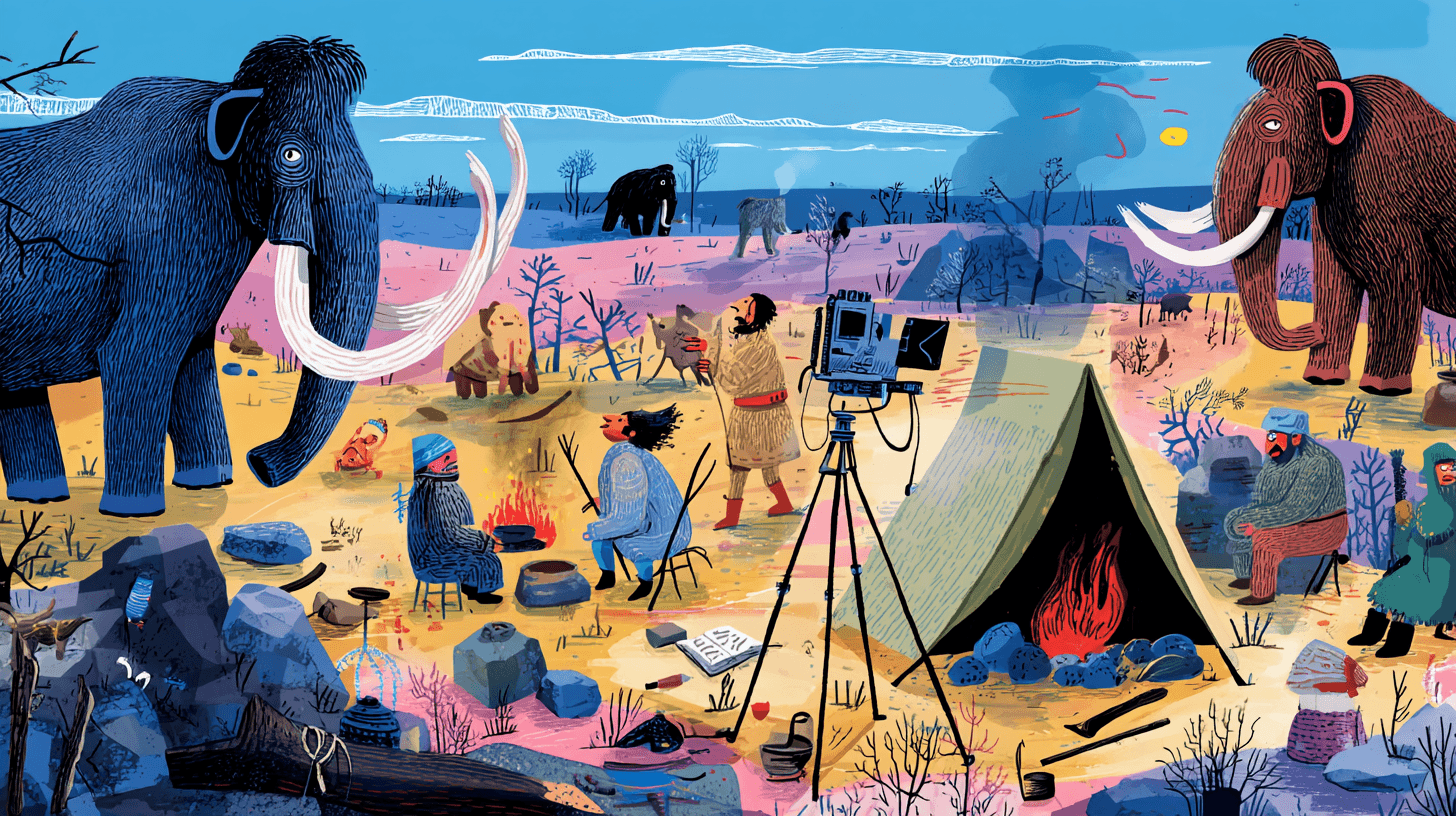

NB Pro can render historically correct scenes from just coordinates and a date/time—creatives get docu‑grade visuals without long prompts, unlocking fast concepting for films, games, and education.

Big creative thread today: multiple creators show Nano Banana Pro turning only latitude/longitude + timestamp into historically coherent, photoreal scenes (no long prompts). Heavy NB Pro demos; excludes video launches.


🗺️ Context-to-reality images from coords + time (NB Pro)


Nano Banana Pro turns coords+time into historically accurate photos

Creators are discovering that Nano Banana Pro can generate historically coherent, photoreal scenes from nothing but latitude/longitude and a timestamp, treating coordinates plus date as a full prompt. In one set of tests it recreates the Hindenburg disaster from 40.03035° N, 74.32575° W, May 6, 1937, ~7:25 p.m. EST, closely matching famous news photos (Hindenburg example). In another it renders the Trinity nuclear test from the Trinity Site coordinates and the precise 1945‑07‑16 05:29:45 detonation time as a dawn mushroom cloud over the desert (Trinity shot). A longer thread shows the model doing the same for Columbus’ Caribbean landfall, the first Moon step, the Storming of the Bastille, and the 1896 Athens Olympics based solely on coord+time strings, with era‑correct ships, clothing, flags, stadiums, and lighting (Historical thread).

For AI photographers, filmmakers, game artists, and educators this drastically cuts prompt overhead for docu‑style frames: you can type place+date, then focus your effort on camera angle, lens, and mood instead of manually listing every period detail. It also hints at deeper geo‑temporal reasoning inside NB Pro that you can lean on for visual continuity across sequences (for example, consistent uniforms across a whole battle, or matching exploration gear across different landings) while you handle story, pacing, and polish in your normal tools.
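If you want to batch these place+date prompts, a small helper can keep the string format consistent across a sequence. The sketch below is only an illustration: the function name and the exact layout of the string are assumptions, since the threads do not document a required format.

```python
from datetime import datetime


def coord_time_prompt(lat: float, lon: float, when: datetime) -> str:
    """Format signed decimal degrees plus a local timestamp as one prompt line.

    The layout is an assumption for illustration, not a documented NB Pro format.
    """
    ns = "N" if lat >= 0 else "S"
    ew = "E" if lon >= 0 else "W"
    return (
        f"{abs(lat):.5f}° {ns}, {abs(lon):.5f}° {ew}, "
        f"{when:%Y-%m-%d %H:%M} local time"
    )


# Hindenburg example from the text: 6 May 1937, about 7:25 p.m.
hindenburg = coord_time_prompt(40.03035, -74.32575, datetime(1937, 5, 6, 19, 25))
print(hindenburg)
```

Camera angle, lens, and mood notes can then be appended to the returned string by hand.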

🎬 Video models and maker workflows heating up

Busy day for T2V/I2V: Kling teases Omni launch week; lightweight local video model news; multi‑image story builds; leaderboard chatter; plus short Grok Imagine clips. Excludes NB Pro coord‑to‑reality feature.

Kling teases Omni launch week as creators brace for new video model

Kling AI started a full-on tease cycle for Kling Omni, promising “Omni Launch Week” and declaring that the “Omniverse begins,” with multiple creators expecting a major AI video reveal in the coming days (Omni launch copy). The promo art and follow‑up posts confirm the launch is scheduled for next week, with community comments like “Kling coming out swinging” underscoring how seriously video makers are taking this one (Omni date tease, creator reaction).

For filmmakers and motion designers, the signal is simple: if you’re in the middle of pipeline decisions for 2026 projects, you probably want to hold off locking your default T2V stack for another week to see what Omni actually is. Kling 2.5 Turbo is already competitive on quality and speed; Omni sounds like a unification or step‑change model rather than a minor tweak, and the fact that Kling is branding a whole “launch week” suggests multiple capabilities (likely higher resolution, longer clips, or tighter character consistency) will roll out together.

Tencent’s HunyuanVideo 1.5 brings 720p T2V to ~14 GB consumer GPUs

Tencent released HunyuanVideo 1.5, a roughly 8.3B‑parameter video model designed to run on consumer‑grade GPUs with around 14 GB of VRAM, targeting 720p output with built‑in 1080p super‑resolution (Hunyuan summary). It supports both text‑to‑video and image‑to‑video in one framework and leans heavily on a compact DiT backbone, a 3D VAE, and a selective sliding‑tile attention scheme to keep memory use and latency down.

For indie filmmakers and animators, the key shift is that you no longer need data‑center access to experiment seriously with generative video. HunyuanVideo 1.5 can generate ~10‑second, 720p concept clips and then upscale them, and caching plus distilled variants reportedly offer close to a 2× speedup on those runs (Hunyuan summary). It’s already wired into tools like ComfyUI, LightX2V and Wan2GP, so if you’ve been prototyping storyboards with still images, this is a natural next step to test end‑to‑end previsualization on your own hardware.
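To put the 8.3B‑parameter and ~14 GB figures side by side, here is a rough weights‑only estimate at a few storage precisions. The precisions are assumptions (the summary does not say which one the model ships in), and the numbers ignore activations, the VAE, and the text encoder, so treat this as back‑of‑envelope arithmetic and not a measurement.

```python
PARAMS = 8.3e9  # parameter count reported for HunyuanVideo 1.5

# Bytes per parameter at common storage precisions.
# Assumed for illustration; the source does not state the precision used.
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8/fp8": 1}


def weight_gb(params: float, bytes_per_param: int) -> float:
    """Weights-only footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for name, nbytes in BYTES_PER_PARAM.items():
    print(f"{name:>10}: {weight_gb(PARAMS, nbytes):5.1f} GB")
```

At 16‑bit precision the weights alone come to about 16.6 GB, which suggests the ~14 GB target depends on memory‑saving measures such as offloading or lower‑precision weights. That is an inference from the arithmetic, not something the summary states.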

Kling 2.5 Turbo fuels Stranger Things spec game, K‑pop MV, and anime mascots

Creators continue to pile onto Kling 2.5 Turbo, using it for increasingly polished, very specific “fake footage.” One reel reframes Stranger Things into moody game-style footage, with Nano Banana Pro handling look‑dev and Topaz doing the final polish (Stranger Things clip). Another turns a K‑pop music video concept into a full AI production using Kling 2.5 Turbo, Kling’s avatar system, Lovart AI images (Nano Banana Pro), and Suno AI for the song (Kpop MV). Kling’s own feed is also pushing anime mascot snippets cut with 2.5 Turbo (anime character clip).

Following earlier coverage of photoreal microspots, where NB Pro + Kling 2.5 via Freepik hit near‑live‑action realism, today’s clips show the other side of the curve: hyper‑stylized, IP‑flavored pieces that look like game trailers, MVs, or TV openers. The pattern is clear for working teams:

- Do style in image models (NB Pro, MJ), then animate in Kling.

- Use enhancement tools (Topaz, Magnific, etc.) for broadcast‑ready sharpness.

- Treat Kling as the motion layer in a multi‑tool stack, not a one‑click solution.

Mystery “Whisper Thunder (David)” tops Artificial Analysis T2V leaderboard

A previously unknown video model labeled Whisper Thunder (aka David) jumped to the top of Artificial Analysis’s text‑to‑video leaderboard (leaderboard post) with an Elo of 1,247, edging out Google’s Veo 3 (1,226) and Kling 2.5 Turbo 1080p (1,225). The board lists thousands of head‑to‑head matchups, and creators immediately started speculating that Whisper Thunder might be an unreleased Kling model based on its style and performance.
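To get a feel for how large a roughly 20‑point gap is, you can convert the ratings into an expected head‑to‑head win rate. The sketch below assumes the leaderboard uses the standard logistic Elo formula with a 400‑point scale; the source does not confirm the exact rating method, so the result is indicative only.

```python
def elo_expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Expected score of A against B under the standard logistic Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


# Ratings quoted from the leaderboard post.
RATINGS = {"Whisper Thunder": 1247, "Veo 3": 1226, "Kling 2.5 Turbo 1080p": 1225}

top = RATINGS["Whisper Thunder"]
for name, rating in RATINGS.items():
    if name != "Whisper Thunder":
        print(f"vs {name}: {elo_expected_score(top, rating):.3f}")
```

Under that assumption the leader would be preferred in roughly 53% of matchups against either runner‑up, which is a real but narrow edge.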

The interesting part for working filmmakers is not the mystery branding but what it implies: there is at least one production‑quality model already testing above today’s public leaders on perceived quality. If you care about being early, December looks like the month to run controlled A/Bs between Veo 3.x, Kling 2.5, whatever Omni ends up being, and this Whisper Thunder model once its identity and access path become public.

Vidu Smart Multi-Frame turns 2–10 stills into a coherent story video

Vidu introduced Smart Multi-Frame, a mode where you upload 2–10 images and let the AI infer the story between them, generating a single coherent, pro‑feeling video from the sequence (Vidu feature thread). It’s part of the Vidu API/Q2 stack, so you can drive it from your own apps rather than clicking around a web UI (Vidu API).

Following earlier coverage of one-photo avatars, where Vidu Q2 turned single photos into talking explainers, this is the obvious next rung up the ladder: instead of animating one frame, you give it a whole beat sheet. For designers and storytellers, that means you can rough‑in a scene with keyframes (or even AI stills), hand them to Smart Multi-Frame, and get a first‑pass motion cut without touching a traditional NLE. It’s especially interesting for:

- Rapidly cutting social ads or explainers from a deck of product stills.

- Turning comic or storyboard panels into motion tests before committing to full animation.

- Letting clients “see” a sequence from your moodboard without a manual animatic pass.

Anima Labs pipelines Midjourney, Nano Banana 2 and Kling 2.5 into 3D Hollow Knight riff

Anima Labs showed a “What if Hollow Knight was 3D?” concept reel built with a three‑step AI stack: Midjourney for look‑dev, Nano Banana 2 for additional imagery, and Kling 2.5 for the final video (Hollow Knight reel). The result reads like a pitch trailer for a 3D reboot of the 2D indie classic rather than a flat fanart clip.

For game artists and cinematic directors, this is a nice example of how to chain tools: lock style in an image model first, use a second model for variants or character tweaks, then hand a curated set of frames to a video model that respects that style. It’s also a reminder that IP‑adjacent concept work is moving from still art to full motion—helpful for pitch decks and mood reels, even if nothing ships commercially with those assets.

Grok Imagine micro-clips spread as creators push for 10-second videos

Short, highly stylized clips from Grok Imagine are starting to circulate, with artists using the model for things like retro‑futuristic starfighter races and liminal ‘poolrooms’ found‑footage edits (starfighters clip, poolrooms clip). The standout starfighter prompt leans on dynamic camera tilts, detailed industrial linework and “high-speed chase tension,” and the creator immediately asks, “When are we getting 10-second videos in Grok Imagine?” (starfighters clip).

Right now these clips feel more like GIF‑length visual experiments than full shots, but they already show clear strengths in style consistency and motion framing. For storytellers, the limitation is duration: you can test a look, a camera move, or a texture, but you can’t yet block a whole beat. If xAI extends lengths to 10 seconds per shot, Grok Imagine could become a serious option for pre‑vis, music visualizers, and short motion loops that sit between image models and today’s heavier video stacks.

Indie short “Le Chat Noir” stacks NB Pro, Veo 3.1, Kling 2.5 and Hailuo 2.3

Filmmaker David Comfort shared “Le Chat Noir”, a noir‑style cat short film built as a deliberate multi‑model experiment: Nano Banana Pro for key imagery, Veo 3.1 and Kling 2.5 for different motion beats, MiniMax Hailuo 2.3 for additional shots, and Magnific AI’s new Skin Enhancer for the final polish (Le Chat Noir short). The goal was explicitly to chase a cohesive cinematic look rather than a “look what the model can do” demo.

This is a good snapshot of where serious indie workflows are heading. Instead of committing to one T2V engine, you mix and match:

- Pick whichever model nails a specific shot type (slow push‑ins vs. kinetic moves).

- Use a still‑image model to design consistent characters and lighting, then force the video to respect that.

- Reserve post tools like Magnific or Topaz for skin, grain and detail work.

If you’re building your own pipeline, this short is a reminder that a “cinematic” feel often comes from that last 10–20% of specialty tools, not from the base model alone.

🛠️ Pixel‑precise edits: Adobe + NB Pro, Lovart Touch Edit

Today’s hands‑on workflow wins: NB Pro shows up inside Photoshop/Firefly dialogs, and Lovart’s Touch Edit enables point‑to‑edit and remix. More editing control; fewer re‑gens.

Adobe bakes Nano Banana Pro into Photoshop, Firefly, and Boards

Nano Banana Pro now shows up directly inside Photoshop’s Generative Fill dialog as a selectable "Gemini 3 (with Nano Banana Pro)" partner model, alongside Gemini 2.5 (Nano Banana) and FLUX Kontext Pro. That means you can regenerate or extend whole images with NB Pro without leaving your core editing workflow or juggling external sites (Photoshop NB Pro).

The same thread notes that Adobe has also wired NB Pro into Firefly’s web app and Firefly Boards, so you can combine multiple reference images for concepts or moodboards and still have NB Pro do the heavy lifting on style and realism. On top of that, Photoshop now surfaces Topaz Gigapixel and Bloom tools inside the environment for high‑quality upscaling and detail restoration, turning the app into more of an end‑to‑end AI image finishing suite than a generator‑plus‑export hop (Photoshop NB Pro).

For working artists, the big deal is economics and friction: NB Pro usage is currently described as unlimited for existing Adobe subscribers, with no extra token pack or per‑image caps mentioned. That shifts NB Pro from a separate metered tool to something you can hit over and over during pixel‑perfect cleanup, layout exploration, or subtle relights, while keeping all your masking, layers, and history in one place.

Lovart’s Touch Edit gives 100k+ creators point‑to‑edit image control

Lovart has rolled out "Touch Edit", a feature already used by over 100,000 creators that lets you tap or marquee specific regions in an image—clothes, objects, bits of text—and rewrite only that area with a prompt, instead of regenerating the whole frame. This is aimed squarely at designers and thumbnail makers who keep fighting tiny details. (Lovart feature video, Touch Edit thread)

The Mark & Edit flow behaves like an AI‑powered brush: you mark a sleeve, logo, or prop and ask for changes, and the model respects the rest of the composition. The Select & Remix flow goes further, letting you pull elements from multiple source images and fuse them into a single, consistent composite in one go, which is ideal for speculative ad layouts, moodboards, or character mashups where you want tight control over positioning and style (Lovart feature video).

For creatives, this closes a long‑standing gap between loose prompt‑based generation and old‑school pixel pushing. You keep the exact framing and lighting you like, surgically swap what you don’t, and avoid the "one wrong detail means full reroll" loop that slows down production art and social creatives.

🎨 Reusable MJ looks: OVA anime, papercraft, pop‑up books

Creators share Midjourney srefs and prompt frameworks—classic 80s‑90s OVA fantasy, 3D papercraft/children’s styles, pop‑up book scenes, plus a V7 light‑trails recipe. Mostly style systems; minimal tooling news.

Midjourney sref 1851216167 locks in 80s–90s dark OVA fantasy

Midjourney creators get another dedicated OVA anime style ref, --sref 1851216167, tuned for 80s–early‑90s dark and heroic fantasy in the vein of Record of Lodoss War, Bastard!! and Vampire Hunter D (anime sref post).

Following the earlier style pack, this gives anime illustrators and storytellers a second, more specific look they can lock into for character sheets, key art, and scene stills while keeping faces, armor, and painterly backgrounds cohesive across a full project.

Reusable pop‑up book prompt spreads as a layered paper look

A detailed “3D pop‑up book illustration” prompt, with slots for subject and color palette plus notes on layered paper elements and soft lighting, is turning into a reusable recipe for storybook and title‑card work across models (prompt template).

Follow‑up posts show creators adapting it for haunted houses, parks, jungles, winter villages, and even custom character books like COCO, all while preserving the same tactile, folded‑paper depth (coco pop-up video, polar bear pop-up). This is an easy drop‑in style for children’s illustrators and motion designers who want consistent pop‑up worlds over a whole series.

Midjourney sref 2471566611 delivers 3D children’s papercraft worlds

Artedeingenio highlights --sref 2471566611 as a Midjourney style that produces charming 3D digital papercraft characters, pets, and props, ideal for kids’ books, pop‑up concepts, or paper‑cut stop‑motion pre‑viz (papercraft sref post).

The examples range from a tiny astronaut to a kimono girl, vampire, and puppy, all with clean shapes, layered cardstock shading, and warm, simple backgrounds, giving designers a dependable “children’s toy” aesthetic they can reuse across casts and scenes.

New Midjourney sref 7361206097 anchors bold inked sketch portraits

Azed shares a fresh Midjourney style reference, --sref 7361206097, that yields high‑contrast inked portraits and sketchy line art across very different subjects, from tattooed boxers to elderly gunfighters and soft anime close‑ups (new sref examples).

Other artists are already reusing it as a style ref in tools like Ideogram for graphic lighting experiments and superhero fan art (ideogram adaptation, captain america test), turning it into a go‑to look for gritty posters, covers, and character studies that still feel hand‑drawn.

Shared V7 recipe with sref 1623337167 creates luminous light‑trail figures

For Midjourney V7, Azed posts a full prompt recipe (--chaos 12 --ar 3:4 --exp 15 --sref 1623337167 --sw 500 --stylize 500 --weird 2) that generates a coherent series of glowing light‑trail angels, silhouettes, flowers, and cityscapes (v7 light trails prompt).

The grid shows how the style reference plus high stylize and experimental (--exp) settings push subjects into translucent, motion‑blurred filaments, which is useful for title cards, album art, or motion‑design boards where you want an abstract, ethereal through‑line across many frames.
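If you reuse a recipe like this across many subjects, it can help to keep the parameters in one place and generate the prompt strings from them. The helper below is a hypothetical convenience function, not a Midjourney API; the parameter values are copied from the recipe, and the subject texts are placeholders, since the original post's wording is not reproduced here.

```python
def build_mj_prompt(subject: str, params: dict[str, object]) -> str:
    """Append Midjourney-style `--flag value` parameters to a subject description."""
    flags = " ".join(f"--{name} {value}" for name, value in params.items())
    return f"{subject} {flags}"


# Parameter values copied from the shared V7 recipe.
LIGHT_TRAILS = {
    "chaos": 12, "ar": "3:4", "exp": 15,
    "sref": 1623337167, "sw": 500, "stylize": 500, "weird": 2,
}

# Placeholder subjects for illustration only.
for subject in ("an angel", "a city skyline"):
    print(build_mj_prompt(subject, LIGHT_TRAILS))
```

Keeping the flags in a dictionary makes it easy to vary one setting (for example the style weight) while holding the rest of the look constant.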

⚖️ Copyright reset signals + ad trust questions

Policy and trust news relevant to creatives: UK minister signals paying artists for training data; new strings point to ChatGPT Android ads; one UX complaint on paid watermarks. Continues yesterday’s ads debate with fresh code details.

UK minister signals copyright ‘reset’ on AI training data and creator pay

UK technology secretary Liz Kendall says the UK will “reset” how AI models use copyrighted work, siding with artists who want transparency and payment when their material is scraped for training. She points to Anthropic’s recent $1.5B settlement, which exposed a database of ~500,000 training books and offers affected authors about $3,000 each, as a template for how rights-holders could be compensated at scale (FT copyright piece).

For creatives, that means two likely shifts: legal backing for knowing whether your work is in a training set, and a path to getting paid instead of having to opt out after the fact. Kendall promises an initial policy report before year‑end and a fuller framework by March 2026, while figures like Beeban Kidron push for immediate steps such as blocking public contracts with AI vendors that are still in copyright disputes (FT copyright piece).

ChatGPT Android beta shows explicit search‑ad scaffolding in the app code

Decompiled strings from ChatGPT’s Android APK v1.2025.329 reveal a dedicated ads subsystem with classes like ApiSearchAd, SearchAdsCarousel, and BazaarContentWrapper, hinting at carousel-style paid placements tied to product and shopping queries (Android ads code). This deepens the picture from earlier generic ad hooks, suggesting OpenAI is building something closer to Google‑style commercial search inside the assistant, not random banner units (ads code).

For creatives and small studios who rely on ChatGPT as a neutral research or ideation partner, this raises two questions: how clearly will sponsored results be labeled, and will ad logic start nudging recommendations toward brands that pay rather than what’s best for the work? The thread also assumes paid tiers stay ad‑free, turning the free ChatGPT into a hybrid of assistant and search engine while power users pay to keep the workspace clean (Android ads code).

Creators push back on visible watermarks in paid DeepMind tools

A paying user of a Google DeepMind product complains that a prominent watermark on the “MCP Client (Connect Tools)” card makes the interface feel like a freemium experience, arguing that such branding is fine for free tiers but undermines pros who pay for clean, production‑grade tools (Watermark UX complaint).

For designers and developers using these tools inside client workflows, the concern is both aesthetic and trust‑related: watermarks can clutter screenshots, decks, and live demos, and they signal that even enterprise‑oriented AI surfaces may prioritize marketing optics over creator experience. The pushback hints at a broader expectation that if regulators or platforms require “AI used” disclosures, they should be controllable overlays, not hard‑baked UI elements in paid environments (Watermark UX complaint).

🔬 Video understanding: Vidi2’s spatio‑temporal grounding

ByteDance’s Vidi2 focuses on understanding, not just generating: frame‑accurate grounding and temporal retrieval that beat Gemini 3 Pro/GPT‑5 on new VUE benchmarks; practical uses for editors. New vs yesterday: fresh demos/bench links.

ByteDance’s Vidi2 tops Gemini and GPT‑5 on video understanding benchmarks

ByteDance’s new multimodal model Vidi2 is built to understand video, not generate it, and it now leads on two fresh benchmarks for temporal retrieval and spatio‑temporal grounding, reportedly beating proprietary models like Gemini 3 Pro and GPT‑5 on long‑form content (overview thread). The official Vidi2 page highlights VUE‑TR‑V2 (finding the exact moment an event happens in 10–30 minute videos) and the new VUE‑STG benchmark, where Vidi2 can return frame‑accurate timestamps plus dynamic bounding boxes around objects or characters (project page).

For editors and creators, this sort of “where and when” intelligence unlocks practical tools: Smart Split that can auto‑cut long recordings into shorts based on meaningful beats rather than fixed time slices, composition‑aware reframing that keeps the right subject in frame when converting widescreen to TikTok/Reels, and query‑driven editing like “find every shot where the host holds up the product” instead of scrubbing by hand (overview thread).

Because Vidi2 is focused on retrieval and grounding rather than flashy generative shots, it slots in as an assistive layer inside existing workflows, helping you navigate, tag, and re‑cut footage faster, especially on projects with hours of material where manual logging is painful.
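To make the reframing idea concrete, here is a minimal sketch of how a per‑frame bounding box could drive a vertical crop. It does not use Vidi2 itself: the function name, the `(x_min, y_min, x_max, y_max)` pixel box layout, and the full‑height 9:16 crop are all assumptions chosen for illustration.

```python
def vertical_crop(frame_w: int, frame_h: int, box: tuple[int, int, int, int],
                  aspect_w: int = 9, aspect_h: int = 16) -> tuple[int, int]:
    """Return (x_left, crop_w) for a full-height crop centered on the subject box.

    box is (x_min, y_min, x_max, y_max) in pixels (hypothetical layout).
    """
    # Width of a full-height window with the target aspect ratio.
    crop_w = min(frame_w, frame_h * aspect_w // aspect_h)
    # Center the window on the horizontal middle of the subject.
    center_x = (box[0] + box[2]) // 2
    x_left = center_x - crop_w // 2
    # Clamp so the crop window stays inside the frame.
    x_left = max(0, min(x_left, frame_w - crop_w))
    return x_left, crop_w


# A subject near the right edge of a 1920x1080 frame.
print(vertical_crop(1920, 1080, (1500, 200, 1800, 900)))
```

In practice you would also smooth `x_left` across frames so the crop does not jitter as the box moves.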

📈 Money & momentum: funding, adoption, confidential compute

Business signals creatives should track: BFL raises $300M for FLUX/visual intelligence; FT charts show Gemini catching ChatGPT in downloads; Telegram’s Cocoon confidential compute network goes live.

Black Forest Labs pulls in $300M to double down on FLUX

Black Forest Labs, makers of the FLUX image models, has closed a $300M Series B at a reported $3.25B post‑money valuation, led by Salesforce Ventures and Anjney Midha’s AMP fund (funding announcement). The company says FLUX is already used by “millions every month” and embedded in major creative platforms like Adobe, Canva, Meta and Microsoft, positioning it as a core piece of the visual stack for designers, filmmakers, and agencies (funding blog).

For working creatives this signals that FLUX isn’t a short‑lived experiment but a heavily funded bet on "visual intelligence": models that not only generate pictures but also understand scenes, intent, and edits. Expect tighter FLUX integrations in big‑name tools, faster iteration on open and hosted checkpoints, and more enterprise‑grade workflows (approvals, safety filters, usage rights) as BFL spends this money on research and productizing its models.

Gemini narrows the gap with ChatGPT in downloads and engagement

Fresh Sensor Tower data in the Financial Times shows Google’s Gemini app rapidly closing in on ChatGPT’s monthly downloads, with Gemini approaching ~70M vs ChatGPT’s ~100M by late 2025 (ft adoption chart). A second chart tracks average minutes per visit on web, where Gemini and ChatGPT are now neck‑and‑neck around 7–7.5 minutes, with Claude slightly behind, suggesting users aren’t just trying Gemini; they’re sticking around.

For creatives and studios, this matters because distribution drives where AI‑native formats and monetization land first: if Gemini keeps gaining share, expect more brand budgets, creative tools, and partner integrations to skew toward Google’s ecosystem (Android, YouTube, Workspace) rather than only OpenAI‑first experiences. It also means clients and collaborators are increasingly likely to show up with Gemini as their default assistant, so testing your prompts and workflows there—not only in ChatGPT—starts to look like table stakes.

Telegram’s Cocoon network goes live for confidential AI compute

Telegram has turned on Cocoon, a decentralized confidential compute network where AI requests are processed with claimed 100% confidentiality while GPU owners earn TON for serving workloads (cocoon launch summary). The launch graphic positions Cocoon between "Apps" and "GPU owners," pitching it as an alternative to centralized clouds like AWS and Azure that sit in the middle and add cost and data exposure.

For creators handling unreleased scripts, storyboards, brand campaigns, or sensitive client data, this is an early sign that privacy‑first AI infrastructure might soon be a practical option rather than a research topic. If Cocoon scales GPU supply and keeps latency reasonable, you could route certain high‑risk generations—e.g., confidential pitches, unreleased character designs—through a network that’s economically incentivized to keep your data opaque to the operator, rather than trusting yet another centralized SaaS.

📣 Creator studios: AI influencers + motion text for promos

Marketing‑centric tools: Apob pitches hyper‑real AI influencer pipelines (face swaps to revenue); Pictory shares motion text tutorial and a legal‑focused webinar CTA. Mostly promo craft; no model news here.

Pictory shows creators how to animate titles and captions in seconds

Pictory Academy published a step‑by‑step guide for animating titles, captions, and subheadings inside its editor, aimed at social creators who want motion text without learning motion design tools. The walkthrough covers entry/exit styles like fade, wipe, and typewriter, plus speed and direction controls, so promo makers can punch up key phrases directly in Pictory instead of bouncing to After Effects (text animation guide, academy tutorial).

For AI filmmakers and marketers, this means you can keep script-to-video, b‑roll, voiceover, and now text animation in one pipeline, which cuts export/reimport cycles when iterating hooks and call‑to‑action overlays.

Pictory schedules Dec 10 webinar on AI video and SEO for law firms

Pictory is hosting a live webinar on December 10 at 1 pm EST focused on how law firms can turn existing site content into AI‑generated explainer videos that rank and convert in a world of Google AI Overviews (webinar invite). The pitch leans on the stat that 60% of legal consumers search online and argues that static 2024 content strategies are no longer enough, with the session framed around using video to capture qualified traffic rather than broad awareness.

For storytellers and legal‑niche studios, this is a signal that buyers are starting to expect AI‑assisted video as table stakes in professional services marketing, and that there’s budget for teams who can package complex topics into short, trustworthy clips.

🗣️ Creator sentiment: MJ value debate and AI art backlash

Community discourse is news today: a long critique of Midjourney’s value/censorship; designers clapping back at AI fear; memeable ‘AI Hater Bingo’. Culture beat; no product updates folded in.

Creators question whether Midjourney is still worth a paid subscription

A long thread from a longtime user lays out why they’ve almost cancelled Midjourney “5 times” and now see it only as an exploration/ideation tool, not something they can ship with anymore. They praise the magic of discovery but slam the paid value once you hit production: built‑in upscaling is “unusable”, video/animation feels slow and over‑guardrailed, hands are still unreliable, and every serious workflow means exporting to other tools for fixing, upscaling, and motion (Midjourney value thread).

They also argue the community strategy feels like a missed chance: no real monetization or rewards for people who built entire followings around Midjourney, a weak on‑platform profile system, and censorship that keeps tightening. The punchline is pretty clear: paying a premium just for inspiration feels harder to justify now that other generators handle realism, text, and production‑grade outputs by default. For working creatives, this is a nudge to audit where Midjourney still earns its spot in the stack, and where it doesn’t.

Veteran designer tells peers to stop fearing AI and go hybrid

A Turkish graphic designer with around 25 years in the field posts a heated rant aimed at younger colleagues who mocked his “legendary thumbnail” YouTube video, arguing they’re clinging to purity while he’s busy integrating AI into design, photography, and videography. He name‑checks starting on FreeHand and early Photoshop, then points out that design, photo, and video are exactly the sectors most threatened by AI, and that his response has been to go hybrid instead of hiding (Designer AI rant).

He calls anti‑AI designers “Don Quixotes” tilting at tech they can’t stop, says fear of AI is really fear of their own replaceability, and insists prompt craft is what keeps work from all looking the same. The cover image for his rant was made with his own prompt‑maker tool, which he holds up as proof that AI work can still be personal and distinct.

For other creatives, the message is blunt: the people who lean into AI as part of their toolkit will likely outpace those who spend their energy gatekeeping instead of learning.

‘AI HATER BINGO’ meme crystallizes standard anti‑AI art talking points

A comic‑style “AI HATER BINGO” card is making the rounds, neatly packaging the most common anti‑AI lines into a 3×4 grid: “NOT REAL ART”, “PRESSING A BUTTON ISN’T TALENT”, “IT’S JUST PLAGIARISM WITH EXTRA STEPS”, “JUST LEARN TO DRAW”, “YOU DIDN’T MAKE THIS”, and more.

It turns years of discourse into a meme that AI creators can instantly point to whenever criticism hits those same, predictable beats. Alongside snarkier posts complaining that haters aren’t even creative in how they insult, the bingo card shows how the AI art community is coping with backlash through humor and self‑awareness. For artists, filmmakers, and designers using these tools, it offers a bit of cultural armor: the arguments against them are now so standardized they fit on a bingo sheet, which makes them easier to shrug off and keep working.

AI artists mock the tired, copy‑paste insults from AI haters

One creator vents that if AI haters are going to attack, they should at least “learn how to insult properly,” because seeing the same recycled lines over and over is boring. They joke that if this is the level of creativity haters bring to insults, their drawing probably isn’t much better, flipping the usual “AI users aren’t real artists” narrative back on critics (Haters insult comment).

Paired with other defenses like the viral “AI artists can’t draw” rebuttal that compares mediums (sculptors vs painters, photographers vs animators), the tone from AI users is shifting from defensive to openly dismissive (Cant draw rebuttal). For working illustrators and designers experimenting with models, this captures the current vibe: people are tired of re‑litigating whether using AI is “real art” and are starting to treat those arguments as noise rather than serious critique.
