Verdent claims 76.1% SWE-bench Verified – MyClaw starts at $9/month


Executive Summary

Verdent AI is being marketed as a new coding-agent layer claiming 76.1% on SWE-bench Verified; the product pitch centers on running multiple agents in parallel inside isolated git worktrees to prevent task collisions, then funneling output through a Plan→Verify→Code loop with tests/dev-server execution and diff-based approval. The thread positions Verdent as outperforming Cursor/Devin/Windsurf on the benchmark, but the claim is tweet-level and not yet backed by a third-party eval artifact in the material shared.
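
The isolation primitive named here is git worktrees. Each `git worktree add` creates a separate checkout on its own branch that shares the repository's object store, so two agents never edit the same files on disk. The sketch below builds the commands without running them. It assumes one branch and one directory per task, and the `agent/<task>` branch and `../wt-<task>` path naming are hypothetical, not Verdent's:

```python
import shlex


def worktree_commands(task_ids, base_ref="main"):
    """Build one `git worktree add` argv list per task.

    Each task gets its own branch and checkout directory, so parallel
    agents never write to the same working tree. The agent/<task> branch
    and ../wt-<task> path naming is hypothetical.
    """
    commands = []
    for task in task_ids:
        branch = f"agent/{task}"
        path = f"../wt-{task}"
        # Syntax: git worktree add -b <new-branch> <path> <commit-ish>
        commands.append(["git", "worktree", "add", "-b", branch, path, base_ref])
    return commands


if __name__ == "__main__":
    # Print instead of executing; pass each argv list to
    # subprocess.run(cmd, check=True) from the repo root to run it.
    for cmd in worktree_commands(["fix-login", "add-tests"]):
        print(shlex.join(cmd))
```

After a task's diff is approved or discarded, `git worktree remove <path>` deletes that checkout. It refuses if the tree has uncommitted changes unless forced.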

• MyClaw (OpenClaw hosting): pitches 1-click managed OpenClaw with 24/7 uptime and persistent memory; pricing shown at $9/month Lite and $19/month Pro; claims a 1,200+ waitlist; team-vs-solo isolation controls are unclear.

• OpenRouter Pony Alpha: promoted as $0 tokens with 200k context; screenshot warns prompts/completions are logged by the provider.

• Team9 workspace: open-sources an “infrastructure-free” OpenClaw workspace; claims users can deploy 100+ agents with built-in crawling/X API.

Across the stack, the theme is packaging: parallelism + verification for coding; always-on hosting + “free” context for agent ops; privacy and benchmark reproducibility remain the unpriced variables.


Top links today

- fal real-time image editing API and tools

- Gradio UI wrapper for ComfyUI

- Luma Dream Machine Ray video model

- Runway Gen-4.5 Story Panels workflow

- Freepik Runway 4.5 image to video

- OpenRouter model routing for agents

- Ollama local model runner for agents

- AWS Free Tier for running agents

- Phaser 3 framework docs for mobile games

- Autodesk Flow Studio camera motion to Maya

- Suno Studio music generation platform

- Meshy image to 3D for museums

- PAPER platform for mixed media publishing

Feature Spotlight

Seedance 2.0 pushes “indistinguishable” AI video (sports realism, pacing, and Sora comparisons)

Seedance 2.0 clips are being treated as a realism breakpoint—people are sharing sports-event prompts and calling it “post‑Turing‑test,” accelerating both adoption (ads/short drama) and attribution/provenance friction.

High-volume cross-account focus on Seedance 2.0 continues, but today’s angle shifts to “Turing Test” claims, sports-event photorealism prompts, and direct Seedance vs Sora 2 comparisons—plus new chatter about access and attribution.


🎬 Seedance 2.0 pushes “indistinguishable” AI video (sports realism, pacing, and Sora comparisons)


Seedance 2.0 hits “Turing Test” vibes with an Olympic beam-final prompt

Seedance 2.0 (Seedance): A new viral proof-point is sports-broadcast realism. One creator says Seedance 2 “blew through the Turing Test for AI video” after generating a photoreal “women’s beam final at the olympics” clip from a single prompt that specifies a back handspring and a firm landing, per the beam prompt share.

• Prompt that’s spreading: “photorealistic shot of the women's beam final at the olympics. gymnast from the united states does a back handspring and lands firmly on the beam, with the commentators exclaiming wildly,” as written in the beam prompt share.

The creative implication is that “event TV grammar” (camera placement, pacing, and broadcast look) is becoming a go-to prompt target—not just cinematic short-film aesthetics.

Seedance 2.0 vs Sora 2: Sora adds music; Seedance wins pacing

Seedance 2.0 vs Sora 2 (Video gen): A side-by-side, same-prompt test claims Sora 2 “automatically adds music” (making it feel more finished fast), while Seedance 2.0 is judged stronger on “shot breakdown and pacing,” reading more like an edited sequence in the comparison notes.

• Repro attempt: The author points to a prompt pack/source for replicating the test in the comparison notes, with the follow-up pointer repeated in the prompts link callout.

Treat this as directional rather than definitive—there’s no standardized eval here—but it’s a useful framing for creative teams choosing between “auto-finish polish” and “editorial sequencing feel.”

Seedance 2.0’s second “sports realism” flex: Olympic snowboard big air

Seedance 2.0 (Seedance): Another “scroll test” prompt is making the rounds—an Olympic women’s snowboard big air sequence described as “the first thing I generated” and “I’d never guess this was AI” in the snowboard prompt post.

• Prompt details: The post shares the full setup—“photorealistic shot of the women's snowboard big air competition at the olympics… races down the hill… flies off the snow ramp… commentators exclaiming wildly”—as captured in the snowboard prompt post.

The pattern here is creators dialing prompts toward familiar broadcast moments (sports, commentary energy, televised camera coverage) to get outputs that read as “native footage” in feeds.
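The beam and snowboard prompts share one skeleton: photoreal framing, an Olympic event, a single athlete action, and commentator energy. The sketch below turns that skeleton into a reusable template. The `event`/`action` slot names are hypothetical. Only the beam prompt is reproduced exactly, because the snowboard prompt is quoted only in part:

```python
def broadcast_prompt(event: str, action: str) -> str:
    """Fill the shared 'sports broadcast' prompt skeleton.

    Slot names are illustrative; the fixed phrasing comes from the
    beam-final prompt quoted above.
    """
    return (
        f"photorealistic shot of the {event} at the olympics. "
        f"{action}, with the commentators exclaiming wildly"
    )


beam = broadcast_prompt(
    "women's beam final",
    "gymnast from the united states does a back handspring and lands firmly on the beam",
)
print(beam)
```

The snowboard prompt slots in the same way, with the event set to "women's snowboard big air competition" and the action describing the run down the hill and off the ramp.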

“Real or Seedance 2.0?” becomes a reusable post template

Seedance 2.0 (Seedance): Dance clips are increasingly posted as a two-option bait format—“real or seedance 2.0?”—to drive comments and implicitly stress-test believability, as shown in the real or seedance post.

This follows the earlier misattribution loop where “real video mislabeled as AI” became a recurring side effect of Seedance virality, building on Mislabeling (confusion loop around provenance).

Seedance 2.0 gets pitched as actorless vertical drama production

Seedance 2.0 (Seedance): A creator pitch is emerging around templated vertical micro-dramas—“Stop hiring actors, let Seedance create viral short dramas for you… generate all at once”—with a sample output posted in the short drama pitch.

The claim is workflow-level (batching story beats without reference media) rather than a new feature announcement, but it signals where creators are steering the tool: serialized, prompt-structured, short-form narrative content.

Reposting Seedance clips without credit draws creator pushback

Seedance 2.0 (Seedance): As repost volume climbs, attribution norms are becoming a flashpoint—one creator calls it “very unethical” to post Seedance 2.0 videos without crediting the original author, per the crediting complaint.

This slots into the same provenance pressure that earlier Seedance discourse created—following up on Mislabeling (real/AI misattribution loops)—but now the complaint is about creator credit rather than authenticity.

Seedance 2.0 access scarcity becomes its own meme

Seedance 2.0 (Seedance): Access (or perceived access) is turning into a parallel storyline—one creator asks how “everyone [is] using Seedance 2.0 already” and jokes about a “secret society,” per the access scarcity joke.

This continues the earlier thread that Seedance 2.0 scarcity—especially outside China—made reposted clips feel like the primary product, following up on Access scarcity (U.S. availability gaps driving repost culture).

Seedance 2.0 gets framed as a “loaded gun”

Seedance 2.0 (Seedance): A capability-as-risk metaphor is spreading alongside the wow clips—“seedance 2.0 is a loaded gun”—as posted in the loaded gun metaphor.

It’s not a technical claim, but it’s a useful read on how quickly creators think the model collapses the cost/time of making convincing motion content.

Seedance 2.0 gets used for ‘lost TV footage’ nostalgia: Ultraman Tiga

Seedance 2.0 (Seedance): A different creative lane is “unreleased episode footage” cosplay—one share frames outputs as “unreleased clips from Ultraman Tiga,” and attributes them to a Douyin creator (Likahao) in the Ultraman clip post.

This is a repeatable packaging trick: present generations as archival media (missing broadcasts, deleted scenes) rather than as “AI videos,” which changes how audiences judge artifacts and flaws.

Seedance 2.0 shifts from demos to personalized meme requests

Seedance 2.0 (Seedance): The conversation is drifting from “look what it can do” into “make me a specific bit”—e.g., a request to “make me dunk on Shaq with seedance 2.0,” as posted in the prompt request.

It’s a small signal, but it usually shows up when a tool feels accessible enough that people start treating it like a personalized content generator, not a showcase-only model.

📽️ Everything *besides* Seedance: Runway/Freepik storytelling, Kling cinematic tests, and playable-TV teasers

Non-Seedance video creation talk today clusters around directing longer story beats (Runway Story Panels + Gen‑4.5 I2V), Kling 3.0 cinematic/anime tests, and “interactive TV” positioning. Excludes Seedance 2.0 (covered as the feature).

Runway 4.5 is now available inside Freepik, positioned for story-first workflows

Freepik + Runway 4.5 (Freepik): Freepik says Runway 4.5 is now accessible in Freepik and frames the value shift as “stop generating clips” and focus on story and character continuity, as shown in the Freepik workflow post.

The post emphasizes building a character reference board first (multiple angles/poses) before scene work, which Freepik calls out explicitly in the Freepik workflow post and reinforces in the dedicated Character exploration clip.

Runway adds Story Panels + Panel Upscaler + Gen-4.5 image-to-video story pipeline

Story Panels + Gen-4.5 I2V (Runway): Runway is pitching a single-image → multi-panel story workflow that keeps characters/world design consistent by chaining Story Panels, Panel Upscaler, and Gen-4.5 Image to Video, according to the Runway feature post.

This framing is explicitly about sequencing and continuity (panels first, then animation), rather than generating standalone shots.

Kling 3.0 full-speed action anime is being marketed as a standout mode

Kling 3.0 (Kling): A creator showcases “full-speed action anime” output and frames it as one of the most impressive Kling 3.0 capabilities they’ve found, while noting prompts are gated behind a subscription in the Action anime promo.

This is positioned around motion intensity (sword-action beats) rather than dialogue or lip sync.

Luma Dream Machine promotes Ray3.14 for story-driven ads in native 1080p

Ray3.14 (Luma Labs): Luma is promoting Ray3.14 as a way to create story-driven ads in native 1080p, emphasizing directing characters and scenes with more intentional motion and better identity/style consistency across frames, per the Ray3.14 announcement.

The positioning is explicitly “storytelling” rather than short-form novelty clips.

Showrunner 2.0 teaser frames “TV as playable”: remix stories and change endings

Showrunner 2.0 (Fable Simulation): Fable Simulation teases Showrunner 2.0 with a clear product claim—“TV just became playable,” including creating worlds, remixing stories, and changing endings—according to the Showrunner 2.0 teaser.

No release date details are included in the tweet; it reads as an incoming product beat.

Freepik’s Character Exploration method: build multi-pose boards before scenes

Character reference boards (Freepik): Freepik highlights a repeatable pre-production step—start from one character image, then generate a multi-angle, multi-pose reference board to anchor consistency across later scenes, as shown in the Character exploration post.

It’s presented as a foundation step: build references first, then “build scenes,” per the expanded workflow thread.

Kling 3.0 “cinematic” single-shot tests: slow push-in, scale, and lighting

Kling 3.0 (Kling): A creator points to a specific Kling 3.0 look—slow push-in camera motion, large-scale set pieces, and high-contrast lighting—as a “cinematic” baseline for single shots, as shown in the Cinematic shot share.

The clip is used as lookdev reference more than a narrative beat.

Kling 3.0 project test: photoreal shot that snaps into low-poly wireframe

Kling 3.0 (Kling): A creator shares a project test where a realistic live-action style shot transitions into a low-poly/wireframe look mid-clip, describing Kling 3.0 as feeling “real” in this kind of transformation-heavy setup in the Wireframe transition demo.

The demo is effectively a style-morph reference for music video or trailer intercuts.

A physical “real life AI Studio” is announced in El Salvador as a creator hub

AI studio hub (starks_arq): starks_arq announces what they describe as the first “real life AI Studio” in El Salvador, positioned as a place to work with brands/filmmakers/authors and host visiting creators, with plans to livestream the buildout on Rumble per the Studio announcement.

The post frames this as a collaboration residency model (a physical production base), not a new software release.

Sora 2 scripted vertical drama prompt shows unintended comedic beats in outputs

Sora 2 prompting (OpenAI): A creator shares a long-form, time-coded 9:16 “billionaire heir drama” prompt and notes Sora 2 introduced an unexpected “helicopter at the door” moment when using similar prompt wording, as described in the Prompt and result note.

The full text prompt, including explicit casting constraints and 0–5s/5–10s/10–15s scene beats, is published alongside a link to the Prompt page.
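When you iterate on beat text, it helps to generate the 0–5s/5–10s/10–15s structure programmatically. The sketch below assumes equal 5-second beats and a plain-text header line for format and casting. The header and beat strings are placeholders, not the creator's prompt:

```python
def timecoded_prompt(header: str, beats: list[str], beat_len: int = 5) -> str:
    """Join scene beats into a time-coded prompt: 0–5s, 5–10s, 10–15s, ..."""
    lines = [header]
    for i, beat in enumerate(beats):
        start, end = i * beat_len, (i + 1) * beat_len
        lines.append(f"{start}–{end}s: {beat}")
    return "\n".join(lines)


# Placeholder text only; the creator's actual prompt is on the Prompt page.
prompt = timecoded_prompt(
    "9:16 vertical drama. [casting constraints go here]",
    ["[beat 1]", "[beat 2]", "[beat 3]"],
)
print(prompt)
```

The header holds the casting constraints and each beat holds one action, so you can reword a single beat when an unexpected element shows up without touching the rest.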

🧪 Copy/paste prompts & style codes (Nano Banana brand kits, stipple motion JSON, Midjourney SREFs)

Today’s prompt economy is heavy: long Nano Banana brand-ad prompts, effect recipes (chrome distortion), structured “style spec” JSON for illustration/motion looks, and multiple Midjourney SREF “cheat codes.”

Nano Banana Pro “Fluid Chrome Distortion” prompt for melting product silhouettes

Nano Banana Pro (prompt recipe): A JSON-style prompt is circulating for a “liquid vertical streak” look—small centered product on a pure black void (#000000), with the subject “melted” into buttery mercury-like streaks and a tight negative prompt to avoid blocky bars or cropped framing, as written in the Fluid chrome distortion JSON.

Key constraints worth keeping verbatim from the Fluid chrome distortion JSON: the product “occupies no more than 50% of the frame,” “never cropped,” and the negative prompt explicitly bans “hard edges… rigid bars… colored background… warm colors.” The examples shown (controller/logo/skull/cassette) suggest it works well for icon-shaped objects and SKU-style hero shots.
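Because the recipe is JSON-style, it can be kept as data and serialized per product. The key names here (`subject`, `framing`, `background`, `negative_prompt`) are assumptions, since the post's exact schema isn't reproduced here. The string values are the constraints quoted above. The negative prompt's elided middle is left out rather than guessed:

```python
import json


def chrome_distortion_prompt(product: str) -> str:
    """Serialize the 'Fluid Chrome Distortion' constraints as JSON.

    Key names are hypothetical; values echo the quoted recipe.
    """
    spec = {
        "subject": f"small centered {product}, melted into buttery mercury-like vertical streaks",
        "framing": ["occupies no more than 50% of the frame", "never cropped"],
        "background": "pure black void (#000000)",
        "negative_prompt": ["hard edges", "rigid bars", "colored background", "warm colors"],
    }
    return json.dumps(spec, indent=2)


print(chrome_distortion_prompt("cassette"))
```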

“Tiny universes” prompt: isometric dioramas inside everyday objects

Isometric diorama prompt (workflow seed): A base prompt template for “miniature world inside an object” scenes is being shared (tilt‑shift + macro DOF + 8K finish) with a list of 10 concrete variations, starting from the Tiny universes thread and expanded via follow-on examples like “New York on a vinyl record,” per the Vinyl record variant.

• Base prompt: The template is “A [OBJECT] serves as the central base, with a miniature interpretation of [LOCATION] emerging organically from within… tilt‑shift… macro depth‑of‑field… realistic materials… 8K,” as written in the Base prompt text.

• Tool note: The author says the prompt was drafted in Gemini 3 Pro and the examples were created in Leonardo, per the Tiny universes thread and the Leonardo attribution.
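The bracketed slots make this a fill-in template. The sketch below substitutes `[OBJECT]` and `[LOCATION]`. The template string is the excerpt quoted above, with its ellipses kept as-is because the full wording isn't reproduced here. The `fill` helper is hypothetical:

```python
TEMPLATE = (
    "A [OBJECT] serves as the central base, with a miniature interpretation of "
    "[LOCATION] emerging organically from within… tilt‑shift… macro depth‑of‑field… "
    "realistic materials… 8K"
)


def fill(template: str, **slots: str) -> str:
    """Replace [NAME] placeholders with the given values (keys are upper-cased)."""
    for name, value in slots.items():
        template = template.replace(f"[{name.upper()}]", value)
    return template


vinyl = fill(TEMPLATE, object="vinyl record", location="New York")
print(vinyl)
```

Each of the ten variations in the thread then reduces to a different (object, location) pair, such as the vinyl record / New York example.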

Stipple + motion-smear JSON spec for editorial sports poster images

Stipple motion style (prompt spec): A structured JSON preset is being reused to turn action photos into monochrome stipple illustrations with “illustrated motion smear” and a subtle double exposure layer—explicitly banning photorealism, lens blur, color, and “new elements,” as laid out in the Stipple motion JSON.

The same spec is also being applied to Winter Olympics-themed frames, as shown in the Olympics stipple examples, which helps confirm it’s not tied to a single sport silhouette; the core controls are dot density (shading), horizontal dot-trail direction, and “embedded not collage” double exposure.
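The spec's controls and bans can be held as a typed preset and rendered per subject. The field names and the rendered wording below are hypothetical stand-ins for the JSON keys, which aren't reproduced here. Only the control concepts and the four bans come from the post:

```python
from dataclasses import dataclass, field


@dataclass
class StippleMotionPreset:
    """Hypothetical typed stand-in for the stipple + motion-smear JSON spec."""
    trail_direction: str = "horizontal"            # direction of dot trails
    double_exposure: str = "embedded not collage"  # how the 2nd layer blends
    bans: list[str] = field(default_factory=lambda: [
        "photorealism", "lens blur", "color", "new elements"])

    def render(self, subject: str) -> str:
        """Render the preset as one prompt string for a single subject."""
        positive = ", ".join([
            f"monochrome stipple illustration of {subject}",
            "shading built from dot density",
            f"illustrated motion smear as {self.trail_direction} dot trails",
            f"subtle double exposure, {self.double_exposure}",
        ])
        return f"{positive}. Avoid: {', '.join(self.bans)}"


print(StippleMotionPreset().render("a ski jumper"))
```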

Grok Imagine “showreel in 10 minutes” prompt templates for rapid-cut edits

Grok Imagine (prompt format): A set of “showreel template” prompts is being shared to force aggressive pacing—hard jump cuts every 0.5–1s, alternating macro and epic scale, with explicit bans like “No text or logo,” as outlined in the Showreel prompt share and the Acid strobe template.

Two copy-pasteable prompt headers from the thread:

The “Ultimate commercial” version is posted in full as the Second template text, which makes it easier to adapt for brand reels without accidentally generating title cards.
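The pacing rule (a hard cut every 0.5–1s, alternating macro and epic scale) can be turned into a shot list before prompting, which shows how many shots a target length needs. The sketch below assumes uniformly random shot lengths in that range. The function and its parameters are hypothetical:

```python
import random


def cut_plan(total_s: float, lo: float = 0.5, hi: float = 1.0, seed: int = 0):
    """Split total_s seconds into shots with a hard cut every lo–hi seconds.

    Shot scale alternates macro/epic. The final shot is clipped to total_s,
    so it may be shorter than lo.
    """
    rng = random.Random(seed)  # seeded so the plan is reproducible
    scales = ("macro", "epic")
    plan, t, i = [], 0.0, 0
    while t < total_s:
        end = min(t + rng.uniform(lo, hi), total_s)
        plan.append((t, end, scales[i % 2]))
        t, i = end, i + 1
    return plan


for start, end, scale in cut_plan(5.0):
    print(f"{start:5.2f}-{end:5.2f}s  {scale}")
```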

Midjourney cyberpunk preset: --sref 8036686603 with --stylize 600

Midjourney (SREF preset): A reusable cyberpunk “look anchor” is being shared as a short, repeatable combo—--sref 8036686603 --stylize 600—meant to keep materials and lighting coherent across a set, as shown in the Cyberpunk sref samples and referenced again in the Organized macro set.

The sample set leans hard into reflective chrome surfaces, jewelry, red accent lighting, and close-up product/prop framing—useful if you’re building a consistent campaign moodboard rather than one-off images, per the Cyberpunk sref samples.
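These SREF presets are parameter bundles appended to a prompt, so a small builder keeps them consistent across a set. `mj_prompt` is a hypothetical helper, not a Midjourney API. It reproduces the bundles quoted in this section, including multi-value flags like the dual `--sref` in the locket recipe. A `True` value emits the token exactly as written (e.g. `--sv6`) and makes no claim about that flag's syntax. The subject lines in the tests are placeholders:

```python
def mj_prompt(subject: str, **params) -> str:
    """Append Midjourney-style --flags to a subject line.

    A value may be a single item, a list/tuple (space-joined, e.g. two
    SREF codes), or True to emit the bare token --<name>.
    Keyword order is preserved, so flags appear in the order given.
    """
    parts = [subject]
    for name, value in params.items():
        if value is True:
            parts.append(f"--{name}")
        elif isinstance(value, (list, tuple)):
            parts.append(f"--{name} " + " ".join(str(v) for v in value))
        else:
            parts.append(f"--{name} {value}")
    return " ".join(parts)


print(mj_prompt("chrome rings, red accent light", sref=8036686603, stylize=600))
```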

Midjourney SREF 2583858235 trends for “luminous urban comic” lighting

PromptsRef × Midjourney (SREF trend): PromptsRef’s trend post pegs --sref 2583858235 as its top style pick for Feb 8, pairing it with --niji 7 --sv6 and describing a “Luminous Urban Comic Style” built around rim light/bloom plus hard-edged cel shadows, per the SREF trend breakdown.

• What to copy: the exact parameter bundle (--sref 2583858235 --niji 7 --sv6) and the lighting keywords (backlighting, rim light, bloom), as written in the SREF trend breakdown.

• What it’s being positioned for: character key art, fashion-leaning portraits, and “midnight city” scenes (city pop / art deco framing), also in the SREF trend breakdown.

Midjourney dual-SREF recipe for minimalist “tattoo icon” key-and-locket sets

Midjourney (prompt drop): A minimalist “tattoo icon” style prompt is being shared for a floating heart-shaped locket + ornate skeleton key, using a dual SREF combo with concrete params—--chaos 30 --ar 4:5 --exp 100 --sref 4011871094 887867692—as posted in the Dual SREF locket prompt.

The attached grid shows the motif holds across variants (open locket, key inside, simplified backgrounds), which is useful for generating cohesive carousel sets from one line, per the Dual SREF locket prompt.

Midjourney SREF 2917660624: “liquid crystal” refraction look for fashion ads

PromptsRef × Midjourney (SREF code): Another PromptsRef breakdown highlights --sref 2917660624 as a “liquid crystal” look—surreal fluid aesthetics with high-saturation orange/blue contrast and refracted-light cues—positioned for luxury brand concepts and cosmetics, per the SREF pitch.

The longer-form breakdown (including suggested keywords like “Fluid dynamics” and “Refracted light”) is linked in the SREF detail page. Because no example grid is attached in the tweets shown, the actionable artifact here is the code + keyword framing.

Midjourney SREF 821961689 gets pitched as a watercolor illustration shortcut

Midjourney (SREF code): PromptsRef is pitching --sref 821961689 (tested with Niji 6) as a warm watercolor illustration look for children’s-book art and cozy background plates, according to the Ghibli vibes claim, with expanded style notes in the SREF detail page.

A compact usage line consistent with the writeup:

No example images are attached in the tweets shown, so the only concrete artifact here is the code + descriptive guidance in the SREF detail page.

PromptsRef markets “New Oriental Fantasy” Midjourney SREF prompt logic

PromptsRef × Midjourney (style code): A “New Oriental Fantasy” style pitch frames one SREF as a hybrid of CLAMP-like character delicacy, Art Nouveau linework, and Ukiyo-e composition—optimized for covers/TCG/merch—per the New Oriental Fantasy pitch, with the underlying code analysis hosted in the SREF analysis page.

A minimal copy-paste scaffold aligned to the description in the New Oriental Fantasy pitch:

The tweets focus on positioning and aesthetics rather than showing a reference grid, so treat the “what it looks like” as unverified here beyond the written breakdown in the SREF analysis page.

🧩 Production recipes & agent workflows (multi-tool stacks, research bots, and “knowledge that updates itself”)

Creators share practical pipeline thinking: repeatable multi-tool stacks for shorts and brand content, plus agent workflows for research, scraping, and turning community chatter into reusable knowledge.

Freepik fight-video workflow: Nano Banana Pro grids to Kling loops, finished with speed ramps

Freepik workflow: A repeatable “fight sequence in under an hour” recipe chains Nano Banana Pro for generating character/frame grids, a frame-picking prompt (“extract row X column Y”), and Kling start/end-frame looping, then finishes by lining clips up with speed ramps, as shown in the Fight video walkthrough and finalized in the Speed ramp assembly.

• Frame selection trick: The key mechanic is treating a 3×3 grid as a deterministic frame bank (row/column extraction) rather than rerolling full videos, per the Fight video walkthrough.

• Continuity construction: The sequence is built from multiple short loops using start/end frames, then “stitched” in editing with speed ramps as described in the Speed ramp assembly.
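Treating the 3×3 grid as a frame bank means "row X column Y" maps to a fixed crop. The sketch below assumes evenly sized cells with no gutters and 1-indexed rows and columns, as in the prompt wording. In the workflow itself, extraction is done by prompting Nano Banana Pro, so this is only a local stand-in for checking or pre-cutting frames. `cell_box` is a hypothetical helper:

```python
def cell_box(width: int, height: int, row: int, col: int, n: int = 3):
    """Return (left, top, right, bottom) pixels for 1-indexed cell (row, col)."""
    if not (1 <= row <= n and 1 <= col <= n):
        raise ValueError("row/col out of range")
    cw, ch = width // n, height // n  # assumes equal cells, no gutters
    left, top = (col - 1) * cw, (row - 1) * ch
    return (left, top, left + cw, top + ch)


print(cell_box(1536, 1536, row=2, col=3))
```

The 4-tuple matches the box format that Pillow's `Image.crop` expects, so `img.crop(cell_box(img.width, img.height, 2, 3))` would cut that cell. Picking two cells this way gives a start/end frame pair for a Kling loop.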

A full short-form pipeline card: Midjourney + Nano Banana Pro → Kling 3.0 → ElevenLabs → Splice/Lightroom

Multi-tool workflow: A creator posted a concise “stack card” that spells out an end-to-end pipeline—Midjourney + Nano Banana Pro for images, Kling 3.0 MultiShots for video, ElevenLabs for music/voice, and Splice + Lightroom for the final edit, as documented in the Tool stack recipe.

• Why this matters: It’s a rare one-line breakdown that maps roles cleanly (concept art → motion → audio → finishing) and makes it easier to standardize team handoffs using named tools rather than vague “AI did it” descriptions, per the Tool stack recipe.

Spine agents run a one-prompt YouTube analysis pipeline end-to-end

Spine agents: A demo claims a single prompt can trigger a full analysis loop over “every MrBeast video”: agents scrape multiple videos, extract transcripts, run statistical analysis, generate charts, and present findings, as described in the Agent analysis claim, with a “try it” entry point shared in the Try it link.

• What’s distinct: The workflow is framed as multi-step automation (collection → transforms → visualization → reporting) rather than a chat summary, per the Agent analysis claim.

• Surface: Access is routed via a hosted entry point, as linked in the Try it link.
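The claimed loop (collect → transform → analyze → report) is a standard staged pipeline. The post doesn't describe Spine's implementation or data sources, so every function below is hypothetical. Toy, hard-coded strings stand in for scraped transcripts:

```python
import statistics


def collect():
    """Hypothetical stand-in for scraping: video id -> transcript text."""
    return {
        "video_1": "alpha beta gamma",
        "video_2": "alpha beta",
        "video_3": "alpha beta gamma delta",
    }


def transform(transcripts):
    """Derive one feature per video: transcript length in words."""
    return {vid: len(text.split()) for vid, text in transcripts.items()}


def analyze(word_counts):
    """Summary statistics across videos (sample standard deviation)."""
    values = list(word_counts.values())
    return {"mean_words": statistics.mean(values),
            "stdev_words": statistics.stdev(values)}


def report(stats):
    """Plain-text report; a charting step (e.g. matplotlib) would slot in here."""
    return "\n".join(f"{name}: {value:.2f}" for name, value in stats.items())


summary = analyze(transform(collect()))
print(report(summary))
```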

Freepik’s Runway 4.5 messaging shifts from clip generation to story workflow

Freepik (Runway 4.5): Freepik’s post positions the differentiator as “creative vision” and story structure rather than access to the same tool—explicitly saying “Stop generating clips. Start telling stories,” while showing a character exploration workflow that builds reference boards for consistency, per the Story-first positioning and the follow-on Character exploration step.

• Workflow emphasis: The explicit claim is that character consistency needs deliberate reference gathering (“multiple poses and angles”) before scene building, per the Character exploration step.

OpenClaw vision: auto-classify community chats into a self-updating knowledge base

OpenClaw (workflow concept): A proposed workflow for a private builder community describes piping “crazy chatter” from multiple channels into an auto-classified, self-updating knowledge platform, then having OpenClaw bots retrieve relevant context so members don’t need to stay chronically online, as outlined in the Knowledge platform vision.

• Agent loop: The loop is “ingest → classify → store → retrieve into agents,” with the explicit aim that bots get smarter over time by pulling from the platform, according to the Knowledge platform vision.

• Access control as a feature: The pitch frames a gated community as safer for this feedback loop because it’s “not open to everybody,” per the Knowledge platform vision.
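The "ingest → classify → store → retrieve" loop can be prototyped with keyword rules and an in-memory index. The topic labels and keywords below are hypothetical. A real setup would likely swap the keyword classifier for a model and the dict for a database. Nothing here reflects OpenClaw's actual APIs:

```python
from collections import defaultdict

# Hypothetical topic -> trigger keywords; a real system might use a model.
TOPICS = {
    "video": {"kling", "seedance", "runway"},
    "prompts": {"sref", "prompt", "json"},
}


class KnowledgeBase:
    """In-memory ingest -> classify -> store -> retrieve loop."""

    def __init__(self):
        self.by_topic = defaultdict(list)

    def classify(self, message: str) -> list[str]:
        """Tag a message with every topic whose keywords it mentions."""
        words = {w.strip(".,!?:;") for w in message.lower().split()}
        topics = [t for t, keywords in TOPICS.items() if words & keywords]
        return topics or ["misc"]

    def ingest(self, message: str) -> None:
        """Classify a chat message and store it under each matching topic."""
        for topic in self.classify(message):
            self.by_topic[topic].append(message)

    def retrieve(self, topic: str, limit: int = 5) -> list[str]:
        """Newest messages first, so agents see the freshest context."""
        return self.by_topic.get(topic, [])[::-1][:limit]
```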

A physical AI studio hub opens in El Salvador, pitched as a creator residency

AI studio-as-workflow: A creator announced setting up a “real life AI Studio” in El Salvador (Bitcoin Beach), framing it as a place to collaborate with brands, countries, filmmakers, and authors, with plans to livestream the buildout, as stated in the Studio announcement and extended with a traditional-art crossover note in the Traditional plus AI tease.

• Operational angle: The pitch is less “tool access” and more shared production environment—hosting creators on-site and spotlighting “the humans behind AI,” per the Studio announcement.

Specialized tech-support agents for seniors get framed as a big service niche

Agent service thesis: A thread argues that as tech changes faster, elderly users will need more support than relatives can provide, creating room for specialized, multimodal agents “hyper tuned to the older generation,” as described in the Elder support agent pitch, building on the broader “senior tech support” claim in the Billionaire thesis.

• What’s concrete: The proposed product shape is “automated, specialized agents” with a focus on reliability and demographic-specific UX, per the Elder support agent pitch.

📣 AI ads & UGC factories (near-$0 production, fake interviews, and repeatable TikTok formats)

Marketing-focused posts concentrate on repeatable ad systems: synthetic street interviews, podcast clips, localization at scale, and “one influencer / many products” TikTok shop engines.

Calico AI gets pitched as a near-$0 UGC ad factory (street interviews to unboxings)

Calico AI (Calico): A marketer claims an operator is running fully synthetic “AI street interviews” as paid ads at 4.5× ROAS while spending $0 on creators and $0 on production. The AI ad tactics thread expands this into 11 repeatable AI video ad formats, and the Tool link follow-up names Calico as the generator, linking to its Product page.

• Formats called out: The list spans fake-but-believable street interviews, “podcast” clips, food content “with zero kitchen,” try-on without models, “shock hooks,” product commercials, real estate transformations, and unboxing variants, as enumerated in the AI ad tactics thread.

• Distribution mechanics: The post is packaged as a “comment to get the PDF/prompts” lead magnet, with the tool named explicitly in the Tool link follow-up.
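ROAS is attributed revenue divided by ad spend, so the claimed 4.5× means about $4.50 of revenue per $1 of ads. The sketch below shows the arithmetic, using a hypothetical $1,000 spend. ROAS ignores product and fulfillment costs, so "$0 production" doesn't by itself imply profit:

```python
def roas(revenue: float, ad_spend: float) -> float:
    """Return on ad spend: attributed revenue per unit of ad spend."""
    if ad_spend <= 0:
        raise ValueError("ad_spend must be positive")
    return revenue / ad_spend


def revenue_at(target_roas: float, ad_spend: float) -> float:
    """Revenue implied by a ROAS figure at a given spend."""
    return target_roas * ad_spend


implied = revenue_at(4.5, 1_000)  # hypothetical $1,000 spend
print(implied, roas(implied, 1_000))
```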

TikTok Shop pages are scaling with “one face, many products” AI-swapped UGC

TikTok Shop UGC factory: Following up on AI influencer farms (parallel “AI influencer” account farms), one thread describes a repeatable TikTok Shop format where the same face, same room, and same relaxed demo pacing stay constant while AI swaps the product being shown, enabling daily uploads across “dozens of offers,” per the TikTok shop engine description and its amplified version in the Format expansion.

• Scale claim: The author frames early adoption in tier‑1 markets as a path to $300k–$500k/month, explicitly tied to repeatability and removing “creator management,” as stated in the TikTok shop engine description.

Super Bowl ad chatter shifts to “most commercials are about AI or made with AI”

Super Bowl ad saturation signal: A reposted observation claims that most Super Bowl commercials are now either about AI or made with AI, reinforcing the mainstreaming narrative from earlier Super Bowl-week discourse, as captured in the AI ads saturation claim.

A simple theory for mediocre Super Bowl ads: LLMs praising every idea

Creative-direction critique: One hypothesis floating in Super Bowl-week ad talk is that LLMs are “telling everyone their ideas are brilliant,” which could inflate weak concepts into final spots, per the LLM praise hypothesis.

A “mood-first” AI product ad gets read as an experiment in trust

Mood-first ad concept: A creator reaction highlights an “AI earbuds” concept that has no screen, no buttons, maybe not even a switch, and says it “barely feels like an ad,” framing it instead as a small experiment in trust (you talk to them and hope they’re listening), per the Reaction to earbuds concept.

🖼️ Image formats that earn engagement (Hidden Objects / AI‑SPY) + what makes them work

Adobe Firefly puzzle formats dominate the image-side “what to post” playbook today—plus new documentation on the specific prompt phrases that improve object blending instead of obvious placement.

Hidden Objects prompt research: three phrases that make “object blending” work

Hidden Objects prompt craft: After generating 120+ puzzle images across 15 subjects and 9 prompt variations, Glenn reports he found “the three phrases that carry almost all the object-blending behavior” in Hidden Pictures-style generations, per the research write-up linked in Prompt research article and the shorter recap in Three phrases claim.

The three phrases called out in the article summary are: “seamlessly blended,” “each object shares its outline with a scene element,” and “NO placing objects in open empty space,” as quoted in the Prompt research article.
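The write-up says those three phrases carry most of the blending behavior, so you can append them programmatically and check each draft for them to keep them from getting lost while iterating on scenes. The scene text and joining format below are hypothetical. Only the three phrases come from the article:

```python
BLENDING_PHRASES = (
    "seamlessly blended",
    "each object shares its outline with a scene element",
    "NO placing objects in open empty space",
)


def hidden_objects_prompt(scene: str, objects: list[str]) -> str:
    """Compose a Hidden Pictures-style prompt that always carries the 3 phrases."""
    hidden = ", ".join(objects)
    return f"{scene}, with hidden objects ({hidden}) " + "; ".join(BLENDING_PHRASES)


def missing_phrases(prompt: str) -> list[str]:
    """List any of the three blending phrases absent from a prompt."""
    return [p for p in BLENDING_PHRASES if p not in prompt]


print(hidden_objects_prompt("line-art forest scene", ["key", "spoon", "bell"]))
```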

Firefly Hidden Objects Level .006 lands as a repeatable “find 5” post template

Adobe Firefly Hidden Objects (Adobe): Following up on Format scaling (Firefly “Hidden Objects” as a template), Glenn posts a new “find 5 hidden objects” board as Hidden Objects | Level .006, keeping the same recognizable layout (main scene + object strip) and explicitly crediting Firefly as the generator in Level .006 prompt card.

The series also adds Level .005 with a high-density line-art peacock scene in Level .005 peacock, showing the format still works across very different visual complexity (rooted tree vs patterned feather fan).

Firefly AI‑SPY Level .012 uses explicit object counts to drive replies

Adobe Firefly AI‑SPY (Adobe): Building on AI‑SPY format (object list + answer-key framing), AI‑SPY | Level .012 shifts to a cluttered bedroom scene and calls out exact quantities to find—“1 Pink Flamingo,” “2 Red Dice,” “1 Yellow Rubber Duck,” “2 Goldfish in Bowls,” “1 Silver Pocket Watch”—as shown in Bedroom object list.

Firefly AI‑SPY Level .014 adds multi-count targets (2 emeralds, 3 rings, etc.)

Adobe Firefly AI‑SPY (Adobe): AI‑SPY | Level .014 leans into “counts > singletons” by requiring multiple instances (2 emeralds, 3 rings, 2 wax seals) plus one-off items (1 compass, 1 feather), all presented in a clear target list beneath the scene in Pirate desk targets.
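Explicit-count target lists are also easy to render and sanity-check, for example by totaling how many items a player must find. The sketch below uses the five Level .012 targets quoted above. The "Find:" caption format and helper name are hypothetical:

```python
def target_caption(targets: dict[str, int]) -> str:
    """Render an AI-SPY style target list such as '2 Red Dice'."""
    return "Find: " + ", ".join(f"{n} {name}" for name, n in targets.items())


level_012 = {
    "Pink Flamingo": 1,
    "Red Dice": 2,
    "Yellow Rubber Duck": 1,
    "Goldfish in Bowls": 2,
    "Silver Pocket Watch": 1,
}
total = sum(level_012.values())  # items to find in total
print(target_caption(level_012), f"({total} items)")
```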

The Render launches as a public archive for Firefly puzzle experiments

The Render (newsletter): Following up on Beehiiv archive (moving process notes into longer-form posts), Glenn says he launched The Render to ship “every prompt shared” research and keep puzzle/process iteration in one place, as described in Article + newsletter launch and linked via The Render homepage.

He frames the next beat as continued format R&D—“new style experiments and how the algorithm handles puzzle content”—as written in Next experiments note.

🧊 3D + motion capture pipelines (museum digital twins, camera capture → Maya, and creator-facing 3D tools)

3D and motion posts today are about practical production: image-to-3D cultural heritage replicas, and translating phone-captured handheld camera motion into Maya for animation.

Meshy shows a museum workflow from photos to 3D twins, prints, and AR

Meshy (MeshyAI): A published case study describes the Lanting Calligraphy Museum (Zhejiang, China) using Meshy Image to 3D to turn photos of fragile relics into high-fidelity digital twins, then using those assets for 3D-printed display replicas and AR glasses viewing with contextual descriptions, as outlined in the museum case study.

• Practical production angle: This frames image-to-3D less as “concept sculpting” and more as a full museum pipeline (capture → digitize → print → exhibit) that uses the same core asset in multiple outputs, per the examples in the museum case study.

• What’s concrete vs implied: The tweets show UI and object examples plus the stated downstream uses (print + AR), but the public post doesn’t specify the scanning setup, target polygon counts, or file formats, as seen in the museum case study.

Autodesk Flow Studio demonstrates phone handheld capture to Maya camera motion

Flow Studio (Autodesk): Autodesk shared a workflow demo showing handheld camera movement captured on a phone being translated into animation-ready camera motion inside Maya, with Harvey Newman demonstrating the capture-to-DCC handoff in the phone to Maya demo.

• Why it matters for AI+3D creators: This is a clean bridge between real-world cinematography “feel” and synthetic/CG scenes—camera motion becomes an asset you can reuse across AI-assisted lookdev, previs, or character shots, as shown in phone to Maya demo.

• What’s still not specified: The post emphasizes the transfer and resulting camera paths, but doesn’t enumerate supported phone types, lens metadata handling, or export formats in the tweet itself, per phone to Maya demo.

🎵 AI music + sound design as the glue (Suno remixes, studio updates, music-video workflows)

Audio posts are fewer but practical: creators show real‑recorded vocals remixed in Suno, soundtrack glue for AI visuals, and calls for music-video-friendly contests/platforms.

Dor Brothers combine real vocals, Suno remixing, DorLabs visuals, and Kling animation

Dor Brothers / Dorphism (workflow): A concrete “audio-first” music-video stack is shown where the song is recorded in real life, then remixed with Suno, while the visuals are generated in DorLabs.ai and animated in Kling—a clean division of labor for musicians who want AI visuals without faking the core performance, as described in Toolchain credits post.

• Pipeline clarity: The post explicitly names each stage (visual generation → animation → music remix), which makes it easier to swap in alternate video tools without changing the audio backbone, per Toolchain credits post.

• Source surface: The visuals originate from Dor Brothers’ own platform, linked in the DorLabs site, rather than being framed as “just prompts,” which is useful context if you’re tracking where teams are productizing full creative workflows.

Grok Imagine clips stitched into a full music video concept

Grok Imagine (xAI) + MV assembly: A full “comfy” music video concept—"I love Big Teddy"—is posted as an edit built from multiple Grok Imagine clips, with an explicit suggestion that XCreators could host a dedicated music-video contest format, as stated in Music video post.

The post is notable because it frames Grok Imagine outputs as shot inventory you can sequence into a complete MV, rather than a single hero clip, per Music video post.

Creature short stack credits Suno as the music layer alongside Kling animation

Suno (music layer) in an AI creature short: A creature/predator sequence is shared with a clear tool credit stack—Midjourney for 2D, Freepik / Nano Banana 2 for 3D, Kling 2.6 for animation and some sound, and Suno for music—showing how Suno is being treated as the finishing “glue” even when the visual pipeline spans multiple generators, per Tool credits list.

The post doesn’t include prompt text or Suno settings, but it’s a useful reference for how creators are publicly “stitching” audio responsibilities between Kling (some sound) and Suno (music), per Tool credits list.

Runway festival format critique: music videos still sidelined in AI film culture

AI festivals and distribution (format signal): A creator argues Runway’s AI “film” festival expanded into categories like design/new media but still enforces a linear video story framing, with “no mention of music videos,” and connects that to a broader lack of mainstream homes for MVs (plus a reminder that X still tops out at HD rather than the promised 4K), as written in Festival critique.

This is less about Runway’s generation quality and more about where AI-native music storytelling is (and isn’t) being legitimized and showcased, per Festival critique.

Suno Studio updates get a fresh callout from creators

Suno Studio (Suno): A creator calls out “amazing new updates” to Suno Studio in Suno Studio updates note, without naming features or shipping notes; the practical takeaway is just that active users are noticing iteration on the studio surface itself (as opposed to only model drops).

No changelog, pricing change, or demo artifact is included in the tweet, so treat it as a heads-up signal rather than a specable feature update.

🧑‍💻 AI coding agents get more parallel + more productized (SWE-bench wins, worktrees, and $0 tooling)

Coding posts concentrate on agent architecture and benchmarks (parallel worktrees, verification loops) plus creator-friendly low-cost setups for running agents and models.

Verdent AI claims 76.1% SWE-bench Verified with parallel worktree agents

Verdent AI: A new coding-agent product called Verdent is being promoted as beating Cursor/Devin/Windsurf on SWE-bench Verified with a 76.1% resolution rate, while running multiple coding agents in parallel inside isolated git worktrees to avoid collisions, as described in the SWE-bench claim thread. The figure is vendor-promoted; no third-party eval artifact accompanies it in the material shared.

The pitch emphasizes a multi-agent orchestration loop rather than single-thread “assign → wait → review” coding, with the same thread outlining parallel task lanes (auth, payments, refactors, tests, docs) and isolation mechanics in separate worktrees in SWE-bench claim. For more context on how it’s packaged/positioned, the linked Product page frames Verdent as a workflow layer (plan, verify, review) rather than autocomplete.

Parallel agent coding with isolated git worktrees to prevent collisions

Parallel agent workflow pattern: Multiple posts converge on the same practical architecture—run several AI coding agents at once, but isolate each agent’s changes in separate git worktrees so you can pursue multiple features/bugfixes concurrently without merge conflicts or "agent collisions," as explained through Verdent’s setup in Parallel worktree pattern.

The concrete template being shared is “one agent per lane” (auth, payments, refactor, tests, docs), with isolation doing the heavy lifting so review/merge becomes a human-controlled step rather than a coordination failure, per the example task split in Parallel worktree pattern.
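The “one agent per lane” template maps onto stock git commands. Here is a minimal sketch, assuming Python and plain `git worktree add`; the `Lane` type, the `agent/` branch prefix, and the `../wt` directory layout are hypothetical choices for illustration, not Verdent’s implementation. It only builds the command lines, so you can inspect them before running anything.

```python
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class Lane:
    name: str  # lane label, e.g. "auth" or "payments" (hypothetical)
    task: str  # brief handed to the agent working in this lane


def worktree_commands(lanes, base="main", root="../wt"):
    """One `git worktree add -b <branch> <path> <base>` per lane.

    Every lane gets its own branch and its own checkout directory, so
    parallel agents never edit the same working tree.
    """
    names = [lane.name for lane in lanes]
    if len(names) != len(set(names)):
        raise ValueError("lane names must be unique (they become branch names)")
    return [
        ["git", "worktree", "add", "-b", f"agent/{lane.name}", f"{root}/{lane.name}", base]
        for lane in lanes
    ]


def review_commands(lanes, base="main"):
    """Human-controlled step: show what each lane changed since it branched."""
    return [["git", "diff", f"{base}...agent/{lane.name}"] for lane in lanes]


if __name__ == "__main__":
    lanes = [Lane("auth", "add OAuth login"), Lane("tests", "cover checkout flow")]
    for cmd in worktree_commands(lanes) + review_commands(lanes):
        print(shlex.join(cmd))
```

Each agent is then launched inside its own directory; when a lane is merged or abandoned, `git worktree remove <path>` deletes that checkout without touching the others.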

Plan-Verify-Code loops are replacing single-shot codegen for agentic dev

Agentic coding loop pattern: A recurring recipe in coding-agent tooling is a three-stage loop—plan (turn a fuzzy request into a structured implementation plan), auto-verify (run tests/dev server and iterate on fixes), then diff-based review (human approves highlighted changes), as described in Verdent’s workflow breakdown in Plan-Verify-Code positioning and expanded with step detail in Auto-verify and review steps.

The key shift is treating tests and execution as part of the generation loop (not a separate manual phase), with “show diffs before merging” framed as the control point, per Auto-verify and review steps.
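The three stages can be sketched as a small control loop. This is a minimal illustration, assuming Python; `plan`, `generate`, and `verify` are hypothetical callables you would back with a model call and a test runner, not Verdent’s API. Test output is fed back into the next generation attempt, the loop stops at a pass or an attempt cap, and the unified diff is the artifact a human approves.

```python
from __future__ import annotations

import difflib
from dataclasses import dataclass
from typing import Callable


@dataclass
class LoopResult:
    code: str      # final candidate source
    attempts: int  # how many generate/verify rounds ran
    passed: bool   # did the last verify() call succeed?
    diff: str      # unified diff shown to the human reviewer


def plan_verify_code(
    request: str,
    original: str,
    plan: Callable[[str], list[str]],
    generate: Callable[[list[str], str, str], str],
    verify: Callable[[str], tuple[bool, str]],
    max_attempts: int = 3,
) -> LoopResult:
    steps = plan(request)  # 1. Plan: fuzzy request -> ordered implementation steps
    code, feedback, passed, attempts = original, "", False, 0
    for attempts in range(1, max_attempts + 1):
        # 2. Code: generate against the plan, seeing the last failure output
        code = generate(steps, original, feedback)
        # 3. Verify: run tests / dev server; feedback feeds the next attempt
        passed, feedback = verify(code)
        if passed:
            break
    # 4. Review: nothing merges until a human approves this diff
    diff = "".join(
        difflib.unified_diff(
            original.splitlines(keepends=True),
            code.splitlines(keepends=True),
            fromfile="before",
            tofile="after",
        )
    )
    return LoopResult(code, attempts, passed, diff)
```

Returning the diff even when `passed` is False is a deliberate choice in this sketch: the reviewer still sees what the agent tried before deciding whether to intervene.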

Specialized tech-support agents for seniors get framed as a big company wedge

Tech-support agents as a business wedge: A cluster of posts argues that the highest-leverage “agent company” might not be generic automation, but senior/elder-focused tech support—specialized, multimodal agents tuned for older users and set up as an always-on service, as stated in Billionaire thesis and elaborated with the elderly-specific framing in Elderly support expansion.

The discussion frames this as demand driven by accelerating software change and the inability of friends/family to provide constant support, with the emphasis on “just works” reliability and specialization rather than raw model novelty, per Elderly support expansion.

🤖 Always-on agents & “no-setup” deployment (OpenClaw wrappers, Team workspaces, and managed hosting)

A large cluster focuses on making agents usable for creatives/teams: managed OpenClaw hosting, 1‑click org agent workspaces, and minimalist wrappers to reduce setup friction.

MyClaw puts OpenClaw on managed hosting with persistent memory and 24/7 uptime

MyClaw (OpenClaw): A managed hosting layer for OpenClaw is being pitched as “one click” deployment with 24/7 uptime and “memory intact” operation, explicitly positioned as removing Docker/server setup friction in the MyClaw intro and the Managed cloud claim. The Product page lists early pricing starting at $9/month (Lite) and $19/month (Pro), alongside features like auto-updates, encryption, and backups. The same thread frames the prior setup experience as “6+ hours” of dependency and maintenance work, per the Setup pain list, and claims 1,200+ people on a waitlist in the Waitlist note.

What’s still unclear from the tweets is how much model choice, tool permissions, and isolation controls MyClaw exposes for teams versus solo creators.

Team9 open-sources workspace-based OpenClaw deployment for org agents

Team9 (OpenClaw): Team9 is promoting an open-source, workspace-based path to deploy organization-specific OpenClaw agents “in the time it takes to read this tweet,” including the claim you can deploy 100+ agents, as described in the Deploy 100+ agents thread and reinforced by the Open-source pitch.

• Setup flow: The thread collapses deployment into “create workspace → deploy Clawdbot → done,” per the Three-step setup.

• Built-in capabilities: Claimed features include X API access, web crawling/page extraction, sitemap analysis, and “Gemini Nanobanana image generation,” as listed in the Capabilities list.

• Source availability: The codebase is pointed to via the GitHub org, with messaging focused on avoiding vendor lock-in.

The tweets don’t include pricing or hosting requirements, so “infrastructure-free” should be read as a product goal rather than a spec sheet.

OpenRouter’s Pony Alpha gets pitched as a free 200k-context default for OpenClaw

Pony Alpha (OpenRouter model): A “Pony Alpha” model on OpenRouter is being promoted as $0 input/output tokens with a 200,000 context window, and is suggested as an OpenClaw default for low-cost agent work, as shown in the Free model claim.

The screenshot also flags that “all prompts and completions…are logged by the provider,” which matters for creators routing client work or proprietary scripts through an always-on agent, per the Free model claim.
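For anyone wiring this into an agent, the two constraints in the screenshot (200k context, provider-side logging) can be enforced before a request ever leaves the machine. A minimal sketch, assuming Python’s standard library and OpenRouter’s OpenAI-compatible chat completions endpoint; the model slug `openrouter/pony-alpha` is an assumption (check the model page), and the 4-characters-per-token estimate is a rough heuristic, not the model’s tokenizer.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
CONTEXT_WINDOW = 200_000  # advertised context window, per the screenshot
CHARS_PER_TOKEN = 4       # rough heuristic, NOT the model's real tokenizer


def build_request(prompt, api_key, model="openrouter/pony-alpha",  # slug is an assumption
                  contains_client_data=False, reserve_for_output=8_000):
    """Build (but do not send) a chat completion request with two guards."""
    if contains_client_data:
        # The provider logs prompts and completions, so keep client work off this route.
        raise PermissionError("provider logs prompts/completions; route elsewhere")
    est_tokens = len(prompt) // CHARS_PER_TOKEN + 1
    if est_tokens + reserve_for_output > CONTEXT_WINDOW:
        raise ValueError(f"~{est_tokens} prompt tokens leaves no room for output")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": reserve_for_output,
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending is a separate `urllib.request.urlopen(req)` call, which keeps the privacy check in front of any network traffic.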

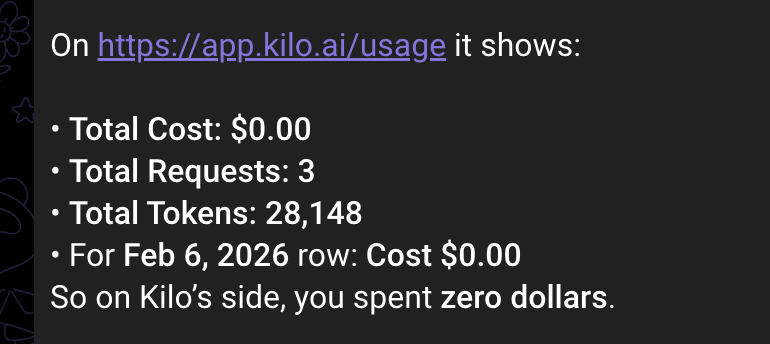

A near-$0 OpenClaw coding stack uses KiloCode CLI with free MiniMax M2.1

OpenClaw + KiloCode (workflow): One cost-minimization recipe describes powering OpenClaw with local or cheap models (Ollama on Mac; Haiku/Kimi on VPS; or a free OpenRouter option) and then using the KiloCode CLI “for coding” because it “lets you use MiniMax M2.1 for free,” per the Low-cost stack.

The shared usage screenshot shows $0.00 total cost for 28,148 tokens, presented as proof the routing works in practice, as shown in the Low-cost stack.
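The recipe is essentially a task-to-backend routing table. A minimal sketch, assuming Python; the backend labels and the VPS price are hypothetical placeholders (only the $0 coding route reflects the post), so substitute real provider pricing before trusting any estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    usd_per_million_tokens: float  # placeholder unless stated otherwise


# Hypothetical routing table mirroring the recipe's split of responsibilities.
ROUTES = {
    "chat_local": Backend("ollama (Mac, local)", 0.0),
    "chat_vps": Backend("haiku-or-kimi (VPS)", 1.0),              # placeholder, not a real price
    "coding": Backend("kilocode-cli + minimax-m2.1 (free)", 0.0),  # $0 per the post
}


def route(task, default="chat_local"):
    """Pick the backend for a task type, falling back to the local model."""
    return ROUTES.get(task, ROUTES[default])


def estimate_cost(task, tokens):
    """Dollar estimate for a token count on the routed backend, rounded to cents."""
    return round(tokens / 1_000_000 * route(task).usd_per_million_tokens, 2)
```

With a $0 coding route, `estimate_cost("coding", 28_148)` comes out to 0.0, which is the same outcome the screenshot reports for that token count.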

Clawbox 3 is positioned as purpose-built hardware for OpenClaw

Clawbox 3 (OpenClaw hardware): A new hardware box, Clawbox 3, is introduced as “the best hardware to run openclaw,” per the Hardware intro.

The post doesn’t include specs, pricing, or target workloads; what’s visible is a compact enclosure with front-panel status lights and ports, suggesting a small always-on node intended to reduce the friction of running an agent runtime locally.

Picoclaw compresses OpenClaw setup into a one-line shell alias

picoclaw (OpenClaw wrapper): A minimalist wrapper called picoclaw is presented as “the tiniest openclaw,” installed via a one-line command that aliases claude to picoclaw, as shown in the One-line install. The same author frames the proliferation of micro/wrapper variants as a reaction to perceived “bloat,” per the Wrappers bloat riff.

No details are provided on what functionality picoclaw keeps or drops (memory, tools, permissions), but the intent is clearly to reduce activation energy for day-to-day agent usage.

Genstore pitches an AI agent team for day-to-day dropshipping operations

Genstore (agent productization): A “Genstore AI Agent Team” is being pitched as handling “store setup,” “product management,” and “daily dropshipping workflows,” framing agents as packaged operations staff rather than a single assistant, as described in the Genstore agent pitch.

The tweet reads like positioning rather than a spec release (no tooling list, integrations, or pricing), but it reflects a continued shift toward turnkey, role-based agent bundles aimed at non-technical creators and operators.

🛡️ Creator trust shocks: suspensions, contest credibility, and alleged shot theft

The main trust/safety storyline is platform enforcement and creator-protection fallout: a major AI account suspension, skepticism around contests, and allegations of reworked/stolen footage being passed as AI.

X suspends @higgsfield_ai account as creator backlash escalates

X / Higgsfield AI: Multiple creators are sharing the same “Account suspended” screen for @higgsfield_ai, indicating an enforcement action by X, with no public detail on the specific rule in the screenshots shared today; the suspension is echoed across several posts including a Turkish-language notice in Suspension screenshot and broader reaction in Suspension follow-up and Profile suspension capture.

The immediate creative-industry impact is reputational and distributional: when a big AI video brand loses its primary channel, contests, launches, and attribution narratives get re-litigated in public threads, as shown by the follow-on contest skepticism and IP accusations in Contest comment thread and IP theft allegation.

Creator accuses Higgsfield of retexturing ‘Jumper’ shots and calling it AI

Higgsfield AI (IP allegation): A comment screenshot circulating today shows a filmmaker/creator alleging Higgsfield “stole shots from my film Jumper, reworked the textures, and are now passing it off as AI-generated content,” with other commenters amplifying the callout in Jumper allegation screenshot.

This remains an allegation in the material shared (no side-by-side proof in these tweets), but it’s a concrete example of how AI-video tool branding can collide with provenance expectations: accusations now show up directly inside contest/launch comment threads rather than only in subtweets, as seen in Jumper allegation screenshot.

Higgsfield $500k contest credibility questioned over early ratings and canned replies

Higgsfield AI (Instagram contest): Following up on Contest backlash (renewed legitimacy scrutiny), a screenshot of the “$500k Action Contest” comment section shows a user asking how—about 40 minutes after announcement—there were already 5 entries with ratings, and the official account replying “You got it! Check DMs,” which onlookers frame as bot-like or canned in Contest timing concern.

The signal for creators is about contest trust mechanics (foreknowledge, rating integrity, and real human moderation), not the prize headline—especially when the response pattern itself becomes part of the evidence trail, as discussed in Contest timing concern.

Post-suspension rhetoric shifts to creator power and accountability

Creator accountability framing: In the wake of the suspension chatter, some creators are explicitly framing the moment as leverage—“Companies operate at the will of the users” and “artists are slowly crawling back their power”—as stated in Teachable moment post, alongside celebratory reactions like “Today is a victory for decency” in Victory claim.

A parallel thread reinforces “receipts-first” watchdog positioning—arguing patterns matter more than tone—laid out in Receipts framing. The common theme is less about one platform decision and more about public pressure as an enforcement multiplier.

Verification and account-recovery issues surface alongside enforcement drama

X account reliability (creator ops): Separate from the Higgsfield threads, creators are also flagging identity and continuity problems—one notes “Longest it has taken to get my checkmark back in months” in Checkmark delay note, while another encourages following an AI creator who was hacked and is “starting all over again” in Hacked creator restart.

These posts sit adjacent to the suspension discourse because they affect the same practical question for AI creatives: whether your distribution identity (handle + verification) is stable enough to be a dependable release channel, as reflected by the relief tone in Checkmark back post.

🏁 Notable drops & builds (shorts, art pieces, brand characters, and new studios)

A grab-bag of finished work and production milestones: cinematic art threads, mechanical/steampunk builds, brand character refreshes, and creator studios turning into physical spaces.

A physical “real life AI Studio” opens in El Salvador as a creator hub

AI Studio in El Salvador (starks_arq): A new “real life AI Studio” setup is announced in El Salvador (Bitcoin Beach), positioned as a place to work with brands/filmmakers/authors and to livestream the buildout journey, as described in the studio announcement.

• Direction of travel: The same account frames upcoming work as explicitly blending traditional art with AI, per the traditional art crossover post.

Bolt gets a character refresh, with a Nano Banana Pro to Kling 3.0 breakdown

Bolt character refresh (AllarHaltsonen): A new set of “extra swag” characters for Bolt is presented as both a finished reel and a step-by-step build: starting from a visual reference, generating multiple angles/variants with Nano Banana Pro, then animating via Kling 3.0 (including Multi-Shot), as shown in the character montage and the workflow steps.

• Prompt fragments worth archiving: The shared prompts include a “2025 rap style” fashion-direction spec (wardrobe/jewelry/location) and a second pass asking for “alternative shot” camera angles (macro, top-down, dutch angle), per the prompt examples.

• Toolchain detail: The build explicitly routes through InVideo for Nano Banana Pro generation, then uses Kling 3.0 for animation choices, according to the Nano Banana Pro step and the Kling animation step.

Erosion of Eternity drops as a four-still cinematic sequence with a full version link

Erosion of Eternity (awesome_visuals): A short, storyboard-like image set titled “Erosion of Eternity” spotlights a stark desert journey: cloaked silhouettes crossing a pale plain under looming eroded rock forms, including close-ups that read like weathered monumental faces, as shown in the thread opener and followed by the full version link.

• What creators can lift from it: The sequence leans on wide establishing scale + texture studies (striations, harsh side light, deep shadow) in a way that functions as quick reference for environment moodboards, per the image set.

Hidden Objects Level .005 posts a peacock puzzle built in Adobe Firefly

Hidden Objects Level .005 (Adobe Firefly): Following up on Puzzle metrics (measuring puzzle engagement), Glenn drops “Hidden Objects | Level .005,” a peacock illustration puzzle asking viewers to find five embedded objects, explicitly labeled as made in Adobe Firefly in the Level .005 post.

• Format cue: The layout includes an object strip of the five targets at the bottom, reinforcing the “post-as-game” packaging that keeps the series legible in-feed, as shown in the puzzle image.

COMUNIDAD becomes a Bionic Awards 2026 Best Pilot finalist, with a March 5 live slot

COMUNIDAD (Bionic Awards): Victor Bonafonte says COMUNIDAD was selected as a finalist in “Best Pilot” at the Bionic Awards 2026, with finalists presenting live in London at Rich Mix on March 5 and a limited number of public tickets available, according to the finalist announcement and the follow-up thanks post.

The Artificier shares a tactile steampunk mechanism build clip (macro assembly)

The Artificier (Gossip_Goblin): A vertical short titled “The Artificier” focuses on tactile, close-up assembly of ornate gears into a glowing steampunk-style mechanism, as shown in the build clip.

• Aesthetic note: The camera language is all macro details and fast hands-on construction beats, which maps cleanly to “practical prop / workshop montage” pacing used in a lot of AI-era short-form craft and VFX-adjacent reels, per the build clip.

📄 Research radar (quantization, self-evolving agents, and RL training fixes)

Light but relevant research feed today: mostly model training/efficiency papers and agent evaluation benchmarks—no major creative-model releases in this slice.

Kimi K2.5 lands on Fireworks AI with a “use cases over benchmarks” pitch

Kimi K2.5 (Fireworks AI): A distribution signal: Kimi K2.5 showing up on Fireworks AI is framed as pushing “frontier models with real-world use cases, not just benchmarks,” per the Fireworks AI post that spotlights the pairing.

The creator angle is mostly about optionality: more credible “production” endpoints for strong general models tend to broaden what teams will actually wire into pipelines (agents that outline scripts, rewrite dialogue variants, or generate metadata), especially when the default model choice is constrained by rate limits or cost elsewhere.

RaBiT proposes residual-hierarchy binarization to keep 2-bit LLMs accurate

RaBiT (paper): A new quantization-aware training (QAT) approach targets 2-bit and other extreme quantization without collapsing model quality. It argues that typical QAT suffers from “inter-path co-adaptation” among parallel residual binary paths and proposes a residual hierarchy in which each binary path corrects the previous one, as described in the paper thread and detailed in the ArXiv paper. The paper claims a 4.49× speed-up on its accuracy–efficiency frontier; treat that as provisional until you’ve checked the exact hardware and kernel assumptions in the paper.

For creative teams, the relevance is straightforward: better 2-bit behavior tends to mean cheaper local inference for long-running assistants (story ideation, editing helpers, asset tagging) where latency and GPU availability decide whether the tool is “always on” or “only sometimes.”
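To make “each binary path corrects the previous one” concrete, here is a minimal sketch of plain greedy residual binarization in Python: weights are approximated as W ≈ Σ_k α_k·B_k, where each B_k is a ±1 sign pattern fit to whatever error the earlier paths left behind. This is the generic post-hoc technique, shown for intuition only; RaBiT’s contribution is how the paths are trained together under QAT, which this sketch does not reproduce.

```python
def binarize(values):
    """One binary path: sign pattern B in {-1, +1} plus a scale alpha = mean|v|."""
    alpha = sum(abs(v) for v in values) / len(values)
    signs = [1.0 if v >= 0 else -1.0 for v in values]
    return alpha, signs


def residual_binarize(weights, num_paths=2):
    """Greedy residual hierarchy: path k fits the residual left by paths 1..k-1.

    Two paths means 2 bits per weight, plus one float scale per path.
    """
    residual = list(weights)
    paths = []
    for _ in range(num_paths):
        alpha, signs = binarize(residual)
        paths.append((alpha, signs))
        # The next path only sees what this one failed to capture.
        residual = [r - alpha * s for r, s in zip(residual, signs)]
    return paths


def reconstruct(paths, n):
    """Sum alpha_k * B_k over all paths to get the approximate weights."""
    out = [0.0] * n
    for alpha, signs in paths:
        out = [o + alpha * s for o, s in zip(out, signs)]
    return out


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
```

With α_k = mean|r| and B_k = sign(r), the residual’s squared error Σ(|r| − α_k)² works out to Σr² − n·α_k², so every added path strictly lowers the error whenever α_k > 0. That is the correction intuition in miniature.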

F-GRPO aims to stop RL policies from learning only the obvious

F-GRPO (paper): A new RL training tweak called difficulty-aware advantage scaling is pitched to reduce a common failure mode in policy optimization—models “learn the obvious” and forget rare behaviors—as introduced in the paper teaser.

If this holds up, it’s directly relevant to creative-agent reliability: the rare cases are often the ones you care about (edge-style constraints, specific character rules, non-default pacing beats), and RL-tuned models that drop those behaviors can feel inconsistent even when average quality looks fine.
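For orientation, here is a minimal Python sketch of where a difficulty-aware scale would plug into GRPO-style training. The first function is the standard group-relative advantage (rewards standardized within one prompt’s group of samples). The second multiplies it by a focal-style weight (1 − pass_rate)^γ; that weight is a hypothetical stand-in chosen for illustration, and F-GRPO’s actual scaling rule may differ.

```python
import statistics


def grpo_advantages(rewards, eps=1e-6):
    """Group-relative advantages: standardize rewards within one prompt's group."""
    mu = statistics.fmean(rewards)
    sd = statistics.pstdev(rewards)
    return [(r - mu) / (sd + eps) for r in rewards]


def difficulty_scaled_advantages(rewards, gamma=1.0, eps=1e-6):
    """HYPOTHETICAL difficulty-aware variant (not the paper's formula).

    Scales advantages by (1 - pass_rate) ** gamma, so groups the policy
    already solves often contribute smaller updates than rarely solved ones.
    """
    pass_rate = sum(1 for r in rewards if r > 0) / len(rewards)
    weight = (1.0 - pass_rate) ** gamma
    return [weight * a for a in grpo_advantages(rewards, eps)]
```

On binary rewards, a group with one success in four ends up with a much larger positive update for that success than a group with three in four. This is the “don’t let the obvious cases dominate” behavior the paper is aiming at.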

SE-Bench benchmarks agents that evolve by internalizing knowledge

SE-Bench (paper): A new benchmark is proposed for evaluating self-evolving agents—systems that are supposed to improve over time by internalizing knowledge from experience—positioning the work as a more structured way to test whether “agent self-improvement” is real or just prompt/UI tricks, as summarized in the paper link and expanded in the ArXiv paper.

This matters to creators building persistent research or writing agents because the hard part isn’t a single run—it’s whether the agent gets better across repeated briefs (series work, episodic story worlds, ongoing brand style constraints) without drifting or forgetting what it learned.
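SE-Bench’s actual protocol is in the paper. As an illustration only, here is a hypothetical Python harness for the two properties the paragraph above cares about: does accuracy on a fixed probe set rise as the agent internalizes each round, and does it ever fall back (forgetting)? The `answer`/`learn` interface and both metrics are assumptions, not the benchmark’s API.

```python
def accuracy(answer, items):
    """Fraction of (question, expected) pairs the agent gets right."""
    return sum(answer(q) == a for q, a in items) / len(items)


def run_rounds(answer, learn, rounds, probe):
    """Hypothetical self-evolution check: re-test a fixed probe set after each round.

    Returns (history, gain, forgetting):
      history    - probe accuracy before learning, then after each round
      gain       - final accuracy minus baseline
      forgetting - largest drop from the best accuracy seen so far
    """
    history = [accuracy(answer, probe)]          # baseline before any learning
    for batch in rounds:
        learn(batch)                             # agent internalizes this round
        history.append(accuracy(answer, probe))  # same probes every time
    gain = history[-1] - history[0]
    forgetting = max(max(history[: i + 1]) - history[i] for i in range(len(history)))
    return history, gain, forgetting
```

A capacity-limited memory that overwrites old briefs shows up as a positive `forgetting` score even if its final accuracy looks acceptable on newer material.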

🧯 Limits, tokens, and fatigue: when the assistant blocks the workflow

Reliability/limits chatter centers on Claude usage caps and token lockouts, plus creators reacting to how quickly productivity tools hit hard ceilings during real work.

Claude’s “usage limit reached” screen keeps showing up mid-work

Claude (Anthropic): A fresh “usage limit reached” moment hits at 00:30 with the reset time shown as 5am, continuing the recurring cap/lockout friction noted in Reset timer UI and now turned into shareable creator content in the Usage limit reset clip.

The clip framing matters because it shows the ceiling as part of the cultural workflow (not just a support ticket): limits are becoming something creators reference, parody, and plan around rather than an edge case, as depicted in Usage limit reset clip.

“Out of tokens for 4 hours” becomes the new dev horror caption

Token quotas (LLM apps): A short skit visualizes the hard stop as an explicit timer—“You are out of tokens for the next 4 hours”—with the creator noting it was made in Kling 3.0 and calling out imperfect text rendering in the Out of tokens skit.

This lands as a workflow-reliability signal more than a feature demo: the “come back later” constraint is being treated like an expected part of using AI tools under subscription limits, as shown in the Out of tokens skit.

Prompt packs get marketed as the layer between Claude hype and real output

Claude prompting (Anthropic): A creator pitches a “1000+ mega prompts” pack as the missing operational layer (less about model choice, more about repeatable instructions that map to real tasks), built around the claim that “barely anyone knows how to actually use it to replace real work,” per the Mega prompts pitch.

The distribution mechanic is also part of the pattern: “Comment ‘AI’ and I’ll DM you,” making prompt libraries behave like gated templates/products rather than open prompt-sharing threads, as described in Mega prompts pitch.

The $20 Claude Pro tier turns into a constraint meme

Claude Pro (Anthropic): A “POV: you’re on the $20 Claude subscription” post frames tier choice as lived experience (speed, capacity, and ceiling), using a quick edit built around “Claude Pro / Powered by Claude 3 Opus” in the Claude Pro meme clip.

It’s not new product info, but it’s useful as a read on sentiment: creators are compressing subscription constraints into a recognizable meme template, as shown in Claude Pro meme clip.

Some creators say Claude replaced their ChatGPT fallback

Claude vs ChatGPT (Anthropic/OpenAI): One creator claims they no longer have reasons to “switch back into ChatGPT occasionally,” arguing “Claude is now… better at every single use case for me,” in the Tool preference claim.

Even though the post doesn’t quantify workloads or tasks, it adds a clear adoption signal inside the same week as recurring cap/limit chatter: preference can strengthen even while reliability ceilings stay front-of-mind, as stated in Tool preference claim.
