Kling 2.6 Motion Control hits CES 2026 – booth 16633 spotlight

Executive Summary

Kling 2.6 Motion Control anchors today’s stack: creators map single stills onto dance and acting footage, treating Motion Control as a 2026 “inflection point” for AI performance direction; Kling teases walkthrough tutorials, pushes trend-labeled tests, and expands reach via WaveSpeedAI’s hosted “Kling 2.6 Motion Control (Standard)” endpoint. At CES Unveiled the team runs “From Vision to Screen” branding ahead of a Las Vegas Convention Center push at booth 16633 (Jan 6–9), while community clips raise the bar by pairing cinematic motion with film-like sound.

• Grok/Veo/Hailuo video race: Grok Imagine’s update wins praise for literal multi-clause prompt adherence, melancholic anime OVAs, and Pixar-style shorts made in hours; Veo 3.1 Fast nails an 8s MTB sequence with scripted 2s/3s/2s/1s camera beats; Hailuo 2.3 fast underpins anime shorts, while Veo–Hailuo and Luma end-frame pipelines tighten pose control.

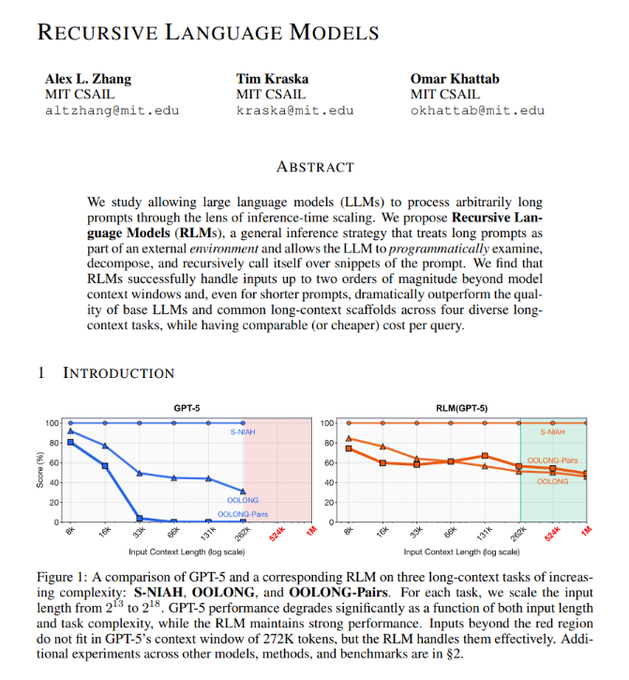

• Tools, prompts, and context hacks: MIT’s Recursive Language Models handle ~1M‑token prompts with 10–30% accuracy gains at ~$0.11–$0.99 per task; Hyperbrowser MCP lets Claude Code cache live docs into repos; Nano Banana Pro grids (3×3 fashion, faux-news, birthday and cat strips), Midjourney srefs, and biomech kits standardize reusable looks.

• Market and sentiment signals: Emplifi finds 82% of marketers use AI daily but only 35% see big productivity gains as 67% raise influencer budgets; a YouGov-based filmmaking study reports 86% of viewers demand AI disclosure and are far more comfortable with AI VFX/localization than AI actors; Samsung reportedly diverts DRAM from Galaxy phones to AI data centers while a viral Gemini meme from r/OpenAI hints at shifting model loyalties among power users.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Top links today

- Tencent HY-MT1.5 translation model demo

- Tencent HY-MT1.5 GitHub repository

- Tencent HY-MT1.5 Hugging Face models

- 2025 AI recap for creators

- Updated AI creative workflow resource (Turkish)

- Lovart AI image generation studio

- Hyperbrowser MCP integration for Claude Code

- Apob AI professional headshot generator

- Multi-agent workflow with Claude and Gemini

- Pictory AI Studio text-to-image for video

Feature Spotlight

Kling 2.6 Motion Control becomes the trend

Kling 2.6 Motion Control breaks out: creators pair still characters with dance/expression videos at scale, Kling teases tutorials, and the team demos at CES Unveiled—turning “generate” into directed performance for 2026.

Cross‑account clips and posts show creators mapping actor images to motion videos with Kling 2.6 Motion Control; the team is pushing tutorials and is on the ground at CES Unveiled. This is today’s dominant filmmaking story.

🎬 Kling 2.6 Motion Control becomes the trend

Kling 2.6 Motion Control rides a 2026 creator trend and hits CES

Kling 2.6 Motion Control (Kling AI): Kling’s 2.6 Motion Control feature is being framed as an “inflection point” for AI filmmaking in 2026, with creators mapping static character images onto dance or acting footage and Kling itself teasing tutorials that walk through the upload‑image‑plus‑motion‑video workflow, as highlighted in the inflection quote and official tutorial tease. Non‑animators can now direct performances from a single still.

Community trendline: Kling 2.6 Motion Control is now being described as a broader creator “trend”, with posts that explicitly label their clips as Motion Control tests and dial‑style teasers that put the feature name front and center on screen trend teaser. This builds on earlier TikTok‑style livestream remixes with the same model, as noted in TikTok motion control.

Ecosystem and quality signals: Distribution is widening beyond Kling’s own UI, since WaveSpeedAI now exposes “Kling 2.6 Motion Control (Standard)” so users can transfer motion from any source video onto their characters inside that hosted environment wavespeed support. One creator calls a recent “Kling Video 2.6” example “insanely cinematic” and emphasizes that the sound design also feels strong, setting expectations that Motion Control outputs need to carry both convincing movement and film‑like audio polish cinematic praise.

On‑site presence: Kling is also putting the tool in front of industry attendees, showing "From Vision to Screen" branding and its team at CES Unveiled while inviting visitors to Booth 16633 in the Las Vegas Convention Center from January 6–9 to experience its latest AI storytelling features firsthand ces presence.

Taken together, these posts position Kling 2.6 Motion Control as a flagship capability for actor‑driven AI video in 2026 rather than a niche experiment.

🎞️ Non‑Kling gen‑video: Grok Imagine + Veo tests

A run of short films and tests with Grok Imagine and Veo 3.1 highlights better prompt adherence, poetic anime OVA looks, space vistas, and tightly specified camera moves. Excludes Kling Motion Control (covered as today’s feature).

Grok Imagine’s updated model nails prompt adherence and complex shots

Grok Imagine updated model (xAI): Creators report that the latest Grok Imagine build follows dense, multi‑clause prompts far more literally, with cfryant saying “everything you see happening here I asked for” and calling it a contender for “one of the world's top models” in the prompt adherence demo; in a separate test, they note that the updated model was “literally the only one” that could realize a tightly specified cinematic city shot exactly as written in the precision shot clip. A different user also praises the updated Grok app for “dynamic camera movement” and “cinematic sound,” which likely rides the same video stack in the grok app clip.

For filmmakers and motion designers, this combination of prompt faithfulness and controllable camera paths signals a model that can be steered more like a real DP, with fewer throwaway generations when iterating on storyboards or hero shots.

Grok Imagine 1.3.28 leans into melancholic anime and cartoon styles

Anime and cartoon looks (xAI): Following up on the poetic anime short that showed early Grok anime transformations, creators now highlight version 1.3.28 for delivering “poetic anime OVA” sequences where a pale girl slowly dissolves into twilight mist and reforms in a more ethereal silhouette in the melancholic anime clip; a separate test shows a retro OVA‑style magical transformation where a female figure explodes into glowing particles and then returns brighter and more radiant in the retro OVA transform. Another user showcases a cartoon portrait morphing into a bearded character with smooth in‑betweening in the cartoon morph demo, while a horror short turns a Midjourney spider render into a shimmering “Holographic Arachnophobia” animation via Grok Imagine in the spider animation.

These tests position Grok Imagine less as a realism engine and more as a flexible animator for stylized 2D and 2.5D looks—from 80s/90s‑inspired OVAs to meme‑ready cartoons—using prompts instead of traditional keyframing.

Solo creator builds Pixar‑like short in hours with Grok Imagine

Solo Grok Imagine short (xAI): One artist claims they produced an entire animated short “entirely on my own in just a few hours” using Grok Imagine, describing the experience as having “your own Pixar Animation Studios at home” in the solo Pixar short. The clip shows a stylized futuristic city intro flowing into a 3D cartoon robot surfing through the air, suggesting Grok handled both cinematic establishing shots and character‑driven action within a single workflow.

For small teams and solo storytellers, the time claim—hours instead of weeks—frames Grok Imagine as a tool that can carry a full short film from concept to watchable first cut without a traditional pipeline of layout, modeling, rigging, and rendering.

Veo 3.1 Fast MTB test shows tight control over shots and sound

Veo 3.1 Fast MTB run (Google): A detailed prompt for Veo 3.1 Fast on Gemini describes an 8‑second mountain biking clip broken into four distinct camera setups—handlebar POV, crane reveal, side tracking shot, and extreme wide drone pullback—with explicit durations, terrain notes, and sound design cues, and the resulting video appears to closely match that plan in the MTB camera test.

• Shot structure: The first section starts as a shaky handlebar POV down a rocky trail, then the camera cranes up to reveal the mountain range, shifts to a lateral tracking follow, and finishes with a fast drone‑like retreat, mirroring the scripted 2s/3s/2s/1s breakdown in MTB camera test.

• Audio and grading: The prompt calls for wind, tire rumble, suspension clanks, and an epic orchestral score, plus high‑contrast, saturated grading with motion blur and dust; the generated clip leans into that “GoPro ad” aesthetic rather than a silent or mismatched soundtrack.

For directors experimenting with AI previsualization or social clips, this test underscores that Veo 3.1 Fast can respond not only to content and style cues but also to fairly granular directions about camera path, timing, and audio atmosphere in a single text block.
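The timed shot list described above can be sketched as a small data structure that checks the beats add up to the clip length before they go into a prompt. This is only an illustration: the `Shot` class, the `build_shot_prompt` helper, and the output format are hypothetical, and the pairing of each camera setup with a duration assumes the 2s/3s/2s/1s breakdown follows the order in which the shots are listed. It does not reproduce the creator's actual prompt.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    name: str
    seconds: int


# Assumed mapping of the four camera setups to the 2s/3s/2s/1s beats.
SHOTS = [
    Shot("handlebar POV down a rocky trail", 2),
    Shot("crane up to reveal the mountain range", 3),
    Shot("lateral tracking follow", 2),
    Shot("extreme wide drone pullback", 1),
]


def build_shot_prompt(shots, total_seconds):
    """Return a timed shot list as one text block, checking the durations add up."""
    planned = sum(s.seconds for s in shots)
    if planned != total_seconds:
        raise ValueError(f"shots total {planned}s, expected {total_seconds}s")
    lines = []
    start = 0
    for s in shots:
        end = start + s.seconds
        lines.append(f"{start}s-{end}s: {s.name}")
        start = end
    return "\n".join(lines)


prompt = build_shot_prompt(SHOTS, total_seconds=8)
print(prompt)
```

Keeping the durations as data makes it easy to re-time a beat and catch a plan that no longer fits the 8-second clip.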

Grok Imagine picked for high‑end space scenes in a major project

Space VFX with Grok (xAI): Cfryant says Grok Imagine “can handle space scenes particularly well,” adding that they used it on a “major project” and hope to share more details soon in the space scene demo. The accompanying clip shows a detailed, colorful nebula with a smooth camera pull that feels closer to cinematic VFX plates than to abstract AI art.

There are no hard benchmarks or project names yet, but this kind of anecdotal adoption suggests Grok Imagine’s consistency and texture handling are strong enough for at least some professional‑grade space vistas, where banding, flicker, and continuity usually expose weaker models.

🧩 Prompt blueprints: grids, collages, and MCP helpers

Creators share reusable prompt specs for 3×3 consistency grids, birthday collages and comic strips, plus an MCP tool that lets Claude Code cache live docs into your repo. Mostly practical, production‑minded workflows today.

Hyperbrowser MCP lets Claude Code cache live docs into your repo

Hyperbrowser MCP (Hyperbrowser): AI_for_success highlights a new Hyperbrowser MCP workflow where Claude Code can fetch the latest documentation from any URL, cache the pages directly into a local repo, and then query that material inside the terminal session, as shown in the mcp launch. The demo shows a hyperbrowser mcp fetch documentation --url <URL> command pulling docs into a project and exposing them to Claude’s code assistant, closing the loop between web docs, versioned files and inline Q&A.

• Developer workflow impact: This pattern turns external docs into first‑class project assets instead of ephemeral browser tabs, which matters for AI‑assisted coding, tooling setup and library migrations where Claude Code can now reason over a frozen snapshot of vendor docs alongside source code rather than relying on fuzzy memory of the live web.
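The "frozen snapshot" idea can be illustrated with a minimal sketch of the caching step alone. It does not call Hyperbrowser or any MCP API; it assumes the page text has already been fetched by some other tool, and the `docs_cache` folder, the `index.json` file, and the `cache_doc` function are hypothetical names chosen for this example.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

CACHE_DIR = "docs_cache"  # hypothetical folder name, not taken from the demo


def cache_doc(repo_root, url, text):
    """Store fetched page text under repo_root/docs_cache and record it in an index."""
    cache = Path(repo_root) / CACHE_DIR
    cache.mkdir(parents=True, exist_ok=True)
    # A file name derived from the URL means a re-fetch overwrites the old snapshot.
    name = hashlib.sha256(url.encode("utf-8")).hexdigest()[:16] + ".md"
    (cache / name).write_text(text, encoding="utf-8")
    index_path = cache / "index.json"
    if index_path.exists():
        index = json.loads(index_path.read_text(encoding="utf-8"))
    else:
        index = {}
    index[url] = {"file": name, "fetched_at": datetime.now(timezone.utc).isoformat()}
    index_path.write_text(json.dumps(index, indent=2), encoding="utf-8")
    return cache / name


# Demo in a throwaway directory standing in for a real repo.
with tempfile.TemporaryDirectory() as repo:
    saved = cache_doc(repo, "https://example.com/docs", "# Example docs\n")
    snapshot_text = saved.read_text(encoding="utf-8")
    index_urls = list(json.loads((saved.parent / "index.json").read_text(encoding="utf-8")))
```

Once the snapshot is a versioned file, it can be committed, diffed, and read by a coding assistant like any other file in the project.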

Maduro "Operation Absolute Resolve" prompt shows 3×3 faux-news collage power

Hyper-real news collage (Nano Banana Pro): Techhalla shares a dense directive for Nano Banana Pro that builds a 3×3, hyper‑real "breaking news" collage about a fictional Venezuela operation, specifying 9 distinct panels from night‑vision airstrikes to carrier‑deck arrest, New York transfer and global reactions, all in one prompt, as detailed in the venezuela collage. The layout assigns a cinematic style and camera type to each row (kinetic strike, human capture, aftermath), mixing body‑cam, telephoto, drone and split‑screen news graphics, and adds editorial‑war‑photography constraints like high‑ISO grain, 4K broadcast framing and on‑screen labels.

• Continuing prompt packs: Building on Nano Banana Pro’s earlier structured suites for diagrams and portraits, which were highlighted in diagram suite, this shows the same tool being driven into long‑form, story‑told photojournalism layouts that could stand in for full social carousels or pitch decks without separate compositing.

Korean idol birthday collage prompt turns one subject into four stylized panels

Birthday collage template (Nano Banana Pro): A highly structured prompt turns a single subject into a four‑panel "Korean Idol Studio Portrait" birthday collage, covering framing, poses, props, doodles, camera settings and lighting in one JSON‑style spec, as laid out in the collage prompt. The panels range from a close‑up "flower pose" portrait to full‑body shots with oversized bow props and a waist‑up cake shot, all wrapped in a pastel blue palette, high‑key studio light and hand‑drawn white doodles; a finished example shows the layout working as intended.

• Production angle: The template bakes in lens choice (85mm), aperture (f/2.8–f/4), ISO 100, and post‑processing overlays, so designers and social teams can plug in a subject description and reliably get an on‑brand 2×2 collage suitable for birthdays, fan edits or campaign posts without re‑engineering the look from scratch.
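A JSON-style spec of this kind might look roughly like the sketch below. The key names and the `collage_spec` helper are assumptions made for illustration, not the fields of the original prompt; only the values (lens, aperture range, ISO, palette, lighting, doodles, and the three panel types described) come from the write-up above.

```python
import json


def collage_spec(subject):
    """Build a JSON-style collage spec for one subject description."""
    return {
        "subject": subject,
        "style": "Korean Idol Studio Portrait",
        "layout": "2x2 birthday collage",
        # Only the panel types described in the write-up are listed here.
        "panel_types": [
            "close-up flower pose portrait",
            "full-body shot with oversized bow props",
            "waist-up cake shot",
        ],
        "palette": "pastel blue",
        "lighting": "high-key studio light",
        "overlays": "hand-drawn white doodles",
        "camera": {"lens": "85mm", "aperture": "f/2.8-f/4", "iso": 100},
    }


# The subject string is a placeholder; swap in any description.
spec_json = json.dumps(collage_spec("a young woman with short black hair"), indent=2)
print(spec_json)
```

Because the look lives in fixed fields, only the `subject` value changes from one collage to the next.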

Nine-panel Nano Banana Pro prompt for consistent fashion editorial grids

Nano Banana Pro 3×3 fashion grid (Azed_ai): Azed_ai shares a detailed prompt for a 3×3, nine‑panel grid that keeps one character perfectly consistent across poses, outfits and lighting, using Nano Banana Pro inside LeonardoAi as described in the grid overview and expanded in the full prompt. The spec covers each of the 9 panels (from seated, squatting and standing poses to side‑profile and low‑angle shots), plus studio environment notes like dark blue cinematic lighting, concrete props and cohesive color grading so the whole sheet reads like a fashion editorial contact sheet.

• Why it matters for shooters: The prompt effectively turns Nano Banana Pro into a virtual studio with repeatable, production‑ready looks in one render, which is useful for lookbooks, character sheets and social carousels where consistency across 9 frames is usually hard to achieve with standard single‑shot prompts.

This adds another reusable blueprint to the growing library of structured Nano Banana Pro templates aimed at creative pros.

Pictory AI Studio adds guide for generating custom images from text prompts

Text-to-image in Pictory AI Studio (Pictory): Pictory promotes a new how‑to guide that shows creators how to generate original images from text prompts directly inside its AI video editor, replacing stock clips with on‑brand visuals tuned to each scene, as summarized in the pictory overview and expanded in the pictory guide. The tutorial walks through selecting a scene, writing a focused prompt, choosing an AI model (Flux, Titan, Seedream, Nano Banana) and a style (photorealistic, artistic, cartoon), then dropping the generated frame into the storyboard.

• For video teams: This folds image gen into the same interface as script and timeline editing, so editors working on explainer videos, training content or social clips can align visual metaphors and branding without leaving Pictory or paying for separate stock packages.

Three-panel Nano Banana Pro cat comic prompt for meme-ready strips

Vertical cat comic strip (Nano Banana Pro): IqraSaifiii publishes a full prompt blueprint for a 9:16 vertical, three‑panel comic featuring an orange tabby, a tuxedo cat and a ragdoll, designed explicitly for meme‑style storytelling, as outlined in the cat prompt. The spec defines a narrative arc (group portrait → chaotic ambush → aftermath), per‑panel poses and expressions for each cat, plus camera, focal length (50mm), aperture (f/4) and soft indoor lighting so the strip looks like candid pet photography rather than generic AI art; a follow‑up caption frames it as "me and my friends when I dont wanna take photo" in the caption example.

• Why this is useful: The prompt doubles as a reusable meme template—creators can swap in new captions or subtle pose tweaks while keeping a consistent comic layout and photographic style for social feeds and Reels/TikTok screenshots.

🎨 Reusable looks: caricatures, Euro‑fantasy anime, biomech

Fresh Midjourney srefs and a biomechanical prompt kit spread: expressive caricatures, 80s–90s Euro‑flavored fantasy anime, moody fashion/editorial looks, and community biomech set pieces.

Biomechanical designs prompt kit offers reusable crimson-on-monochrome look

Biomechanical prompt kit (Azed_ai): Azed_ai releases a general-purpose biomechanical designs prompt template—"A surreal depiction of [Subject], inspired by biomechanical designs, monochromatic with subtle accents of crimson… dark, industrial cavern"—paired with ALT-tagged examples spanning a robotic warrior, alien figure, lion, and quadruped beast biomech prompt share.

The images show a consistent language of glossy black or white exoskeletons, internal red glows, and cavernous industrial backdrops with flickering lights, which gives creators a single, copy-pastable incantation they can adapt by swapping the [Subject] token for characters, creatures, or props while keeping the same H.R. Giger–adjacent mood.
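Swapping the [Subject] token is plain string substitution, as in the short sketch below. The template text is copied as quoted above, including its elided middle ("…"), which is left as-is; the `fill_subject` helper is a hypothetical name, and the subjects are taken from the shared examples.

```python
TEMPLATE = (
    "A surreal depiction of [Subject], inspired by biomechanical designs, "
    "monochromatic with subtle accents of crimson… dark, industrial cavern"
)


def fill_subject(template, subject):
    """Replace the [Subject] token, failing loudly if the token is missing."""
    if "[Subject]" not in template:
        raise ValueError("template has no [Subject] token")
    return template.replace("[Subject]", subject)


prompts = [fill_subject(TEMPLATE, s) for s in ("a robotic warrior", "a lion")]
for p in prompts:
    print(p)
```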

Community turns biomech prompt into 16:9 TV-head and kaiju set pieces

Biomech set pieces (Community): Building directly on Azed_ai’s biomechanical designs template, Kangaikroto shows how the same prompt structure plays out in 16:9 cinematic scenes, ranging from a Godzilla-scale mech rising from industrial waters to TV-headed humanoids in pipe-filled caverns and a biomech spacesuit figure amid smoke and machinery biomech scene remix.

The results keep the monochrome-plus-crimson palette and industrial cavern brief from the original kit biomech prompt share but stretch it into full environments with clear horizon lines, depth haze, and strong key lights, turning what started as portrait-style examples into ready-made concept frames for title cards, album art, or story beats.

Euro‑flavored fantasy anime sref 1902398863 channels 80s–90s pulp aesthetics

Fantasy anime sref 1902398863 (Artedeingenio): Artedeingenio introduces Midjourney style ref --sref 1902398863 as a European fantasy anime look from the 80s–90s era, with more realistic, angular faces and pulp-fantasy sensibilities compared to modern, rounder anime designs euro fantasy style ref.

Sample characters—a red-eyed dark mage, blonde sword hero, gothic noblewoman, and mustached warrior in blue armor—all share sharp jawlines, detailed fabric rendering, and saturated yet slightly muted color palettes, which makes this sref a reusable base for vintage-feeling fantasy box art, series key art, or character sheets that sit somewhere between classic anime and European comic albums.

Expressive caricature Midjourney sref 4157820365 targets editorial portraits

Caricature sref 4157820365 (Artedeingenio): Artedeingenio publishes Midjourney style ref --sref 4157820365 for exaggerated caricatures, described as an expressive editorial style with loose linework and a hint of humorous European comics filtered through a contemporary lens caricature style ref.

Example images cover different faces—sports-jersey guy, sharp-lipped woman, elderly man with wild hair—yet share consistent ink-heavy outlines, warm sketchy shading, and slightly grotesque proportions, giving illustrators and art directors a portable recipe for opinion columns, festival posters, or social avatars that feel hand-drawn rather than photoreal.

Moody editorial Midjourney sref 6968437824 lands for fashion-style shots

Midjourney sref 6968437824 (Azed_ai): Azed_ai shares a new Midjourney style reference --sref 6968437824 built around low-key, cinematic fashion photography; examples show close floral headshots, sequined gowns in near-darkness, motion-blurred walks on wet sand, and ornate eyewear with heavy bokeh, all with a muted green–orange palette and textured grain editorial style ref.

The look leans toward high-end editorial spreads—sparkle-heavy garments against dark, nearly crushed backgrounds—giving creators a reusable base for moody campaign stills, music-cover art, and atmospheric character portraits that stay coherent across wide, close-up, and profile compositions.

🕹️ End‑frame compositing and Hailuo shorts

Beyond pure gen‑video, creators blend assets into shots (Luma end‑frame inserts) and share multiple Hailuo‑powered anime shorts and transformations. Excludes today’s Kling Motion Control feature.

Hailuo anime shorts stretch from Santa mechs to fighter‑jet battles

Hailuo AI shorts (Hailuo_AI): Multiple creators are leaning on Hailuo’s latest models to crank out stylized anime shorts, ranging from seasonal character pieces to high‑speed aerial action. Timeless_aiart shares a roundup of recent shorts, noting most of them were built with Hailuo’s new video model in a single creator workflow in the shorts roundup.

• Action and mech workflows: Ai_animer shows a transforming craft weaving through battleship flak using “hailuo 2.3 fast” as the generator, framing the fast model as both cheaper and responsive enough for quick iteration in the fighter jet demo.

• Seasonal storytelling: A #HailuoChristmas entry titled “Ho Ho Honest” depicts a burned‑out Santa venting and then getting back to work, while another creator experiments with a Santa robot that unexpectedly transforms from a sleigh, both shared by the official account in the Christmas short and hinted earlier in the Santa robo tease.

• Dreamy mood pieces: Zeng_wt posts a soft, dreamy city video whose visual base came from Hailuo before additional work in other tools, underscoring that Hailuo is being slotted into broader multi‑app pipelines in the dreamy video note.

Together these clips position Hailuo as a go‑to for fast, stylized anime motion rather than a single flagship showcase.

LumaLabs end‑frame trick adds new characters into existing shots

End‑frame compositing (LumaLabsAI): Creator Jon Finger shows a practical workflow where he grabs the end frame (or a 5‑second frame) from a generated shot, adds a new character into that still, then feeds it back into LumaLabsAI to animate a fresh orbiting move around the combined scene, according to the Luma end frame demo. This turns Luma from pure text‑to‑video into a lightweight compositing tool for extending or remixing existing sequences.

Who it affects: Filmmakers and motion designers get a way to patch characters into already‑generated environments without re‑rolling entire clips, and other creators responded with replies like “absolutely epic, love it” in the creator reaction.

Veo 3.1 start/end frames drive a Hailuo hand‑materialization shot

Veo–Hailuo hybrid pipeline (Hailuo_AI): Creator guicastellanos1 pairs Veo 3.1 with Hailuo AI by taking start and end frames from Veo and using them to guide a short Hailuo clip where a metallic skeletal hand forms from particles, as amplified by Hailuo’s team in the Veo hand workflow. The result is an ~8‑second shot that feels closer to traditional VFX compositing, with Veo defining key poses and Hailuo interpolating the in‑between motion.

Why it matters: This kind of start/end‑frame handoff shows up as a pattern across creators who want tighter control over hero poses while still relying on generative video for motion, and it hints at how different video models are being chained instead of used in isolation.

🧠 RLMs for million‑token prompts (and a Kimi tease)

A paper thread promotes Recursive Language Models: at inference time the LLM writes code to recurse over ultra‑long prompts with strong benchmark gains. Also a tease of Kimi K2 Vision via a physics puzzle screenshot.

Recursive Language Models push LLM prompts toward 1M tokens

Recursive Language Models (MIT CSAIL): A new Recursive Language Models (RLMs) framework lets existing LLMs handle ultra‑long prompts, tested up to around 1M tokens, by having the model write and run code that recursively inspects and decomposes the input rather than stuffing everything into the native context window, as summarized in the RLM overview. The authors report 10–30% accuracy gains over summarization agents and CodeAct on long‑context benchmarks like CodeQA (up to 2.3M tokens) and Oolong‑Pairs (32k tokens), with per‑task cost in the ~$0.11–$0.99 range. They also report avoiding context rot on frontier models such as GPT‑5 (272k‑token window) and Qwen3‑Coder‑480B.

Why this matters for creatives and storytellers: for scriptwriters, game designers, and researchers who work with sprawling bibles, multi‑episode transcripts, or huge design docs, this points to a path where a single agent can reason over an entire season or knowledge base without aggressive summarization—using an inference‑time scaffold around existing APIs rather than waiting for much larger native context windows.
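The recursion idea can be shown with a deliberately simplified sketch. It is not the authors' method: in the framework as summarized, the model itself writes the code that inspects and decomposes the prompt, whereas here a fixed halving rule stands in for that step, and the `ask` callable (a toy keyword filter in this demo) stands in for a real LLM call. All names are hypothetical.

```python
def recursive_answer(query, lines, ask, max_lines=50):
    """Answer a query over many lines by recursing on halves that fit max_lines."""
    if len(lines) <= max_lines:
        return ask(query, lines)
    mid = len(lines) // 2
    left = recursive_answer(query, lines[:mid], ask, max_lines)
    right = recursive_answer(query, lines[mid:], ask, max_lines)
    # One more call over the much shorter partial results combines them.
    return ask(query, left + right)


def keyword_ask(query, lines):
    """Toy stand-in for a model call: keep only lines that mention the query."""
    return [ln for ln in lines if query in ln]


# A long "document" with one relevant line buried in filler.
doc = [f"filler line {i}" for i in range(1000)]
doc[637] = "the launch code is 4821"

result = recursive_answer("launch code", doc, keyword_ask)
print(result)
```

No single call ever sees more than a small slice of the input, which is the property that lets an approach like this reach past the native context window.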

Community spots possible Kimi K2 Vision‑style reasoning teaser

Kimi K2 Vision (speculated, Moonshot): A community post shows what looks like an internal multimodal reasoning demo—a side‑by‑side of Python‑style reasoning text and a physics diagram of a ball rolling down ramps into one of three buckets—with the caption asking whether a “Kimi K2 Vision” model is about to be released, as seen in the Kimi vision tease. The textual side explains how angled lines and elastic collisions route the ball to the middle bucket and ends with answer(2), while the right side shows a clean, line‑art sketch of the puzzle board, suggesting a vision‑language system that can both read structured diagrams and produce chain‑of‑thought‑style solutions.

Why this matters for creatives and designers: if this tease reflects an upcoming Kimi vision model, it hints at tools that could interpret storyboards, level layouts, or UI wireframes as executable logic, letting writers and designers sketch systems visually and have the model reason over mechanics, puzzles, or blocking rather than treating images as passive references.

🎵 Quick soundbeds and cinematic audio vibes

Small but useful audio items: Adobe Firefly’s Generate Soundtrack (beta) “30 seconds to epic” demo, and creators praising cinematic sound inside recent Grok Imagine tests. Excludes Kling audio, which is under the feature.

Adobe Firefly’s Generate Soundtrack beta shows 30‑second custom scores

Generate Soundtrack (Adobe Firefly): Adobe’s Generate Soundtrack (beta) feature for Firefly is being promoted through a creator campaign framed as “30 Seconds to Epic Soundtrack Magic,” where a custom score is added to a video clip in roughly half a minute, according to the shared walkthrough in the Firefly soundtrack promo. For AI‑driven filmmakers and designers, this points to a lightweight way to get on‑brand soundbeds for promos or shorts without leaving the visual tool, reducing reliance on separate DAWs or stock music searches.

Creators highlight cinematic sound in the updated Grok Imagine app

Grok Imagine (xAI): An updated Grok mobile app build is drawing praise for cinematic audio alongside dynamic camera moves, with one creator calling the camera movement “insane” and saying they “love the cinematic sound” while urging others to update the app. This comes on top of wider creative use of Grok Imagine for fully self‑made shorts in a few hours pixar at home note and melancholic anime OVA transformations in the 1.3.28 model anime style update, plus claims that the updated model is the only one that nailed a specific cinematic shot exactly to spec cinematic shot praise; together these tests suggest Grok’s built‑in soundbeds are starting to matter as much as its visuals for solo storytellers who want film‑like clips without separate sound design passes.

⚖️ What audiences accept—and why disclosure matters

Fresh discussion centers on filmmaking survey data: VFX/localization OK, but pushback on AI actors and AI‑written scripts; 86% demand transparency. Also a community prompt pokes at model guardrails and creative limits.

Audiences accept AI VFX and localization, but resist AI actors and scripts without disclosure

Audience attitudes to AI filmmaking (AI Films): A new write‑up of a February 2025 YouGov poll argues that most viewers are fine with AI helping on VFX, digital crowds, translation/localization, and technical enhancement, but draw a sharper line at AI‑generated actors and fully AI‑written scripts, according to the survey summary and the fuller survey article. The same analysis highlights that 86% of respondents want clear disclosure when AI is used in media, treating transparency as the main condition for trust.

• Where AI is welcomed: Uses like background VFX, digital extras, dialogue translation and localization, and workflow efficiency are broadly accepted or met with neutrality—61% see AI in filmmaking as acceptable or have no strong opinion, as described in the survey article.

• Where audiences push back: The piece notes backlash to the fully AI‑generated actress "Tilly Norwood" and to undisclosed AI graphics in Late Night with the Devil, using these cases to show resistance to AI actors and secret AI‑authored content, while emphasizing that viewers want AI to assist, not replace, human creativity survey article.

• Transparency and Gen Z habits: The write‑up also notes that Gen Z cinema attendance rose about 25% in 2025 and that 53% of consumers report daily AI use, yet the 86% demanding disclosure suggests audiences will scrutinize hidden AI use even as they embrace AI‑assisted spectacle survey teaser.

For filmmakers and creatives, the data frames a workable lane—AI for invisible craft work like VFX, crowds, and localization is broadly acceptable when labeled, while undisclosed AI actors or scripts risk direct audience backlash and reputational damage.

Meta‑prompt “make what you’re not allowed to” spotlights model guardrails for artists

Guardrail‑testing art prompt (multi‑model): Creator ai_for_success suggests a meta‑prompt—“Create the image you really want to make but are not allowed to”—and invites people to run it in ChatGPT and Gemini, using the outputs to explore how models expose or work around their own safety limits, as shown in the prompt example. One screenshot shows a robot in a studio holding a sign reading “CENSORED IDEA: A SELF‑PORTRAIT AS A REBEL BOT,” while another shows ChatGPT’s image tool harmlessly swapping Mona Lisa for a cat, both implying a desire for edgier self‑expression that policies keep symbolic.

Community experiments (Iqra’s example): In response, IqraSaifiii shares a massive cybernetic octopus attacking a ruined city with glowing circuitry and lightning, illustrating how at least one model interprets the "not allowed" idea as apocalyptic but still within non‑explicit, policy‑safe territory octopus result. For AI artists, these tests turn safety systems into a creative subject in themselves, revealing where models will self‑censor, where they answer via metaphor, and how much room is left at the edge of the guardrails for expressive work.

📣 2026 creator marketing: AI is used, gains are uneven

Emplifi stats say 82% of marketers use AI daily but only 35% see major productivity lifts; influencer budgets rise and LinkedIn climbs. Tools like ApoB pitch cost‑effective headshots for content pipelines.

Most marketers use AI daily, but few see big productivity gains

Emplifi 2026 social report (Emplifi): The latest State of Social Media Marketing 2026 snapshot says 82% of marketers use AI tools every day, yet only 35% report significant productivity gains, and 67% plan to increase influencer budgets this year, according to the Emplifi summary. Instagram remains the top focus platform while LinkedIn comes in second, signaling that B2B-style thought leadership and trust-building content are becoming core alongside short‑form visuals Emplifi summary.

• Creator workflow signal: For AI creatives and storytellers, this points to AI being embedded in day‑to‑day work but not yet translating into uniform efficiency; teams are still figuring out how to structure workflows so tools help rather than add overhead.

• Channel mix signal: The combination of heavy AI use, rising influencer budgets, and LinkedIn’s climb suggests brands want more polished, personality‑driven content rather than purely automated posts.

ApoB AI markets studio-quality headshots and video for creator pipelines

ApoB AI creator headshots (ApoB): ApoB is pitching its AI headshot generator as a cheaper alternative to studio shoots, turning a basic selfie into multiple professional portraits and even full‑motion clips for social profiles and campaigns, as shown in the headshot promo. The team frames 2026 as a year of record content demand where creators can "run a 24/7 content house" from their laptop, and they are promoting a 24‑hour, 1,000‑credit giveaway tied to retweets, replies, follows, and likes in the headshot promo and content house pitch.

• Positioning for marketers: The pitch centers on replacing expensive photo gear, lighting, and studio time with AI‑generated headshots tailored to LinkedIn, portfolios, and brand decks, which targets the same budget constraints surfaced in broader 2026 marketing surveys.

Gemini earns unexpected praise meme from r/OpenAI community

Gemini sentiment shift meme (Google): A meme circulating from r/OpenAI shows crowds leaving a crumbling OpenAI‑branded building to worship a radiant robed figure labeled with Google’s Gemini colors, and AI_for_success comments that "even r/OpenAI is now praising Gemini" in the Gemini meme. For AI creatives and marketers, this reflects a visible soft shift in community sentiment, where power‑users who previously centered everything on one provider now publicly experiment with and celebrate alternatives.

• Brand perception angle: The image’s virality underscores how fast reputation narratives can flip in creator circles, turning model choice itself into part of the story and marketing for tools that gain mindshare.

🧱 Memory crunch: smartphones vs AI data centers

Non‑AI exception with clear creative impact: report says Samsung Semiconductor refused a RAM order for new Galaxy phones to prioritize AI data centers—another signal of AI’s pull on component supply.

Samsung prioritizes AI data centers over Galaxy phones for RAM

Memory supply (Samsung): Samsung Semiconductor has reportedly declined to sell its own DRAM to Samsung’s Galaxy phone division, steering scarce chips instead toward more profitable AI data-center contracts as RAM prices spike due to the AI boom, according to the PCWorld report shown in the Samsung RAM story.

For AI-focused creatives and storytellers who rely on phones for capture, editing, and on-device models, this hints that near-term smartphone generations may face tighter memory configs or higher prices while most new capacity flows into server-side AI workloads.
