HY‑Motion 1.0 open‑sources a 1B+ parameter text‑to‑3D motion model – 200+ categories

Executive Summary

Tencent Hunyuan open‑sources HY‑Motion 1.0, a 1B+ parameter Diffusion Transformer with flow matching that turns prompts like “martial arts combo” into skeleton‑based 3D clips; the model spans 200+ motion categories across six classes and runs a full Pretrain→SFT→RL loop to tighten both physical plausibility and instruction following. Outputs retarget cleanly into Blender, Maya, and game engines; Tencent ships GitHub code, a Hugging Face entry, and docs so studios can self‑host or fine‑tune. Indie creators frame it as a fast text‑to‑motion blockout tool that generates usable animation assets instead of research‑only samples, pushing text‑driven character motion closer to standard 3D pipelines.

• Coding and web agents: MiniMax open‑sources M2.1 with 72.5% SWE‑multilingual, 74.0% SWE‑bench Verified, and 88.6% VIBE; agent demos auto‑build physics, fireworks, and music‑visualizer web apps, while a Hyperbrowser gift‑finder agent ranks live web products from natural‑language briefs.

• Voice, realtime video, and routing research: ElevenLabs reports 3.3M agents built in 2025 and rolls out a new SOTA Speech‑to‑Text stack; LiveTalk claims ~20× lower latency interactive video diffusion, Stream‑DiffVSR targets streamable super‑resolution, SpotEdit adds training‑free regional edits, and ERC loss tightens expert–router coupling in MoE models.

Alongside HY‑Motion, these pieces sketch an ecosystem where open coding models, voice agents, and low‑latency video backends are converging into full production stacks for games, film, and support operations.

Feature Spotlight

Text‑to‑3D Motion goes open source: HY‑Motion 1.0

Open‑sourcing HY‑Motion 1.0 puts high‑quality, instruction‑following 3D character motion in every creator’s toolkit—faster previz, prototyping, and indie animation without studio‑scale budgets.

Big for animators: Tencent Hunyuan open‑sources HY‑Motion 1.0, a 1B+ DiT text‑to‑motion model with a full Pretrain→SFT→RL loop and 200+ categories; outputs drop straight into standard 3D pipelines. Multiple creators echo why this matters for indie teams.

🕺 Text‑to‑3D Motion goes open source: HY‑Motion 1.0

Tencent open-sources HY-Motion 1.0 text-to-3D motion model

HY-Motion 1.0 (Tencent Hunyuan): Tencent Hunyuan released HY-Motion 1.0 as an open-source, 1B+ parameter text-to-3D motion model built on a Diffusion Transformer with flow matching, targeting high-fidelity, instruction-following character animation from natural language prompts like “dance” or “martial arts combo” according to the release thread. The team highlights a full Pre-training → SFT → RL loop to improve both physical plausibility and semantic accuracy, plus coverage of 200+ motion categories across six broad classes, with assets designed to drop into standard 3D pipelines per the tech report.

• Production-ready assets: Outputs are skeleton-based 3D motions that can be retargeted to typical rigs and integrated into game engines or DCC tools like Blender or Maya, which Tencent frames as “seamless” for existing pipelines in the release thread.

• Open ecosystem: Tencent published a project hub, GitHub code, a Hugging Face entry, and documentation so teams can inspect, fine-tune, or self-host the model, as shown by the linked resources on the project page and in the GitHub repo.

The open release gives animators, small studios, and tool builders a heavy-weight but accessible baseline for text-driven motion that previously lived mostly behind proprietary APIs.
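For readers new to flow matching, the sampling side can be sketched in a few lines: start from noise and integrate a velocity field toward the data with fixed Euler steps. This is a toy illustration only. The `toy_velocity` function, the flat list of joint angles, and the step count are all assumptions made for the example; HY-Motion's actual network, text conditioning, and sampler are not reproduced here.

```python
import random

def toy_velocity(x, t, target):
    """Hypothetical stand-in for a learned, text-conditioned velocity network.

    On a straight-line path x_t = (1 - t) * noise + t * target, the velocity
    that carries x_t to the target by t = 1 is (target - x_t) / (1 - t).
    """
    return [(g - xi) / (1.0 - t) for xi, g in zip(x, target)]

def sample_motion(target, steps=10, seed=0):
    """Euler-integrate from Gaussian noise (t = 0) toward the target (t = 1)."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt  # t stays below 1, so the division above is safe
        v = toy_velocity(x, t, target)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

# A made-up "pose": three joint angles in radians.
pose = sample_motion([0.3, -1.2, 0.8])
```

A trained model would replace `toy_velocity` with a network call that takes the prompt as conditioning; the integration loop is the part this sketch is meant to show.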

Creators frame HY-Motion as a fast text-to-motion blockout tool

Creator workflows (Tencent HY-Motion 1.0): Independent commentators describe HY-Motion 1.0 as a way for artists to type plain-language prompts like “sit on a chair”, “run”, or “block with a shield” and receive skeleton motion clips that can be retargeted into Blender, Maya, or game engines, dramatically speeding up motion blocking and previs for small teams, as explained in the creator breakdown. One summary aimed at creatives emphasizes that the model’s 200+ motion categories range from atomic actions to longer sequences, and that its outputs are already formatted as usable 3D animation assets rather than pure research demos (creator breakdown).

For AI-heavy game, VR, and digital human pipelines, this framing positions HY-Motion as a practical building block for rapid iteration on character performance before higher-cost keyframing or mocap work.

🎬 Director’s toolkit: motion control, identity edits, and agent storyboards

Practical workflows for short‑form and scenes: Kling 2.6 Motion Control how‑tos, Luma Ray3 Modify identity edits, Vidu Agent storyboards, a platform short, and JP stop‑motion looks. Excludes HY‑Motion (covered as the feature).

Kling AI’s “One More Time” film leans on AI to relive memories

“One More Time” annual film (Kling AI): Kling AI releases its 2025 annual film "One More Time," a 232‑second piece built with Kling that recreates intimate personal memories from 12 creators, focusing on emotional beats rather than spectacle or tech demos (annual film). The narrative weaves stories of lost pets, missed dances, and absent parents into softly stylized vignettes, with AI used to stage scenes that never got filmed but live vividly in memory.

• Emotion over novelty: The thread stresses that the value this year was not AI’s ability to generate anything, but its capacity to "gently hold our regrets" and visualize quiet what‑ifs, positioning the model as a tool for reflective storytelling rather than only for flashy experiments (annual film).

• Global creator cast: Contributors span China, Spain, France, and the UK, with Kling AI crediting them as co‑authors and releasing an accompanying short‑film collection on its site so others can explore similar "one more time" scenes built with the same tools (annual film).

The film functions as both brand piece and proof of concept for directors wondering whether current AI video is ready for emotionally grounded, dialogue‑light short narratives.

Higgsfield shows Nano Banana Pro + Kling Motion Control music‑video workflow

Kling Motion Control workflow (Higgsfield): Creator Techhalla details a practical music‑video pipeline that combines Nano Banana Pro for stylized stills with Kling Motion Control for motion transfer on the Higgsfield platform, positioning it as a template for how many music videos will be produced by 2026 according to the workflow overview. The flow is simple: record up to 30 seconds of yourself performing with good lighting, convert a first frame into a stylized performer using Nano Banana, then feed both the clip and the stylized still into Kling Motion Control on Higgs to animate the character with your original timing and gestures as shown in the step sequence.

• 30‑second capture loop: The guidance is to sing, dance, or play for a sub‑30‑second take so Motion Control can handle the full performance in one generation while preserving beats and camera framing (workflow overview).

• Two‑model pipeline: Nano Banana establishes consistent character design and setting, then Kling Motion Control handles pose and lip/mic sync, with Techhalla linking directly to the Higgs preset so others can reuse the exact setup via the Higgs workflow.

The thread frames this not as a one‑off trick but as a repeatable director’s pattern for turning raw phone footage into stylized, multi‑angle music content without traditional compositing.

Vidu Agent launches worldwide with multi‑scene, storyboard‑driven video creation

Vidu Agent (Vidú): Vidú moves its previously teased “one‑click short film” tool into a full worldwide release, pitching Vidu Agent as a professional video creation partner that turns ideas into finished videos with storyboard control (one-click short). The launch highlights multi‑language voice support (20+ languages and 200+ voice styles), multi‑model templates for commercials and story films, and a built‑in storyboard editor that lets creators adjust sequences and beats from a script‑like view (Vidu announcement).

• Voice and language layer: A promotion panel shows selectable timbres like "Second Prince of Saudi" and character‑type labels such as "white‑collar worker" across languages including English, Chinese, Korean, Russian, and Japanese, signalling a focus on global localization workflows as seen in the Vidu announcement.

• Scene and model selection: Another graphic advertises "Multi‑Model Multi‑Scene Creation" with avatar grids for different on‑screen presenters, and a storyboard UI where script text automatically maps to shot thumbnails, indicating that agents handle both casting and scene breakdown.

For directors and marketers, the update turns Vidu Agent from a vague concept into a concrete storyboard‑centric system that can output voiced multi‑scene pieces from a high‑level idea.

Kling 2.6 image‑to‑video powers a John Wick‑style action beat

Kling 2.6 image‑to‑video (Kling): Artedeingenio shares a short John Wick‑inspired animation generated from a still image using Kling 2.6’s image‑to‑video mode, with the exact prompt gated for subscribers (John Wick demo). The sequence shows a suited Wick‑like character drawing and firing a handgun with stylized muzzle flashes, camera pushes, and a brief character close‑up before cutting back to action.

• Prompt‑driven choreography: The creator emphasizes that the motion, cuts, and stylized timing come entirely from text plus a single reference frame, rather than manual keyframing, an approach aimed at illustrators and directors who want to turn key art into motion beats (John Wick demo).

• Shot‑design reference: The clip serves as a compact example of how Kling interprets action verbs (draw, aim, fire) and camera language (close‑up vs medium shot), giving storytellers a sense of how literal or abstract to be when describing combat scenes.

For filmmakers experimenting with AI previz, it offers a concrete reference for what a fast, gun‑centric action insert can look like when driven by Kling 2.6.

Kling O1 nails LEGO‑style stop‑motion with promptable layouts

Kling O1 stop‑motion (Kling): A Japanese creator’s demo, amplified by Kling, shows Kling O1 generating LEGO‑like stop‑motion videos where characters pop between poses and positions in a way that mimics frame‑by‑frame brick animation (Kling O1 stop motion). The clip features colorful brick figures moving in discrete steps with shallow depth‑of‑field bokeh and shot composition controlled via text prompts.

• Prompt‑driven layout control: The quoted commentary notes that lens blur, character placement, and even stop‑motion spacing respond well to prompt instructions, allowing directors to specify where figures should appear in the frame while still getting that handmade jitter (Kling O1 stop motion).

• Automatic style matching: The user also observes that Kling O1 "automatically adjusts the style" to fit each character, suggesting the model can harmonize lighting and texture across multiple brick figures without manual grading.

This positions Kling O1 as a practical way to prototype toy‑style sequences or title cards without physically animating bricks.

Ray3 Modify turns casual footage into a superhero transformation shot

Ray3 Modify (LumaLabs): Building on earlier Dream Machine demos that kept actors consistent across very different scenes (identity edits), Luma now showcases Ray3 Modify handling a full superhero outfit transformation within a single continuous shot (Ray3 clip). The clip starts with a person in everyday clothes and then rapidly morphs them into a fully armored hero before snapping back, with the identity and camera motion preserved while clothing, materials, and effects change.

• Single‑shot identity editing: The transformation plays out without visible hard cuts, which indicates Ray3 Modify is editing appearance frame‑by‑frame while maintaining consistent face structure and pose continuity (Ray3 clip).

• Costume‑swap use case: For directors, this highlights a concrete workflow where one performance pass can yield multiple wardrobe or character variants, useful for low‑budget superhero beats, magical transformations, or fashion ads that need the same actor across looks.

The example shifts Ray3 Modify from abstract identity‑locking tech into a specific visual trope filmmakers can immediately map onto storyboards.

Lovart’s 2025 recap short spotlights AI design work as a filmic story

2025 recap short (Lovart): Lovart posts a "First we imagined. Then, we built" 2025 recap film that frames its AI design platform through a cinematic arc rather than a feature checklist (Lovart recap). The piece opens with animated lines forming the word "IMAGINE," then cuts between people working with tech and on‑screen builds before landing on "BUILD," effectively treating the year’s shipping work as a narrative montage.

• Platform as storyline: The spot positions Lovart less as a set of tools and more as a creative journey from concept to finished visuals, which signals how AI design platforms increasingly market themselves with short‑film language instead of static product tours (Lovart recap).

For directors and motion designers, it serves as a reference for how to structure an AI‑centric brand recap into a tight, watchable story rather than a slide deck.

🎭 One‑image character consistency for video

Continues the identity‑consistency arc with OpenArt Character 2.0: a single reference locks face/style across shots, then Seedance 1.5 Pro adds motion and audio for quick IP teasers.

OpenArt Character 2.0 turns one image into consistent, animated IP teasers

OpenArt Character 2.0 (OpenArt): AzEd walks through a pipeline where one reference image is enough to build a reusable character, generate multiple angles, and then animate them into short clips with sound, all in under 30 minutes (workflow explainer); they frame it as “creating videos with true character consistency is finally real in 2025” (creator sentiment).

• One-image character builder: The flow starts in OpenArt’s Character tool—upload or describe a character, click “Build my character,” review auto-generated angles and confirm “looks good,” then save it as a reusable identity for future prompts, as shown in the character creation and the linked character page.

• Prompted scene variety: With the saved character handle (e.g. @Mia), they show simple prompts for close-ups, full-body walks, and UI-layered shots that preserve face and style while changing framing, lighting, and emotion (mia prompts).

• Animation and editing stack: Still frames are animated in Seedance 1.5 Pro with SFX and audio specified directly in the text prompt, then assembled with transitions and music in CapCut, producing a finished character-driven teaser in a single session (animation recap).

The thread positions this as a solo-friendly way to prototype original IP and recurring characters for short-form video without custom training or heavy manual compositing.

🖼️ Reusable looks: blueprints, cinematic comics, crayons, and monochrome packs

A rich round of styles and prompts for illustrators: blueprint patents, filmic comic panels, children’s crayon aesthetics, stark B/W srefs, plus a Gemini emoji‑directed poster workflow.

AzEd’s blueprint schematic prompt becomes a reusable patent-style look

Blueprint schematic prompt (AzEd): AzEd follows up on the earlier character‑sheet work in blueprint style with a general "blueprint schematic of a [subject]" prompt in early‑20th‑century industrial patent style that artists can drop any object into, from bikes to robots, as detailed in the Blueprint prompt post. The core recipe fixes the medium (crisp blue background with white technical lines, exploded views, angular labels, diagram codes) so only the subject and composition change.

• Community remixes: Replies show the same text pattern holding up across a powered exoskeleton suit sheet (Exoskeleton example), a whimsical animatronic "killer cat" blueprint (Robot cat blueprint), and even Star Wars spacecraft reimagined as industrial patents (Star Wars blueprint), turning this into a de facto reusable style pack for product, mech, and sci‑fi concept art.

Gemini emoji-directed poster prompt turns selfies into 2026 art prints

Emoji‑driven poster workflow (Gemini): Ozan Sihay shares a Turkish prompt template for Gemini where users upload their own photo and steer the entire art direction using a short row of emojis, with Gemini keeping the person’s identity and rebuilding clothes, pose, and setting to match the emoji story (Gemini poster prompt). The prompt enforces a vertical 4:5 layout, an award‑style art poster look, and big 3D "2026:" typography followed by two positive Turkish words that Gemini chooses.

• Reusable layout recipe: The examples show consistent framing, emoji icons in a styled header, and bold 3D type at the bottom (Gemini poster prompt), giving designers and influencers a single prompt they can reuse for yearly themes or campaigns by swapping the emojis and letting Gemini remix outfit, environment, and tagline while preserving facial likeness.

Midjourney Narrative Comic Art sref 1087357869 nails cinematic comic panels

Narrative Comic Art sref 1087357869 (Artedeingenio): Artedeingenio surfaces a Midjourney style reference that makes each frame read like a modern comic panel frozen mid‑scene, with extreme close‑ups, low angles, and aggressive directional lighting across superhero and genre portraits, as described in the Comic style explainer. The style leans hard into film language—over‑the‑shoulder shots, dramatic framing, and strong contrast—so outputs feel like story beats rather than generic key art.

• Use cases: The examples show it handling caped vigilantes, Venom‑like creatures, and armored Templar knights with equal consistency (Comic style explainer), giving illustrators a single sref they can reuse for comic covers, narrative one‑shots, or storyboard‑style social posts.

Monochrome Midjourney sref 7291264699 delivers stark fantasy black‑and‑white pack

Monochrome fantasy pack sref 7291264699 (AzEd): AzEd shares a Midjourney style reference that locks into high‑contrast black‑and‑white illustration, producing muscular warriors, ravens over misty monasteries, hulking cattle, and forest spirits with graphic, almost etched rendering (Monochrome style post). The look strips out color entirely and focuses on heavy shadow shapes, fine texture, and bright fog or mist to separate foreground from background.

• Storytelling applications: The consistency across character close‑ups, animal portraits, and wide environmental shots (Monochrome style post) positions this sref as a reusable base for fantasy one‑sheets, interior book art, tarot‑like cards, or moody key frames where creators want a unified monochrome world.

Wax-crayon children’s book sref 1086610381 standardizes naive illustration look

Children’s crayon style sref 1086610381 (Artedeingenio): A new Midjourney style reference focuses on deliberately naive, hand‑drawn children’s illustration, with wax‑crayon textures, clumsy‑charming proportions, and bright flat backgrounds, according to the Crayon style explainer. The reference set covers a shaggy dog, a wide‑eyed cat, a kid astronaut, and a pirate, all rendered with the same chunky line, scribbled shading, and bold color blocks.

• Children’s IP ready: Because the style keeps faces, eyes, and props readable while staying loose (Crayon style explainer), it functions as a reusable pack for picture books, early‑reader covers, classroom posters, and social content that needs a kid‑authored feel without complex prompt engineering.

🗣️ Voices and agents at scale

ElevenLabs adds a new SOTA Speech‑to‑Text to power its Agents Platform and recaps 3.3M agents built in 2025 with auto language switching, turn‑taking, testing/versioning, and WhatsApp/IVR support.

ElevenLabs hits 3.3M agents and adds SOTA Speech-to-Text

ElevenLabs Agents Platform (ElevenLabs): ElevenLabs reports that enterprises and developers created more than 3.3 million agents on its voice-first Agents Platform in 2025, and is now rolling out a new state-of-the-art Speech-to-Text model that powers those agents end-to-end, as outlined in the feature recap and the stt blog. The company’s 2025 wrap-up highlights Automatic Language Detection for on-the-fly code-switching, a dedicated turn-taking model for more natural dialog, Agent Workflows for tightly controlled conversation flows, plus Testing, Versioning, Chat Mode, and integrations into WhatsApp and IVR navigation, summarized in the agents recap.

• Contact center angle: The combination of large-scale usage (3.3M agents), multi-language STT, testing/versioning, and WhatsApp/IVR channel support positions the platform squarely at call-center and support-style deployments where reliability and auditability matter (feature recap).

🤖 Coding models and agents for builders

For creative coders: MiniMax M2.1 open‑sourced with strong multilingual/code benchmarks and agent demos; plus a Hyperbrowser shopping agent that ranks web results.

MiniMax open-sources M2.1 coding model with strong multilingual and tooling scores

M2.1 coding model (MiniMax): MiniMax has open-sourced M2.1, a multilingual coding model positioned as competitive with top closed models on standard benchmarks, while explicitly targeting real-world, multi-language software work across Rust, Java, Go, C++, Kotlin, TypeScript, and JavaScript as described in the M2.1 overview. It scores 72.5% on SWE-multilingual, 74.0% on SWE-bench Verified, and 88.6% on the VIBE full‑stack benchmark, with separate Android (89.7%) and iOS (88.0%) tracks that highlight mobile app capability according to the benchmark stats.

• Tool and system tasks: The team reports 47.9% on Terminal-bench 2.0 for command-line and system operations, plus 43.5% on Toolathlon for long-horizon tool use, framing M2.1 as suitable for agents that must orchestrate shells and utilities over many steps, as outlined in the benchmark stats.

• Token efficiency and speed: M2.1 is said to match M2’s quality while using fewer tokens and running faster in practice, suggesting lower cost and latency for code-generation workflows in the M2.1 overview.

• Agent framework coverage: MiniMax notes that M2.1 was exercised under multiple open agent harnesses—Claude Code, Droid, and mini-swe-agent—where it showed consistent behavior across scaffolds, which matters for builders standardizing on those ecosystems per the M2.1 overview.

The thread frames M2.1 as an open, multi-language coding workhorse rather than a Python-only specialist, though independent third-party evaluations beyond the shared numbers have not appeared in these tweets yet.

MiniMax uses M2.1 Agent to auto-build physics, fireworks, and music apps

M2.1 agent demos (MiniMax): Using the new M2.1 model through MiniMax’s Agent platform, a creator reports building three interactive web demos (a physics sandbox, a New Year fireworks simulator, and a music visualizer) mostly by describing the desired behavior in natural language instead of writing all the boilerplate code, as shown across the physics demo, fireworks demo, and visualizer demo. The workflow runs entirely through MiniMax’s hosted agent interface, with no local setup or API key configuration required according to the M2.1 overview.

• Physics simulation: The interactive physics tool exposes four different simulation modes, wiring up the correct equations and parameter controls so users can tweak values and immediately see behavior change, with the author emphasizing that all core logic came from the agent in the physics demo.

• Fireworks sandbox: For the holiday fireworks example, the agent generated a simulation with realistic launch arcs and explosions, again including parameter sliders for experimentation while the human supplied only a high-level prompt, as seen in the fireworks demo.

• Music visualizer: The music visualizer lets users upload an audio file and see three different visualization modes—frequency bars, circular patterns, and particle effects that react to the beat—after just two prompts to the agent, according to the visualizer demo.

Taken together, these demos illustrate how M2.1 is being used not only for solving benchmark issues but also for end-to-end scaffolding of small, interactive web apps that are especially relevant to creative coders and educators.
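The source does not show the code the agent produced, so the following is only a sketch of how a "frequency bars" mode can be computed in principle: take a short window of audio samples, compute frequency-bin magnitudes, and average neighbouring bins into a handful of bar heights. The function names, window size, and bar count are illustrative assumptions, and the naive transform is chosen for readability rather than speed.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Magnitude of each frequency bin up to Nyquist (naive O(n^2) DFT)."""
    n = len(samples)
    mags = []
    for k in range(n // 2):
        acc = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                  for i, s in enumerate(samples))
        mags.append(abs(acc) / n)
    return mags

def frequency_bars(samples, n_bars=4):
    """Average neighbouring bins into n_bars heights.

    Assumes len(samples) >= 2 * n_bars so every bar covers at least one bin.
    """
    mags = dft_magnitudes(samples)
    size = len(mags) // n_bars
    return [sum(mags[b * size:(b + 1) * size]) / size for b in range(n_bars)]

# A pure tone sitting in bin 2 of a 32-sample window lights up the first bar.
tone = [math.sin(2 * math.pi * 2 * i / 32) for i in range(32)]
bars = frequency_bars(tone)
```

A production visualizer would use a fast transform and redraw the bars for each new window of audio.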

Hyperbrowser-powered gift finder agent ranks products from web context

Gift finder agent (Hyperbrowser): A developer showcases a Hyperbrowser-based AI gift finder that takes a natural-language brief about a person—their age, interests, and budget—then searches the web, analyzes the options, and returns a ranked list of gift ideas tailored to that profile, as demonstrated in the gift finder demo. The tool runs as an autonomous browsing agent rather than a static recommender, using live web pages as its source of truth.

The example emphasizes that the agent both discovers and filters products in context (instead of relying on a fixed catalog), then narrates why each result fits the described person, which aligns Hyperbrowser with broader efforts to turn LLMs into practical research and shopping assistants for builders who want to wire similar workflows into their own apps.
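The filter-then-rank step described above can be sketched without any browsing at all. In this sketch the catalog is made up, the scoring rule (count of shared interest tags, cheaper items first on ties) is a hypothetical choice, and no Hyperbrowser API is used; the real agent gathers candidates from live pages and the source does not describe how it scores them.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Product:
    name: str
    price: float
    tags: frozenset

def rank_gifts(products, interests, budget):
    """Drop over-budget items, then sort by interest overlap (price breaks ties)."""
    wanted = set(interests)
    affordable = [p for p in products if p.price <= budget]
    return sorted(affordable, key=lambda p: (-len(p.tags & wanted), p.price))

# Made-up candidates standing in for products found on the web.
catalog = [
    Product("film camera", 120.0, frozenset({"photography", "vintage"})),
    Product("photo book", 35.0, frozenset({"photography"})),
    Product("vintage lens kit", 45.0, frozenset({"photography", "vintage"})),
    Product("board game", 30.0, frozenset({"games"})),
]
ranked = rank_gifts(catalog, {"photography", "vintage"}, budget=50.0)
```

Here the film camera is excluded for exceeding the budget, and the lens kit ranks first because it matches both interests.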

📣 AI in brand films and advertising

Case studies for marketers: Lexus × AKQA’s winter film, Telefónica Movistar’s coherent AI campaign, and Old Spice’s overtly AI, absurdist spot—each treats AI as a creative language, not a shortcut.

Lexus and AKQA use AI to deliver a cinematic winter brand film

Built for Every Kind of Wonder (Lexus × AKQA): Lexus and AKQA released a winter brand film built with generative AI that still plays like traditional cinema, framing a snow‑globe journey through frozen lakes and night drives before revealing it all inside a child’s hand‑held globe (Lexus AKQA post). The AI work sits inside AKQA’s Virtual Studio pipeline, mixing image‑to‑video tools with standard art direction so the spot can run across Lexus’ owned digital and social channels in EMEA.

• AI as enabler, not subject: The campaign copy stresses that AI increased efficiency and flexibility while the creative team kept control of story, pacing, and framing, with technology described as supporting rather than defining the piece (Lexus AKQA post).

• Budget and scope implications: The thread argues that this kind of workflow lets brands deliver dreamlike, location‑heavy films that previously needed large shoots, as explained further in the shared AI UGC methodology article (workflow article).

The film positions large brands to treat generative video as another tool in the cinematic toolbox rather than a shortcut that cheapens the look.

Telefónica Movistar’s AI campaign shows brand-safe, coherent generative spots

AI brand film (Telefónica Movistar): Telefónica Movistar launched a generative‑AI campaign produced with Mito Films and executed by SuperReal that focuses on rhythm, animation, and narrative coherence instead of spectacle, using AI to speak the brand’s existing visual language rather than chase novelty (Movistar case study). The creative team explicitly worked to avoid uncanny faces and odd motion so the finished film feels like a polished Movistar spot that happens to be AI‑assisted.

• Craft over wow factor: The breakdown highlights shot‑to‑shot continuity, shot rhythm, and animation choices as the main achievements, saying the goal was to keep viewers inside the story instead of pausing on AI artifacts (Movistar case study).

• Signal for marketers: The post frames the project as evidence that generative AI can become a "mature, expressive tool" aligned with brand culture when guided by strong art direction, building on the broader AI‑for‑ads workflow described in the linked methodology article (workflow article).

For advertisers, it’s an example of AI used to maintain and extend a brand’s visual identity rather than replacing it with generic AI aesthetics.

Old Spice’s AI goat commercial leans into visible weirdness

Don’t take the goat for a walk (Old Spice): Old Spice and Don by Havas released a fully AI‑generated spot built around the line "Don’t take the goat for a walk," deliberately letting the AI‑ness show instead of hiding behind realism (Old Spice goat spot). The film keeps the brand’s long‑running surreal, absurd humor and uses generative visuals as part of the joke rather than trying to pass as live‑action.

• AI as part of the gag: The commentary notes that the spot has small visual flaws and doesn’t chase hyper‑realism, but argues it still succeeds because the visible AI artifacts match Old Spice’s offbeat tone (Old Spice goat spot).

• Transparent use of tools: AI is framed as a creative language in its own right, with the post contrasting this open approach to campaigns that quietly use AI while pretending to be traditional shoots, referencing the same broader workflow article for context.

The campaign offers a template for brands that are comfortable letting audiences know an ad is AI‑made and folding that fact into the humor.

Picsart AI UGC workflow shows how influencer-style ads can fool viewers

AI UGC case study (Picsart collaboration): A detailed case study walks through an AI‑generated influencer vlog so realistic it reportedly fooled both casual viewers and experienced AI users, showcasing a pipeline built in partnership with Picsart (UGC workflow thread). The creator designed a persona called Anna (18‑year‑old Italian‑American vlogger), generated her from multiple neutral angles, mapped several emotional states, and then produced smartphone‑style scenes where AI controls both subject and environment.

• Method over tools: The piece emphasizes story, character backstory, emotional mapping, and textual storyboards before generation, then layers in "wrong" quality—rough cuts, unplanned gestures, dust on mirrors—to mimic imperfect UGC instead of polished ads (UGC workflow thread).

• Tool stack for marketers: The workflow credits Nano Banana for consistent subject rendering and Veo 3.1 for native audio and smooth scene continuity, presented in more depth in the linked article that also underpins the Lexus, Movistar, and Old Spice breakdowns (workflow article).

For brand and UGC teams, it documents how generative tools can produce sponsored‑content‑style videos that are hard to distinguish from real influencer footage when paired with careful narrative design.

🧪 Realtime diffusion, selective edits, and MoE routing

Mostly video‑gen and editing research: on‑policy distilled realtime video diffusion, transparent object depth/normal estimation, streamable VSR, selective region editing for DiTs, and MoE expert‑router coupling.

LiveTalk targets 20× lower latency for interactive multimodal video diffusion

LiveTalk (research collaboration): LiveTalk proposes a real‑time, multimodal interactive video diffusion system that uses improved on‑policy distillation to reduce inference cost and latency by about 20× versus full‑step bidirectional diffusion baselines, according to the paper mention and the detailed description on the paper page. It focuses on avatar‑style human–AI conversations conditioned on text, images, and audio, while explicitly tackling artifacts like flicker and black frames during fast updates.

• Realtime creative use cases: The paper frames applications such as talking heads, live streaming presenters, and interactive characters where creators need responsive, lip‑synced video that behaves more like a call than an offline render, as outlined in the paper page.

“Diffusion Knows Transparency” turns video diffusion into a transparent-depth estimator

Diffusion Knows Transparency (research group): The “Diffusion Knows Transparency” paper repurposes pre‑trained video diffusion models to estimate depth and surface normals for transparent objects, turning a generative model into a 3D perception tool for glass, liquids, and other refractive materials, as introduced in the paper intro and summarized in the paper summary. That gives VFX, compositing, and AR pipelines physically meaningful passes for hard‑to‑capture transparent regions without training a dedicated 3D model from scratch.

SpotEdit enables training-free selective region editing in diffusion transformers

SpotEdit (research collaboration): SpotEdit presents a training‑free selective region editing framework for diffusion transformers, letting users modify only chosen parts of an image while keeping untouched areas nearly identical, according to the paper intro and the methodology in the paper summary. It combines a SpotSelector module that detects stable regions which can skip recomputation with a SpotFusion mechanism that blends edited and original tokens so composition and context remain coherent for creatives who want local changes without global drift.

• Efficiency and quality: By avoiding denoising in stable areas, the approach cuts compute while preserving fine details in backgrounds and unedited subjects, which matters for iterative design and art‑direction workflows highlighted in the paper summary.
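The select-then-fuse idea described above can be illustrated with a toy sketch. This is not SpotEdit's actual implementation: the function names `stable_mask` and `fuse_tokens`, the mean-absolute-difference test, and the `tol` threshold are all assumptions chosen for illustration, and tokens are plain Python lists rather than transformer activations.

```python
# Toy illustration of "skip stable regions, blend the rest".
# All names and the stability test are hypothetical, not from the paper.

def stable_mask(original, candidate, tol=0.05):
    """Mark a token as stable when it barely differs from the original."""
    mask = []
    for o, c in zip(original, candidate):
        diff = sum(abs(a - b) for a, b in zip(o, c)) / len(o)
        mask.append(diff <= tol)
    return mask


def fuse_tokens(original, edited, mask, keep=1.0):
    """Keep original tokens in stable regions, edited tokens elsewhere.

    `keep` is the weight given to the original token in stable regions;
    1.0 means stable tokens are copied through unchanged.
    """
    fused = []
    for o, e, stable in zip(original, edited, mask):
        w = keep if stable else 0.0
        fused.append([w * a + (1.0 - w) * b for a, b in zip(o, e)])
    return fused


original = [[1.0, 1.0], [0.0, 0.0]]
edited = [[1.0, 1.02], [0.5, 0.5]]   # only the second token really changed

mask = stable_mask(original, edited)
fused = fuse_tokens(original, edited, mask)
print(mask)
print(fused)
```

In this sketch the first token is flagged stable and passes through untouched, while the second takes the edited value. A real system would apply the same split per denoising step, so the compute saving comes from never recomputing the stable tokens at all.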

Stream-DiffVSR proposes low-latency, streamable diffusion video super-resolution

Stream-DiffVSR (research group): Stream‑DiffVSR introduces a low‑latency, streamable video super‑resolution method that applies auto‑regressive diffusion over incoming frames so high‑resolution outputs stay in sync with playback, rather than requiring offline batch processing, as described in the vsr mention and the associated paper summary. The work emphasizes temporal consistency and small per‑frame compute, which is directly relevant for live previews, streaming upscalers, and interactive editing viewports where delay is highly visible.

ERC loss tightens expert–router alignment in mixture-of-experts models

ERC loss for MoE (research group): The expert‑router coupling (ERC) loss introduces a lightweight auxiliary objective for mixture‑of‑experts models that encourages router embeddings to reflect what each expert actually does, aligning routing decisions with expert capabilities as outlined in the paper intro and the paper summary. It treats each expert’s router embedding as a proxy token, then enforces that experts respond most strongly to their own proxies and that each proxy activates its corresponding expert more than any other, which aims to improve specialization and overall compute efficiency without redesigning the core MoE architecture.
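The two constraints described above can be written as a small hinge-style penalty. The sketch below is an assumption-laden illustration, not the paper's formulation: the matrix `M`, the function name `erc_style_penalty`, the `alpha` scaling factor, and the normalization are all hypothetical. Here `M[i][j]` stands for how strongly expert `j` responds to the proxy token built from expert `i`'s router embedding.

```python
# Hypothetical hinge-style coupling penalty; names and form are assumptions.

def erc_style_penalty(M, alpha=1.0):
    """Penalize off-diagonal responses that exceed alpha * the matching diagonal.

    M[i][j]: response strength of expert j to proxy token i.
    """
    n = len(M)
    total = 0.0
    for i in range(n):
        own = alpha * M[i][i]
        for j in range(n):
            if i == j:
                continue
            # Proxy i should activate expert i more than any other expert j.
            total += max(0.0, M[i][j] - own)
            # Expert i should respond to its own proxy more than to proxy j.
            total += max(0.0, M[j][i] - own)
    return total / (n * n)


well_coupled = [[2.0, 1.0],
                [1.0, 2.0]]
mis_coupled = [[1.0, 3.0],
               [0.0, 1.0]]

print(erc_style_penalty(well_coupled))  # diagonal dominates, so no penalty
print(erc_style_penalty(mis_coupled))   # expert 1 over-responds to proxy 0
```

Because the penalty only needs one response value per expert and proxy pair, an objective of this shape scales with the number of experts rather than the number of training tokens, which is consistent with the "lightweight auxiliary objective" framing.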

🎁 Creator promos, credits, and quick‑start kits

Consumer‑facing offers and funnels: Pollo 2.5 free tries, Lovart credit cuts up to 70%, InVideo’s Vision one‑prompt multi‑shot tool, ApoB face‑swap credits, and Pictory’s script‑to‑video CTA.

InVideo’s Vision tool turns one prompt into nine connected story shots

Vision multi‑shot generator (InVideo): InVideo is promoting its new Vision tool, which takes a single text prompt and generates nine connected shots designed to form a coherent mini‑story, as outlined in the partner walkthrough. The showcased example uses a "Terminator in his daddy era" idea, with Vision keeping character appearance stable across all shots and then dropping them into InVideo’s editor to assemble a finished scene in minutes, according to the followup demo.

This positions Vision as a quick‑start kit for UGC‑style ads, short skits, and narrative social clips where creators want storyboarded variety without manually scripting or cutting every shot.

Lovart cuts Nano Banana and video model credit usage by up to 70%

Credit cuts for Nano Banana and video models (Lovart): Lovart says it has reduced credit consumption by up to 70% across its AI design agent, cutting Nano Banana Pro usage by 50% and slashing usage for Veo 3, Sora 2, Kling O1 and other models by 40–70% in the credit update. The company frames this as "10x creative power" heading into 2026 and is pushing users to upgrade plans while the discounted consumption is live via the pricing page.

For image, video, and 3D creators already building on Lovart’s multi‑model stack, these changes effectively increase how many shots, style variations, or iterations they can generate per month at the same budget.
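As a quick sanity check on what those cuts mean at a fixed budget, a credit reduction of `c` lets the same credits cover `1 / (1 - c)` times as many generations. The helper below is a hypothetical illustration, assuming per-generation cost is the only thing that changed; the function name is not from Lovart.

```python
# Back-of-envelope arithmetic; assumes only per-generation cost changed.

def output_multiplier(credit_cut):
    """Return how many times more generations a fixed budget buys."""
    if not 0.0 <= credit_cut < 1.0:
        raise ValueError("credit_cut must be in [0, 1)")
    return 1.0 / (1.0 - credit_cut)


for cut in (0.40, 0.50, 0.70):
    print(f"{cut:.0%} cut -> {output_multiplier(cut):.2f}x generations per budget")
```

Under that assumption, a 50% cut doubles output and a 70% cut gives roughly 3.3 times as many generations for the same spend.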

Pictory pushes script‑to‑video funnel for multi‑input AI clips

Script‑to‑video funnel (Pictory): Pictory is running a marketing push for its AI pipeline that turns written scripts into ready‑to‑publish videos, emphasizing that users can start from text, URLs, PowerPoint decks or image collections and have the system add subtitles, AI voiceovers and music, as described in the script promo. The linked product page positions Pictory as a hub for script‑to‑video, URL‑to‑video, PPT‑to‑video and images‑to‑video flows aimed at social feeds and internal communications, according to the product homepage.

The campaign targets non‑editor creatives who want to repurpose existing written or slide content into short AI‑assembled videos without learning a full NLE.

Pollo 2.5 New Year Special offers free tries and 77‑credit giveaway

Pollo 2.5 New Year Special (itsPolloAI): itsPolloAI is promoting a "New Year Special" collection powered by its Pollo 2.5 video model, offering three free generation tries for every template plus a 12‑hour event where users who follow, retweet and reply "ReadyFor2026" can win 77 credits, according to the New Year promo. The campaign stresses instant, social‑ready vertical clips with festive background music and TikTok/Reels‑native formatting, as reinforced in the feature recap.

For AI creatives and social video makers, this temporarily lowers the barrier to testing Pollo 2.5 for short New Year‑themed ads and templates before committing paid credits.

💬 Creator mood: end‑of‑year picks and pro‑AI swagger

Cultural pulse items: a poll to crown the single best AI release of 2025 and blunt pro‑AI posts dismissing ‘AI steals/water’ critiques—engagement heavy, opinionated, and very creator‑core.

Pro‑AI creators shrug off ethics debates and mock “slop” worries

Pro‑AI stance (multiple creators): Several creators leaned hard into a dismissive posture toward AI criticism, with one saying that even if AI "steals" or consumes water they "don’t give a damn" and will keep using it "happily and without a second thought", per the ethics dismissal post. Following up on the earlier AI art economy item, which framed opponents as jealous and unproductive, these new posts move from economic justification to open mockery of people trying to argue against gen‑AI in the comments.

• Ethics fatigue: The recurring complaints about training data, energy use, and environmental impact are portrayed as irrelevant to actual usage decisions, with the author emphasizing that they and other users will continue regardless of such debates, per the ethics dismissal post.

• Hater ridicule: Another thread laughs at lone negative comments under high‑engagement AI posts, calling the critics' behavior "embarrassing" and suggesting the broader audience response makes their objections socially marginal, per the hater mockery thread.

• Slop debate: A separate creator pushes back on "AI slop" discourse by arguing that low-effort, mass-produced content has existed for years in short-form video feeds, and that high-quality work still stands out even as generative tools ramp up volume, per the slop comment.

Creators debate best AI release of 2025 in single-pick poll

Best-of-2025 poll (AI for Success): Creator account @ai_for_success asked followers to name the single best AI release of 2025, giving their own vote to Nano Banana Pro and pulling in hundreds of replies that read like a year-end canon for working creators, per the poll question. The conversation clusters strongly around image and video tools rather than base LLMs, highlighting where many designers and storytellers feel the biggest shift in their daily workflows.

• Emerging favorites: Nano Banana Pro shows up repeatedly in prompt threads and workflows for stills and video hybrids (nano banana prompt); Veo 3.1 is explicitly called out as "still one of the best" video models in a short reel (veo sentiment); and GPT Image 1.5 gets name-checked as a personal top pick in replies (gpt image pick). Together these sketch a creator-side ranking that prioritizes cinematic video and high-fidelity art over raw benchmark charts.

📊 Progress snapshots and 2026 outlooks

Light evals and sentiment: METR’s task‑horizon chart trending toward harder software tasks, a community check on ‘which AI level’ we’re at, and users re‑upping that Veo‑3.1 still feels top‑tier.

METR chart points to multi‑hour software tasks at 50% success by 2026

Task horizon chart (METR): A community share of METR’s "time-horizon of software engineering tasks" graphic shows newer LLMs pushing toward 50% success on tasks estimated to take up to several hours, trending from GPT‑2 through projected 2026 systems, as shown in the METR chart share. Following up on the earlier Claude tasks item, which highlighted Claude Opus 4.5 reaching roughly 4h49m of bug‑fixing work, this snapshot reinforces how quickly end‑to‑end software capabilities have climbed over about five years and is shaping how builders price the next year of progress.

Stanford experts see no AGI in 2026 but rising AI sovereignty and measured impact

2026 AI outlook (Stanford experts): A Stanford‑linked highlights reel distills expert predictions that artificial general intelligence will not arrive in 2026, while national "AI sovereignty" efforts and local model stacks are expected to accelerate on the back of 2025 investments by countries such as the UAE and South Korea, per the Stanford outlook. The summary also forecasts modest, uneven productivity gains centered on programming and call centers, more realistic debates over environmental cost and ROI, a potential "ChatGPT moment" for medical AI via self‑supervised healthcare models, and the emergence of real‑time dashboards to track AI’s economic and labor effects.

Veo‑3.1 still framed as one of the strongest video models by creators

Veo‑3.1 video model (Google): Creators continue to describe Veo‑3.1 as a top‑tier video generator, with one post flatly saying it is "still one of the best model out there" while showing a polished, abstract color‑wave demo, per the Veo praise post. The model also sits at the core of more elaborate workflows where Nano Banana handles subject consistency and Veo‑3.1 delivers native audio, temporal continuity, and scene‑to‑scene realism for brand UGC and major ad campaigns, including a Telefónica Movistar spot and a highly realistic vlog‑style project, as covered in the Movistar campaign and UGC workflow items.

Creators debate whether 2025 AI sits at “reasoners” or “agents” stage

AI future levels (OpenAI concept): A creator resurfaced Bloomberg’s "OpenAI Imagines Our AI Future" table that splits progress into five stages (Level 1 chatbots, Level 2 reasoners, Level 3 agents, Level 4 innovators, and Level 5 organization‑scale AI) and asked which level best describes 2025 systems, per the AI levels question. The prompt explicitly contrasts Level 2 "reasoners" with Level 3 "agents", nudging practitioners who work with tools like GPT, Claude, and Gemini to judge whether day‑to‑day use already qualifies as agentic (systems taking meaningful actions) or still feels like advanced conversational help.

Demis Hassabis outlines world‑model route to AGI and post‑AGI society

AGI trajectory (Demis Hassabis, Google DeepMind): Notes from a Demis Hassabis conversation emphasize a 50/50 strategy between scaling compute and developing new architectures, with world models that understand physics framed as crucial for robotics and "universal assistant" behavior, per the Demis summary. The recap credits Gemini 3 with leading multimodal capabilities, highlights simulation systems like Genie, discusses jagged intelligence where models ace IMO‑level problems yet miss basic reasoning, and suggests AGI convergence could come "in the coming years" while calling for international cooperation, ethical development, and long‑term well‑being‑focused AI design, per the AGI talk notes.

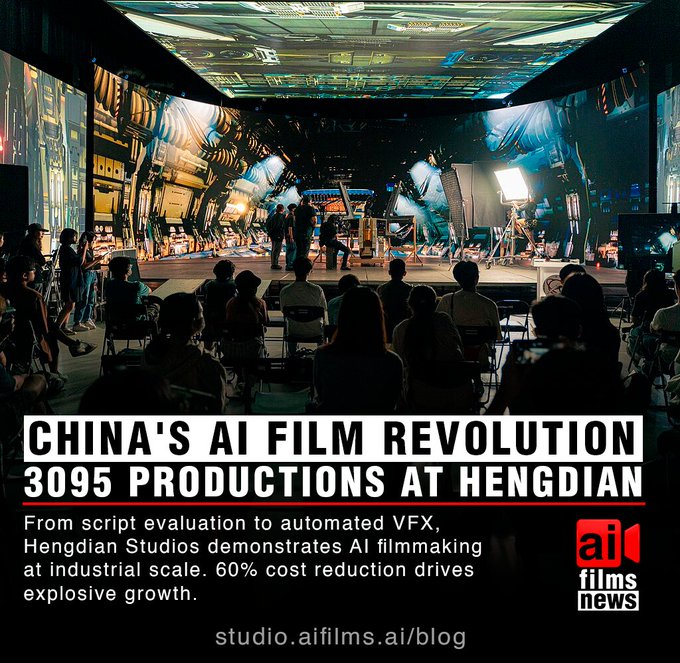

Hengdian’s AI‑assisted micro‑drama boom shows industrial‑scale AI filmmaking

AI‑driven micro‑dramas (Hengdian Studios): Commentary on Hengdian World Studios describes an AI‑heavy boom in Chinese "micro‑drama" production, citing 3,095 AI‑supported projects and a roughly $7 billion market in 2024, with around 647,000 jobs tied to the ecosystem and production costs about 60% lower than comparable overseas work, per the Hengdian summary. The linked Hengdian blog analysis explains that custom LLMs for script evaluation, AI for VFX, digital crowds, real‑time virtual production, and automated copyright monitoring let teams analyze scripts up to 1.2 million words in hours across more than 20 production bases, signalling what industrial‑scale AI filmmaking looks like heading into 2026.
