Kling 2.6 Motion Control fuels 12s dramas – Reachy ships robots

Executive Summary

Kling 2.6 broadens beyond raw Motion Control tests into templates and story tools: a new “2025 recap” effects pack targets TikTok/Reels year‑in‑review clips; Kling O1 turns Freepik image grids into single‑step cinematic scenes; anime prompts highlight quiet, painterly transformations alongside memeable Trump and Harley Quinn dance remixes and a drifting race car stress test that keeps parallax and smoke coherent. Seedance 1.5 Pro counters with a 12s Korean drama micro‑trailer where dialogue, camera, and “perfect” lip‑sync come from one text prompt; creators claim stable multi‑shot identity and native voices across Korean, Spanish, and Mandarin for talking‑character shorts.

• Video ecosystem and tools: Higgsfield Cinema Studio powers a “Fight or Disappear” cosmic jellyfish short; Grok Imagine, Veo 3.1, WAN 2.6, and Minimax Hailuo 02 anchor noir, wildlife, “unlimited” style, and anime action looks; Gamma hooks into Nano Banana Pro for editable decks, Pictory ships Brand Kits, Replicate launches Screenshot‑kit, while Claude’s Chrome plugin draws UX complaints.

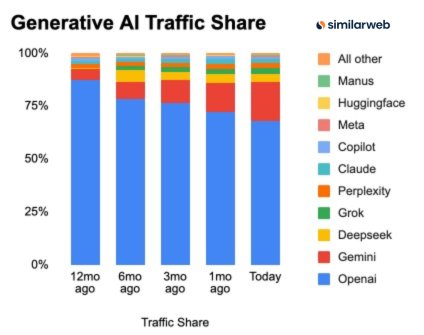

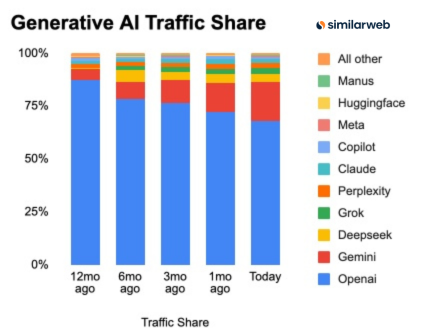

• Robots, platforms, and community: Pollen Robotics ships 3,000 Reachy Mini units and wires MCP and GradiumAI into live demos; Similarweb shows OpenAI traffic share down ~19.2 pts as Gemini gains ~12.8; GLM‑4.7 rises on open‑weight indices; Midjourney teases better text and new tarot/Ghibli‑style srefs; LumaLabs pushes “multiplayer AI” scenes; NoSpoon’s Infinite Films contest and Azed_ai’s 2025 art call turn year‑end model gains into public showcases.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

Kling 2.6 Motion Control: pop‑remix clips and new recap effects

Kling 2.6 dominates feeds again, now with a “2025 recap” effects drop and pipelines from image grids, fueling polished pop remixes and anime transforms that suggest it is becoming a default short‑form director’s tool.

🎬 Kling 2.6 Motion Control: pop‑remix clips and new recap effects

Today’s clips show creators leaning on 2.6 for memeable pop culture remixes and anime transformations, plus a fresh “2025 recap” effects drop and an O1 workflow turning image grids into scenes—new angles beyond yesterday’s orientation tips.

Kling 2.6 drops new 2025 recap effects pack

Kling 2.6 recap effects (Kling_ai): Kling_ai is teasing a fresh set of "New effects" aimed at year‑end recap videos, showing a creator walking into frame before a bold "2025" slate hits, framed like a vertical social clip (Recap effects tease). The implication is that 2.6 now ships with additional recap‑style looks or presets targeted at TikTok/Reels‑style end‑of‑year edits.

For motion designers and editors, this points to Kling leaning into templated, narrative‑friendly effects rather than only raw Motion Control fidelity, making it easier to package highlight reels or personal "year in review" stories directly from generative shots.

Kling O1 turns Freepik image grids into cinematic movie scenes

Image‑grid to scene (Kling O1): Creator Jerrod Lew demonstrates a workflow where Freepik Spaces is used to assemble still image grids, and Kling O1 then converts those grids into fully animated movie scenes (Grid to scene workflow). The setup reportedly "requires only a single" pipeline step once the grid is ready, effectively turning a storyboard‑like collage into a coherent shot.

This positions Kling O1 as a bridge between image‑generation tools and video, letting art teams design compositions and character poses in a grid first, then hand the layout to Kling O1 for timing, camera moves, and performance in one pass.

Anime transformation prompt shows Kling 2.6 can stay quiet and cinematic

Anime transformations (Kling 2.6): A creator continues to experiment with anime transformation scenes in Kling 2.6 and reports "excellent results," sharing a detailed prompt focused on a quiet warrior, glowing markings, armor dissolving into light, and a slowly emerging mythical silhouette (Anime prompt details). The clip emphasizes restrained camera moves and soft lighting over explosive action, framing 2.6 as capable of subtle, sacred‑feeling transformations instead of only high‑energy spectacle.

The prompt also stresses a hand‑drawn look, painterly 2D backgrounds, and gentle camera orbits, and Kling_ai’s own repost signals that Motion Control is being positioned as a serious anime‑style storytelling tool rather than purely a meme engine (Kling repost).

Kling 2.6 Motion Control powers memeable pop‑culture dance remixes

Pop remix clips (Kling 2.6 Motion Control): Multiple creators are leaning on Kling 2.6 Motion Control for tongue‑in‑cheek character dance remixes, from a Trump hotel‑lobby dance to a Harley Quinn spin‑and‑pose shot, all carrying the "Kling 2.6 Motion Control" branding (Trump dance example, Harley Quinn example). These clips show 2.6’s ability to track full‑body performance, props, and hair while staying on‑model for stylized or comic‑book characters.

• Trump lobby dance: A Trump likeness waves a white cloth and shuffles in a lobby, with motion matching a reference dance and leg/arm timing preserved for comedic beats (Trump dance example).

• Harley Quinn spin: A close‑up spin transitions to a wider frame where Harley lands in a bat‑over‑shoulder pose, keeping costume details and head orientation locked through the move.

• Anime shrine dance: A Japanese creator notes that re‑running a 2.6 Motion Control dance with a different character (Makima at a shrine) stays entertaining, hinting at reliable retargeting for anime IP (Makima dance comment).

For storytellers and meme editors, these examples highlight that 2.6 can carry a single dance performance across radically different characters and settings while staying expressive enough for punchline‑driven remixes.

Drifting race car reel stress‑tests Kling 2.6 motion tracking

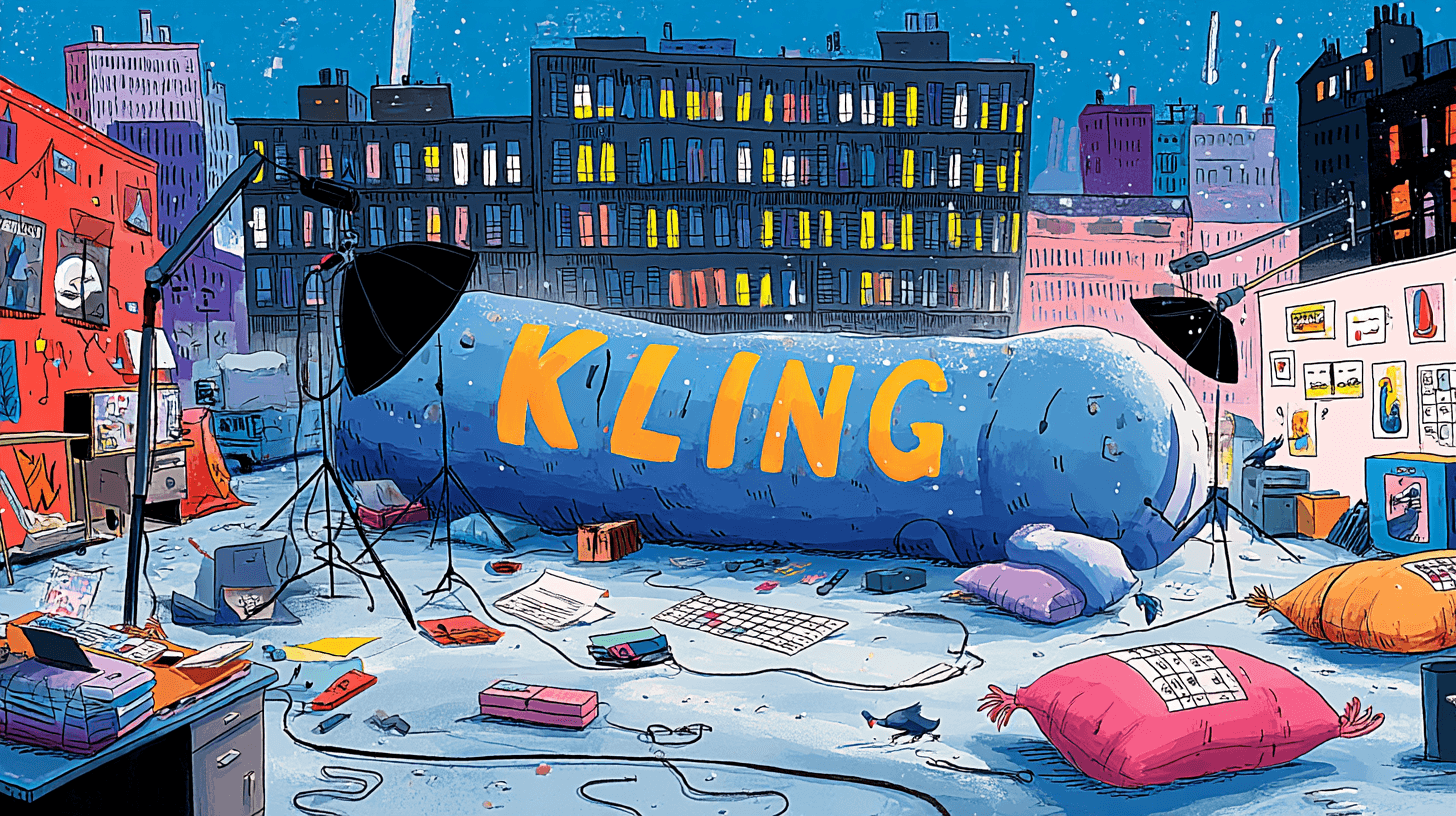

Automotive stunts (Kling 2.6): A new reel shows a blue‑and‑white race car spinning and drifting across asphalt, kicking up dust while a "KLING 2.6" mid‑card flashes on screen (Car drift comment). The car’s rotation, tire smoke, and camera parallax all stay coherent through the maneuver, which functions as a stress test for fast lateral movement and physics‑heavy scenes.

For filmmakers working on action or motorsport sequences, this suggests 2.6 can keep vehicle silhouettes stable and wheels grounded even in tight, 1:1 square framing, which is a frequent weak spot in earlier video models.

🎙️ Seedance 1.5 Pro: native dialogue micro‑trailers

New examples emphasize text‑to‑video with built‑in voices and what creators describe as perfect lip‑sync: a 12s Korean drama trailer and creator threads pointing to multi‑language support and try links. Excludes Kling (covered as the feature).

Seedance 1.5 Pro demos Korean drama micro-trailer with native dialogue

Seedance 1.5 Pro (Higgsfield): ByteDance’s Seedance 1.5 Pro, as offered on Higgsfield, is now showcased in a 12‑second Korean drama micro‑trailer with fully generated dialogue and synced lip motion, following up on the Freepik launch, which highlighted its native audio‑video generation on creative platforms. The scene (a sunset train platform, sakura petals, and two schoolkids whispering breakup lines in Korean) is driven entirely from a long text prompt specifying visuals, camera drift, and exact dialogue beats, as detailed in the Korean trailer clip and the expanded Prompt text detail.

• Native talking heads and lip‑sync: A creator thread describes Seedance 1.5 Pro as “the first AI model that generates talking content with perfect lip-sync and native voice,” stressing that audio and video are generated together in one pass with “no dubbing, no fixes, no uncanny valley,” and showing both realistic and anime talking shots to back the claim, as framed in the Creator feature thread and reinforced by the Announcement recap.

• Multi‑language and storytelling use: The same thread notes that Seedance 1.5 Pro currently supports additional languages like Spanish and Mandarin for native‑sounding speech, and pairs this with claims of stable multi‑shot storytelling—cutting between angles while keeping character identity and timing consistent—which is echoed in the retweeted summary about “stable multi-shot storytelling in Seedance 1.5 Pro” in the Storytelling highlight.

Together, these demos position Seedance 1.5 Pro as a text‑to‑video tool aimed squarely at short narrative pieces—micro‑trailers, K‑drama beats, and talking‑character scenes—where voice, lip‑sync, and cinematography all come from a single prompt rather than a stitched pipeline.

🎥 Stylized video looks beyond Kling: Grok noir, Veo 3.1, WAN 2.6

A grab‑bag of non‑Kling models delivering cinematic aesthetics: Grok Imagine’s neo‑noir reels, Veo 3.1’s wildlife slow‑mo, WAN 2.6’s new unlimited mode, and a Higgsfield cosmic jellyfish short. Excludes Seedance (separate) and Kling (feature).

Grok Imagine leans into neo‑noir and femme fatale shorts

Grok Imagine (xAI): Grok Imagine is emerging as a go‑to for stylized neo‑noir shorts, with creators animating fedora silhouettes, grainy city shots, and hard light transitions, as shown in the Grok noir clip and reinforced by the Grok femme fatale. This model also handles glossy genre beats like futuristic ship fly‑throughs, giving storytellers a palette that ranges from noir to stylized sci‑fi per the Grok sci-fi shot.

The tone leans hard into classic noir, which gives AI filmmakers and motion designers a ready‑made visual language for crime stories, thrillers, and moody title sequences without needing complex grading or compositing work.

Higgsfield Cinema Studio powers “Fight or Disappear” cosmic jellyfish short

Cinema Studio (Higgsfield): Creator Kangaikroto used Higgsfield’s Cinema Studio to build a narrative short where cosmic jellyfish tear through space and attack Earth under the tagline “Fight or Disappear,” combining nebula environments, tentacled creatures, and planetary destruction in one cohesive edit shown in the Higgsfield jellyfish film. The clip mixes title cards, wide environment shots, and close‑ups of Earth under assault, giving sci‑fi storytellers a template for apocalyptic trailers built entirely from AI‑generated footage.

Every shot leans toward dramatic spectacle, so filmmakers and animators can study it as a reference for pacing, shot variety, and transitions when they plan their own high‑stakes cosmic or disaster pieces.

VadooAI launches “UNLIMITED WAN 2.6” as its most unrestricted video model

WAN 2.6 (VadooAI): VadooAI announced “UNLIMITED WAN 2.6,” describing it as their most unrestricted video model with enhanced visuals and a freedom‑first positioning that “sounds illegal, but it’s not,” as framed in the Wan 2.6 launch. For AI filmmakers this signals a push toward looser content and style safeguards, with WAN framed as a playground for bold or experimental looks compared to stricter, brand‑safe video tools.

The branding targets adventurous video creators, suggesting WAN 2.6 is where people will probe the edges of what stylized, high‑impact AI video can depict before more conservative platforms catch up.

Veo 3.1 prompt shows ultra‑slow‑motion bald eagle cinematography

Veo 3.1 (Google): A detailed prompt for Veo 3.1’s “majestic bald eagle” scene highlights how the model can render ultra‑slow‑motion wildlife aerials with sweeping wide shots and cinematic atmosphere, combining depth, motion blur, and large‑scale skies in one pass, according to the Veo eagle prompt. The setup focuses on aerial perspective and ultra‑slow motion, aimed squarely at nature docs, title cards, and prestige‑style B‑roll.

The look is slow, smooth, and theatrical, which gives filmmakers and editors a concrete recipe for animal or aerial inserts that feel like high‑end drone footage rather than generic stock.

Minimax Hailuo 02 nails dynamic anime‑style snowball fight motion

Hailuo 02 (Minimax): A Japanese creator demoed Minimax’s Hailuo 02 with a “dynamic snowball fight tournament,” showing characters sprinting, dodging, and hurling snow in a highly energetic outdoor sequence that stays coherent across the frame, as seen in the Hailuo snowball battle. Rapid camera moves and exaggerated character motion create a slapstick anime tone suited to short‑form comedy, sports parodies, or action‑heavy seasonal clips.

The energy stays high throughout the clip, which highlights Hailuo 02 as a useful option when creators want kinetic, character‑driven action that feels closer to hand‑animated anime than static talking‑head video.

🖼️ Reusable looks: epic cinematic fusion, tarot srefs, Ghibli calm

Today’s stills center on shareable prompt kits: a film‑stock “Epic Cinematic Fusion,” a vintage tarot sref for deck building, and a soft Ghibli‑inspired anime sref. Midjourney also hints at better text rendering in its next version.

Midjourney says upcoming version will significantly improve in‑image text

Upcoming Midjourney version (Midjourney): Responding to user criticism about weak typography, the Midjourney account states that "our next version should have much better text" and admits text "was never a focus" because polls pushed the team toward other priorities, as explained in the reply thread in Text roadmap.

• Creator pressure: Power user Oscar / Artedeingenio replies that even if overall aesthetics matter more, in‑image text rendering is something Midjourney "really need[s] to improve as soon as possible," capturing a common frustration for designers working on posters, cards and UI screens in User feedback.

• Impact for prompt‑kit workflows: Stronger native text would make style‑reference prompts like tarot decks or faux book covers more production‑ready, reducing the need for manual Photoshop fixes or overlaid vector type that many visual storytellers rely on today in Text roadmap.

For AI creatives who care about on‑image lettering—title cards, signage, product labels—this signals that typography fidelity is finally on Midjourney’s near‑term roadmap rather than a long‑term wish.

Epic Cinematic Fusion prompt offers film‑stock hero portraits with 35mm grain

Epic Cinematic Fusion prompt (Azed_ai): Azed_ai packages a reusable "Epic Cinematic Fusion" prompt for Midjourney‑style image generation that blends cultural archetypes, symbolic armor, chiaroscuro lighting and sweeping camera angles into moody hero portraits with grainy 35mm film stock aesthetics, as laid out in the prompt share and sample images in Prompt details.

• Look characteristics: The kit locks in a dramatic sky backdrop, low or sweeping camera, engraved armor, and vintage film color science so different subjects—from a cybernetic pharaoh to a Viking queen or neo‑Tibetan monk—still feel part of one cinematic universe in Prompt details.

• For storytellers and concept artists: The recipe gives narrative teams, cover artists and character designers a fast way to keep tone consistent across casts and locations while still varying ethnicity, costume and setting per shot in Prompt details.

For AI creatives, this functions as a ready‑to‑reuse "house style" for epic, character‑driven key art without hand‑tuning every new prompt from scratch.

Vintage tarot Midjourney sref 3746313528 standardizes Belle Époque deck look

Tarot deck sref 3746313528 (Artedeingenio): Oscar / Artedeingenio surfaces a Midjourney style reference --sref 3746313528 that produces late‑19th/early‑20th‑century‑inspired tarot illustrations with Art Nouveau, Symbolist and Pre‑Raphaelite influences, and shares a concrete card prompt formula in Tarot sref prompt.

• Visual language: The examples for The Devil, The Death, The Lovers and The Sun show aged borders, etched linework, muted yet rich inks and period‑authentic figure styling, matching Belle Époque editorial and esoteric illustration as described in Tarot sref prompt.

• Deck production recipe: The suggested pattern—"Tarot card of The Lovers with the text "THE LOVERS" --ar 9:16 --raw --sref 3746313528"—gives designers a repeatable way to render an entire major arcana set with consistent typography placement, framing and tone in Tarot sref prompt.

This turns Midjourney into a practical tool for building cohesive tarot or oracle decks, print series, and in‑world divination props for films and games.
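The card formula quoted above lends itself to simple templating. The following is a minimal sketch, assuming you want one prompt string per card: only the prompt pattern and the sref code come from the thread, while the function names, the sample card list, and the choice to uppercase the card title for the on‑card text are illustrative assumptions.

```python
# Hypothetical helper for batch-building tarot prompts.
# Only the prompt pattern and the sref code come from the quoted thread;
# every name below is illustrative.
SREF = "3746313528"

# A small sample of cards; extend this list to cover a full deck.
SAMPLE_CARDS = ["The Devil", "The Lovers", "The Sun"]


def tarot_prompt(card: str, sref: str = SREF, aspect: str = "9:16") -> str:
    """Return a prompt string that follows the thread's card formula."""
    return (
        f'Tarot card of {card} with the text "{card.upper()}" '
        f"--ar {aspect} --raw --sref {sref}"
    )


def deck_prompts(cards: list[str]) -> list[str]:
    """Build one prompt per card, keeping the style reference fixed."""
    return [tarot_prompt(card) for card in cards]


if __name__ == "__main__":
    for prompt in deck_prompts(SAMPLE_CARDS):
        print(prompt)
```

Keeping the sref, aspect ratio, and `--raw` flag fixed while varying only the card name is what should hold framing and tone consistent across a set; the resulting strings still need to be pasted into Midjourney by hand or through whatever workflow you already use.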

Ghibli‑like Midjourney sref 1932564342 captures soft fantasy anime calm

Ghibli‑calm sref 1932564342 (Artedeingenio): A second Midjourney style reference from Artedeingenio, --sref 1932564342, is highlighted for "classic illustrative fantasy anime" that feels clearly inspired by Studio Ghibli’s calm, innocent, gently melancholic aesthetic in Ghibli style sref.

• Mood and palette: Sample portraits—a coat‑collared girl, a witch with a pumpkin and cat, a Viking‑like shield‑bearer and a soft angel—share clean linework, pastel‑leaning colors and open white backgrounds that keep focus on character emotion in Ghibli style sref.

• Use cases for storytellers: The thread notes it works across medieval, everyday or magical fantasy, giving authors and directors a unified look for covers, key art or character sheets when they want something gentle rather than high‑contrast or edgy in Ghibli style sref.

For AI illustrators building worlds, this sref acts as a plug‑in "house style" for warm, approachable fantasy art that can span cast members without visual drift.

🕹️ Multiplayer AI scenes: LumaLabs experiments in shared play

DreamLabLA’s experiments with LumaLabsAI argue that AI’s future is multiplayer, not solo play with NPCs. The posts include the core reel plus follow‑ups with more examples and an explainer for the “multiplayer” reference.

DreamLabLA and LumaLabs pitch “multiplayer AI” for shared creative scenes

Multiplayer AI concept (DreamLabLA × LumaLabsAI): DreamLabLA frames the future of AI-assisted storytelling as multiplayer (multiple humans co-creating in the same AI-driven scene) rather than solo users surrounded by AI NPCs, using LumaLabsAI video tools to illustrate the idea in a stylized experiment, as shown in the Multiplayer reel. Follow-up posts expand with more example clips and a short explanation thread to clarify what “multiplayer” means for skeptical viewers, emphasizing shared agency and coordinated prompts across participants rather than passive character control in isolation (More examples, Multiplayer explainer).

• Creative angle for teams: The thread positions this workflow as a way for filmmakers, animators, and game designers to prototype scenes where several creators can effectively “play together” inside one generative environment, hinting at future tools where multiple users steer camera, tone, and character actions in parallel rather than handing control to a single operator (Multiplayer reel).

🛠️ On‑brand deliverables: Gamma × Banana Pro, Pictory kits, and capture APIs

Creators share practical pipelines: Gamma adds Nano Banana Pro for slide decks, Pictory’s Brand Kits keep videos on‑brand, Screenshot‑kit offers one‑call captures, and hands‑on notes critique the Claude Chrome plugin UX.

Gamma taps Nano Banana Pro for editable AI slide decks

Gamma × Nano Banana Pro (Gamma, Google): A creator reports that Gamma now supports Nano Banana Pro for AI slide generation, using it to auto‑draft an 8‑page deck on the "most up‑to‑date Instagram algorithm" while keeping all text fully editable for tweaks before and after generation, as shown in the Gamma Nano demo. They contrast this with NotebookLM, which they say generates comparable outlines but locks the prose, positioning Gamma as a more flexible surface for social‑strategy decks built on Google's flagship model.

For marketers and designers, this ties a familiar slide editor to a strong multimodal model so that both structure and copy stay under their control instead of frozen inside an AI summary.

Pictory Brand Kits promise faster on‑brand AI video output

Brand Kits (Pictory): Pictory is promoting a Brand Kits feature that lets video creators lock in logos, color palettes, fonts and voice settings so AI‑generated clips inherit a consistent look and feel by default, according to the Pictory brand kits promotion and the attached guide in the brand kits guide. The example UI shows title cards and lower thirds updating in sync with selected brand colors and typography, turning Pictory into more of a brand‑memory layer than a one‑off template tool.

For teams producing recurring social, training, or L&D videos, this reduces the manual work of re‑applying style rules on every project while keeping AI‑cut content visually aligned with existing brand systems.

Claude Chrome plugin draws mixed reviews from early creative users

Claude Chrome plugin (Anthropic): A power user testing the Claude Chrome extension inside Microsoft Edge describes it as "incredibly slow" for design work, noting that a request to summarise a YouTube video into a slide deck produced bland Google Slides rather than a styled Canvas‑like layout, with repeated failures to improve aesthetics despite multiple prompts, as detailed in the Claude Chrome issues. They also report that the "Act without asking" setting often fails to interact with pages, the sidebar disappears when tabs change, and the model—Haiku 4.5 in this case—sometimes does not recognise obvious elements like an embedded video on a slide.

In a follow‑up, the same user argues that a browser plugin is more compelling than a separate AI browser because it preserves years of extensions and profiles while still letting Claude Code generate structured action‑item documents inside web apps, as shown in the Claude plugin view and the task list example in the Claude task list. Overall sentiment is that the idea is strong but current UX and model choice limit its usefulness for on‑brand, design‑sensitive work.

Replicate’s Screenshot‑kit offers one‑call web screenshots and videos

Screenshot‑kit (Replicate): Replicate announced Screenshot‑kit, an API that returns website screenshots and short capture videos from a single request, so developers do not need to script browser automation or maintain headless Chrome themselves, as described in the Screenshot kit launch. The framing targets teams that need many consistent captures of sites or app states for UX reviews, marketing pages, documentation, or dataset building, but do not want to own the underlying capture infrastructure.

For AI creatives and toolmakers, this offers a straightforward way to wire automated visuals of live pages into content workflows, from auto‑updating product shots to reference clips for training or QA.

🤖 Physical assistants for creatives: Reachy Mini + MCP in the wild

Agentic robotics pops up for studio workflows: fast MCP tool wiring, live demo integrations, and 3,000 units shipping, all hinting at voice‑driven, on‑set helpers. Distinct from creative model updates elsewhere.

3,000 Reachy Mini units are now shipping to customers

Reachy Mini rollout (Pollen Robotics): A logistics photo shows a palletized stack of labeled cartons with a caption that "3000 Reachy Mini" are on their way, indicating a substantial production and shipment wave for the small humanoid platform in one batch, as seen in the Reachy shipment update. For creative teams, this scale hints that Reachy Mini is shifting from rare lab hardware to something more like a standard prop or assistant you can expect to encounter on sets, in classrooms, and at live events.

The volume matters because it supports a shared ecosystem of MCP tools, motion behaviors, and creative workflows around the same physical body plan, rather than each studio hacking on a one‑off robot.

DuckDuckGo MCP tool wired into Reachy Mini in about 10 minutes

Reachy Mini MCP (Pollen Robotics): A short engineering update shows an MCP tool for DuckDuckGo search being built and connected to Reachy Mini in roughly ten minutes, framed as making the robot a "drop-in Alexa replacement" with personality, according to the quick build note in the MCP DuckDuckGo demo. For creatives, this points to on‑set robots that can be given new "skills" (like web search, knowledge lookups, or pipeline triggers) via small MCP tools instead of custom firmware.

Wiring new capabilities into a physical assistant is starting to look like normal agent tooling rather than robotics R&D, which lowers the barrier for studios that already use MCP‑style tools in their software workflows.

GradiumAI conversational agent drives live Reachy Mini demo

Reachy Mini × GradiumAI (Pollen Robotics, GradiumAI): A live stage demo plugs GradiumAI’s conversational system directly into Reachy Mini, with the team stressing that live demos are risky but worth it when the robot responds in real time to spoken interaction, as described in the Gradium live demo. For filmmakers, performers, and installation artists, this highlights a path where a single conversational backend can puppeteer both dialogue and physical gestures on a humanoid rig.

This kind of wiring suggests near‑term use as a voice‑driven prop or host character: the same agent that answers questions could trigger arm poses, head turns, or simple stage business without a dedicated puppeteer.

Comment argues a wheeled base makes Reachy Mini studio‑useful like PR2

Reachy Mini mobility (Pollen Robotics): A practitioner notes that with a wheeled base attached, Reachy becomes "as functional as a PR2" in their view, referring to the classic Willow Garage research robot that inspired many early manipulation and service‑robot projects, as mentioned in the Wheeled base remark. The comment points toward a practical configuration where Reachy Mini could roll around stages or studios instead of being fixed on a table or stand.

For creatives and designers, that framing suggests roles beyond static demos—such as mobile assistants that can move gear, approach performers, or participate in blocking—once locomotion hardware catches up to the already‑shipping upper‑body platform.

📈 Leaderboards and market share: who won 2025 for creators?

Metrics and rankings hit feeds: Similarweb traffic share shifts (OpenAI ↓19.2 pts; Gemini ↑12.8), GLM‑4.7’s open‑weight standing, and creator scorecards for ‘best model’ winners; plus tests suggesting readers favor AI prose in blind trials.

OpenAI’s traffic share falls while Gemini gains, reshaping 2025 AI usage

GenAI traffic share (Similarweb): A bar chart circulating from Similarweb shows OpenAI’s share of generative‑AI web traffic dropping roughly 19.2 percentage points over the last 12 months, while Google’s Gemini gains about 12.8 points, still leaving OpenAI in the lead but with a much smaller margin (Traffic chart post). Creators in the discussion frame this as evidence that "Google won 2025 in a big way" and speculate Gemini could reach 50% share by end of 2026 (Share prediction), with one commenter flatly stating "Google will win this" (Google comment).

For AI creatives, this points to a concrete shift in where mainstream users access models for coding, art, and research, even though these are traffic estimates rather than audited user counts; the data implies a diversifying market where Gemini has become the clear number‑two destination rather than a distant follower.

Influencer scorecard names 2025’s best AI models for creative work

2025 model rankings (community): An end‑of‑year recap from a popular AI commentator lays out an informal leaderboard of "best" models by use case, naming Anthropic’s Claude Opus 4.5 as best for coding, Google Nano Banana Pro for image generation and editing, Kimi for writing, and Kling plus Veo 3.1 for video (Creator recap list). The same list calls Gemini 3.0 Flash the best overall model on performance versus cost, Gemini Live the top voice assistant, and Qwen the "open source king", while branding Meta AI the "biggest flop" and GPT‑5’s release the year’s "biggest disappointment" (Creator recap list). A retweet amplifies the ranking to a wider creator audience (Recap reshared).

For designers, filmmakers, and storytellers, this community scorecard is not a formal benchmark but a snapshot of sentiment from an active practitioner, showing which stacks creators say they reached for most often in 2025 across coding, art, video, open source, and real‑time assistants.

Blind tests suggest readers often favor AI‑generated fiction over originals

AI fiction reception (New Yorker): A long recap of Vauhini Vara’s New Yorker essay describes classroom experiments where fine‑tuned GPT‑4o rewrote missing scenes in the styles of authors like Han Kang, with roughly two‑thirds of creative‑writing students in blind tests preferring the AI‑generated scenes to the originals, calling them more "powerful" and "emotionally affecting" (Essay recap). The thread notes similar results for styles imitating Tony Tulathimutte and Junot Díaz, while also highlighting strong pushback from authors such as Díaz and Sigrid Nunez, who judged the imitations as culturally off or "banal" despite student reactions (Essay recap).

Beyond the classroom, the recap cites estimates (using the Pangram detector) that around 20% of recent self‑published Amazon genre titles may include AI‑generated text, against separate claims exceeding 70% in some 2025 commentary, and frames this alongside arguments that AI could make storytelling more accessible while risking a flood of homogenized prose (Essay recap). For working and aspiring writers, the piece underlines how close AI style imitation has come in practice while also recording a clear split between reader preferences in blind tests and author concerns about authenticity and cultural nuance.

GLM‑4.7 secures leading open‑source spot on AAII model index

GLM‑4.7 (Zhipu AI): The GLM‑4.7 model is now featured on the Artificial Analysis Intelligence Index (AAII) as a leading open‑source entry, reinforcing earlier claims that it sits near the top of multi‑model leaderboards for overall capability (AAII mention). This follows the earlier GLM 4.7 report, where it was listed as #2 overall and top open‑weight on Website Arena. The highlighted placement on AAII signals that, for creators who prefer or require open weights, GLM‑4.7 is being treated by third‑party evaluators as one of the strongest generally available options rather than a niche research model.

This dual recognition across Website Arena and AAII positions GLM‑4.7 as a serious contender for studios and indie builders who want competitive performance without being locked into a closed commercial stack.

⚖️ Platform quality and AI jobs blowback

Two caution flags for creatives’ distribution and teams: a study says >20% of videos shown to new YouTube users are ‘AI slop,’ and a flagged HN post claims Salesforce regrets firing 4,000 staff in favor of AI.

Study says over 20% of videos shown to new YouTube users are ‘AI slop’

YouTube recommendations and AI “slop”: A Guardian‑reported study of fresh YouTube accounts finds that more than 20% of videos shown to brand‑new users qualify as low‑effort AI‑generated “slop,” meaning formulaic synthetic clips are a significant share of first‑impression recommendations rather than a fringe category, as highlighted in the Guardian study. For independent filmmakers, animators, and music channels, this implies that early discovery and watch time are competing directly against mass‑produced AI content that may dilute perceived platform quality.

Flagged HN post alleges Salesforce regrets replacing 4,000 staff with AI

Salesforce layoffs and AI backlash: A flagged Hacker News thread titled “Salesforce regrets firing 4000 experienced staff and replacing them with AI” claims the company is now struggling with cultural damage and work quality after substituting thousands of experienced employees with AI‑driven workflows, as shown in the HN thread. The post’s accuracy is unverified and it was moderated as controversial, but the anecdote captures a growing fear among creative and product teams that aggressive AI‑first staffing decisions can erode institutional craft and prove hard to reverse.

📣 Calls, contests, and showcases for AI creatives

Community prompts to publish: NoSpoon × Infinite Films contest enters final hours, standout entries get spotlights, and an open call invites artists to share their 2025 favorites.

NoSpoon × Infinite Films contest enters final day with standout AI film entries

AI film contest (NoSpoon × Infinite Films): Following up on the creator contest that first framed the Tournament of Champions, NoSpoon Studios is now in the last ~24 hours of its Infinite Films AI trailer competition and is heavily spotlighting standout submissions to drive final entries. OBD’s Goodfeather-themed short is promoted as an "absolutely incredible" entry and a must-follow creator in the call for last‑minute submissions, as described in the Goodfeather entry.

• Entry spotlights: Green Frog Labs is praised for "legendary" work and Guido’s animated piece is called both adorable and thought‑provoking, with NoSpoon explicitly reminding creators that the deadline is 12/28 at 11:59 pm PST in the Green Frog entry and Guido deadline note.

• Broader participation: Additional trailers like "UNCLAS/LIMDIS" and other contest pieces are being amplified to show range and raise the bar for narrative AI video craft, as seen in the UNCLAS trailer.

The push signals an active ecosystem where AI filmmakers are not only competing for prizes but also using the contest as a showcase for professional‑grade agent‑driven and text‑to‑video workflows.

Azed_ai launches open AI Art Challenge for creators’ favorite 2025 works

AI Art Challenge (azed_ai): Azed_ai opened a year‑end AI Art Challenge asking artists to post their favorite AI‑generated pieces from 2025, pairing the call with a fast‑cut montage of diverse styles to set expectations for quality and range in the AI art call.

The thread invites replies with artworks that Azed_ai will personally review and engage with, and early responses from creators planning to share pieces and bookmarking the call suggest it is becoming a community gallery rather than a narrow contest, as reflected in the creator bookmark and supportive replies in the appreciation reply and thanks reply.
