Kling 2.6 voice control locks casts – StoryMem lifts consistency 28.7%

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Kling 2.6 becomes the de facto holiday workhorse: new Voice Control binds characters to named synthetic voices via [Character@VoiceName], fixing cross‑scene voice drift in character‑driven shorts; tri‑model dance shootouts on fal pit Kling 2.6 Pro against Steady‑dancer Turbo and Wan Animate 2.2, with creators highlighting finer limb tracking and motion stability; anime tests range from millisecond‑paced sea chases to slow, poetic landscape push‑ins; mocap‑driven Motion Control slots into VFX‑style pipelines, while Freepik workflows package Kling into repeatable, slider‑tuned templates.

• Seedance 1.5 Pro and peers: BytePlus exposes a full ModelArk API for text/image/multi‑shot video; Pollo AI, Lovart, SJinn, and Higgsfield Cinema Studio wrap Seedance into promos, agents, mobile‑style toggles, and melancholic “forgotten character” shorts, while Dreamina Video 3.5 pushes all‑in‑one AV generation.

• Continuity and editing stack: StoryMem reports 28.7% higher cross‑shot consistency via keyframe memory; InVideo Vision expands one prompt into 9 coherent shots; ComfyUI adds Qwen‑Image‑Edit‑2511 and Qwen‑Image‑Layered for instruction edits plus RGBA decompositions; OpenArt’s Character 2.0 claims 10× character consistency; NextStep‑1.1, Qwen Image FAST (~1.6s gens), and an Nvidia–Groq inference licensing deal collectively signal cheaper, sharper, and faster image/video pipelines.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught

Feature Spotlight

Kling 2.6 Motion + Voice Control is the holiday workhorse

Kling 2.6 dominates creator feeds with precise motion control and new voice consistency—beating rivals in side‑by‑sides, handling MoCap, and spanning frenetic to lyrical shots—making it the go‑to for fast, controllable cinematic clips.

Heavy creator traffic today: multiple side‑by‑sides vs Runway/WAN, MoCap driving, and both high‑speed and poetic anime tests. New Voice Control locks character VO across cuts. Practical Freepik workflows shared.

Jump to Kling 2.6 Motion + Voice Control is the holiday workhorse topicsTable of Contents

🎬 Kling 2.6 Motion + Voice Control is the holiday workhorse

Heavy creator traffic today: multiple side‑by‑sides vs Runway/WAN, MoCap driving, and both high‑speed and poetic anime tests. New Voice Control locks character VO across cuts. Practical Freepik workflows shared.

Kling 2.6 Voice Control debuts to lock character voices across scenes

Kling 2.6 Voice Control (Kling): Kling is rolling out Voice Control for 2.6, a feature that lets creators bind specific synthetic voices to characters using a simple [Character@VoiceName] syntax so that dialogue stays identical across every shot, according to an overview from a creator calling it a fix for “voice drift between scenes” in the voice consistency. A demo clip shows the same animated character speaking twice with audibly matching timbre and delivery, underscoring the consistency claim in the

.

Kling’s own channel amplifies a thread that frames this as a milestone for character‑driven shorts and series, emphasizing consistent casting for custom voices rather than ad‑hoc TTS per clip in the voice thread; for filmmakers and animators, this reduces the manual re‑dubbing and sound design needed when cutting multi‑shot stories from separate generations.

Tri-model dance tests pit Kling 2.6 against Steady-dancer Turbo and Wan 2.2

Kling 2.6 vs rivals (fal, community): Multiple creators are running side‑by‑side motion control shootouts between Kling 2.6, Runway, and Wan 2.2, with many noting Kling’s tighter tracking of fine movements such as fingers in the motion compare and broader commentary in the Runway comparison. fal adds a focused benchmark where identical robot dance moves are rendered by Steady-dancer Turbo, Kling 2.6 Pro, and Wan Animate 2.2 Turbo, letting users visually judge stability and adherence to the source motion in one clip.

The same three endpoints are exposed on fal so creators can reproduce the tests and swap models from one UI, as outlined in the fal showdown along with model playground links for Steady-dancer and Wan Animate in the Steady Dancer page and Wan animate docs; additional Reze dance comparisons from Japanese creators reinforce the pattern of Kling 2.6 better preserving complex limb choreography across frames in the reze comparison.

Kling 2.6 shows both extreme speed and quiet mood in anime camera tests

Anime motion tests (independent creators): New prompts show Kling 2.6 handling both chaotic and contemplative anime sequences, giving storytellers a sense of its range. One clip uses a detailed prompt for a high‑speed sea chase with multiple speedboats, towering waves, near‑collisions, and a camera skimming inches above the water, emphasizing exaggerated momentum and spray in the speed prompt.

A second test goes in the opposite direction—a dreamlike forward glide through an open landscape toward a lone character, with subtle parallax, grass and particles moving gently in the foreground, and no cuts—designed to check whether Kling can sustain a slow, poetic push‑in without jitter or pacing issues in the poetic prompt.

Together these experiments suggest Kling 2.6 can support both trailer‑style action beats and quieter character moments in anime‑inspired projects from a single tool.

MoCap driving Kling 2.6 puts Motion Control into a VFX-style pipeline

Kling 2.6 Motion Control (Kling): Creators are starting to pair traditional motion-capture suits with Kling 2.6 Motion Control, using recorded body performance to drive AI‑generated animation as shown in the mocap pairing. A demo clip shows an actor in a mocap suit performing an exaggerated walk while a Kling 2.6 Motion Control title card ties the capture to the model’s output, hinting at workflows where precise human timing and nuanced gestures feed directly into stylized AI video.

For AI filmmakers and animators, this points toward more conventional VFX pipelines—record once, restyle many times in Kling—rather than relying only on text prompts for motion.

Freepik creators share a practical Kling 2.6 Motion Control workflow

Kling 2.6 on Freepik (Freepik, creators): Following up on the platform‑wide rollout of Kling 2.6 Motion Control to Freepik users Freepik Kling rollout, creator ProperPrompter publishes a short walkthrough showing how to get reliable, repeatable motion from Kling inside Freepik’s interface. The screen‑recorded demo walks through selecting Kling 2.6 Motion Control, tuning a few key settings, and iterating on outputs until motion looks stable, with on‑screen text calling the model “WILD” while the variations render in the freepik workflow.

This shifts Kling 2.6 from a raw model into a packaged workflow for Freepik’s audience—template‑based creators can now use an opinionated setup rather than guessing at controls for each project.

🎥 Seedance 1.5 Pro momentum: APIs, tools, and reasons to switch

Broader availability today with concrete creator hooks—platform API access, tool support, and use‑case threads. Excludes Kling 2.6 feature which is covered separately.

BytePlus ModelArk ships Seedance 1.5 Pro API for text, image, and multi‑shot video

Seedance 1.5 Pro API (BytePlus): BytePlus has released a full Seedance 1.5 Pro API on its ModelArk platform, exposing text‑to‑video, image‑to‑video, multi‑shot control, and multilingual lip sync in a developer‑ready endpoint—expanding on the broader co‑launch across creator tools noted in co-launch, which focused on initial partner integrations api announcement.

• Programmable storytelling: The announcement describes support for scripting shot sequences, feeding in existing images, and controlling lipsync across multiple languages through standard API calls, allowing SaaS video editors and in‑house tools to build Seedance timelines into their own UIs api announcement.

• Infra positioning: BytePlus frames this as "video generation designed for scale, reliability, and real production use", with ModelArk acting as the managed serving layer, according to the attached api overview.

For teams building their own editors, campaign tools, or game trailers, this is the first time Seedance 1.5 Pro has been clearly positioned as a plug‑in infrastructure service rather than something you only touch through third‑party SaaS frontends.

Dreamina Video 3.5 impresses with native dialogue and SFX generation

Video 3.5 (Dreamina): Dreamina is highlighting its Video 3.5 model’s ability to generate native audio—including dialogue and sound effects—directly alongside visuals, with creator Hashem Al‑Ghaili calling the results "incredible" in an early test that follows earlier Seadance 1.5 Pro comparisons in real-world test dreamina demo.

The demo clip pairs cinematic shots with character speech and ambient SFX produced in one pass, and the official link positions Dreamina as an "all‑in‑one" suite where text‑to‑image, image‑to‑image and video live under the same roof, according to the Dreamina suite page. For storytellers and YouTube‑style creators, this points to a trend where Seedance‑class AV stacks are no longer alone in offering end‑to‑end audio plus video, raising the bar for expressiveness and post‑production speed.

Pollo AI pushes Seedance 1.5 Pro with 50% off and 115‑credit blitz

Seedance 1.5 Pro (Pollo AI): Pollo AI has turned on Seedance 1.5 Pro for all users with 50% off pricing this week plus a 12‑hour launch blitz that gives 115 free credits to anyone who follows, reposts, and replies "PolloxSeedance", framing it as a way to "master the art of audio‑visual storytelling" according to the Pollo launch.

• Narrative and acting focus: Pollo’s follow‑up thread says 1.5 Pro captures subtle micro‑expressions and complex emotions even without dialogue, positioning it as a fit for character‑driven shorts and emotional ads rather than only spectacle shots feature rundown.

• Camera and motion handling: The same rundown highlights support for high‑speed action and dynamic tracking shots, suggesting the model keeps characters stable under fast moves and complex blocking, which matters for music videos and kinetic trailers feature rundown.

• Global language and sound: Pollo emphasizes multi‑language and dialect support (including regional Chinese varieties) and tightly synced spatial SFX, so creators can cut fully finished sequences without sending audio to a separate toolchain feature rundown.

For filmmakers and editors, this turns Pollo into a relatively low‑risk way to trial Seedance 1.5 Pro’s strengths end‑to‑end—story, motion, dialogue, and mix—within a single credit‑based account.

Lovart exposes Seedance 1.5 Pro as film‑grade AV engine in its design agent

Seedance 1.5 Pro (Lovart): Lovart has wired Seedance 1.5 Pro into its "design agent" stack, advertising "film‑grade video + audio", "perfect sync", and "zero stitching" for AI‑generated sequences, which means one model now handles both visuals and audio inside their creative workspace Lovart announcement.

• Single‑pass AV output: The promo clip shows camera‑driven footage with matching sound baked in, instead of the common two‑step "video then soundtrack" flow, which reduces round‑tripping for ad spots and social edits Lovart announcement.

• Agent‑first framing: Lovart’s broader pitch around a "design agent" that can output images, video and more suggests Seedance sits alongside other generative tools rather than as a separate specialist app, see the design agent page for how video slots into their pipeline.

For designers and brand teams already living in Lovart, this effectively upgrades the platform from static layout and illustration into a place where storyboard ideas can become fully mixed clips without leaving the tool.

Seedance 1.5 Pro powers melancholic “forgotten character” shorts via Cinema Studio

Seedance 1.5 Pro (Higgsfield Cinema Studio): Creator Kangaikroto is using Seedance 1.5 Pro inside Higgsfield’s Cinema Studio to tackle the "forgotten character" trend—presenting once‑iconic figures now standing motionless "in the dust of old memories"—as a way to show the model’s ability to hold mood and framing over the length of a short forgotten character post.

The clip keeps a static, introspective composition while environmental dust and lighting shifts carry the emotional beat, contrasting with the faster, action‑heavy Seedance examples shared earlier in the week forgotten character post. For narrative filmmakers and motion designers, it underlines that 1.5 Pro’s camera and acting controls can support slower, contemplative pieces as well as music‑video energy.

SJinn Tool adds Seedance 1.5 Pro switch for mobile‑style workflows

Seedance 1.5 Pro (SJinn Tool): The SJinn Tool app now exposes Seedance 1.5 Pro as a selectable backend, with a simple toggle interface that lets users turn the model on for their projects without touching raw API settings sjinn update.

The short UI demo shows Seedance listed among other video backends, with per‑model switches suggesting creators can rapidly A/B between engines from the same project screen sjinn update. For indie filmmakers and hobbyists who mainly work from phones or lightweight desktops, this folds a high‑end AV model into a more casual tool without forcing a jump into enterprise consoles like ModelArk.

📽️ Multi‑shot continuity: memory systems and AI cinematographers

New tools emphasize real shot‑to‑shot consistency and automatic storyboards. Continues the multi‑shot race without touching today’s Kling feature.

StoryMem memory system boosts multi‑shot video consistency by 28.7%

StoryMem (ByteDance/NTU): Researchers at ByteDance and Nanyang Technological University are sharing StoryMem, a diffusion-based video system that generates ~1‑minute, multi‑shot narratives while keeping characters and scenes visually consistent across cuts via an explicit keyframe memory, with a reported 28.7% improvement in cross‑shot consistency over baselines as summarized in the StoryMem explainer.

The Memory‑to‑Video architecture stores selected keyframes from previous shots, encodes them, and feeds them into later generations so the model "remembers" appearances and layouts instead of re‑inventing them each time, and both code and model weights are available openly for builders in the StoryMem blog.

InVideo Vision turns one sentence into a 9‑shot cinematic storyboard

InVideo Vision (InVideo): InVideo is promoting Vision, a model that takes a single text prompt and expands it into 9 connected cinematic shots with consistent characters, setting, and visual style, effectively acting as an "AI cinematographer" that outputs a full storyboard from one line as shown in the Vision teaser.

Creators access Vision through InVideo’s "Agents and Models → Trends → Vision" flow, where they can type a short idea and receive a multi‑shot sequence designed to live in one coherent world, according to the InVideo access steps.

🖼️ Edit smarter, build faster: Qwen layers in Comfy + NextStep 1.1

Image editing gets precise, layered workflows in ComfyUI, plus a new gen model update. Mostly tooling power‑ups for VFX/brand work with a fresh model RL bump.

ComfyUI adds Qwen-Image-Edit-2511 and new Qwen-Image-Layered for deep compositing

Qwen image tools (ComfyUI): ComfyUI now exposes Qwen-Image-Edit-2511 alongside the new Qwen-Image-Layered model, giving node‑graph creators both higher‑consistency instruction edits and true RGBA layer decomposition in one workflow, following up on Qwen image edit which covered the base model’s consistency gains model integration. The ComfyUI team also published a detailed guide showing how to use layered decomposition for VFX‑style isolation, wardrobe changes, and product recolors within node graphs blog teaser and comfyui blog.

• Instruction editing inside Comfy: Qwen-Image-Edit-2511 brings natural‑language edits (e.g., fabric swaps, prop changes) that better preserve geometry and style than earlier instruction models, demonstrated in the sofa texture swap example where only material and cushions change while lighting and framing stay locked model integration.

• Qwen-Image-Layered for VFX: Qwen-Image-Layered can split a frame into multiple RGBA layers—foreground subject, props, background—so artists can independently recolor outfits, re‑light scenes, or replace environments without re‑generating the whole image, as illustrated by the input→output subject isolation diagrams across several scenes layered overview.

The combination means ComfyUI nodes now cover both quick prompt‑driven tweaks and full compositing‑grade layer control inside one graph, which is a direct fit for brand, product, and film pipelines that already live in Comfy.

OpenArt launches Character 2.0: one reference in, 10× more consistent characters out

Character 2.0 (OpenArt): OpenArt introduced Character 2.0, a character‑locking system that turns a single reference image into a highly consistent persona across new generations—humans, mascots, anime or creatures—claiming 10× higher consistency, faster runs, and lower cost than its earlier character tools feature overview. The feature plugs into OpenArt’s broader generation stack, advertised as working with Nano Banana and Seedream so the same face or mascot can follow users from stills into video feature overview and consistency claim.

• Single‑image training flow: The demo shows one anime‑style girl being re‑generated in many outfits and scenes while holding core identity features (face shape, hair, expression cues) across angles and lighting changes, illustrating the system’s promise of “one image in → unlimited consistent generations out” feature overview and consistency claim.

• Pipeline integration: OpenArt’s homepage emphasizes that Character 2.0 supports downstream tools like music‑video creation, explainer videos, and ASMR clips via Nano Banana and Seedream, which positions it as a central identity layer rather than a standalone toy tool landing.

Pricing and token‑level economics are not detailed in the tweets, but the framing targets studios and solo creators who need recurring characters in campaigns, comics, or episodic shorts without retraining a new LoRA per project.

StepFun’s NextStep‑1.1 uses RL to sharpen visuals and text fidelity over v1.0

NextStep‑1.1 (StepFun): StepFun released NextStep‑1.1, an updated flow‑based text‑to‑image model on Hugging Face that layers a novel RL post‑training stage on top of the original NextStep‑1, claiming cleaner textures, better text rendering, and fewer visual failures compared with the base model model teaser and model card. Side‑by‑side grids show v1.1 producing sharper subjects and more legible signage—especially on prompts like monkeys holding protest signs or wooden crates labeled “FRAGILE: HANDLE WITH CARE” model teaser.

• Visual uplift: Across categories such as axolotls underwater, deer in forests, and apothecary‑style bottle shelves, the RL‑tuned column consistently pushes richer lighting, depth, and material detail than both NextStep‑1 and the 1.1 pretrain variant, which appear flatter or noisier in the comparison composite model teaser.

• Text and labeling: The RL column notably improves text correctness on signs and labels—e.g., multiple crates and placards reading “SCALING TRANSFORMER MODELS IS AWESOME!” and full “FRAGILE: HANDLE WITH CARE” copy—whereas earlier variants show misspellings or partial gibberish model teaser.

The model card frames NextStep‑1.1 as a drop‑in upgrade for existing NextStep‑1 workflows (same architecture and interface) with extra environment notes around Python 3.11 and dependencies, but there are no independent benchmarks yet to quantify how it compares to other current SDXL‑class or FLUX‑class models model card.

Replicate and PrunaAI unveil Qwen Image FAST with 1.6s, near-free inference

Qwen Image FAST (Replicate/PrunaAI): Replicate and PrunaAI announced Qwen Image FAST, a speed‑tuned deployment of Qwen’s image model that hits around 1.6 seconds per generation and is described as “almost free” for a limited time, aimed squarely at high‑volume creative workloads like batch ad concepts or rapid iteration fast variant. For creatives using Replicate as a backend, this slots in as a lower‑latency, lower‑cost option next to heavier Qwen Image variants.

The announcement does not yet share detailed quality trade‑offs or pricing tables, so comparative performance versus full‑fidelity Qwen Image deployments remains unclear from the tweet alone fast variant.

🎨 Reusable looks: Akira sci‑fi, storybook ink, charcoal erasure

A strong day for shareable styles and prompt kits—cinematic anime srefs, whimsical holiday cards, high‑contrast B/W, plus JSON tips for series‑level variability.

Nano Banana Pro JSON prefix keeps a series’ vibe while randomizing content

Series JSON prefix (fofrAI/Nano Banana Pro): FofrAI shared a JSON prompt prefix for Nano Banana Pro that forces new nouns, objects, palettes, and poses while demanding the output “strictly preserve the original vibe, aesthetic, and mood,” as described in the JSON variability tip. The example big‑cat set—snow leopard, black jaguar, tiger in ruins, leopard on rock—all read as distinct shots from the same photographic series.

“Inverted Charcoal Explosion” prompt defines reusable erasure-based B/W drama

Inverted Charcoal Explosion (azed_ai): Azed_ai published a reusable prompt formula for a high‑contrast black‑and‑white style where highlights are carved out by erasure, with smoky, explosive charcoal smudges around a central subject—see the Charcoal prompt share. The pattern uses a [subject] slot plus language like “highlights created by erasure” and “drawn with burning embers and wind” to keep the aesthetic fixed while swapping in monks, dancers, warriors, or portraits.

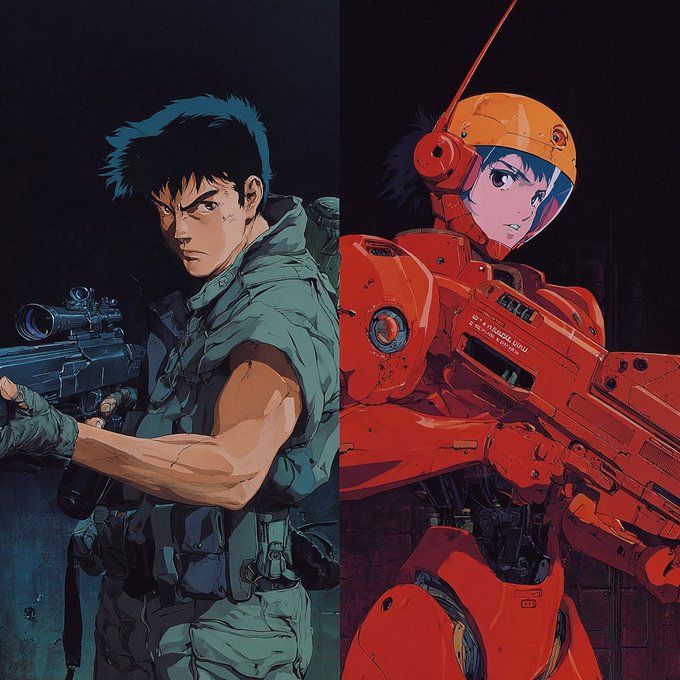

Akira-style Midjourney sref locks in cinematic 80s–90s sci‑fi anime look

Akira-style sci-fi sref (Artedeingenio/Midjourney): Artedeingenio released Midjourney style reference --sref 3725990145, capturing the classic late‑80s/90s OVA look—neon rifles, chunky red bikes, tactical armor, and deep blue night cities—as shown in the Akira sref post. Creators can now bolt this sref onto prompts to keep character design, lighting, and camera language consistent across sequences of cyberpunk storyboards or anime key art.

Coffee/Tea Break prompts turn POV drinks into doodled story overlays

Coffee/Tea Break overlays (azed_ai + community): Azed_ai’s "Coffee Break" Nano Banana Pro prompt turns a first‑person latte photo into mixed‑media art by layering chalk‑style doodles, slogans like “COFFEE BREAK / RELAX / STAY COZY,” and cafe ephemera over a cozy mug POV, as seen in the Coffee break share. Community riffs reuse the same framing and overlay logic for different narratives—from a paranoid spyboard theme (“THEY ARE LISTENING”) to a weekend travel "Tea Break" mood—demonstrating it as a portable layout system rather than a one‑off image, shown by the Spyboard variant.

New Midjourney sref 4972783675 nails moody blue crown-and-samurai portraits

Blue crown samurai sref (azed_ai/Midjourney): Azed_ai introduced Midjourney style reference --sref 4972783675, a cohesive dark‑blue cinematic look spanning veiled queens, crowned figures, and foggy tattooed samurai according to the Blue style sref. The sref locks in cold color grading, directional shafts of light, and soft atmospheric haze so portrait prompts across a project feel like stills from the same moody fantasy film.

Whimsical ink-and-watercolor Midjourney sref gifted for holiday cards and kids’ books

Storybook ink sref (Artedeingenio/Midjourney): As a Christmas "gift", Artedeingenio shared Midjourney style reference --sref 781354363, a loose ink-and-watercolor children’s illustration look tuned for reindeer, Santa, elves, and snowmen according to the Storybook sref gift. The palette, line looseness, and watercolor bleed stay stable across prompts, giving designers a drop‑in style for postcards, campaign mascots, and emotional editorial spots.

🤖 Reference‑driven ad agents and sketch companions

Agentic tools for product shots and speed‑sketching show up in feeds—turn refs into controlled ads or type to start a live drawing flow.

Glif agent turns reference photos into precise “iPhone Air”‑style ads

Product ad agent (Glif): Glif is showcasing an AI agent that uses reference images to generate highly controlled product ads, demonstrated with a fake but convincing "iPhone Air" commercial that matches real‑world lighting, reflections, and framing, while clearly flagged as not an official Apple spot in the creator’s description iPhone Air demo.

The agent relies on one or more reference shots to lock in exact product geometry, surface detail, and studio‑style lighting, producing floating hero shots and clean white‑background compositions that mirror modern device marketing, with the author calling out "product details, exact lighting, clean composition" as the key benefits for creators who want high-end visuals without a live studio setup iPhone Air demo.

Glif’s Drawing Companion turns “I want to draw something” into live sketches

Drawing Companion (Glif): Glif has wired a Drawing Companion directly into its agents, so users can trigger a live sketching flow by typing or saying “I want to draw something!”, with the demo showing an agent turning a rough star into a decorated Christmas tree in seconds drawing companion clip.

The companion runs as part of the broader Nano Banana Pro–driven illustration stack, letting the same agent refine and restyle sketches into polished AI images, as highlighted in the Nano Banana + Drawing Companion teaser and direct agent links for hands‑on testing nano banana mention, sketch agent .

🧼 Cleanup and modify: object removal and seasonal scene swaps

Finishing tools see action—quick object removal for live video and targeted Modify passes for environment changes. Excludes Kling feature.

Luma shows Ray3 Modify cycling one scene through all four seasons

Ray3 Modify (LumaLabs): LumaLabs demonstrated Dream Machine’s Ray3 Modify applying dramatic season swaps to the same outdoor scene—winter, spring, summer, and autumn—within a single continuous camera move, highlighting its role as a finishing tool for large environment changes rather than full re-renders, as seen in the season demo; this follows earlier behind-the-scenes tests of keyed visual transformations in Dream Machine, where creators explored more surgical Modify passes on existing footage, as noted in Ray3 Modify.

The clip underlines that Ray3 Modify can keep composition and motion consistent while swapping atmosphere, foliage, and lighting, which is directly relevant for filmmakers and storytellers who need alternate time-of-year versions of a shot without new location work or reshoots season demo.

🎁 Holiday creators’ corner: templates, contests, and deep discounts

A big day of seasonal offers and challenges: cinematic Santa templates, final advent prizes, GPU price cuts, and unlimited plan deals for creators.

Freepik’s 24 AI Days finale offers lifetime licenses and SF trips

#Freepik24AIDays Day 23 (Freepik): Freepik closed its 24‑day AI Advent with a high‑stakes final round—5 Lifetime Freepik licenses, 5 Creator Studio Pack ULTRA bundles, 5 trips to San Francisco (flight + hotel + “Freepik experience”), plus 20 “I survived Freepik’s 24 AI Days” merch prizes, building on earlier daily drops noted in Day 22 prizes and detailed in Final day prizes.

To enter, AI creatives must post their best Freepik AI creation, tag @Freepik, include the #Freepik24AIDays hashtag, and also submit via the official form described in the contest form; this positions the finale as both a portfolio showcase and a gateway to long‑term assets like lifetime stock access and SF networking.

Comfy Cloud cuts GPU prices by 50% through Dec 31

Comfy Cloud GPUs (ComfyUI): ComfyUI announced a 50% price cut on Comfy Cloud GPU instances running from now until December 31, aiming squarely at holiday rendering sprints and experimentation for node‑based image and video pipelines, as stated in GPU discount.

For AI artists and filmmakers who rely on heavy workflows in ComfyUI—like high‑res stills, batch stylization, or multi‑pass video processing—the temporary halving of GPU rates reduces the cost of pushing more iterations or running longer jobs before year‑end.

Hedra drops Santa’s Freeway Chase template with 30% off for first 500

Santa’s Freeway Chase (Hedra Labs): Hedra released a new “Santa’s Freeway Chase” video template where one uploaded photo becomes a Fast & Furious‑style highway race with Santa riding shotgun, and they are offering 30% off Creator & Pro plans to the first 500 followers who comment “HEDRA HOLIDAYS” according to the promo in Template details.

This template gives AI filmmakers and social video teams a plug‑and‑play way to generate cinematic, on‑brand holiday clips from a single still image, extending Hedra’s earlier Santa jet‑ski card, as highlighted in Santa jet ski.

OpenArt Advent Day 6 adds a free 3‑minute music video gift

Holiday Advent gifts (OpenArt): OpenArt’s Holiday Advent Calendar moved to Day 6, adding a gift of one free music video (up to 3 minutes) for subscribers, on top of a bundle of seven total gifts, top models, and 20k+ credits of value emphasized in Day 6 gift and Upgrade pack, following earlier video‑centric drops like Kling 2.6 slots in Kling advent.

The new perk targets AI musicians and video storytellers who want to test long‑form, synced visuals without burning standard credit pools, and it reinforces OpenArt’s positioning of upgrades as time‑limited access to the full stack of December model and credit bonuses, as outlined in the pricing page.

PixVerse Holiday Encore brings 40% off Ultra with unlimited generations

Holiday Encore Ultra (PixVerse): PixVerse relaunched a Holiday Encore deal where the Ultra Plan gets 40% off yearly pricing and unlocks unlimited generations (no credits required), giving video‑heavy creators a high‑throughput tier for the season as described in Holiday Encore offer.

The offer, pitched to anyone who missed Black Friday, targets AI video editors and social teams who want to push large volumes of short‑form or experimental clips without worrying about per‑clip costs over the next year.

Krea launches Christmas sale with up to 40% off Pro and Max

Christmas discounts (Krea.ai): Krea turned on Christmas discounts offering up to 40% off its Pro and Max subscription plans, framed as a limited‑time holiday promotion in Krea discount.

For designers and motion artists leaning on Krea’s realtime sketch‑to‑image and style tools, the discount lowers the barrier to upgrading into higher‑capacity tiers right as many teams are prototyping new visual systems for 2026 campaigns.

Hailuo runs Christmas wish quote‑post campaign with Santa tie‑in

Christmas wish campaign (Hailuo AI): Hailuo invited users to quote‑repost with their Christmas wish, promising to help “find Santa and make it come true,” turning their feed into a lightweight interactive holiday campaign for AI prompt‑driven art and storytelling, as seen in Wish campaign.

This follows their broader Christmas contest activity in Christmas contest and keeps Hailuo in front of creators over the holidays, even though this particular prompt leans more toward community engagement than explicit discounts or prizes.

⚖️ Creative AI guardrails: brand safety and big‑idea narratives

Discourse and safety reminders surface: a viral ad misstep underscores brand risk, while Noema critiques superintelligence rhetoric shaping policy and power.

Noema’s “Politics of Superintelligence” critiques AI apocalypse talk as power move

Superintelligence narratives (Noema): A Noema Magazine essay argues that talk of inevitable, god-like AI is less a neutral forecast and more a political strategy that helps today’s tech leaders centralize power and soften scrutiny essay summary. The piece traces these ideas from Cold War RAND-style rationalism through Yudkowsky and Bostrom, into effective altruism and the way figures like Altman and Musk warn of future AGI risks while racing to build such systems.

According to the summary, the essay claims this focus on hypothetical superintelligence diverts attention from current harms—worker surveillance, bias, and environmental costs—and suggests alternatives such as indigenous data governance, worker audits, and more democratic control over AI infrastructures essay summary; for creatives and storytellers, it underlines how framing around "inevitable AGI" is itself a narrative choice that shapes regulation, funding, and which AI futures feel thinkable.

“Epstein Holiday” AI ad sparks fresh brand‑safety worries for creatives

Brand safety slip (Community): An AI-generated holiday ad circulating on X crosses a line; it uses the tagline “Nothing beats an Epstein Holiday”, which azed_ai frames as proof that "AI is officially off the rails" and that model power is outpacing responsibility brand risk warning. The spot initially reads as a normal fuzzy Christmas promo, which makes the sudden, inappropriate line feel like a reputational landmine that could easily sit under any real brand’s logo.

For AI filmmakers, designers, and agencies, this clip is functioning as an informal case study in what happens when automated copy, festive visuals, and minimal human review collide; it highlights how guardrails around sensitive names and themes, plus clear internal review standards, are becoming as central to AI ad workflows as model choice or render quality.

🧩 Nvidia × Groq: licensing signal for low‑cost inference

Deal chatter matters for creatives’ pipelines: a non‑exclusive licensing agreement aims to scale fast, cheap inference; key Groq leaders join Nvidia while GroqCloud continues.

Nvidia and Groq sign non‑exclusive inference licensing deal, not a $20B buyout

Nvidia–Groq inference licensing (Nvidia, Groq): CNBC/Reuters coverage framed this as Nvidia buying Groq for about $20 billion, but Groq‑side messaging instead describes a non‑exclusive licensing agreement for its inference technology, aimed at expanding access to high‑performance, low‑cost AI inference workloads, as covered in the acquisition headline and clarified in the licensing summary. Founder Jonathan Ross and president Sunny Madra, plus selected team members, will join Nvidia to develop and scale this tech, while Groq continues as an independent company under new CEO Simon Edwards with GroqCloud services remaining fully operational for existing customers according to the licensing summary.

For AI creatives, filmmakers, designers, musicians, and storytellers, the signal is that Nvidia is formally embracing Groq’s low‑latency, high‑throughput inference approach rather than folding the company in outright; if this tech is integrated into Nvidia’s broader stack, it could lower per‑token or per‑frame costs and reduce wait times for generative video, image, and audio tools that already run heavily on Nvidia GPUs, while GroqCloud’s continued operation means teams experimenting with LPU‑backed pipelines do not face an immediate migration or shutdown risk.

While you're reading this, something just shipped.

New models, tools, and workflows drop daily. The creators who win are the ones who know first.

Last week: 47 releases tracked · 12 breaking changes flagged · 3 pricing drops caught