xAI Colossus 2 goes live at ~1,600MW – gigawatt cluster claims

Executive Summary

xAI boosters say Colossus 2 is now up and running, framed as the “first gigawatt AI training cluster.” An Epoch AI chart screenshot points to roughly 1,600MW in 2026, with a steeper ramp penciled in for 2027. The posts don’t specify GPU counts, interconnect, or which workloads are actually live, so the “first gigawatt” line reads as directional rather than audited. If real, multi‑GW sites imply faster training cadence and more headroom for high-volume video inference, but today’s artifacts are mostly social proof.

• OpenAI × Cerebras/Codex: Sam Altman replies “Very fast Codex coming!” under an “OpenAI 🤝 Cerebras” tease; no latency numbers, dates, or product surface details yet.

• Anthropic economics + limits: a cost-math thread claims Claude Max $100/month can be ~30× cheaper than heavy Opus API usage; separately, a 10+ agent Claude Code run hits rate limits and stresses laptop memory/thermals.

• California digital replicas: AB 2602 and AB 1836 are flagged as enforceable Jan 1, 2026; consent and compensation terms tighten for living-performer replicas, and estate approval is required for use of a deceased performer’s likeness.

Open questions: how much of the cluster chart corresponds to deployed capacity vs planned power; whether “fast Codex” is a model change, serving change, or both.

Feature Spotlight

Veo 3.1 rollout goes “production-ready”: 4K, Ingredients-to-Video consistency, vertical 9:16, and AI Studio demos

Veo 3.1 is spreading across creator surfaces (Gemini API/AI Studio + partner apps) with 4K, true vertical, and better Ingredients-based consistency—making “directable” short-form production more feasible.

Cross-account story today is Google’s Veo 3.1 moving into more surfaces (Gemini API + Google AI Studio) with a clear push toward continuity (Ingredients) and shippable formats (true vertical + 4K/cleaner 1080p). This category is the Veo-only bucket; other sections explicitly exclude Veo to avoid duplication.

🎬 Veo 3.1 rollout goes “production-ready”: 4K, Ingredients-to-Video consistency, vertical 9:16, and AI Studio demos

Veo 3.1 expands in Gemini API and Google AI Studio with Ingredients consistency, true 9:16, and 4K

Veo 3.1 (Google): Google’s latest Veo 3.1 rollout emphasizes “production-ready” outputs—tighter Ingredients-to-Video blending for character/background continuity, plus native vertical 9:16 and 4K alongside a cleaner 1080p enhancement path, as described in the Gemini API + AI Studio recap in Gemini API and AI Studio update and the Ingredients summary in Ingredients to Video upgrade.

• Continuity knobs: Posts frame the change as reduced “drift” when mixing multiple reference inputs (identity + setting), with the continuity claim spelled out in Gemini API and AI Studio update and reiterated in Ingredients to Video upgrade.

• Where it shows up: The surface list called out includes Gemini app, YouTube Shorts/Create, Flow, Google Vids, Gemini API, and Vertex AI, per Ingredients to Video upgrade.

• Transparency layer: Google positions SynthID watermarking and Gemini verification support for video as shipping alongside these capabilities, according to Gemini API and AI Studio update.

Google AI Studio’s “Type Motion” demo chains Gemini 3 Pro Image into Veo 3.1

Type Motion (Google AI Studio): A new AI Studio demo turns a short text phrase into cinematic motion typography by splitting the workflow into two stages—first generating a styled scene with Gemini 3 Pro Image, then animating it with Veo 3.1, as outlined in Type Motion walkthrough.

• How it’s framed: The demo highlights intent-first control (content + text style + optional reference image) before generation, with the “two-step” separation explicitly described in Type Motion walkthrough.

• Access detail: It’s presented as runnable inside AI Studio but requiring a paid API key, per Type Motion walkthrough.

Mitte AI says Veo 3.1 4K is live for all users, with per-setting cost visibility

Veo 3.1 4K (Mitte AI): Following up on the earlier 4K rollout (Mitte enabling 4K Veo 3.1), Mitte is now described as having released 4K Veo 3.1 to all users, with an interface that shows “the exact cost” as you tweak generation settings, per All users rollout note.

The thread also repeats creator-facing quality claims (“cleaner frames, sharper details”), but still without an objective metric or side-by-side artifact in the tweet itself, as echoed in Quality claim recap.

Veo 3.1 4K arrives on Vadoo AI

Veo 3.1 (Vadoo AI): Vadoo AI is claiming 4K Veo 3.1 generation is now available in its product, with the positioning aimed at filmmakers looking for sharper, cinema-style output as stated in Vadoo 4K announcement.

The posts are promotional and don’t include a public spec sheet or pricing details in today’s thread, so the concrete change is primarily the availability claim in Vadoo 4K announcement.

Freepik Variations + Veo 3.1: start/end-frame iteration as a clip factory

Workflow pattern (Freepik → Veo 3.1): A creator workflow is spreading where Freepik Variations is used to iterate on stills and choose start/end frames, then those frames are animated in Veo 3.1 to produce short clips, as described in Variations to Veo workflow note.

This is being framed as an iteration loop (generate options → lock endpoints → animate) rather than prompt-only video, with the “picking start/end frames” emphasis stated directly in Variations to Veo workflow note.

🧍 Kling 2.6 Motion Control in the wild: realism tests, character swaps, and “what’s real anymore?” clips

Today’s Kling chatter is mostly capability demos and Motion Control use-cases (not pricing promos), with lots of “realism” reactions and swap-style workflows. Excludes Veo 3.1 entirely (covered as the feature).

Kling 2.6 Motion Control sparks “best realism” reactions in new tests

Kling 2.6 Motion Control (Kling): A new wave of reposted tests is leaning hard on realism claims—one creator calls Kling 2.6 motion control “the best I’ve seen,” framing it as finally “perfect” for photoreal results in the Realism test reaction, while other viral demos push the same “what’s real anymore” vibe through abrupt style jumps in the Style-jump clip.

The common thread is that creators aren’t just sharing outputs; they’re sharing disbelief as a distribution strategy, which is a useful signal about where Motion Control is landing culturally right now.

Kling reposts amplify synthetic influencer and adult-creator pipelines

Synthetic persona pipeline (Kling): Kling’s account is reposting “AI influencer factory” framing—scrape clips or record yourself, then automate the rest—positioning bespoke personas as something you can assemble end-to-end in the Bespoke influencer playbook. A separate repost in Russian makes the same point with an adult-creator angle (“anyone can become an OnlyFans creator”), as captured in the RU synthetic creator claim.

• Continuation of the identity-swap arc: Following up on Identity swaps (Motion Control pitched for rapid personas), today’s posts shift from “swap tech” to “automated creator business,” with Kling used as the video production layer rather than the only ingredient.

The tweets don’t provide safeguards or disclosure practices; they’re primarily capability marketing via edgy use cases.

Kling’s under‑a‑minute style jump demo goes viral

Style-jump shock demo (Kling): A reposted clip shows a hand‑drawn/cartoon character walking on a branded “Kling” screen and then dissolving into a photoreal forest canopy, pitched as generated in under a minute in the Under-1-min demo. It’s being shared explicitly as a “WHAT IS REAL ANYMORE???” moment—lining up with broader “realism finally clicked” reactions around Kling 2.6 motion control in the Realism test reaction.

Kling Motion Control gets a “double synthetic” source-video twist

Synthetic-to-synthetic transfer (Kling Motion Control): One repost highlights a meta twist: the driving footage used as the motion source was also AI, not a real recording, per the AI driving source twist. That pairs naturally with the broader “record anything, transfer it” pattern described in the No-costume swap tip, but it pushes the idea toward fully synthetic pipelines (AI performance → Motion Control → AI character).

Kling Motion Control spreads a “no-costuming” performance transfer trick

Motion Control workflow (Kling): A practical tip is getting reposted: film yourself “as is,” then use Kling Motion Control to map that performance onto a dressed‑up or transformed character, skipping wardrobe/makeup entirely as described in the No-costume swap tip. In parallel, creators keep framing performance transfer (body + acting beats) as the core creative lever for 2026-style AI video, per the Performance-first prediction.

This continues to shift Motion Control from “cool feature” to “production shortcut”: performance capture first, styling second.

Niji 7 plus Kling 2.6 fuels cel‑shaded “final boss” character tests

Niji 7 + Kling 2.6 (Kling): A cel‑shaded “final boss” character clip made with Niji 7 and Kling 2.6 is circulating as a clean example of toon shading holding up under motion, per the Cel-shaded boss clip; it’s part of a broader pattern of using Motion Control as the glue layer to animate stylized stills without breaking the look, as echoed by “record yourself and transfer it” guidance in the No-costume swap tip.

Kling 2.6 gets a structural-collapse long-shot prompt stress test

Destruction coherence (Kling 2.6): A long-shot prompt describing a tower “buckling and tilting” while shedding floors is being shared as a structural coherence test—i.e., whether Kling can keep geometry readable through a complex collapse sequence, as shown in the Tower collapse prompt. Creators are also pairing these with high-speed camera instructions (forward “detonation” moves) to compound difficulty, as seen in the Prompt Studio speed demo.

No objective evals are attached in the tweets; it’s being used as a community benchmark clip template.

Kling 2.6 Prompt Studio examples highlight speed plus native audio

Prompt Studio i2v (Kling 2.6): A reposted Prompt Studio example emphasizes “Speed” as the organizing concept—describing a camera that “detonates forward” through a narrow corridor—while explicitly calling out image-to-video generation with native audio in the Prompt Studio speed demo. Separate creator toolchains are already stitching Kling into music-video style edits (Suno + Kling + compositing) as credited in the Thailand trip MV credits, which helps explain why “native audio” mentions are popping more often in Kling-centric shares.

The posts don’t include settings or pricing; they read like prompt-sharing for repeatable kinetic shots.

Kling 2.6 prompts lean into hyperspeed FPV cyberpunk flythroughs

Camera motion prompting (Kling 2.6): A hyperspeed FPV prompt for a vertical cyberpunk city is being shared as a reusable template for aggressive camera travel and sense of scale, as posted in the Hyperspeed FPV prompt. The same “velocity-forward” framing shows up again in Prompt Studio examples that describe the camera “detonating forward” through tight spaces in the Prompt Studio speed demo.

These are less about story and more about stress‑testing whether Kling’s motion stays coherent at extreme speeds.

🧰 Repeatable creator pipelines: performance-first video, shot grids, and multi-tool “content factories”

Heavy on practical, multi-step recipes today—especially performance-driven video (generate coverage grids, then transfer acting/lips) and storyboard-style shot planning. Excludes Veo items (feature) and pure prompt drops (handled separately).

Nano Banana Pro shot-grid to Kling 2.6 Motion Control for acting and lip sync

Performance-first video workflow: A control-heavy pattern is getting reiterated as “performance driven AI videos will dominate 2026,” chaining a Nano Banana Pro multi-shot “dialogue” grid (coverage first) into Kling 2.6 Motion Control to transfer your own acting and lip sync onto the generated shots, as described in Workflow steps recap. The emphasis here is reducing randomness by locking shot variety up front, then treating motion as a second, performance layer rather than hoping the model invents it cleanly.

Freepik Spaces to Kling 2.6 Motion Control music-video assembly workflow

Freepik + Kling (workflow): A compact “images-first → animate → assemble” recipe for music videos uses Freepik to generate the stills (in a custom Space), then animates clips in Kling by combining start frames with Motion Control, as shown in Workflow walkthrough.

• Shot construction: The approach describes building environments first, then band members, then alternating angles/compositions to get edit coverage, per the step-by-step thread in Full pipeline notes.

• Performance layer: Motion Control is positioned as the way to “perform” the instrument (record yourself, then clone the movement onto a still), which is the core trick highlighted in Workflow walkthrough.

ShotDeck reference boards to Nano Banana Pro 2×2 cinematic shot grids

Shot-planning pattern: A storyboard-like method uses ShotDeck frames to set composition/color intent, then asks Nano Banana Pro to generate a photoreal 2×2 grid of candidate shots for the same beat—useful for quick coverage exploration and continuity, as outlined in ShotDeck-to-grid setup.

• Grid-to-shot extraction: After generating the grid, the workflow pulls one cell as a single high-detail frame (“give me just the image in cell 3”), which effectively turns the grid into a branching storyboard, as described in Cell extraction step.

It’s a practical way to get “multiple angles of one moment” before you commit to animation or edit decisions.
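The workflow above asks the model to re-render a single cell. When a quick local crop of the grid is enough, the pixel bounds of a cell can be computed directly. This is a minimal sketch of that alternative, not the thread's method: the `cell_box` helper is hypothetical, and it assumes equal-sized cells numbered from 1 in row-major order (left to right, then top to bottom), which the thread does not confirm.

```python
def cell_box(cell: int, width: int, height: int, rows: int = 2, cols: int = 2):
    """Return (left, top, right, bottom) pixel bounds of a 1-indexed grid cell.

    Assumes equal-sized cells numbered row-major: 1 2 / 3 4 for a 2x2 grid.
    """
    if not 1 <= cell <= rows * cols:
        raise ValueError(f"cell must be between 1 and {rows * cols}")
    row, col = divmod(cell - 1, cols)
    cell_w, cell_h = width // cols, height // rows
    left, top = col * cell_w, row * cell_h
    return (left, top, left + cell_w, top + cell_h)


# Under the row-major assumption, cell 3 of a 2x2 grid is the bottom-left quadrant.
print(cell_box(3, 2048, 2048))  # (0, 1024, 1024, 2048)
```

The returned tuple uses the (left, upper, right, lower) ordering that image libraries such as Pillow expect for a crop box. A crop only recovers the pixels already in the grid, so it will be lower resolution than a re-rendered cell.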

Spectral Systems Interface prompt template as a reusable HUD scene builder

Diegetic UI / HUD workflow: A reusable “Spectral Systems Interface” template is being shared as a way to rapidly generate holographic HUD-style inserts (subject + two neon colors + floating data layers) that can be reused across different story beats, per the prompt structure in HUD prompt template.

The value for filmmakers/designers is consistency: it’s a repeatable scaffold for command-hub overlays (brain scans, creature readouts, artifact analysis) rather than one-off concept art.

Claude + Arcads + Kling 2.6 pitched as a faceless “AI content factory”

Multi-tool content factory pitch: A stacked pipeline gets promoted as “Claude + Arcads + Kling 2.6 = AI Content Factory,” framing Claude for scripting/automation and Kling Motion Control as the conversion lever for producing faceless, repeatable video ads/content, according to Content factory claim. The post is more a monetization framing than a spec drop—no concrete throughput or quality metrics are provided in the tweet.

Suno + Kling + NanoBanana + AfterEffects used for a full AI music video

AI music video pipeline: A full-length “THAILAND TRIP” music video credits a common creator stack—Suno AI for music, Kling AI for video generation, NanoBanana for image assets, and AfterEffects for finishing—showing how these tools are being chained into complete edits, as listed in Toolchain credits.

🧪 Style refs & prompt drops: OVA sci‑fi, storybook concept art, holographic UI, and gritty editorial packs

Big prompt-and-style day: multiple Midjourney --sref codes, a reusable holographic UI prompt, plus prompt-pack subscription plugs. Excludes tool capability news (handled in other categories).

Azed’s “Spectral Systems Interface” prompt template for holographic HUD scenes

Prompt template (Azed): The “Spectral Systems Interface” prompt is getting shared as a general-purpose holographic HUD builder—swap in a subject plus two neon colors, keep the same “transparent layers / floating data symbols / command hub” scaffold, as outlined in the Prompt share.

• Composable subject slot: The examples show the same structure holding up across very different subjects (wolf, brain, dragon skull, heart), which is the core reason it’s reusable day-to-day per the Prompt share.

• Thread reuse: The prompt is also being re-posted verbatim for remixes and community variations in the Prompt repost, reinforcing it as a “copy → tweak → publish” template rather than a one-off.

It’s essentially a stable “scene grammar” for sci‑fi interface shots, with the subject and palette doing most of the creative work.

Midjourney --sref 1165068537: cinematic storybook concept art with ink sketching

Midjourney style ref (Midjourney): The --sref 1165068537 reference is being passed around as a narrative digital-illustration look—digital paint plus visible ink sketch lines, with “animated film” concept-art framing called out in the Style ref description.

• Cinematic framing: The examples lean hard into staged lighting and story beats (faces lit by sconces, bar-counter props), which is why it’s being described as “storybook-style concept art” in the Style ref description.

• Works for motion tests: Creators are also using the same look as a base for short animated beats (vintage character animation feel) per the Animation example.

Midjourney --sref 2172318582 targets 80s/90s sci‑fi OVA action (Ghost in the Shell vibe)

Midjourney style ref (Midjourney): A new reusable style reference, --sref 2172318582, is being shared as a tight recipe for 80s/90s sci‑fi OVA action—explicitly framed around Ghost in the Shell / Akira energy, plus Cyber City Oedo 808, Patlabor, and Bubblegum Crisis cues in the Style ref writeup.

It’s showing up as a practical way to lock in cel-era military cyberpunk details (visor highlights, hard-surface armor, neon muzzle flashes) while still letting you iterate on character poses and weapon silhouettes quickly, as demonstrated by the example grid in the Style ref writeup.

Niji 7 prompt set: Stranger Things in 80s horror OVA anime style (ALT prompts)

Niji 7 prompts (Midjourney): A full mini-pack of Niji 7 prompts recreates Stranger Things characters as 80s horror OVA frames—Eleven nosebleed closeup, Upside Down lightning, Demogorgon reveal, Vecna villain shot—explicitly shared as usable ALT-text prompts in the Prompt thread.

The styling notes are unusually specific (hand-painted cel look, VHS/DVD screengrab texture, Devilman/Wicked City influence), which makes the pack more of a repeatable “production bible” than a single prompt, as seen in the Prompt thread.

Midjourney --sref 4907281122: moody, blind-striped portrait + neon noir stills

Midjourney style ref (Midjourney): A newly created style reference, --sref 4907281122, is circulating as a “moody cinematic still” preset—harsh window-blind shadows, sweaty closeups, retro nightlife color, and gritty film grain, as shown in the Sref drop and expanded in the More samples.

The consistent signature across the examples is high-contrast practical lighting (striped shadows, neon signage reflections) that reads like staged editorial photography rather than clean studio portraiture, which is why it’s being pitched as a ready-made moodboard seed in the Sref drop.

Nano Banana Pro “luxury packaging reveal” prompt template (Rolex/iPhone/Dior-style unboxing)

Prompt template (Nano Banana Pro): A “luxury packaging reveal” prompt is being shared as a repeatable unboxing/contact-sheet layout—gloved hands opening high-end boxes (Rolex watch box, iPhone Pro, perfume, Dior-style packaging) to generate consistent, premium product stills, as shown in the Prompt share image.

It’s a useful pattern for creators who need multiple “reveal beats” quickly (closed box → opening → hero product), because the template bakes in the props, hand placement, and lighting cues rather than leaving them to chance, which is the core framing in the Prompt share image.

Portrait Prompts Weekly Zine: 12 Midjourney prompts per issue across Fisheye/B‑Movie/Trash

Prompt pack subscription (Portrait Prompts Weekly): A recurring Midjourney prompt zine is being advertised as a weekly drop of 12 prompts per issue across three themes—Fisheye, B‑Movie, and Trash—with the claim of 1,612 weekly readers in the Zine pitch.

Details on the free vs paid tiers (and what you get beyond the weekly PDF) are spelled out on the Gumroad listing linked in the Gumroad product page, with the direct checkout surfaced in the Checkout link.

This is firmly positioned as “editorial portrait prompting as a habit,” not a one-off style drop, based on the cadence described in the Zine pitch.

Midjourney moodboard drop: --profile 7l1rhh3 for “darker side” aesthetics

Midjourney moodboard (Bri Guy AI): A creator is sharing a new Midjourney moodboard profile, --profile 7l1rhh3, positioned as a fast way to push darker, moodier portrait aesthetics without rebuilding a full prompt stack, per the Moodboard drop.

It’s also being used as the context hook for a broader “gritty editorial” prompt-pack pitch in the Newsletter promo, suggesting the profile is meant to slot into a weekly prompt routine rather than stand alone.

🧑🏫 Single-tool how‑tos: local ComfyUI video setup and text-based video editing

A couple of concrete, single-tool tutorials show up: local ComfyUI/LTX-2 setup steps and a text-first editing workflow for quick revisions. Excludes multi-tool pipelines (handled in workflows).

TechHalla posts a from-scratch ComfyUI + Manager install path for LTX-2 templates

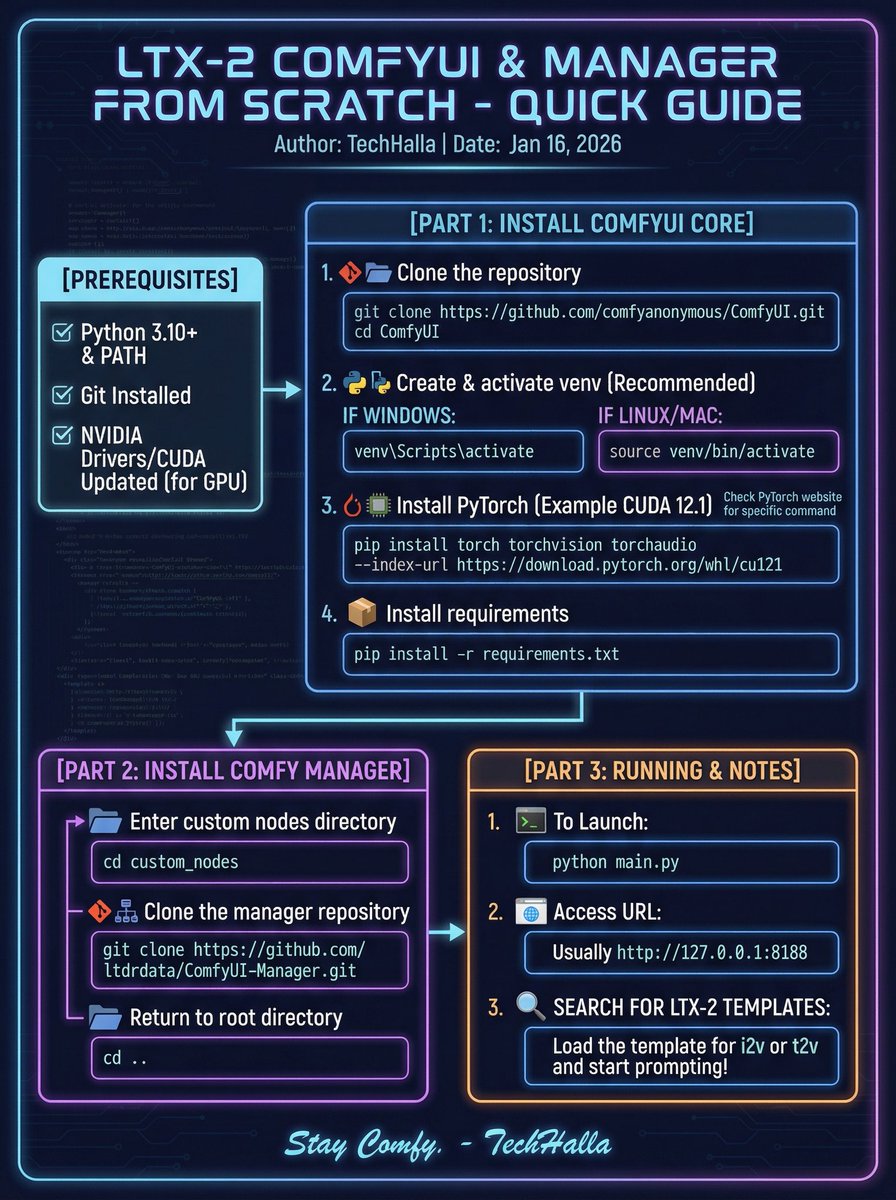

ComfyUI (ComfyUI): Following up on the earlier local setup item (ComfyUI + open LTX-2), the new drop is an explicit “from scratch” setup checklist: Python 3.10+, Git, updated NVIDIA/CUDA drivers, a sample PyTorch cu121 install command, and the default local UI address (127.0.0.1:8188), as laid out in the install guide graphic.

It also calls out the practical next step once you boot: search for LTX-2 templates inside ComfyUI (i2v/t2v) and start prompting, as shown in the same install guide graphic. The clip attached to that post suggests a terminal-first workflow that runs from setup to generation, which is the main “local video studio” framing in the install guide graphic.
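Part of that checklist can be verified with a short preflight script before launching anything. The sketch below is illustrative and not taken from the guide: the function names are hypothetical, and it only covers the Python version, Git, and whether something is listening on the default UI address. It does not check NVIDIA/CUDA drivers or the PyTorch install.

```python
import shutil
import socket
import sys

MIN_PYTHON = (3, 10)                # checklist item: Python 3.10+
COMFYUI_ADDR = ("127.0.0.1", 8188)  # default local UI address from the guide


def python_ok(version=sys.version_info) -> bool:
    """True if the interpreter meets the checklist's minimum version."""
    return tuple(version[:2]) >= MIN_PYTHON


def git_ok() -> bool:
    """True if a git executable is found on PATH."""
    return shutil.which("git") is not None


def ui_reachable(addr=COMFYUI_ADDR, timeout: float = 1.0) -> bool:
    """True if something accepts TCP connections at the UI address."""
    try:
        with socket.create_connection(addr, timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("Python 3.10+:", python_ok())
    print("git on PATH:", git_ok())
    print("UI listening on 127.0.0.1:8188:", ui_reachable())
```

The reachability check only confirms that a process is accepting connections on that port, not that the process is ComfyUI, so it is best read as a hint that the server booted.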

Pictory demonstrates text-based video editing where script changes update captions and visuals

Pictory (Pictory): Pictory is pushing a text-first edit loop where you change words in the script/transcript and the edit ripples through the cut—captions refresh and visuals re-time/update “instantly,” per the feature demo and the accompanying walkthrough in the text editing tutorial.

This is framed less like generation and more like a lightweight text-based NLE for quick revisions (especially when you’re iterating on messaging), with the on-screen before/after copy swap shown in feature demo.

🎞️ Post tools that save hours: lasso matting, prompt-based modify passes, and cleanup utilities

Today’s post stack is about making footage usable: fast matting/rotoscoping, modify passes that keep motion, and quick cleanup. Excludes Veo (feature) and Kling Motion Control demos (identity category).

StyleFrame adds a lasso tool for fast video matting and RGBA exports

StyleFrame (video matting): StyleFrame demoed a new Lasso Tool for Video Matting that turns “draw a rough lasso” into a tracked mask across an entire clip—positioned as faster than frame-by-frame roto, with multi-layer masks and RGBA image-sequence export for compositing, as described in the lasso matting feature rundown.

The clip suggests a “mask once, propagate, then refine edges (hair)” loop; what’s not shown yet is how it handles occlusions, fast motion blur, or scene cuts (the usual failure modes for automated matting).

Hedra Labs teases “Clean Up,” hinting at an API-first cleanup step

Hedra Labs (cleanup utility): Hedra posted a “Clean Up” demo that ends by explicitly writing “API,” pointing to an API-centric cleanup workflow rather than a purely UI-only feature, as shown in the Clean Up demo clip.

The tweet doesn’t specify what’s being cleaned (artifacts, objects, or temporal issues), but the API emphasis is a clear signal they want this to slot into automated post pipelines.

Luma Modify gets framed as motion-preserving VFX via start/end frame control

Luma Modify (prompt-based post pass): A practical note circulating is that Luma Modify can preserve character movement while still changing the scene, with creators adding VFX by tuning start/end frames rather than regenerating everything from scratch, as summarized in the Modify preserves movement note.

This is a distinctly “post” use case: treat the shot’s motion as sacred, and use text as a controlled stylization / scene-adjustment layer—while accepting that scene drift is still a risk in exchange for speed.

A watermark-removal workflow pairs Nano Banana Pro outputs with Google Magic Eraser

Cleanup workflow (watermarks): A short demo shows a workflow where an image is generated with Nano Banana Pro, then a second tool—described as Google “Magic Eraser”—is used to remove the watermark, per the watermark removal demo.

This is framed as a capability demo, but it’s also an obvious provenance flashpoint: watermark stripping directly undermines attribution and downstream trust signals in creative pipelines.

🧩 Where creators build: workflow engines, touch-based editing surfaces, and hosted edit models

This bucket covers new/expanding creation surfaces rather than model capability details: workflow engines, editing UIs, and hosted model availability. Excludes Veo 3.1 (feature) and creator contests (events).

GMI Studio launches as a dedicated workflow engine, plus a 3‑month Pro giveaway

GMI Studio (GMI Cloud): GMI Studio is now “officially here” as a dedicated workflow engine positioned for “high-end AI creation,” moving beyond the “multimodal hub” framing, as described in the Launch positioning.

• Launch framing: The pitch is about repeatable, production-style workflows rather than one-off prompting, per the Launch positioning.

• Adoption push: GMI is running a 7‑day promo where 10 winners get 3 months of GMI Studio Pro free, as outlined in the Pro giveaway details.

Lovart ships Touch Edit, letting you mark a canvas to drive edits

Lovart (Lovart): Touch Edit is being demoed as a touch-first editing surface where you directly mark what you want changed—“Touch Edit everything you mark”—instead of steering edits purely through text, as shown in the Touch Edit demo.

The clip suggests a two-step interaction loop: establish the visual (“Touch Vision”), then apply targeted edits via on-canvas marks, with the product messaging emphasizing speed and direct manipulation in the Touch Edit demo.

WaveSpeed puts new image-edit models live, highlighting FLUX.2 Klein 4B Edit

WaveSpeed (WaveSpeed): WaveSpeed says new image editing models are now live, explicitly calling out FLUX.2 Klein 4B Edit as a precision/control option optimized for speed and cost-efficiency, per the Model rollout note.

What’s concrete in today’s signal is availability and positioning (precision + control), while details like exact pricing, latency, and edit strength aren’t provided in the tweet thread shared in Model rollout note.

Nebius highlights a collaboration with Higgsfield to scale training without bottlenecks

Nebius × Higgsfield (training scale): Nebius is spotlighting Higgsfield as a customer/partner and claims the two are collaborating to scale model training “without bottlenecks,” framing it as an enabling layer behind creator-facing products, according to the Nebius collaboration claim.

The post is high-level (no cluster size, GPU counts, or cost figures), but it’s a direct signal of infrastructure partnering aimed at faster training throughput, as stated in the Nebius collaboration claim.

🎵 AI music + scoring: Suno music videos and Firefly soundtrack experiments

Audio is lighter today but includes practical creator use: Suno-backed music video production and Adobe Firefly soundtrack scoring tests. Excludes non-audio video tool updates.

“THAILAND TRIP” music video credits Suno AI for the track, with Kling for visuals

THAILAND TRIP music video (Suno): A full-length AI music video drop credits Suno AI for music generation, paired with Kling AI for video, NanoBanana for image work, and AfterEffects for finishing—see the toolchain callout in Toolchain credits.

The practical signal for musicians and editors is the “stacked” pipeline: one model handles the song bed (Suno), while separate tools handle shot generation/animation (Kling) and editorial polish (AE), as shown in Toolchain credits.

Firefly’s Generate Soundtrack (beta) shows up as a fast scoring step

Generate Soundtrack (Adobe Firefly): A creator shares scoring a short horror test (“Woman in Red”) using Firefly’s Generate Soundtrack (beta) via an upload → soundtrack flow, as described in Scoring workflow note.

This reads like an “instant temp score” step for shorts and concept tests—music-first pacing without leaving the Firefly workflow, based on the quick upload-based method referenced in Scoring workflow note.

“FULL THROTTLE” soundtrack-first edit framing circulates via Kling repost

Soundtrack-first cutting: A Kling repost highlights “FULL THROTTLE,” framed as an industrial glitch hip hop track meant to drive the energy of an edit, per the snippet in Track repost.

Even without full tool credits in the repost text, it’s another example of creators treating the track as the primary control surface for rhythm and motion beats (music sets the cut; visuals follow), as implied by Track repost.

💻 Coding agents & model economics: Codex speed, subscription-vs-API math, and ‘skills’ conventions

Creator-adjacent dev chatter today centers on agent tooling economics and Codex acceleration—useful for filmmakers/designers building internal tools. Excludes Claude rate-limit issues (handled in reliability).

OpenAI × Cerebras tease “very fast Codex coming”

Codex acceleration (OpenAI × Cerebras): A new partnership tease suggests Codex performance may jump soon, with Sam Altman’s “Very fast Codex coming!” shown alongside “OpenAI 🤝 Cerebras” in the Cerebras partnership teaser.

This is thin on technical detail (no throughput/latency numbers yet), but it’s directly relevant for creators building internal tooling where coding-agent iteration time becomes the bottleneck.

Claude Max vs API pricing: claim of ~30× cheaper for heavy agent use

Model economics (Anthropic): A widely shared cost comparison claims Claude Max ($100/month) can be roughly 30× cheaper than paying for Opus via API tokens for heavy daily coding-agent usage, based on an estimate of ~$0.80 per request and “a couple hundred requests every day” in the 30x cost comparison.

The same thread frames this as a structural problem for third-party IDE/agent vendors competing on top of Anthropic’s API, since the subscription channel may undercut token-based resale economics, as argued in the 30x cost comparison.
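The arithmetic behind the ~30× figure can be sketched as follows. The $0.80 per request and $100/month figures come from the thread; reading “a couple hundred” as 200 requests per day, and the choice of 20 working days or 30 calendar days per month, are assumptions made here for illustration.

```python
# Rough subscription-vs-API comparison using the thread's estimates.
COST_PER_REQUEST_USD = 0.80  # thread's estimate for one Opus API request
SUBSCRIPTION_USD = 100.0     # Claude Max monthly price cited in the thread


def api_to_subscription_ratio(requests_per_day: int, days_per_month: int) -> float:
    """Return how many times more the API route costs than the subscription."""
    monthly_api_cost = COST_PER_REQUEST_USD * requests_per_day * days_per_month
    return monthly_api_cost / SUBSCRIPTION_USD


# Assumption: 200 requests/day on 20 working days per month.
ratio_workdays = api_to_subscription_ratio(200, 20)
# Assumption: same volume on every day of a 30-day month.
ratio_every_day = api_to_subscription_ratio(200, 30)

print(f"20 working days: {ratio_workdays:.0f}x")    # 32x
print(f"30 calendar days: {ratio_every_day:.0f}x")  # 48x
```

Under these assumptions the ratio lands between about 32× and 48×, the same order of magnitude as the thread's claim. The result scales linearly with request volume, so lighter users would see a much smaller gap.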

Agent tools converge on “.*/skills/<name>/” folder conventions

Skills conventions (Agent tooling): A developer meme highlights multiple agent products adopting nearly identical “skills” directory layouts—e.g., .claude/skills/<name>/, .codex/skills/<name>/, .cursor/skills/<name>/—as shown in the Skills folder meme.

This signals an emerging de facto packaging pattern for reusable agent behaviors (even if unofficial), which can reduce friction when teams switch tools or run more than one agent stack.
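One practical consequence of a shared layout is that a single script can inventory skills across tools. The sketch below assumes only the directory pattern shown in the meme (`<tool dir>/skills/<name>/`). The `find_skills` helper and the idea of listing which tools share a skill name are illustrative, not part of any tool's official interface.

```python
from pathlib import Path

# Tool directories named in the meme; treat this list as illustrative.
TOOL_DIRS = (".claude", ".codex", ".cursor")


def find_skills(project_root: Path) -> dict[str, list[str]]:
    """Map each skill folder name to the tool directories that contain it."""
    found: dict[str, list[str]] = {}
    for tool_dir in TOOL_DIRS:
        skills_dir = project_root / tool_dir / "skills"
        if not skills_dir.is_dir():
            continue  # this tool is not set up in the project
        for entry in sorted(skills_dir.iterdir()):
            if entry.is_dir():
                found.setdefault(entry.name, []).append(tool_dir)
    return found


if __name__ == "__main__":
    for name, tools in find_skills(Path.cwd()).items():
        print(f"{name}: {', '.join(tools)}")
```

A skill that shows up under more than one tool directory is a candidate for keeping in one place and linking or copying into the others, though whether the contents are actually interchangeable between tools is not something the meme establishes.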

GPT-5.3 rumor watch: creators speculate on how many GPT-5 variants ship

Release cadence chatter (OpenAI): A rumor thread claims people are “hearing rumors about GPT 5.3 now,” raising the question of how many GPT‑5 point releases OpenAI might ship, per the GPT-5.3 rumor.

No corroborating artifacts (benchmarks, model cards, or product surfaces) appear in today’s tweets, so this remains pure speculation for now.

⚡ Compute & training infrastructure signals: gigawatt clusters, CUDA kernels, and scaling partners

Only includes concrete AI compute signals that affect creative tooling capacity (training clusters, kernel updates, scaling partners). Excludes general consumer hardware chatter.

xAI says Colossus 2 is live, framed as the first gigawatt AI training cluster

Colossus 2 (xAI): xAI boosters are claiming Colossus 2 is “up and running” and calling it the “first gigawatt training cluster in the world,” with an Epoch AI chart showing a ~1,600 MW point in 2026 and a steeper ramp penciled in for 2027, as shared in the Colossus 2 claim.

For creative AI tooling, the practical signal is capacity: multi‑GW-scale sites tend to translate into more training throughput and/or more headroom for high-volume video inference, provided the buildout matches the curve shown in the shared chart. The tweet itself doesn’t specify GPU counts, network fabric, or what workloads are actually running yet, so treat the “first gigawatt” framing as directional rather than audited.

OpenAI and Cerebras tease “very fast Codex” via partnership post

Codex acceleration (OpenAI × Cerebras): a Cerebras post and Sam Altman reply are being read as a speed-focused infra tie-up—Altman says “Very fast Codex coming!” in a thread quoting “OpenAI 🤝 Cerebras,” as captured in the Codex speed tease.

The tangible creative-side impact is turnaround time for agentic iteration loops (storyboards, shotlists, edit decision lists, batch prompt variants) when Codex-style tooling is in the pipeline; but there are no latency numbers, availability dates, or product surface details in the Codex speed tease, so the scale of the speedup remains unspecified.

Nebius highlights a scaling partnership with Higgsfield to train without bottlenecks

Training scale partnership (Nebius × Higgsfield): Nebius is spotlighting Higgsfield as a customer collaboration aimed at scaling training “without bottlenecks,” positioning the relationship as compute + platform support for a creative AI video company, per the Nebius scaling note.

This matters because creator-facing video tooling is increasingly constrained by training cadence and inference availability during demand spikes; the post is light on concrete capacity numbers (GPUs, cluster size, or timelines), so the main signal from the Nebius scaling note is vendor alignment rather than a quantified throughput jump.

CUDA-L2 kernel update claims broader A100 SM80 coverage with FP32 accumulation

CUDA-L2 (kernel tuning): a performance note claims CUDA-L2 has updates for A100 using a 32-bit accumulator path (SM80_16x8x16_F32F16F16F32) tested across 1,000 M×N×K configurations, as described in the CUDA-L2 update.

The relevance for creators is indirect but real: better GEMM coverage on widely deployed A100s can lower cost/latency for both training and inference on video/image models that still run significant FP16/FP32 mixed math; the CUDA-L2 update doesn’t include benchmark deltas, so the magnitude of gains can’t be verified from these tweets alone.
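Why the accumulator width matters can be illustrated without a GPU. The sketch below is plain Python, not CUDA-L2 code and not a model of the actual tensor-core instruction: it rounds inputs to FP16 and compares a running sum kept in FP16 against one kept in FP32. The helper names are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def dot(a, b, round_acc):
    """Dot product of FP16 inputs, rounding the running sum with round_acc."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = round_acc(acc + to_fp16(x) * to_fp16(y))
    return acc


ones = [1.0] * 4096
fp16_acc = dot(ones, ones, to_fp16)  # running sum kept in half precision
fp32_acc = dot(ones, ones, to_fp32)  # running sum kept in single precision
print(fp16_acc, fp32_acc)
```

With 4,096 ones, the FP16 running sum stalls at 2048 because the next integer is not representable in half precision, while the FP32 running sum reaches the exact answer of 4096. Long reductions in GEMMs face the same hazard, which is the usual motivation for pairing FP16 inputs with an FP32 accumulator.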

🛡️ Likeness, consent, and synthetic media boundaries: new CA rules + watermark removal discourse

Policy/legal posts today are directly relevant to AI filmmakers: consent requirements for voice/likeness and questionable watermark removal workflows. Excludes medical and bioscience content entirely.

California AB 2602 and AB 1836 tighten consent rules for AI “digital replicas”

California digital replica law: Posts flag that AB 2602 and AB 1836 are now enforceable as of Jan 1, 2026, with contract and usage rules that directly hit AI film/ads workflows—especially anything that recreates a real person’s voice/likeness, per the contracts warning.

• Living-performer contracts (AB 2602): The claim is that vague “future media” language is void, and using an AI replica requires explicit, specific consent and compensation terms, as summarized in the contracts warning and expanded in the law breakdown.

• Deceased performers (AB 1836): Using a dead actor’s likeness without estate consent is described as illegal under the new rules, with the same operational implication for filmmakers (clear rights chain or don’t ship), as outlined in the contracts warning and the law breakdown.

The practical effect is that “we’ll sort rights later” paperwork becomes harder to defend when a project uses a recognizable real person rather than an original synthetic character.

Musk seeks $79B–$134B damages from OpenAI and Microsoft in fraud suit

OpenAI/Microsoft litigation pressure: A Bloomberg screenshot circulated with the claim that Elon Musk is seeking $79B–$134B in damages from OpenAI and Microsoft, arguing he’s entitled to a piece of OpenAI’s reported $500B valuation, as summarized in the Bloomberg damages claim.

The same post says OpenAI has denied the allegations and called the demand “baseless” and “unserious,” per the Bloomberg damages claim.

Watermark removal workflow goes viral: Nano Banana Pro image then AI eraser cleanup

Provenance and watermarking: A demo shows a two-step workflow—generate an image with Nano Banana Pro, then remove the watermark using an AI “magic eraser” tool, framing it as a quick cleanup technique in the watermark removal demo.

The clip is a reminder that watermarking is only as strong as the surrounding ecosystem: when the same creator stack also includes strong inpainting/erase tools, provenance signals can get stripped before the file ever reaches distribution.

🧯 Reliability & limits: Claude Code rate caps, heat, and long-run instability

A small but clear thread on operational friction: agent swarms hit rate limits and local machines struggle under load. Excludes broader coding model news (covered in coding assistants).

Claude Max rate limits and laptop strain during 10+ agent Claude Code swarms

Claude Code + Claude Max (Anthropic): A creator running a multi-agent coding setup reports “speedrunning” into Claude Max rate limits while watching “10+ agents collaborate on one task,” and calls out Claude Code as a memory hog with laptop fans blaring, per the firsthand run footage in Rate limits report.

• Rate caps as the bottleneck: The practical constraint wasn’t task complexity but request throughput—“Speedrunning my way through my Claude Max rate limits,” as described in Rate limits report.

• Local resource pressure: The same setup appears to stress consumer hardware (memory + thermals), with “my laptop fans are blaring,” according to Rate limits report.

• Long-run iteration: In a follow-up after “my limits reset,” the author says they ran the workload “for a few more hours,” sharing a progress update in Limits reset update.

The thread is anecdotal (no official Anthropic telemetry shared), but it’s a clear signal that multi-agent workflows can quickly shift the failure mode from “model quality” to rate caps and laptop stability.

💳 Access levers (high-signal only): credits-for-engagement and expiring creator offers

Kept narrowly to promos that change practical access (credits/unlimited windows), not generic giveaways. Excludes event-based prize challenges (covered in Events).

Higgsfield bumps its engagement-for-credits DM offer to 220 credits and says it’s ending soon

Higgsfield (Higgsfield): Following up on DM bonus (unlimited window plus DM credits), the current engagement gate is now framed as “Retweet & Reply & Like & Follow” in exchange for 220 credits via DM, as stated in the 220 credits instructions; a separate urgency post warns “The offer ends soon. Move.” in the Ending soon note, reinforcing that this is time-boxed access rather than an evergreen perk.

The practical change for creators is the credit amount (220) and the reminder that this specific promo window is closing, with no additional plan details or eligibility constraints provided in today’s posts beyond the engagement steps and DM delivery, as described in 220 credits instructions and Ending soon note.

🗓️ Challenges & calls: $20K–$100K video contests and creator-week prompts

Multiple prize-led challenges circulate today across AI video communities; this category is only for contests/calls with dates, prize pools, or submission mechanics. Excludes pricing promos that aren’t competitions.

Magiclight $100,000 Challenge goes live with showcase promise

Magiclight (Video challenge): The Magiclight $100,000 Challenge is “officially live,” with a $100,000 total prize pool and a promise that winning videos will be showcased, as stated in Challenge is live.

Because the repost doesn’t spell out submission specs (format, length, judging), the operational details appear to sit behind the original Magiclight post referenced by Challenge is live.

Higgsfield launches a $20,000 AI Video Challenge + giveaway

Higgsfield (AI Video Challenge): A new $20,000 AI video challenge is now live, positioned as both a challenge and giveaway, per the reposted announcement in Challenge now live.

The tweet doesn’t include rules or deadlines in-line, so entrants will need to follow the linked flow from the original Higgsfield thread referenced in Challenge now live.

GMI Studio runs a 7-day giveaway: 10 winners get 3 months Pro

GMI Studio (Giveaway): GMI is running a 7-day promo with 10 winners receiving 3 months of GMI Studio Pro, according to the giveaway framing in 7-day giveaway terms.

This is a lightweight “build something + share” style call (exact mechanics not shown in the RT), but it’s still a concrete, time-boxed incentive for creators already trying GMI workflows.

Azed opens a weekly “show your AI art” participation thread

Community call (Azed): A weekly “show your favorite AI-generated art for this week” thread is being used as a lightweight prompt-jam + engagement loop, with the host promising to check out and engage with submissions in Weekly AI art call.

This functions less like a contest (no prize pool cited) and more like a recurring showcase mechanic—post your best work, get eyes, and iterate week over week, as reinforced by the RT in Thread repost.

📣 Growth formats & monetization plays: AI influencers, faceless funnels, and visual-contrast CTR hacks

This bucket is about distribution and monetization tactics (not the underlying model capabilities). Excludes step-by-step multi-tool production recipes unless the primary purpose is marketing strategy.

AIWarper’s “bespoke influencer” factory pitch: scrape clips, automate a persona

Bespoke influencer pipeline: A circulating monetization pitch claims you can build a custom “influencer” end-to-end with AI by scraping existing clips (or recording yourself) and then automating the rest of the production loop, as laid out in the Bespoke influencer steps repost.

The creative implication is that “influencer” becomes a production system, not a person: the differentiator shifts toward having a consistent character/identity and a scalable clip engine (editing, posting, iterating), rather than one-off hero videos.

Kling repost frames AI video as an OnlyFans-style creator on-ramp

Synthetic persona monetization: A Kling repost highlights a blunt growth narrative—“anyone can become an OnlyFans creator” using AI-generated videos—positioning synthetic characters as a direct on-ramp to paid adult-style creator models, per the OnlyFans creator framing repost.

This sits in the same family as “AI influencer factories,” but with a clearer business model attached (subscription/fan monetization) and a heavier reliance on identity/consistency across clips rather than singular viral moments.

🏁 Finished pieces & filmmaker notes: AI music videos and the craft of framing

This is for named releases or explicit craft breakdowns (not generic ‘cool outputs’). Excludes tool capability demos (covered elsewhere).

ECHOES: Framing note frames AI shots as film-language first, tech second

ECHOES: Framing (Victor Bonafonte): Bonafonte shared a filmmaker note on how framing in ECHOES was designed with a “strictly cinematic mindset”—composition, negative space, and camera position picked for emotion and subtext, then iterated repeatedly until rhythm and weight matched the story, as described in Framing mini-essay.

What’s concrete here is the stated workflow emphasis: iterate shots to move the work away from “novelty” and toward authorship, keeping AI as a production tool rather than the reason a shot exists, as laid out in Framing mini-essay.

THAILAND TRIP drops as a Suno + Kling + NanoBanana AI music video

THAILAND TRIP (seiiiiiiiru): A full-length AI music video titled “THAILAND TRIP” was posted with a clear toolchain credit line—Suno AI for the track, Kling AI for video generation, NanoBanana for visuals/assets, and AfterEffects for finishing—per the release post in Toolchain credits.

The clip reads like a practical “stack snapshot” for music-video creators: generative audio + generative video + image/asset generation, then a conventional compositor to lock pacing, transitions, and typography into something release-ready, as indicated by the explicit AfterEffects credit in Toolchain credits.

📉 Platform reality checks: revenue sharing frustration and engagement-farming fallout

Discourse today focuses on creator monetization and the perception that anti-fraud efforts are reducing payouts for most accounts while engagement farmers persist. Excludes tool pricing changes (handled in pricing/promos).

X Creator Revenue Sharing: creators report “smallest payout in months”

Creator Revenue Sharing (X): A creator reports their latest payout as “such a small payout” and “the smallest in months,” framing it as a broad squeeze rather than a niche fraud fix, in the payout frustration post; in replies, others echo the surprise and dispute prior messaging (“They said they doubled it”) per the reply on payouts reaction.

The thread’s core claim is perception, not measurement: anti-fraud enforcement may be reducing earnings for “almost all creators,” while only a few accounts “who manage to go viral” see meaningful upside, as argued in the payout frustration complaint and reinforced by follow-up commentary in the fraud clamp critique reply.

Engagement farming discourse: “real creators pay the price” while farmers persist

Engagement-farming incentives (X): A follow-on thread argues that “engagement farmers are still doing just fine,” while “real content creators are the ones paying the price,” per the fraud clamp critique reply and the broader reflection that many users “can’t tell an engagement farmer from a genuine creator” in the audience can’t tell note.

A concrete example of the loop shows up in a creator promo that explicitly asks for “Retweet & Reply & Like & Follow” in exchange for “220 creds in DM,” as stated in the credits for engagement post; it’s the kind of mechanic the discussion points to when it says follower behavior enables farmers to keep winning, per the audience can’t tell framing.

• Distribution critique: The thread’s mechanism claim is incentive-driven—people reward low-effort engagement tactics because they’re hard to distinguish at a glance, as described in the audience can’t tell discussion.

• Creator sentiment: The tone is resignation rather than surprise: “Just keep posting” until the platform “can really fix this,” as summarized in the keep posting reply.
