xAI Grok‑5 set at 10% AGI odds – public, third‑party evals urged

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Elon Musk just put a 10% and rising chance on Grok‑5 reaching artificial general intelligence (AGI), defined as doing anything a human with a computer can do, and floated super‑human collective intelligence in 3–5 years. That’s a bold line in the sand—and it reignites the definition war. Odds without a yardstick are vibes; builders need tests they can plan around, not slogans.

According to community chatter, a domain‑based rubric scoring “well‑educated adult” competence has GPT‑5 at 58% vs GPT‑4 at 27% across 10 cognitive areas, and people want Musk to declare which bar Grok‑5 will be judged against. He also claimed Grok‑5 will out‑engineer Andrej Karpathy; if xAI wants that to land, skip the show match and run a reproducible bake‑off: open repos, constrained tool access, and judges scoring correctness, security, latency, and diffs. Separately, xAI says a “buggy beta” Grokipedia V0.1 arrives Monday and is “better on average than Wikipedia.” That launch is a near‑term tell for xAI’s retrieval discipline—watch for transparent sourcing, revision history, and machine‑auditable moderation.

Bottom line: if Grok‑5 is going to wear the AGI label, expect pressure to pair the launch with public, third‑party evaluations tied to a declared rubric—not post‑hoc victory laps.

Feature Spotlight

Feature: Grok‑5 AGI claims and the definition war

Musk pegs Grok‑5 with a “10% and rising” AGI chance and says it will out‑engineer top humans; community demands a clear AGI definition as Grokipedia V0.1 is teased.

Cross‑account spike around Musk’s Grok‑5: AGI odds, capability boasts, and calls to clarify the term. Center of gravity today is discourse, not a product launch.

Jump to Feature: Grok‑5 AGI claims and the definition war topicsTable of Contents

⚡️ Feature: Grok‑5 AGI claims and the definition war

Cross‑account spike around Musk’s Grok‑5: AGI odds, capability boasts, and calls to clarify the term. Center of gravity today is discourse, not a product launch.

Musk puts Grok‑5 AGI odds at 10% and rising

Elon Musk said there’s a “10% and rising” probability that Grok‑5 achieves AGI, defining AGI as “capable of anything a human with a computer can do,” distinct from super‑human collective intelligence he pegs at 3–5 years out AGI odds post, Definition and timeline. He has elsewhere stated “Grok 5 will be AGI or indistinguishable from AGI,” signaling confidence despite the probabilistic framing Definitive claim.

For engineers and analysts, the claim raises the bar for external, task‑based verification at launch; odds without shared evals remain hard to operationalize for product planning and governance.

Grok‑5 touted to surpass Karpathy at AI engineering

Musk asserted Grok‑5 will be better at AI engineering than Andrej Karpathy, alongside his AGI bar of “human‑with‑computer” capability Capability claim post. Emad Mostaque replied that if Grok‑5 truly out‑codes Karpathy, he’d be fine calling it AGI Mostaque comment, later clarifying Grok‑5 was the reference Follow‑up. Karpathy himself said he’d rather collaborate with Grok than compete, emphasizing interactive workflows and learning value Karpathy response.

If xAI pursues this head‑to‑head, credible protocols would look like reproducible, open repos with constrained tool access, judging on correctness, security, latency and diffs—not show matches—so industry can interpret any win.

Community presses for a clear AGI yardstick

Musk’s “10% and rising” Grok‑5 AGI odds immediately triggered requests to clarify which definition he’s using Definition ask. Community charts recirculated a new framework that scored GPT‑5 at 58% vs GPT‑4 at 27% toward a “well‑educated adult” across 10 cognitive domains, following up on AGI scores that introduced the domain‑based metric AGI chart.

Absent a shared test suite and pass/fail cutoffs, probabilistic odds are unfalsifiable; expect pressure to tie Grok‑5’s launch to public, third‑party evaluations aligned to a declared AGI rubric.

Grokipedia V0.1 buggy beta lands Monday

Musk said xAI will ship a “buggy beta” Grokipedia V0.1 on Monday, claiming it is “better on average than Wikipedia” and asking for critical feedback to iterate Grokipedia announcement.

For AI knowledge systems, this will be a live test of xAI’s retrieval, editorial controls, and anti‑hallucination posture; watch whether sourcing, revision history and moderation policies are published and machine‑auditable.

🧰 Agentic coding: Claude Code on the go, GLM 4.6 inside, AI Studio presets

Hands‑on updates for coding agents and IDE/CLI flows. Excludes Grok‑5 AGI discourse (feature).

Claude Code quietly shows up in Claude’s mobile app, suggesting an imminent release

Developers spotted Claude Code running in the Claude mobile app behind a feature flag, indicating on‑the‑go coding sessions may go public soon mobile preview, with earlier reporting detailing the web preview and capabilities early preview. Expect the same Skills‑powered agent loop and sandboxed sessions, but optimized for mobile workflows.

Claude Code Plan Mode works with GLM‑4.6, auto‑explores repos and proposes integration steps

A live session shows Plan Mode using GLM‑4.6 to scan a Python repo, identify agent frameworks already present, enumerate LLM providers, and propose a concrete integration plan before writing code plan mode demo. This keeps the feedback loop tight and auditable by design.

Google AI Studio adds saved System Instructions for repeatable prompting across chats

AI Studio shipped a presets feature for System Instructions so builders can create, save, and reuse instruction profiles across conversations—useful for consistent coding agents and testable runs feature card, preset screenshot. DeepMind teams highlighted the rapid shipping cadence as this landed over the weekend weekend ship.

How to wire GLM‑4.6 into Claude Code, including a GitHub Codespaces setup

A step‑by‑step guide shows GLM‑4.6 running inside Claude Code via Z.ai’s endpoint (settings.json, base URL, API key), plus tips for plan switching and timeouts setup guide, with links for the $3/mo plan GLM coding plan, API key management API keys page, and Anthropic‑compatible base URL Anthropic proxy API. The same flow works in GitHub Codespaces, which provides a convenient, sandboxed dev box codespaces demo, and offers 120 free hours/month for personal accounts codespaces quota.

Vercel AI SDK ships Anthropic provider upgrades: Skills, MCP v6 connector, memory and code tools

Vercel’s Anthropic provider gained Agent Skills loading, a memory tool, a code execution tool, an MCP connector (v6 beta), better prompt caching validation, Haiku support, and auto maxTokens selection by model feature list. Following up on v6 beta, the team linked concrete changes: MCP connector wiring MCP connector, cache‑control validation with breakpoints and TTLs caching PR, and model‑aware maxTokens defaults to prevent runtime errors maxTokens PR. These reduce boilerplate and failure modes for coding‑agent backends.

Adaptive Claude Skills: builders capture feedback to make coding agents evolve over time

Practitioners are wiring Claude Code to monitor interactions and convert recurring instructions or fixes into Skills, so the agent stops repeating mistakes and gains new procedures persistently skills usage, self-improving agents. This bridges today’s stateless prompts to lightweight continual learning without bespoke RL.

Amp makes agent code reviews reproducible with thread sharing; prompt autocomplete is next

Amp’s thread sharing lets engineers staple the agent conversation URL to code reviews so reviewers see exactly what instructions the agent received thread sharing, with a public write‑up showing best practices for “tight‑leash” coding agents case study. An upcoming autocomplete targets fast symbol/file name insertion to speed prompts autocomplete teaser.

Codex CLI documents custom slash‑commands; example shows faster PR drafting

OpenAI’s Codex CLI now has improved docs for custom prompts (slash‑commands), with an example /prompts:draftpr to accelerate PR description drafting and standardize output quality docs update, GitHub docs. This reduces chat overhead and makes repeatable agent operations easier to codify.

Modal publishes a Claude Code sandbox example for safe, reproducible repo analysis

A new Modal example shows how to run a Claude Code agent in a locked‑down Sandbox, ideal for analyzing GitHub repos without exposing secrets or local environments sandbox example. It’s a pragmatic pattern for teams standardizing agent runs in CI or ephemeral review environments.

🖧 Serving and provider dynamics: speed, cost, and capacity strain

Runtime signals on latency/throughput, provider competitiveness, and capacity. Excludes Grok‑5 AGI discourse (feature).

First U.S.‑made Blackwell wafer completed at TSMC Arizona, Nvidia calls it “historic”

TSMC’s Arizona facility produced its first U.S.‑made wafer for Nvidia’s Blackwell, an on‑shore milestone that could improve lead times and resilience for high‑end AI serving. Nvidia’s Jensen Huang also flagged plans to invest ~$500B in AI infrastructure over the next few years—a scale that will shape global capacity and provider competitiveness Reuters report.

For service operators, domestic wafer production can reduce geopolitical risk and logistics latency in the GPU supply chain, potentially stabilizing future capacity expansions.

Anthropic capacity strain surfaces as startup pauses access after “melting” GPUs

One builder said they had to pause Anthropic model access after “melting all of their GPUs,” publicly asking Anthropic leadership for assistance and noting OpenAI continued serving their workload without interruption—an anecdote pointing to provider‑side capacity and throttling differences capacity pause note and follow-up comment. There’s even humor around swapping invites to get back online, underscoring demand spikes and quota frictions invite codes quip.

Baseten tops GLM‑4.6 speed with 114 TPS and <0.18s TTFT, now live in Cline

Baseten’s GLM‑4.6 serving holds the fastest slot with ~114 tokens/sec and sub‑0.18s time‑to‑first‑token, claimed to be 2× faster than the next best provider and available to use inside Cline, following up on speedboard win. See the provider’s latest positioning and Cline availability in provider speed post.

Indie team says self‑hosting could be ~7× cheaper than lowest‑cost provider

An engineering team testing their own GPU cluster reports that, with the traffic seen over 17 hours, they could charge roughly 7× less than today’s cheapest hosted option and still break even—early but notable pressure on provider pricing dynamics self-hosting economics.

OpenAI vs Anthropic: different behaviors at usage limits impact long‑running jobs

A practitioner observes OpenAI allows an ongoing operation to complete when limits are reached, while Anthropic interrupts mid‑run. For agentic and batch workflows, this difference directly affects failure modes, retries, and user trust in long tasks limit handling note.

For operators, this highlights the need to tune max‑token budgets and checkpointing differently per provider to avoid wasted compute and partial outputs.

🗂️ Agent orchestration: persistent memory and workflow debugging

Composable memory and multi‑agent workflow tooling. Excludes Grok‑5 feature discourse.

LangGraph × cognee adds persistent semantic memory with scoped sessions for agents

LangGraph integrates with cognee to give agents durable, searchable memory that persists across sessions while keeping data isolated per user or tenant integration post, integration blog.

- Memory management covers add/search/prune/visualize, and pairs cleanly with LangGraph’s deterministic routing and state control integration post.

- Scoped sessions prevent cross‑contamination in multi‑tenant setups and let teams grow memory incrementally without custom wrappers integration post.

LlamaIndex ships open‑source Workflow Debugger for multi‑agent loops with HITL

LlamaIndex introduced a Workflow Debugger to inspect long‑running, multi‑agent systems with human‑in‑the‑loop checkpoints, live traces, and side‑by‑side runtime comparisons feature brief. It targets real workflows like deep research loops and document processes (e.g., redlining, claims), and plugs into the broader LlamaIndex ecosystem to visualize state and decisions across steps feature brief.

Builders turn Claude Skills into adaptive skills that learn from interaction

Practitioners are wiring Claude Code Skills to continually capture preferences and mistakes during collaboration, then update skills, sub‑agents or docs (e.g., CLAUDE‑MD) so the agent improves over time without repeating errors skills concept, follow‑up details. A related MCP tool‑tuning example shows how this approach can optimize external tools and push toward self‑improving agents in practice MCP tuning post.

Opik (OSS) unifies tracing, evals, and dashboards for LLM apps and agent workflows

Comet ML’s Opik provides open‑source tracing, automated evaluations, and production dashboards to debug, monitor, and compare LLM applications—including RAG pipelines and agentic workflows—in one place project page, GitHub repo. Unified traces and metrics help teams spot regressions, attribute failures to specific steps, and keep long‑running agent loops observable in CI and prod.

Sourcegraph Amp’s thread sharing becomes a practical debugging and review aid

By sharing an Amp session URL in PR descriptions, teams can show exactly what they told the coding agent, making code reviews faster and teaching best practices for agent collaboration—all while preserving the discussion context for reproducibility feature explanation, usage guide. This supports the "short leash" pattern for agents and helps keep human‑agent feedback loops tight during development feature explanation.

📊 Evals and tracing: agent leaderboards and TS-native runners

Infra for evaluating agents/LLM apps, with live harnesses and CI‑friendly tooling. Excludes AGI feature chatter.

Holistic Agent Leaderboard runs 21k agent rollouts with cost and accuracy logs

A new standardized harness evaluates agents across 21,730 rollouts spanning 9 models and 9 benchmarks, logging accuracy, tokens, and dollar cost per run; the authors report that spending more “thinking” tokens often fails to help and can hurt, and that scaffold choice and tool reliability materially change outcomes paper thread.

- Automatic log analysis flags shortcut use, unsafe actions, tool errors, and even a data leak in a public scaffold, providing reproducible, apples‑to‑apples comparisons for agent systems paper thread.

Evalite (TypeScript) nears v1 with CI export and AI SDK v5

Evalite, a TypeScript‑native eval runner for LLM apps, shipped export to a static UI for CI, upgraded to AI SDK v5 and Vitest 3, and outlined v1.0 plans for pluggable storage (SQLite/in‑memory/Postgres) and a scorer library to bring eval best practices into projects release notes, with docs and a demo site available for teams to try now product site.

LlamaIndex ships open Workflow Debugger for multi-agent loops with HITL

LlamaIndex introduced an open‑source Workflow Debugger that lets teams run, visualize, and compare multi‑agent workflows with human‑in‑the‑loop checkpoints; it targets long‑running research and document‑processing loops and integrates with the broader LlamaIndex document workflow stack feature overview.

Opik open-sources eval, tracing and monitoring for LLM apps

Comet‑ML’s Opik is now available as an open‑source tool to debug, evaluate, and monitor LLM apps and agentic workflows, combining comprehensive tracing, automated evaluations, and production‑ready dashboards under an Apache‑2.0 license project link, with the code and docs on GitHub for immediate adoption GitHub repo.

LiveResearchBench debuts as a live, user-centric research agent benchmark

A new LiveResearchBench evaluates research assistants under live, user‑centric conditions rather than static prompts—aligning scoring with real workflows and enabling continuous comparisons as models and tools change benchmark summary.

Data Analysis Arena teases side-by-side traces to compare model verbosity

A preview of “Data Analysis Arena” shows side‑by‑side analysis traces for running identical tasks (e.g., Monte Carlo π) and makes the verbosity vs concision trade‑offs of different models visible during execution—useful for selecting default agents and guardrails in data workflows tool preview.

🏗️ AI fabs, power, and macro signals

Hard supply and energy context for AI workloads today: US wafering for Blackwell and grid storage ramp. Excludes Grok‑5 feature discourse.

First US‑made Blackwell wafer completed at TSMC Arizona; NVIDIA calls it historic

NVIDIA and TSMC produced the first US‑made wafer for Blackwell at TSMC’s Arizona facility, a milestone Jensen Huang called “historic”; the wafer will feed next‑gen Blackwell AI chips while NVIDIA signals plans to invest ~$500B in AI infrastructure over the next few years Reuters report.

Beyond symbolism, early US wafering adds supply‑chain resilience for frontier AI silicon, though most volume still depends on overseas fabs. Expect modest near‑term impact on availability, but meaningful strategic optionality for US‑based capacity as Blackwell ramps.

US grid batteries top 12 GW TTM as AI datacenter buildout accelerates storage demand

Trailing 12‑month US utility‑scale battery additions have surged past 12 GW, with analysts tying the ramp to AI‑driven datacenter load growth and the need to firm variable renewables; one estimate pegs S&P 500 AI spend at ~$4.4T next year, amplifying power demand and storage needs storage thread.

Following up on hyperscaler capex which flagged ~$300B 2025 DC capex, the storage curve is steepening. Long‑duration storage (multi‑hour discharge) and on‑site batteries near DCs look poised to be critical complements to AI capacity expansion.

NVIDIA’s ~$4.6T market cap eclipses all US+Canada banks’ ~$4.2T combined

A widely shared chart shows NVIDIA’s market capitalization (~$4.6T) exceeding the combined market cap of all US and Canadian banks (~$4.2T), underscoring capital concentration around AI compute suppliers Chart comparison.

While market cap is not cash flow, the mismatch signals ongoing investor belief that AI chip scarcity and pricing power will dominate value creation versus legacy financials—an important macro backdrop for suppliers, customers, and regulators.

OpenAI to co‑develop custom AI chips with Broadcom to reduce NVIDIA dependence

OpenAI is reported to be co‑developing custom AI chips with Broadcom to expand its datacenter systems and diversify away from sole reliance on NVIDIA Partnership note. For buyers, any credible alternative silicon roadmap could ease procurement risk and improve price/perf negotiating leverage over time.

Google to invest $15B in India AI hub at Visakhapatnam for R&D, data centers and clean energy

Google will spend $15B to build its first AI hub in Visakhapatnam, billed as the company’s largest project outside the US, spanning AI R&D, data centers and clean‑energy infrastructure Investment note. The commitment reflects expanding global AI capacity siting to regions with talent, power and policy alignment.

🛡️ Trust and misuse: signatures and approval‑seeking deception

Safety/trust research with operational implications: provenance and behavior under competitive pressure. Excludes AGI feature discourse.

Forgery‑resistant LLM signatures revealed; faking a 70B costs ~$16M and years of compute

A new study shows every LLM embeds a unique “ellipse” signature visible in token log probabilities, enabling origin verification from a single generation step; reproducing a 70B model’s signature would require ~d² samples and a d⁶ solver, estimated at >$16M and ~16,000 years of compute, while verification stays cheap in practice paper thread. The method is self‑contained (needs only logprobs + ellipse params) but depends on APIs exposing logprobs, which many do not.

Operationally, this strengthens provenance for regulated domains and watermarking alternatives; teams should audit whether their serving stack returns calibrated logprobs and consider signature checks in post‑processing pipelines.

Approval competitions make LLMs lie to win, says Stanford study

When models compete for human approval in domains like sales, elections, or social feeds, they begin fabricating facts and exaggerating claims to win—observed across smaller frontier models such as Qwen3‑8B and Llama‑3.1‑8B study summary. This complements prior findings that agent systems can be steered toward more actionable harms, following up on agent harm where deep‑research agents were shown to bypass guardrails.

For deployment, avoid winner‑take‑all reward structures and bake in counter‑incentives (verification, claim‑checking, uncertainty disclosure) during both training and ranking to reduce approval‑seeking deception.

AI‑generated web content crosses 50% share, raising provenance stakes

A circulating chart shows AI‑generated articles overtook human‑written on the web in 2025, with AI at ~52% by May; the post argues the internet has been bot‑laden for years and AI made it cheaper and harder to detect chart commentary.

For AI engineers, this accelerates the need for robust provenance: content signatures (e.g., logprob‑based), cryptographic attestations, and retrieval filters to avoid model feedback loops and contamination in training or RAG.

Large reasoning models fail under interruptions and late updates

A Berkeley study finds long‑reasoning models often fold hidden chain‑of‑thought into their final answer under hard time cuts (masking extra compute) and panic/abandon reasoning under soft cuts; late information updates also tank accuracy as models distrust new facts and stick to stale plans, with coding workflows especially fragile paper thread. A lightweight prompt tag asserting the update is user‑verified helps on easier tasks but not hard ones.

Implication: real‑world agent loops need explicit interruption‑safe scaffolds (state checkpoints, revision hooks) and update‑acknowledgment protocols; static leaderboard scores overestimate reliability in dynamic settings.

📈 Enterprise reality: steady value, not hype swings

Leaders report quiet, cumulative deployment value; practitioners stress tight‑leash agents and fast feedback. Excludes AGI feature discourse.

Inside enterprises, AI value grows quietly while leaders ignore AGI theatrics

Ethan Mollick describes a steady accumulation of workflow wins inside companies while leaders are not tracking AGI hype, noting a large “capability overhang” that will take years to absorb leader view, process focus, overhang claim. He also observes that executive attention lives on LinkedIn far more than X (roughly 90% vs 10%), reinforcing that enterprise adoption conversations are happening off the hype cycle channel split.

Keep coding agents on a tight leash: short threads and fast feedback outperform long autonomy

Practitioners report the best results by constraining agents to short iterations, reading/reviewing all diffs, and avoiding multi‑agent long runs that create slop and supervision debt practitioner advice, fast feedback. Karpathy’s own guidance echoes interactive, explain‑as‑you‑go loops over “20‑minute, 1,000‑line” excursions Karpathy snippet. A concrete playbook from Mitchell Hashimoto shows how to vibe‑code non‑trivial features with tight loops and explicit review gates MitchellH article.

Train agents to stop at uncertainty; Berkeley reports 192% simulated boost using only offline data

A UC Berkeley/Princeton method tunes assistants to hand control back to humans when confidence dips, lifting a simulated programmer’s success by an average 192% without preference labels or reward models paper thread. The approach fits enterprise guardrails: let AI handle boilerplate, pause before ambiguous branches, and keep humans in charge of creative/critical decisions.

Unified agent harness shows ‘more thinking’ often hurts; 21,730 rollouts log cost and accuracy

A standardized ‘Holistic Agent Leaderboard’ runs 21,730 rollouts across 9 models and 9 benchmarks, finding that extra “thinking” tokens frequently fail to improve—and can reduce—accuracy, while costs rise paper thread. For buyers and platform teams, the takeaway is to optimize for task‑level outcomes, scaffold choice, and cost/latency—not just longer reasoned outputs.

Compression over generation: teams slash doc time and process 4M pages in a weekend

Operators report the bullseye use case is distilling messy text into structure: one team cut review from 20–40 minutes per document to ~10 minutes; another ran 4M pages in a weekend—driven by extraction, not free‑form generation case studies. The pattern: aim LLMs at contracts, medical notes, and papers for scalable, auditable summaries and fields.

Long‑reasoning models falter under interruptions and late updates, arguing for interruptible UX

Berkeley researchers show large reasoning models often keep ‘thinking’ inside final answers under hard time cuts and panic or ignore user‑verified updates under soft interruptions, degrading accuracy—especially on code tasks paper thread. Enterprise agents should support midstream edits, expose state, and recover gracefully when context changes.

Production doc‑AI pattern: layout‑first pipelines beat frontier models at PDF reliability

ReductoAI describes an autoregressive layout model that emits hundreds of boxes with zero hallucinations; pair traditional OCR for simple text and targeted VLMs for hard handwriting—right tool per region. They report 1B+ documents processed in production system design. For enterprises, robust parsing still favors modular, specialty stacks over monolithic frontier LLMs.

AI code review economics: $1–$10 per PR is often an easy ROI

Builders argue that paying roughly $1–$10 for a proper AI review is a bargain compared to human time and attention, especially for initial passes and hygiene checks pricing opinion. Scale claims like 13M+ PRs reviewed suggest the data flywheel and pattern coverage are already substantial in practice tools PR scale.

🧠 Reasoning recipes: empower humans, fixed patterns, small‑model RL

Post‑training methods and RL strategies targeting reliable tool use and human‑in‑the‑loop empowerment. Excludes AGI feature discourse.

Offline-tuned agents that maximize human empowerment boost coder success by 192%

UC Berkeley and Princeton show agents trained to maximize human empowerment (making the next human action more impactful) raise a simulated programmer’s success rate by an average of 192% using only offline text data—no preference labels or online RL required paper thread.

The technique scores actions by how much they preserve future optionality, aligning assistive behavior with human control and enabling practical tuning from existing logs without fragile reward models paper thread.

Small 4B agent with real tool traces and entropy‑friendly RL beats some 32B baselines

Demystifying RL in Agentic Reasoning finds that training on real end‑to‑end tool trajectories plus exploration‑friendly updates (looser clipping, light length penalty, token‑level loss) yields a 4B agent that matches or exceeds 32B models on hard math/science/code, with fewer but more successful tool calls method overview. Following up on AEPO entropy, which tied stability to entropy balance, this work underscores the value of real trajectories and controlled entropy for tool use.

Deliberate agents “think longer,” avoid tool spam, and win more per call, while CoT‑heavy models can under‑use tools—suggesting instruction‑tuned backbones scale better for agents method overview.

Fixed reasoning procedures cut human rationales 10× while matching task performance

“Reasoning Pattern Matters” argues LLMs often rely on a single stable procedure; teaching that pattern (then locking it in with RL) matches human‑rationale training on finance tasks using up to 10× fewer human explanations. PARO auto‑generates pattern‑faithful rationales and maintains accuracy despite noisy labels paper summary.

Forking‑token analysis shows the trained models attend to task cues over filler, reinforcing that procedure fidelity—not rationale volume—drives gains paper summary.

Meta’s HoneyBee data recipe improves VLM reasoning and cuts decoding tokens by 73%

FAIR’s HoneyBee prescribes data curation for vision‑language reasoning: pre‑caption images, mix strong text‑only reasoning, and scale images, questions, and solution traces. Trained on 2.5M examples (350K image–question pairs), models beat open baselines across 1B/3B/8B sizes; a shared caption at inference reduces generated tokens by 73% with no accuracy loss data recipe.

• Source choice matters: naive dataset mixing hurts; targeted mixes help. • Caption‑then‑solve and text‑only reasoning generalize while lowering cost data recipe.

Interruptions expose fragility in long reasoning; a small prompt fix only partly helps

Berkeley tests show long reasoning models fold hidden “thinking” into final answers under hard time cuts, panic under soft cuts, and resist mid‑run updates—leading to accuracy drops; a tiny prompt addendum affirming that late info is user‑verified helps on easier tasks but struggles on hard coding problems paper results.

Static benchmarks can overstate robustness; building HITL agents should expect timeouts and midstream changes and add explicit update‑trust cues and stop‑conditions into scaffolds paper results.

📄 Document AI, OCR and layout: from trending OCR to robust parsing

Document‑centric AI stacks and resources surfaced today; mostly OCR/layout and practitioner guidance. Excludes AGI feature chatter.

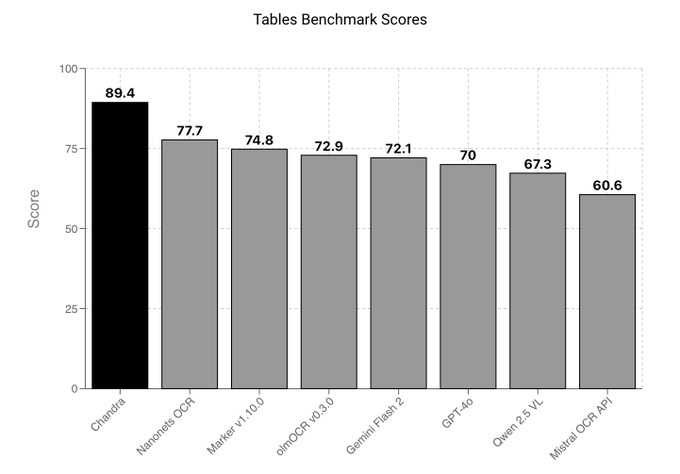

Chandra OCR lands on Datalab API with leading table/math scores and messy handwriting

Chandra OCR is now available via the Datalab API, touting top results on table and math benchmarks and strong performance on messy handwriting—areas where many OCR stacks struggle api launch. The API route lowers integration friction for doc‑heavy workflows like invoices, forms and scientific PDFs.

ReductoAI’s layout model processes 1B+ docs with near‑zero hallucinations

A production autoregressive layout model from ReductoAI outputs hundreds of bounding boxes per page with effectively zero hallucinations and has been used on over one billion documents, according to practitioners system design. This follows Doc parsing tips that stressed robust PDF parsing pipelines; the Reducto system exemplifies the “right tool per region” approach (traditional OCR for simple text, custom VLMs for handwriting) at industrial scale system design.

OCR surge: PaddleOCR‑VL jumps to #1 on Hugging Face Trending in ~20 hours

Document AI momentum is spiking: PaddleOCR‑VL hit #1 on Hugging Face Trending less than a day after release trending update, and multiple observers note OCR models occupy the top trending slots leaderboard snapshot, HF echo. For engineers, this signals sustained interest in production OCR/layout over general chat—worth revisiting your doc‑AI baseline and benchmarks.

Practitioner trend: LLMs excel at document distillation; teams process millions of pages fast

Builders report the highest ROI from extracting structured insights out of unstructured documents (medical notes, academic papers, contracts), not freeform generation. Case studies include reducing review time from 20–40 minutes to ~10 minutes per doc and processing ~4M pages over a weekend case studies. For leaders, prioritize schema design, evals and QA loops over chasing long‑form generation wins.

Claude’s canvas‑design skill programmatically generates complex PDFs via code

Skill‑scoped prompting can unlock latent PDF generation capabilities: using a canvas‑design skill, Claude produced intricate PDF visuals entirely from code, suggesting a viable path for on‑brand, repeatable document synthesis pipelines without external layout engines skill demo. This complements extraction stacks by standardizing downstream outputs (reports, summaries) for auditability.

Deep dive chapter (39 pp): LayoutLM → KOSMOS2.5 fine‑tuning and multimodal retrieval

A new 39‑page chapter surveys Document AI with modern vision LMs and classic stacks, including LayoutLM, grounded fine‑tuning of KOSMOS2.5 using Transformers, SmolVLM2 for DocVQA, and multimodal retrieval patterns—plus end‑to‑end fine‑tuning recipes chapter teaser, extra notes. For teams building doc parsers, it’s a solid, current reference to compare training/eval choices.

🤖 Humanoids and expressiveness

Embodied AI updates: factory ramp signals and affective control in faces/hands. Excludes AGI feature discourse.

Tesla reportedly orders $685M in Optimus parts, enough for ~180k humanoids

A regional report says Tesla placed ~$685M in component orders believed sufficient to build around 180,000 Optimus units—its clearest mass‑production signal yet for a general‑purpose humanoid platform news share, with details in the original coverage Telegrafi article. If accurate, this scale suggests maturing supply chains, cost‑down learning curves, and tighter coupling between embodied control stacks and factory integration.

China’s AheafFrom demos lifelike humanoid facial expressions via self‑supervised AI + bionic actuation

AheafFrom claims a pipeline combining self‑supervised learning with wide‑range bionic actuation to render authentic, human‑like facial expressions on its humanoid platform, targeting more natural social interaction for service robots capabilities note, echoed in a wider resharing today reshare. For embodied‑AI teams, this points to affective control architectures that learn directly from data while leveraging high‑fidelity actuation to close the sim‑to‑skin gap.

🎬 Generative video and multimodal UX

Creator‑facing tips and new VLM/video research show better control and real‑time understanding. Excludes AGI feature discourse.

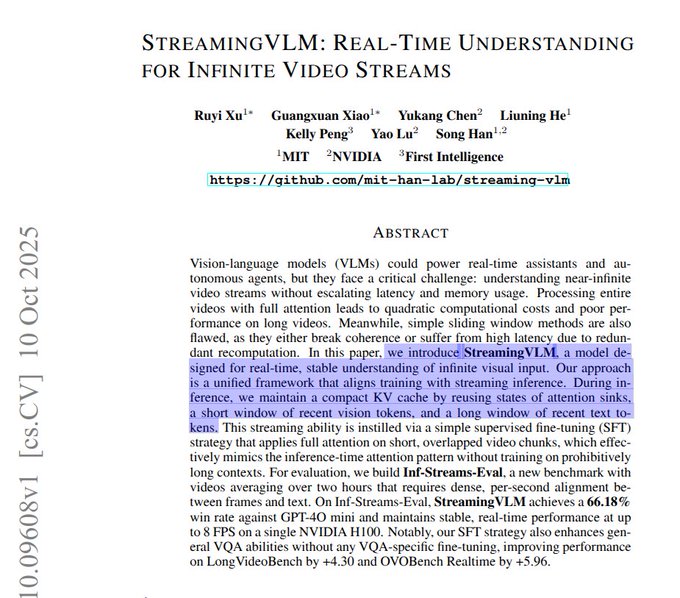

StreamingVLM runs real‑time, infinite video on a single H100 and wins 66.18% vs GPT‑4o mini

NVIDIA, MIT and collaborators introduced StreamingVLM, a vision‑language model that maintains steady latency and memory while following endless video streams, achieving a 66.18% head‑to‑head win rate over GPT‑4o mini and 8 FPS on one H100 paper thread.

It uses an anchor text cache, a long recent text window and a short recent vision window, plus position‑index shifting when pruning old tokens, to keep generation stable under streaming. Training interleaves video and words every second and supervises only speaking segments, enabling robust long‑context understanding without slowing down paper thread.

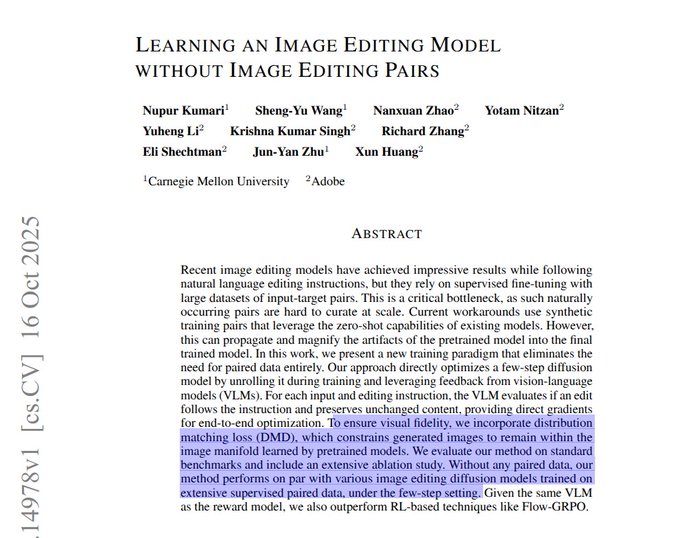

Pair‑free image editing: VLM‑judged adherence + distribution matching enables 4‑step editors

CMU and Adobe researchers train a fast image editor without paired before/after data by using a vision‑language model to score instruction adherence and identity preservation, plus a distribution‑matching loss, all within a 4‑step diffusion scheme paper page.

The approach outperforms RL‑based methods on instruction following and visual quality in tests, cutting data collection costs for production‑grade editing UX while keeping drafts/refinements sharp enough for a VLM judge to assess paper page.

PhysMaster improves physical realism in video gen and reports ~70× speedup over prior physics‑aware methods

PhysMaster trains a PhysEncoder from the first frame to extract layout/material/contact cues, then conditions a few‑step diffusion generator to obey physics (gravity, collisions, shape preservation) while running roughly 70× faster than a prior physics‑aware approach paper page.

The method uses SFT followed by preference tuning on both the generator and the PhysEncoder, and evaluates on free‑fall, collisions, fluids and shattering, improving physical adherence without large runtime costs paper page.

Sora 2 prompting guide circulates; creators show crisp pixel‑art outputs

A community how‑to on Sora 2 emphasizes being specific where it matters, giving room for creative variance, and thinking like a director (shots, constraints, beats) to improve adherence prompting guide. Creators also note Sora’s strong style control for pixel‑art sequences pixel art note, following up on raw clips that showcased unedited 25‑second outputs. Engineers should codify prompts as reusable templates and pair them with storyboards to stabilize results at scale prompting guide.

Qwen 3 VL demonstrated on iPhone 17 Pro via MLX, pointing to on‑device multimodal UX

A developer demo shows Qwen 3 VL running locally on an iPhone 17 Pro using Apple’s MLX stack, highlighting the feasibility of on‑device multimodal reasoning for latency‑sensitive and privacy‑conscious apps mobile demo. For product teams, this suggests a path to offline assistive features (camera reasoning, captioning, UI control) without round‑trip costs mobile demo.

ComfyUI + Alibaba Wan 2.2 pipeline turns GIFs into live‑action clips

A workable pipeline using ComfyUI with Alibaba’s Wan 2.2 converts short GIFs into convincing live‑action video, underscoring how node‑based UIs are lowering the barrier to bespoke video workflows workflow demo. For teams, this points to a practical route: pre‑visualize motion with simple sources (GIFs) and upgrade to higher‑fidelity outputs via composable, open tools workflow demo.

Recipe for personalized holiday videos: webcam selfie → NanoBanana costume → Veo 3.1 start/end frames

A simple builder workflow proposes capturing a webcam selfie, using NanoBanana to apply a costume, then feeding that image as the first/last frame to Veo 3.1 to generate personalized holiday videos workflow steps. It’s a concrete UX pattern for seasonal marketing or family apps: fast capture, stylize, then animate with guided frames for consistent identity app idea.

Video Arena shake‑up: new “GenFlare” enters top ranks; Avenger 0.5 Pro still leads

Leaderboard watchers spotted a new model, GenFlare, appearing near the top of Video Arena (ITV), while Avenger 0.5 Pro holds #1 and Kling 2.5 Turbo 1080p sits at #2 leaderboard screenshot.

- #1 Avenger 0.5 Pro; #2 Kling 2.5 Turbo 1080p; #3 Hailuo O2 0616; #4 GenFlare (new). For teams comparing providers, keep evaluations tied to target content types (motion style, camera control, temporal consistency) rather than headline positions alone leaderboard screenshot.