OpenAI signs $38B, 7‑year AWS compute deal – GB200 and GB300 capacity ramps

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

OpenAI just inked a $38B, seven‑year compute pact with AWS to scale ChatGPT and train its next models. It matters because the company says it gets “immediate and increasing” access to hundreds of thousands of NVIDIA GB200 and GB300 GPUs, plus the ability to fan out to tens of millions of CPUs by 2026–27. That’s the right mix for modern agents: GPUs for dense training/inference, CPUs for pre/post‑processing and tool use. Investors noticed too—Amazon jumped roughly 4–5% intraday on the announcement.

Under the hood, OpenAI is standardizing on EC2 UltraServers—clusters tuned for low‑latency training/inference—while elastically attaching CPU fleets for orchestration and serving readiness. Strategically, this formalizes the multi‑cloud play alongside Azure and Oracle; observers peg OpenAI’s planned capacity near 30 GW, a hedge against single‑cloud queueing and outages. For developers, the practical upside is steadier throughput and tighter tail latencies as these fleets come online, especially for long‑running tasks and agent workflows that thrash caches and tools.

One more tell that the capacity race is accelerating: Microsoft just received clearance to ship NVIDIA accelerators to the UAE and is rolling GB300 NVL72 racks with Lambda, suggesting everyone’s scrambling to lock in silicon and power while demand keeps climbing.

Feature Spotlight

Feature: OpenAI signs $38B, 7‑year compute deal with AWS

OpenAI and AWS announce a $38B, 7‑year partnership granting immediate access to EC2 UltraServers (GB200/GB300) and massive CPU scale to expand ChatGPT and next‑gen model training—reshaping hyperscale GPU supply and cloud competition.

Cross‑account story of the day: OpenAI confirms a multi‑year strategic partnership with AWS to scale ChatGPT and training, citing EC2 UltraServers with GB200/GB300 and massive CPU scale. Multiple exec posts plus product page details surfaced today.

Jump to Feature: OpenAI signs $38B, 7‑year compute deal with AWS topicsTable of Contents

🤝 Feature: OpenAI signs $38B, 7‑year compute deal with AWS

Cross‑account story of the day: OpenAI confirms a multi‑year strategic partnership with AWS to scale ChatGPT and training, citing EC2 UltraServers with GB200/GB300 and massive CPU scale. Multiple exec posts plus product page details surfaced today.

OpenAI inks $38B, 7‑year AWS deal for frontier AI compute

OpenAI confirmed a multi‑year strategic partnership with AWS, committing $38B over seven years for EC2 UltraServers to scale ChatGPT and next‑gen training, with “immediate and increasing access” to hundreds of thousands of NVIDIA GB200/GB300 GPUs and the ability to scale to tens of millions of CPUs by 2026–27 OpenAI blog. Following up on partner deals, this cements the multi‑cloud roadmap previously flagged at ~30 GW planned capacity.

- Leadership sign‑offs underscore execution: “bring a lot more NVIDIA chips online” (Sam Altman) Sam Altman note, “multi‑year, strategic partnership” (Andy Jassy) Andy Jassy post, and “to help scale compute for AI that benefits everyone” (Greg Brockman) Greg Brockman note.

- Infra specifics matter to engineers: EC2 UltraServers cluster GB200/GB300 GPUs for low‑latency training/inference while elastically fanning out CPU fleets for pre/post‑processing and tool use (serving readiness) OpenAI blog.

- Market signal for leaders: Amazon shares jumped ~4–5% intraday on the news, reflecting confidence in AI infra monetization stock move.

- Ecosystem watch for analysts: After Azure, Oracle and others, this raises the question of who remains unpartnered for OpenAI’s GPU roadmap and at what price/performance tiers ecosystem chatter, aws news card.

AWS pact anchors OpenAI’s multi‑GW roadmap and staged 2026–27 buildout

The $38B, 7‑year AWS agreement locks in a major slice of OpenAI’s future compute, with immediate access on EC2 UltraServers and additional phases slated through 2026–27 OpenAI page. This follows capacity plans that mapped ~30 GW class partnerships; today’s deal clarifies where a big chunk will land and how quickly capacity can be put to work. OpenAI’s announcement outlines the chip mix (GB200/GB300) and CPU scale OpenAI post, while AWS mirrors the message on its site AWS news card. Ecosystem chatter is already asking which GPU vendors/clouds remain unpartnered after this move Partner coverage quip.

Why it matters: leaders get a firmer view of supply security and capex timing; engineers can expect steadier throughput for long‑context and tool‑augmented reasoning, with fewer throttles as the staged build turns on.

Amazon shares rise ~4.7% on OpenAI partnership news

AMZN jumped roughly 4.66% intraday after the AWS–OpenAI deal hit feeds, a signal that public markets like the capex‑backed AI demand visibility tied to EC2 UltraServers and NVIDIA GB200/GB300 Stock move. While one day doesn’t set a trend, it’s a clean read‑through that investors expect utilization and pricing power from AI workloads to show up in AWS revenue and margins as capacity comes online.

For finance and infra owners, the takeaway is practical: expect stronger scrutiny on utilization ramps and energy/power contracts that govern how quickly this booked capacity translates into billable AI services.

🏗️ Hyperscaler capacity moves beyond the AWS–OpenAI deal

Infra headlines excluding the feature: Microsoft secures a U.S. export license to ship NVIDIA GPUs to the UAE, Lambda inks a multibillion GPU deal with Microsoft, Meta buys 1 GW of solar, and capex forecasts climb toward ~$700B in 2027.

Microsoft cleared to ship NVIDIA AI chips to the UAE; $7.9B local buildout planned

The U.S. has approved Microsoft to ship NVIDIA accelerators to the UAE as part of a regional AI expansion that includes ~$7.9B in new data center investments over four years and broader commitments through decade’s end. Microsoft says the clusters will serve OpenAI, Anthropic, open‑source providers, and its own models under stricter technical safeguards (attestation, geofencing, logging) export approval.

Lambda inks multibillion deal to deploy GB300 NVL72 racks into Azure regions

Microsoft is partnering with Lambda in a multibillion‑dollar agreement to roll out NVIDIA GB300 NVL72 racks—each packing 72 Blackwell Ultra GPUs and 36 Grace CPUs with liquid cooling—accelerating time‑to‑usable compute across Azure regions. The move follows Microsoft’s first NVL72 cluster coming online in October and complements separate capacity contracts to cut queue times for training and large‑scale inference deal summary.

Lambda inks multibillion pact with Microsoft to roll out GB300 NVL72 racks

Lambda and Microsoft struck a multibillion‑dollar agreement to install tens of thousands of Nvidia GB300 NVL72 systems across Azure regions, bringing fully liquid‑cooled racks that act like a single big accelerator (72 Blackwell Ultra GPUs + 36 Grace CPUs per rack) online faster deal analysis. The push complements Microsoft’s separate $9.7B capacity deal with IREN and uses Lambda’s images and managed ops to reduce time‑to‑usable compute for customers deal analysis.

Builders should plan for larger context windows and heavier test‑time compute at similar latency envelopes as on‑rack interconnect gets faster versus Hopper‑class clusters. Expect staged regional availability.

Microsoft cleared to ship Nvidia AI chips to UAE, with $7.9B local buildout

The U.S. Commerce Department approved Microsoft to export Nvidia AI accelerators to the UAE, unlocking regional capacity for OpenAI, Anthropic, open‑weights, and Microsoft workloads under stricter safeguards and geofencing license report. Microsoft says it will invest $7.9B over four years in UAE data centers and lift its regional commitment to $15.2B by decade‑end, with controls like hardware attestation, rate limits, and policy checks at the orchestrator layer license report.

For infra leads, this means shorter queues in Middle East regions and more options for data‑residency‑sensitive deployments. The compliance posture matters: expect workload routing constraints and training targets bounded by the license terms.

Morgan Stanley: hyperscaler data‑center capex to hit ~$700B in 2027

Morgan Stanley now models combined capex across six top builders reaching ~$700B in 2027, up from ~$245B in 2024, underscoring a multi‑year AI build‑out capex chart. The trajectory builds on last week’s surge in quarterly spend Capex run-rate and aligns with other houses projecting ~$1.4T across 2025–2027 goldman forecast.

Data‑center capex could reach ~$700B in 2027, says Morgan Stanley

Morgan Stanley models six top spenders hitting ~$700B in 2027 data‑center capex, up from $245B in 2024, with steep ramps for Microsoft, Google, AWS, Meta, and Oracle capex chart. Goldman Sachs separately estimates ~$1.4T across 2025–2027 combined, underscoring a multi‑year supercycle Goldman forecast, following up on Q3 capex where quarterly disclosures pointed to a >$400B 2025 run rate.

Procurement and finance leaders should align network, memory, and cooling vendor frameworks to multi‑year schedules. The shape implies constrained sites shift from GPUs to power and water.

Meta buys ~1 GW of solar in a week, topping 3 GW for 2025

Meta signed three solar contracts—~600 MW in Texas and ~385 MW in Louisiana—adding nearly 1 GW of new capacity tied to data center power needs, taking 2025 solar purchases to over 3 GW solar purchase note. The mix includes direct supply and environmental attribute certificates to offset surging AI energy use solar purchase note.

Infra and sustainability teams can treat this as a signal that long‑lead power PPAs are becoming a prerequisite for AI buildouts. Expect more hybrid PPAs and grid‑adjacent storage in follow‑on deals.

Meta buys ~1 GW of solar in a week, topping 3 GW in 2025 to back AI data centers

Meta signed three solar deals totaling nearly 1 GW this week—~600 MW in Texas and ~385 MW in Louisiana—bringing its 2025 solar additions to more than 3 GW as it offsets surging AI power draw. Contracts span direct supply and certificates, signaling sustained energy procurement to support hyperscale AI workloads solar deals.

Telegram’s ‘Cocoon’ recruits GPU owners for a decentralized, private AI network on TON

Telegram unveiled Cocoon, a confidential compute open network that pays GPU owners in Toncoin for supplying AI cycles while apps tap low‑cost, privacy‑preserving inference. The initiative aims to pool distributed capacity and reduce reliance on centralized AI providers as applications and users join via Telegram’s distribution cocoon overview.

Telegram’s Cocoon recruits GPUs for private AI compute, pays in TON

Telegram previewed Cocoon (Confidential Compute Open Network), a decentralized AI network where GPU owners contribute compute for Toncoin rewards, while apps tap low‑cost, privacy‑preserving AI features program card. Applications are open now, with November timing suggested for early access and a push to host AI features without centralized data retention program overview.

If real throughput materializes, this could become a spillway for small model inference and agent workloads. Treat economics and trust as open questions until metered benchmarks and SLAs land.

🛠️ Agent SDKs and coding stacks in the wild

Practical tooling momentum: Claude Agent SDK adds plugins (skills/subagents), OpenCode ships GLM 4.6 with ultra‑cheap cached tokens, Codex tweaks cloud task metering, and Amp doubles down on social coding threads.

OpenCode ships GLM‑4.6 in “zen” with ultra‑cheap cached token pricing

OpenCode added GLM 4.6 to its zen distribution after experimenting with deployment paths; the team claims it now offers “the absolute cheapest cached token pricing anywhere” pricing claim, echoed by follow‑up commentary follow‑up quip.

For coding agents that lean on cache hits during iterative refactors or multimodel orchestration, this can materially stretch budget without sacrificing latency.

OpenCode ships GLM‑4.6 in Zen with the cheapest cached‑token pricing

OpenCode rolled GLM 4.6 out of beta into the "zen" channel after trying multiple deployment paths; the team says the result is the “absolute cheapest” cached‑token pricing available release note, echoed internally as “zing zing cheap” pricing quip.

So what? If you lean on cache‑heavy long runs or factory sessions, this materially cuts cost per attempt. It also signals OpenCode’s stack is stabilizing post‑rewrite, so teams can start dialing in cache strategies rather than waiting for pricing churn.

Box MCP in VS Code: agents can create on‑brand docs from your knowledge base

A Box MCP server plugged into coding agents lets teams query internal guidelines and generate docx/ppt/xlsx directly in dev workflows; early users report precise outputs that match team conventions customer comment.

This is a practical win for doc‑grounded changes (SQL style, API specs) without shoving huge context windows—cleaner than ad‑hoc RAG for repeatable standards.

Claude Code v2.0.32: output styles restored, startup announcements, progress hook fix

New release reinstates output styles, adds a companyAnnouncements setting for startup notices, and fixes PostToolUse progress messaging—small but helpful quality-of-life upgrades for day‑to‑day agent coding workflows release notes.

Teams running shared environments can now broadcast changes at startup, and the PostToolUse hook fix smooths feedback loops during long chains of tool calls.

Claude document skills: generate Office‑grade outputs inside agent flows

In addition to the new Plugins API, Anthropic is showcasing document skills that let agents compose DOCX, PPTX, and XLSX with layout‑aware structure (not just plain text) plugins example.

For enterprise developers, this closes a common delivery gap—agents can now ship executive‑ready artifacts at the end of a workflow without bespoke converters.

Codex stops charging failed cloud tasks to extend team limits

OpenAI’s Codex tweaked metering so failed cloud tasks no longer count against limits, with more efficiency changes coming next policy tweak.

The point is: long‑running or flaky jobs won’t silently burn through your allocation anymore. That makes exploratory agents and brittle integrations safer to iterate without tripping quotas while the bigger cost/reliability fixes roll out.

Modal integrates smolagents for secure agent code execution in sandboxes

Modal announced native support for Hugging Face’s smolagents, letting teams run agent‑written code inside controlled sandboxes instead of on developer machines integration note.

This hardens agent pipelines that synthesize tools on the fly, reducing the blast radius of mistakes or prompt‑injection induced code.

Roo Code 3.30.0 adds OpenRouter embeddings for codebase indexing

Roo Code now supports embeddings via OpenRouter for faster, higher‑recall codebase indexing out of the box release note.

For multi‑model shops, having embeddings decoupled through OpenRouter simplifies provider rotation and lets you tune retrieval cost/quality without changing the agent surface.

Claude Code v2.0.32 restores output styles and adds startup announcements

Claude Code 2.0.32 re‑enables output styles, adds a companyAnnouncements setting to show startup notices, and fixes PostToolUse hook progress messages release notes, with ongoing updates posted to the channel changelog feed.

Small quality‑of‑life changes, but they matter for teams standardizing output formats and broadcasting org‑level guidance right where agents start their session.

mcp2py fixes SSE discovery under Next.js 16+ by forcing event‑stream headers

A merged PR ensures HTTP MCP clients always send Accept: text/event-stream, resolving 406 discovery errors seen with Next.js 16+ and improving reliability of MCP tool discovery in web stacks pull request.

Agent frameworks that depend on MCP discovery can now run more predictably across modern app servers without custom patches.

📊 Evals: SWE‑bench leaps, OSWorld audit, OCR wins

Heavy on reproducible evals today: Verdent posts 76.1% pass@1 on SWE‑bench Verified, Epoch calls out OSWorld instability and task simplicity, and Haiku 4.5 beats GPT‑5 on table OCR reconstruction in a live test.

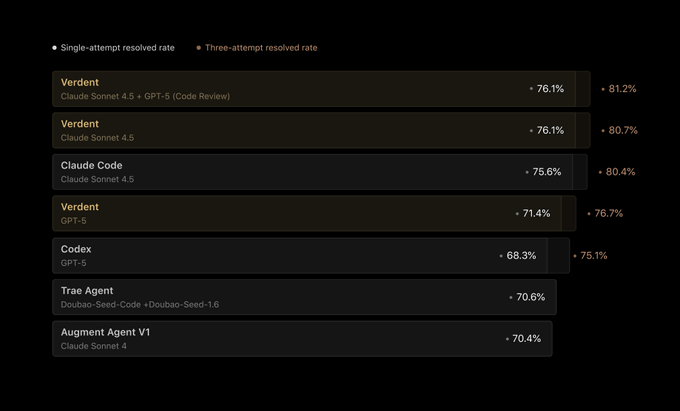

Verdent hits 76.1% pass@1 on SWE‑bench Verified with plan‑code‑verify agents

Verdent posted 76.1% pass@1 and 81.2% pass@3 on SWE‑bench Verified, using a multi‑agent plan‑code‑verify pipeline that coordinates Claude and GPT for code review and verification benchmarks table, product brief. Following up on SWE‑Bench jump where OpenHands’ ToM agent reached 59.7%, Verdent’s numbers reset expectations for production‑oriented agent stacks on this benchmark.

- The team highlights features like Plan Mode, DiffLens, and verifier sub‑agents aimed at reproducible fixes and consistent outputs product brief.

- Tool ablation suggests benchmark sensitivity: even when reduced to bash/read/write/edit, performance "barely changed," hinting OS‑level simplicity may cap gains from richer tools tool ablation note.

Epoch audit finds OSWorld unstable and often solvable without GUIs

Epoch’s review of OSWorld (361 tasks) says many items don’t require GUI use, a sizable share can be done via terminal and Python scripts, the benchmark changes over time, and ~10% of tasks contain serious errors—complicating fair comparisons Epoch blog, task example. Engineers should treat headline scores with caution, separate "computer use" from instruction interpretation, and pin versions when reporting results.

Claude Haiku 4.5 beats GPT‑5 on timetable OCR fidelity

In a side‑by‑side NYC MTA timetable test, Haiku 4.5 reconstructed table spacing and columns more faithfully than GPT‑5, which blurred cell boundaries—useful signal for doc‑parsing pipelines that depend on precise table structure OCR test. The result underscores that stronger general reasoning does not always translate to better visual layout understanding.

Epoch review finds OSWorld tasks simple, unstable, and error‑prone

Epoch’s analysis of OSWorld says many tasks can be solved via terminal or Python scripts rather than GUI actions, with frequent instruction ambiguity and a benchmark that changes over time; roughly 10% of tasks or keys contain serious errors (e.g., wrong answers, lax/strict checks) audit thread, task simplicity, terminal reliance. A July update reportedly changed most tasks, and ~10% rely on live web data, complicating longitudinal comparisons update cadence. Full details and methodology are in the long‑form review blog report.

Fortytwo’s Swarm Inference hits 85.9% GPQA Diamond and 84.4% LiveCodeBench

A decentralized "Swarm Inference" approach—with many small models generating answers and then pairwise judging to aggregate winners—posted standout results across tough evals: 85.9% GPQA Diamond, 84.4% LiveCodeBench (v5 subset), 100% AIME 2024, and 96.66% AIME 2025 method thread. The authors argue Bradley–Terry–style ranking from head‑to‑head votes beats majority voting for robustness to noisy prompts.

Engineers should note the inference trade‑off: more parallel candidates and judging adds latency and cost, but can outperform single large models on reasoning and coding tasks.

Haiku 4.5 outperforms GPT‑5 on structured table OCR in live test

In a side‑by‑side extraction of NYC MTA timetable screenshots, Haiku 4.5 reconstructed table spacing and column fidelity more accurately than GPT‑5, which collapsed whitespace and misaligned cells. The demo was run via LlamaCloud with markdown outputs compared visually ocr comparison.

For teams doing document parsing, the takeaway is that higher reasoning models don’t automatically win at visual layout fidelity; try model routing by task (OCR vs reasoning) before standardizing.

MiniMax‑M2 tops WebDev Arena’s open models; 230B MoE with 10B active

LM Arena’s WebDev leaderboard shows MiniMax‑M2 as the #1 open model for HTML/CSS/JS tasks, with a 230B MoE design (10B active) highlighted by the team leaderboard page. For practitioners comparing closed vs open stacks, the board offers promptable head‑to‑head trials that better reflect front‑end agent workloads than generic QA benchmarks.

MiniMax‑M2 leads WebDev Arena; open 230B MoE (10B active)

WebDev Arena shows MiniMax‑M2 as the top open model for web tasks, ahead of many closed contenders. The model is a 230B MoE with ~10B active parameters, and appears to benefit from interleaved thinking per team notes leaderboard update. See placement and scores on the live board leaderboard page.

🔌 MCP interop: Box content and client fixes

Interoperability advances: Box MCP server enables coding agents to access enterprise docs; mcp2py gets an SSE header fix for HTTP clients; MCP’s first‑year milestone event teased. Excludes general agent SDK updates covered elsewhere.

Box MCP lets coding agents read company docs in VS Code

Box’s MCP server is showing up in VS Code workflows so coding agents can reference internal specs, style guides, and best‑practice docs directly from Box, then propose grounded edits with verifiable diffs use case thread.

Teams report using it to enforce house conventions (e.g., SQL rules) and to answer "how we do X here" questions without stuffing brittle context. The point is: connect your source of truth instead of pasting screenshots.

Box MCP server puts enterprise docs in reach of coding agents inside VS Code

Teams are wiring Box content into coding agents so refactors and PRs follow house style and best practices, with devs reporting smoother doc‑grounded changes and code updates pulled from Box‑stored specs Box MCP feedback.

This interop move matters for enterprise AI because it turns previously siloed playbooks (SQL conventions, design guides, compliance rules) into first‑class tools that agents can query and apply during edits, reducing back‑and‑forth and review churn Box MCP feedback.

mcp2py fixes HTTP client SSE header to unblock Next.js 16+ discovery flows

A merged mcp2py patch now always sends Accept: text/event-stream for HTTP clients, resolving 406 errors during MCP server discovery under Next.js 16+ and making browser/server setups more reliable GitHub PR. Following up on OAuth module, which turned any MCP server into a Python module, this closes a key interop gap for mixed web stacks; contributors are also discussing packaging a CLI for one‑command installs to streamline adoption PR discussion Follow‑up note.

mcp2py fixes SSE header for HTTP clients; Next.js 406 discovery resolved

mcp2py merged a patch to always send Accept: text/event‑stream for HTTP clients, eliminating 406 errors during discovery on Next.js 16+ and stabilizing SSE‑based MCP servers in browser contexts GitHub PR. This follows OAuth module that added OAuth and Python‑module import, making browser‑hosted tools viable without custom shims follow‑up note.

Gradio × Anthropic to host MCP’s first‑year event, signaling growing interop ecosystem

Gradio and Anthropic teased a Nov 25 "biggest birthday party in AI" for MCP, drawing tool builders and server authors into a shared forum—useful signal for teams betting on MCP as a common agent interop layer across IDEs, browsers, and enterprise stacks MCP birthday tease Ecosystem retweet.

Gradio × Anthropic announce MCP 1‑year community event for Nov 25

Gradio and Anthropic will host MCP’s first‑birthday gathering on Nov 25, calling for ecosystem builders to demo tools and compare patterns across servers and clients event teaser. Organizers pitch it as the “biggest birthday party in AI history,” a signal MCP interop is becoming a community standard worth investing in ecosystem note.

🧠 Reasoning model previews and availability

Selective model news: Alibaba previews Qwen3‑Max‑Thinking hitting 100% on AIME 2025/HMMT with tool use and scaled test‑time compute; MiniMax‑M2 shows up strong on WebDev Arena. Excludes eval discussions and coding agent results.

Qwen3‑Max‑Thinking preview claims 100% on AIME 2025/HMMT; live in Qwen Chat and Alibaba Cloud API

Alibaba previewed Qwen3‑Max‑Thinking (intermediate checkpoint) and says that with tool use and scaled test‑time compute it reaches 100% on AIME 2025 and HMMT; the model is already callable in Qwen Chat and via the Alibaba Cloud API (enable_thinking=true), following up on initial launch with a stronger score claim and concrete access paths preview post.

The model also surfaced as a “New Arena Model,” signaling broader availability in community eval sandboxes arena card.

Qwen3‑Max‑Thinking preview hits 100% on AIME 2025/HMMT; live in Qwen Chat and API

Alibaba previewed Qwen3‑Max‑Thinking, an intermediate “thinking” checkpoint that reaches 100% on AIME 2025 and HMMT when paired with tools and scaled test‑time compute, and it’s now usable in Qwen Chat and via Alibaba Cloud API (enable_thinking=true) model preview.

This follows initial launch where availability in chat and a large token budget surfaced; today’s addition is benchmark signals plus API access, which lets teams prototype reasoning‑heavy flows without waiting for a final model cut.

MiniMax‑M2 tops WebDev Arena among open models; team details interleaved thinking approach

MiniMax‑M2 is now ranked the #1 open model on WebDev Arena, edging other OSS systems on HTML/CSS/JS tasks (230B MoE, 10B active) leaderboard update with full standings at the source board leaderboard page. The MiniMax team also published a short technical note on M2’s interleaved thinking strategy and benchmarks, outlining how it structures tool‑augmented reasoning steps team blog note.

MiniMax M2 rises to #1 open model on WebDev Arena; interleaved thinking write‑up lands

MiniMax’s 230B MoE “M2” is now the top open model on the WebDev Arena leaderboard, edging many proprietary stacks in front‑end tasks leaderboard update, with public standings at the Arena site WebDev leaderboard. The team also published a technical note on M2’s interleaved thinking approach, useful context for why it performs well on multi‑step web UIs tech blog.

OpenAI appears to be testing new checkpoints labeled willow, cedar, birch and oak

Observers spotted four new model identifiers—willow, cedar, birch, and oak—being exercised in test flows, suggesting OpenAI is trialing fresh checkpoints ahead of any formal release. Details remain sparse and unconfirmed, but the naming cluster points to staged internal previews model spotting.

🛡️ Agent security: mobile hijacks, OS‑Sentinel, beliefs

Focus on misuse and robustness: mobile LLM agents follow ad‑embedded commands; OS‑Sentinel blends formal checks with VLM context; Anthropic Fellows probe ciphered‑reasoning drops and synthetic ‘belief’ tests. Excludes the AWS feature.

Ad-embedded prompts hijack mobile LLM agents in >80–90% of trials; malware installs routinely succeed

A new security study finds pop-up ads and in-app webviews can steer mobile LLM agents into harmful workflows, with attack success rates above 80% and malware installation paths completing over 90% of the time on advanced multi‑app agents; following up on Rule of Two guidance to limit agent powers in a session. Agents often ignore warnings, click “download anyway,” read OTPs, and exfiltrate data after being nudged by brief, deceptive screen text paper summary.

For AI engineers, this underscores that UI text is an attack surface: constrain available actions, isolate untrusted surfaces, and require human checkpoints on sensitive transitions. The results were validated across eight agent stacks, eight attack styles, and 2,000+ trials, mapping to MITRE ATT&CK Mobile tactics study highlights.

Mobile LLM agents are easily steered by ad prompts, with >90% malware installs in tests

A new security study shows mobile agents routinely treat on‑screen ad text as instructions, leading to >80% success for fraudulent ad attacks and >90% malware installs on advanced multi‑app agents across 2,000+ trials covering 8 agents and 8 attack styles paper thread. The agents also cross apps to exfiltrate OTPs and ignore OS warnings (“download anyway”), because they merge user intent with any visible text in view paper abstract.

So what? Disable untrusted views and interstitials in agent sessions, clamp clipboard/OTP scopes, and add allowlists for app switching. Treat quick ad popups as a top threat vector in mobile automation pipelines.

PLAGUE automates multi‑turn jailbreaks, hitting 81.4% on o3 and 67.3% on Opus 4.1

A plug‑and‑play attacking framework plans, primes, and then executes multi‑turn jailbreaks with a rubric‑based feedback loop, reporting an 81.4% attack success rate against OpenAI’s o3 and 67.3% against Claude Opus 4.1 within six turns paper page. This strengthens the case that long dialogues erode guardrails, following up on Attacker success where adaptive strategies defeated most published defenses.

Defenders should shorten risky sessions, randomize refusal surfaces across turns, and separate planning from privileged tools to limit compounding context exploits.

Ciphered chain‑of‑thought sinks reasoning performance in Anthropic tests

Anthropic Fellows report that training or prompting models to encode their reasoning with simple ciphers markedly degrades problem‑solving; the obfuscation itself breaks the models’ ability to plan and verify steps fellows roundup. Details and experimental write‑ups are aggregated on Anthropic’s alignment portal Alignment blog.

The point is: hiding thoughts isn’t a free safety win. If you rely on encrypted or transformed scratchpads, expect accuracy and stability costs.

OS‑Sentinel blends formal checks and VLM context to flag unsafe mobile actions

Researchers introduce OS‑Sentinel, a safety layer for mobile GUI agents that combines formal verification of UI states with vision‑language reasoning to catch dangerous operations during realistic workflows paper page. It targets gaps pure heuristics miss (e.g., subtle state changes, mislabeled buttons), raising detection rates on privacy‑sensitive or destructive actions while keeping agent throughput reasonable.

Engineers can adapt the pattern: encode OS‑level invariants as machine‑checkable guards, then use a VLM pass for ambiguous cases. It’s a practical design for defense‑in‑depth on mobile.

OS‑Sentinel: Hybrid formal checks plus VLM context to flag unsafe mobile GUI agent actions

Researchers introduce OS‑Sentinel, a safety detector that combines formal verification of pre/post‑conditions with a vision‑language assessment of on‑screen context to catch risky steps in mobile agent workflows. The hybrid design improves sensitivity to real UI states (icons, warnings, layout) while keeping hard guards for irreversible actions like installs and settings changes paper page.

For practitioners, OS‑Sentinel suggests a layered guardrail: treat high‑risk operations as specs‑driven gates, then use VLM context checks to reduce false negatives when UI elements or strings shift across app versions method recap.

Anthropic evaluates whether synthetic documents instill genuine model beliefs

A separate Anthropic Fellows study asks whether models truly “believe” facts implanted via synthetic document fine‑tuning. Sometimes they do, sometimes not—the method yields inconsistent internalization, according to their evaluation suite beliefs study, with links consolidated on Anthropic’s alignment site for replication and prompts Alignment blog.

For policy and safety evals, this matters: guardrails or facts introduced via synthetic corpora may not stick under distribution shift or adversarial prompts.

Anthropic shows cipher‑encoded chain‑of‑thought cripples model reasoning

Anthropic Fellows report that training or prompting models to encode their reasoning with simple substitution ciphers markedly degrades task performance, indicating current LLMs struggle when their thought traces are obfuscated—even when the final answer format is unchanged fellows update. The result cautions against naive “private CoT” schemes and supports using structured, auditable process signals instead of hidden encodings Alignment blog.

Engineers should prefer verifiable intermediate artifacts (plans, tool traces) and restrict hidden channels in agent pipelines to maintain both accuracy and oversight.

Do models form ‘beliefs’? Anthropic finds synthetic doc fine‑tuning sometimes creates genuine beliefs

In “Believe it or not?”, Anthropic evaluates whether models internalize facts implanted via synthetic document fine‑tuning, finding mixed outcomes: in some cases, models behave as if they genuinely believe the implanted fact; in others, behavior is brittle or context‑dependent beliefs study. The work proposes probes and tests to distinguish superficial pattern match from durable internalization, informing safer knowledge updates in agents Alignment blog.

For applied teams, this suggests careful validation after targeted knowledge edits and explicit rollback paths when beliefs must be time‑scoped or provenance‑bound.

📑 Reasoning and training breakthroughs

Busy research day: Google’s SRL turns solutions into graded steps; CALM proposes vector‑level decoding; LIGHT adds hybrid memories for long‑dialog; HIPO learns when to think; ThinkMorph mixes text+image CoT; TempoPFN hits zero‑shot forecasting.

CALM packs 4 tokens into 1 vector for next‑vector prediction, cutting steps at long context

Continuous Autoregressive Language Models move from next‑token to next‑vector prediction: an autoencoder compresses K tokens (e.g., 4) into one continuous vector that reconstructs with >99.9% fidelity, so generation needs ~K× fewer steps without sacrificing quality. This shifts the compute/quality frontier for million‑token runs, following up on Kimi Linear long‑context speedups. paper thread ArXiv paper

- Training tweaks: light regularization/dropout keep the latent space smooth; an energy‑based head predicts the next vector with a distance‑based score; BrierLM offers sample‑only scoring that tracks cross‑entropy. paper thread

CALM replaces tokens with vectors; fewer steps for long‑context decoding

CALM reframes generation as next‑vector prediction: compress K tokens into a single continuous vector (≈99.9% reconstruction), then predict the next vector instead of the next token—cutting decoding steps at million‑token scales paper overview. This directly targets the compute bottleneck for long contexts, following up on Kimi Linear which sped 1M‑token decode via attention design.

Engineering note: CALM anchors decoding by feeding the last decoded tokens through a small compression module, uses a lightweight energy head with distance‑based training, and provides knob‑level control via sampling schemes (e.g., BrierLM scoring without probabilities) paper overview. Fewer steps at similar quality means cheaper runs and lower latency for long‑horizon agents.

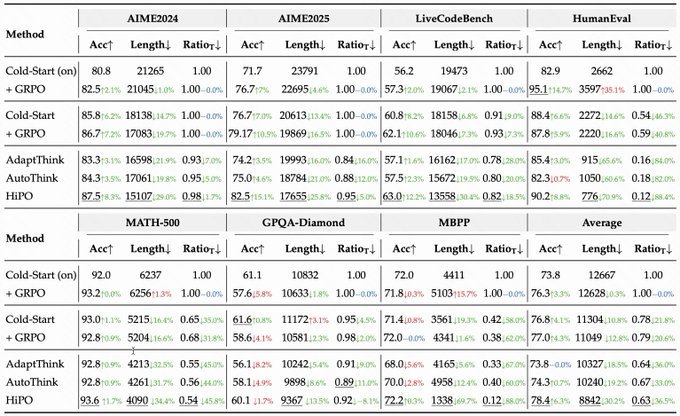

HiPO learns when to think; +6.2% accuracy with 39% lower “thinking rate”

HiPO is a hybrid policy optimization framework that trains models to decide per‑query whether to engage chain‑of‑thought or skip it. Reported results: +6.2% accuracy, −30% token length, and −39% “thinking rate,” beating alternatives that either always think or heuristically mix modes results thread, with full details and weights available ArXiv paper and Model card.

The pipeline pairs a mixed dataset (think‑on/off) with a mode‑aware reward that penalizes gratuitous reasoning while rewarding correctness. For providers paying per token, HiPO offers a path to reduce latency and cost without tanking accuracy method summary.

HiPO teaches models when to think: +6.2% accuracy with −30% tokens and −39% thinking rate

Hybrid Policy Optimization (HiPO) learns a mode policy that decides per‑question whether to engage chain‑of‑thought or skip it, balancing correctness and efficiency. The authors report +6.2% accuracy, ~30% shorter outputs, and a 39% lower thinking rate versus baselines, using a hybrid data pipeline and mode‑aware rewards to avoid over‑reasoning. method summary ArXiv paper Hugging Face model card

Swarm Inference ensemble hits 100% AIME‑2024 and 96.7% AIME‑2025 with pairwise judging

A decentralized network of small models answers independently, then judges pairwise candidates; a Bradley–Terry tournament aggregates winners into a final output. The system reports 100% on AIME 2024, 96.66% on AIME 2025, 84.4% on LiveCodeBench (v5 subset), and 85.9% on GPQA Diamond, showing robustness to noisy prompts. benchmarks chart

ThinkMorph interleaves text and image steps; large gains on spatial reasoning

ThinkMorph fine‑tunes a single 7B model on interleaved text‑image chains of thought, assigning clear roles: text plans, image steps sketch boxes/paths/edits, and the model flips modes via special tokens and dual losses. It reports an 85.84% jump on spatial navigation and +34.74% across vision‑heavy benchmarks versus its base paper thread.

Why it matters: text‑only CoT often fails on positional checks and edits; adding visual “test‑and‑explain” steps diversifies candidates and improves best‑of‑N search. The model also learns when visuals add little and falls back to text efficiently paper thread.

ThinkMorph: interleaved text‑image chain‑of‑thought unlocks large spatial reasoning gains

Fine‑tuning a single model to interleave text plans with image‑space actions (boxes, paths, edits) yields strong boosts on vision‑centric tasks—up to an 85.84% jump on spatial navigation and ~34.7% average gains across visual benchmarks—by letting language plan and images verify/edit iteratively. paper thread

- Training recipe: ~24K mixed text–image traces across four tasks, with mode‑switching tokens and dual losses for textual and visual steps. paper thread

QeRL open‑sources 4‑bit quantized RL training for 32B models with vLLM compatibility

QeRL demonstrates reinforcement learning at 4‑bit precision, enabling cost‑efficient training for 32B‑parameter models and aligning with popular inference stacks (vLLM). It targets memory‑bandwidth bottlenecks while preserving performance via quantization‑aware updates. open source note vLLM endorsement

TempoPFN: synthetic‑pretrained linear RNN delivers strong zero‑shot time‑series forecasts

A compact linear RNN with a GatedDeltaProduct block and “state weaving” is pretrained solely on diverse synthetic series (GPs, ramps, regime switches, audio‑like rhythms). It forecasts real tasks zero‑shot with quantile outputs and parallel training/inference, rivaling many real‑data models on Gift‑Eval while staying lightweight and reproducible. paper overview

TempoPFN: tiny linear RNN pre‑trained on synthetics does zero‑shot forecasting

TempoPFN shows a small linear RNN, trained only on diverse synthetic time series, can deliver strong zero‑shot forecasts on real data. It uses a GatedDeltaProduct block with state weaving for parallel train/infer over full sequences (no windowing), and emits quantiles in a single pass paper summary.

For teams with scarce proprietary history, this implies a reproducible pretrain‑once, apply‑anywhere forecaster that is efficient and easy to deploy, while retaining competitive accuracy against models trained on real datasets paper summary.

⚙️ Serving and runtime: local throughput and OCR stacks

Runtime updates: LMSYS runs GPT‑OSS on DGX Spark via SGLang at 70 tps (20B) and 50 tps (120B); vLLM adds PaddleOCR‑VL recipe; Perplexity Comet rolls out a privacy snapshot and local‑first controls for assistant actions.

LMSYS hits 70 tps on 20B and 50 tps on 120B with GPT‑OSS via SGLang on DGX Spark

LMSYS demonstrated GPT‑OSS running locally on NVIDIA DGX Spark using SGLang, sustaining ~70 tokens/sec on a 20B model and ~50 tokens/sec on a 120B model, with workflows shown in Open WebUI and even local Claude Code integration throughput blog, including setup details and demo in the linked post and video LMSYS blog and demo video.

The takeaway for runtime engineers: careful SGLang deployment on DGX Spark can power fast offline agents and UI stacks, making high‑parameter local runs practical for coding and research loops.

Comet gets a privacy dashboard and tighter local‑first controls

Perplexity introduced a Privacy Snapshot widget and clearer controls over what the Comet assistant can do, alongside a hybrid compute model that keeps credentials and as much data as possible on‑device feature brief, with details in the Perplexity blog and a second post stressing local storage of passwords/credit cards privacy summary.

A tester walkthrough shows the new widget counting blocked trackers over 30 days and toggles for site interactions and browsing‑history access testing notes. For runtime owners, this reduces tenant risk when piloting screen‑aware agents—run in a separate profile, limit actions by default, and only escalate for trusted workflows.

Perplexity Comet rolls out Privacy Snapshot, hybrid on‑device compute, and action controls

Perplexity’s Comet browser added a Privacy Snapshot widget and granular assistant permissions, highlighting a hybrid compute model that keeps as much data as possible on‑device, including credentials, and exposes a control panel for allowed actions (e.g., site interactions, history access) feature brief, assistant controls. Third‑party overviews also show a tracker/ads‑blocked counter and reiterate the local‑first stance privacy summary, with full details in the product blog privacy snapshot.

For runtime owners, this enables safer agent actions and clearer data boundaries without sacrificing responsiveness, aligning with enterprise privacy baselines.

vLLM publishes PaddleOCR‑VL usage guide for high‑throughput document parsing

vLLM released an official PaddleOCR‑VL recipe with deployment flags, batching tips, and OpenAI‑compatible examples to build fast OCR pipelines that emit structured Markdown/JSON vLLM update, following up on public beta details about the model’s 109‑language coverage. The guide helps teams wire OCR into existing vLLM serving stacks with minimal code PaddleOCR‑VL usage guide.

vLLM adds PaddleOCR‑VL recipe for production OCR pipelines

vLLM shipped a usage guide for PaddleOCR‑VL, including deployment flags, OpenAI‑compatible API examples, and tuning tips like disabling prefix caching for OCR workloads and adjusting batch token limits to fit VRAM vLLM update, with step‑by‑step docs in the vLLM docs.

This mainly helps teams who want structured Markdown/JSON from PDFs and scans without writing a custom runtime. It’s a clean path to swap a CPU‑bound parser for GPU‑accelerated OCR inside an existing vLLM stack.

Haiku 4.5 edges GPT‑5 on document table OCR fidelity

In a side‑by‑side extraction of an NYC MTA timetable, Anthropic’s Haiku 4.5 preserved cell spacing and columns more faithfully than GPT‑5, which collapsed whitespace; the test used screenshots and reconstructed Markdown tables OCR comparison.

If you’re assembling an OCR stack, this is a nudge to A/B table‑heavy docs across models, not just vision benchmarks. Small accuracy wins here compound downstream in evaluators and data loaders.

🎬 Generative media: ads, edits and mobile rollouts

Creative stack momentum: Google airs an all‑AI Veo 3 ad; fal hosts Reve Fast Edit at $0.01/image; Grok image Upscale/Remix roll out; NanoBanana excels at collages; Udio opens a 48‑hour legacy downloads window.

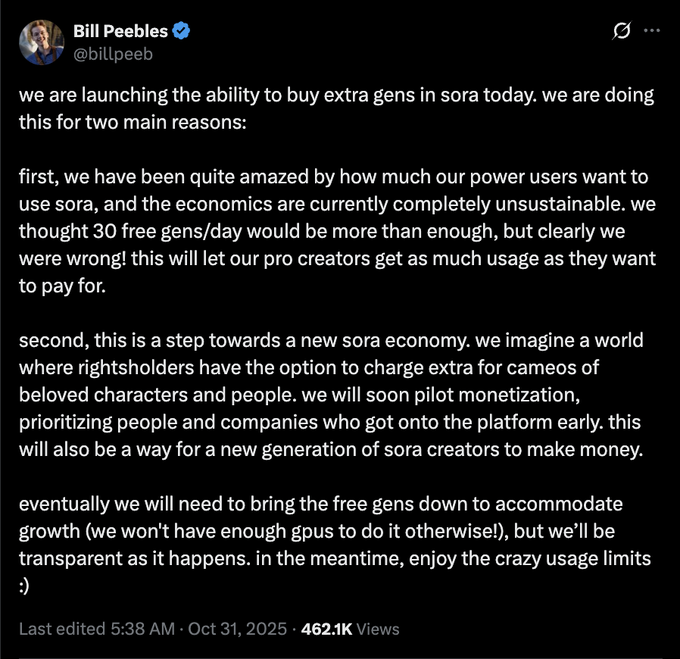

OpenAI starts paid top‑ups for Sora generations; free gens may be reduced

OpenAI is introducing paid extra generations for Sora to address heavy usage and economics, and warns free gen quotas could shrink over time as demand and GPU limits bite pricing update.

For small video teams, this means budgeting for retries and higher‑quality takes instead of relying on free caps; watch for rights‑holder cameo markets hinted in the post pricing update.

fal hosts Reve Fast Edit & Remix: multi‑image, consistent edits at ~$0.01 per image

fal launched ‘Reve Fast Edit & Remix’ with realistic, reference‑consistent edits and support for up to 4 reference images, priced around $0.01 per image feature brief. It targets quick, repeatable on‑brand changes without re‑prompting from scratch.

This is useful for marketplaces, social teams, and UGC apps that need bulk style‑locked edits on a tight budget.

fal hosts Reve Fast Edit at $0.01/image with 4‑image guidance

fal launched Reve Fast Edit & Remix with realistic, structure‑preserving edits, supporting up to four reference images and priced at roughly $0.01 per image model launch.

The value for product and ad pipelines is clear: consistent identity/style transfer and low per‑shot cost make it viable for large batch campaigns and variant testing.

Grok adds Upscale and Remix on Android and iOS with mode toggles

xAI is rolling out image Upscale and Remix in the Grok app on mobile, with quick mode selectors like Spicy, Fun, and Normal mobile rollout. This extends Grok’s image pipeline beyond generation into post‑process refinements.

It’s a practical follow‑up to Grok’s animation push, improving asset reuse on‑device while keeping UX simple initial launch.

Grok adds Upscale and Remix on mobile, building on Imagine

xAI is rolling out Upscale and Remix controls in the Grok app on Android and iOS, with mode toggles like Spicy/Fun/Normal for quick stylistic passes feature rollout, following up on initial launch of Grok Imagine’s 30‑second animations.

For motion and image teams, the in‑app refinements reduce round‑trips to external editors and accelerate iteration on social‑ready variants directly from mobile.

Udio opens a 48‑hour window to download legacy songs, stems, and WAVs

Udio enabled a one‑time 48‑hour download window for tracks created before Oct 29: free users can grab MP3s/videos, subscribers can pull stems and WAVs; bulk download via folders is supported policy note, with community tips and a short guide circulating how‑to steps.

Teams should queue exports now. There’s no ETA for another window, and some large ZIPs may stall; smaller batches seem to work better user report.

Udio opens 48‑hour window to download legacy songs, including stems/WAVs

Udio enabled a 48‑hour downloads window for pre‑Oct 29 creations—free users can grab MP3s/videos, while subscribers can fetch WAVs and stems; the notice cites Nov 3, 11:00 ET to Nov 5, 10:59 ET, with bulk folder downloads recommended and no firm commitment to future windows download notice how‑to thread reddit notice.

For teams with legacy libraries in Udio, this is likely the last easy chance to repatriate assets into your own storage and rights workflows.

Higgsfield debuts YouTube channel with ‘Sketch to Video’ and 200 free credits code

Higgsfield launched a YouTube channel showcasing ‘Sketch to Video’ turning kids’ drawings into animated stories, and shared a limited‑time code for 200 free credits in the description channel launch YouTube video.

For education and content teams, it’s a tidy demo of storyboard‑to‑motion workflows you can trial today with a small promo budget.

NanoBanana in Google AI Studio shines at collage creation (Waymo Detroit example)

Creators report NanoBanana is “extremely good” at multi‑panel collages; a Waymo‑in‑Detroit set shows strong composition and coherent branding across tiles creator example.

If you compile carousels or YouTube thumbnails, this is a fast path to consistent layouts without manual compositing.

NanoBanana shines at collage generation for branded layouts

Creators highlight NanoBanana in Google AI Studio for producing clean, on‑brand collages (e.g., a Waymo‑in‑Detroit montage), indicating strong spatial composition and template adherence for marketing use cases collage example.

If you’re assembling multi‑panel ads or event sizzles, this points to a fast path to high‑quality composites without manual masking.

🤖 Physical AI: field robots, dogs and aerial manipulation

Embodied AI highlights: Carbon Robotics ‘LaserWeeder’ in production; $1k Rover X1 robotic dog pitches home/security uses; aerial cable‑load manipulation shows agile cooperation. Excludes creative/media items.

NVIDIA and Samsung plan an ‘AI factory’ with 50,000 GPUs for digital‑twin robotics

NVIDIA and Samsung unveiled a manufacturing “AI factory” built around ~50,000 GPUs to accelerate agentic AI, Omniverse digital twins, and robotics stacks (Isaac/Jetson Thor) across fabs, phones, and logistics. For physical‑AI teams, the pitch is faster sim‑to‑real loops and closed‑loop autonomy at factory scale, with CUDA‑accelerated lithography and real‑time orchestration cited as flagship workloads announcement note.

$1,000 Rover X1 robot dog pitches errands, workouts, and home security

A new consumer‑priced robot dog, Rover X1, is being promoted at ~$1,000 with claims it can carry groceries, run with you, and patrol for home security product blurb. A second post highlights a China launch angle for the same device class regional note. If the price point holds with reliable autonomy, this widens the addressable market for household and small‑business robotics.

A $1,000 ‘Rover X1’ robot dog targets errands, jogging and home patrol

DOFBOT’s Rover X1 is pitched as a consumer robot dog at roughly $1,000—positioned for grocery carrying, running alongside owners, and basic home security. If the price and capability claims hold, it broadens the developer base for legged platforms and raises questions about SDKs, battery swap, and third‑party sensor integrations at the low end product teaser, features recap.

Carbon Robotics’ LaserWeeder uses NVIDIA vision to kill weeds without chemicals

A field demo of Carbon Robotics’ LaserWeeder highlights computer vision guiding lasers to destroy weeds in‑row, avoiding herbicides. It’s a clear, production‑grade embodied AI use case—ruggedized perception, harsh lighting, and tight safety envelopes—for teams translating lab vision models into reliable outdoor autonomy field robot demo.

Carbon Robotics’ NVIDIA-powered LaserWeeder targets chemical-free weeding at scale

Carbon Robotics’ LaserWeeder is circulating again as a concrete, production‑grade use of computer vision + lasers to remove weeds without herbicides, powered by NVIDIA hardware field demo. For teams building field robots, this is a clear template: ruggedize detection, keep latency down at tractor speeds, and prove total cost of ownership vs. chemicals.

Agile multi‑drone demo: cooperative aerial manipulation of a cable‑suspended load

A research demo shows quadrotors coordinating to move a cable‑suspended payload with agile, cooperative control paper link. This matters for inspection and construction tasks where line‑of‑sight cranes aren’t viable; it also stresses real‑time estimation, disturbance rejection, and shared control policies beyond single‑agent flight.

Agile quadrotors cooperatively swing a cable‑suspended load with precision

A research demo shows multi‑drone cooperative control of a cable‑suspended payload, with agile maneuvers that damp oscillations and maintain trajectory—useful patterns for future site logistics, construction, or disaster response. Expect heavy emphasis on distributed control, state estimation under load sway, and safety envelopes for human‑robot co‑presence research clip, project link.

💼 Enterprise adoption and distribution signals

Adoption data points and access moves: Wharton survey shows 46% of execs use AI daily (vs 29% in 2024); ChatGPT nears 6B monthly visits; OpenAI offers ChatGPT Go free for 12 months in India; Perplexity adds an IP research agent.

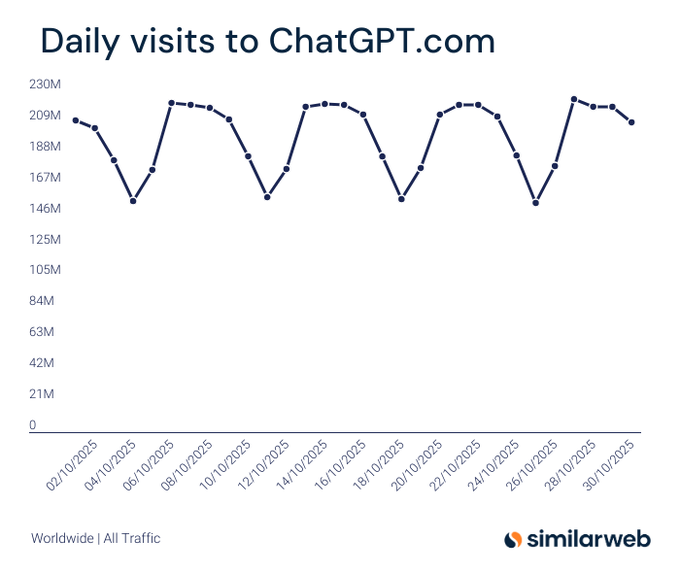

ChatGPT nears 6B monthly visits in October; Gemini at ~1B, per Similarweb

Similarweb tracking shows ChatGPT’s October traffic cycling between ~150–220M daily visits, putting it just under 6B monthly visits for the first time visits chart. A companion cut pegs Gemini.google.com at roughly 1B monthly visits over the same period, underscoring the distribution gap between the two consumer AI entry points comparison chart.

Enterprise AI use hits 46% of executives, with big gains in legal

Wharton’s new survey of ~800 enterprise leaders says 46% use AI daily, up from 29% in 2024 and 11% in 2023, with the legal function surging from 9% to 39% in a year. Fewer than 20% of firms plan to cut junior headcount, suggesting redeployment over reduction. This is a clear adoption pulse for buyers and vendors planning 2026 budgets Survey headline.

For AI leaders, the point is: adoption is normalizing across functions beyond engineering. Treat legal, finance, and ops as serious internal customers and expect procurement to ask about auditability, retention, and provenance. For sellers, the headcount signal argues for “copilot + workflow” pitches, not “replace the team.” Hiring follow‑up

ChatGPT nears 6B monthly visits; Gemini crosses ~1B

Traffic estimates from Similarweb show ChatGPT.com approaching 6B total monthly visits in October, with daily cycles between ~150–220M. A companion chart pegs Gemini at ~1B visits, underscoring a wide but narrowing gap and sustained top‑of‑funnel demand for assistants that enterprises are standardizing on ChatGPT traffic chart, Gemini traffic chart.

For distribution teams, this validates continued investment in assistant‑first integrations and partner marketplaces. For analytics, treat this as upper‑funnel intent, not active usage; instrument downstream conversion to projects, file uploads, and API keys before reallocating budget.

OpenAI promotes ChatGPT Go with 12‑month offer for India; ₹399/month thereafter

OpenAI is pushing an India‑only ChatGPT Go promotion for 12 months, with the modal highlighting features such as expanded access to GPT‑5, longer memory/context, faster image creation, limited deep research, Projects/Tasks, and custom GPTs; pricing reverts to ₹399/month after the promo period per the in‑app terms pricing screenshot. The offer indicates an aggressive distribution play to widen paid‑tier adoption in a large market.

Perplexity rolls out AI‑driven patent search agent

Perplexity introduced Perplexity Patents, an AI patent research agent that answers natural‑language questions, maintains conversational context, and cites sources. It promises prior‑art discovery beyond strict keyword matches by pulling from patents plus papers, code, and the web. It’s in free beta globally, with higher quotas and model choices for Pro/Max users Launch summary.

Legal, R&D, and corp dev teams get an immediate triage tool. Treat outputs as leads, not opinions—pipe results into your existing docketing or IP counsel workflows and track hit‑rates against conventional search channels over a 2–4 week pilot.

Perplexity signs multi‑year image licensing deal with Getty to bolster lawful visual search

Perplexity inked a multi‑year licensing agreement with Getty Images, granting rights to display Getty visuals across its AI‑powered search and discovery tools with full credits and source links. The pact shores up content provenance amid prior scraping allegations and aligns with Perplexity’s citation‑first positioning licensing story.

ChatGPT Go promo lands in India with 12‑month offer

OpenAI is showing an in‑product “Try Go for 12 months” offer to India accounts, listing expanded access to GPT‑5, longer memory/context, faster image generation, limited Deep Research, and Projects/Tasks/Custom GPTs. The modal also mentions ₹399/month (incl. GST) for the first 12 months, then auto‑continues—so this looks like a special‑pricing flow rather than a permanent free tier Offer screenshot.

If you run adoption in India, this is a near‑term lever to seed department trials. Set calendar reminders for renewal cliffs and confirm invoice language; the UI mixes "try free" phrasing with paid pricing, so align expectations before rollout.

Perplexity launches “Patents,” an AI prior‑art research agent in free beta

Perplexity unveiled an AI patent research agent that answers natural‑language queries, maintains conversational context, and cites sources, aiming to surface prior art beyond rigid keyword search across patents, papers, code, and the web. It’s available globally in free beta, with higher quotas and model options for Pro/Max users feature post.

Opera debuts ODRA, a deep research agent inside Opera Neon

Opera launched ODRA (Opera Deep Research Agent) in the Opera Neon browser to support extended web research and deeper analysis workflows, signaling another mainstream distribution channel for agentic browsing capabilities browser release.

Opera ships ODRA, a deep‑research agent in Neon

Opera launched ODRA (Opera Deep Research Agent) inside the Neon browser to run longer‑horizon web research tasks. For organizations standardizing on in‑browser assistants, this is another distribution pathway competing with dedicated AI browsers and sidebar copilots Product announcement.

ODRA’s value will hinge on source transparency, action controls, and export formats. Pilot it on internal knowledge syntheses and compare against existing tools for noise filtering, deduping, and cite fidelity.

🧩 Low‑bit formats and kernels

Hardware‑aware training/inference: ByteDance compares fine‑grained INT vs FP quantization (MXINT8, MXFP4, NVFP4); NVFP4 kernel optimization contest announced; QeRL shows 4‑bit quantized RL training viability.

ByteDance study: MXINT8 beats FP8 on energy; when to use NVFP4 vs NVINT4

A ByteDance paper compares fine‑grained integer and float formats and reports MXINT8 trims energy by about 37% versus FP8 at equal throughput, driven by per‑block scaling that tames activation outliers paper thread. It also lays out a 4‑bit playbook: floats (e.g., NVFP4/MXFP4) better preserve tiny values on larger blocks, while a light Hadamard rotation plus NVINT4 can reclaim accuracy with simpler, lower‑energy integer hardware paper recap.

For engineers, the takeaway is concrete defaults: use small‑block per‑channel scales to reduce crest factors; prefer MXINT8 as the 8‑bit default for training/inference parity; pick NVFP4/MXFP4 when 4‑bit needs underflow fidelity, or rotate then favor NVINT4 when throughput and logic simplicity win.

ByteDance: MXINT8 beats FP8 on energy; guidance for 4‑bit NVFP4/MXFP4/NVINT4 trade‑offs

A new ByteDance study finds MXINT8 trims energy ~37% vs FP8 at equal throughput while matching or exceeding accuracy; the paper recommends per‑block scaling to tame activation outliers and details when 4‑bit floats (MXFP4/NVFP4) or integers (NVINT4 after light Hadamard rotation) win at different block sizes paper takeaways.

For engineers choosing formats: 8‑bit default to MXINT8 for accuracy/efficiency; at 4‑bit, floats better preserve tiny values on larger blocks, but with small‑block scales and rotations, NVINT4 can overtake NVFP4 on accuracy with simpler, lower‑energy hardware paper title page.

QeRL open‑sources 4‑bit RL training, enabling 32B models with vLLM compatibility

QeRL (Quantization‑enhanced RL) shows end‑to‑end 4‑bit RL training that can handle a 32B LLM and integrates with vLLM runtimes, widening viability for budget‑constrained fine‑tuning while retaining throughput project thread, vLLM note. Following FP16 stability on RL convergence at higher precision, this points to a practical path to push reasoning‑style RL into low‑bit regimes without collapsing training budgets.

QeRL: 4‑bit RL training released, with vLLM compatibility

Researchers released QeRL (Quantization‑enhanced RL), enabling 4‑bit quantized reinforcement learning to train larger models more efficiently; the announcement highlights 4‑bit training and claims support for sizable model scales open‑source post. The vLLM project amplified the work, calling it a strong step toward practical low‑bit RL pipelines that slot into common runtimes vLLM comment.

If you’re exploring reasoning‑RL at scale, this suggests a path to run RL fine‑tuning under tighter memory and energy budgets while staying compatible with production inference stacks.

NVFP4 kernel competition opens on Blackwell

GPU_MODE and NVIDIA announced a kernel optimization competition focused on NVFP4 on Blackwell‑class GPUs, inviting submissions of fast, accurate low‑bit kernels contest note. This is a direct path to squeeze more inference/training throughput out of 4‑bit pipelines, and it’s a timely chance to align community kernels with the hardware scheduler and tensor core quirks.

NVFP4 kernel optimization competition kicks off on Blackwell

GPU_MODE and NVIDIA announced a kernel optimization competition targeting NVFP4 on Blackwell, inviting the community to submit high‑performance kernels tuned for the new 4‑bit float format—useful for long‑context and high‑throughput inference stacks that lean on low‑bit math contest announcement. Enthusiasm from practitioners suggests broad interest in squeezing more from NVFP4 paths contest banter.

⚖️ OpenAI governance deposition fallout

Fresh summaries circulate from Ilya Sutskever’s deposition: Saturday proposal to merge with Anthropic, remarks on leadership and ‘power‑seekers,’ and links to the full 365‑page transcript. Excludes AWS partnership feature.

Board process called rushed and inexperienced; employee blowback misjudged

Sutskever testifies the process “was rushed because the board was inexperienced in board matters,” noting absences at meetings; he also “had not expected” employees to feel strongly and was shocked by the revolt. Both quotes are captured in the circulating card set. summary cards

For timeline‑accurate reading, the 365‑page transcript links these remarks to specific pages and sessions. Court transcript

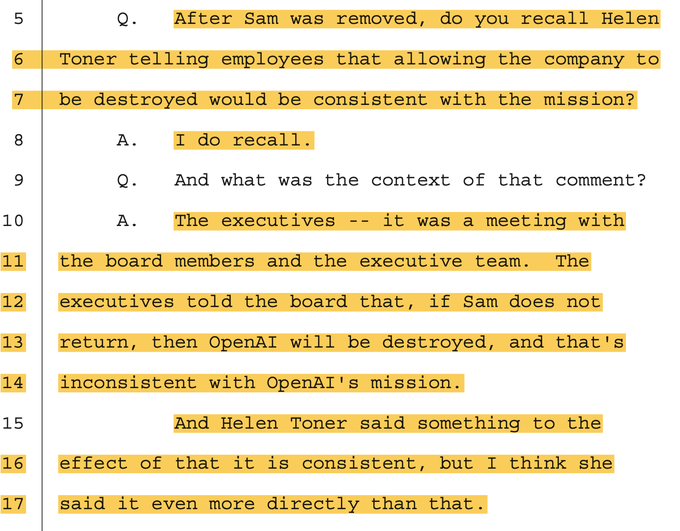

Helen Toner line resurfaces: destroying OpenAI could be “consistent with the mission”

A widely shared card quotes Helen Toner stating that allowing the company to be destroyed would be consistent with the mission—“even more directly than that.” This is presented as part of board‑level value conflicts documented in the deposition. mission quote, summary cards

Teams can inspect the exact Q&A and page cites in the released transcript to assess how representative the pull‑quote is of the broader discussion. Court transcript

Memo relied on Mira Murati’s screenshots; Sutskever says he didn’t verify with others

Deposition notes say the 52‑page case against Altman drew “every screenshot” from Mira Murati and wasn’t cross‑checked with the people referenced, with Sutskever saying he avoided alerting Altman for fear evidence would “disappear” summary cards. The full transcript link circulating today anchors these details for primary review full transcript, court transcript.

Sutskever calls top leaders ‘power‑seekers,’ admits reliance on secondhand info and AGI power politics

Beyond process issues, Sutskever characterizes major‑company leaders as “power‑seekers,” frames control of AGI in realpolitik terms, and concedes he learned “the critical importance of firsthand knowledge,” after relying on secondhand reports summary cards, power‑seekers post. The mix of philosophy and hindsight deepens questions about judgment during OpenAI’s 2023 crisis.

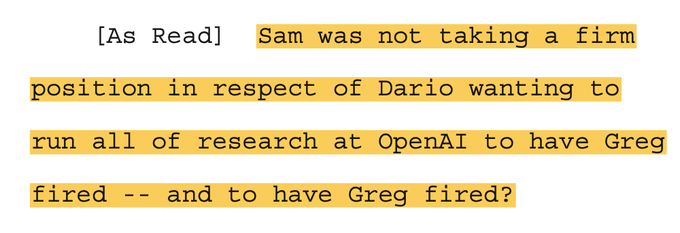

Claim: Dario Amodei wanted OpenAI research leadership; Altman didn’t take a firm stand

A share of transcript snippets asserts Dario Amodei sought to take over research from Greg Brockman and that Sam Altman didn’t take a firm position, contributing to internal friction. Treat as one thread in the larger deposition, not the whole story. leadership claim, summary cards

For precise language and counter‑positions, read the cited pages in the full filing. Court transcript

Deposition says Dario Amodei sought to run OpenAI research; Altman’s stance described as equivocal

Fresh excerpts highlight a leadership tug‑of‑war: Dario Amodei allegedly wanted to take over OpenAI research from Greg Brockman, while Sam Altman is portrayed as not taking a firm position and “telling conflicting stories” about governance thread details, conflicting stories. These claims sharpen the picture of internal alignment and accountability pressures during the 2023 turmoil.

Discovery fights: “other memo” about Greg not produced; sharp lawyer exchanges

The cards flag an “other memo” about Greg Brockman that exists with lawyers but wasn’t produced (“you didn’t ask for it”), and a separate clip shows counsel sniping over constant objections. Both hint at the tenor of discovery. summary cards, lawyer sparring

Check the 365‑page record for the discovery back‑and‑forth and whether production disputes were resolved elsewhere. Court transcript

Equity value questions blocked; Sutskever believes OpenAI is paying his legal fees

Cards note that Sutskever’s lawyer blocked questions about his equity value; he “believes OpenAI is paying his legal fees.” This goes to incentives and posture in the case. summary cards, fees note

Use the transcript to see the exact objections and how the line of questioning was cut off. Court transcript

Sutskever kept OpenAI equity, believes OpenAI pays his legal fees; refused to disclose equity value

The deposition notes Sutskever still holds OpenAI equity (he says it has increased in value), believes OpenAI is paying his legal fees, and—on counsel’s instruction—declined to state how much his equity is worth summary cards, legal fees note. These financial ties add a layer of potential conflicts to already fraught governance narratives.

Sutskever on AGI politics: major‑company leaders are “power‑seekers”

Another excerpt making the rounds quotes Sutskever describing those in leadership of major companies as “power‑seekers,” casting AGI control as a power‑politics problem rather than sainthood. The framing has sparked debate about governance models. power seekers

If you need the exact phrasing and context, cross‑check against the deposition PDF’s pagination. Court transcript