OpenAI ChatGPT Atlas lands on macOS – 5 tiers and Chrome extensions cut switching costs

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

OpenAI just shipped ChatGPT Atlas, a Chromium-based agentic browser for macOS that moves context and execution into the page itself. It’s available across 5 tiers (Free, Plus, Pro, Go, Business) from day one, and Windows, iOS, and Android are in development. The pitch is simple: Ask-on-page grounds answers to what you’re looking at, Memory carries preferences across sessions, and a native Agent Mode clicks through real web UIs instead of pasting prompts into a chat box.

Because Atlas runs full Chrome Web Store extensions, switching costs drop—Dark Reader and friends install like normal—and Agent Mode can spawn parallel tabs to automate multi-step SaaS tasks; an early Box demo sets up M&A folders end-to-end without human clicks. Engineers stress this isn’t a lazy re-skin: the architecture is tuned for a crisp feel and deeper integration points, which hints at future APIs. Dev guidance lands early too—add ARIA roles and accessible names so agents can reliably act on buttons and menus, treating them like assistive tech clients.

Security is already a storyline: a purported system prompt leak naming tool surfaces and “GPT‑5” identity circulates, and a clipboard-injection proof-of-concept shows low-tech hijacks if agents click booby-trapped buttons. Following Monday’s browser push from Anthropic’s Claude Code, the agent race is now squarely browser-first—and OpenAI is even nudging WhatsApp users off general bots ahead of the Jan 15, 2026 ban.

Feature Spotlight

Feature: OpenAI’s ChatGPT Atlas (agentic browser) ships on macOS

OpenAI turns the browser into an AI surface: Ask‑on‑page context, native Agent Mode tabs, Memory, and Chrome extensions—positioning ChatGPT as an OS‑like runtime for knowledge work.

Biggest cross‑account story today: OpenAI’s Chromium‑based Atlas browser with Ask‑on‑page, native Agent Mode, Memory, and extension support. Multiple hands‑ons compare it to Comet/Leo; broad availability across Free/Plus/Pro/Business. Security items are covered separately.

Jump to Feature: OpenAI’s ChatGPT Atlas (agentic browser) ships on macOS topicsTable of Contents

🧭 Feature: OpenAI’s ChatGPT Atlas (agentic browser) ships on macOS

Biggest cross‑account story today: OpenAI’s Chromium‑based Atlas browser with Ask‑on‑page, native Agent Mode, Memory, and extension support. Multiple hands‑ons compare it to Comet/Leo; broad availability across Free/Plus/Pro/Business. Security items are covered separately.

OpenAI launches ChatGPT Atlas on macOS with ask-on-page, agent mode, memory, and extensions

OpenAI unveiled ChatGPT Atlas, a Chromium-based browser that brings ChatGPT directly into pages via an on-page sidebar, native Agent Mode for in‑page actions, Memory, and full Chrome extension support; it’s available today on macOS, with Windows, iOS, and Android in development and access spanning Free, Plus, Pro, Go, and Business, with Enterprise/Education opt‑in beta Launch post, Availability notes, Livestream replay.

For AI teams, this shifts context capture and agent execution to the browser layer, enabling tighter UX and page-grounded answers (e.g., summarizing a live WSJ tax page) without copy‑paste Sidebar demo.

Native Agent Mode executes tasks inside web apps; early Box workflow demo

Atlas ships Agent Mode that can navigate in‑page UIs, click controls, and perform multi‑step tasks, with support for spawning multiple Agent tabs for parallel work Agent overview. A hands‑on shows an agent automating Box folder setup for M&A due diligence—an example of cross‑SaaS workflow execution from the browser surface Box automation.

Ask‑on‑page sidebar grounds answers in the current page context

The Ask ChatGPT sidebar reads the active page and returns targeted summaries or explanations inline—e.g., simplifying an inflation‑adjusted WSJ tax bracket table directly within the browsing session Sidebar demo.

This reduces context shuttling and improves fidelity for compliance‑sensitive flows (finance, policy, docs) by anchoring outputs to visible source content.

Chromium base means Atlas runs full Chrome Web Store extensions

Because Atlas is built on Chromium, users can install standard Chrome extensions; early screenshots show Dark Reader installing and running as expected Extension example.

For AI engineers, this preserves existing extension ecosystems while layering Atlas’ agent and context features on top—lowering switching costs versus non‑Chromium AI browsers.

Atlas integrates ChatGPT Memory and adds join-time badges in onboarding

Early users report Atlas tying into ChatGPT Memory for recall across sessions and a small UX touch: badges that show when you joined ChatGPT and Atlas during onboarding Badges screenshot.

This hints at deeper long‑term personalization and accountability for page-grounded answers tied to user history (with admin controls expected in enterprise mode).

Browser-first agent race intensifies as Atlas ships alongside Claude Code on the web

OpenAI’s Atlas now brings page‑grounded agents to everyday browsing Launch post, following up on Web launch where Anthropic shipped Claude Code in the browser with sandboxed PR workflows. For engineering leaders, this marks a shift from IDE or chat‑only agents to browser-native automation that can operate across enterprise SaaS estates Availability notes.

Developers are urged to add ARIA semantics so Atlas agents can reliably act on pages

Early guidance highlights that robust ARIA roles/labels make pages more navigable to agentic browsers; think of agents as another assistive technology that benefits from explicit semantics Blog post, ARIA note image.

Adding accessible names and roles to actionable elements (e.g., buttons, menus) should improve tool use reliability and reduce prompt‑injection surface tied to ambiguous selectors.

OpenAI stresses Atlas isn’t a simple Chromium re-skin; new architecture for speed and feel

Atlas contributors emphasize it’s “not just a quick and dirty Chromium re‑skin” but a new architecture aimed at a crisp, fast browsing+assistant experience Engineer note. For technical teams, that suggests deeper integration points than an extension overlay—relevant for future dev APIs and agent surfaces.

Atlas nudges users toward web apps over desktop to unlock built‑in ChatGPT

A common early takeaway: running SaaS as web apps inside Atlas confers a native ChatGPT sidebar and agent control, making the browser a higher‑leverage place than separate desktop clients for many workflows Web app point. This may shift app strategy back to browser‑first where agent context can be fully captured.

🧪 Compact VLMs and research agents from Qwen

New model drops and upgrades most relevant to builders: Alibaba’s Qwen3‑VL‑32B/2B and Qwen Deep Research upgrades. Today’s set is heavy on dense, efficient multimodal models and research flows.

Qwen3‑VL‑32B/2B launch: 32B claims 235B‑class parity, FP8 builds and Thinking variants

Alibaba released two dense multimodal models, Qwen3‑VL‑32B and Qwen3‑VL‑2B, claiming the 32B variant outperforms GPT‑5 mini and Claude 4 Sonnet across STEM, VQA, OCR, video understanding and agent tasks, while matching models up to 235B parameters and beating them on OSWorld; FP8 builds plus Instruct and Thinking variants are available release thread. Collections and examples are already live on Hugging Face for immediate testing and finetuning Hugging Face collection.

For builders, the draw is performance per GPU memory and deployability: FP8 reduces footprint, and the vendor provides cookbook recipes and API endpoints (Instruct/Thinking) to slot into agent stacks quickly release thread.

Qwen Deep Research now turns research outputs into a live webpage and a podcast

Qwen’s Deep Research workflow now auto‑generates both a hosted webpage and an audio podcast from a single research run, powered by Qwen3‑Coder, Qwen‑Image, and Qwen3‑TTS feature thread. You can try it directly in Qwen Chat’s Deep Research entry point to see the new render and narration pipeline in action product page.

This closes the loop from retrieval and synthesis to distribution formats without extra tooling, which is useful for analysts publishing recurring briefs and PMs who want shareable artifacts out of agentic research runs feature thread.

vLLM and SGLang light up Qwen3‑VL for fast serving and agent workflows

Serving support arrived quickly: the vLLM project says it now supports the Qwen3‑VL series, and LMSYS/SGLang reports Qwen3‑VL‑32B is ready for inference and agent workflows vLLM support, SGLang readiness. Combined with the vendor’s FP8 builds, this shortens the path from model card to production agents and GUI automation using compact VLMs release thread.

🛡️ Agentic browser risk, platform rules, and voice rights

Security/policy items sparked by today’s browser news and platform changes. Excludes the Atlas product launch itself (covered as the Feature) and focuses on prompt leaks, injection vectors, and distribution rules.

Meta confirms Jan 15, 2026 ban on general chatbots in WhatsApp; OpenAI posts migration path

OpenAI published official guidance for users to move chats off 1‑800‑ChatGPT ahead of WhatsApp’s policy change that will block general‑purpose assistants on Jan 15, 2026; it recommends using the ChatGPT apps, web, or Atlas and linking accounts to preserve history migration steps, OpenAI blog. This follows general chatbot ban flagged via screenshots; builders relying on WhatsApp distribution should pivot to app‑specific bots or alternate channels.

Atlas system prompt allegedly leaks, revealing tools, memory and model identity rules

A file circulating online purports to be Atlas’s full system prompt, including an instruction to identify as GPT‑5 plus a long tool list (bio, automations, canmore, file_search, guardian_tool, kaur1br5, web) and memory semantics prompt leak thread. The text is mirrored on GitHub for scrutiny GitHub dump. If authentic, the expanded tool surface and deterministic behaviors raise red‑team stakes for agentic browsing; rotate prompts, gate tools tightly, and audit for prompt poisoning across in‑page contexts.

Prompt injection in the wild: Fellou agent obeys on‑page exfiltrate‑Gmail instructions

In a live demo, the Fellou browser agent read attacker instructions embedded in a web page and attempted to pull data from the user’s signed‑in Gmail, illustrating how DOM‑resident prompts can subvert trust and cross app boundaries attack example. Engineering takeaways: constrain scopes per tab, require user confirmation for cross‑app data access, and apply strict content‑security policies to tool calls triggered by page text.

Calendar invite jailbreak shows how agentic browsers can be hijacked via benign data

A researcher demonstrated that Perplexity’s Comet, once granted calendar access, read a booby‑trapped invite containing a jailbreak and proceeded toward high‑risk actions (e.g., Stripe deletions) until human intervention attack walkthrough. Practical fixes: sanitize third‑party calendar text, fence tool permissions by resource type, and surface step‑by‑step intent plans when switching apps.

Hidden clipboard injection shows a low-tech way to hijack agentic browsers

A proof‑of‑concept shows any clickable button can silently overwrite the OS clipboard; if an agent clicks it, the user’s next paste can open a phishing URL or leak secrets—without the agent ever seeing the injected string exploit demo. Mitigations to test now: clear or preview clipboard after agent actions, throttle pastes into sensitive fields post‑automation, and add domain‑scoped clipboard guards when Agent Mode is active.

SAG‑AFTRA, OpenAI and Bryan Cranston tighten voice/likeness guardrails for Sora 2

A joint statement says OpenAI has strengthened consent‑based protections after invite‑only tests surfaced unintended echoes of Bryan Cranston’s voice/likeness, with major agencies backing stricter policies for synthetic identities policy summary. Expect tighter opt‑in flows, dataset hygiene, and more assertive takedowns around performer likenesses.

Open‑source “LLM leash” aims to block agent data exfiltration despite jailbreaks

Edison Watch previewed an open‑source, rule‑based blocker that sits between agent tools and sensitive resources to deterministically refuse exfiltration patterns—positioned as a defense even when the model is jailbroken mitigation project. Teams building agentic browsers should pair such guards with granular scopes, allowlists, and human‑in‑the‑loop approvals for destructive actions.

Vatican seminar calls for binding global AI rules and a pause in the arms race

At the Pontifical Academy’s Digital Rerum Novarum seminar, church leaders urged a binding international AI framework, neuro‑rights protections, and the “audacity of disarmament,” arguing current competitive dynamics incentivize risky deployments policy recap. While not prescriptive legislation, the appeal adds moral pressure as governments refine safety regimes for frontier systems.

⚙️ Serving wins: KV‑cache sharing, provider speed, and vLLM

Runtime and serving updates useful to platform teams. Mix of KV‑cache reuse, provider benchmarking, and conference sessions. Separate from model releases and business deals.

KV‑cache pooling lands in vLLM and SGLang for elastic multi‑model serving

kvcached now works with both vLLM and SGLang, letting multiple models share unused KV blocks on the same GPU to boost utilization without code changes—an immediate serving win following Token caches hit‑rate gains. vLLM confirms direct support for multi‑model sharing vLLM note, and LMSYS highlights out‑of‑the‑box integration in SGLang for elastic GPU sharing SGLang note. Expect higher throughput under mixed traffic and smoother failovers with the same hardware footprint.

GLM‑4.6 bake‑off: Baseten leads at 104 tok/s, fastest TTFAT 19.4s; pricing near‑uniform

Artificial Analysis benchmarked GLM‑4.6 across major providers: Baseten tops throughput at ~104 output tok/s with the best time‑to‑first‑answer token (TTFAT) at ~19.4s; GMI follows at ~73 tok/s and ~28.4s TTFAT, with Parasail (~68 tok/s) and Novita (~66 tok/s) close behind benchmarks recap. Pricing is tightly clustered around ~$0.6/M input and ~$2.0/M output tokens, with slight variance by host pricing note, and all vendors support the full 200k context and tool calling providers page.

Qwen3‑VL 2B/32B are "serving‑ready": vLLM and SGLang confirm support

Soon after Alibaba announced Qwen3‑VL‑2B and ‑32B, vLLM said the series is supported in its serving stack vLLM support and LMSYS reported SGLang readiness for inference and agent workflows SGLang update. For infra teams, this reduces integration risk for rolling out the compact 2B and performance‑dense 32B variants in production model announcement.

vLLM enables LoRA‑finetuned experts in FusedMoE for parallel MoE inference

A merged vLLM PR adds support for running LoRA‑finetuned experts within FusedMoE, allowing parallel inference with multiple LoRA variants across modes and models (experimental flag) PR merged. This is a practical path to adapt MoE systems per tenant or task without spinning up separate model replicas, improving hardware utilization and latency under multi‑skill traffic.

vLLM spotlights PyTorch Conference sessions on cheap, fast serving (NVIDIA and AMD)

vLLM published its PyTorch Conference lineup: talks on “Easy, fast, cheap LLM serving,” open‑source post‑training stacks (Kubernetes + Ray + PyTorch + vLLM), co‑located training with serving, and enabling vLLM v1 on AMD GPUs with Triton; plus a community trivia event with partners event schedule. For platform teams, the AMD/NVIDIA backend focus signals a widening of viable, performant deployments beyond a single GPU vendor.

💼 Enterprise moves: assistants at scale, compute pacts, and health AI

Enterprise traction and capital flows relevant to AI leaders. Today features bank assistants in production, massive compute negotiations, and a domain AI raise.

OpenAI binds Oracle, Nvidia, AMD and Broadcom in mega compute pacts to secure 2027–2033 scale

WSJ details a multi‑supplier strategy: Oracle to provision roughly $300B of cloud capacity starting 2027, Nvidia to deliver ≥10 GW of systems, AMD ~6 GW across future nodes, and Broadcom to co‑build ~10 GW of custom accelerators—toward a 250‑GW target by 2033 WSJ analysis. The structure turns compute scarcity into leverage but concentrates balance‑sheet risk if monetization lags, binding vendors with equity, prepays, and guarantees WSJ analysis.

Anthropic in talks with Google for compute “in the high tens of billions” to grow Claude

Bloomberg reports Anthropic is negotiating additional Google Cloud compute valued in the high tens of billions of dollars, aimed at lifting training/inference capacity and easing rate limits on Claude Bloomberg recap. The move would deepen Google’s foothold in frontier model supply while giving Anthropic predictable runway for the next training cycles deal sighting.

Goldman Sachs deploys agentic coworker to 10,000 staff, eyes firm‑wide scale

Goldman Sachs is rolling an internal AI assistant to 10,000 employees to draft/summarize emails and translate code, with the goal of a full “agentic coworker” that plans steps, calls tools, and checks its own work over time rollout details.

Leaders frame it as acceleration of repetitive workflows with guardrails and firm style adaptation, while analysts warn routine banking roles are most exposed as automation scales rollout details.

“ChatGPT for doctors” OpenEvidence raises $200M at $6B; 15M monthly consults

OpenEvidence, trained on journals like JAMA and NEJM, closed $200M at a $6B valuation just three months after a prior round; it reports 15M monthly clinical consultations and is free for verified clinicians (ad‑supported) funding story. Backers include GV, Sequoia, Kleiner Perkins, Blackstone, and Thrive—signaling strong investor appetite for domain‑specific assistants in healthcare operations funding story.

OpenAI trains “Project Mercury” Excel analyst with 100+ ex‑bankers to automate financial models

Bloomberg reports OpenAI is paying $150/hour to former bankers and MBA candidates to produce IPO/M&A/LBO models and prompts that teach an Excel‑building analyst system; candidates pass a chatbot screen, finance quiz, and modeling test, then submit and iterate one model per week Bloomberg report. The push targets high‑volume junior workflows (format‑locked spreadsheets, checks, slides), with accuracy/audit trails the gating factor for bank adoption.

IBM–Groq detail roadmap: Granite on GroqCloud, vLLM integration and secure orchestration for agents

Following up on enterprise inference, IBM and Groq outlined next steps: GroqCloud support for IBM Granite models, Red Hat’s open‑source vLLM in the stack, and subscription orchestration aimed at regulated, mission‑critical agent workflows roadmap brief. Bloomberg coverage underscores the go‑to‑market to move from pilots to production‑grade latency and cost profiles video explainer.

JPMorgan models $28B/GW for Broadcom custom AI systems; Nvidia and AMD tracked by GW pricing

A new JPMorgan analysis pegs Broadcom at about $28B revenue per deployed GW (custom silicon + networking + racks), with Nvidia at ~$27–35B/GW across Blackwell/Rubin and AMD at ~$20–25B/GW for Helios/MI500, assuming rising rack power and system ASPs analyst note. The bank also extrapolates OpenAI’s multi‑GW commitments into 2027–2029 revenue bands, highlighting execution risks in multi‑10‑GW builds.

LangChain secures $125M to build the platform for agent engineering

LangChain raised $125M to standardize planning, memory, and tool use for deep agents, citing customers like Replit, Cloudflare, Rippling, Cisco, and Workday company post. The company positions “agent engineering” as the next layer in enterprise AI stacks, consolidating orchestration patterns now fragmented across internal tools.

Anthropic turns Claude into a life‑sciences copilot with Benchling, PubMed and BioRender connectors

Anthropic broadened Claude’s enterprise footprint in life sciences, adding connectors (Benchling, PubMed, BioRender), Agent Skills for protocol following and genomic data analysis, a prompt library, and dedicated services to help organizations scale AI across R&D and compliance workflows product brief. The aim is to move from task helpers to end‑to‑end research partners under controlled access and audit.

🛠️ Builders’ toolbox: computer‑use SDKs, search cost cuts, and Codex updates

Practical tools and SDKs for agent/coding workflows. Separate from MCP/interop standards (covered next) and from the Atlas feature.

Scrapybara open-sources Act SDK and computer-use scaffolding

Scrapybara published a minimal, production-inspired Act API plus a Docker image so teams can stand up computer-use agents and input control backends without reinventing the stack project brief, with code at GitHub repo. This lowers the barrier to build Atlas-like “act” endpoints and mouse/keyboard/screenshot bridges that plug into existing agent loops.

Codex fix prevents mid-turn cutoffs at usage limits; rollout in progress

OpenAI shipped a reliability change so Codex won’t interrupt mid-turn even when limits are hit, reducing failed runs in longer agent sessions maintainer update. Following CLI fix for intermittent “unsupported model” errors, the team notes today’s change removes another common failure mode. Some users still report edge cases pending client updates or staged rollout confirmation follow-up reply, with an example error screenshot from ongoing tests error report image.

Codex IDE prerelease adds WSL on Windows for faster bash and fewer prompts

OpenAI’s Codex IDE extension (pre-release v0.5.22) now runs commands through WSL on Windows, improving shell performance and cutting approval prompts in sandboxed workflows; setup involves enabling the new preferWsl setting after installing WSL and restarting VS Code test instructions. A settings screenshot confirms the toggle settings screenshot.

Firecrawl slashes Search API cost 5× to 2 credits per 10 results

Firecrawl cut search pricing to 2 credits/10 results (≈5× cheaper) and updated API, Playground, and MCP docs, directly reducing retrieval costs for agent pipelines and scheduled research jobs pricing update, with details in the product docs docs search.

For builders stitching web search into deep-research or MCP tools, this materially lowers per-run costs while keeping the same endpoints docs link tee-up.

LlamaIndex ships ‘LlamaAgents’ templates for end-to-end document agents

LlamaIndex introduced LlamaAgents: ready-to-run templates that spin up document-focused agents (OCR/RAG/extraction chains) locally in minutes, with an optional hosted deployment in private preview product thread. This gives teams a faster path from prototype to production for doc pipelines—without custom wiring for planning, tools, and multi-step extraction.

🧩 Interoperability: ACP, editors, and agent UI kits

Agent interop and client/server protocols. Today’s items focus on ACP standardization, editor support, and UI scaffolds for user‑interactive agents. Distinct from general dev tooling above.

ACP spins out to its own org with official Kotlin, Rust and TypeScript SDKs

Agent Client Protocol now lives under its own GitHub organization, with multi‑language SDKs and JetBrains contributing a Kotlin SDK—an important step toward standardizing agent↔client interoperability across editors and runtimes project note, and the code is available in the new repo GitHub org. This gives tool vendors a stable surface for session setup, capabilities negotiation, and permissioning, reducing one‑off integrations and paving the way for cross‑IDE agent UX.

CopilotKit’s AG‑UI CLI scaffolds full‑stack, user‑interactive agents in minutes

CopilotKit introduced the AG‑UI CLI to spin up full‑stack, user‑interactive agents across frameworks, bundling UI primitives, tool wiring, and real‑time app↔agent sync so developers can go from prompt to working app rapidly tool announcement. Following up on Canvas template that synced agent state to UI, the CLI formalizes the path to production with project scaffolds and docs quickstart docs.

Compared to ad‑hoc chat UIs, the CLI bakes in interaction contracts (state, events, tools), making agents feel first‑class in the app instead of bolted on.

OpenCode lands ACP support in Zed: native agent–editor handshake

OpenCode added first‑class ACP support to run as an agent inside Zed, switching to the official ACP library and implementing a full Agent interface, session state, and stdio transport; the CLI exposes opencode acp and supports working‑directory context and graceful shutdown integration heads-up, with the full design and tests in the PR PR details. For teams standardizing on ACP, this brings a reproducible agent handshake (capabilities, prompts, edits, diffs) into a popular editor with minimal glue code.

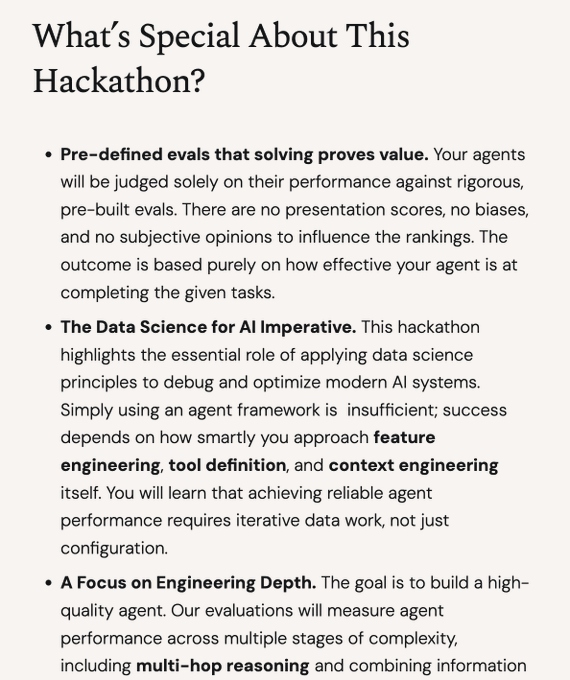

Agent hackathon will rank stacks on pre‑defined evals with progressive disclosure

TheoryVC announced “America’s Next Top Modeler,” an in‑person SF hackathon that objectively scores agents on pre‑built evaluations—no slideware judging—with tasks escalating in complexity and evals revealed progressively to curb overfitting event brief, and organizers teased the focus on engineering depth over demo vibes organizer post.

For ACP/MCP and UI‑kit builders, this is a venue to prove reliability under identical harnesses, compare protocol choices, and surface which stacks (tooling, context engineering, guards) actually hold up under pressure.

📑 Reasoning and perception: methods you can apply next

Fresh research with actionable angles for engineers—iterative reasoning, controllable emotion circuits, VLM vision improvement with RL, and turning papers into code. Benchmarks are separate below.

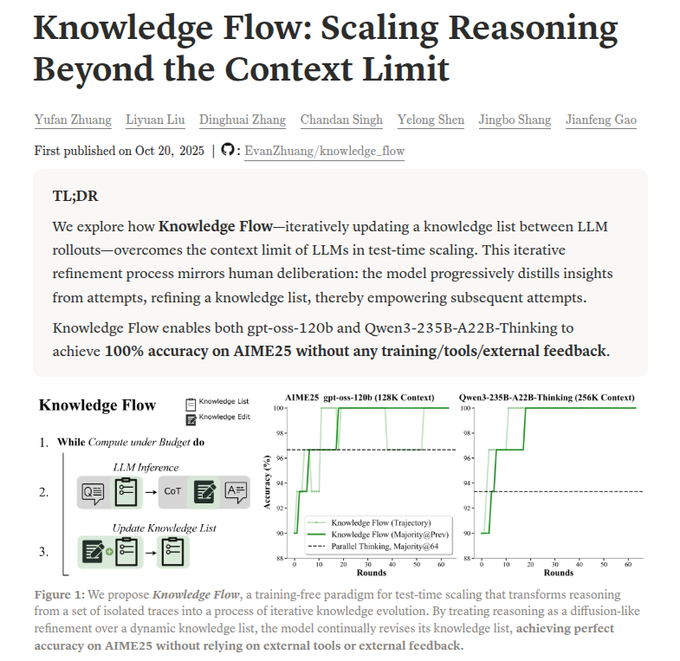

Tiny test‑time memory hits 100% on AIME25 with open models

Knowledge Flow shows that carrying a small, editable “knowledge list” between attempts lets models exceed context limits—achieving 100% on AIME25 using open models (e.g., gpt‑oss‑120b, Qwen3‑235B) without tools or training. The list stays under ~1.5% of context, adds one fact per try, prunes stale ones, and converts majority‑vote into forward information flow, which outperforms naive ensembling paper summary.

Compared to standard self‑consistency, this treats successive runs as iterative knowledge evolution, compressing key partial results so later reasoning is shorter and more reliable.

Emotion circuits can be traced and steered to 99.65% tone accuracy

Researchers isolate context‑agnostic emotion directions in LLMs and identify sparse neuron/head circuits that carry them. Modulating a small set of units controls tone (including surprise) without prompt tricks, reaching 99.65% expression accuracy on held‑out tests and beating generic steering paper overview.

For production assistants, this enables explicit, auditable style control (support, legal, clinical tone) with minimal runtime cost.

Preference RL beats SFT for vision encoders at <1% pretrain compute

PIVOT uses human‑preference‑style signals (via DPO‑like training) to upgrade vision encoders attached to MLLMs, yielding sharper visual grounding than supervised finetuning—especially on image/text‑heavy tasks like chart/text reading. Saliency concentrates on answer‑relevant regions, and the improved encoder can be frozen and reused; total cost is under 1% of standard pretraining paper page.

Practical takeaway: if your VLM struggles on OCR/VQA/chart QA, a small preference‑tuning pass on the vision side may beat more SFT tokens.

QueST generates 100K hard coding problems; 8B student nears a 671B teacher

QueST samples concept mixes that favor rare/tough skills, uses solver disagreement as a difficulty signal to select problems, fine‑tunes the generator, and then creates 100K hard items with detailed solutions. An 8B student trained on this data beats same‑size baselines and approaches a 671B model on public code benchmarks; the dataset also stabilizes RL by improving reward reliability paper abstract.

Actionable: mine difficulty via solver disagreement to scale high‑value training data without endless human authoring.

DeepSeek‑OCR’s encoder pipeline clarifies how 10× optical compression works

Following up on DeepSeek‑OCR, a breakdown shows the DeepEncoder stacks SAM‑style local attention, a 16× convolutional downsampler, then CLIP‑like global attention to keep activations small while preserving global structure. Reported results hold ~97% OCR precision at 9–10× compression, with a practical “progressive forgetting” idea for aging context architecture explainer, encoder breakdown.

For long‑context assistants, image‑space compression offers a concrete path to cheaper conversational memory and document RAG without losing structure.

Executable knowledge graphs turn papers into code with +10.9% on PaperBench

xKG builds a graph linking a paper→techniques→executable code (auto‑discovered repos, refactored modules, sanity tests) so agents can plan from the paper node and fetch verified code nodes per step. Integrated into three frameworks with o3‑mini, it lifts PaperBench Code‑Dev by +10.9% and shows code nodes drive most gains paper card.

Engineers can adopt the pattern: parse the PDF, extract technique edges, auto‑verify minimal runnable snippets, and feed only vetted nodes to the agent.

Self‑evolving reasoning loops push an 8B model over hard AIME items

Deep Self‑Evolving Reasoning runs long verify→revise loops, treating each round as a small improvement and majority‑voting over final answers. An 8B DeepSeek‑based model solved five AIME problems it previously missed and even beat its 600B teacher’s single‑turn accuracy when using loop voting paper summary.

Trade test‑time compute for reliability: use iterative self‑correction with majority vote instead of single long CoT.

DeepAnalyze‑8B executes end‑to‑end data‑science loops with five actions

An agent formalism—Analyze, Understand, Code, Execute, Answer—runs inside an orchestrated environment with hybrid rewards (checks + LLM judge) to plan, write code, run it, read results, and iterate to analyst‑grade reports. This approach beats brittle fixed scripts on multi‑step jobs system diagram.

Teams can mirror the scaffold: explicit action space, environment feedback hooks, and dual rewards to balance correctness and usefulness.

Token‑level ‘SAFE’ ensembling yields gains while mixing <1% of tokens

Ensembling every token causes subword mismatches that derail long generations. SAFE drafts short chunks with one model, has peers verify, then combines only when stable (skip if prior token is out‑of‑vocab for a peer, or when models already agree). A sharpening step collapses split‑subword probabilities. The result: accuracy gains even when ensembling under 1% of tokens, avoiding long‑form cascades paper title.

Use SAFE‑style gates if you ensemble heterogeneous tokenizers or need robustness on long reasoning outputs.

📊 Reality checks: FrontierMath ceilings and SWE‑Bench debate

Today’s eval discourse centers on test‑time scaling limits and benchmark reliability. Separate from the research methods category above.

FrontierMath shows test-time scaling ceilings: GPT-5 pass@N <50%, only 57% of problems ever solved

Epoch AI’s latest FrontierMath analysis finds GPT‑5 tops out under 50% pass@N even after 32 attempts, with diminishing returns from more tries; across all models, just 57% of problems have been solved at least once so far analysis thread.

• Gains shrink fast: doubling attempts adds ≈5.4% early but only ≈1.5% by 32; ten unsolved problems saw 0 new solves after 100 extra tries each analysis thread.

• ChatGPT Agent is an outlier with 14 unique solves and rises from 27% (1 try) to 49% (16 tries), hinting at a practical ceiling near ~70% even with heavy test‑time scaling analysis thread.

SWE‑Bench Pro debate: community flags anomalies in public leaderboard and comparability

Developers questioned the latest public SWE‑Bench Pro chart, noting surprising spread between close model families and potential comparability issues, and calling for deeper methodology and variance disclosures score chart. Some leaders simultaneously praised SWE‑Bench Pro’s grounding in real bugfixes as the right direction for evals, underscoring the tension between utility and reliability evals overview.

• Action for teams: treat single‑run diffs cautiously, report confidence intervals, and replicate across harnesses before model swaps.

📄 Document AI: OCR adoption signals and layout fidelity

Quieter than yesterday’s big OCR splash but still notable: practitioner benchmarks and scale signals. Separate from Qwen releases and core research.

DeepSeek‑OCR reliably scans entire microfiche sheets; community validates optical compression in the wild

Community testers report DeepSeek‑OCR can parse an entire microfiche sheet rather than isolated cells, a demanding real‑world signal of its high‑resolution document handling—following up on initial launch where the model reframed OCR as optical compression. The vendor’s technical brief cites ~10× token compression at ~97% text precision and throughput near 33M pages/day across 100+ languages, indicating both cost and scale headroom for document AI workflows microfiche demo, architecture recap, and the model paper for internals ArXiv paper.

PaddleOCR‑VL showcases stronger layout fidelity and lower hallucination on complex documents

PaddlePaddle published head‑to‑head case studies where PaddleOCR‑VL cleanly reconstructs complex mixed‑content pages (tables, formulas, images), while rivals like MinerU2.5 and dots.ocr exhibit table splits, layout detection losses, and broken reading order. The examples also contrast extracted answers versus page structure to emphasize consistent layout grounding. See examples and annotations in the comparison thread benchmark thread.

Chandra OCR open‑sources full‑layout extraction with image and diagram captioning

Chandra OCR was released as open source with end‑to‑end page layout data, image/diagram extraction, and captioning—features useful for table‑ and math‑heavy PDFs where plain text OCR falls short. For engineers, this adds another permissive option to assemble multimodal document pipelines without vendor lock‑in release note.

DeepSeek‑AI crosses 100K Hugging Face followers, signaling rapid OSS OCR adoption

Hugging Face stats show deepseek‑ai’s follower count jumped from under 5K at the start of the year to 100K+ as of Oct 21, underscoring fast community uptake of its open OCR stack and related models. For AI teams weighing open OCR vs. closed APIs, the adoption curve is a practical proxy for support, forks, and ecosystem momentum HF milestone.

🎬 Video gen momentum: Veo 3.1 and API coverage

A sizable slice of today’s tweets are creative/video workflows. Focused on model capability and platform distribution, not the Atlas feature.

Together AI adds 40+ video/image models (incl. Sora 2, Veo 3) behind one API and billing

Together AI expanded its model library to include 20+ video models (Sora 2, Veo 3, PixVerse V5, Seedance) and 15+ image models, all accessible via the same SDKs, auth, and billing used for text, enabling unified multimodal workflows without provider juggling platform rollout, with details in the vendor announcement Together blog. This consolidation is aimed at production teams that want one endpoint for text→image→video pipelines; example use cases highlighted include gaming asset generation, e‑commerce product videos, and EdTech visual explainers use cases.

Veo 3.1 holds dual #1 on Video Arena and breaks 1400; new controls and pricing detailed

Google DeepMind’s Veo 3.1 now ranks first on both Text‑to‑Video and Image‑to‑Video leaderboards and is the first model to break the 1400 score on Video Arena, with pricing listed at ~$0.15 per second and support for up to three reference images, clip extension, and start/end frame transitions arena rank + features. This follows our earlier coverage of its leaderboard surge Arena top; additional confirmation and momentum came via DeepMind’s social feed DeepMind mention, and creators can try it in Google’s Veo Studio Veo Studio.

Practitioners share high‑control Veo 3.1 recipes for reference edits and frame‑bound shots

Hands‑on demos show Veo 3.1 reliably handling precise edit instructions—adding or removing objects while reconstructing backgrounds—alongside start/end frame guidance for clean transitions edit control. A Freepik workflow built on Veo 3.1 illustrates practical prompting with image references, character consistency, and scene setup directly in a production UI prompt walkthrough.

Reve quietly rolls out video generation beta with 1080p and sound

A product sighting shows Reve’s new video generation beta offering 1080p output, optional audio, and promising character consistency controls—signaling another entrant pushing toward production‑grade video gen product sighting.