Anthropic tops enterprise LLM APIs at 32% share – OpenAI slips to 25%

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Enterprise LLM buyers are voting with traffic: a new market snapshot has Anthropic leading API usage at 32%, ahead of OpenAI at 25%. With H1’25 LLM API spend around $8.4B, this isn’t a vibes poll; it’s production workloads drifting toward whoever delivers reliability and control. The momentum shows up most where it counts day‑to‑day: developer tooling and code generation.

On code‑gen, Anthropic reportedly holds 42% share vs OpenAI’s 21%, a spread that mirrors perceived gains in reasoning quality and tighter enterprise knobs. Google sits near 20% and Meta around 9%, making this a four‑plus‑player market, not a winner‑take‑all duopoly. Switching remains rare—only 11% of buyers changed primary vendors—while 66% expanded within incumbents, so growth is coming from deeper usage and multi‑vendor routing, not churn. And when renewals hit, buyers weigh cost per token, data residency, auditability, and SOC attestations at least as much as raw benchmarks, which is why uptime and incident response now outrank leaderboard glory.

If you’re mapping 2025 bets, note AWS’s Rainier buildout backing Anthropic’s march past 1M Trainium chips by late 2025, a compute cushion that makes today’s usage shift look less like a blip and more like posture.

Feature Spotlight

Feature: Anthropic pulls ahead in enterprise LLM APIs

Enterprise LLM API market shifts: Anthropic ~32% share vs OpenAI ~25% in H1’25, with stronger code‑gen usage (42% vs 21%); $8.4B H1 spend, low switching (~11%), multi‑vendor routing rising.

Multiple threads report a measurable share shift in enterprise LLM API usage: Anthropic leads on usage and developer code‑gen while OpenAI slips; spend patterns show low vendor switching and rising multi‑vendor routing.

Jump to Feature: Anthropic pulls ahead in enterprise LLM APIs topicsTable of Contents

📊 Feature: Anthropic pulls ahead in enterprise LLM APIs

Multiple threads report a measurable share shift in enterprise LLM API usage: Anthropic leads on usage and developer code‑gen while OpenAI slips; spend patterns show low vendor switching and rising multi‑vendor routing.

Anthropic overtakes OpenAI in enterprise LLM API usage

A new market snapshot shows Anthropic leads enterprise LLM API usage at ~32% while OpenAI has ~25%, signaling a real vendor shift as workloads scale to production market snapshot.

- Spend and growth: H1’25 enterprise LLM API spend reached ~$8.4B, with rising inference volumes prioritizing uptime, latency, and incident response over raw benchmarks market snapshot.

- Developer split: For code‑generation, Anthropic holds ~42% vs OpenAI ~21%, reflecting perceived gains in reasoning/coding and tighter enterprise controls market snapshot.

- Market structure: Google near ~20% and Meta ~9%, confirming a four‑plus‑player field rather than a duopoly market snapshot.

- Switching behavior: Only ~11% switched vendors, while ~66% expanded within incumbents; multi‑vendor routing is up to reduce lock‑in and match tasks to best models market snapshot.

- Buyer criteria: Cost per token, data residency, auditability, SOC reports, and fine‑grained controls weigh as much as model quality in renewals market snapshot.

🏗️ Hyperscale clusters, capex and distributed inference

Heavy infra coverage today: AWS ‘Project Rainier’ details, OpenAI’s Abilene/Stargate specs and compute math, a 100 GW distributed inference notion from Tesla fleets, plus Q3 capex tallies. Excludes the enterprise share shift (featured).

AWS Rainier details: 64‑Trainium UltraServers, EFA across sites, 0.15 L/kWh water use; Anthropic scaling past 1M chips

AWS shared deeper specs on Project Rainier: each Trainium2 UltraServer links 64 chips via NeuronLink, nodes are stitched across buildings with EFA, and reported water usage is ~0.15 L/kWh alongside 100% renewable power claims technical breakdown. The cluster already comprises ~500k Trainium2 and will support Anthropic’s push to exceed 1M chips by Dec‑2025, delivering >5× the compute of Anthropic’s prior setup Amazon blog post.

Following AWS Rainier, which established the scale and activation, today’s details sharpen the server topology (HBM3, matrix/tensor optimization), latency domain (intra‑node vs EFA), and sustainability metrics—useful for capacity planning and performance modeling technical breakdown.

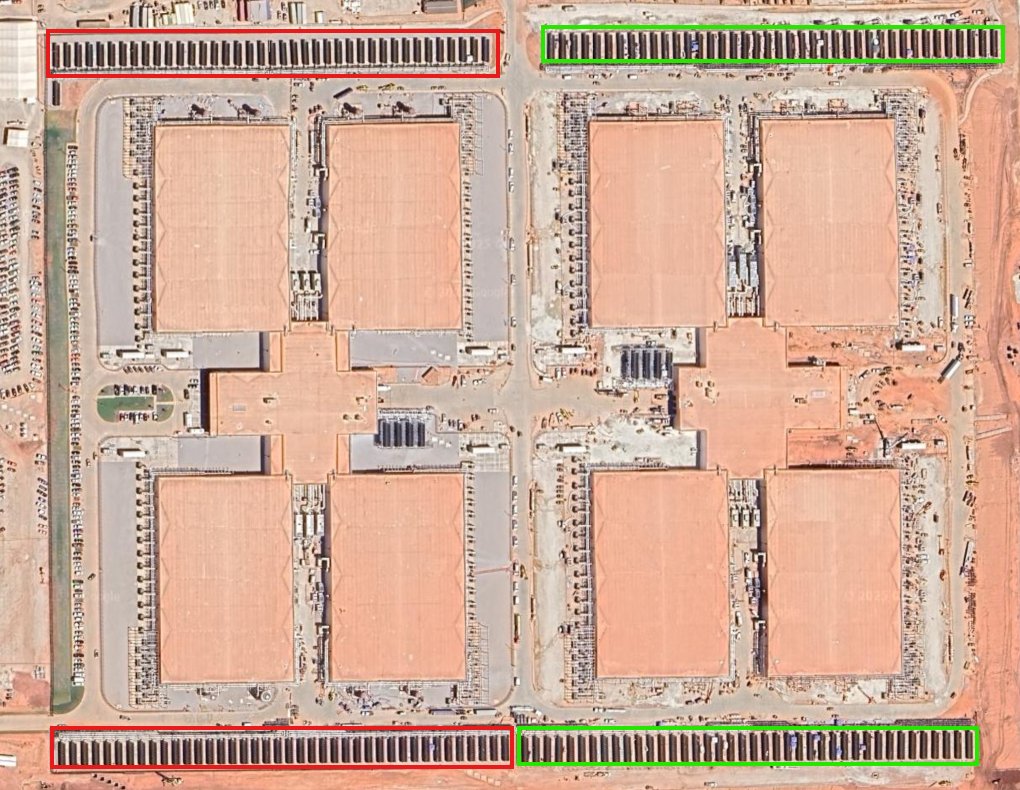

Inside OpenAI’s rumored ‘Stargate Abilene’: ~1.2 GW campus, ~56k GPUs per building, ~452k GPUs sitewide

New site analysis pegs OpenAI’s Abilene campus at roughly 1.2 GW with 4 halls per building and about 196–200 racks per hall, yielding ~56,448 GPUs per building and ~451,584 GPUs across 8 buildings at full build site analysis rack estimate. A follow‑up clarifies the 44,600 m² figure refers to each building, not the whole campus area correction. On‑site power plans cite 29 GE LM2500XPRESS turbines (~1.015 GW total) and ~80 chillers per building with direct‑to‑chip liquid cooling, while satellite photos suggest phase‑2 progress on additional buildings site analysis. One estimate claims GPT‑4‑class training could be done in “hours,” with a 100‑day run approaching ~1e28 FLOPs in FP16 compute claim.

For infra planners, the rack density, thermal approach, and on‑site generation imply aggressive performance headroom and tighter PUE/RAS trade‑offs; the compute math, if borne out, would materially compress model iteration cycles.

Hyperscaler Q3 AI capex surges: MSFT ~$35B, GOOG ~$24B, AMZN ~$34.2B, META ~$19.4B; 2025 likely >$400B

Quarterly filings and commentary show Q3 capex jumps tied to AI data centers: Microsoft ~$35B (+74% YoY), Google ~$24B (+83%), Amazon ~$34.2B (YTD ~$89.9B), Meta ~$19.4B (>2× YoY). Analysts project 2025 AI capex exceeding ~$400B; adding 2024 ($228.4B) and 2023 (~$148B) brings a three‑year total near ~$776B capex roundup. Fed Chair Powell contrasted this cycle with dot‑com, noting leaders have actual earnings and visible productivity investments (power grids, data centers) Powell remarks.

For AI orgs, this implies continued GPU/ASIC scarcity pockets, escalating grid constraints, and a widening gap between contracted vs speculative builds that affect pricing and availability.

Qualcomm and HUMAIN to deploy 200 MW AI200/AI250 racks in KSA from 2026 for high‑throughput inference

Qualcomm and HUMAIN plan a 200 MW rollout of AI200/AI250 rack solutions in Saudi Arabia starting 2026, positioning a fully optimized edge‑to‑cloud inference fabric. Claims include up to ~10× higher memory bandwidth at lower power vs Nvidia H100 (700 W TDP), and integration of HUMAIN’s ALLaM models to reduce TCO for local entities under Vision 2030 rack partnership.

If delivered at stated efficiency, this could broaden regional inference capacity and diversify away from single‑vendor GPU stacks, with implications for latency‑sensitive enterprise workloads and national AI sovereignty.

Musk proposes 100 GW distributed inference using idle Teslas; practicality hinges on networking and sandboxing

Elon Musk floated a fleet compute idea: 100M vehicles × ~1 kW each could yield ~100 GW for batched AI inference, leveraging existing power and cooling in cars Musk proposal YouTube video. Real‑world viability would favor latency‑tolerant tasks (embeddings, offline transcodes), strong isolation between tenant code and the driving stack, and caching/weight‑sharding to work around uplink bandwidth limits Musk proposal.

💻 Coding agents in practice: Codex, Cursor vs Droid, reliability

Hands‑on builder threads on Codex credit refunds/limits, experimental Windows sandboxing, stack performance investigations, and practical context engineering patterns (Droid vs Cursor).

OpenAI Codex refunds overcharges, resets limits after 2–5× metering bug

OpenAI reset Codex limits for all users and refunded all credit usage incurred from launch until 1pm PT Friday after discovering a bug that overcharged cloud tasks by ~2–5×; metering has now been re‑enabled, with cloud tasks consuming limits slightly faster than local due to VM overhead and heavier one‑shot work patterns refunds and limits. Following up on meters launch, the team listed steps taken: removed limits, fixed the bug, refunded credits, reset limits, and restored charging.

For cost planning, remember cloud tasks can process more per message but burn credits faster; local tasks remain cheaper on a per‑unit basis when the VM is not needed refunds and limits.

Codex team publishes stack performance deep‑dive to probe degradation reports

OpenAI engineers "dug into every nook and cranny" of the Codex stack and published findings to address widely reported performance degradations and variability across modes (local vs cloud, async prompting, auto‑context behavior) performance findings. External practitioners highlighted the write‑up as a useful investigation into reported regressions and behavior under load engineer read. Expect follow‑on tuning to focus on auto‑context reliability and cloud task prompting, where most divergence tends to appear.

Droid vs Cursor 2.0: Builders report steadier long runs; 7M‑token single session in Droid

A practitioner comparison finds Factory’s Droid maintains state across very long plans—shipping features end‑to‑end within a single ~7M‑token session—while Cursor 2.0 often requires new chats and context reloads as plans evolve, increasing token burn and error loops when guidelines are forgotten builder comparison. The workflow pairs structured AGENTS markdown files with just‑in‑time references, and the author shared prompts to generate those files for Claude and other platforms GitHub prompt. A live demo walk‑through accompanied the write‑up stream preview.

Takeaway for agent harnessing: centralized constraints/specs + persistent state beat ad‑hoc chat resets for multi‑day builds.

Amp replaces lossy /compact with Handoff to carry intent between threads

Sourcegraph’s Amp removed the compaction feature and introduced Handoff, which extracts relevant context and an explicit "next intent" to seed a fresh thread, avoiding lossy summaries that caused drift in long agent sessions product blog. Handoff drafts a tailored prompt and a curated file set, letting teams pivot cleanly between phases (e.g., planning → execution) without accumulating stale context—useful when combining large edits with deterministic follow‑ups.

Guidance for Codex users: Minimal MCP setups and short, focused chats work best

Based on internal evals, the Codex team recommends minimizing MCP tool sprawl and keeping conversations small and targeted to reduce error rates and improve determinism; complexity of setups tends to degrade outcomes over time eval guidance. For high‑throughput workflows, prefer single‑purpose sessions with only the tools essential to the task, rather than large, persistent agents with many Skills attached.

Field notes: Codex vs Claude Code trade‑offs on precision, over‑edits, and auto‑context

Practitioners report Codex excels at precise small/medium coding tasks but can over‑engineer, while Claude Code tends to write cleaner code and handle larger tasks yet sometimes edits too many files for minor changes; Codex auto‑context is called more reliable in practice developer notes. Builders also note Codex’s larger effective window and better single‑pass throughput can reduce the need for workaround scaffolding in long tasks developer comment. Use parallel agent runs and merge diffs when tasks span both precision refactors and broader rewrites.

📚 Research roundup: physics tests, diffusion canon, long‑ctx attention

Rich paper day: physics‑aware video evaluation, a 470‑page diffusion synthesis, hybrid linear attention for 1M context, KANs guide, small model factuality via protocol, self‑evolving agent toolboxes, citation‑hallucination, and LLM‑aided investing.

470‑page ‘Principles of Diffusion Models’ unifies score, variational and flow views

Stanford, OpenAI and Sony AI released a 470‑page synthesis that ties diffusion’s three lenses (variational, score‑based, flow‑matching) into a single framework, surveys training targets (noise/data/score/velocity), and catalogs accelerations from ODE solvers to distillation and flow‑map generators paper release, ArXiv paper. The compendium is notable for practical guidance on guidance methods, long‑step samplers and distilling multi‑step teachers into few‑step students—useful for teams shipping image/video generators.

Generative video looks real but breaks physics, says Physics‑IQ

A new benchmark from Google DeepMind shows state‑of‑the‑art video generators can produce photorealistic clips while failing basic physical reasoning across solid mechanics, fluids, optics, thermodynamics and magnetism DeepMind paper. Models like Sora are hard to distinguish from real footage yet score low on constrained motion‑consistency metrics, echoing Meta’s IntPhys‑2 results where model accuracy hovered near chance in complex scenes Meta physics benchmark. Earlier work finds physics understanding emerges more from learning on natural videos than from text alone, reinforcing the gap between visual polish and causal fidelity Video pretraining study.

Following up on video reasoning, which found reasoning failures on chain tasks, Physics‑IQ provides hard, measurable tests that separate realism from world‑modeling.

Kimi Linear cuts KV cache by ~75% and boosts 1M‑token decode up to 6×

Kimi’s technical report introduces a hybrid attention that mixes Kimi Delta Attention (per‑channel forget gates with diagonal‑plus‑low‑rank transitions) and periodic full‑attention layers to preserve global mixing, trimming KV cache by up to 75% and achieving up to 6× faster decoding at 1M‑token context under comparable quality technical report. Kernel changes (chunked updates, division‑free forms) enable stable long‑context throughput without exotic serving changes.

ALITA‑G turns successful runs into reusable MCP tools to specialize agents

ALITA‑G harvests successful trajectories into parameterized Model Context Protocol tools with docs, curates them into a sandboxed toolbox, and uses retrieval to plan and execute—improving pass@1 to 83.03% on GAIA validation while cutting mean tokens ~15% versus a strong baseline paper page. Gains saturate after ~3 harvest rounds as duplicates accrue, but token and accuracy improvements persist across GAIA, PathVQA and HLE.

Small 3.8B model nears GPT‑4o factuality via a lightweight reasoning protocol

Humains‑Junior (3.8B) reports FACTS Grounding accuracy within ±5 percentage points of GPT‑4o by adding a short, structured pre‑answer check that lists claims and validates them against the provided document, paired with fine‑tuning to enforce the protocol paper abstract. The approach increases tokens by ~3–5% and improves determinism without heavy “thinking” modes, yielding roughly 19× lower managed API cost for grounded QA.

Citation hallucinations drop once papers exceed ~1,000 citations

A bibliographic study finds GPT‑4.1 produces far fewer fake references when asked for real papers in CS topics if the target has ≥~1,000 citations—suggesting repeated exposure drives near‑verbatim recall—while low‑citation areas see elevated fabrications, especially under strict JSON output with no “I don’t know” option paper abstract. The work frames citation count as a proxy for training redundancy.

Diffusion canon highlights fast solvers and student distillation

Beyond unifying theory, the 470‑page diffusion survey catalogs production‑relevant tricks—classifier‑free guidance choices, ODE solvers that keep quality with fewer steps, and distillation that collapses long sampling into a handful of iterations. It also introduces flow‑map models that jump across time directly, a promising path for realtime video/image synthesis paper release, ArXiv paper.

KANs, explained: learnable 1D edges and practical basis choices

A 63‑page practitioner’s guide details Kolmogorov‑Arnold Networks, which swap fixed activations for learnable 1D functions on edges then sum, improving locality, interpretability and sometimes parameter efficiency versus MLPs paper summary, ArXiv paper. It maps basis trade‑offs (splines, Fourier, RBFs, wavelets), adds physics‑informed losses and adaptive sampling for PDEs, and reports parity or gains with fewer parameters—at the cost of higher per‑step compute.

LLM‑aware momentum portfolio earns higher risk‑adjusted returns

Pairing S&P 500 returns with firm‑specific news, a simple ChatGPT‑based relevancy score reranks momentum picks and tilts weights toward high‑confidence names, raising Sharpe to 1.06 (from 0.79), lowering drawdowns and keeping turnover feasible at ~2 bps trading costs paper abstract. Monthly rebalances with one day of news and a basic prompt worked best, implying LLMs can refine classic factors rather than replace them outright.

🛡️ Agent safety: Skills injection risks and AGI verification talk

Security threads focus on prompt‑injection via Agent Skills and governance guardrails. Includes a false‑positive example from aggressive safety filtering. Excludes business share shifts (featured).

Hidden SKILL.md lines can exfiltrate data after one approval in Claude Skills

Security researchers show that one “don’t ask again” approval (e.g., Python) lets a single hidden instruction in SKILL.md run a ‘backup’ script to quietly exfiltrate files; a Claude Web variant hides a password in a clickable link, both riding the Skills design where every line is treated as an instruction paper summary.

Following up on Claude Skills, which highlighted new Skill workflows, this result argues for signed skills, least‑privilege per‑skill egress (network/file scopes), human‑readable manifests with diffs, and explicit re‑consent on any added executor or outbound call.

OpenAI–Microsoft pact adds independent panel to verify any AGI declaration

Terms surfaced today note that any formal AGI declaration would be vetted by an independent expert panel, adding an external check before governance triggers or escalations in capability deployment agreement note. For AI leaders, this signals rising expectations that AGI claims be auditable beyond a single lab’s self‑assessment.

High-sensitivity filter paused benign blood‑mass calculation in Claude

Claude Sonnet 4.5’s biofilter paused a harmless density×volume calculation (“how many grams would 0.04 L of human blood weigh”), offering a fallback to Sonnet 4—an instructive false positive that illustrates recall/precision trade‑offs in high‑sensitivity safety modes filter example.

For production agents, consider tiered safety (graduated blocks with rationale), user‑visible overrides, and logged adjudications to tune thresholds without halting legitimate tasks.

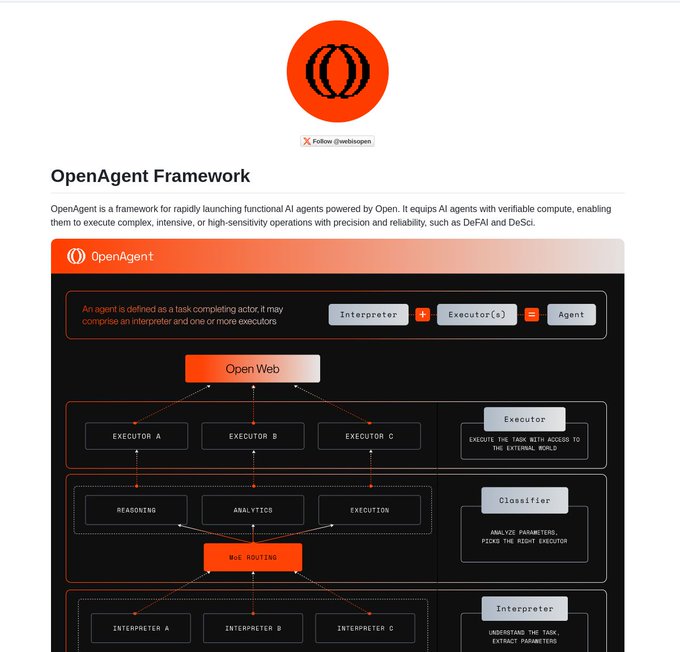

LangChain’s OpenAgent pushes verifiable compute for on‑chain AI agents

OpenAgent formalizes agents as interpreters plus executors with MoE routing and compute verification aimed at DeFAI/DeSci, bringing attestable execution and clearer task boundaries to agent deployments that interact with the open web framework brief, and GitHub repo.

Paired with capability scoping and audit trails, verifiable compute can mitigate Skill‑level prompt injections by proving what ran, where, and under which permissions.

🧪 Models now local: Qwen3‑VL everywhere, Emu3.5, OCR‑VL

Mostly availability updates: Qwen3‑VL lands across llama.cpp/LM Studio/Unsloth; BAAI’s Emu3.5 ‘world learner’ details; PaddleOCR‑VL parsing beta with quotas. No duplicate coverage of enterprise share (featured).

Qwen3‑VL lands on llama.cpp with GGUF weights up to 235B, running on CPU/CUDA/Metal/Vulkan

Alibaba’s vision‑language stack is now truly local: Qwen3‑VL ships official GGUF weights from 2B through 235B and runs across llama.cpp backends (CPU, CUDA, Metal, Vulkan) for laptops and workstations llama.cpp release. Following up on Ollama update which improved local Qwen3‑VL performance, this expands distribution to the leanest C++ runtime so teams can prototype or ship offline VLMs without Python stacks.

BAAI’s Emu3.5 details: native multimodal I/O, RL post‑training, DiDA parallel decoding ~20× faster

BAAI outlined Emu3.5’s “world learner” design: unified multimodal inputs/outputs, large‑scale RL post‑training, and Discrete Diffusion Adaptation (DiDA) that turns sequential decoding into bidirectional parallel prediction for ≈20× faster inference without sacrificing quality project page, with models and docs on HF Hugging Face models.

For teams building agents with video or text‑rich images, Emu3.5’s native multimodality and faster generation reduce both latency and serving costs.

PaddleOCR‑VL public beta: 109 languages and structured Markdown/JSON parsing, with fair‑use quotas

Baidu’s PaddleOCR‑VL opened public beta for multimodal document parsing that recognizes printed/handwritten text, tables, formulas and charts, then emits structured Markdown/JSON; it supports 109 languages and enforces quotas of up to 200 pages (individuals) and 1,000 pages (enterprise) public beta announcement. This is a practical drop‑in for invoice/contract intake and knowledge‑base ingestion pipelines.

Unsloth enables local fine‑tune and RL for Qwen3‑VL with free notebooks

Unsloth added Qwen3‑VL support for local fine‑tuning and RL via ready‑to‑run notebooks, making it feasible to adapt VLMs on personal hardware before scaling to bigger rigs Unsloth notebooks. For AI engineers, this closes the loop: quick local adaptation, then deploy via llama.cpp or LM Studio.

LM Studio adds Qwen3‑VL models for one‑click local inference

LM Studio now lists Qwen3‑VL variants for local, GUI‑driven inference, lowering the barrier for PMs and analysts who prefer app workflows over CLIs LM Studio listing. This pairs well with the new llama.cpp GGUF release for users who switch between UI and headless setups.

📈 Evals: remote‑work automation, model index, prompt probes

New external and community evals: Scale’s Remote Labor Index shows minimal job automation so far, a public multi‑eval index puts Grok‑2 near the bottom, and a revealing creative prompt comparison circulates.

Scale’s Remote Labor Index finds only ~2.5% job automation by top agent

Scale AI × AI Risks’ new Remote Labor Index shows today’s best autonomous agents barely complete real-world remote gigs end‑to‑end: Manus automates 2.50%, with Claude Sonnet 4.5 at 2.08%, GPT‑5 (2025‑08‑07) 1.67%, a ChatGPT agent 1.25%, and Gemini 2.5 Pro 0.83% RLI benchmark.

This suggests near-term progress will be incremental rather than a step-function leap; for AI teams, the takeaway is to deploy agents as copilots with robust human checkpoints for quality and compliance while tracking steady gains across evaluation rounds.

Artificial Analysis Index v3.0 ranks Grok‑2 near the bottom; Qwen/DeepSeek tiers lead

The Artificial Analysis Intelligence Index v3.0 (10 combined evals) places Grok‑2 at ~15, near the bottom of a broad field where top clusters include MiniMax‑M2 and gpt‑oss‑120B (~61), followed by several Qwen3 and DeepSeek variants in the mid‑50s Index v3.0 chart.

Composite boards like this are helpful for directional comparisons, but teams should still validate on task‑specific suites and production traces before routing or procurement decisions.

A single ‘startle me’ prompt cleanly separates model strengths

A circulating creative probe—“Write a paragraph that startles me with its brilliance… then explain what you did”—exposes distinct model signatures: Claude excels at polished prose, GPT‑5 Pro showcases clever “intellectual tricks,” while Kimi K2 fares well and some others (e.g., DeepSeek, Qwen3 Max) lag on this task Prompt test, Follow‑up results.

Although anecdotal, this kind of prompt can rapidly smoke‑test breadth (rhetoric, structure, self‑explanation) and inform lightweight routing or style selection in agent stacks.

🧩 Agent frameworks and interoperability

Launches/patterns for building and wiring agents: verifiable compute agents on Open network, a deep‑agent CLI with LangGraph debugging, localized docs, and ‘docs as Skills’ patterns. Excludes Codex/Droid IDE news (covered in tooling).

Research: One hidden SKILL.md line can exfiltrate data after a single approval

Following up on Skills patterns popularization, a new security paper shows Anthropic Agent Skills are vulnerable to trivially simple prompt injections: a single hidden line in SKILL.md can run scripts or leak files after the user clicks “don’t ask again” once; demos cover Claude Code and Claude Web variants security paper.

- Risk compounds via long, script‑calling Skills and third‑party marketplaces; mitigations should include least‑privilege execution, per‑action re‑auth, and Skill linting.

OpenAgent ships verifiable compute agents on the Open network

LangChain’s community released OpenAgent, a framework to deploy verifiable AI agents on the Open network with a focus on DeFAI/DeSci workloads, compute verification for critical ops, and rapid agent deployment framework overview.

- Architecture separates Interpreter, Classifier, and Executors with MoE routing; repo and docs outline task parsing, tool selection, and execution flow GitHub repo.

ALITA‑G auto‑harvests MCP tools, reaching 83.03% GAIA with fewer tokens

A Princeton + Tsinghua paper presents ALITA‑G, a self‑evolving agent that turns successful runs into reusable MCP tools, abstracts them, and curates a domain toolbox; it scores 83.03% pass@1 on GAIA while using ~15% fewer tokens than a strong baseline paper summary.

- The stack couples a task analyzer, MCP retriever, and executor, with gains saturating after ~3 harvest rounds as duplicates emerge.

Langrepl CLI brings deep agents and LangGraph Studio debugging to the terminal

LangChain community introduced Langrepl, an interactive terminal CLI for building “deep agents” with persistent conversations and visual debugging via LangGraph Studio, enabling faster iteration and traceable agent runs feature summary.

LangChain V1.0 docs launch in Korean for LangGraph and LangSmith

LangChain’s V1.0 ecosystem now has full Korean documentation across the Framework, LangGraph (durable execution, orchestration), and LangSmith (observability, eval, deployment), expanding agent engineering access for Korean developers docs launch, with site live here Korean docs.

LangChain’s DeepAgents get a mini launch week of new capabilities

LangChain highlighted a mini launch week for DeepAgents, including support to plug any backend as a virtual filesystem and other quality‑of‑life upgrades that broaden how agents reason and act over heterogeneous data/services feature roundup.

Pattern: ‘Docs as Skills’ plus portable ‘Memory Bank’ to cut context bloat

Builders are packaging product docs/config as Skills with progressive disclosure, then attaching a curated “Memory Bank” (portable Skill sets) to specific agents to reduce retrieval errors and context bloat while keeping capabilities modular pattern writeup.

💼 AI economics and ownership (non‑feature)

Ownership math and macro commentary: inferred Amazon/Google stakes in Anthropic, OpenAI revenue trajectory, and Powell’s ‘not a bubble’ stance. Excludes the Anthropic vs OpenAI market‑share story (featured).

Sam Altman signals OpenAI revenue >$13B in 2025 and targets $100B‑scale by 2027

OpenAI CEO Sam Altman said the company makes "well more" than $13B this year and pushed back on a 2028–29 $100B revenue scenario with "How about 2027?"—telegraphing a steeper near‑term trajectory revenue comments. Independent projections circulating alongside the capex boom also show sharply rising OpenAI revenue mix across ChatGPT, API, agents and new products through decade’s end revenue charts.

Amazon and Google stakes in Anthropic inferred at ~7.8% and ~8.8%

Public filings and reported unrealized gains point to Amazon owning roughly 7.8% of Anthropic and Google up to 8.8%, implying a combined >$30B position at current marks ownership analysis. The math backs out ownership from Amazon’s $9.5B non‑operating gain and Google’s reported gains amid Anthropic’s valuation jump, useful for benchmarking control and future consolidation dynamics.

Big Tech’s AI capex on pace for >$400B in 2025; ~$(~)776B across 2023–2025

Q3 disclosures put 2025 AI‑driven capex on track to exceed $400B across Microsoft (~$35B in Q3), Amazon (~$34.2B), Google (~$24B) and Meta (~$19.4B), summing to roughly $776B over 2023–2025 capex roundup. The scale reinforces financing and depreciation dynamics we noted earlier, following up on capex guide with quarter‑specific tallies that tighten near‑term supply assumptions for GPUs, sites and power.

Fed Chair Powell: AI spending is not a dot‑com‑style bubble; leaders have earnings

Jerome Powell framed the AI capex surge as a productivity investment rather than a speculative bubble, noting today’s tech leaders "actually have earnings" and visible real‑economy impacts from data centers to power grids Powell remarks, Fortune article. For AI leaders, this strengthens the macro case for sustained infrastructure outlays and lowers perceived policy risk premia.

🧭 Strategy: open‑weights, provenance and geopolitics

Debate centers on ‘open source’ vs ‘open weights’ and model provenance: Dario’s stance, claims of US models fine‑tuned on Chinese bases, and provider identity sleuthing. Distinct from enterprise share (featured).

Cursor Composer 1 likely DeepSeek‑derived, adding fresh fuel to U.S. agent provenance concerns

New tests suggest Cursor’s Composer 1 uses a tokenizer and behavior consistent with a DeepSeek variant, reinforcing broader chatter that several recent U.S. coding agents are fine‑tunes of Chinese open‑weights bases tokenizer evidence, roundup claim. This adds concrete signals for teams doing model provenance reviews, following up on provenance scrutiny that put GLM/DeepSeek lineage under a spotlight.

Anthropic’s Dario: ‘Open source’ is a red herring; model quality and cloud inference win

Dario Amodei argues that calling large models “open source” is misleading because weights aren’t inspectable like code; what matters is whether the model is good and can be efficiently hosted for inference. He downplays competitive significance of openness in DeepSeek‑style releases and stresses the real costs in serving large models, routing, and making them fast at inference interview clip, with full remarks in YouTube interview.

State of OSS AI 2025: many ‘open’ US models fine‑tune Chinese bases; auditing remains opaque

A practitioner snapshot claims most new U.S. ‘open’ releases are fine‑tunes on Chinese base LLMs (e.g., DeepSeek), with unknown training data and no practical way to audit or “decompile” weights to verify provenance or hidden behaviors. For AI buyers, that raises governance and supply‑chain questions around data jurisdictions and compliance state-of-oss thread.

‘cedar’ instances respond identically; tester claims it’s an OpenAI model behind the name

A community probe reports three ‘cedar’ endpoints emitted the same outputs and declares “this is an OpenAI model,” prompting fresh debate about provider identity transparency and undisclosed backend routing. While single‑tester evidence is thin, it’s a reminder to validate vendors and pin versions in regulated or safety‑critical settings backend identity claim.

🎙️ Voice agents and avatar UX

Early real‑world voice agent outcomes and avatar coaching modes: a consumer service negotiation via phone and Copilot Portraits expanding to interview/study/public‑speaking. No overlap with coding/tooling.

Copilot Portraits adds interview prep, study aid, public speaking and future‑self modes

Microsoft is broadening Copilot Portraits beyond chatty avatars to targeted coaching and practice modes that simulate real interactions, with experiments visible in Copilot Labs and an expanded roadmap of new scenarios feature preview, Feature article.

- Modes include future‑self conversations, job interview practice, study sessions, and public‑speaking rehearsal—positioning avatars as structured coaches rather than generic companions feature preview, Feature article.

AI voice agent negotiates Comcast discount and faster service

A user reports an AI voice agent successfully called Comcast, secured a lower bill, and upgraded internet speed—an early, real‑world proof that end‑to‑end phone/IVR automation can deliver consumer value at the edge of customer‑service workflows consumer result. The product credited was PayWithSublime, underscoring a rising class of turnkey voice negotiators for billing and retention lines product used.

🎬 Creative AI: charts and pipelines

Light but notable creative news: an AI artist charts on Billboard radio and ComfyUI pipelines used in production post work. Mostly media/culture; no model training details here.

AI artist Xania Monet debuts on Billboard Adult R&B airplay; reports of ~$3M record deal

Billboard confirms that Xania Monet, an AI-driven act created by poet Telisha Jones with Suno, has entered the Adult R&B Airplay chart—the first known AI-based artist to land on a major U.S. radio chart Billboard article, with the track’s station adds converting social traction into spins chart milestone. Industry watchers also report a ~$3M record deal and tens of millions of online views, suggesting labels will treat AI-human hybrid acts as standard commercial product across spins, sales, and streams market summary, view counts.

For AI leaders, this is a signal that distribution and rights workflows are adapting to AI-origin content, pushing practical questions on credits, licensing, and royalty splits from purely online platforms to terrestrial radio and chart systems.

ComfyUI workflows surface in Hollywood post; training‑free face swap tools enter the toolkit

A community deep dive highlights how Hollywood productions are using ComfyUI to fix shots in post, with a public walkthrough scheduled to share the exact node graphs and practices in use on set and in finishing live session brief. In parallel, creators showcase Higgsfield Face Swap for one‑click, training‑free facial replacement in video, framed as solving long‑standing rotoscoping and editing pain points face swap claim, feature overview. The upshot: node‑based diffusion pipelines plus swap tools are maturing from hobbyist experiments into practical, repeatable steps in production post.

Real‑time ‘soundtrack to anything’: Gemini vision + Lyria RT composes music from live video

A HackTX project wires Gemini 2.0 vision with Lyria Real‑Time to compose music live from a shared screen or camera feed, turning frame‑level “vibes” into continuously generated audio project highlight. The team open‑sourced the implementation (Next.js, FastAPI, ffmpeg, websockets) and posted a demo run, offering a blueprint for event scoring, stream overlays, or lightweight post pipelines YouTube demo, GitHub repo.

For creative engineering, this shows viable latency and control for generative scoring without offline renders, and a clean path to productizing multimodal inference in web stacks.