ChatGPT Agent Mode opens to 3 paid tiers – 4.5× faster on Sudoku

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

OpenAI just flipped on Agent Mode (Atlas) inside ChatGPT for Plus, Pro, and Business, turning the product from chat window into click-and-act assistant. It matters because Atlas works directly in the browser—researching, planning, and executing steps—without the glue code most agents demand. Early tests are mixed: one study finds it solves medium Sudoku about 4.5× faster than a human baseline, but it stumbles on reflex-timing games like Chrome’s T‑Rex Runner and Flappy Bird. Windows support is missing in this preview, and the rollout follows a brief pause on Atlas extensions for security.

Hands-on users say the basics—navigating, reading, simple clicks—feel solid, but Atlas often stalls when composing or formatting inside DOM‑heavy web apps. The new “thinking” view doesn’t help much either; auto‑scroll keeps yanking you to the bottom, making the reasoning trace hard to audit mid-run. Power users comparing it to Perplexity’s Comet argue there’s “no reason to switch” yet unless Atlas proves better at real tasks, especially content creation and edit flows.

If you’re eyeing desktop agents, note the parallel track: OpenAI’s Codex CLI added an experimental Windows sandbox this week, hinting at tighter guardrails coming to agent operations even as Atlas’s own Windows build sits out this preview.

Feature Spotlight

Feature: ChatGPT Agent Mode goes hands‑on

ChatGPT Agent Mode (Atlas) enters preview for Plus/Pro/Business, enabling agents to research, plan and act in‑browser—early evals show strengths in logic tasks but gaps in real‑time control; broad user feedback begins.

Cross‑account focus today: OpenAI’s Agent Mode (Atlas) opens preview to Plus/Pro/Business. Threads include real usage, UX feedback, and an early web‑games eval; strong Sudoku, weak reflex timing. This section owns all Atlas items.

Jump to Feature: ChatGPT Agent Mode goes hands‑on topicsTable of Contents

🧭 Feature: ChatGPT Agent Mode goes hands‑on

Cross‑account focus today: OpenAI’s Agent Mode (Atlas) opens preview to Plus/Pro/Business. Threads include real usage, UX feedback, and an early web‑games eval; strong Sudoku, weak reflex timing. This section owns all Atlas items.

ChatGPT Agent Mode opens preview to Plus, Pro and Business users

OpenAI flipped on Agent Mode in ChatGPT (Atlas) for paid accounts, enabling agents to research, plan, and take actions while you browse OpenAI announcement. The rollout follows extensions pause that temporarily disabled Atlas browser extensions for security.

Hands-on prompts and early testing are already circulating among power users hands-on try.

Paper: Atlas aces medium Sudoku ~4.5× faster than humans but struggles on reflex timing games

A new study probes ChatGPT Atlas as a web-game agent: it cleanly solves medium Sudoku roughly 4.5× faster than a human baseline, but falters on real‑time tasks like Chrome’s T‑Rex Runner and Flappy Bird due to precise timing demands paper summary. The work maps strengths to rule‑based logic and weaknesses to long‑horizon control and physics.

- Strong: Sudoku and other logic puzzles (fast, consistent execution) paper summary

- Weak: Reflex timing, strict geometry, and open‑world task chains (frequent early crashes or stalls) paper summary

Early takes pit Atlas against Perplexity Comet; Windows support called out as missing

Practitioners testing ChatGPT Atlas Agent Mode compare it to Perplexity’s Comet, arguing there’s “no reason to switch” unless Atlas proves better—and noting it isn’t available for Windows yet in this preview comparative take. Trial prompts are circulating to kick the tires on real tasks hands-on try.

Power users say Atlas stalls on DOM-heavy creation tasks despite basic browsing working

Hands-on reports praise Atlas for simple clicks and navigation but flag that it “gets stuck” when adding, formatting, or creating content inside complex web apps (richer DOM composition) power-user feedback. Testers want stronger actions for editing and composing, not just reading and clicking.

Thinking trace auto‑scroll frustrates Atlas users trying to read reasoning history

Early UX feedback says the new “thinking” view auto‑scrolls to the bottom with each entry, making it hard to review the ongoing reasoning trace during a run UX note. Users are asking for better controls to pause or browse intermediate thoughts without fighting the scroll.

🏗️ AI infrastructure: campuses, energy and financing

Infra news dominated by OpenAI’s 1+ GW Stargate campus in Michigan plus Amazon’s Anthropic site switch‑on, Meta’s 1 GW solar deals, and debt‑financed capex. Excludes Atlas (covered in Feature).

Amazon switches on Indiana AI campus for Anthropic with >500k Trainium 2, targeting 2.2 GW buildout

Amazon’s New Carlisle, Indiana site dedicated to Anthropic is now live, running on 500,000+ Trainium 2 chips and planned to span 30 buildings with 2.2 GW when complete News summary, following up on Rainier site which flagged the massive chip count and power envelope. The project flips former cornfields into a multi‑billion‑dollar AI compute hub in roughly a year, reinforcing AWS’s push to vertically own AI training capacity for key partners.

OpenAI picks Michigan for >1 GW Stargate campus; “largest investment in state history”

OpenAI will build a gigawatt‑scale Stargate data center in Saline Township, with construction targeted for early 2026, 2,500 union construction jobs, ~450 permanent roles, and closed‑loop water usage (no Great Lakes draw) Local coverage. The company also outlined the multi‑site Stargate program in its post, underscoring a US‑based AI infrastructure buildout OpenAI blog.

Debt wave funds AI buildout: AI capex now ~25% of US IG bond supply; Meta $30B, Oracle $18B, RPLDCI $27B

Bank of America data shows borrowing to fund AI data centers exploded in September–October, with AI now ~25% of US investment‑grade bond supply; recent highlights include Meta $30B, Oracle $18B, and Related Digital $27B Debt chart. Meta is also prepping another $25B sale as it frontloads ASI‑oriented capex Bond sale plan. The financing mix concentrates cheapest capital with incumbents that can match long‑lived contracts to chip lifecycles.

Samsung and NVIDIA to build AI “mega‑factory” with 50k GPUs; cuLitho targets ~20× faster computational lithography

Samsung and NVIDIA will stand up a GPU‑powered AI factory to run fab digital twins, speed chip design, and accelerate optical proximity correction with cuLitho (claimed ~20× faster), while integrating Blackwell/Jetson Thor in factory robotics WSJ summary. Running core chipmaking workloads on GPUs instead of CPU clusters signals a structural compute shift inside semiconductor manufacturing itself.

TSMC clears ~$49B A14 fab in Taichung for 1.4 nm; mass production targeted 2H’28

TSMC received permits for its A14 fab and utility buildings in Taichung, aiming 1.4 nm with ~15% speed at iso‑power or ~25–30% lower power at iso‑perf versus 2 nm, risk runs in 2027, and volume in 2H’28 Local news summary. The node claims performance‑per‑watt gains critical to AI accelerator cost curves, while avoiding High‑NA EUV reduces tool risk.

UBS model projects NVIDIA unit mix through 4Q26 with GB200 ramp and Rubin CPX on the horizon

A UBS unit‑mix chart outlines NVIDIA shipments by accelerator family through late 2026, with GB200 and later B300/GB300 gaining share as H100/H200 fade UBS chart. The mix implies continued supply‑chain pressure shifting toward Blackwell‑class parts and previews when next‑gen Rubin CPX enters the curve.

Google Cloud ascends on AI; Alphabet guides $91–$93B 2025 capex and signals larger 2026 build

Alphabet’s cloud arm has flipped from laggard to growth driver on AI demand, with management guiding $91–$93B 2025 capex and warning of an even bigger 2026 build Reuters analysis. Google’s strategy leans on TPUs opened to external labs, signing nine of ten leading AI shops and anchoring future AI workload siting.

Meta stock falls 11% as 2025 AI capex lifted to $70–$72B; investors question near‑term ROI

Despite beating Q3 estimates, Meta’s shares dropped 11% after it raised 2025 capex to $70–$72B to pursue superintelligence, with even larger outlays signaled for 2026 CNBC summary. The reaction underscores market sensitivity to open‑ended AI spending plans absent concrete service monetization timelines.

Michigan officials detail Stargate jobs and environmental protections for OpenAI campus

Governor Whitmer’s office frames the Stargate project as the state’s biggest single investment, citing 2,500 union construction jobs, ~450 on‑site roles, a closed‑loop cooling system, and no Great Lakes water draw Local coverage. The permitting‑friendly footprint and community funds attached to the project illustrate how AI campuses are negotiating local acceptance.

RPO and depreciation math split AI capex into two cycles: near‑term contracted vs speculative builds

Financial Times analysis highlights diverging contract quality and unit economics: Microsoft’s ~$400B RPO with ~2‑year duration converts faster to cash, while others carry longer, lumpier exposure; rising D&A (e.g., to ~16.8% of revenue) tightens margin control as short‑lived AI gear fills data centers FT analysis. The result is a short‑cycle, backlog‑anchored boom alongside a longer‑cycle speculative build that assumes future demand.

🛠️ Builder tooling: coding agents and research assistants

Big day for agent/dev tools outside Atlas: Cline’s native tool calling and approvals, Claude Code’s installer + update, Opera’s deep research, Kimi CLI with MCP, and Vercel Agent investigations. Excludes Atlas Feature.

Codex CLI v0.53 adds experimental Windows filesystem/network sandbox

OpenAI’s Codex CLI v0.53 introduces a highly experimental Windows sandbox for workspace‑scoped writes and controlled networking, with an on‑request approval mode and known caveat for world‑writable folders sandbox brief, and GitHub discussion. This ships days after the prior improvements CLI update that focused on undo and stability.

Claude Code v2.0.31: Vertex web search, Shift+Tab on Windows, and MCP fixes

The 2.0.31 release updates Windows mode‑switch to Shift+Tab, adds Web Search on Vertex, honors VS Code .gitignore by default, and fixes subagents/MCP tool‑name conflicts, compaction errors, and plugin uninstall behavior changelog.

Small ergonomics like /compact reliability and duplicate‑summary fixes target long‑running agent threads changelog.

Kimi CLI tech preview: shell UI with command exec, Zsh integration, and MCP

Moonshot released KIMI CLI (technical preview), a terminal‑native coding agent featuring a shell‑like UI, direct command execution, seamless Zsh integration, MCP support, and an Agent Client Protocol for broader tooling feature brief.

This lowers friction for agent‑assisted coding and automations directly from the console feature brief.

Vercel Agent adds automated ‘Investigations’ for incidents; $100 credit for new users

Vercel Agent can now auto‑detect anomalies and run AI‑driven investigations that correlate telemetry and propose remediation steps, aiming to cut MTTR for production issues; new users get $100 in credits blog post, and Vercel blog. This pushes agentic ops beyond static alerts toward root‑cause analysis as a built‑in workflow.

FactoryAI Droid can import Claude agents directly from .claude/agents

Droid now supports “Import from Claude (.claude/agents)”, making Claude agents portable into Droid’s runtime without re‑authoring feature screenshot.

This shrinks setup time for teams standardizing on Claude Skills while experimenting with alternative orchestrators.

LangChain earns AWS Generative AI Competency; LangSmith now on AWS Marketplace

LangChain joined AWS’s Generative AI Competency program and listed LangSmith on AWS Marketplace, enabling agent‑engineering workflows (tracing, evals, deployments) with ISV Accelerate alignment for co‑sell partner update.

The move eases procurement and governance for teams standardizing on Bedrock, SageMaker, and AWS data services.

LlamaIndex ships native MCP search so coding agents can query its docs directly

LlamaIndex added a native MCP search endpoint for its documentation, letting MCP‑enabled coding agents call search tools directly (no custom glue), which simplifies agent builds that need API‑accurate context docs update. This pairs well with editor agents that plan, retrieve, and cite within the same run.

Ollama v0.12.8 boosts Qwen3‑VL and engine stability; desktop adds reasoning‑effort control

Ollama 0.12.8 improves Qwen3‑VL performance (FlashAttention default, better transparency handling) and engine prompt processing; Windows now ignores unsupported iGPUs release notes, and GitHub release. The desktop app also exposes per‑chat “reasoning effort” selection to trade speed vs depth desktop UI.

Opera rolls out Deep Research Agent in Neon for long‑form web analysis

Opera launched ODRA (Opera Deep Research Agent) in the Opera Neon browser, packaging sourcing, summarization, and deeper multi‑page analysis as a built‑in research assistant feature brief. This puts an agentic researcher directly into a mainstream browser without extensions, useful for competitive/market scans and literature reviews.

Perplexity launches ‘Patents’ agent for IP research, free in beta to subscribers

Perplexity rolled out a Patents agent that structures and searches IP corpora as a guided research workflow, available free in beta for subscribers feature recap. It’s a targeted assistant for prior‑art checks and technology landscaping inside a familiar research UX.

🧪 Models: ‘thinking’ Qwen and multimodal Nemotron on vLLM

Selective model updates relevant to builders: Qwen3 Max Thinking hits arenas and Nemotron Nano 2 VL arrives on vLLM. Runtime‑only updates (e.g., Ollama engine) live in Systems, not here.

Qwen3 Max Thinking appears in LM Arena, signaling release

The ‘thinking’ variant of Qwen3 Max surfaced in LMSYS Arena, with community posts indicating rollout is underway and broader evals imminent Arena update, release note, release hint. In context of Ollama Qwen3‑VL, which added the VL lineup locally, this brings Qwen’s reasoning‑first tier into public head‑to‑heads.

Expect rapid informal benchmarking across math, coding, and agent workflows as Arena datapoints accumulate; an earlier heads‑up also flagged “within hours” timing for the drop release tease.

vLLM adds NVIDIA Nemotron Nano 2 VL (12B) for video and document intelligence

vLLM now serves NVIDIA’s Nemotron Nano 2 VL, a 12B hybrid Transformer–Mamba VLM with 128k context and Efficient Video Sampling to cut redundant tokens on long videos—aimed at faster, accurate multimodal reasoning over multi‑image docs and video integration post, vLLM blog. Builders get an enterprise‑ready path to high‑throughput VLM agents, with weights offered in BF16/FP8/FP4‑QAD formats and strong results on MMMU, MathVista, AI2D, and OCR‑heavy tasks as outlined in the release.

🧩 Interoperability: MCP workflows and agent imports

MCP‑centric moves to wire tools and agents together. Focus is on cross‑tool interoperability; implementation‑specific IDE features sit in Tooling.

LlamaIndex adds native MCP search endpoint for agent tooling

LlamaIndex rolled out a native MCP search endpoint so agent runtimes can call LlamaIndex-backed search tools directly, with docs live for builders MCP search docs. The move lowers glue-code and standardizes search access across MCP-compatible IDEs and orchestrators, following Replit templates that made MCP server deployment a one‑minute task.

This should simplify wiring retrieval into code assistants and research agents without bespoke adapters, and helps converge on MCP as the default interop surface for tool calls.

Claude Code v2.0.31 ships MCP subagent stability fixes

Anthropic’s Claude Code v2.0.31 fixes an MCP edge case (“Tool names must be unique”) that broke some subagent setups, alongside plugin uninstall and compaction fixes Changelog details. A weekly roundup also highlights resumable subagents and a new Plan subagent that can pair with MCP tools Weekly roundup.

For interop-heavy projects, the MCP bugfix unblocks multi-tool agent stacks and reduces brittle behavior when wiring several MCP servers into a single plan.

FactoryAI Droid can now import Claude agents directly

FactoryAI added “Import from Claude (.claude/agents)” to Droid, letting teams load Claude-built agents directly into Droid sessions for reuse and extension Import menu screenshot. This reduces migration friction between ecosystems and encourages agent portability across stacks.

Practically, this makes Claude-defined workflows first‑class citizens inside Droid without re-authoring skills or tools, speeding cross‑tool experimentation.

Kimi CLI tech preview lands with MCP and Agent Client Protocol support

Moonshot released a Kimi CLI technical preview that combines a shell‑like UI, command execution and Zsh integration with MCP server support and the Agent Client Protocol, positioning the CLI as a hub for interoperable tool use Kimi CLI announcement.

For agent builders, native MCP in a terminal workflow means faster local prototyping of toolchains, easier testing of server capabilities, and portability across agent runtimes that speak MCP.

CopilotKit + LangGraph demo predictive state updates with human-in-the-loop sync

CopilotKit showcased “predictive state updates,” wiring its real‑time UI to LangGraph agents so edits flow as structured workflows (agent rewrites → human approval → live sync) rather than linear text diffs Workflow post. This pattern makes collaborative agent edits feel native while keeping humans in control of final changes.

For engineers stitching tools, it’s a practical recipe for interop between an orchestrator (LangGraph), UI state, and agent tool calls—useful where MCP tools and non‑MCP services coexist.

💼 Enterprise adoption and partnerships

Signals of commercialization: Perplexity’s Getty deal for licensed images, LangChain’s AWS competency/Marketplace path, and Figma’s Weavy acquisition for AI media pipelines.

Amazon lights up Indiana AI campus for Anthropic with >500k Trainium 2 chips and 2.2 GW plan

Amazon has activated its largest AI data center for Anthropic in New Carlisle, Indiana—running over 500,000 Trainium 2 chips, scaling to 30 buildings and a planned 2.2 GW draw news brief, following up on initial build that outlined a 0.5–1.0M Trainium target this year.

The dedicated campus underscores deep, long‑term buyer–supplier alignment between a hyperscaler and a frontier lab, with material implications for model training capacity and cost curves.

Perplexity signs multi‑year Getty Images license to display credited photos in AI search

Perplexity struck a multi‑year licensing deal with Getty Images so its AI answers can show licensed editorial and creative photos with credits and links, a notable move toward “properly attributed consent.” Getty shares jumped roughly 45–50% on the news deal coverage.

The agreement formalizes image rights for AI search and follows Perplexity’s publisher rev‑share program; together they point to a paid‑content supply chain for AI results.

Figma buys Weavy and unveils ‘Figma Weave’ for AI media generation pipelines

Figma acquired Tel Aviv–based Weavy and introduced the ‘Figma Weave’ brand, bringing a node‑based canvas that chains multiple AI models to generate and edit images/video with granular layer‑level controls; Weavy will run standalone initially before deeper Figma integration deal summary.

The move positions Figma to own more of the AI media workflow (prompting, lighting, angles, compositing) inside a designer‑friendly canvas.

LangChain earns AWS Generative AI Competency; LangSmith now on AWS Marketplace

LangChain joined AWS’s Generative AI Competency program and listed LangSmith on AWS Marketplace, with ISV Accelerate eligibility and “Deployed on AWS” status—giving enterprises a vetted, procurement‑friendly path to agent engineering (tracing, evals, deployments) partner badge post.

Framework‑agnostic positioning means teams can adopt LangSmith with or without LangChain/langgraph, while plugging into Bedrock, SageMaker, S3, Opensearch, and more.

Modal partners with Datalab to scale Marker OCR pipelines with ~10× throughput on GPUs

Modal and Datalab teamed up so developers can deploy Marker + Surya OCR on GPUs in minutes, with cached weights and autoscaling that deliver roughly 10× higher parsing throughput; a hosted API backed by Modal is also available for maximum throughput partnership post, and the setup is documented in Modal’s guide Modal blog post.

This brings a deterministic, hallucination‑free document intelligence stack into an elastic, production‑ready runtime.

⚙️ Systems: sandboxes and local runtimes

Serving/runtime engineering updates: Codex’s Windows sandbox for safer agent runs and Ollama engine/desktop improvements for practical local workflows.

Codex CLI v0.53 adds experimental Windows sandbox for safer agent runs

OpenAI introduced an experimental filesystem and network sandbox on Windows that confines agent actions to a workspace with on‑request approvals, bringing tighter guardrails to Codex runs. Following up on v0.52 update that focused on stability, this release outlines a workspace‑write mode and flags, plus a key caveat: writes remain possible in directories where the Windows Everyone SID already has write permission. See setup flags and limitations in the docs sandbox flags, and the live docs and call for feedback via the GitHub page and discussion thread GitHub docs, testing call.

Ollama v0.12.8 boosts local Qwen3‑VL with FlashAttention and engine fixes

Ollama shipped v0.12.8 with Qwen3‑VL performance upgrades (FlashAttention enabled by default), faster prompt processing, and engine fixes such as better handling of transparent images and ignoring unsupported integrated GPUs on Windows. Release notes also mention app fixes like properly stopping a model before removal and correcting DeepSeek thinking toggles in the new desktop app release notes, with full details in the changelog GitHub release.

Northflank microVMs help scale secure production sandboxes during heavy launch traffic

cto.new reports moving to Northflank’s microVMs to scale secure agent sandboxes through a surge, citing per‑second billing, API‑driven provisioning, and thousands of daily container deployments without performance hits. The case study highlights a pragmatic path to isolate workloads and smooth spiky demand for agent workflows case study post, with deployment details in the provider write‑up Northflank blog.

Ollama desktop adds per‑chat “reasoning effort” and model picker controls

The new Ollama desktop UI exposes a per‑chat “reasoning effort” selector (e.g., Medium) alongside model choice, letting users trade latency and accuracy on the fly without leaving the conversation. This is a practical knob for local runs when switching between lightweight and more deliberate modes, captured in the updated toolbar screenshot desktop UI screenshot.

🛡️ Safety, abuse and rights

Policy and threat‑intel notes: music rights groups align on AI registration rules; separate post shows automated botnet detection in production. Sandbox tech lives in Systems.

ASCAP, BMI, SOCAN align on registering partly AI-made songs; pure‑AI works remain ineligible

North America’s three major PROs will now accept registrations of musical works with meaningful human authorship that incorporate AI-generated elements, while works created entirely by AI remain ineligible. The groups also reiterate that training on copyrighted music without authorization is infringement and point to ongoing lawsuits against AI firms Policy overview.

- Policies center human authorship as the basis for rights while creating a path to credit and payment when AI tools are used in production Policy overview.

Vercel BotID auto‑blocks sophisticated botnet in ~5 minutes after 500% traffic spike

Vercel says its BotID Deep Analysis detected a sudden 500% traffic surge from a coordinated bot network, identified ~40–45 spoofed browser profiles rotating through proxy nodes, and automatically re-verified and blocked the sessions within about five minutes—no customer action required Incident report, Vercel blog.

- The system flagged human-like fingerprints and behavior, then used correlation across browser profiles and proxies to classify the attack before enforcing blocks Vercel blog.

🧠 Training recipes: precision, adapters, and looping

Practitioner debates and papers on training and reasoning: FP16 vs BF16 for RL‑FT stability, zero‑latency fused adapters, and ByteDance LoopLM tradeoffs.

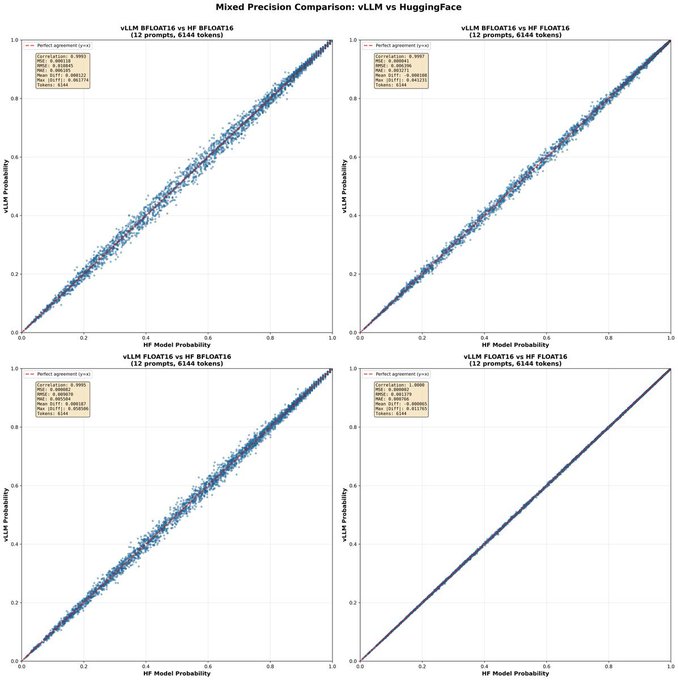

Engineers push FP16 over BF16 in RL fine‑tuning to cut train/infer divergence

Practitioners argue FP16’s 10 mantissa bits (vs BF16’s 7) reduce policy drift between training and inference in RL fine‑tuning by improving numerical agreement of kernels and absorbing rounding noise practitioner thread. The same thread later corrects the plot source while keeping the core claim intact, underscoring rising interest in precision choices for stability plot correction, with others signaling imminent switches to FP16 in production training loops engineer comment. See the linked paper thread cited in the discussion for additional context on precision trade‑offs ArXiv paper.

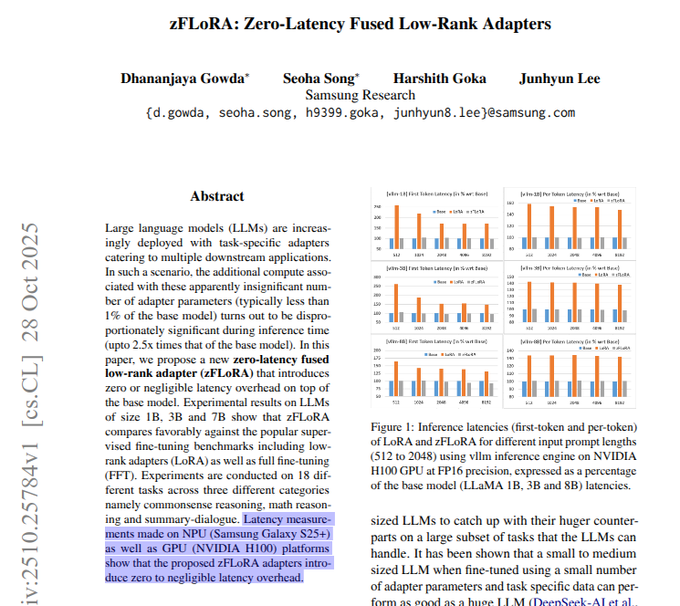

Samsung’s zFLoRA fuses adapters for zero‑latency fine‑tuning

Samsung Research introduces zFLoRA, a fused low‑rank adapter that merges adapter weights into base layers, effectively eliminating the extra matmuls and memory traffic that make classic LoRA slower (LoRA can add up to ~2.5× prefill and ~1.6× decode latency) paper abstract. Results across 18 tasks on 1B/3B/7B models show accuracy comparable to LoRA and near full FT, with latency measured on H100 GPUs and NPUs remaining close to base model runtime paper abstract.

ByteDance’s LoopLM Ouro trades recurrence for depth; small models gain, no extrapolation beyond T=4

Ouro 1.4B/2.6B repeatedly applies the same transformer stack for T recurrent steps (trained at T=4) over 7.7T tokens, learning multi‑hop tasks with fewer examples and adding a learned early‑exit gate for easier inputs analysis thread. The trade‑offs: 4× FLOPs at T=4 inference, no accuracy gains when pushing recurrence beyond the trained depth, and standard untied‑depth transformers win in compute‑matched comparisons—though LoopLMs look strong per‑parameter and under memory/KV constraints analysis thread.

CISPO RL loss fixes clipping‑induced CoT collapse, enabling longer reasoning chains

Authors recount how off‑policy PPO clipping suppressed low‑probability “thinking tokens” (e.g., “wait,” “but,” “let me”), stunting chain‑of‑thought growth; CISPO restores gradient flow when advantages are positive while retaining stability, leading to on‑policy‑like length gains without divergence origin thread. A unified formulation that covers REINFORCE and PPO is presented, with reports of near‑R1 performance on Qwen2.5‑32B in internal runs and detailed derivations of the masking and clipping behavior math details, Zhihu post.

🗂️ Agent data: RAG retrievers and high‑throughput parsing

New retrieval assets and parsing infra: NVIDIA’s Nemotron RAG family, Datalab Marker on Modal GPUs, and a patents‑focused agent from Perplexity subscribers.

Marker on Modal GPUs delivers ~10× document parsing throughput

Modal and Datalab launched a turnkey deployment for the Marker + Surya OCR stack: cache weights, spin up on GPUs in under five minutes, and autoscale to handle spikes, yielding roughly 10× higher throughput for structured document extraction versus CPU baselines Collab note, and Blog post. Teams that don’t want to self‑host can also use Datalab’s hosted Marker API, which runs on Modal’s GPU backend for maximum throughput Hosted API note.

NVIDIA posts Nemotron RAG collection with text, multimodal, layout and “Omni” retrievers

NVIDIA released a suite of retrieval models on Hugging Face covering text retrievers, multimodal retrievers, layout detectors, and new “Omni” retrievers that span image, text, and audio—licensed for commercial use, making them drop‑in building blocks for RAG systems Model roundup, and Hugging Face collection. The “Omni” variants broaden modalities for retrieval pipelines, useful for enterprise document and media search Omni retrievers.

OpenRouter launches cross‑provider embeddings directory

OpenRouter introduced a browsable catalog of embedding models across providers—useful for search, reranking, and vector‑DB pipelines—exposing pricing, limits, and quick filtering in one place Release note, and Model directory. The listing makes it easier to trial alternatives without provider lock‑in Browse page.

Perplexity debuts ‘Patents’ agent for IP research

Perplexity added a patents‑focused agent that streamlines intellectual property research workflows, with advanced capabilities available free during the beta for subscribers Feature note. The move expands RAG‑style retrieval into structured patent corpora for due‑diligence and competitive analysis.

📊 Evals and capability tracking

Measurement items outside of Atlas Feature: corrected GPT‑5 scoring deltas and a quarterly landscape showing GPT‑5 (high) retakes top spot. No other model launch repeats here.

EpochAI fixes GPT-5 scoring bug; ‘high’ now edges ‘medium’, tie on ECI

EpochAI corrected an Inspect evaluations bug that was silently forcing GPT‑5 calls set to “high” reasoning down to “medium.” Updated runs show GPT‑5 (high) slightly ahead of GPT‑5 (medium) on several benchmarks, while the two are now tied on the Epoch Capabilities Index. See benchmark bars and error bars in the update corrected scores. The root cause was an outdated Inspect version that ignored the “reasoning effort” parameter for OpenAI models unless the name began with “o” (e.g., o3); upgrading Inspect fixed it bug cause.

- Notable deltas: OTIS Mock AIME 2024–2025 (~92% vs ~87%), GPQA Diamond (~85% vs ~83%), FrontierMath T4 (~13% vs ~9%) corrected scores.

Quarterly State of AI: GPT‑5 (high) leads; US and China dominate model releases

Artificial Analysis’ latest quarterly landscape shows GPT‑5 (high) retaking the top spot on their intelligence index, with big tech pushing across modalities while smaller challengers specialize. The report also highlights U.S. and China dominance in new model releases, with relatively few entrants from elsewhere report highlights, website report.

- Modality spread: incumbents build across text, vision, audio, and agents; challengers focus on niche strengths report highlights.

📚 Research: computer use, decoding, memory and video reasoning

Fresh papers beyond training recipes: Surfer 2 cross‑platform computer use agents, AutoDeco end‑to‑end decoding control, geometric memory in sequence models, and video zero‑shot reasoning limits.

Surfer 2 unifies web/desktop/mobile computer-use agents, beating prior systems

A new paper introduces Surfer 2, a single agent architecture that generalizes computer use across the web, desktop, and mobile while outperforming earlier systems on accuracy and task completion paper abstract.

Following Copilot boost sandboxed Windows 365 computer use, this result offers a research baseline for cross‑platform action grounding and UI policy learning with stronger generalization than prior single‑environment agents.

AutoDeco lets LLMs learn their own decoding policy, moving beyond hand-tuned strategies

“The End of Manual Decoding” proposes AutoDeco, an architecture where a model learns to control its own decoding strategy—selecting sampling modes and constraints end‑to‑end—rather than relying on fixed heuristics (e.g., temperature, nucleus thresholds) paper screenshot.

The approach aims to reduce train–inference mismatch and brittle prompt‑level tuning by integrating decoding choices into the learned policy itself; details include a controller that adapts decoding parameters based on context and objective feedback loops.

Transformers and Mamba memorize as geometry, solving 50K‑node path queries in one step

A study finds deep sequence models (Transformers, Mamba) tend to form geometric memories: nodes in a knowledge graph embed so that multi‑hop paths become near‑one‑step distance checks, reaching up to 100% accuracy on unseen paths in graphs with ~50K nodes paper first page.

The work shows competition between associative (lookup) and geometric representations, with a Node2Vec baseline learning an even cleaner geometry tied to the graph Laplacian—implications include faster multi‑hop reasoning and more faithful retrieval without explicit chain‑of‑thought.

Video generators aren’t zero‑shot reasoners: MME‑CoF scores under 2/4 and fails on long chains

The MME‑CoF benchmark tests text‑to‑video models (e.g., Veo‑3 class) on 12 reasoning areas and finds they average below 2/4, handling short, locally constrained steps but failing on long‑horizon logic, strict geometry, and causal constraints benchmark paper.

Evaluators report smooth clips that nonetheless break rules (miscounts, timing errors, clutter misses), underscoring a gap between visual fidelity and robust procedural reasoning in zero‑shot settings.

🎃 Creative AI: Halloween effects, music, and recipes

Large volume of creative items: Sora character clips, Minimax/Kling horror filters, ElevenLabs Music tools, and Gemini’s Veo‑based Halloween how‑tos. This section corrals the non‑dev media news.

Higgsfield drops 1080p Halloween horror pack with Minimax + Kling, free gens and credits promo

Higgsfield launched a seasonal set of 13 Minimax transformations and 4 Kling “nightmares” (werewolf, devil, raven transition and more) with 1080p output and limited‑time free generations and credits giveaways inside the app feature rundown, free gens note. A dedicated landing page showcases one‑click “Halloween presets” and global availability promo thread, with details and examples on the site Halloween presets.

ElevenLabs Music adds stem separation and in‑painting, launches 24‑hour Halloween radio and 50% promo

ElevenLabs rolled out Music stem separation and in‑painting tools for granular remix control, alongside a one‑day ‘Radio Eleven’ Halloween station and a two‑week 50% discount on Music plans feature rundown. The in‑app radio is live for 24 hours with spooky remixes and spectral vocals radio announcement.

Sora’s ‘Monster Manor’ and character tools power Halloween shorts from creators

OpenAI highlighted a Halloween “Monster Manor” set in Sora and encouraged seasonal creations, while creators showcased multi‑minute shorts using the new Characters feature in the Sora app Monster Manor, creator short, characters note. This follows credit packs, where OpenAI teased Characters coming to the web and paid Cameos; now the app experience is fueling steady “Soraween” posts Soraween post.

Gemini shares Halloween creation playbook: Veo 3.1 monsters, costume ideas, ‘animate nightmares’ and invites

The Gemini team published a compact how‑to thread for seasonal content: generate scary creatures with Veo 3.1, ideate costume looks, build full costume mockups, animate nightmare scenes, and auto‑design party invites—all within the Gemini app and Studio how-to thread, Veo creature, costume ideas, animate nightmares, costume builder, party invites. A product overview page details image generation and editing (aka “Nano Banana”) tips and prompt guidance Gemini image guide.

ChatGPT image generation shows year‑over‑year gains on Halloween costume kit prompt

A repeat prompt (“those bags that hold cheap costumes, but make the costumes really weird”) produced sharper, more humorous packaging concepts—like “Sesame Loaf,” “Beige Carpet Stain,” and “Possessed CAPTCHA”—suggesting improved visual wit and layout fidelity over the past year image examples.

ComfyUI hosts Wan 2.2 Animate live session with control and quality tips

ComfyUI ran a Halloween‑day livestream on Wan 2.2 Animate covering practical knobs for motion control and output quality, with hosts breaking down the pipeline and sharing recipes for consistent results event announcement. A companion post links to the session and notes timing and hosts for on‑demand viewing event replay.