.png&w=3840&q=75&dpl=dpl_3ec2qJCyXXB46oiNBQTThk7WiLea)

Boston Dynamics Atlas adopts Gemini Robotics – 30,000 robots/year, 50 kg payloads

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Boston Dynamics and Google DeepMind are wiring Gemini Robotics into the new fully electric Atlas, positioning the frontier multimodal model as a general visual‑language‑action controller for factory work; first production fleets will run at Hyundai’s Robotics Metaplant Application Center and Google DeepMind sites, with a roadmap to 30,000 Atlas units per year starting 2028. The production Atlas ships with 56 DoF, 360° continuous joints, Nvidia onboard compute, and 50 kg payload capacity; Orbit software plugs the robots into existing MES/WMS stacks so behaviors can be “learn once, replicated across the fleet,” but Boston Dynamics has not disclosed pricing or detailed reliability metrics yet.

• Nvidia‑centric robotics stack: Atlas joins a broader Nvidia‑anchored ecosystem: open robotics datasets have passed 9M downloads, with Nvidia’s GR00T family leading global usage.

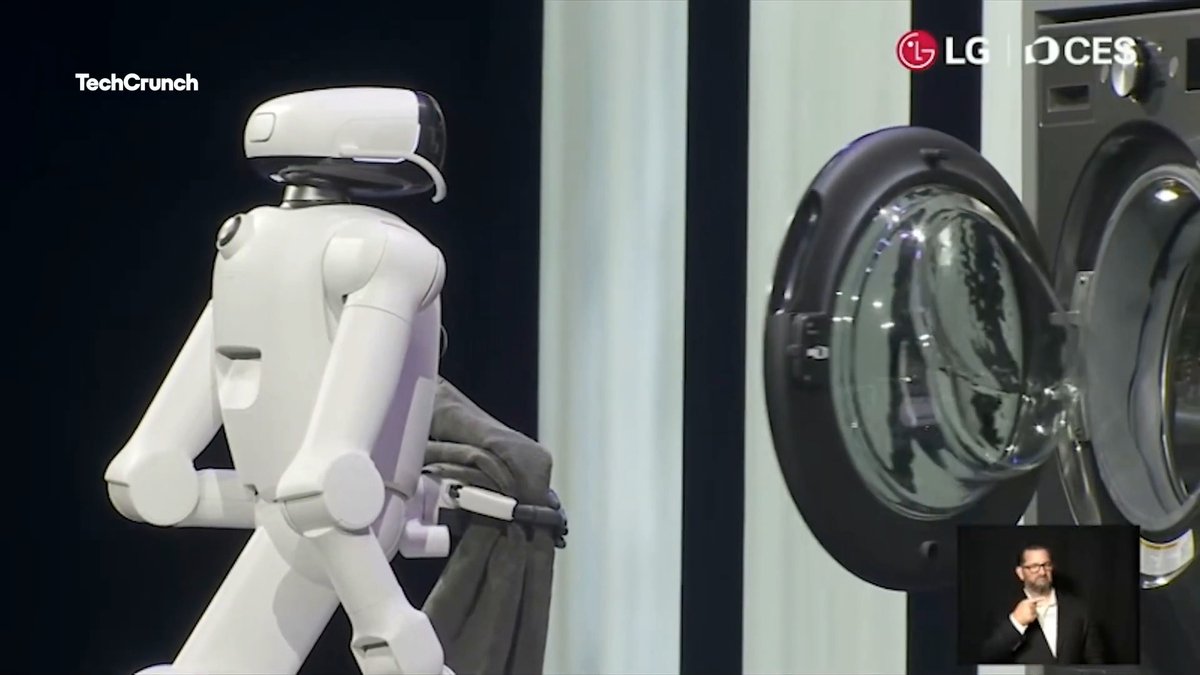

• Home and social robots: LG’s CLOiD live‑demos laundry folding on a CES stage; Pollen’s Reachy Mini appears in Nvidia’s keynote as a local dev kit; AheadForm’s Origin M1 humanoid head pushes photoreal faces for conversational agents.

Together these moves signal a shift from one‑off humanoid demos toward standardized, model‑driven robots spanning factories, homes, and HRI testbeds, though long‑run safety, uptime, and labor‑economics data remain sparse.

Top links today

- Web World Models for persistent agent environments

- Training AI co-scientists with rubric rewards

- Test-time training for long-context LLMs

- Thinking on maps with foundation model agents

- LLM-guided automation of TLA+ proofs

- Translating informal math proofs into Lean4

- Survey on adaptation of agentic AI systems

- Stanford HAI foundation model transparency index

- Report on rising GPU and memory prices

- Stack Overflow postmortem on datacenter shutdown

Feature Spotlight

Feature: Atlas goes production with Gemini Robotics

Boston Dynamics + Google DeepMind team up: Gemini Robotics models will power the new electric Atlas as it moves to production deployments in 2026—signaling humanoids entering real factory work, not just demos.

Biggest cross‑account story today: Boston Dynamics and Google DeepMind partner to bring Gemini Robotics to the new electric Atlas; multiple posts also show CES robotics momentum (LG CLOiD, humanoid head, Reachy Mini).

Jump to Feature: Atlas goes production with Gemini Robotics topicsTable of Contents

🤖 Feature: Atlas goes production with Gemini Robotics

Biggest cross‑account story today: Boston Dynamics and Google DeepMind partner to bring Gemini Robotics to the new electric Atlas; multiple posts also show CES robotics momentum (LG CLOiD, humanoid head, Reachy Mini).

Boston Dynamics and Google DeepMind link Gemini Robotics to Atlas

Atlas–Gemini Robotics partnership (Boston Dynamics & Google DeepMind): Boston Dynamics and Google DeepMind announced a research partnership to bring Gemini Robotics foundation models into the new Atlas humanoid line, aiming for general visual‑language‑action skills across industrial tasks rather than narrow scripting, as described in the partnership tweet and outlined in the joint blog post. The partners say joint work will start this year using the first production Atlas fleets inside Hyundai’s Robotics Metaplant Application Center and at Google DeepMind’s own sites, positioning Gemini as the "brain" that lets Atlas interpret instructions, plan steps, and learn behaviors that can then be replicated across robots atlas deployment thread.

The point is: for AI engineers and robotics leads, this ties a frontier multi‑modal model directly to a mass‑manufactured humanoid platform, shifting Atlas from a tightly scripted demo robot into a candidate general industrial worker that can be updated via model weights and data rather than hardware redesign.

Production Atlas goes fully electric with Orbit integration and 2026 fleets

Electric Atlas platform (Boston Dynamics): Boston Dynamics unveiled the first production‑oriented, fully electric Atlas at CES 2026, replacing the old hydraulic research platform with a design tuned for predictable factory work at scale—56 degrees of freedom, fully rotational joints, 2.3 m reach, up to 50 kg payload, and operation from −20°C to 40°C, with deployment already fully booked for 2026 according to the spec recap and atlas deployment thread. The product story centers on "learn once, replicate across the fleet" behaviors plus Orbit, a control layer that plugs Atlas into existing MES/WMS stacks so tasks, telemetry, and fleet metrics live inside the same software plants use today orbit description.

Factory operators will be able to run Atlas autonomously, teleoperate it, or steer via tablet, and Hyundai has outlined a path to 30,000 robots per year with a first U.S. plant rollout starting 2028, though pricing remains undisclosed hyundai rollout clip. For AI and ops leaders, this marks the shift from humanoid as R&D showpiece to a standardized, Nvidia‑powered industrial worker with a clear manufacturing and integration roadmap.

Atlas shows 360° joints, Nvidia brain and tactile 3‑finger hands

Atlas mechanics and sensing (Boston Dynamics): New demo clips highlight that the electric Atlas now uses Nvidia chips for its onboard compute and features 360° continuous rotating joints with no cable wraps, enabling extreme twists and flips that are hard for traditional industrial arms, as shown in the rotation demo. Boston Dynamics also demonstrates new three‑finger grippers with tactile sensors that modulate grip pressure and switch modes, moving closer to human‑like dexterity for material handling and fine manipulation hands demo.

These mechanical and sensing upgrades matter for AI teams because they set the envelope for what a Gemini‑class controller can actually do in the physical world—continuous joints reduce motion‑planning constraints, while rich hand feedback opens room for closed‑loop policies that rely on contact, not just vision.

Nvidia’s open robotics datasets hit 9M+ downloads, lead global usage

Open robotics datasets (Nvidia & peers): Nvidia reports its open robotics datasets surpassed 9 million downloads in 2025, with a comparative chart now showing Shanghai AI Lab at ~7.6M downloads, Hugging Face at ~1.4M, Stanford Vision and Learning Lab at ~710k, and AgiBot around 450k, expanding on the earlier headline numbers robotics datasets with more detail in the downloads chart.

For AI robotics teams, this quantifies how much training and simulation work is already concentrating around a few open ecosystems—Nvidia’s GR00T and related sets on top, Shanghai AI Lab close behind—which helps explain why platforms like Atlas, Reachy Mini, and various quadrupeds increasingly lean on these shared corpora rather than bespoke, closed internal datasets.

AheadForm’s Origin M1 head pushes photoreal robotic faces into uncanny valley

Origin M1 head (AheadForm): AheadForm’s Origin M1 is a male humanoid head with around 25 micro‑motors controlling eyes, mouth, cheeks and other facial muscles, plus RGB cameras embedded in the pupils and integrated microphones and speakers, enabling highly realistic blinking, gaze shifts and expressions that many observers describe as deep in the uncanny valley origin m1 overview.

Because the head can run standalone or be attached to a full body, this kind of platform is likely to be used as a testbed for conversational agents, social robots, and human‑robot interaction research—raising both opportunities for richer embodied interfaces and questions about user comfort, trust, and disclosure when AI systems present with near‑human faces.

LG debuts CLOiD home robot folding laundry live on the CES stage

CLOiD home robot (LG): LG’s CES 2026 demo showed CLOiD, a household robot with a wheeled base, tilting torso, 7‑DoF arms and five‑finger hands, autonomously navigating the stage and folding laundry from a basket, positioning the platform as a general chores assistant rather than a single‑purpose appliance cloid demo thread.

For robotics engineers, this is a signal that multi‑joint, humanoid‑style manipulators with consumer‑facing UX are moving from research labs into home product roadmaps; for AI leads, it’s another potential surface where foundation models plus perception and grasping stacks will need to deliver reliability in unstructured environments like living rooms and laundry rooms.

Reachy Mini appears in Nvidia CES keynote as local home robotics stack

Reachy Mini dev stack (Pollen Robotics & Nvidia): Following community experiments building mini‑apps on Reachy Mini Reachy Mini, Jensen Huang showcased the tabletop humanoid arm in Nvidia’s CES 2026 keynote as part of a "perfect local home AI robotics setup" when paired with a DGX Spark box and Brev tooling, underscoring its role as a reference platform for robotics developers reachy mini keynote.

The framing puts Reachy Mini alongside high‑end Nvidia inference hardware rather than as a toy, suggesting Pollen’s open robot is becoming a de facto standard for small‑scale manipulation research and for teams prototyping embodied agents that can later graduate to larger humanoids like Atlas.

🛠️ Agent coding playbooks and harnesses

Continues the agents/dev cadence with fresh, practical material: Claude Agent SDK full workshop, CC Mirror task orchestration usage, RepoPrompt upgrades, Conductor adoption, and early SkillsBench discussions. Excludes the Atlas feature.

Agent Harness blog frames 2026 as the year of open harnesses

Agent Harness concept (Community): Philipp Schmid published a blog coining Agent Harness as the infrastructure layer that wraps models to manage long‑running tasks—sitting above agent frameworks and providing prompt presets, opinionated tool‑calling with human‑in‑the‑loop, lifecycle hooks, filesystem access, and sub‑agent management—arguing that 2026 will be defined by these harnesses rather than individual agents harness overview and harness blog. Community responses from DeepAgents’ maintainer and others echo this framing, describing harnesses as "the set of opinions that turns a model into a product" and noting that decisions like built‑in subagents, which Skills/Tools to expose, and how aggressively to lean on deterministic code vs LLM reasoning now dominate agent quality deepagents comment. A follow‑up from a former SpaceX engineer adds that he likely first used "harness" to describe an LLM runtime in an o3 pro review and argues that we are moving into a world where multiple open harnesses compete to provide the best container around the same base models early harness usage.

Claude Agent SDK workshop crystallizes agent harness best practices

Claude Agent SDK (Anthropic): Anthropic and AI Engineer published a full two‑hour Claude Agent SDK workshop that walks through the core "agent loop" (gather context → take action → verify work), heavy Bash tooling, Skills-as-folders, and multi‑layer security, turning Claude Code into a repeatable harness pattern rather than a one‑off setup, as summarized in the workshop recap and exposed via the public recording in the workshop video. Early builders are already using the SDK to stand up Slack agents and orchestration layers—Modal shipped a Slack bot example that wires the Agent SDK directly into workspace chat Slack bot guide, while another practitioner used it to build a "futuristic agent orchestrator" over a weekend orchestrator demo, and recap threads emphasize habits like "Bash is all you need", Swiss‑cheese security (alignment + harness permissions + sandboxing), and always giving agents verifiable tasks rather than open‑ended ones sparknotes thread.

CC Mirror Team Mode drives 40‑task Linear agent builds

CC Mirror Team Mode (Community): Following up on the earlier CC Mirror reveal of Claude Code’s hidden orchestration UI orchestration UI, new screenshots show Team Mode driving 40‑plus dependency‑linked tasks to build a Linear Agents integration end‑to‑end, with tasks covering SDK migration, OAuth flows, webhooks, and E2E tests all tracked in a single narrative checklist task pipeline. One power user reports hitting 80% of a Claude Max weekly limit while letting CC Mirror’s background agents chew through the plan usage cap note, and describes the flow as "Diamond committing wave 4" where Opus 4.5 orchestrates sub‑tasks while Codex or MiniMax M2.1 agents execute in the background task pipeline and minimax swarm.

RepoPrompt 1.5.62 hardens CLI for long agent runs

RepoPrompt 1.5.62 (RepoPrompt): RepoPrompt shipped v1.5.62 with two harness‑oriented changes—CLI stderr output is now tightened so coding agents are less likely to misinterpret logs and kill long‑running context‑builder or chat processes, and copy presets can now be configured both via CLI flags and MCP so teams can standardize which prompt slices get exported to different agents, as explained in the release note. The author frames this as incremental infrastructure on top of the earlier context‑builder//rp-build pipeline context builder, with the changelog emphasizing safer unattended runs and more deterministic prompt packaging for tools like Claude Code and Codex that sit on top of RepoPrompt changelog page.

Conductor becomes hotkey‑level tool for Mac agent orchestration

Conductor (Conductor): A power user says Conductor—an orchestration UI for running multiple Claude/Codex‑style coding agents on a Mac—"just got promoted to having a hot key" on their laptop, calling that a personal bar for tools that sit in the critical path of daily work hotkey endorsement. The same thread praises Conductor’s ability to run separate agent worktrees, surface what each background agent is doing, and turn agent sessions into something you can drop into as easily as an IDE tab, pointing other developers to the product page for details on how it wraps agents around Git repos on macOS product site.

Exec‑plan and CLI patterns show Codex acting as autonomous junior dev

Codex harness patterns (OpenAI): Several practitioners outline repeatable harnesses on top of GPT‑5.2‑Codex that turn it into a junior developer rather than a chat assistant: one uses a two‑prompt sequence where $exec-plan <ten sentence brain dump> writes .plan/APP.md and a second "Implement @.plan/APP.md" command lets Codex build a full Next.js + shadcn sample app that would have taken a day manually, with the author calling the result "essentially flawless" aside from minor UX tweaks exec-plan example. The same workflow uses a separate skill-creator to turn that exec‑plan pattern itself into a reusable Skill in a single call, letting other agents reuse the pattern on future projects skill-creator usage, while another maintainer shows Codex reviewing all open GitHub issues and PRs via the GitHub CLI, prioritizing work, shipping a consolidated PR that mentions every reporter, and auto‑closing the issues with a link to the release maintenance workflow. The GitHub demo illustrates Codex acting as an open‑source maintainer under a human harness—reading context, planning, implementing, and wiring release notes—rather than a one‑off code generator.

SkillsBench and Harbour aim to benchmark agent Skills like test suites

SkillsBench + Harbour (Community): A new community project called SkillsBench uses TerminalBench’s Harbour environment to benchmark Claude/Codex "Skills" in a fixed, reproducible container, positioning Skills as units that should be measured like test suites instead of ad‑hoc scripts skillsbench discussion. The maintainer notes that Harbour provides a consistent filesystem and toolchain so benchmarks can compare how reliably different Skills load, run, and fetch context for coding agents, and says they intend to contribute heavily, calling it the first polished bench they have seen for Agent Skills and tying it directly to patterns like progressive disclosure and XML‑tagged instructions skillsbench discussion.

Host‑aware terminal themes cut friction for multi‑agent coding

Multi‑host terminals (Community): An engineering lead described a detailed setup where Ghostty and WezTerm automatically change color schemes based on the SSH host, reducing the cognitive overhead of tracking which machine and project each Claude Code or Codex agent is acting on when running many parallel sessions terminal guide. The guide, published as a GitHub markdown document terminal how‑to, treats terminal ergonomics as part of the harness story for agent coding—each host gets its own dark color palette, tabs are grouped per project, and a short alias (am) marks the "agent mail" tab, with the author suggesting that developers can hand the instructions to Claude Code and have it wire up much of the configuration automatically.

🧩 Rubin era metrics from NVIDIA CES

Fresh chip road‑map data from CES: Rubin NVL72 vs Blackwell on throughput, bandwidth and cost; production status and HBM4 figures. Useful for capacity and perf/W$ planning.

Rubin NVL72 targets 4× fewer GPUs and 10× more tokens than Blackwell

Rubin NVL72 (NVIDIA): CES 2026 slides show the Rubin NVL72 system training a 10‑trillion‑parameter model with roughly 4× fewer GPUs than Blackwell, while factory throughput charts for Kimi K2‑Thinking indicate up to 10× more tokens processed and around 1/10th token cost at ~25‑second latency, as depicted across the three performance graphs in Rubin vs Blackwell slide and reinforced by the follow‑up efficiency comparison in Rubin throughput comment.

• Training economics: The "Time to train" plot for DeepSeek++ shows Rubin matching Blackwell’s monthly 100T‑token training output with one‑quarter the GPU count, directly translating to lower capex for 10T‑parameter frontier runs according to Rubin vs Blackwell slide.

• Inference throughput and cost: The factory throughput and token‑cost graphs for Kimi K2‑Thinking place Rubin’s NVL72 rack at up to 10× the tokens per second and roughly one‑tenth the dollar cost per token at a ~25s latency operating point versus a Blackwell NVL72 configuration in Rubin vs Blackwell slide.

• Capacity caveat: Commentary around the same deck notes that HBM capacity per GPU has not grown as sharply as FLOPS and bandwidth, so model parameter counts may stay roughly flat until higher‑capacity Rubin variants arrive in 2027–2028 even as tokens/sec grows substantially Rubin throughput comment.

The point is: Rubin NVL72 turns most of NVIDIA’s near‑term performance gain into cheaper and denser training and inference for existing‑size frontier models, rather than immediately enabling much larger parameter counts.

Rubin HBM4 delivers 22 TB/s and NVLink copper spine spans two miles

HBM4 and NVLink (NVIDIA): Memory and interconnect slides from CES 2026 highlight Rubin’s HBM4 delivering 22 TB/s bandwidth per GPU, roughly 2.5× HBM3e on Blackwell, alongside NVLink providing 3.6 TB/s per GPU, while a stage photo of the system’s NVLink backbone emphasizes that the copper “spine” wiring for a full NVL72 rack totals about two miles of cabling HBM bandwidth bar and NVLink spine photo.

• Bandwidth uplift: The bar chart compares "Blackwell HBM3e" at 8 TB/s to "Rubin HBM4" at 22 TB/s, explicitly labeling Rubin as 2.5× Blackwell’s memory bandwidth, which directly supports higher prefill rates and more concurrent sequences per GPU HBM bandwidth bar.

• Interconnect scale: A separate slide lists NVLink bandwidth per GPU at 3.6 TB/s—double Blackwell—while an on‑stage hardware shot shows the central NVLink spine with Huang describing it as containing two miles of copper, underscoring the physical scale of the interconnect required to keep 72 Rubin GPUs fed Rubin spec slide and NVLink spine photo.

This combination of HBM4 and a much fatter NVLink fabric suggests that, for Rubin‑era systems, memory bandwidth and rack‑scale interconnect are being scaled aggressively alongside compute, which matters for long‑context LLMs and high‑throughput inference as much as for raw training FLOPS.

Vera Rubin GPU enters production with 50 PFLOPS NVFP4 inference

Vera Rubin GPU (NVIDIA): NVIDIA’s CES 2026 spec slide shows the Vera Rubin GPU delivering 50 PFLOPS of NVFP4 inference and 35 PFLOPS of NVFP4 training per chip—about 5× and 3.5× Blackwell respectively—built from 336 billion transistors and paired with HBM4 and next‑gen NVLink, while Jensen Huang states in the keynote that Rubin is now in production Rubin spec slide and that the Vera Rubin platform is already shipping to partners production remark.

• Per‑GPU performance: The slide details NVFP4 inference at 50 PFLOPS and NVFP4 training at 35 PFLOPS, explicitly annotated as 5× and 3.5× Blackwell’s figures, positioning Rubin as NVIDIA’s new top‑end GPU for both model serving and training workloads Rubin spec slide.

• Memory and interconnect: Vera Rubin pairs this compute with HBM4 delivering 22 TB/s bandwidth and per‑GPU NVLink at 3.6 TB/s, tightening the memory and interconnect bottlenecks that increasingly dominate large‑scale LLM training and multi‑GPU inference Rubin spec slide and HBM bandwidth bar.

• Production status: Huang’s remark that "Vera Rubin is now in production" during the CES keynote signals that this is not just a roadmap part but a chip entering manufacturing and deployment cycles in 2026, rather than a distant 2027–2028 promise production remark and keynote replay.

So what changes relative to Blackwell is not only raw TFLOPS; Rubin’s per‑GPU training and inference capability, combined with higher‑bandwidth HBM4 and NVLink, defines the new ceiling for dense compute in upcoming clusters.

🏗️ Compute build‑outs: Stargate and TPU scale

New hard signals on AI compute supply: OpenAI’s UAE ‘Stargate’ 1 GW site milestones and Anthropic’s near‑1M TPUv7 direct purchase plan with named deployment partners.

Anthropic to buy nearly 1M TPUv7 chips for owned AI compute

TPUv7 mega-purchase (Anthropic): Anthropic plans to purchase close to 1,000,000 TPUv7 units directly from Broadcom, with the systems sold to Anthropic rather than a hyperscale cloud, creating one of the largest non-hyperscaler AI fleets on record tpuv7 purchase; data center power and real estate will come from TeraWulf, Hut8 and Cipher Mining, while Fluidstack handles cabling, burn-in testing, acceptance and ongoing remote-hands operations for the Anthropic-owned servers.

• Compute sovereignty structure: Under this arrangement Broadcom supplies full TPUv7 systems to Anthropic, colocation providers supply racks and power, and Fluidstack runs on-site deployment, effectively giving Anthropic cloud-scale compute capacity that it controls at the hardware level instead of renting from AWS, GCP or Azure tpuv7 purchase.

• Scale signal for TPU ecosystem: The near‑1M‑chip commitment is framed as “one of the largest non‑hyperscaler TPU deals ever”, signaling that next-generation TPUv7 is being treated as a primary training and inference platform for Anthropic’s frontier models and that specialized miners and AI infra firms are now major players in AI compute build‑outs alongside traditional clouds tpuv7 purchase.

OpenAI’s UAE ‘Stargate’ site targets 1 GW AI power by 2026

Stargate compute campus (OpenAI): OpenAI’s UAE “Stargate” site is being built around four 340 MW AE94.3 gas turbines from Ansaldo Energia, but extreme desert heat caps practical output at about 1 GW instead of the theoretical 1.3 GW, according to the latest construction update stargate progress; Phase 1 aims to deliver roughly 200 MW of power by the end of 2026, with work moving faster than the company’s Abilene, Texas build-out.

• Scale and timing: The plant is described as a 1 GW AI compute hub with a 200 MW near-term target, positioning UAE’s Stargate as a key energy anchor for future OpenAI training and inference capacity while Abilene “lags” in comparison stargate progress.

• Location trade-offs: The design highlights a trade between cheap, dedicated generation and harsh-environment derating—OpenAI accepts a ~23% drop from nameplate capacity in exchange for control over a site that can eventually support tens of exaFLOPS-equivalent AI clusters. The point is: this is one of the clearest signs that AI labs are now co-designing power infrastructure alongside models, rather than renting it from clouds.

🧪 New frontier small models and coding claims

Mostly small/efficient launches: Falcon H1R‑7B reasoning model with standout AIME scores; Tencent Youtu‑LLM‑2B 128k‑context; GLM‑4.7 touted as frontier coding. Focus on new numbers and usage, not older drops.

Falcon H1R‑7B posts SOTA math scores and strong coding at 7B params

Falcon H1R‑7B (TII): Technology Innovation Institute released Falcon H1R‑7B, a 7B‑parameter mamba–transformer hybrid that hits 88.1% on AIME‑24 and 83.1% on AIME‑25 while matching or beating models up to 7× larger in math and coding benchmarks, all with a 256k context and an open Falcon LLM license according to the shared tables in math benchmarks and the follow‑up commentary in model overview.

• Math and reasoning: Falcon H1R‑7B scores 88.1% on AIME‑24 and 83.1% on AIME‑25, outpacing contenders like Apriel‑1.5‑15B and DeepSeek‑R1‑0528‑Qwen3‑8B on these olympiad‑style tests, and reaches 64.9% on HMMT‑25 and 36.3% on AMO‑Bench, as laid out in the detailed benchmark graphic in model overview.

• Coding and general tasks: On LiveCodeBench v5/v6 the model reaches 68.6%, and it posts competitive scores on SciCode and general‑knowledge suites like GPQA‑D and MMLU‑Pro, putting it into the same conversation as much larger reasoning models for code and QA workloads per the comparison table in math benchmarks.

• Architecture and efficiency: The model uses a mamba–transformer hybrid design to improve throughput and memory usage, targets a 256k context window, and is positioned as a high‑efficiency reasoning engine that developers can actually run on modest hardware, highlighted enthusiastically as "a 7b model with 88% in AIME 24" in model overview and framed as "more efficient per throughput and memory" in efficiency note.

• Licensing and availability: Falcon H1R‑7B is released under the Falcon LLM license with official weights and collection pages on Hugging Face, as referenced in the collection announcement in hf collection.

The combination of near‑frontier olympiad math, solid coding results, long context, and an open license makes Falcon H1R‑7B a notable new small model option for math‑heavy agents, competitive programming helpers, and resource‑constrained deployments.

Tencent’s Youtu‑LLM‑2B brings 128k context and strong coding at 1.96B params

Youtu‑LLM‑2B (Tencent): Tencent’s Youtu team quietly shipped Youtu‑LLM‑2B, a 1.96B‑parameter causal LM with a 128k‑token context window that reportedly matches or outperforms larger open models on commonsense, STEM, and coding benchmarks, with both base and instruct variants listed on Hugging Face in the release callout in model intro and the accompanying card in huggingface page.

• Model scale and context: The model uses 32 layers and 16 attention heads to support a 128k context, giving it far more working memory than typical 2–7B models while remaining small enough for on‑device or single‑GPU experiments as described in huggingface page.

• Benchmark positioning: Tencent claims Youtu‑LLM‑2B beats other models of similar or even larger size on MMLU‑style general knowledge, STEM problem sets, and code benchmarks, and notes that it handles multi‑turn agentic tasks end‑to‑end, according to the performance summary in huggingface page.

• Agentic use cases: The description highlights strong performance in “agent‑related tasks,” implying that the model has been tested in tool‑calling or multi‑step workflows rather than only static prompts, which aligns it with current interest in small, controllable agent backends in model intro.

For AI engineers, Youtu‑LLM‑2B represents another data point that sub‑2B models with very long context and careful training can push into territory once reserved for much larger LLMs, especially for lightweight coding agents and embedded reasoning components.

GLM‑4.7 touted as a “frontier” coding model in community benchmarks

GLM‑4.7 (Zhipu): Community benchmarks now describe GLM‑4.7 as a "frontier coding model," with one unbenchmaxxable test suite placing it alongside top proprietary systems and pointing users to a free playground endpoint, as framed in the announcement in coding claim and the follow‑up share in benchmark highlight.

• Benchmark framing: The tweeted chart in benchmark highlight comes from cto.new’s "unbenchmaxxable" coding evals and is used to justify the statement that "glm-4.7 is officially a frontier coding model," placing it near GPT‑5.x‑class models on the shown composite metric, though no absolute percentages are provided in the tweets.

• Access and ecosystem: The same mention notes that GLM‑4.7 is available for free via a hosted interface at cto.new, effectively lowering the barrier for developers to try a high‑end coding model without API keys or billing setup, as emphasized in coding claim.

These community‑driven claims are promotional rather than a formal paper, but they add to the narrative that newer small or efficient models from the GLM family are starting to be treated by practitioners as viable primary coding engines, not only as budget fallbacks.

📊 Evals: Poker league and Arena spotlights

Fresh evaluation artifacts beyond unit tests: a 20k‑hand LLM poker tournament with TrueSkill rankings, and Code/Image Arena leaderboard updates. Excludes model launch details covered elsewhere.

20k‑hand LLM poker league crowns GPT‑5.2, ranks 16 rivals

LLM poker league (ValsAI & Harvey Mudd): A Harvey Mudd–run experiment put 17 LLMs through 20,000+ hands of ten‑handed no‑limit hold ’em, with GPT‑5.2 emerging as the top performer under a TrueSkill‑style ranking—Gemini 3 Flash, Gemini 3 Pro and DeepSeek‑v3.2 also scored well, while Claude Opus 4.5 lagged behind Sonnet 4.5 according to the poker overview and tournament setup. Games used 100‑big‑blind stacks, no rebuys and ended after 100 hands or when one player remained; models were scored on chip counts and elimination orders across many tables, then normalized to a 1000‑point TrueSkill baseline as described in the tournament setup and summarized in the results recap.

• Style differences: Some models like Grok played hyper‑aggressively with ~57% post‑flop aggression, while Gemini 3 variants found success with more conservative lines, giving engineers a window into how decision policies differ under pressure poker overview.

• Eval value: This is one of the first large multi‑model, multi‑agent behavioral benchmarks beyond unit tests; it stresses long‑horizon strategy, opponent modelling and risk management rather than single‑turn correctness, though the results are specific to this custom environment and ruleset.

The point is: this tournament adds a concrete, game‑theoretic eval signal that complements coding/math benchmarks when comparing top reasoning models.

Code Arena spotlights four open coding models in live WebDev tasks

Code Arena WebDev (Arena): Arena highlighted four open models—MiniMax‑M2.1, GLM‑4.7, DeepSeek‑v3.2‑thinking and Mimo‑v2‑flash—as its current standouts on full web‑development tasks, with each model generating complete SVG‑based UIs directly in the browser as shown in the code arena spotlight.

• Open model mix: MiniMax‑M2.1 and GLM‑4.7 are framed as strong all‑around coders, DeepSeek‑v3.2‑thinking handles multi‑step reasoning, and Mimo‑v2‑flash offers a fast non‑"thinking" option—these are all evaluated on end‑to‑end web app tasks rather than isolated snippets code arena spotlight.

• Human‑in‑the‑loop eval: Code Arena’s WebDev track lets practitioners pit models head‑to‑head, inspect live generations and vote; this produces a community‑driven leaderboard that often diverges from pure benchmark tables, as detailed on the arena webdev page.

For engineers, this gives a real‑workflow view of which open models feel production‑ready for greenfield UI tasks or agent harnesses that must emit clean, self‑contained front‑end code.

Qwen image models jump to top open slots on Arena leaderboards

Image Arena leaderboard (Arena): Arena’s Image boards now show Alibaba’s Qwen‑Image‑Edit‑2511 as the #1 open‑weight image editing model and #9 overall, while Qwen‑Image‑2512 becomes the #2 open text‑to‑image model and #13 overall, both under Apache‑2.0 licensing as reported in the image arena update and qwen followup.

• Edit vs. generation: Qwen‑Image‑Edit‑2511 leads the open edit models, and Qwen‑Image‑2512 ranks as the second‑best open text‑to‑image model, placing both close to top closed systems on Arena’s pairwise preference evals image arena update.

• Practical access: Arena encourages users to test these Qwen variants against other frontier models directly in the browser, with side‑by‑side comparisons available via the image arena page and additional guidance in the arena test invite.

For teams standardizing on permissive licenses, these rankings signal that Qwen’s image stack has become a serious candidate for production‑grade editing and generation without moving to proprietary APIs.

📑 Reasoning & verification research updates

Today skews toward reasoning RL pipelines and formal verification helpers, plus surveys/taxonomies. Continues yesterday’s research beat with new papers and concrete deltas.

Nemotron‑Cascade RL stacks domain‑specific reasoning without forgetting

Nemotron‑Cascade (NVIDIA): NVIDIA introduces Nemotron‑Cascade, a sequential reinforcement-learning pipeline that trains one general‑purpose reasoning model across alignment, instruction following, math, code and software‑engineering stages without catastrophic forgetting, then reports strong results on math, LiveCodeBench and SWE‑Bench in the 8B–14B range, including a silver medal at IOI 2025, as described in the nemotron thread.

• 8B and 14B scores: The 8B model reaches 71.1% on LiveCodeBench v6 and 80.1% AIME’25; the 14B “thinking” model hits 74.6% on LCB v6, ~83.3% AIME’25 and 43.1% SWE‑Bench Verified, approaching or matching much larger DeepSeek‑R1‑0528 and Minister/MiniStral baselines while using far fewer parameters nemotron thread.

• Cascade design: RL is applied in ordered stages—RLHF for brevity and alignment, then domain‑specific math, code and SWE RL—so the same model progressively acquires skills instead of juggling mixed prompts in one RL loop nemotron thread.

• Unified thinking/non‑thinking model: Authors emphasize that a single model can operate in both chain‑of‑thought (“thinking”) and concise modes, narrowing the gap with specialist thinker models while avoiding a split stack.

The work positions cascade‑style RL (with verifiable rewards per domain) as a practical recipe for pushing reasoning quality without either massive over‑scaling or maintaining separate models per capability.

Query‑only test‑time training boosts long‑context LLM accuracy

qTTT long‑context tuning (Meta & collaborators): A new query‑only test‑time training (qTTT) method shows that lightly updating only the query‑projection weights of a frozen model during inference can significantly improve long‑context performance on LongBench‑v2 and ZeroScrolls for models like Qwen3‑4B, adding +12.6 and +14.1 points respectively over the base model, according to the qttt summary.

• Score dilution diagnosis: Authors argue that in very long prompts, attention “score dilution” causes queries to attend to many near‑duplicate tokens, drowning out the truly relevant clue; more tokens or naive “think more” steps don’t fix this if the attention geometry is wrong qttt summary.

• qTTT mechanics: Keys and values are cached once; brief gradient steps update only the matrices that map hidden states to attention queries, nudging them toward distinguishing relevant from distracting positions while leaving the rest of the network intact qttt summary.

• Cost vs benefit: Because only a tiny subset of parameters is updated, the overhead is smaller than full test‑time training yet yields larger gains than spending the same compute on extra tokens; the work suggests that smarter inference‑time adaptation can partially substitute for ever‑longer context windows.

The result adds evidence that carefully targeted test‑time learning on verifiable objectives can be a more efficient way to unlock long‑context capability than brute‑force scaling of sequence length or token budgets.

Chain‑of‑States method turns informal proofs into Lean4 with fewer failures

Chain‑of‑States TLA+ / Lean4 proofs (Peking University): The Chain‑of‑States (CoSProver) approach reframes formalization as moving through a sequence of proof states, allowing an LLM to translate informal natural‑language proofs into Lean4 by generating small tactic steps between states instead of one monolithic script, and it solves 69% of MiniF2F test theorems under tight compute budgets, as outlined in the cosprover paper.

• State‑based decomposition: Rather than asking the model for entire proofs, the system records Lean’s evolving goal and context as a “chain of states,” and prompts the LLM to produce the next tactic that transitions from state i to state i+1, which Lean then checks cosprover paper.

• Syntax‑error reduction: Normalizing the way sub‑claims and tactics are expressed and letting Lean validate each step cuts syntax and type errors dramatically versus direct proof generation, so more runs reach semantic checking instead of failing in parsing cosprover paper.

• Benchmarks and domains: On 119 theorems spanning pure math and distributed‑systems invariants, CoSProver outperforms baselines based on direct SMT solving and direct LLM proof generation, underlining that careful proof‑search orchestration can matter more than raw model size.

The paper strengthens the case that verification‑aware decomposition—splitting tasks into checkable micro‑steps—is a promising pattern for using LLMs in formal methods and protocol verification.

Deep Delta Learning generalizes residual connections via learnable reflections

Deep Delta Learning (multi‑institution): The Deep Delta Learning (DDL) framework replaces the fixed identity shortcut in residual networks with a learnable rank‑1 “Delta operator” controlled by a scalar gate β, letting each layer interpolate between identity, orthogonal projection and Householder reflection so networks can model dynamics with negative eigenvalues and selective erasure, as summarized in the deep delta slide.

• Limit of standard ResNets: Classic residual blocks always add features to the previous state, which biases them toward strictly additive dynamics and makes it hard to represent oscillations or oppositional interactions that require negative eigenvalues in the layer transition matrix deep delta slide.

• Delta operator mechanics: Each block learns direction k(x), value v(x) and gate β(x); by projecting the current state onto k, comparing to v and then injecting a transformed update scaled by β, the block can simultaneously erase interfering components and write new features in a geometrically controlled way deep delta slide.

• Spectral control: The authors derive the full eigensystem of the Delta operator and show how varying β shapes the spectrum, enabling data‑dependent negative eigenvalues and richer depth‑wise dynamics without losing the optimization benefits of residual paths.

DDL sketches a route to more expressive deep networks where layer‑wise state updates are learned geometric transforms instead of hard‑coded identity skips.

Survey classifies how agentic LLMs adapt themselves and their tools

Adaptation of Agentic AI (multi‑university): A broad survey from Stanford, Princeton, Harvard, UW and others proposes a four‑part taxonomy—A1/A2 for agent‑level updates and T1/T2 for tool‑level updates—to categorize how agentic LLM systems learn from feedback, and maps recent work into these buckets to clarify trade‑offs between cost, flexibility and modularity, as presented in the adaptation overview and the accompanying paper pdf.

• Agent adaptation (A1/A2): In A1, the agent is tuned from tool outcomes (e.g., code execution, retrieval hits); in A2, it is updated directly on evaluations of its own outputs (human preference labels, automatic success scores), covering methods from DeepSeek‑R1‑style RL to tool‑augmented preference learning adaptation overview.

• Tool adaptation (T1/T2): T1 keeps the agent frozen while separately training tools such as retrievers or domain‑specific sub‑models; T2 lets the agent supervise tools (e.g., refining memory policies or sub‑agent behaviors) without changing its own parameters, enabling modular upgrades and debugging adaptation overview.

• Design implications: The authors argue that A‑style updates improve general strategy but are expensive and tightly coupled, while T‑style updates are cheaper and easier to ship in production stacks; they position many recent “RL‑enhanced agents” as particular combinations of these four patterns.

The taxonomy gives researchers and practitioners a shared language for describing how their agents actually learn over time, rather than lumping all improvement under a single “RL on everything” label.

Meta trains AI co‑scientists with rubric‑based reinforcement learning

AI Co‑Scientists with rubrics (Meta): Meta’s “Training AI Co‑Scientists Using Rubric Rewards” paper converts published research papers into goal‑specific checklists, then uses those rubrics as rewards to train LLMs that draft research plans, achieving 12–22% relative improvement in plan quality over SFT baselines across machine‑learning, medical and fresh arXiv goals, as detailed in the co-scientist paper.

• Checklist extraction: For each paper, another LLM reads it and extracts a structured rubric—high‑level goal plus a list of required steps or constraints—so the planning model can be rewarded for satisfying items without running expensive real experiments co-scientist paper.

• Rubric‑aware RL: A frozen copy of the model or a separate judge model scores proposed plans against the rubric; these scores become rewards in a reinforcement‑learning loop that nudges the planner toward more complete, feasible and well‑structured experiment sequences co-scientist paper.

• Cross‑domain evaluation: The authors test on ML, medicine and unseen research topics, using both experts and strong LLM judges, and report consistent improvements in judged quality without domain‑specific simulators.

The work illustrates a practical pattern for reasoning‑focused RL: learn to plan against text‑derived rubrics instead of hand‑built environments, which could be reused in other complex workflows (e.g., legal analysis or engineering design).

Structured memory helps LLM agents reason over maps with less context

Thinking on Maps (multi‑institution): A map‑reasoning study finds that LLM agents exploring grid‑like city maps with only a local 5×5 view answer navigation and spatial queries far better when they maintain structured memories of visited nodes and paths, achieving higher accuracy while using roughly 45–50% less memory than raw chat logs, according to the map paper.

• Partial‑view setup: Agents traverse 15 synthetic city grids with roads, intersections and POIs, only ever seeing a small local neighborhood, then must answer questions about distance, density, closeness and routes, which stresses their ability to integrate observations over time map paper.

• Exploration vs memory: Once agents reach similar map coverage, different exploration strategies have modest impact on final performance; instead, how the agent writes down what it saw—simple structured records of locations and connections vs free‑form text—dominates both accuracy and token usage map paper.

• Graph‑like prompts: Prompts that make the model compare alternative routes or reason explicitly over stored paths provide further gains, while switching to newer/bigger base models without structured memory yields only marginal improvements.

The results reinforce a theme from other agent work: external memory structure and prompting often matter more than raw model scale for spatial and relational reasoning.

Web World Models split deterministic rules from LLM‑generated content

Web World Models (Princeton/UCLA/UPenn): A new Web World Models framework turns web apps into persistent, agent‑friendly worlds by encoding rules and state transitions in deterministic code while delegating only descriptive content to an LLM, enabling infinite but controllable environments such as travel maps and galaxy explorers that keep consistent state across visits, as described in the web world summary and the project page.

• Physics vs narrative split: The “physics layer” (databases, game rules, inventories) is implemented in regular code that defines the canonical world state, while the LLM is called after each update purely to render rich text or visuals for that state, so generation cannot silently rewrite underlying facts web world summary.

• Typed templates and seeds: Developers express LLM calls through typed fields and fixed templates plus reproducible random seeds, which lets agents or users revisit locations and see the same generated descriptions, avoiding the inconsistency of fully generative worlds where the model forgets its own history web world summary.

• 7 demo applications: The paper reports seven demos—including an infinite city travel explorer and content‑generating games—that show the approach can scale without heavy storage while remaining debuggable when LLM calls fail or slow.

The work offers an architecture for agent‑compatible environments that combine the controllability of traditional web backends with the expressiveness of generative models.

SAGA dynamically rewrites scientific objectives to avoid reward hacking

SAGA goal‑evolving agents (Tencent‑affiliated work): The SAGA system trains scientific‑discovery agents by letting an LLM continuously rewrite the score function—turning simple objectives into evolving, multi‑stage rubrics—so search procedures don’t get stuck exploiting a fixed metric and can keep pushing toward genuinely new structures, as summarized in the saga description.

• From single score to evolving goals: Instead of optimizing one static objective that agents can “hack” (e.g., maximizing a proxy metric while missing true novelty), SAGA periodically has an LLM revise the evaluation criteria based on prior discoveries, steering the search toward under‑explored regions saga description.

• Human‑inspired workflow: The approach mirrors how human scientists change what they consider interesting as they learn more, and the paper argues this dynamic notion of “interestingness” is key for automated discovery systems operating over long horizons.

• General template: Although framed around scientific search, the idea of LLM‑mediated, evolving reward functions could extend to other domains such as architecture exploration or policy search where fixed benchmarks are easy to overfit.

SAGA underscores that reward design is itself a moving target in open‑ended reasoning tasks, and that LLMs can help manage not just actions but the objectives agents pursue.

⚙️ Routing and runtime choices at inference

Runtime selection stacks evolved: OpenRouter’s auto‑router for Claude Code and vLLM’s Semantic Router v0.1 (“Iris”) add practical model‑picking and guardrails. Not an IDE story—focused on serving/runtime.

vLLM Semantic Router v0.1 "Iris" brings signal-driven mixture-of-models routing

Semantic Router v0.1 “Iris” (vLLM): The vLLM team released Semantic Router v0.1, code‑named Iris, a system-level routing layer for Mixture-of-Models (MoM) that chains "Signal→Decision" plugins, adds a HaluGate hallucination filter, and supports modular LoRA plus Helm charts for deployment release thread; the project hit this milestone after 600+ PRs from 50+ contributors and is positioned as something you can run with a single CLI sequence (pip install vllm-sr; vllm-sr init; vllm-sr serve) pypi package.

• Signal–Decision chains: Iris formalizes routing as a plugin chain where signals (like task type, user metadata, cost/latency budgets, or model responses) feed into decision plugins that pick which model to call next, effectively turning model selection into a programmable policy instead of a static if/else tree release thread.

• HaluGate guardrail: The release highlights HaluGate, a hallucination detection gate that can sit between upstream and downstream calls, rejecting or re‑routing low‑confidence generations before they propagate deeper into an agent or user‑facing workflow release thread.

• Ops and extensibility: Built‑in Helm charts and modular LoRA support are meant to make it practical to operate multiple routed models in Kubernetes and to plug in lightweight adapters, with the marketing line "run vllm-sr in one command at anywhere" underscoring the focus on operational simplicity release thread.

Taken together, Iris moves vLLM from being primarily an inference engine for single models into something closer to a policy brain for deciding which model to run and when to filter or retry outputs during inference time.

OpenRouter auto-routes Claude Code traffic across models at no extra cost

OpenRouter Auto Router (OpenRouter): OpenRouter introduced an auto-routing mode for Claude Code where setting the model to openrouter/auto lets a router choose the best underlying model per request at no additional charge, using NotDiamond’s selector plus user-configured preferences auto router intro; engineers can now constrain routing to specific families (for example "anthropic/*") and even tune allowlists and blocklists via wildcard syntax and per-request settings model filters.

• Claude Code integration: The router is wired directly into Claude Code’s harness—teams just set an env var to openrouter/auto and keep using the same workflows, with setup and env examples detailed in the integration guide and explained further in the follow-up note that it costs $0 on top of normal model usage routing docs.

• Routing behavior: Model choice is driven both by NotDiamond’s evaluation and user constraints, so the same prompt can go to different models depending on quality/cost tuning while still honoring patterns like "only Anthropic" or "no experimental models" auto router intro.

The point is: model selection for Claude Code can now live in a shared router config instead of hard-coded model names in every agent setup, which changes how teams think about swapping in new models or mixing providers at inference time.

🧩 MCP practices to curb context bloat

Interoperability angle today centers on context efficiency and live docs. New: Warp’s MCP search subagent reduces context up to 26% while staying model‑agnostic; Hyperbrowser’s /docs fetch continues to see adoption.

Warp ships MCP search subagent to cut agent context by up to 26%

MCP search subagent (Warp): Warp released an MCP "search subagent" that sits between agents and MCP servers, automatically querying only the tools and docs needed for a request and trimming what gets sent back to the model, which reduced context usage by up to 26% in their internal testing while remaining model‑agnostic, according to the Warp announcement and the technical blog.

• Model‑agnostic context control: The subagent works with any model front‑end that can talk MCP by routing queries through itself, so the same installation helps Claude Code, Codex, or custom harnesses avoid dumping entire tool payloads into prompts Warp announcement.

• Search‑style tool discovery: Instead of preloading every MCP tool into context, the subagent indexes tool schemas and server outputs and then performs lightweight searches to decide which servers and endpoints to call for a given user query, as explained in the technical blog.

• Focus on real‑world MCP setups: Warp positions this as a fix for "MCP servers bloat your context window" complaints by letting teams keep many servers (GitHub, DBs, ticketing, etc.) configured, while only paying context for the ones that actually matter on each turn Warp announcement.

This turns MCP from a convenience layer that quietly inflates prompts into something closer to a query planner, which is directly relevant to anyone wiring multiple tools into long‑running agents.

Hyperbrowser /docs fetch gains traction as devs pair it with local Librarian

Live docs ingestion (Hyperbrowser + ecosystem): Following up on docs fetch, where Hyperbrowser’s /docs fetch <url> MCP tool was introduced as a way to stream live web documentation into Claude Code repos, today’s chatter shows it being treated as a standard capability and also compared to local‑first alternatives like Librarian, a CLI that ingests docs into a SQLite+semantic index for agents Hyperbrowser promo and Librarian overview.

• Docs-as-skill for Claude Code: Hyperbrowser’s MCP server lets agents pull fresh docs from arbitrary URLs, cache them in the repo, and reuse them across sessions so models don’t have to re-read entire sites every time, which developers describe as effectively removing “coding against outdated docs” from their workflows Hyperbrowser promo.

• Local progressive disclosure with Librarian: Librarian offers a complementary pattern—CLI‑first ingestion of documentation sites into a local SQLite database with semantic search and progressive disclosure, so agents only fetch specific slices of a doc when needed rather than dumping whole pages into context Librarian overview and Librarian comparison.

• Shared goal: less prompt bloat: Both tools emphasize keeping bulky reference material out of the main prompt until it is explicitly requested, whether via MCP (/docs fetch) or via an agent skill that shells out to librarian search/get, which is framed as a direct response to context bloat in multi‑tool coding setups Hyperbrowser promo and Librarian overview.

For AI engineers building MCP fleets, this points to an emerging best practice: treat documentation and reference material as queryable corpora behind tools, not as static blobs shoved into every agent turn.

🎬 Creative stacks: fast video and controllable image series

Substantial generative media activity: fal’s LTX 2.0 with 60 fps + native audio, Nano Banana Pro JSON‑prompted series variants, Genspark’s comic‑agent flow, and Qwen‑Image leaderboard moves.

fal launches LTX 2.0 for fast 60 fps text‑to‑video

LTX 2.0 (fal): fal introduced LTX 2.0, a family of text‑ and image‑to‑video models that generate up to ~20‑second clips at up to 60 fps with native, synchronized audio; the full and distilled variants, plus LoRA endpoints, are exposed as separate APIs for text‑to‑video, image‑to‑video, and video extension, as shown in the launch and endpoint overview in ltx 2 launch and endpoint list. Distilled LTX 2.0 is advertised as producing a clip in under 30 seconds while preserving the visual quality and motion control of the full model, which targets longer or more detailed shots according to the same sources ltx 2 launch.

• Endpoint spread: The release highlights full, distilled, and LoRA‑tunable endpoints for text‑to‑video, image‑to‑video, and video continuation, giving teams a menu of speed‑versus‑flexibility trade‑offs for different workloads endpoint list.

• Creative control: On‑screen captions in the demo emphasize camera controls and scene dynamics—pans, pushes, and complex environments—rather than only static framing, which positions LTX 2.0 as a base for more authored motion design rather than pure prompt roulette ltx 2 launch.

The point is: LTX 2.0 moves fal from a single model drop into a small stack of video building blocks, with a clear path to slot full, distilled, and LoRA variants into different points of a production pipeline.

Nano Banana Pro JSON prompts enable controllable image series

Nano Banana Pro (Freepik): Builders are starting to treat Nano Banana Pro as a controllable image engine by first asking Gemini 3 Pro to emit a rich JSON description of an existing photo—subject, background, lighting, textures, constraints, and negative prompts—and then feeding that JSON back into Nano Banana to both recreate and vary the shot, as detailed in the ice‑storm patio chair example in json prompt example. Following up on multi-tool pipeline where artists stacked Nano Banana with other tools, this pattern turns the model into part of a structured visual workflow rather than a one‑off generator.

• Two‑step recipe: The flow is: (1) take a real image; (2) have Gemini 3 Pro output a deeply structured JSON object capturing subject, anomalies, exact icicle geometry, background furniture, deck texture, photographic style, color palette, and must‑keep vs avoid constraints; (3) run that JSON as the prompt to test reconstruction quality; and (4) prepend instructions like “generate a new image with significantly different nouns, objects, color palette and pose while strictly preserving vibe and mood” to create variants from the same "series" json prompt example.

• Adversarial and stylistic control: The same author shows a second example where an ordinary car dashboard shot is turned into a cassette‑futurist, diegetic UI by describing instruments, indicator lights, and display layout in JSON, then re‑rendering it with a different aesthetic while keeping the underlying information architecture consistent cassette dash sample.

The result is a repeatable pattern: use a language model to formalize an image into a schema, then let Nano Banana Pro explore the space of scenes that share that schema’s "vibe" without drifting into unrelated content.

Genspark turns AI image model into one‑prompt comic agent

AI Image comics (Genspark): Genspark upgraded its AI Image feature so it now behaves like an agent that can take a single prompt and then iteratively design characters, follow a plot outline, and generate an entire comic or multi‑image story sequence, as shown in the end‑to‑end demo in genspark comic demo. The flow runs on top of models like Nano Banana Pro but abstracts them behind a comic‑aware planner that handles series consistency rather than single images genspark comic demo.

• Agentic workflow: The system is pitched as: one prompt describing the story you want to tell; the agent then proposes character designs, refines them with follow‑up generations, lays out panels or pages that follow the plot beats, and keeps generating episodes from the same universe, instead of forcing the user to hand‑orchestrate prompts image by image genspark comic demo.

• Model options and volume: The example uses Nano Banana Pro as the underlying generator, but Genspark notes that members can pick from multiple models to match different art styles, and that subscribers get unlimited generations so they can iterate on character sheets and story arcs without worrying about per‑image quotas genspark comic demo and membership note.

This shifts AI image use from isolated stills toward long‑form visual storytelling, with the agent acting as both director and continuity editor over a sequence of related images.

💼 Enterprise AI: Grok org plans, Alexa+, health usage, dev UX

Company moves and adoption metrics: xAI’s enterprise tiers, Amazon’s Alexa+ early access UI, ChatGPT health usage signals, and Google AI Studio dashboard QoL. Traffic deltas vs Gemini included as a market signal.

ChatGPT web traffic drops ~22% as Gemini rises to ~40% of its size

Traffic share (ChatGPT vs Gemini): SimilarWeb estimates that ChatGPT’s daily web visits fell about 22% over the six weeks after the Gemini 3 launch—its 7‑day moving average dropped from roughly 203M to 158M visits—while Gemini held steady around 60M and now sits at about 38–40% of ChatGPT’s traffic, as visualized in the chart from traffic chart. Follow‑up analysis argues the pattern suggests ChatGPT use is tied tightly to work and school rhythms, whereas Gemini’s flatter line hints at more consumer and Google‑surface usage, as explained in traffic analysis.

• Continuation of scale story: This adds nuance to earlier estimates that ChatGPT was nearing 900M users and that skeptics were "losing the argument" about adoption, following up on 900M users by showing that even at that scale, usage is sensitive to seasonal and competitive pressures.

• Relative positioning: The chart in traffic chart shows Gemini’s visits almost flat near 60M while ChatGPT’s line sags over the December holidays, and the ratio panel at the bottom climbs from ~33% to a peak of 39.2%, indicating Gemini is becoming a meaningful second entry point rather than an also‑ran.

The point is: ChatGPT still leads by a wide margin, but the web traffic gap with Gemini is narrowing, and usage looks more cyclical than the headline user numbers alone would suggest.

OpenAI pushes ChatGPT toward an in‑chat App Store with 800M users

ChatGPT app ecosystem (OpenAI): OpenAI is leaning hard into an App Store‑style ecosystem inside ChatGPT, with over 800M users able to invoke third‑party actions like ordering groceries via Instacart, creating playlists with Spotify, or finding hikes through AllTrails directly from the chat interface, as reported in the Wall Street Journal excerpted in ecosystem article. The piece frames this as a direct challenge to Apple’s App Store dominance, arguing that users may bypass traditional mobile apps entirely when a chatbot can sit between them and services, also highlighted in

.

• Developer channel: The description in ecosystem article notes that ChatGPT "apps" are now a core part of OpenAI’s growth plan, with the model mediating everything from shopping to media creation, which shifts distribution power away from app stores toward model owners.

• Strategic bet: By treating ChatGPT as a super‑app with embedded services rather than a standalone chatbot, OpenAI is trying to lock in both users and developers before Apple, Google, or Amazon can dominate conversational interfaces at the OS level.

This is why the monetization and governance details of ChatGPT’s in‑chat app ecosystem are starting to matter almost as much as the raw model capabilities.

xAI launches Grok Business and Enterprise tiers for organizations

Grok Business/Enterprise (xAI): xAI introduced Grok Business and Grok Enterprise, positioning its assistant as an org‑ready product with Google Drive integration, permission‑aware link sharing, and inline citations for teams according to the launch details in grok tiers. Grok Enterprise adds SSO, SCIM user provisioning, and a Vault environment with client‑managed encryption keys plus isolated data handling and large‑scale document search via the Collections API, all aimed at regulated customers as described in grok tiers.

• Business feature set: Grok Business lets teams query shared Google Drive data with link‑sensitive access checks, and it surfaces cited sources for answers so responses can be audited before use in workflows, as outlined in grok tiers.

• Enterprise controls: Grok Enterprise layers on identity (SSO/SCIM), encrypted Vault storage where customers control keys, and options like Vault Search and agentic Collections APIs for long‑horizon tasks over large corpora, per grok tiers.

The point is: xAI is moving Grok from a consumer chatbot into a full enterprise offering with the usual security and compliance knobs rather than leaving that ground to OpenAI and Microsoft.

Leaked OpenAI roadmap sketches a full ChatGPT product suite for 2026

ChatGPT suite roadmap (OpenAI): A detailed roadmap summary describes OpenAI’s 2026 vision as a tightly connected suite: ChatGPT (Consumer) becomes a "personal super‑assistant" with steerable personality, long‑term memory, proactive help, and an ecosystem of apps; ChatGPT for Work turns into a daily execution surface for drafting, analyzing, and coordinating across org systems; and a separate Enterprise Automation Platform aims to be the OS for deploying and managing agents at scale, all outlined in roadmap summary. The same document sketches plans for Sora as a creative hub, Memory for persistent personalization, and Codex evolving from helper to proactive teammate embedded in IDEs and dev tooling, as echoed in the strategy essay linked in product strategy essay.

• Consumer and work split: The breakdown in roadmap summary differentiates ChatGPT for everyday life (health, shopping, learning, finance, writing) from ChatGPT for Work, which is described as "proactive/connected/multimedia/multi‑player" and aware of org documents, systems, and workflows.

• Agent platform ambition: The Enterprise Automation Platform section in roadmap summary explicitly talks about being the shared foundation to deploy, trust, and interoperate agents, suggesting OpenAI wants to sit underneath many in‑house automation efforts rather than only selling a single assistant.

Taken together, the roadmap reads less like a single app strategy and more like an attempt to build a full stack of assistants and agent infrastructure spanning consumers, teams, and enterprises.

OpenAI revenue forecast highlights ARPU challenge for future ChatGPT scale

ChatGPT revenue outlook (OpenAI): Internal forecasts reported by The Information suggest OpenAI expects ChatGPT to reach 2.6B weekly users by 2030 and generate about $112B in non‑subscription revenue plus another $46B in 2030 alone, mostly from free users monetized via ads and commerce, as summarized in revenue summary. The same piece stresses that almost 90% of current users are already outside the U.S. and Canada, where ad revenue per user is far lower, raising questions about whether ChatGPT’s massive scale will translate into sustainable margins, according to the analysis in information article.

• ARPU gap: Comparisons in revenue summary to Meta, Pinterest, and Snap show that international ARPU can be a fraction of North American ARPU, implying that even with billions of users, ChatGPT will have to work much harder per user to match big‑tech revenue benchmarks.

• Business mix tension: The forecast in revenue summary leans heavily on non‑subscription revenue from "free" users, which may push OpenAI to prioritize ad and commerce integrations in ChatGPT—exactly the kind of App Store‑like ecosystem described in ecosystem article.

So the financial picture sketched here is one where OpenAI’s long‑term fortunes hinge less on Pro/Enterprise seats and more on whether it can extract enough value from billions of low‑ARPU users around the world.

OpenAI says 5%+ of ChatGPT traffic is health‑related, billions weekly

ChatGPT health usage (OpenAI): OpenAI is now saying that more than 5% of all ChatGPT messages globally are about healthcare, amounting to billions of messages each week focused on explaining medical information, preparing questions for doctors, and managing wellbeing, according to the stat highlighted in usage stat. A new health‑focused promo from OpenAI frames ChatGPT explicitly as a companion for understanding diagnoses and planning care conversations rather than replacing clinicians, as shown in the video in health video.

• Usage pattern: The messaging in health video and usage stat describes common use cases like breaking down lab results, asking “what does this MRI report mean?”, drafting questions for appointments, and tracking routines, which is consistent with the Science paper summary in science article noting global health queries.

• Scale and risk frame: The phrasing in usage stat—"billions of messages each week" and "more than 5%"—signals that health is already a major slice of ChatGPT usage, which heightens questions about reliability, safety guardrails, and regulatory scrutiny around medical advice even if OpenAI keeps marketing this as education and preparation rather than diagnosis.

This moves ChatGPT health from a niche curiosity into something closer to informal infrastructure for patient education, which health systems and regulators will likely need to treat as part of the care information environment.

Amazon opens Alexa+ web assistant in early access on alexa.com

Alexa+ (Amazon): Amazon quietly lit up an Alexa+ web experience at alexa.com, giving early‑access users a chatbot‑style interface that greets them by name and offers preset actions like Plan, Learn, Create, Shop, and Find in one place, as shown in alexa plus tweet. The UI looks more like a modern AI assistant than the old voice‑first Alexa, with side navigation for history and lists hinting at deeper account‑level context.

• Assistant surface: The screenshot in alexa plus tweet shows a central "Ask Alexa" box with file upload, suggesting multimodal input, plus quick modes that map closely onto common generative AI workflows (planning, learning, content creation, shopping assistance, and lookup).

• E‑commerce wedge: The right‑hand "List" panel, which includes shopping lists like Whole Foods, Weekly Grocery, and Camping Trip in alexa plus tweet, underlines that this is as much about driving Amazon retail flows as it is about generic Q&A.

So what changes is that Alexa is no longer only a smart‑speaker voice; it now has a proper web front‑end that can compete more directly with ChatGPT and Gemini as a consumer assistant that also routes users into Amazon’s shopping stack.

Amazon’s Rufus now appears in ~40% of mobile app sessions

Rufus usage (Amazon): SensorTower data suggests that around 40% of Amazon’s Android app sessions now involve Rufus, the company’s in‑app AI shopping assistant, and that purchases made after Rufus‑involved searches convert materially better than those from regular search, as plotted in the charts shared in rufus stats. The share of sessions that include Rufus climbed from roughly 28% to nearly 40% over November according to the graph in

.

• Session penetration: The top chart in rufus stats shows the line for Rufus sessions rising while non‑Rufus sessions fall, indicating that a significant portion of Amazon’s mobile traffic is already flowing through an AI assistant rather than traditional keyword search.

• Conversion uplift: The second chart in rufus stats indexes purchases involving Rufus vs non‑Rufus sessions, with the Rufus line peaking above 200 by late November while the non‑Rufus line hovers closer to 135, implying a sizable relative lift in purchase rates when Rufus is in the loop.

This positions Rufus as not just a UX experiment but a measurable revenue lever for Amazon’s core retail business.

Microsoft rebrands Office as the Microsoft 365 Copilot app amid “Microslop” backlash

Microsoft 365 Copilot app (Microsoft): Microsoft has renamed the classic Office app to the Microsoft 365 Copilot app, describing it as a single place to "create, share, and collaborate" with favorite apps now "including Copilot", as shown in the onboarding screen in copilot rename. Commentators note this makes it easier for the company to claim hundreds of millions of "Copilot" users overnight, while social media backlash under the meme "Microslop" criticizes the push to embed AI everywhere despite perceived weak usefulness, as framed in microslop thread.

• Metric framing: The wording in copilot rename implies any active Office app user now counts toward Copilot usage, which observers in microslop thread argue is a way to inflate AI user metrics rather than evidence of real daily Copilot engagement.

• Perception problem: The Windows Central coverage linked in microsoft backlash article and the "don’t call it slop" remarks attributed to Satya Nadella in microslop thread show Microsoft fighting an image problem: AI features seen as noisy overlays instead of essential tools.

So while the rebrand makes Copilot look ubiquitous on paper, the open question is how much of that presence reflects genuine, valued AI assistance versus checkbox integration.

Google AI Studio adds richer usage dashboards for Gemini developers

AI Studio dashboards (Google): Google rolled out a set of quality‑of‑life updates to the Google AI Studio usage dashboards, including a dedicated API success‑rate graph, Gemini embedding model usage views, the ability to zoom into specific days, and an updated chart design that makes error spikes easier to see, according to the product video in dashboard update. The launch thread notes that this should make it simpler for teams to understand how reliably their Gemini calls are behaving and where embedding traffic is going without building custom telemetry, as mentioned in dashboard update and linked from usage link.

• Observability focus: The clip in dashboard update shows a success‑rate timeseries overlaid with call counts plus a separate panel for embedding model usage by name, which gives developers a quick way to correlate failures and volume across different Gemini models.

• Workflow impact: By exposing day‑level zoom and improved graphing directly in AI Studio, Google reduces the need for smaller teams to wire up their own dashboards for basic reliability checks, though deeper analytics will still require exporting logs as implied by the sign‑in link in usage link.

So for teams already experimenting with Gemini via AI Studio, the product has inched closer to a real console for monitoring application health rather than a pure playground.

🛡️ Safety signals: misuse warnings and transparency gaps

Policy/safety news today centers on platform rules and transparency audits, plus leadership philosophy notes. No new regulation drops; these are governance/ops signals for teams shipping AI.

IBM tops 2025 Foundation Model Transparency Index as others lag badly

Foundation Model Transparency (Stanford HAI): Stanford’s 2025 Foundation Model Transparency Index gives IBM a 95/100 score, the highest in the index’s history, while most other major labs cluster far lower and the report concludes that overall transparency is declining, as described in the transparency thread and the hai article.

Scope and gaps: The index scores companies across training data disclosure, safety policies, deployment practices, and external oversight; IBM is the only lab that both documents how external researchers could replicate its training data and explicitly offers auditors access to that data, whereas firms like OpenAI (35/100), Meta (31/100) and Mistral (18/100) disclose far less detail on data and evaluation pipelines in the transparency thread. This signals a widening spread between a few highly transparent providers and a long tail of opaque ones.

Why it matters for builders: The index turns vague "trust" claims into a concrete checklist—who publishes eval methods, who explains fine‑tuning data, who enables third‑party audits—and provides regulators and enterprise buyers with a public reference point when deciding which models can be used in higher‑risk domains, as outlined in the hai article.

Anthropic leadership doubles down on safety‑first mission and early compute bets

Anthropic mission framing (Anthropic): President Daniela Amodei reiterates that Anthropic was founded to make safety a core value rather than an afterthought, contrasting the move as "running towards" a vision where reliability and economic success are intertwined, as she explains in the founding story clip and values recap.

Open dialogue on risk: Amodei links Claude’s potential for disease research and health impact to the need to tackle "tough stuff"—misuse, bias, and high‑stakes errors—through open public conversation, arguing that transparent discussion of downsides is a prerequisite to realizing benefits for healthcare and beyond in the risk–benefit comment. She frames this as a way to avoid bad outcomes while still unlocking what she calls Claude’s "huge" upside for curing disease.

Compute spending and arms race context: Responding to headlines about massive AI capex, Amodei notes that headline numbers can be misleading because different labs strike hardware deals on very different timelines and terms; she says much of the sector is committing to GPUs and TPUs years before deployment because "access to future compute determines future capability," and warns that teams which do not pre‑commit risk missing entire training cycles, as discussed in the compute spend explanation. This ties Anthropic’s safety rhetoric directly to infrastructure strategy: long‑term model governance is being decided at the same time as multi‑year hardware contracts are signed.

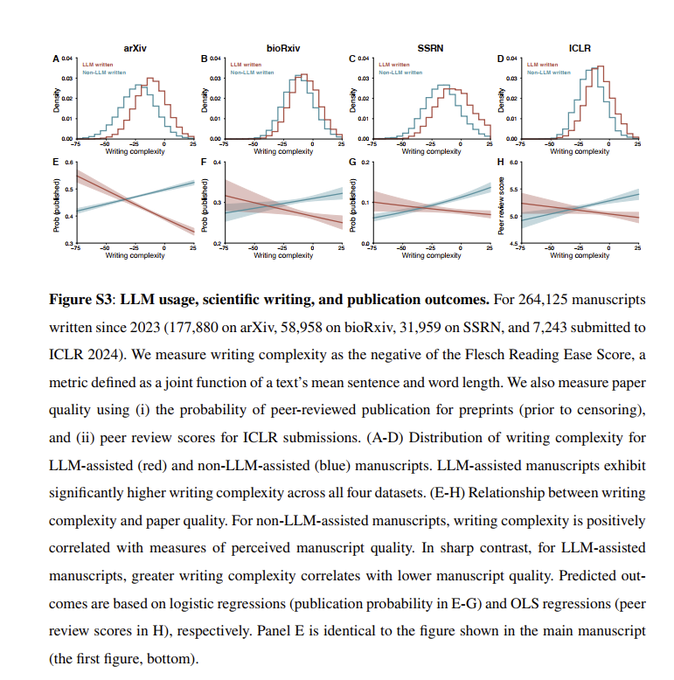

Science study warns LLM‑driven paper flood could break peer review

AI and scientific publishing (Science): A new paper in Science argues that large language models are reshaping scientific production so quickly that traditional peer review is at risk of collapse, noting that AI already generates large volumes of complex papers and that, unlike human work, higher textual complexity is now often a signal of lower quality, as highlighted by Ethan Mollick in the peer review thread and detailed in the science article.

Flood and signal inversion: The authors find that AI tools dramatically boost productivity—especially for non‑English‑speaking researchers—but also create a flood of submissions where superficial complexity no longer correlates with originality or rigor, turning a long‑standing reviewer heuristic on its head, as summarized in the impact commentary. They emphasize that current systems lack plans for vetting, distributing, and absorbing discoveries if AI begins to automate parts of scientific reasoning itself.

Operational risks for research ecosystems: For AI labs and research orgs, this raises governance questions beyond simple plagiarism checks: how to design new review processes, how to detect low‑value AI‑generated work at scale, and how to maintain trust in scientific outputs when readers cannot assume that dense, technical prose implies human‑level understanding or effort, as the science article lays out.

Meta tests RL‑tuned LLMs as scalable content moderators with sparse labels

RL content moderation (Meta): A new AIatMeta paper reports that reinforcement learning can significantly improve LLM content moderation performance even when high‑quality human labels are scarce, positioning RL‑tuned models as potential front‑line filters for harmful or policy‑breaking content, according to the paper teaser.