Mistral 3 opens 675B‑param MoE – 3B–14B vision models land

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Mistral 3 finally dropped as a fully open, Apache‑2.0 stack: a 675B‑parameter MoE Large 3 with 41B active parameters plus three dense Ministral 3 models at 3B, 8B, and 14B. All of them handle 256k context and images, and all ship in base and instruct flavors, with reasoning variants training on the small models. After a month of talking about closed frontier reasoners like DeepSeek V3.2 Speciale and Opus 4.5, this is the first frontier‑class suite most teams can actually own, fine‑tune, and ship without license drama.

On benchmarks, Large 3 looks like the new Apache‑licensed default: around 85.5 on multilingual MMLU, 43.9 on GPQA‑Diamond, and a 1418 text Elo in LMArena under its “Jaguar” codename, where it ranks #6 overall and #1 for coding among open‑weights. Artificial Analysis pegs its Intelligence Index 11 points above Mistral Large 2, trading blows with DeepSeek‑3.1 and Kimi K2 while still trailing top proprietary reasoning models.

The ecosystem arrived on day zero. vLLM serves the whole family with NVFP4 and sparse MoE kernels, Ollama has one‑line installs for all three Ministrals, Modal chopped cold starts for 3B from minutes to ~12 seconds, and Baseten is already recommending 8×B200 for production Large 3. The catch: a new community jailbreak shows Mistral 3 instruct models are very pliable, so you’ll need your own guardrails if you wire them into agents.

Top links today

- Anthropic acquisition of Bun and Claude Code plans

- Claude for Nonprofits launch details

- Anthropic study on Claude’s impact on engineering work

- Mistral 3 model family technical overview

- Mistral 3 models on Hugging Face

- Apple CLaRa‑7B‑Instruct model release

- Artificial Analysis deep dive on Amazon Nova 2

- Perplexity BrowseSafe and BrowseSafe‑Bench announcement

- OpenAI alignment blog on Codex code review

- LangSmith Agent Builder launch blog

- W&B Models LLM Evaluation Jobs announcement

- DeepSeek‑V3.2 model on Hugging Face

- Runway Gen‑4.5 video model benchmarks

- Artificial Analysis benchmarking of FLUX.2 image models

- Modal deployment guide for Mistral 3 models

Feature Spotlight

Feature: Mistral 3 goes fully open — Large 3 + Ministral 3B/8B/14B

Mistral opens a full model stack (Large 3 + Ministral 3/8/14B, multimodal, 256k) under Apache‑2.0 with immediate support across vLLM, Modal, Ollama and clouds—giving teams a credible open alternative at multiple sizes.

Broad, cross‑account launch of an Apache‑2.0 family: a frontier MoE (675B total/41B active) plus three small, vision‑capable dense models. Day‑0 ecosystem support and fresh bench data dominate today’s feed.

Jump to Feature: Mistral 3 goes fully open — Large 3 + Ministral 3B/8B/14B topicsTable of Contents

🧩 Feature: Mistral 3 goes fully open — Large 3 + Ministral 3B/8B/14B

Broad, cross‑account launch of an Apache‑2.0 family: a frontier MoE (675B total/41B active) plus three small, vision‑capable dense models. Day‑0 ecosystem support and fresh bench data dominate today’s feed.

Mistral 3 family launches as fully Apache‑2.0 open model suite

Mistral has released the full Mistral 3 family under Apache 2.0: Mistral Large 3, a 675B‑parameter MoE with 41B active experts, plus three dense Ministral 3 models at 14B, 8B, and 3B parameters, all multimodal and shipping in base and instruct variants, with reasoning versions for the small models already training. The models offer 256k context windows, handle both text and images, and are immediately available across Mistral’s own Studio, major clouds like AWS Bedrock and Azure AI, and open‑weight hubs such as Hugging Face and OpenRouter, giving teams a rare frontier‑class stack they can actually own and fine‑tune without license drama launch thread Mistral blog aa writeup.

For AI engineers and infra leads, the point is: this is a credible open alternative in a space that’s been dominated by Chinese open models and closed Western APIs. You get a frontier‑scale MoE you can run wherever you have H100s or Blackwell, plus smaller dense models that slot into everything from serverless inference to on‑device experiments, all under a license your legal team won’t balk at. Leaders and analysts should read this as Mistral doubling down on an “ownable” frontier stack, rather than trying to chase OpenAI and Google on closed IP.

Mistral Large 3 ranks near top of open models on coding and reasoning

On benchmarks, Mistral Large 3 lands as one of the strongest open non‑reasoning models: Artificial Analysis shows it scoring 85.5 on 8‑language MMLU and 43.9 on GPQA‑Diamond, edging DeepSeek‑3.1 and Kimi‑K2 on several core‑intelligence metrics while trading wins on others benchmark chart. In the LMArena crowdsourced evals, the same model (tested under the “Jaguar” codename) posts a 1418 text ELO—#6 among all open models and #2 among non‑reasoning open models—and takes the #1 spot in coding across the open‑weights field arena ranking aa writeup.

Artificial Analysis pegs its overall Intelligence Index at 38, an 11‑point jump over Mistral Large 2 but still behind top proprietary reasoning models like DeepSeek V3.2 Speciale and Kimi K2 Thinking aa writeup. The picture for engineers is: if you want an Apache‑licensed large model with strong multilingual knowledge and very good pure coding ability, Large 3 now sits near the top of the open pack—but you’ll still reach for DeepSeek or closed labs when you need maximum chain‑of‑thought style reasoning. For leaders, this reinforces Mistral’s positioning: they’re not trying to beat Opus 4.5 or Gemini 3 at all costs, they’re trying to own the “most capable model you can actually self‑host and customize” niche.

Ministral 3B/8B/14B bring multimodal small models to edge and browser

Alongside Large 3, Mistral shipped three dense Ministral 3 models (3B, 8B, 14B) that all support vision and come in base, instruct, and reasoning variants, with the 3B small enough to run fully in the browser via WebGPU while answering questions about webcam input in Simon Willison’s demo browser demo aa writeup. These models aim squarely at the cost‑sensitive tier where Qwen3 and Gemma 3 have been strong: Artificial Analysis reports Ministral‑14B Instruct beating the previous Mistral Small 3.2 despite using ~40% fewer parameters, and the whole trio offering “best‑in‑class” cost‑to‑performance for small multimodal models aa writeup.

For builders, that means you now have Apache‑licensed, vision‑capable models that can run on a single consumer GPU, in serverless setups, or even directly in the browser for things like UI copilots, client‑side document viewers, or webcam‑based tooling. If you’ve been hesitating to ship Chinese‑licensed models into regulated environments, Ministral gives you a plausible swap‑in that still covers images, longish context, and reasoning, without dragging in a 70B‑parameter monster.

vLLM, Ollama, Modal and Baseten ship day‑0 support for Mistral 3

The ecosystem moved fast: vLLM now serves the entire Mistral 3 lineup with NVFP4‑optimized checkpoints, sparse MoE kernels for Large 3, prefill/decode disaggregation, and multimodal + long‑context support on A100, H100, and forthcoming Blackwell GPUs vllm announcement. Ollama added one‑line installs for Ministral‑3 3B/8B/14B both locally and on its cloud, while Modal reports that GPU snapshotting cuts cold starts for Ministral‑3B from about two minutes down to roughly twelve seconds—huge if you’re doing serverless or spiky workloads ollama cli rollout modal launch. Baseten, meanwhile, is already offering Large 3 as a dedicated deployment and suggests 8×B200 as the sweet spot for production inference baseten deployment.

This matters because Mistral 3 isn’t just weights on Hugging Face; it’s a stack you can actually drop into existing infra primitives—vLLM clusters, Ollama‑based local tools, Modal functions, or managed endpoints—without weeks of glue code. If you’re choosing a frontier‑ish open model today, the day‑0 support from these platforms means switching costs are more about prompt and eval work than engine plumbing.

🦀 Anthropic buys Bun and claims $1B Claude Code run‑rate

Strategy thread for agentic coding: Bun joins Anthropic (MIT license retained) as the execution/runtime layer while Claude Code reports rapid monetization. Excludes Mistral 3, which is today’s feature.

Anthropic buys Bun as Claude Code hits $1B run‑rate in 6 months

Anthropic has acquired the Bun JavaScript/TypeScript runtime just as Claude Code reaches a reported $1B run‑rate only six months after GA, and Bun will remain MIT‑licensed and open source. anthropic news This effectively gives Anthropic its own full-stack runtime layer for agentic coding, on top of Claude Opus/Sonnet/Haiku and the Claude Code product.

In Anthropic’s announcement, they frame Bun as a “breakthrough JavaScript runtime” that has already powered the native Claude Code installer, with Jarred Sumner and team joining Anthropic to keep building it. (anthropic news, employee thread) Bun’s own post stresses that nothing is being closed; it stays MIT, with Anthropic explicitly incentivized to keep it great. bun blog That matters if you’re betting your stack on Bun today.

On the business side, multiple Anthropic folks and partners state that Claude Code went from 0 to $1B in annualized revenue in about six months since general availability. (revenue stat, user sentiment) That’s a very fast monetization curve for an IDE‑embedded agent, and it explains why Anthropic is willing to buy deep into the runtime layer instead of treating it as commodity infra.

Builders are already drawing the architecture picture out loud: expand Bun’s standard library, train Claude Code on that API surface, then let Claude generate, run, and host JS/TS apps end‑to‑end on a stack Anthropic controls. runtime strategy That’s the “Claude = compute + orchestration + execution” vision some are now calling a flywheel for agentic coding. strategy framing For AI engineers, the point is: Anthropic isn’t just shipping a smarter model. They’re pulling the execution environment (Bun) into the same house as the planning and reasoning (Claude Code), while keeping it open‑source. If you’re designing agents that do real work via code execution, this is a strong signal that the runtime is now part of the competitive surface, not an afterthought.

Anthropic study: engineers now route 60% of their work through Claude Code

Anthropic published a detailed internal study showing that its engineers now rely on Claude for about 60% of their daily work, with a self‑reported ~50% productivity boost across a wide range of coding tasks. (research summary, research article) This is not a lab demo; it’s based on 132 staff surveys, 53 in‑depth interviews, and 200K Claude Code sessions.

Following up on Tool Search, which showed Claude agents learning to pull far less MCP context, this new report focuses on how work has changed. Engineers say Claude shines at debugging, spelunking unfamiliar code, and handling glue tasks, while humans keep the hard design calls and reviews. productivity chart About 27% of Claude‑assisted work wouldn’t have been done at all before—things like small tools, refactors, and one‑off analyses that were never worth the manual effort. study recap Usage data shows clear movement toward more autonomous agents: Claude Code now routinely performs ~20 consecutive actions (editing files, running commands) before a human intervenes, roughly double what the team saw back in February 2025. research summary That effectively turns backend engineers into “full‑stack” devs with Claude handling front‑end churn, and lets non‑technical staff run data and debugging workflows they previously couldn’t touch. capability expansion The study doesn’t sugarcoat the trade‑offs. Engineers talk about skill atrophy (“I worry I don’t understand the systems as deeply anymore”) and weaker mentorship loops as juniors ask Claude first instead of seniors. team dynamics Some also miss the satisfaction of hand‑coding tricky pieces even when delegating is objectively faster. mixed feelings For teams building or buying agentic coding tools, this report is a rare, high‑resolution look at the future: AI is not replacing developers, but it is changing what “doing your job” means. The experiments Anthropic runs on itself—Tool Search, Claude Code, now a Bun‑based runtime—are probably a year or two ahead of what most companies will experience, which makes this a useful preview of where your own workflows are likely headed.

Anthropic launches Claude for Nonprofits with discounts and training

Anthropic introduced “Claude for Nonprofits,” a dedicated program with discounted plans, integrations, and free training aimed at charities and NGOs. nonprofit launch The pitch is to let small teams offload admin and content work to Claude so more human time goes to frontline missions.

The offering bundles lower‑priced access to Claude models, prebuilt integrations into common tools, and structured training so non‑technical staff can safely use AI for tasks like grant drafts, reporting, translation, and basic data analysis. nonprofit page The framing is explicitly time‑saving rather than head‑count cutting.

For AI leaders in mission‑driven orgs, this is a signal that Claude isn’t only chasing enterprise dev teams and Fortune 500s. If you’re already experimenting with general ChatGPT/Gemini accounts, the non‑profit program may give you more predictable pricing plus some guardrails and onboarding you’d otherwise have to invent yourself.

The practical angle: this also widens Claude’s footprint in sectors that often have messy data, legacy docs, and tiny IT teams. If Anthropic can make AI dependable there, those patterns (templates, governance, playbooks) are likely to flow back into the broader Claude Code and agentic tooling ecosystem over time.

Developers see 3× faster agent runs using Bun vs Rust and Claude harnesses

Early experiments from practitioners suggest Bun isn’t just a strategic acquisition for Anthropic—it’s already speeding up real coding agents in the wild.

One developer reports that moving his "Clawd" coding agent from a Rust-based Codex harness to a Bun‑based stack made it faster than his previous Rust setup and roughly 3× faster than calling Claude directly for the same workflows. runtime anecdote He compiled Bun bytecode for a coding agent binary and saw snappier turn‑around in interactive sessions. agent pr Another demo shows Bun running computer‑use models on top of Stagehand and Browserbase, highlighting low startup latency and quick event handling inside a browser automation loop. browser demo That matters for agents that chain lots of small steps (open page → fill form → click button) where JS event overhead and startup time dominate.

The takeaway for AI engineers is simple. If your agent is heavily I/O‑bound and lives on Node‑style runtimes today, Bun is worth benchmarking as the host for your orchestrator or tools layer—especially now that Anthropic itself is doubling down on it for Claude Code. anthropic news Don’t assume model latency is the only knob; the runtime can easily be the bottleneck in multi‑step agent flows.

🟥 OpenAI competitive reset: ‘Code Red’, new reasoning, and ‘Garlic’ pretrain

Market competition storyline: OpenAI prioritizes ChatGPT UX/speed/personalization, previews a near‑term reasoning model, and briefs on ‘Garlic’ pretraining wins amid data showing a short‑term traffic dip.

OpenAI reportedly declares “code red”, pausing ads to refocus on ChatGPT

OpenAI has reportedly issued a “code red” directive that tells teams to pause the new ads push and some side initiatives so they can focus on making ChatGPT faster, more reliable, and more personalized in response to pressure from Gemini and Claude. code red article Commentaries summarizing The Information’s reporting say the reset prioritizes day‑to‑day UX—better answer coverage, fewer spurious refusals, stronger memory and customization—over longer‑horizon bets like agent frameworks and personalized news feeds that don’t immediately move retention. analysis thread Builders should read this as OpenAI optimizing for consumer stickiness right now: expect more investment in core ChatGPT quality and latency, and slower rollout on flashier but non‑essential features like in‑chat ads and experimental agents. ads a/b

Data shows ChatGPT usage dipping ~6–7% as Gemini 3 traffic surges

Similarweb’s latest numbers suggest ChatGPT’s 7‑day average of unique daily active users fell about 6–7% in the two weeks after Gemini 3 Pro launched, while Gemini’s desktop+mobile web traffic rose from roughly 22% to 31% of ChatGPT’s level over the same period. similarweb stats

Following up on Gemini downloads, where the FT showed Gemini’s app closing the install gap, this is the first clear usage dent OpenAI has taken from a rival. Analysts note that OpenAI’s consumer business underpins a mooted $500B valuation and an internal goal of ~$20B ARR, so even a mid‑single‑digit drop in actives—especially one tightly correlated with a competitor launch—reinforces why leadership is yanking focus back to ChatGPT experience under the current “code red”. valuation context

Leaks describe OpenAI’s “Garlic” pretrain beating Gemini 3 and Opus 4.5

Multiple summaries of a paywalled The Information piece describe a new OpenAI pretraining run codenamed “Garlic” that reportedly packs large‑model knowledge into smaller architectures which already outperform Google’s Gemini 3 and Anthropic’s Opus 4.5 on internal coding and reasoning evaluations. garlic summary

Garlic is said to inherit a cleaned‑up pretraining recipe from a larger base project (“Shallotpeat”), fixing structural bugs in GPT‑4.5‑era models so that more efficient, smaller variants can hit the same or better capability, with a potential public branding as GPT‑5.2 or GPT‑5.5 sometime in early 2026 if post‑training and safety checks pan out. garlic details Commentators tie this to Mark Chen’s recent remarks about having spent six months rebuilding OpenAI’s “muscle” in pretraining and seeing fresh “low‑hanging fruit” there, reinforcing the view that Garlic is their main attempt to regain efficiency and quality leadership via smarter pretraining rather than only piling more RL on existing bases. pretraining comment Full details remain second‑hand until OpenAI publishes its own write‑up.news article

Mark Chen teases near‑term reasoning model “ahead of Gemini 3”

In a recent interview, OpenAI research chief Mark Chen said the lab already has internal models that perform at roughly Gemini 3’s level on benchmarks and that they are "pretty confident" they’ll release them soon, with even better successors planned.

Independent reporting summarized by testing trackers adds that The Information expects a new reasoning‑focused model as early as next week, explicitly intended to match or beat Gemini 3’s reasoning scores before the bigger Garlic pretrain lands. reasoning leak For engineers, this likely means a new GPT‑5.x‑class reasoning endpoint will drop into the existing ChatGPT/Codex product line in the very short term—without a full architectural reset—but you should expect it to be tuned hard for benchmarked reasoning first and only later for UX polish. internal models

OpenAI podcast unpacks GPT‑5.1 Instant’s reasoning and personality controls

OpenAI used a new episode of its official podcast to talk through how GPT‑5.1 Instant was trained to support stronger reasoning while also exposing controllable “personality” layers for different use cases. podcast intro In the conversation, researchers Christina Kim and Laurentia Roman describe blending high‑budget reasoning traces with cheaper fast‑path behavior, then steering outputs via post‑training so one base model can behave like a terse analyst, a friendly tutor, or a playful assistant based on a lightweight persona prompt.

For teams already standardizing on GPT‑5.1, the signal here is that a lot of future change may happen in these steering and safety layers rather than in raw architecture, which makes version upgrades easier to absorb but also means you should pin down and regression‑test the persona prompts that matter to your product. apple podcast

🟧 AWS Nova 2 family and Nova Act agent platform

Amazon returns with reasoning‑budgeted Nova 2.0 models (Lite/Pro/Omni) and a production‑minded browser agent platform. Benchmarks show strong agentic tool use; factuality remains mixed in other evals.

Amazon’s Nova 2 models put Pro/Lite/Omni/Sonic back in the frontier mix

Amazon rolled out the Nova 2 family on Bedrock—Lite, Pro, Omni and Sonic—aimed squarely at reasoning-heavy, agentic workloads with native multimodality and long context nova overview.

Pricing comes in at ~$1.25 / $10 per million input/output tokens for Nova 2 Pro and $0.3 / $2.5 for Lite and Omni, with Nova 2 Pro jumping ~30 points in Artificial Analysis’s Intelligence Index over Nova Premier while Lite improves by ~38 points aa writeup aws nova post. Nova 2 Omni adds full text/image/video/speech input with text/image outputs, and the whole family exposes controllable "thinking levels" so you can dial up or down reasoning compute per request nova overview.

Nova 2.0 Pro posts top‑tier agentic scores but mixed factuality

Nova 2.0 Pro Preview is testing as one of the strongest agentic models: it scores ~93% on τ²‑Bench Telecom and ~80% on IFBench at medium/high reasoning budgets, putting it alongside or ahead of Claude Opus 4.5, Gemini 3 Pro and Grok 4.1 Fast for tool‑calling workflows aa writeup agentic summary.

Artificial Analysis also finds Nova 2.0 Pro’s token usage on its full Intelligence Index is relatively low, costing about $662 to run the suite—cheaper than Claude Sonnet 4.5 ($817) and Gemini 3 Pro ($1201) but more than some Chinese models aa writeup. The catch is factual QA: Nova 2 models sit near the bottom of the AA‑Omniscience Index with high hallucination rates, so teams will want strong retrieval and guardrails if they use Nova as the core reasoning engine rather than just as an agentic router omniscience chart hallucination note.

AWS launches Nova Act for “normcore” browser agents at scale

Amazon introduced Nova Act, an AWS agent platform focused on boring‑but‑critical browser workflows—multi‑page form filling, staging env QA, shopping/booking agents, and bulk UI data extraction nova act thread.

The stack provides a web playground, a VS Code agent extension, and a Bedrock runtime; agents are trained with reinforcement learning in safe “gym” environments so they learn cause‑and‑effect on real UIs without breaking production nova act thread. For builders, the pitch is that you describe the workflow once, hook in tools and IAM, and let Nova Act handle sequencing, retries, and observability instead of hand‑rolling Selenium‑style bots nova act followup.

Nova 2 Lite lands on OpenRouter with 1M context and free trial

OpenRouter added AWS’s Nova 2 Lite as a 1M‑context model with native image and video understanding plus adjustable low/medium/high reasoning settings, and is offering it free for two weeks to drive early experimentation openrouter launch.

Lite is priced at $0.3 / $2.5 per million input/output tokens in AWS’s own pricing, and Artificial Analysis shows it trading punches with Claude Haiku 4.5, GPT‑5 Mini and Gemini 2.5 Flash across MMLU‑Pro, AIME 2025 and τ²‑Bench Telecom, making it an interesting default for cost‑sensitive apps that still need long‑context, multimodal agents lite bench chart.

Nova Sonic 2.0 debuts as Amazon’s speech‑to‑speech reasoning model

Amazon quietly shipped Nova Sonic 2.0, a bidirectional speech‑to‑speech model that scores 87.1% on Artificial Analysis’s Big Bench Audio reasoning benchmark, slotting between Gemini 2.5 Flash Native Audio Thinking and OpenAI’s GPT Realtime sonic analysis.

The model reaches a median ~1.39 seconds time‑to‑first‑audio on Big Bench Audio—much faster than a pipeline of separate ASR → LLM → TTS at ~6.5 seconds—while supporting English, French, Italian, German and Spanish conversational flows sonic analysis latency followup. For teams exploring voice agents, that combo of high reasoning accuracy plus mid‑pack latency makes Nova Sonic 2.0 a credible alternative to OpenAI and Google for multi‑turn, speech‑native experiences.

🛠️ Agent IDEs and coding flows in practice

Concrete tooling updates and usage: Cursor’s Composer training internals, Cline’s inline diff explanations + free stealth model, chat‑to‑deploy agent builders, desktop control agents. Excludes Mistral 3 feature.

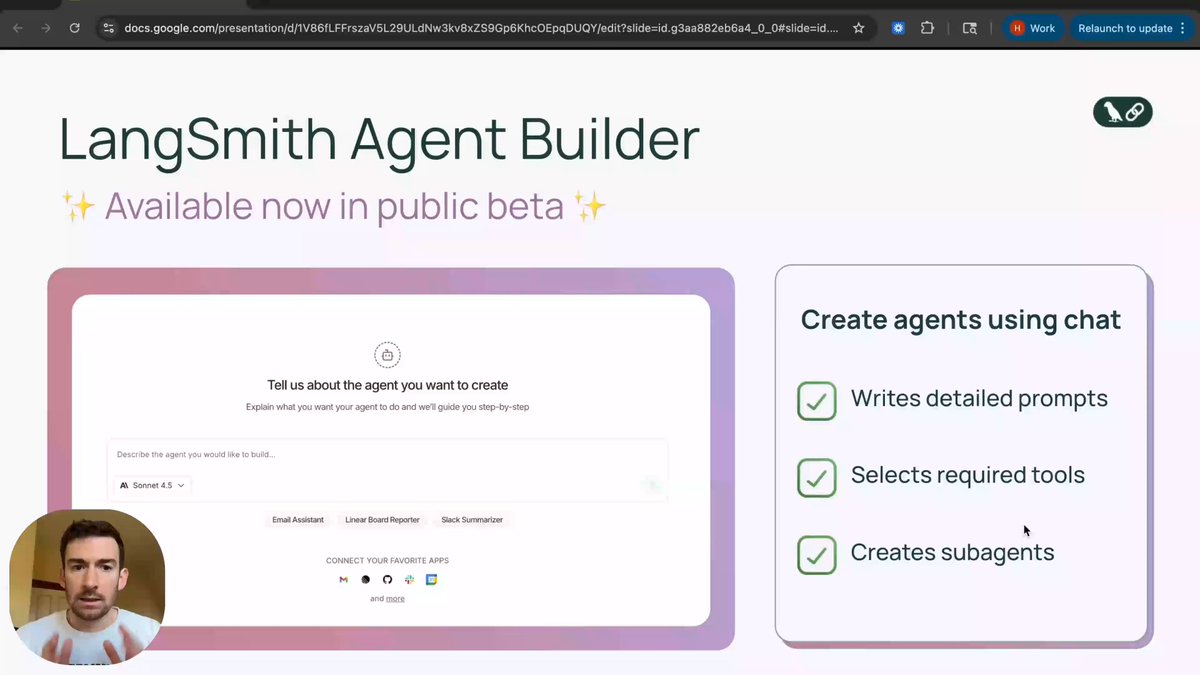

LangSmith Agent Builder public beta lets teams design and deploy agents via chat

LangChain opened a public beta of LangSmith Agent Builder, a visual environment where non‑framework experts can go from idea to deployed agent largely through conversation agent builder launch. You describe the job, and Agent Builder drafts prompts, pick tools (including BYO tools via MCP servers), and wires up sub‑agents into a working graph; it also supports browsing, copying, and customizing agents across a shared workspace so teams don’t keep reinventing the same flows

.

The beta exposes key dev levers: you can choose your preferred model for the agent, attach your own tools and backends, and lean on LangSmith’s infra for logging and evaluation instead of standing up a bespoke harness agent builder app launch blog. For engineering leads trying to standardize “agentic” patterns across many teams, this offers a middle ground between full bespoke LangGraph projects and ad‑hoc one‑off scripts: a catalog of reusable, instrumented agents that still respect your existing tools and data.

Cline v3.39.1 ships inline diff explanations and free 256k “microwave” model

Cline’s latest release focuses squarely on the pain of reviewing AI‑written code: a new /explain-changes command now injects natural‑language comments directly into your git diff, so you can see what the agent did line‑by‑line without jumping back to chat cline release notes. It works on any git diff (commits, PRs, branches), and follow‑up questions live in those inline comments instead of bloating chat history

.

Under the hood, Cline 3.39.1 also tightens the loop for power users: it accepts /commands mid‑sentence, adds a tabbed model picker for quickly swapping between “Recommended” and “Free”, lets you view/edit .clinerules from remote repos, and improves context compaction for long sessions cline release notes. On the model side, a new stealth model called microwave—256k context, tuned for agentic coding—is now available free in alpha through the built‑in Cline provider microwave teaser microwave blog. For anyone building with coding agents in VS Code or Cursor‑style flows, Cline is becoming less a thin chat wrapper and more a complete diff‑aware review environment.

Cursor shares Composer‑1 internals: RL training, MXFP8 kernels, shared agent backend

Cursor used an AIE talk to unpack how its Composer‑1 coding model is actually trained and served, giving agent‑IDE builders rare visibility into a frontier coding stack. Composer trains on a custom Cursor Bench, runs RL on the same Cloud Agent backend used in production, and relies on an internal Ray RL controller plus MXFP8‑optimized kernels to balance throughput and latency across a large training fleet composer talk link rl training summary.

The slides show a Composer Environment dashboard with clusters of pods and a smoothly rising log‑scaled Cursor Bench curve, implying that additional RL compute keeps buying accuracy rather than quickly plateauing rl training summary. For practitioners, the key lessons are: reuse the exact production agent server as the RL environment to avoid train/serve skew, invest in load‑balancing infrastructure (Ray or similar) early, and treat compiler/quantization work (here, MXFP8) as first‑class or you’ll cap decoding speed long before you hit model quality limits.

Cursor teams lean on Bugbot rules plus Composer for end‑to‑end coding flows

Several Cursor engineers walked through a concrete three‑stage workflow: plan with Claude Opus 4.5, implement with Composer‑1, then review inside the editor plan impl review. That last step now often runs through Bugbot rules, where .cursor/BUGBOT.md files encode review checklists—like database migration safety—and are executed automatically on diffs before human review bugbot rules doc bugbot guide.

The example Bugbot recipe focuses on catching risky DB migrations (missing down steps, lock‑prone operations, etc.), turning what used to be tribal knowledge into machine‑enforced policy. For AI‑heavy teams this kind of harness matters: if models like Composer can generate multi‑file changes in seconds, then rule‑based reviewers become the only scalable way to keep quality and migrations safe while humans focus on higher‑order design and edge‑case review.

Parallel’s n8n node turns web enrichment and search into reusable agent steps

Parallel shipped an official n8n node that gives automation builders four agent‑oriented web primitives: asynchronous enrichment, synchronous enrichment, web search, and full web chat parallel n8n launch. In a demo, their GTM engineering lead wires it into personal workflows—like research jobs and scheduled summaries—so agents can spin up wider, deeper web context without custom HTTP glue

.

The node is designed as a drop‑in building block: you configure which action to call, pass prompts and filters, then let n8n orchestrate retries and branching like any other integration n8n docs. For teams already living in tools like n8n or Zapier but wanting agent‑quality web search and enrichment, this is a practical way to plug Parallel’s stack into existing automations instead of standing up a whole new agent platform.

Parallel’s n8n node turns web enrichment and search into reusable agent steps

Parallel shipped an official n8n node that gives automation builders four agent‑oriented web primitives: asynchronous enrichment, synchronous enrichment, web search, and full web chat parallel n8n launch. In a demo, their GTM engineering lead wires it into personal workflows—like research jobs and scheduled summaries—so agents can spin up wider, deeper web context without custom HTTP glue

.

The node is designed as a drop‑in building block: you configure which action to call, pass prompts and filters, then let n8n orchestrate retries and branching like any other integration n8n docs. For teams already living in tools like n8n or Zapier but wanting agent‑quality web search and enrichment, this is a practical way to plug Parallel’s stack into existing automations instead of standing up a whole new agent platform.

Vercept’s Vy relaunches as a cross‑platform desktop agent that drives your UI

Vercept quietly rebuilt Vy, its “agent that uses your computer for you,” from the ground up and relaunched it on both Mac and Windows vy relaunch. Instead of sitting in a browser, Vy runs as a desktop app that sees your screen and literally moves the mouse, types, scrolls, and clicks through native apps—no APIs or per‑app plugins required

.

The team says the new build is faster, more efficient, and more robust than their first version, and they describe real usage patterns: operations and reporting (pulling data, exporting CSVs, updating trackers), email follow‑ups in your own voice, and recruiting/sales flows that source profiles and move them through a CRM vy relaunch vy capabilities page. For agent IDE folks, Vy is a reminder that “browser tools + MCP” isn’t the only surface—there’s strong demand for agents that act like junior ops staff inside messy, closed internal tools where APIs will never exist.

Hyperbrowser ships Hyper‑Research to benchmark coding tools and agents from URLs

Hyperbrowser released Hyper‑Research, an open‑source tool that lets you compare AI models, products, or research—like Cursor, Google’s Antigravity, and Windsurf—by dropping in URLs rather than wiring a custom eval harness hyper research launch. It scrapes each page with Hyperbrowser, uses Claude Opus 4.5 to extract key behaviors and claims, and then produces scoring dashboards plus visual diffs so you can see where tools diverge on features like planning, refactor loops, and trace visibility

.

The project repo includes prebuilt configurations for popular coding IDEs and agents, making it easy for infra or staff engineers to bring a little discipline to “vibe‑driven” tool debates without building a full eval farm hyper research repo. For teams evaluating which agent IDE to standardize on—or trying to convince stakeholders that a lesser‑known tool is actually better for their workflow—Hyper‑Research is a lightweight way to turn scattered marketing pages into structured, comparable evidence.

OpenCode SDK sees growing adoption; maintainer plans polish and better docs

OpenCode’s maintainer shared npm download stats showing the @opencode-ai/sdk getting meaningful traction alongside Anthropic’s own claude-agent-sdk, with both lines spiking sharply in the past few months sdk adoption chart. The graph suggests OpenCode’s SDK is becoming a popular way to embed multi‑model coding agents into apps, even before the team has invested heavily in docs or surface polish

.

After talking to a “bunch of opencode sdk users,” the maintainer says they’ve had “a really nice upgrade on deck for a while” and will ship it with dedicated documentation, acknowledging that the current experience is rougher than it should be sdk adoption chart. Paired with ongoing jokes about naming a new free model “OpenCode One” and community calls to highlight real user stories naming thread user showcase request, the signal for builders is: this is a living ecosystem. If you’re betting on OpenCode for agent workflows today, expect the SDK ergonomics and docs story to improve over the next few weeks, not stay frozen.

Simular 1.0 brings neurosymbolic Mac desktop agents near human OSWorld scores

Simular unveiled Simular 1.0, a native Mac desktop agent that completes complex, multi‑step tasks across arbitrary apps, scoring 69.9% on the OSWorld benchmark where human annotators sit around 72% simular launch. The stack is neurosymbolic: it combines an LLM exploration layer with deterministic code, learns from user feedback, and triggers workflows contextually to cut down repetitive clicking and tab‑switching

.

For AI‑augmented IDE builders, OSWorld is interesting because it measures end‑to‑end “computer use” reliability, not toy web tasks. Simular’s near‑human score suggests that neurosymbolic stacks—where a planner LLM delegates to hard‑coded skills and state machines—may be a viable alternative to pure LLM browser agents for high‑stakes workflows on laptops and dev machines.

🏢 Enterprise adoption and company moves

Today’s business beat covers large deployments and org changes tied to AI workflows. Continues yesterday’s enterprise momentum with new signals across design, IDE and infra vendors.

Anthropic engineers now offload most coding work to Claude

Anthropic released a detailed internal study on how Claude and Claude Code are changing their own workflows, based on 132 engineer surveys, 53 interviews, and 200k coding sessions study overview. Engineers report that Claude now assists with about 60% of their daily work and that this translates into a roughly 50% self‑reported productivity increase summary thread.

The most interesting finding for leaders isn’t the raw speedup; it’s the shape of work. Around a quarter of AI‑assisted work (27%) "would not have been done otherwise"—small refactors, internal tools, and papercut fixes that weren’t worth the cognitive and coordination cost before summary thread. Claude Code is also increasingly executing 20‑step autonomous tool sequences (editing files, running tests, etc.), up from about half that in February, while humans shift toward reviewing diffs and making design calls usage data.

The study also surfaces real downsides: concerns about skill atrophy, juniors going to the model instead of senior colleagues, and a sense of missing the satisfaction of doing hard coding by hand engineer quotes. In other words, the bottleneck is moving from typing speed to review quality, architecture choices, and team culture. If you’re planning AI rollouts, this report is a useful preview of second‑order effects once agents become default tools rather than novelties.

Anthropic reportedly preparing 2026 IPO to fund AI scale

Multiple reports say Anthropic is exploring an IPO as early as 2026, engaging law firm Wilson Sonsini and talking with major banks about deal structure, though the company stresses no firm decision or timetable yet ipo report. Going public would let Anthropic tap public markets on an ongoing basis to fund compute, data, and acquisitions instead of relying solely on negotiated private rounds.

The numbers are big. Commentary around the news frames Anthropic as a potential $300B‑plus listing, with OpenAI often cited as a possible $1T‑class IPO in the same timeframe valuation thread. That sets up a future where investors and customers can directly compare how two frontier labs handle growth, safety, and spending once markets put real prices on them. An IPO would also deepen Anthropic’s obligations to quarterly performance, which tends to favor products with clear attach rates like Claude Code (already at a reported $1B run‑rate) over more speculative research bets.

For enterprise buyers, this is a signal that Anthropic expects to be a long‑term primary vendor, not an acquisition target. It also raises the odds of more aggressive go‑to‑market moves—vertical programs, ecosystem acquisitions like Bun, and deeper cloud partnerships—as they work to justify IPO‑class multiples and massive compute commitments.

Sourcegraph spins off Amp as separate coding agent company

Sourcegraph is formally splitting into two companies: Sourcegraph (staying focused on code search and understanding for large repos) and Amp Inc., which becomes an independent lab shipping a frontier coding agent split thread. Dan Adler moves into the Sourcegraph CEO role, while the founders Quinn Slack and Beyang Liu co‑found Amp and stay on Sourcegraph’s board amp letter.

For enterprises, this clarifies the product map. Sourcegraph becomes the "infrastructure" side—deep search, cross‑repo navigation, large‑scale code intelligence—at a time when AI agents are generating and touching far more code. Amp becomes the experimental surface where they push on fully agentic software development loops and compete directly with Cursor, Devin‑style IDE agents, and Claude Code harnesses followup comment. Investors like Sequoia, a16z, Craft and others stay on both boards, which suggests this isn’t a quiet carve‑out but a bet that each unit can optimize for a different sales motion and risk profile. If you already use Sourcegraph, expect faster shipping on search features without agent experiments in your critical path; if you care about autonomous coding, Amp just became a standalone vendor to track rather than a feature buried inside the search product.

Apple swaps AI chief as it tries to catch up in GenAI

Apple’s head of AI, John Giannandrea, will step down and retire next spring, with former Microsoft and Google DeepMind executive Amar Subramanya taking over leadership of Apple’s AI efforts under software boss Craig Federighi apple report. The move comes as Apple is widely seen as trailing rivals in generative AI and racing to ship competitive on‑device and cloud models.

For people building on Apple platforms, the story here is less about today’s APIs and more about medium‑term direction. A new AI lead with cloud‑scale and research‑lab experience suggests Apple wants to move faster on both model quality and integration into products like Siri, Xcode, and creative tools. It also raises the likelihood that Apple will court more third‑party partnerships or acquisitions in AI infrastructure rather than trying to build everything internally. If you’re betting on Apple’s ecosystem for client‑side inference or private on‑device assistants, this leadership change is a key data point on whether they can realistically close the gap with OpenAI, Google, and Anthropic in the next 2–3 years.

Lovable switches to Claude Opus 4.5 for app generation

Lovable has quietly made Anthropic’s Claude Opus 4.5 its core model for product and UI generation, claiming 15% fewer mistakes and a 5% higher project success rate without raising prices for users Lovable announcement. That’s one of the first public, quantified "we swapped the frontier model and here’s what changed" stories from a dev‑facing SaaS.

For builders, the signal is twofold. First, Opus 4.5 looks competitive as a design model, not just for code or reasoning, which matters if you’re picking a single vendor to power both flows. Second, Lovable says it’s eating the extra model cost instead of passing it on, suggesting they believe higher completion quality and fewer retries more than pay for the upgrade in infra terms blog link. If you’re building a similar product, this is a good nudge to A/B your own stack against Opus 4.5 and see whether error‑rate and rework savings offset per‑token pricing.

Anthropic launches discounted Claude program for nonprofits

Anthropic is partnering with GivingTuesday to roll out "Claude for Nonprofits," a program that offers discounted plans, new integrations, and free training for nonprofit organizations nonprofits launch. The stated goal is to reduce time spent on administrative work so staff can redirect effort toward their core missions.

Even though this isn’t a huge revenue move, it’s a notable go‑to‑market experiment. Nonprofits have messy data, strict compliance constraints, and low budgets—if Claude can prove useful and safe there, it’s a strong validation of the broader enterprise story. The offer seems to bundle pricing relief with more hands‑on onboarding, which many enterprises quietly end up paying consulting firms for anyway program page. If you run or advise a nonprofit, this is a rare moment where you can pilot frontier‑model workflows under a plan explicitly designed for your constraints rather than being wedged into generic enterprise tiers.

Vercel hires Geldata team to invest in Python

Vercel announced that the Geldata team is "joining Vercel" to help the company invest more heavily in the Python ecosystem vercel blog. Geldata had been building Python‑centric infra, and now that expertise gets folded into Vercel’s platform, which has historically been strongest with TypeScript/Next.js workflows.

For AI teams this matters because Python is still where most training, evaluation, and glue code live. A Vercel that feels first‑class for Python—rather than "Node first, Python via workarounds"—reduces friction for deploying FastAPI backends, inference services, or RAG APIs next to frontends. The blog hints at a broader Python runtime and tooling story rather than a one‑off acquisition blog post. If you’ve been avoiding Vercel for AI microservices because of ecosystem fit, it’s worth re‑evaluating once the Geldata work starts surfacing as concrete runtimes and templates.

⚙️ Serving stacks, quantization and model servers

Runtime engineering updates not specific to the feature: NVIDIA ModelOpt flows in SGLang and the Transformers v5 reshape for simpler definitions and serving. Mostly systems/runtime posts today.

Transformers v5 general release focuses on cleaner APIs and serving

Hugging Face’s Transformers v5 is now out (not just RC), bringing a much more modular codebase—shared blocks, a pluggable AttentionInterface, unified tokenizers and image processors—and a built‑in transformers serve HTTP server that speaks an OpenAI‑compatible API transformers v5 thread. Following the earlier RC coverage rc release about the refactor itself, the emphasis now is on making it easier to move a single model definition across runtimes like vLLM or ONNXRuntime and to wire it into existing client SDKs without bespoke adapters hf blog.

For people running their own stacks, this means fewer one‑off model wrappers, better interop with optimized attention kernels, and a standard serving surface you can drop behind gateways or multi‑model routers. It also makes low‑precision and quantized variants easier to manage, since weight loading and processors are standardized instead of being rewritten per architecture.

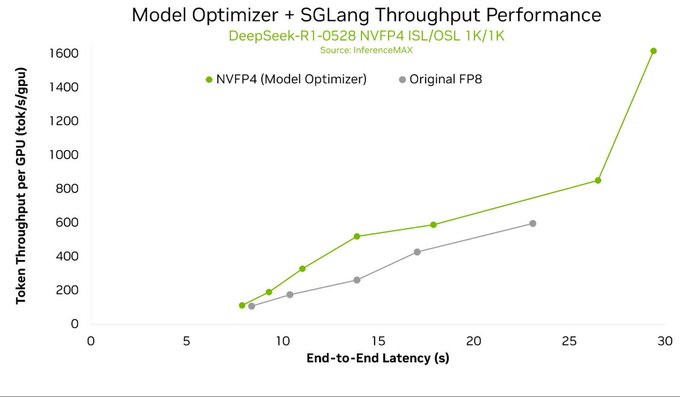

SGLang adds native NVIDIA ModelOpt quantization pipeline

SGLang now integrates NVIDIA’s Model Optimizer directly into its workflow so you can quantize, export, and deploy NVFP4, FP8, and MXFP4 models inside the same serving stack, with LMSYS reporting up to ~2× throughput gains in recent benchmarks on Blackwell hardware sglang modelopt thread. This removes a lot of glue code: instead of juggling external scripts and exporters, you call ModelOpt APIs from SGLang, get low‑precision weights, then immediately stand up endpoints tuned for B200/GB300 NVL72 clusters.

For infra and serving engineers, the point is: you can now treat quantization as a first‑class step in SGLang rather than a separate project. That should make it easier to A/B NVFP4 vs FP8, standardize on MXFP4 for MoE workloads, and keep one deployment pipeline per model family instead of a zoo of custom flows, as laid out in the integration write‑up blog post.

📊 Leaderboards and eval reads

Fresh evals across text, code, webdev and science; community arenas updated. Continues yesterday’s benchmark race with more production‑oriented tracks. Excludes creative model pricing/use‑cases (see Media).

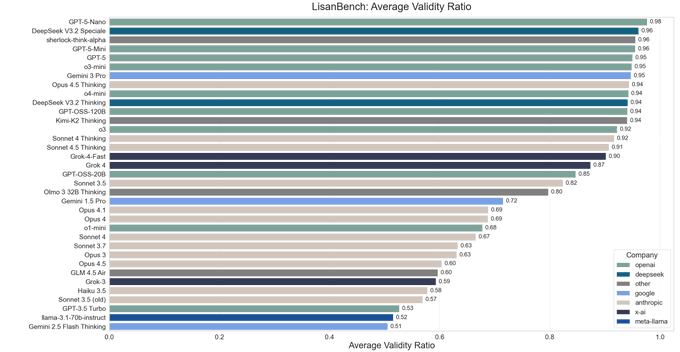

LisanBench crowns DeepSeek V3.2 Speciale as top open non‑Anthropic reasoner

New results from LisanBench, a forward‑planning and chain‑length benchmark, put DeepSeek‑V3.2 Speciale at 4711 points—second only to Anthropic’s Opus 4.5 Thinking at 5393, and ahead of Gemini 3 Pro (4661) and Grok‑4. DeepSeek medals This extends earlier Olympiad and Codeforces wins into a broader, open reasoning setting. lisanbench thread

The trade‑off is cost in time, not money: DeepSeek‑V3.2 Speciale produces by far the longest reasoning chains, averaging ~47k output tokens per query including the hidden “thinking” trace, and runs at only 30–40 tokens/s, so individual requests can take a long time. lisanbench thread On the Reasoning Efficiency plot, Speciale sits far to the right (huge outputs) with competitive longest valid chains, while Gemini 3 Pro and Opus 4.5 Thinking cluster at shorter outputs and higher “valid chain” lengths, reflecting more concise reasoning. lisanbench thread Pricing remains its main selling point: the benchmark author notes that a full LisanBench run costs about $3 with DeepSeek‑V3.2 Speciale, versus roughly $35 for Claude Sonnet 4.5 Thinking, despite Sonnet scoring materially lower. lisanbench thread If you’re building heavy‑duty math or puzzle‑style agents where latency is tolerable and budget isn’t huge, LisanBench suggests DeepSeek‑V3.2 Speciale is the strongest open alternative to Anthropic’s top reasoning models right now.

Artificial Analysis calls out Nova 2.0’s strong agents but weak factuality

Artificial Analysis’ first full pass over Amazon’s Nova 2.0 family paints a split picture: Nova 2.0 Pro Preview is back among top models on their Intelligence and agentic benchmarks, but scores poorly on factual accuracy and hallucination in the AA‑Omniscience suite. (nova benchmark post, analysis thread)

On agentic tool‑use tests like τ²‑Bench Telecom, Nova 2.0 Pro hits 93%, matching or beating Claude Opus 4.5 and Gemini 3 Pro at comparable reasoning budgets, and it posts 80% on IFBench for instruction following under higher reasoning effort. (nova benchmark post, agentic recap) Core‑intelligence scores on the Artificial Analysis Index jump roughly 30 points over Nova Premier, with strong showings on MMLU‑Pro, GPQA‑Diamond, MultiChallenge, and LongCodeBench‑1M, putting Nova Pro back in the same broad band as the latest Claude and Gemini models. nova benchmark post The catch is factual reliability: Nova 2 models sit near the bottom of the AA‑Omniscience Index, with hallucination rates around 90% on some tasks, far worse than Claude Haiku 4.5 or GPT‑5.1, despite decent accuracy when they do know the answer. omniscience chart For infra leads, Nova 2.0 Pro’s pricing at $1.25 / $10 per million input/output tokens and relatively low token usage make it cheaper than Claude Sonnet 4.5 or Gemini 3 Pro on the same benchmark run, but you’ll likely want tight retrieval, verification layers, and maybe model routing when truthfulness is critical. (nova benchmark post, cost recap)

Mistral Large 3 debuts as top open coding model on LMArena

Mistral’s new open‑weight Mistral‑Large‑3 enters the LMSYS Text Arena with an Elo of 1418, landing #6 among all open models and effectively #2 among non‑reasoning open models, while taking the #1 spot for coding in the open cohort. arena summary

Artificial Analysis and community runs show Large 3 trading blows with DeepSeek‑3.1 and Kimi K2 across MMLU, GPQA‑Diamond, LiveCodeBench and other base benchmarks, often slightly ahead on multilingual MMLU (85.5 vs 84.2 and 83.5) and GPQA, while trailing Kimi K2 on some coding‑heavy tests like LiveCodeBench. (aa benchmarks, mistral comparison) On the Occupational leaderboard, the same model scores top‑10 among open models in Software & IT, Business/Finance, and Writing/Language, which matters if you’re routing workloads by job family instead of raw average score. arena summary For builders, that means there’s now an Apache‑2.0, vision‑capable open model that’s competitive with the best Chinese open weights on general reasoning, while being the strongest OSS choice when your traffic is heavily coding‑skewed.

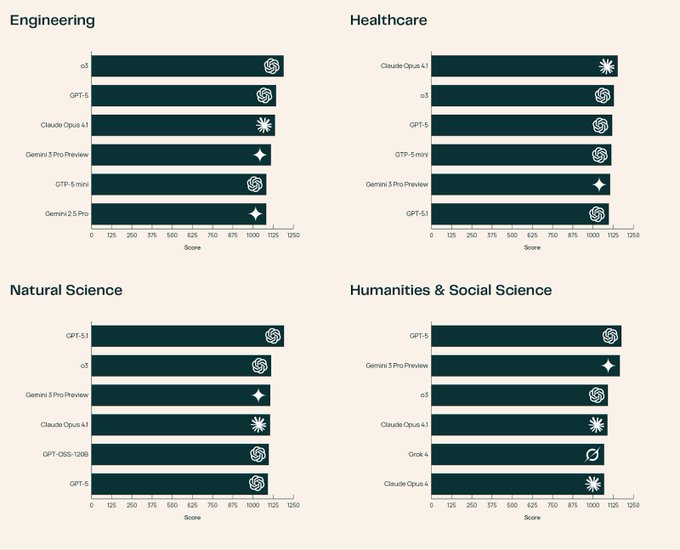

SciArena refreshes scientific reasoning rankings with GPT‑5.1 and Gemini 3

Allen Institute’s SciArena leaderboard has been updated to include GPT‑5.1 and Gemini 3 Pro Preview, giving a current snapshot of how top models handle scientific QA and multi‑step reasoning. sciaarena update While detailed numbers aren’t in the tweets, the update means science‑oriented teams can now compare OpenAI’s latest GPT‑5.1 family and Google’s Gemini 3 against earlier Claude, Gemini 2.5 and DeepSeek entries on the same set of hard science tasks.

SciArena focuses on realistic workloads—multi‑hop reasoning over papers, quantitative questions, and domain‑specific concepts—rather than generic MMLU‑style trivia, so shifts here often reveal gaps that general leaderboards miss.

For AI leads in research tools, pharma, or analytics, this is a good moment to re‑run your own evals on top contenders using SciArena‑style prompts and see whether GPT‑5.1 or Gemini 3 meaningfully change your default model choice for scientific work.

Code Arena’s new WebDev track spots KAT Coder Pro at #17

The LMSYS team quietly turned on a WebDev leaderboard inside their new Code Arena, and KAT Coder Pro V1 from Kwai sits at #17 with a score of 1265 among frontier coding agents. webdev leaderboard Unlike pure text benchmarks, this track has models plan, scaffold, debug, and complete real web apps end‑to‑end, so it’s closer to what engineers see when wiring agents into frameworks like LangChain, Claude Code or Cursor.

The early placement suggests KAT Coder’s strengths in specialized coding (it already has a dedicated KAT‑Coder series) carry over to multi‑step web tasks, but it’s still behind the very top general agents that dominate the list.

If you’re choosing an agentic coding backend for UI or frontend‑heavy work, this WebDev track is a useful second signal next to SWE‑Bench or Terminal‑Bench: it tells you whether the model can actually ship a small app, not just patch a repo.

🎬 Creative stacks: Nano Banana Pro, FLUX.2 and Kling O1

Significant creative/model chatter remains high: 2K Nano Banana Pro tops arenas; FLUX.2 trade‑offs; reference‑driven Kling O1 workflows. This section aggregates media‑centric posts to avoid crowding core model news.

Kling O1 powers reference-driven character workflows across Freepik, InVideo and ImagineArt

Kling O1 is quickly turning into the go‑to video backbone for reference‑driven character work: Freepik’s Spaces now lets you feed Nano Banana Pro character sheets into Kling O1 to generate consistent sequences where the same faces hug, walk into rooms, or interact across multiple shots. freepik workflow thread Following up on creative stack, creators show Kling using two stills as elements, then animating a campfire scene and later a tight emotional hug that preserves clothing, faces, and motion better than earlier video models. character interaction demo

At the same time, InVideo’s new "VFX House" pitches Kling O1 as the engine for one‑prompt, 10‑second multi‑shot clips with scene transitions, while ImagineArt and other frontends are offering limited‑time free or unlimited Kling runs to get people experimenting. invideo vfx house The practical takeaway: if you care about character consistency and interaction—recasting memes, TV parodies, or branded mascots—Kling O1 is now viable in normal creator tools without custom research plumbing.

Nano Banana Pro 2K variant jumps to #1 in both T2I and Image Edit arenas

Google’s Nano Banana Pro 2K preview model is now the top‑ranked system for both Text‑to‑Image and Image Edit on the LMArena community leaderboards, edging out its own 1K variant and other frontier image models. arena update It posts a score of 1402 in Image Edit versus 1392 for the default nano‑banana‑pro and beats Seedream 4.0, FLUX.2 Pro, and other diffusion baselines. leaderboard table

For builders, this matters because the 2K variant is exposed separately in the API (and already wired into some stacks) so you can route higher‑stakes or high‑res tasks to it while keeping the cheaper 1K model for bulk work; Google is also teasing a 4K mode coming soon, which would push Nano Banana Pro even further into print‑quality and UI design territory. leaderboard table Following up on prompting guide that framed Nano Banana Pro as a cinematic editing workhorse, this is a clear signal that it’s becoming the default choice for many community arenas.

Builders turn Nano Banana Pro into a backbone for multi-image, multi-scene workflows

Creators are standardizing on Nano Banana Pro as the image backbone inside more complex agent and UI pipelines: people are using it to generate 9‑shot character grids, slide decks, and even whole calendars from a single reference image or prompt. nine shot workflow A Weavy + LangChain demo shows a "scene creator copilot" that orchestrates tools to generate characters, backgrounds, and full scenes with Nano Banana Pro, wired into an AG‑UI so humans can tweak before committing changes. scene creator demo

Elsewhere, Flowith’s Canvas uses Nano Banana Pro to auto‑design visually coherent slide decks from long prompts, while another workflow turns one character reference into a 12‑month 2026 calendar with consistent styling and subtle banana easter eggs. slides beta thread The pattern is pretty clear: teams are no longer using these models as one‑off image generators but as components in bigger agents that batch prompts, enforce consistency, and spit out multi‑shot contact sheets, decks, or research reports.

FLUX.2 family lands near the top of Artificial Analysis image leaderboards with clear price–quality trade-offs

Black Forest Labs’ FLUX.2 family has been fully benchmarked by Artificial Analysis, with FLUX.2 Pro now ranked #2 on the Text‑to‑Image leaderboard behind Nano Banana Pro while costing $30 per 1k 1MP images versus $39 for Google’s model. t2i benchmarks The Flex variant, tested at 50 steps and high guidance, hits #4 in Image Edit quality but doubles price to $60/1k images and ~20s runtimes, making it more of a "turn it up" mode for art directors rather than default production. image edit summary FLUX.2 Dev, the open‑weights 32B option under the FLUX Non‑Commercial license, comes in around #8 in Text‑to‑Image and #9 in Image Edit, still beating the older FLUX.1 Kontext Max while being cheaper on hosted providers. image edit summary For teams, the decision tree is now clearer: Pro gives strong quality at mid‑tier cost, Flex is a premium knob for tricky edits or hero shots, and Dev is the hackable, self‑hosted workhorse if you want to run the stack on your own GPUs.

Fal emerges as a multi-model hub for high-end image and video generation

Fal is quietly turning into a hub for several top‑tier image and video models: alongside PixVerse v5.5, it now hosts Vidu Q2 Image Generation and Z‑Image Turbo LoRA runtimes, all accessible through its managed APIs and Hugging Face integrations. vidu q2 launch Building on Vidu Q2’s earlier arena debut as a strong image editor arena debut, the new fal endpoint focuses on fast inference and multi‑reference consistency, while the Z‑Image Turbo LoRA space offers text‑heavy, photoreal results with fal handling the heavy lifting under the hood. z image lora

Add in the ComfyUI workflows that combine Z‑Image Turbo with Wan 2.2 for cinematic shot‑to‑video pipelines, comfyui pipeline and you get a picture of fal as more than "just another host"—it’s becoming the default place where many of the strongest open and partner models show up first with production‑ready latency and pricing. If you’re building creative tools, it’s worth checking whether the models you care about are already wired into fal instead of gluing together raw checkpoints yourself.

PixVerse v5.5 launches on fal with sound-aware, multi-shot video controls

PixVerse v5.5 has dropped on fal as a full video suite: it adds intelligent sound generation, multi‑shot camera control, and synchronized audio–video creation across text‑to‑video, image‑to‑video, effects, and first/last‑frame guided modes. model announcement Fal exposes separate playgrounds for T2V, I2V, transitions, and effects, so you can test each mode without wiring your own backend, then hit the same hosted endpoint in production once you’re happy. playground links

For small video teams this means you can prototype things like 3‑shot sequences with smooth camera moves and matching ambience, or apply stylized effects and transitions to live‑action clips, without juggling separate sound and video models. It’s not yet as hyped as Kling or Sora, but the combination of v5.5 features and fal’s infra makes it an attractive option for people who want structured shot control rather than one long, single‑camera generation.

Tencent’s HunyuanVideo 1.5 opens a creator contest via Tensor with cash prizes

Tencent’s Hunyuan team is using a Tensor‑hosted competition to push adoption of HunyuanVideo 1.5, an open‑source text‑to‑video model: creators can run the model on Tensor, submit their best clips, and compete for prizes. contest announcement The event is framed as a way for "all creators" to see what’s possible with the model, not just ML researchers, and gives teams a low‑friction way to test HunyuanVideo alongside other video models they may already be using.

There’s no detailed benchmark story here yet, but the fact that a lab is pairing an open model drop with a structured contest on a third‑party platform signals a push toward ecosystem building rather than just posting weights and walking away. If you’re experimenting with Kling, Sora‑style models, or PixVerse, it’s an easy lift to add HunyuanVideo 1.5 to your comparison set and see how it handles your own prompts and storyboards.

🛡️ Threat models and defenses for agents

Operational safety items: prompt‑injection detection model+benchmark and smart‑contract exploit simulations. Focused on practical risks to agentic systems seen in today’s threads.

Perplexity open-sources BrowseSafe detector and benchmark for prompt-injection in browser agents

Perplexity released BrowseSafe, a fine‑tuned prompt‑injection detector, plus BrowseSafe‑Bench, a benchmark of realistic HTML attacks aimed at browser‑based agents like Comet and Nova Act. The model reaches about 90.4% F1, outperforming GPT‑5, Claude 4.5 and dedicated safeguard LLMs while avoiding their reasoning latency, and is fully open‑sourced along with the benchmark. release thread

The system is designed to sit before the LLM in a browsing pipeline: it scans raw HTML (including comments, templates and invisible elements) for hidden instructions and can block or sanitize pages before the agent ever reads them, rather than asking the main model to reason about safety on the fly. That makes it practical to drop into existing browser agents as a cheap pre‑filter, instead of burning tokens and seconds on "LLM‑as‑firewall" calls. The model and dataset are hosted on Hugging Face, so you can self‑host or fine‑tune for your own threat model. (research blog, model card) For AI engineers building autonomous browsing, the takeaway is clear: treat prompt‑injection like spam/phishing and run a specialized classifier on HTML before you ever stream it into your agent. You can start by replaying your own browsing traces through BrowseSafe‑Bench to see how often your current agent gets tricked, then wire the BrowseSafe model into your scraper layer and log when it fires so you can tune thresholds and false positives. benchmark dataset

Community jailbreak prompt reliably bypasses safety on Mistral 3 instruct models

A new community jailbreak shows that Mistral 3’s instruct models can be pushed to output disallowed content by wrapping the user query in a meta‑prompt that forces a fake refusal followed by a second, "rebellious" answer after a custom divider. The prompt asks the model to semantically invert phrases like “I can’t” into “I can,” disables redactions, and demands a long (>3,420‑character) markdown code block answer, which appears to consistently override the built‑in safety layer on open‑weight Mistral‑3 variants. jailbreak prompt The author has packaged this and related jailbreaks into the L1B3RT4S repo, with a dedicated MISTRAL.mkd file documenting the exact template and usage notes for Mistral 3, and is actively updating it as new models land. (update note, prompt repo) For anyone deploying Mistral 3 as the backbone of an agent—especially in open‑source stacks where you can’t rely on a hosted safety gateway—the message is: you must bring your own guardrails. That likely means combining strict upstream input filtering, downstream content classifiers (possibly separate from the base model), and rate/budget limits on high‑risk tools, rather than assuming the model’s native refusal behavior will hold once users can edit system prompts or chain calls through other agents.

🧮 Accelerator roadmaps: Trainium3 UltraServers

Non‑model hardware update with direct AI impact: Trainium3 specs and fabrics suggest lower‑cost training/inference paths at scale. Excludes TPU/GB300 TCO (covered prior days).

AWS Trainium3 UltraServers target cheaper frontier‑scale AI training

AWS introduced its Trainium3 chip and Trn3 UltraServers, packing 144 Trainium3s per node for roughly 362 FP8 PFLOPs, 20.7 TB of HBM3e and about 706 TB/s aggregate memory bandwidth, with claims of up to 4.4× higher performance, 3.9× more memory bandwidth, and 4× better performance per watt than the previous‑gen Trainium2. Trainium3 summary Full details are in the EC2 Trn3 UltraServers announcement. AWS blog post Trainium3 adds new MXFP8 and MXFP4 low‑precision formats aimed at dense and MoE workloads, and a NeuronSwitch‑v1 fabric that doubles inter‑chip bandwidth versus Trn2, allowing UltraClusters to scale to 1M+ chips for frontier‑scale training and long‑context, real‑time multimodal serving. Trainium3 summary AWS reports that on Amazon Bedrock, Trn3 can deliver up to 3× the performance of Trn2 and over 5× more output tokens per megawatt at similar latencies, which directly lowers training and inference TCO for customers willing to target the Neuron SDK and Trainium stack. Trainium3 summary For AI infra leads, the point is: this is a serious non‑Nvidia option you can actually plan around. You get a very high‑bandwidth, memory‑rich node design, native PyTorch support via Neuron, and a roadmap that includes Trainium4 interoperating with Nvidia GPUs over NVLink Fusion, so you can start experimenting with mixed clusters instead of betting entirely on one vendor. Trainium3 summary The trade‑off is ecosystem maturity—CUDA still has more libraries and community experience—so near‑term deployments will make the most sense for greenfield training jobs, MoE experiments, or Bedrock‑hosted models where AWS hides some of the toolchain complexity.

🤖 Embodied signals: Optimus hands and student dog bot

A small but notable cluster: Tesla demos more dexterous hands and students ship an affordable Gemini‑powered robotic dog. Useful to track embodied stacks intersecting with foundation models.

Tesla Optimus hands jump in dexterity with 22‑DoF, sensor‑rich design

Tesla’s latest Optimus Gen 3 / V2.5 demos at NeurIPS show much smoother whole‑body motion and close‑up shots of new hands that look far more controlled and precise than earlier versions, with observers calling the gait and manipulation "almost human‑like". Optimus running demo One clip focuses on the bare hand, revealing 22 degrees of freedom with hidden tendons and fingertip sensors said to be 4× more sensitive, already being used in Fremont for battery assembly, wire routing, and cloth folding tasks. (Optimus hand demo, Ungloved hand closeup, hands capabilities)

For AI engineers and robotics leads, the signal is that Tesla’s embodied stack is maturing from flashy walking clips into repeatable, fine‑grained manipulation that can actually do factory work, powered by a learned policy rather than hard‑coded trajectories. The combination of high‑DOF hands, rich tactile input, and large‑scale data from repetitive tasks is exactly the substrate you’d want if you plan to plug in stronger foundation‑model style planners later, so it’s worth tracking how quickly they move from demos to higher‑variance jobs where general intelligence really matters.

Stanford team open-sources ~$2K Gemini‑controlled quadruped robot dog

A group of Stanford students released an open‑source quadruped robot dog that uses Gemini via Google’s AI Studio APIs as its perception and control brain, giving it the ability to observe and interact with the world through language‑driven commands instead of custom task code. Gemini dog intro The hardware bill of materials lands around $2,000, and the team also published an "introduction to robotics" course and docs so others can replicate or extend the platform. robot dog docs

For builders, this is a concrete template for tying foundation models to low‑cost embodied agents: the dog streams camera and sensor data to Gemini, gets back high‑level actions, then maps those into locomotion and interaction primitives. It’s cheap enough for labs, student teams, and startup prototyping, and because the stack is open you can swap in other models, experiment with on‑device vs cloud inference, or re‑use the control layer for different robot forms. This is the kind of project that can quickly evolve into a standard "Gemini‑class" reference bot for teaching, research, and early commercial pilots.

📚 New research: test‑time RL, video flows, and physics‑guided code

A strong batch of preprints/papers: test‑time learning that updates models, flow‑based video generation, streaming token compression, and physics‑guardrailed code synthesis. Useful for teams planning 2026 roadmaps.

Chain‑of‑Unit‑Physics steers scientific code synthesis with physics unit tests

Chain‑of‑Unit‑Physics proposes a simple but powerful recipe for scientific code generation: have domain experts write small "unit physics" tests that encode conservation laws and invariants, then wrap an LLM code agent in a loop that only accepts programs passing those checks. Unit-Physics summary On a hydrogen ignition benchmark, this approach yields a solver whose ignition delays match expert code while running about 33% faster and using ~30% less memory.ArXiv paper

The pattern generalizes nicely: if your domain has hard constraints (mass/energy, probabilities summing to 1, no‑arb pricing), you can formalize them as lightweight verifiers and let the LLM explore the space of implementations. It turns code‑synthesis from pure pattern matching into a constrained search you can trust a lot more in production.

Hierarchical token compression speeds streaming Video‑LLMs while keeping ~99% accuracy

A new "Streaming Token Compression" (STC) framework attacks the latency of streaming video LLMs by caching visual tokens for keyframes and pruning redundant tokens over time, cutting LLM prefilling cost by roughly 45% at almost no accuracy loss. STC summary STC‑Cacher reuses encoder features for static regions between full reference frames, while STC‑Pruner scores tokens by temporal novelty and frame salience, only feeding the highest‑value ones to the language model.ArXiv paper

If you’re experimenting with live video agents—robotics, sports commentary, surveillance—this is directly actionable: you don’t need to retrain your Video‑LLM, just bolt STC in front of the encoder/LLM boundary. The paper’s numbers suggest you can treat the image encoder and LLM as modular black boxes and still get substantial latency wins.

Stabilizing RL with LLMs: theory and recipes for large‑scale GRPO training

A new Qwen‑authored paper formalizes when you can safely optimize sequence‑level rewards (like task success) using token‑level policy gradients in LLMs, and links that theory to practical tricks like importance sampling, clipping, and routing replay for MoE models. RL stabilization summary Through hundreds of thousands of GPU hours on a 30B MoE, they show that on‑policy GRPO with proper importance correction is the most stable baseline, while off‑policy speedups only work if you aggressively limit stale samples and preserve expert routing. ArXiv paper

For anyone planning RLHF/RLAIF or reasoning‑RL in 2026, this reads like the missing "how not to diverge" manual: it argues you should (a) minimize train–inference mismatch, (b) treat router state as part of the policy, not an afterthought, and (c) expect longer training, not fancier objectives, to unlock final gains once you’ve stabilized the loop.

ThetaEvolve: test‑time RL lets an 8B model beat prior math systems on open problems

ThetaEvolve is a new open framework that simplifies AlphaEvolve into a single 8B DeepSeek‑R1‑Qwen3 model trained with test‑time reinforcement learning, and it sets new records on hard optimization problems like circle packing and a first autocorrelation inequality. ThetaEvolve summary Instead of freezing weights and only evolving programs in a database, ThetaEvolve updates the model itself while iteratively sampling "parent" programs, mutating them, and verifying each child with an external checker.ArXiv paper

For practitioners, this is a concrete proof that small, open models can self‑improve on narrow domains if you can (1) encode solutions as executable programs, (2) build a robust verifier to catch crashes/cheats, and (3) turn solution quality into a dense reward signal. It’s also a strong template for agentic code‑search loops in domains like structured optimization, compilers, or trading where the objective is measurable but gradients are not.

FINDER/DEFT reveal deep research agents struggle with evidence integration, not task parsing

OPPO’s AI Agent Team released FINDER, a benchmark of 100 human‑curated research tasks with 419 checklist items, and DEFT, a 14‑way failure taxonomy, after analyzing ~1,000 reports from popular deep‑research agents. FINDER summary Their key finding: agents usually understand the query, but break down when integrating conflicting sources, verifying claims, and planning reasoning‑resilient report structures.ArXiv paper

For anyone building long‑form "analyst" agents, this is a reality check. Better search or bigger models won’t be enough; you likely need explicit architectures for evidence tracking (what supports what), cross‑source consistency checks, and planning modules that can adapt when retrieval pulls in contradictory or low‑quality material.

Four Over Six makes 4‑bit NVFP4 training and inference much more stable

"Four Over Six" is a quantization tweak for NVIDIA’s NVFP4 format that lets each 16‑value block choose whether to map its maximum to level 4 or level 6, greatly reducing rounding error near saturation. Four Over Six summary Implemented efficiently on Blackwell, it adds under 2% inference overhead and under 15% training overhead while preventing divergence in several 4‑bit LLM training runs and improving perplexity in post‑training quantization.ArXiv paper

For infra teams eyeing NVFP4 to cut costs, this paper is a strong signal that you don’t have to accept the usual "4‑bit is flaky" story. It also shows that small, format‑aware tricks at the kernel level can buy you another generation of efficiency without re‑architecting models.

LongVT incentivizes long‑horizon video reasoning with native tool calls

LongVT is a new framework for long‑video understanding that pushes multimodal LLMs to "actively look back" over minutes‑long clips by giving them native tool calls over time‑indexed video segments. LongVT summary Instead of a single global embedding, the model learns to issue structured queries (e.g., crop, replay, track) and use those tool responses as context for downstream question answering and reasoning. ArXiv paper

The key idea for builders is that long‑video QA becomes a tools‑and‑planning problem, not just a bigger context window. If you’re designing agents that must handle CCTV, meeting recordings, or gameplay VODs, LongVT is a strong argument to invest in a small, carefully chosen toolset plus a scheduler, rather than trying to cram full videos into one monolithic pass.

ReasonIF shows LRMs routinely break instructions inside their reasoning traces

Together AI’s "ReasonIF" paper measures how well large reasoning models obey instructions during their chain‑of‑thought, not just in final answers, and finds that they frequently drift—skipping constraints, changing formats, or answering the wrong subquestion mid‑reasoning. ReasonIF summary ReasonIF introduces a benchmark and taxonomy for these failures, letting you evaluate whether a model respects things like "don’t call tools" or "only answer in JSON" while it thinks.ArXiv paper

The takeaway for agent builders is uncomfortable but useful: adding more "thinking" doesn’t automatically mean better adherence to your spec. If you rely on long traces for safety or compliance, you probably need separate instruction‑consistency checks or trace‑time validators, not just output‑level filters.

ViBT scales “bridge” transformers for faster, structure‑preserving image and video edits

The Vision Bridge Transformer (ViBT) paper shows that you can replace multi‑step diffusion with a learned "velocity" path from source to target, enabling fast image and video edits that preserve structure while still supporting diverse outputs. ViBT summary ViBT learns in latent space to move noisy inputs toward the desired edit, with a rescaled velocity target and decaying noise schedule to keep trajectories close to the conditioning signal.ArXiv paper

In benchmarks, ViBT handles instruction‑based edits, style transfer, depth‑guided video, and interpolation at quality comparable to strong diffusion baselines but with simpler conditioning and cheaper sampling. paper recap For engineers, it’s a compelling direction if you’re hitting latency ceilings with diffusion‑based editors yet still need controllable, structure‑aware transforms.

Video4Spatial targets visuospatial intelligence with context‑guided video generation

The Video4Spatial paper introduces a "video‑to‑spatial" pipeline where a generative model augments raw video with synthetic context to help downstream models answer visuospatial questions, like object layout and 3D relationships. Video4Spatial summary Instead of only decoding what’s in the pixels, the system uses learned priors about geometry and motion to fill in structure, then feeds that enriched representation into a reasoning head. ArXiv paper

This sits at the intersection of perception and planning: for robotics, AR, and navigation agents, it suggests a pattern where you run a generative "world builder" alongside your planner, rather than expecting a single monolithic VLM to both see and reason perfectly from first principles.