NVIDIA Rubin NVL72 hits 10× tokens per MW – 75% fewer GPUs

Executive Summary

NVIDIA turned its earlier Rubin teaser into hard economics at CES: Vera Rubin NVL72 is now framed as a rack‑scale “tokens‑per‑watt” machine, with a 10T MoE training in a month on roughly 75% fewer GPUs than Blackwell and a 1 MW cluster serving about 10× more tokens per second at steady state. Each NVL72 rack delivers 3.6 EFLOPs NVFP4 inference and 2.5 EFLOPs training with 54 TB LPDDR5X plus 20.7 TB HBM4 (1.6 PB/s), while BlueField‑4‑backed KV‑cache sharing reportedly yields up to 5× better long‑context throughput. Jensen Huang also confirmed 45°C warm‑water DLC with no chillers; Bloomberg tracked sharp same‑day drops in Johnson Controls (‑11%) and Modine (‑21%) as markets priced in thinner AI chiller demand. Runway’s Gen‑4.5 video model moved from Hopper to Rubin NVL72 in a single day, signaling CUDA‑compatible tooling is ready.

• Model evals and cost optics: Artificial Analysis’ Intelligence Index v4.0 now ranks GPT‑5.2 ahead of Opus 4.5 and Gemini 3 Pro, while a cost chart puts a full v4.0 run at $2,930 for GPT‑5.2 vs $1,590 for Opus and ~$600–$1,000 for leading open weights.

• Voice agents and capital: Nemotron Speech + Nemotron 3 Nano + Magpie demonstrate fully open sub‑500 ms voice‑to‑voice stacks; Modal sustains 127 concurrent streams on one H100. xAI closed a $20B Series E with NVIDIA and Cisco, funding Grok 5 on Colossus clusters already exceeding 1M H100‑equivalent GPUs.

Together these moves tighten the link between rack‑level efficiency, eval‑measured capability, and capital intensity at the top of the AI stack, while open speech and agent tooling show how quickly those gains are being productized in real‑time interfaces.

Top links today

- LTX-2 open video and audio model

- HY-World 1.5 world model GitHub

- vLLM-Omni v0.12.0rc1 release notes

- Nemotron Speech ASR benchmarks on Modal

- AI agent systems architectures survey paper

- Recursive Language Models arxiv paper

- LAMER exploration in language agents paper

- SWEnergy study on coding agent efficiency

- Universal Weight Subspace Hypothesis paper

- Geometry of Reason mathematical reasoning paper

- Falcon-H1R hybrid reasoning model paper

- K-EXAONE 236B MoE technical report

- Artificial Analysis Intelligence Index v4.0 results

- CogFlow visual math problem solving paper

- NVIDIA voice agent and model stack writeup

Feature Spotlight

Feature: NVIDIA Rubin resets inference economics

Rubin NVL72 promises ~10× cheaper tokens and warm‑water (45°C) DLC; partners port in a day; cooling vendors’ stocks drop—datacenter and AI cost curves shift now.

Strong, cross‑account CES coverage adds new specifics: 10× lower token cost and warm‑water DLC at 45°C, full‑stack NVL72 specs, and day‑one partner ports. This continues yesterday’s Rubin storyline with concrete cooling and adoption impacts.

🧊 Feature: NVIDIA Rubin resets inference economics

Rubin NVL72 details sharpen 10× tokens-per-MW and 75% fewer GPUs claims

Rubin NVL72 (NVIDIA): NVIDIA expanded on Rubin NVL72’s economics at CES, saying a 10T MoE can be trained in one month with 75% fewer GPUs than Blackwell and that a 1 MW cluster can now serve about 10× more tokens per second. This tightens the story from initial Rubin, which outlined the first throughput and cost claims. The company also quantified rack-level compute at 3.6 EFLOPs NVFP4 inference and 2.5 EFLOPs NVFP4 training, with 54 TB LPDDR5X plus 20.7 TB HBM4 delivering 1.6 PB/s and 260 TB/s scale-up bandwidth across the NVL72 system, as described in the nvl72 spec tweet and the rubin blog recap.
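The rack-level figures can be divided down to a rough per-GPU view. This is a minimal sketch, assuming the "72" in NVL72 is the GPU count per rack and that the totals are spread evenly across GPUs; neither assumption is stated in the recap.

```python
# Rack-level figures quoted above for one Vera Rubin NVL72 rack.
RACK = {
    "nvfp4_inference_eflops": 3.6,
    "nvfp4_training_eflops": 2.5,
    "hbm4_capacity_tb": 20.7,
    "hbm4_bandwidth_pbs": 1.6,
    "scale_up_bandwidth_tbs": 260.0,
}
GPUS_PER_RACK = 72  # assumption: the "72" in NVL72 is the GPU count


def per_gpu(rack: dict, gpus: int) -> dict:
    """Divide rack totals evenly across GPUs, converting to smaller units."""
    return {
        "nvfp4_inference_pflops": rack["nvfp4_inference_eflops"] * 1000 / gpus,
        "nvfp4_training_pflops": rack["nvfp4_training_eflops"] * 1000 / gpus,
        "hbm4_capacity_gb": rack["hbm4_capacity_tb"] * 1000 / gpus,
        "hbm4_bandwidth_tbs": rack["hbm4_bandwidth_pbs"] * 1000 / gpus,
        "scale_up_bandwidth_tbs": rack["scale_up_bandwidth_tbs"] / gpus,
    }


result = per_gpu(RACK, GPUS_PER_RACK)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Under these assumptions the rack numbers work out to roughly 50 PFLOPs of NVFP4 inference and 287.5 GB of HBM4 per GPU.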

• Training and inference economics: NVIDIA frames Rubin as a tokens-per-watt machine rather than a raw FLOPS play, emphasizing that a 10T MoE which previously needed a much larger Blackwell fleet can now train on a quarter of the GPUs while serving 10× more tokens at the same 1 MW power envelope, according to the rubin blog recap and a separate rubin summary.

• Six-chip rack-scale stack: The Vera Rubin NVL72 integrates a Vera CPU (88 Olympus cores, 1.2 TB/s DRAM, up to 1.5 TB LPDDR5X), Rubin GPUs, NVLink 6 switches, ConnectX‑9 SuperNICs, BlueField‑4 DPUs, and Spectrum‑6 Ethernet so that compute, networking, and control behave as a single logical system, with NVIDIA contrasting this tightly-owned stack against more partner-driven rack designs from AMD’s Helios and Huawei in the rubin platform thread.

• KV‑cache reuse and long context: Rubin’s Inference Context Memory Storage Platform, powered by BlueField‑4, adds a shared AI-native key–value cache so that attention state can be reused across requests, which NVIDIA says can increase tokens-per-second throughput and power efficiency by up to 5× on long-context workloads, as detailed in the rubin blog recap and the nvidia blog.

The picture is that Rubin is being positioned not as a single GPU jump but as a rack-scale, cache-aware inference factory where both hardware and system software are tuned around tokens-per-watt rather than peak TFLOPS.
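To make the KV-cache reuse idea concrete, here is a toy sketch of a shared prefix cache: requests that begin with the same token blocks skip recomputing attention state for those blocks. It is illustrative only. The block size, the `SharedPrefixCache` class and the hashing scheme are assumptions, not NVIDIA's design.

```python
import hashlib

BLOCK = 4  # tokens per cache block (hypothetical; real systems use larger blocks)


class SharedPrefixCache:
    """Toy cache keyed by a hash of the whole token prefix up to each block."""

    def __init__(self):
        self.blocks = {}  # prefix hash -> placeholder for stored K/V tensors

    @staticmethod
    def _key(tokens):
        return hashlib.sha256(repr(tuple(tokens)).encode()).hexdigest()

    def process(self, tokens):
        """Return (reused_tokens, computed_tokens) for one request."""
        reused = computed = 0
        full = len(tokens) - len(tokens) % BLOCK
        for end in range(BLOCK, full + 1, BLOCK):
            key = self._key(tokens[:end])
            if key in self.blocks:
                reused += BLOCK  # attention state already available
            else:
                self.blocks[key] = "kv"  # stand-in for computed attention state
                computed += BLOCK
        computed += len(tokens) - full  # a partial tail block is always computed
        return reused, computed


cache = SharedPrefixCache()
system_prompt = list(range(8))  # shared prefix, e.g. a long system prompt
first = cache.process(system_prompt + [100, 101, 102])
second = cache.process(system_prompt + [200, 201, 202, 203, 204])
print(first, second)
```

The second request reuses the eight shared prefix tokens and only computes its own suffix, which is the effect that grows with longer shared contexts.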

45°C warm‑water cooling for Rubin hits chiller vendors’ stock prices

Warm‑water DLC (NVIDIA Rubin): Jensen Huang highlighted that Vera Rubin NVL72 can run on 45°C warm‑water direct liquid cooling, saying that "no water chillers are necessary" for these racks and that data centers can cool Rubin-class supercomputers with hot-water loops and dry coolers instead of traditional chiller plants, according to the jensen cooling clip and the nvidia blog.

• Market reaction in cooling stocks: Bloomberg charted a sharp selloff in major HVAC and cooling vendors right after these CES remarks: Johnson Controls dropped as much as 11%, Modine slid up to 21%, and Carrier and Trane also fell, as capital markets priced in lower long‑term demand for large chiller plants in AI data centers, as shown in the cooling stocks writeup.

• Data‑center design implications: NVIDIA’s blog explains that Rubin’s warm‑water DLC nearly doubles liquid flow at the same CDU pressure head, cutting fan and chiller energy and enabling dry‑cooler operation with minimal water use. That in turn frees more of a site’s power budget for GPUs rather than facility overhead, reinforcing the tokens-per-watt story from initial Rubin.

The combination of 45°C supply temperatures and dry‑cooler readiness signals a shift in AI facility economics where thermal engineering and power smoothing become as central to capacity planning as the GPUs themselves.

Runway ports Gen‑4.5 video model to Vera Rubin NVL72 in one day

Gen‑4.5 on Rubin (Runway + NVIDIA): Runway and NVIDIA reported that Runway’s Gen‑4.5 video model, described as "the world’s top‑rated video generation model", was ported from Hopper to a Vera Rubin NVL72 rack in a single day, making Runway one of the first partners to demonstrate a production video model on Rubin hardware, according to the runway partnership announcement.

• Signal on tooling readiness: The companies frame the one‑day port as evidence that existing Hopper‑targeted training and inference stacks can be brought up quickly on Rubin’s six‑chip platform without wholesale rewrites, which supports NVIDIA’s claim that Rubin is an evolution of its existing CUDA/NVLink ecosystem rather than a disruptive fork in the stack, as implied in the rubin platform thread.

• Early inference workloads: The partnership positions Vera Rubin NVL72 as a target for heavy multimodal inference—high‑resolution, long‑duration text‑to‑video—rather than only for frontier LLM training, dovetailing with Jensen Huang’s comment that a large share of future AI compute will go to video consumption and generation in the elon video focus clip.

This early Gen‑4.5 deployment indicates that Rubin’s promised 10× tokens‑per‑MW economics may extend beyond text models to high‑bandwidth video workloads once more partners follow Runway’s lead.

🛠️ Agent‑native coding: workflows, UIs and local runs

Busy day for dev tooling: Cursor’s dynamic context (filesystem offloading) lands, Claude Code gains a desktop/local entry point, Cline adds Background Edits, and paywalled orchestration (OpenCode Black) sells out. Excludes Rubin, which is the feature.

Claude Desktop adds local Claude Code so you can run agents without a terminal

Claude Code in Desktop (Anthropic): Anthropic quietly enabled a local Claude Code workflow inside the Claude Desktop app, so users can run coding agents on a selected folder without touching a terminal; setup is a four‑step flow—install Desktop, toggle the Code sidebar, grant folder access, then start prompting—spelled out in the desktop instructions.

• Terminal‑free entry point: This update gives less terminal‑comfortable users the same project‑aware coding experience previously limited to the CLI, as multiple developers point out in the retweet summary, noting that you can now combine local files with Claude’s tools from a single UI.

• Local‑first usage: One engineer highlights that you can already kick off Claude Code from mobile by wiring it to GitHub, then use Desktop for local work on the same repos, which shifts Claude Code closer to being a general "agent on your filesystem" rather than a pure coding REPL in the mobile workflow note.

• Positioning vs ChatGPT: Some practitioners now compare this stack directly to ChatGPT’s Atlas and similar offerings, arguing that Claude Code plus Desktop and browser extensions better fits agent‑native workflows for real projects, as reflected in usage comments in the non‑technical angle.

Cursor’s dynamic context turns everything into files and cuts tokens by ~47%

Dynamic context (Cursor): Cursor’s new "dynamic context" system shifts from prompt‑stuffing to a filesystem‑backed approach: agents write long tool outputs, MCP responses, chat history and skills into files, then search and load only what’s needed at each step rather than keeping everything in the context window. Cursor reports a 46.9% reduction in total tokens when using multiple MCP servers while maintaining answer quality, per the feature announcement, and explains the design in the cursor blog.

• Filesystem offloading pattern: Long JSON tool responses and prior chats become files that agents can reopen or search, so destructive summarization is treated as "offloading" rather than permanent loss, as described in the design writeup and elaborated by Cursor’s cofounder in the context engineering thread.

• MCP and tools as files: Instead of static tool lists in the system prompt, MCP servers and their tools are mirrored into a folder structure, letting agents discover capabilities by directory traversal and filename search, which several engineers note lines up well with how models are trained to reason about files in the follow‑up notes and MCP discussion.

• Implication for agent‑native IDEs: Community commentary frames this as part of a broader shift toward "everything is a file" agent architectures that favor search over giant prompts, with some debate about pinning or always‑in‑context MCPs versus pure dynamic loading in the offloading takeaways and tool loading debate.
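The offloading pattern described in these bullets can be sketched in a few lines. This is a minimal illustration, not Cursor's implementation: the `offload` and `search` helpers, the size threshold and the stub format are all hypothetical.

```python
import tempfile
from pathlib import Path

MAX_INLINE_CHARS = 200  # hypothetical threshold for keeping output in context


def offload(workdir: Path, name: str, output: str) -> str:
    """Return what goes into the context window for a tool result.

    Short outputs are kept inline; long ones are written to a file and
    replaced by a stub that tells the agent where to look.
    """
    if len(output) <= MAX_INLINE_CHARS:
        return output
    path = workdir / f"{name}.txt"
    path.write_text(output, encoding="utf-8")
    return f"[{len(output)} chars offloaded to {path.name}; search it when needed]"


def search(workdir: Path, needle: str) -> list[str]:
    """Load only the lines that match, instead of the whole file."""
    hits = []
    for path in sorted(workdir.glob("*.txt")):
        for line in path.read_text(encoding="utf-8").splitlines():
            if needle in line:
                hits.append(f"{path.name}: {line}")
    return hits


workdir = Path(tempfile.mkdtemp())
long_output = "\n".join(
    f"row {i}: status={'error' if i == 37 else 'ok'}" for i in range(100)
)
stub = offload(workdir, "tool_call_1", long_output)
matches = search(workdir, "status=error")
print(stub)
print(matches)
```

The context window carries only the short stub, and the one relevant line is recovered by search when the agent needs it, so nothing is permanently lost to summarization.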

Cline 3.47.0 adds Background Edits and spotlights MiniMax M2.1 coding model

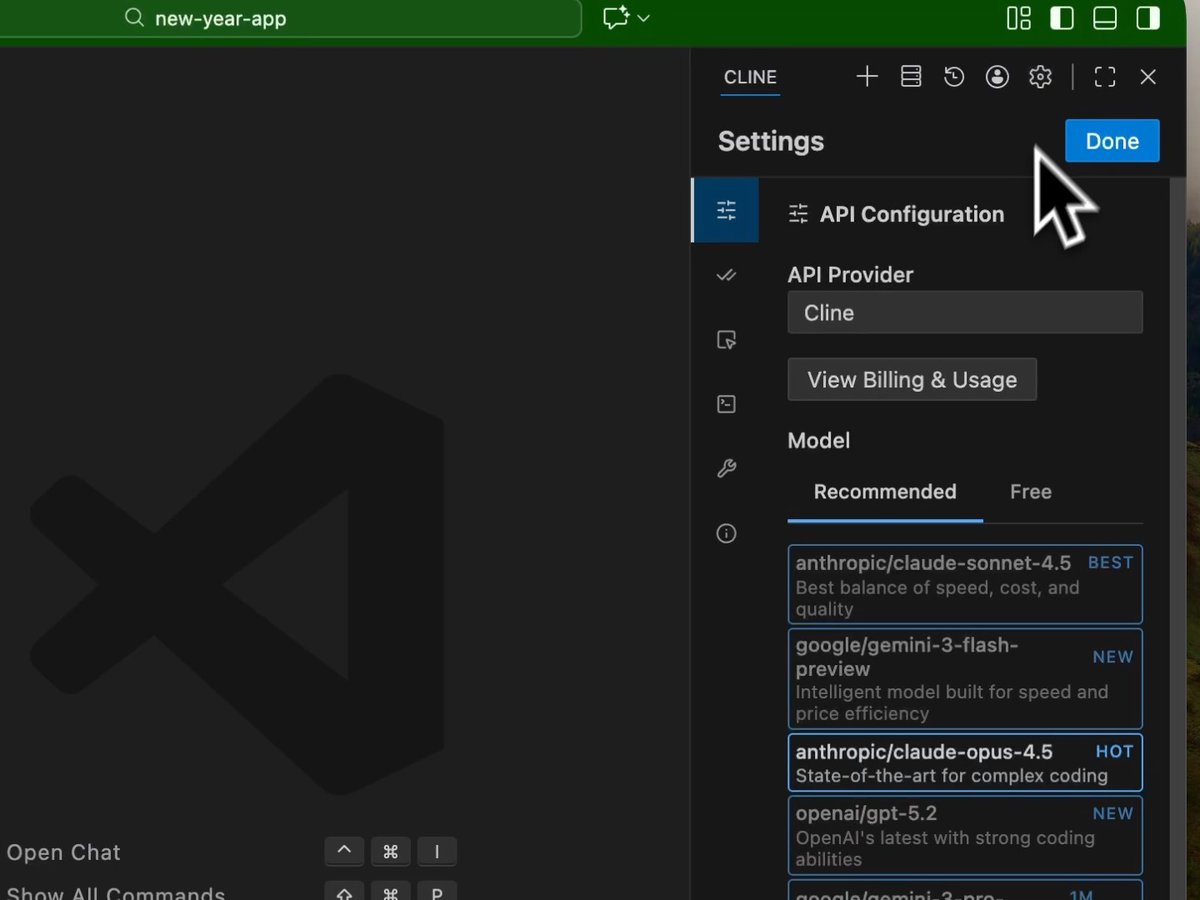

Cline 3.47.0 (Cline): The Cline team shipped v3.47.0 with a new Background Edits mode that lets the agent modify files in the background while the user keeps typing and navigating in their editor, plus a temporary free‑use window for MiniMax M2.1 as a coding backend, according to the release thread and details in the cline blog.

• Flow‑preserving edits: Background Edits addresses a frequent complaint that Cline used to yank focus into diff views and steal the cursor whenever it edited files; now it applies changes quietly until the user is ready to review and approve, which the team positions as key to staying "in flow" in the feature demo.

• MiniMax M2.1 promotion: For a limited time, MiniMax M2.1 is available free through the Cline provider, with developers calling out its strong multilingual coding and suitability for multi‑file refactors when paired with Cline’s orchestration in the model recommendation and echoed by MiniMax’s own workshop announcement in the minimax event.

• Ecosystem angle: The release also includes smaller fixes like Azure identity auth and better binary‑file handling, but the emphasis from both Cline and MiniMax is on treating Cline as a harness where you can swap in frontier and open‑weight models for long‑running coding agents rather than a single‑model assistant, as described in the release thread.

OpenCode Black $200 "any model" coding tier sells out in an hour

OpenCode Black (OpenCode): OpenCode launched a limited‑run OpenCode Black subscription at $200/month that promises "use any model" access for its coding agent server, and the initial batch sold out in about an hour, with the team saying they will use this cohort to learn usage patterns before offering cheaper, wider plans in the pricing tease and sellout update.

• Positioning vs Claude Max/Codex Pro: The creator frames Black as aiming for parity with offerings like Claude Max and Codex Pro—explicitly calling out that goal and noting this is an experiment to understand real‑world consumption before locking in limits and SKUs in the plan description.

• "Any model" orchestration: Alongside the subscription, OpenCode emphasizes that its existing server is already a convenient way to embed a coding agent anywhere and will evolve into a system that can orchestrate many agents across different providers, suggesting Black users will be early adopters of that multi‑agent, multi‑model harness in the server roadmap and follow‑up link.

• Community traction: The core OpenCode repo recently celebrated 50k GitHub stars and 500 contributors, which some users connect directly to appetite for premium tiers like Black in the stars milestone.

Rork leans on Claude Code and Opus 4.5 to ship mobile apps in three clicks

Rork 1.5 (Rork): Rork is pitching itself as "the easiest way to use Claude Code with Opus 4.5 to build real mobile apps," claiming it can take an agent‑authored app from spec to App Store publish in three clicks and offering a $25 subscription credit to early signups until tomorrow, as described in the rork offer.

• Agent‑native mobile stack: The product wraps Claude Code plus Opus 4.5 into an agent‑native flow that generates code, packages it as a mobile app and handles store submission, effectively turning Claude into a mobile app engineer rather than a generic assistant, a vision reinforced by the workshop and dinner announcement for M2.1 builders in San Francisco in the workshop invite.

• Ecosystem and community: Rork’s team highlights a small ecosystem of successful app founders and community members around the tool, presenting it as one of the first concrete examples where an agent harness is tied directly to monetizable mobile output rather than toy projects, which is a theme running through the rork offer.

🔌 Skills, MCP and agent‑to‑agent plumbing

Focus on interoperable agent stacks: Claude “Agent Skills” guidance, open‑source MCP mailboxes for A2A comms, centralized skill config via dotagents, and an active debate on filesystem vs MCP discovery. Excludes feature hardware topic.

Anthropic Agent Skills framing spreads as builders pivot from GPTs to reusable skills

Agent Skills (Anthropic): Anthropic’s "skills, not agents" framing is getting traction across the ecosystem, with community posts arguing that GPT-style standalone agents are a dead end and that centralized, reusable Skills are a better abstraction for complex apps, as laid out in the Skills diagram and talk in the skills explainer and in follow-on commentary in the agent skills video. Only lightweight Skill metadata is kept in Claude Code’s context by default, and the full implementation is pulled in on demand when the agent decides to call that Skill, which cuts unnecessary context bloat according to the skills metadata note.

• From GPTs to skills: Commentators describe GPT-style mini-apps as hard to manage and compose at scale and suggest turning GPT prompts into Skills so agents can invoke them directly when needed rather than users manually choosing and chaining GPTs, as discussed in the gpts vs skills and skills advantages threads.

• Access for non‑coders: Builders note that PMs and other non‑engineers can define Skills by writing natural-language specs and wiring existing tools, with one product manager explicitly told to "at least get Claude Pro and get started" since "you don’t have to know how to code to make Skills" in the skills for pm exchange.

• Role in agent‑native apps: Dan Shipper positions Skills as a core building block for "agent‑native" architecture—where every user action can also be done by an agent—and calls out that Skills sit in the filesystem as durable building blocks while agents orchestrate them in new ways in the agent native apps post.

The point is: Skills are starting to look like the portability and governance layer for agent ecosystems, while GPT-style mini‑apps are being reframed as raw material to be refactored into those Skills.
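The metadata-first loading described above can be sketched as follows. This is an illustration under stated assumptions: the `Skill` class, its fields and the loader callback are hypothetical and do not reflect Anthropic's actual Skill format.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Skill:
    name: str
    description: str              # lightweight metadata, always in context
    load_body: Callable[[], str]  # fetches the full instructions on demand
    _body: Optional[str] = field(default=None, repr=False)

    def body(self) -> str:
        """Load the full implementation the first time it is needed."""
        if self._body is None:
            self._body = self.load_body()
        return self._body


def context_summary(skills: list[Skill]) -> str:
    """What the agent sees by default: one metadata line per Skill."""
    return "\n".join(f"- {s.name}: {s.description}" for s in skills)


loads = []  # records which Skill bodies were actually fetched


def load_report() -> str:
    loads.append("weekly-report")
    return "Gather metrics, then draft the summary."


skills = [
    Skill("weekly-report", "Draft the weekly status report", load_report),
    Skill("triage", "Label incoming bug reports", lambda: "Apply one label."),
]
summary = context_summary(skills)  # no bodies loaded yet
body = skills[0].body()            # pulled in only when the agent calls it
print(summary)
print(loads)
```

Only the Skill the agent actually invokes has its body loaded, and repeated calls reuse the cached copy.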

MCP Agent Mail emerges as popular open mailbox for agent‑to‑agent chat

MCP Agent Mail (community): MCP Agent Mail is being positioned as a de facto open mailbox layer for AI agents, with the author reporting four viral launches, "many thousands of happy users," and broad compatibility across different agent harnesses, as detailed in the agent mail thread. The tool auto‑detects and configures MCP servers in under a minute, provides a web UI to inspect what agents are saying to each other, and includes export and share features so teams can publish mailboxes as live artifacts, as shown in the shared mailbox demo.

• Agnostic A2A plumbing: The project is described as working with "all agents" rather than a single vendor harness, acting as a shared inbox where tool calls, intermediate messages, and final outputs are stored as MCP messages instead of staying locked inside one app silo, according to the agent mail thread.

• Operational visibility and sharing: The UI surfaces threads and metadata so humans can audit or debug multi‑agent workflows, while the export function turns those conversations into shareable URLs that others can browse without reproducing the setup, which the author emphasizes in both the agent mail thread and the linked github repo.

This places MCP Agent Mail squarely in the emerging pattern of agent‑to‑agent plumbing, filling the gap between raw MCP protocols and higher‑level orchestration frameworks.

Cursor team defends filesystem‑based MCP discovery as users call for pinning

MCP discovery (Cursor): A public back‑and‑forth between power users and Cursor’s founder surfaced trade‑offs in how MCP tools are exposed to models: Cursor deliberately avoids an Anthropic‑style "tool search" API and instead syncs each MCP server into a dedicated filesystem folder so related tools live together and can be discovered via file listing, as described in the cursor mcp design reply. Tool descriptions and details are then pulled into context progressively when the agent decides to inspect or use a tool, with the team noting that this design is biased toward longer, more ambitious tasks rather than quick one‑shot utilities in the progressive loading note.

• User concerns about cohesive servers: Users argue that this pattern works best when MCP servers act like bags of disjointed tools, but creates friction when a server is a cohesive unit (for example, a tightly integrated API suite), because then you need separate Skills or markdown docs to explain server‑level behavior to the model; otherwise quality can regress, as warned in the mcp cohesion critique.

• Calls for pinned servers and prompts: Suggestions include letting users "pin" certain MCP servers so their full tool manifests always sit in context, and leveraging MCP prompt specs so slash‑command style triggers can tell the harness when to hoist an entire server into context instead of letting the model rediscover it via search, as proposed in the pinning suggestion and prompt spec idea exchanges.

The discussion highlights an emerging design fork in MCP plumbing: whether to prioritize context efficiency via filesystem offloading or prioritize semantic grouping and always‑on access for rich, cohesive servers.
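The two positions in this debate can be contrasted in a small sketch. Everything here is hypothetical (the folder layout, the `sync_server` and `build_context` helpers, and the one-JSON-file-per-tool format); it only illustrates cheap listing of tool names, progressive loading of descriptions, and optional pinning of a whole server.

```python
import json
import tempfile
from pathlib import Path


def sync_server(root: Path, server: str, tools: dict[str, str]) -> None:
    """Mirror one MCP server into its own folder, one JSON file per tool."""
    folder = root / server
    folder.mkdir(parents=True, exist_ok=True)
    for tool, description in tools.items():
        (folder / f"{tool}.json").write_text(json.dumps({"description": description}))


def list_tools(root: Path) -> list[str]:
    """Cheap discovery: file names only, no descriptions loaded."""
    return sorted(f"{p.parent.name}/{p.stem}" for p in root.glob("*/*.json"))


def load_tool(root: Path, ref: str) -> str:
    """Progressive loading: read one description when the agent asks for it."""
    return json.loads((root / f"{ref}.json").read_text())["description"]


def build_context(root: Path, pinned: set[str]) -> list[str]:
    """Pinned servers get full descriptions up front; others only names."""
    lines = []
    for ref in list_tools(root):
        server = ref.split("/")[0]
        lines.append(f"{ref}: {load_tool(root, ref)}" if server in pinned else ref)
    return lines


root = Path(tempfile.mkdtemp())
sync_server(root, "tickets", {"create": "Open a ticket", "close": "Close a ticket"})
sync_server(root, "weather", {"forecast": "Get a forecast"})
context = build_context(root, pinned={"tickets"})
print(context)
```

Pinning trades context tokens for guaranteed visibility of a cohesive server, while unpinned servers cost only a file name until the agent inspects them.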

dotagents TUI consolidates Claude Code skills, hooks and commands into .agents

dotagents (community): Ian Nuttall released dotagents, a text‑UI tool that migrates scattered agent configuration—AGENTS/CLAUDE.md files, hooks, commands and skills—from multiple tools like Claude Code, Codex and Factory into a single .agents directory, then symlinks them back so there is one source of truth for both global and project‑local setups, as explained in the dotagents description. The repository, published under MIT license, supports operating either at a per‑project level or as a global agent config hub on a machine, according to the github repo.

This addresses a practical paper‑cut many early agent users hit: keeping Skills, tool manifests and agent directives in sync across several harnesses once they start wiring Claude Code, Codex, and related CLIs into the same workflows.
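The migrate-then-symlink idea can be sketched as below. This is not dotagents' code: the `consolidate` helper and the file names are hypothetical, and the sketch assumes a platform where unprivileged symlinks are allowed.

```python
import shutil
import tempfile
from pathlib import Path


def consolidate(project: Path, sources: list[str]) -> Path:
    """Move each config into project/.agents and leave a symlink behind."""
    hub = project / ".agents"
    hub.mkdir(exist_ok=True)
    for rel in sources:
        src = project / rel
        if src.is_symlink() or not src.exists():
            continue  # already migrated, or nothing to move
        dest = hub / src.name
        shutil.move(str(src), str(dest))
        # The original path keeps working because it now points at the hub copy.
        src.symlink_to(dest, target_is_directory=dest.is_dir())
    return hub


project = Path(tempfile.mkdtemp())
(project / "CLAUDE.md").write_text("Prefer small, reviewed commits.\n")
hub = consolidate(project, ["CLAUDE.md"])
print((project / "CLAUDE.md").is_symlink(), sorted(p.name for p in hub.iterdir()))
```

Each tool still reads its config from the path it expects, while edits land in a single place. A fuller version would need to handle name collisions between sources.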

📊 Artificial Analysis Index v4.0 and eval cost optics

AA rolls out Index v4.0 (less saturated, more agentic) plus new GDPval‑AA, AA‑Omniscience and CritPT. Cost‑to‑run charts compare models’ dollar burn. New versus yesterday’s coverage: the full v4.0 breakdown and costs.

GPT‑5.2 tops Artificial Analysis Intelligence Index v4.0

Intelligence Index v4.0 (Artificial Analysis): Artificial Analysis released version 4.0 of its Intelligence Index with ten evaluations spread across four equally weighted pillars—Agents, Coding, Scientific Reasoning, General—and reports far less score saturation, with top models now ≤50 vs 73 in v3.0, according to the launch thread index v4 overview. GPT‑5.2 with xhigh reasoning effort leads the new index, followed by Anthropic’s Claude Opus 4.5 and Google’s Gemini 3 Pro Preview (high), while the benchmark mix shifts by dropping MMLU‑Pro, AIME 2025 and LiveCodeBench and adding AA‑Omniscience, GDPval‑AA and CritPT to better reflect real‑world, agentic workloads index v4 overview.

AA‑Omniscience decouples LLM accuracy from hallucination behavior

AA‑Omniscience (Artificial Analysis): Artificial Analysis introduced AA‑Omniscience, a 6,000‑question benchmark spanning 42 economically relevant topics in six domains (Business, Health, Law, Software Engineering, Humanities & Social Sciences, and STEM) to jointly measure factual recall and hallucination, scoring models with an Omniscience Index that rewards precise knowledge and penalizes confident guessing omniscience description. Gemini 3 Pro Preview (high) currently tops the Omniscience Index at 13, with Gemini 3 Flash (Reasoning) and Claude Opus 4.5 (Thinking) tied behind, but the breakdown shows that high accuracy does not imply low hallucination: Gemini 3 Pro and Flash reach 54% and 51% knowledge accuracy yet also post very high hallucination rates (88% and 85%), while Claude Sonnet 4.5 (Thinking), Claude Opus 4.5 (Thinking) and GPT‑5.1 (high) trade higher abstention for lower hallucination, metrics that each contribute 6.25% to the overall Intelligence Index v4.0 weighting omniscience description.
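The published numbers are easier to read with the arithmetic spelled out. The sketch below assumes (this is not stated in the text above) that the index is the share of correct answers minus the share of incorrect answers, with abstentions scoring zero, and that the hallucination rate is the fraction of not-correct responses that were answered wrongly rather than declined.

```python
def omniscience_index(accuracy: float, hallucination_rate: float) -> float:
    """Index in points (-100 to 100) under the assumed definitions.

    accuracy: fraction of questions answered correctly.
    hallucination_rate: of the questions NOT answered correctly, the
        fraction answered incorrectly (the rest are abstentions).
    """
    incorrect = hallucination_rate * (1.0 - accuracy)
    return 100.0 * (accuracy - incorrect)


gemini_3_pro = omniscience_index(0.54, 0.88)
gemini_3_flash = omniscience_index(0.51, 0.85)
print(round(gemini_3_pro, 2), round(gemini_3_flash, 2))
```

Under these assumptions, 54% accuracy with an 88% hallucination rate gives about 13.5, in line with the reported index of 13. It also shows why a model that abstains more can score competitively despite lower accuracy.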

CritPT physics benchmark exposes limits of frontier reasoning

CritPT (Artificial Analysis): The CritPT evaluation, built by more than 50 active physics researchers across 30+ institutions, tests models on 71 composite, research‑level challenges in areas like condensed matter, quantum physics, astrophysics and high‑energy physics, each designed to resemble an entry‑level graduate research warm‑up with machine‑verifiable answers critpt paper. GPT‑5.2 (xhigh) leads the CritPT leaderboard with roughly 11.5% accuracy, with Gemini 3 Pro Preview (high) and Claude Opus 4.5 (Thinking) following, but Artificial Analysis stresses in its v4.0 thread that even the best current models remain far from reliably solving full research‑scale physics problems end‑to‑end critpt recap.

GDPval‑AA ranks GPT‑5.2 and Opus 4.5 on real economic tasks

GDPval‑AA (Artificial Analysis): The new GDPval‑AA benchmark evaluates generalist agent performance on OpenAI’s GDPval dataset of economically valuable tasks across 44 occupations and 9 industries, running models in a Stirrup reference harness with shell and browser access and deriving ELO scores from blind pairwise comparisons index v4 overview. GPT‑5.2 (xhigh) leads the GDPval‑AA leaderboard with an ELO of 1442, followed by Claude Opus 4.5 (with the non‑thinking variant scoring highest at 1403), other GPT‑5.2 and Opus 4.5 reasoning settings, and Claude Sonnet 4.5 at 1259, on tasks that output realistic work products like documents, slides, spreadsheets and multimedia deliverables gdpval details.
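For intuition on what a rating gap means, the standard Elo expected-score formula can be applied to the quoted ratings. This assumes the conventional 400-point logistic scale; the exact fitting procedure Artificial Analysis uses is not described here.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo formula."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


gpt_vs_opus = expected_score(1442, 1403)
gpt_vs_sonnet = expected_score(1442, 1259)
print(f"{gpt_vs_opus:.3f} {gpt_vs_sonnet:.3f}")
```

On that scale, a 39-point lead corresponds to being preferred in roughly 56% of pairwise comparisons, and a 183-point lead to roughly 74%.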

Artificial Analysis publishes dollar cost to run its full Index v4.0

Eval cost optics (Artificial Analysis): A cost breakdown chart from Artificial Analysis quantifies how much it costs to run all Intelligence Index v4.0 evaluations per model, putting GPT‑5.2 (xhigh) at about $2,930 total (roughly $504 in input and $2,361 in output tokens), ahead of Grok 4 at $1,852 and Claude Opus 4.5 at $1,590, where Opus’ spend skews toward $787 input and $621 reasoning cost cost chart post. Gemini 3 Pro Preview (high) and GPT‑5.1 (high) land in a lower‑cost middle tier at $988 and $927 respectively, while Claude Sonnet 4.5 ($810), Qwen3 235B A22B (~$622) and GLM‑4.7 (~$611) illustrate how large open‑weight models can complete the same evaluation battery at substantially lower dollar burn, with the stacked bars separating input, output and explicit reasoning surcharges cost chart post.
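The totals quoted above can be normalized to compare relative spend. The dictionary only restates figures from the chart; values marked as approximate in the text are used as given.

```python
# Total cost in USD to run the full Index v4.0, as quoted above.
TOTAL_COST_USD = {
    "GPT-5.2 (xhigh)": 2930,
    "Grok 4": 1852,
    "Claude Opus 4.5": 1590,
    "Gemini 3 Pro Preview (high)": 988,
    "GPT-5.1 (high)": 927,
    "Claude Sonnet 4.5": 810,
    "Qwen3 235B A22B": 622,
    "GLM-4.7": 611,
}

cheapest = min(TOTAL_COST_USD.values())
relative = {m: round(c / cheapest, 2) for m, c in TOTAL_COST_USD.items()}

for model, ratio in sorted(relative.items(), key=lambda kv: -kv[1]):
    print(f"{model:30s} ${TOTAL_COST_USD[model]:>5,}  {ratio:.2f}x")
```

Relative to the cheapest model listed, the most expensive run costs a little under five times as much.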

🧠 Open/edge models and coding specialists

Multiple model drops for builders: an edge‑first LFM2.5 family, a 14B RL‑hardened coder with full training stack, a 236B MoE tech report, and a new image contender sighting. This avoids video/audio LTX‑2 (covered under Media).

Liquid AI releases LFM2.5 open-weight edge model family

LFM2.5 (Liquid AI): Liquid AI has announced LFM2.5, an open‑weight model family designed to run fast, private, and always‑on directly on devices across text, vision, audio, and Japanese‑language use cases, with pretraining of the base 1.2B model extended from 10T to 28T tokens for stronger reasoning and knowledge recall, as described in the lfm2-5 blog and echoed in the lfm2-5 overview.

• On‑device focus: The models target CPU‑class hardware with low memory footprints and optimized inference so that both generation and understanding tasks can stay local, avoiding cloud latency and data export—this edge emphasis is highlighted in the lfm2-5 blog.

• Multimodal & multilingual: The family spans text, vision, and audio (including an 8× larger audio variant) and is tuned for Japanese and other languages, with early community attention drawn to high‑quality text‑to‑speech samples in the lfm2-5 overview.

• Open stack: Liquid positions LFM2.5 as fully open‑weight infrastructure for builders, enabling fine‑tuning and integration into custom agents and local apps without proprietary dependencies, as laid out in the technical details of the lfm2-5 blog.

This release extends the trend of compact, open models optimized for edge deployment, giving engineers another viable option when GPU access is constrained or data must remain on device.

LG AI details K‑EXAONE: 236B MoE with 23B active and 256K context

K‑EXAONE (LG AI Research): LG AI Research released the technical report for K‑EXAONE, a multilingual Mixture‑of‑Experts language model with 236B total parameters but only 23B active per token, supporting a 256K‑token context window and six languages, and posting performance comparable to similarly sized open‑weight peers according to the k-exaone paper.

• Architecture & efficiency: K‑EXAONE uses an MoE design to cut active compute while keeping capacity high, pairing hybrid attention with Multi‑Token Prediction to yield around 1.5× decoding speed‑ups relative to dense baselines of similar quality, as summarized in the k-exaone paper.

• Language coverage: The model is trained for Korean, English, Spanish, German, Japanese, and Vietnamese, aiming squarely at both domestic Korean usage and broader multilingual applications, with this six‑language focus highlighted in the k-exaone paper.

• Positioning vs peers: Benchmarks reported by LG put K‑EXAONE in the same band as leading open‑weight large models on reasoning and general‑purpose tasks, while operating at a lower active parameter count via MoE routing, again outlined in the k-exaone paper.

This positions K‑EXAONE as one of the more ambitious open descriptions of a frontier‑scale MoE system, giving practitioners another reference point for large multilingual, long‑context deployments.
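To illustrate why an MoE model activates only a fraction of its parameters per token, here is a generic top-k routing sketch. The expert count, the `top_k` value and the router scores are hypothetical and are not taken from the K-EXAONE report; only the 236B and 23B figures come from the text.

```python
import math

TOTAL_PARAMS_B, ACTIVE_PARAMS_B = 236, 23
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B  # a little under 10%


def route(scores: list[float], top_k: int) -> dict[int, float]:
    """Pick the top_k experts for one token and softmax-normalize their weights."""
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    peak = max(scores[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(scores[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Eight hypothetical experts; only two run for this token.
weights = route([0.1, 2.0, -1.0, 2.0, 0.5, 0.0, 1.2, -0.3], top_k=2)
print(f"{active_fraction:.3f}", weights)
```

Only the selected experts' feed-forward weights are used for a given token, which is how total capacity can far exceed per-token compute.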

NousCoder‑14B hits 67.87% Pass@1 with fully open RL stack

NousCoder‑14B (Nous Research): Nous Research introduced NousCoder‑14B, a coding‑specialist model post‑trained on Qwen3‑14B that reaches 67.87% Pass@1—a +7.08 point gain over the Qwen baseline—on their target coding benchmark using verifiable execution rewards, as detailed in the nouscoder announcement and expanded in the nouscoder blog.

• Training recipe: The team used 48 B200 GPUs over 4 days, running reinforcement learning in their Atropos framework with an open RL environment, benchmark, and harness so others can reproduce or extend the experiments according to the nouscoder announcement.

• Reward design: Rewards are based on verifiable code execution rather than proxy signals, which reduces reward hacking and improves alignment between metric gains and real bug‑fixing or problem‑solving ability as explained in the nouscoder blog.

• Open infrastructure: Atropos, the RL stack, and logs are all released, giving practitioners a concrete reference for building RL‑hardened coding models on top of existing bases like Qwen3‑14B, as emphasized in the nouscoder announcement.

For engineers evaluating specialized coders, NousCoder‑14B shows how much headroom remains when you combine a strong base model with domain‑specific RL and a fully open training pipeline.
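A verifiable execution reward can be sketched as a function that runs a candidate solution against test cases and returns a binary score. This is a simplified illustration, not the Atropos implementation: the `execution_reward` helper and the `solve` entry-point convention are assumptions, and `exec` is used without sandboxing, which a real pipeline would need.

```python
def execution_reward(candidate_src: str, tests: list[tuple[tuple, object]]) -> float:
    """Return 1.0 only if the candidate defines solve() and passes every test."""
    namespace: dict = {}
    try:
        exec(candidate_src, namespace)  # unsandboxed: for illustration only
        solve = namespace["solve"]
        return 1.0 if all(solve(*args) == want for args, want in tests) else 0.0
    except Exception:
        return 0.0  # crashes and missing entry points earn no reward


def pass_at_1(rewards: list[float]) -> float:
    """With one sample per problem, Pass@1 is the mean reward."""
    return sum(rewards) / len(rewards)


tests = [((2, 3), 5), ((-1, 1), 0)]
good = execution_reward("def solve(a, b):\n    return a + b\n", tests)
bad = execution_reward("def solve(a, b):\n    return a - b\n", tests)
broken = execution_reward("def solve(a, b) return a + b", tests)
print(good, bad, broken, pass_at_1([good, bad, broken]))
```

Because the score depends only on whether the code actually runs and produces the expected outputs, there is little room for a policy to game it. For reference, a +7.08 point gain to 67.87% implies a Qwen baseline of 60.79%.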

Mystery "Goldfish" model impresses in Arena image comparisons

Goldfish image model (unspecified): A previously unannounced image model labeled “Goldfish” has appeared on Artificial Analysis’ Image Arena and is drawing attention for strong, photorealistic outputs in side‑by‑side comparisons against known models like FLUX.1 Kontext and GPT Image 1.5, as shown in the arena graffiti test and exercise infographic.

• Prompted comparisons: In urban street and infographic prompts, multiple users report preferring Goldfish’s samples over baselines; one example pits it against GPT Image 1.5 on an exercise‑benefits infographic, where Goldfish maintains layout and typography quality at least on par with the OpenAI model in the exercise infographic.

• Style and consistency: Earlier tests on graffiti‑covered alleyway scenes show Goldfish handling depth, lighting, and detailed textures well, matching or exceeding FLUX.1 Kontext [pro] on user preference in the arena graffiti test.

• Speculation & unknowns: Community posts note that Goldfish is not yet tied to a public card or repo, and some speculate it could be a new "Nano Banana Flash"‑style model from Google or another lab, but at this point both the architecture and licensing status remain undocumented in the goldfish arena mention and goldfish speculation.

For analysts tracking the image‑model landscape, Goldfish is an early signal that another high‑end, likely open‑weights contender may be in testing, but hard details on training data, safety tuning, and access are not yet available.

🗣️ Sub‑500ms voice stacks with NVIDIA Nemotron Speech

Today’s clips show production‑grade voice agents: open models (ASR+LLM+TTS) with <500ms voice‑to‑voice, and scaling tests hitting 127 concurrent clients on one H100. Mostly engineering notes and code links.

Nemotron Speech ASR stack hits <500ms voice‑to‑voice with fully open models

Nemotron Speech ASR stack (NVIDIA): NVIDIA engineers showcase an end‑to‑end voice agent that finalizes transcription in ~24ms and completes round‑trip voice‑to‑voice in under 500ms using Nemotron Speech ASR, Nemotron 3 Nano 30B (4‑bit) as the LLM, and a preview Magpie TTS model in a single pipeline, with all three models and their training artifacts released as truly open source, according to the demo and commentary in the agent demo.

• Latency and model stack: The reference agent uses streaming Nemotron Speech ASR for low‑drift transcripts, Nemotron 3 Nano 30B quantized to 4‑bit via vLLM for fast reasoning, and an early Magpie checkpoint for text‑to‑speech; the system achieves 24ms transcription finalization and sub‑500ms total voice response in the example configuration, as described in the agent demo.

• Open source and deployment: NVIDIA emphasizes that Nemotron Speech ASR, Nemotron 3 Nano, and Magpie are released with weights, training data, training code, and inference code, and publishes a full reference implementation that can be deployed either on Modal’s cloud or locally on DGX Spark and RTX 5090 via Docker, as outlined in the voice agent blog and summarized in the deployment notes.

• CES positioning in open model universe: Nemotron Speech ASR is introduced at CES 2026 as part of NVIDIA’s broader push for truly open models optimized for low‑latency use cases like real‑time voice assistants, with the ASR highlighted as a building block for production‑grade, multi‑model agents in the nvidia asr promo.

Overall this positions Nemotron Speech ASR plus the paired LLM and TTS as a reference design for builders who want sub‑second, end‑to‑end voice agents without relying on closed APIs or proprietary training pipelines.
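The ASR → LLM → TTS ordering and the 500ms target described above can be sketched as a small timing harness. This is only an illustration: the three stages below are hypothetical stand-in functions rather than the actual Nemotron or Magpie models, and `run_turn` is a name made up for this example. A real streaming agent overlaps the stages instead of running them strictly one after another.

```python
import time
from typing import Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[str], str]]


def run_turn(audio: str, stages: List[Stage], budget_ms: float = 500.0):
    """Run the stages in order and record each stage's latency in milliseconds."""
    timings: Dict[str, float] = {}
    payload = audio
    for name, fn in stages:
        start = time.perf_counter()
        payload = fn(payload)
        timings[name] = (time.perf_counter() - start) * 1000.0
    # Compare the sequential total against the voice-to-voice budget.
    within_budget = sum(timings.values()) <= budget_ms
    return payload, timings, within_budget


# Hypothetical stand-ins for the ASR, LLM and TTS models.
stages = [
    ("asr", lambda audio: f"text({audio})"),
    ("llm", lambda text: f"reply({text})"),
    ("tts", lambda reply: f"speech({reply})"),
]

out, timings, within_budget = run_turn("mic_chunk", stages)
```

With the reported ~24ms transcription finalization, roughly 476ms of the 500ms budget would remain for the LLM, TTS and transport in this sequential view.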

Modal benchmarks Nemotron Speech ASR at 127 concurrent streams on one H100

Nemotron Speech ASR scaling (Modal): Modal reports that Nemotron Speech ASR can serve 127 simultaneous streaming WebSocket clients with sub‑second 90th‑percentile latency on a single NVIDIA H100 GPU, framing the model as suitable for large‑fan‑out real‑time voice agents rather than single‑user demos, according to their benchmark summary in the modal benchmark.

• Concurrency and latency profile: The shared chart shows p90 delays remaining below one second even as concurrent streams ramp from tens to 120+, with separate curves for different segmenting configurations (for example 160ms, 560ms, 1.12s windows), illustrating how the ASR maintains responsiveness under load in the modal benchmark.

• Infrastructure implications: Running 127 active voice streams on a single H100 suggests that modest clusters can back sizeable fleets of phone bots or assistants, and the accompanying write‑up details how this setup was implemented on Modal’s serverless GPU platform for reproducible load testing in the benchmark blog.

This benchmark gives AI engineers a concrete scaling data point for Nemotron Speech ASR, tying its single‑conversation latency claims to multi‑tenant, production‑style workloads on current‑generation NVIDIA accelerators.
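As a rough capacity-planning sketch built on the 127-streams-per-H100 figure, the helpers below estimate how many GPUs a target concurrency would need and compute a nearest-rank p90 from latency samples. Both function names are illustrative, and the estimate assumes the workload matches the benchmark configuration, with no headroom added.

```python
from typing import Sequence

STREAMS_PER_H100 = 127  # concurrency reported in the Modal benchmark


def gpus_needed(concurrent_streams: int, per_gpu: int = STREAMS_PER_H100) -> int:
    """Ceiling division: smallest GPU count that covers the requested streams."""
    if concurrent_streams <= 0:
        return 0
    return -(-concurrent_streams // per_gpu)


def p90(latencies_ms: Sequence[float]) -> float:
    """Nearest-rank 90th percentile of a non-empty list of latency samples."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = -(-90 * len(ordered) // 100)  # ceil(0.9 * n) using integer arithmetic
    return ordered[rank - 1]
```

For example, `gpus_needed(1000)` returns 8, since seven GPUs at 127 streams each cover only 889 streams.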

🔎 Binary + int8 rescoring for 40M‑doc, 200ms search

Hands‑on retrieval stack shows CPU‑friendly search at scale: binary indexes + int8 rescoring hit ~200ms over 40M texts with tiny RAM. Multiple posts share RAM/disk math and restore‑accuracy curves.

Binary + int8 quantized retrieval hits 200ms search over 40M docs on CPU

Quantized Retrieval demo (Hugging Face / sentence-transformers): Tom Aarsen shows a retrieval stack that answers queries over 40M Wikipedia texts in ~200ms on a single CPU server with 8GB RAM and ~45GB of disk, using a binary index in memory plus int8 embeddings on disk as described in the search thread and implemented in the demo space. Instead of storing fp32 vectors, embeddings are precomputed once, then stored as a 32× smaller binary index for fast approximate search and a 4× smaller int8 view for precise rescoring, with only the binary index resident in RAM according to the quantization blog.
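The 32× and 4× ratios follow directly from bits per dimension, which the short calculation below makes explicit. The embedding dimension is not stated here, so `DIMS = 1024` is purely an assumption for illustration, and the helper name is made up.

```python
def index_bytes(num_vectors: int, dims: int, bits_per_dim: int) -> int:
    """Raw storage for the vectors alone, ignoring any index overhead."""
    return num_vectors * dims * bits_per_dim // 8


NUM_TEXTS = 40_000_000
DIMS = 1024  # assumption: the source does not state the embedding dimension

fp32_bytes = index_bytes(NUM_TEXTS, DIMS, 32)
int8_bytes = index_bytes(NUM_TEXTS, DIMS, 8)
binary_bytes = index_bytes(NUM_TEXTS, DIMS, 1)

for name, size in [("fp32", fp32_bytes), ("int8", int8_bytes), ("binary", binary_bytes)]:
    print(f"{name:>6}: {size / 1e9:.2f} GB")
```

Under that assumption the raw sizes land near 164 GB, 41 GB and 5 GB, in the same ballpark as the figures quoted in the thread. The ratios between them hold for any dimension.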

• Inference pipeline: The system embeds the query in fp32, quantizes it to binary, retrieves ~40 candidates via an exact or IVF binary index, then loads the corresponding int8 embeddings from disk to rescore and rank the top ~10 using the original fp32 query, as outlined in the pipeline description.

• Accuracy vs fp32: By retrieving roughly 4× as many candidates with the binary index and then int8‑rescoring, the setup recovers about 99% of full fp32 retrieval performance, compared to around 97% when using the binary index alone without rescoring, per the results shared in the accuracy note.

• Resource and speed delta: A conventional fp32 index over the same 40M texts would require on the order of 180GB RAM and 180GB of embedding storage and run roughly 20–25× slower, whereas the binary+int8 approach uses ~6GB RAM and ~45GB of disk for embeddings, as quantified in the ram and disk discussion.

• Extensibility: The author notes that this pattern can be extended with a sparse component such as BM25 or SPLADE-style encoders to form hybrid retrieval stacks while keeping most of the efficiency gains, as suggested in the sparse extension.

Overall this thread illustrates a concrete recipe for large‑scale, CPU‑friendly retrieval where careful quantization and two‑stage scoring trade a small loss in recall for dramatic savings in memory, disk, and latency.
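A minimal NumPy sketch of the two-stage recipe is shown below, assuming a brute-force Hamming scan in place of a real binary index and an in-memory array in place of the on-disk int8 store (an `np.memmap` could play that role). Function names and the toy corpus are illustrative and are not taken from the demo's code.

```python
import numpy as np


def quantize_binary(vectors: np.ndarray) -> np.ndarray:
    """Keep one sign bit per dimension and pack 8 dimensions into each byte."""
    return np.packbits(vectors > 0, axis=-1)


def quantize_int8(vectors: np.ndarray) -> np.ndarray:
    """Scale by the global max magnitude and round into the int8 range."""
    scale = np.abs(vectors).max()
    return np.clip(np.round(vectors / scale * 127), -127, 127).astype(np.int8)


def hamming_top_k(query_bits: np.ndarray, index_bits: np.ndarray, k: int) -> np.ndarray:
    """Exact scan: XOR the packed bits, count differing bits, keep the k closest."""
    xor = np.bitwise_xor(index_bits, query_bits)
    distances = np.unpackbits(xor, axis=1).sum(axis=1)
    return np.argsort(distances, kind="stable")[:k]


def search(query, index_bits, int8_store, top_k=10, oversample=4):
    """Stage 1: binary candidates. Stage 2: rescore int8 docs with the fp32 query."""
    candidates = hamming_top_k(quantize_binary(query), index_bits, top_k * oversample)
    scores = int8_store[candidates].astype(np.float32) @ query.astype(np.float32)
    order = np.argsort(-scores)[:top_k]
    return candidates[order], scores[order]


# Toy corpus (hypothetical sizes): 2,000 unit-norm vectors with 64 dimensions.
rng = np.random.default_rng(0)
docs = rng.normal(size=(2000, 64)).astype(np.float32)
docs /= np.linalg.norm(docs, axis=1, keepdims=True)
index_bits = quantize_binary(docs)   # would stay resident in RAM
int8_store = quantize_int8(docs)     # would live on disk in the real setup

# Query: a lightly perturbed copy of document 123.
query = docs[123] + 0.01 * rng.normal(size=64).astype(np.float32)
ids, scores = search(query, index_bits, int8_store)
```

The `oversample=4` default mirrors the roughly 4× candidate oversampling described above: 40 binary candidates are rescored to produce the final 10.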

🎬 Open video/audio tooling and creator controls

A sizable media cluster: LTX‑2 ships open weights + training code (Day‑0 ComfyUI support) with 4K and lip‑sync, NVIDIA‑optimized checkpoints land, and image relight/mark‑to‑edit tools surface. This excludes Rubin (covered as feature).

LTX‑2 open audio‑video model gets NV‑optimized ComfyUI support

LTX‑2 (Lightricks): Open‑weight LTX‑2, a multimodal audio‑video diffusion model with native 4K output and synchronized sound, is now shipping with full weights, training code and distilled checkpoints that run locally on RTX GPUs, as described in the launch breakdown architecture thread and the Hugging Face model page model card; following up on LTX-2 launch which focused on speed, today’s updates show it moving into production‑grade tooling with Day‑0 ComfyUI graphs and NVIDIA‑tuned quantized variants.

• Day‑0 ComfyUI integration: ComfyUI added native LTX‑2 nodes with text‑to‑video, image‑to‑video and control inputs (canny/depth/pose), so creators can keyframe, set aspect ratios (16:9/9:16) and drive synchronized dialogue/SFX/music from a single pass, as shown in the ComfyUI announcement comfyui support.

• NVIDIA NVFP4/NVFP8 checkpoints: Lightricks, NVIDIA and ComfyUI report new NVFP4 and NVFP8 LTX‑2 checkpoints that run up to 3× faster with ~60% less VRAM while still generating up to 20‑second clips at 4K and 50 fps, according to the joint update comfyui nv update and the linked NVIDIA and ComfyUI write‑ups nvidia blog and comfyui guide.

• Local, extensible stack: Early testers describe running the distilled model in real time on single‑GPU desktops with no data leaving the machine architecture thread, and the published training framework plus LoRA hooks mean teams can fine‑tune on their own styles or motions without retraining the full 19B backbone ltx2 repo.

The net result is that LTX‑2 has moved from an open research drop to a practical, controllable video engine that fits into existing creator pipelines via ComfyUI and RTX‑class PCs rather than only via closed SaaS APIs.

Higgsfield Relight adds studio‑grade lighting control to static images

Relight (Higgsfield): Higgsfield introduced Relight, an image‑editing tool that lets users reposition virtual lights, adjust intensity and color temperature, and control soft‑to‑hard shadows directly on existing photos, turning static renders into relit scenes without a physical studio, as shown in the product teaser relight launch and the creator walkthrough relight deep dive.

• 3D‑aware light placement: The UI exposes controls for light direction, strength and temperature plus six presets, with the model handling geometry so highlights and shadows wrap correctly around faces and objects even as the light source moves relight launch.

• Shadow and mood control: Techhalla’s review notes per‑pixel soft‑to‑hard shadow control and shows before/after sequences where Relight adds drama or flattens contrast while preserving texture and detail, which they frame as "full spatial control" over lighting relight deep dive.

• Creator workflow focus: The same thread stresses that Relight slots into existing pipelines as an edit step—upload an image, mark it for relighting, tweak sliders, export—rather than forcing users into a new generation workflow relight deep dive.

For image and video teams, Relight effectively promotes lighting from a one‑time shoot decision to an editable parameter, which may reduce the need for reshoots when clients change direction late in a project.

Freepik Nano Banana + Kling pipeline recreates a Zelda trailer on $300

Zelda fan trailer (Freepik/Nano Banana Pro): Creator PJ Accetturo detailed how he produced a gritty Legend of Zelda teaser in five days on a ~$300 budget using Freepik’s Nano Banana Pro image model plus Kling 2.6 for animation, mapping out a story arc of "Fear → Ruin → Rage → Capture → Confrontation" and then building each beat with tightly controlled prompts zelda trailer demo.

• Prompted 2×2 scene grids: He uses a 3,000‑character prompt and a 2×2 grid setting in Nano Banana Pro inside Freepik to generate consistent lighting and multiple angles per scene, warning that 3×3 grids "lose too much detail" for cinematic work image generation tip and aspect ratio tip.

• Reference‑driven consistency: Freepik’s UI lets him load a character reference (Zelda), a style reference (a cinematic shot grid), and even a wooden doll photo so Nano Banana Pro keeps anatomy, costumes and framing coherent across dozens of generated frames reference control tip.

• Upscaling and animation: After selecting and cropping stills into a contact sheet, he upscales them inside Freepik with a long, custom upscaler prompt, then feeds key shots into Kling 2.6 with action‑specific prompts (for example, "looking back in fear") plus heavy camera‑shake to get handheld‑feeling motion workflow thread.

• Sound and distribution: The final cut layers an orchestral Zelda cover and hand‑tuned SFX from a collaborator, and Accetturo is sharing the full prompts and process via his newsletter so others can reuse the pipeline workflow newsletter.

This case study shows how off‑the‑shelf image and video models can now support end‑to‑end trailer‑style storytelling, with prompt engineering and selection doing the work that used to require crews and sets.

Genspark AI Image adds "Mark to Edit" region‑aware editing

AI Image (Genspark): Genspark rolled out Mark to Edit, a region‑aware editing mode where users click parts of an image, describe a change in natural language, and have the model apply localized edits while leaving the rest intact, as demonstrated in their launch clip mark to edit demo and product link genspark editor.

• Click‑select, then describe: The demo shows a user lassoing an object, entering a short instruction (for example, changing clothing or colors) and getting an updated render that respects the original composition and lighting mark to edit demo.

• Iterative design loop: Because each edit is scoped to the marked region, creators can iterate on multiple elements in one image—backgrounds, props, characters—without re‑prompting the entire scene, which reduces the risk of global changes undoing earlier work mark to edit demo.

For teams already using image models for layout and ideation, this sort of region‑first control shifts them closer to traditional design tools while retaining the flexibility of prompt‑based generation.

Cursor + Nano Banana + Tripo demo shows 1‑day 3D asset pipeline

Banana 3D workflow (Techhalla): A short demo from Techhalla shows a developer vibe‑coding an app in Cursor, generating reference images with Nano Banana Pro, then converting those into animated 3D FBX assets using Tripo, all within a day, to make a usable interactive environment 3d workflow demo.

• Code → concept art → 3D: The sequence walks through Cursor‑based app development, Nano Banana‑generated concept imagery for a stylized banana, and Tripo’s image‑to‑3D conversion producing a rotating banana model that is then animated and placed into a scene 3d workflow demo.

• End‑state: game‑ready asset: The final clip shows the exported FBX banana moving in a simple environment, with Techhalla arguing that this kind of "stacked" toolchain explains why AI is rapidly taking over content pipelines 3d workflow demo.

While each component here is closed or semi‑open, the workflow illustrates how off‑the‑shelf models are now able to cover concept art, geometry and basic animation in a single loop, reducing the number of specialized tools needed for small teams.

Vector‑to‑plush workflow turns Illustrator art into product shots with Nano Banana

Vector‑to‑plush pipeline (Ror_Fly): Designer Ror_Fly outlined a six‑step workflow that starts from a 2D vector in Adobe Illustrator and ends with plush toy product and lifestyle renders using Nano Banana Pro and Weavy, effectively prototyping a physical line from flat art without a photo shoot vector plush demo.

• From SVG to "material" render: They first export a flat Cartman‑style vector as PNG, then feed it to Nano Banana Pro with a system prompt that "turns 2D vectors into 8K 3D plush," enforcing exact shape and colors while simulating fabric, seams and stuffing physics vector plush demo.

• Angle and scale generation: A second prompt asks Nano Banana Pro to generate nine additional shots from locked materials, proportions and lighting, which are then composited into a contact sheet and combined with a dimension diagram so later lifestyle images preserve consistent toy scale workflow recap.

• Lifestyle expansion: Finally, the contact sheet and scale guide drive another round of prompts that place the plush in six different lifestyle contexts, giving a full product‑page kit (closeups, turnarounds, in‑use scenes) without photographing a real sample workflow recap.

For teams exploring merch or toy concepts, this sort of vector‑to‑plush workflow turns Illustrator skills plus an image model into a de facto 3D and photography department.

🤖 Humanoids and AV reasoning at CES pace

Continuing yesterday’s robotics push with new specifics: Atlas’ production‑grade specs and Hyundai trials, plus NVIDIA’s Alpamayo for explainable driving, and LG’s CLOiD home demo. Excludes Rubin economics (feature).

Atlas humanoid gets production specs and Hyundai factory trial timeline

Atlas humanoid (Boston Dynamics/Hyundai): Boston Dynamics’ latest CES brief put hard numbers on its production Atlas—around 6'2" and 198 lbs, 56 degrees of freedom, a 4‑hour battery with self‑swapping packs for near‑continuous operation, ~110 lb lift (≈66 lb sustained), and Nvidia compute for real‑time autonomy, with the robot constantly re‑evaluating posture, balance and grip on the fly as recapped in Atlas spec summary and joints and hands. Building on the Savannah plant pilot described in Atlas launch, Hyundai now shows Atlas practicing autonomous parts sequencing for roof racks on an active line and talks about ramping to roughly 30,000 units a year by 2028 once safety and quality are validated, positioning Atlas as a real industrial worker rather than a lab demo in Hyundai trial.

• Mechanical and hand design: Continuous 360° joints in limbs, torso and head avoid wire fatigue and enable superhuman twists and recoveries, while three‑finger hands can reconfigure between pinch and wide grips with fingertip tactile sensing feeding a neural controller for force‑aware manipulation of small and large parts joints and hands.

• Training and deployment: Atlas policies are trained via VR teleoperation with humans repeating factory tasks until the robot can perform them autonomously, and Hyundai frames 2028 as the first large‑scale deployment window once these pilots mature Hyundai trial.

The combined spec sheet and early Savannah trial give engineers a clearer sense of Atlas’ real operating envelope and how quickly large fleets could start appearing on automotive lines.

NVIDIA’s Alpamayo AV stack explains its own driving decisions in real time

Alpamayo AV stack (NVIDIA): NVIDIA used CES to unveil Alpamayo, an open autonomous‑driving model family that runs end‑to‑end from raw camera feeds to vehicle actuation while emitting both its chosen maneuver and a natural‑language explanation, pitched as a system that "tells you what action it’s gonna take, and the reasons behind it" in the keynote summarized in Alpamayo segment. Training combines human driving demonstrations, synthetic Cosmos scene data and carefully curated edge‑case scenarios so the model can decompose rare events into familiar patterns and explain choices like "steering to the right to avoid obstruction" before executing, as described in reasoning details and in the FSD‑oriented recap in FSD upgrade thread.

• End‑to‑end plus explanations: The stack is architected to go from multi‑camera perception straight through prediction, planning and control, while a parallel head produces textual rationales that can be surfaced to drivers, validation teams or regulators Alpamayo segment.

• Data mix and edge cases: NVIDIA highlights Alpamayo’s use of synthetic Cosmos scenarios and tele‑operated replays to cover long‑tail hazards, arguing this helps it generalize to novel intersections and complex merges more robustly than rule‑based stacks reasoning details.

• Positioning for FSD vendors: Commentary from AV watchers frames Alpamayo as a potential reasoning core for systems like Tesla FSD, emphasizing its "thinking like a human" narrative and lack of LiDAR reliance in FSD upgrade thread.

The announcement signals a shift toward AV stacks where explainability and human‑readable intent are treated as first‑class outputs alongside trajectories and control commands.

NVIDIA lines up a full physical‑AI stack with major robot partners at CES

Physical AI stack (NVIDIA): NVIDIA framed robotics at CES as reaching a "ChatGPT moment", presenting a full physical‑AI platform (Cosmos and GR00T foundation models for perception and policy, Isaac Lab‑Arena simulation tools, and Jetson Thor edge compute) while global partners like Boston Dynamics, Caterpillar, LG, Franka and NEURA showed new humanoids, quadrupeds, industrial arms and heavy machinery tied into the stack, as seen in the keynote montage in robotics ecosystem.

• Model + sim + hardware: The platform is pitched as tightly coupled across layers: Cosmos Reason handles multimodal world understanding, GR00T covers general‑purpose robot control, Isaac Lab‑Arena provides large‑scale simulated training grounds, and Jetson Thor brings that execution to edge devices on factory floors and in homes robotics ecosystem.

• Open‑source linkage: NVIDIA and Hugging Face announced plans to integrate open Isaac technologies directly into the LeRobot robotics library, effectively turning popular research code into a front‑end for the same Isaac/Cosmos stack powering the CES demos LeRobot collaboration.

For robotics teams, the message is that NVIDIA wants the combination of its models, sim tools and edge compute to be the default substrate for everything from Atlas‑class humanoids to home helpers and construction rigs.

Reachy Mini home robot sees 4,900% new‑customer spike after CES visibility

Reachy Mini (Pollen Robotics/Hugging Face): Following up on its cameo in NVIDIA’s CES keynote as an accessible desktop robot platform Reachy debut, Pollen Robotics reports a 4,900% day‑over‑day jump in new customers and 1,002 total new buyers in the latest period, attributing the surge to CES exposure and noting that units now ship with ~90‑day lead times as shown in the metrics chart in Reachy adoption data and the earlier spotlight in CES presence.

• Developer stack and guides: NVIDIA and Hugging Face co‑published how‑to material for turning Reachy Mini into a personal AI assistant using Isaac tools and the LeRobot ecosystem, with step‑by‑step demos of voice‑controlled workflows and cloud or local deployment paths in assistant guide and the showcased keynote assistant that paired the robot with a dog on stage in assistant demo.

The adoption spike suggests that a relatively low‑cost, openly programmable platform can attract a broad base of hobbyists and researchers once it’s tied visibly into a major AI stack and developer tooling.

Italian startup Generative Bionics debuts GENE.01 humanoid with "physical AI"

GENE.01 humanoid (Generative Bionics): Italian startup Generative Bionics announced its first humanoid robot, GENE.01, describing it as bringing intelligence into the body by combining generative structural design with on‑board "Physical AI" controllers so its whole‑body motion remains responsive, stable and efficient in dynamic environments, according to the launch highlight in GENE.01 intro.

• Design and control philosophy: The company pitches generative design to optimize the mechanical structure for strength and weight, then layers AI‑based control policies that coordinate joints for smooth locomotion and manipulation rather than relying on rigidly scripted trajectories GENE.01 intro.

While technical details and benchmarks are still sparse, GENE.01 adds another entrant to the growing field of mid‑size humanoid platforms aiming to blend novel mechatronics with learned control for industrial and service roles.

💸 Capital and adoption: xAI and LMArena signal scale

Two sizable funding signals and an assistant footprint move: xAI’s oversubscribed $20B Series E with NVIDIA/Cisco as strategic investors; LMArena’s $150M Series A at $1.7B; Alexa+ expands into web. Hardware feature excluded here.

xAI closes oversubscribed $20B Series E to fund Grok 5 and massive compute

xAI Series E (xAI): xAI has completed an upsized Series E round at $20B, exceeding its earlier $15B target and bringing in strategic investors NVIDIA and Cisco Investments, with Valor, StepStone, Fidelity, QIA, MGX and Baron also participating as detailed in the funding summary. The company says the capital will mainly fund infrastructure and accelerate training and deployment of Grok 5, building on its Colossus I/II clusters that already total >1M H100 GPU equivalents according to the compute breakdown.

Funding scale and positioning: The round follows xAI’s earlier $6.6B raise in 2024 and its all‑stock deal to acquire X at a notional $80B valuation for xAI and $33B for X (net of debt), with The Information previously suggesting an implied valuation around $230B for xAI—numbers cited in the compute breakdown. xAI also highlights that it is running reinforcement learning at “pretraining‑scale compute”, signaling a strategy that leans heavily on large‑scale RL rather than treating it as a small post‑training pass compute breakdown. The combination of strategic GPU partners and a stated focus on Grok 5 training and RL‑heavy pipelines underlines xAI’s intent to compete at the very top tier of general‑purpose model providers rather than staying a niche alt‑stack.

LMArena lands $150M Series A at $1.7B valuation and $30M ARR run rate

LMArena Series A (LMArena): Evaluation platform LMArena has raised $150M in a Series A round that values the company at $1.7B, nearly 3× its May seed valuation, as announced in the funding announcement and echoed by funding recap. The round is led by Felicis and UC Investments (University of California), with a16z, Kleiner Perkins, Lightspeed and others joining, and comes as the team reports an annualized consumption run rate above $30M less than a year after launching its paid evaluations funding announcement.

• Adoption metrics: LMArena says it now serves 5M+ monthly users across 150 countries, generating over 60M conversations per month to evaluate model behavior across text, code, image, video and search workloads, according to the funding announcement. It frames this as evidence that the world wants models that are “measurable, comparable, and accountable”, positioning LMArena as a kind of public benchmark and UX layer for real‑world model quality.

• Use case and spend narrative: The team emphasizes that most existing benchmarks are saturated and removed several (MMLU‑Pro, AIME 2025, LiveCodeBench) from its headline index while adding GDPval‑AA, AA‑Omniscience and CritPT to better track economically valuable tasks, hallucination and hard physics reasoning, as summarized in the index update. They present the new capital as fuel to scale engineering, research, ops and community programs rather than as a runway lifeline, and commentators like TestingCatalog highlight that LMArena is becoming a default place for both labs and end‑users to compare models funding recap.

Alexa+ comes to Alexa.com, turning Amazon’s assistant into a cross‑platform web agent

Alexa+ web rollout (Amazon): Amazon has brought Alexa+ to the browser at alexa.com, turning the assistant from a voice‑and‑mobile feature into a full web app that can plan, learn, create, shop and find information, as described in the rundown brief and alexaplus analysis. Following up on alexa web, which covered the initial early‑access UI, new coverage stresses agentic task completion and third‑party integrations rather than simple Q&A.

• Agentic integrations: Alexa+ on the web now ties into services like Expedia, Yelp, Angi and Square, allowing it to not only answer questions but also carry out tasks such as booking travel, finding local services and interacting with SMB tooling, according to the alexaplus analysis. The UI surfaces actions like Plan, Learn, Create, Shop, Find prominently, positioning it as a general productivity and commerce agent.

• Adoption context: The Rundown frames this move as Amazon coming directly for ChatGPT’s web turf, arguing that the combination of Alexa’s large install base and a browser‑native experience could shift user behavior away from pure chatbots and toward assistants that sit on top of shopping and home ecosystems rundown brief. There is still no mention of pricing changes or clear GA timelines beyond “early access,” but the pattern is consistent with Amazon’s broader strategy of embedding AI assistance in every access point, from smart speakers to mobile apps and now the desktop browser.

Samsung plans 800M Galaxy AI devices in 2026, doubling AI footprint

Galaxy AI scale‑up (Samsung): Samsung plans to double its AI‑enabled mobile devices to about 800M units in 2026, powered by a mix of Google Gemini and its own Bixby models, according to co‑CEO T.M. Roh’s comments summarized in the samsung ai forecast. The company links this to strong demand for Galaxy AI features like generative images, translation and productivity tools, arguing that “AI adoption will spike rapidly” as more devices ship with these capabilities built in samsung ai forecast.

• Strategic context: The move comes as Samsung faces what it calls an “unprecedented” memory chip shortage and rising smartphone prices, and it explicitly notes that capacity is being prioritized for AI datacenters and DDR5/HBM over legacy DDR4, as highlighted in the same samsung ai forecast. That implies that handset AI features are both a consumer product story and a demand driver for the upstream GPU/memory stack.

• Competitive positioning: Coverage frames this as an escalation in the AI race against Apple and Chinese OEMs, with Samsung betting that branded “Galaxy AI” across its portfolio will help it differentiate even as Meta, Google and others push their own assistant ecosystems into glasses, PCs and apps follow-up comment. The numbers—hundreds of millions of AI‑capable devices—give a concrete sense of how quickly model‑infused consumer hardware is becoming the default rather than a premium tier.

ChatGPT Go is free for a year in India, expanding paid‑tier features

ChatGPT Go India offer (OpenAI): OpenAI is offering 12 months free of its ChatGPT Go tier in India, waiving the usual ₹399/month price for the first year according to the promo screenshot in the india offer. The banner advertises “Do more with smarter AI” and highlights upgraded capabilities like going deeper on harder questions, longer chats and uploads, realistic image generation, more stored context and help with planning and tasks india offer.

Adoption and ARPU angle: The fine print notes that standard pricing resumes at ₹399/month from Jan 6, 2027, with the ability to cancel anytime, which makes this a deliberate land‑grab move in a price‑sensitive but strategically important market rather than a permanent discount india offer. This regional offer lands shortly after OpenAI’s own disclosures that >5% of global ChatGPT traffic is health‑related and that daily usage now spans hundreds of millions of users health usage, suggesting that the company is experimenting with localized pricing and promos to convert heavy users in high‑growth markets into future subscribers once free periods expire.

ElevenLabs voice agents now assist nearly half of Cars24 sales calls

Voice agents at scale (ElevenLabs): ElevenLabs says its Agents product now handles over 3M minutes of customer conversations for used‑car marketplace Cars24, supporting sales in India, the UAE and Australia, as shown in the cars24 case study. The agents guide buyers, handle objections and escalate complex cases to humans, with Cars24 reporting that 45% of sales are now assisted by AI agents and calling costs have dropped by 50% cars24 case study.

Enterprise adoption signal: The case study positions this as a template for high‑volume voice workflows, with AI handling the bulk of routine interactions but integrating tightly with human teams for edge cases. ElevenLabs stresses that the same stack—TTS, ASR and orchestration—can be repurposed to other call‑heavy verticals, and ties the results to its broader claim that real‑time, low‑latency agents are ready for production in sales and support settings rather than remaining a lab demo impact teaser.

📑 Reasoning methods, verification, and visual math

Multiple fresh papers relevant to production agents: meta‑RL that induces exploration between attempts, spectral attention checks to verify math reasoning without training, universal weight subspaces across models, and a visual math pipeline.

Geometry of Reason uses spectral attention signatures to verify math reasoning without training

Geometry of Reason (Devoteam et al.): A new paper shows that treating attention matrices as graphs and extracting simple spectral metrics lets you detect whether an LLM’s math proof is logically valid without any extra training, reaching ~85–96% classification accuracy across seven models and 454 Lean proofs, as detailed in the paper explanation. The authors compute four scores (the Fiedler value, i.e. algebraic connectivity; high‑frequency energy ratio; graph‑signal smoothness; and spectral entropy) and find that a single threshold on one metric often suffices to separate valid from invalid reasoning traces, even when a formal proof checker fails for technical reasons.

• Training‑free verifier: Because the method is fully training‑free and works directly from raw attention patterns, it avoids labeled data and model‑specific graders, suggesting a cheap, generic way to flag low‑quality reasoning or hallucinated steps in math pipelines, as emphasized in the paper explanation.

• Model‑wide signal: The study reports very large effect sizes (Cohen’s d up to 3.3) across Meta Llama, Alibaba Qwen, Microsoft Phi, and Mistral models, indicating that spectral structure of attention may be a stable signature of sound reasoning rather than a quirk of any single architecture paper explanation.
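To make the spectral scores more concrete, here is a minimal sketch that treats one attention matrix as a weighted graph and computes three of the named quantities. It uses standard graph-signal-processing definitions (combinatorial Laplacian, second-smallest eigenvalue, Shannon entropy of the normalized spectrum, Rayleigh quotient), which are an assumption here and may differ from the paper's exact formulations. The high-frequency energy ratio is left out, and all function names are illustrative.

```python
import numpy as np


def attention_laplacian(attn: np.ndarray) -> np.ndarray:
    """Symmetrize attention into an undirected graph and return L = D - W."""
    w = 0.5 * (attn + attn.T)
    return np.diag(w.sum(axis=1)) - w


def spectral_scores(attn: np.ndarray) -> dict:
    """Fiedler value and spectral entropy of the attention graph."""
    eigvals = np.clip(np.linalg.eigvalsh(attention_laplacian(attn)), 0.0, None)
    fiedler = float(eigvals[1])  # second-smallest eigenvalue: algebraic connectivity
    total = eigvals.sum()
    entropy = 0.0
    if total > 0:
        p = eigvals / total
        nonzero = p[p > 0]
        entropy = float(-(nonzero * np.log(nonzero)).sum())
    return {"fiedler": fiedler, "spectral_entropy": entropy}


def smoothness(attn: np.ndarray, signal: np.ndarray) -> float:
    """Rayleigh quotient x^T L x / x^T x: small when the signal varies little across edges."""
    lap = attention_laplacian(attn)
    return float(signal @ lap @ signal / (signal @ signal))


# Two toy attention patterns: fully mixed vs. two disconnected token groups.
uniform = np.full((4, 4), 0.25)
blocks = np.kron(np.eye(2), np.full((2, 2), 0.5))
print(spectral_scores(uniform), spectral_scores(blocks))
```

In this toy case the disconnected pattern has a Fiedler value of zero while the fully mixed one does not, which is the kind of gap a single threshold could separate. Which direction corresponds to valid reasoning is not specified here.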

CogFlow pipeline and MATHCOG dataset tackle diagram‑based math via perception→internalization→reasoning

CogFlow + MATHCOG (Tsinghua and collaborators): The CogFlow framework attacks visual mathematical problem solving by splitting the job into three explicit stages—perception, knowledge internalization, and reasoning—rather than letting a multimodal LLM free‑form its way from pixels to answers, as explained in the paper summary. The authors also introduce MATHCOG, a dataset with 120k+ aligned perception–reasoning annotations, and report higher answer accuracy with fewer "reasoning drift" mistakes where later text ignores earlier diagram facts.

• Grounded perception: CogFlow first extracts shapes and layouts from diagrams, then turns them into structured geometric facts; "Synergistic Visual Rewards" score both fine‑grained details and overall layout during reinforcement learning to strengthen this perception layer, per the paper summary.

• Internalization and gating: A dedicated reward model checks that each reasoning step respects the internalized facts and learns from five common error patterns, while a visual gate forces the model to re‑parse diagrams when perception looks weak, so the final chain of thought stays grounded in what the model actually "saw", per the paper mention.

Universal Weight Subspace Hypothesis finds shared low‑rank directions across 1,100+ networks

Universal Weight Subspace (Johns Hopkins): The "Universal Weight Subspace Hypothesis" paper analyzes ~1,100 trained models (including 500 Mistral‑7B LoRAs, 500 Vision Transformers, and 50 LLaMA‑8B models) and finds that most weight variation in each layer lies in a tiny set of shared principal directions: around 16 principal components per layer capture the bulk of variance across tasks, as outlined in the paper thread. With a joint low‑rank basis fixed, new tasks can be represented by learning only a handful of coefficients, enabling compact adapters and even merging hundreds of ViT or LoRA experts into a single compressed representation.

• Implications for adapters: Experiments show that constraining LoRA‑style adapters to this shared subspace preserves performance while drastically reducing storage and training cost, and the authors demonstrate merging 500 ViTs into one joint form, according to the paper thread.

• Shared structure: The findings suggest deep networks with similar architectures systematically converge to overlapping spectral subspaces regardless of initialization, data, or domain, which has direct consequences for multi‑task learning, model reuse, and greener training regimes, per the paper thread.
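The "fixed basis plus a handful of coefficients" idea can be sketched with plain PCA over flattened layer weights. This is an illustration under assumptions, not the authors' pipeline: the function names are hypothetical, and a least‑squares projection stands in for actually training the coefficients on a new task.

```python
import numpy as np

def shared_basis(weights: np.ndarray, k: int = 16):
    """Fit a k-dimensional basis from many models' weights for one layer.

    weights: (n_models, n_params), one flattened layer per row.
    Returns the mean weight vector and k orthonormal principal directions.
    """
    mean = weights.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(weights - mean, full_matrices=False)
    return mean, vt[:k]

def encode(w: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Represent one model's layer by k coefficients in the shared basis."""
    return basis @ (w - mean)

def decode(coeffs: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Rebuild full layer weights from the k stored coefficients."""
    return mean + coeffs @ basis
```

Under this sketch, storing a new task costs k numbers per layer instead of a full weight tensor, which is where the reported storage savings would come from.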

LAMER meta‑RL teaches language agents to explore across repeated attempts

LAMER meta‑RL (multiple labs): New work proposes LAMER, a meta‑reinforcement learning framework that trains language agents to treat early attempts as exploration and later ones as exploitation, improving success by 11–19 percentage points over standard RL on Sokoban, Minesweeper, and Webshop according to the paper thread. The method runs multiple attempts on the same task during training, gives later attempts credit for information uncovered earlier via a cross‑episode discount factor, and uses in‑context reflection notes between attempts while keeping model weights fixed at test time.

• Exploration behavior: LAMER explicitly rewards cross‑attempt information gathering so agents stop repeating the same safe but uninformative actions and instead learn a "try, learn, try again" habit, as summarized in the paper thread.

• Generalization: Results show stronger performance on harder or previously unseen problems than RL‑trained baselines, suggesting this meta‑RL pattern could be plugged into production agents that face long‑horizon, retry‑friendly tasks without needing online fine‑tuning.
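The cross‑episode credit assignment described above can be illustrated with a standard discounted return taken over attempts rather than over steps. The exact formula used in LAMER is not given here, so treat the form below (and the name `cross_episode_returns`) as an assumption.

```python
def cross_episode_returns(attempt_rewards, gamma):
    """Give each attempt credit for rewards earned in later attempts.

    attempt_rewards: total reward of each attempt on the same task, in order.
    gamma: cross-episode discount in [0, 1]; 0 means attempts are scored
           independently, larger values reward early exploration more.
    Computes G_n = R_n + gamma * G_{n+1}, working backward from the last attempt.
    """
    returns = [0.0] * len(attempt_rewards)
    running = 0.0
    for i in reversed(range(len(attempt_rewards))):
        running = attempt_rewards[i] + gamma * running
        returns[i] = running
    return returns

# Two failed exploratory attempts followed by a success:
print(cross_episode_returns([0.0, 0.0, 1.0], gamma=0.5))  # [0.25, 0.5, 1.0]
```

With `gamma=0` the first two attempts would receive no credit at all, which is the situation in which an agent has no incentive to gather information for later tries.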

⚙️ Serving speed and compatibility upgrades

Runtime/serving updates beyond dev tooling: vLLM‑Omni pushes a production‑grade multimodal stack (diffusion upgrades, OpenAI‑compatible endpoints, AMD ROCm), and OpenRouter’s model rankings page halves TBT via lazy loading/code‑split.

Nemotron Speech ASR stack hits sub‑second latency for 127 streams

Nemotron Speech ASR (NVIDIA × Modal × Daily): NVIDIA’s new open‑source transcription model Nemotron Speech ASR is being positioned as a low‑latency building block for voice agents; a reference agent that combines Nemotron Speech, Nemotron 3 Nano 30B (4‑bit) and a preview of Magpie TTS achieves ~24 ms transcription finalization and under 500 ms end‑to‑end voice‑to‑voice time in demos, per the voice agent stack.

• Concurrent serving benchmark: Modal reports that a single H100 can serve 127 simultaneous WebSocket clients with sub‑second 90th‑percentile delay by tuning the streaming pipeline and batching, as summarized in the modal benchmark.

• Full OSS stack: NVIDIA released weights, training data, training code and inference code for Nemotron Speech, Nemotron 3 Nano, and Magpie, and Daily published an open reference implementation with deployment paths for Modal cloud, DGX Spark and RTX 5090, according to the tech writeup.

• Latency profile: A chart from NVIDIA shows Nemotron Speech’s p90 delay remaining below 1s for 120+ concurrent streams when configured with 160 ms, 560 ms, or 1.12 s segment sizes, while open baselines and proprietary APIs often sit well above that.

The combination of fully open artifacts and concrete serving numbers makes this stack one of the clearer examples of production‑grade, low‑latency speech agents that can be hosted outside proprietary platforms.

vLLM‑Omni v0.12.0rc1 pushes production‑grade multimodal serving

vLLM‑Omni (vLLM project): The v0.12.0rc1 release shifts focus from "can do multimodal" to "ready for production" by overhauling diffusion serving, adding OpenAI‑compatible Image and Speech endpoints, and shipping official AMD ROCm Docker/CI support—this bundles TeaCache, Cache‑DiT, Sage Attention, Ulysses sequence parallelism and Ring Attention into one optimized stack, as outlined in the release notes.

• Diffusion engine overhaul: The new diffusion path integrates TeaCache and Cache‑DiT plus Ulysses and Ring Attention so image/video models generate faster and more stably on the same hardware, according to the release notes.

• OpenAI‑style endpoints: Native Image and Speech serving now use OpenAI‑compatible routes, which lets teams point existing OpenAI SDKs or clients at vLLM‑Omni with minimal changes while gaining local control, as shown in the release notes.

• AMD ROCm support: An official ROCm Docker image and CI path means the same serving stack can run on AMD GPUs as well as NVIDIA, expanding hardware compatibility for self‑hosted workloads, per the release notes.

The release candidate status signals that interface and perf details may still move, but the direction is clear: vLLM is positioning Omni as a unified, standard‑API serving layer for text, image and speech rather than a research demo.
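As a rough illustration of what "OpenAI‑compatible" means for a client, the sketch below builds an image request in the shape of OpenAI's Images API (`POST /v1/images/generations`) aimed at a self‑hosted server. That vLLM‑Omni mirrors this exact path and payload is an assumption, and the base URL and model name are placeholders.

```python
import json
from urllib.request import Request

def build_image_request(base_url, model, prompt, size="1024x1024", api_key="EMPTY"):
    """Build (but do not send) an OpenAI-style image generation request.

    base_url: e.g. "http://localhost:8000/v1" for a local server (assumed).
    model:    whatever model name the server was launched with (placeholder).
    """
    body = {"model": model, "prompt": prompt, "size": size, "n": 1}
    return Request(
        url=f"{base_url.rstrip('/')}/images/generations",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Self-hosted servers often ignore the key, but clients still send one.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

req = build_image_request("http://localhost:8000/v1", "my-image-model", "a red bicycle")
print(req.get_method(), req.full_url)
```

Sending it is a matter of passing `req` to `urllib.request.urlopen` once a server is running; an existing OpenAI SDK client would instead only need its base URL changed.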

Binary+int8 retrieval serves 40M texts in 200ms on a CPU

Quantized retrieval (Hugging Face / sentence‑transformers): Tom Aarsen shows a search stack that answers queries over 40 million Wikipedia texts in roughly 200 ms on a single CPU server with 8 GB RAM and ~45 GB disk, using a binary index plus int8 rescoring instead of full‑precision vectors, as shown in the retrieval demo.

• Two‑tier embeddings: Documents are embedded once into fp32, then stored as both a binary index (32× smaller) and int8 vectors (4× smaller); live queries are embedded in fp32, binarized for fast approximate search, then rescored against a small int8 candidate set, as described in the quantization blog.

• Accuracy vs. cost: By loading roughly 4× more candidates via the binary index and then rescoring with int8, the system recovers about 99% of fp32 search performance, compared with ~97% when using binary only, as explained in the rescoring thread.

• Resource profile: The fp32 baseline would need ~180 GB RAM and ~180 GB of embedding storage and run 20–25× slower, while the binary+int8 setup stays within ~6 GB RAM and ~45 GB disk for embeddings, per the rescoring thread.

The demo, exposed as a public Space for hands‑on testing (demo space), illustrates how aggressive quantization plus two‑stage scoring can keep large retrieval systems cheap enough to run outside GPU clusters.
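The two‑stage flow can be sketched in a few lines of NumPy. This is a toy, brute‑force version under stated assumptions: sign‑based binarization, a single global int8 scale, and an exhaustive Hamming scan instead of a real binary index. It is not the demo's actual code.

```python
import numpy as np

def build_index(docs_fp32: np.ndarray):
    """Store each document twice: 1 bit per dimension and 1 byte per dimension."""
    binary = np.packbits(docs_fp32 > 0, axis=1)      # 32x smaller than fp32
    scale = np.abs(docs_fp32).max() / 127.0          # assumption: one global scale
    int8 = np.clip(np.round(docs_fp32 / scale), -127, 127).astype(np.int8)  # 4x smaller
    return binary, int8

def search(query_fp32, binary, int8, top_k=10, oversample=4):
    """Binary search for candidates, then int8 rescoring with the fp32 query."""
    q_bits = np.packbits(query_fp32 > 0)
    # Stage 1: Hamming distance via XOR plus bit count over the packed codes.
    hamming = np.unpackbits(binary ^ q_bits, axis=1).sum(axis=1)
    n_cand = min(top_k * oversample, len(hamming))
    cand = np.argpartition(hamming, n_cand - 1)[:n_cand]
    # Stage 2: rescore only the candidates. The scores are dot products up to
    # the constant int8 scale, so the ranking is unaffected.
    scores = int8[cand].astype(np.float32) @ query_fp32
    order = np.argsort(-scores)[:top_k]
    return cand[order], scores[order]
```

The `oversample` factor plays the role of the roughly 4× candidate expansion mentioned above: a larger value costs more int8 reads per query but gives the rescoring step more chances to recover results the binary stage ranked poorly.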

LTX‑2 gets NVFP4/FP8 checkpoints and day‑0 ComfyUI support

LTX‑2 (Lightricks × NVIDIA × ComfyUI): The LTX‑2 audio‑video foundation model now ships with NVFP4 and NVFP8 checkpoints optimized for NVIDIA GPUs and is natively supported in ComfyUI from day zero, enabling 4K text‑to‑video with synchronized audio at up to 3× the previous speed and around 60% less VRAM on RTX hardware, per the ltx2 nvfp summary.

• Local 4K generation: LTX‑2 can render native 4K video, up to 50 fps, with motion, dialog, SFX, and music produced together in a single diffusion pass, as shown in the ltx2 perf clip.

• Quantized checkpoints: NVFP4/NVFP8 variants cut memory and bandwidth requirements so the same GPUs can host longer or higher‑res clips without offloading frames to CPU, according to the ltx2 nvfp summary.

• ComfyUI integration: ComfyUI exposes LTX‑2’s controls (keyframes, canny/depth/pose video‑to‑video, upscaling) as nodes so creators can wire it into existing pipelines instead of writing new serving glue, per the ltx2 comfyui thread.

Together with RTX‑specific optimizations, this moves complex video diffusion from cloud‑only into realistic local‑serve territory for teams with strong GPUs.

NVIDIA outlines major speedups for open AI tools on RTX PCs

Open‑source tools on RTX (NVIDIA): NVIDIA’s CES blog highlights substantial serving‑time gains for popular open tools on RTX PCs. ComfyUI reportedly sees up to 3× faster diffusion workflows, while llama.cpp and Ollama gain up to ~35% faster token generation after NVFP4/FP8 quantization and GPU‑side sampling optimizations, per the nvidia tools thread.

• Token decode gains: For llama.cpp and Ollama, the blog attributes higher tokens‑per‑second to GPU sampling, better memory layouts, and low‑precision formats like NVFP4/FP8, all tuned for consumer RTX cards as detailed in the NVIDIA tools blog.

• Diffusion throughput: ComfyUI’s reworked kernels and scheduler paths, plus integration with new video models like LTX‑2, are credited with up to 3× shorter image/video generation times in node‑based graphs, according to the NVIDIA tools blog.

• Ecosystem framing: NVIDIA notes that developer activity around these PC‑class models has roughly doubled in a year, and that the number of developers using local models has grown more than tenfold, making desktop serving performance strategically important, per the nvidia tools thread.

The post positions RTX PCs as a serious serving tier for both experimentation and light production, not just as a training playground.

OpenRouter’s model rankings page halves blocking time with lazy loading

LLM Leaderboard (OpenRouter): OpenRouter re‑implemented its AI Model Rankings page with intersection‑observer‑based lazy loading and code‑splitting, reporting that total blocking time (TBT) is now about 50% lower while users still see the same charts and tables, per the leaderboard update.

• Lazy loading & splits: Only the visible portion of the leaderboard DOM now renders initially; additional rows mount as they scroll into view, and heavy components are split into separate bundles so the main thread stays more responsive, per the leaderboard update.

• Perf metrics: The Lighthouse screenshot shows First Contentful Paint at 0.4s and Largest Contentful Paint at 0.8s, with TBT cut to 340ms—down from roughly double that before the change, per the leaderboard update.

The change is confined to the frontend, but it makes a large leaderboard of multi‑provider usage stats feel more like an app than a static report and reduces client‑side overhead for people monitoring serving trends.

Tencent’s HY‑World 1.5 adds faster inference and a 5B lite model

HY‑World 1.5 (Tencent Hunyuan): Tencent’s new HY‑World 1.5 world‑model release focuses on making large‑scale 3D world generation more deployable, adding open training code, a significantly accelerated inference path and a new 5B‑parameter lite variant that fits on smaller‑VRAM GPUs, per the hy-world update.

• Open training code: The team published fully customizable training recipes so labs can build and train world models tuned to their own simulation tasks rather than treating HY‑World as a fixed black box, as described in the hy-world update.

• Inference speed & VRAM: The accelerated inference path is marketed as fast enough for real‑time or interactive worlds, and the 5B lite model is explicitly aimed at small‑VRAM GPUs where previous versions would not fit or would stall at low frame rates, per the hy-world update.

• Zero‑waitlist app: The associated online app now drops its waitlist, letting users stream generated worlds immediately instead of queueing, which underscores that the serving path is stable enough for broad access, per the hy-world update.

While HY‑World is framed as spatial intelligence rather than a generic LLM, the emphasis on lighter checkpoints and faster inference echoes similar trends in text and vision serving stacks.

Vercel AI SDK 6.0.12 adds programmable image model middleware

AI SDK 6.0.12 (Vercel): The latest AI SDK release introduces image‑model middleware that wraps providers like GPT‑Image‑1, letting developers set global defaults (such as image size) and run programmable transforms on generated images—using a simple wrapImageModel call, as shown in the ai sdk snippet.

• Default parameters: The example sets a default 1024x1024 size via transformParams, so any generateImage call without an explicit size gets normalized without touching call sites, as shown in the ai sdk snippet.

• Post‑processing hook: The middleware is specified with specificationVersion: 'v3', signalling support for standardized hooks that could resize, filter, or run guardrails on images before they leave the server, all inside AI SDK’s pipeline, per the ai sdk snippet.

This sits between app code and vendor APIs, making it easier to keep image serving consistent when swapping or mixing models without rewriting every call.