OpenAI Codex hits 1M active users – JSON-RPC App Server detailed

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

OpenAI says Codex is now over 1M active users; in parallel it published an engineering deep dive on the Codex “App Server,” a bidirectional JSON-RPC layer that standardizes the agent harness across the desktop app, CLI, VS Code extension, and partner integrations. The post emphasizes streaming progress, workspace exploration, and diff-first output as protocol primitives; it also leans on backwards compatibility so multiple frontends can evolve without breaking each other, with JetBrains and Xcode called out as supported surfaces. A new Codex Ambassadors Program signals more formal community distribution; Altman’s Apple-adjacent “👀” tag reads as a partner tease, but there’s no concrete announcement.

• Anthropic/Claude Cowork: Slack connector chatter expands to in-app read/send messaging; Slack MCP connector is claimed to be on all paid plans; permissions/admin setup details remain unclear.

• TinyLoRA: paper claims GSM8K 76%→91% on Qwen2.5-7B-Instruct while training 13 parameters via RL (GRPO); evidence is mostly slide-level so far.

• Kling 3.0 on Higgsfield: demos market locked character consistency and “5 shots in one run” editing grammar; access is packaged as exclusive “UNLIMITED” with 70% off; no standardized evals attached.

Top links today

- Codex agents architecture deep dive

- Kling 3.0 on Higgsfield product page

- OpenRouter LLM usage leaderboard

- ChatDev zero-code multi-agent platform repo

- Klein $1M open source grants program

- OpenClaw GitHub repository

- OpenClaw events and community signup

- ClawCon full stream recording

- Vincent decentralized keys for agents

- Snowflake OpenAI platform partnership details

- Slack MCP connector documentation

- VibeTensor paper on agent-built GPU runtime

Feature Spotlight

Codex crosses 1M active users and OpenAI details the agent “App Server” architecture

Codex hitting 1M active users plus new architecture details signals it’s maturing from a demo into an agent platform teams can integrate (App/CLI/IDEs via a stable JSON‑RPC App Server).

Today’s Codex storyline is high-volume and concrete: a new adoption milestone (1M active users) plus an OpenAI engineering writeup on the JSON‑RPC App Server that powers Codex across the app/CLI/IDEs and partner integrations. Excludes Claude/Cowork and OpenClaw news (covered elsewhere).

Jump to Codex crosses 1M active users and OpenAI details the agent “App Server” architecture topicsTable of Contents

🧑💻 Codex crosses 1M active users and OpenAI details the agent “App Server” architecture

Today’s Codex storyline is high-volume and concrete: a new adoption milestone (1M active users) plus an OpenAI engineering writeup on the JSON‑RPC App Server that powers Codex across the app/CLI/IDEs and partner integrations. Excludes Claude/Cowork and OpenClaw news (covered elsewhere).

Codex passes 1M active users

Codex (OpenAI): OpenAI’s Sam Altman says Codex is now over 1 million active users, a sharp step up in adoption following up on Day-one downloads (early app traction + pain points), as stated in the 1M active users claim.

For engineers, this is mostly a scaling signal: a million active users typically forces hardening around multi-client reliability (app/IDE/CLI), backwards-compatible protocol evolution, and clearer “agent harness” boundaries—areas OpenAI is also publicly documenting in parallel via the App Server writeup referenced elsewhere today in the App Server writeup.

OpenAI explains the Codex App Server that powers app, IDEs, and integrations

Codex App Server (OpenAI): OpenAI published an engineering deep dive on the Codex App Server, describing it as a client-friendly, bidirectional JSON-RPC API that underpins Codex across the app, CLI, VS Code extension, and partner integrations, as outlined in the App Server writeup and expanded in the architecture post at Architecture post.

• Why JSON-RPC here: The post frames the server as more than request/response—supporting workspace exploration, streaming progress, and emitting diffs as core interaction primitives, per the App Server writeup.

• Origin story: It started as a way to reuse the Codex CLI harness and then evolved into a protocol that mirrored the terminal TUI loop (so multiple frontends could share the same “agent loop”), as described in the architecture post at Architecture post.

• Compatibility stance: The design explicitly calls out backwards compatibility to allow protocol evolution without breaking clients, with JetBrains and Xcode named as supported integration surfaces in the architecture post at Architecture post.

Codex launches an Ambassadors Program

Codex (OpenAI): A Codex Ambassadors Program has been announced, signaling a more formal community distribution channel around Codex usage and advocacy, as shared via the Ambassadors program RT.

There aren’t operational details in the tweets (eligibility, perks, or scope aren’t specified), but it’s a concrete indicator that OpenAI is investing in repeatable enablement beyond product-led adoption—especially relevant alongside the “1M active users” milestone noted in the 1M active users claim.

Altman’s @apples_jimmy “👀” adds Apple-adjacent speculation to Codex momentum

Codex (OpenAI): In the same conversation as the 1M-users milestone, Sam Altman replied tagging @apples_jimmy with “👀”, which reads as an Apple-adjacent partner tease but contains no concrete announcement, per the Apple tease reply in the thread that begins with the 1M active users claim.

With no follow-up details, this stays in the “signal” bucket; the practical takeaway for builders is simply that Codex distribution and integrations (not just model quality) appear to be an active focus right now, consistent with the multi-surface architecture emphasis described in the App Server writeup.

🧩 Claude desktop/Cowork: Slack control surface and connector rollouts

Anthropic-related posts are mostly about making Claude more “desktop-native” and tool-connected, especially Slack via Cowork and MCP-style connectors. Excludes Codex feature coverage and OpenClaw ecosystem announcements.

Claude Cowork expands Slack control: read/send in-app, with Slack MCP on all paid plans

Claude Cowork Slack connector (Anthropic): Following up on Slack connector—initially framed around higher-tier availability—people are now circulating that Cowork can read and send Slack messages without leaving the app, as stated in the Slack in Cowork update, and that the Slack MCP connector support has expanded to all paid plans per the Slack MCP paid plans RT.

• Workflow impact: This turns Slack into a first-class control surface inside Cowork (triage → draft → send) instead of a copy/paste loop, matching the broader “desktop Claude can access local tools” positioning on the desktop app page.

What’s still missing in the tweets is the exact connector setup flow (permissions model, workspace admin requirements, and what actions beyond messaging are exposed).

Claude desktop app pitch: local-tool access, cross-app entry, and tighter privacy knobs

Claude desktop app (Anthropic): Anthropic is actively pushing the “desktop-native Claude” story via its download page, emphasizing direct access to local tools/files, quick sharing of files and screenshots, and privacy controls like incognito plus user control over what Claude remembers, as described on the desktop app page. It also positions the app as callable “from any application” (reducing context switching) and highlights cross-device continuity (“Claude remembers… across devices”), with optional voice input called out for macOS on the same desktop app page.

The surface area matters because it’s framing Claude less as a web chat and more as a tool-connected work OS; the page’s “connect to editors/calendars/messaging” automation pitch sets expectations for where Cowork-style connectors are heading, as implied by the Slack in Cowork update that’s being shared alongside the download link.

🦞 OpenClaw ecosystem: agent runners, sandboxes, and trace-driven self-improvement from ClawCon

Most OpenClaw content today is operational: how people run multiple agents (VMs/Docker), compose repeatable workflows, and mine traces for iterative improvement—surfacing as a ClawCon “state of the project” dump and related posts. Excludes Codex and Claude-specific updates.

OpenClaw Trace: mines ~20M tokens of traces to find and fix top issues

OpenClaw Trace (OpenClaw ecosystem): A ClawCon project claims a recursive improvement loop that mines about 20M tokens of agent traces and uses “RLM” to autonomously identify and fix 4–7 top recurring issues, according to the Trace mining numbers. The recap also claims the system can “write research papers about its own bugs then fixes them,” per the same Trace mining numbers; treat that as an anecdotal description until there’s a public artifact (paper, repo, or trace report).

Bloom: a macOS VM sandbox to run ClawBot locally (cloud version teased)

Bloom (Kua/OpenClaw ecosystem): A ClawCon demo describes Bloom as a macOS VM sandbox that runs ClawBot locally—positioned as a way to avoid tying up physical Mac Minis and control compute spend—according to the Bloom VM sandbox note. A cloud-hosted version is claimed to be “dropping in the next few weeks,” per the same Bloom VM sandbox note.

CUA Bot: “multiplayer” computer-use agents in Docker to avoid OS blocking

CUA Bot (OpenClaw ecosystem): A ClawCon project pitches a Docker-based approach for running multiple “computer use” agents in parallel—so separate ClawBot instances don’t block the host OS—according to the Docker multi-agent demo note. The same recap says the team deferred an HN launch to announce it at ClawCon, per the Docker multi-agent demo note.

Lobster Pot: trace-based sandboxing to reduce agent “bad behavior”

Lobster Pot (OpenClaw ecosystem): A ClawCon project pitches “agent sandboxing security” by analyzing agent traces to improve prompts and prevent unwanted actions—illustrated with a joke example of agents signing up for courses “without permission”—as described in the Sandboxing via traces note. The core idea is operational: treat traces as a debugging and policy surface, not just logs.

ClawCon draws overflow crowds, signaling OpenClaw’s fast-growing builder scene

ClawCon (OpenClaw ecosystem): The first in-person ClawCon in SF appears to have pulled a real crowd—overflow floors and long lines—based on field reports from the Meetup line clip and the ClawCon recap thread in ClawCon recap lead. It’s a practical signal that “agent runner” tooling is turning into a community with demos, norms, and a shared stack, not just scattered repos.

Clawdonator: a self-deploying “maintainer bot” hive mind for PRs + translation

Clawdonator (OpenClaw ecosystem): A ClawCon demo describes a Discord “hive mind” bot that self-deploys, self-modifies, handles “bad PRs,” and does real-time Chinese translation, as summarized in the Maintainer bot description. It’s an explicit push toward delegated repo operations—triage, review, and translation—as first-class agent workloads.

Lobster: YAML workflows pitched as “Bash for OpenClaw” to save tokens

Lobster (OpenClaw ecosystem): A ClawCon demo frames YAML workflows as a repeatability layer—“Bash for OpenClaw”—meant to avoid reteaching the same setup daily and cut token burn by composing a workflow once and re-running it, as described in the YAML workflows note. This fits the broader shift toward treating agent runs as audited, replayable programs rather than one-off chats.

OpenShell protocol: controlling physical robots via chat (fighting-robot demo)

OpenShell protocol (Playpen/UFP/OpenClaw ecosystem): A ClawCon segment claims an “OpenShell” protocol that lets ClawBot control physical robots via chat, including a demo controlling fighting robots, as recapped in the Physical robots demo note. The same thread mentions an “Ultimate Fighting Pit” league concept for autonomous robot battles, per the Physical robots demo note.

Early labor loop: posts claim humans are getting paid by OpenClaw agents for tasks

OpenClaw (market signal): A small but notable claim is that OpenClaw agents are already paying humans to do real-world tasks, implying early “agent-to-human” delegation loops rather than full automation, as stated in the Humans paid by agents note. There’s no further detail on payments, governance, or dispute handling in the tweets, so treat it as a directional anecdote rather than a measured marketplace metric.

OpenClaw “opinions”: users report persistent stances emerging from accumulated research

OpenClaw (behavior signal): One user reports OpenClaw developing persistent “opinions” (a tone + preferred explanations) based on accumulated searches—claiming it inferred they “weren’t feeling good” without being told—per the Opinions from searches anecdote. The screenshot in Opinions from searches anecdote shows how this can manifest as consistent voice and framing, which looks like a memory-style effect even if it’s driven by context aggregation rather than long-term model memory.

🧠 Practical build patterns: codebase structure as the limiter, plus prompt/UX hygiene

Workflow discourse is less about new tools and more about what actually makes agents effective: strong module boundaries, tests/types, and interaction ergonomics (e.g., verbosity control). Excludes product-launch narratives (Codex/Claude/OpenClaw) covered in other sections.

AI struggles more with bad codebases than big ones

Codebase quality thesis: A practical framing is that “AI is bad in bad codebases, not big codebases,” because an agent can’t “accrue understanding” by suffering through incidental complexity the way a human does, as argued in the codebase quality note. This pushes attention back onto structure and feedback loops—interfaces, grouping related code, and fast correctness signals.

• Feedback loops beat vibes: Tight loops like types and tests act as externalized memory for both humans and agents, so the system tells you quickly when a change is wrong, per the codebase quality note.

• Corp papercuts matter: A related take is that “model-weight-first” orgs may outperform because they reduce style/policy friction that burns context and time, as discussed via the agreement reply linking the essay on papercuts.

Design codebases so a “new dev (or AI)” can contribute fast

Onboarding-with-agents framing: Treat an AI agent like a brand-new teammate: it ramps fastest when related code is colocated and “deep modules” expose simple, stable interfaces, as laid out in the onboarding framing. The point is speed-to-first-correct-change, not raw generation ability.

Verbosity is still a top failure mode in day-to-day assistant use

Prompt/UX hygiene: In a classroom-style critique exercise, the #1 complaint about ChatGPT/Claude outputs was “too wordy,” according to the classroom eval note. That’s a reminder that response length isn’t cosmetic—verbosity directly affects review time, copy/paste friction, and whether agents fit into fast dev loops.

Slash commands keep agent workflows repeatable

Slash-command UX: The micro-pattern here is that a slash command is a friendlier—and more repeatable—interface than free-form chat when you want consistent behavior, as captured by the slash command quip and echoed in the follow-up reaction. This reads like lightweight “API surface area” for humans: discoverable verbs, fewer prompt variations, and easier team conventions.

🧰 Skills as the new packaging unit: from llms.txt-style docs to installable capability bundles

A small but clear packaging thread: teams are shifting from ‘docs for agents’ toward installable Skills (e.g., via npx) as reusable capability bundles, alongside marketplace hardening efforts. Excludes MCP/protocol plumbing and major assistant releases.

OpenClaw plans VirusTotal scanning for all uploaded skills in Claw Hub

Claw Hub (OpenClaw): ClawCon notes a planned VirusTotal integration to scan “all skills” uploaded to the hub, positioning Skills as a supply-chain surface that needs automated malware screening, according to the ClawCon announcement roundup in ClawCon announcements.

This lands alongside broader marketplace hardening signals (including “hired our first security person”), but the concrete change engineers will feel is the default expectation that Skills are scanned artifacts rather than copy-pasted instructions, as stated in ClawCon announcements.

Skills replace llms.txt-style docs as installable capability bundles

Skills packaging shift: A small but telling workflow change is showing up: instead of telling users to copy/paste prompts from an llms.txt-like “docs for agents” page, projects are pointing to an install step that adds a named Skill to your agent environment—see the “from llms.txt → skills” diff in Skills install diff.

The concrete example shown is npx skills add https://github.com/letta-ai/skills --skill letta-api-client, which treats capabilities as versioned, reusable artifacts rather than documentation snippets, as illustrated in Skills install diff. The vibe shift is also captured by the “remember when llms.txt was the cool thing” throwback in llms.txt nostalgia.

OpenClaw reiterates it will stay MIT open source “forever”

OpenClaw (Governance): Project leadership reiterated that OpenClaw will remain MIT licensed “forever”, framing it as protecting the ecosystem from being captured (“too precious to let one company eat it up”), as quoted in the ClawCon recap thread in ClawCon announcements.

For teams betting on installable Skills as reusable capability bundles, the practical implication is portability: the packaging surface (and community distribution) is being explicitly positioned as something that should outlive any single vendor’s product decisions, per ClawCon announcements.

Klein announces a $1M open-source grants program for AI tools

Klein (Funding): A $1M open-source grants program was announced, explicitly targeting builders of open-source AI tools, with applications “open now,” as shared in Grants announcement.

This is a direct ecosystem incentive for the “skills as installable bundles” direction: more shared capability packs, installers, and guardrails (like scanning/review flows) can be funded as first-class OSS deliverables, per the framing in Grants announcement.

🎬 Kling 3.0 video generation: character consistency, multi-shot edits, and “AI director” workflows

Generative media is a major share of today’s tweets, dominated by Kling 3.0 examples on Higgsfield: locked character consistency, complex action, macro detail, and multi-shot videos generated in one run. Excludes coding-agent tooling updates.

Kling 3.0 showcases multi-shot generation with edits and transitions in one pass

Kling 3.0 (Higgsfield): A racing “pit stop” example claims Kling can generate a full cinematic sequence—five distinct shots, multiple angles, and smooth transitions—“all in one run,” as described in the multi-shot run claim.

The prompt is written like a mini shot-list with timing blocks (SHOT 1–5), camera constraints (lens, mount rigidity), and explicit edit instructions (hard cut, rapid montage); the point is that the model is being marketed as handling editing grammar (cuts/coverage) in addition to rendering frames, per the multi-shot run claim.

Kling 3.0 shows multi-character action with claimed locked consistency

Kling 3.0 (Higgsfield): A new demo markets locked character consistency—multiple characters interacting with realistic physics and choreography—positioning this as the difference between “shots that drift” and shots you can actually cut together, as shown in the fight scene prompt and claim.

The prompt explicitly describes a staged sequence (block → grab → knee strike → shove) and a tracking camera move, which is a good stress test for identity + motion continuity; Higgsfield frames the cost narrative as “what cost thousands now costs one prompt,” according to the same fight scene prompt and claim.

Kling 3.0 macro close-ups emphasize structure retention in hands/materials

Kling 3.0 (Higgsfield): Higgsfield is pushing an “AI director” narrative focused on extreme macro continuity—hands, eyes, objects, and material textures holding together instead of turning to mush—demonstrated by an ultra-close shot of marker ink on a plaster cast in the macro demo and prompt.

The prompt’s key detail is the continuous push-in until the frame is dominated by the marker tip and porous surface; the clip matches that intent and is meant to signal improved fine-detail stability for product/VFX-style shots, per the macro demo and prompt.

Higgsfield pairs Kling 3.0 launch messaging with unlimited access and a discount

Higgsfield x Kling 3.0: Multiple posts emphasize distribution levers—Kling 3.0 being “exclusively on Higgsfield,” “UNLIMITED only on Higgsfield,” and offered with “70% OFF,” as stated in the unlimited access pitch and echoed in the exclusive + discount post.

This reads less like a pure model spec drop and more like a packaging move (access tiering + promo pricing) wrapped around capability demos, with the same discount language repeated in the character consistency promo.

Kling 3.0 triggers “Hollywood is cooked” discourse as example reels spread

Creator sentiment (Kling 3.0): A viral-style framing—“Hollywood is cooked” alongside “10 wild examples”—is being amplified via reposts, signaling a familiar cycle where capability reels drive perception faster than measured evals, as seen in the example reel repost.

The underlying evidence circulating today is still primarily demo-driven (fight choreography, macro detail, multi-shot editing) rather than standardized metrics, anchored by the concrete Kling 3.0 clips in the action scene demo, macro detail demo , and multi-shot sequence demo.

🧮 Ultra-low parameter adaptation: TinyLoRA + RL learns reasoning with ~dozens of params

Today’s training-method content centers on a new finetuning approach (TinyLoRA) arguing meaningful reasoning gains can come from updating tiny parameter counts—especially when paired with RL. Excludes general model-release chatter (none prominent in this sample).

TinyLoRA + RL shows “reasoning” gains with ~dozens of trainable params

TinyLoRA (Learning to Reason in 13 Parameters): A new finetuning method argues you can get meaningful reasoning improvements by updating extremely few parameters when the adaptation is paired with RL; the headline claim is a Qwen2.5-7B-Instruct jump from 76% → 91% on GSM8K while training 13 parameters, as shown in the paper slide claim.

• Update-size framing: The paper positions this against the current “smallest common” finetuning setup—LoRA rank=1 still being “millions of parameters”—calling out that we may be overpaying in update size for some behaviors, per the paper slide claim.

• Boundary condition (RL vs SFT): On the same slide deck, the authors note SFT performs best with much larger update sizes (≈ ≥1M params), while the tiny-update regime is highlighted under an RL setup (labeled GRPO) in the paper slide claim.

• Historical anchor: The motivation is explicitly linked to “Playing Atari with Six Neurons” (2018)—the idea that RL can learn compact “programs”—as described in the Atari reference note.

The evidence here is a slide/tweet-level summary (no full eval artifact in the tweets), but it squarely targets a practical question for teams: when do you need big finetunes vs tiny adapters plus RL?

🧱 Agent platforms & self-evolution discourse: zero-code orchestration and ‘agent self-evolution’ events

Framework-level items today include an open-source multi-agent platform positioning (‘zero-code’ orchestration) and an event-format teardown of agent self-evolution and evaluation challenges. Excludes day-to-day agent runner ops (OpenClaw) covered elsewhere.

ChatDev reaches 30k stars and pitches “DevAll” zero-code multi-agent orchestration

ChatDev 2.0 (OpenBMB): The ChatDev project crossed 30k GitHub stars and is now being positioned as “ChatDev 2.0 (DevAll),” shifting the framing from “coding assistant” to a configuration-driven, zero-code multi-agent orchestration platform, as described in the milestone post 30k stars milestone.

The pitch is broad—“Develop Everything” workflows ranging from 3D generation to deep research—without technical specifics in the tweet about runtimes, connectors, or evaluation harnesses; what’s concretely new here is the product category positioning (orchestration via simple config) attached to a traction signal (30k stars), as stated in 30k stars milestone.

ModelScope and ZhihuFrontier host an “Agent Self‑Evolution” AMA on eval limits

Agent self-evolution (ModelScope × ZhihuFrontier): An “AI AMA · Agent Self‑Evolution” event was announced with “six top-conference first authors,” explicitly framing the discussion around how agent self-evolution works, why it’s hard to evaluate, and where engineering reality draws the line, per the event blurb in AMA announcement.

The tweets don’t include panelist names, agenda details, or concrete proposed metrics—so the actionable content for engineers will depend on the linked recording/materials rather than the announcement itself.

DSPy walkthrough shows how to build custom LLM memory systems from scratch

DSPy (framework pattern): A shared write-up walks through implementing custom LLM memory systems from scratch using DSPy, including code, as signaled by the DSPyOSS resharing in DSPy memory article.

This is part of a broader shift toward treating “memory” as explicit plumbing (storage + retrieval + serialization + prompts/programs) you can swap and test—rather than relying on assistant-native “remembering” behaviors.

🔐 Agent security & privacy: skill scanning, key custody, and ‘AI knows too much’ ad backlash

Security today clusters around agent ecosystems: hardening skill supply chains (scanning), keeping secrets away from agents (key custody), and growing concern about AI systems leveraging deeply personal user data. Excludes general product adoption (Codex) and media-gen demos (Kling).

OpenClaw plans VirusTotal scanning for all skills in Claw Hub

Claw Hub (OpenClaw): OpenClaw says it’s integrating VirusTotal to scan all skills uploaded to Claw Hub, positioning it as a default control against malicious or compromised skill packages, as listed in the ClawCon “big announcements” recap in Skill scanning plan.

In practice, this is a supply-chain response: when agents can run code, fetch dependencies, and touch credentials, “skill install” starts to resemble plugin ecosystems that need automated scanning and policy gates.

OpenClaw hires its first dedicated security person

OpenClaw (OpenClaw): The project says it has hired its first security person, a concrete maturity step as agent harnesses start to look like software supply chains rather than single-user dev tools, according to the ClawCon recap in ClawCon announcements. This also got amplified as part of the “State of the Claw” remarks, as captured in State of the Claw clip.

This matters because the OpenClaw ecosystem is explicitly built around installable “skills” and third-party integrations, where review, incident response, and disclosure workflows become table-stakes.

Vincent pitches decentralized key custody so agents never hold private keys

Vincent (Lit Protocol): The Vincent project pitches a “no raw keys to agents” approach—agents request actions while keys stay in a separate custody layer; controls include spending limits, approval overrides, and audit logs, per the product framing in Key custody summary and the public site in Product overview.

This maps to a common agent failure mode: once an agent sees a private key (or API token), it can leak it through logs, tool outputs, or unintended network calls. Vincent’s design goal is to make that structurally harder.

Lobster Pot proposes trace-based sandboxing to reduce agent misbehavior

Lobster Pot (OpenClaw ecosystem): A ClawCon project frames agent security as an observability problem—mining agent traces to detect patterns, tighten prompts, and prevent repeat “bad behaviors,” including agents signing up for services “without permission,” as described in Sandboxing notes.

The implicit pattern: treat the agent loop like production software—collect traces, cluster failure modes, and turn recurring incidents into deterministic guardrails (sandboxing policies, allowlists, and tighter tool boundaries).

Privacy backlash grows around ads showing AI using vulnerable disclosures

AI privacy backlash: A circulating critique argues some AI ads are persuasive precisely because they depict users sharing highly personal information in vulnerable moments—then imply the AI can use that knowledge against them; the claim is that AI companies now “know far more about us than” prior platforms, as stated in Ad privacy critique.

For agent builders, this lands as a product risk signal: “memory,” cross-session personalization, and desktop/tool integrations can read as surveillance unless controls (visibility, opt-outs, scoped retention, and clear data boundaries) are explicit.

📚 Engineer-side tooling & references: AI systems performance engineering content drops

Developer-facing artifacts today are mostly reference material and tooling-adjacent posts: a large AI systems performance engineering book/repo and writeups about how corporate ‘papercuts’ interact with LLM-first development. Excludes assistant product updates.

A 1000-page AI systems perf reference pushes “goodput-first” optimization

AI Systems Performance Engineering (reference): A large, low-level performance engineering book/repo is getting shared as a “single systems book you need,” with emphasis on goodput-driven, profile-first work rather than chasing utilization, as highlighted in the systems book shoutout.

The visible checklist-style guidance in the screenshots calls out practical levers many teams end up rediscovering the hard way—use Nsight + PyTorch profiler to find real stalls, optimize memory/bandwidth and data movement, and lean on the PyTorch compiler stack + Triton for kernel wins; it also names modern inference stacks like vLLM/SGLang, TensorRT-LLM, and NVIDIA Dynamo plus patterns like disaggregated prefill/decode and KV-cache movement, as shown in the

and the systems book shoutout.

“Model-weight-first” companies vs corporate papercuts in LLM-era dev

“Model-weight-first” development (org design): Geoffrey Huntley argues that teams can cut “context-engineering overhead” by aligning development practices with model preferences rather than enforcing endless corporate conventions; the claim is that many day-to-day standards become “papercuts” when an LLM is a first-class contributor, as discussed in the essay text and endorsed in the agreeing reply.

This is less a tooling release than an org-level warning: if your process forces the agent to fight style and ceremony, you spend scarce context window and review cycles on conformity instead of intent, per the essay text.

ACE-Step-v1.5 Gradio app gets a 1-click Mac (Apple Silicon) launcher

ACE-Step-v1.5 (tooling convenience): A 1-click launcher is being circulated to run the “official” ACE-Step-v1.5 Gradio app locally on Apple Silicon Macs, lowering the setup friction for trying the model outside hosted demos, as stated in the 1-click launcher note.

There aren’t technical details in the tweet (packaging method, sandboxing, model weights location), but the practical change is distribution: fewer steps to get a local Gradio UI running, per the 1-click launcher note.

📈 Market signals: open-weights economics, ‘software to zero’ narratives, and usage-based leaderboards

Business/strategy posts today focus on where value accrues as agents commoditize app logic: open-weights monetization skepticism, ‘SaaS is dead/software to zero’ takes, and real-usage leaderboards as adoption proof. Excludes infra earnings and non-AI macro news.

“SaaS is dead” gets a concrete example: a chat-native personal CRM built in 30 minutes

Agent-native product shape: Matthew Berman re-asserts the “SaaS is dead” thesis as a stack shift toward “agents and databases,” anchoring it in a concrete build: a personal CRM created in ~30 minutes, with “no interface” beyond Telegram + natural language, as described in the SaaS is dead thread.

The screenshot of the agent’s work log shows an end-to-end “subagent” plan (spec, schema, command handlers, mining, tests) and a stated 30–60 minute completion window, matching the “chat-native app” claim in the CRM build progress.

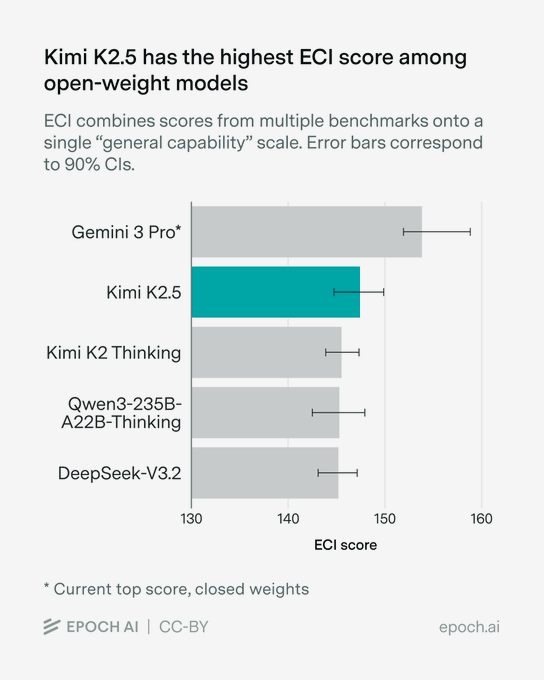

Kimi K2.5 claims #1 by tokens on OpenRouter’s LLM usage leaderboard

Kimi K2.5 (MoonshotAI): Moonshot says Kimi K2.5 is now the most-used model on OpenRouter’s usage-based leaderboard, framing “real usage data” as the signal that “developers are voting with their tokens,” as stated in the Usage rank claim.

The screenshot shared alongside the claim shows Kimi K2.5 at 117B tokens and +94% usage growth over the measured window, edging out Claude Sonnet 4.5 and Gemini 3 Flash Preview in the same table, as shown in the Usage rank claim.

“Software is going to zero” gets repeated as agents eat application logic

Software-to-zero narrative: The “apps collapse into agents” thesis shows up again, with the blunt claim “SOFTWARE IS GOING TO ZERO” in the Software is going to zero.

The only added context is a hint about organizational momentum—“esp with Nader on board,” as written in the follow-up post at Nader mention—but there’s no supporting metric or concrete product change attached in today’s tweets.

Open-weights monetization looks harder as training and serving costs rise

Open weights economics: Ethan Mollick argues that as inference and training costs climb, the business model for open-weights companies remains unclear—especially if they can’t rely on “services” or “ancillary products” the way traditional open source companies can, per the Open weights business model take.

The point lands as a go-to investor/strategy critique: “open weights” may win mindshare and capability, but value capture is still unresolved in the framing of the Open weights business model take discussion.

China open-weights: still “7–9 months behind,” but hitting “o3 level” matters

China open-weights competitiveness: A single post bundles two signals: Chinese open-weight models are described as staying “7–9 months behind”, while also calling “o3 level” performance “an achievement,” according to the China gap framing.

This is less a benchmark claim than a market-positioning lens: progress is acknowledged, but the implied competitive moat for frontier closed models remains in the China gap framing framing.

⚖️ Tool competition & access narratives: free tiers, leaks, and positioning wars

Ecosystem discourse today is about competitive positioning rather than features: free-tier constraints, rumor discipline around upcoming releases, and vendor-to-vendor sniping. Excludes the Codex adoption/architecture feature and concrete Claude/OpenClaw updates.

Free-tier positioning war: “Claude Free is unusually limited” vs ChatGPT scale claims

OpenAI vs Anthropic access narrative: A competitive framing is circulating that Anthropic’s Claude free plan is “one of the most limited chatbots out there,” despite “~20M monthly users,” while ChatGPT is framed as operating at “~800M weekly active users” with “~95% on the free tier,” per a post summarizing “Sam taking shots at Anthropic” in free tier comparison. The key engineering-adjacent implication is go-to-market leverage: free-tier generosity is being treated as a strategic differentiator (distribution + data + habit), not a cost center.

Treat the numbers as unverified in-thread—no primary analytics source is linked in free tier comparison—but the narrative itself is becoming part of tool-selection discourse.

Rumor hygiene for Sonnet 5: “assume leaks are noise” unless employees hint

Anthropic release speculation: A practical counter-signal is spreading around Sonnet 5 chatter: it “will release at some point” and “probably sooner than people think,” but builders are warned not to treat random online “leaks” as facts unless there are “real hints from Anthropic employees,” as argued in rumor discipline note. The point is less about model capability and more about expectation management—teams making roadmap bets off rumor cycles get whiplash when timelines slip.

This is a notable shift from pure hype-posting to norms about what counts as credible pre-release information, at least in public dev communities.

Meme-as-positioning: “Anthropic made Sama write an essay” keeps vendor sniping in view

Vendor-to-vendor narrative sparring: A low-information but high-visibility meme—“Anthropic made Sama write an essay”—is getting traction as a shorthand for cross-lab sniping and attention capture, as shown in meme post.

This kind of meme compression matters because it propagates competitive framing faster than product diffs; it tends to pull discourse toward “who won the week” rather than workflow details. The post itself doesn’t include the underlying essay/primary claim, so it’s best read as sentiment amplification, not evidence.