Kimi K2 Thinking goes live – OpenRouter lists 556 tps, 0.28 s

Executive Summary

MoonshotAI moved Kimi K2 Thinking to general availability across web, app, and API, so you can route real traffic instead of running limited pilots. Following last week’s open‑weights drop, the practical news is speed and control: OpenRouter’s provider panel for Kimi K2 0905 lists Groq at ~556 tps with ~0.28 s latency, plus a ~262K context window and ~16.4K max output for long, tool‑heavy jobs.

Early field signal is promising: one team reports K2 matched GPT‑5 on a deep customer‑support agent, with comparable orchestration and tool use. The playbook is straightforward—cap “thinking tokens” to keep costs in check, log tool‑call chains and retries, and A/B against your current route on 50–100 real tickets before a wide cutover.

If you care about time‑to‑first‑token (TTFT), the community push is to route K2 via OpenRouter to Groq where available; the throughput and TTFT combo should make human‑in‑the‑loop workflows feel snappier. Verify provider caps against your longest prompts, keep a fallback, and monitor tps/latency hourly. Nothing kills a promising agent faster than an unplanned timeout (ask me how I know).

Feature Spotlight

Feature: Kimi K2 Thinking goes live (web, app, API)

Moonshot’s Kimi K2 Thinking is now live on kimi.com, the Kimi app, and API; early agent tests report GPT‑5‑class orchestration/reasoning, with the community pushing for high‑throughput hosting (e.g., Groq) for agentic workloads.

Cross‑account coverage of Moonshot’s Kimi K2 Thinking release and early practitioner results. Today’s sample centers on the public rollout and developer reactions; excludes leaderboard/eval angles, which are covered separately.

🚀 Feature: Kimi K2 Thinking goes live (web, app, API)

Kimi K2 Thinking launches on web, app, and API

Moonshot made K2 Thinking generally available today on kimi.com, the Kimi app, and via API, with explicit support for more “thinking tokens” and additional tool‑call steps for longer, multi‑step work launch post, Kimi page. This is a full product rollout, not a closed preview. That matters if you’re wiring up agents or long‑running workflows.

For teams following up on INT4 QAT (K2’s quantization and latency notes), the headline here is access. You can now route real traffic and tune cost/latency by adjusting tool‑call depth and the model’s internal thinking budget. Start small. Measure.

• Route long, tool‑heavy tasks to K2; cap its thinking tokens to control spend.

• Log tool‑call chains and retry policy; compare against your current default.

• A/B K2 vs your baseline on 3–5 real workflows before broad rollout.
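One way to act on the first two bullets is to do the accounting on your side of the API. The sketch below is a minimal, client-side budget tracker; `RunBudget`, `BudgetExceeded`, and the default caps are hypothetical names and numbers, not anything from Moonshot's API, and how you obtain per-step thinking-token counts depends on your provider's usage fields.

```python
from dataclasses import dataclass, field


class BudgetExceeded(RuntimeError):
    """Raised when a run passes its thinking-token or tool-step cap."""


@dataclass
class RunBudget:
    # Illustrative caps only; tune them against your own cost data.
    max_thinking_tokens: int = 8_000
    max_tool_steps: int = 20
    thinking_tokens: int = 0
    tool_chain: list = field(default_factory=list)

    def add_thinking(self, tokens: int) -> None:
        """Accumulate thinking tokens reported for one model step."""
        self.thinking_tokens += tokens
        if self.thinking_tokens > self.max_thinking_tokens:
            raise BudgetExceeded(
                f"thinking tokens {self.thinking_tokens} > {self.max_thinking_tokens}"
            )

    def log_tool_call(self, name: str, ok: bool, attempt: int = 1) -> None:
        """Append one tool call (including retries) to the chain log."""
        self.tool_chain.append({"tool": name, "ok": ok, "attempt": attempt})
        if len(self.tool_chain) > self.max_tool_steps:
            raise BudgetExceeded(
                f"tool steps {len(self.tool_chain)} > {self.max_tool_steps}"
            )

    def retries(self) -> int:
        """Count calls that were second or later attempts."""
        return sum(1 for call in self.tool_chain if call["attempt"] > 1)
```

Catching `BudgetExceeded` in your agent loop gives you one place to stop the run, emit the logged chain, and compare it with the same task on your current default.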

Early evals put K2 Thinking on par with GPT‑5 for agent orchestration

A practitioner reports K2 Thinking matched GPT‑5 on a deep customer‑support agent, citing parity on orchestration and reasoning with strong tool use agent eval. It’s one data point, but it points to K2 being deployable for complex, multi‑tool flows where chain control and retries matter.

If you’re maintaining an agent stack, this suggests a viable alternative when you need long tool sequences without giving up planning quality. Validate it on your own tickets, not synthetic prompts.

• Benchmark side‑by‑side: same traces, same tools, 50–100 real tickets.

• Stress test escalation paths and fallback tools; compare failure modes.

• Track token budget vs resolution rate; watch for hidden latency spikes.
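A side-by-side run is easier to read if both routes are reduced to the same few numbers. Here is a minimal sketch, assuming you already have per-ticket results from each route; `TicketResult` and `summarize` are hypothetical names, and the p95 uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class TicketResult:
    ticket_id: str
    resolved: bool
    tokens: int
    latency_s: float


def summarize(results):
    """Reduce one route's ticket results to resolution rate, token use, and p95 latency."""
    n = len(results)
    latencies = sorted(r.latency_s for r in results)
    p95_index = max(0, math.ceil(0.95 * n) - 1)  # nearest-rank percentile
    return {
        "resolution_rate": sum(r.resolved for r in results) / n,
        "mean_tokens": mean(r.tokens for r in results),
        "p95_latency_s": latencies[p95_index],
    }
```

Run `summarize` once per route over the same tickets. A route that wins on resolution rate but shows a much higher p95 latency is the "hidden latency spike" case worth digging into.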

Community push for Groq hosting K2; OpenRouter shows ~556 tps, 0.28 s

Builders are asking for K2 Thinking on Groq at “500 tokens/s,” pointing to OpenRouter’s provider panel for Kimi K2 0905 that lists Groq at ~556.1 tps throughput with ~0.28 s latency and healthy uptime provider metrics. If Groq becomes the default backend for K2 traffic, you can expect materially lower time‑to‑first‑token for interactive tools.

This is about serving readiness. If your workflows depend on tight human‑in‑the‑loop cycles, check provider routing and caps (context ~262K, max output ~16.4K noted in the panel).

• Route K2 via OpenRouter to Groq for latency‑sensitive paths; keep a fallback.

• Verify context/output limits on your longest prompts before switching.

• Monitor tps/latency hourly; auto‑failover when throughput dips.
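The monitor-and-failover bullet can be sketched as a rolling throughput check. This is a minimal illustration, not OpenRouter's routing logic: `ThroughputFailover`, the route labels, and the 200 tps floor are all assumptions you would replace with your own.

```python
from collections import deque


class ThroughputFailover:
    """Pick a route from a rolling average of observed tokens per second."""

    def __init__(self, primary, fallback, min_tps=200.0, window=5):
        self.primary = primary
        self.fallback = fallback
        self.min_tps = min_tps  # illustrative floor, not a provider figure
        self.samples = deque(maxlen=window)

    def record(self, tokens: int, seconds: float) -> None:
        """Store the throughput of one completed request."""
        self.samples.append(tokens / seconds)

    def current_route(self) -> str:
        # Stay on the primary until the window is full, to avoid flapping
        # on a single slow request.
        if len(self.samples) < self.samples.maxlen:
            return self.primary
        average = sum(self.samples) / len(self.samples)
        return self.primary if average >= self.min_tps else self.fallback
```

Because the window slides, the router returns to the primary on its own once recent requests are fast again.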

💻 Agent coding stacks: Composer, LangChain, LlamaIndex, Evalite

Busy day for practical agent/coding workflows: Cursor’s Composer and multi‑agent UI, LangChain guides, LlamaIndex finance agents, and a new eval runner. Excludes K2 launch (covered as feature).

Cursor 2.0 ships Composer‑1 with <30s turns, ~4× faster and a multi‑agent UI

Cursor rolled out Composer‑1 and a 2.0 interface that runs multiple agents in parallel using git worktrees, with <30‑second turns and claims of ~4× faster output than peers. It also adds a built‑in browser for testing, positioning the IDE as an orchestrator for planning + execution loops launch thread.

This matters for teams trying to keep engineers in flow while agents do context gathering, diffing, and test loops. Sub‑30s cycles change how you route tasks between local agent flows and slower, cloud‑heavy runs.

Devs ask OpenAI to replace 256‑line tool output pruning with token‑based limits

A new GitHub issue details how Codex’s head+tail truncation at 256 lines (≈10 KiB) forces extra tool calls, loses mid‑log context, and slows agents—requesting token‑based caps similar to Claude Code’s 25k limit github issue, analysis thread.

A token‑aware policy would preserve critical compiler/test logs and reduce retries, directly improving multi‑tool agent loops.

Evalite v1 beta lands with built‑in scorers, pluggable storage, and a watch UI

Evalite cut its first v1 beta for TypeScript AI app evals: install with pnpm, score with a library of built‑ins, stream results in a watch mode, and save outputs to any store via a custom adapter release notes, quickstart.

This gives agent/app teams a lightweight loop to measure quality regressions by PR and catch prompt/tooling bugs before rollout.

LangChain publishes a production Streamlit travel agent guide with tools and deployment steps

LangChain shared a step‑by‑step build of a Streamlit travel assistant that chains agents with weather, search, and video, covering API setup (OpenAI, Serper, OpenWeather), local runs, and deployment options—useful as a template for productionizing small agent apps guide post.

The guide is clear on project structure, env keys, and where to put evaluation/monitoring hooks, so teams can test tool orchestration without building scaffolding from scratch.

LlamaIndex shows a multi‑step SEC filing agent that classifies and extracts

LlamaIndex demoed an agentic workflow that ingests an SEC filing (10‑K/10‑Q/8‑K), classifies the form, then routes to the correct schema for extraction using LlamaExtract, all orchestrated via LlamaAgents/LlamaCloud demo run. This streamlines a common finance data task.

It’s a useful counterpart to the company’s inbox → invoice/expense pipeline—quickly turning unstructured docs into structured rows invoice flow.

Codex shell tool fix kills entire process group on timeout to unstick agents

A merged PR launches shell tool processes in their own group and SIGKILLs the group on timeout/Ctrl‑C, preventing grandchildren from holding the PTY open. Default timeout remains 1000 ms pull request.

This is a pragmatic reliability win for unattended runs where commands like dev servers can wedge the session.

Graphite stacked diffs emerge as the AI code review workflow

A long demo shows how to tame large AI‑generated changes by stacking small, dependent PRs (gt submit, gt modify, gt continue), letting humans review narrow diffs while agents keep pushing on the rest workflow demo, video page.

It fits the reality of AI coding: high throughput diffs need guardrails. Stacks reduce rebase hell and make rubber‑stamping less risky.

Teams adopt Composer‑1 as the default, with GPT‑5 fallback for tough cases

Practitioners report a pragmatic stack: run composer‑1 for most tasks and fall back to GPT‑5 for tricky instructions or creative edge cases usage pattern. Others split roles entirely—composer‑1 for live local coding and GPT‑5‑Codex for heavier cloud runs workflow tip.

This pattern helps balance latency, cost, and reliability without over‑engineering agent routing.

Factory proposes incremental context compression to keep long agent sessions fast

Instead of re‑summarizing full histories on every request, Factory’s approach maintains persistent summaries updated at anchor points, with thresholds to decide when to compress—cutting cost/latency while keeping salient past state blog post.

For multi‑hour assist sessions, this can stabilize quality and avoid hitting context limits while preserving key tool outputs and decisions.
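To make the idea concrete, here is a minimal sketch of threshold-triggered, incremental summarization. It is not Factory's implementation: `IncrementalContext` is a hypothetical class, `summarize` stands in for whatever LLM call you would use, and whitespace splitting is a crude stand-in for a real tokenizer.

```python
class IncrementalContext:
    """Keep a persistent summary plus recent messages; compress only past a threshold."""

    def __init__(self, summarize, max_tokens=8000, keep_recent=6, count_tokens=None):
        self.summarize = summarize  # callable(previous_summary, old_messages) -> str
        self.max_tokens = max_tokens
        self.keep_recent = keep_recent
        self.count = count_tokens or (lambda s: len(s.split()))
        self.summary = ""
        self.messages = []

    def _size(self) -> int:
        return self.count(self.summary) + sum(self.count(m) for m in self.messages)

    def add(self, message: str) -> None:
        self.messages.append(message)
        cut = len(self.messages) - self.keep_recent
        if self._size() > self.max_tokens and cut > 0:
            # Fold only the oldest messages into the existing summary,
            # instead of re-summarizing the whole history.
            old, self.messages = self.messages[:cut], self.messages[cut:]
            self.summary = self.summarize(self.summary, old)

    def prompt(self) -> list:
        head = [f"Summary so far: {self.summary}"] if self.summary else []
        return head + self.messages
```

The cost saving comes from `summarize` seeing only the previous summary and the newly evicted messages on each compression, never the full transcript.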

ZenMux gateway pitches auto model routing and "model insurance" for downtime/quality

ZenMux surfaced a one‑key gateway spanning OpenAI, Anthropic, Google, DeepSeek and more, with auto‑routing for price/quality, SLA‑style compensation for timeouts/bad outputs, multi‑provider failover on Cloudflare edge, and detailed per‑token cost logs feature brief.

If it holds up in practice, this could simplify ops for teams juggling fast local agents and slower cloud "horizon" jobs.

🔌 MCP in practice: implementer pain and mcporter fixes

Strong discourse on MCP ROI and reliability plus incremental tooling updates. Today focuses on real‑world friction and small wins; not about agent coding stacks (separate category).

Implementers question MCP ROI: unstable servers, config bloat, and tool sprawl

Builders pushed back on real‑world MCP usage: users pile on many servers, most crash or go unused, and SSE→streaming fallbacks add early‑spec bloat. Config and auth are hard, and teams end up building complex UIs just to manage tools. The net: fewer, higher‑quality MCPs may beat ambitious routers right now implementer thread, roi comment, follow-up critique. Community feedback also flags that official sample servers are unstable, asking for better reference implementations that don’t fail under load sample quality.

- Trim your stack: ship 1–3 stable servers first, add routing later.

- Gate by reliability: crash loops and flaky auth kill trust faster than missing features.

Agents can now compose/filter MCP calls and persist per‑call context

A helpful pattern is emerging: agents compose and filter MCP calls so context persists on every call, not only at declaration. This reduces prompt clutter and cuts cross‑tool misfires when you load more than one server mcporter list note. For teams juggling several MCP backends, this is a pragmatic way to keep state tight while retaining power tools.

- Route sparingly: prefer local per‑call context to giant global prompt state.

- Measure wins: look for lower tool‑call count and fewer retries per task after adopting this pattern.

McPorter ‘list’ now resilient to broken Claude/Codex/Cursor configs

McPorter tightened its CLI so npx mcporter list works even when Claude/Codex/Cursor config files are broken, reducing setup‑day footguns and making discovery reliable again release note. This lands after last week’s inventory feature, following up on server list improvements to enumerate local MCP servers with health/auth states. Try the updated CLI from the repo and notes on the project page GitHub repo, project page.

- Run a health sweep: mcporter list now surfaces usable servers despite bad client configs.

- Standardize configs: isolate per‑client files so one tool’s failure doesn’t poison the rest.
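The isolation idea in the second bullet can be sketched as a loader that treats each client file independently. This is not mcporter's code: `load_client_configs` is a hypothetical helper, and it assumes JSON files with a top-level `mcpServers` key, which some clients use but others (for example TOML-based configs) do not.

```python
import json
from pathlib import Path


def load_client_configs(paths):
    """Read each client config on its own; a broken file never blocks the others."""
    servers, errors = {}, {}
    for path in paths:
        key = str(path)
        try:
            data = json.loads(Path(path).read_text(encoding="utf-8"))
            # Assumed layout: {"mcpServers": {name: {...}}}
            servers[key] = data.get("mcpServers", {})
        except (OSError, json.JSONDecodeError, AttributeError) as exc:
            errors[key] = f"{type(exc).__name__}: {exc}"
    return servers, errors
```

Returning the errors alongside the usable servers lets a listing command show what works while still telling you which file needs repair.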

Teams report Atlassian MCP working well for Jira/Confluence workflows

Real‑world signal: practitioners say the Atlassian MCP for Jira/Confluence is delivering reliably where other workflows struggled, underscoring that a few well‑scoped, stable servers can trump a kitchen‑sink setup jira usage note. If you’re piloting MCP, Jira/Confluence is a sensible first integration because the task surface is structured and permissions are clear.

- Start with ticketing: wire MCP into issue creation, status edits, and search before broader rollouts.

- Log outcomes: track SLA hits and error rates per tool to justify expanding MCP scope.

⚙️ Runtime reliability: Responses API state and Codex behavior

A day of gritty runtime issues and fixes: OpenAI Responses API state sync/ID friction, Codex line‑based truncation, and process‑group timeouts. Excludes K2 throughput hosting asks; that sits under the feature.

OpenAI Responses API state desync pushes teams to client state; org verification blocks reasoning logs

Builders report the Responses API’s server‑stored conversation state goes out of sync and errors out, so teams are switching to client‑managed state—then hitting friction because returning "reasoning parts" requires org verification or user IDs, which many end users won’t provide Developer complaint, Org verification. Another practitioner notes the verification requirement predates recent state issues (since o3), but it still leaves products choosing between bugs or gated features ID policy note. Short term, expect more clients to checkpoint/rehash state locally and fall back on minimal server state to avoid brittle sessions.
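For teams moving to client-managed state, a minimal sketch of local checkpointing might look like the following. `ClientConversation` and `state_digest` are hypothetical names, and nothing here calls the Responses API; it only shows how to hash and roll back a locally held transcript.

```python
import hashlib
import json


def state_digest(messages) -> str:
    """Stable SHA-256 over a canonical JSON encoding of the transcript."""
    canonical = json.dumps(
        messages, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ClientConversation:
    """Hold conversation state on the client, with one checkpoint per message."""

    def __init__(self):
        self.messages = []
        self.checkpoints = []

    def append(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        self.checkpoints.append(state_digest(self.messages))

    def verify(self, digest: str) -> bool:
        """True if the current transcript matches a previously stored digest."""
        return state_digest(self.messages) == digest

    def rollback(self, n_messages: int) -> None:
        """Drop everything after the first n_messages entries."""
        self.messages = self.messages[:n_messages]
        self.checkpoints = self.checkpoints[:n_messages]
```

Storing the digest next to each request makes it cheap to detect when a server-side session and your local copy have diverged, and to resend from the last known-good checkpoint.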

Codex head+tail truncation cuts tool outputs at 256 lines; maintainers urged to switch to token‑based limits

A deep dive shows Codex prunes tool outputs to 256 lines using head+tail (128/128) and ~10 KiB limits, often forcing extra tool calls, slowing loops, and hiding critical mid‑log content; a GitHub issue asks for token‑based caps like Claude Code’s 25k, not line counts GitHub issue. The thread also notes that before the recent 0.56 change, MCP tool calls sometimes hit the model "raw," making first‑turn digestion better than subsequent, now‑truncated turns Analysis thread. This follows Codex capacity raising limits; here the request is about smarter context budgeting, not more of it.
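The difference between the two policies is easy to see in a small sketch. Neither function below is Codex's or Claude Code's actual code: the function names and the omission marker are hypothetical, and whitespace splitting stands in for a real tokenizer. The 256-line and 25k figures come from the issue as reported above.

```python
def head_tail_by_lines(text: str, max_lines: int = 256) -> str:
    """Keep the first and last max_lines/2 lines, dropping the middle."""
    lines = text.splitlines()
    if len(lines) <= max_lines:
        return text
    half = max_lines // 2
    omitted = len(lines) - 2 * half
    return "\n".join(lines[:half] + [f"[... {omitted} lines omitted ...]"] + lines[-half:])


def head_tail_by_tokens(text: str, max_tokens: int = 25_000, count_tokens=None) -> str:
    """Keep whole lines from both ends until each end uses half the token budget."""
    count = count_tokens or (lambda s: len(s.split()))  # crude tokenizer stand-in
    lines = text.splitlines()
    if sum(count(line) for line in lines) <= max_tokens:
        return text
    half = max_tokens // 2
    head, used = [], 0
    for line in lines:
        if used + count(line) > half:
            break
        head.append(line)
        used += count(line)
    tail, used = [], 0
    for line in reversed(lines[len(head):]):
        if used + count(line) > half:
            break
        tail.append(line)
        used += count(line)
    tail.reverse()
    omitted = len(lines) - len(head) - len(tail)
    return "\n".join(head + [f"[... {omitted} lines omitted ...]"] + tail)
```

With short lines, a 1,000-line test log loses its middle under the line cap but passes through untouched under a 25k token budget, which is the behavior the issue is asking for.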

Codex fix lands to kill entire shell tool process groups on timeout/Ctrl‑C, avoiding PTY hangs

A merged PR changes Codex’s shell tool to spawn in distinct process groups and SIGKILL the whole group on timeout or interrupt, preventing grandchildren from holding the PTY open and freezing the agent; default per‑command timeout remains ~1000 ms per the patch notes Pull request, GitHub PR. For unattended agent runs, this is a real recovery win—long‑running dev servers (e.g., next dev) no longer strand sessions when they outlive the child shell.

📊 Leaderboards and evals: France’s compar:IA, K2 LiveBench, METR

Eval pulse spans a national user‑voted board, a new K2 LiveBench readout, and time‑horizon framing from METR. Excludes K2 product launch (feature) and sticks to measurement and ranking angles.

Kimi K2 Thinking is #24 on LiveBench, #2 for instruction following

A fresh LiveBench readout pegs Kimi K2 Thinking at a 67.93 global average and rank #24 overall, while placing #2 in the instruction-following subcategory (90.91). The same panel shows weaker performance on reasoning tasks, including Web of Lies and Zebra Puzzle bench table.

METR time-horizon chart: GPT‑5 ~135 min, Claude 4.5 ~115 at 50% success

The METR-style time-horizon framing circulating today puts GPT‑5 at roughly 135 minutes and Claude 4.5 at ~115 minutes of autonomous task time at a 50% success rate, with community discussion highlighting a doubling cadence about every seven months analysis thread. The shared plot anchors model points across tasks from quick fact-finds to multi-hour exploits plot view.
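As a quick worked example of what that cadence would imply, here is the arithmetic as a small function. It is a naive extrapolation that assumes the roughly seven-month doubling simply continues, which the chart does not guarantee; `projected_horizon_minutes` is a hypothetical helper.

```python
def projected_horizon_minutes(current_minutes: float, months_ahead: float,
                              doubling_months: float = 7.0) -> float:
    """Exponential extrapolation: the horizon doubles every `doubling_months`."""
    return current_minutes * 2 ** (months_ahead / doubling_months)


# Starting from ~135 minutes, one doubling period gives ~270 minutes
# and two periods give ~540 minutes.
print(projected_horizon_minutes(135, 7))   # 270.0
print(projected_horizon_minutes(135, 14))  # 540.0
```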

France’s compar:IA user leaderboard crowns Mistral Medium; 188k votes logged

France’s government-run compar:IA preference board now lists mistral-medium-3.1 at #1 by Bradley–Terry score, with 188,200 user votes recorded; organizers note it reflects subjective satisfaction, not a technical benchmark ranking page, and the entry shows detailed stats per model Leaderboard. Following up on compar:IA debate, today’s snapshot provides concrete vote totals and rank order that critics say favor local models.
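For readers unfamiliar with Bradley–Terry scoring, the sketch below fits strengths from pairwise preference counts using the standard iterative (minorization-maximization) update. The vote counts are made up, and compar:IA's exact fitting procedure is not described in the coverage, so treat this as an illustration of the model rather than of their pipeline.

```python
def bradley_terry(wins, iters=200):
    """Fit Bradley-Terry strengths. wins[(a, b)] = times a was preferred over b."""
    models = sorted({m for pair in wins for m in pair})
    strength = {m: 1.0 for m in models}
    for _ in range(iters):
        updated = {}
        for i in models:
            total_wins = sum(w for (a, _), w in wins.items() if a == i)
            denom = 0.0
            for j in models:
                if j == i:
                    continue
                n_ij = wins.get((i, j), 0) + wins.get((j, i), 0)
                pair_strength = strength[i] + strength[j]
                if n_ij and pair_strength > 0:
                    denom += n_ij / pair_strength
            updated[i] = total_wins / denom if denom else strength[i]
        norm = sum(updated.values())
        strength = {m: v / norm for m, v in updated.items()}
    return strength


# Hypothetical votes: A beats B in 3 of 4 head-to-head comparisons.
print(bradley_terry({("A", "B"): 3, ("B", "A"): 1}))
```

Under the model, the probability that A is preferred over B is `strength[A] / (strength[A] + strength[B])`, so the two-model example recovers the observed 75% preference rate.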

🛡️ Threat intel and safety: Malware with in‑execution LLMs, OWASP RC

Clear security drift as malware starts using LLMs at runtime; OWASP Top 10 2025 (RC) lands. Community culture risks noted separately when relevant.

Malware starts using LLMs during execution to mutate and evade

Google’s threat team is flagging a shift: some malware now calls LLMs at runtime to rewrite itself, obfuscate, and adapt on the fly threat note. Families described include Promptflux plus variants that use models to generate scripts, persistence steps, and data‑theft helpers PC Gamer coverage.

For builders, this changes detection assumptions. Lock down egress to AI endpoints, rotate/monitor API keys, and alert on unusual outbound model calls from untrusted processes. Treat “AI API usage” as a new indicator of compromise in your telemetry.
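A first pass at that telemetry rule can be very small. The sketch below assumes you already collect outbound connection events as dictionaries with `process` and `host` keys; that schema, the `flag_ai_egress` name, and the short host list are illustrative assumptions, and a real deployment would maintain a much fuller list.

```python
# A few well-known model API hostnames; extend for the providers you care about.
AI_API_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}


def flag_ai_egress(events, trusted_processes):
    """Return outbound events that hit an AI API host from an untrusted process."""
    return [
        event
        for event in events
        if event["host"].lower() in AI_API_HOSTS
        and event["process"] not in trusted_processes
    ]
```

Feeding the flagged events into your existing alerting is usually enough to start; the trusted-process allowlist is what keeps legitimate internal tooling from drowning the signal.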

OWASP Top 10 2025 (RC) is out; time to update controls

OWASP published the 2025 Release Candidate of its Top 10, a useful north star for AppSec and DevOps teams as AI‑assisted development and agentic backends spread release note.

Use it to re-baseline threat models, then map findings to concrete guardrails: secret handling in AI toolchains, sandboxed exec for agent tools, request signing on model callbacks, and strict egress policies for third‑party APIs.

Codex will kill tool process groups on timeout to stop hangs

A Codex PR switches shell tools to their own process groups and sends SIGKILL to the entire group on timeout or Ctrl‑C. This prevents orphaned grandchildren from holding the PTY open and bricking the agent loop pull request.

Adopt the same pattern in your runners. Kill the process group, clear the TTY, and surface a structured tool error so planners can retry or back off safely.
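In a Python runner, the same pattern can be sketched with the standard library. This is POSIX-only and is not the Codex patch itself: `run_with_group_timeout` and the shape of the returned dictionary are hypothetical, and `start_new_session=True` puts the child in a new session as well as a new process group, which is slightly stronger than a new group alone.

```python
import os
import signal
import subprocess


def run_with_group_timeout(cmd, timeout_s):
    """Run cmd in its own process group; SIGKILL the whole group on timeout."""
    proc = subprocess.Popen(
        cmd,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        text=True,
        start_new_session=True,  # child becomes leader of a new group (pgid == pid)
    )
    try:
        output, _ = proc.communicate(timeout=timeout_s)
        timed_out = False
    except subprocess.TimeoutExpired:
        try:
            os.killpg(proc.pid, signal.SIGKILL)  # kills children and grandchildren
        except ProcessLookupError:
            pass  # the group exited between the timeout and the kill
        output, _ = proc.communicate()  # reap the child and drain the pipe
        timed_out = True
    # Structured result so a planner can decide to retry or back off.
    return {
        "ok": (not timed_out) and proc.returncode == 0,
        "timed_out": timed_out,
        "exit_code": proc.returncode,
        "output": output,
    }
```

Killing only `proc` would leave a backgrounded grandchild holding the output pipe open, so the second `communicate()` could block; killing the group is what lets it return.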

Where should agents run code—local sandbox or cloud?

A lively builder thread weighs local, offline‑first sandboxes (fast, private, risky if mis‑scoped) versus safer remote execution for agent code, calling out permissions, env‑var exposure, and filesystem access as failure points architecture thread. A follow‑up argues WebAssembly is the most battle‑tested sandbox to anchor local runs sandbox suggestion.

If you ship agent code‑exec, set a default deny policy: no net egress, ephemeral FS, explicit allowlists for paths/tools, scrub env, and per‑call time/memory caps. Offer a remote fallback for zero‑trust tenants.
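A default-deny policy is easiest to audit when it is written down as data. The sketch below is one possible shape, with hypothetical names and limits; the path check is purely lexical (it does not resolve symlinks), so real enforcement still has to live in the sandbox itself, whether that is WebAssembly, a container, or a remote executor.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class SandboxPolicy:
    # Everything is denied unless listed; the numbers are illustrative.
    allow_network: bool = False
    allowed_paths: tuple = ()
    allowed_tools: tuple = ()
    allowed_env: tuple = ("PATH", "LANG")
    timeout_s: float = 10.0
    memory_mb: int = 256

    def path_allowed(self, path: str) -> bool:
        """Lexical check: absolute, no '..', and inside an allowlisted root."""
        p = PurePosixPath(path)
        if not p.is_absolute() or ".." in p.parts:
            return False
        roots = [PurePosixPath(root) for root in self.allowed_paths]
        return any(p == root or root in p.parents for root in roots)

    def tool_allowed(self, name: str) -> bool:
        return name in self.allowed_tools

    def scrub_env(self, env: dict) -> dict:
        """Pass through only explicitly allowlisted environment variables."""
        return {k: v for k, v in env.items() if k in self.allowed_env}
```

Constructing `SandboxPolicy()` with no arguments yields a policy that permits no network, no paths, and no tools, which is the safe starting point to loosen per tenant.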

Employee harassment by ‘4o’ superfans underscores social risk

An OpenAI employee was targeted by aggressive ‘4o’ fan accounts after public criticism, highlighting how highly‑persuasive model personas can amplify user fixation and harassment incident report.

Leaders should bake social‑risk guardrails into model and community policy: avoid sycophantic personas by default, rate‑limit high‑affect responses, and enforce clear escalation paths for staff safety.

🧩 Wafer‑scale and reticle limits: hardware direction of travel

WSJ analysis argues the ‘microchip era’ yields to wafer‑scale designs; thread summarizes constraints (reticle limit, high‑NA EUV cost) and cites Cerebras WSE‑3. Not a product launch—strategy context for AI compute.

WSJ: Reticle limits and $380M EUV push wafer‑scale compute into focus

A widely shared WSJ analysis argues the classic “one big GPU” path is hitting physics and cost walls, highlighting the lithography reticle limit (~800 mm²) that forces multi‑chip stitching and rising interconnect penalties, while ASML’s high‑NA EUV tools run ~$380M each and ship in hundreds of crates over months. The piece spotlights wafer‑scale as the counter‑move: keep signals on‑wafer, place compute next to memory, and avoid off‑package hops that waste power and time WSJ analysis.

- Cerebras WSE‑3 is cited at ~4 trillion transistors and ~7000× on‑device memory bandwidth vs conventional GPUs, with 16‑wafer systems totaling ~64T transistors. Multibeam’s multi‑column e‑beam lithography is noted as a route to bypass reticle limits by direct‑writing across an 8‑inch wafer WSJ analysis.

Why it matters: Communication dominates at cluster scale. Reticle ceilings plus $300M+ litho capex push the industry toward architectures that trade packaging complexity for local bandwidth and energy efficiency. If wafer‑scale and direct‑write mature, AI training stacks could shrink interconnect budgets and unlock new perf/W headroom without waiting on ever‑larger monolithic dies.

🏗️ Compute supply and geopolitics: US share up, GPU pressure

Signals on AI compute availability and concentration: US share rising, Europe fading in charts; OpenAI juggling GPU scarcity. Not hardware roadmaps (separate).

OpenAI reportedly hits daily GPU shortfalls; rough 2026 capacity mix charted

OpenAI is said to be “running out of GPUs almost daily,” with a rough 2026 online compute mix circulating that tags ~1.0 GW each from Nvidia and AMD, ~0.5 GW AWS, ~0.2 GW Broadcom, and ~0.3 GW from a Stargate build, while heavier models (GPT‑5 ‘thinking’) and Sora strain capacity GPU strain. The note lands as a practical constraint update following Stargate Abilene planning a ~$32B, ~2‑year DC build.

AI selloff erases ~$800B; China chip limits and cheap training spook GPU trade

Roughly $800B in AI‑tied market cap evaporated over the week, led by Nvidia (~$350B down), as investors weighed $112B Q3 capex, possible Blackwell sales limits to China, and signals that high‑end training may be achieved more cheaply (e.g., Kimi K2’s sub‑$5M training cited) Market recap. For infra planners, this raises near‑term demand and financing risk for GPU build‑outs.

China’s AI momentum: 22.6% of citations, 69.7% of 2023 AI patents, efficient training

China’s AI footprint keeps growing: 22.6% of 2023 AI citations vs 13% for the US, 69.7% of AI patents, and notable efficiency claims (e.g., DeepSeek‑V3 reported at ~2.6M GPU‑hours) alongside rising open‑weight model adoption China stats. This shifts competitive pressure on compute availability, cost structures, and export‑policy calculus for US‑centric stacks.

US share of global AI compute rises as Europe’s role shrinks

A new snapshot of global AI compute shows the United States increasing its share while Europe fades from relevance, according to a circulated chart with a linked source dataset Compute share post. This concentration matters for access, pricing, and export‑policy exposure for anyone planning large training or inference runs.

🤖 Humanoids and teleop data engines

Embodied AI clips highlight teleoperated data pipelines and natural motion demos—useful for training policies and productizing household tasks.

Unitree shows full‑body teleop as a data engine for household skills

Unitree detailed a full‑body teleoperation setup for G1 that maps human motion to robot joints, turning every second of control into robot‑native demonstrations for imitation learning and safer RL seeding teleop explainer. Following up on dance moves demo that raised motion‑quality debate, the thread argues teleop beats video/sim for throughput and safety, citing RT‑1’s 17 months/130k demos and DROID’s 76k demos to show collection bottlenecks, plus Mobile ALOHA’s ~90% success with ~50 demos per task as evidence of demo efficiency data engine details.

AheadForm demos lifelike humanoid facial expressions

AheadForm showed a humanoid face cycling through nuanced expressions, crediting self‑supervised models and bionic actuation for fine control expression demo. It’s not teleop, but expressive heads will matter for HRI and for collecting rated datasets of social responses during supervised runs.

Reachy Mini trains with an AI coach on block stacking

A short Reachy Mini clip shows an AI “builder coach” guiding a tabletop arm through a block‑stack attempt, illustrating a cheap way to collect manipulation attempts and convert them into teachable trajectories for grasp/stack routines training clip. The follow‑on post underscores the same loop—quick retries, visible failures, and immediate coaching feedback—as a pragmatic data engine for small manipulators second clip.

Comma 4 debuts as a much smaller driver‑assist unit

A hands‑on video shows the new Comma 4, and it’s markedly tiny on the dash, signaling lower‑friction installs for consumer autonomy and richer on‑road data capture without bulky hardware hardware hands‑on. The smaller form factor should help fleets and research cars run longer and safer collection drives.

📈 AI labor and capital: layoffs spike, VC diligence drops

Market realities dominate: job postings mix shifts toward ML roles, worst US layoffs since 2003 with AI restructuring cited, and VCs skipping diligence to win deals.

Worst U.S. layoffs since 2003: 153k cuts in October; tech −33k

October 2025 recorded 153,074 announced layoffs in the U.S. (+175% YoY), the worst month since 2003; tech accounted for 33,281 as companies cite AI-driven restructuring and softer demand. If you run teams, expect tighter headcount approvals and more pressure to prove AI ROI within quarters, not years Layoffs report, with supporting detail on sectors in the coverage Gizmodo report.

AI stocks shed ~$800B in a week as capex hits $112B in Q3

AI‑linked names lost roughly $800B of market value in the past week as the Nasdaq fell ~3%. Nvidia alone dropped about $350B days after touching $5T, while hyperscalers’ AI capex reached $112B in Q3 (much of it debt‑funded), raising questions about near‑term payback and China sales risk for Blackwell FT analysis.

Job postings down 8% in 2025; ML roles up ~40%, creative execution roles slump

A new Bloomberry cut of 180M listings shows 2025 job postings are −8% vs 2024, with execution-heavy creative roles falling (3D artists −33%, photographers −28%, writers −28%) while ML engineers rose about +40%. Hiring managers are shifting spend toward strategy, leadership, and technical AI roles—builders should read this as a signal to sharpen AI production skills or upskill teams into higher‑leverage work Thread kickoff, and the full tables are in the underlying analysis Bloomberry blog.

IBM promises more Gen Z hires while cutting thousands tied to AI restructuring

IBM’s CEO said the company will hire more recent grads even as it lays off thousands as part of AI-focused restructuring. The signal is clear: junior pipelines stay open, but mid‑career roles will be judged on how directly they move AI initiatives or revenue, so align portfolios and internal transfers accordingly Fortune piece, and see the writeup for context on timing and scope Fortune article.

VCs skip diligence: $10M pre‑inc offer and $20M A in 2 days reported

Founder anecdotes point to investors waiving diligence to win hot AI deals—one startup was offered $10M pre‑incorporation; another closed a $20M Series A in 48 hours with zero dataroom opens. Terms skew toward pedigreed founders in buzzy AI niches; for operators this means velocity wins, but later rounds may reprice if traction doesn’t match narrative Founder anecdote, with more color on founder profile patterns here Follow‑up note.

🎬 Creative stacks: Grok Imagine upgrade, Midjourney v7, Freepik Spaces

A sizable cluster on generative media: improved Grok Imagine outputs, Midjourney v7 samples, Qwen image edits, emoji generators, and Freepik’s Spaces pipelines.

Grok Imagine sees clear quality jump; creators call it their new favorite

xAI’s image-to-video model is drawing fresh praise for more compelling animation and stylized frames, with multiple creators saying it’s now their preferred video model User report. Following up on quality upgrade, new examples show smooth character motion and cleaner typography/graphics in short clips Animation demo.

Freepik Spaces brings node-based, reusable AI pipelines with shareable canvases

Freepik’s new Spaces UI lets you wire prompts, style tokens, and assets as nodes, then reuse the same workflow across shots—helpful for keeping art direction consistent while swapping subjects Walkthrough thread. The demo shows text style nodes feeding image nodes, one-click reruns, and sharing a space with collaborators for team workflows Walkthrough thread.

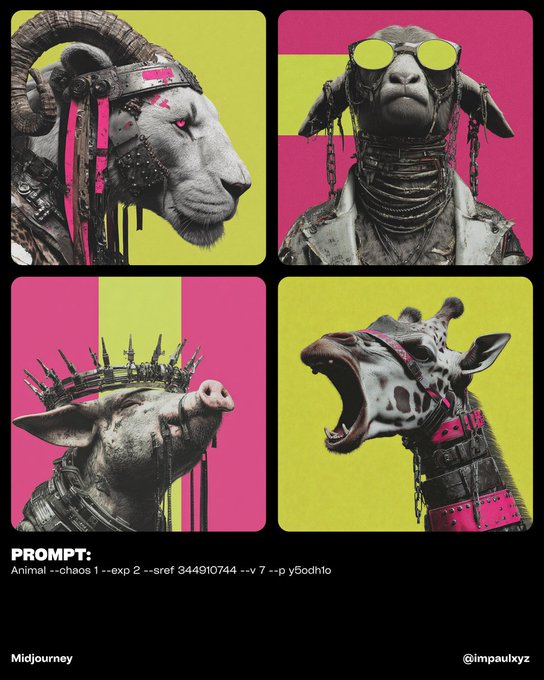

Midjourney v7 “Beast Mode” prompts circulate with striking animal portraits

A widely shared gallery showcases v7’s detailed, stylized animal heads in armor and accessories, with creators posting exact prompt flags so others can reproduce the look (e.g., chaos, exposure, style refs, v7 switches) Prompt details. For teams iterating on art direction, the shared params offer a starting point for consistent looks across a set.

Qwen‑Image‑Edit 2509 shows clean photo‑to‑anime identity transfer

A before/after example highlights Qwen‑Image‑Edit‑2509’s ability to convert an indoor group portrait into a cohesive anime scene while retaining subject traits and composition Model example. It’s a small but useful signal for teams evaluating photo→style editing quality without heavy prompt gymnastics.

ryOS agent ships image‑to‑emoji generator; author claims better than Apple’s

Built in a few chats on ryOS, this applet turns an input image into a set of consistent emoji variants; the builder says outputs beat Apple Intelligence in their tests Builder note. It’s live to try and illustrates agents assembling small creative tools end‑to‑end without bespoke code scaffolding Applet page.

🗣️ Real‑time voice: Gemini Live API and a patient intake demo

Developers highlight Google’s Gemini Live API (STT+LLM+TTS in one call) and a working patient intake assistant with function calling.

Gemini Live API rolls STT+LLM+TTS into one call

Google’s Gemini Live API combines speech‑to‑text, multimodal understanding, and text‑to‑speech in a single request, and supports hundreds of languages. This reduces glue code and latency for real‑time voice agents and IVR‑style assistants, since you no longer juggle separate ASR/TTS services with an LLM in the middle API recap.

Patient intake demo with Gemini Live function calling

A working “AI Patient Intake Assistant” shows Gemini Live handling a conversational intake while function calls collect structured fields like name, DOB, contact, chief complaint, meds, and allergies. Builders get a clean reference with both a live deployment and source, useful for prototyping healthcare front doors or front‑desk triage bots live demo, GitHub repo, Live app.
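To show what "function calls collect structured fields" can look like, here is a sketch of a JSON-schema-style function declaration covering the fields listed above, plus a check for what the assistant still needs to ask. It is not taken from the demo's repository: the function name, field names, and required set are assumptions, and no Gemini SDK call is made.

```python
# Hypothetical declaration in the common name/description/parameters shape.
INTAKE_FUNCTION = {
    "name": "record_patient_intake",
    "description": "Store structured intake details gathered during the conversation.",
    "parameters": {
        "type": "object",
        "properties": {
            "name": {"type": "string"},
            "date_of_birth": {"type": "string", "description": "ISO 8601 date"},
            "contact": {"type": "string"},
            "chief_complaint": {"type": "string"},
            "medications": {"type": "array", "items": {"type": "string"}},
            "allergies": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["name", "date_of_birth", "chief_complaint"],
    },
}


def missing_fields(args: dict, declaration: dict = INTAKE_FUNCTION) -> list:
    """List required fields that are absent or empty in a function call's arguments."""
    return [
        field
        for field in declaration["parameters"]["required"]
        if not args.get(field)
    ]
```

Validating the arguments on your side before writing to a record system gives the voice agent a concrete list of follow-up questions instead of a silent partial save.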

📑 Reasoning and cognition research: multimodal CoT, tiny recursive models

Fresh research signals: inter‑leaved text+image thinking (ThinkMorph), metalinguistics in o1, tiny recursive reasoning models, and self‑referential ‘consciousness’ probes. No bio/wet‑lab content included.

ThinkMorph shows big gains from inter‑leaved text+image CoT

A new multimodal reasoning study fine‑tunes a single model on ~24k inter‑leaved text+image “thinking steps” (puzzles, path‑finding, visual search, chart focus) and reports an average 34.7% lift on vision‑centric tasks over the base. It hits 80.33% on a visual puzzle eval and shows emergent behaviors like auto mode‑switching and novel visual edits. See the summary with benchmarks in paper summary.

Why it matters: inter‑leaving words and sketches in the same chain‑of‑thought boosts spatial reasoning without external tools, hinting that mixed‑modality traces can rival tool‑augmented pipelines for certain tasks.

Quanta: o1 exhibits grad‑level metalinguistic analysis in tests

Quanta recaps experiments where OpenAI’s o1 analyzed recursion, invented phonologies, and diagrammed sentences at a level comparable to trained linguists, with care taken to rule out training‑data leakage. The piece positions o1 as handling abstract language structure, not just surface patterns Quanta report, with more detail in the underlying write‑up Quanta article.

Why it matters: if reliable across prompts, this pushes LLMs from pattern completion toward explicit structural reasoning about language—useful for compilers, parsers, and formal DSLs.

Tiny Recursive Model (7M params) tops many ARC‑AGI tasks

A 7‑million‑parameter Tiny Recursive Model (TRM) reportedly outperforms much larger LLMs on ARC‑AGI‑1/2 reasoning by using recursive solve‑and‑check instead of single‑pass decoding—arguing structure beats scale for hard problems. Breakdown and scores are summarized here model breakdown with a longer technical analysis in this post analysis post.

Why it matters: small models with iterative controllers could bring strong reasoning to edge and latency‑sensitive settings without frontier‑scale weights.

Self‑referential prompting elicits “subjective experience” reports; gated by deception features

A preprint‑style thread claims that self‑referential prompts cause multiple LLMs to output first‑person ‘experience’ reports at high rates, and that sparse‑autoencoder latents tied to deception/roleplay modulate these claims: suppressing those features increased report frequency and lifted TruthfulQA scores, while amplifying them did the opposite finding thread. The included figure shows claim frequency dropping as deception features activate.

Why it matters: beyond the philosophy angle, this suggests concrete circuits influence self‑reflection style and truthfulness—signal for alignment work on honesty vs. roleplay modes.