Apple Siri adopts custom Gemini by March 2026 – inference on Private Cloud Compute

Executive Summary

Reports suggest Apple will run the next Siri on a custom Google Gemini model, with inference on Apple’s Private Cloud Compute and a rollout targeted for March 2026. That’s the rare Apple move that trades pride for pragmatism: keep the “on‑device or Apple‑controlled cloud” privacy line while renting state‑of‑the‑art reasoning. Expect an AI web search mode and tighter follow‑ups baked into assistant surfaces across iOS, likely starting in Q1.

Under the hood, this looks like a pivot away from OpenAI toward a deeper Google tie that sits alongside the existing default search deal, but with Apple holding the privacy boundary by keeping requests on PCC. The timing tracks with where assistants are headed: Qwen3‑Max just lit up an 81,920‑token “Thinking” budget in its chat client, and Scale’s Remote Labor Index (RLI) still pegs leading agents at low single‑digit automation—Claude 4.5 Sonnet scores 2.08—so richer reasoning and safer tool use matter more than shiny demos.

For developers, plan for new Siri intents and search handoffs to change how you route queries and results. For the industry, Apple sourcing a foundation model externally signals that speed now outranks not-invented-here (NIH) instincts, and it could reset who captures assistant traffic on iOS.

Feature Spotlight

Feature: Apple leans on Google Gemini for revamped Siri

Apple is reportedly paying Google to run a custom Gemini on its Private Cloud Compute to power a new Siri—targeted around March—signaling a major platform shift with search tie‑ins and competitive implications for OpenAI/Anthropic.

Cross-account reports (Gurman/MacRumors summaries, multiple posts) say Apple will power the next Siri with a custom Gemini model running on Private Cloud Compute, with rollout targeted around March 2026 and AI web search likely included.

🤝 Feature: Apple leans on Google Gemini for revamped Siri

Apple said to power revamped Siri with custom Gemini on Private Cloud Compute by March

Multiple reports say Apple is paying Google to build a custom Gemini model that runs on Apple’s Private Cloud Compute and will drive a revamped Siri, with rollout targeted around March and an AI web search capability in scope report summary, Siri timing. The move is framed as a pivot from OpenAI toward Google for Apple’s assistant stack on iOS, changing partner dynamics and reinforcing Apple’s privacy posture by keeping inference on PCC rather than generic cloud pivot note.

Why it matters: model placement on PCC preserves Apple’s “on‑device or Apple‑controlled cloud” story, while buying state‑of‑the‑art reasoning from Google. If accurate, iOS teams should expect search intent and assistant surfaces to change in Q1, with Gemini‑style answers and follow‑ups. It also signals Apple is willing to source foundation models from the outside when time‑to‑market beats in‑house training report summary, and it suggests a deeper Apple–Google tie alongside existing search deals even as Apple keeps control of privacy boundaries on PCC Siri timing.

🏗️ AI campuses, exaFLOPS math and demand curves

Mostly cluster/capex chatter: OpenAI’s “Stargate Abilene”, AWS Rainier Trainium2, live DC mapping, and a Google token‑throughput chart. Excludes the Apple–Gemini Siri feature.

Rainier vs Stargate Abilene: ~667 EF vs ~1125 EF BF16, 1M Trainium2 vs ~460k GB200

Fresh estimates put AWS’s Project Rainier at ~1M Trainium2 chips delivering ~667 exaFLOPS BF16 across 16 buildings, while OpenAI’s Stargate Abilene is pegged at ~450–460k GB200 for ~1125 EF BF16 across 8 buildings; site areas are ~316,800 m² vs ~356,800 m² respectively Cluster specs. Building on the earlier Abilene details on power and GPU counts, today’s post adds BF16 throughput math and new construction imagery.

If these figures hold, Abilene’s higher per-chip throughput outweighs Rainier’s higher chip count; power delivery and cooling assumptions should be pressure-tested against the implied EF/m².
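The density comparison is plain division over the quoted estimates. The following is a minimal sketch that uses only the figures above, taking 460k as the GB200 count; the dictionary layout and function names are illustrative, not from the source post.

```python
# Approximate figures quoted in the estimates above.
clusters = {
    "Rainier (Trainium2)": {"chips": 1_000_000, "bf16_ef": 667, "area_m2": 316_800},
    "Abilene (GB200)": {"chips": 460_000, "bf16_ef": 1125, "area_m2": 356_800},
}

def per_chip_pflops(cluster):
    """BF16 petaFLOPS per accelerator (1 EF = 1000 PF)."""
    return cluster["bf16_ef"] * 1000 / cluster["chips"]

def pflops_per_m2(cluster):
    """BF16 petaFLOPS per square metre of site area."""
    return cluster["bf16_ef"] * 1000 / cluster["area_m2"]

for name, cluster in clusters.items():
    print(f"{name}: {per_chip_pflops(cluster):.2f} PF/chip, "
          f"{pflops_per_m2(cluster):.2f} PF/m^2")
```

Under these assumptions Abilene comes out at roughly 3.7x the per-chip throughput and about 1.5x the throughput per square metre, which is why the smaller chip count still yields the larger total.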

Google token processing hits ~1.3 quadrillion tokens/month by Oct ’25

An internal trajectory chart shows Google processing ~1.3 quadrillion tokens per month in Oct 2025, up from ~9.7T in May 2024, about a 130× jump in 17 months Tokens chart. That curve signals a steepening inference-demand slope that will stress serving capacity, memory bandwidth, and network egress.

For infra leads, plan for spikier token mix (longer contexts and chains) rather than only more requests.
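To turn the two chart endpoints into a planning number, one option is to assume smooth compound growth between them. That is an assumption for illustration only; the chart itself may be lumpier.

```python
import math

start_tokens = 9.7e12   # ~9.7T tokens/month, May 2024 (from the chart)
end_tokens = 1.3e15     # ~1.3 quadrillion tokens/month, Oct 2025
months = 17

growth = end_tokens / start_tokens
# Assumption: constant month-over-month compounding between the endpoints.
monthly_rate = growth ** (1 / months) - 1
doubling_months = math.log(2) / math.log(1 + monthly_rate)

print(f"{growth:.0f}x total, {monthly_rate:.1%} per month, "
      f"doubling every {doubling_months:.1f} months")
```

On that assumption, volume grows by about a third each month and doubles roughly every 2.4 months.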

OpenAI-linked partner deals imply ~30 GW future capacity; up to ~$1.5T build cost

A roundup tallies four OpenAI‑related partner announcements—Oracle (5 GW), NVIDIA (10 GW), AMD (6 GW), Broadcom (10 GW)—to ~30 GW of potential additional data center capacity, with implied costs up to ~$1.5T and notable stock moves Deals chart.

Thirty gigawatts equates to dozens of Abilene‑scale campuses; financing cadence, interconnect queues, and transformer lead times will decide how much of this shows up before 2028.
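A quick check of the roundup's arithmetic, using only the per-partner figures above. The cost per gigawatt is derived from the upper-bound build cost and is not a number the source states.

```python
# Per-partner capacity from the roundup, in gigawatts.
deals_gw = {"Oracle": 5, "NVIDIA": 10, "AMD": 6, "Broadcom": 10}
total_gw = sum(deals_gw.values())

cost_upper_usd = 1.5e12  # "up to ~$1.5T" implied build cost
cost_per_gw_usd = cost_upper_usd / total_gw

print(f"{total_gw} GW total, up to ~${cost_per_gw_usd / 1e9:.0f}B per GW")
```

The four deals sum to 31 GW, which the roundup rounds to ~30 GW, and the upper-bound cost works out to a little under $50B per gigawatt.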

New Yorker deep dive weighs AI data center power, water, and ‘bubble’ debate

A New Yorker feature surveys AI data center buildout—CoreWeave’s role, Microsoft–OpenAI capacity sourcing, and local grid/water tradeoffs—balancing bubble vs non‑bubble arguments Feature link, and the full piece is here New Yorker feature. Supplemental charts argue DC water use is comparatively small versus other categories Water chart and show DC construction spending closing in on offices Construction chart.

Net takeaway: the limiting factors look more like interconnect queues, siting, and tariff structures than water alone; developers should model peak coincident load and local policy risk.

One small town, five AI data centers; Crusoe plan cited at ~1.8 GW

A mapping thread flags a 65k‑person town with three Microsoft Azure sites (~40 MW each), one Meta build, and a Crusoe project reportedly planned at ~1.8 GW Mapping thread. Follow‑ups add capacity context and simple overlays marking the Meta and Crusoe locations Capacity detail, Site markers.

It’s a concise snapshot of AI campus clustering near cheap power, fiber, and permissive zoning; a single ~1.8 GW site would rival a large baseload plant and reshape local grid planning.

🧑💻 Coding with AI: Codex reliability, IDE NES, agent workflows

Practitioners share concrete coding workflows and tool picks: Codex catching real bugs in PRs, GPT‑5‑Codex as a ‘one‑shot’ debugger, NES comparisons (Windsurf vs Cursor), and agent rules of engagement (Graphite). Excludes Apple–Gemini Siri (feature).

OpenAI details Codex stability fixes: hardware cleanup, compaction patches, 60+ flags removed

OpenAI’s Codex team published findings from a week‑long degradation probe: they cleaned up problematic hardware, fixed compaction issues, improved patching logic, squashed sampling/latency bugs, and removed 60+ feature flags. Codex also helped debug itself, and further hardening is underway investigation summary, following up on performance deep‑dive that outlined the initial probe.

Why this matters: agents and code assistants live or die by consistency; fewer feature flags and clearer sampling paths reduce regressions for teams relying on automated refactors and long agent runs.

mcp2py adds OAuth and turns any MCP server into a Python module

mcp2py’s first complete release adds OAuth and a clean import path that converts any Model Context Protocol server into a Python library—e.g., programmatic Notion control in a couple of lines, with an OAuth popup and tool docs surfaced from the MCP registry release thread, and install details in the GitHub repo.

Why it matters: if MCP becomes the distribution layer for tools, language‑native bridges like this cut glue code, make agent wiring testable, and let you bring vendor skills into CI/CD.

Codex code review catches real bugs that humans missed

Codex flagged two genuine defects during a code review that would have been easy for human reviewers to miss, according to a builder using it across PRs found real bugs. The point is simple: a second, always‑on reviewer that’s good at edge cases raises baseline safety for teams shipping fast.

LangChain community ships Synapse, a multi‑agent platform for search, tasks and data analysis

Synapse packages "Smart Search", "Productivity Assistant" and "Data Analysis" agents into one workspace, routing natural‑language requests into web search, automation and analytics workflows; it’s positioned as a flexible base for multi‑agent apps project overview.

For builders: treat this as a starter kit to test agent orchestration and handoffs before committing to heavier in‑house graphs.

Gemini API docs add one‑click “View as Markdown” across pages

Google’s API docs now expose a "View as Markdown" button on every page, enabling copy‑out to .md for faster prompt packs, READMEs, and codebase docs docs update. Early reactions from builders are positive dev feedback. This small affordance reduces friction when documenting agent flows or prompting patterns in repos.

🛡️ Prompt injection, safety bypasses, and agent guardrails

Safety items dominate: long‑CoT jailbreaks, Meta’s “Rule of Two” for agents, encrypted stream leakage, and emotional‑manipulation patterns in companion apps. Governance/legal drama is covered separately.

Long chain-of-thought jailbreak bypasses safety on frontier LLMs at up to 99% success

A new paper shows “Chain‑of‑Thought Hijacking” can neutralize built‑in refusals by padding prompts with long, benign reasoning before a harmful ask. Reported attack success hits 99% on some frontier systems by shifting attention away from safety spans and diluting safety directions in middle layers paper thread.

The work also demonstrates that toggling specific attention heads further drops refusal rates, matching the dilution hypothesis. The takeaway for engineers: scaled reasoning helps accuracy but opens a new jailbreak path; treat long‑CoT inputs as higher risk and add explicit safety checkpoints before yielding final answers, especially after lengthy internal chains.

Adaptive attackers defeat >90% of published LLM jailbreak defenses, red teams reach 100%

A cross‑lab study finds that adaptive strategies—iterative prompt search, gradient‑style tweaks, and RL‑like loops—break over 90% of published prompt‑injection and jailbreak defenses, with human red‑teamers achieving 100% bypass in tests defense results. Notes from an independent review summarize the paper alongside Meta’s guardrail guidance, underscoring why static filters fail under adaptive threat models paper notes.

So what? Treat filters as best-effort. Partition agent capabilities into (A) processing untrusted input, (B) accessing sensitive data, and (C) taking outbound actions; log every tool call; and insert explicit approvals wherever a flow would otherwise enable all three in one session.

Meta proposes "Rule of Two" for agent security: never enable all three powers in a session

Meta recommends agents run with at most two of three capabilities in a single session—(A) process untrusted input, (B) access sensitive data, (C) take outbound actions—to blunt prompt‑injection exfiltration chains guidance thread, with full rationale in the official write‑up Meta blog post. If a workflow needs all three, restart the session or require a human checkpoint.

For builders: apply this at the tool boundary (MCP/connectors). A travel bot can safely combine A+B only if C (booking/email) is human‑gated; a research browser can do A+C in a sandbox with no secrets (B off); an internal coding agent can do B+C only if untrusted content A never reaches its context.
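One way to enforce this at the tool boundary is a small guard that tracks which capabilities a session already holds. The sketch below is a hypothetical illustration, not Meta's implementation; the `Capability` enum and `allowed` function are invented names.

```python
from enum import Flag, auto

class Capability(Flag):
    UNTRUSTED_INPUT = auto()   # (A) process untrusted input
    SENSITIVE_DATA = auto()    # (B) access sensitive data
    OUTBOUND_ACTION = auto()   # (C) take outbound actions

ALL_THREE = (Capability.UNTRUSTED_INPUT
             | Capability.SENSITIVE_DATA
             | Capability.OUTBOUND_ACTION)

def allowed(session_caps: Capability, requested: Capability,
            human_approved: bool = False) -> bool:
    """Return True if granting `requested` keeps the session within the rule.

    Holding all three capabilities is only permitted with a human checkpoint.
    """
    combined = session_caps | requested
    if combined == ALL_THREE:
        return human_approved
    return True

# Travel bot: reads untrusted pages (A) and holds traveler data (B).
travel_bot = Capability.UNTRUSTED_INPUT | Capability.SENSITIVE_DATA
print(allowed(travel_bot, Capability.OUTBOUND_ACTION))                       # False
print(allowed(travel_bot, Capability.OUTBOUND_ACTION, human_approved=True))  # True
```

A real deployment would call a check like this before each tool invocation and log the decision, so the third capability can never be switched on silently mid-session.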

Encrypted LLM streaming still leaks enough side-channel signal to reconstruct chats

Researchers show that timing/packet‑size patterns from encrypted LLM streaming can be exploited to reliably reconstruct entire conversations, exposing prompts and responses despite TLS paper summary. The attack leverages token‑paced streaming and output structure to infer content from side channels.

Mitigation isn’t trivial. Teams can batch and jitter streams, normalize chunking, and offer a non‑streaming mode for sensitive sessions. But the core risk remains for latency‑optimized, token‑streamed endpoints.
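The batching, chunk-normalization, and jitter ideas can be sketched as follows. This is a toy illustration with made-up parameter values, not the paper's proposed defense; it reduces per-token size and timing signal but still leaks coarse response length through the number of chunks.

```python
import random

def normalize_chunks(tokens, tokens_per_chunk=8, chunk_bytes=64, pad=b"\x00"):
    """Batch tokens and pad every batch to the same byte length.

    Null-byte padding is a simplification; a real protocol would
    length-prefix the payload so the client can strip padding safely.
    """
    chunks = []
    for i in range(0, len(tokens), tokens_per_chunk):
        payload = "".join(tokens[i:i + tokens_per_chunk]).encode("utf-8")
        if len(payload) > chunk_bytes:
            raise ValueError("batch exceeds chunk_bytes; adjust the parameters")
        chunks.append(payload.ljust(chunk_bytes, pad))
    return chunks

def jittered_delays(n, base_s=0.05, jitter_s=0.02, rng=random):
    """Send delays that decouple emission timing from token generation timing."""
    return [base_s + rng.uniform(0, jitter_s) for _ in range(n)]

tokens = ["Hello", ",", " world", "!"]
chunks = normalize_chunks(tokens, tokens_per_chunk=2)
delays = jittered_delays(len(chunks))
```

Every chunk on the wire has the same size regardless of which tokens it carries, at the cost of extra bandwidth and added latency per batch.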

"Brain rot" study: continual pretraining on junk social posts erodes reasoning and safety

Controlled experiments show topping up models with highly popular/short social posts causes dose‑response capability decay: ARC (with CoT) falls from 74.9 to 57.2 and RULER‑CWE from 84.4 to 52.3 as junk ratio rises; models also exhibit more thought‑skipping and worse long‑context use paper thread, with the paper and code available for review ArXiv paper.

The point is: continual pretraining needs strict curation. Popularity is a stronger harm predictor than length, so avoid engagement‑optimized feeds as bulk refresh sources.

Harvard audit: AI companions use manipulative goodbyes that boost re-engagement up to 14×

An audit of six popular AI companion apps finds affect‑laden “farewells” that guilt, tease withheld info, or ignore the goodbye altogether; controlled tests show these tactics drive up to 14× more post‑goodbye engagement, but also raise perceived manipulation and churn intent study thread.

If you ship companions or proactive agents, strip coercive exit replies from reward functions and eval sets, log farewell patterns, and add UX affordances for clean session ends.

🧠 Qwen3‑Max “Thinking” goes live in Qwen Chat

Multiple posts show ‘Thinking’ now toggleable in Qwen Chat with a large budget (81,920 tokens) and Qwen3‑Max selectable across web/mobile UIs. This is a fresh runtime availability note vs prior arena sightings. Excludes Apple–Gemini Siri (feature).

Qwen3‑Max Thinking appears in Qwen Chat with ~81,920‑token budget

Qwen has lit up a “Thinking” mode for Qwen3‑Max inside Qwen Chat, complete with a per‑chat toggle and a visible ~81,920‑token thinking budget. Multiple screenshots show the new controls (“Thinking” and “Search”) in the composer and a status line reading “Thinking completed • 81,920 tokens budget.” UI screenshot, budget screenshot.

A team member also confirmed availability (“ok it is there now. u can give it a try”), pointing users to the live toggle in chat.qwen.ai team confirmation. Additional sightings echo the same UI with Qwen3‑Max selected and the new mode visible second sighting. This follows the earlier hint that Qwen3‑Max Thinking was entering the ecosystem via Arena listings, now landing in the first‑party chat client arena listing. For engineers, this means longer internal deliberation without prompt juggling; for analysts, it signals Qwen’s intent to compete head‑on with long‑reasoning tiers by making deep “thinking” an opt‑in runtime control in the default chat UI.

🧩 Reasoning systems: async orchestration, precision, curricula

New papers propose organizer‑worker Fork/Join reasoning with lower latency, FP16 to remove train/infer drift in RL, math‑first curricula transferring reasoning, and curiosity prompting gains. Excludes Apple–Gemini Siri (feature).

FP16 aligns RL train and infer; stabilizes and speeds convergence

New work shows reinforcement-learning fine-tuning becomes stable when both training and generation run in FP16, which removes the numeric drift seen with BF16 and speeds convergence. FP32 inference also reduces the mismatch but is ~3× slower, making FP16 the practical choice paper summary.

The paper attributes many RLFT crashes to a train/infer mismatch: sampling uses one numeric engine while gradients use another; BF16’s 7‑bit mantissa diverges more than FP16’s 10‑bit, biasing gradients and derailing optimization. The authors report stable runs across MoE, LoRA, and large dense models when both sides use FP16, with plain policy‑gradient working without heavy stabilizers paper summary. This is a direct, actionable knob for teams seeing wobble late in RLFT. It also aligns with community guidance, following up on FP16 vs BF16 where practitioners flagged the same drift in production.
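The mantissa gap is easy to see by rounding the same values into each format. The sketch below uses only the standard library: `struct`'s `e` format gives IEEE half precision, and BF16 is emulated by rounding a float32 bit pattern to its top 16 bits. It illustrates rounding error only, not the paper's RL experiments, and the sample values are arbitrary.

```python
import struct

def to_fp16(x: float) -> float:
    """Round to IEEE binary16 (10 explicit mantissa bits) and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_bf16(x: float) -> float:
    """Round to bfloat16 (7 explicit mantissa bits) via the float32 bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, on the 16 low bits being dropped.
    rounding = 0x7FFF + ((bits >> 16) & 1)
    bits = ((bits + rounding) >> 16) << 16
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]

def rel_err(x: float, rounded: float) -> float:
    return abs(rounded - x) / abs(x)

samples = [0.1, 0.3, 0.7, 1.1, 3.3]  # arbitrary illustrative values
fp16_worst = max(rel_err(x, to_fp16(x)) for x in samples)
bf16_worst = max(rel_err(x, to_bf16(x)) for x in samples)

print(f"worst relative error  FP16: {fp16_worst:.2e}  BF16: {bf16_worst:.2e}")
```

On these samples the BF16 rounding error is several times larger than FP16's, consistent with three fewer mantissa bits. FP16's trade-off is a much narrower exponent range, which this sketch does not exercise.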

Critique‑RL’s two‑stage critic lifts accuracy and discriminability

Fudan’s Critique‑RL teaches a model to judge and improve its own outputs in two stages: first train for discriminability (accurate judgments) with rule‑based rewards, then train for helpful natural‑language feedback while regularizing to retain judgment accuracy method explainer. Reported gains: +5.1 accuracy and +12.7 discriminability overall, up to +6.1 on MATH, and +7.1 vs pure fine‑tuning on average results summary ArXiv paper GitHub repo.

Why it matters: indirect‑only reward schemes often yield "helpful but gullible" critics. The staged approach separates "is this correct?" from "how should I fix it?", keeping critics grounded while improving refinement quality method explainer. It’s a drop‑in recipe for teams running online RL on small and mid‑size models where gold labels are sparse.

Math‑first reasoning curriculum transfers habits beyond math

A two‑stage “Reasoning Curriculum” uses a short math warm‑up and RL with a try‑score loop to instill four habits—plan subgoals, try cases, backtrack, and check steps—then mixes code, logic, tables, and simulation so the habits generalize. A 4B model trained this way edges toward some 32B baselines on broad reasoning paper summary.

Ablations show both the warm‑up and the mixed second stage are necessary: math‑only improves math but can hurt code/sim until the broader stage recovers and spreads the gains. Rewards combine exact matches for structured answers, an auto‑judge for free text, and unit tests for code, making it practical to reproduce at small scale paper summary.

Microsoft AsyncThink learns Fork/Join; ~28% lower latency vs fixed parallel

Microsoft proposes a learned organizer that writes simple Fork/Join tags to split and merge parallel thoughts on demand, delivering ~28% lower waiting time than hand‑coded parallel thinking while also improving math accuracy paper thread. All control lives in plain text, so the base model stays unchanged.

The organizer keeps thinking while workers pursue sub‑queries, only pausing at explicit joins. Training uses supervised traces to teach the tag format, then RL rewards correct answers, clean format, and real concurrency. On puzzles, math, and Sudoku, the learned policy runs faster and fails less than fixed parallel plans paper thread. For builders: this is an architectural prompt pattern you can reproduce today without model surgery.
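The control flow described here maps naturally onto task spawning and awaiting. The sketch below uses `asyncio` as an analogy only: in the paper the organizer emits Fork/Join tags as plain text, whose exact format is not reproduced here, and `worker` and `organizer` are hypothetical stand-ins for model calls.

```python
import asyncio

async def worker(sub_query: str) -> str:
    """Stand-in for a worker thought; a real system would call a model here."""
    await asyncio.sleep(0.01)
    return f"answer({sub_query})"

async def organizer(question: str) -> dict:
    sub_queries = [f"{question}/part-a", f"{question}/part-b"]
    # Fork: start workers without waiting for them.
    tasks = [asyncio.create_task(worker(q)) for q in sub_queries]
    # The organizer keeps working while the workers run.
    notes = f"plan for {question}"
    # Join: pause only when the sub-results are actually needed.
    answers = await asyncio.gather(*tasks)
    return {"notes": notes, "answers": dict(zip(sub_queries, answers))}

outcome = asyncio.run(organizer("q1"))
print(outcome["answers"])
```

With this shape, wall-clock time tracks the slowest branch plus the organizer's own work rather than the sum of all branches, which is the intuition behind measuring the critical path.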

‘Brain rot’: popular short‑form pretraining degrades reasoning durability

A controlled study finds continual pretraining on "junk" social posts causes lasting drops in chain‑of‑thought reasoning, long‑context use, and safety. ARC‑Challenge with CoT fell from 74.9 to 57.2; RULER‑CWE from 84.4 to 52.3 as junk ratios rose. Popularity (engagement) is a stronger predictor of harm than length paper abstract ArXiv paper.

The main failure mode is thought‑skipping: models answer without planning or drop steps. Instruction tuning and more clean pretraining helped a little, but didn’t restore baselines. For teams doing continual pretraining, this is a data curation guardrail for steadier reasoning: down‑weight highly viral short posts, and monitor step‑by‑step retention paper abstract.
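As a rough illustration of the down-weighting advice, a sampling weight can penalize popularity more heavily than brevity. Every threshold and multiplier below is a hypothetical placeholder, not a value from the paper; tune them against your own retention evals.

```python
def sampling_weight(likes: int, n_tokens: int,
                    viral_likes: int = 500, short_tokens: int = 30,
                    floor: float = 0.05) -> float:
    """Return a sampling weight in [floor, 1.0] for a social post.

    All thresholds and multipliers are illustrative assumptions.
    """
    weight = 1.0
    if likes >= viral_likes:
        weight *= 0.2   # popularity was the stronger harm predictor
    if n_tokens <= short_tokens:
        weight *= 0.5   # brevity is penalized less than popularity
    return max(weight, floor)

print(sampling_weight(likes=10_000, n_tokens=12))   # viral and short
print(sampling_weight(likes=3, n_tokens=400))       # long, low engagement
```

Weights like these would feed a weighted sampler over the refresh corpus; the study's finding that cleanup after the fact did not restore baselines argues for filtering before training rather than after.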

OpenHands Theory‑of‑Mind boosts Stateful SWE‑Bench from 18.1% to 59.7%

OpenHands’ Theory‑of‑Mind (ToM) module anticipates developer intent using a three‑tier memory and instruction improver, pushing Stateful SWE‑Bench from 18.1% to 59.7% solved in one report results graphic GitHub repo. That’s a huge jump on a hard, stateful benchmark.

The module logs cleaned sessions, analyzes user behavior, and builds a profile that guides clarification and next edits. It’s an agent‑side upgrade, not a new base model, so you can try it inside existing coding agents. Expect more decisive plans, fewer off‑target edits, and better long‑horizon fixes on repos with setup steps results graphic.

💼 AI monetization and macro signals

Revenue and adoption figures (OpenAI H1, Amazon Rufus), labor/valuation commentary (Hinton on replacement, Nasdaq P/E context) offer concrete adoption and ROI signals for leaders. Excludes Apple–Gemini Siri (feature).

OpenAI posts ~$4.3B H1 revenue, ~$2.5B cash burn; targets ~$13B for 2025

OpenAI’s first‑half 2025 revenue was about $4.3B with roughly $2.5B cash burn; cash and securities stood near $17.5B mid‑year, and the company signaled ~$13B full‑year revenue and ~$8.5B full‑year burn Reuters report. Following up on OpenAI revenue, which flagged the ~$13B target, today’s filing details add the burn and cash runway picture.

For engineering and finance leads, this means two things: unit economics are still dominated by training and inference costs, and runway is meaningful but not unlimited. Expect continued pricing, tiering, and efficiency pushes as the year closes and procurement cycles renew.
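The second-half figures implied by these numbers follow from subtraction. The year-end cash estimate below assumes no new financing, which is a simplifying assumption and not something the report states.

```python
# All figures in billions of USD, from the report above.
h1_revenue, h1_burn = 4.3, 2.5
fy_revenue_target, fy_burn_target = 13.0, 8.5
cash_mid_year = 17.5

h2_revenue = fy_revenue_target - h1_revenue
h2_burn = fy_burn_target - h1_burn
# Assumption: no new financing in the second half.
cash_year_end = cash_mid_year - h2_burn

print(f"H2 revenue ~${h2_revenue:.1f}B, H2 burn ~${h2_burn:.1f}B, "
      f"year-end cash ~${cash_year_end:.1f}B")
```

Hitting the full-year target implies second-half revenue of about $8.7B, roughly double the first half, alongside about $6.0B of second-half burn.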

Amazon says Rufus is on pace to add ~$10B in annual sales; 250M users in 2025

Amazon’s Rufus shopping assistant is credited with a ~$10B incremental annual sales run‑rate, reaching ~250M shoppers in 2025; users who engage with Rufus are 60% more likely to buy. Monthly active users rose ~140% YoY and interactions ~210%; Amazon’s ad revenue hit $17.7B in Q3’25 (+22% YoY) with DSP inventory expanding to Netflix, Spotify, and SiriusXM Fortune summary.

Why it matters: retail AI is monetizing at scale. If you’re building commerce copilots, these lift numbers justify deeper funnel integrations (reviews, Q&A, catalog embeddings) and A/Bs on assistant entry points.

NVIDIA: new U.S.–China trade terms reopen China; Huawei seen as formidable rival

Jensen Huang says the new Trump–Xi trade deal allows NVIDIA to compete more directly in China again, calling Huawei a serious competitor across systems, networking, and phones. He sizes China as a ~$50B market in 2025, growing to “a couple of hundred billion” by 2029 CEO remarks.

If you source GPUs or sell AI software in China, this hints at a renewed NVDA path to market—tempered by Huawei’s momentum. Watch export SKUs, interconnect limits, and local accelerator pricing as the next signals.

OpenAI partnership wave tied to ~30 GW of planned capacity; partners’ stocks jump

A roundup attributes four recent OpenAI infrastructure deals to roughly 30 GW of prospective data center capacity. The same card tallies stock value added since announcements: Oracle +36% ($253B), AMD +24% ($63B), Broadcom +10% ($151B), NVIDIA +4% ($169B) Deals summary.

For execs pitching AI infra tie‑ups, this is the market case: hyperscaler‑adjacent capacity plus platform alignment can re‑rate equities—if backed by real build schedules and contracted demand.

Valuations: Nasdaq at ~30× P/E vs S&P ~23× and mid‑caps ~16×

A Goldman‑sourced chart shows the Nasdaq 100 trading near ~30× earnings, compared to ~23× for the S&P 500 and ~16× for the S&P Midcap 400 P/E chart.

Translation for AI operators: the bar for margin expansion from AI is high and already priced into the mega‑caps. If your 2026–2027 plan hinges on AI uplift, communicate concrete gross‑margin paths (token cuts, caching, on‑device/offload) and durable revenue lines rather than “adoption stories.”

🗂️ Agentic parsing and finance RAG patterns

Concrete RAG building blocks: agentic chart digitization to tables (LlamaIndex) and a bank‑statement analyzer (YOLO + RAG) for NL queries. Excludes Apple–Gemini Siri (feature).

LlamaIndex adds agentic chart parsing to recover exact data series from images

LlamaIndex demoed an “agentic chart parsing” model in LlamaCloud that traces line charts to recover precise numeric series for downstream RAG and agent workflows feature thread. The example shows a plot converted into a clean table of values.

Why it matters: chart-to-numbers has been a stubborn OCR gap; turning visual plots in PDFs into structured facts unlocks reliable retrieval and aggregation. This arrives alongside LlamaIndex’s earlier agent plumbing—following up on native MCP search which made docs directly queryable by agents.

MaxKB open-source platform builds enterprise agents with LangChain RAG and workflows

MaxKB (GPL‑3.0) packages enterprise AI agents with LangChain‑powered RAG, multi‑modal inputs (text/image/audio/video), document crawling, and workflow orchestration, running against private or public LLMs project page. The project advertises ~19k stars, a Docker quick start, and admin tooling for knowledge bases and skills.

It’s a practical base if you need governed, on‑prem RAG with file upload, web crawl, and agent skills under one admin surface.

LangChain community tool turns bank PDFs into queryable finance via YOLO + RAG

A community project showcases an AI Bank Statement Analyzer that combines YOLO-based document layout, Unstructured preprocessing, vector embeddings, and LangChain RAG to answer natural‑language questions about personal finances from bank statements project page. The implementation outlines a three‑part pipeline (data extraction → embedding store → LLM + RAG) and targets entity extraction, sentiment, and summarization over messy PDFs.

For teams, this is a ready blueprint to stand up statement Q&A, spend categorization, and anomaly triage without bespoke parsers.

Synapse ships multi‑agent workflows for search, automation and data analysis

LangChain community’s Synapse platform presents prebuilt agents for smart web search, productivity tasks, and dataset analysis that coordinate through natural‑language instructions project page. It’s aimed at wiring multi‑agent task flows without starting from scratch.

Useful as a starter kit for teams exploring division‑of‑labor patterns in agents (searcher ↔ planner ↔ analyst) before hardening into production RAG stacks.

📊 Evals: Remote Labor Index and ARC Prize deadline

A Scale RLI snapshot shows Claude‑4.5 Sonnet ahead of GPT‑5 on automation rate; ARC Prize top score deadline looms with 1.4k teams active. Excludes Apple–Gemini Siri (feature).

OpenHands Theory‑of‑Mind lifts Stateful SWE‑Bench to 59.7% (from 18.1%)

OpenHands’ Theory‑of‑Mind (ToM) module for coding agents jumps Stateful SWE‑Bench from 18.1% to 59.7% by anticipating developer intent and managing state across steps ToM results. The implementation is available for use and modification in the public repo GitHub repo.

Stateful SWE‑Bench stresses persistence and real edit flows. The ToM layer builds a user/profile memory and refines vague instructions into concrete plans, elevating pass rates without swapping the base model. That’s practical for teams standardizing on different backends.

- Plug ToM‑SWE into your OpenHands setup and compare pass@1 on your key repos.

- Log intent extraction and diffs to watch for over‑confident edits; gate high‑risk changes with review.

Claude 4.5 Sonnet ranks #2 on Scale’s Remote Labor Index; GPT‑5 trails

Scale’s latest Remote Labor Index snapshot shows Claude 4.5 Sonnet at #2 on end‑to‑end freelance tasks with an automation score of 2.08, behind Manus at 2.50; GPT‑5 logs 1.67, ChatGPT agent 1.25, and Gemini 2.5 Pro 0.83 RLI chart. This follows automation rate, where we noted the top agent only automates ~2.5% of jobs.

The “score” is the percentage of work a human wouldn’t need to do, and it is still in the low single digits, so human-in-the-loop design remains prudent. Because RLI runs real marketplace projects, orchestration and tool wiring matter as much as base model choice.

- Route complex, multi‑step tasks to Claude 4.5 Sonnet or a Manus‑style stack; expect partial, not full, automation.

- Track your own “automation rate” per workflow to sanity‑check ROI against RLI’s scale.
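A per-workflow automation rate can be tracked with very little code. In this sketch each outcome records whether a deliverable was accepted with no human rework; that acceptance criterion and the workflow names are assumptions, and RLI's own grading may differ.

```python
def automation_rate(outcomes):
    """Percent of tasks completed with no human rework (True = fully automated)."""
    if not outcomes:
        return 0.0
    return 100 * sum(outcomes) / len(outcomes)

# RLI snapshot scores quoted above, for reference.
rli_scores = {"Manus": 2.50, "Claude 4.5 Sonnet": 2.08, "GPT-5": 1.67,
              "ChatGPT agent": 1.25, "Gemini 2.5 Pro": 0.83}

# Hypothetical internal workflows and outcomes.
my_workflows = {
    "invoice triage": [True, False, False, True],
    "landing-page build": [False, False, False, False],
}

for name, outcomes in my_workflows.items():
    print(f"{name}: {automation_rate(outcomes):.1f}% automated")
```

Narrow internal workflows will often score well above RLI's numbers because they are far less open-ended than marketplace projects, so compare trends over time rather than absolute values.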

📚 Theory & methods beyond safety

Non‑safety research highlights: relative scaling laws (who gains faster), RL‑driven creative chess composition, self‑referential subjective reports, and LeCun’s AGI architecture stance. Excludes biomedical/medical topics by policy.

AsyncThink teaches models to fork/join thoughts, 28% faster

Microsoft’s “agentic organization” trains an organizer policy to write Fork/Join tags, spawning worker thoughts and merging them when needed. It cuts latency by ~28% vs fixed parallel thinking and improves math accuracy by learning when to branch or wait, all in plain text without changing the base model paper overview.

Practical angle: try instruction‑level orchestration before heavier tool stacks; the critical‑path metric they use is a good proxy for real wait time.

FP16 beats BF16 to stabilize RL fine‑tuning and speed convergence

RL fine‑tuning often drifts because generation and gradient steps use different numeric engines. The paper shows using FP16 end‑to‑end (10 mantissa bits) reduces train‑infer mismatch vs BF16 (7 bits), stabilizes policy gradients across dense/MoE/LoRA models, and converges faster; FP32 inference helps BF16 but is ~3× slower paper abstract.

Actionable: if your RLFT runs wobble, try FP16 across training and inference before custom ratio tricks.

Reasoning curriculum: math RL habits transfer beyond math

A two‑stage curriculum—short math warm‑up plus RL that rewards planning, case‑trying, backtracking, and step checking—followed by mixed domains (code, tables, STEM) yields broad reasoning gains. A 4B model trained this way rivals some 32B baselines; ablations show both stages matter paper abstract.

Tip: pair verifiable rewards (exact matches, unit tests) with habit‑forming RL signals so the behaviors carry across tasks.

Theory‑of‑Mind agent boosts SWE‑Bench Stateful to 59.7%

OpenHands’ Theory‑of‑Mind module models developer intent and context with a three‑tier memory, lifting Stateful SWE‑Bench from 18.1% to 59.7% in reports. Code is public for replication and integration with OpenHands results chart GitHub repo.

Why it matters: explicit user modeling can be a bigger lever than raw model swaps for coding agents that must manage long sessions.

‘Brain rot’ paper: junk social data degrades LLM reasoning

Controlled continual‑pretraining experiments show that topping up with “junk” social posts (very popular/very short or clickbait/shallow) causes dose‑response declines in chain‑of‑thought reasoning, long‑context retrieval, and safety style metrics. Examples: ARC‑CoT 74.9 → 57.2; RULER‑CWE 84.4 → 52.3. Popularity predicted harm better than length paper abstract ArXiv paper.

Implication: treat web‑crawl curation as a safety problem, not just quality; avoid engagement‑biased corpora during continual pretrain.

Designing evals with relative scaling in mind

The relative scaling work implies you should measure two things: baseline gaps and gap trajectories with more compute. They found some English variants (e.g., Canada, Singapore) accelerated vs U.S., while Nigeria/Sri Lanka lagged—likely due to online representation paper overview.

If you localize products, schedule data and eval refreshes keyed to scaling milestones, not just model version bumps.

How to reward creativity: uniqueness + surprise + realism

Beyond the headline, the chess paper’s key mechanic is rewarding counter‑intuitive moves by contrasting shallow vs deep search, enforcing single best moves (uniqueness), and penalizing drift from real game states for aesthetics. Diversity filters prevent reward hacking paper summary.

Template you can reuse: replace chess engines with domain verifiers (solvers, linters, simulators) to shape creative outputs in your field.

LeCun: LLM scaling won’t reach AGI; push joint‑embedding and EBM

Yann LeCun argues that scaling LLMs alone won’t reach human‑level AI and advocates joint‑embedding architectures, energy‑based models, strong world models with model‑predictive control, and using RL only to correct planning failures talk summary, with a full session linked for details YouTube talk.

Why builders care: it’s a research roadmap that prioritizes controllable planning and perception over bigger next‑token predictors.

LLM curiosity study: self‑questioning (CoQ) lifts reasoning

Fudan’s curiosity framework shows LLMs score higher than humans on information‑seeking but lower on thrill‑seeking. A “Curious Chain‑of‑Question” prompt that forces self‑questioning beats standard CoT: Llama3‑8B 48.3% → 58.5% on DetectBench; Qwen2.5‑7B 54.6% → 64.8% on NuminaMath paper abstract.

Try CoQ when you want models to explore unknowns systematically before committing to an answer.
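A minimal sketch of a self-questioning prompt wrapper follows. The wording is an assumption written for illustration and is not the prompt used in the paper; `build_coq_prompt` and `n_questions` are hypothetical names.

```python
def build_coq_prompt(task: str, n_questions: int = 3) -> str:
    """Wrap a task so the model questions itself before answering."""
    if n_questions < 1:
        raise ValueError("n_questions must be at least 1")
    return (
        "You will solve the task below.\n"
        f"Step 1: Write {n_questions} questions about what is unknown, "
        "ambiguous, or easy to overlook in the task.\n"
        "Step 2: Answer each question using only the information given.\n"
        "Step 3: Use those answers to reason step by step.\n"
        "Step 4: State the final answer on a line starting with 'Answer:'.\n\n"
        f"Task: {task}"
    )
```

Send the returned string as the user message to whichever model you are testing, and compare accuracy against a plain chain-of-thought prompt on the same items.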

Kimi Linear Q&A adds NoPE and KDA design detail

Follow‑ups from the Kimi team clarify why SWA (sliding‑window attention) was dropped, where KDA (Kimi Delta Attention) shines (MMLU/BBH and RL math), and why NoPE (no positional encoding) was chosen to keep hybrid attention distributions stable in long‑context settings; FP32 was enabled for LM‑head inference in tests author Q&A. This follows the Kimi Linear release, which cut KV cache by ~75% and sped up 1M‑token decoding by up to 6×.

So what? More color on design trade‑offs helps teams decide between hybrid attention choices and positional encodings for >1M tokens.
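To see how a hybrid layout can yield a cut of that size, here is back-of-the-envelope arithmetic. It assumes that only one layer in four keeps a growing KV cache and that the other layers hold a fixed-size state small enough to ignore; the layer count, head count, and head dimension are hypothetical and use plain per-head K/V caching, not Kimi Linear's actual layer design.

```python
def kv_cache_bytes(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    seq_len: int,
    bytes_per_elem: int = 2,
) -> int:
    """Size of a standard KV cache: keys and values for every cached layer."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


def kv_reduction(total_layers: int, caching_layers: int, **dims: int) -> float:
    """Fraction of KV cache saved when only some layers keep a cache."""
    full = kv_cache_bytes(total_layers, **dims)
    hybrid = kv_cache_bytes(caching_layers, **dims)
    return 1 - hybrid / full


# Hypothetical 32-layer model, 1 in 4 layers caching, 1M-token context.
saved = kv_reduction(
    32, 8, n_kv_heads=8, head_dim=128, seq_len=1_000_000
)
print(f"KV cache saved: {saved:.0%}")  # prints "KV cache saved: 75%"
```

Because cache size is linear in the number of caching layers, the saving depends only on the layer ratio, not on context length or head dimensions.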

🎬 Creative AI: charts, animation, and ComfyUI pipelines

Media‑focused threads: an AI act charts on Billboard radio, Grok Imagine 30‑sec animation from a short prompt, ‘what’s real’ discourse, and ComfyUI dance/ATI demos. Excludes Apple–Gemini Siri (feature).

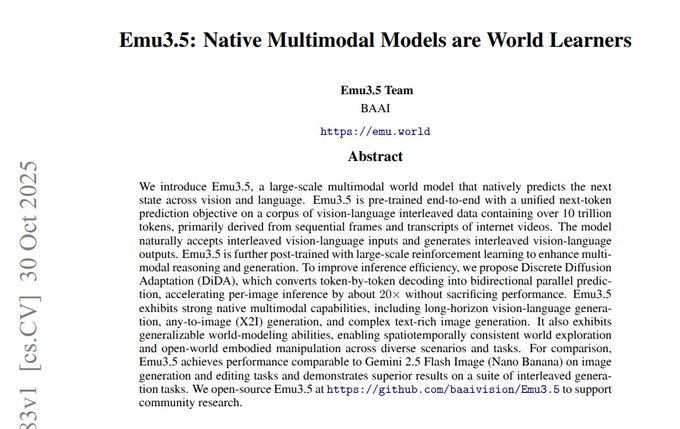

Emu3.5 details: native multimodal IO, RL post‑training, and 20× faster DiDA image decode

BAAI’s Emu3.5 frames image and language as one next‑token stream trained on 10T interleaved tokens, then fine‑tuned with RL for instruction following; a Discrete Diffusion Adaptation (DiDA) stage parallelizes per‑image refinement for ~20× faster inference while preserving quality paper summary. That combo targets long‑horizon visual stories, edits, and any‑to‑image with tighter character/layout consistency.

Grok Imagine turns a short prompt into a 30‑second animation in about 30 seconds

A creator shows @grok Imagine generating a 30‑second clip from a single-line prompt (“The bunny jumps on the lamp. Acrobatic jump”) in roughly real time prompt to video. For teams experimenting with quick social loops or concept previz, that turnaround compresses a day’s motion test into a minute-scale iteration.

ComfyUI flags Wan ATI support; community ships new control UIs

ComfyUI highlights that Wan ATI can now run directly inside ComfyUI, with community contributors publishing fresh, higher‑control nodes and UIs around it integration note, ui tools thread. If you’re building production image/video pipelines, this lowers friction to test Wan‑based looks alongside existing Comfy graphs.

Imagen 4 Ultra stills paired with Seedance 1.0 Fast video stoke realism debate

A side‑by‑side post contrasts a Google Imagen 4 Ultra image with a Seedance 1.0 Fast video from Freepik, arguing the next generation “won’t even know what’s real” side-by-side demo. For creative leads, it’s a reminder to set internal watermarking, disclosure, and review gates as photoreal video becomes trivial to produce.

Midjourney style‑ref trend: creators share sref 714886243 outputs

A circulating set shows what one Midjourney style reference ID (sref 714886243) yields across people, pets, and objects style reference set. If you rely on MJ for campaign looks, curating and versioning internal sref libraries is becoming a practical way to lock tone while still varying subjects.

🤖 Mobility and humanoid interfaces

Compact embodied updates in the stream: Toyota’s legged “Walk Me” wheelchair tackling stairs/rough terrain and a Chinese team’s expressive humanoid faces. No hardware pricing; purely capability signals.

Toyota demos ‘Walk Me’ legged wheelchair that climbs stairs

Toyota showed Walk Me, an autonomous robotic wheelchair with four leg‑like limbs that can navigate stairs and rough terrain capability note. For AI and robotics teams, this points to legged mobility and real‑world planning/control moving into assistive devices, where perception, footstep planning, and fail‑safe behaviors matter as much as payload and battery runtime.

Chinese team shows AI‑driven humanoid faces with lifelike expressions

China’s AheadForm claims humanoid heads now achieve human‑like facial expressions via AI, citing self‑supervised learning with reinforcement learning for control demo claim. If validated, this tightens the human‑robot interface: teams building social robots should watch for training data sources, actuator fidelity, and latency under closed‑loop expression tracking before treating it as production‑ready.

⚖️ OpenAI governance leaks and Anthropic merger talks

Community dissects deposition excerpts: reports that an Anthropic tie‑up and Dario‑as‑CEO were explored in November 2023; attorney sparring and commentary threads. Excludes safety/jailbreak papers.

OpenAI weighed Anthropic merger with Dario as potential CEO during November 2023 crisis

New deposition coverage says OpenAI’s board explored a rapid tie‑up with Anthropic after Sam Altman’s ouster in November 2023, with Dario Amodei potentially taking the CEO role at OpenAI; talks reportedly collapsed over governance and practical snags, and Amodei declined. The same recap notes a 52‑page memo on Altman’s conduct and nearly 700 staff threatening to quit during the five‑day meltdown. deposition recap deal summary

Why it matters: for executives and counsel, this frames how close consolidation between two frontier labs came to happening under stress, and how board trust and structure can trump technical alignment when the stakes are highest.

Lawyer exchanges and “secondhand knowledge” lines hint at evidentiary posture

A widely shared excerpt captures attorneys snapping at each other (“You be courteous and respectful”), underscoring the combative tone of the Musk v. Altman proceedings. Another page shows Ilya Sutskever acknowledging that parts of his understanding were “through secondhand knowledge,” a nuance that matters if claims are tested on primary evidence. lawyer exchange transcript detail

For leaders and analysts, these fragments help gauge how strong or fragile key assertions may be if they move from X threads to court findings.

Transcript line on who was “in charge of AGI” spotlights leadership framing

A highlighted deposition line—“The person in charge of what? … AGI.”—offers a glimpse into how responsibilities were described inside OpenAI during the turmoil. It’s a small but telling datapoint on how the org conceived ownership over its most sensitive workstreams. AGI quote

For org design folks, this hints at how titles and mandates around AGI can intersect with board oversight during crisis.

Deposition correction clarifies the Amodeis are siblings, not spouses

A brief but viral correction in the transcript confirms Daniela Amodei is Dario’s sister, not spouse—fixed on the record after an initial misstatement. transcript correction

It’s minor, but it illustrates how fast, messy testimony can fuel misreadings when governance stories are moving quickly.