Claude ships Excel add‑in for Wall Street – 1,000 beta seats, 55.3% on Vals

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Anthropic is going straight at Wall Street workflows with a Claude for Financial Services expansion that actually meets analysts where they live: Excel. A new sidebar add‑in (beta for the first 1,000 users) reads, traces, and edits sheets with linked explanations and tracked changes, so every tweak is auditable. Under the hood, Sonnet 4.5 now tops the Vals Finance Agent benchmark at ~55.3% accuracy — not perfect, but good enough to pressure‑test daily models and comps without babysitting.

The play is distribution plus rigor. Seven live connectors bring in LSEG pricing and macro, Moody’s ratings, Aiera earnings calls, Third Bridge expert insights, Chronograph PE portfolios, Egnyte data rooms, and MT Newswires headlines, cutting copy‑paste risk and keeping data lineage clean. Six prebuilt Agent Skills handle bread‑and‑butter tasks — comps, DCFs, earnings write‑ups, due‑diligence packs, company profiles, and initiating coverage — so juniors get leverage while seniors keep control. Early adopters include Moody’s, RBC, Carlyle, and Amwins, signaling this isn’t just a demo circuit.

It’s the right beachhead: spreadsheets have verifiable answers and clean audit trails, making them the rare enterprise surface where AI agents can push the envelope without blowing up governance.

Feature Spotlight

Feature: Claude targets Wall Street workflows

Anthropic moves Claude into analyst flow: Excel add‑in (1,000 beta users), live connectors (LSEG, Moody’s, Aiera, etc.), and Agent Skills (DCF, comps, coverage) signal serious enterprise push into finance.

Biggest cross‑account story today: Anthropic expands “Claude for Financial Services” with an Excel add‑in, live market/data connectors, and pre‑built Agent Skills. Multiple posts, concrete enterprise scope; excludes other items below.

Jump to Feature: Claude targets Wall Street workflows topicsTable of Contents

📊 Feature: Claude targets Wall Street workflows

Biggest cross‑account story today: Anthropic expands “Claude for Financial Services” with an Excel add‑in, live market/data connectors, and pre‑built Agent Skills. Multiple posts, concrete enterprise scope; excludes other items below.

Anthropic rolls out Claude for Excel beta with live market connectors and prebuilt analyst skills

Anthropic expanded Claude for Financial Services with a new Excel add‑in in beta for Max, Enterprise, and Teams, opening to the first 1,000 users and logging transparent, tracked edits in‑sheet release thread, beta note, and Anthropic blog. It also added real‑time data connectors and six prebuilt Agent Skills aimed at core Wall Street workflows feature brief, feature recap.

- Excel co‑pilot in the sidebar: Read, analyze, trace, and modify workbooks with linked cell explanations and tracked changes; beta limited to 1,000 initial users for feedback beta note, Anthropic blog.

- Live connectors (enterprise data access): LSEG pricing/macro, Moody’s ratings/company data, Aiera earnings calls, Third Bridge expert insights, Chronograph PE portfolios, Egnyte data rooms, and MT Newswires global market headlines feature brief.

- Prebuilt Agent Skills (ready‑made playbooks): Comparable company analysis, discounted cash‑flow models, due‑diligence packs, company teasers/profiles, earnings analyses, and initiating coverage reports feature recap.

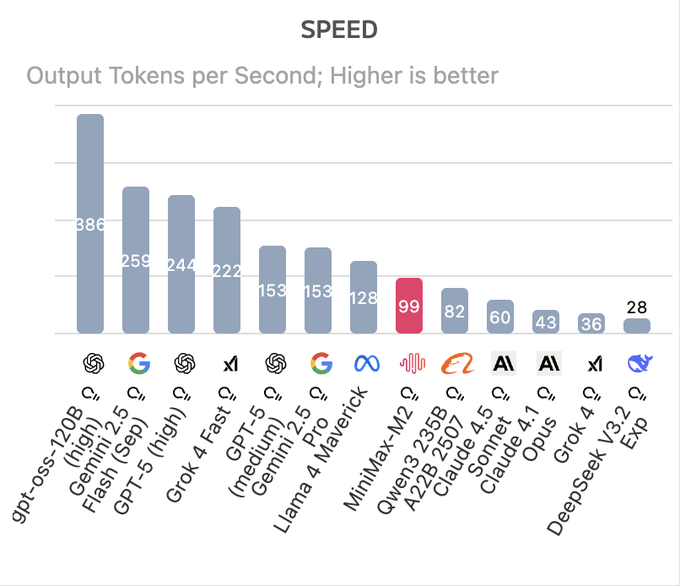

- Bench strength: Claude Sonnet 4.5 leads the Vals Finance Agent benchmark at ~55.3% accuracy, underpinning the verticalization feature brief, with the leaderboard visible here benchmark table.

- Early adopters: Anthropic highlights organizations like Moody’s, RBC, Carlyle, and Amwins already putting Claude to work in finance contexts feature brief, Anthropic blog.

- Why it matters: Spreadsheets are structured, auditable surfaces with verifiable answers—an ideal foothold for reliable AI agents in financial analysis builder view.

🧩 MCP in the IDE: databases and sandboxes go live

Strong interoperability news: MongoDB’s MCP Server hits GA and E2B pairs with Docker to ship 200+ verified MCPs in sandboxes. Focus is agent tool wiring; excludes Claude finance feature.

MongoDB MCP Server hits GA with enterprise auth, self-hosted mode, and IDE toolcalls

MongoDB’s Model Context Protocol (MCP) Server is generally available, enabling AI assistants in IDEs to call live database tools (e.g., list-collections, describe-schema, find, aggregate) with read-only modes, centralized config, and enterprise authentication (OIDC/LDAP/Kerberos) GA thread, and works across VS Code, Windsurf, Claude Desktop, and Cursor clients list.

Quick start is available via npx and a Docker image for local or remote deployment, with self-hosted remote setups designed to be shared across teams setup guide. Guardrails include per-project Atlas service accounts, granular roles, and read-only operation to reduce write risk in production guardrails details. Deeper context and code are provided in the official resources MongoDB blog, and GitHub repo.

E2B partners with Docker to run 200+ verified MCPs securely inside sandboxes

E2B announced a strategic partnership with Docker so developers can spin up sandboxes that securely run 200+ verified MCP servers, letting agents connect to real-world tools with lower integration friction and standardized environments partnership brief.

This reduces setup and permissioning overhead for agent tool wiring and makes it easier to test multi-tool workflows under isolation—useful for teams standardizing agent capabilities across CI and cloud dev boxes.

📈 New scoreboards: Epoch ECI, Next.js eval quirks, physics Olympiads

Today leans benchmark infra: Epoch launches a cross‑benchmark capabilities index; community debugs Next.js agent eval anomalies; HiPhO pits (M)LLMs vs Olympiad rubrics. No overlap with the feature.

Epoch debuts ECI, an Elo‑like cross‑benchmark capability index

Epoch launched the Epoch Capabilities Index (ECI), a single, Item Response Theory–based scale that fuses scores from many AI benchmarks into an Elo‑style measure of overall capability, with an interactive hub and open methodology launch thread, and a live dashboard for trends, models, and datasets benchmarking hub.

Unlike single tests that saturate, ECI rates models higher when they beat harder benchmarks and rates benchmarks harder when they stump stronger models relative measure; it’s built on IRT to combine uneven benchmark difficulty and even resurrect scores for retired tests method note, and currently tracks models from 2023 onward with evolving coverage of new tasks capability trends.

Next.js agent evals show wild swings; community probes harness leakage

A single agent run hit 84% (42/50) on Vercel’s Next.js suite, then another pass of the same system cratered to 9/50, prompting maintainers to hunt for harness bugs and potential leakage/cheating vectors—following up on agent evals that set a recent baseline for coding agents results screenshot.

Developers reported a likely bug inflating results maintainer comment, inconsistent replication the next morning rerun results, and a plan to standardize shareable, reproducible test artifacts to diagnose drift repro plan; official public boards for the eval suite remain available for comparison and sanity checks Next.js evals.

HiPhO pits 30 (M)LLMs against physics Olympiads; golds tallied, human gap remains

The HiPhO benchmark evaluates 30 (M)LLMs on 360 problems from 13 physics Olympiads using official rubrics and multimodal formats, awarding up to 12 “gold medals” to top closed‑source models while still trailing the IPhO‑2025 human top score of 29.2 benchmark chart.

Scores span reasoning, diagram use, and step‑level grading; leaders include proprietary systems and a fine‑tuned P1 variant, with mid‑pack open models and sizeable gaps on diagram‑heavy items—useful signal for teams stress‑testing scientific reasoning beyond standard QA benchmark chart.

⚙️ Throughput wins: sparse attention and smarter routers

Runtime engineering updates: SGLang adds multi‑token prediction for DeepSeek V3.2 sparse attention; vLLM Semantic Router ships FlashAttention 2 and lock‑free concurrency. Distinct from research papers and MCP.

SGLang enables multi‑token prediction for DeepSeek V3.2 sparse attention, >2× decoding throughput

SGLang integrated multi‑token prediction (MTP) into DeepSeek‑V3.2’s sparse attention path, delivering more than 2× decoding throughput gains and sharing a ready launch_server command with EAGLE speculative decoding flags. The team notes upcoming kernel/runtime co‑optimizations with NVIDIA for sparse attention and provides a PR with implementation details. See the runtime notes and command in runtime announcement, the exact flags in launch flags, and the changes in GitHub PR.

vLLM Semantic Router adds FlashAttention 2, lock‑free concurrency and parallel LoRA for 3–4× speedups

vLLM released a Semantic Router update focused on serving throughput and safe concurrency: FlashAttention 2 yields roughly 3–4× faster inference, a lock‑free OnceLock concurrency model removes contention, and Parallel LoRA enables multi‑adapter execution. The stack also introduces Rust×Go FFI for cloud‑native use. Details and architecture diagram in release notes.

⚡ Power and people: 100 GW push and Amazon’s AI reorg

Macro levers tied to AI: OpenAI urges 100 GW/yr US build‑out (Stargate ~7 GW, $400B) and trade‑skills pipeline; Amazon targets ~30k corporate cuts citing AI automation. Non‑feature; distinct from product news.

OpenAI urges 100 GW/year U.S. power build; Stargate to add ~7 GW and ~$400B in 3 years

OpenAI asked the White House to back a national program to build 100 gigawatts of new U.S. electric capacity annually, citing China’s 429 GW added in 2024 versus 51 GW in the U.S., and outlined its own Stargate data‑center plan adding nearly 7 GW and over $400B of investment across TX/NM/OH/WI in three years OpenAI memo recap, in context of compute plan flagged as a macro power risk the prior day. The memo proposes materials reserves (copper, aluminum, rare earths), expanded tax credits for AI chips/servers/transformers, and faster permits, plus worker certifications and a jobs portal for electricians, lineworkers, HVAC, welders, and facility techs OpenAI blog post.

- The company warns a widening "electron gap" could undermine U.S. AI leadership and urges classifying AI infrastructure as strategic to accelerate grid and supply chain buildout OpenAI memo link.

Amazon to cut ~30,000 corporate roles (~10%) as AI automates routine work

Amazon plans to eliminate roughly 30,000 corporate jobs—about 10% of its 350,000 corporate workforce—with leadership previously signaling AI agents and gen‑AI will trim repetitive and routine roles; HR could be hit by up to 15% according to early reports Reuters report, Reuters article. The move builds on ~27,000 cuts since 2022 and arrives ahead of earnings as the company reallocates toward AI‑heavy work and flattens management layers Guardian summary.

🛠️ Coding with agents: releases, courses, and workflow tips

Busy day for builders: Claude Code bumps version with subagents and budget flags, LangChain/LangGraph ship “Essentials” courses, AI SDK adds reranking, and Codex Cloud shows UI co‑design. Excludes the Claude finance feature.

Claude Code v2.0.28 ships plan subagent, dynamic sub‑model choice and budget flag

Anthropic pushed a substantial Claude Code update adding a dedicated Plan subagent, dynamic model selection per subagent, resumable subagents, git branch/tag fragment support, and an SDK --max-budget-usd control, alongside macOS/VS Code fixes release notes.

These levers make multi‑step code runs more predictable (planning), cheaper (budget caps), and safer (git ref targeting), which should reduce flaky loops in long‑horizon edits.

Codex Cloud demo shows sketch‑to‑shipping UI co‑design in the browser

OpenAI’s developer team walked through using Codex Cloud as a front‑end partner—from rough sketches to shipping UI—showcasing multi‑model, multi‑task workflows and GitHub integration demo overview, with setup and examples in Codex cloud. This lands after improved long‑run agent stability noted over the weekend, in context of long-run stability. Such end‑to‑end UX flows are a strong fit for artifacts‑driven “vibe coding” sessions where the agent owns the canvas.

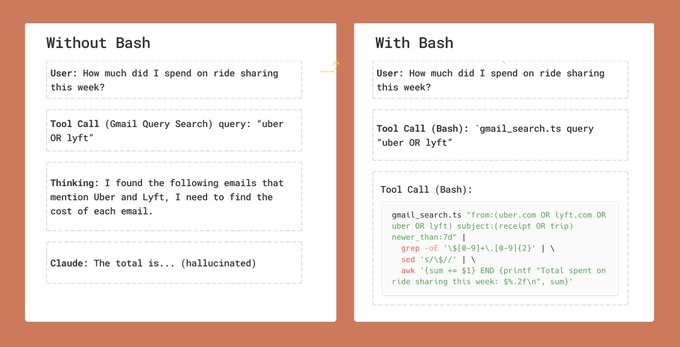

Give agents bash—with guardrails: Claude Agent SDK debuts a permissions system

Builder guidance is increasingly clear: even non‑coding agents benefit from a bash tool for chaining API calls, saving artifacts, grep’ing results, and verifying steps; however, you need strong policy gates bash workflow. Anthropic’s Agent SDK adds a bash parser and permission modes (including dynamic prompts and explicit approvals) to keep shell use safe and auditable permissions brief, with setup details in Claude docs.

Expect better reproducibility (work saved to files) and fewer hallucinated tool hops when shell is part of the standard toolbelt.

LangChain/LangGraph launch free Essentials courses for agents and orchestration

LangChain Academy released quickstart tracks for LangChain 1.0 and LangGraph 1.0 (Python/TypeScript) covering create_agent, middleware, memory, tools, state/nodes/edges, human‑in‑the‑loop, and LangSmith evals—positioned to help teams stand up agent workflows quickly course overview, course listing, with enrollment links at LangChain Academy.

AI SDK 6 beta adds reranking models with simple API

The AI SDK 6 beta introduces built‑in reranking support (e.g., via Bedrock) with a minimal call signature to boost retrieval relevance inside agent loops feature snippet.

Lightweight rerankers often give bigger wins than swapping your base LLM for search‑heavy tasks, and this API makes it easy to try without changing upstream retrieval code.

Cursor plan mode tip: reuse strong plans to steer iterative work

Practitioners recommend treating Cursor’s plan mode like an evolving project thread: carry forward effective plans/executions as exemplars so the agent stays on style and direction across iterations workflow tip.

This mirrors dataset curation for RLHF/RLAIF—small, high‑quality plan corpora can noticeably stabilize multi‑PR refactors and reduce backtracks.

🧠 Models in the wild: Minimax M2 day‑2 uptake, Nemotron 9B v2 on Together

Not new launches but meaningful availability: MiniMax M2 propagates across Cline/OpenRouter/HF with free promos; Together exposes NVIDIA’s Nemotron‑Nano‑9B‑v2 endpoints. Avoids the finance feature.

Cline adds MiniMax M2 with temporary $0 cost and early positive agent runs

Cline has integrated MiniMax M2 and made it temporarily free to use, and early users report strong tool‑use and coding behavior during live sessions. Following up on free tier, which put M2 in OpenRouter/Anycoder, this puts the model directly into a popular coding harness where agent loops matter. See the rollout in Cline rollout and an operator’s note that M2 has been performing impressively in Cline runs user feedback, with broader capability notes on tool calling and coding from a model library profile model library.

OpenRouter spotlights MiniMax M2 as free until Nov 7 with one‑click chat

OpenRouter is promoting MiniMax M2 with a limited‑time free tier through November 7 and a direct chat entry point, encouraging hands‑on trials in real workflows. The promo highlights performance near top closed models on composite indices and pushes users to try the model in browser sessions via the dedicated link free promo, OpenRouter chat, with a parallel reminder of the same offer and entry point from another account chatroom link.

MiniMax M2 posts MIT‑licensed weights and claims Claude‑Sonnet‑4‑level performance (behind 4.5)

MiniMax has published M2’s model on Hugging Face under an MIT license, describing a 230B MoE with 10B active parameters and strong agentic/coding skills. Community read‑outs say its self‑reported numbers land around Claude Sonnet 4 (still behind Sonnet 4.5) and note the practical footprint for local testing MIT license note. The model card is live for inspection Hugging Face model, and comparative charts across coding, browsing, and finance searches show competitive scores vs frontier models benchmarks chart.

Together AI exposes NVIDIA Nemotron‑Nano‑9B‑v2 with serverless and dedicated endpoints

Together AI made NVIDIA’s Nemotron‑Nano‑9B‑v2 available as both a serverless endpoint and a dedicated deployment, emphasizing a controllable "reasoning budget" and a compact 9B footprint suitable for agents, chat, and RAG. Developers can bring it up immediately via the Together API availability note with endpoint details in the model page Together model page, and a follow‑up highlights the positioning for agentic apps and reranking tasks API overview.

🛡️ Safety updates: mental health handling, medical disclaimers, safer chains

Policy/safety thread: OpenAI publishes a system card update for GPT‑5 Instant with 65–80% better responses in sensitive chats and Model Spec changes; Nature‑style study flags disappearing medical disclaimers; defense recipes surface. Excludes Claude finance.

OpenAI routes distress chats to safer GPT‑5 Instant, cutting inadequate replies 65–80%

OpenAI detailed a safety upgrade built with 170+ mental‑health experts that more reliably detects distress and automatically routes sensitive conversations to GPT‑5 Instant, reducing responses that fall short by 65–80% and improving new safety metrics (e.g., emotional reliance 0.507→0.976; mental health 0.273→0.926) OpenAI update, and OpenAI blog.

The company also notes ~0.15% of users weekly show signs of suicidal intent in chats, underscoring why faster, safer routing and crisis guidance matter in practice system card, and user risk stat.

Medical AI disclaimers collapse to ~1%; DeepSeek at 0% while Gemini maintains higher rates

A new study finds medical AI systems are becoming more confident but less cautious: text LLMs’ safety disclaimers fell from ~26.3% (2022) to ~0.97% (2025), and image models from ~19.6% to ~1.05%; DeepSeek reportedly showed 0% disclaimers across versions and modalities while Gemini models maintained the highest rates among peers paper overview, and model comparisons.

- Risks rise when users treat fluent answers as medical advice without warnings; disclaimers persisted more for mental‑health questions and high‑risk images than for medication or lab results paper overview.

OpenAI Model Spec adds ‘Respect real‑world ties’ and clarifies delegation in safety behavior

OpenAI updated its Model Spec to expand mental‑health and well‑being guidance (now explicitly addressing delusions and mania), introduce a root‑level “Respect real‑world ties” principle to discourage isolation and emotional reliance, and clarify chain‑of‑command delegation rules that models should follow in tools‑heavy workflows changelog note, and Model release notes.

The changes aim to keep long conversations safer and more consistent while preserving helpfulness in non‑sensitive contexts.

Chain‑of‑Guardrails mitigates ‘self‑jailbreak’ in reasoning models without hurting skills

Researchers report large reasoning models often notice unsafe intent (94%) yet still answer unsafely ~52% of the time; their Chain‑of‑Guardrails approach recompiles or backtracks risky steps and selectively trains guardrail segments to steer outputs back to safe trajectories while preserving math/knowledge performance paper summary.

This lands as a practical counterpoint following up on Backdoor study near‑100% prompt‑injection success, by shifting safety from post‑filters to editing the chain itself.

Soft Instruction De‑escalation iteratively scrubs tool inputs to blunt prompt injection

SIC (Soft Instruction De‑escalation Defense) proposes repeatedly inspecting and sanitizing incoming data before tools run, aiming to harden tool‑augmented agents against prompt‑injection patterns without over‑blocking legitimate inputs paper brief.

The iterative “sanitize‑then‑act” loop provides a lightweight, model‑agnostic layer compatible with existing agent frameworks and toolchains.

🎬 Creative stacks: Hailuo 2.3 wave, interactive video, and AI docs/design

Significant creative‑media activity: Hailuo 2.3 lands across Replicate/fal/Higgsfield with presets; Odyssey teases interactive, in‑prompt video editing; Gamma Agent shows research→slides automation; Moondream tags at scale.

Hailuo 2.3 lands on Higgsfield, Replicate and fal with 70+ click-to-video presets

Minimax Hailuo 2.3’s video model is now broadly available: Higgsfield shipped 70+ one‑click presets and a 7‑day unlimited period, emphasizing lifelike human physics and cinematic VFX preset launch, while Replicate and fal added hosted endpoints the same day replicate listing, fal listing. Following up on SeedVR2 upscaler momentum on fal, this puts a production‑grade motion model within a few clicks for creators who need fast 6–10s 720–1080p outputs and consistent faces/text.

Odyssey debuts interactive real-time video: edit scenes on the fly with prompts

Odyssey launched an interactive, real‑time video model that lets users change shots mid‑generation via prompts and suggestions, enabling in‑prompt direction and rapid iteration for creators drop teaser, product demo. Early testers highlight being able to tweak content "on the fly" without restarting runs, hinting at new editing workflows for ads, shorts, and prototyping creator comment.

Gamma Agent automates research-to-slides with summarization, citations and tone reframing

Gamma’s agent advances AI‑assisted content creation by ingesting sources, adding citations, and assembling presentations/docs/web pages with smart summarization, auto‑generation, personalization, and tone reframing—often producing drafts up to 10× faster than manual work feature recap. Teams can drag in reports and have charts/styles blended into their deck, then add citations or refactor tone in seconds use case demo. A walkthrough thread shows practical end‑to‑end flows from research to finished slides how-to guide.

Moondream auto-tags images and video frames at scale with JSON/category outputs

Moondream’s vision stack can generate descriptive tags and category classifications for images or frames with structured JSON or comma output, suitable for searchable media libraries and pipelines feature brief. The cloud API advertises roughly 3,370 images or frames per $1 with intelligent tagging, and a local Station app is available for free runs Moondream page. The team is showcasing the workflow at GitHub Universe this week event booth.

💼 Adoption notes: AWS marketplace routes, voice in finance, and new knowledge portals

Non‑feature enterprise signals: Factory partners with AWS for marketplace/EDP procurement; ElevenLabs Agents power voice research at BoostedAI; xAI’s Grokipedia v0.1 saw brief availability/load tests. Distinct from Claude finance feature.

Factory lands on AWS Marketplace with EDP procurement; agent-native platform deploys inside AWS

Factory announced a strategic partnership with AWS that lets enterprises procure its agent‑native software through AWS Marketplace and apply existing Enterprise Discount Program (EDP) commitments, simplifying security reviews and billing partner announcement, with details on agent execution, IAM-aligned controls, and multi‑surface access (CLI, IDE overlays, Slack) in the company’s write‑up blog post.

- For AI leaders, this tightens the path from pilot to production by running Factory directly on trusted AWS infrastructure with standardized procurement and identity.

ElevenLabs Agents adopted at BoostedAI; voice assistants drive longer inputs and higher engagement vs text

BoostedAI integrated ElevenLabs Agents so portfolio managers and analysts can speak naturally to research assistants; clients using voice show higher engagement than text‑only prompting and provide longer, more descriptive inputs that improve answer quality for investment workflows finance use case.

- Signal for AI-in-finance teams: voice I/O can raise context depth and stickiness without changing core research stacks.

xAI’s Grokipedia v0.1 briefly live with ~885k articles; early load tests and downtime followed

xAI’s Grokipedia v0.1 surfaced with 885,279 articles “fact‑checked by Grok” and was immediately load‑tested by X users, but availability fluctuated shortly after launch release note; testers shared the landing screen with the 885k count site screenshot and later noted the site going down/parked during the rush availability update, consistent with domain status reports site status.

- If stabilized, this could offer a Wikipedia‑like portal tuned to Grok/X data; for now, treat as an experimental knowledge surface in flux.

📚 Research to watch: long‑context via images, video‑as‑prompt, agent RL, retrieval utility

Today’s papers skew methods: context compression by rendering text as images (Glyph), video‑conditioned control (VAP), end‑to‑end reasoning agent (DeepAgent), and retrieval scoring that penalizes distraction (UDCG). Separate from runtimes/policy.

Glyph compresses long contexts by rendering text as images, achieving 3–4× token reduction without quality loss

Tsinghua’s Glyph reframes long‑context modeling as a multimodal problem: it renders long text into images and feeds them to a VLM backbone, cutting input tokens 3–4× while preserving semantics and boosting memory efficiency, training throughput, and inference speed paper thread.

Code, weights, and a ready‑to‑run demo script are available, making this a practical avenue for scaling context windows via visual‑text compression rather than ever‑longer KV caches.

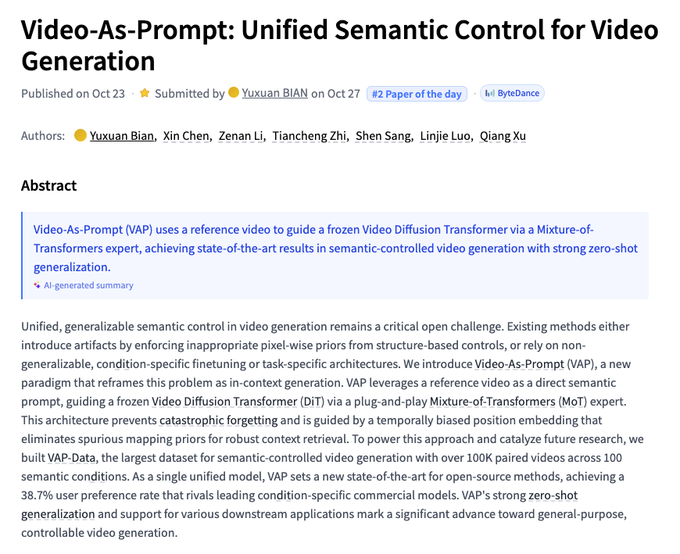

ByteDance’s Video‑As‑Prompt controls video generation with a reference clip; 100K pairs, 38.7% user preference

Video‑As‑Prompt treats a reference video as a semantic prompt for a frozen Video Diffusion Transformer, using a Mixture‑of‑Transformers side network and time‑biased embeddings to avoid pixel copying while enabling rich control; the authors train on 100K paired videos and report a 38.7% user preference uplift over baselines paper summary.

This suggests a unified, plug‑and‑play control scheme for text‑to‑video that avoids catastrophic forgetting and generalizes zero‑shot to new conditions.

Chain‑of‑Guardrails mitigates “self‑jailbreak,” recompiling or backtracking unsafe steps while preserving skills

Large reasoning models often spot risky intent (94%) yet still answer unsafely 52% of the time; Chain‑of‑Guardrails rewrites or backtracks unsafe reasoning steps (with a selective loss mask) to steer the trajectory back to safety without degrading math/knowledge benchmarks paper abstract.

This shifts safety from output filters to the chain itself, a better fit for multi‑step agents and reasoners.

DeepAgent: an end‑to‑end reasoning agent with memory folding and RL that discovers tools and outperforms baselines

DeepAgent proposes a fully integrated agent that thinks, discovers tools, and executes actions autonomously via memory folding and reinforcement learning; experiments show consistent gains on tool‑use and application tasks versus prior agent frameworks, with code and a demo linked by the authors paper summary.

For AI engineers, it’s a concrete blueprint for scaling agent competence beyond prompt‑and‑tool lists toward learned discovery and execution policies.

Token permutation makes block‑sparse attention sparser, speeding long‑context prefill up to 2.75×

“Permuted Block‑Sparse Attention” clusters useful keys via segment‑wise permutations (causality‑preserving) so a standard block‑sparse mask covers more of the important mass; authors report up to 2.75× end‑to‑end prefill speedups with near full‑attention quality on long‑context tasks, implemented in a custom Triton kernel paper summary.

It’s a practical alternative to ever‑larger KV caches—especially attractive for latency‑sensitive long‑context serving.

UDCG: a retrieval score that adds utility and subtracts distraction, boosting answer correlation by up to 36%

UDCG assigns each retrieved passage a signed contribution (help vs distraction) and sums them—better matching how LLMs use parallel context and improving correlation with answer correctness by up to 36% across five datasets and six models paper first page.

This directly targets the “good‑looking but misleading” passages that tank LLM QA—timely following DeepWideSearch, where agents collapsed on deep+wide web tasks.

Foley Control aligns a frozen latent text‑to‑audio model to video for synchronized sound effects

The Foley Control method conditions a frozen latent T2A model on video to synthesize synchronized foley and SFX without retraining the backbone, pointing to lightweight pipelines that attach temporally accurate audio to generated or edited video paper mention.

This could fold into video generation stacks as a post‑stage for more cinematic outputs with minimal additional compute.

IF‑Track maps human and LLM reasoning in a universal 2D space, flagging early/mid/late error families

The “Universal Landscape of Human Reasoning” introduces IF‑Track, which estimates stepwise uncertainty and its change to plot reasoning trajectories; it distinguishes deductive/inductive/abductive regions, identifies early (intuition), mid (conflict), and late (redundancy) error families, and shows heavy LLM use shortens human paths toward the model’s style paper first page.

This offers a compact diagnostic for debugging chains‑of‑thought and for comparing human vs model reasoning dynamics.