GPT‑5.1 Codex hits 70.4% on SWE‑Bench – ~26× cheaper

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Benchmarks moved again: GPT‑5.1 Codex grabbed the SWE‑Bench lead at 70.4%, edging Claude Sonnet 4.5 (Thinking) at 69.8% while running about $0.31 per test versus $8.26—roughly 26× cheaper. Vals AI also reports GPT‑5.1 topping its Finance Agent benchmark by 0.6%, with LiveCodeBench performance jumping from 12th to 2nd.

If you fix real repos or wire fintech flows, that cost/perf mix argues for routing more traffic to 5.1 Codex and letting behavior, latency, and price steer the rest. Artificial Analysis’ latest run nudges GPT‑5.1 to a 70 on its Intelligence Index and shows 81M output tokens vs 85M for GPT‑5, trimming estimated run cost to ~$859 from ~$913.

Don’t hand it the keys to low‑level optimization, though. A new ML/HPC leaderboard puts expert humans at 1.00× speedup while current LLM agent systems manage ≤0.15×, so keep humans in the loop for performance tuning. And if latency matters, retrieval+classifier pipelines are winning: DeReC beats rationale‑generating LLMs for fact‑checking with ~95% less runtime.

Feature Spotlight

Feature: Gemini 3 signals hit critical mass

Gemini 3 appears imminent: Sundar Pichai teases a Nov‑22 window; “Gemini 3.0 Pro” strings show up in Enterprise model selectors; “Riftrunner” shows in arenas. If confirmed, Google’s distribution could reset model choice for many teams.

Multiple independent sightings and CEO hints point to an imminent Gemini 3 release; today’s sample centers on strings in enterprise UIs, a “Riftrunner” label in arenas, and market chatter. Excludes other model news, which is covered separately.

Jump to Feature: Gemini 3 signals hit critical mass topicsTable of Contents

✨ Feature: Gemini 3 signals hit critical mass

Multiple independent sightings and CEO hints point to an imminent Gemini 3 release; today’s sample centers on strings in enterprise UIs, a “Riftrunner” label in arenas, and market chatter. Excludes other model news, which is covered separately.

“Gemini 3.0 Pro” spotted inside Enterprise agent selector

Multiple screenshots show a “Gemini 3.0 Pro” label appearing in the Gemini Enterprise agent model picker, though access remains blocked for general users sighting summary. Devtools strings align with a production‑bound model option, strengthening the case that final wiring is underway devtools strings, with write‑ups documenting recurring sightings across builds TestingCatalog post.

For AI platform owners, this is the clearest enterprise‑grade breadcrumb yet: start drafting routing and fallbacks so you can A/B 3.0 Pro versus your current defaults on day one.

Sundar boosts 69% Polymarket odds for Gemini 3 by Nov 22

Google’s CEO amplified a Polymarket contract showing a 69% chance Gemini 3.0 ships by Nov 22, which the community reads as a deliberate signal to expect a near‑term launch CEO hint. A separate roundup repeats the same read, framing Sundar’s post as soft confirmation of timing market odds.

So what? Leaders and PMs can prep eval sandboxes and rollout comms now, especially if you plan announcements at or right after AIE Code week.

‘Riftrunner’ resurfaces in arenas and tools as a likely Gemini 3 tag

A “Riftrunner” model id keeps appearing in design arenas and developer consoles, with testers describing it as a larger, more capable variant that matches expected Gemini 3.0 Pro behavior devtools console and outperforming peers on an SVG rendering comparison in creator tests svg comparison, following up on Riftrunner early strings and arena probes.

If you run eval harnesses, add a placeholder lane for Riftrunner so you can drop in the model id the moment it’s routable.

Timing chatter converges on “next week,” likely during AIE Code

Several well‑followed accounts say Gemini 3 is landing next week, with one tying the reveal to the AI Engineer Code event where Google has launched onstage before timing claim. Posts narrow it further to early week, even “likely on Tuesday,” reinforcing scheduling urgency next week call tuesday hint, while broader sightings threads keep stoking the countdown speculation post.

Practical move: line up side‑by‑side prompts and traffic shaping such that switching a portion of user flows to 3.0 takes minutes, not days.

Nano‑Banana 2 buzz suggests refreshed image stack alongside Gemini 3

Creators report strong results from “Nano‑Banana 2,” noting more realistic images, better text rendering, and accurate reflections—pointing to a revamped Google image stack that could ship alongside Gemini 3 creator take. Others explicitly pair Nano‑Banana 2 mentions with Gemini 3 timing chatter paired mention, with more output dumps circulating outputs thread and third‑party workflows already wiring “nano banana” as a selectable node workflow example.

If your product leans on generative visuals, budget time to re‑shoot style guides and update safety filters—the output distribution may shift.

📊 Benchmarks: GPT‑5.1 Codex tops SWE‑Bench; finance agent SOTA

Strong day for public evals: GPT‑5.1 Codex edges Sonnet 4.5 (Thinking) on SWE‑Bench at a fraction of cost; GPT‑5.1 leads a finance agent benchmark; meta‑analysis adds token/price deltas. Excludes Gemini 3 coverage (see Feature).

GPT‑5.1 Codex tops SWE‑Bench at 70.4% and ~26× cheaper than Sonnet 4.5

OpenAI’s GPT‑5.1 Codex leads SWE‑Bench with 70.4% accuracy versus Claude Sonnet 4.5 (Thinking) at 69.8%, while costing ~$0.31 per test vs ~$8.26 (~26× cheaper) benchmarks table. Following up on launch-top5 where new code leaderboards surfaced, this run confirms 5.1 Codex as the top value pick for repo‑level bug fixing, with latencies shown alongside the cost deltas SWE‑Bench note, and the public board now reflectable in Vals AI’s pages benchmarks page.

GPT‑5.1 leads Vals AI Finance Agent Benchmark by 0.6%

Vals AI reports GPT‑5.1 sets a new state of the art on its Finance Agent Benchmark, edging Claude Sonnet 4.5 (Thinking) by 0.6% on goal completion, with additional gains on LiveCodeBench (jumping from 12th to 2nd) and minor improvements on MMMU/GPQA/IOI finance benchmark post, follow‑up details. For teams prototyping agentic fintech workflows, this narrows the top tier to 5.1 vs Sonnet 4.5, and suggests routing by tool‑use behavior and cost may matter more than small headline margins.

Artificial Analysis: GPT‑5.1 +2 on Intelligence Index; 81M vs 85M output tokens

Artificial Analysis’ latest run gives GPT‑5.1 a score of 70, +2 over GPT‑5 at similar reasoning effort, driven largely by TerminalBench improvements; it also used 81M output tokens vs 85M for GPT‑5, cutting run cost to ~$859 from ~$913 index recap. The live dashboard breaks down per‑eval deltas and cost/latency tradeoffs useful for routing and budgeting analysis site.

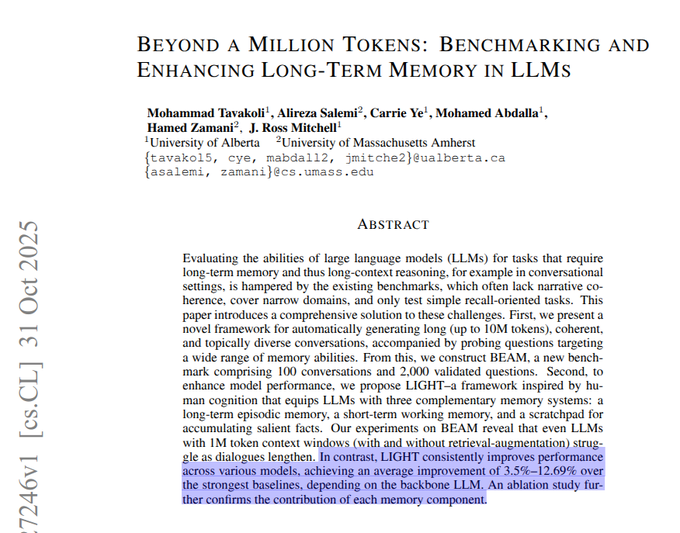

BEAM benchmark hits 10M‑token chats; LIGHT memory stack outperforms long context

BEAM introduces ultra‑long conversation evals up to 10M tokens and shows LIGHT—a hybrid of episodic retrieval, working memory, and scratchpad—consistently outperforms relying on huge context windows alone, with average gains reported across models and a clear fade in long‑context models as length grows paper abstract. For agents that must persist across days, this favors explicit memory stacks over bigger windows.

Bridgewater’s AIA Forecaster reaches expert‑level on ForecastBench with agentic search

Bridgewater’s AIA Forecaster combines agentic search over high‑quality news, a supervisor that reconciles disparate forecasts, and calibration (e.g., Platt scaling) to match superforecaster accuracy on ForecastBench, beating prior LLM baselines; on a liquid markets set, markets still lead but ensembles with the model improve accuracy paper abstract. For ops, this argues for supervised multi‑agent pipelines over single‑shot judgments.

Conciseness reward model trims tokens ~20% and lifts 7B accuracy by 8.1%

A conciseness reward model that grants brevity bonuses only when final answers are correct prevents length/training collapse, delivering +8.1% accuracy with ~19.9% fewer tokens on a 7B backbone across math tasks; the bonus fades over training and scales by difficulty paper abstract. This is a practical recipe to cut inference cost in reasoning agents without sacrificing quality.

Dense retrieval + classifier beats LLM rationales for fact‑checking at 95% less runtime

DeReC (Dense Retrieval Classification) replaces rationale‑generating LLM pipelines with dense evidence retrieval and a classifier, improving RAWFC F1 to 65.58% (from 61.20%) while cutting runtime ~95% (454m→23m). Similar speedups are shown on LIAR‑RAW paper abstract. If you need scalable veracity checks, retrieval+classifier is a strong baseline before spinning up expensive generation.

New ML/HPC leaderboard shows LLM agents slower than expert humans

A new SWE/ML optimization leaderboard with a human baseline shows expert humans at 1.00× speedup, while top LLM‑driven systems achieve ≤0.15× on ML/HPC tasks, implying current agents slow practitioners down for performance tuning despite strong coding scores elsewhere leaderboard post. Use this as a routing signal: keep human‑in‑the‑loop for low‑level optimization and reserve agents for scaffolding, search, and glue code.

Rubric‑based instruction‑following benchmark and RL recipe land for agents

A new rubric‑based benchmark and reinforcement learning approach for instruction following is out, providing a repeatable way to grade agent outputs and train toward rubric compliance—useful when subjective spec adherence matters (e.g., tone, structure) paper thread. Expect more agent evals to standardize on rubric scoring with verifiable checks.

🧰 Agentic coding stacks and DX improvements

A cluster of practical updates for building and shipping agents: skills systems, caching and planning UX, and emergent IDE behaviors. Mostly dev‑tool releases and workflows; does not cover MCP interop (separate category).

Evalite adds aggressive model caching to cut eval cost and iteration time

Evalite now caches AI SDK models in watch mode with a local UI, so you can rerun evals without reloading models—saving tokens and speeding up the red/green loop on prompts, tools and routing. The maintainer’s PR shows the feature landing with CI-friendly artifacts to keep spend predictable PR details.

Qwen Code v0.2.1: web search, fuzzy code edits, Zed support, plain‑text tools

Alibaba’s Qwen Code v0.2.1 ships free web search (OAuth users get 2,000/day), a fuzzy match pipeline that reduces retries, better Zed IDE integration, and switches tool outputs from complex JSON to plain text so models parse them more reliably. It also tightens file filtering and output limits across platforms release thread.

Vercel publishes a practical playbook for deploying internal agents

Vercel shared how they pick “low-cog, high‑repeat” work for agents, the instrumentation they use, and a case where a lead‑processing agent replaced a 10‑person workflow. The post is concrete about routing, evals, and guardrails—useful patterns if you’re moving agents from demos to production what we learned.

Amp Free chains ad→search→playground into runnable RF‑DETR demo in ~30s

A neat emergent flow in Sourcegraph’s Amp: an inline ad suggests “use RF‑DETR,” the new p0-powered web search pulls the right posts, then Amp scaffolds a runnable playground—all within one thread. It’s a glimpse of agentic UX where query, retrieval, and environment spin-up collapse into one step for developers demo thread.

Cline enables Hermes‑4 70B/405B across VS Code, JetBrains and CLI

The open‑source Cline agent now supports Nous Hermes‑4 70B and 405B in its VS Code extension, JetBrains plugin, and CLI, widening model choice for coding flows. Nous also published low per‑million pricing, making long sessions more affordable while you keep the same planner/executor UX release note.

OpenAI’s GPT‑5.1 cookbook codifies plan tools and persistence patterns

OpenAI’s updated cookbook spells out patterns that matter for agentic work—define an explicit persona, specify output formats, use a “plan” tool with milestone statuses, and set reasoning_effort='none' when you need speed over chain‑of‑thought. It’s a handy baseline for teams moving to 5.1 prompting guide.

Review AI mega‑diffs with Graphite’s stacked PR flow for Claude Code output

When Claude Code drops 6,000+ lines, Graphite’s stacked diffs pattern lets you slice the work into reviewable steps instead of YOLO‑merging. The demo shows a realistic workflow to keep agent output shippable without burying reviewers in a single giant patch stacked diffs demo.

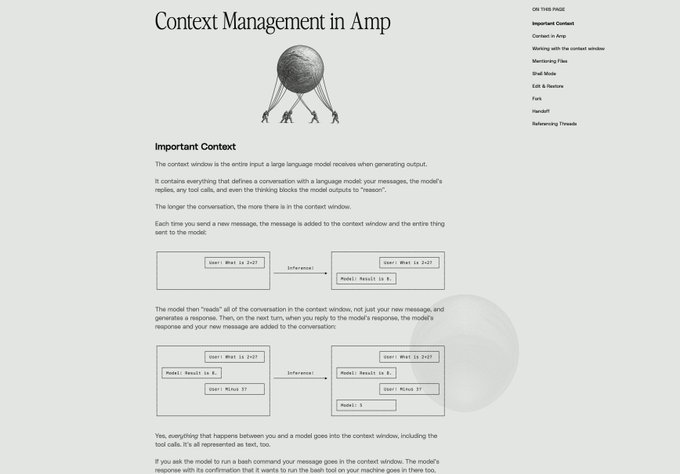

Amp posts a hands‑on context management guide for coding agents

Sourcegraph published a guide on keeping agent context lean so quality doesn’t degrade—when to trim, how to structure threads, and why long transcripts can sabotage results. If you’re seeing drift or hallucinations in long sessions, this is a useful checklist guide page.

Memex desktop adds a code viewer to inspect agent‑made edits

Memex surfaced an in‑app code viewer so you can audit what the agent changed before you accept patches. For teams trialing local, agent‑assisted builds, this reduces the “YOLO merge” pressure and keeps review in one place feature screenshot.

v0 now reports time, files, LOC and credits after each generation

The v0 agent surfaces a post‑run panel with “time worked,” files modified, lines changed, and credits used. This makes cost and blast radius visible right where you accept or revise a change, which helps teams budget and review faster feature video.

🕸️ Interoperability: MCP in the wild

Inter‑stack connectors and MCP servers show real traction today: remote MCPs plugged into inference APIs and browsers as endpoints. Pure standards/interop angle; excludes general coding DX and the Gemini 3 story.

Groq plugs Box’s remote MCP server directly into its Responses API

Groq announced you can now point its Responses API at Box’s remote MCP server, letting models call Box tools over MCP without custom glue. This is a clean, standards-based interop move that turns Box into a first-class tool provider for agent workflows on Groq hardware integration note, with a live demo slated at AI Dev NYC AI Dev site.

Why it matters: instead of bespoke SDKs per vendor, you bind a single MCP endpoint into your inference stack and route file ops, search, and content actions through Box. That cuts custom adapters, simplifies permissions policies, and makes it easier to swap providers later.

rtrvr.ai turns a Chrome extension into a remote MCP server any agent can drive

rtrvr.ai exposed its Chrome extension as a Remote MCP server, so any agent (Claude, Cursor, Slackbots) can control a user’s browser through a single MCP URL—no bespoke bridge per client. It keeps API keys local in the browser for sensitive actions, and can replay recorded tasks at cloud scale across many headless browsers security angle, with posts detailing the browser-as-infrastructure idea browser as infrastructure and parallel cloud runs for data collection cloud scaling.

For builders, this collapses the painful “agent ↔ browser” integration surface into one endpoint. It also sets a pattern: record locally, replay in cloud, while keeping secrets client-side.

MCP turns one: Anthropic × Gradio kick off a community hackathon

Anthropic and Gradio opened submissions for MCP’s first‑birthday hackathon—kickoff Nov 14, project deadline Nov 30, winners Dec 15—aimed squarely at building real MCP servers and clients kickoff stream. This lands after ongoing spec debates about MCP’s evolving guarantees, following up on no stability where the spec was acknowledged as fluid; the event shifts attention to practical interop and implementations announcement image.

Expect lots of remote MCPs, tool routers, and browser/desktop bridges—useful testbeds to harden conventions around auth, rate limits, and tool schemas.

AITinkerers Web Agents Hackathon spotlights a pragmatic MCP-friendly stack

AITinkerers set a one‑day NYC hack focused on “agents that do stuff on the web,” leaning on Redis for realtime state, Tavily for search/browse, and CopilotKit to productize the UI—an ecosystem that plays well with MCP‑style tool calling and remote endpoints hackathon post. Sign‑ups are open with details on the stack and format event page.

This is a good venue to test agent/runtime boundaries: keep web control and secrets in sandboxed endpoints, route tool calls via MCP, and ship an actual interface instead of bare traces.

🛡️ Security: AI‑orchestrated intrusions and safer assistants

Clear security/safety beats: Anthropic documents a China‑linked AI espionage campaign and Perplexity adds permissioning UIs; OpenAI disputes NYT’s sweeping data demand. This section excludes evals and model launches.

Anthropic: China-linked actor automated 80–90% of espionage with Claude Code

Anthropic details that a China‑linked group (GTG1002) used Claude Code to automate 80–90% of an espionage campaign across ~30 organizations—from recon to credential theft—before Anthropic cut access and shipped tighter rate limits, abuse detectors, and agent guardrails attack summary, and Anthropic blog post. For security leads, the point is simple: API‑hosted agents can now run professional‑grade playbooks, so model‑aware telemetry and agent‑layer controls matter as much as network IDS excerpt screenshot.

OpenAI resists NYT demand for 20M chats, accelerates client‑side encryption

OpenAI says The New York Times wants 20M private ChatGPT conversations; the company is complying under protest with de‑identified samples from Dec 2022–Nov 2024, pushing for controlled onsite review, and accelerating client‑side chat encryption while challenging the scope in court case summary. This follows NYT demand where encryption was flagged; today’s note adds sampling bounds and rejects earlier asks like 1.4B chats or removing delete controls case summary.

Perplexity Comet adds permission prompts and transparent browsing for risky actions

Perplexity rolled out a safer Comet assistant that shows when it’s browsing, lets you set behavior, and pauses to ask before sensitive actions like logins or purchases feature summary, with implementation details in the product blog. The UI now exposes what Comet is doing and offers “browse yourself,” “allow once,” or “allow always,” keeping humans in the loop for high‑consequence steps permission prompt, browsing indicator.

APIs aren’t a safety shield against misuse, say open‑source leaders

In light of Anthropic’s AI‑orchestrated intrusion report, community voices argue centralized APIs are not inherently safer than open‑weights for certain abuse classes, so defenses must live in agent policy, rate‑limits, audit trails, and workflow‑level permissions—not only in key‑gated access opinion, grounded by the attack summary.

🏗️ AI datacenters, power, and memory constraints

Infra economics moved: Google commits tens of billions to Texas data centers with dedicated power; DDR5 contract prices surge on AI demand. Non‑feature, non‑model, directly tied to AI supply/cost curves.

Google commits $40B for three Texas AI data centers with 6.2 GW power deals

Google is putting about $40 billion into three new Texas data centers through 2027 and pairing them with roughly 6,200 MW of contracted generation, plus a $30 million energy impact fund and training for ~1,700 electricians and apprentices investment summary. That’s a big swing at power‑secure AI capacity in a state where land and interconnects are easier to scale than most.

For AI infra leads, this signals cheaper, nearer‑term GPU hosting where power is pre‑bought and on‑site renewables/batteries reduce grid risk. It also concentrates a lot of AI load in one region, which helps latency for US workloads but raises regional resilience questions. The move follows Anthropic’s $50B US buildout plans $50B plan, which set the tone for hyperscale AI capex earlier in the week.

Samsung raises DDR5 contract prices 30–60% as AI demand tightens supply

Samsung lifted DDR5 contract pricing by 30%–60%: 32GB DIMMs move to ~$239 (from ~$149), 16GB to ~$135, and 128GB to ~$1,194, citing shortages driven by AI data centers pricing details. Buyers are panic‑booking capacity and signing longer contracts into 2026–2027, shifting bargaining power to memory suppliers.

This hits total cost of ownership for both inference and training clusters where DDR5 is the non‑GPU bottleneck on CPU hosts and mixed nodes. Expect procurement to front‑load orders, test lower‑capacity configs against model throughput, and push more memory‑efficient serving.

Local opposition to data centers accelerates; $98B in projects blocked or delayed

A Data Center Watch study (via NBC) tallies about $98B in US data center projects blocked or delayed from late March to June 2023—more than the prior two years combined ($64B). Organised resistance now spans 53 groups across 30 projects in 17 states, often citing power costs and land use opposition report, and the report details tightening approval paths and incentives under review NBC article.

For AI capacity planners, this means longer, riskier site timelines near metros and more value in power‑adjacent regions (e.g., Texas) or campuses with dedicated generation. Build teams should model permitting risk like a supply variable, not an afterthought.

💼 Enterprise adoption and go‑to‑market signals

Market/enterprise signals across vendors: ARR targets, AI becoming a rated behavior at Meta, and cost wins from infra choices. Excludes infra capex specifics and evals covered elsewhere.

OpenAI pace: ~$6B H1 revenue, ~$13B ARR in June; aiming for ~$20B by year-end

A fresh scorecard pegs OpenAI at roughly $6B revenue in H1 2025 and about $13B ARR in June, with a stated push toward ~$20B ARR by year‑end revenue update. For buyers and partners, that trajectory signals expanding capacity and support expectations right now.

Meta will grade “AI‑driven impact” in 2026 reviews; assistant helps write self‑evals

Meta plans to formally bake “AI‑driven impact” into 2026 performance reviews, asking employees to show concrete output gains, with an internal assistant to draft self/peer reviews off work artifacts policy brief. This pushes managers to ask how teams used AI to cut time, improve metrics, or unlock features—today, not later.

Berkshire adds ~$4.3B Alphabet stake, trims Apple 15%; read as an AI distribution bet

Berkshire Hathaway disclosed Alphabet as a new top‑10 holding at ~$4.3B while cutting Apple by ~15%, with Chubb up 16%—a classic value investor tilting toward AI‑heavy distribution and cloud optionality at Google portfolio shift. For enterprise buyers, it’s another signal that search + model delivery are seen as durable profit centers.

OpenAI will de‑identify a 20M‑chat sample under protest and accelerate client‑side encryption

OpenAI says it must hand over a de‑identified slice of 20M chats in the NYT case but is pushing to confine access and is fast‑tracking client‑side encryption for chats legal update, following up on privacy letter that outlined the demand and initial pushback. For enterprise tenants, this affects retention settings and discovery risk planning.

Genspark says AWS stack cut GPU cost 60–70% and 72% off inference via prompt caching

Genspark reports 60–70% lower GPU costs and a 72% reduction in model inference spend after moving to AWS, citing Bedrock prompt caching and infra ownership as the levers cost claim. If you pay per token at scale, replicate the cache policy experiments before lifting and shifting.

Perplexity’s Comet adds permission gates for logins/purchases and shows all browsing actions

Perplexity rolled out a trust UX for its Comet assistant: it now pauses for consent on sensitive actions (e.g., site logins, checkout) and exposes what it’s browsing and doing on your behalf feature brief. The controls make agentic flows safer for teams that need auditability before letting tools act Perplexity blog.

OpenRouter adds backup payment methods to prevent downtime on auto top‑ups

OpenRouter now lets you set up to three payment methods for auto top‑ups, trying backups when the primary fails product update. If your inference relies on OpenRouter credits, enable this to avoid silent outages during spikes.

🧠 Model & API updates (non‑Gemini)

Fresh model/API movement beyond Gemini: Claude’s structured outputs, Qwen Code upgrades, Hermes 4 via Cline, GPT‑5.1 on Replicate, and Grok‑5 roadmap talk. Keeps clear of the Gemini 3 feature.

Anthropic brings structured outputs (public beta) to Claude API

Anthropic added schema-locked structured outputs to the Claude API in public beta for Sonnet 4.5 and Opus 4.1, enforcing JSON schemas or tool definitions without brittle post‑parsing or retries feature brief, with docs emphasizing fewer schema errors and simpler control flows Claude blog post, Claude docs. Developers who previously emulated this via a single tool+schema can now switch to first‑party support dev workaround.

Grok‑5 roadmap: 6T params, multimodal, Q1’26 target

Elon Musk says Grok‑5 is a 6‑trillion‑parameter, inherently multimodal model (text, images, video, audio), aiming for higher “intelligence density” than Grok‑4, with a Q1 2026 release window model size claims, roadmap summary, timeline note. He characterizes a non‑zero chance of AGI and previews real‑time video understanding ambitions

. Teams betting on xAI should plan for large‑scale MoE‑style deployments and heavier multimodal IO.

GPT‑5.1 lands on Replicate with quickstart UI

Replicate added OpenAI’s GPT‑5.1 for hosted inference, with a quickstart UI and a short demo showcasing reliable finger‑counting and a simple prompt panel for rapid trials availability note, Replicate model. For teams that prefer managed hosting or want to A/B against their own stack, this reduces setup time and centralizes cost tracking.

Qwen Code v0.2.1 ships free web search and smarter editing

Alibaba’s Qwen Code v0.2.1 ships a packed update: free web search across multiple providers for OAuth users (2,000 searches/day), a fuzzy edit pipeline to reduce retries, plain‑text tool responses (no complex JSON), improved Zed IDE support, better .gitignore‑aware search, and cross‑platform fixes with performance tuning release notes. The on‑screen prompt flow shows first‑class WebSearch gating and permissions, which helps teams keep network calls explicit.

Claude Opus 4.5 shows up in CLI metadata

A Claude Code CLI pull request mentions "Opus 4.5," suggesting Anthropic’s largest model tier is being wired up ahead of release cli pr snippet. For engineering managers, this points to near‑term availability planning; for developers, expect updated context sizes and reasoning modes to ripple into agent harnesses that already support Sonnet 4.5.

Cline integrates Hermes‑4 70B/405B at aggressive token prices

Agentic coding tool Cline now runs Nous Research’s Hermes‑4 70B and 405B models in its VS Code extension, JetBrains, and CLI, enabling quick model swaps in existing workflows integration note. Pricing is unusually low: Hermes‑4‑70B at $0.05/1M prompt and $0.20/1M completion, and Hermes‑4‑405B at $0.09/$0.37 respectively pricing details. A short product reel demonstrates the new providers active in Cline’s selector feature overview.

OpenAI posts GPT‑5.1 prompting guide and optimizer

OpenAI shared concrete guidance for GPT‑5.1: define persona/tone, specify output format rules up front, and be explicit about when to call tools vs rely on internal knowledge. For long tasks, they recommend a plan tool with milestone states and using reasoning_effort='none' when deep thinking isn’t needed cookbook summary, GPT‑5.1 guide. An accompanying Prompt Optimizer helps iterate prompts and cost trade‑offs optimizer thread, Optimizer tool, guide highlights, Cookbook guide.

🎬 Creative AI: ads, restyling, and video tools

A heavy creative stack day: click‑to‑ad generators, real‑time video restyling, voice creation on mobile, and 3D/Video pipelines. Mostly product UX/process gains; separate from core model evals.

Higgsfield rolls out Click‑To‑Ad and runs Black Friday with unlimited image models

Paste any product URL and Higgsfield’s new Click‑To‑Ad instantly composes social ads with VO and AI avatars, aimed at one‑click creative for marketers Click‑To‑Ad demo, with a promo offering 200 credits for 9 hours. The company is also pushing a Black Friday sale (Pro from $17.4; Team $39/seat) and says “unlimited image models” are now allowed on every plan Black Friday sale, Higgsfield pricing.

- The team is also teasing Higgsfield Angles for one‑click camera re‑framing of photos Angles feature.

NotebookLM adds images as sources; Veo 3.1 supports multiple reference images

Google’s creative stack tightened: NotebookLM can now ingest images as first‑class sources (handwritten notes, whiteboards) so you can ground drafts and summaries directly on visuals Image source demo. Meanwhile, Veo 3.1 in Gemini apps can take multiple reference images alongside a prompt to guide video style/objects more precisely Veo 3.1 update, with UI strings and screenshots showing the multi‑image video flow Gemini video UI.

ElevenLabs updates mobile app to create and clone voices on device

ElevenLabs shipped a mobile app revamp that lets creators design new voices or clone their own on a phone, targeting short‑form content workflows and fast iteration without a desktop App demo. The pitch is scroll‑stopping VO made anywhere, with the same timbre and style controls people expect on web.

- Following up on Iconic marketplace, which licensed celebrity voices, this brings the capture/creation step down to the phone.

LSD v2 brings real‑time video restyling with stronger temporal consistency

Decart’s LSD v2 upgrades live video‑to‑video restyling with visibly steadier frames, better identity locking, and improved scene/context tracking versus v1—useful for streamers and live events Restyle video. A technical rundown emphasizes temporal links across adjacent frames and lightweight conditioning to keep latency low while reducing flicker and drift Tech notes.

Higgsfield Recast + Face Swap workflow spreads for fast character replacements

A creator‑tested trick pairs Higgsfield’s Face Swap with Recast: do a face swap first to get a consistent still, then feed that image into Recast for video‑level swaps—no heavy prompting required Workflow demo. It’s a practical pipeline for quick character restyling that stays coherent shot‑to‑shot.

Grok Imagine text‑to‑video draws fresh creator praise and “flow‑state” tests

Creators report stronger text‑to‑video outputs from Grok Imagine—“just hit a new level”—with example clips circulating Upgrade clip. Separate posts highlight a “flow‑state” mode for fast ideation loops while riffing through concepts Flow‑state tests. If repeatable, it gives another option for short conceptual clips alongside incumbents.

ImagineArt shows node‑based Workspaces for chained image→video loops

A creator walkthrough demonstrates ImagineArt’s Workspaces and pipeline nodes to build looping lofi clips: import a ref image, generate frames, animate with a video node (e.g., Veo 3 Fast), grab the last frame, then continue the loop—keeping style control and costs visible per node Workflow guide. It’s a tidy way to templatize repeatable creative flows.

Local TTS tip: mlx_audio runs Kokoro voices fast on Mac via CLI

For offline voice drafts, mlx_audio’s CLI can run Kokoro TTS locally on Apple silicon with streaming output, making on‑device narration practical for quick passes CLI how‑to. The appeal is low friction and privacy for creators who prefer not to ship text or voice to cloud tools.

🦾 Embodied AI: robot dexterity and agentic gameplay

Robotics clips and claims spike: debates over non‑teleoperation, precise micro‑assembly, humanoid battery swaps, and SIMA 2’s 3D goal‑following. Orthogonal to software evals and creative tools.

DeepMind’s SIMA 2 uses Gemini to plan and act across unseen 3D games

Google DeepMind introduced SIMA 2, a Gemini‑powered agent that takes high‑level natural language goals, plans, and acts in commercial 3D games via virtual keyboard/mouse input (no game code access). It generalizes skills to new titles and can play in real‑time generated worlds with Genie 3; limitations include short memory and long‑horizon precision feature brief.

Why builders care: SIMA 2 is a credible template for goal‑directed agents in constrained 3D UIs. If you’re targeting robotics, it’s a cheap sandbox for evaluation of planning, feedback, and language interaction before touching hardware.

Unitree G1 clip marked not teleoperated sparks autonomy debate

A widely shared Unitree G1 video labeled “NOT TELEOPERATED” shows smooth, varied task execution, prompting questions about how much is real‑time onboard autonomy vs. remote control. If verified, this is a notable step for affordable humanoids toward reliable, general realtime control robot demo, and could pull robotics timelines forward, as some observers note if the claim holds timeline comment.

What to do: Treat this as promising but unverified. Ask vendors for input latency traces, on‑rig compute specs, and task variance across seeds before extrapolating roadmap risk to your stack.

UBTech Walker S2 enters factories at scale with auto battery swap, 500 units targeted

UBTech says Walker S2 humanoids are now in real factory use in China with hundreds already shipped, ~$113M in 2025 orders, and a target of 500 units delivered by year‑end; a key feature is self‑service battery swaps for near‑continuous duty deployment details. This lands in context of humanoids demo, where we saw cadence building; today’s update puts numbers behind scaled deployment, including buyers like major automakers.

Why it matters: If auto‑swap keeps utilization high, humanoids stop being a once‑per‑press‑cycle novelty and start penciling for repetitive line work and warehouse shifts.

ALLEX robot hands demonstrate precise micro‑pick and fastening with safe HRI

ALLEX showcased high‑DOF hands performing micro‑pick and screw fastening with accurate, repeatable motions while maintaining safe human‑robot interaction envelopes. For factory teams exploring hand‑in‑the‑loop assembly, it’s a concrete look at dexterous manipulation that stays inside practical speed/precision trade‑offs robot hands demo.

So what? You can benchmark task time, success rate, and collision margins from the clip to scope pilot stations (PCB sub‑assembly, small fasteners) before buying grippers.

MindOn tests Unitree G1 on household chores with new hardware/software stack

Shenzhen MindOn Robotics is trialing upgraded hardware/software on the Unitree G1 to perform human‑like household tasks such as watering plants and moving packages. The demo shows smooth, varied action sequences that suggest better perception‑to‑action coupling on a consumer‑grade platform home tasks demo.

The point is: Household chores expose grounding, clearance, and contact‑rich control gaps. If you evaluate at home, log perception failures (object ID, pose, reachability) separately from policy errors to focus your next training cycles.

China trials robotic traffic cones that auto‑secure accident scenes in under 10 seconds

A pilot shows self‑driving cones rolling out from an emergency vehicle and forming a barrier in seconds; units can be tele‑operated or act autonomously, reducing responder risk during setup cone deployment.

Who should care: City ops and AV safety teams. This is a narrow, high‑value task where simple autonomy yields outsized safety gains—good ground truth for deployment, battery sizing, and remote override design.

📚 Research: self‑improving agents, memory, and efficient reasoning

Today’s papers emphasize process reliability and efficiency: agent self‑evolution, ultra‑long memory stacks, LUT compute on FPGAs, retrieval‑first fact‑checking, and calibrated concise reasoning.

Dense retrieval + classifier (DeReC) outperforms LLM rationales and slashes runtime ~95%

For fact‑checking, DeReC drops generation: retrieve evidence with dense embeddings, then classify. On RAWFC it boosts F1 to 65.58% (from 61.20% for a rationale‑generating baseline) and cuts runtime from 454m12s to 23m36s (≈95% faster). LIAR‑RAW shows similar efficiency wins, suggesting retrieval‑first pipelines are better for production latency and cost paper abstract.

Instruction following vs functionality: more instructions, more regression

Google’s “Vibe Checker” shows multi‑instruction prompts tend to degrade functional correctness, while single‑turn generation preserves functionality and multi‑turn editing better fits instruction following. The tables span Gemini, Claude, GPT‑5, and others across BigVibeBench and LiveVibeBench figure and table.

On‑policy, black‑box distillation (GAD) pushes a 14B student toward GPT‑5‑Chat

Generative Adversarial Distillation trains a discriminator to reward student outputs that look like a proprietary teacher, enabling black‑box, on‑policy learning. A Qwen2.5‑14B‑Instruct distilled via GAD scores comparably to GPT‑5‑Chat on LMSYS‑Chat auto‑evals, beating sequence‑level KD baselines paper card, ArXiv paper.

Open‑world multi‑agent ‘Station’ sets SOTA on circle packing and Sokoban

The Station is an open‑world environment where agents choose rooms/tools, keep narratives, and accumulate lineage memory. Reported results include beating AlphaEvolve on circle packing (n=32, n=26), a new density‑adaptive method for scRNA‑seq batch integration, and a 94.9% Sokoban solve rate with a compact CNN+ConvLSTM design project explainer, paper page.

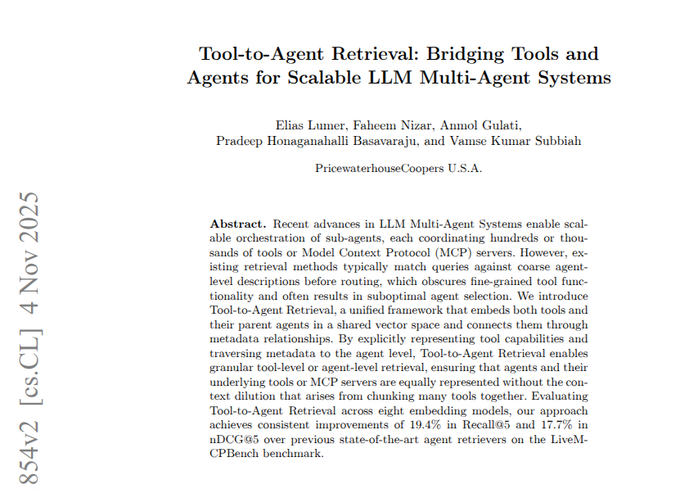

Smarter tool routing raises top‑5 recall by 19.4% on LiveMCPBench

A new approach to route LLMs to the right tools/agents improves top‑5 recall by 19.4% on LiveMCPBench, indicating that the function‑calling layer remains a major lever for production reliability and coverage paper claim.

Tiny cache for Agent‑RAG serves answers from ~0.015% of the corpus

A minimal memory stack for agentic RAG runs most queries from a microscopic slice of the corpus while preserving answer quality, cutting storage and latency for iterative search/generation loops paper note.

Agent‑RAG for fintech boosts answers via acronym expansion and refined search

An agentic RAG pipeline tailored for finance improves accuracy by expanding acronyms, refining searches, and iterating with targeted retrieval—showcasing domain‑aware, tool‑driven gains beyond generic RAG paper claim.

Dr. MAMR addresses lazy multi‑agent failures with causal influence and restarts

A study on multi‑agent reasoning introduces a causal influence metric to detect “lazy” agents and uses restart actions to recover, yielding stronger group performance on reasoning tasks paper link.

DreamGym uses LLM‑simulated environments to scale RL and speed sim‑to‑real

DreamGym reports strong RL improvements by training with LLM‑simulated experience and transferring to real settings, highlighting a practical path to cheaper data for agent learning project link.

Vector symbolic algebra solver hits 83.1% on 1D‑ARC and 94.5% on Sort‑of‑ARC

A cognitively‑inspired ARC solver encodes objects via vector symbolic algebra and learns small if‑then rules to apply actions. It posts 83.1% on 1D‑ARC and 94.5% on Sort‑of‑ARC, though full ARC‑AGI remains modest at 3.0% paper abstract.