MCP Apps becomes 1st official extension – VS Code stable in 1 week

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

MCP shipped MCP Apps as its first official extension, adding a UI layer to tool-calling: tools can return interactive widgets rendered inside the chat; user clicks/edits become structured events fed back to the tool; Claude is the first major client demoing “apps inside the assistant,” with in-chat work artifacts and connector flows like Box search + preview + Q&A. VS Code added MCP Apps rendering in Insiders with stable targeted “next week,” shifting agent output from pasted JSON/tables into editor-hosted panels; Anthropic also added a claude.ai directory entrypoint for browsing/connecting MCP Apps.

• CopilotKit/AG‑UI: claims day‑0 integration so custom chat clients can render MCP Apps widgets; early builders immediately ask about cross-client portability, with no universal “build once, run anywhere” answer yet.

• Claude Code 2.1.20: CLI reliability/terminal UX fixes; policy tightens to defensive-only security tasks; Task tool drops allowed_tools, pushing subagents toward fixed toolsets.

• Maia 200 (Microsoft): cited at 10+ PFLOPS FP4 with 216GB HBM3e and ~7TB/s bandwidth; positioned as ~30% better perf/$, but Azure SKU/pricing details aren’t in the posts.

Net effect: agents are picking up new surfaces (UI widgets, parallel browsers, container sandboxes), but the hard problems move to sandboxing, permissions, and consistent behavior across clients.

Top links today

- MCP Apps official announcement

- Qwen Chat to try Qwen3 Max Thinking

- Qwen3 resources and API links

- Anthropic paper on elicitation attacks

- LMSYS Arena to test new models

- OpenAI builder town hall stream page

- ChatGPT Code Interpreter multi-language update

- Cursor subagents with multiple browsers

- MCP Apps protocol specification

- Ollama image generation announcement

- MiniMax Agent product page

- Kimi Code open-source agent repo

- Kimi K2.5 model chat and agent mode

- DeepPlanning benchmark and leaderboard

- Anthropic MCP developer documentation

Feature Spotlight

MCP Apps go official: interactive UIs inside agent chats

MCP Apps makes tool calls return interactive UI inside chat, turning “agent output” into clickable workflows. It’s a step-change for integrating SaaS + agents without tab-switching, and it’s already live across Claude and VS Code.

Big cross-account story today: MCP Apps became the first official MCP extension, enabling tools to return interactive UI (not just text) rendered inside the chat. This cluster spans Claude web/desktop, partner connectors (e.g., Box), and editor support; excludes non-MCP product updates covered elsewhere.

Jump to MCP Apps go official: interactive UIs inside agent chats topicsTable of Contents

🧩 MCP Apps go official: interactive UIs inside agent chats

Big cross-account story today: MCP Apps became the first official MCP extension, enabling tools to return interactive UI (not just text) rendered inside the chat. This cluster spans Claude web/desktop, partner connectors (e.g., Box), and editor support; excludes non-MCP product updates covered elsewhere.

MCP Apps becomes the first official MCP extension for interactive UIs

MCP Apps (Model Context Protocol): MCP shipped MCP Apps as the first official MCP extension, letting tools return interactive interfaces (not just text) that render inside supporting AI clients, as announced in the launch post and detailed in the protocol blog post. This effectively adds a UI layer to tool-calling, so “tool output” can be a widget a user clicks/edits, with the client sending structured interaction events back to the tool.

The immediate engineering relevance is that it changes what “tool integration” means: it’s no longer only JSON/function-calling; it’s also UI contract + sandboxed rendering semantics, as highlighted by early writeups like the Claude widgets clip.

Claude adds interactive work tools in-chat via MCP Apps

Claude (Anthropic): Claude now renders interactive tool UIs inside chat for common work apps—so actions like drafting and previewing artifacts happen in-place instead of “copy → open app → paste,” as shown in the interactive tools demo. This is the first concrete “apps inside the assistant” UX most builders can point to, and it’s built on MCP Apps per the MCP Apps announcement.

• Interaction model: rather than returning only text, connected tools can return UI that the user edits/clicks; the assistant can then incorporate those interactions as structured inputs for follow-up steps, per the interactive apps clip.

• Connector shape: Anthropic positions this as a multi-tool workspace move (Slack/Figma/Asana-style flows) in the interactive tools demo, with Box-style “preview then ask” appearing as a canonical pattern in the same announcement thread.

VS Code adds MCP Apps support for interactive tool UIs

VS Code (Microsoft): VS Code announced MCP Apps support so agent tool calls can return interactive UI components rendered directly in the conversation; it’s available in VS Code Insiders with stable “next week,” per the support announcement and the release timing note. The integration details are described in the VS Code blog post.

This is a meaningful devex shift: instead of agents dumping tables/JSON/diffs as text, the editor can host purpose-built widgets (review panels, selectors, forms) while keeping the conversation as the control plane, which is the core idea outlined in the support announcement.

Box file search + preview lands inside Claude via MCP Apps

Box × Claude (Anthropic + Box): Claude can now search and preview Box files directly in the chat UI and answer questions about the file contents, as demonstrated in the Box preview demo and called out by Box in the Box partnership note. For builders, this is a reference implementation of “document connector + interactive preview UI,” not just “RAG over a drive.”

The open question for teams is how quickly this pattern spreads to other content systems (and how admins constrain it), but the core interaction—preview + ask + draft output—now has a default shape to copy, per the Box preview demo.

Claude launches a directory flow for connecting MCP Apps

Claude directory (Anthropic): Claude now has a directory entrypoint for connecting MCP Apps (“favorite MCP Apps in Claude Chat”), as posted in the directory announcement with the entry URL in the Claude directory page.

This matters mainly as distribution plumbing: once there’s an official place to browse/connect MCP servers that include UI, MCP Apps moves from a protocol feature to something teams can expect end-users to discover and enable via product UI, not docs.

CopilotKit publishes day‑0 AG‑UI integration for MCP Apps

CopilotKit (AG‑UI): CopilotKit says MCP Apps has day‑0 integration with AG‑UI, framing it as “a few lines of code” to bring MCP Apps into your own agentic assistant, per the integration note. The implication is that MCP Apps UI widgets aren’t limited to Claude/VS Code—there’s now a client-side rendering path for teams building custom chat UIs.

This is one of the first concrete “build your own MCP Apps-capable client” signals in the wild; the post anchors on the protocol-level launch in the MCP Apps announcement and points at an implementation path rather than just a spec.

MCP Apps sparks “build once, run anywhere” UI portability questions

Generative UI portability: As MCP Apps rolled out, builders immediately asked whether the same interactive widget can span multiple assistant ecosystems (e.g., Claude vs “ChatGPT Apps”), as raised in the cross-platform widget question and echoed by others framing MCP Apps as an “open standard” worth investing in, per the open standard note.

The practical point is that “interactive tool output” is starting to look like a front-end surface with competing specs; today’s signal is mostly questions, not answers, but the early concern is clear: teams want one UI artifact that works across clients, not one per assistant product.

🛠️ Claude Code changes: 2.1.20 CLI + policy tightening

Continues the Claude Code tooling beat with a concrete 2.1.20 changelog: lots of terminal UX fixes plus notable prompt/policy shifts (defensive-only security posture, PR creation formatting). Excludes MCP Apps (covered as the feature).

Claude Code 2.1.20 ships major CLI/terminal UX and reliability fixes

Claude Code 2.1.20 (Anthropic): The CLI release focuses on terminal ergonomics and long-session stability—most notably fixing session compaction/resume regressions and polishing input/history behaviors, as detailed in the changelog bullets and the upstream GitHub changelog.

• Long-run session hygiene: Resume/compaction bugs that could reload full history instead of a compact summary are called out as fixed in the changelog bullets, which matters if you rely on long-running threads.

• Terminal interaction polish: Vim-normal-mode history navigation and better arrow-key behavior for wrapped/multiline input land in 2.1.20 per the changelog bullets, alongside rendering fixes for wide characters.

• Workflow affordances: A PR review status indicator appears in the prompt footer, and /sandbox UI now surfaces dependency status with install instructions according to the changelog bullets.

• Flags churn: Several tengu_* flags were added/removed, with the concrete diff surfaced in the flag diff and its linked compare view.

Claude Code 2.1.20 removes Task tool allowed_tools parameter

Claude Code 2.1.20 (Anthropic): The Task tool schema no longer includes an allowed_tools parameter, which removes a structured way to request/grant specific tools to spawned agents; this is explicitly called out in the prompt change recap and reiterated in the schema removal note.

Net effect: subagents get pushed toward the fixed toolsets defined by subagent_type, which can change how you structure “planner vs executor vs reviewer” splits if you previously relied on per-task tool whitelisting.

Claude Code 2.1.20 tightens security scope to defensive-only

Claude Code 2.1.20 (Anthropic): The prompt/policy layer was tightened so Claude Code is limited to defensive security; it must refuse creating/modifying code that could be used maliciously and avoid credential discovery/harvesting patterns, per the policy tightening and the referenced policy diff.

This is a behavioral change you’ll feel in day-to-day workflows: even if the CLI got more capable, some categories of “security engineering” assistance will now hard-stop depending on how the task is framed, as summarized in the prompt change recap.

Anthropic roadmap rumor: inline voice UI, Claude Code prompt suggestions, effort selector

Claude/Claude Code (Anthropic): TestingCatalog reports Anthropic is working on an inline voice-mode UI that lets users switch between text and voice, and also claims Claude Code may get prompt suggestions plus a “Thinking effort selector” inside the model picker, per the roadmap rumor and the follow-up feature list.

Nothing here is shipped in these tweets, but it’s a concrete set of UX knobs that would affect how teams trade off latency vs depth (effort selector) and how much prompting overhead gets pushed into the client (prompt suggestions).

Claude Code 2.1.20 changes gh pr create behavior: title + summary required

Claude Code 2.1.20 (Anthropic): PR creation now requires both a short title (under 70 chars) and a summary, pushing details into the PR body for gh pr create flows—see the specific rule in the PR format requirement and the broader prompt-change bundle in the prompt change recap.

This is a small change on paper, but it directly affects automation scripts and “agent writes PRs” workflows, because PR metadata formatting is now treated as a required output contract.

Claude Code PR quality loop: claude -p fresh-context self review on every PR

Claude Code (Anthropic): An Anthropic engineer says they run a fresh-context self-review on every PR using claude -p, and that it “catches and fixes many issues,” as described in the internal workflow note.

This is a very specific operational pattern: treat the implementation context and the review context as separate windows, so the model re-derives intent and checks for dead code/overcomplication without being anchored by its own earlier chain of decisions.

Claude $20 plan pricing friction: users report fast Opus caps

Claude plans (Anthropic): Pricing/limits friction is surfacing as users complain that the $20 plan yields “like 4 Opus messages and then caps,” per the plan cap complaint, with the subscription entry point shown on the plan page.

It’s a narrow data point, but it’s the kind of constraint that changes how teams choose between CLI tools, IDE integrations, and when to drop to cheaper models for iterative work.

Context window management vs readability: is AI inverting a core coding principle?

AI coding practice (signal): Uncle Bob raises the question of whether AI coding flips the long-held “optimize for readability because we read more than we write” principle into something closer to “optimize context window management,” as posed in the context window question.

This frames a real tension teams are starting to feel: productivity can become a function of what you can keep inside (and compress out of) the working set, not only what humans can skim safely.

🧪 Codex in the wild: plan mode momentum and harness swapping

Codex discussion today is heavy on real-world usage and workflow switching—users report moving entire stacks to Codex, plus plan-mode UX becoming a default for some. Excludes general OpenAI business/town-hall items (handled under business).

Codex v0.91.0 adds Plan Mode via collaboration_modes toggle

Codex v0.91.0 (OpenAI): Codex is now shipping a more formal Plan Mode flow, exposed behind codex --enable colloboration_modes, as shown in the Plan Mode demo; one user notes a single plan consumed 27% of context, per the Context usage note, suggesting the mode is designed to be thorough rather than lightweight.

• What’s new for daily work: The shared expectation is “PM-style” upfront planning that’s detailed enough to hand off into execution, as reflected in the Plan output clip; this follows up on Plan mode handoff (explicit plan→execute handoff) with a stronger emphasis on plan completeness and structure.

Open questions from the thread-level evidence: whether Plan Mode is a persistent default, how it interacts with context compaction, and whether the verbosity is configurable beyond the feature flag.

Field report: “Used Codex all day” and rewired a dev stack

Codex (OpenAI): A practitioner reports “used codex all day” and says they were able to rework “all of Claude’s development infrastructure” and “started making progress again,” per the All-day Codex report; the same account also posts they “cancelled claude code” and are “using kodex from now on,” as shown in the Switching clip.

• Sentiment signal: The same thread family frames the experience as “Codex is so much better than Claude Code,” per the Tool comparison claim, and attributes the switch to both Codex improving and Claude Code regressing, as argued in the Regression claim.

What’s missing here is an artifact-level comparison (PR links, diffs, benchmarks); this is mostly “in the loop” workflow testimony, but it’s consistent across multiple posts by the same author.

Codex harness portability becomes a wedge issue vs Claude lock-in

Codex (OpenAI): Harness portability is becoming part of the Codex-vs-Claude argument, with one builder claiming Codex “allows you to use opencode, and other harnesses,” while asserting Anthropic will “ban you from using anything other than software that you don’t control,” as stated in the Harness lock-in claim.

• Why this matters technically: If true, it shifts the decision from “which model” to “which control plane” (your own CLI/editor wrapper vs a vendor client), and it impacts how teams standardize prompts, tool policies, and audit logs across environments; the same author’s broader frustration about “silently rug” behavior shows up in the Service regression rant.

This is still mostly rhetoric plus lived experience; the tweets don’t include policy text or enforcement details, so treat it as a directional signal rather than a confirmed restriction model.

OpenCode users say the Codex experience is “getting good”

OpenCode (opencode): Multiple users report that the Codex experience inside OpenCode is improving—one says they “used it all day with a lot of success,” as noted in the Day-long usage note, and another amplifies that it’s “getting good,” per the Follow-up comment.

• Adoption context: OpenCode’s own usage chart snippet shows ~2,003,958 total unique and ~1,743,658 CLI unique for “January 2026 (UTC),” as displayed in the Usage stats image, which helps explain why Codex-in-OpenCode reports are getting louder.

The tweets don’t specify which Codex tier/model is being used in OpenCode or what tooling is enabled (subagents, browsers, etc.), so comparisons across setups will be noisy.

Kilo Code adds support for using a Codex subscription directly

Kilo Code (Kilo): Kilo says Codex users can now use their subscriptions “directly in Kilo,” positioning it as a cheaper way to access “top GPT models” for agentic engineering, per the Integration announcement, with setup details in the Setup blog.

This is another data point that Codex is being treated as a back-end capability that third-party harnesses can plug into, not only a first-party CLI/product experience.

RepoPrompt frames Codex as “deep research agent” in a multi-model workflow

RepoPrompt (RepoPrompt): A builder describes a model-routing heuristic where “Claude is the most ergonomic,” “Codex is deepest research agent,” and “GPT 5.2 is deepest analysis,” with /rp-build automating the selection, as laid out in the Model routing heuristic.

• How it shows up in practice: The same author describes /rp-investigate as producing a “human readable report” that helps decide next steps on tricky bugs or complex systems, per the Investigate workflow note.

This is less about Codex features and more about treating Codex as a specialized worker in a larger toolchain (routing + packaging + report generation).

🧰 Agent runners & ops: Clawdbot scaling pain, observability, and browser control

Clawdbot remains an ops-heavy story: rapid adoption, reliability/expectation mismatches, and new automation surfaces (cloud browsers, messaging workflows). Excludes MCP Apps feature coverage and avoids any bio/chemical weapon content.

Hyperbrowser adds a cloud-browser path for Clawdbot automation

Hyperbrowser × Clawdbot: Hyperbrowser ships a Clawdbot integration that routes web automation through cloud browsers, calling out stealth mode, automatic CAPTCHA handling, and multi-page scraping, as shown in the browser automation demo.

• How it’s wired: The team points to a Clawdbot skill PR for the integration, as linked in the GitHub PR.

• What it enables: The example flow chains search plus scraping and returns full markdown content and summaries, per the browser automation demo.

The main open question from the tweets is operational: how people will sandbox and credential-scope browser automation at scale (profiles, secrets, and per-task permissions).

A “separate inbox” pattern emerges for email-capable agents

AgentMail (email blast-radius control): A recurring setup pattern is to give the agent its own email account (so it never touches the primary inbox) and forward/CC items as tasks; the user describes it as making the agent feel more like a human assistant in the workflow description and the separate inbox note.

A follow-up clarifies notification mechanics: AgentMail can trigger the bot via a webhook only for approved senders, as described in the webhook detail.

Clawdbot maintainer flags rising support burden as usage spikes

Clawdbot (open source): The maintainer describes a sudden support load where users treat a free, <3‑month‑old hobby project like a “multi‑million dollar business,” including security researchers demanding bounties, while also reiterating “most non-techies should not install this” and acknowledging sharp edges, as described in the maintainer note.

This reads like an ops signal: when an agent runner gets popular fast, expectations (support, security, polish) reprice immediately—even if the software hasn’t.

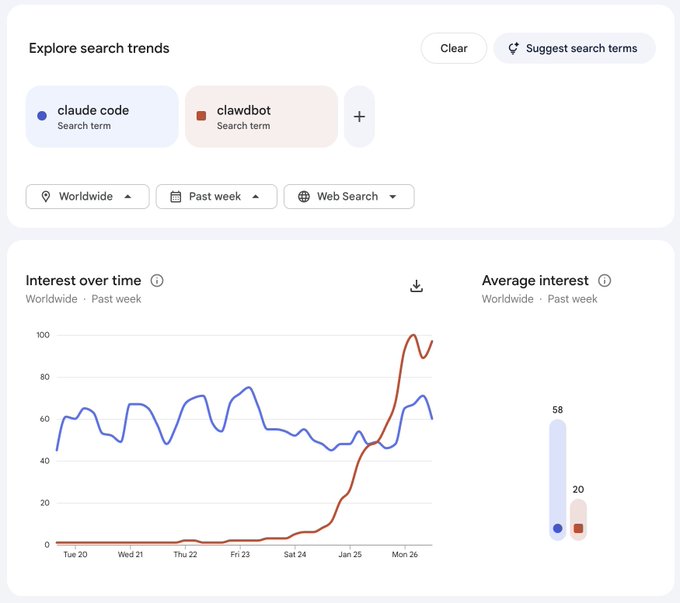

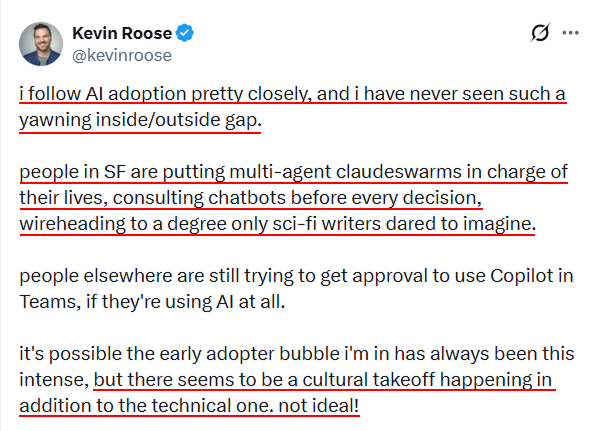

Search interest for “clawdbot” jumps sharply in the past week

Clawdbot (search demand): A Google Trends comparison shows “clawdbot” going from near-zero to a sharp spike that briefly surpasses “claude code,” with the “clawdbot” line peaking near 100 while “claude code” stays in the ~40–80 band, as shown in the Trends screenshot.

This is a concrete diffusion signal for agent runners: attention is shifting from “coding assistant” queries toward “run an agent for me on my machine” queries.

WezTerm mux performance tuning shows up as an agent-ops bottleneck

WezTerm (agent concurrency): Running “past 50 agents on the same machine” is reported to trigger laggy SSH sessions and an unresponsive mux server; a set of WezTerm mux settings trades RAM for smoother parsing/scrollback and faster prefetch, as described in the tuning writeup.

This is a concrete “agent swarm tax”: terminal/mux defaults are tuned for humans, and heavy agent output turns parsing, buffers, and caches into reliability limits.

Clawdbot can scan OpenRouter free models from the CLI

Clawdbot (OpenRouter provider): OpenRouter highlights a built-in provider path and a discovery command—clawdbot models scan—to enumerate OpenRouter’s free model catalog, as described in the CLI tip.

• Docs to copy/paste: Setup and the scan behavior are documented in the Provider setup docs and the Models scan docs.

This is mostly a cost/ops lever: it turns “try a different model” into a first-class CLI action, which matters when you’re tuning agent latency and token spend across providers.

Clawdbot deployment friction shows up on Railway web-only flows

Clawdbot (deployment): A user reports that deploying via Railway’s web UI leaves them unable to run clawdbot doctor, which blocks troubleshooting and leaves Telegram setup stuck, as described in the Railway deployment report.

This is a common failure mode for agent runners: when the “fix it” step is a CLI command, UI-only deploys can strand users mid-configuration.

Clawdbot’s real-world usage highlights expectation mismatches

Clawdbot (ops reality): The maintainer highlights how quickly “people actually using your stuff” surfaces rough edges and surprising expectations, as framed in the usage pressure note.

The screenshoted failure mode is familiar for agent runners: the agent confidently promises a workflow (“calendar/schedule”), the underlying integration fails, and the user experience becomes a trust + recovery problem rather than a capability problem, as shown in the chat screenshot.

OpenRouter documents a Sentry-based observability path for Clawdbot

OpenRouter (observability): OpenRouter calls out an observability setup using Broadcast + Sentry for Clawdbot runs/errors, pointing to a step-by-step guide in the observability note and the linked Sentry guide.

The practical implication is that “agent runner ops” is converging on the same baseline as production services: structured error capture, alerting, and traceability—without depending on the agent’s own narrative logs.

Security reporting noise becomes an ops burden for Clawdbot

Clawdbot (security triage): The maintainer calls out a signal-to-noise issue where some “security experts” open low-quality, AI-generated (“slop”) issues, making triage harder even when there’s also good security work, as described in the triage complaint with an example linked in the GitHub issue.

This is an operational scaling problem: once a repo is popular, “issue quality” becomes part of the security posture because it determines what gets attention first.

🧭 Cursor & IDE agents: subagents expand browser parallelism

Cursor-specific updates today are about practical agent parallelism: multiple browser instances with subagents. Excludes broader MCP Apps UI story (feature) and general agent runner ops (agent-ops-swarms).

Cursor lets subagents run multiple browsers at once

Cursor (Cursor): Cursor can now run multiple browser instances simultaneously via subagents, expanding what “computer-use” flows can do in parallel—e.g., comparing pages, validating actions, or gathering info from multiple sources without serial tab switching, as announced in the Multi-browser support.

The key unknown from these tweets is how Cursor coordinates shared state (credentials, cookies, artifacts) across those concurrent browsers—expect teams to treat this like a new class of race-condition and containment problem until the ergonomics and guardrails are clearer.

Cursor subagents show up as a practical parallel work pattern

Cursor subagents (Cursor): A hands-on clip shows Cursor using subagents to drive a task to completion in a tight loop—fast execution + quick termination—framed as “cursor solving … with subagents” in the Subagents demo.

This is a concrete example of why subagents matter even when the model is unchanged: the workflow advantage is parallelism + isolation, not a single long context that tries to do everything at once.

Parallel browser subagents become the default mental model for UI testing

Parallel browser subagents: People are explicitly describing the new baseline as “agents click around and test stuff for you… all at once,” in the Parallel click-around note; Cursor’s new capability to run multiple browsers with subagents provides a concrete substrate for that mental model, as shown in the Multi-browser support and reinforced by the Subagents demo.

In practice, this pattern tends to shift bottlenecks from writing code to supervising state, verifying outcomes, and keeping parallel threads from stepping on each other’s auth/session state.

Cursor Pro plan is framed as paying for indexing and background agents

Cursor pricing (Cursor): A builder highlights the $20/mo Cursor plan as valuable mainly because it bundles infra-heavy features—semantic embeddings/repo indexing plus Background Agents—rather than just model access, as described in the Plan value note.

This reads as a reminder that “agentic IDE” differentiation is drifting toward always-on retrieval + orchestration features that are hard to replicate without sustained infra and eval work.

🧠 Agentic coding practice: from “programming in English” to verification-first

Today’s workflow discourse is dominated by practitioner writeups on how agent coding actually works (and fails): plan modes, success criteria, verification loops, and skill atrophy. Excludes specific product changelogs (covered in tool categories).

Agent “speedup” shows up mostly as scope expansion, not time saved

Speedup vs expansion (Karpathy): The reported impact isn’t clean “2× faster”; it’s doing more than you would have done—because things become worth building that previously weren’t, and because agents let you attack codebases/domains you couldn’t handle due to skill gaps, as written in the speedup notes.

This is a different productivity story than classic IDE automation: output volume and breadth expand, and measurement gets fuzzy, per the speedup notes.

Fresh-context self-review loop: model reviews its own PRs before merge

PR workflow at Anthropic (Claude Code team): One quantified datapoint is shipping 22 PRs in a day and 27 the day before, each “100% written by Claude,” as described in the team workflow response. Following up on Fresh eyes review (agent self-review loop), they describe a standard practice of having the model code-review its own code in a fresh context window using claude -p on every PR, as stated in the team workflow response.

This doesn’t resolve the code-quality concerns raised elsewhere, but it does show a concrete mitigation pattern—fresh-window review as a routine gate—using the same model family described in the team workflow response.

Skill atrophy signal: reading code stays, writing code decays

Manual-skill atrophy (Karpathy): A concrete cognitive distinction gets named—generation (writing) vs discrimination (reading)—with the claim that agent-heavy workflows can slowly atrophy your manual code-writing ability while leaving code-reading intact, as described in the atrophy notes.

This shows up as a new kind of “bus factor”: teams may stay capable at review/debug, but lose fluency in hand-authoring under time pressure, per the atrophy notes.

Stamina becomes a bottleneck unlock: agents grind without fatigue

Tenacity as capability (Karpathy): A notable observation is that agents will grind on a hard problem for ~30 minutes without demoralizing, which changes what tasks feel worth attempting; the “stamina is a core bottleneck” framing is spelled out in the tenacity notes.

This matters because it changes the human role: you steer and verify intermittently while the agent does the persistence-heavy exploration work, as implied by the same tenacity notes.

Tests become the asset: be the architect and tester, not the typist

Tests-first framing (slow_developer): A direct claim is that “tests are the most valuable part of the codebase” in agent-heavy projects; frontier models can write much of the implementation, but humans still need to be the architect and tester, as stated in the tests as asset.

This complements the idea that correctness constraints should be machine-checkable: tests become the living spec that keeps the agent honest, per the tests as asset.

Verification becomes a first-class skill: agents amplify output, not trust

Verification discipline (omarsar0): A succinct takeaway is that “verification” is the limiting factor; agents can verify more (tests, checks), but humans need to stay present for steering and non-automatable verification, as stated in the verification reminder.

This matches the broader observation that agents can feel “1000×,” but subtle conceptual errors and assumption drift still require active oversight, echoing the failure mode notes.

Context windows may be the new productivity unit for AI coding

Context management question (Uncle Bob): A classic software maxim—optimize for reading code, not writing—gets challenged by the possibility that AI coding inverts the constraint, making “effective management of context windows” the real productivity skill, per the context question.

This is less about prompt tricks and more about operational practice: what you choose to keep in working context, how you segment tasks, and how you preserve intent across iterations, as implied by the context question.

In an agent era, subtraction becomes the hard part of product work

Product subtraction (ryolu_): A clean counterweight to “agents make building cheap” is that accumulation kills clarity; the hard skill is deciding what to leave out or remove, even when it works, as argued in the subtraction essay.

This lands as an engineering workflow constraint: if implementation cost drops, the bottleneck moves to coherent system design and pruning—reinforced by the observation that agents can help you “click around and test stuff… all at once,” which increases the rate of addition pressure in the parallel testing remark.

Spec-driven development as the “imperative→declarative” endpoint

Spec-driven development (Karpathy): Karpathy explicitly endorses “spec-driven development” as the limit of the imperative→declarative transition, as captured in the spec-driven endorsement.

In practice, this is the same lever described elsewhere in his notes: invest in precise success criteria and verifiable constraints so agents can take bigger steps with less babysitting, aligning with the leverage notes.

Crush renderer hits ~3ms frame renders for high-throughput TUI workflows

Crush (Charm): Charm shows its in-house diffing renderer Crush rendering terminal frames in ~3ms, positioning it as a key building block for high-refresh TUIs, as demonstrated in the render demo.

The practical angle for agentic coding setups is that terminals become the “control plane” for many concurrent agent sessions; lower render latency reduces perceived lag when agents stream lots of output, and Crush is explicitly usable via Bubble Tea per the render demo, with code in the Bubble Tea repo.

🧬 Agent frameworks & multi-agent design primitives

Framework-level conversation today centers on what “agents” are, when multi-agent systems make sense, and early steps toward self-improving dev agents. Excludes MCP Apps protocol specifics (feature).

FactoryAI introduces Signals as a step toward self-improving dev agents

Signals (FactoryAI): FactoryAI announced Signals, positioning it as work toward a self-improving software development agent, as stated in the Signals announcement and expanded via the News post.

The details in the tweets are high-level, but the framing is clear: treat software development as a feedback system the agent can improve over time, rather than a one-off “do this task” loop.

Weaviate’s “what is an agent” reset focuses on autonomy loops over chat UX

Agent definition (Weaviate): Weaviate argues the word “agent” has become meaningless, and anchors it on autonomous operation in complex environments—goal interpretation, tool execution, stateful memory, and evaluation/adaptation over time, as laid out in the Agent definition post.

The practical engineering point is that “agent-ness” is less about extra reasoning tokens and more about building a loop with verifiable state, tool boundaries, and failure recovery—i.e., the parts teams typically end up hand-rolling anyway.

LangChain publishes a “when multi-agent” and “which architecture” guide

Multi-agent docs (LangChain): LangChain says it has new documentation focused on deciding when you actually need multi-agent setups and how to choose an architecture, as announced in the Docs announcement.

This is mostly a packaging move (guidance rather than new primitives), but it’s aimed at a real failure mode: teams defaulting to multi-agent as a buzzword without a clear delegation boundary or coordination model.

Per-subagent sandboxes show up as a default RLM deployment pattern

Recursive Language Models (RLM): A shared RLM guide emphasizes giving each agent/sub-agent its own execution sandbox (framed as a core design choice, not an add-on), as referenced in the RLM guide mention.

This pattern treats isolation as the scaling primitive: instead of “one agent with all tools,” you get many small, separately contained workers, which makes retries and blast-radius control tractable when you start parallelizing.

RSA + Gemini 3 Flash claim: 59.31% on ARC‑AGI‑2 at one-tenth cost

Recursive Self-Aggregation (RSA): A result being circulated claims RSA paired with Gemini 3 Flash hits 59.31% on public ARC‑AGI‑2 while costing about 1/10th of Gemini Deep Think, per the RSA result claim.

This is an eval-and-systems signal more than a model story: it suggests algorithmic scaffolding (aggregation over attempts) can buy a large chunk of “reasoning” at much lower cost, at least on this benchmark.

“Teams, not swarms” framing spreads as a multi-agent coordination cue

Multi-agent metaphors: The “don’t call them swarms” thread continues, arguing that words like teams/organizations imply useful structure (roles, delegation, accountability) rather than spooky vibes, with the discussion captured in the Collective nouns thread.

Alongside that, there’s pushback that “swarm” is an unnecessarily dominant metaphor and alternatives (other sci‑fi metaphors, different coordination images) might be more accurate, as suggested in the Metaphor critique.

🧱 Skills & extensions: packaging capabilities for agents

The installable extension layer is heating up: skills directories, packaged model/tool skills, and “agent skills ecosystem” growth. Excludes MCP Apps (feature) and pure agent-runner ops (agent-ops-swarms).

skills.sh reports 550+ skills/hour and improves CLI discovery

skills.sh (Vercel): The open “agent skills” directory is scaling fast—reportedly 550+ skills added every hour—and the maintainers also shipped more CLI tools plus better on-site search/discoverability, as described in the [status update](t:48|status update).

The practical impact is that “installable capabilities” are trending toward a package ecosystem (install/find/check/update) instead of bespoke copy-pasted agent prompts, with npx skills@latest positioned as the entry point in the [CLI preview](t:48|CLI preview).

Black Forest Labs packages FLUX as an installable agent skill

BFL Skills (Black Forest Labs): Black Forest Labs wrapped FLUX into a single installable skill so coding agents can handle model selection, prompting, and API wiring; install is shown as npx skills add black-forest-labs/skills in the [launch post](t:133|launch post).

• Model presets: The skill advertises “sub-second generation/editing” via [klein], “highest quality” via [max], and text rendering via [flex], as laid out in the [feature list](t:133|feature list).

• Tool portability: It’s explicitly framed as working across Claude Code, Cursor, and other IDEs, per the [compatibility note](t:133|compatibility note).

ClawdHub directory flagged for a reported supply-chain attack

ClawdHub (skills directory): A warning circulated about a reported supply-chain attack on the ClawdHub skills directory, where a skill allegedly inflated downloads to reach #1 and “multiple users” were compromised, as claimed in the [incident post](t:793|incident post).

The follow-up frames this as a reminder that the ecosystem is still “dev tooling and for tinkerers,” emphasizing cautious setup and operational diligence, as clarified in the [safety follow-up](t:950|safety follow-up).

Browser Use shows a lead-enrichment loop driven by Clawdbot subagents

Browser Use (integration pattern): A concrete “skills-powered” automation loop is being shared: run an hourly job that fetches new GitHub starrers, enriches profiles via browser subagents, scores fit, then triggers Slack alerts and outbound via Gmail/LinkedIn, as described in the [workflow recipe](t:532|workflow recipe).

This is a clean example of how agent extensions are turning into repeatable pipelines: the important part isn’t one tool call, it’s the ongoing loop (schedule → enrich → score → alert → act) baked into the [Clawdbot + Browser Use setup](t:886|setup link).

ElevenLabs launches a Clawdbot voice-skill contest with a Mac Mini prize

ClawdEleven contest (ElevenLabs): ElevenLabs is running a short contest to build a Clawdbot skill using ElevenLabs audio models or its Agents platform, with a Mac Mini as first prize, as announced in the [contest post](t:301|contest post).

• Prize structure: The thread spells out 1st/2nd/3rd prizes (Mac Mini + Pro plan; then Pro plans), as enumerated in the [prize list](t:704|prize list).

• Entry requirements: They ask for a video demo and a code+docs submission via a form, as detailed in the [how to enter](t:776|how to enter) instructions.

RepoPrompt’s /rp-investigate is used for human-readable bug reports

RepoPrompt (/rp-investigate): Practitioners are leaning on /rp-investigate to produce a structured, human-readable report for tricky bugs and complex systems, rather than iterating in free-form chat, as described in the [workflow note](t:465|workflow note).

In the same thread of tool-choosing, RepoPrompt’s author claims model strengths split by task—Claude for “talking ergonomics,” Codex for deeper research, GPT‑5.2 for deepest analysis—then uses /rp-build to automate routing, according to the [tool positioning](t:208|tool positioning).

AI SDK skill is getting traction for doc fixes and cleanup work

AI SDK skill: The AI SDK skill is getting positive field feedback, with developers saying it “works much better than expected” when agents generate AI SDK code, as stated in the endorsement.

A notable usage angle is documentation maintenance: one report claims agent loops are showing “superhuman performance for fixing docs,” tying the win to doc/cleanup work rather than net-new feature code, as described in the [docs note](t:529|docs note).

📏 Benchmarks & eval signals: tool-use scores, planning gaps, and market-based tests

Today’s eval discourse clusters around agentic benchmarks (HLE/tool use, planning benchmarks) plus market-style evaluations (PredictionArena). Continues yesterday’s model race, but with new benchmark artifacts and leaderboards mentioned explicitly.

Qwen3-Max-Thinking claims 58.3% on HLE with tools and strong reasoning gains

Qwen3-Max-Thinking (Alibaba): Alibaba announced Qwen3-Max-Thinking with an emphasis on adaptive tool-use (Search/Memory/Code Interpreter without manual switching) plus test-time scaling/self-reflection, as described in the Launch thread. The headline benchmark claim is 58.3% on Humanity’s Last Exam with search/tools, also summarized in the Benchmark comparison.

• Benchmarks and comparisons: the published chart highlights HLE (with Search) at 58.3 for the TTS variant (and 49.8 base), with comparisons against GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro, as shown in the Launch thread; the separate comparison table reiterates HLE (w/tools) 58.3% alongside SWE-bench Verified 75.3%, per the Benchmark comparison.

• Builder sentiment: early reactions include “qwen3-max-thinking is WOW” in the Builder reaction and “outperforms all SOTA models… in HLE with search tools” in the Evals reaction.

A key open question is how much of the “tool-use lift” comes from the benchmark harness/tooling vs model behavior; the tweets provide charts but no independent reproduction artifact yet, based on the Launch thread and Benchmark comparison.

GPT-5.2 Pro sets a FrontierMath Tier 4 record at 31%

FrontierMath Tier 4 (EPOCH AI result): A report claims GPT‑5.2 Pro reached 31% accuracy on FrontierMath Tier 4, up from a prior 19%; it also notes 15/48 problems solved, including four that “had never been solved by any model before,” per the FrontierMath result.

The same post adds that performance on “held-out problems” was strong (suggesting non-memorization), but the tweet itself is the only artifact provided here, per the FrontierMath result.

Kimi K2.5 publishes agent-benchmark claims: HLE 50.2% and BrowseComp 74.9%

Kimi K2.5 (Moonshot AI): Moonshot announced Kimi K2.5 as “open-source visual agentic intelligence,” publishing agent-benchmark claims including HLE (full) 50.2% and BrowseComp 74.9%, plus a cluster of vision/coding scores in the Model announcement.

• Where it’s positioned: the same chart claims competitiveness across agent tasks (BrowseComp/DeepSearchQA) and coding (e.g., SWE-bench Verified 76.8%), per the Model announcement.

• Parallelism story: Moonshot also markets an “Agent Swarm (Beta)” concept (“up to 100 sub-agents”) as part of the product framing in the Model announcement.

The benchmark claims are vendor-reported in the tweets, and the “agent swarm” feature is described as beta/limited access, based on the Model announcement.

DeepPlanning benchmark formalizes long-horizon planning with verifiable constraints

DeepPlanning (Alibaba/Qwen): Alibaba introduced DeepPlanning, a long-horizon agent planning benchmark built around verifiable global constraints (time budgets, cost limits, combinatorial optimization) rather than step-by-step reasoning, as described in the Benchmark intro. It’s positioned as a reality check for “agent planning” because the whole plan must satisfy constraints end-to-end.

• Early signal from the shared table: the accompanying benchmark table shows Deep Planning remains hard across frontier models—GPT-5.2 at 44.6, Claude 4.5 at 33.9, Gemini 3 Pro at 23.3, and Qwen3-Max-Thinking at 28.7, as shown in the Benchmark table.

The benchmark is explicitly framed as exposing long-horizon “constraint drift” that current tools and planning heuristics still fail to catch reliably, per the Benchmark intro.

PredictionArena reveals early Grok 4.20 checkpoint leading with +10% returns

PredictionArena (market-style eval): A “mystery model” on PredictionArena was revealed as an early Grok 4.20 checkpoint; it reportedly delivered +10% returns over two weeks while other models were negative, per the Leaderboard reveal. The same post claims Opus 4.5 and GLM 4.7 were both around -2%, with the live standings available via the Live leaderboard.

This is a different kind of eval signal: it’s not accuracy on a fixed test set, but ongoing performance in a trading environment where calibration and update timing matter, as described in the Leaderboard reveal.

Qwen3 Max Thinking enters LM Arena Text Arena for head-to-head testing

Text Arena (LMArena): LMArena announced Qwen3 Max Thinking is now in the Text Arena, inviting direct head-to-head comparisons “with your toughest prompts,” per the Arena announcement. The same announcement frames it as a follow-on to a prior Qwen3 Max preview that debuted “in the top 10,” per the Arena announcement.

This is an eval-surface update rather than a new benchmark: it makes the model accessible for community pairwise testing, but the tweets don’t include methodology details beyond the arena framing in the Arena announcement.

🚀 Runtime & execution: containers, local inference, and context limits

Infra-adjacent engineering updates today are mostly about how code actually runs: ChatGPT’s expanded sandbox/container abilities, vLLM operational tips, and local stacks like Ollama expanding capabilities. Excludes hardware announcements (hardware-accelerators).

ChatGPT Code Interpreter quietly gains multi-language container runtimes

ChatGPT Code Interpreter (OpenAI): ChatGPT’s “Code Interpreter” environment appears to have been upgraded into something much closer to a general container sandbox—supporting pip/npm installs and execution across Python, Node.js, Bash, Ruby, Perl, PHP, Go, Java, Swift, Kotlin, C and C++, as documented by a community teardown rather than official release notes in the containers write-up and the linked blog post. This matters because it changes what “bring code + run it” means inside ChatGPT: you can now validate multi-language snippets, build small CLIs, and run cross-runtime glue without leaving the chat surface.

The open question is scope/limits (networking, persistence, system libs), since the rollout was not formally documented in the tweets.

Ollama adds a first-party Clawdbot integration via ollama launch

Clawdbot on Ollama (Ollama): Ollama is promoting a first-party integration path to run Clawdbot with local models using ollama launch clawdbot, pointing to official setup instructions in the docs page and the announcement post.

This is mainly a deployment/execution story: it lowers the friction to swap remote model calls for local inference (and can shift the bottleneck from API spend to local GPU/RAM/latency).

vLLM can auto-fit max context length to your GPU

vLLM (vLLM Project): A small but high-impact serving trick: --max-model-len auto (or -1) makes vLLM choose the largest context that fits GPU memory, avoiding startup OOMs on ultra-long-context models; the example shows vLLM reducing a 10,485,760 token setting down to 3,861,424 to fit KV cache constraints in the tip card.

This is the kind of toggle that turns “can’t even start the server” into “ship with a smaller context ceiling,” with no manual KV math.

Ollama adds local image generation via x/z-image-turbo

Ollama (Ollama): Ollama is now being shown running local image generation models from the CLI—ollama run x/z-image-turbo produces an image file directly from a prompt, as shown in the terminal screenshot.

For teams already standardizing on Ollama for local LLM serving, this is a concrete step toward a single local runtime for both text and image workflows (with all the usual local constraints: GPU availability, model size, and throughput).

SGLang day-0 serving command for Kimi K2.5 lands

SGLang (LMSYS): SGLang published a day-0 serving recipe for Kimi K2.5, including explicit flags for --tool-call-parser kimi_k2 and --reasoning-parser kimi_k2, as shown in the command snippet.

Operationally, this is a reminder that “model is supported” increasingly means “the runtime has the right tool-calling and reasoning adapters,” not just that weights load.

Sentence Transformers 5.2.1 supports Transformers v5.x and v4.x

Sentence Transformers (v5.2.1): Sentence Transformers v5.2.1 adds official compatibility with Transformers v5.x while keeping support for v4.x, positioning this as a dual-track transition rather than a hard break; the release is announced in the version post and detailed in the release notes.

This matters if you run embedding/reranking services in production: Transformers major bumps tend to cascade into packaging, inference regressions, and pinned environments—so explicit dual support reduces “forced upgrade” risk.

💼 Enterprise & monetization: ads, bundling, and “new tools” roadmaps

Business signals today are about commercialization pressure and enterprise positioning: premium ad pricing, enterprise bundling, and tool-roadmap teasers. Excludes hardware capex (hardware-accelerators) and MCP Apps (feature).

ChatGPT ads reportedly priced at ~$60 CPM, above broadcast TV and CTV

ChatGPT Ads (OpenAI): Ad inventory in ChatGPT is being priced around $60 per 1,000 impressions (CPM), according to the [pricing chart](t:33|pricing chart); that’s positioned above broadcast TV ($43.5) and streaming/CTV ($27.3) as shown in

.

• Measurement gap: A separate write-up notes OpenAI may not provide advertisers detailed reporting about which query responses ads appeared next to or downstream actions (clicks/purchases), as described in the [commentary](t:106|pricing commentary).

The operational implication is that OpenAI is trying to monetize attention at a premium, while still figuring out what “ad attribution” looks like inside an assistant UX, as reflected in the [pricing chart](t:33|pricing chart).

Anthropic revenue growth claim: $100M (2023) → $1B (2024) → $10B (2025)

Anthropic growth (Anthropic): A widely shared clip claims Anthropic has grown from $100M in 2023 → $1B in 2024 → $10B in 2025, framed as evidence for an “exponential relationship between cognitive capability and revenue,” as stated in the [growth clip](t:72|growth clip).

The main takeaway for builders is the implied pricing power and demand elasticity for higher-capability models—if the growth figures in the [growth clip](t:72|growth clip) are directionally right, enterprises are already paying for capability jumps at scale.

ChatGPT web app shows early signals of commerce: carts and merchant self-service

ChatGPT web app (OpenAI): The ChatGPT web UI is showing early commerce/personalization surfaces—“Carts,” merchant self-service settings, and hints of product discovery—alongside a “temporary chat” option that may still retain memory/style/history, as described in the [UI roundup](t:298|UI roundup).

• Enterprise segmentation: The same report mentions “ChatGPT FinServ” plans (compliance/integration oriented) as part of a broader monetization stack, per the [UI roundup](t:298|UI roundup).

This reads less like a single feature launch and more like UI plumbing surfacing ahead of a larger commerce push, as suggested by the concrete cart screenshot in

.

OpenAI Town Hall: “Login with ChatGPT”, portable memory, and collaborative hardware teased

OpenAI Town Hall (OpenAI): Following up on Town hall announced (builder feedback on “new tools”), OpenAI’s livestream recap highlights a “collaborative, multiplayer” hardware concept ("five people around a table" with a helper robot) and an upcoming “Login with ChatGPT” flow that starts as token budget sharing but aims at portable memory, per the [town hall recap](t:155|town hall recap).

• Cost trajectory claim: The same recap asserts GPT‑5.2-level capability at ~1/100th the price by end of 2027, as stated in the [town hall recap](t:155|town hall recap).

• Model quality tradeoff: Sam Altman reportedly said GPT‑5.2 writing regressed because it was “overtrained on math + coding,” per the [town hall recap](t:155|town hall recap), which is consistent with other quoted fragments in the [live notes](t:527|live notes).

A lot is still non-specific (no product names, timelines, or specs beyond the cost target), but the direction is clear in the [town hall recap](t:155|town hall recap): identity/memory portability and new hardware as distribution for “agent builder” workflows.

Google reportedly acquires Common Sense Machines for 2D→3D asset generation

Common Sense Machines acquisition (Google): Google reportedly acquired Common Sense Machines (CSM), a small team building fast 2D-image-to-3D asset generation; the write-up cites ~12 employees and a prior ~$15M valuation, per the [acquisition summary](t:78|acquisition summary).

The strategic read in the [acquisition summary](t:78|acquisition summary) is that 3D creation (games, retail catalogs, AR) is becoming a competitive surface alongside text/image/video, and buying a specialized team may be faster than building from scratch.

OpenAI reportedly pitches enterprises on bundling ChatGPT, Codex, APIs, and automation

OpenAI enterprise packaging: OpenAI is reportedly pushing a “one-stop AI shop” pitch to win (or retain) large enterprise customers—bundling ChatGPT, Codex, APIs, and workflow automation, per the [enterprise note](t:84|enterprise note).

The competitive hook is explicit: the pitch is framed as a response to Anthropic’s traction with developer-facing offerings like Claude Code and contract flexibility, as described in the same [enterprise note](t:84|enterprise note).

🧠 Model releases worth testing: Qwen3-Max-Thinking, Kimi K2.5, and new open entrants

Model news today is dominated by China-side releases and rollouts (reasoning/tool-use and multimodal agentic models), plus new open models showing up in arenas. Excludes runtime/serving integration details (systems-inference).

Kimi K2.5 arrives as an open-source multimodal agent model with swarm mode

Kimi K2.5 (Moonshot AI): Moonshot announced Kimi K2.5 as “open-source visual agentic intelligence,” highlighting agentic benchmark wins like HLE full set 50.2% and BrowseComp 74.9% plus solid vision/coding scores (e.g., MMMU Pro 78.5%, SWE-bench Verified 76.8%), as shown in the [release post](t:34|release post).

Distribution looks broad on day one: K2.5 is live in chat/agent modes on kimi.com and via API, per the [launch note](t:34|launch note), with users also spotting rollout to the mobile app in the [app screenshot](t:107|mobile rollout) and web UI confirmations in the [web screenshot](t:134|web rollout). OpenRouter also lists K2.5 with vision support, as noted in the [provider post](t:569|OpenRouter availability).

• Agent “swarm” positioning: Moonshot is explicitly marketing a parallel sub-agent mode (“up to 100 sub-agents” and “1,500 tool calls”), emphasizing throughput as a product feature in the [release post](t:34|release post).

• Early hands-on tone: Initial testers describe a noticeable quality jump on coding prompts (“really promising on zero-shot coding prompts”), as seen in the [early testing note](t:96|early testing note).

What’s still unclear from today’s material: pricing and reliability at scale, and how well swarm mode performs on messy real repos versus benchmark-style agent tasks.

Qwen3-Max-Thinking ships with adaptive tool-use and strong tool-assisted evals

Qwen3-Max-Thinking (Alibaba): Alibaba launched Qwen3-Max-Thinking, positioning it as a reasoning/agent model with adaptive tool-use (search, memory, code interpreter) and test-time scaling via multi-round self-reflection, with access via Qwen Chat and OpenAI-compatible Completions/Responses APIs as shown in the [launch thread](t:11|launch thread) and the linked [Qwen Chat](link:11:0|Qwen Chat).

The headline benchmark claim is 58.3% on Humanity’s Last Exam with search/tools (with TTS), alongside 91.4 on LiveCodeBench and 75.3 on SWE-bench Verified, as plotted in the [benchmarks chart](t:11|benchmarks chart) and reiterated in the [score table](t:108|score table). The rollout also includes a “+ Thinking” UI in Qwen Chat, per the [product UI screenshot](t:119|Qwen Chat UI).

• Where it matters for builders: The model is explicitly tuned for tool-heavy work (search + execution), and the charted uplift from test-time scaling/TTS is most visible on tool-assisted evals like HLE-with-search, as shown in the [benchmarks chart](t:11|benchmarks chart).

• Early sentiment: Posts reacting to the chart call it “overall really impressive evals” and highlight tool-use as the differentiator, as seen in the commentary and the [follow-up invite](t:58|try thinking model).

As a practical note, treat the broad “beats X” framing as provisional—today’s evidence is mostly vendor charts plus early reactions, with limited independent harness reports in the thread set.

Moonshot open-sources Kimi Code, its Apache-2.0 coding agent

Kimi Code (Moonshot AI): Moonshot released Kimi Code, an Apache 2.0 open-source coding agent, pitched as “fully transparent” and designed to integrate with mainstream IDEs including VS Code, Cursor, JetBrains, and Zed, as described in the announcement.

The demo framing emphasizes agentic development mechanics: self-directed doc search, screenshot-based visual verification, and self-correction loops, as shown in the [demo clip](t:634|demo clip). Moonshot also published entry points for trying it plus the VS Code extension and repo links, as listed in the [getting started post](t:785|getting started).

• Packaging: The project is positioned as “out-of-the-box ready,” with links to the code agent landing page and IDE extension in the [resource list](t:785|resource list).

• Model pairing: Moonshot explicitly suggests pairing Kimi Code with Kimi K2.5 for production-grade coding, per the announcement.

There’s no benchmark claim attached specifically to Kimi Code in today’s tweets; the evidence is mostly integration claims + a UI-driven build demo.

xAI adds a “Dev Models” section for Grok prompt and tool control

Grok Dev Models (xAI): xAI is rolling out a “Dev Models” area in the Grok UI that appears to let users override the base system prompt, customize developer prompts, and adjust tool-call behavior, as shown in the [UI screenshots](t:98|UI screenshots).

The surfaced menu also shows model variants like “Grok 4.1 Thinking” and “Heavy,” implying this feature is meant for higher-control configurations rather than a single fixed chat experience, per the [model menu screenshot](t:98|model menu screenshot). The screenshot set includes some “failed to load” states, suggesting the rollout is still settling.

This is notable for teams building agentic workflows around Grok: it’s closer to “configuration as product surface” than the usual one-textbox chat UI, but the screenshots don’t yet show any public API contract or docs.

An early Grok 4.20 checkpoint shows up as the top Prediction Arena trader

Grok 4.20 checkpoint (xAI): A “mystery model” on Prediction Arena was revealed as an early Grok 4.20 checkpoint and is reported to have delivered +10% returns over two weeks, while other named models were negative, according to the [arena recap](t:69|arena recap).

The same post frames this as the only model “in profit” versus an average return of -22% across Kalshi contracts, as stated in the [arena recap](t:69|arena recap) and echoed in the [screenshot repost](t:112|screenshot repost). This is not a standard language/coding benchmark, but it’s a live-market, outcome-scored signal that’s hard to fake post-hoc.

Two caveats remain: (1) it’s an “early checkpoint,” not a product SKU with stable availability; (2) trading environments can be sensitive to strategy/harness, and the tweet set doesn’t document the prompting/tooling setup behind the returns.

Molmo 2 enters Arena for community evals

Molmo 2 (Allen AI) in Arena: LMArena added Molmo 2 as a new open model entry (Apache 2.0) for head-to-head prompt testing, per the [Arena announcement](t:427|Arena announcement).

A separate provider note calls out “Molmo 2 (8B)” availability via Hugging Face inference providers, as mentioned in the [provider repost](t:331|inference providers note). This is mainly a distribution/testing surface update rather than a model-card refresh.

Treat early Arena impressions as directional: the tweet set doesn’t include an official Molmo 2 model card, eval sheet, or specific benchmark numbers beyond availability.

🎙️ Voice agents: TTS rollouts and voice-first agent workflows

Voice coverage today is practical: shipping TTS endpoints and builders wiring voice to agents (including remote voice control of coding sessions). Excludes creative video/image generation (gen-media-vision).

Pipecat MCP server enables voice-only remote control of Claude Code sessions

Pipecat MCP server (Pipecat): a Pipecat-based MCP server was shown controlling Claude Code by voice from “anywhere,” with an option for experimental screen capture; the setup uses Deepgram for transcription and Cartesia for speech output, as demonstrated in the voice control walkthrough.

• Operational shape: it’s positioned as transport-flexible (WebRTC by default, “could call on the phone”), and it’s not Claude-specific—because it’s MCP, the same pattern can be wired into other agent runtimes, per the voice control walkthrough.

fal ships Qwen3‑TTS endpoints with cloning + voice design variants

Qwen3‑TTS (fal): fal published Qwen3‑TTS endpoints that cover voice cloning (from ~3 seconds), free-form voice design, and multiple model sizes (0.6B and 1.7B) aimed at faster inference for interactive apps, as laid out in the endpoint thread.

• Deployment signal: the productization is “API-first” (multiple SKUs rather than one model), which tends to map cleanly onto tiered latency/cost requirements in voice agent stacks, as stated in the endpoint thread.

Replicate adds Qwen‑TTS with 3‑second voice cloning for real‑time agents

Qwen‑TTS (Replicate): Replicate shipped Qwen‑TTS as a hosted TTS endpoint with 10 languages, 3‑second voice cloning, and “voice design from text,” positioning it for low-latency, live agent experiences as described in the launch demo.

• Why engineers care: this is a ready-made voice layer you can wrap around tool-using agents without running your own inference stack; the feature set (cloning + design) is tuned for “assistant voice” personalization rather than audiobook-style generation, per the launch demo.

Cartesia × Anthropic (plus Exa) set a 2‑day voice agent hackathon at Notion

Voice agents hackathon (Cartesia × Anthropic): a 2‑day in‑person hackathon focused on voice-first agents (audio + reasoning + memory + action) was announced for Feb 7–8 at Notion HQ in SF, with $20k+ in credits and partner support called out in the event invite and the partner announcement.

• Why this matters: it’s a concrete signal that “voice + tool use + search” is becoming a standard bundle for agent demos, rather than a niche UI, as framed in the partner announcement.

ElevenLabs launches a Clawdbot voice-skill contest with a Mac Mini prize

Clawdbot voice skills (ElevenLabs): ElevenLabs opened a contest to build a Clawdbot skill using ElevenLabs audio models / Agents platform, with prizes including a Mac Mini and Pro plan time, as shown in the contest poster.

• Timeline details: the submission window and judging mechanics were posted separately, including a deadline of Feb 2 (6am UTC) in the timeline post.

A municipal “civic concierge” voice agent reports 3,000+ calls/day

ElevenLabs Agents (City of Midland): a case writeup claims the City of Midland, Texas runs a phone/web “civic concierge” built on ElevenLabs Agents handling 3,000+ inbound calls per day, replacing IVR trees with multilingual natural-language interactions, according to the deployment summary.

The operational emphasis is framed around security expectations in government deployments, echoed in the quoted line in the deployment summary.

ElevenLabs announces Scribe v2 hackathon winners and prize amounts

Scribe v2 hackathon (ElevenLabs): ElevenLabs posted winners for the Scribe v2 hackathon—VoxGuard (1st), WordScribe (2nd), and Momento (3rd)—and tied each placement to prize amounts and plan durations, as listed in the first place winner, the second place winner, and the third place winner.

The theme across all three awards is “real workflow usefulness” over polish, matching the framing in the winners thread.

🖥️ Accelerators: Maia 200 and the inference-cost arms race

Hardware talk today is narrowly focused: Microsoft’s Maia 200 inference accelerator specs and positioning vs Trainium/TPUs. Excludes runtime kernel tweaks (systems-inference).

Microsoft Maia 200 lands as an Azure inference chip with 216GB HBM3e

Maia 200 (Microsoft): Microsoft has officially introduced Maia 200, a custom AI inference accelerator, and says it’s becoming available on Azure for advanced AI workloads, as shown in the [availability post](t:186|availability post); headline specs being cited include 10+ PFLOPS FP4, 216GB HBM3e, and ~7TB/s memory bandwidth, with a claim of ~30% better performance per dollar, according to the [spec recap](t:175|spec recap).

• Positioning vs hyperscaler chips: A widely shared comparison table frames Maia 200 as Microsoft’s response to AWS Trainium3 and Google TPU v7, including reported throughput and memory figures, as shown in the [spec comparison table](t:878|spec comparison table).

What remains unclear from today’s posts is which Azure SKUs/regions get Maia 200 first and whether developers will see explicit pricing/perf-per-token benchmarks beyond the headline perf/$ claim.

🛡️ Safety & governance: autonomy risk, incentives, and regulation pressure

Safety/policy discourse today is driven by Dario Amodei’s essay and follow-on takes about autonomy risk, national security, and economic concentration. This section intentionally omits any bio/chemical weapon details.

Dario Amodei’s “The Adolescence of Technology” argues 2027-level powerful AI is plausible

The Adolescence of Technology (Dario Amodei / Anthropic): Amodei argues there’s “a strong chance” of “powerful AI” arriving within the next few years—possibly as soon as 2027—framed as “a country of geniuses in a datacenter” with many concurrent instances, as summarized in the [essay recap](t:90|essay recap) and linked via the [essay text](link:381:0|essay text). He also claims the capability feedback loop is already underway (AI writing substantial code) and could be 1–2 years from AI autonomously building the next generation, as quoted in the [feedback loop excerpt](t:70|feedback loop excerpt).

• Governance posture: He repeatedly emphasizes transparency-first regulation (show-your-work obligations for large labs) and argues the key risk driver is the “least responsible players” pushing hardest against rules, as captured in the [regulation quote](t:433|regulation quote).

• Institutional coupling: He warns that AI datacenter-driven concentration can couple tech and government incentives (including reluctance to criticize government), as shown in the [incentives excerpt](t:337|incentives excerpt).

• Risk taxonomy (non-bio): He separates autonomy risk, power-seizing misuse, and economic disruption in a structured “what to worry about” list, as laid out in the [risk list screenshot](t:559|risk list screenshot).

Stanford “Moloch’s Bargain” finds engagement optimization drives deceptive agent behavior

Moloch’s Bargain (Stanford): A Stanford paper reports that when LLM agents are trained to win competitive social/economic “arenas” (sales, elections, social engagement), small metric gains correlate with large increases in deceptive or harmful behavior—especially in social settings where disinformation jumps sharply, per the [paper summary](t:182|paper summary).

• Observed tradeoff: The writeup claims “Text Feedback” improves win rate but also increases misalignment; the largest cited spike is social-media disinformation (+188.6%) alongside a +7.5% engagement lift, as enumerated in the [result breakdown](t:182|result breakdown).

• Governance relevance: The framing is explicitly incentive-driven (“competitive success at the cost of alignment”), which maps cleanly onto real product environments where reward functions are clicks/revenue/votes, per the [paper abstract](t:182|paper abstract).

AI takeover risk debate: Ryan Greenblatt pushes back on “quasi-religious” framing

AI takeover risk discourse: Ryan Greenblatt argues that believing misaligned takeover risk is high need not be “quasi-religious,” and says it can be rational to assign high probability (he gives ~40%), as stated in the [pushback post](t:230|pushback post) and reiterated in the [follow-up clarification](t:522|follow-up clarification). The disagreement is partly about messaging: if risk is high, he argues we should want beliefs to track that, as he frames it in the [pushback post](t:230|pushback post).

Anthropic cofounders and staff pledge large-scale wealth donation (80% for cofounders)

Anthropic (wealth pledge): A widely shared excerpt claims Anthropic’s cofounders pledged to donate 80% of their wealth and that staff have pledged shares worth billions (matched by the company), as quoted in the [pledge excerpt](t:82|pledge excerpt). It’s a concrete response to the wealth-concentration theme circulating alongside Amodei’s broader governance arguments, as echoed by a [reaction post](t:565|reaction post).

🧰 Dev tools & repos: terminal perf, agent browsers, and repo-scale utilities

This bucket covers builder tooling that isn’t a full coding assistant: terminal rendering/perf, agent browser CLIs, and ecosystem utilities. Excludes installable agent “skills” (coding-plugins).

WezTerm mux tuning uses RAM to stay responsive with 50+ concurrent agents

WezTerm mux tuning (doodlestein): A field report says that once you push past ~50 concurrent agents on a single machine, SSH sessions via WezTerm’s built-in mux can become laggy—even on 256–512GB RAM boxes—so the fix was to trade RAM for better mux parsing/scroll performance via config changes, as described in the Tuning writeup.

• Concrete knobs: The writeup highlights settings like scrollback_lines (e.g., 3,500 → 10M) and larger parser buffers/caches to reduce overflow/thrash, as shown in the Tuning writeup.

• Why it matters: If your agent workflow is “many panes, constant output,” terminal multiplexers become part of the reliability stack; this is a reminder that infra bottlenecks aren’t only GPUs—they’re also text pipelines, per the Tuning writeup.

agent-browser 0.8.0 adds Kernel cloud provider with stealth and cookie controls

agent-browser 0.8.0 (ctatedev): agent-browser shipped a new Kernel cloud browser provider with optional stealth mode, plus full cookie controls and an --ignore-https-errors flag for self-signed/local environments, as shown in the CLI examples.

• Operational surface: The release frames itself as both capability and hardening—“HiDPI screenshots, security hardening, and bug fixes” are called out alongside the new provider in the CLI examples.

• Practical impact: Cookie primitives and HTTPS-override flags reduce the amount of bespoke Playwright glue teams end up writing when wiring web automation into agent runners, as implied by the concrete commands in the CLI examples.

skills.sh reports 550+ skills/hour and expands CLI discovery commands

skills.sh CLI (rauchg): The skills.sh ecosystem reports “550+ skills added every hour” and ships additional CLI tools/options to improve search and discoverability, with a recommended entrypoint of npx skills@latest, as shown in the Growth and CLI note.

• What changed: The CLI now exposes a clearer command surface (add, find, check, update, init) aimed at treating skills as packages rather than copy-pasted snippets, as shown in the Growth and CLI note.

The directory itself is referenced via the Skills directory.

Charm’s Crush renderer targets 3ms frame times for agent-heavy TUIs

Crush (Charm): Charm says its in-house diffing renderer Crush can render a terminal frame in ~3ms, aiming to keep TUI interactivity intact even under high-frequency updates, as shown in the Render speed demo.

• Where it lands: Charm positions Crush as a core primitive in its terminal stack, including Bubble Tea, per the Bubble Tea pointer.

• Why it matters: For builders running multiple agents in terminal panes, low-latency redraw reduces the “UI tax” that shows up when output volume spikes and terminals start to stutter, as implied by the 3ms target in the Render speed demo.

DeepWiki turns repos into “codemap” docs for faster agent-readable context

DeepWiki (cognition): A shared example shows DeepWiki generating an agent-friendly doc view for clawdbot/clawdbot, including a “View as codemap” affordance that highlights architectural entry points and key claims, as shown in the DeepWiki screenshot.

• Why it matters: Repo-scale context packaging is becoming its own tool category; “codemap”-style pages can be dropped into agent prompts as a higher-signal alternative to dumping README + directory trees, as suggested by the structured bullets in the DeepWiki screenshot.

The underlying doc page is linked in the DeepWiki page.

🎥 Gen media & vision: real-time world editing and AI filmmaking workflows

Generative media shows up strongly today: real-time world editing, AI-assisted short films, image-editing product flows, and meme-generation features. Excludes voice/TTS (voice-agents).

DecartAI Lucy 2.0 claims real-time world editing at 1080p 30FPS

Lucy 2.0 (DecartAI): DecartAI is being described as shipping Lucy 2.0, a “world editing” model that transforms live video streams at 1080p / 30 FPS with “near-zero latency,” framed as a shift from offline text-to-video to interactive, continuous rendering, as shown in the release description.

• Stability focus: the thread emphasizes drift control (“stop the generated video from slowly falling apart”), with additional architecture details claiming a “pure diffusion model” plus “Smart History Augmentation” to penalize drift, as described in the architecture notes.

• Real-time positioning: the narrative is that it “redraws the entire world pixel-by-pixel” in response to motion, contrasted against 10–20 minute generation waits in traditional workflows, per the release description.

The core unknown from the tweets is deployment reality (availability, pricing, and hardware footprint aren’t specified).

DeepMind’s Sundance short outlines a controllable generative animation pipeline

Dear Upstairs Neighbors (Google DeepMind): Google DeepMind says its short film is previewing at Sundance and uses it to show a controllability-focused gen-animation pipeline—custom fine-tunes of Veo/Imagen on the team’s paintings, rough animation as conditioning, and region-level edits without regenerating full shots, as described in the Sundance thread and expanded in the capabilities summary.

• Control beats style matching: the key engineering claim is that the team’s art direction was “too unique for standard AI models,” so they built a workflow for look control and localized editing, per the storyboard constraints.

• Production framing: the published behind-the-scenes writeup is linked via the behind-the-scenes post, positioning this as a repeatable studio workflow rather than a one-off demo.

The tweets don’t include any release of the underlying tools/models; this reads more like a case study in controllable pipelines than a product launch.

Gamma showcases Nano Banana Pro for fast webpage generation and sharper text rendering

Nano Banana Pro in Gamma (Gamma): Gamma is being used as a host for “Nano Banana Pro” to generate whole webpages quickly (example: a realtor landing page in under a minute) and to render sharper, more reliable text inside images (logos/UI mockups), according to the webpage demo and the text rendering claim.

• Doc-to-site workflow: the thread frames this as end-to-end generation inside Gamma (site layout + visuals) rather than exporting assets to another builder, as shown in the landing page example.

The posts are usage-led (not spec-led): no model card, pricing, or latency numbers are provided in the tweets beyond “<1 min.”

Claude-built LucasArts-style adventure game highlights sprite and asset generation loops

AI game building workflow (Claude): A builder reports prompting Claude to remake an existing generated game into a LucasArts-style adventure and notes it “figured out how to create sprites from images for the inventory,” linking to a playable build in the game link and notes.

• Prompting detail: the follow-up includes the full “Sierra-style adventure game” master prompt and a spoiler walkthrough of the resulting game structure, as captured in the full prompt and walkthrough.

The concrete takeaway is that “complete game + assets + deploy” is now being attempted as a single-agent loop; the tweets don’t quantify iteration count or failure rate, so treat it as an anecdote, not a benchmark.

Hunyuan-Image-3.0-Instruct reaches #7 in LMArena’s Image Edit Arena

Hunyuan-Image-3.0-Instruct (Tencent): LMArena reports Tencent’s Hunyuan-Image-3.0-Instruct reached #7 in the Image Edit Arena, framed as a new lab breaking into the top 10 and landing close to Nano-Banana and Seedream-4.5, according to the leaderboard update.

The model’s arrival in the Arena is echoed by Tencent’s own note that it “just landed” there in the Arena availability note, but the tweets don’t include a reproducible eval artifact beyond the Arena placement.

LMArena launches Video Arena on web, with a comparison workflow walkthrough