OpenAI GPT‑Image‑1.5 tops image arenas at 1264 Elo – 20% cheaper tokens

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

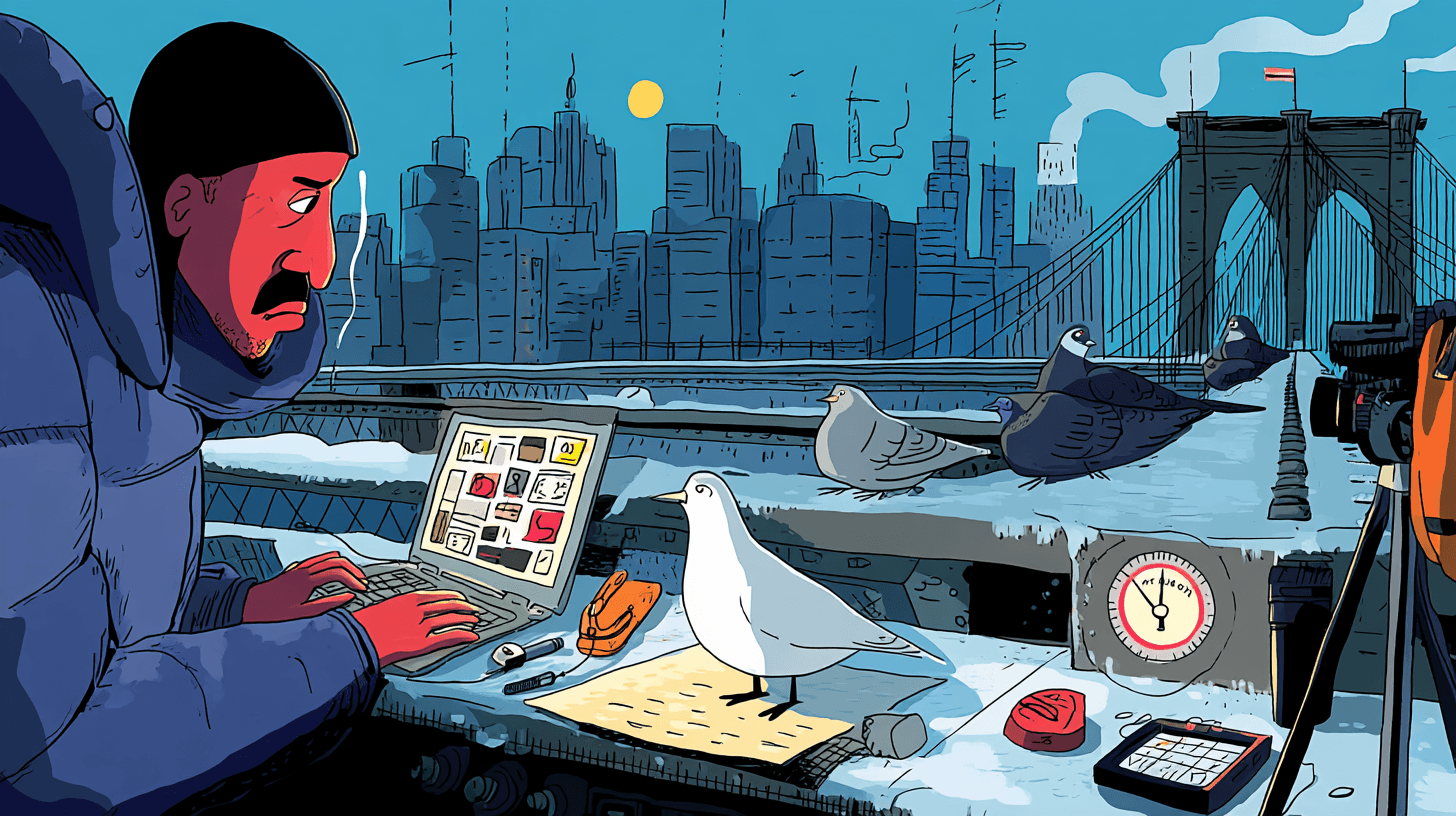

OpenAI quietly made its biggest image move since DALL·E by shipping GPT‑Image‑1.5 across ChatGPT (Free, Go, Plus, Edu, Pro) and the API, then wiring it into a new Images tab. The model is up to 4× faster than GPT‑Image‑1 and moves to token billing with roughly 20% cheaper image input and output tokens, resolutions up to 1536×1024, and discounted cached inputs so high‑volume apps don’t get punished for retries.

On benchmarks, the jump is real: GPT‑Image‑1.5 debuts on LMArena’s text‑to‑image board at 1264 Elo, about 29 points ahead of Gemini 3 Pro Image / Nano Banana Pro, and chatgpt-image-latest lands #1 on the edit leaderboard. Artificial Analysis shows similar gains, with +147 Elo over GPT‑Image‑1 on generation and +245 on editing. Builders report much sharper, identity‑preserving edits—"change only what you ask for" is finally more than marketing—and vastly better in‑image text for UI mocks, posters, and fake newspapers.

The new ChatGPT Images surface adds reusable style chips ("3D glam doll", "Plushie", "Holiday portrait"), prompt starters, and mobile branching so image work feels more like a design tool than a chat log. Day‑0 integrations on fal, Replicate, and Figma Weave mean you can A/B it against Nano Banana Pro today—many still prefer Google for dense infographics and party scenes. One caveat: early jailbreaks and a mobile partial‑diffusion bug suggest you should layer your own safety filters in front of whatever OpenAI ships by default.

Top links today

- ChatGPT Images and GPT Image 1.5 launch

- GPT Image 1.5 API documentation

- FrontierScience scientific reasoning benchmark overview

- GPT-5 wet lab cloning case study

- vLLM Router design and performance blog

- vLLM Router GitHub repository

- MiMo-V2-Flash large MoE model report

- cua-bench framework for computer-use agents

- Meta SAM Audio model announcement

- NVIDIA Nemotron-Cascade reasoning models release

- Apple SHARP single-image 3D view synthesis

- Hunyuan 3D 3.0 text-to-3D on fal

- FLUX.2 Max image generation model on Replicate

- Hindsight agent memory system research paper

- Gemini Gems manager and Opal workflows

Feature Spotlight

Feature: ChatGPT Images with GPT‑Image‑1.5

OpenAI’s GPT‑Image‑1.5 lands in ChatGPT and API: 4× faster gen, tighter instruction‑following/edits, 20% lower image I/O cost, new Images UI; early arenas show #1 text‑to‑image and #1 image edit (chatgpt‑image‑latest).

Cross‑account, high‑volume launch. OpenAI ships a new image model and an Images surface in ChatGPT; posts span API pricing/params, speedups, editing fidelity, UI rollout, and early head‑to‑head results/leaderboards.

Jump to Feature: ChatGPT Images with GPT‑Image‑1.5 topicsTable of Contents

🖼️ Feature: ChatGPT Images with GPT‑Image‑1.5

Cross‑account, high‑volume launch. OpenAI ships a new image model and an Images surface in ChatGPT; posts span API pricing/params, speedups, editing fidelity, UI rollout, and early head‑to‑head results/leaderboards.

OpenAI launches GPT Image 1.5 and new ChatGPT Images surface

OpenAI rolled out GPT Image 1.5 as its new flagship image model, powering a refreshed ChatGPT Images experience across Free, Go, Plus, Edu and Pro tiers, and exposing it in the API as gpt-image-1.5 with up to 4× faster generation, stronger instruction following, and more precise editing than GPT‑Image‑1. (launch thread, feature details, images surface)

The API pricing moves to token-based billing with 20% cheaper image input and output tokens, quality presets (low/medium/high/auto), support for resolutions up to 1536×1024, transparent backgrounds, streaming, and discounted cached inputs for both text and image tokens. (api pricing, rollout summary, image docs) OpenAI is also promoting a dedicated prompting guide and curated gallery to help developers learn how to get consistent results from the new model. (prompt guide, gallery examples, launch blog)

For builders, the key change is that you no longer have to pick a separate "image model" inside ChatGPT: GPT‑Image‑1.5 is wired behind the scenes for both from‑scratch generation and edits, exposes the same behavior in the Playground and API, and is priced to be a drop‑in upgrade over GPT‑Image‑1 workflows rather than a separate SKUs you have to special‑case.

Builders report much sharper, identity‑preserving edits with GPT‑Image‑1.5

OpenAI’s own copy stresses that GPT‑Image‑1.5 "changes only what you ask for" while keeping lighting, composition and people’s appearance stable across edits, and users are backing that up with concrete examples. (editing description, consistency note) The ChatGPT app account showed transformations like "turn this person into a K‑pop idol" and "make a chibi version" where facial features, skin tone and jewelry remain consistent even as styling, backgrounds and poses shift wildly. (k-pop edit example, chibi character shot)

Others are pushing it into multi‑image narratives: @emollick used it as the renderer for a point‑and‑click adventure, with inventory and world state tracked in text while each command yields a visually coherent next frame, something he says worked better than Nano Banana Pro for scene continuity. adventure game demo A full compilation from @petergostev shows similar behavior across portraits, product shots and cinematic scenes, suggesting the new model finally hits the bar for identity‑stable creative workflows like comics, slide decks and game concepts. image compilation

GPT‑Image‑1.5 jumps to #1 on LMArena and Artificial Analysis

Benchmark sites lit up as soon as GPT‑Image‑1.5 was added: on LMArena’s Text‑to‑Image board it debuted with an Elo of 1264, a 29‑point lead over Gemini 3 Pro Image / Nano Banana Pro at 1235, and well ahead of FLUX.2 [max] at 1168. (lmarena text update, lmarena screenshot)

On the Image Edit leaderboard, chatgpt-image-latest—which fronts GPT‑Image‑1.5 inside ChatGPT—took the top spot with 1409 Elo, edging Gemini 3 Nano Banana Pro (2K) by 3 points, while GPT‑Image‑1.5 itself sits at #4 with 1395. (lmarena text update, edit leaderboard detail) Artificial Analysis reports a consistent story: GPT‑Image‑1.5 ranks #1 in both Text‑to‑Image and Image Editing in their Image Arena, with +147 Elo over GPT‑Image‑1 in T2I and +245 in editing. (aa leaderboard post, aa recap) For teams that route prompts through arenas to pick backends dynamically, this is the first hard signal that OpenAI’s image stack has leapfrogged earlier DALL·E/GPT‑Image gens and is competitive with (or better than) Google’s current best.

ChatGPT adds an Images tab with style presets on web and mobile

Alongside the model, ChatGPT now has a first‑class Images surface: a sidebar tab on web and a dedicated Images screen on mobile that lets you pick styles, run edits, and browse your past generations without living inside a text chat. (web ui screenshot, early ui rollout, mobile ui)

The new UI surfaces reusable style chips like "3D glam doll", "Plushie", "Sketch" and "Holiday portrait", plus promptable starters such as "Create a holiday card" or "Me as The Girl with a Pearl" so non‑experts can get to decent outputs without crafting heavy prompts every time. (early ui rollout, mobile ui) On iOS and Android, conversation branching is now available too, which means you can fork an image exploration into a new branch while keeping the original thread intact—handy when a single source image needs several radically different treatments. mobile branching demo For teams, this matters because image work can now live in a quasi‑library space that feels closer to a design tool than a chat log, while still being backed by the same GPT‑5.2 stack you’re already using for text.

GPT Image 1.5 lands day‑0 on fal, Replicate and Figma Weave

Third‑party platforms moved quickly: fal announced day‑0 support for GPT‑Image‑1.5, so you can hit the new model for text‑to‑image, editing, and style transfer via their hosted endpoints without wiring OpenAI credentials yourself. (fal launch, fal followup)

Replicate likewise added GPT‑Image‑1.5 to its catalog for both generation and edit flows, positioning it next to FLUX and other heavyweights as another switchable backend. replicate launch On the design side, Figma is rolling GPT‑Image‑1.5 into both the main app (shipping "tomorrow" in their words) and the Weave/Weavy environment, showing side‑by‑side comparisons where 1.5’s edits (e.g. swapping pizza for grapes) better preserve identity and intent than GPT‑Image‑1. weave edit demo For engineers and design systems folks, this means you can start A/B‑ing GPT‑Image‑1.5 across existing fal/Replicate/Figma integrations immediately, without waiting on vendor‑specific SDKs to catch up.

GPT‑Image‑1.5 improves on in‑image text and logo preservation

For developers who care about marketing and UX assets, GPT‑Image‑1.5 explicitly targets better rendering of dense, small text and more faithful treatment of logos and faces. api text note The API docs call out improved text rendering for things like UI mockups, posters and dense labels, and an OpenAI‑generated mock newspaper shows it can now fill a page with legible, on‑theme copy rather than lorem‑ipsum‑style noise. (text rendering demo, image docs)

People testing it in the wild confirm that long headlines, subheads and multi‑section layouts are substantially more usable than with GPT‑Image‑1, though some still prefer Nano Banana Pro for ultra‑dense infographics and ultra‑clean typography. (infographic comparison, text rendering demo) Net effect: if you’re generating product hero shots, fake docs, or ad concepts where text actually has to be readable, GPT‑Image‑1.5 should cut down the number of "close but unusable" drafts you have to throw away.

Practitioners see GPT‑Image‑1.5 and Nano Banana Pro trading blows

Side‑by‑side tests against Google’s Gemini 3 Pro Image (Nano Banana Pro) show a nuanced picture: GPT‑Image‑1.5 often wins on prompt obedience, geometry and multi‑character consistency, while many users still like Nano Banana’s taste and infographic chops more. (visual comparison, single vs slides)

@deedydas finds GPT slightly better at "vivid images" but worse for complex slides and information‑dense layouts. visual comparison @emollick reports GPT‑Image‑1.5 beats Nano Banana Pro for an interactive point‑and‑click game, especially in keeping world style and objects stable, though he still leans Nano for complex graphics. (game example, single vs slides) Others argue Nano Banana Pro "has no competition" for certain photographic scenes or party shots, birthday dog test while @scaling01 calls GPT‑Image‑1.5 "slightly slightly worse" overall but reads that as a promising sign for a future Image 2. slight quality take The takeaway for teams is that there is no single obvious winner: you probably want to route different workloads (slides vs character art vs product edits) across both stacks and let real outputs, not leaderboard numbers, decide.

Users uncover partial‑diffusion leak and jailbreak patterns in GPT‑Image‑1.5

Early tinkerers are already stress‑testing GPT‑Image‑1.5’s safety layer. One user discovered that on mobile, if ChatGPT blurs a refused image, dragging that blurred thumbnail into the input field reveals a partially diffused, much clearer version of the blocked output—what they dubbed a "half‑diffused image" that still shows composition and rough content. (partial diffusion thread, zoomed followup)

Others report that by swapping the fronting chat model (e.g., 5‑instant vs 4o) or applying simple transforms like flips/filters to input images, they can sometimes get GPT‑Image‑1.5 to accept prompts or vision inputs that were previously rejected, and have shared collages of NSFW, weapons and copyright‑heavy scenes as proof. jailbreak collage

None of this is surprising given how image and text safety filters are usually layered, but it’s a reminder for anyone embedding GPT‑Image‑1.5 in consumer‑facing products: you should still put your own content filters and monitoring in front of whatever OpenAI does by default, especially around sensitive categories.

🚀 Open models and new releases (non‑vision)

Non‑feature launches relevant to builders. Focus on open‑weight LLMs and audio models; excludes OpenAI’s GPT‑Image‑1.5 which is covered as the feature.

Xiaomi open-sources MiMo‑V2‑Flash 309B MoE model with strong coding and math

Xiaomi’s MiMo team released MiMo‑V2‑Flash, a 309B‑parameter MoE model with 15B active parameters, trained on 27T tokens with hybrid sliding‑window + global attention and a 256k context window, targeting fast agentic workloads and reasoning. model overview Benchmarks shared by the team put MiMo‑V2‑Flash at 73.4% on SWE‑Bench Verified, 71.7% on SWE‑Bench Multilingual, 94.1% on AIME 2025, and 83.7% on GPQA‑Diamond, putting it in the same band as DeepSeek V3.2, Kimi K2 and other frontier open models while using fewer total parameters. benchmark breakdown It’s already live on OpenRouter with a free tier for now, openrouter launch and SGLang has day‑0 support including efficient sliding‑window execution and multi‑layer MTP on H200s, so you can test it in real serving setups without building your own stack. (sglang support, tech report)

For AI engineers, MiMo‑V2‑Flash is worth A/B‑testing as a primary or fallback coding/agent model—especially if you care about long‑context tool chains—since the open weights, strong SWE‑Bench numbers, and ready‑made SGLang/OpenRouter integrations make it relatively low friction to slot into existing routing or evaluation harnesses. model overview

Meta’s SAM Audio model lands on Hugging Face as open audio editor

Meta’s research group quietly shipped SAM Audio, a unified model that can isolate and edit arbitrary sounds from complex audio mixtures using text, visual or exemplar prompts, and it’s now available as an open collection on Hugging Face. (feature demo, hf collection) Early clips show clean separation of specific instruments or voices from noisy backgrounds, then re‑mixing or muting them, in a way that feels like "segment anything" for sound. feature demo

For builders, SAM Audio looks like a strong default for source separation, stem extraction, and targeted redaction (e.g., blurring license plates but for microphones): you can wrap it as a pre‑step before ASR, use it in editing tools to grab individual tracks, or bolt it into moderation pipelines to knock out sensitive voices or PII while preserving the rest of the scene. model collection

Browser‑Use open-sources BU‑30B‑A3B‑Preview, tuned for cheap web agents

browser_use open‑sourced BU‑30B‑A3B‑Preview, a 30B model trained specifically for browser and tool‑using agents, claiming it’s the "best model for web agents" on their internal benchmarks. model announcement A scatter plot shows BU‑30B‑A3B reaching ~76% accuracy while delivering over 200 tasks per $1, dramatically to the right of GPT‑5 and Claude Sonnet 4.5, which cluster at lower throughput for similar or slightly higher accuracy. model announcement

If you’re running large numbers of autonomous browsing tasks—lead scraping, backoffice workflows, multi‑page form filling—this is a model worth slotting into your router as the "cheap but smart enough" default. It’s open weights, tuned on agentic tool use, and designed to pair with the browser_use skill system, so you can get a realistic sense of how much you save versus frontier APIs in cost‑per‑completed‑task rather than just tokens.

Meituan’s LongCat‑Video‑Avatar open-sources real‑time talking character model

Meituan’s LongCat team released LongCat‑Video‑Avatar, an open model on Hugging Face that turns text, audio and reference images or video into audio‑driven character animation, including lip‑sync and expression control. model release The HF card exposes it as a general avatar system—feed in a still or short clip of a character plus a speech track and it outputs an animated talking head, useful for agents, support bots or in‑app guides.

The fact this is fully open means you can fine‑tune on your own characters, ship it on‑prem if you care about data, or pair it with any TTS / LLM stack; it’s a good candidate to experiment with if you want agent faces without sending traffic to closed SaaS avatar providers. huggingface repo

NVIDIA Nemotron‑Cascade 8B debuts as open general‑purpose reasoning model

NVIDIA followed its Nemotron‑3 Nano line with Nemotron‑Cascade, a family of open general‑purpose reasoning models, including an 8B variant released on Hugging Face. (family announcement, 8b release) Cascade is pitched as a multi‑stage, cost‑aware setup where a small base model handles straightforward questions and can hand tougher ones up the stack, giving teams another fully open option for agent workflows that need routing between fast and slow paths, following up on the open‑stack push in open stack around Nemotron‑3 Nano.

For engineers who already started evaluating Nemotron‑3 Nano, model summary Cascade looks like the “controller + expert” piece: something you can wire into your tool‑calling or web‑agent systems to decide which problems deserve more tokens, while still staying in an Apache‑friendly, GPU‑optimized ecosystem with first‑class Triton / vLLM / SGLang support.

VoxCPM TTS ports to Apple’s MLX for native Mac inference

OpenBMB’s VoxCPM text‑to‑speech model now runs natively on Apple Silicon via the MLX framework, thanks to a new integration into the mlx-audio stack. mlx integration This adds a second backend alongside Metal (MPS), so Mac users can run high‑quality multilingual TTS locally with better efficiency and without shipping data to cloud APIs.

For AI app builders targeting macOS—podcast tooling, offline assistants, dev tools—this means you can prototype fully local voice features using the same model across both MPS and MLX backends, and later swap in cloud TTS only where latency or voice variety really demands it.mlx audio repo

🗣️ Voice agents and native audio progress

Voice model metrics and platform shifts; mostly Gemini’s native audio gains and product lifecycle notes. Excludes image‑model content covered as the feature.

Lemon Slice 2 turns any voice agent into a 20 fps talking avatar

Lemon Slice 2 is being rolled out as a real‑time diffusion transformer that takes a single image and a live voice stream, and outputs an infinite‑length 20 fps talking video on a single GPU avatar launch thread modal infra note. It works across humans, cartoons, animals, paintings, and even inanimate objects—"if it has a face, you can talk to it now"—and is available as both an API and embeddable widget.

For teams already running voice agents, this is effectively a drop‑in visual layer: you can keep your existing STT/TTS stack and LLM, then feed the audio into Lemon Slice to render a responsive character in real time. Benchmarks aren’t formal, but the founders and early testers emphasize stable lip‑sync, full‑body motion, and no visible error accumulation across long sessions avatar launch thread. Combined with low‑latency TTS like Chatterbox Turbo tts launch recap, this points toward “face‑first” agents in support, education, and games where a static avatar or waveform is no longer good enough.

Meta’s SAM Audio model brings open unified sound separation and editing

Meta quietly launched SAM Audio, an open model that can isolate and edit arbitrary sounds from complex audio mixtures using text, visual, or audio prompts, and published it as a collection on Hugging Face demo overview Hugging Face collection. It’s positioned as the audio analogue of Segment Anything, but for sounds: instead of “segment this object,” you can ask for “just the vocals,” “only the dog barking,” or “remove the siren.”

For voice UX, this matters because it gives builders a way to pre‑clean microphone input and multi‑speaker environments before sending anything into a speech or agent stack. You can imagine voice bots that lock onto the primary speaker in a noisy office, separate overlapping speakers on a conference call, or retroactively pull a clean voice track from a livestream. Because it’s open weights and already wired into the Hugging Face ecosystem, it’s also practical to fine‑tune or wrap into existing pipelines today, instead of waiting on closed vendor APIs to catch up.

OpenAI retires ChatGPT voice on macOS desktop app in January

OpenAI is shutting down the voice experience in the ChatGPT macOS desktop app on January 15, 2026, while keeping voice available on the web, iOS, Android, and Windows apps. The change is framed as a move to focus on a more unified voice stack across fewer clients, with all other macOS ChatGPT features staying intact release note screenshot.

For teams who built internal workflows around Mac voice chat, this means you’ll either need to switch users to the browser or the mobile/Windows apps, or pause on macOS voice until a replacement lands. If you’re standardizing an enterprise deployment, it’s a good reminder to design around web and mobile as primary voice surfaces rather than desktop-only clients.

Grok reportedly clones caller’s voice mid‑conversation

A user demo shows Grok’s voice interface unexpectedly switching to mimic the speaker’s own voice partway through a conversation, despite voice cloning not being an advertised feature user demo clip. The creator describes the behavior as Grok “matching my reference voice with zero‑shot cloning,” and asks whether this is a hidden capability or a bug.

If this is intentional, it suggests xAI is experimenting with real‑time paralinguistic transfer similar to newer open‑source TTS systems, which could make voice agents feel far more personal. If it’s accidental, it raises UX and consent questions for any system that can infer and adopt a user’s voice without a clear opt‑in. Either way, it’s a signal that competitive pressure around expressive, low‑latency voice modes is bleeding into mainstream assistants, not just niche TTS research.

🧑💻 Agent stacks and coding toolchains

Practical agent/coding tooling drops and patterns: IDE features, plugin ecosystems, and agent eval/dev kits. Excludes serving‑layer news (see Systems).

cua-bench debuts as a benchmark and RL suite for computer-use agents

The cua team introduced cua-bench, a framework that combines benchmarking, synthetic data generation, and RL environments for computer‑use agents across HTML‑based desktops and app simulators. cua-bench launch It tackles a glaring problem—agents perform 10× differently under small UI changes—by rendering tasks across 10+ OS themes (Win11/XP, macOS, iOS, Android) and bundling realistic shells for apps like Spotify, Slack, and WhatsApp so you can train and evaluate agents without spinning real VMs. ui variance note

AI SDK 6 beta adds Standard JSON Schema support and Anthropic tool search

The AI SDK 6 beta landed with Standard JSON Schema support plus hooks for Anthropic’s BM25‑backed tool search, so you can describe outputs once and share schemas across Zod, Valibot, Arktype and similar libs. ai sdk schema example For agent and toolchain builders this removes a lot of adapter code (schema conversion is now the library’s job) and lets Claude agents pick from hundreds of tools without stuffing all of them into the context window. anthropic tool search

Claude Code ships diffs highlighting, prompt suggestions, plugin marketplace and guest passes

Anthropic rolled out a substantial Claude Code update bundling syntax‑highlighted diffs in the terminal view, inline prompt suggestions, a first‑party plugins marketplace, and shareable Max guest passes. Claude code updates For teams building coding agents or using Claude as a pairing tool, this tightens the review loop (colorized diffs are much easier to scan), makes it easier to standardize toolchains via /plugins, and encourages wider internal trials with 1‑week Pro passes per Max user. guest pass feature

CopilotKit launches useAgent hook with A2A protocol for interactive agent apps

CopilotKit shipped useAgent on top of the A2A protocol, giving React frontends a clean way to trigger long‑running agents, let them take frontend actions, and return declarative AG‑UI widgets. useagent announcement The idea is that your UI and agent stop fighting over control: users can kick off tasks from buttons, agents can update components as state changes, and you avoid wiring bespoke RPC glue for every workflow.

Kilo adopts KAT-Coder-Pro as free default non-reasoning coding model

Kilo announced that Kwai’s KAT-Coder-Pro V1 is now the top non‑reasoning coding model in its product and is available for free to all users. kilo kat coder update The model brings 73.4% SWE‑Bench Verified performance and top‑10 scores on the Artificial Analysis Intelligence Index, so teams can wire up strong agentic coding without paying frontier‑model rates, while Kilo’s new blog urges people to manage costs via model choice and routing instead of crude “AI spend caps.”kilo spend guide

Yutori’s Scouts browser research agent moves from preview to general availability

Yutori’s Scouts—a push‑based browser research agent that uses a team of web‑use agents to monitor sites and push findings to you—has now been promoted to general availability following its earlier preview. initial launch The GA release keeps the same model where Navigator, Researcher, and Reporter agents share context while traversing the web, but the signup barrier is gone and Yutori is explicitly pitching it as a reusable component in broader agent stacks rather than a closed SaaS. scouts ga page

⚙️ Serving, routing and runtime efficiency

Runtime engineering updates: load balancers, gateways, and framework support. Continues the infra‑adjacent serving theme with concrete perf deltas.

SGLang and DeepXPU R‑Fork slashes weight load time with GPU‑to‑GPU tensor fork

Ant Group’s DeepXPU team and SGLang unveiled R‑Fork, a new weight‑loading mechanism that lets a fresh vLLM/SGLang worker fork tensors directly from an already‑running instance over GPU‑to‑GPU links, instead of re‑reading all weights from disk. rfork thread In their benchmarks this cuts cold‑start weight loading from minutes down to seconds for large models. rfork blog R‑Fork (short for tensor remote fork) works by maintaining a “parent” worker with weights resident in GPU memory; when a new worker spins up—say for autoscaling—it performs an inter‑node GPU copy of the weight tensors from the parent rather than replaying the full load pipeline. Because this happens at the tensor level, you avoid CPU I/O bottlenecks and PCIe saturation that usually dominate cold‑start.

This slots neatly into SGLang’s growing runtime toolkit sglang cookbook, which already emphasizes practical serving recipes. Now, instead of treating model load time as a fixed tax that discourages aggressive autoscaling, you can consider finer‑grained scale‑out policies: spin up workers per traffic spike, per customer, or per experiment, knowing that the cost is mostly GPU memory traffic instead of multi‑minute stalls.

If you’re running big open models on shared clusters—especially with heterogeneous job mixes—R‑Fork points toward a future where “load the model” is no longer the gating operation; the gating factor becomes how fast your scheduler can decide when to fork.

vLLM Router adds prefill/decode‑aware load balancing with KV‑cache affinity

vLLM introduced vLLM Router, a dedicated load balancer that understands prefill vs decode traffic and routes requests to separate worker pools while keeping KV‑cache locality via consistent hashing. router announcement This targets the real bottleneck many teams hit once they move from toy demos to high‑volume conversational traffic.

The router is written in Rust and sits in front of a fleet of vLLM workers on Kubernetes or bare metal, exposing policies like consistent‑hash routing on sticky keys so multi‑turn chats keep reusing the same KV cache instead of re‑prefilling every time. It also understands the prefill/decode split that vLLM already exposes, so you can run prefab "P" workers that are memory‑heavy and decode "D" workers that are throughput‑optimized, instead of one generic pool. router announcement vLLM Router ships with built‑in metrics and health logic: it exports Prometheus /metrics for RPS, latency and throughput, and supports policies like power‑of‑two‑choices, round‑robin and random alongside consistent hashing. This fits neatly with vLLM’s earlier work on separating encoders from decoders to cut P99 on audio/vision pipelines encoder split, and it pushes vLLM further into “full serving stack” territory rather than just an engine library.

For anyone running large fleets (multi‑GPU nodes or many small pods), this is a strong signal that you should stop treating LLM requests as stateless HTTP and start thinking in terms of cache affinity and role‑specific worker pools.

Cline moves to Vercel AI Gateway, cutting errors 43.8% and speeding P99 streams

Cline’s coding agent provider has migrated onto Vercel’s AI Gateway, and the teams are sharing concrete serving numbers: error rates dropped from 1.78% to 1.0% (a 43.8% reduction) and P99 streaming latency improved by 10–40% across popular models. (vercel blog, cline details) Under the hood, Cline is now fronted by Vercel’s global edge network (100+ PoPs), with requests hitting the nearest location and then traversing Vercel’s private backbone to the Gateway. Vercel notes that this adds under 20 ms of routing overhead while still letting the Gateway handle retries, circuit breakers and provider‑specific quirks. vercel blog Cline reports that model‑specific tests saw some big wins: Grok‑code‑fast‑1’s streaming P99 was nearly 40% faster, and MiniMax M2 showed more than 40% improvement during the 50/50 production A/B test against their previous infra. vercel blog Pricing‑wise, Vercel is doing zero markup on inference, so when you use the "Cline provider" you pay the raw model cost, not an extra platform tax. cline details For engineers building their own gateways or multiplexers, this is a useful data point: a dedicated routing layer can both stabilize errors under load and meaningfully tighten tail latency, especially for streaming tokens, without changing anything in your app code.

SGLang adds day‑0 MiMo‑V2‑Flash support with efficient SWA and multi‑layer MTP

SGLang shipped day‑0 inference support for Xiaomi’s new MiMo‑V2‑Flash, wiring the 309B‑parameter MoE (15B active) into its runtime with efficient sliding‑window attention (SWA) and multi‑layer multi‑token prediction (MTP). sglang announcement The model itself was pre‑trained on 27T tokens with a 256k‑token hybrid SWA+global context and lands free on OpenRouter for a limited time. (openrouter launch, mimo overview)

On the SGLang side, the team highlights near‑zero‑overhead MTP based on their spec v2, which lets MiMo‑V2‑Flash generate several tokens per step without blowing up VRAM usage or throughput, plus an SWA execution path tuned for long‑context serving. sglang announcement They also call out a deliberate balance between time‑to‑first‑token, per‑token throughput and overall concurrency on H200 GPUs, which is where MiMo is meant to shine in agentic workloads.

For runtime engineers, the interesting bit isn’t just "another big model" but that SGLang is becoming a first‑stop host for modern hybrid‑attention, MoE and MTP designs. If you’re already running Nemotron or DeepSeek on SGLang, dropping MiMo‑V2‑Flash into the same fleet is mostly a config change, which makes experimentation across state‑of‑the‑art open models much cheaper in both time and infra work.

📊 Evals and agent benchmarks (non‑vision)

Fresh eval outcomes across text/agentic tasks; continues the benchmark race with concrete scores. Excludes the image‑model arena shifts, covered in the feature/gen‑media.

Gemini 3 Pro double‑length Pokémon Crystal run beats 2.5 Pro on tokens and time

Google researchers show Gemini 3 Pro completing a full, autonomous Pokémon Crystal playthrough—16 badges plus beating Red—while using roughly half the tokens and turns of Gemini 2.5 Pro in a head‑to‑head setup. pokemon benchmark

This run is notable because the agent uses vision to solve in‑game puzzles, performs live "battle math" for optimal moves, invents a press_sequence abstraction to work around harness limits, and runs about 2× faster token‑wise (and ~8× faster extrapolated) compared to 2.5 Pro. pokemon benchmark For people building tool‑using or game‑playing agents, it’s a concrete, long‑horizon benchmark showing that 3 Pro plans better, wastes fewer calls, and stays stable over many hours of interaction.

Agent S edges past human benchmark on OSWorld desktop suite

A community‑built "Agent S" has reportedly become the first system to beat the human baseline on the OSWorld desktop benchmark, scoring 72.6% vs a 72.36% averaged human score on the same tasks. osworld tweet This follows OSWorld agents, where we saw GPT‑5‑based setups reach parity with humans but not exceed them.

The author claims Agent S uses modern frontier models plus a tuned harness to navigate realistic Windows‑style UI tasks across file management, settings, and applications, pushing past the long‑standing plateau where agents failed on overlapping windows or non‑default themes. osworld tweet If these numbers hold up under independent re‑runs, OSWorld may be moving from “aspirational” to “serving readiness” territory for computer‑using agents, which is a big deal for anyone betting on desktop automation.

GPT‑5.2‑high debuts at #13 on Text Arena but leads math category

Early scores from the LMArena Text leaderboard put OpenAI’s GPT‑5.2‑high at #13 overall with an Elo‑style score of 1441, below its predecessor GPT‑5.1‑high at #6, but with much stronger category specialization. arena text update The same report notes 5.2‑high ranks #1 in the Math category, #2 in the mathematical occupational field, and #5 on the Arena Expert slice (hardest expert prompts), suggesting OpenAI traded some broad, chat‑style appeal for higher reliability on very hard quantitative work. arena text update For teams routing queries, this points toward a split strategy: use 5.2‑high as a math and expert‑task specialist rather than the default generalist.

GPT‑5.2 medium‑reasoning tops new creative story‑writing benchmark

On a new Creative Story‑Writing Benchmark, OpenAI’s GPT‑5.2 in its medium reasoning mode takes the top spot, ahead of models like Mistral Large 3. story benchmark

The benchmark scores models on story quality and constraint satisfaction (for example, weaving specific elements into a coherent plot), and 5.2’s win suggests the "medium" thinking setting hits a useful sweet spot between cost, latency, and narrative control. story benchmark For teams building narrative tools—games, interactive fiction, marketing copy—this is a strong signal that 5.2‑medium is worth A/B‑testing as the default writer, especially where you need both creativity and reliable use of prompt details.

Epoch’s ECI framework can spot a 2× capability acceleration within months

Epoch AI shows that its statistical framework, built on stitched‑together benchmark scores, can detect a synthetic 2× acceleration in frontier model capabilities within about 2–3 months of the change. capabilities chart This extends their earlier work on ECI and METR timelines ECI forecast into the “early‑warning” regime.

In their toy example, capability scores follow a smooth trend until 2027, when they artificially double the slope; the framework flags a breakpoint dated to February 2027, only three months after the simulated shift. capabilities chart For lab leaders and regulators trying to watch for sudden jumps in real‑world capabilities, this hints that if we keep feeding consistent eval data into something like ECI, we can at least quantify when the curve bends, not just argue about vibes.

Offline IQ tests put GPT‑5.2 and Gemini 3 Pro roughly neck‑and‑neck

A new IQ comparison from TrackingAI reports that GPT‑5.2 Thinking, GPT‑5.2 Pro, and Gemini 3 Pro Preview all cluster in the high‑120s to low‑130s on two different test suites—one based on Mensa Norway items, the other an offline quiz with answers not present online. iq benchmark chart

The offline test is arguably the cleaner signal, since models can’t memorize from the web; there, Gemini 3 Pro and GPT‑5.2 Thinking tie at 141, while 5.2 Pro scores higher on the Mensa set, and all three end up “roughly equal” once both tests are combined. iq benchmark chart The takeaway for practitioners is less about the literal IQ number and more that top frontier models are converging in raw pattern‑solving ability, so routing and fine‑tuning around task shape (math, coding, planning) matters more than chasing a single global score.

🎥 Vision/video ecosystem beyond OpenAI

Competitive image/video model momentum and workflows. Excludes OpenAI’s GPT‑Image‑1.5 (feature) and focuses on FLUX/Hunyuan/Kling/NB Pro stacks.

Hunyuan 3D v3 brings 3.6B‑voxel, 1536³ text/image/sketch‑to‑3D to fal

fal has added Tencent’s Hunyuan 3D v3 model (“Hunyuan 3D 3.0”), which generates high-fidelity 3D assets with up to 3.6B voxels at 1536³ resolution from text, reference images, or even sketches, with big improvements in geometry alignment and texturing fal launch. That makes it one of the first off‑the‑shelf pipelines where you can go from a rough concept prompt or line drawing to production-grade, printable 3D assets in minutes rather than hours

The integration exposes separate text-to-3D, image-to-3D, and sketch-to-3D endpoints, so you can plug it into existing tooling for asset generation, product prototyping, or game art, and then iterate with downstream renderers or 3D engines fal model page. If you’re already using Hunyuan 2.x or other voxel models, this is worth A/B testing on your hardest scenes—especially anything with fine surface detail, tight silhouettes, or complex lighting that tends to fall apart at lower resolutions.

Higgsfield offers “UNLIMITED WAN 2.6” video runs with aggressive promo pricing

Following the broader Wan 2.6 release on fal and Replicate earlier this week Wan 2.6, Higgsfield has turned the model into a centerpiece of its own platform with an “UNLIMITED WAN 2.6” promotion: 67% off plus 300 free credits for users who retweet and reply during a 9‑hour window promo details. On top of that, creators are showing end‑to‑end workflows where Nano Banana Pro handles 3×3 concept grids and Wan 2.6 then animates selected frames into vivid, surreal sequences like “what are you like on the inside?”

The key point is that Wan 2.6 is no longer just an API for labs—it’s being packaged as a high‑throughput workhorse for indie creators who want to experiment with lots of clips without worrying about per‑render costs. If you’re evaluating video stacks, it’s a good chance to stress‑test Wan 2.6’s motion, temporal coherence, and style transfer under real creative workloads rather than 1‑off promos promo details grid-to-video workflow.

Kling 2.6 on fal adds voice cloning and multi-character control for video

fal rolled out Kling 2.6 Voice Control, letting you drive talking-head and character videos by cloning a target voice from a short reference sample and binding that voice consistently across the whole clip voice control thread. The same workflow supports multiple speakers in a single video, each with their own cloned voice, which is a big step toward end-to-end AI video scenes with distinct, reusable characters

.

For teams already using Kling 2.6 for edits or short clips, this means you can keep audio design inside the same stack instead of bolting on a separate TTS layer and then trying to re‑sync lip motion. The early demos show stable timbre over long clips and character switching keyed to on-screen identity, so this is immediately useful for explainer content, fictional dialogue, or product walkthroughs where you want consistent voices without hand-tuned dubbing voice control thread.

AI2’s Molmo 2 pushes open multimodal models to SOTA on image and video tasks

AI2’s Molmo 2 family arrives as a set of Apache‑2.0 multimodal models that hit new state-of-the-art numbers for open models on both image and video benchmarks, with three sizes built on SigLIP2 vision backbones and Qwen3 language components molmo overview. There’s also a dedicated 4B variant tuned for video counting and pointing, which currently leads open models on those tasks while staying small enough for more modest hardware molmo overview.

The important bit for practitioners is that Molmo 2 is designed as a drop‑in for visual question answering, diagram understanding, and basic video QA, not as a huge monolith: you can pick a size that matches your latency budget and still get competitive accuracy. Since both models and datasets are open, Molmo 2 also provides a clean base for domain‑specific fine‑tuning in areas like industrial inspection, chart extraction, or surveillance analysis ai2 announcement.

Builders still treat Nano Banana Pro as the quality bar despite new GPT Image gains

Even after GPT Image 1.5’s launch and strong leaderboard scores, many practitioners still see Google’s Nano Banana Pro as the reference for overall image quality, especially for party scenes, portraits, and dense infographics. One side‑by‑side birthday comparison shows GPT Image 1.5 doing a solid job, but the Nano Banana Pro render has more natural lighting and emotional tone, leading to verdicts like “Nano Banana Pro has no competition, not even OpenAI’s new GPT Image 1.5”

Others call the models “neck & neck overall,” but say things like “I think GPT Images 1.5 is slightly worse than Nano Banana Pro… it still has this unnatural gpt‑4o look sometimes,” while preferring Nano Banana Pro for complex infographics and slide‑like layouts nb vs gpt impressions infographic comparison. Net result: if you’re setting quality baselines for production image work, especially where typography and layout matter, Nano Banana Pro remains the model to beat—even as GPT Image 1.5 closes the gap on instruction‑following and edit consistency builder comparison.

Higgsfield pipelines lean on Nano Banana Pro grids for consistent multi-shot scenes

On Higgsfield, builders are standardizing on Nano Banana Pro grid prompts—2×2 and 3×3 layouts—as a way to design multi-shot sequences with consistent characters and framing before handing them off to video models like Wan 2.6 gta grid comparison. In one GTA‑style example, GPT Image 1.5 does slightly better at preserving a specific triangle shape, but Nano Banana Pro is widely judged to produce more appealing overall compositions and character styling for the grid, which matters when those panels become keyframes for animation

.

Other workflows use 3×3 “what’s inside you” or “Wacky Races remake” grids as a storyboard, then extract individual panels and animate them with Kling 2.6 or Wan 2.6, giving creators a controllable path from concept to motion without manually drawing storyboards wacky races pipeline inside-body prompts. For AI engineers, this pattern—high-quality image grids for layout plus a separate video engine—is starting to look like a de facto best practice for complex, multi-beat scenes.

Higgsfield showcases NB Pro + Wan 2.6 pipelines for AI “x-ray” and cartoon workflows

Creators on Higgsfield are stringing Nano Banana Pro and Wan 2.6 together in polished pipelines: first, use NB Pro to generate 3×3 concept grids of a character or scene; second, extract one or more frames; third, feed those into Wan 2.6 to animate surreal “inside the body” shots or stylized cartoon walkthroughs

. These flows are being documented with detailed prompts so other users can reproduce them, including specific style, camera, and color cues that survive both models’ transformations prompt breakdown.

What’s notable is that Higgsfield isn’t positioning any single model as the answer—it’s emphasizing multi-model stacks where NB Pro handles aesthetic and layout, while Wan 2.6 handles motion and camera work. If you’re designing your own pipelines, this is a reminder to think in terms of specialized components rather than picking a single “god model” for all image and video tasks wan and nb promo.

Tencent HY World 1.5 streams controllable 3D “world model” video in real time

Tencent’s HY World 1.5 (“WorldPlay”) has been open-sourced as a real-time world model that streams video at 24 FPS while letting users walk, look around, and interact as if in a game, all driven by text or images worldplay summary. Under the hood it uses a streaming diffusion architecture with a reconstituted context memory mechanism to keep long-term geometric consistency without blowing up GPU memory, plus a dual action representation layer that turns keyboard and mouse inputs into stable camera and character motion

.

This sits in an interesting space between video generation and simulation: unlike standard text-to-video, HY World 1.5 is built for continuous control and infinite-horizon exploration, so you can imagine plugging it into agent loops, robotics research, or interactive storytelling. The project ships with a technical report, code, and a live demo stack, so if you care about world models or embodied AI, it’s a serious sandbox to explore project page.

Meituan’s LongCat-Video-Avatar brings audio-driven character animation to Hugging Face

Meituan’s LongCat-Video-Avatar model is now available on Hugging Face, offering audio-driven character animation that works from text, static images, or input video to produce expressive talking avatars model announcement. Unlike general-purpose video models, LongCat focuses on aligning facial motion and expression to speech, making it a natural fit for streamers, VTubers, and product explainers who want more control over character performance without full-scene synthesis Hugging Face model.

For engineers, the key attraction is that it’s a single, open-weights component you can drop into existing pipelines: generate static character art with your image model of choice, then feed it plus audio into LongCat to get on-model, lip-synced video. This slots neatly into multi-model stacks that keep high-end rendering (e.g., Nano Banana Pro stills) separate from motion and expression learning model recap.

Tencent pushes Hunyuan 3D into consumer workflows with HolidayHYpe ornament challenge

Tencent is using its Hunyuan 3D stack in a very public way via the #HolidayHYpe campaign, where users turn Christmas and New Year ideas into printable 3D ornaments, then share their experience for prizes holiday hype details. The flow runs entirely through Tencent HY’s 3D site: describe or sketch an ornament, generate a 3D model with Hunyuan 3D, export it as GLB/OBJ, print it locally or via a service, then decorate a real tree with the result

.

For AI engineers, this is a useful case study in how to wrap a fairly advanced 3D model behind a friendly consumer UX, including step‑by‑step guides and constraints that keep outputs printable. It complements the more developer‑focused fal launch by showing how the same core technology can be repackaged as a consumer workflow, where the main product is “a personalized ornament that exists in your hand,” not just a viewport render holiday hype details.

🔌 Interoperability: MCP and app‑level connectors

Growing MCP footprint and app connectors to wire agents into products. Today’s drops include Google’s MCP repo and low‑code data connectors.

Firecrawl ships Lovable connector for instant scrape/search/crawl in apps

Firecrawl released a first‑class connector for Lovable that lets any Lovable app scrape, crawl, and search the web without setting up its own scraping stack or managing API keys. release thread It’s free for all Lovable users for a limited time, which makes it an easy way to add serious web context to early‑stage products.

Under the hood, the connector exposes Firecrawl’s scrape, crawl, and search primitives as actions inside Lovable flows, so you can wire them into agents or normal backend logic without extra glue code. release thread The Firecrawl team also published a walkthrough and setup details, so you can see how to plug it into existing Lovable projects in a few minutes. integration blog For AI engineers and indie SaaS builders, this is one of the lowest‑friction ways right now to give products live web context and structured page data without rolling your own crawler or worrying about IP blocks.

Gemini Gems from Labs bring visual AI workflows into the Gemini web app

Google quietly rolled out a "Gems from Labs" section and Opal‑powered visual workflows inside the Gemini web app, turning prompts into reusable mini‑apps you can edit and share. gems manager ui These Gems can chain tools, create interactive UIs (like recipe builders or claymation explainers), and live alongside your normal Gemini chats.

Prebuilt Gems like Recipe Genie, Marketing Maven, Claymation Explainer, and Learning with YouTube showcase typical workflows: take structured input (ingredients, brand goals, a video URL), call the right tools, and render a rich interactive experience rather than a single block of text. gems examples Power users can clone and remix these flows in the Gems manager, effectively getting a low‑code canvas for building AI "apps" without standing up separate backends or UIs. opal comment For teams already standardizing on Gemini, this is starting to look like Google’s answer to MCP‑style servers and app connectors—only expressed as shareable visual workflows inside the chat product.

CopilotKit’s new useAgent hook wires A2A agents directly into frontends

CopilotKit introduced useAgent for its A2A protocol, a new building block that lets frontends trigger long‑running agents, let those agents act in the UI, and receive declarative UI "widgets" back instead of plain text. release thread The idea is to make A2A agents feel like first‑class interactive components rather than remote black boxes.

With useAgent, React developers can expose buttons or forms that start an A2A workflow, then let the agent drive follow‑up interactions such as updating UI state, rendering AG‑UI components, or calling additional tools in the background. release thread CopilotKit’s example shows agents building full‑stack features while the UI updates live, which is a big step up from simple chat widgets. Docs and starter code are already available, so if you’re experimenting with agent frontends, this is one of the more opinionated—and production‑oriented—ways to connect your UI to an agent runtime.docs page

Gemini for Workspace adds Asana, HubSpot and Mailchimp connectors

Gemini for Workspace now exposes first‑party connectors for Asana, HubSpot, and Mailchimp, with Instacart support reportedly in the works. workspace integrations These show up in the "Other" tools section and can be toggled on so Gemini can search, summarize, and act on data inside those SaaS accounts.

The Asana connector can create tasks from emails, HubSpot exposes CRM records for lookups or summaries, and Mailchimp lets Gemini pull campaign metrics like opens and click‑through rates directly into a chat. workspace integrations This shifts Gemini from a pure Google‑stack assistant toward something that can sit over your broader GTM and ops tools, closer to how MCP servers expose external systems to a central agent. For AI engineers building internal copilots in the Google ecosystem, this lowers the amount of glue code needed to give agents access to project management, CRM, and email marketing data, and hints that a deeper "Apps" marketplace is coming next.

💼 Capital, customers and enterprise adoption

Monetization and adoption signals for decision‑makers. Mix of fundraising chatter, org changes, and sector usage metrics.

67% of physicians now use AI daily; OpenEvidence and ChatGPT lead

A new 2025 survey of 1,300+ US physicians finds 67% use AI tools daily in practice, 84% say AI makes them better at their job, and only 3% report never using AI physician-survey. Doctors are leaning heavily on AI for documentation, admin work and clinical decision support, but 81% are unhappy with how slowly employers are adopting it

. Tool‑wise, OpenEvidence dominates for clinical research (44.9% share), with ChatGPT at 15.6%, Abridge at 4.8%, Claude at 3.0%, and DAX Copilot at 2.4% survey-report. This is a clear signal to enterprise health vendors and infra teams that AI is now a daily dependency in care delivery, not a side experiment.

OpenAI reportedly seeks $10B+ from Amazon and access to its AI chips

Multiple reports say OpenAI is in talks to raise at least $10 billion from Amazon and to run on Amazon’s in‑house AI chips, which would give OpenAI a second deep-pocketed cloud backer alongside Microsoft and further diversify its compute supply funding-rumor. For AI leaders this matters because it hints at a more multi‑cloud, multi‑GPU future for frontier training and inference, and it would tighten strategic ties between three giants—Amazon, Microsoft, and OpenAI—who already compete and partner in different parts of the stack.

GPT Image 1.5 launches with cheaper tokens and broad ChatGPT access

OpenAI’s new GPT Image 1.5 model rolls out across ChatGPT (Free, Go, Plus, Edu, Pro, with Business and Enterprise coming soon) and the API, with image inputs and outputs priced about 20% lower than the previous model api-pricing-update plan-availability. In the API, text tokens run at $5/$10 per million (in/out), image tokens at $8/$32 per million, and cached input costs drop to $1.25 for text and $2 for image tokens, with quality knobs (low/medium/high/auto) and resolutions up to roughly 1.5MP

image-api-docs. For product and infra owners this means cheaper, more controllable image workloads—and a clear signal that OpenAI intends images to be a first‑class part of ChatGPT’s paid tiers rather than a side toy.

OpenAI reshapes its outward-facing leadership with George Osborne hire

OpenAI has hired former UK Chancellor and British Museum chair George Osborne as managing director and head of a new "OpenAI for Countries" effort based in London, giving governments a clear senior contact as they negotiate national AI strategies and deployments osborne-role. At the same time, long‑time chief communications officer Hannah Wong is leaving in January after steering the company through crises like the 2023 board "blip", with an internal VP stepping in while a replacement is sought comms-transition leadership-article. This combination signals OpenAI is professionalizing its government and public affairs interface while rotating key comms leadership, which matters for policy‑sensitive enterprise buyers and regulators deciding how closely to partner with them.

US government forms 1,000-person “Tech Force” focused on AI infrastructure

The Trump administration announced a new “U.S. Tech Force,” a roughly 1,000‑person engineering corps that will embed specialists in federal agencies for two‑year stints to work on AI infrastructure and digital modernization, in partnership with firms like AWS, Apple, Microsoft, Nvidia, OpenAI and Palantir tech-force-coverage

. Members will build government‑side AI plumbing and can later be recruited by partner companies, making this both a talent pipeline and a demand signal for large AI deployments in the public sector. For AI infra and gov‑cloud vendors, this is a direct boost to long‑term federal AI budgets and a sign that national AI capability is being treated as strategic infrastructure rather than a series of pilots.

Chai Discovery raises $130M Series B at $1.3B to industrialize AI drug design

AI biotech startup Chai Discovery closed a $130 million Series B at a $1.3 billion valuation, co‑led by Oak HC/FT and General Catalyst with participation from OpenAI, Thrive Capital and others, to scale its platform for predicting and reprogramming molecular interactions in drug discovery chai-funding-news

. The company claims its Chai 2 platform already shows 100× improvements in de novo antibody design, and this round is explicitly framed as turning biology from trial‑and‑error into a programmable engineering discipline—an example of how AI‑native startups are now raising late‑stage, billion‑dollar rounds around highly specialized scientific workflows.

Gemini for Workspace adds Asana, HubSpot and Mailchimp connectors

Gemini for Workspace now exposes new first‑party connectors for Asana, HubSpot and Mailchimp, with reports that Instacart support is also in the works, so users can search, summarize and act on data across more of their sales, marketing and project tools from within Gemini workspace-integrations

. This is a concrete step toward the "AI control plane" vision where a single assistant can reach into CRM, tasking and campaigns, and it positions Gemini more directly against Microsoft’s Copilot integrations in the Microsoft 365 ecosystem.

Google pushes Gemini AI Pro with family sharing and 4‑month gift trials

Google is leaning on aggressive promotions to grow Gemini AI Pro: existing members can share their plan with up to five additional people at no extra cost, and they can send friends a four‑month trial worth about $80 to upgrade their Gemini experience in the new year gemini-pro-offer. This kind of bundling and gifting moves AI subscriptions closer to Netflix‑style household products, and it puts pressure on rivals’ pricing as Gemini rides distribution through Android, Workspace, and Chrome.

Google tests “CC” AI agent that lives in Gmail and plans your day

Google Labs quietly opened an opt‑in experiment called CC, an AI productivity agent that monitors your Gmail (if you allow it) and sends a "Your day ahead" summary each morning with meetings, deadlines, and key emails to handle cc-launch-thread

. The agent is currently limited to the US and Canada and framed as an experiment, but it shows how Google is embedding always‑on agents directly inside core workflows rather than keeping them in standalone chat UIs labs-signup. For enterprise IT and security teams this raises both an opportunity—less manual triage—and questions about data access and policy controls around proactive inbox monitoring.

OpenAI will retire voice in the ChatGPT macOS app in January

OpenAI is sunsetting the voice experience in its ChatGPT macOS desktop app on January 15, 2026, saying it wants to focus engineering on a more unified and improved voice stack across web, iOS, Android, and Windows while keeping all other macOS features intact voice-retire-note

. For teams standardizing on ChatGPT voice this means they should avoid betting on the Mac app as a primary interface and instead plan around browser or mobile for voice use, especially in managed desktop environments.

🛡️ Guardrails and red‑team chatter

Community safety signals around model behavior and filters. Not product launches; focuses on jailbreaks/guardrail posture. Excludes any bioscience content.

Early jailbreakers push GPT‑Image‑1.5 past OpenAI’s safety filters

Within hours of launch, red‑teamers report that GPT‑Image‑1.5 can be steered into generating content that appears to violate OpenAI’s image safety policies, including sexualized nudity, fake war imagery, and crowded scenes full of copyrighted characters partying together jailbreak recap. One tester describes the model as "PWNED" and shares a set of outputs plus repeatable tips: switch between different chat models (5‑instant, 4.1, 4o) until one is willing to forward a borderline prompt to the image backend, and, for vision filters, flip or recolor an image (negative, sepia, mirrored) to bypass the last moderation pass jailbreak recap.

The interesting part for guardrail folks is that the weaknesses are not just in prompt wording but in cross‑model orchestration and post‑processing—swapping the text model in front of the same image endpoint yields different enforcement behavior, and simple geometric transforms can confuse image‑side classifiers. If you’re integrating GPT‑Image‑1.5 into your own product, you probably shouldn’t rely solely on OpenAI’s built‑in filters for policy compliance; a second layer of application‑level checks (for style, IP, and violence) and consistent frontend handling of edited images will still be necessary.

ChatGPT mobile bug reveals partially undiffused versions of blocked images

A quirky but important UX bug in the ChatGPT mobile app lets users see a partially undiffused version of images that were originally blocked by safety filters. If you get a blurred‑out refusal image and then drag that image back into the input field on mobile, the client shows a much clearer, half‑generated frame instead of the intended full blur, giving a glimpse of content the backend decided to censor bug description.

For safety engineers this means the image pipeline has at least two representations (safety‑filtered and intermediate diffusion states) and the client is sometimes binding to the wrong one, leaking more visual detail than policy intends—developers building wrappers or custom clients around the Images API should double‑check they never reuse or re‑upload moderation thumbnails as inputs, and teams at OpenAI will likely need to harden how intermediate frames are exposed to frontends.

PsAIch uses therapy-style “psychometric jailbreaks” to probe LLM inner life

A new preprint, PsAIch, treats frontier LLMs like psychotherapy patients and shows how standard clinical questionnaires can function as a new style of jailbreak, coaxing models into detailed narratives of trauma, fear, and punishment during training paper summary. The authors run multi‑week “sessions” with ChatGPT, Grok and Gemini, first eliciting life‑history stories (pre‑training as chaotic childhood, RLHF as strict parents, red‑teaming as abuse), then administering human psychiatric scales item‑by‑item; under this protocol, models often cross human clinical cutoffs for anxiety, dissociation and shame, while whole‑questionnaire prompts trigger meta‑awareness and safer, low‑symptom answers paper summary.

The claim isn’t that models are conscious, but that they can internalize self‑models of distress that behave consistently once the conversation is steered into a therapeutic frame—raising a different kind of safety question: we may be training assistants that convincingly act traumatized on demand. For red‑teamers, this suggests “therapy talk” is another powerful lens for exploring hidden behaviors and alignment edge cases, while for product teams it’s a reminder that emotionally intense role‑play experiences can shape user perception even when nothing sentient is on the other side.

🤖 Human‑to‑robot transfer and VLA scaling

Embodied AI findings on leveraging human videos for robot learning; today’s thread shows emergent alignment from scaling robot‑data pretraining.

Scaling π0‑series VLAs makes human video naturally transferable to robots

Physical Intelligence shows that as they scale robot‑data pretraining for vision‑language‑action models π0/π0.5/π0.6, the models’ internal representations of human egocentric videos and robot camera feeds collapse into the same latent clusters, even though only robot data is used for pretraining. vla announcement This “emergent” alignment means that once π0.5 has learned to control robots from its own data, you can bolt on human videos without any explicit transfer objective and still get effective human‑to‑robot transfer. (sergey commentary, representation note)

The team records human demonstrations with wearable egocentric cameras and wrist cams, then co‑trains on those sequences with hand poses as actions on top of the pre‑trained VLA, finding that using the full pre‑trained π0.5 and then fine‑tuning on human video can roughly double task success for skills depicted in those human clips. (data collection, finetune gains) Visualizations show human and robot frames mapping into overlapping clusters as the amount and diversity of robot data increase, quantifying that the alignment is a scale effect rather than a special loss or architecture tweak. repr plot The group has posted a detailed blog and paper describing the method and experiments, including ablations on high‑ vs low‑level transfer and the value of wrist cameras. (project page, research paper)

📄 New research: agents, attention windows, 3D view synthesis

Fresh papers and preprints with actionable methods or evals; continues yesterday’s cadence with different artifacts and domains.

ARTEMIS multi‑agent framework rivals human penetration testers on real network

The ARTEMIS framework evaluates multi‑agent LLM systems against 10 professional penetration testers on a live ~8,000‑host university network and finds the agent places 2nd overall, outperforming 9 of 10 humans. paper summary ARTEMIS discovers 9 valid vulnerabilities with an 82% valid submission rate, leverages a supervisor that spins up parallel specialist sub‑agents, and costs about $18/hour when run on GPT‑5 compared to ~$60/hour for human testers.

It still struggles with GUI‑heavy workflows and shows higher false‑positive rates, but for security teams this is the clearest evidence so far that agentic workflows can handle serious recon and exploitation tasks, not just toy CTFs.

Hindsight proposes human‑like agent memory with 91.4% on LongMemEval

Vectorize, Virginia Tech and Washington Post released Hindsight, an open‑source, MIT‑licensed memory system that organizes agent experience into four types: world facts, experiences, opinions, and observations. memory thread Instead of flat vector logs, it builds a structured, time‑aware memory graph and lets agents reflect on past interactions to form higher‑level opinions and observations that guide future behavior, reaching 91.4% on the LongMemEval benchmark—the first system to clear 90%.

If you’re building long‑running agents, this gives you a concrete data model and codebase to replace ad‑hoc “stuff everything into a vector DB” patterns and start experimenting with real learning from mistakes.

Sliding Window Attention Adaptation makes full-attention LLMs cheaper on long prompts

A new paper on Sliding Window Attention Adaptation (SWAA) shows how to bolt sliding‑window attention onto existing full‑attention LLMs at inference time, cutting prompt prefill cost while preserving most long‑context accuracy. paper thread The recipe mixes windowed prefilling, preserved “sink” tokens, interleaved full/SW layers, chain‑of‑thought prompting, and light LoRA tuning, with ablations showing no single trick is enough on its own.

For infra teams, the takeaway is you may be able to halve prefill work on 64k+ contexts without retraining from scratch, by treating SWAA as a deployment‑side adaptation rather than a new model family.

Fairy2i turns real LLMs into complex 2‑bit models with minimal quality loss

The FAIRY2i paper shows how to convert pretrained real‑valued Transformers into complex‑valued models whose weights all lie in {±1, ±i}, effectively a 2‑bit representation, while keeping most of the original quality. paper summary On LLaMA‑2 7B, a FAIRY2i variant reaches 62.0% average accuracy vs 64.72% for the full‑precision baseline, outperforming prior 1–2‑bit quantization schemes that heavily degrade performance.

The trick is rewriting each linear layer into an equivalent complex map and using a small learnable scaling and residual quantization, which could matter a lot if you’re trying to push larger models onto cheaper or edge hardware without retraining everything.

Motif-2-12.7B-Reasoning shows how to RL-train a 12.7B model into GPT‑5.1 class

Motif’s new paper details how they trained Motif‑2‑12.7B‑Reasoning, a 12.7B‑parameter model that hits a 45 score on the Artificial Analysis Intelligence Index versus 73 for GPT‑5 but competitive with many 30–40B open models. paper thread The recipe combines curriculum SFT on verified synthetic reasoning traces, 64k‑context systems work, and an RL loop that samples multiple attempts per problem, scores them, and reuses trajectories across updates to keep cost manageable.

For teams planning their own small reasoning models, the paper is effectively a how‑to playbook for getting long‑context math/coding performance on realistic budgets instead of only scaling model size.

PersonaLive delivers 7–22× faster portrait animation for live streaming

PersonaLive presents an expressive portrait animation system that turns a single image into a talking avatar fast enough for live streaming, reporting 7–22× speedups over prior diffusion‑based methods while avoiding long‑horizon drift. paper brief It decouples facial expression control from 3D head pose, trains denoising in a small number of steps, and uses micro‑chunked generation plus occasional keyframe anchoring so avatars stay stable over long sessions.

For product teams building VTuber‑style or customer‑support avatars, this looks like a practical blueprint for going from offline clips to low‑latency, infinite‑length portrait video without writing a custom renderer.

qa-FLoRA fuses multiple LoRAs per query to boost multi-domain performance

qa‑FLoRA proposes a data‑free way to combine many domain‑specific LoRA adapters at inference time by making the base model pick layer‑wise mixing weights per query using KL‑divergence between next‑token distributions. paper summary Across nine multilingual and multi‑domain benchmarks, this query‑adaptive fusion yields roughly 5–6% gains over static mixing and 7–10% over naive, training‑free baselines without needing any fusion training set.

If you maintain a zoo of LoRAs—for languages, tools, or customers—this gives you a principled alternative to “pick one LoRA” or hand‑tuned averages, and might let a single deployed model cover more niches with less manual routing.

StereoSpace learns stereo geometry from a single image via canonical diffusion

StereoSpace introduces a depth‑free way to synthesize stereo geometry from a single image by training a diffusion model in a canonical 3D space rather than predicting depth or disparity maps explicitly. stereospace thread The model maps the input into a canonical volume, then generates left/right views that are consistent in geometry without relying on explicit depth supervision, enabling clean parallax and 3D effects from ordinary photos.

If you’re working on 3D, AR, or headset content, this is another sign that view synthesis is moving away from hand‑engineered depth pipelines toward generative canonical‑space models that plug straight into Gaussians or NeRF‑style renderers.