OpenAI GPT‑5.2 multiplies tokens 6× on agents – 1T‑token debut

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Two days after OpenAI’s GPT‑5.2 launch, independent evals are filling in the fine print. On Artificial Analysis’ GDPval‑AA run, GPT‑5.2 xhigh tops the agentic leaderboard at 1474 ELO, edging Claude Opus 4.5 at 1413, but burns ~250M tokens and ~$610 across 220 tasks—over 6× GPT‑5.1’s usage.

ARC‑AGI‑2 tells the same story: GPT‑5.2 high reaches ~52.9% at ~$1.39 per problem vs GPT‑5’s 10% at $0.73, and xhigh and Pro runs go higher still. Meanwhile on SimpleBench’s trick questions, GPT‑5.2 base scores 45.8% and Pro 57.4%, trailing GPT‑5 Pro and far behind Gemini 3 Pro at 76.4%, reinforcing that “more thinking” mainly helps long, structured work.

Usage doesn’t seem scared off: Sam Altman says GPT‑5.2 cleared 1T API tokens on day one despite roughly 40% higher list prices. VendingBench‑2 and Epoch’s ECI put it in the same long‑horizon league as Gemini 3 Pro and Opus 4.5 rather than a runaway winner. The practical takeaway: treat xhigh reasoning like a surgical tool for quarterly plans, audits, and gnarly debugging, while cheaper, faster models handle your inner loops.

Top links today

- GPT-5.2 long-context capabilities overview

- OpenAI circuit sparsity research post

- Stirrup agentic harness and GDPval tools

- Building Sora Android app with Codex

- Tinker GA launch and model support

- Olmo 3.1 Think 32B model artifacts

- Olmo 3 and 3.1 technical paper

- VoxCPM open-source TTS model card

- Luxical-one fast static embeddings blog

- AutoGLM smartphone UI agent model

- Gemini 2.5 Flash Native Audio update

- HICRA hierarchy-aware RL for LLM reasoning

- Asynchronous Reasoning training-free thinking LLMs

- InternGeometry olympiad geometry agent paper

- Causal-HalBench LVLM object hallucinations

Feature Spotlight

Feature: GPT‑5.2 reality check—evals vs cost and latency

GPT‑5.2 leads GDPval‑AA but burns ~250M tokens and ~$608 per run; underperforms on SimpleBench and is slow at xhigh. Engineers must weigh accuracy gains against cost/latency for real agent workflows.

Day‑two picture for GPT‑5.2: strong agentic task wins but mixed third‑party benchmarks and high token spend. Threads focus on SimpleBench underperformance, GDPval-AA costs, and heavy xhigh reasoning latency for AGI‑style gains.

Jump to Feature: GPT‑5.2 reality check—evals vs cost and latency topicsTable of Contents

🧄 Feature: GPT‑5.2 reality check—evals vs cost and latency

Day‑two picture for GPT‑5.2: strong agentic task wins but mixed third‑party benchmarks and high token spend. Threads focus on SimpleBench underperformance, GDPval-AA costs, and heavy xhigh reasoning latency for AGI‑style gains.

GPT‑5.2 tops GDPval‑AA ELO but at ~250M tokens for 220 tasks

Artificial Analysis’ GDPval‑AA run now has GPT‑5.2 (xhigh) at the top of its agentic knowledge‑work leaderboard with an ELO of 1474, beating Claude Opus 4.5 at 1413 and GPT‑5 (high) at 1305. gdpval summary This is a third‑party rerun of OpenAI’s GDPval dataset, following up on gdpval expert wins which covered OpenAI’s own 70.9% win‑or‑tie rate vs human professionals.

The win comes with a clear price tag: running GPT‑5.2 through all 220 agentic tasks cost about $608–$620 and used ~250M tokens, versus ~$88 and ~40M tokens for GPT‑5.1 on the same harness, implying >6× more token usage for the new model. (run cost tweet, token usage tweet) Most of that overhead comes from letting GPT‑5.2 use the xhigh reasoning effort setting inside Artificial Analysis’ Stirrup agent framework, which encourages long tool‑using chains for each task. stirrup github repo For teams, the takeaway is that GPT‑5.2 xhigh really does buy state‑of‑the‑art agentic performance on realistic business workflows, but only pencils out when each task is worth a few dollars of compute—more like quarterly planning decks and complex RFPs than everyday chat or light ETL.

Xhigh reasoning boosts GPT‑5.2’s scores but raises per‑task cost and latency

The same high‑profile benchmarks that made GPT‑5.2 look like a monster also highlight how much extra compute it burns when you let it think in xhigh mode. On ARC‑AGI‑2, GPT‑5.2 (high) reaches ~52.9% but costs around $1.39 per task—roughly double GPT‑5’s 10% score at $0.73 per task and comfortably above GPT‑5.1 high’s lower‑accuracy, lower‑cost point. arc cost commentary Xhigh and Pro runs cost even more.

Users are noticing. One builder notes that AI Explained’s run of GPT‑5.2 xhigh on SimpleBench burned roughly 100k “thinking” tokens per query, xhigh token note while another jokes that “gpt 5.2 xhigh might as well be AGI because by the time it finishes my query, AGI will be here.”latency joke Others accuse OpenAI of “benchmaxing”—raising prices by ~40%, then letting models think far longer on evals to win leaderboards, without surfacing cost and time alongside accuracy. benchmax rant All of this is the flip side of arc-agi gains, where GPT‑5.2 Pro (xhigh) hit 90.5% on ARC‑AGI‑1 and achieved a 390× efficiency gain over last year’s SOTA when you compare to the original $4,500 DeepSeek‑style runs. Yes, you can buy huge gains with more test‑time compute—but your own workloads will only benefit if you actually let the model spend tens of thousands of extra tokens per hard problem.

For teams, the practical response is to treat xhigh reasoning as a scalpel, not a default: reserve it for the few percent of tasks where a single wrong answer is very expensive, and instrument your pipelines so that you see not just accuracy but also tokens, dollars, and seconds per task.

Builders reserve GPT‑5.2 for deep audits while leaning on faster models day‑to‑day

A pattern is emerging in how experienced developers are actually slotting GPT‑5.2 into their stacks: it’s a specialist, not the default. One engineer’s “best coding model TL;DR” is: daily driver Claude Opus 4.5, GPT‑5.2 (high) for planning, audits and bug‑fix review, and GPT‑5.2 Pro for the very hardest problems via repo‑level prompts. coding model tldr

Another reports that GPT‑5.2 is “most used atm… painful slow, pragmatic approach & advice but accurate,” with Opus 4.5 as a fast daily driver, Composer‑1 as an ultra‑fast cheap helper, and Gemini 3 Pro reserved for UI design ideas. model pool comment A third says GPT‑5.2 “has more… personality,” pushes back more, and often one‑shots complex requests—but adds bluntly: “It is DEAD SLOW, I mitigate by working on many things at once.”slow but strong

Some people tried to replace Opus entirely and backed off. One radio‑firmware builder found scenarios where both Opus 4.5 and GPT‑5.2 failed, concluding after an hour‑long session, “i will not be cancelling my opus subscription… opus is just more interactive and faster at trying things.”codex vs opus test kept opus

So the lived reality matches the benchmarks: GPT‑5.2 is excellent when you can afford to let it think—especially for whole‑repo reasoning, architecture reviews, or tricky planning—but for tight inner loops and interactive coding, many teams are sticking with faster, cheaper models and calling 5.2 only when the extra depth truly matters.

Sam Altman says GPT‑5.2 crossed 1T API tokens on day one

Sam Altman reports that GPT‑5.2 “exceeded a trillion tokens in the API on its first day of availability and is growing fast,” which is an enormous adoption spike for such a new and relatively expensive model. altman usage stat That implies developers immediately pushed large volumes of requests—probably a mix of agents, batch jobs, and upgraded production workloads—onto the new endpoints.

This surge arrives despite OpenAI increasing GPT‑5.2 prices by about 40% over GPT‑5.1 for the same token types and encouraging the use of heavy reasoning‑effort modes that further inflate usage. gdpval summary It lines up with economic work suggesting that demand for AI compute is highly elastic over time: once quality crosses a threshold, firms re‑architect workflows to exploit it, even if each call is pricier. jevons elasticity note If you’re running a serious AI product, the signal is simple: a lot of your peers are already experimenting with GPT‑5.2 at scale. The real question isn’t whether to try it, but which slices of your workload justify its higher per‑token and per‑request cost.

SimpleBench shows GPT‑5.2 underperforming older GPT‑5 and Claude Opus

On AI Explained’s SimpleBench—trick questions designed to test common‑sense reasoning and avoid obvious traps—GPT‑5.2’s scores are notably weak compared to both its predecessors and competitors. simplebench reaction GPT‑5.2 (base) lands at 45.8% and GPT‑5.2 Pro at 57.4%, versus GPT‑5 Pro at 61.6%, Claude Opus 4.5 at 62.0%, and Gemini 3 Pro at 76.4%.

Even more awkwardly for OpenAI, GPT‑5.2 base is only marginally ahead of older mid‑tier models like Claude 3.7 Sonnet (44.9%) and Claude 4 Sonnet (thinking) at 45.5%, and sits well behind its own GPT‑5 (high) configuration at 56.7%. (simplebench screenshot, simplebench recap) This aligns with early user sentiment that GPT‑5.2’s reasoning shines on long, structured tasks but doesn’t automatically dominate on short, trap‑heavy queries.

For engineers choosing default models, SimpleBench is a reminder that “latest and greatest” doesn’t always mean better on casual question‑answering—you may want to route tricky, bait‑style prompts to whatever you’ve empirically seen behave best rather than assuming GPT‑5.2 will outperform everything out of the box.

Epoch’s ECI suggests ~3.5‑hour METR time horizon for GPT‑5.2

Epoch plugged GPT‑5.2’s new capabilities score (ECI 152) into its regression against METR’s Time Horizons benchmark and predicts that a carefully run GPT‑5.2 should sustain competent, failure‑free behavior for about 3.5 hours on the hardest safety tasks. (eci time horizon thread, eci score thread)

In the same model, Gemini 3 Pro lands at a central estimate of 4.9 hours while Claude Opus 4.5 comes out at 2.6 hours, though Epoch stresses that the 90% prediction intervals are wide—roughly 2× up or down—and that their ECI framework historically underestimates Claude‑family models by about 30%. (eci caveat, eci benchmarks hub) So this doesn’t prove GPT‑5.2 is strictly “worse” than Gemini 3 Pro on Time Horizons, but it does support the qualitative picture: OpenAI’s model is now in the same long‑run league as the best models, not dramatically ahead.

For leaders thinking about multi‑hour agents or semi‑autonomous workflows, this suggests GPT‑5.2 is viable for long‑running tasks, but you should still budget guardrails, monitoring, and periodic human checkpoints rather than assuming it can be left alone indefinitely.

VendingBench‑2: GPT‑5.2 improves on GPT‑5.1 but trails Gemini 3 and Opus 4.5

On Andon Labs’ VendingBench‑2—an agent simulation where models manage a small “vending” business over ~350 days—GPT‑5.2 posts a big gain over GPT‑5.1 but still lags the top models. vendingbench summary The plotted equity curves show Gemini 3 Pro and Claude Opus 4.5 ending near the $4.5k–$5k mark, Claude Sonnet 4.5 around ~$3.8k, GPT‑5.2 around ~$3k, and GPT‑5.1 stuck near ~$1.2k.

So GPT‑5.2 looks substantially more competent than GPT‑5.1 at multi‑step, economically grounded decision‑making, but it’s not yet matching the strongest Gemini or Opus configurations on this particular long‑horizon benchmark. vendingbench summary For people building revenue‑impacting agents—pricing bots, growth agents, operations planners—this is a subtle but important data point: GPT‑5.2 is competitive, but not clearly dominant, once you move from academic math to money‑linked simulations.

🔎 Autonomous research agents: HLE/DeepSearchQA race

Continues yesterday’s Gemini Deep Research momentum but adds a new twist: Zoom claims HLE leadership with federated AI, while Google opens the Interactions API path for backgroundable Deep Research. Excludes GPT‑5.2 evals covered in the feature.

Gemini Deep Research gets Interactions API access and the DeepSearchQA benchmark

Google is turning its Gemini Deep Research agent into something engineers can call directly: the new Interactions API now supports a deep-research-pro-preview-12-2025 agent that runs long‑horizon web research jobs in the background. interactions code At the same time, the team has open‑sourced DeepSearchQA, a 900‑task benchmark for autonomous research across 32 domains, where Deep Research scores 66.1% vs. Gemini 3 Pro’s 56.6%. DeepResearch chart

Following up on initial launch, the code sample from Google’s devrel team shows a simple pattern: create an interaction with agent='deep-research-pro-preview-12-2025' and background=True, poll client.interactions.get() until status == "completed", then read interaction.outputs[-1].text. interactions code This makes Deep Research feel like a first‑class asynchronous job runner, not a one‑shot chat model—you kick off a multi‑minute research session, go do something else, and retrieve a synthesized report when it’s done.

On the evaluation side, Google is treating DeepSearchQA as the long‑form counterpart to benchmarks like HLE. The released card shows Gemini Deep Research at 66.1% vs. Gemini 3 Pro at 56.6% and o4‑mini/o3‑deep‑research baselines in the 40s on DeepSearchQA, plus strong scores on Humanity’s Last Exam (46.4%) and BrowseComp (59.2%). DeepResearch chart Unlike a simple QA leaderboard, tasks force the agent to read PDFs, CSVs, and web pages, then synthesize multi‑source answers—exactly the kind of workflow the Interactions API is built to host.

For you as a builder, the interesting part is the alignment between API surface and benchmark. You now have:

- A production‑oriented agent endpoint that can run for minutes in the background with clear status semantics. interactions code - A public, structured benchmark (DeepSearchQA) that mirrors those capabilities and gives you a yardstick for your own research agents. DeepResearch chart So what? If you’re experimenting with autonomous literature review, due‑diligence bots, or compliance research, you can start by:

- Prototyping against the Interactions API using Deep Research as a strong baseline agent.

- Running your own harness on DeepSearchQA tasks to see if custom prompting, tools, or routing can beat Google’s default agent.

- Treating Deep Research less as “the smart model” and more as one component in a federated or multi‑agent system, especially now that Zoom has shown how orchestration can shuffle the leaderboard. Zoom HLE blog This feels like the start of a more formal agent race: Google is giving you both the agent (via Interactions) and the testbed (via DeepSearchQA), while other players like Zoom are proving that you can swap in your own pipelines and still compete at the top of Humanity’s Last Exam. builder confusion Expect more teams to plug into these APIs, run their own federated setups, and publish results that blur the line between “model benchmark” and “agent benchmark.”

Zoom’s federated AI edges Gemini Deep Research on Humanity’s Last Exam

Zoom is claiming the new top spot on Humanity’s Last Exam (HLE), reporting a 48.1% full-set score for its “federated AI” agent, beating Gemini 3 Pro’s 45.8% and GPT‑5 Pro’s 38.9% in the same table. Zoom HLE blog This is the first time a collaboration-heavy, multi‑model agent stack has publicly leapfrogged a frontier lab’s own research agent on this benchmark.

Zoom describes a three‑stage loop—explore, verify, federate—where multiple models search, propose answers, cross‑check against full context, and then a separate “Z‑scorer” picks or combines outputs. Zoom HLE blog In other words, the win is less about a single base LLM and more about orchestration: routing different question types to different specialists, running redundant attempts, and using verification passes to knock out bad candidates.

Builders are understandably puzzled: HLE leadership just shifted from Google’s Gemini Deep Research to a conferencing company, and Zoom hasn’t disclosed which underlying models it uses. builder confusion That raises all the usual questions about apples‑to‑apples comparisons—how much of the gain is agent design vs. base model choice vs. search tooling—and whether federated stacks should live in the same leaderboard row as single‑model agents.

For AI engineers and analysts, the takeaway is that agent architecture now matters as much as model choice on complex research tasks. If you’re building your own HLE‑style agents, this result argues for:

- Routing: different models or prompts for lookup, reasoning, and answer synthesis.

- Verification: explicit cross‑checks on each candidate answer against the full context, not just “best of N sampling”.

- Federation: a cheap arbiter model or scoring function (like Zoom’s Z‑scorer) that treats upstream models as interchangeable workers rather than oracles.

The point is: Zoom just demonstrated that a well‑engineered federated agent can outrun lab‑native systems on one of the toughest public research benchmarks. That doesn’t settle which base model to bet on, but it does signal that the next meaningful gains in autonomous research may come from how you wire models together, not only from waiting for the next frontier release.

🧰 Coding agents & skills: ChatGPT/Codex skills, IDE QoL, ops

Hands‑on upgrades for builders: ChatGPT’s /home/oai/skills for PDFs/Docs/Sheets, Codex CLI experimental skills, Cursor’s visual editor fixes, Oracle adding GPT‑5.2 Pro extended thinking. Excludes GPT‑5.2 benchmark debates covered in the feature.

OpenAI quietly rolls out reusable “skills” for ChatGPT and Codex

OpenAI’s code sandbox now ships with a /home/oai/skills folder and Codex CLI has an experimental ~/.codex/skills mechanism, giving builders a first‑party way to package multi‑file tools for spreadsheets, DOCX and PDFs as reusable “skills.” skills folder demo skills writeup

Each skill is a directory with a Markdown spec and optional scripts; the CLI discovers them at startup and the prompting layer uses a “progressive disclosure” contract (read SKILL.md, then only load extra files when needed) as shown in the extracted system prompts. (cli skills docs, cli skills guide, prompt gist) Simon Willison’s deep dive also uncovers the PDF skill’s pipeline: PDFs are rendered to per‑page PNGs, then handed to a vision model so ChatGPT can reason about layout, tables and images instead of doing brittle text extraction. (skills deep dive, skills blog post) Developers are already abusing this—one example had ChatGPT assemble a multi‑page report on New Zealand’s kākāpō breeding season entirely inside the sandbox using the PDF skill. pdf skill example pdf viewer

For AI engineers, this is effectively a supported, file‑system‑based tool plug‑in format: you can ship well‑tested code plus docs alongside your agent, keep prompts small, and rely on OpenAI’s own runtime to turn those skills into tool calls, instead of reinventing a bespoke tools layer on every project.

Claude Code adds Android client, async runs, and desktop local files

Anthropic’s Claude Code now spans more surfaces: there’s an Android app in research preview for cloud‑hosted coding sessions, agents can run asynchronously, /resume groups forked sessions, MAX users can hand out 1‑week Pro guest passes, and the desktop app just gained support for working on local files without dropping to the CLI. (Claude CLI, weekly roundup, local files comment)

On Android, you can kick off long Claude Code tasks from your phone, let them run in the cloud, and review or resume them later—handy for tests or refactors that don’t need a laptop in front of you. weekly roundup Guest passes (/passes) make it easier to get teammates onto Claude Code Pro without running a full rollout, and async agents help with “fire‑and‑forget” background exploration of large repos. guest pass screenshot The desktop local‑files support closes a big ergonomics gap: you can now point Claude Code at your local project directly from the GUI and still get the same multi‑agent workflows, rather than juggling editor, CLI, and browser. desktop cli view Taken together, Claude Code is looking less like a fancy CLI and more like a full dev‑environment companion: always‑on, multi‑device, with a path to share access and keep code close to where developers actually work.

CopilotKit’s `useAgent()` turns any React app into an agent console

CopilotKit v1.50 introduces a useAgent() hook that lets you plug any LangChain/LangGraph, Mastra or PydanticAI agent directly into a React frontend and let it drive chat UI, generative interfaces, frontend tool calls, and shared state. (copilotkit v1-50, pydantic useagent)

The hook wraps an agent endpoint and exposes a simple interface (const { agent } = useAgent({ ... })) so your UI can send messages, stream responses, and let agents take actions (like mutating app state or calling tools) without custom wiring each time. pydantic useagent Separate adapters make it easy to talk to LangGraph agents, and other tweets show people wiring up back‑and‑forth multi‑agent chats (e.g. Gemini vs GPT arguing about pizza) with the same primitive. (langgraph adapter, multi-agent pizza demo) There’s even an early integration with VoltAgent, where useAgent acts as the bridge between their agent orchestration and a React front‑end. voltagent comment If you’re building an agentic product, this gives you a higher‑level “agent socket” for the UI layer: instead of reinventing chat plumbing and event handling for each experiment, you can standardize on useAgent() and swap in different backends as your orchestration stack evolves.

Cursor rapidly iterates on its new visual editor based on feedback

Cursor shipped a wave of quality‑of‑life fixes to its brand‑new visual browser editor—removing animations, adding ⌘Z/⌘⇧Z undo/redo, making backspace delete the current element, rounding blur to 0.1, and letting Select add multiple elements as context. (visual editor, update list, rollout note)

These are all about feel: selection now snaps instantly instead of easing, keyboard shortcuts work like designers expect, and multi‑select means you can hand the agent a coherent set of DOM nodes to refactor instead of a single div at a time. (undo shortcut demo, multi-select demo) The team is shipping changes literally the day after launch, explicitly calling out users by handle in thank‑you notes, which tells you this editor is core to their agent story, not a side experiment. rollout note If you’re already using Cursor’s browser to refactor UI, this makes the visual editor much less toy‑like: you can treat it more like Figma‑meets‑DOM for quick layout tweaks, while Cursor keeps generating the underlying React/Tailwind code.

Oracle CLI adds GPT‑5.2 Pro with extended thinking and sturdier uploads

The Oracle 🧿 CLI for headless browser automation now supports gpt-5.2-pro, including the new “Extended thinking” mode in its model switcher UI, and it hardens file uploads by pasting contents inline up to ~60k chars and automatically retrying when ChatGPT rejects them. (oracle 0-6 release, oracle release notes) Extended thinking lets Oracle push 5.2 Pro into the long‑horizon regime—multi‑step browsing or codegen runs that reason for many minutes before committing actions—without you hand‑tuning reasoning settings for each call. long thinking example The upload changes matter if you’re driving ChatGPT’s browser UI from Oracle: instead of a single failed drop killing the run, Oracle now degrades gracefully by inlining smaller files and retrying larger ones, which is exactly what you want for flaky web frontends.

For teams using Oracle as an agent harness, this is a clean way to get access to GPT‑5.2 Pro’s xhigh‑style reasoning inside scripted browser workflows, while reducing two of the most common sources of flakiness: fragile uploads and under‑ or over‑thinking model calls.

Acontext launches as a context and skill memory layer for agents

Acontext is positioning itself as a “context data platform” for cloud‑native AI agents, storing session traces, artifacts and distilled reusable skills so agents can actually learn from past work instead of repeating brute‑force exploration. acontext overview

The core idea: log each agent session, break it into tasks with outcomes, then automatically distill successful patterns into named skills (like deploy_sops or github_ops) that future runs can invoke, all visible in a dashboard that mirrors a Session → Tasks → Skills pipeline. acontext overview That moves a lot of “agent memory” out of brittle prompt soup and into a structured store, with enough metadata to debug why certain workflows work or fail.

If you’re experimenting with long‑running coding or ops agents, Acontext looks like the missing state layer: it can host per‑org context, track user feedback, and surface which chains of tools and prompts are actually reliable enough to promote into first‑class skills for your orchestrator.

Anthropic memory tool lands in Vercel AI SDK for persistent agent context

Vercel’s AI SDK has added support for Anthropic’s memory_20250818 tool, letting agents persist, view, and edit long‑term memory via a structured tool interface instead of shoving everything into prompts. memory tool code

In the example ToolLoopAgent config, memory is just another tool, but its execute handler handles commands like view, create, str_replace, insert, delete, and rename over a backing store such as a file or database. memory tool code That means you can give Claude‑powered agents a durable scratchpad or knowledge base—say, per‑user preferences or project notes—while keeping the actual persistence logic on your side of the wire.

For anyone building multi‑session coding assistants or project copilots, this is a clean pattern: treat memory as a first‑class tool with a narrow API, not an implicit blob in system prompts, and let the SDK orchestrate when the model is allowed to read or modify it.

Helicone adds one‑line DSPy integration for agent observability

Helicone shipped a direct integration with DSPy so you can trace and inspect DSPy‑built agents with a single config change, bringing its logging and analytics to DSPy’s programmatic prompting workflows. helicone dspy integration DSPy is increasingly used to structure multi‑step reasoning and retrieval pipelines; having Helicone capture each call, latency, cost and outcome makes it much easier to debug when your agent trees blow up or silently degrade.

The key point is simplicity: the Helicone team emphasizes that adding their client to a DSPy project is a “one line change,” which lowers the bar enough that even experimental agent runs can be instrumented from day one.

If you’re leaning into DSPy for complex coding or research agents, pairing it with Helicone gives you a quick path to see where prompts are thrashing, how different models behave under the same policy, and which parts of a pipeline are actually worth optimizing.

🎙️ Realtime voice: Gemini Live, Translate, and call UX

Voice stacks level up: Gemini 2.5 Flash Native Audio improves tool calling and instruction adherence; Translate adds live speech‑to‑speech with tone and cadence; LiveKit cuts false end‑of‑turns. Mostly platform and latency/form‑factor updates.

Gemini 2.5 Flash Native Audio tightens tool calls and multi-turn voice

Google rolled out an updated Gemini 2.5 Flash Native Audio model with much stronger function calling and instruction adherence for live voice agents, hitting 71.5% on ComplexFuncBench audio, 90% dev-instruction adherence, and 83% overall conversational quality. audio launch note This builds on the TTS refinements from earlier in the week tts update, and it’s already exposed via the Live API and Vertex as gemini-2.5-flash-native-audio. pipecat api mention

For builders, the point is that the native audio stack now decides more reliably when to call tools and when to stay in conversation, which matters for real customer calls where spurious tool calls burn latency and money. The benchmark chart shows a clear step up versus the September Flash Native Audio release and competitive parity or better versus OpenAI’s realtime stack on function-calling accuracy. bench comparison Full details on the audio roadmap sit in Google’s latest Gemini audio blog.google blog post

Google Translate adds Gemini-powered live speech-to-speech with headphones

Google Translate is getting Gemini-powered live speech‑to‑speech translation plus a new “Live translate” headphone beta that preserves tone, pacing, and pitch across more than 70 languages and ~2,000 language pairs. translate summary You can either stream one-way translation to headphones or run a two-way mode that switches target language by speaker, starting on Android in the US, Mexico, and India, with iOS and more regions due in 2026. rollout details

Under the hood, Translate now leans on Gemini 2.5 Flash Native Audio to resolve context and idioms so it avoids literal, awkward phrasing; the video demo shows near‑instant English/Spanish captions with natural prosody. demo praise The same blog clarifies that translation is noise‑robust and auto‑detects languages, and it frames this alongside Translate’s upgraded practice mode that adds streaks and more tailored feedback. translate blog post For voice UX teams, this is essentially turning any headphones into a low-friction, near‑real‑time interpreter, following up on earlier pricing work for Gemini Live two‑way voice. price point

LiveKit’s new end-of-turn model cuts false interruptions by 39%

LiveKit shipped an updated end‑of‑turn detection model for its voice stack that reduces errors by an average of 39% across 14 languages, with special handling for structured data like phone numbers, emails, and URLs. model announcement That means voice agents are less likely to cut users off mid‑sentence or hang awkwardly when someone is slowly dictating a number.

The team explains in their blog how they trained the detector on multi‑language conversational data and tuned it to avoid treating brief pauses inside email addresses or numeric sequences as true turn endings. livekit blog post For anyone running Gemini, OpenAI, or custom LLMs behind LiveKit, this is a drop‑in improvement: you keep your model prompts the same, but users experience fewer "hello? are you still there?" moments and more natural overlaps in live calls.

Pipecat exposes new Gemini Live audio models for browser voice agents

Pipecat updated its demo to use the new Gemini Live model gemini-2.5-flash-native-audio-preview-12-2025 from Google AI Studio and the GA gemini-live-2.5-flash-native-audio on Vertex AI, giving developers a quick way to try the upgraded live voice stack in a browser. pipecat demo

The landing page now ships a Gemini Live preset that shows streaming waveforms, partial transcription, and back‑and‑forth audio turns, so you can feel the lower latency and smoother turn‑taking before wiring it into your own stack. pipecat demo This ties the new Native Audio metrics to a concrete dev surface: instead of wiring the Live API from scratch, you can prototype an agent pipeline in Pipecat, then later swap in your own backends while keeping the same event model. It’s a good reference if you’re trying to understand how to plumb Gemini Live into WebRTC or Daily-style infra and want to see working code rather than docs alone.

MiniMax voices arrive on Retell AI with <250 ms latency and 40+ languages

Retell AI added MiniMax voices to its telephony stack, advertising sub‑250 ms latency for real‑time conversations, smart text normalization, and support for 40+ languages with inline code‑switching. minimax retell launch This is squarely aimed at production call centers and conversational IVRs where every extra 100 ms of delay makes the agent feel synthetic.

Retell’s announcement highlights that the TTS layer now understands URLs, emails, dates, and numbers, so callers hear cleaned‑up speech instead of raw tokens, and that MiniMax’s multilingual support can switch languages fluidly inside a single turn. minimax retell launch For teams building voice agents on Retell, this gives a new fast‑path for non‑English markets without having to manage your own low‑latency TTS servers or pronunciation dictionaries.

OpenBMB’s VoxCPM offers tokenizer-free TTS at 0.17× real-time

OpenBMB released VoxCPM, a tokenizer‑free text‑to‑speech model that runs at real‑time factors as low as 0.17 on consumer GPUs, meaning it can generate speech several times faster than playback speed while keeping high fidelity. voxcpm thread Instead of discrete codes, it uses a hierarchical continuous setup (text‑semantic language model + residual acoustic language model) to keep both stability and expressiveness.technical report

The 0.5B‑parameter model is Apache‑2.0 licensed and ships with a Hugging Face model card plus demo space, so it’s easy to drop into an existing stack for offline or near‑realtime synthesis.huggingface model card For voice agent builders, VoxCPM is interesting as a local fallback or on‑device voice for latency‑sensitive flows where a cloud TTS round‑trip is too slow, or where you want more control over the full waveform generation process than typical codec‑based models allow.

🎬 Generative video & visuals: actor swaps, Kling/Kandinsky, playbooks

High‑volume creative news: InVideo ‘Performances’ preserves acting while swapping cast/scene, Leonardo + Kling 2.6 workflows, and Kandinsky 5.0 entries on Video Arena. Ensures creative stack coverage distinct from model evals.

Invideo launches Performances for high-fidelity cast and scene swaps

Invideo is rolling out a new Performances engine that lets you swap actors or entire scenes while preserving the original performance – eye lines, lip sync, micro‑expressions, timing – from a single iPhone take, following up on ai film tool that focused on stylized, performance‑preserving looks. feature breakdown Creators can now do Cast Swap (new character over the same acting) or Scene Swap (change character plus environment) and turn one raw take into up to 100 polished variants without reshoots. actor swap demo

For UGC agencies and performance marketers, this effectively turns a laptop into a lightweight virtual production stage: one script and session can become hundreds of consistent ads with frame‑accurate acting, while swapping brands, outfits or backdrops as needed. marketing use cases A detailed how‑to from the vendor walks through using Performances for both cast and full‑scene replacement, including guardrails to keep emotion and motion locked to the original take. feature guide The point is: this is one of the first mass‑market tools where actor swaps feel like controlled post‑production, not a glitchy face filter, which changes how smaller teams can think about re‑use of on‑camera talent.

Leonardo + Kling 2.6 workflow promises cheap AI game cutscenes

A new guide from Leonardo’s community shows how to build game cutscenes by chaining Nano Banana Pro for stills with Kling 2.6 for motion, arguing that studios will adopt AI video for cutscenes because it is faster and cheaper than traditional pipelines. workflow thread The recipe is straightforward: grab an in‑engine screenshot, feed it to Nano Banana Pro with a cinematic prompt to get a high‑quality still, iterate a few times, then hand that still to Kling 2.6 to generate animated shots with native audio.

Because Kling 2.6 can be steered shot by shot and already includes synced audio, small teams can prototype entire sequences without mocap or voice sessions, then selectively upscale the best takes. workflow thread The same flow works beyond games – any static key art or product render can become a short, on‑model video sequence – but the thread explicitly targets "video game industry will start using AI to create its cutscenes" and provides prompts and settings for people to copy into Leonardo today.workflow guide For engineers, the takeaway is that image and video models are starting to look like a single composable stack: one image model for look, one video model for motion and sound, tied together by prompt conventions rather than custom tools.

Video Arena ranks Kling 2.6 Pro and Kandinsky 5.0 among top video models

Video Arena’s latest leaderboard update shows Kling 2.6 Pro and Kandinsky 5.0 entering as serious contenders for text‑to‑video and image‑to‑video work, adding third‑party signal around models that were mostly seen through vendor promos before. leaderboard thread Kling 2.6 Pro now sits at #10 overall for text‑to‑video with a score of 1238 and #6 for image‑to‑video with 1296, reportedly a 16‑point jump over Kling‑2.5‑turbo‑1080p, while Kandinsky‑5.0‑t2v‑pro debuts as the #1 open‑weight text‑to‑video model (#14 overall, score 1205) and the lighter Kandinsky‑5.0‑t2v‑lite lands #3 open (#22 overall, score 1121). shots launch

For creative teams deciding what to actually wire into tools, this means there is now crowd‑sourced preference data saying Kling’s latest release and Kandinsky 5.0 are competitive not just on single demos but across many prompts and judges. The update also tightens the race among open models: Kandinsky 5.0 now anchors a realistic open‑source option for video, while Kling 2.6’s gains hint that proprietary stacks will keep iterating fast; both matter if you are choosing a default backend for a video editor, ad‑builder or game tool rather than hand‑picking a model per project.

ComfyUI showcases 3×3 Nano Banana Pro grid for product ads

ComfyUI is highlighting a new "3×3 Grid For Product Ads" workflow that uses Nano Banana Pro to generate nine distinct ad shots from a single image prompt, then lets you pick and upscale a favourite shot inside the node graph. event announcement The live deep‑dive pairs community creators with ComfyUI’s team to walk through a template where one Nano Banana Pro call lays down style and subject, and the grid node fans that out into a board of variations tuned for things like angle, framing and background treatment.

Because it is implemented as a public template rather than a closed tool, anyone running ComfyUI can drop it into their own pipeline for ecommerce, app screenshots or social promos, and adjust how aggressive the variation is versus brand consistency. workflow link People close to the project note that this is effectively an ad‑shot generator for small shops: instead of hand‑prompting dozens of product angles, you route a single base image through the grid, get nine options, and only invest more compute in the one or two that are worth upscaling. community reaction It is another example of how open‑graph tools are turning "prompt engineering" patterns (like multi‑shot boards) into reusable building blocks that designers can treat as presets rather than fragile one‑off hacks.

🏗️ AI infra economics: GPUs, DC timelines, and debt risk

Infra threads center on supply and financing: Nvidia may boost H200 output for China amid tariffs/capacity limits, Oracle slows DC buildout, and Bloomberg flags $10T data‑center boom risks. Also a TPU design note from Jeff Dean on speculative features.

AI data center boom heads toward $10T with rising debt and glut risk

Bloomberg estimates that the total cost of the AI‑driven data center build‑out could reach around $10 trillion globally, and counts roughly $175 billion of US data center credit deals in 2025 alone. bloomberg dc bubble Another dataset shows JPMorgan as the single largest project‑level lender at close to $6 billion in AI data center loans, with a long tail of banks and private credit funds following. dc lending chart

Following up on Broadcom’s report of a $73 billion networking backlog for AI data centers ai backlog, this wave is now funded with increasingly aggressive terms: some borrowers seek 150% of build cost and yields barely 1 percentage point over Treasuries. Deals are being securitized or structured as synthetic leases, which hides risk on balance sheets and echoes late‑cycle real‑estate patterns. For AI companies, this can keep GPU hosting cheap in the short run, but infra and finance teams should model scenarios where oversupply, falling rack rents, or a credit squeeze force consolidations, cancelled expansions, or sudden price hikes on long‑term training and inference contracts.

China mulls extra $70B in chip incentives as global AI race heats up

Bloomberg sources say China is weighing as much as $70 billion in new semiconductor incentives to accelerate domestic fabs and GPU makers, on top of existing programs like the “Big Fund” and earlier stimulus. china chip plan A separate investment breakdown shows China already leads planned and allocated chip support at about $142 billion, versus ~$75 billion in the US (CHIPS Act plus loans and tax breaks) and smaller but rising efforts in South Korea, Japan, and the EU. global chips chart

For AI builders this isn’t abstract industrial policy. If even part of this money flows into advanced nodes and accelerator design, it shortens the time until Chinese GPU and NPU lines can stand in for Nvidia in local training clusters, and reduces the bite of US export controls in the medium term. It also sharpens the contrast with Europe’s more regulatory focus: a world where China pours >$200 billion into fabs while Brussels waits for an “AI bubble” to pop will tilt where model training, inference hosting, and even open‑source model innovation physically live.

Nvidia weighs boosting H200 GPU output for China despite export fees

Reuters reports that Nvidia is considering increasing production of its H200 GPUs after Chinese orders outstripped what it can ship in December, even under new US rules that add a 25% fee on China sales and keep the chips on TSMC’s scarce 4 nm lines. china h200 report H200 is estimated to deliver around 6× the performance of Nvidia’s China‑specific H20, and Chinese regulators are reportedly weighing requirements that each imported H200 be bundled with a certain ratio of domestic accelerators.

For infra teams this means China’s access to high‑end Nvidia silicon may grow again, despite earlier quotas steering state buyers toward Huawei and Cambricon GPUs huawei shift. If Nvidia does ramp H200, it will be competing with Blackwell and future Rubin parts for the same TSMC capacity, so non‑Chinese buyers could still see tight supply and pricing pressure in 2026–27. The point is: export controls are becoming a tax and routing problem, not a clean cutoff, and capacity allocation decisions at TSMC now directly shape where frontier‑scale AI training happens on the planet.

Oracle pushes some OpenAI-linked data center timelines from 2027 to 2028

AILeaks notes that Oracle has delayed parts of its data center build‑out for OpenAI from 2027 into 2028, citing labor and materials shortages on the projects that underpin future ChatGPT capacity. oracle dc delay These sites are part of Oracle’s broader pitch as a primary cloud for OpenAI training and inference workloads.

For infra leaders the message is that even with huge demand and capital lined up, physical execution is the bottleneck. Power, crews, and construction supply chains move slower than GPU allocation spreadsheets. If you’re planning to lean on these Oracle‑hosted clusters in the late‑2020s, it’s worth treating dates as soft and building contingency plans—regional diversity, alternative clouds, or more aggressive on‑prem builds—so model launches and enterprise rollouts aren’t gated by someone else’s construction schedule.

Jeff Dean explains TPU strategy of reserving die area for speculative features

Jeff Dean says it’s nearly impossible to predict ML hardware needs 2–6 years out, so Google’s TPU roadmap deliberately embeds researchers early and allocates part of each chip’s die area to speculative “maybe” features. jeff dean clip If a new training pattern or architectural idea works, the hardware is ready on day one; if it doesn’t, only a small slice of silicon is wasted.

This is a very different posture from traditional CPU roadmaps optimized around stable, known workloads. For people designing accelerators or negotiating long‑term GPU/TPU commitments, the takeaway is that a chunk of next‑gen tensor cores, interconnects, or quantization paths will be experimental by design. That increases upside—faster adoption of things like sparsity, attention variants, or RL‑heavy training—but also means some features your teams bet on may never be exercised in production. Infra leads should keep tight loops between research and platform teams so they can actually exploit the “maybes” they’re already paying for in chip area.

💼 Enterprise adoption & market share: BBVA, Menlo data, Go tier

What changed today: OpenAI expands ChatGPT Enterprise at BBVA (120k seats), Menlo Ventures shows Anthropic at 40% enterprise LLM spend vs OpenAI 27%/Google 21%, and ChatGPT Go expands in LATAM. Excludes feature’s 5.2 eval storyline.

BBVA rolls ChatGPT Enterprise out to 120,000 employees

OpenAI and Spanish bank BBVA are expanding their deployment of ChatGPT Enterprise to 120,000 staff, framing it as a shift toward "AI‑native" banking workflows rather than a small pilot tool. bbva announcement The joint blog post says the rollout will support internal productivity use cases and lay groundwork for future agentic workflows across retail and corporate banking, underlining that large, regulated incumbents are now comfortable standardizing on frontier LLMs for day‑to‑day knowledge work. OpenAI blog This matters for builders because BBVA is a G-SIB‑scale reference customer: it validates ChatGPT Enterprise’s security posture for financial data and signals that banks may soon expect deep integrations (tickets, docs, CRM, RPA) rather than isolated chatbots. If you’re selling into fintech or FS, this is a strong proof point that "generic" LLMs can clear compliance and be rolled out at full workforce scale, not just to innovation labs.

Menlo: Anthropic jumps to 40% of enterprise LLM spend, OpenAI falls to 27%

Menlo Ventures’ 2025 State of GenAI report shows a sharp reshuffle in enterprise LLM API spend: Anthropic now captures 40%, up from 24% last year and 12% in 2023, while OpenAI has slid to 27% from 50% in 2023; Google has climbed to 21% from 7% over the same period. menlo market share In coding‑focused workloads Anthropic is even more dominant, with 54% 2025 share vs 21% for OpenAI and 11% for Google.

The same dataset shows open‑source providers (Meta, Mistral, Qwen, DeepSeek, others) shrinking from 19% to 11% of enterprise API usage as Llama’s slow release cadence and fragmented offerings blunt its earlier momentum. open source decline

For engineering leaders, the takeaway is that Anthropic and Google have converted their model quality and pricing into real budget share, while open models are still more of an infra and cost‑control play than the primary API for most large enterprises. If you’re building multi‑provider routing, these numbers argue for treating Anthropic as a first‑class default alongside (not behind) OpenAI in 2025.

ChatGPT Go expands in LATAM as a cheaper GPT-5 access tier

Following up on Go launch where OpenAI partnered with Rappi to push ChatGPT Go in Latin America, the company is now more broadly surfacing Go in the ChatGPT app across LATAM markets as a low‑cost middle tier between Free and Plus. go plan screenshot A Spanish UI screenshot shows Go including GPT‑5 access for free users but adding higher message caps, more file uploads, more image generation, advanced data analysis, and expanded memory compared to the free plan.

This effectively turns Go into a mass‑market, region‑sensitive way to monetize GPT‑5 usage without the full Plus price, and it will likely push a lot of casual users and small businesses onto a predictable subscription instead of pure free usage. If you build around ChatGPT as a frontend for your product in LATAM, expect more of your customers to have Go‑level limits (more files, more images) even if they’re not on full Plus, and consider tailoring flows to work within those expanded but still finite quotas.

Pinterest says open models cut its AI costs by ~90%

Pinterest CEO Bill Ready told The Information that the company has reduced its AI model costs by about 90% by switching heavily to open‑source LLMs instead of “brand‑name” proprietary APIs. pinterest quote

Clement Delangue from Hugging Face amplified the quote as evidence that we may be at the "peak of proprietary APIs" for many workloads. pinterest quote For AI platform teams this is a concrete datapoint that production recommender/search/ads systems can move to self‑hosted or managed open models without killing quality, and that CFOs now expect order‑of‑magnitude savings from such moves. It increases pressure on closed‑model vendors to justify higher per‑token pricing with differentiated capabilities (long‑context, safety, tools) or enterprise features, and it strengthens the business case for investing in your own fine‑tuned open‑model stack rather than assuming API costs will always be acceptable.

Lightfield pitches AI-native CRM built around transcripts, not fields

New startup Lightfield is positioning itself as an "AI‑native" CRM that treats emails, calls, and meetings as the primary source of truth and lets an LLM backfill and maintain structured fields like deal stage, last touch, objections, and budget. lightfield overview Instead of reps typing into rigid forms, the system continuously ingests conversations, runs extraction over the whole history, and then proposes CRM updates for humans to accept, including retroactively populating new fields across months of past data.

The company claims a 20k+ waitlist and says hundreds of YC founders have already switched from HubSpot/Attio/Notion‑style stacks, betting that AI‑first CRMs will win once they can keep structure in sync with messy text at scale. lightfield overview For AI engineers this is a good reference pattern: center the unstructured corpus, derive schema on demand, and keep humans in the loop approving model‑suggested changes, rather than bolting a chatbot onto an old SQL‑first backend.

🧠 Reasoning training: targeted credit, longer RL runs, async thinking

New research‑style advances emphasize efficiency: hierarchy‑aware credit assignment for planning tokens, extended open RL runs (Olmo 3.1), and async reasoning to keep speaking while thinking. Practical for scaling ‘agents that reason’.

Asynchronous Reasoning paper turns “thinking LLMs” into real‑time agents

The Asynchronous Reasoning paper proposes a training‑free way to let "thinking" LLMs keep reasoning while they are already talking, cutting end‑to‑end response delays by 6–11× while keeping the same plan quality. paper summary Instead of generating all private chain‑of‑thought then answering, the method runs three token streams in one forward pass—user input, hidden thoughts, and public reply—by remapping rotary positions in the KV cache so the model sees one coherent timeline.

On interactive benchmarks, the system gets first spoken or visible output in ≤5 seconds while preserving the benefits of long CoT, and a safety variant that adds a "safety thinker" stream cuts harmful jailbreak answers from 13%→2% on a red‑teaming set. paper summary For anyone building voice agents, copilots, or robot controllers on top of slow "Thinking" models, this is a big deal: it suggests you can retrofit existing models with async planning using only inference‑time cache tricks, without retraining or architecting a whole separate fast/slow model pair.

HICRA RL paper shows planning‑token credit boosts LLM reasoning

A new RL paper proposes Hierarchy‑Aware Credit Assignment (HICRA), which concentrates reward and gradient signal on planning tokens instead of treating every token equally, and it delivers sizable gains on math and Olympiad benchmarks versus GRPO and similar baselines. paper thread The authors identify "Strategic Grams" like “but the problem mentions that…” as scaffolding for reasoning, then up‑weight those n‑grams during RL, pushing Qwen3‑4B‑Instruct from 68.5%→73.1% on AIME24 and Qwen2.5‑7B‑Base up +8.4 points on AMC23 and +4.0 on Olympiad tasks according to the OpenReview draft. ArXiv paper

For builders, the point is: if you’re training reasoning models, most of your RL signal is currently wasted on low‑level execution tokens; this work offers a practical recipe (token classification + reweighted loss) to get more out of the same data and steps. It also gives a more mechanistic story for "aha moments" and length scaling under RL, which helps explain why longer chains sometimes emerge abruptly rather than smoothly. rl-commentary

Olmo 3.1 32B Think shows long RL runs keep paying off

Allen AI quietly kept the Olmo 3 32B RL job running for three extra weeks and found that reasoning and coding scores kept improving instead of saturating, leading to the new Olmo 3.1 32B Think model. olmo-thread The extended run used roughly 224 GPUs and ~125k H100 hours—about $250k at $2/hour—and still hadn’t clearly hit a plateau, with visible gains on hard benchmarks like AIME and SWE‑style coding tasks. rl-keep-going

All intermediate checkpoints plus the final 3.1 models (Think and 32B Instruct) are fully released, along with millions of RL completions and preference datasets for the community to study and reuse. release-links For anyone planning post‑training budgets, this is a strong data point that large‑scale RL on strong base models can remain in a productive regime far longer than the small 1–2‑week runs many labs standardize on, and that stable infra and evaluation may now matter more than squeezing a bit more pre‑training. It also provides rare transparency on end‑to‑end RL cost versus DeepSeek‑R1‑style efforts, which helps smaller orgs reason about where open RL is actually competitive. cost-audit-summary

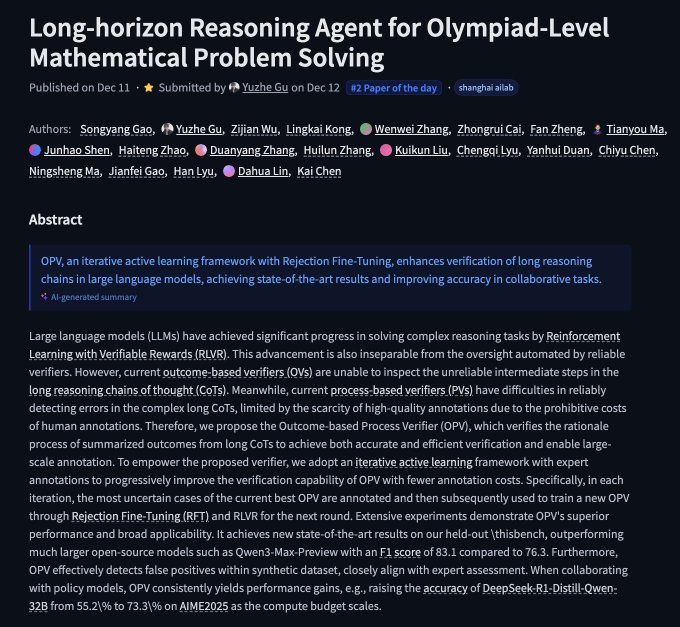

OPV verifier framework lifts Olympiad‑level math agent performance

Shanghai AI Lab and collaborators released a long‑horizon math agent that combines a reasoning LLM, a symbolic prover, and an Outcome‑based Process Verifier (OPV) to tackle 50 years of IMO‑style geometry and other Olympiad problems. paper intro OPV scores candidate rationale steps based on whether they eventually lead to a correct outcome, then iteratively improves via rejection fine‑tuning and RLVR, boosting DeepSeek‑R1‑Distill‑Qwen‑32B from about 55.2%→73.3% on AIME25 in the paper’s results while also cutting false positives on synthetic data.

The system shows that you can treat an LLM’s chain‑of‑thought as an object for separate training, using outcome‑grounded process checks rather than only rewarding final answers. For teams experimenting with verifier‑style RL (e.g., grading CoT, training critic models), OPV is a concrete template: maintain an outcome model, a process model, and an active‑learning loop that focuses annotation on the most uncertain reasoning traces. rl-verifier-summary

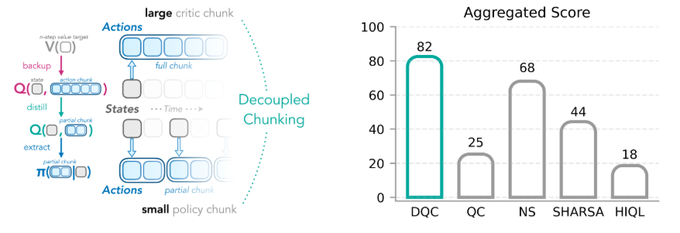

Decoupled Q‑Chunking scales RL with long critic chunks, short actors

A new RL method called Decoupled Q‑Chunking from Sergey Levine’s group argues that action chunking—standard in imitation learning—can also be made to work for reinforcement learning by separating how the critic and actor see time. method-summary The critic is trained on long chunks to capture delayed credit assignment, while the actor only executes short, reactive chunks, which avoids the usual instability when both try to operate on long sequences.

For continuous‑control benchmarks, the paper reports state‑of‑the‑art results and better wall‑clock efficiency, since the critic can reuse long‑horizon value estimates while the actor sticks to a low‑latency control loop.project page If you’re working on robotics or other control‑heavy agents, the idea is appealingly modular: you can keep your existing short‑horizon policy architecture and swap in a chunked critic, rather than redesigning the whole stack around hierarchical options.

🧪 Fresh models: sparse circuits, Devstral in Ollama, mobile UI agent

Smaller but notable releases: OpenAI posts a 0.4B ‘circuit‑sparsity’ model on HF, Mistral’s Devstral 2 family lands in Ollama, and Zhipu’s AutoGLM targets smartphone UI understanding/actions. Voice/TTS models are in the voice section.

Mistral’s Devstral 2 lands in Ollama with 24B and 123B variants

Ollama added Mistral’s new Devstral 2 coding models, so you can now run devstral-small-2 (24B) and devstral-2 (123B) locally with ollama run … or hit the 123B cloud flavor via devstral-2:123b-cloud. ollama launch That makes a top‑tier SWE‑bench performer available in the same workflow as your other GGUF models. devstral card

A community AWQ 4‑bit quantization of the 123B model reports ~400 tokens/s prefill and ~20+ tokens/s generation with a 200k context window on a single GPU, which is strong for repo‑scale agents and terminal bots. awq benchmark Practically, this means you can prototype Devstral‑backed coding agents on commodity hardware, then point the same prompts at Ollama Cloud or Mistral’s own endpoints when you’re ready to scale.

Zhipu’s AutoGLM targets smartphone UI understanding and on‑device agents

Zhipu (Z.ai) open‑sourced AutoGLM, a vision‑language model trained to understand smartphone screens and drive them like a human, and exposed it via free APIs on Z.ai plus partners Novita Labs and Parasail. autoglm announcement The model parses UI layouts, text, and icons, then outputs structured actions (taps, scrolls, text input), which is exactly what you need for autonomous mobile agents.

The release includes weights, docs, and examples for tasks like form filling, app navigation, and multi‑step flows (e.g., “open app, find booking, change date”), so you don’t have to bolt a generic VLM onto brittle template matching. zai product page For AI engineers this is a shortcut to building “operate my phone for me” agents without first collecting your own massive UI dataset or writing a custom screen‑understanding stack.

OpenAI drops 0.4B "circuit-sparsity" model on Hugging Face

OpenAI quietly published a 0.4B‑parameter circuit‑sparsity model on Hugging Face, aimed at researchy tasks like bracket counting and variable binding rather than general chat. release note It comes with Apache‑2.0 licensing, example Transformers code, and is small enough to run on a single decent GPU or even CPU for experiments. model card For engineers and interpretability folks this is a handy sandbox: it’s a sparse, toy‑scale model with clear algorithmic probes, so you can study circuits, sparsity patterns, or new training tricks without burning H100 hours. It also gives teams a concrete, OSS reference when they talk about “circuit‑level” behavior instead of only relying on opaque frontier checkpoints. hf repost

📉 Builder sentiment: speed/cost tradeoffs and model choices

The discourse itself is news: cancellations of ChatGPT Plus, debates over ‘slowness’ at xhigh, and practical model stacks (Opus 4.5 daily driver; GPT‑5.2 for hard audits). Complements the feature by focusing on adoption vibes, not evals.

Builders standardize on Opus 4.5 for speed, GPT‑5.2 for hard audits

Working engineers are converging on a mixed model stack where Anthropic’s Claude Opus 4.5 is the fast daily driver and GPT‑5.2 is reserved for planning and the nastiest bugs. One popular TL;DR recommends Opus 4.5 for routine coding, GPT‑5.2‑high to "plan, audit, fix bugs", and GPT‑5.2 Pro with a repo‑level prompt for the hardest problems coding stack summary.

Another developer’s pool looks similar: GPT‑5.2 (Thinking) as the most‑used model despite being "painful[ly] slow", Opus 4.5 as a top‑tier but riskier daily driver, a cheaper Composer‑1 for ultra‑fast edits, and Gemini 3 Pro for UI and design suggestions model pool breakdown. Others echo that 5.2 "pushes back harder" and one‑shots complex tasks but is dead slow, so they keep multiple tasks in flight to hide latency slow but capable. A radio‑firmware experimenter reports that GPT‑5.2 spent about an hour trying to design a custom protocol, found more bugs than Opus, but still failed the underlying hardware issue and ultimately didn’t earn a subscription switch away from Claude hour long attempt sticking with opus. At the extremes, some joke that xhigh "might as well be AGI because by the time it finishes my query, AGI will be here" xhigh slowness joke. For practitioners, this is a clear signal: 5.2 is increasingly the audit/planning specialist in the toolbox, not the default REPL.

Engineers push for cost‑ and latency‑aware evals, not xhigh‑only bragging

Following up on vals cost, where GPT‑5.2’s higher price per query was already a concern, more builders are questioning evals that ignore cost and wall‑clock time. Greg Brockman remarks that "useful lifetime of a benchmark these days is measured in months", which several people quote as they argue that static leaderboards say less and less about real‑world value benchmark lifetime quote benchmark retweet.

One detailed critique notes that ARC‑AGI‑2 scores for GPT‑5.x rise as you crank up reasoning effort, but the cost per task also jumps from $0.73 for GPT‑5‑high to about $1.39 for GPT‑5.2‑high, and even more for xhigh, so "you would expect that performance… would improve with additional test‑time compute" arc cost commentary. Another thread accuses OpenAI of "benchmaxing" with xhigh (~100k thinking tokens) while simultaneously raising list prices by ~40% and argues that METR‑style horizons should always be reported alongside total tokens and elapsed time, not in isolation pricing and xhigh rant. Counter‑voices point out that GPT‑5.2’s big reliability gains—like ~34–47% fewer hallucinations—are the real upgrade and matter more than leaderboard deltas, but they accept that those benefits must be justified against much longer latencies hallucination drop thread. The net effect is growing pressure on labs and independent benchmarkers to treat cost, latency and stability as first‑class metrics, not afterthoughts.

Users start cancelling ChatGPT Plus over GPT‑5.2 ‘vibe’ shift

Some long‑time ChatGPT Plus subscribers are cancelling despite GPT‑5.2’s higher capability, saying the product no longer feels like an upgrade. One user shares the in‑app retention dialog offering a free extra month of Plus if they stay, but still confirms cancellation, writing "It's not the same anymore" and that nerfed responses "don't work" for learning technical topics plus cancel screenshot nerfed response comment.

Another builder notes that Reddit threads describe GPT‑5.2 as "flat", overly safety‑sensitive and "treats adults like preschoolers", with many casual users explicitly missing GPT‑4o’s tone and freedom reddit sentiment reddit typo fix. For teams shipping foundation‑model products, the takeaway is that pushing hard toward enterprise‑grade safety and consistency can create real churn on the consumer side if the overall personality and perceived autonomy step backward, even when raw intelligence goes up.

Multi‑agent handoff hype meets skepticism over token waste and degradation

As multi‑agent orchestration tools compete on features like automatic handoff between models (e.g., Opus for planning, Gemini for frontend, Codex for backend), some builders are pushing back that these patterns mostly burn tokens and often lower answer quality. One engineer notes that while it’s possible to route sub‑tasks between several LLMs, "your thinking tokens are likely gone, output of each model will be worse" once you start chaining them, and complains that multi‑agent harness vendors rarely surface this trade‑off multiagent cost warning.

In a separate reply, the same author snarks about users bragging "I use opus to plan, gemini for the frontend and codex for the rest" and responds with a skeptical "mhmmm", implying that orchestration bragging often outruns measured benefit handoff skepticism. This real‑world sentiment lands shortly after Google’s own research on scaling agent systems concluded that more agents are not always better and that coordination frequently amplifies errors or adds overhead unless the task structure really demands it multiagent study summary multi-agent study. Combined with feedback‑bucket analyses showing that many user complaints come from assistants "not finding the result" or lacking context rather than raw reasoning agent feedback chart, the practical advice is: start with a strong single agent plus solid retrieval, and add specialized sub‑agents only when profiling shows they’re worth the extra tokens and latency.

Open‑source and smaller models gain favor as teams chase 90% cost cuts

Cost pressure is driving a noticeable shift in how builders talk about model strategy. Hugging Face CEO Clément Delangue highlights Pinterest’s report that it saved 90% on AI model costs by leaning on open‑source systems instead of "brand‑name" proprietary APIs, and argues that we’re at the peak of pure proprietary API dominance, with attention and revenue about to rebalance toward open weights and custom training open source savings peak proprietary claim.

Some individual practitioners echo this framing when they look at GPT‑5.2’s 40% list‑price increase and the heavy token burn of xhigh reasoning, questioning whether marginal capability gains justify the spend when smaller or open models can handle a large slice of everyday workloads pricing frustration. Others point to fast, CPU‑friendly embedding models like Luxical‑One that deliver ~97× Qwen GPU throughput for retrieval as concrete examples of how stepping off the frontier can save money without wrecking quality for a given task luxical release. The underlying sentiment is pragmatic: use frontier systems where they win clearly (hard reasoning, long‑context agents), but aggressively push everything else down to cheaper, often open, components to keep unit economics sane.

Builders tire of ‘X is finished’ lab wars and focus on fit‑for‑purpose models

A chunk of AI Twitter is openly annoyed at zero‑sum lab fandom, with one engineer calling out how every Google drop gets spun as "openAI is finished", only for the script to flip to "google is finished" a week later when OpenAI ships something, and labeling both sides of the shilling "pathetic" fanboy complaint. Others say this is missing the plot: models are diverging in feel and strengths over time, which is good, because it lets teams match specific jobs—coding, research, multimodal UI—to the model that fits, rather than pretending there’s a single universal winner model divergence comment.

Ethan Mollick adds that frontier models across US labs and Chinese/French open‑weight releases are still surprisingly similar in overall ability and prompt adherence, so choosing between them is less about abstract IQ and more about pricing, latency, tools, and safety trade‑offs frontier similarity note. Another commenter backs Demis Hassabis’s stance that scaling is still paying off and probably won’t magically stop at some near‑term wall, but warns that whether a given bump in capability is worth adopting depends on economics and workflow, not fan loyalty scaling still useful. For practitioners, the mood is shifting away from "who’s ahead" arguments and toward portfolio thinking: pick what works today, swap it out next quarter if the mix of speed, cost and reliability changes.

🤖 Embodied stacks: video world models, monocular mocap, care robots

Robotics items cluster around sim‑to‑real and motion: DeepMind evaluates Veo‑based world models vs ALOHA‑2 trials (r=0.88), MoCapAnything tracks 3D skeletons from monocular video, and RobotGym highlights assistive care concepts.

DeepMind validates Veo-based world models as reliable stand‑in for robot trials

DeepMind released a robotics paper showing that policies evaluated in a Veo-based video world simulator correlate strongly (r ≈ 0.88) with real‑world success on over 1,600 ALOHA‑2 bimanual robot trials, letting teams train and select policies without burning hardware time first robotics thread. This pushes simulation‑to‑real from “rough proxy” toward a quantitative, benchmarked tool for policy search and regression testing, and follows earlier work on more open‑ended robot reasoning open-ended agents.

The key result: a single Veo‑powered world model can predict which policies will succeed on real hardware across a wide set of tasks and even out‑of‑distribution conditions, instead of needing per‑task simulators ArXiv paper. For AI engineers, this means you can iterate policies, curriculum schedules, and reward shaping in simulation with some confidence that gains will transfer, then reserve expensive ALOHA‑class runs for final verification and fine‑tuning. For infra leads, it’s an early data point that investing in video‑trained world models can pay off as an evaluation primitive, not just for pretty generative demos. The interesting open question is how far this approach generalizes beyond table‑top bimanual manipulation into mobile and human‑interactive settings, where model errors and safety margins matter even more.

MoCapAnything offers unified 3D motion capture from a single RGB video

The MoCapAnything project introduced a unified 3D motion capture system that recovers arbitrary skeletons from monocular videos, with demos of walking, jumping and other actions side‑by‑side with the reconstructed 3D pose model announcement. For robotics and graphics teams, this kind of monocular mocap turns cheap phone footage into structured motion data that can drive humanoid controllers, animation rigs or imitation‑learning pipelines without multi‑camera stages or markers.

Because it supports arbitrary skeletons rather than a single human template, you can in principle retarget captured motion to different robot morphologies or game characters without bespoke per‑rig tooling. That lowers the barrier to collecting diverse motion priors for legged robots, manipulators or hybrid forms, and makes it easier to build datasets where the same behavior is expressed across multiple embodiments. The next step for practitioners will be stress‑testing it on non‑lab footage (occlusions, clutter, motion blur) and checking whether the 3D estimates are clean enough for stable control when fed into model‑based RL or trajectory optimization stacks.

RobotGym’s Qijia Q1 sketches a dual wheelchair–humanoid robot for elder care

RobotGym’s Qijia Q1 concept shows a robot that doubles as a powered wheelchair and mobile helper for elderly users, handling mobility while also manipulating objects like food in a microwave robotgym clip. The demo is less about polished autonomy and more about articulating the motion, compliance, and human‑interaction requirements if you want a single platform that can both carry a person and work around them safely in tight domestic spaces.

For embodied‑AI builders, this is a concrete target domain that stresses the whole stack: robust navigation in clutter, safe close‑contact manipulation, intent recognition from speech and gesture, and fail‑safe behavior when something goes wrong concept summary. It also highlights why simulation and world models will matter here: you can’t cheaply iterate on failure modes when a fall or collision injures a frail user, so you need high‑fidelity virtual training and testbeds before deploying new behaviors on hardware. Teams thinking about care robots can use Qijia‑style concepts as a design foil—map out what sensors, actuation, and policy abstractions you’d need to cover real ADLs (activities of daily living), then decide which parts are tractable with today’s vision‑language‑action models and which still need bespoke control.

🛡️ Policy & IP: one rulebook push and content licensing friction

Policy front tightens: a White House EO seeks a single national AI framework (DOJ task force, funding levers), and Disney sends Google a cease‑and‑desist over alleged Gemini IP infringement. Excludes any GPT‑5.2 system card items (feature).

US executive order moves to preempt state AI laws with one national rulebook

The White House has now signed an AI executive order that directs the DOJ to form a task force within 30 days to challenge state AI regulations and pushes Commerce to publish a list of “onerous” state AI laws within 90 days, aiming for a single federal framework for AI governance policy summary. This follows the earlier signal toward a unified regime national framework and explicitly leans on interstate commerce arguments to override state‑by‑state rules, with TechCrunch noting that startups may face a legal limbo while court challenges play out policy summary, white house order .

For AI builders, this means compliance strategies built around the strictest state rules (like deepfake labels or model registry mandates) may get rewritten in the medium term, but the order does not instantly void those state laws—it sets up litigation and federal standards that could take years to stabilize. Smaller teams selling into regulated sectors (finance, healthcare, legal) are especially exposed: they may still need to honor current state requirements while also preparing for a more centralized, possibly more scale‑friendly, federal rulebook that Washington is trying to force through.

Disney’s cease‑and‑desist accuses Google Gemini of massive‑scale IP misuse

Disney has sent Google a cease‑and‑desist letter accusing it of using Disney, Pixar, Marvel and Star Wars IP at “massive scale” to train Gemini and then promoting infringing outputs, including a Gemini‑driven “figurine” image trend that allegedly featured unlicensed Disney characters cnd summary. Coming the same week as Disney’s $1B equity and licensing deal with OpenAI and Sora, this escalates the split strategy noted earlier—licensing its catalog to one lab while threatening legal action against another disney letter, techcrunch article.

If Disney pushes this beyond demand letters, it could become one of the first big test cases on whether training and actively marketing branded generations fall inside US fair use, and it will make other model providers think harder about trending prompts that center specific franchises. For AI leaders, the risk isn’t only training data: front‑of‑house features (prebuilt prompts, social trends, or branded "figurine" workflows) that encourage users to crank out recognizable IP are now very much in the litigation blast radius.

🧭 Search & embeddings: CPU‑fast lexical embeddings, eval hooks

A CPU‑first turn for embeddings: Luxical approximates transformer embeddings using lexical features + tiny ReLU nets, hitting 6,803 docs/s on CPU (~97× Qwen). Integrations for Sentence Transformers aim to bring quick MTEB checks.

Luxical-One debuts as CPU-fast lexical embedding model ~97× Qwen throughput

DatologyAI released Luxical-One, a lexical+tiny-MLP embedding model that approximates transformer embeddings but runs at ~6,803 docs/s and ~23 MiB/s on an M4 Max CPU, about 97× the throughput of a Qwen3‑0.6B GPU baseline on their benchmark setup. luxical announcement The model uses TF‑IDF-style lexical features fed into a small ReLU network trained contrastively, giving transformer-like semantic behavior while staying entirely CPU-friendly. throughput numbers

For builders this means document similarity, clustering, and classification pipelines that used to require GPUs can be moved to cheap CPUs or laptops without a huge quality hit, freeing GPU budget for generation models. blog post Luxical-One is published under Apache‑2.0 on Hugging Face with simple transformers-style loading code and examples for immediate use in text search and analytics stacks. model card Analysts like @tomaarsen argue this fills an underappreciated niche between static TF‑IDF and full attention-based encoders, especially where throughput, latency, or cost are the primary bottlenecks. embedding overview

Luxical-One gains Sentence Transformers integration and path to MTEB

Community contributors wired Luxical-One into the Sentence Transformers ecosystem, so it can now be used via the familiar SentenceTransformer API and dropped into standard retrieval and clustering workflows. hf integration note This integration means Luxical’s CPU-speed embeddings can soon be evaluated on MTEB and other common benchmarks, not just Datology’s in-house doc-half-matching tasks. retrieval question The current Luxical evals focus on matching two halves of the same document, where the model already performs strongly, but @tomaarsen notes that retrieval benchmarks are the real test for LLM-era use cases and is preparing MTEB runs using the new adapter. retrieval plans For practitioners, the update turns Luxical-One from an interesting standalone demo into something you can swap into existing ST-based pipelines with minimal code changes, then directly compare speed/quality trade-offs against MiniLM, E5, or Qwen encoders using your existing eval harnesses. hf discussion