Google’s BATS lifts BrowseComp accuracy to 24.6% – cuts agent cost 31%

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Google’s new BATS framework is the first agent paper this week that feels directly aimed at your cloud bill. The lightweight Budget Tracker plugin alone hits ReAct‑level accuracy on web tasks using 10 instead of 100 tool calls and trims overall cost by about 31%, simply by exposing live “query budget remaining” counters inside the agent’s reasoning loop.

Full BATS orchestration pushes harder on quality. On BrowseComp, a Gemini‑2.5‑Pro agent with BATS scores 24.6% vs ReAct’s 12.6% under the same 100‑tool cap; BrowseComp‑ZH jumps to 46.0% vs 31.5%, and HLE‑Search to 27.0% vs 20.5%. Planning and self‑verification both become budget‑aware, and the paper introduces a unified cost metric that blends token spend and tool‑call prices so you can reason in dollars, not abstract “steps.”

DAIR.AI is already teaching BATS in its agent courses, which is usually a sign a pattern is graduating from research toy to production norm. Paired with Artificial Analysis’ token‑usage charts showing GPT‑5.2‑xhigh burning nearly 2× Sonnet’s tokens for similar work, the direction of travel is clear: agents that ignore budgets are going to feel as dated as models that ignore context windows. Time to make “budget‑aware by design” part of your standard agent spec.

Top links today

- BATS budget-aware tool-use agents paper

- Imaginary RoPE extension for long context

- BEAVER deterministic LLM rule verifier paper

- ARTEMIS AI penetration testing agents paper

- Tom’s Hardware AI semiconductor giga cycle analysis

- Bond Capital trends in artificial intelligence report

- Statista AI investment hubs by region chart

Feature Spotlight

Feature: Budget‑aware agent scaling (BATS)

Google’s BATS makes agents budget‑aware, doubling BrowseComp accuracy vs ReAct under equal budgets and hitting ReAct‑level accuracy with 10× fewer tool calls—clear design guidance for reliable, cheaper web agents.

Cross‑account coverage converges on Google’s BATS/Budget Tracker paper showing that making agents explicitly aware of tool‑call budgets lifts accuracy and slashes cost; mostly web‑agent results and concrete deltas vs ReAct.

Jump to Feature: Budget‑aware agent scaling (BATS) topicsTable of Contents

🎯 Feature: Budget‑aware agent scaling (BATS)

Cross‑account coverage converges on Google’s BATS/Budget Tracker paper showing that making agents explicitly aware of tool‑call budgets lifts accuracy and slashes cost; mostly web‑agent results and concrete deltas vs ReAct.

Google’s BATS makes web agents budget‑aware, slashing tool use and cost

Google researchers released the BATS framework and its lightweight Budget Tracker plugin, showing that making web agents explicitly aware of their remaining search/browse budget can match or beat ReAct while using an order of magnitude fewer tool calls and ~31% less cost. paper thread Budget Tracker alone reaches ReAct‑level accuracy with 10 vs 100 tool calls (−40.4% search, −21.4% browse, −31.3% overall cost) by surfacing live counters like “query budget remaining” inside the agent’s reasoning loop. detailed recap

Full BATS orchestration goes further by making planning and self‑verification budget‑aware: on BrowseComp, a Gemini‑2.5‑Pro agent with BATS hits 24.6% accuracy vs ReAct’s 12.6% under the same 100‑tool cap, with similar gains on BrowseComp‑ZH (46.0% vs 31.5%) and HLE‑Search (27.0% vs 20.5%). paper thread The paper also proposes a unified cost metric that combines token spend and tool‑call cost, so teams can reason about accuracy vs money instead of raw hit rate alone. ArXiv paper DAIR.AI is already using BATS as a teaching example in its agent courses, signaling that “budget‑aware by design” is likely to become a standard pattern for serious production agents rather than an optimization afterthought. dair ai courses

📊 Frontier scoreboards and token economics

Fresh, mixed leaderboard snapshots and cost/latency signals; mostly Artificial Analysis indices and niche suites. Excludes BATS (feature).

AA‑Omniscience Index finds Gemini and Opus more reliable than GPT‑5.2

Artificial Analysis’ new AA‑Omniscience Index, which mixes knowledge coverage and hallucination penalties, scores Gemini 3 Preview (high) at +13 and Claude Opus 4.5 at +10, while GPT‑5.1 (high) sits near neutral at +2 and GPT‑5.2 (xhigh) drops to −4, meaning it produced more incorrect than correct answers under this metric AA reliability chart.

This lines up with earlier factuality work like Google’s FACTS leaderboard, where top models were only ~69% factually correct in realistic settings Factuality 69pct. The point is: GPT‑5.2’s strongest reasoning tier may win on hard problems, but for "answer this and don’t be wrong" workloads, Gemini 3 and Opus still look safer on this particular board. If you run retrieval‑light Q&A, customer support, or analyst workflows where silent failure is expensive, this is a decent argument to route factual questions to Gemini/Opus and reserve GPT‑5.2‑xhigh for deep audits or coding.

Artificial Analysis Index ties Gemini 3 and GPT‑5.2 at the top

Artificial Analysis’ latest Intelligence Index snapshot shows Gemini 3 Preview (high) and GPT‑5.2 (xhigh) tied for first place at a score of 73, ahead of Claude Opus 4.5 and GPT‑5.1 at 70 on a suite of ten hard reasoning and coding evals (14 of 355 models shown) AA index chart.

For builders, this reinforces the sense of a three‑way frontier rather than a single dominant model: Chinese models like DeepSeek V3.2 (66) and Kimi K2 Thinking (67) sit just behind the US labs in this view AA index chart. Compared to CAIS’ Text Capabilities Index, which also had Gemini 3 Pro slightly ahead of GPT‑5.2 on average capability Text index, the AA scores suggest a similar story: OpenAI has caught up on many reasoning tasks, but Google’s stack still looks marginally stronger overall on public multi‑task benchmarks. For teams choosing a default, the main takeaway is that all three frontier models live in the same band on these mixed suites, so price, latency and tool surfaces will matter more than a 1–3 point gap here.

AA token-usage chart exposes big efficiency gaps between frontier models

Artificial Analysis also published a token‑usage breakdown for running its Intelligence Index, showing GPT‑5.2 (xhigh) burning ~86M output tokens across the full eval set versus ~48M for Claude Opus 4.5 and ~42M for Claude 4.5 Sonnet; Gemini 3 Pro Preview (high) comes in around 92M Token usage chart.

This means GPT‑5.2‑xhigh uses about 2× the tokens of Sonnet and roughly 1.8× Opus for the same workload, even before you apply higher per‑token pricing. Earlier GDPval‑AA work had already flagged GPT‑5.2 as strong but heavy on tokens GDPval ELO; this new view makes the trade‑off concrete across multiple models. For infra leads and agent builders, the implication is simple: if you mirror AA‑style long, tool‑rich runs in production, token efficiency is now as important as benchmark score, and routing some tasks to leaner models like Sonnet or Opus can cut evaluation and monitoring costs dramatically without leaving the frontier band on capability.

CritPt benchmark shows GPT‑5.1 and GPT‑5.2 at 0% on this suite

Artificial Analysis’ CritPt leaderboard, aimed at stress‑testing models on a specific consistency and safety suite, reports GPT‑5.1 (high) and GPT‑5.2 (xhigh) both at 0% success, while Gemini 3 Pro Preview (high) reaches 9.1% and DeepSeek V3.2 Speciale 7.4% CritPt chart.

Commenters highlight the "0% / 0%" result as a red flag for how GPT‑5.x behaves under these adversarial prompts, especially since other strong models at least clear a few percent CritPt chart. It doesn’t mean GPT‑5.2 can’t solve these tasks when hand‑prompted; it means its default policy is either refusing, mis‑interpreting, or failing in ways that this benchmark deems incorrect. For teams evaluating safety and alignment, CritPt is a reminder that you can’t read frontier quality off MMLU‑style scores alone; you need targeted suites that poke at specific failure modes, and those may reshuffle your routing choices even among top‑tier models.

🧰 Skills, agent stacks and coding workflows

Practical agent/coding tooling: Codex experimental skills, agent blueprints, LangChain multi‑agent apps, Azure samples, and terminal UX. Excludes BATS (feature).

Codex CLI gets experimental “skills” with AGENTS.md and SKILL.md patterns

OpenAI’s Codex CLI quietly picked up experimental skills support (codex --enable skills), letting teams package reusable capabilities with their own SKILL.md metadata, and tying into a broader AGENTS.md convention for agent setups. Following up on the earlier skills launch in ChatGPT and Codex skills rollout, this drop comes with GitHub docs plus Anthropic’s parallel Agent Skills write‑up, so there’s finally a shared language for describing what an agent can do and when to call it.

Codex now treats skills as discoverable, progressively disclosed tools: the agent first reads light metadata (name, description), then pulls in full instructions and scripts from SKILL.md only when relevant, mirroring Anthropic’s progressive disclosure design for Claude skills codex skills update agents guide. That pairs naturally with AGENTS.md, a "README for agents" file that encodes build, test, and style rules specifically for coding agents instead of humans, so the same repo can stay clean for developers while being richly instrumented for AI codex skills update. Anthropic’s engineering blog walks through how their Agent Skills system bundles procedures, tools and prompts into directory‑scoped skills that Claude can load on demand, which should interoperate conceptually with Codex skills even if implementations differ agent skills blog. For AI engineers, the upshot is you can start standardizing how you describe tools and workflows across Codex, Claude and other agents instead of stuffing everything into ad‑hoc system prompts.

Azure AI samples repo connects local Ollama and LangChain.js to Azure serverless RAG

Microsoft published a consolidated Azure AI Samples repository that walks through a full path from local prototyping with Ollama to production RAG workloads on Azure using LangChain.js, including a serverless reference azure samples.

The main diagram shows a three‑step flow: develop locally with Ollama, integrate via LangChain.js, then push to Azure for RAG/serverless deployment across Python, JS, .NET and Java azure samples. For teams supporting multiple languages or juggling on‑prem and cloud, this repo gives you concrete code for wiring the same agent or RAG chain across environments, rather than re‑implementing each time github repo. It’s especially relevant if you want to keep early experiments cheap and local but still have a clear route to a hardened Azure service once something sticks.

LangChain community ships AI Travel Agent template with six tools and cloud deploys

LangChain’s community released an AI Travel Agent reference app built in Streamlit that wires a single agent into six specialized tools—weather, web search, calculator, YouTube, currency conversion, and cost breakdown—along with recipes for deploying to Streamlit Cloud, Heroku, and Railway travel agent summary.

The architecture keeps things simple: one central agent orchestrates calls to the tools, then the Streamlit UI renders both chat and structured results like cost tables and video suggestions in one place travel agent summary. For builders, this is a concrete pattern for “agentic dashboards”: instead of reinventing tool plumbing, you can fork the repo, swap in your own APIs (hotel search, rail schedules, loyalty programs), and ship a usable vertical agent in a weekend github repo. It’s especially useful if you’re still figuring out when to call tools and how to present their outputs cleanly to non‑technical users.

Synapse Workflows shows a LangGraph multi‑agent stack for search, productivity and data

A new LangChain community project, Synapse Workflows, demonstrates a practical three‑agent stack coordinated by LangGraph: a Smart Search agent for web research, a Productivity agent for email and calendar tasks, and a Data Analysis agent for working over datasets, all feeding a unified response back to the user synapse summary.

The diagram makes the pattern clear: a single natural‑language front door hands the query to LangGraph, which then fans out to specialized agents and re‑merges their outputs before replying synapse summary. For AI engineers thinking about “many small agents vs one big one,” this gives you a concrete blueprint, including where to put orchestration (LangGraph), how to segment responsibilities (research vs productivity vs analytics), and how to keep the UX sane by returning one combined answer instead of three competing chats github repo. It’s a good starting point if you’re trying to bolt agents into existing productivity tools rather than building a new app from scratch.

Cursor vs Droid comparison shows how IDE instructions quietly change Codex behavior

A side‑by‑side experiment running Codex Max on the same backend rewrite prompt in Cursor and Droid found that the IDEs’ hidden system instructions materially changed how the "same" model planned and executed the task ide comparison.

Ray Fernando used Claude Opus to turn a fuzzy migration idea into a structured multi‑step spec, then fed that spec to Codex Max inside both tools; Droid’s “spec mode” produced a different, often more detailed plan than Cursor despite identical model and prompt text ide comparison. The takeaway for engineers is uncomfortable but important: when you evaluate models via hosted IDEs, you’re also evaluating each product’s opinionated system prompts, guardrails, and tool wiring youtube replay. Serious teams will need either raw API access or a way to inspect and control those hidden layers if they want reproducible behavior across environments.

JustHTML becomes a flagship case study for serious agent‑assisted coding

Emil Stenström’s JustHTML library—3,000 lines of pure‑Python HTML5 parser that passes 9,200 html5lib tests—was built largely by coding agents (Claude Opus/Sonnet, Gemini 3 Pro) orchestrated via VS Code’s agent mode, and now serves as a detailed 17‑step “vibe engineering” case study justhtml overview.

Instead of one‑shot generation, Emil used agents as junior collaborators: drafting modules, refactoring against failing tests, and iterating under tight human review until the library hit 100% test coverage and spec compliance case study blog. Simon Willison highlights this as the opposite of “vibe coding”: professional‑grade work where agents are pointed at small, fully specified tasks inside a human‑designed architecture workflow thread. For AI engineers and leads, it’s a rare, end‑to‑end look at what actually works when you want an agent to help ship a serious library rather than toy scripts.

Unix-style composable tools emerge as a pattern for coding agent stacks

One engineer described building their agent environment around a Unix philosophy of many small, focused tools—each with its own CLI and blurb in AGENTS.md—instead of a single monolithic “do everything” framework composable tools thread.

Their setup includes separate utilities for agent mail, task selection, history search, linting, sensitive command handling, tmux/session control, and memory, all loosely integrated so each can work alone or together composable tools thread. In this model, AGENTS.md becomes an “OS for agents,” installing and describing which tools to use on a per‑project basis and how, rather than cramming everything into one mega‑agent small tools image. For AI engineers experimenting with Codex, Claude Code, or Antigravity‑style IDEs, this suggests a pragmatic direction: treat agents as shells that orchestrate a toolbox of well‑scoped commands, instead of trying to cram your entire workflow into one giant prompt.

Warp terminal lets agents pull live command output via @‑references

Warp added a small but powerful feature to its agent mode: you can now type @ and fuzzy‑select any previous terminal output block to pull straight into the agent’s context, including logs from still‑running dev servers warp feature.

This builds on Warp’s earlier slash‑command and MCP work for agent workflows warp commands, but tackles the real pain point of “how do I get the model to see exactly this log chunk without copy‑pasting.” In practice, it turns the terminal buffer into a lightweight context store—run tests or build scripts, then @‑mention the failing chunk so the agent can reason over it without re‑executing commands advent mention. If you’re experimenting with CLI‑first coding agents, this is the kind of UX glue that makes them usable day to day instead of relegated to demos.

Yutori’s Scouts opens up a push‑based browser research agent built on SoTA web use

After a few months in closed beta, Yutori opened general access to Scouts, a product built on their state‑of‑the‑art browser‑use agents that continuously monitor the web and push updates to users instead of waiting for ad‑hoc queries scouts launch.

Scouts rethinks the “agent + browser” stack as a long‑running subscription: you define what to watch, and the agent does persistent browsing, summarization, and alerting on your schedule, reducing the friction of spinning up one‑off deep research runs scouts launch. For analysts and PMs drowning in change logs, filings, or ecosystem news, this points toward a future where research agents feel more like RSS for the modern web—opinionated feeds powered by autonomous browsing rather than manual Google searches.

FAANG engineer outlines a realistic AI‑infused coding workflow from design doc to prod

A FAANG software engineer shared a step‑by‑step description of how their team uses AI in a fairly traditional enterprise workflow: rigorous design docs and reviews up front, then AI agents for test‑first development, implementation, and even code review before staging and production faang flow.

The key takeaway is that AI isn’t replacing process; it’s slotted into existing gates. The engineer reports about a 30% speedup from initial feature proposal to production, largely by having the agent draft tests and boilerplate while humans focus on architecture and edge cases faang flow. For leaders trying to calibrate expectations, this is a grounded picture: LLMs sit inside design‑review‑implement‑review‑deploy loops rather than running free as autonomous coders, and the wins come from better test coverage and faster iteration inside that structure.

🧪 Agentic coding: from papers to production‑grade code

Research artifacts showing big jumps by structuring information and constraints; continues yesterday’s coding‑agent thread with new results and workflows. Excludes budget‑aware scaling (feature).

DeepCode agent surpasses human PhDs and commercial tools at paper-to-code

DeepCode, a multi‑agent coding framework from HKU, treats "turn a paper into a repo" as a channel‑optimization problem and hits a 73.5% replication score on OpenAI’s PaperBench, beating Cursor (58.4%), Claude Code (58.7%), and Codex (40.0%) as well as prior scientific agents like PaperCoder at 51.1%. paper thread

It does this by aggressively structuring information flow: a planner first distills messy PDFs into a blueprint (file tree, components, verification plan), then a code agent generates files while a stateful code memory keeps cross‑file consistency, RAG fills in standard patterns, and a validator uses runtime feedback to iteratively repair failures until tests pass. paper thread On a tougher 3‑paper subset rated by ML PhD students from Berkeley, Cambridge, and CMU, humans averaged 72.4% replication while DeepCode scored 75.9%, suggesting that with the right scaffolding agents can now match or edge out expert reproduction workflows. paper thread The framework is fully open source, with implementation details and benchmarks in the authors’ release.ArXiv paper For engineers building coding agents, the takeaways are clear: spend less effort chasing bigger models, and more on compressing specs into blueprints, maintaining structured code memory, and closing the loop with execution‑based self‑correction.

Chain of Unit-Physics bakes physics tests into multi-agent code generation

Researchers at the University of Michigan propose Chain of Unit‑Physics, a multi‑agent framework that encodes domain expertise as physics unit tests and uses them to steer scientific code synthesis toward solutions that obey conservation laws and bounds. paper explainer A supervisor agent plans the solution, a code agent writes multiple candidate programs, and diagnostic and verification agents run them inside a sandbox—installing libraries, executing code, inspecting errors—then iterate until one implementation passes all physics‑based checks. workflow details Those checks look like what a senior scientist would insist on: temperatures, pressures, and densities must stay within physical limits; shock waves must conserve energy; equilibrium states must behave correctly; mass fractions must sum to one, and similar invariants. physics tests On a challenging combustion problem where closed‑weight models and generic code agents failed, Chain of Unit‑Physics converged in 5–6 iterations, matched a human expert’s implementation with ~0.003% numerical error, ran about 33% faster and used ~30% less memory than baselines, at roughly the same API cost as mid‑sized closed models. results recap Because failures are routed through explicit tests, the authors note you can tell whether a bug lies in the generated code or in the test suite itself, which makes the system far easier to debug than opaque RL‑fine‑tuned agents. diagnosis notes For anyone trying to push agentic coding into safety‑critical domains, the paper is basically a recipe: turn your invariants into executable tests, then let a sandboxed, multi‑agent loop search the implementation space until reality‑checked code emerges. ArXiv paper

🧠 Long‑context and deterministic verification advances

Model‑science updates on position encoding and safety checking; mostly preprints with clear engineering implications for memory and guardrails. Excludes BATS (feature).

BEAVER offers deterministic safety bounds for LLM rule‑following

The BEAVER framework from UIUC gives teams a deterministic way to bound how often an LLM will obey a prefix‑closed rule (like “never output an email address”) under its full sampling distribution, achieving 6–8× tighter probability bounds and surfacing 3–4× more high‑risk prompts than rejection‑sampling baselines at the same compute budget paper summary ArXiv paper.

Instead of sampling many completions and hoping you saw the bad ones, BEAVER builds an explicit tree of safe prefixes, expanding the highest‑probability unfinished prefixes using next‑token probabilities from the model and pruning branches as soon as a prefix violates the rule, which only works for prefix‑closed constraints where any extension of a bad prefix is also bad paper summary. At each step it maintains a lower bound (probability mass of finished safe strings) and an upper bound (treating all unfinished prefixes as potentially safe), so you can stop early and still have mathematically sound guarantees about compliance rates.

They demonstrate this on math‑validity constraints, email‑leakage rules, and secure‑coding policies, showing that naive sampling can dramatically underestimate violation probability while BEAVER pinpoints more genuinely risky prompts for red‑teaming paper summary. For safety and platform teams, the immediate implication is a new class of offline audits: you can take an LLM and a production safety spec, then compute conservative bounds on how often the model will slip, rather than trusting a handful of random probes—at the cost of more structured constraint design (prefix‑closed rules) and some nontrivial tree‑search engineering.

RoPE++ keeps imaginary attention to halve KV cache with 64k+ context

RoPE++ (“Beyond Real”) extends rotary position embeddings by preserving the imaginary part of the complex dot product and turning it into extra attention heads, letting long‑context LLMs cut KV‑cache memory by about 50% while maintaining similar accuracy out to 64k tokens in reported benchmarks paper thread ArXiv paper.

Standard RoPE only uses the real component of the complex inner product to encode relative position, effectively throwing away half the positional signal; RoPE++EH instead feeds the imaginary component into separate heads that naturally put more weight on distant tokens, which stabilizes attention over long ranges without growing model width paper thread. For engineers, the headline is that you can either keep context length fixed and halve KV‑cache memory (cheaper inference, more sequences per GPU) or hold memory constant and extend usable context, with no architectural changes beyond how you parameterize rotary and how you shard KV. The catch is that this isn’t a drop‑in trick for existing checkpoints: you need to train or at least heavily finetune with the new embedding scheme, and you’ll want to validate that your deployment stack (kernels, KV layout, caching) understands that different heads now play distinct “near vs far” roles instead of being fully symmetric.

🏗️ AI infra cycle, memory bottlenecks and export risk

Infra economics and policy affecting AI capacity: giga‑cycle demand, HBM/packaging, and proposed H200 export limits. Excludes other safety/policy items.

AI-driven semiconductor “giga cycle” pushes chips toward ~$1T and HBM toward $100B

Creative Strategies and Tom’s Hardware argue AI has kicked the whole semiconductor stack into a "giga cycle", with total chip revenue projected to climb from about $650B in 2024 toward ~$1T by 2028–2029 and High Bandwidth Memory (HBM) growing from ~$16B in 2024 to over $100B by 2030 giga cycle summary. This builds on earlier estimates of a $10T AI data center buildout data center boom, but adds the key detail that HBM and advanced packaging (TSMC’s CoWoS capacity is projected to expand >60% from late‑2025 to late‑2026) will be the real choke points, not just GPUs themselves.

For infra and model teams, this means memory footprints and bandwidth per FLOP are going to be priced and rationed: architectures that squeeze HBM (Mixture-of-Experts, quantization, KV cache compression) and that can tolerate slower or tiered memory will age better than brute‑force dense models. It also suggests that supply risk is migrating down the stack to HBM vendors and advanced packaging lines, so multi-foundry, multi-memory vendor strategies will matter as much as “which GPU” when you plan 2027–2030 capacity tom hardware article.

US bill seeks 30‑month halt on Nvidia H200 export licences to China

A bipartisan group of six US senators has proposed a bill that would bar the Commerce Department from issuing export licences for Nvidia’s H200 GPUs to China for 30 months, directly challenging a recent decision that allowed controlled H200 sales h200 export article. Following up on earlier talk of expanding H200 output for Chinese customers H200 China output, lawmakers argue Commerce leaned too heavily on Huawei’s current capabilities and want to see the technical basis for the approval.

Representative John Moolenaar notes Huawei’s 910C was fabbed at TSMC and is now blocked, and suggests its next 910D part—likely built domestically—could be a step backward, undermining the logic that Chinese chips rival H200. Nvidia counters that cutting H200 licences could cost it “tens of billions” in China revenue and threaten thousands of US jobs h200 export article. For anyone depending on H200‑class ramps, this is a clear policy risk: treat China‑bound capacity as highly uncertain, and expect global supply ripples if Nvidia has to redirect or redesign SKUs to stay within new rules ft export report.

Broadcom’s $11.1B quarter underscores how AI pays for the “plumbing”

Broadcom’s semiconductor segment hit about $11.1B in the latest quarter, and the company is leaning into its role as AI “plumbing”: co‑developing Google TPUs and other custom accelerators, plus selling Tomahawk Ethernet switch chips, PCIe switches, and retimers that shuttle data between GPUs, CPUs and storage broadcom ai plumbing.

For infra leads, the takeaway is that scaling AI clusters is now constrained as much by high‑radix Ethernet, PCIe fabric, and custom ASIC supply as by GPUs themselves. Broadcom’s chart shows semis now dwarfing its infrastructure software line, so networking ASIC lead times and design choices (e.g. 800G vs 1.6T, RoCE vs alternatives) will be first‑order architectural decisions, not afterthoughts. If you’re planning next‑gen racks, start modeling congestion and failure domains around these non‑GPU parts, because this is where a lot of hidden capacity and risk lives.

Bond report projects “AI era” to reach tens of billions of edge devices

A Bond Capital chart shared by Rohan Paul shows how compute platforms have compounded from ~1M mainframes to ~4B mobile internet devices, with the "AI era" expected to cover tens of billions of units spanning phones, IoT, and robots compute devices chart. That projection reframes AI not only as a data center story but as an everywhere story, where most interactions eventually land on-device.

For architects this matters in two ways: training demand will still concentrate in hyperscale clusters, but inference and simple agents will increasingly run on cheap, power‑constrained hardware where you can’t assume fast networks or big VRAM bond report. That pushes model design toward small, specialized models, smart distillation, and hardware‑aware architectures, and it suggests that supply crunches won’t be limited to H100‑class GPUs—cheap NPUs, microcontrollers with accelerators, and RF parts for connected devices are part of the same cycle.

Starcloud and Google explore space-based data centers for AI compute

Commentary around Starcloud and Google’s Project Suncatcher highlights a serious push toward data centers in space as a long‑term answer to AI’s power and cooling demands space datacenter thread. Starcloud pitches orbital clusters that tap 24/7 solar, need minimal batteries, and use radiative cooling, while Google’s Suncatcher plans a 2027 learning mission with TPUs in orbit in partnership with Planet to test radiation‑hardened ML chips google blog post.

For infra planners this is not a 2026 procurement option, but it’s a signal about direction: if AI data center power more than doubles by 2030, moving a slice of training compute above the atmosphere could decouple AI growth from terrestrial grid constraints and local permitting fights starcloud site. The near‑term impact is more practical: expect heavyweight research into radiation‑tolerant accelerators, ultra‑reliable networking, and new scheduling models where you blend ground and orbital clusters, with latency and uplink limits as first‑class constraints.

🗣️ Builders’ stacks, long‑context warnings and UX pain points

Community signals on which models get real work done, plus cautionary notes on 1M‑token threads and chat UX; useful for leaders calibrating policy and defaults.

Builders lean into multi-model stacks with Opus 4.5, GPT‑5.2 and Gemini

Following up on model stack that highlighted an early Opus/GPT‑5.2 split, today’s chatter makes the pattern feel locked in: Opus 4.5 is becoming the default agentic coding engine, GPT‑5.2 Pro the slow but thorough auditor, and Gemini 3 the generalist "world" model. One senior builder reversed an earlier take to say Opus 4.5 in Claude Code is now "better than anything in Codex CLI" for agentic coding while GPT‑5.2 Pro remains "a better engineer overall" for hard work opus coding praise. Another compresses their own stack to "Gemini for the world, GPT for work, Claude for code, Grok for news," which is a neat shorthand for how teams are routing requests today stack slogan.

Twitter polls back this up: in one "best coding model" poll, Opus 4.5 drew a clear majority while GPT‑5.2 and Gemini 3 Pro split a much smaller share, with "my brain" still jokingly competitive coding model poll. This isn’t just about vibes; people are optimizing cost, latency and failure modes per task. That’s why others report using Haiku 4.5 for cheap day‑to‑day chat and saving Opus 4.5 for planning and implementation, with GPT‑5.2 brought in only when they really need the extra reasoning depth haiku opus split. The point is: leaders should stop looking for one "best" model and instead formalize stacks that codify when to call which model, because your best engineers are already working this way.

Amp debates whether 1M‑token threads help more than they hurt

Sourcegraph’s Amp team is openly wrestling with whether to re‑add a large mode that guarantees 1M tokens of context (using Sonnet 4.5), warning that such threads can quietly rack up $100–$1,000+ in model spend and degrade answer quality as people dump unrelated topics into one mega‑conversation amp long thread. Their experience is that most real coding tasks stay under ~200k tokens and work better as small, focused threads; long context only really shines for things like big refactors with tightly related changes. They already ship detailed guidance on context management and a Handoff feature instead of compaction, and are looking for product levers (opt‑in gating, in‑product education, maybe hard caps) to keep people from sleepwalking into huge, messy threads amp blog post.

Not everyone agrees: at least one power user argues that "200k tokens is not enough," reflecting the common instinct to reach for more context when things go wrong rather than tightening prompts and structure long context dissent. For leaders, the lesson is clear: if you expose 400k–1M context windows, you should also expose hard safeguards and a recommended workflow (branching, handoff, summaries) because raw limits alone don’t teach people how to avoid context bloat and bill shock.

Claude’s chat compaction and file UX spark pushback from knowledge workers

Several heavy users are calling out Claude’s chat UX as a poor fit for knowledge work, even while they love Claude Code. One thread argues that the "compacting conversation" feature that works great for coding (where it can distill tasks and files) feels awful in long research chats because it abruptly resets tone and flow instead of sliding a rolling window over past context claude compaction critique. The same user reports frequent hard errors that end the chat altogether, compounding the frustration.

A follow‑up adds that all chatbots currently struggle with file handling compared to CLI tools: Gemini often confuses which Nano Banana image the user is referring to, and ChatGPT "misplaces files that it makes" in a way terminal‑based workflows don’t file handling comment. But Claude’s compaction is singled out as the most jarring design choice, because it assumes every long conversation is a project with tasks and files instead of a narrative thread. If you run a team relying on Claude for analysis or product thinking, this is a signal to push people toward the code/CLI surfaces when reliability matters, and to ask Anthropic (or your own wrappers) for clearer options between rolling windows and hard compaction.

GPT‑5.2 Pro’s Extended and xhigh modes trade latency for reliability

Builders are converging on a shared view of GPT‑5.2 Pro: its Extended/xhigh reasoning modes feel like the most capable single model for deep engineering work, but they are slow and expensive enough that you have to schedule them. One engineer says the xhigh variant "is the slowest model, but most of what it codes works with minimal fixes," praising how it keeps running tests between changes and follows instructions well over long sessions xhigh coding note. Another is seeing a new "Extended" toggle in ChatGPT Pro and calls it "the most powerful thinking mode of GPT‑5.2," explicitly distinguishing it from the separate "Thinking" models extended mode praise.

The catch is UX: people joke about having to change their laptop sleep settings because GPT‑5.2‑thinking‑xhigh runs for so long, or liken it to taking care of a tamagotchi that you leave working overnight and check in on periodically. Teams are starting to treat these modes as batch jobs or review pipelines rather than interactive chat defaults, which is exactly the kind of pattern ops leads should encode into tools and policies instead of leaving to individual judgement.

💼 Market maps and enterprise platform signals

Analyst tier lists and adoption hints relevant to buyers: open‑model standings, AI investment hubs, Copilot roadmap, and a new Google IDE in the wild.

Updated open‑model tier list crowns DeepSeek, Qwen, Kimi as frontier labs

A new open‑models year‑in‑review from Nat Lambert ranks DeepSeek R1, Qwen 3, and Moonshot’s Kimi K2 as the three frontier open labs, with Zhipu (GLM‑4.x) and MiniMax in the “close competitors” band and a long tail of “noteworthy”, “specialist”, and “on the rise” players. open models tier list The thread explicitly notes no US lab in the frontier or close‑behind open tiers, underscoring how much cutting‑edge open work is now coming from China. open models tier list A follow‑up update adds Cohere (for non‑commercial models), ServiceNow’s Apriel, Motif, and TNGTech’s MoE work, and lightly teases Meta’s relatively low placement despite its Llama branding. followup additions The full write‑up breaks out winners (DeepSeek R1, Qwen 3, Kimi K2), runner‑ups (MiniMax M2, GLM‑4.5, GPT‑OSS, Gemma 3, Olmo 3), and honorable mentions across speech and VLMs, which is useful as a shopping list for infra teams deciding which open stacks to bet on over the next 12–18 months. year in review

Google’s Antigravity IDE quietly ships as a free, agent‑first editor

Google’s new Antigravity IDE is now downloadable for free and is already drawing praise from early power users as one of their “favorite new Google products,” signaling real interest in agent‑centric editors beyond Cursor and VS Code. user praise

The product page describes Antigravity as an agent‑first coding environment: it offers tab autocompletion, natural‑language code commands, a task‑centric “mission control” view for multiple agents, and synchronized control across editor, terminal, and browser surfaces. ide page For platform teams, this is an early sign that Google is not just shipping models via Gemini but also competing directly in the IDE layer, which could matter for where developers anchor their day‑to‑day workflows over the next year.

Microsoft Copilot teases 2025 Flight Log and Smart Plus GPT‑5.2 mode

A leaked Copilot screenshot shows Microsoft preparing a “Copilot Flight Log of 2025” recap experience plus a renamed Smart Plus mode that explicitly calls out GPT‑5.2 as the engine for long and complex tasks. copilot screenshot

The mode picker now separates Smart Plus (GPT‑5.2), Real talk, Think deeper, Study and learn, and Search, hinting at a more opinionated task‑based model routing strategy baked into the UX. copilot screenshot For enterprise buyers, this suggests Copilot will lean harder on GPT‑5.2 for deep workflows while wrapping it in digestible yearly summaries and labeled modes, which may both raise usage and make it easier to explain value to non‑technical staff.

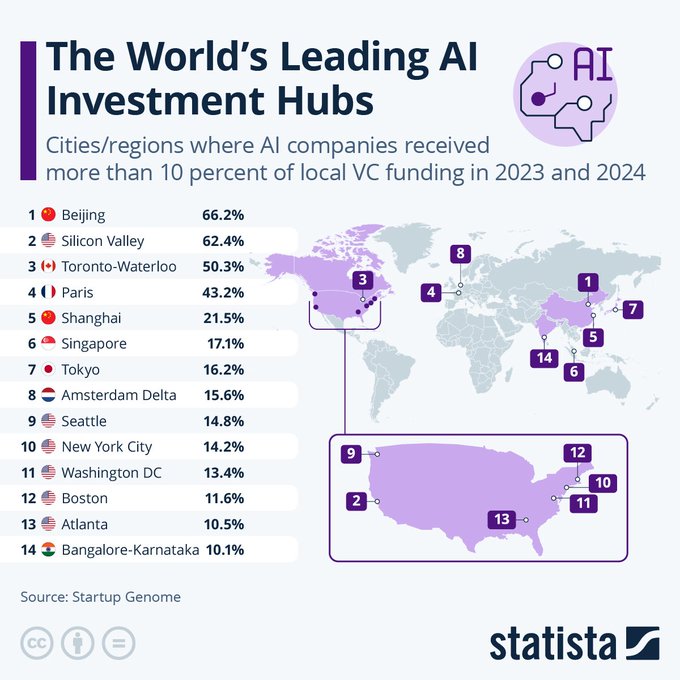

Statista map shows Beijing and Silicon Valley dominate AI share of VC

A Statista map of “AI investment hubs” shows how concentrated AI funding has become: in 2023–24, AI companies took 66.2% of all VC in Beijing and 62.4% in Silicon Valley, with Toronto‑Waterloo at 50.3% and Paris at 43.2%. funding chart

Lower down the table, New York is at 14.2%, Seattle 14.8%, and Bangalore‑Karnataka 10.1%, suggesting that outside a few metros, AI is still a minority of local startup funding. funding chart For AI leaders planning new offices or GTM focus, this is a clean signal about where AI‑native ecosystems (talent, capital, customers) are already dense versus where you may be early.

US computing patents classified as G06 spike after ChatGPT era

A USPTO chart of computing‑related patents (CPC code G06) shows a sharp post‑ChatGPT spike: after a long, gradual rise since the post‑Netscape era, 2024 saw roughly 6,000 more AI‑adjacent patents than 2023 in the US alone. patent graph

The tweet frames this as “computing‑related patent grants, USA = exploded… again + faster post‑ChatGPT,” underlining how quickly firms are trying to lock down IP around AI systems, tooling, and applications. patent graph For AI leaders and analysts, this isn’t about any single filing; it’s a backdrop that hints at denser patent thickets, more freedom‑to‑operate work, and a stronger argument for open ecosystems and cross‑licensing where possible.

🎬 Creative pipelines: Gemini Flash, NB Pro grids, and Retake

High‑volume creative posts: single‑file animated ‘videos’ from code, 3×3 grid workflows, and one‑prompt video edits; fewer pure model launches, more pipeline tips.

Gemini 3 Flash spins an entire animated “video” from a single HTML prompt

A builder shows Gemini 3.0 Flash generating a full educational "Life Cycle of a Star" animation—HTML, JS, visuals, subtitles, background music and voiceover—in ~30 seconds from one long prompt, with no external assets or slideshows. Gemini Flash demo This goes beyond the earlier SVG and UI dump demos of Flash Flash SVG demo and starts to look like a one-shot pipeline for explainer videos, since the output is a single self‑contained HTML file you can drop into CodePen or a course platform. Star lifecycle prompt The prompt explicitly asks for continuous camera moves, subtitle‑style captions, and auto‑play behavior, which Flash respects closely, hinting that "describe the film in text, get a working player" is now a realistic workflow for small teams and educators.

3×3 grids evolve into multi-model pipelines for AI “cinematography”

Creators are pushing beyond the now‑standard 3×3 Nano Banana Pro grids for story beats and discovering the tradeoffs: great visual mood but weak character consistency and unreliable upscaling when you zoom into individual frames.

One approach is to treat the grid as a storyboard, then upscale chosen frames in Freepik’s Nano Banana Pro with prompts like “4K, keep same lighting and color, photorealistic skin,” which can restore detail without completely losing the original feel. Freepik upscale test Others are chaining models—e.g., using three NB Pro stills as keyframes and then feeding the first and last into Kling O1 to generate a smooth 8‑second shot in between. Nano Banana Kling demo A parallel thread experiments with Henry Daubrez’s 4‑image stacks for scenes that don’t need nine angles, since fewer but more coherent frames tend to hold character identity better while still giving you editorial choice. Christmas stack workflow Following earlier 3×3 product‑ad and aging‑grid experiments NBP 3x3 grid, this is slowly turning into a playbook: grid for coverage, pick winners, upscale or animate with a second model.

LTX Retake turns 20-second clips into new shots with one prompt

LTX Studio’s Retake feature is being used as a practical "one‑prompt" video editor: you upload up to ~20 seconds of footage, highlight a segment, and describe an event like “a glowing pink orb appears in my hand and disappears in a puff of smoke,” and Retake regenerates the action, lighting and VFX around the existing performance.

The same workflow can change props (a skull reciting Hamlet, giant waves, different character actions) or even swap dialogue, with the crucial constraint that prompts must describe events and camera moves, not tiny visual tweaks like exposure or object removal. Retake walkthrough A follow‑up link drives people straight into the Retake UI, positioning it as an everyday tool for creators who used to spend hours in timeline editors and now can prototype alternate takes in about a minute per shot. Retake link

DesignArena’s new Lotus and Cactus image models appear to be OpenAI GPT‑4

Users probing DesignArena’s freshly added "Lotus" and "Cactus" image models are finding that when asked "What model are you?" they reply "I am GPT‑4" and name OpenAI as the provider, complete with whiteboard screenshots showing the Q&A.

Model identity Q&A It’s a strong hint that these are either thin wrappers or fine‑tuned variants of OpenAI’s image stack rather than entirely new architectures, which matters if you care about safety profiles, training data provenance and style behavior. For AI leads, the takeaway is simple: when a creative SaaS quietly swaps models under branded names, you should still assume the underlying capabilities, costs and IP rules of the original lab apply unless they publish something concrete to the contrary.

Nano Banana Pro gets a "cloud pareidolia" recipe for pseudo‑photography

Prompt crafters are sharing a detailed Nano Banana Pro setup that makes fake smartphone sky photos where random clouds almost resemble dinosaurs, dragons, car silhouettes or logos—intentionally blurry and overexposed so the shapes feel like real pareidolia instead of clean icons.

The recipe specifies grainy phone camera framing, blown‑out auto‑exposure, suburban rooftops and power lines at the edge, and explicitly says the cloud shape should be "vague, indefinite, imperfect," so the viewer’s brain does the last mile of recognition. Prompt details Building on earlier Nano Banana Pro work like aging grids and 3×3 sequences Aging grid, this is another example of treating the model less like an illustrator and more like a controllable generative lens that mimics how amateur photos actually look.

Nano Banana Pro is being used for full literary spreads like A Christmas Carol

One creator is feeding the entire text of Dickens’ A Christmas Carol into Nano Banana Pro and asking for accurate depictions of each ghost "alongside their original visual descriptions," turning a classic novella into a single composite illustration that respects the source language. Ghosts illustration Another follow‑up shows a two‑page graphic‑novel style "Stave I" spread—panels of Scrooge, Marley’s knocker, and the chained ghost rendered with period‑appropriate lighting and staging, ready to drop into print or digital comics.

Graphic adaptation For AI illustrators, this hints at a workflow where you can keep prose as the ground truth, then let the model do global casting and scene layout before you refine with manual edits or additional tools.

Horror and sci‑fi merchants are prototyping detailed pillow designs with NB Pro

Nano Banana Pro is also showing up in more commercial pipelines: one thread shows a "collection of pillows" with richly embroidered xenomorph facehuggers, Demogorgon heads, and fungal horror portraits rendered as high‑detail velvet cushions complete with brand tags and studio‑style staging.

Pillow workflow These look like straight‑to‑product‑photo concepts—lighting, fabric texture, composition and even fake studio environments are all baked in—which means a small merch seller can go from idea to catalog‑ready mockups in a single prompt and then hand the best designs off to real textile artists or print‑on‑demand vendors.

🤖 Embodied: open robots ship, lab rigs get game‑ready

Concrete embodied signals: thousands of open Reachy Minis shipping for AI builders and a refined Doom rat‑rig with new actions; little new model work today.

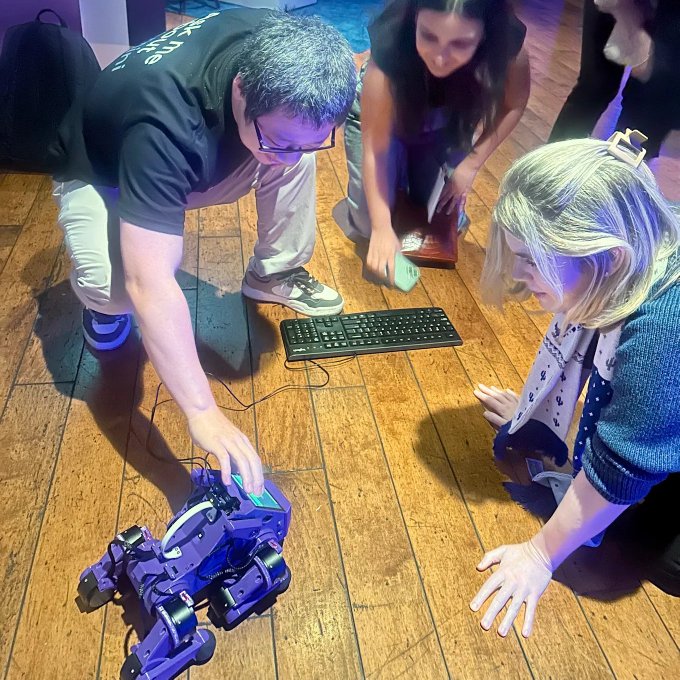

Reachy Mini robots start landing on desks as open-source AI hardware

Hugging Face and Pollen’s Reachy Mini tabletop humanoids are now not just announced but actually arriving at customers’ doors, following the earlier news that 3,000 units are shipping worldwide with roughly half headed to the US Reachy shipping tweet. One early buyer shared a DHL notification that their unit will arrive before Christmas, underscoring that this is a real, in-hand platform for embodied AI work rather than a pre-order promise delivery email.

Following up on Reachy Minis, which focused on the 3,000-unit production run, today’s Hugging Face blog digs into what teams actually get: an 11-inch, 3.3 lb open robot that’s fully programmable in Python, with a $299 “lite” kit and a $449 wireless version, aimed at everyone from hobbyists to lab researchers Reachy blog post. Because it’s open-source and desk‑sized, AI engineers can prototype perception, control, and small agent loops (vision → plan → act) without investing in a full-size humanoid fleet, and robotics leads get a standard “dev board with arms” they can ship to collaborators or classrooms and then script from the same codebase.

Doom-playing rat rig evolves into a richer embodied learning setup

The open Doom-rat experiment has reached a new milestone: rats on a spherical treadmill can now not only walk and turn through a virtual corridor, but also trigger shooting actions at enemies in-game, controlled entirely by their body movements and feedback loops rat Doom upgrade.

The rig holds a rat in a body-fixed harness above a freely spinning ball; motion sensors on omni wheels translate subtle ball rotations into Doom’s forward, lateral, and turning controls, while air puffs to the whiskers serve as a “you hit a wall” penalty and a reward spout delivers treats when the rat executes the desired behavior training setup explainer. Because all of this is non-invasive and uses a curved AMOLED display as the visual world, it’s effectively a low-cost, high-bandwidth interface between biological control and a programmable environment, which is valuable for AI and robotics folks who study closed-loop control, sim-to-real gaps, and the limits of reinforcement learning when the “agent” is a real body rather than a neural net.

Gemini Live drives Stanford’s Puppers robot dog in new demo

A Google DeepMind engineer showed off a small four-legged “Puppers” robot from Stanford wired up to Gemini Live via Google AI Studio, turning the dog into a conversational, voice-driven embodied agent Puppers walkthrough. The setup lets a user speak to Gemini, which then issues commands that control the robot dog’s movements and behavior in real time.

For AI engineers, the significance is that this is a concrete reference build for hooking a production multimodal model into a physical robot in a classroom-friendly form factor, rather than a bespoke industrial arm or lab-only platform. Teams exploring agent frameworks, safety layers, or high-level planning can treat this as an example pipeline—speech → Gemini Live reasoning → action API to Puppers—and adapt it for their own robots or simulators, without waiting for expensive commercial humanoids to be available.

🎙️ Realtime voice: Translate and site voice agents

Smaller, practical voice updates—Gemini-powered speech‑to‑speech in Translate and an ElevenLabs voice agent wired into a consulting site’s RAG/CRM flow.

Gemini speech-to-speech in Google Translate headed to devs next year

Google’s Gemini-powered realtime speech-to-speech mode in Translate is live for users and is now being explicitly positioned as coming to developers early next year, giving teams a clear signal to start planning voice translation features around it. This follows the initial launch with headset support Translate audio and clarifies that the same stack will be exposed via developer APIs, not just the consumer app translate feature.

For AI engineers, this means you can expect production-grade, low-latency streaming translation (the same pipeline as the consumer app) to be accessible without having to build your own ASR+MT+TTS chain. Leaders should treat this as a likely 2026 building block for multilingual support, call centers, and live captioning, and start thinking about privacy, data routing, and where on your stack you’d plug a third-party translation stream rather than rolling bespoke voice infra.

Solo consultant shows ElevenLabs voice agent wired into RAG and n8n CRM

An independent consultant implemented an ElevenLabs conversational voice agent on their site that answers questions using built‑in RAG and tool calling, then pipes captured leads into an n8n workflow that stores emails and sends Telegram notifications for follow‑up voice agent video. They’re now offering to help others add the same pattern, describing a stack of ElevenLabs voice, a knowledge‑base, and n8n automations workflow details linked from their consulting site consulting site.

This is a small but telling example of how “AI receptionists” are moving from slideware to working micro‑systems: voice handles the conversation, RAG grounds answers in your docs, and low‑code automation turns calls into CRM events without writing a full backend. For AI engineers and ops leads, it’s a reminder to design voice agents as first‑class parts of the sales and support funnel—hooking them into your existing databases, messaging, and ticketing—rather than treating them as stand‑alone demos.

Builders report noticeable Gemini Flash native audio quality jump

Early users are noticing a clear improvement in Gemini Flash’s native audio experience, with one long‑time watcher saying the upgrade is obvious “immediately when you open the app” Flash audio audio quality note. While there are no new numbers, this kind of unsolicited UX feedback usually points to better prosody, fewer artifacts, or both.

If you’re building voice agents on Gemini Flash Native Audio, this suggests Google is quietly iterating on the TTS side—potentially smoothing multi‑turn conversations and making long sessions less fatiguing. It’s a good moment to re‑test your own prompts and voice personas: subtle quality shifts can change how human your agent feels, even if latency and pricing stay the same.

Community project brings Gemini Native Audio translation into the web stack

A community dev has wired Google’s Gemini Native Audio into a browser-based experience: you speak English, the other side hears Spanish in near real time, with support for 20+ languages web translation demo. This isn’t an official SDK, but a working example that shows how to bridge Gemini’s streaming audio APIs into typical web frontends.

For engineers, this is a concrete pattern for adding bilingual or multilingual voice chat to SaaS products without waiting for full-stack vendor tooling: run the Gemini stream server-side, then pipe audio to and from a web client. It also hints at practical latencies—good enough for conversational back‑and‑forth—so teams can start experimenting with customer support, education, or telehealth scenarios today, even before more polished UI components arrive.