.png&w=3840&q=75&dpl=dpl_3ec2qJCyXXB46oiNBQTThk7WiLea)

Claude Code runs 10+ parallel agents – 3,000 tests in days

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Claude Code’s creator Boris Cherny published a detailed playbook showing Opus 4.5 with Thinking orchestrating 10+ concurrent agents—five local terminals plus up to 10 web/iOS sessions—via Plan mode, slash‑command subagents, hooks and MCP tools; he claims strong verification loops (tests, bash, UI checks) improve shipped code quality by 2–3× despite higher per‑call cost. The “Ralph Wiggum” bash‑loop pattern is now folded into this official workflow for overnight DI setups and 100%‑coverage test suites, while Google principal engineer Jaana Dogan reports Claude Code recreated a distributed agent orchestrator comparable to a year‑long internal Google effort in about an hour, a high‑signal but still anecdotal validation of current agentic coding capabilities.

• Agent Skills layer: Anthropic’s file‑based Skills now port cleanly between Claude Desktop and Claude Code; community N Skills Marketplace curates dev‑browser and Gas Town helpers, and a lease‑review Skill runs under cheaper GLM‑4.7, underscoring model‑agnostic packaging.

• Multi‑agent research stacks: Jeff Emanuel’s BrennerBot coordinates multiple models via beads graphs, Agent Mail and ntm, accumulating ~3,000 unit and integration tests in under a week, all written and maintained by agents.

• Front‑door flows and input: A /spec-init command interviews developers into a reusable SPEC and delivered a production‑grade app with tests in ~5 hours vs a month estimate; Typeless’ iOS voice keyboard surfaces as a sponsored way to drive these workflows from phones.

Together these pieces recast Claude Code from an experimental IDE feature into a growing ecosystem of skills, loop patterns and orchestration stacks aimed at sustained, test‑heavy software work, though systematic throughput and reliability benchmarks versus human teams remain sparse.

Top links today

- RLHF book updated online edition

- AI Futures Model automation forecasts

- Recursive Language Models arXiv paper

- Youtu-LLM agentic lightweight LLMs paper

- Zero-Overhead Introspection for adaptive compute

- Bayesian Geometry of Transformer Attention paper

- Propose, Solve, Verify formal code paper

- Parameter efficient methods for RLVR paper

- Backpropagation in Transformers tutorial paper

- Memory systems for autonomous AI agents

- Discreteness in diffusion language models

- Nvidia H200 supply chain analysis

- FT analysis of AI-driven DRAM supercycle

- Digitimes on Baidu Kunlunxin AI chip IPO

- Satya Nadella interview on AI slop debate

Feature Spotlight

Feature: Claude Code playbook hits production muscle

Anthropic’s Boris Cherny publishes a detailed Claude Code workflow; a Google principal says it rebuilt a distributed agent orchestrator in ~1 hour—strong signal that agentic coding is ready for serious teams.

Large multi-post thread from Claude Code’s creator plus community proofs show agentic coding maturing fast: concrete workflows (Plan, subagents, hooks, MCP) and a Google principal validating real outcomes. Mostly agent workflow details; few non‑Claude items.

Jump to Feature: Claude Code playbook hits production muscle topicsTable of Contents

🛠️ Feature: Claude Code playbook hits production muscle

Large multi-post thread from Claude Code’s creator plus community proofs show agentic coding maturing fast: concrete workflows (Plan, subagents, hooks, MCP) and a Google principal validating real outcomes. Mostly agent workflow details; few non‑Claude items.

Claude Code creator publishes detailed playbook for running 10+ parallel coding agents

Claude Code (Anthropic): Claude Code’s creator, Boris Cherny, laid out a concrete end‑to‑end workflow for using multiple Claude agents in parallel, centering on Plan mode, Opus 4.5 with Thinking, and strong verification loops, expanding on earlier community skill patterns skills thread; he describes running five local Claudes plus 5–10 web sessions, teleporting work between terminal and browser, and even starting long‑running jobs from his phone according to the workflow recap and Claude Code page.

• Model and session strategy: Cherny runs Opus 4.5 with Thinking for everything, arguing it is the fastest end‑to‑end because it needs less steering and better tool use despite higher per‑call cost model choice; he keeps 5 local terminal tabs and up to 10 web/iOS sessions in flight, handing tasks back and forth with --teleport and background agents terminal setup.

• Planning and auto‑apply: Most work starts in Plan mode (Shift+Tab twice) to negotiate a PR‑sized plan, then switches to auto‑accept edits so Claude can usually one‑shot the implementation once the plan is approved, as he explains in plan usage.

• Team knowledge and skills: His team maintains a shared CLAUDE.md in the repo, updated via a GitHub Action that tags @.claude on PRs to append new lessons, and they treat slash commands and subagents as first‑class workflow units stored under .claude/commands/ and .claude/agents/ team playbook and CLAUDE overview.

• Hooks, permissions, tools: A PostToolUse hook auto‑formats code to avoid CI nits, /permissions pre‑approves safe bash commands instead of --dangerously-skip-permissions, and a Slack MCP server, BigQuery CLI, and Sentry integration let Claude search docs, run analytics, and pull error logs on its own permissions usage, formatting hook and tool integrations.

• Verification as the main lever: Cherny emphasizes that giving Claude a way to verify its work—running tests, bash commands, or UI checks via the Claude Chrome extension—2–3× improves final quality, with every change to claude.ai/code exercised in a real browser loop until UX “feels good” model choice and verification blog.

The thread effectively upgrades Claude Code from a chatty assistant to a documented production pattern: Plan → subagents/commands → tools/hooks → automated verification, all orchestrated across many concurrent sessions.

Google principal says Claude Code recreated their agent orchestrator in about an hour

Claude Code (Anthropic): Google principal engineer Jaana Dogan reports that Claude Code generated a distributed agent orchestrator matching a year‑long internal Google effort in roughly an hour, after she described the problem at a high level jaana comment and later shared a screenshot of the exchange orchestrator screenshot.

• Baseline effort vs agent output: Dogan notes her team had been trying to build distributed agent orchestrators at Google “since last year” with multiple options and no alignment, framing Claude Code’s one‑hour design as essentially replicating what the team had already built jaana comment.

• External validation for agent workflows: Community reactions highlight this as a concrete, high‑signal endorsement of Claude Code’s current capabilities from a senior infra engineer, contrasting it with many more speculative claims about coding agents orchestrator screenshot.

For engineering leaders tracking whether agentic coding has crossed from toy demos into systems work, this is an unusually clear real‑world data point from inside a major lab.

Claude Agent Skills spread across marketplaces and even power GLM 4.7

Agent Skills (Anthropic): Anthropic’s file‑based Agent Skills format—bundling instructions, knowledge files, and scripts—is starting to look like a de facto skills layer for Claude Code and beyond, with diagrams comparing Skills to Custom GPTs skills diagram, guidance on reusing desktop skills in Claude Code skill reuse, and real tasks like lease review running on GLM‑4.7 via a Claude skill glm skill usage.

• Concept as Custom GPT analogue: Community breakdowns describe skills as “file‑based packages” with instructions, knowledge files, and optional scripts that auto‑activate when relevant, explicitly likening them to Custom GPTs but with git‑friendly files instead of a cloud UI skills diagram.

• Official framing: Anthropic’s own blog positions Skills as how agents get procedural knowledge and organizational context, emphasizing that real work needs these persistent capabilities rather than just raw models skills blog and skills article.

• Cross‑environment reuse: Skills created in Claude Desktop can be exported as zips and dropped into ~/.claude/skills or project .claude/skills folders for Claude Code, giving teams a straightforward way to share capabilities across machines and repos skill reuse and skills docs.

• Model‑agnostic use with GLM 4.7: One developer shows a lease‑review skill running under GLM‑4.7 in Claude Code, surfacing nuanced concerns like a “10% monthly interest” clause and self‑help eviction, and notes GLM‑4.7 is cheaper for this kind of analysis glm skill usage.

• Emerging skills marketplace: The community‑run N Skills Marketplace curates high‑leverage skills like dev-browser, gastown, and zai-cli, installable via /plugin marketplace add commands, and a dedicated Gas Town skill teaches agents how to use Steve Yegge’s orchestrator IDE skills marketplace and gastown skill.

Together these pieces suggest Skills are becoming a portable capability layer for Claude‑style agents, rather than a feature locked to any single model or interface.

Ralph Wiggum background loops graduate from hack to serious overnight agent pattern

Ralph Wiggum pattern (Community): The “Ralph Wiggum” pattern—long‑running bash loops that let Claude Code keep working until it decides it is DONE—has moved from a clever hack to a real background‑agent workflow, with new examples of Ralph writing dependency injection setups and full test suites overnight and even texting status updates over WhatsApp bash loop, hooks and Ralph and whatsapp usage.

• Hook‑integrated long runs: Boris Cherny folds Ralph into his official playbook, describing how for very long‑running tasks he either prompts Claude to verify with a background agent, uses an Agent Stop hook, or uses the Ralph Wiggum plugin together with permissive modes so Claude can “cook without being blocked” hooks and Ralph and ralph plugin repo.

• Concrete overnight work: Matt Pocock reports that while he slept, Ralph wrote a DI setup for his AI Hero CLI and 100% coverage tests for two critical scripts, and he later wired Ralph to WhatsApp so it can text him when it is done whatsapp usage and context link.

• Performance vs humans: Another screenshot circulating in the community shows engineers remarking that “ralph driven development is beating top engineers,” underscoring that these loops are starting to match or exceed human throughput on some tasks ralph praise.

• Next stage ambitions: Advocates talk about “systems as loopbacks”—using Ralph‑like patterns for auto‑healing, auto‑development and auto‑release—arguing that skilled operators should move from in‑the‑loop to on‑the‑loop, designing these loops rather than hand‑holding individual runs loopback plans.

The pattern now looks less like a weekend experiment and more like a primitive for scheduling and supervising autonomous coding tasks inside real codebases.

BrennerBot and beads turn Claude Code into a multi-agent scientific research stack

BrennerBot stack (Community): Jeff Emanuel’s new BrennerBot project uses Claude Code plus an ecosystem of open tools—Agent Mail, the ntm orchestrator, cass search and memory, and his beads task system—to coordinate multiple frontier models on scientific inquiry, and has already accumulated nearly 3,000 unit and integration tests in under a week brennerbot intro and project readme.

• Multi‑agent orchestration: BrennerBot treats models as a group of specialists that operate over a shared beads task graph and communicate via Agent Mail, with ntm handling agent orchestration and custom memory/search tooling providing long‑term context brennerbot intro and brenner quotes.

• Agent‑maintained verification: Emanuel notes that historically such a dense test suite would be a form of tech debt, but here tests are written and maintained by agents, so adding more tests and updating them as code evolves becomes “free” compute rather than human toil tests commentary.

• Tools optimised for agents, not humans: He argues that conventional tools like linters and trackers were shaped around human constraints (low tolerance for false positives, conflict‑heavy workflows), and that new tooling such as his beads_viewer should instead ask “what do agents want?”, with robot‑mode interfaces separate from human UIs beads comment and agent-first tools.

• Loop‑aware project management: Beads decomposes large projects into epics, tasks, and subtasks with explicit dependencies, so many agents can pick the most impactful “bead” at any moment and advance the system like an ant colony rather than a single assistant multi-agent loops.

BrennerBot illustrates how Claude Code and similar agents are starting to sit at the core of serious, test‑heavy research systems rather than being bolted onto existing workflows.

Spec-init slash command turns vague ideas into executable Claude Code plans

Spec-init workflow (Community): Builders are standardizing a /spec-init <SPEC_DIR> slash command that uses Claude Code’s AskUserQuestion tool to interview the developer, draft a full specification, and then drive a multi‑step implementation plan, turning open‑ended ideas into structured work spec intro and prompt text.

• Interview‑driven spec building: The command’s prompt tells Claude to “interview me continually and systematically until the spec is complete,” probing implementation details, UI/UX, tech stack, constraints and trade‑offs, and to store the resulting spec in the given directory for reuse prompt text.

• From spec to vibe coding: Omar Sar describes using /spec-init on a new project and getting a production‑grade app with tests in ~5 hours, versus his estimate of about a month of traditional work, after which Claude Code executed the detailed plan it had just helped author spec intro and usage report.

• Reuse across projects: The same spec flow can be adapted for large features or seeded with an initial human‑written SPEC, making it a repeatable front‑door into “vibe coding” rather than a one‑off mega‑prompt reuse comment and author reply.

This pattern shifts the hardest part of agent use—from inventing the right prompts each time—to a shared command that gathers requirements like a product manager before the agents touch code.

Voice-first Typeless keyboard brings Claude Code-style ‘vibe coding’ to iOS

Typeless keyboard (Typeless): A sponsored review highlights the Typeless AI voice keyboard for iOS as a way to drive Claude Code and other agentic tools from a phone, syncing dictation history across mobile and desktop and emphasizing its use for “vibe coding” in Claude Code and Google AI Studio typeless review and app link.

• Hands‑free prompting: The user calls out that Typeless’ wave animation and custom dictionary make it practical to dictate long, technical prompts and abbreviations without frequent corrections, something they say other mobile dictation tools struggled with typeless review.

• Cross‑device workflows: Dictated text is stored and accessible on other devices, helping avoid losing long prompts when mobile dictation fails in native apps, and making it easier to kick off or refine Claude Code sessions from a phone and then continue on a laptop typeless review.

While this sits at the edge of tooling, it shows how agentic coding workflows are leaking into fully mobile, voice‑first setups rather than staying tethered to desktop terminals.

🏗️ GPU, HBM and datacenter finance watch

Fresh supply/capacity and financing signals: China H200 demand vs TSMC CoWoS scale‑up, mega‑campus builds, and chip‑backed SPVs. Continues yesterday’s DRAM/HBM storyline with concrete 2026 ramps and capex structures.

Nvidia faces 2M‑GPU China H200 demand as TSMC CoWoS ramps toward 2026

H200 supply (Nvidia/TSMC): Reports say Chinese firms have ordered around 2 million H200 accelerators for 2026 while Nvidia currently holds only ~700,000 units in stock, forcing a push to expand TSMC’s advanced CoWoS packaging from roughly 75–80k wafers/month to about 120–130k by late‑2026, as described in the h200 demand thread and the linked ai chip supply article.

• China demand vs controls: The H200 is said to deliver about 6× the training performance of the export‑limited H20 for Chinese buyers, so customers reportedly want to front‑load imports before any new restrictions, with initial shipments potentially drawn from existing inventory if Beijing approves by mid‑February 2026 h200 demand thread.

• CoWoS bottleneck: TSMC’s plan to lift CoWoS capacity to up to 120–130k wafers/month by end‑2026 directly targets the main packaging bottleneck for GPU+HBM modules used in AI servers, according to the same ai chip supply article.

• Future platforms: The report notes that Nvidia’s next‑gen "Vera Rubin" CPU+GPU platform is scheduled to ramp in H2‑2026, so any CoWoS expansion also underwrites that roadmap, not only Hopper‑class H200s.

The picture that emerges is a tight H200 market where Chinese demand, export approvals, and TSMC’s CoWoS build‑out jointly decide how much frontier‑class compute actually ships in 2026.

Amazon builds $11B Indiana AI campus targeting roughly 2.2 GW of power

Indiana AI campus (Amazon): Amazon is constructing an $11B data‑center campus in St. Joseph County, Indiana, that is projected to draw about 2.2 GW of power once fully built, implying another massive dedicated footprint for AI and cloud compute amazon campus thread.

• Visual progress: Drone imagery shows large tracts of cleared land and partially erected industrial buildings labeled "Amazon Data Center", indicating early‑stage but substantial physical progress on the site amazon campus thread.

• Relative scale: The author notes that this is only one of many such projects, and that even larger sites are planned elsewhere, which situates the Indiana build within a broader wave of multi‑GW campuses intended to backstop AI training and inference growth amazon campus thread.

Together with other mega‑projects, this campus highlights how power availability, not just GPUs, is becoming a first‑order constraint and investment focus for large AI operators.

DRAM supercycle now forecast to lift 2026 consumer device prices by 5–20%

DRAM squeeze (multiple manufacturers): Memory makers and analysts now warn that a new DRAM supercycle driven by AI datacenter HBM demand could push consumer electronics prices up 5–20% in 2026 as supply shifts to high‑margin HBM and away from PC/phone RAM, extending the HBM‑driven shortage flagged in DRAM supercycle into the retail device market dram price report.

• HBM priority and stockpiling: The report notes that data‑center operators are locking in HBM capacity via long‑term contracts, while device OEMs and distributors react by stockpiling commodity DRAM—turning normal purchasing cycles into panic buying and further driving up spot and contract prices dram price report.

• Price jumps already visible: Samsung is reportedly raising some memory‑chip prices by as much as 60%, and analysts expect 50%+ quarter‑to‑quarter DRAM price jumps into late‑2025 and 2026, making it hard for PC and phone makers to hide the impact through minor design tweaks or margin cuts dram price report.

• Consumer impact window: The article argues that since new fabs take 2–3 years to come online and much of that new capacity is effectively pre‑sold to AI clouds, the pricing of mainstream devices will mostly track how memory makers allocate output between HBM and lower‑end DRAM until at least Q4‑2027 dram price report.

For AI builders this links cluster economics back to end‑user hardware: every incremental HBM stack that goes into a GPU server is one less DRAM kit for PCs, with direct implications for how cheaply users can access local inference hardware.

Oracle tipped to use chip‑backed SPVs and off‑balance‑sheet debt to fund GPUs

Chip‑backed SPVs (Oracle): A new prediction from The Information argues that Oracle will likely finance its next wave of AI data centers by issuing chip‑backed debt through special‑purpose vehicles (SPVs), with GPUs as collateral, rather than loading the borrowing directly onto its main balance sheet oracle debt prediction.

• Structure described: In the suggested structure, an SPV would own a pool of Nvidia GPUs and related data‑center assets and take on loans secured by those chips; Oracle would then lease capacity from the SPV, so in a default scenario lenders would seize the GPU‑rich facilities rather than broader Oracle assets oracle debt prediction.

• Reason for indirection: The article notes that Oracle has already committed to building huge data centers for OpenAI but lacks the cash to buy all the GPUs upfront; SPVs allow it to raise funds against existing chip inventories to buy more, while keeping leverage metrics and credit ratings healthier by keeping much of the debt "off balance sheet" oracle debt prediction.

• Broader trend: The piece frames this as part of a wider move where big tech firms like Meta and xAI are experimenting with alternative financing structures so they can sustain massive AI capex without alarming equity markets with headline debt loads oracle debt prediction.

If this forecast bears out, GPU bundles could increasingly sit inside securitized vehicles much like aircraft or real estate, changing both how AI capacity is financed and how liquid those assets become in credit markets.

Baidu’s Kunlunxin files for Hong Kong IPO to fund domestic AI accelerator stack

Kunlunxin IPO (Baidu): Baidu’s AI chip unit Kunlunxin has confidentially filed for a Hong Kong IPO, with Baidu expected to retain about 59% ownership after listing and a recent fundraising valuing the unit around $3B, according to coverage shared today kunlunxin ipo story.

• Inference‑focused strategy: The thread notes that Kunlunxin was originally built to power Baidu’s own search, cloud, and model workloads, and that the IPO is meant to fund scaling wafer supply, qualifying systems with local server partners, and deepening the software stack so Chinese customers can run training and especially inference at scale without relying on Nvidia hardware that is vulnerable to US export controls kunlunxin ipo story.

• Capex offload: As a separately listed entity, Kunlunxin will be able to raise capital directly and be valued as a semiconductor company, while Baidu can lease data‑center capacity and chip services from it, keeping some capex off its main balance sheet but retaining strategic control kunlunxin ipo story.

The move fits a broader pattern of Chinese platforms trying to build domestic AI accelerators and supply chains that are less exposed to Western licensing risk while still addressing surging inference demand.

Vantage, Oracle and OpenAI push ahead with a $15B+ AI data center campus

Vantage campus (Oracle/OpenAI): New footage highlights construction of a multi‑site Vantage Data Centers campus that Oracle and OpenAI are partnering on, framed as a $15B+ infrastructure investment to host large AI workloads and future expansions vantage campus note.

• Physical footprint: The video walk‑through shows a sprawling campus layout with multiple large halls under development and labels it a "Vantage Data Center Campus", tying the spend directly to AI data‑center real estate rather than generic cloud build‑out vantage campus note.

• AI‑specific context: The post argues that AI is becoming a "very physical problem" requiring land, permits, power, cooling and long build cycles, and presents this Oracle–OpenAI–Vantage project as one of many such mega‑sites now being funded to secure compute supply into the late 2020s vantage campus note.

The scale and framing underline that hyperscale AI partnerships are increasingly materialised as multi‑billion‑dollar dedicated campuses rather than incremental additions to existing cloud regions.

xAI buys third ‘Macrohardrr’ site near Memphis as compute nears 2 GW

Macrohardrr campus (xAI): Elon Musk’s xAI has reportedly purchased another large building in Southaven, Mississippi, to host a third supersized data center—nicknamed “Macrohardrr”—adjacent to its existing "Colossus 2" facility near Memphis, pushing its total planned compute capacity toward ~2 GW xai macrohardrr post, following up on xAI campus.

• Clustered build‑out: A map in the coverage shows multiple xAI logos clustered around the Memphis metro area, with the new Southaven site positioned close to prior acquisitions, suggesting a contiguous mega‑campus rather than scattered single sites xai macrohardrr post.

• Timeline and intent: The report states that construction is slated to begin in 2026, with the new facility intended to complement existing sites such as Colossus 2 in Memphis and earlier purchases, all dedicated to training and serving xAI’s Grok models xai macrohardrr post.

This move reinforces that xAI is financing its own concentrated compute region rather than relying solely on third‑party clouds, and that its GPU roadmap is tied to a specific physical geography.

Morgan Stanley forecasts inference AI chips to reach 80% of cloud AI spend by 2030

Inference vs training (Morgan Stanley): A Morgan Stanley chart circulating today shows analysts expect AI inference chips to account for roughly 80% of cloud AI semiconductor spending by 2030, up from a minority share in 2021, arguing that inference demand will eventually exceed training as AI apps scale morganstanley chart.

• Spending mix: The bar chart tracks training vs inference chip spend from 2021 through 2030e in US dollars, with the tan "Inference" segment growing to four‑fifths of the total by the end of the forecast window, while the blue "Training" segment shrinks to about 20% morganstanley chart.

• Rationale: The commentary notes that once models are trained, the cost center shifts to serving "an endless stream of user requests all day long", making metrics like cost per output, throughput, memory capacity, and latency the deciding factors in hardware choice and opening room for non‑Nvidia players focused on cheaper, efficient inference chips morganstanley chart.

For infra planners this frames the medium‑term risk that capex and vendor lock‑in optimized for training may not match where most AI chip dollars are headed within a decade.

📚 Long‑context via Recursive Language Models (RLM)

Multiple posts on RLM now on arXiv and follow‑ups: programmatic self‑calls treat the prompt as external data, sustaining quality beyond 1M+ tokens. New today are concrete graphs, helper‑agent flows and open‑model results.

Recursive Language Models show stable performance past 10M tokens

Recursive Language Models (MIT): Follow‑up analysis of the RLM paper, building on the initial launch that introduced prompts as external data, highlights that GPT‑5‑based RLMs keep accuracy steady on tasks even when inputs exceed 10 million tokens by fetching snippets from a Python REPL instead of relying on the model’s native context window rlm explanation; the core idea is that the LLM repeatedly queries a separate text store, runs small sub‑calls over retrieved spans, and composes answers, avoiding the steep quality drop seen when vanilla GPT‑5 is pushed past ~272K tokens rlm explanation.

So what changes is that context size is no longer the hard bottleneck; the graphs in the new thread show GPT‑5 performance collapsing toward zero on long‑context benchmarks while the corresponding RLM curves remain roughly flat across log‑spaced input sizes up to 1M+ tokens, confirming that prompt‑as‑data plus programmatic self‑calls can turn a fixed‑window model into something that behaves like it has effectively unbounded context rlm explanation.

Qwen3‑Coder‑480B works well as an open Recursive Language Model

Qwen3‑Coder‑480B (Alibaba Qwen): New results shared by the RLM authors underline that open‑weight Qwen3‑Coder‑480B already works "extremely well" as an RLM, with the RLM‑style prompting substantially outperforming both the base model and summary‑agent baselines on long‑context benchmarks like CodeQA, BrowseComp+, OOLONG and OOOLONG‑Pairs open model remark; in some settings, the Qwen3 RLM without sub‑calls even beats the version that uses sub‑calls, and it closes much of the gap to GPT‑5 run under the same RLM framework in the comparison table open model remark.

The point is that practitioners now have at least one widely‑available open model that behaves competitively under RLM inference, which makes it easier to experiment with 100K–1M+ token workflows—such as codebase question‑answering and web‑scale document analysis—without depending entirely on closed GPT‑5 tiers open model remark.

Prime Intellect’s RLMEnv wraps Recursive Language Models with helper agents

RLMEnv and helper agents (Prime Intellect): A community write‑up describes the Prime Intellect implementation of RLMs, which extends the original MIT design initial launch with a small Python environment (RLMEnv), helper sub‑LLMs and verifiers so that a main model like GPT‑5‑mini can orchestrate web search, math solving, and exact copy tasks over very long inputs while gaining around 10% accuracy compared with plain prompting rlm env summary; the system treats prompts as objects, lets the top‑level model spawn specialized helpers, and uses a public verifiers repo plus an RLMEnv training harness so others can reproduce the flows rlm env summary.

Instead of thinking of RLM as a single clever prompt, this implementation reframes it as an inference‑time stack—outer planner, code workspace, helper models, and verification tools—that together make it practical to apply Recursive Language Models to real multi‑step tasks rather than only to controlled academic benchmarks rlm env summary.

🧠 Reasoning training: RLVR PEFT, formal loops, adaptive compute

Mostly training science: PEFT choices for RLVR, formal‑verification self‑play, and zero‑overhead introspection. New today are side‑by‑side PEFT results on R1‑distill and practical compute allocation signals.

ZIP-RC introspection predicts success and remaining work to adapt test-time compute

ZIP-RC adaptive compute (multi): The Zero‑Overhead Introspective Prediction for Reward and Cost (ZIP‑RC) method trains an LLM head to predict both answer correctness and remaining generation length directly from unused logits, letting a controller allocate retries and early stops and boosting accuracy on mixed‑difficulty math sets by up to 12 percentage points at similar or lower token cost according to the ziprc summary and the arxiv paper.

• No extra forward passes: Because ZIP‑RC reuses logits from the main decode, it adds almost no wall‑clock overhead compared with standard majority‑vote ensembles, while enabling per‑sample budgets such as giving hard problems more attempts and cutting off easy ones early, which the authors show leads to better accuracy–compute trade‑offs on several math benchmarks in the arxiv paper.

Bayesian Geometry of Attention shows tiny transformers doing near-exact Bayesian updates

Bayesian attention geometry (Dream Sports/Columbia): Experiments in controlled "Bayesian wind tunnels"—bijection elimination and HMM state tracking—show a 2.7M‑parameter transformer can match true Bayesian posteriors within 10⁻³–10⁻⁴ bits of entropy, while a capacity‑matched MLP fails by orders of magnitude, supporting the view that attention implements content‑addressable Bayesian updates in low‑dimensional value manifolds as detailed in the bayesian paper summary.

• Mechanistic picture: The authors find that residual streams act as the belief substrate, feed‑forward layers perform the posterior update, and attention layers build orthogonal key bases and sharpen query–key alignment over layers, with a learned value manifold parameterized by predictive entropy, offering a concrete geometric story for in‑context reasoning behavior in larger LLMs according to the bayesian paper summary.

PEFT choices for RLVR: DoRA-style adapters beat vanilla LoRA on DeepSeek-R1

RLVR PEFT methods (multi): A new evaluation of 12+ parameter‑efficient fine‑tuning schemes on DeepSeek‑R1‑Distill 1.5B and 7B finds that structural LoRA variants like DoRA, AdaLoRA and MiSS consistently outperform vanilla LoRA and often even full‑parameter fine‑tuning under reinforcement learning with verifiable rewards, while extreme compression harms reasoning quality according to the peft paper summary.

• Practical takeaway: The authors report that PEFT methods which modify LoRA’s decomposition and gating achieve the best pass@1 on math‑style RLVR tasks, whereas SVD‑initialized adapters and very low‑rank setups tend to destabilize training or underfit, so RLVR practitioners get a concrete menu of adapter types and rank budgets that appear safe on R1‑style reasoning models as summarized in the peft paper summary.

Propose–Solve–Verify loop uses formal proofs to boost verified Rust pass@1 by up to 9.6×

Propose–Solve–Verify (multi): A formal‑verification self‑play loop called PSV‑VERUS has a proposer model generate new specs, a solver produce code plus proofs, and a Verus verifier accept only fully checked solutions, yielding up to 9.6× higher pass@1 than baselines like AlphaVerus or pure RFT on verified Rust benchmarks as shown in the psv paper summary.

• Formal RL loop: Because only proof‑checked trajectories are added back into training, the system automatically adjusts spec difficulty and keeps the reward signal perfectly sharp, which the authors argue is critical for reasoning‑heavy domains where unit tests miss subtle bugs, according to the Dafny2Verus and MBPP results in the psv paper summary.

Deep Delta Learning generalizes residual connections with learnable erase/flip gates

Deep Delta Learning (Princeton/UCLA): A new Deep Delta Learning framework replaces plain ResNet shortcuts with gated "delta" operations that can not only add features but also shrink, erase or flip them along learned directions, aiming to keep residual training stability while representing back‑and‑forth state changes more cleanly, as outlined in the deep delta summary and the linked PDF.

• State reversibility: The authors derive how the gate can smoothly interpolate between identity, deletion and sign‑flip of specific feature directions, arguing that this lets very deep networks model reversible or oscillatory dynamics without clogging the residual stream with obsolete activations, which has been a long‑standing limitation of standard additive shortcuts according to the deep delta summary.

🧪 Open coding & translation models gain traction

Open models dominate chatter: MiniMax M2.1 trending and priced for developers, plus Tencent’s dual‑model MT system. Excludes Grok Obsidian eval chatter, which is covered under evals.

MiniMax M2.1 posts strong open-weight coding scores and fewer hallucinations

MiniMax M2.1 (MiniMax): Artificial Analysis rates the open‑weights coding model at 64 on its Intelligence Index, calling it the fifth most capable open model with 230B total and 10B active parameters, and reports a GDPval‑AA ELO of 1124 vs 1068 for MiniMax M2—about a 58% win rate in head‑to‑head knowledge‑work tasks analysis thread.

• Reasoning and tool use: The report highlights strong reasoning and agentic tool use at a relatively modest active size, putting M2.1 in what it calls the "most attractive" quadrant for intelligence vs parameters analysis thread.

• Hallucination behaviour: On the AA‑Omniscience hallucination eval, M2.1 improves its score from −50 to −30, mainly by abstaining on about one‑third of questions it can’t answer while keeping its answer rate near 22%, a pattern the authors say brings it close to Grok 4’s hallucination rate hallucination summary.

• Open weights and hosting: The weights are published on Hugging Face with recommended inference stacks like vLLM and others, and Artificial Analysis notes the eval run consumed about 90M output tokens at a dollar cost of $128, similar to DeepSeek V3.2 and far below Kimi K2 Thinking analysis thread.

The picture for engineers is that M2.1 now looks like a serious open candidate for coding agents and general knowledge work when cost, hallucination behaviour and deployability all matter—not only raw benchmark scores.

MiniMax M2.1 Coding Plan offers low-cost agentic access from $2

MiniMax Coding Plan (MiniMax): Developers highlight the new MiniMax Coding Plan priced at $2 for the first month and then $10/$20/$50 per month across Starter, Plus and Max tiers, each capped at 100/300/1000 prompts per 5‑hour window respectively for M2.1‑backed coding agents pricing reaction and detailed in the coding plan page.

• Targeted workloads: The plan copy emphasizes mobile‑friendly and multilingual coding support, with MiniMax pushing M2.1 as a default for agentic workflows like repo analysis and feature implementation rather than one‑off completions coding plan page.

• Cost position vs peers: At $0.30 per million input tokens and $1.20 per million output tokens on the first‑party API, Artificial Analysis notes that M2.1’s evaluation run cost $128 for ~90M output tokens, framing the Coding Plan tiers as an aggressive way to get predictable spend on top of that baseline analysis thread.

For teams already experimenting with open models, this pricing structure turns M2.1 into an obvious candidate when they want a predictable, prompt‑metered coding agent without managing raw token accounting themselves.

MiniMax M2.1 tops Hugging Face trending with 171k downloads

MiniMax M2.1 adoption (MiniMax): MiniMax reports that its M2.1 open model has become the #1 trending model on Hugging Face, with about 171k downloads and 786 likes, sitting alongside GLM‑4.7 and Qwen image models in the trending list huggingface snapshot.

• Model positioning: The listing shows MiniMax‑M2.1 as a 229B‑parameter text‑generation model with 10B active parameters, clearly labeled as the flagship of the MiniMax AI organization on the platform huggingface snapshot.

• Ecosystem traction: This download velocity lands on top of the strong eval story from Artificial Analysis analysis thread, signalling that self‑hosting and third‑party inference providers are already experimenting with M2.1 for real coding and agent workloads rather than treating it as a purely academic release.

For open‑model users, this is an early data point that M2.1 is not only scoring well in benchmarks but is also getting pulled into the broader tooling and hosting ecosystem at scale.

Tencent’s HY‑MT1.5 pairs 1.8B on-device and 7B cloud translation models

HY‑MT1.5 translation (Tencent): Tencent’s HY‑MT1.5 release combines a 1.8B‑parameter on‑device model (about 1 GB RAM, ~0.18s latency for 50 tokens) with a stronger 7B cloud model, together covering 33+ languages and 5 Chinese dialects while preserving document formatting in translation workflows mt overview.

• On‑device vs cloud trade‑offs: A latency/quality chart shared in the thread shows HY‑MT1.5‑1.8B clustering close to domestic and foreign commercial APIs on quality while beating them on response time, and the 7B model topping all listed commercial APIs on FLORES‑200 scores mt overview.

• Production readiness: The team stresses support for format‑preserving document translation and terminology customization, positioning HY‑MT1.5 as a production MT stack that can run locally on consumer hardware or in Tencent’s cloud, with the two tiers covering different latency and quality needs mt overview.

For engineers who need controllable, privacy‑sensitive MT, this dual‑model design shows how a single architecture can span on‑device usage and high‑end cloud quality while staying competitive with commercial APIs on both speed and accuracy.

🧩 Agent runtimes: Interactions API, MCP, skills & web agents

Runtime/connectivity updates rather than coding workflows. Excludes the Claude Code feature. Focus on Interactions API, Firecrawl’s /agent, and curated skills installs.

Anthropic’s Agent Skills emerge as a portable packaging layer for tools and context

Agent Skills (Anthropic): Anthropic’s Agent Skills format is increasingly used as a portable package for instructions, knowledge files and executable scripts that agents can auto‑activate when relevant, with the company positioning it as a way to equip agents for real‑world work in the skills announcement and the detailed skills docs. Users can now download a skill defined in Claude Desktop, save it as a zip, extract it, and drop the folder into ~/.claude/skills (user scope) or .claude/skills (repo scope) so the same skill becomes available in Claude Code without re‑authoring it skill reuse.

• Model‑agnostic usage: Skills are not hard‑wired to Anthropic models—one example shows a lease‑review skill being invoked while the session runs on GLM‑4.7 inside Claude Code, with the model using the Skill’s guidance and scripts to analyze a Philippine lease and output a structured concern summary glm skills demo.

• Conceptual position vs Custom GPTs: Community discussion frames Skills as Claude’s answer to Custom GPTs—file‑based, git‑friendly packages that load instructions, knowledge and tools on demand instead of opaque hosted configs, with diagrams comparing them to GPTs’ instruction blocks and per‑user storage in the skills vs custom gpts. Together these signals suggest Skills are becoming a de facto agent capability layer spanning desktop, CLI and even non‑Anthropic backends, rather than a Claude‑only feature.

Google’s Interactions API becomes a central agent runtime for Gemini

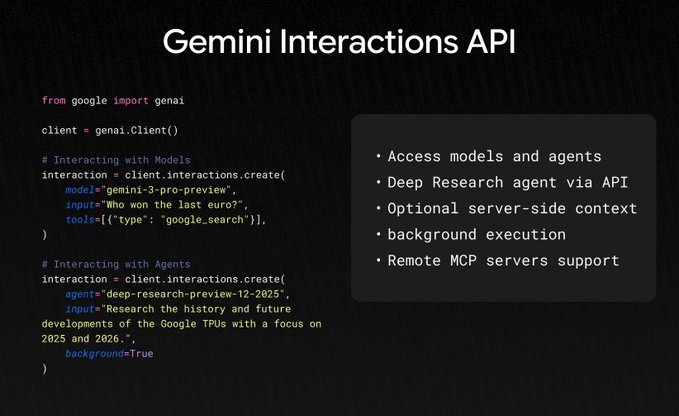

Interactions API (Google DeepMind): Google’s Interactions API is now in public beta as a unified runtime for Gemini models and agents such as Deep Research, exposing one interface for text, tools, MCP and background runs according to the api overview and the accompanying Google blog post; it already supports optional server-side state, the Remote Model Context Protocol (MCP), and background execution as called out in the developer docs. The roadmap includes combining function calling, MCP and built‑in tools like Google Search, File Search and a Bash tool within a single interaction, plus multimodal function calling and fine‑grained argument streaming in 2026, framing this as the main locus for Gemini’s agentic features going forward api overview. Following up on the AG-UI layer framing of an emerging agent application layer, this pushes Google’s stack closer to a first‑class, hosted agent runtime rather than ad‑hoc tool wiring inside chat UIs.

Firecrawl /agent adds structured screenshot capture to its web agent API

/agent runtime (Firecrawl): Firecrawl expanded its /agent endpoint so agents can now request "get a screenshot" alongside structured data extraction, avoiding custom CSS selectors or manual browser scripting as demonstrated in the feature demo; the agent orchestrator handles navigation, scraping and screenshot capture in one call, returning both the data and an image artifact to downstream tools.

• Playground and workflow: A dedicated /agent playground lets teams prototype flows where a single prompt yields both scraped JSON and page screenshots, which can be useful for visual regression checks or human review agent playground.

• Building on earlier scrape API: This builds on Firecrawl’s earlier /scrape upgrade to richer multi‑format outputs multi-format scrape, extending the stack from one‑shot scraping toward a more general web agent runtime with actions beyond text-only responses.

Codex skills formalize file-based tools invoked with $ in CLI and VS Code

Codex skills (OpenAI): OpenAI’s Codex CLI and VS Code extension now support explicit skill invocation by typing $ and then autocompleting a skill name, letting the agent call user‑defined capabilities stored under ~/.codex/skills rather than relying solely on inline prompts, as outlined in the skill invocation note. Under the hood, each skill is a file‑based package that can run scripts or workflows, which one engineer likens to "training your own pokémon" because you curate and evolve a personal toolset that Codex can deploy on demand pokémon analogy.

• Runtime surfaces: Skills work consistently in both the terminal and the VS Code extension ($ npm install -g @openai/codex; $ codex), meaning the same skill definitions can be reused across interactive shells and editor sessions without separate configuration cli usage. This positions Codex’s skills system as a parallel to Claude’s Agent Skills, but wired into OpenAI’s own agent runtime.

Community N Skills Marketplace curates reusable Claude Skills for real work

N Skills Marketplace (community): Developer Numman Ali launched the "N Skills Marketplace", a public GitHub repository that curates practical Agent Skills like dev-browser, a Gas Town orchestration helper, and a zai-cli reader, with install instructions that plug directly into Claude Code’s plugin system using /plugin marketplace add numman-ali/n-skills and /plugin install commands, as described in the marketplace intro and the skills repo.

• Gas Town orchestration skill: One highlighted skill wraps Steve Yegge’s Gas Town IDE concepts into a guided workflow, so an agent can walk a user through understanding and operating Gas Town without manually reading the long Medium post, with the author explicitly asking Yegge for feedback on the implementation gastown skill.

• Open, apply-to-join model: The marketplace is explicitly positioned as a public, curated list where other builders can apply to have their Skills listed, aiming to gather a small set of high‑leverage capabilities that "actually allow work to get done" rather than a long, unvetted catalog marketplace intro. This pushes Skills toward a more ecosystem‑like distribution model, closer to a lightweight app store for agent capabilities.

Hyperbrowser previews HyperPages as browser infra for research agents

HyperPages and Hyperbrowser (Hyperbrowser): Hyperbrowser introduced HyperPages, a research‑page builder that acts as a browser infrastructure layer for AI agents—given a topic, it browses the web, pulls sources, drafts sectioned articles with images, and exposes an interactive editing surface, all powered by the Hyperbrowser API according to the hyperpages teaser and the product site. Developers can request an API key for Hyperbrowser so their own agents can drive the same browsing and synthesis pipeline programmatically rather than through the hosted UI api key note.

• Agent-centric design: The marketing frames Hyperbrowser explicitly as "browser infra for AI agents", emphasizing that the stack handles navigation, extraction and formatting so agents can focus on higher‑level planning and writing, which aligns it with other emerging web‑agent runtimes rather than traditional scraping libraries product site. This makes HyperPages an early example of a vertical app (research writing) built directly on top of an agent‑oriented browser API.

zai-cli emerges as a CLI web reader and Claude Skill for blocked content

zai-cli web reader (community/Z.AI): The zai-cli tool appeared as a simple command‑line interface that uses Z.AI’s stack to read and summarize web pages, with a demo showing it successfully ingesting Steve Yegge’s Gas Town Medium article via npx zai-cli read "<url>" while Claude Code’s built‑in browser hit a 403 on the same URL, as reported in the zai-cli demo.

• Agent‑friendly outputs: The tool prints structured JSON containing title, description, URL and cleaned article text, making it straightforward to feed into downstream agents or RAG pipelines, and its repository documents additional commands for vision, search and GitHub exploration in the GitHub repo.

• Skill packaging: zai-cli is also one of the day‑one entries in the N Skills Marketplace, meaning Claude agents can call it as a Skill instead of shelling out manually, which illustrates how external CLIs are being wrapped into Skills to work around site‑specific access issues marketplace intro.

📈 Evals & methodology: style control, lightweight runs, convergence

Today’s evals lean methodology and practical runs: Arena’s style‑control, GLM‑4.7 on 115GB VRAM, Arena image model additions, plus “new benchmarks” calls. Excludes Claude Code which is the feature.

GLM‑4.7‑REAP‑40p W4A16 boots on ~115 GB VRAM, enabling full local evals

GLM‑4.7‑REAP‑40p W4A16 (0xSero / Zhipu): A pruned and 4‑bit quantized Mixture‑of‑Experts variant of GLM‑4.7 with 218 B total parameters and 32 B active has been brought up on a single machine with roughly 108–115 GB of VRAM, showing that near‑frontier open models can be run locally for end‑to‑end benchmarks rather than only via cloud APIs, according to the model announcement and the quantized model card.

• Lightweight run profile: The REAP‑40p pipeline prunes router‑weighted experts to shrink disk size to about 108 GB in W4A16 while keeping 32 B active parameters per token, which is small enough for high‑end single‑node setups but still large enough to be interesting for research‑grade evals, as detailed in the full model card.

• Eval implications: Community testers note they still "need to benchmark" this setup first boot note, but the ability to load it at all lets independent groups reproduce code‑ and reasoning‑heavy test suites on their own hardware rather than trusting vendor‑reported scores.

LMArena debuts Style Control leaderboard to separate flair from ability

Style control leaderboard (LMArena): LMArena introduced a Style Control Leaderboard that tries to strip out presentation effects—answer length, markdown usage, friendliness—from head‑to‑head model comparisons so votes reflect underlying capability rather than polish, as described in the style discussion and elaborated in the eval blog.

• Methodology angle: The leaderboard reweights or constrains stylistic dimensions (e.g., token count, headers, list density) before scoring, aiming to counter models that win by sounding confident rather than solving the task—this responds directly to concerns that some systems "are optimized for longer, friendlier responses" that sway annotators without real gains, as noted in the style discussion.

• Why it matters: For engineers and analysts relying on community battles to choose models, this is an attempt to tighten the link between Arena Elo and real task performance, especially as style‑tuned smaller models proliferate.

Grok 4.20 “Obsidian” gets early community evals on DesignArena

Grok 4.20 “Obsidian” early evals (xAI): A new Grok variant nicknamed Obsidian—likely Grok 4.20—is being exercised on DesignArena, with early users reporting it produces about three times as much front‑end code per prompt for web design tasks than Grok 4.1 and feels noticeably less "lazy", while still lagging Opus and Gemini on quality by their judgment, as described in the designarena notes and obsidian review.

• Behavior profile: One tester notes Obsidian "generated a lot of code, like super verbose and detailed" and calls it "better than last gen in webdev but still behind opus and gemini" obsidian review, highlighting a trade‑off where increased effort does not always mean better structure.

• Methodology angle: These are small‑sample, task‑specific impressions rather than formal benchmarks, but they illustrate how community arenas and personal "private benchmarks" are being used to probe new frontier releases before any standardized eval suite is available.

Arena adds Qwen‑Image‑2512 and Edit‑2511 for image model head‑to‑heads

Qwen‑Image models in Arena (LMArena / Alibaba Qwen): LMArena added Alibaba’s Qwen‑Image‑2512 text‑to‑image model and the Qwen‑Image‑Edit‑2511 editor to its image battle pool, enabling side‑by‑side community evaluations of realism, text rendering and fine‑grained edits against other top generators, as announced in the arena update.

• Evaluation scope: The new entries let annotators compare Qwen‑Image‑2512’s improved natural textures and layout‑aware text rendering, and test Qwen‑Image‑Edit‑2511 on tightly localized edits like removing people or restyling backgrounds without collateral damage, building on results users have shared elsewhere for these models.

• Why this is useful: For practitioners who care about concrete behaviors (e.g., infographics legibility or background‑preserving object removal), this expands the public, preference‑based benchmark surface beyond generic aesthetic scores.

Beauty‑contest experiment finds LLMs mispredict real human play

Beauty‑contest rationality gap (multiple LLMs): An experimental economics study on the p‑beauty‑contest game reports that state‑of‑the‑art chatbots tend to model humans as more strategically rational than they really are—aiming near game‑theory equilibrium while actual players cluster around much higher, less‑iterated guesses—showing a miscalibration in human behavior modeling, as summarized in the beauty-contest summary.

• Eval design: The authors pit humans and several LLMs against each other in the 0–100 "guess half the average" game and analyze how many levels of reasoning each exhibits, finding that the models frequently perform deeper "reasoning about others" than the human baseline implied by the experimental data.

• Why it matters: This points to a methodological gap for agent evals—optimizing LLMs for internal coherence and equilibrium play does not guarantee accurate forecasts of real human actions when those humans are noisy, bounded and sometimes non‑strategic.

Pangram touted as rare AI detector with sub‑0.5% error rates

Pangram detector accuracy claims (Pangram): In a discussion of AI‑generated text detection, Pangram was highlighted as one of the few detectors backed by an independent evaluation claiming both false‑positive and false‑negative rates under 0.5 %, contrasting with popular tools that misclassify well‑known human texts as AI‑written, according to the detector comment.

• Methodology note: The commenter points out that many detectors flag documents like constitutions or classic literature as machine‑generated, while Pangram has not (anecdotally) exhibited these obvious errors in their experience, suggesting a stricter calibration.

• Caveats: These numbers come from a single cited independent study and anecdotal usage rather than a broad public benchmark suite, so further third‑party testing would be required before treating Pangram’s error rates as a general standard.

💼 Capital & enterprise moves: AI21, Kunlunxin, Kimi, Grove

M&A chatter and capital flows that shape the AI vendor map. Mostly concrete deal rumors and filings; also a founder program opening. Excludes infra capex which is grouped under infrastructure.

Nvidia in advanced talks to acquire AI21 Labs for $2–3B

AI21 acquisition talks (Nvidia): Nvidia is reportedly in advanced negotiations to buy Israel‑based AI21 Labs for $2–3 billion, with coverage stressing that the move is driven mainly by access to the company’s roughly 200‑person research and engineering team rather than by its current SaaS footprint, according to the deal report; AI21’s founders, including Amnon Shashua, are said to be shifting attention to a new venture called AAI focused on reasoning‑heavy “thinking models,” which would leave Nvidia owning AI21’s platform and staff while the original leadership pursues a fresh thesis.

For AI leaders, this points to Nvidia deepening its vertical integration into model talent and IP rather than staying purely a hardware and tooling vendor, tightening the link between GPU supply and frontier model development.

Baidu’s Kunlunxin AI chip unit files confidentially for Hong Kong IPO

Kunlunxin IPO plans (Baidu): Baidu’s AI chip arm Kunlunxin has confidentially filed for a Hong Kong listing, with Baidu expected to retain about a 59% stake while spinning the unit into a separately financed vehicle aimed at serving both internal and external AI workloads ipo coverage; recent fundraising reportedly valued Kunlunxin around $3 billion, and the listing is framed as a way to fund domestic accelerator production, software stack investment, and partnerships with local server vendors as Chinese platforms hedge against Nvidia export controls and supply volatility

.

For infra‑minded engineers and analysts, this marks another major Chinese push to build a full inference stack—from chips to compilers—outside the US GPU ecosystem while still tightly coupled to a large consumer AI platform in Baidu search and cloud.

Kimi confirms $500M round, $4.3B valuation and >¥10B cash for K3

Kimi funding runway (Moonshot AI): Following up on Kimi funding, where Moonshot AI’s chatbot Kimi was reported to have raised about $500 million at a $4.3 billion valuation to scale its model line, a new LatePost piece adds that the company now holds over 10 billion yuan (~$1.4 billion) in cash reserves and that CEO Yang Zhilin is explicitly positioning an upcoming K3 model as a heavily scaled training run meant to rival global frontiers latepost writeup.

This combination of large cash buffer and stated K3 ambitions gives investors and competitors clearer evidence that Kimi intends to remain a long‑term, well‑capitalized Chinese player in the frontier‑model race rather than a short‑lived local entrant.

OpenAI opens next Grove cohort for pre‑idea technical founders

Grove founder program (OpenAI): OpenAI has opened applications for the next cohort of OpenAI Grove, a five‑week, in‑person technical program in San Francisco for very early‑stage founders that offers workshops, office hours with researchers, and early access to models and tools program announcement; the latest application window runs until late September 2025, with the cohort scheduled for Oct 20–Nov 21 and a time commitment of roughly 4–6 hours per week, according to the detailed program page.

For engineers and researchers considering spinning out AI startups, Grove effectively functions as a pre‑accelerator that trades equity‑free access to OpenAI staff and infrastructure for tighter pull into the OpenAI ecosystem.

Report predicts potential OpenAI acquisition of Pinterest in 2026

Pinterest acquisition rumor (OpenAI): A summary of reporting from The Information claims OpenAI is being predicted as a potential acquirer of Pinterest in 2026, framing the image‑heavy social platform as both a rich synthetic‑image corpus and a distribution channel already saturated with AI‑generated content m&a prediction; the commentary raises the question of whether such a deal would formalize the use of user‑uploaded and AI‑generated imagery as training data for future OpenAI vision models, in contrast to today’s more arm’s‑length data sourcing.

While highly speculative and lacking any official confirmation, the scenario reflects how analysts are starting to model full‑stack combinations of foundation model vendors with visually oriented consumer platforms as part of the next wave of AI‑driven consolidation.

🛡️ AI policy & integrity: China chatbot rules, EU pressure on TikTok AI

Safety/regulatory items directly impacting AI services. Mostly China’s draft rules for human‑like chat and Poland’s EU push on AI‑made political clips. No general ‘slop’ debates here.

China drafts strict ‘human-like’ chatbot rules with suicide escalation and MAU triggers

China chatbot rules (CAC): China’s Cyberspace Administration has drafted what could become the strictest rules worldwide for “human‑like interactive” AI chat, requiring a human operator to intervene and notify guardians whenever suicide or self‑harm is mentioned, and forcing minors and elderly users to register a guardian contact, according to the policy summary in China draft rules. Services with over 1 million registered users or 100k monthly actives face annual safety audits, design bans on addiction‑oriented mechanics and fake emotional bonding, mandatory pop‑up reminders after two hours of continuous use, and the risk of app‑store removal for non‑compliance, as detailed in the Ars Technica article.

The draft effectively ties product design, usage analytics, and moderation policies together, making large Chinese chatbot operators treat crisis escalation, age/guardian handling, and engagement mechanics as regulated safety features rather than pure UX choices.

Poland pushes EU to probe AI-made TikTok ‘Polexit’ clips under the DSA

Polexit TikTok case (Poland/EU): Poland’s digital affairs ministry is urging the European Commission to open a Digital Services Act case against TikTok over a surge of AI-generated “Polexit” videos, saying the clips targeted 15–25‑year‑olds and look like an organized influence campaign rather than organic user content, as reported in Polexit TikTok report. Officials cite linguistic patterns they say resemble Russian‑language syntax and highlight how synthetic “talking head” personas let operators rapidly iterate scripts and messaging at scale, pushing Brussels to treat this as an early enforcement test of how the DSA handles AI‑driven political manipulation on very large platforms, according to the Notes from Poland article.

For AI and platform teams, the case underscores that synthetic media, political targeting, and recommendation systems are now being evaluated jointly by regulators, with potential obligations framed around provenance, detection, and systemic risk rather than model internals alone.

🤖 Drones & humanoids move into operations

Embodied deployments dominate: record‑size drone swarms, border humanoids, high‑rise fire response, and hotel/casino cleaning. Today is heavy on fielded systems; little lab robotics.

China flies 15,947‑drone swarm and debuts AI “flying TV” displays

Drone swarms and flying displays (China): China showcased record‑scale AI swarm coordination with 15,947 drones flying in tight formation over Liuyang—reported as a Guinness World Record with zero human pilots and no collisions in the swarm record; a separate demo shows an AI‑stabilized “flying TV” LED panel, effectively turning the sky into a movable screen in the flying screen.

For engineers, these clips imply mature real‑time swarm control, latency‑tolerant links, and collision‑avoidance stacks that could generalize from entertainment to city‑scale systems like coordinated inspection, logistics, or emergency signaling rather than one‑off lab demos.

UBTech Walker S2 humanoids begin 24/7 duty at China–Vietnam border crossings

Walker S2 border deployment (UBTech): China has contracted UBTech’s Walker S2 humanoids to stand guard at real border crossings near Vietnam, where they help manage people flow, support inspections, and handle logistics around the clock as described in the border deployment and detailed in the border article; following up on tennis control, where Walker S2 was shown rallying balls in a lab, this marks a shift from controlled demos into continuous public‑space operations.

The deployment uses human‑sized bipedal robots with speech interaction and self‑swappable batteries (about a few minutes per swap) so they can stay online in harsh, remote posts while rotating packs, which is a concrete test of locomotion robustness, perception in crowded scenes, and human–robot interaction at scale.

High‑rise fire truck drone enters real use with 200m altitude and 45m spray reach

Firefighting drone truck (China): A new fire truck design integrates a roof‑launched quadcopter that can take off in under 60 seconds, climb up to 200 m, and spray water or foam about 45 m horizontally to reach windows and rooftops that ladder trucks cannot, according to the drone firetruck; building on drone truck, which first introduced the concept, the latest clip shows the system operating over real buildings rather than training grounds.

The configuration effectively decouples vertical reach from the chassis, so AI‑assisted flight control, hose dynamics, and targeting become central engineering problems, and it previews how cities might retrofit legacy fleets with aerial modules instead of redesigning entire trucks.

Viral machine‑gun drone clip highlights accelerating dual‑use trajectory

Weaponized quadcopters (unspecified): A circulating video shows a multirotor drone firing a belt‑fed machine gun while hovering, advertised bluntly as a "MACHINE GUN ON DRONE" in the weapon demo; commentary notes that even as firefighting and inspection drones spread, "their real benefit will be in the military" according to the same post.

The clip underlines how the same autonomy, stabilization, and payload tech enabling civilian fleets can be repurposed for armed platforms, which has direct implications for export controls, Geofencing, and how AI targeting or navigation modules are governed.

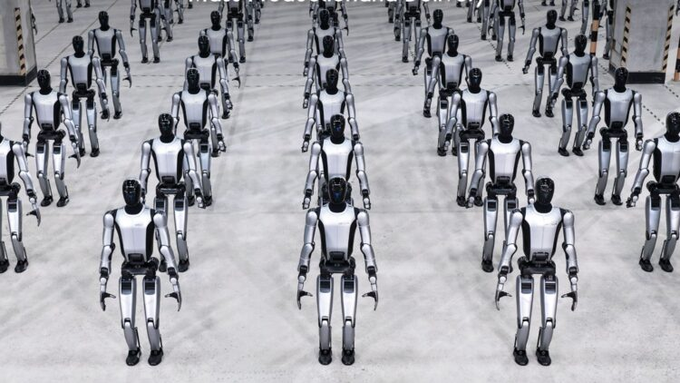

Analysts see humanoid robots as a $9T market by 2050 with China targeting majority share

Humanoid robotics race (China, global): A CNBC‑covered analysis pegs the global humanoid robot market at roughly $9 trillion by 2050, with China potentially capturing more than 60% as firms like Unitree, UBTech, AgiBot, and Xpeng already mass‑produce robots for logistics and service roles, while Tesla’s Optimus is still framed more as a future vision in the market forecast.

Chinese policy designates humanoids as a strategic technology, and the combination of state‑backed programs and aggressive private manufacturing suggests that deployments like Walker S2 at borders and “Terminator” police companions in Shenzhen are first steps toward much broader labor substitution in security, warehousing, and manufacturing.

MGM’s New York‑New York rolls out autonomous cleaners on Las Vegas casino floors

Service cleaning robots (MGM Resorts): MGM’s New York‑New York and other Strip properties are now running fleets of autonomous floor‑cleaning robots on live casino carpets, with footage showing a small white unit navigating around signage and doors at New York‑New York in the mgm cleaning bots; this extends the broader shift to service robots in airports, hotels, and casinos highlighted earlier in service robots.

These deployments stress‑test mapping and obstacle avoidance in cluttered, high‑traffic interiors with strict uptime and noise constraints, and they hint at a near‑term division of labor where robots handle overnight or off‑peak cleaning while human staff focus on guest‑facing tasks.

Robot influencer “Rizzbot” at center of $1M assault lawsuit after alleged attack by streamer

Rizzbot assault case (Social Robotics): A manufacturer of the humanoid robot influencer Rizzbot has filed a complaint seeking around $1 million in damages after streamer Ishowspeed allegedly hit and choked the robot during a filmed interaction, with the company claiming its head camera failed and walking ability degraded as described in the lawsuit summary; an Austin police report notes the damage occurred without the owner’s consent, and follow‑up posts say an investigation and early‑stage litigation are under way in the case update.

The case is one of the first high‑profile legal tests of how damage to embodied AI systems—especially those with their own online followings—will be treated in practice, including how courts value hardware, on‑board sensors, and the economic impact of taking a “robot creator” offline.

🎬 Creator & sim stacks: Photoshop FLUX.2, Qwen‑Edit, Runway GWM‑1

High volume of creative/media items today: Photoshop+FLUX.2 power‑tips, Qwen image edit wins, motion/video research, and loop workflows. Focuses on production techniques and world‑modeling; excludes robotics sims.

FLUX.2 Pro gets concrete Photoshop recipes and a Firefly unlimited window

FLUX.2 Pro (Adobe): Ambassador content walks through FLUX.2 Pro inside Photoshop for pose‑preserving subject replacement, box‑guided color changes, arbitrary aspect‑ratio expansion, and one‑shot golden‑hour relighting, with prompt wording examples that keep paws, limbs and angles intact flux2 tips; Adobe is also running an offer where Firefly Pro/Premium and high‑credit plans get unlimited generations on all image and Firefly Video models through January 15, which matters for teams planning heavy experimentation this month firefly promotion and firefly promo page.

Qwen-Image-Edit-2511 shows precise local edits and strong style control vs peers

Qwen-Image-2512 and Edit (Alibaba Qwen): Builders report that Qwen-Image-Edit-2511 can remove specific people from a frame using vague natural language like “remove the bald at the bottom” while leaving underground station lighting, perspective and surrounding detail almost untouched local head removal; another example has the model delete crowds from a Dumbo photo and restyle it into a “Once Upon a Time in America” look, where composition and objects stay coherent and Nano Banana Pro is described as having nicer style but worse object consistency and some hallucinations dumbo restyle.

Qwen-Image-2512 rank: Following earlier claims that Qwen-Image-2512 was at the top of open‑source image models Qwen image, Alibaba now highlights a fourth‑place overall finish and best open‑weights position on its internal Arena while exposing both generation and edit models directly in Qwen Chat and on Hugging Face and ModelScope qwen overview and qwen image page.

Runway’s GWM‑1 family turns Gen‑4.5 into an interactive world model stack

GWM‑1 (Runway): Runway is repositioning its Gen‑4.5 architecture as a family of action‑conditioned “General World Models” called GWM‑1, splitting into GWM‑Worlds for explorable environments, GWM‑Avatars for interactive characters, and GWM‑Robotics for robot‑focused synthetic data, all designed to learn dynamics from experience rather than static prompts runway summary and runway blog post; the models can take inputs like camera moves, character controls or robot commands alongside video, essentially acting as learned simulators rather than one‑shot generators.

Simulation focus: The announcement frames language‑only systems as insufficient for robotics, materials and climate work, arguing instead for agents that act in and learn from simulated worlds before deployment.

Dream2Flow and FlowBlending push structured, efficient video generation

Dream2Flow and FlowBlending (research): Two recent papers attack long, controllable video generation from complementary angles—Dream2Flow learns 3D object flow so a system can morph and move objects between states while respecting scene geometry, explicitly targeting open‑world manipulation rather than single‑clip eye candy dream2flow demo; FlowBlending instead treats the denoising process as stages and blends a small and large model over time, so early steps use a light model and later ones switch to a heavy model, reaching near‑large‑model quality at much lower PFLOP cost flowblending overview and flowblending paper.

Practical angle: The FlowBlending chart contrasts PFLOPs and output quality across prompts, while the Dream2Flow demo shows continuous shape morphing in 3D, giving practitioners concrete signals about when to favor better motion representation versus staged compute savings for production pipelines.

Nano Banana Pro and Kling 2.6 power two-prompt “impossible” motion loops

Nano Banana Pro + Kling (Freepik/Higgsfield): Creators say Nano Banana Pro combined with Kling 2.6 motion now produces clips realistic enough that “2026 reminder: assume everything online is fake” when a robotic arm animates a tiny banana‑shaped object in smooth 3D motion teaser; a new Higgsfield workflow shows how to create seamless “impossible transitions” between two images by cycling through an iterator, always using the previous clip’s end frame as the next start and closing the loop with a final shot that returns to the original frame, all driven by only two main prompts loop workflow and workflow link.

Workflow evolution: This setup builds on earlier five‑prompt Nano Banana + Kling O1 toy‑box recipes Nano Banana by cutting prompt count, leaning on a shot iterator, and keeping geometry and style tightly aligned across frames to support creator‑grade looping transitions.

SpaceTimePilot separates camera and time to re-stage scenes from one clip

SpaceTimePilot (Adobe/Cambridge): The SpaceTimePilot model takes a single input video and lets users independently adjust camera trajectories and timeline, so they can, for example, run slow motion at 0.5×, reverse motion, or hold a frozen 'bullet‑time' frame while the virtual camera orbits the subject, all while preserving multi‑view consistency spacetimepilot thread; the work comes with CamxTime, a synthetic dataset of around 180k samples that pair original clips with time‑remapped versions to train and evaluate these camera‑time controls spacetimepilot paper.

Why it matters: For editors and simulation teams, SpaceTimePilot points to a path where time editing, replay tools and novel‑view video can come from the same generative backbone rather than separate toolchains.

Creators share Nano Banana Pro thumbnail pipelines for YouTube-style content

Nano Banana Pro thumbnails (independent creators): New walkthroughs show end‑to‑end thumbnail pipelines built on Nano Banana Pro—creators first generate base characters and outfit variations, then assemble character sheets, composite multi‑character scenes, add stylized text and even generate animation‑ready keyframes using tools like Weavy and Nano Banana before sending stills into Kling O1 for motion if needed thumbnail workflow; another thread publishes 16 tuned prompts that compare 'cheap vs expensive' product shots or outrageous 'Nike burger' visuals, demonstrating how Nano Banana can be directed toward highly clickable YouTube covers prompt list.

Production relevance: These recipes, which follow an earlier Freepik Spaces 2‑image→16‑thumbnail workflow thumbnail space, give small teams concrete system prompts and staging steps they can reuse to industrialize thumbnail creation across series rather than hand‑design each asset.

JavisGPT proposes a unified LLM for sounding-video understanding and generation

JavisGPT (Tencent): The JavisGPT system introduces a unified multimodal LLM that jointly processes audio, video and text through an encoder–LLM–decoder stack, using a SyncFusion module and synchrony‑aware queries so an LLM can reason over 'sounding videos' and then drive a pretrained JAV‑DiT generator to produce coherent audio‑visual outputs javisgpt summary; its three‑stage training pipeline—multimodal pretraining, audio‑video finetuning and large‑scale instruction tuning—is backed by the JavisInst‑Omni dataset of over 200k curated audio‑video‑text dialogues javisgpt paper.

Who cares: This kind of architecture targets tasks like answering questions about noisy clips, generating matching soundscapes for silent footage, or editing narration and visuals together rather than as separate passes.

🗣️ Voice‑first devices & stacks: OpenAI’s ‘pen’ path

Light but notable device/stack chatter: screenless, pen‑style companion with handwriting capture and a more natural audio stack. Excludes yesterday’s broader audio consolidation; today adds manufacturing and codenames.

OpenAI consolidates audio teams as it readies a 2026 voice-first companion

Audio stack consolidation (OpenAI): OpenAI is reportedly merging its audio teams to build more natural, faster voice models and a screenless, audio‑first personal device targeted for 2026, following up on audio model which outlined a Q1 2026 speech revamp and a voice companion; this push frames voice as a primary interface rather than a side feature. Coverage notes goals around duplex‑style conversations, reduced turn‑taking latency and time‑to‑first‑byte, and a hardware form factor meant to curb screen addiction—with Jony Ive said to be shaping an elegant, fun object—in the audio push story; separate reporting points to a new audio generation model aiming for more realistic, responsive speech by roughly March 2026 in the audio model rumor, aligning the model roadmap with the leaked pen‑like “third device” so that a dedicated voice stack, microphones and on‑body hardware arrive as a coordinated package rather than separate experiments.

OpenAI’s Gumdrop pen device leak points to Foxconn build and 2026–27 launch

OpenAI pen device (OpenAI): OpenAI’s first hardware, codenamed Gumdrop, is now described as a pen‑shaped, screenless “third core device” that will be manufactured by Foxconn for a 2026–27 launch window, according to a translated Chinese report shared in the manufacturing leak. Leaks describe an iPod‑Shuffle‑sized gadget that can clip to clothing or hang on a neck strap, with a microphone and camera to sense the user’s surroundings and handwriting recognition that converts notes directly into ChatGPT sessions, extending earlier pen‑form‑factor rumors about an ultra‑portable assistant in the form factor thread; community discussion also fixates on naming jokes like “O Pen AI” in the naming joke and branding quip, signalling that expectations are now anchored on a pen‑style, always‑on companion rather than a phone‑like device.