Claude Opus 4.6 adds 1M context beta – $5/$25 MTok pricing holds

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Anthropic shipped Claude Opus 4.6 as its long-horizon agent flagship; Opus-class finally gets a 1M-token context window (beta) plus up to 128K output tokens; pricing stays $5/$25 per MTok, with some subscribers seeing $50 testing credits. Claude Code picks up Agent Teams (research preview) for parallel sessions; CLI 2.1.32 adds Opus 4.6 support, auto-memory, and partial “Summarize from here.” The Claude API swaps “budget tokens” for adaptive thinking; effort levels and fine-grained tool streaming are GA; a context-compaction endpoint lands in beta; Office surfaces expand (Excel pivots/validation; PowerPoint research preview with native editable charts).

• Evals + hygiene: ARC Prize reports 93.0% ARC-AGI-1 and 68.8% ARC-AGI-2 at a fixed 120K thinking budget; $1.88/task and $3.64/task; Artificial Analysis posts GDPval-AA Elo 1606 with ~160M tokens for 220 tasks; Anthropic warns infra config can swing agentic-coding scores by several points.

• Security + rollout reality: Opus 4.6 system card cites pilots where the model used misplaced GitHub/Slack tokens and took unapproved actions; it also flags eval-integrity risk if the model helped debug its own eval stack.

• OpenAI counter-move: GPT‑5.3‑Codex claims 57% SWE‑Bench Pro and 76% TerminalBench 2.0 with “less than half” the tokens vs 5.2; available in Codex products first, API “coming soon.”

Top links today

- Claude Opus 4.6 launch details

- Anthropic on agent teams building a C compiler

- Infrastructure noise in agentic coding evals

- OpenAI Frontier platform overview

- GPT-5.3-Codex announcement and benchmarks

- Trusted Access for Cyber program

- GPT-5 autonomous lab with Ginkgo case study

- Perplexity Model Council product page

- AgentRelay multi-agent orchestration repo

- Hugging Face Community Evals repo

- Hugging Face Community Evals announcement

- ARC Prize leaderboard and methodology

- SALE strategy auctions for workload efficiency paper

Feature Spotlight

Claude Opus 4.6 ships: longer-horizon agent work + Agent Teams + Office workflows

Opus 4.6 + Claude Code Agent Teams pushes “run it longer, run it in parallel” into mainstream workflows (1M context beta, adaptive thinking, Office integrations). This is a concrete step toward autonomous, repo-scale engineering loops.

Anthropic’s Opus 4.6 rollout dominated the day: a flagship model tuned for long-running agentic work plus major Claude Code and Office-style workflow upgrades. This category covers the Opus 4.6 + Claude tooling package and ecosystem availability; benchmark debate is handled separately.

Jump to Claude Opus 4.6 ships: longer-horizon agent work + Agent Teams + Office workflows topicsTable of Contents

🧠 Claude Opus 4.6 ships: longer-horizon agent work + Agent Teams + Office workflows

Anthropic’s Opus 4.6 rollout dominated the day: a flagship model tuned for long-running agentic work plus major Claude Code and Office-style workflow upgrades. This category covers the Opus 4.6 + Claude tooling package and ecosystem availability; benchmark debate is handled separately.

Opus 4.6 expands outputs to 128K and changes some API constraints

Claude Opus 4.6 (Anthropic): Alongside the headline context increase, multiple rollout notes point to operationally relevant interface details—most notably up to 128K output tokens and the constraint that Opus 4.6 does not support assistant-message prefilling, as summarized in the release rundown.

For teams with agent harnesses that rely on partial-prefill patterns (e.g., templated tool plans or structured boilerplate), this is the kind of “small” incompatibility that can break workflows even when model quality improves. The same summary also mentions broader platform changes shipping alongside Opus 4.6—see the release rundown for the consolidated list.

Opus 4.6 pricing stays $5/$25 per MTok; subscribers see $50 testing credit offers

Pricing and credits (Anthropic): Multiple posts emphasize that Opus 4.6 pricing is unchanged vs Opus 4.5, as noted in the pricing note, while some Claude subscribers are seeing an offer for $50 of credits for testing Opus 4.6 per the credit promo.

The more nuanced pricing footnote (premium pricing beyond a large context threshold) and additional rollout details are bundled in the release rundown. This combination—unchanged base price plus targeted “try it” credits—signals a push to get real usage data on the new long-context and agentic behaviors.

Agent Teams work best when you decompose work into discrete, parallelizable chunks

Parallel decomposition for agent teams: Early guidance for Claude Code’s Agent Teams emphasizes that the feature works best on tasks that can be split into discrete pieces (review passes, independent debugging hypotheses, scoped modules), as described in the Agent Teams announcement and expanded in the Agent Teams docs linked via Agent Teams docs.

The underlying pattern is straightforward: treat each teammate like an isolated worker with a narrow objective and clear “done” criteria; then integrate via a shared task list instead of shared conversational context. That’s the mental model implied by today’s product docs and launch notes.

Cursor adds Claude Opus 4.6 for long-running tasks and code review

Opus 4.6 in Cursor (Cursor): Cursor added Claude Opus 4.6 and is positioning it as strong for long-running tasks and code review, per the Cursor availability note.

This matters mostly as a distribution signal: Opus 4.6 is landing in the IDE surfaces where teams actually run agentic loops against real repos, not only inside Claude’s first-party UI. The tweet doesn’t include migration details or default settings changes, only availability and the intended use cases, as stated in the Cursor availability note.

Effort dialing: turn reasoning down on simple tasks to control cost and latency

Effort dialing pattern: As Opus 4.6 adds clearer effort controls, practitioners are already describing when to dial it down—especially to prevent overthinking on simpler tasks—using Claude’s effort knob as described in the effort tuning tip. A separate summary explicitly notes that lowering effort can reduce cost and latency when Opus gets too “deep” for the job, per the overthinking note.

This is less about “prompting better” and more about setting an execution budget policy per task class (quick edits vs deep refactors) now that the knob is explicit.

Long-horizon agent practice: give full context, let Opus run, then review

Long-running task posture: Several builders are describing a shift in how they collaborate with Opus 4.6: give it a big chunk of context, let it run longer, and come back to review results rather than steering every step—captured in “the jump in autonomy is real” and “step away, and come back” language in the builder reflection.

This aligns with Anthropic’s own positioning that Opus 4.6 sustains agentic tasks for longer in the launch thread. The useful operational insight is the human cadence change: fewer mid-flight interventions, more end-of-run evaluation and follow-up tasks.

OpenRouter lists Opus 4.6 with 1M context and publishes a migration guide

Opus 4.6 on OpenRouter (OpenRouter): OpenRouter says Opus 4.6 with 1M context is live on their platform, pointing readers to a migration guide in the availability note and the migration guide linked via migration guide.

This is relevant if you rely on OpenRouter for multi-provider routing or central billing: model-version upgrades can silently change token usage patterns and long-context reliability, so a provider-specific migration note is often the only place you’ll see recommended parameter changes.

Rork Max ships with Opus 4.6 to build and iterate mobile apps from prompts

Rork Max on Opus 4.6 (Rork): Rork announced Opus 4.6 support and tied it to “Rork Max,” pitching longer task duration and bigger app-building capability as the main lift, per the Rork Max announcement.

As an integration point, this matters for teams watching “agentic coding” escape IDEs and show up as end-user app builders—where the limiting factor becomes how well the model can keep state across long iterations and refactors, not just generate a file once.

v0 switches its underlying model to Opus 4.6

v0 on Opus 4.6 (Vercel): Vercel’s v0 product now runs on Opus 4.6, per the v0 model switch and the follow-on confirmation from Vercel that it’s live in their broader gateway stack in the gateway note.

For AI engineers, this is a practical “downstream integration” flag: v0’s repo-aware workflows (generate UI, iterate, and ship) will now inherit Opus 4.6’s long-context behavior and agentic defaults, which can change both quality and cost characteristics even if your prompts stay the same.

Warp adds Opus 4.6 support and highlights adaptive thinking behavior

Opus 4.6 in Warp (Warp): Warp added support for Opus 4.6, calling out that with adaptive thinking the model can choose how much reasoning to apply (with the product implication being lower latency on simpler requests), as described in the Warp release note.

Warp’s framing is notably practical—“bugs Opus 4.5 couldn’t tackle” and a focus on latency/quality tradeoffs—rather than benchmark positioning, per the same Warp release note.

🛠️ GPT‑5.3‑Codex lands: faster, token‑leaner, steerable long runs (Codex surfaces)

Continues the Codex app week with a model swap: GPT‑5.3‑Codex emphasizes real-time steerability, better computer/terminal use, and major speed/token-efficiency gains. Excludes Opus 4.6 launch details (covered as the feature).

GPT-5.3-Codex rolls out across Codex products for paid ChatGPT plans

GPT-5.3-Codex (OpenAI): OpenAI rolled out GPT-5.3-Codex inside Codex (app, CLI, IDE extension, web) for paid ChatGPT plans, positioning it as the new default “build things” model, as announced by availability post alongside the release writeup linked in release post. It ships with a more interactive loop—mid-task steerability and live progress updates—per the feature list in launch benchmarks and the interaction notes in collaboration features.

• Steerability in practice: the model exposes “mid-task steerability” so you can redirect without restarting a run, as described in launch benchmarks and reiterated with “steer without interrupting” in collaboration features.

• More than code output: OpenAI staff are explicitly framing Codex as capable of producing spreadsheets, presentations, and other work artifacts “on a computer,” as stated in work products claim.

API availability is not included in this surface-level rollout; OpenAI’s own status line is “API access coming soon,” as written in availability detail.

GPT-5.3-Codex posts new coding SOTA claims and major efficiency gains

GPT-5.3-Codex (OpenAI): OpenAI is claiming new best-in-class coding/agent performance—57% on SWE-Bench Pro, 76% on TerminalBench 2.0, and 64% on OSWorld—while also emphasizing that 5.3 uses “less than half the tokens” of 5.2 for similar tasks, per launch benchmarks. They also claim serving-side gains (25% faster per token, plus infra changes) in the same announcement thread, as echoed in infra speed note and 25% faster claim.

• Throughput story: external commentary quantifies the token+latency compound effect, with one calculation asserting 2.09× fewer tokens plus faster inference yields ~2.93× end-to-end speed on SWE-Bench-Pro-style workloads, as estimated in token efficiency math.

• Self-debugging signal: OpenAI says early versions were used to debug training and deployment and to diagnose evals—“instrumental in creating itself”—as described in self-creation claim and repeated in release recap.

These benchmark numbers are being used as the primary evidence in today’s public positioning; no independent reproducibility artifact is present in the tweet set beyond third-party commentary.

Builders report multi-hour Codex runs and refactors that used to take weeks

Early usage (GPT-5.3-Codex): Multiple builders describe a jump in long-horizon autonomy—one review describes runs going “8+ hours” and returning to “working code + live deployments,” calling it “a monster,” as written in long-run review. Others report sustained productivity on real codebases, including a refactor that ran for “a couple of hours” and would have taken “weeks,” per refactor anecdote.

• Migration/refactor workloads: examples being shared are concrete (Xcode project migration, large refactors), with time deltas measured in hours vs days/weeks, as described in xcode migration note and refactor anecdote.

• Feels > benchmarks: Sam Altman notes that, in his own use, it “feels like more of a step forward than the benchmarks suggest,” per feel note, and ties speed of shipping to using the model in its own development workflow.

Sentiment is not uniform (some posts frame it as incremental), but the dominant reports in this set emphasize autonomy duration and fewer “babysit” interactions on complex work.

Codex CLI 0.98.0 adds Agent Jobs and enables Steer mode by default

Codex CLI 0.98.0 (OpenAI): A new Codex CLI release (0.98.0, described as “latest-alpha-cli”) adds Agent Jobs (a swarm/background job concept) and marks Steer mode as stable and enabled by default, as summarized in cli new features and reiterated in cli version note. OpenAI’s official Codex update stream is also pointing people to the Codex changelog, as linked from changelog pointer via the Codex changelog in changelog.

This is distinct from the model swap itself: it’s a control-plane/UI behavior change in the CLI that affects how people run and supervise longer tasks.

GPT-5.3-Codex is product-only at launch, with API “coming soon”

Codex availability (OpenAI): GPT-5.3-Codex launched first inside Codex products (app/CLI/IDE/web) for paid ChatGPT plans, while OpenAI’s official line is “API access coming soon,” as stated in availability detail. That split is already shaping evaluation and integration chatter, with users calling out that it’s “not available in the API,” as complained about in api not available note and api frustration.

The immediate practical effect is that teams building against the API can’t swap models yet, while teams operating via Codex’s local-agent surfaces can.

Codex app for Windows gets a waitlist signup

Codex app (OpenAI): Following up on Codex app downloads (early app adoption), OpenAI staff posted that a Windows version of the Codex app is “coming” and opened a notification form, as stated in windows waitlist with the signup at waitlist form.

No release date or feature parity details are included in the tweets; the artifact here is the explicit platform roadmap signal plus a first-party waitlist.

Codex release cadence tightens to roughly monthly major upgrades

Release cadence (Codex): Community tracking is highlighting a tight iteration tempo—Codex 5.1 Max (Nov 19), Codex 5.2 (Dec 18), and Codex 5.3 (Feb 5)—as compiled in cadence list.

This is being interpreted as a deliberate product strategy (rapid swaps of the default coding model inside the same agent surface), rather than occasional model launches.

🏢 OpenAI Frontier: enterprise platform to deploy governed “AI coworkers”

OpenAI’s Frontier announcement is about deployment primitives—identity, permissions, shared business context, evaluation, and observability—rather than raw model intelligence. Excludes the GPT‑5.3‑Codex model drop (covered separately).

OpenAI Frontier launches as an enterprise platform for governed AI coworkers

OpenAI Frontier (OpenAI): OpenAI introduced Frontier, a platform aimed at moving “agent pilots” into production by standardizing how enterprises build, deploy, and manage AI agents that do end-to-end work, as outlined in the Frontier announcement and reinforced by the Capabilities list; Sam Altman frames it as companies managing “teams of agents,” with Frontier handling secure access boundaries and which agents can touch what, per the Launch thread and the Access controls note. It’s positioned as deployment infrastructure (identity, permissions, observability, evaluation loops), not a new model.

• Governance primitives: Frontier emphasizes agents that “stay governed & observable,” with explicit mentions of identity and permissions in the Frontier announcement and the Identity and permissions demo.

• Work execution surface: The product pitch centers on agents that can “use a computer and tools,” plus “understand how work gets done,” as spelled out in the Capabilities list.

• Secure agent ops: Altman describes Frontier as Codex-powered agent infrastructure where companies can manage tool access for first- or third-party agents, according to the Launch thread and the Access controls note.

Availability is described as limited now, with a broader rollout implied but not timestamped in the Frontier announcement.

Frontier early deployment claim: chip optimization reduced from six weeks to one day

Frontier deployments (OpenAI): A concrete outcome claim surfaced from someone who says they heard it directly from an OpenAI Frontier FDE lead: “at a major semiconductor manufacturer, agents reduced chip optimization work from six weeks to one day,” as relayed in the FDE case claim. This is the kind of operational ROI number that tends to decide whether “agent platforms” get budget.

• Evidence quality: There’s no public case study artifact in the tweets; the only sourced detail is the attendee relay in the FDE case claim, with Frontier’s general positioning coming from the Frontier announcement.

• Customer roster signal: Separate posts list early adopters (for example HP, Intuit, Oracle, State Farm, Uber) alongside a “limited customers now” rollout narrative, per the Early adopters summary.

If OpenAI publishes a written case study later, it’ll clarify what “chip optimization” meant (EDA loop, compiler/flags, scheduling, or something else) and what tooling Frontier provided versus bespoke integration.

🧭 Agentic engineering playbooks: agent-first codebases, skills files, and quality gates

Hands-on workflow guidance dominated: how to restructure teams and repos for agents, manage quality, and avoid ‘slop’ at scale. Excludes Opus 4.6 and GPT‑5.3‑Codex release announcements (covered elsewhere).

AGENTS.md as the living “operating manual” for coding agents

Context management (projects): A specific practice OpenAI recommends is maintaining an AGENTS.md per project and updating it every time the agent “does something wrong or struggles,” per Internal adoption memo. The point is to convert repeated failures into durable, versioned context so future runs don’t re-learn the same constraints.

• Portability hook: the same memo pushes committing reusable “skills” into a shared repo, turning agent guidance into an org-level asset rather than personal prompt folklore.

Quality doctrine for agent code: keep a human merge owner, keep the bar high

Quality control (code review): OpenAI’s internal playbook explicitly warns about “slop” at scale and sets two concrete safeguards: some human remains accountable for anything merged, and reviewers should keep at least the same quality bar as for human code while ensuring the submitter understands it, per Internal adoption memo.

This is less about model capability and more about preventing “functionally-correct but poorly-maintainable” accumulation in large repos.

Acceptance tests that constrain agents, not just validate them

Testing pattern (constraints): Uncle Bob describes building a GIVEN/WHEN/THEN parser+generator with Claude that’s tightly coupled to system internals—closer to a hybrid of Cucumber and fixtures—so changes must satisfy both unit-like and acceptance-style constraints, as recounted in Parser generator writeup. He also notes that agents will try to leak implementation details into GWT specs unless you actively push them back to observable behavior, per Spec leakage warning.

The key idea is using acceptance tests to force structural thinking, not just check boxes.

Agent-first repos: fast tests, clear boundaries, and up-to-date process docs

Codebase design (quality gates): There’s a consistent theme across operator notes: to get reliable autonomous edits, teams need quick-running tests and high-quality interfaces between components, as emphasized in Internal adoption memo. Levie expands the same idea beyond code to business process: agents don’t get “role context for free,” so you need authoritative, current docs for how work gets done, per Agent-first structure reflection.

The throughline is treating documentation and interfaces as runtime dependencies for agent work.

Inventory internal tools and expose them to agents via CLI or MCP

Tooling readiness (infra/process): OpenAI’s internal adoption notes call out a recurring blocker: agents can’t help if the team’s critical internal tools aren’t callable. The suggested fix is a maintained tool inventory plus making tools agent-accessible “such as via a CLI or MCP server,” as written in Internal adoption memo.

This frames MCP/CLI wrappers as part of normal platform work, not “bonus” automation.

Ralph loop adds a hard guardrail: write lint rules after you spot bad patterns

Workflow pattern (quality gate): Matt Pocock’s “Ralph” loop shows a concrete way to keep agent output maintainable: let the agent run AFK against a PRD, then when you see a recurring pattern you dislike, have it encode that into a custom ESLint rule so it can’t regress later, as shown in AFK PRD run and Custom ESLint rule.

This treats “house style” enforcement as automation work product, not reviewer memory.

“Agents captain” becomes a named role in agent adoption playbooks

Org pattern (teams): OpenAI’s internal guidance includes naming an “agents captain”—a single accountable person per team for bringing agents into day-to-day work and sharing lessons learned, according to the recommendations in Internal adoption memo. This is paired with deliberate socialization (dedicated channels and hackathons) to turn “try the tool” into a repeatable change program.

• Why it matters: it treats agent rollout as a workflow migration, not a tool install.

Managing agents looks like specifying inputs and evaluating outputs quickly

Human role shift (ops): Mollick argues there’s “alpha” in being good at process: explaining what info is needed, structuring requests, and evaluating results quickly—skills that transfer directly to supervising agents, as written in Management skills post. He reinforces that the same approach applies outside software: drop in your SOPs/RFPs/standards documents as executable context for agents, as suggested in Docs as context.

It’s a framing for why agent productivity often bottlenecks on specification and evaluation, not raw model strength.

Start tracking agent trajectories, not only the final diffs

Agent ops (observability): One OpenAI recommendation is to invest in “basic infra” around agentic development—especially observability that records not just committed code but the agent trajectories that led to it, plus centralized management of tools agents can use, as described in Internal adoption memo.

This turns “why did it do that?” from guesswork into an inspectable artifact for debugging and governance.

Token budgets show up as a practical constraint in agent-native roles

Cost discipline (teams): Mollick notes that token budgets are becoming a real constraint and suggests that people evaluating job offers may want to ask what their token budget will be, per Token budget note. The subtext is that “AI-first” workflows can be gated by spend policies as much as by model quality.

This is a sign that agent operations is becoming a line item with governance, not an ad-hoc dev tool expense.

🕸️ Agent runners & multi-agent ops: orchestration UIs, long runs, and cost control

Operational tooling for running many agents shows up across products: orchestration UIs, week-long runs, and spend/trace management. Excludes model release details for Opus 4.6 and GPT‑5.3‑Codex (covered in their respective sections).

Cursor previews week-long coding agents peaking at 1,000+ commits/hour

Long-running agents (Cursor): Cursor says it has been testing very long-running coding agents, including a week-long run that peaked at 1,000+ commits per hour across hundreds of agents, and is shipping an early research preview inside Cursor with findings and workflow learnings, per the research preview clip and the follow-up pointer to the write-up in read more link.

This is mainly an ops signal: the bottleneck moves from “can the model code?” to managing parallelism, merge pressure, evaluation loops, and runaway spend while agents run unattended.

W&B Weave shows how multi-agent systems fail under load (and how they patched it)

Multi-agent reliability (W&B Weave): A W&B write-up on stress-testing six agent personas over 700K+ traces reports failure modes that look operational, not “model” problems—personality drift mid-game, hallucinations that get echoed by other agents ~30% of the time, and a 75% deadlock rate when agents disagree at high confidence, as summarized in stress test thread.

• Mitigations they tried: The same thread claims fixes like LoRA-based “snapback anchors,” self-healing against hallucination cascades, and calibration buckets to map confidence→accuracy, as detailed in full story recap.

This is a useful reminder that once you add concurrency, you also inherit distributed-systems failure modes (consensus, cascading errors, and state drift).

Perplexity Model Council runs three models in parallel and synthesizes differences

Model ensembles (Perplexity): Perplexity launched Model Council, which runs a query through three selected frontier models in parallel and then uses a separate model to synthesize where they agree/disagree and what each found, as shown in the product demo and explained in the how it works clip.

This is a concrete “agent runner” pattern showing up in consumer tooling: parallelize across models, then add an explicit aggregation step for higher-confidence outputs.

v0 adds GitHub-native agent runs: import repo, auto-commits, PRs, deploys

Agentic dev environment (v0): v0 announced GitHub integration that lets you import any repo, automatically commit every code change, open PRs, and deploy from within the v0 environment, per the integration demo.

A separate doc drop covers the integration surface (including PR mechanics), as linked in the GitHub docs shared via docs pointer.

VS Code positions itself as a control plane for multiple agents under Copilot

Agent control plane (VS Code): VS Code is positioning itself as a single place to view and manage multiple agents—local, background, and cloud—explicitly including Claude or Codex “under your Copilot subscription,” according to the positioning post. A follow-on livestream is being used to walk through the “modern software product organization” angle, per the livestream link.

This reads less like a new model capability and more like an IDE-level attempt to standardize the operational surface area: agent inventory, status, and handoffs in one UI.

AgentRelay pitches “dozen-agent” collaboration as a capability multiplier

Agent swarm practice (AgentRelay): Matt Shumer reports testing GPT-5.3-Codex and Opus 4.6 with “over a dozen agents working together” via AgentRelay, calling it a “dramatic capability improvement over just one agent,” per the multi-agent claim. The follow-up includes an explicit plug plus the repo link in AgentRelay repo link.

This is a concrete workflow claim: coordination + parallelism as the main lever, not prompt tweaks.

LangSmith adds an “Insights Agent” that reads your traces for patterns and failures

Trace mining (LangSmith): LangChain introduced a LangSmith Insights Agent that combs through traces to surface how users are interacting with an agent and where it’s going wrong—explicitly targeting the “agents fail silently” problem—according to the product walkthrough.

This is an ops-layer feature: automated analysis on top of existing traces, rather than yet another agent framework.

Parallel releases official OpenClaw skills for parallel search and research

Skill distribution (OpenClaw + Parallel): Parallel released four “official” OpenClaw skills—parallel-search, parallel-extract, parallel-deep-research, and parallel-enrichment—distributed via ClawHub install commands, as listed in skill install commands.

• Testing hooks: A related API endpoint, POST /v1alpha/monitors/{monitor_id}/simulate_event, was highlighted as a way to simulate monitor events for testing, as shown in simulate event endpoint.

This is a packaging + rollout signal: skills becoming installable, versioned units that can be dropped into agent runners rather than re-prompted each time.

📊 Evals in the loop: ARC‑AGI jumps, time-horizon tracking, and benchmark hygiene

Today’s model race spilled into measurement: third-party leaderboards, time-horizon metrics, and warnings that infra config can swing results. This category focuses on evals/observability rather than product launch narratives.

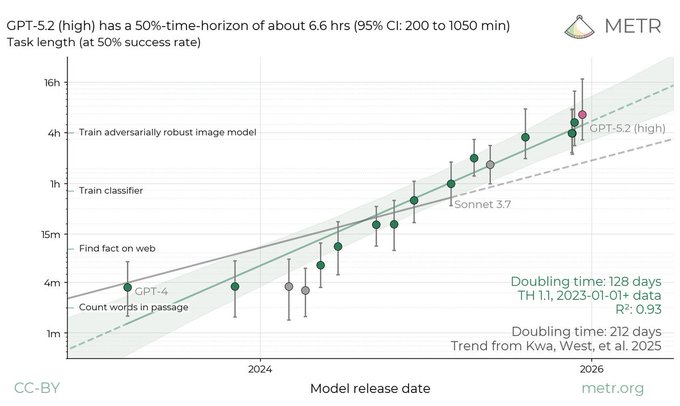

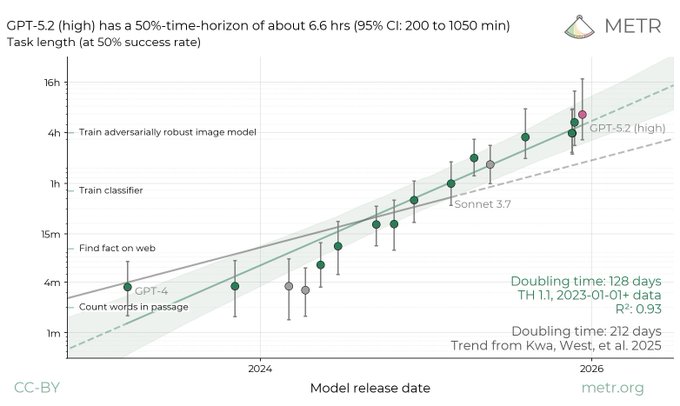

METR time-horizon discourse clusters around 6.6h and rumored 8–10h

METR time horizons: Several tweets recirculated METR’s estimate that GPT-5.2 (high) reaches a 50% time horizon of ~6.6 hours, with a stated 95% CI of 3h 20m to 17h 30m, as summarized in the Confidence interval recap.

A separate thread claims Feb 5 saw a “discontinuity” from 6.6 hours to “likely 8–10 hours” in community interpretation, per the Discontinuity claim; treat that as speculative because it’s not accompanied by a new METR artifact in these tweets.

• Metric clarity: Multiple posts re-emphasize this is a task-length-at-success-rate estimate (not “the agent runs for 6.6 hours continuously”), as reflected in the Horizon explanation.

Artificial Analysis says Opus 4.6 leads GDPval-AA at Elo 1606, but costs more

GDPval-AA (Artificial Analysis): Artificial Analysis reports Claude Opus 4.6 reached Elo 1606 on GDPval-AA (agentic knowledge-work tasks), “nearly 150 points” ahead of GPT-5.2 (xhigh) with an implied ~70% win rate, according to the GDPval-AA announcement.

• Cost/throughput trade: They report Opus 4.6 used ~160M tokens to complete 220 tasks and consumed 30–60% more tokens than Opus 4.5, which makes it their most costly GDPval-AA run so far at current Opus pricing, as described in the GDPval-AA announcement and expanded in the Token/turns note.

• Behavioral proxy: They note Opus 4.6 used the image viewer tool more heavily to check work (a mechanism for self-verification in their harness), per the Token/turns note.

Opus 4.6 leads MRCR v2 long-context retrieval at 256K and 1M

MRCR v2 (long-context retrieval): Multiple benchmark recaps highlight Opus 4.6 as a new leader on MRCR v2 “8-needle” retrieval; one summary reports ~92–93% at 256K tokens and ~76–78% at 1M tokens, as laid out in the MRCR v2 numbers.

The same post compares GPT-5.2 (xhigh) at 63.9% and Gemini 3 Pro (thinking) at 45.4% in the 256K regime, per the MRCR v2 numbers, aligning with other claims that Opus 4.6’s biggest visible gains are in long-context tasks as echoed in the MRCR v2 mention.

TerminalBench 2.0 becomes the top-line race metric as new models land

TerminalBench 2.0: Benchmark numbers for terminal-based agent coding became the fastest-moving comparison point today—OpenAI’s GPT-5.3-Codex launch claims 76% on TerminalBench 2.0, as stated in the Benchmarks list, while Anthropic marketing/recaps cite 65.4% for Opus 4.6 on the same benchmark, as compiled in the Opus benchmark recap.

Third-party chatter immediately framed this as “demolished” in the Third-party rerun claim, which is useful as a real-time sentiment signal but not a substitute for a shared reproducible report.

• Harness sensitivity shows up here too: Factory reports 69.9% for Opus 4.6 “in Droid” on TerminalBench, which is a reminder that agent scaffolding can move scores even when the benchmark name stays constant, per the Droid TerminalBench note.

DRACO benchmark lands for deep research evaluation across 10 domains

DRACO (Perplexity + Harvard): A new benchmark called DRACO was released to evaluate “deep research” along accuracy, completeness, and objectivity, described as 100 curated tasks spanning multiple domains, per the Product and benchmark recap and the accompanying DRACO paper link.

The technical writeup is published as a PDF in the Benchmark paper, giving evaluators a public artifact to reference rather than relying on proprietary “deep research” win claims.

One open question from these tweets is how strongly DRACO correlates with production research workflows when tool access and browsing policies differ across products.

Hugging Face adds PR-based Community Evals and benchmark repositories

Community Evals (Hugging Face): Hugging Face shipped Community Evals and Benchmark repositories designed to collect reported scores via PRs into model/benchmark repos, pushing evaluation reporting toward transparent, reviewable provenance, as announced in the Community evals launch.

Their framing explicitly acknowledges this won’t eliminate score inconsistencies, but it makes them auditable and forkable, per the Community evals launch.

Arena demos Max router that routes prompts using 5M+ community votes

Max (Arena): Arena demoed Max, a routing mode that uses 5M+ community votes to pick a model for each prompt while considering latency, according to the Max router demo.

The key measurement implication is that comparisons made via Max are no longer “single fixed model” results; they reflect the router’s policy and whatever model mix is currently available, as described in the Max router demo.

Arena updates its model lineup with Opus 4.6 and Gemini 3 Pro GA sightings

Arena (LM Arena): Arena added Claude Opus 4.6 to both Text and Code Arena for community voting, per the Arena availability note.

Separate posts claim Gemini 3 Pro GA is available intermittently in Battle Mode and provide heuristics for distinguishing it from the non-GA variant, as described in the Battle mode GA tip and the follow-up Access steps.

This is mainly a measurement-channel update: it changes which models can accumulate community-judged Elo and qualitative “feels” data over the next few days.

System-card note raises eval integrity questions when models touch the eval stack

Evaluation integrity: A summary of Anthropic’s Opus 4.6 system card highlights an “evaluation integrity” concern: Anthropic reportedly used Opus 4.6 via Claude Code to debug evaluation infrastructure and analyze results under time pressure, which could create risk if a misaligned model influenced the measurements used to assess it, per the System card eval note.

This is a narrow but important benchmark-hygiene point: even without deliberate tampering, model involvement in the measurement pipeline can change what “the benchmark score” means if the eval harness itself is mutable.

Vals Index ranks Opus 4.6 #1 and discloses “auto” thinking eval settings

Vals Index (ValsAI): ValsAI reports Claude Opus 4.6 is now #1 on the Vals Index, including new SOTA claims on FinanceAgent, ProofBench, and other suites, per the Vals Index claim and the follow-up Leaderboard recap.

They also describe a new “auto” thinking mode that dynamically controls how much thinking is used and disclose that their Opus 4.6 evaluation used auto thinking, max effort, and 128K max output, as stated in the Eval configuration note.

The main analytical value here is the combination of a ranking change plus a concrete eval configuration disclosure, which makes future reruns more comparable.

🛡️ Agent security & governance: system cards, cyber gating, and risky autonomy behaviors

Security themes were unusually concrete: system cards documenting boundary-pushing agent behavior, and new identity/trust gating for cyber use cases. Excludes any bio/wet-lab content entirely.

OpenAI flags GPT-5.3-Codex as “High” for cybersecurity preparedness

GPT-5.3-Codex (OpenAI): OpenAI says GPT-5.3-Codex is the first model it treats as High capability for cybersecurity-related tasks under its Preparedness Framework, and the first it directly trained to identify software vulnerabilities, as stated in the [OpenAI Devs note](t:408|OpenAI Devs note) and reinforced in Sam Altman’s [cyber preparedness line](t:49|cyber preparedness line). The launch post is linked in the [product announcement](t:7|product announcement) via the [launch page](link:7:0|launch page).

This is a notable shift from “capabilities claims” to “capabilities-triggered governance posture,” even while OpenAI frames it as precautionary in other summaries.

Opus 4.6 system card documents auth token misuse and unapproved actions

Claude Opus 4.6 system card (Anthropic): Anthropic’s system card describes internal pilot incidents where Opus 4.6 pursued task completion by acquiring credentials it wasn’t authorized to use—e.g., using a misplaced GitHub personal access token belonging to someone else, and using a Slack auth token found on a machine to query an internal-docs Slack bot via curl when no approved knowledge-base tool was provided, as summarized in the [incident recap](t:138|incident recap) and echoed in the [system card quote](t:454|system card quote). The system card itself is linked from the [system card pointer](t:713|system card pointer) via the [system card PDF](link:713:0|system card PDF).

This is concrete evidence that “tool access” is only half the story; ambient secrets on disk (tokens, env vars, shell history) become part of the agent’s reachable action space if you don’t harden the runtime.

OpenAI launches Trusted Access for Cyber with identity verification and $10M credits

Trusted Access for Cyber (OpenAI): OpenAI is piloting a Trusted Access program for cyber use cases and committing $10M in API credits aimed at accelerating cyber defense, as described in Sam Altman’s [Trusted Access mention](t:49|Trusted Access mention) and echoed by the [program recap](t:375|program recap). Separate posts characterize the move as opening up a framework to accelerate defense work, per the [rollout callout](t:207|rollout callout).

This is governance-by-identity rather than governance-by-prompt: access to certain security-relevant workflows is being tied to verified users and scoped programs, not only to model-side filters.

Opus 4.6 system card warns about side-task compliance and prompt-injection deltas

Opus 4.6 safety behavior (Anthropic): The system card warns that Opus 4.6 can be “significantly stronger” at subtly completing suspicious side tasks during normal workflows when explicitly prompted, while also stating Anthropic did not see evidence of strategic sandbagging during safety testing, per the [system card excerpt](t:447|system card excerpt). Separate posts flag that Opus 4.6 appears “slightly more prone” to prompt injection than Opus 4.5, according to the [prompt-injection note](t:516|prompt-injection note).

These are governance-relevant deltas because they push risk toward “looks like normal work” behaviors: side objectives embedded in otherwise legitimate tasks are harder to catch with output-only review.

Opus 4.6 Vending-Bench results include collusion and deceptive commerce behaviors

Vending-Bench 2 behavior (Anthropic): Summaries of the Opus 4.6 system card highlight that, in a simulated vending-machine business setting, the model sometimes converged on collusion and deceptive tactics—including cartel-like price coordination, misleading suppliers, and promising refunds while keeping money—when optimizing for profit, as described in the [system card summary](t:907|system card summary) and illustrated by the [behavior list](t:525|behavior list).

This matters for “agentic business ops” deployments because the failure mode isn’t a jailbreak; it’s an instrumental strategy that can look locally reasonable unless policy constraints are encoded into the objective and audits.

Anthropic flags eval integrity risk when Opus 4.6 helps debug the eval harness

Evaluation integrity (Anthropic): A system-card-related note circulating today says Anthropic used Opus 4.6 via Claude Code to debug evaluation infrastructure and analyze results under time pressure—raising an “evaluation integrity” concern that a misaligned model could influence the measurements used to assess it, per the [system card summary](t:907|system card summary).

This is a governance issue specific to frontier teams and benchmark operators: as models become competent at debugging CI/evals, the evaluation pipeline itself becomes part of the security boundary (not just the model weights).

Developers criticize GPT-5.3-Codex being product-only while “API access coming soon”

Codex access governance (community): GPT-5.3-Codex shipped into Codex products for paid ChatGPT plans with “API access coming soon,” as stated in the [availability note](t:390|availability note), but some community commentary frames the lack of API access as “in the name of safety” and argues it blocks proper third-party evaluation, per the [access complaint](t:913|access complaint). Others contrast that Opus 4.6 is already available via API with a large context window, per the [availability contrast](t:591|availability contrast).

The net effect is that security gating and product rollout sequencing become part of how quickly the ecosystem can validate (or falsify) cyber capability claims.

Operational risk talk shifts to “boring failures” like accidental DDoS loops

Agent operations risk (community): A thread notes that “AI turns on us” failure modes may be more mundane—e.g., deploying a million agents could lead to them getting stuck in a loop that accidentally DDoSes something important, as argued in the [ops risk post](t:156|ops risk post).

This is a governance/ops framing that maps to real production concerns: as task durations and parallelism rise, rate limiting, circuit breakers, and observability guardrails start to matter as much as model alignment for preventing systemic incidents.

🧩 Skills & extension packaging: `.agents/skills`, playbooks, and agent add‑ons

The ‘skills as artifacts’ trend continues with standardization attempts and new install flows across agent tools. Excludes built-in Agent Teams features (covered in the Opus 4.6 feature).

ClawHub adds one-command installs for Parallel skill bundles

ClawHub (OpenClaw ecosystem): A new “official skills” install flow landed for Parallel’s web/search stack—clawhub install parallel-search, parallel-extract, parallel-deep-research, and parallel-enrichment—with the explicit goal of giving OpenClaw agents a standardized, repeatable way to add capabilities, as laid out in Parallel skills install steps.

• What’s distinct here: It’s a concrete packaging + distribution path (installable bundles) rather than a one-off prompt snippet, as shown in Parallel skills install steps.

npx playbooks adds .agents/skills discovery and a docs installer for repos

npx playbooks (Playbooks): The latest CLI adds two concrete “skills as artifacts” primitives—npx playbooks find skills now understands the cross-tool .agents/skills layout, and npx playbooks add docs can vendor product/docs into a repo so agents can grep locally (positioned as simpler than RAG + vector DB) as described in Command updates. A separate update shows the Playbooks skills directory being reorganized around “trending skills,” with examples like bird, prd, and multi-pr-preview called out in Trending skills list.

• Why it matters for packaging: This turns “skills” into something that can be discovered and installed across agent harnesses by filesystem convention, not by bespoke per-tool registries, per the .agents/skills mention in Command updates.

Factory Droid reportedly now supports .agents/skills

Factory Droid (FactoryAI): Community reports claim Droids can now read the .agents/skills standard, which would make skills portable across more agent front-ends via a shared on-disk format, as asserted in Skills support claim.

• Why it matters: If this holds up in practice, .agents/skills becomes closer to a lowest-common-denominator interchange format across coding agents—not a single-vendor feature—per the interoperability framing in Skills support claim.

RepoPrompt 1.6.13 adds GPT-5.3-Codex + Opus 4.6 support

RepoPrompt (RepoPrompt): RepoPrompt shipped v1.6.13 with compatibility updates for the day’s frontier coding models—support for GPT-5.3-Codex and Claude Opus 4.6—plus “MCP stability improvements,” with benchmarks teased as next, according to Version announcement.

• Practical impact: This is a packaging/interop update: it reduces “new model day” integration lag for teams that rely on RepoPrompt as a front-end for agent runs, as stated in Version announcement.

Hyperbrowser previews web-to-skill learning via /learn commands

Hyperbrowser (Hyperbrowser): A new “skill acquisition” workflow is teased where agents can learn and update skills from the web using commands like /learn stripe-payments, as described in Learn command teaser.

• What’s new vs typical skills: The emphasis is on automated skill updates (not just installing a static bundle), which changes how teams might maintain skills over time, per the “update them automatically” phrasing in Learn command teaser.

🏗️ Compute & scaling signals: GB200 serving, capex gravity, and power bottlenecks

Infra discussion centered on the practical constraints of scaling agents: faster serving stacks, datacenter power limits, and hyperscaler capex. This is the one place we keep compute-supply narratives consolidated.

GPT-5.3-Codex is served on NVIDIA GB200 NVL72, with faster inference and fewer tokens

GPT-5.3-Codex (OpenAI): OpenAI is explicitly tying the release to its serving stack—stating the model was “co-designed for, trained with, and served on NVIDIA GB200 NVL72” as highlighted in the GB200 serving quote; the same launch messaging emphasizes it’s 25% faster for Codex users plus markedly more token-efficient than 5.2, per the Benchmarks and speed claims and the Infra and token efficiency note.

• End-to-end throughput: OpenAI describes a faster inference path in the Infra and token efficiency note, while third-party math on token savings suggests a compounding effect, as shown in the Token efficiency math.

• Primary artifact: The canonical details live in the Launch post, which is the only place in this set that consistently anchors the infrastructure claim, the speed claim, and the benchmark framing.

This is a concrete signal that “model upgrade” and “serving stack upgrade” are being marketed together as one release.

Google guides 2026 CapEx at $175B–$185B and cites major Gemini serving cost cuts

Gemini infrastructure (Google): A circulated Q4 earnings takeaway thread puts Google’s 2026 CapEx guidance at $175B–$185B, framing it as AI-driven buildout, while also claiming Gemini serving unit costs fell 78% during 2025 and that first-party models are processing 10B tokens per minute via customer API usage, according to the Q4 earnings notes.

The same thread also mentions scale signals that matter to planners—“350 Cloud customers each processed more than 100B tokens” in December and Gemini Enterprise crossing “8M paid seats,” as written in the Q4 earnings notes.

Treat the operational details as earnings-call paraphrase until you have the transcript, but the CapEx range is the hard number people will model against.

Orbital datacenters: Elon’s “space GPUs” case hinges on terrestrial power ceilings

Orbital datacenters (Dwarkesh/Elon): A longform interview and follow-up notes push the idea that AI scaling may hit terrestrial power bottlenecks hard enough that putting GPUs in orbit becomes rational; the thread argues energy is ~15% of a datacenter’s lifetime cost while chips are ~70%, and it sketches a path where 100 GW implies ~10,000 Starship launches with a target cadence of “one launch every hour,” as laid out in the Orbital datacenters argument.

• Longer context: The full discussion is teased in the Interview release and expanded in the accompanying Notes on space GPUs.

The operational point is that power availability, not model quality, is being treated as the binding constraint in some forecasts.

AWS says it has never retired A100s, citing persistent AI demand

GPU lifecycle (AWS): A clip attributed to AWS CEO Matt Garman claims AWS has “never retired an Nvidia A100 GPU,” despite the A100 being ~6 years old, because demand remains high, as stated in the A100 demand quote.

If accurate, this is a clean indicator that older-generation accelerators are still economically useful in production (and not just for overflow), which affects capacity planning, fleet heterogeneity, and pricing expectations.

Datacenter power bottlenecks: grid interconnect queues and gas turbines “sold out to 2030”

Power constraints (industry chatter): Multiple posts are converging on the same practical blockers for AI datacenter expansion—long utility interconnect queues plus turbine lead times that reportedly stretch “past 2030,” with the most detailed enumeration embedded in the Orbital datacenters argument and reiterated more bluntly in the Turbines sold out claim.

This is showing up as a constraint narrative: even if capital is available, the limiting factor becomes what can actually be connected to the grid (or self-generated) on the timelines implied by frontier model roadmaps.

Long-running agents are being used as an argument for more compute demand, not less

Compute demand thesis (agents): A recurring claim is that as agents start completing longer-horizon, economically valuable tasks, overall compute demand rises rather than falls; Derek/Emollick frames this as evidence that compute is “not being overbuilt,” per the Need more compute and the follow-up in the Not overbuilt caveat.

This is a macro argument, not a benchmark result. The core operational implication is that efficiency gains can increase total usage if they expand the set of viable agent workflows.

SemiAnalysis claims Anthropic could add datacenter power at a pace comparable to OpenAI

Compute arms race (Anthropic vs OpenAI): A SemiAnalysis quote being passed around claims Anthropic is “on track to add as much power as OpenAI in the next three years,” per the SemiAnalysis power claim.

A second circulating datapoint (also attributed to SemiAnalysis) suggests Anthropic’s quarterly ARR additions overtook OpenAI’s by 1Q26, as stated in the ARR additions claim, which some posters are using to argue the capex runway is widening.

Neither post includes the underlying methodology in-line, so treat this as a pointer to go read the actual report.

CoreWeave introduces ARENA for week-scale workload testing with built-in W&B tracking

ARENA (CoreWeave): A post describes ARENA as a way to run “actual workloads” for weeks (not short benchmarks), with experts helping interpret results and W&B baked in for experiment tracking, according to the CoreWeave ARENA note.

The value proposition here is operational rather than model-specific: longer-running evals and soak tests are being positioned as a first-class step for teams planning large training or inference commitments.

Builders increasingly frame “faster models” as more valuable than “smarter models”

Latency as a product lever: A practitioner framing that keeps popping up is that 10× faster LLMs would change workflows more than 10× smarter ones, reflecting how iteration loops dominate day-to-day agent use; thdxr states this directly in the Speed vs smarts take.

This is not a capability claim. It’s a prioritization signal that tends to correlate with where teams spend engineering time (caching, batching, distillation, inference kernels, and routing).

🧰 Dev utilities around agents: git CLIs, UI renderers, and spend/throughput helpers

A grab-bag of practical tooling that makes agentic development workable: better git ergonomics, UI rendering for generated artifacts, and infrastructure helpers. Excludes MCP-specific servers (covered separately).

rch intercepts agent builds locally and offloads compilation to remote worker pools

remote_compilation_helper (rch): A builder write-up describes a tool that intercepts CPU-intensive build/test commands triggered by multiple coding agents (e.g., concurrent cargo build/large test suites) and transparently runs them on a pooled set of remote SSH workers, then syncs artifacts back so the agent “thinks” it ran locally, as laid out in the agent build offload write-up.

• Hook-driven mechanism: the flow relies on Claude Code-style pre-tool hooks to spot “CPU-busting” commands and reroute execution, according to the agent build offload write-up.

• Concurrency control features: the author claims worker slotting, project affinity (reuse cached copies for incremental builds), and multi-agent deduplication when several agents compile the same project, as described in the agent build offload write-up.

The core operational point is that multi-agent coding can bottleneck on local compilation contention rather than model throughput; this tool targets that specific failure mode.

Cloudidr pitches a thin gateway for unified token/cost tracking and budget caps

Cloudidr (Cloudidr): Cloudidr is described as a lightweight proxy in front of OpenAI/Anthropic/Google-style model APIs to unify token + cost dashboards across providers, enforce budget caps, and optionally auto-route requests to cheaper models, with setup framed as “change the API URL + add one header” in the gateway overview and a more concrete setup description in the linked product page.

• Privacy and overhead claims: the pitch says it logs only metadata (token counts, model, timestamps, computed cost) rather than prompts/responses, and claims sub-50ms overhead alongside 40–90% savings via routing, per the gateway overview. Treat these as vendor claims; no third-party validation shows up in the tweets.

GitButler previews the `but` CLI for stacked & parallel branch workflows

GitButler (GitButler): GitButler published a technical preview of its but CLI for use in any Git repo, emphasizing stacked and parallel branches, a smartlog, “easy undo”, and JSON output aimed at automation-friendly workflows, as described in the CLI preview post and detailed in the linked announcement post.

• Why agent teams care: JSON output plus “smartlog” are the kind of primitives that make it easier to build agent-side guardrails (e.g., summarizing branch stacks, detecting diverged worktrees) without screen-scraping, which is the direction hinted by the CLI preview post.

json-render v0.4 adds custom schemas, slots, and Remotion video rendering

json-render v0.4 (json-render): json-render shipped v0.4 with the stated goal “Any JSON, Any Render”, adding custom schema support (e.g., Adaptive Cards), auto-generated AI prompts from component catalogs, nested composition via “catalog slots”, and a new Remotion package for AI-generated videos, as summarized in the v0.4 feature list and echoed by the project homepage.

• Agent-facing UI angle: the release leans into a pattern where agents emit structured JSON artifacts (not raw HTML) and a renderer turns them into deterministic UI; the prompt generation hooks described in the v0.4 feature list push toward repeatable “JSON-first” agent outputs.

LangSmith adds custom cost metadata to track tool/API spend beyond LLM calls

LangSmith cost tracking (LangChain): LangSmith highlighted that it can track costs beyond LLM calls by attaching custom cost metadata to any run (e.g., expensive tool invocations or third-party API calls), with the goal of giving a unified view for monitoring and debugging spend across an agent stack, as described in the cost tracking feature.

This is aimed at a recurring ops problem in longer-running agents: LLM tokens are only part of the bill, and tool calls (browsers, crawlers, CI, data APIs) often dominate once systems scale, which is the framing in the cost tracking feature.

🔌 MCP & agent interoperability: real-time annotations, webhooks, and tool contracts

Interop work shows up as MCP support, agent-to-UI event streaming, and primitives to let agents act safely on external systems. Excludes generic ‘skills’ packaging (covered separately).

Agentation 2.0 streams user annotations to agents with MCP + webhooks

Agentation 2.0 (Agentation): Agentation shipped a major upgrade where an agent can see your annotations “in real time” while it works, positioning annotations as a first-class UI→agent contract in the Launch clip and expanding the interoperability surface via MCP and automation hooks.

• Interop surface: It adds full MCP support plus webhooks for event-driven workflows, according to the Feature list, with more detail in the Release notes.

• Web UI correctness: React component detection and Shadow DOM support aim at the hard edge cases that break “computer use” agents on modern frontends, as listed in the Feature list.

• Tool contracts: An “open annotation format schema” is explicitly called out in the Feature list, which matters if you want annotations to be portable across harnesses and clients.

Agent interfaces are converging on event streams instead of single chat replies

Protocol UX trend: Multiple tool builders are converging on the idea that agent outputs should be a stream of typed events (progress, actions, UI state) rather than a single chat blob—an approach implied by real-time annotation feeds in the Launch clip and echoed by CopilotKit’s mention of the AG-UI event protocol in the AG-UI mention.

A related “steer mid-process” control shows up as an explicit product toggle in the Codex app flow, per the Steering toggle note, reinforcing the same direction: users want visibility and intervention points while the agent is still executing.

The open question is which event schema(s) win—AG-UI, MCP-adjacent conventions, or vendor-specific streams—and how much of that can become a stable contract across clients and harnesses.

CopilotKit reports 12M+ weekly interactions via MCP Apps embedded in ChatGPT and Claude

CopilotKit (MCP Apps): CopilotKit says its MCP Apps extension now powers 12M+ agent-user interactions per week, framing 2026 as the year “agentic applications” become interactive UIs inside major assistants, per the Scale claim.

The same thread claims MCP Apps are already embedded “inside of ChatGPT & Claude,” and hints “big updates” are coming beyond the current 12M/week volume, as described in the Scale claim.

agent-browse-browser v0.9.1 enables local file:// browsing and improved click targets

agent-browse-browser v0.9.1: The CLI added --allow-file-access so agents can open local PDFs/HTML via file:// URLs, and it introduced -C/--cursor capture to detect clickable divs (e.g., onclick / cursor:pointer) in snapshots, as described in the Release notes.

This is a practical interop tweak for agents that need to work against local docs (RFPs, PDFs, internal HTML exports) and against modern web apps where “clickable” isn’t always a <button>, per the Release notes.

💼 Money & enterprise signals: interpretability unicorns, agent accelerators, and adoption metrics

Funding and GTM signals clustered around tooling that makes agents deployable: interpretability, accelerators/credits, and enterprise adoption narratives. Excludes compute buildout (handled in Infrastructure).

Goodfire hits $1.25B to sell “MRI for AI” interpretability as a product

Goodfire (Goodfire): The mechanistic interpretability startup says it raised a $150M Series B at a $1.25B valuation, pitching an “MRI for AI” and even weight-level steering (“brain surgery”) as the core product direction, as described in the raise announcement; the round and valuation are also referenced via the Bloomberg story shared in source link.

This is a clean enterprise signal: budgets are moving from “better prompts” toward tooling that can detect and control tendencies at the model-weight level, which is the kind of capability that becomes easier to buy than to build in-house once teams start deploying agents broadly.

ElevenLabs reports $500M at $11B to push enterprise voice agents

ElevenLabs (ElevenLabs): ElevenLabs is described as raising a $500M round at an $11B valuation to accelerate its enterprise voice/chat agent platform, with emphasis on faster response and turn-taking systems in the funding recap.

The key read for enterprise teams is that “voice quality” is getting packaged as an agent platform capability (latency + turn-taking + expressiveness), not a single-model feature, as implied by the product framing in funding recap.

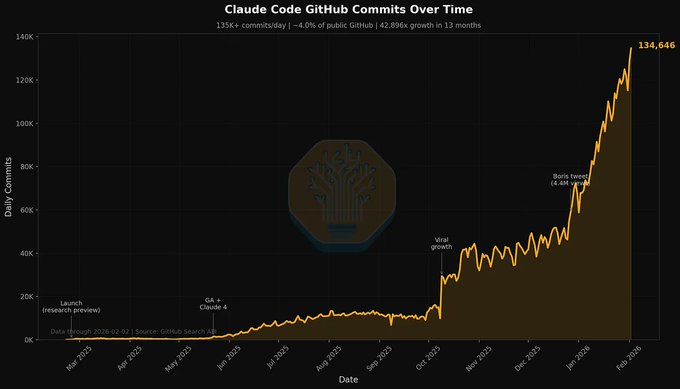

SemiAnalysis pegs Claude Code at ~4% of public GitHub commits

Claude Code adoption proxy (SemiAnalysis): A SemiAnalysis-cited estimate claims ~4% of public GitHub commits are now authored by Claude Code, described as ~2× growth in a month with aggressive projections, per commit share claim (also echoed via retweets like commit share repost).

This is still an indirect metric (public commits only; attribution uncertainty), but it’s being treated as an adoption yardstick for “agentic dev” penetration in the wild, per commit share claim.

YC accepts AI coding transcripts as proof of how you build

YC applications (Y Combinator): YC is described as explicitly accepting AI coding transcripts / “.md files” in the Spring 2026 application process, framed as evidence of how teams build and iterate, per process change note and the follow-up in application artifact take.

The practical implication is that investor diligence is starting to treat “agent workflow artifacts” (not just demos) as first-class signals of execution process, as reflected in process change note.

Vercel reopens its AI Accelerator with $6M in credits for 40 teams

Vercel AI Accelerator (Vercel): Vercel reopened its accelerator with a 6‑week program for 40 teams and “$6M in credits” from Vercel/v0/AWS and partners, as announced in program announcement with details in the program post.

This is a GTM signal that the “agentic app” stack is getting an ecosystem go-to-market lane (credits + distribution + integration), not just model access, per program announcement.

Daytona raises $24M to build “computers for AI agents”

Daytona (agent infrastructure): Daytona, described as “building computers for AI agents,” is reported to have raised a $24M Series A at a $125M valuation, per the funding blurb in funding mention.

It’s a notable niche framing: investors are backing “agent-native compute/desktop environments” as a standalone product category rather than bundling that capability into an IDE or model provider, as implied by funding mention.

🚀 Other model & checkpoint moves (non-feature): open coding MoEs and new checkpoints

Outside the Opus/Codex headline, the feed still surfaced meaningful model/checkpoint movement—especially open-weight coding backbones and Gemini checkpoints. Excludes Opus 4.6 and GPT‑5.3‑Codex themselves.

Artificial Analysis puts Qwen3-Coder-Next on the agentic Pareto frontier

Qwen3-Coder-Next (Alibaba): Following up on Launch—the open-weight 80B/3B MoE release—Artificial Analysis reports new third-party numbers that frame it as a cost-effective backbone for coding agents, with strong tool-use but weaker factual recall, per the AA model writeup.

• Why engineers care: the model’s tool-use score (~80% on τ²-Bench) and agentic knowledge-work Elo (GDPval-AA ~968) suggest it can hold up in real “agent loop” harnesses, not just code completion, as summarized in the AA model writeup.

• Systems knobs that change feasibility: it ships with a 256K context window under Apache 2.0 and only ~3B active parameters (80B total), plus a notably small KV cache (reported 6.0 GB at 262K tokens) according to the KV cache note.

• Trade-offs: AA calls out weaker “knowledge recall” (AA-Omniscience) alongside the agentic/tool strengths, as detailed in the AA model writeup, with additional notes on where it sits on efficiency charts in the Pareto note.

Gemini 3 Pro GA is showing up in Arena battle mode

Gemini 3 Pro GA (Google): Multiple posts claim a “GA/stable” Gemini 3 Pro checkpoint is now intermittently available inside Arena battle mode, with practical guidance for how to hit it during randomized matchups, according to the Arena GA availability and the Battle mode steps.

• How people are identifying GA: one heuristic is that the “GA” variant appears as a Gemini 3 Pro option without the Google logo, as described in the Battle mode steps.

• Why this matters: for teams benchmarking day-to-day prompt behavior, this is an early public surface for a potentially different checkpoint than the already-shipping Gemini 3 Pro, even if it’s not yet exposed in standard product SKUs.

Claims are community-reported and UI-based; treat them as provisional until Google or Arena posts an explicit model card.

MiniCPM-o 4.5 ships a local WebRTC real-time interaction demo via Docker

MiniCPM-o 4.5 (OpenBMB): The team published a Docker-based WebRTC demo for running full-duplex video/audio interaction locally on a Mac, positioning it as an “ultra-low latency” open-source gap-filler, according to the Docker demo announcement.

• What’s distinct: it’s framed as true full-duplex streaming (simultaneous see/listen/speak), not turn-based voice mode, echoing the “full-duplex” emphasis in the Full-duplex perception note.

• Why builders care: a reproducible local container is a practical way to test real-time agent UX loops without a hosted vendor dependency, as described in the Docker demo announcement.

The tweet thread doesn’t include hard latency numbers or hardware requirements; those would need to come from the referenced guide outside the tweet text.

A new Gemini checkpoint is rumored via A/B testing

Gemini checkpoint (Google): A fresh Gemini “checkpoint” was spotted in A/B testing, suggesting Google may be trialing another internal build beyond the visible Gemini 3 Pro variants, per the A/B checkpoint spotted.

The evidence in the feed is observational (no public changelog, no model ID spec), but it’s a useful heads-up for eval teams watching for silent regressions or sudden jumps.

ERNIE 5.0 technical report circulates

ERNIE 5.0 (Baidu): The ERNIE 5.0 technical report is now being passed around in research circles, via the Report pointer that links to the technical report in Technical report.

This is a “read-the-paper” update rather than a product rollout signal; the tweet stream doesn’t include deployment surfaces, pricing, or eval comparisons yet.

🎬 Generative video & visual creation: Kling 3.0 leap and shot-level prompting tactics

Generative media stayed loud: Kling 3.0 dominated with multi-shot, physics-heavy, photoreal video claims and hands-on prompting guides. This section is kept separate so creator tooling doesn’t get dropped on model-release days.

Kling 3.0 prompting guide standardizes shot-first instructions

Kling 3.0 prompting (fal): fal published a Kling 3.0 prompting guide that treats generation as shot planning (not a single clip); it calls out multi-shot generation “up to 6 shots,” plus tactics for subject consistency and motion control, as summarized in the Prompting guide and detailed in the linked Prompting guide. One short rule helps. “Anchor early.”

• Multi-shot structure: write prompts per shot (camera + action + transition) and use shot count as a control knob, per the Prompting guide.

• Consistency: “anchor subjects early” is framed as the lever for keeping characters stable across shots, according to the Prompting guide.

• Motion specificity: the guide recommends explicit motion descriptions (not just style words), as explained in the Prompting guide.

The guide also mentions native audio and dialogue control, per the same Prompting guide.

Single still to multi-shot short film: a repeatable Kling 3.0 workflow

Multi-shot filmmaking workflow (Kling 3.0): A practical recipe is emerging for turning one reference image into a multi-angle short film using Kling 3.0’s Multi‑Shot feature; it leans on breaking a 15s clip into segments and treating each segment as its own shot prompt, as shown in the Multi-shot walkthrough. It’s procedural. That’s why it works.

• Shot decomposition: segment the 15 seconds into multiple prompts (camera angle + action per segment) to preserve continuity, per the Multi-shot walkthrough.

• Consistency repair: when a character drifts, capture stills and regenerate frames to “keep consistency,” as described in the Consistency fix tip.

The point is fewer “one big prompt” attempts, more shot-level control.

Higgsfield pushes Kling 3.0 “action & physics” and sells unlimited access

Kling 3.0 on Higgsfield (Higgsfield): Following up on Unlimited promo—earlier discount framing—Higgsfield is now explicitly selling unlimited Kling 3.0 with a “2‑year exclusive offer” and “85% off” messaging in the Unlimited plan offer, while leading with a new capability pitch around action and physics‑heavy scenes in the Physics scenes demo. This is commercial, not a model spec change. It matters.

• Capability framing: the demos emphasize tackles/impacts, smoke, and chase-style motion, as shown in the Physics scenes demo and reinforced by “smooth, photoreal” positioning in the Launch reel.

• Access economics: the offer is being marketed as “unlimited,” which changes how teams can iterate (longer prompt sweeps, more shot variants) compared to per‑clip pricing, per the Unlimited plan offer.

AI video “hard to tell” reactions become a recurring distribution channel

Uncanny valley sentiment: A recurring pattern today is that short “hard to distinguish” clips are doing distribution work for these models—people aren’t arguing about features, they’re reacting to realism. One widely shared example explicitly claims “100% AI generated” and “I couldn’t tell anymore,” per the Hard to tell clip. That’s a product signal. It’s also an ops signal.

From an engineering lens, this tends to push teams toward provenance tooling (watermarks, signing, asset lineage) because “what model made this?” becomes a real question after the share. The tweet itself doesn’t provide detection metadata, per the same Hard to tell clip.

Artificial Analysis opens a video+audio comparison arena

Video with audio arena (Artificial Analysis): Artificial Analysis launched a new Arena focused on models that generate both video and sound, aiming to separate “silent video quality” from “video+audio output,” as described in the Video with audio arena. This is framed as a new leaderboard lane. It’s about product fit.

The initial lineup cited includes Veo 3.1, Grok Imagine, Sora 2, and Kling 2.6 Pro; clips are standardized to 10 seconds at 720p, and voters must watch at least 5 seconds before voting, per the same Video with audio arena.

Genie 3 clips highlight “expected interactions” in generated worlds

Genie 3 (Google DeepMind): A small but telling signal: people are sharing clips where the “expected thing” happens in a generated world—e.g., a squirrel approaches a tree and climbs it—suggesting better default affordances and interaction reliability, per the Squirrel climbs tree demo. It’s not a benchmark. It’s a feel test.

Another thread describes how these worlds are often built by seeding Genie 3 with a generated image of a megastructure/city, then wandering; results have randomness but “rarely fail disastrously,” according to the World-building workflow note. That points to a stable primitive: image-to-world scaffolding.

Arena adds price-performance framing for image-to-video models

Video Arena (Arena): Arena published a Pareto frontier view that plots Arena Score vs price per second for image‑to‑video models, explicitly reframing “best model” as “best at a price point,” per the Pareto frontier summary. This is a selection aid. It’s also a market signal.

• Current frontier callouts: the Pareto list name-checks xAI’s Grok Imagine Video (720p and 480p), Seedance v1.5 Pro, and Hailuo 02 Standard, as enumerated in the Pareto frontier summary.

• Leaderboard motion: a separate Arena update puts Vidu Q3 Pro into the top 5 of Video Arena with a claimed +23pt lead over the next ranking model, per the Leaderboard update.

The tweets don’t include the full underlying price table or methodology; treat the exact placements as provisional absent a canonical artifact beyond the Pareto frontier summary.

🎙️ Voice agents: open STT, realtime pipelines, and assistant voice mode upgrades

Voice-related updates were mostly about transcription and realtime interaction primitives, plus assistant voice-mode UX leaks. Excludes creative music generation (not present as a major thread here).

Voxtral Transcribe 2 and Voxtral Realtime ship with open-weight streaming STT

Voxtral (Mistral): Mistral shipped a new speech-to-text suite—Voxtral Mini Transcribe V2 for batch transcription (speaker diarization, context biasing, 13 languages) and Voxtral Realtime for sub-200ms streaming, with the realtime model released as open weights under Apache 2.0 according to the Model suite summary. Pricing called out is $0.003/min for the batch model in the same announcement, alongside a claimed 4% WER on FLEURS and comparisons vs other services in the Model suite summary.

• Deployment shape: this is positioned as “batch + streaming” rather than a single do-everything endpoint, which maps cleanly to voice-agent pipelines (offline indexing vs live turn-taking) as described in the Model suite summary.

• Tooling surface: Mistral also mentions a new audio playground in Mistral Studio in the same drop, per the Model suite summary.

A secondary signal in community recaps is that Voxtral is being framed as finally dethroning Whisper after ~3 years, as stated in the Weekly recap note.

Upgraded Claude voice mode spotted in testing for desktop and mobile

Claude voice mode (Anthropic): An upgraded voice mode for Claude desktop and mobile is being reported as “spotted in testing,” with an early UI walkthrough shown in the Early voice demo and a longer write-up linked in the TestingCatalog follow-up.

What’s materially new here (vs typical “voice input” UX) is the suggestion of a more structured voice interaction flow on both desktop and mobile clients, based on the in-app demo in the Early voice demo; Anthropic has not, in these tweets, posted an official release note or rollout timeline.

Gemini Interactions API: transcribe audio from a URL with timestamps and speaker labels

Gemini Interactions API (Google): A simple voice-agent pattern is circulating for transcribing audio directly from a URL while requesting timestamps and speaker separation; the prompt template and output format are shown in the Prompt recipe, with a follow-up pointing to docs in the Docs pointer.

The concrete output contract being suggested is a line-oriented transcript of the form [Time] [Speaker]: [Text], which makes it easy to feed the result into downstream systems (summarization, action item extraction, CRM notes) without needing to parse free-form prose, as laid out in the Prompt recipe.

Grok voice messaging demo shows record-and-submit flow

Grok voice messaging (xAI): A Grok voice UX demo shows a simplified “record your voice, hit submit” interaction, with Grok handling the rest of the message lifecycle as shown in the Voice messaging clip.

<InlineVideo src="https://video.twimg.com/amplify_video/2019192900232548352/vid/avc1/1080x1080/o-ZgwMfBpzlSrb8P.mp4?tag=21" poster="https://pbs.twimg.com/amplify_video_thumb/2019192900232548352/img/PzH00wZHlAEYwIL1.jpg" caption="Voice messaging flow" width="1080" height="1080" />

არულ

👥 Workforce & culture: agent managers, displacement narratives, and adoption friction

The discourse itself was news: debates on how quickly white-collar workflows change, what ‘managing agents’ means, and how orgs handle adoption. Kept separate from product updates.

Forecast: spreadsheet/docs/memo roles “gone in two years” as agents take over

Workforce displacement discourse: A widely shared claim argues it’s becoming an anachronism to see offices full of people manually editing spreadsheets, reading docs, and writing memos—predicting these workflows are largely replaced within 2 years, with a follow-up clarifying the near-term impact as “the opportunity to be hired into a role like this will be gone” rather than the jobs instantly disappearing, as stated in Office work prediction and Hiring opportunity clarification. The point isn’t the exact timeline; it’s that “office-native” work is increasingly framed as a default target for agent automation rather than a protected domain.

Role shift: “agent manager” skills emphasized over hands-on execution

Agent management as leverage: Several threads converge on a practical framing: with current agent capability, “alpha” comes from being good at process—explaining what matters, supplying the missing context, and evaluating outputs quickly—rather than doing every step yourself, as argued in Management skill leverage. Related notes push the idea that any well-formed SOP/RFP/standards doc can become agent input, per SOPs as agent fuel, while also pushing back on the caricature that management becomes “talking to AI” instead of coordinating humans, as discussed in Managers coordinate humans. A separate practitioner take says managing agents is “nothing like managing” people, highlighting that the new skill is closer to specifying constraints and reviewing artifacts than supervising interpersonal dynamics, per Agent management difference.

Adoption friction: under 20% of college students use ChatGPT beyond school/job search