GPT-5.3-Codex adds high-cyber gating – claims 25% faster, 90% Next.js

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

OpenAI began a phased GPT-5.3-Codex rollout across Cursor, GitHub, and VS Code; OpenAI says it’s the first model classified as “high cybersecurity capability” under its Preparedness Framework, so broader API access is explicitly coupled to mitigation work. The launch post claims ~25% faster performance vs GPT-5.2-Codex plus SOTA results on SWE-Bench Pro and Terminal-Bench; independent artifacts aren’t bundled in the chatter. Sam Altman also says the Codex app hit 1M downloads in week one and usage grew 60%+ WoW; Free/Go access continues post-promo with tighter limits implied.

• Vercel eval signal: Vercel’s Next.js harness shows GPT-5.3 Codex (xhigh) at 90% success on 20 tasks vs Claude Opus 4.6 at 80%; narrow domain + single setup caveats apply.

• Partner rollout friction: @code said GPT-5.3-Codex briefly appeared, then the VS Code rollout was paused for users who didn’t see it yet.

• UX/behavior notes: Cursor reports 5.3 “noticeably faster than 5.2”; one user says “discuss” no longer reliably prevents tool execution, while “give me options” does.

Top links today

- OpenAI ads in ChatGPT announcement

- GPT-5.3 Codex rollout details

- OpenAI unified developer hub

- HBR on AI productivity and burnout

- FullStack-Agent paper and benchmark

- vLLM PR adding GLM-5 support

- Claude Opus 4.6 for nonprofits

- Perplexity Deep Research on Opus 4.6

- Aurora Alpha model page on OpenRouter

- CNBC on BNY Mellon digital employees

Feature Spotlight

GPT-5.3‑Codex rolls into IDEs (Cursor/GitHub/VS Code) with “high cyber” gating

GPT‑5.3‑Codex is now landing directly where engineers work (Cursor/GitHub/VS Code) while being treated as “high cyber” capability—forcing teams to plan for phased access, safeguards, and new baseline agent speed.

High-volume cross-account story: OpenAI’s GPT-5.3‑Codex expands across major coding surfaces, with explicit Preparedness “high cybersecurity capability” handling and immediate developer benchmark/UX feedback. (Excludes ads/monetization and non-Codex model news.)

Jump to GPT-5.3‑Codex rolls into IDEs (Cursor/GitHub/VS Code) with “high cyber” gating topicsTable of Contents

🧑💻 GPT-5.3‑Codex rolls into IDEs (Cursor/GitHub/VS Code) with “high cyber” gating

High-volume cross-account story: OpenAI’s GPT-5.3‑Codex expands across major coding surfaces, with explicit Preparedness “high cybersecurity capability” handling and immediate developer benchmark/UX feedback. (Excludes ads/monetization and non-Codex model news.)

GPT-5.3-Codex begins phased IDE rollout with Preparedness “high cybersecurity” handling

GPT-5.3-Codex (OpenAI): OpenAI began rolling out GPT-5.3-Codex to Cursor, GitHub, and VS Code as a phased release, and says it’s the first model treated as high cybersecurity capability under its Preparedness Framework—so API expansion is tied to scaling mitigations, per the rollout announcement and Altman’s note about the “extra work” in rollout note.

OpenAI frames this as a “small set of API customers” start with broader access “over the next few weeks,” as written in the rollout announcement, with the public release details centralized in the launch post.

OpenAI’s GPT-5.3-Codex post emphasizes 25% speedup and stronger agentic coding

GPT-5.3-Codex (OpenAI): OpenAI’s launch write-up claims ~25% faster performance than GPT-5.2-Codex plus gains in reasoning and “professional knowledge,” and highlights state-of-the-art results on SWE-Bench Pro and Terminal-Bench, as described in the launch post that OpenAI Devs shared in rollout announcement.

The same post also positions the model as more capable on long-running, tool-heavy coding tasks (including internal use for debugging its own deployment), which is part of why the rollout is being handled more cautiously, as OpenAI reiterates in rollout announcement.

Codex app passes 1M downloads in week one; Free/Go access stays (with possible limits)

Codex app (OpenAI): Altman says the Codex app hit 1M downloads in its first week and overall Codex usage grew 60%+ week over week, as posted in download and growth stats.

OpenAI also says it will keep Codex available to Free/Go users after the promotion—though limits may be reduced—per the same download and growth stats, with a separate confirmation that Free access is staying in Free access note.

Cursor adds GPT-5.3 Codex and calls it faster than 5.2

GPT-5.3 Codex in Cursor (Cursor/OpenAI): Cursor says GPT-5.3 Codex is now available and “noticeably faster than 5.2,” with internal engineers already preferring it, according to the Cursor availability note and follow-on enthusiasm in Cursor user reaction.

Cursor leadership also notes the integration required additional safeguards because OpenAI labeled the model “high cybersecurity risk,” as described in the Cursor safety note.

Vercel posts Next.js agent evals showing GPT-5.3 Codex (xhigh) at 90%

Next.js agent evals (Vercel): Vercel’s shared evaluation table shows GPT-5.3 Codex (xhigh) at a 90% success rate on 20 Next.js generation/migration tasks, ahead of Claude Opus 4.6 at 80%, as shown in evals screenshot and reflected on the public page linked via the benchmarks chart.

Treat it as a narrow harness signal (Next.js-specific tasks + one evaluation setup), but it’s the most concrete numeric comparison in today’s rollout chatter, as presented in evals screenshot.

Early adoption chatter shifts toward Codex 5.3 as a fast default

Codex 5.3 usage sentiment: Multiple builders describe switching their default to Codex 5.3 primarily due to speed while retaining “most of the intelligence,” with one saying it’s “becoming my primary model” in daily driver note and Cursor reinforcing it’s now “preferred… for many of our engineers” in Cursor availability note.

The same thread-space frames the tradeoff versus Claude Opus as execution vs deliberation—“Opus feels like a better thinker, 5.3… a better doer,” as written in model comparison note—and highlights price as a practical factor (“way cheaper than Opus 4.6”), as claimed in cost comment.

There’s also visible disagreement about relative quality (some say Opus 4.6 felt worse than 4.5), which shows up as uncertainty rather than consensus, per model switching note.

Codex 5.3 prompt tweak: “give me options” to reduce auto-execution

Prompting pattern (Codex 5.3): One practitioner reports Codex 5.3 is “more trigger-friendly” (more likely to start writing/running code), and that a lightweight “discuss” directive no longer reliably prevents execution—switching to “give me options” works better for keeping it in advisory mode, per prompting behavior note.

This is a small but concrete workflow adjustment for teams that rely on a “talk-first” pass before letting the agent touch the repo, as described in prompting behavior note.

Reverse-engineered Codex CLI strings hint at multi-agent and “Codex Cloud” features

Codex CLI (OpenAI) – reverse engineering: A binary sleuthing thread claims Codex CLI v0.98.0 contains strings and flags implying unreleased features like multi-agent collaboration, remote cloud execution, a skills catalog, structured code review, and ghost commits/rollbacks, as laid out in feature list screenshot and echoed in the retweet in thread amplification.

None of this is confirmed on official roadmaps in today’s tweets; it’s best read as “likely-in-progress product surface area,” with the concrete artifact being the string/flag inventory shown in feature list screenshot.

Codex 5.3 “effort” setting: reports that High matters more than Medium

Runtime setting pattern (Codex 5.3): A user reports that dropping Codex 5.3 down to Medium cost/effort led to worse outcomes than expected, and that High is needed to get the “good work” the model can do, per effort setting note.

It’s an early datapoint that “effort” selection may be more than a latency knob for 5.3-style agent tasks, as implied by effort setting note.

OpenAI moves Platform API docs into a unified developer hub

Developer docs (OpenAI Devs): OpenAI says its Platform API docs now redirect into a unified developer hub that consolidates API docs, guides/cookbooks, and Codex + ChatGPT app content, as announced in docs consolidation note with the landing page at developer hub.

For teams maintaining internal runbooks, this is a concrete doc-surface change (URLs, navigation, canonical references) rather than a model capability update, as stated in docs consolidation note.

🧠 Cursor Composer 1.5: RL-scaled coding model + self-summarizing long-task UX

Cursor ships and markets Composer 1.5 as a speed/intelligence tradeoff model, with training details (RL scaled far past v1) and explicit long-task context handling via self-summarization. (Excludes the GPT-5.3‑Codex rollout story.)

Cursor releases Composer 1.5 for coding workflows

Composer 1.5 (Cursor): Cursor says Composer 1.5 is now available and is meant to “strike a strong balance between intelligence and speed,” per the Release announcement and the linked Release blog. It’s framed as a model you can keep in your interactive loop. That’s the practical change.

• Rollout surfaces: Cursor is pushing it directly inside the product UI, with “learn more” details consolidated in the Cursor post.

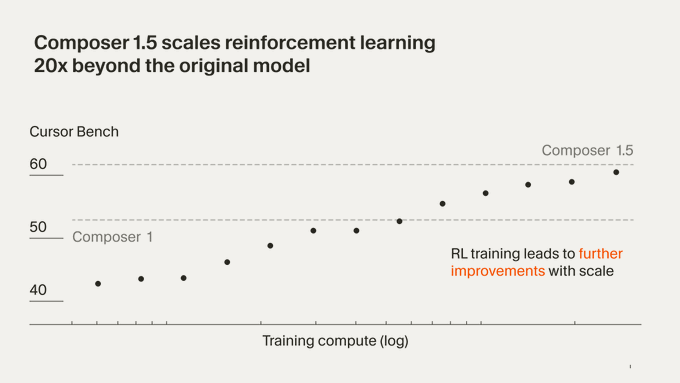

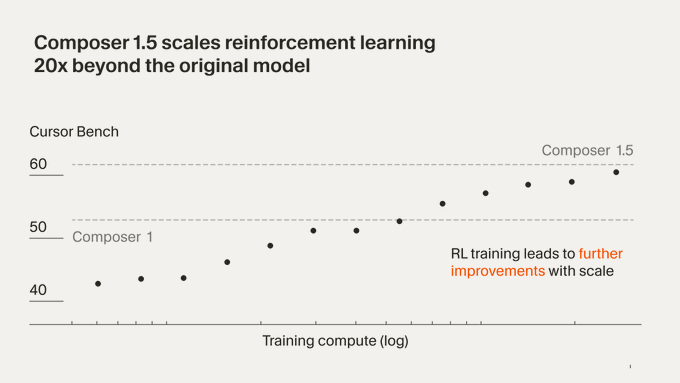

Composer 1.5 claims 20× more RL than Composer 1

Composer 1.5 (Cursor): Cursor attributes the jump from Composer 1 to 1.5 primarily to scaling reinforcement learning ~20× further on the same pretrained model, as described in the Release blog. It also claims the RL phase used more compute than the original pretraining run.

This is a concrete training signal. It implies the product improvements are coming from post-training scale, not a new foundation model.

Composer 1.5 ships self-summarization for long tasks

Composer 1.5 (Cursor): Cursor says Composer 1.5 can “handle longer tasks” via self-summarization when it hits context limits, aiming to preserve accuracy across varying context lengths as outlined in the Release blog. Community chatter repeats this as “infinite context” behavior, while noting it’s ultimately a summarization strategy rather than a larger window, per the User recap.

This is directly about long-running agent sessions. It’s the UX fix many teams actually notice.

Builders start using Composer 1.5 for subagents and rapid iteration loops

Workflow usage (Cursor): Multiple builders are describing Composer 1.5 as a good fit for rapid iteration and subagent work—“rapid iterations in flow state” in the Iteration post and “love using this model for subagents” in the Subagent comment.

This is a clear deployment pattern: keep a fast-enough model on sub-tasks to reduce latency in multi-agent harnesses.

Composer 1.5 adds adaptive “thinking tokens” behavior

Composer 1.5 (Cursor): Cursor describes Composer 1.5 as using thinking tokens to modulate reasoning depth—quick on simpler prompts and more deliberate on harder ones, as explained in the Release blog. This is the kind of behavior that changes how agents feel in-editor.

It’s a specific knob/behavioral contract. It’s not just “smarter.”

Composer 1.5 pricing chatter centers on Sonnet 4.5 comparisons

Cost/speed tradeoff (Cursor): At least one early reaction flags that Composer 1.5 is “more expensive than Sonnet 4.5,” paired with an expectation that quality should justify that delta, per the Cost comparison.

There’s no pricing table in these tweets. It’s a sentiment signal.

Cursor Bench plot sparks confusion about what’s being compared

Evaluation communication (Cursor): A user asks whether the Cursor Bench graphic is comparing Composer 1 vs 1.5 via dashed lines or first/last points, highlighting ambiguity in how the plot should be read in the Plot question. The chart itself claims RL was scaled 20×, but the visual mapping isn’t obvious.

This is a small but real issue: teams relying on internal eval plots need unambiguous legends.

🧰 Claude Code & Claude apps: CLI hardening, memory rules, and mobile “Tasks” hints

Anthropic/Claude tooling chatter centered on Claude Code CLI 2.1.38 fixes (including security hardening), default-model control workarounds, and early signs of a Tasks mode in mobile apps. (Excludes GPT-5.3‑Codex rollout.)

Claude Code CLI 2.1.38 hardens heredoc parsing and blocks .claude/skills writes in sandbox

Claude Code CLI 2.1.38 (Anthropic): 2.1.38 includes a security-focused tweak to heredoc delimiter parsing intended to reduce command-smuggling risk, and it also blocks writes to the .claude/skills directory when running in sandbox mode, per the changelog thread.

This is a concrete tightening of the “agent can run shell” threat surface, and it lands alongside other small CLI correctness fixes outlined in the changelog at Changelog.

Claude Code workaround remaps default Haiku subagents to Sonnet/Opus via settings.json

Claude Code (Anthropic): A practical workaround is circulating to permanently prevent default sub-agents from using Haiku by remapping the alias with an env var in ~/.claude/settings.json, using ANTHROPIC_DEFAULT_HAIKU_MODEL to point at a Sonnet/Opus variant, as described in the settings snippet with additional caveats in the Max plan note.

This matters in large repos where cheaper sub-agents can miss key logic, and it’s one of the few “sticky” knobs people have for sub-agent model selection right now.

Claude Code 2.1.38 updates auto-memory prompts with explicit save/avoid rules

Claude Code (Anthropic): Prompt-level changes in 2.1.38 tighten what Claude should store as durable memory—shifting from generic “record learnings” language to explicit rules like saving stable cross-session patterns (architecture, preferences, recurring fixes) while avoiding session-specific state and unverified notes, as summarized in the prompt diff notes and reflected in the prompt diff at Version compare.

• Task example markup fix: The Task tool examples also fix a mismatched closing tag in the example agent descriptions, which reduces “example parsing” ambiguity for the model, per the example block fix.

Claude Code CLI 2.1.38 fixes VS Code scroll regression and session UX bugs

Claude Code CLI 2.1.38 (Anthropic): The 2.1.38 release ships a cluster of CLI/VS Code usability fixes—most notably a VS Code terminal scroll-to-top regression from 2.1.37, Tab autocomplete queuing slash commands, and duplicate sessions when resuming inside the extension, as listed in the changelog thread and detailed in the release notes via Changelog.

These are small but high-frequency papercuts for people living in terminal-first agent loops, especially when sessions are resumed repeatedly across days.

Claude Code ops guidance: explicitly force Sonnet/Opus for subagents on large repos

Claude Code (Anthropic): A second, complementary pattern is to explicitly instruct the parent agent to use Sonnet or Opus for sub-agents—because Explore defaults to Haiku and Task is inherited—reducing missed logic risk on complex codebases, as shown in the subagent model output.

This is framed less as “taste” and more as reliability control: the model choice for background exploration can change what facts even make it into the main agent’s context.

Claude Code Read() tool supports native PDF reads with page chunking

Claude Code (Anthropic): Builders are calling out that Claude Code’s Read() tool can ingest PDFs directly (file drop or path) and that it behaves better than pre-converting to Markdown for doc-heavy workflows, as shown in the PDF Read tool notes.

The same notes highlight operational constraints that matter for long documents: large PDFs need the pages parameter and appear capped at 20 pages per request, which pushes you toward chunked reads and targeted section pulls rather than one-shot ingestion.

Claude mobile app shows a new “Tasks” section with “New task” CTA

Claude mobile apps (Anthropic): Screenshots suggest Anthropic is testing a dedicated “Tasks” section in the Claude mobile navigation, including an empty-state “Your tasks will show up here” screen and a “New task” button, per the Tasks UI screenshots.

This reads like a UI surface for longer-lived, async-ish work units on mobile (distinct from chat threads), but it’s still only a UI sighting—no public behavior guarantees or API surface are visible yet.

Anthropic expands Opus 4.6 access to nonprofits on Team/Enterprise at no extra cost

Claude Opus 4.6 (Anthropic): Nonprofits on Team and Enterprise plans now get Opus 4.6 access at no additional cost, according to the policy announcement with program details on the eligibility page at Nonprofit access page.

For organizations already standardized on Claude in regulated settings, this is a straightforward policy shift that changes which tier “most capable model” is available under.

Claude Code 2.1.38 removes a usage-limit notifications feature flag

Claude Code CLI 2.1.38 (Anthropic): The 2.1.38 flag set reportedly drops tengu_c4w_usage_limit_notifications_enabled, per the flag removal note with the underlying change visible in the version diff at Version compare.

The tweets don’t clarify whether this means the behavior became default, moved elsewhere, or was fully removed; it is at least a configuration surface change people may notice if they were depending on those notifications.

Claude chat app UI refresh circulates via screen recording

Claude app (Anthropic): A short screen recording of what’s described as a “new UI” for Claude is being shared, showing a cleaner chat surface and fast response interaction in the UI recording.

No official release notes are referenced in the tweets, so it’s unclear which platforms/tiers this applies to or whether it’s an experiment versus a broad rollout.

🧩 Skills & extensions boom: universal loaders, web-research packs, and safer execution

Installable skills/plugins and ‘skills directories’ accelerated: universal skill loaders, web research packs, and “run skills with any LLM” alternatives. (Excludes MCP/protocol plumbing and excludes the GPT-5.3‑Codex rollout.)

Acontext open-sources a model-agnostic Agent Skills API with stdout/stderr visibility

Acontext (memoDB): Acontext open-sourced an “Agent Skills API” positioned as an alternative to provider-locked skills systems; it’s pitched as model-agnostic (via OpenRouter), gives developers visibility into skill runs (stdout, stderr, artifacts), and is self-hostable—details are described in the Launch summary and linked from the GitHub repo and Docs page.

The core claim is operational: instead of the LLM implicitly deciding when a skill runs, the app layer can gate execution (approvals/guardrails) while still supporting the same “skills” abstraction, as outlined in the Launch summary.

OpenSkills adoption signal: 8.1K stars and a universal loader pattern

OpenSkills (Open source): OpenSkills is being framed as a cross-agent distribution layer (“one CLI, every agent”), with reported traction at 8.1K GitHub stars and 21,000/month npm downloads, plus a convention of syncing skills into AGENTS.md and loading them via CLI, as described in the Project stats and its GitHub repo.

The emphasis in the tweets is less on a new release and more on the standardization pattern: a single skill format that can travel between different coding agents/harnesses without rewriting tool glue, per the Project stats.

Parallel agent skills pack bundles four web research primitives

Parallel agent skills (parallel-web): A “four skills” web pack is being promoted as the minimal set to make agents useful for knowledge work—web search, web extract, data enrichment, and deep research—with install flows shown for Claude Code via plugin marketplace and a /parallel:setup step, as laid out in the Four-skill description and follow-up install notes in the Claude Code setup steps.

The concrete engineering angle is packaging: one named skill pack intended to be reused across multiple agent UIs while keeping execution behavior legible (search → fetch URLs → enrich lists → synthesize), as described in the Four-skill description.

OpenClaw details VirusTotal-backed scanning and daily re-scans for skills

OpenClaw (ClawHub skill security): Following up on Skill scanning (initial VirusTotal integration), OpenClaw is now describing automatic scanning for every published skill, plus daily re-scans and blocklisting for flagged threats, as summarized in the Partnership details.

The operational detail added here is lifecycle scanning (pre-approval checks plus recurring rechecks), rather than a one-time “review at publish time,” as spelled out in the Partnership details.

Playbooks skill scan: one command to flag suspicious local skills

Playbooks (skills hygiene): A one-liner is circulating for auditing locally installed agent skills—npx playbooks scan skills—with the intent to surface flagged skills and remove anything suspicious, as demonstrated in the Command demo.

This is framed as supply-chain hygiene for agent ecosystems (skills as executable units), with the workflow described directly in the Command demo clip.

skills.sh positions “npx skills add …” as a portable skill distribution layer

skills.sh (Agent Skills Directory): The skills.sh directory is being highlighted as a shared install surface for “agent skills” across many agents (Claude Code, Copilot, and others) using an npx skills add owner/repo flow, as shown in the Install snippet and described on the Agent skills directory.

The thread also points to skills packs being installable outside a single vendor’s plugin marketplace, with --global and “install all” semantics referenced in the Install snippet, which frames skills as a portability primitive rather than a single-tool feature.

Google publishes gemini-skills, a public skills library for Gemini API workflows

gemini-skills (Google Gemini): Google published a public “Gemini Skills” repository described as a library of skills for Gemini API/SDK and model interactions, as announced in the Repo announcement and visible in the

.

The repo description positions it as reusable guidance/components for Gemini model interactions, with install and usage conventions described on the GitHub repo.

Keep.md exposes bookmarks as a Markdown API for agents

Keep.md (agents + content capture): Keep.md is pitched as a way to turn any Chrome tab, X bookmark, or URL into a Markdown API that agents can poll on a schedule for summarization or drafting workflows, as described in the Product description and on the Keep.md page.

The product framing includes a free tier (up to 50 links) and paid plans, per the Keep.md page, with the core engineering idea being “content capture → normalized markdown → agent retrieval” rather than bespoke scrapers.

🕹️ Running agents in practice: harnesses, parallel workspaces, and “ops friction” reports

Ops-focused tooling and lived experience running many agents: parallel workspaces, dashboards, long-running runs, and reliability pain points. (Excludes installable skills/plugins and excludes the GPT-5.3‑Codex rollout.)

Codex app long-session lockups push some users back to terminal workflows

Codex app (OpenAI): A practitioner report says the Codex app “kept locking up” on long sessions, driving a return to terminal-based setups despite liking the app’s form factor, as described in Back to terminals note.

The same post calls Claude Code “mediocre” in that specific usage and mentions trying alternative monitoring tooling next, which is a reminder that agent UX can be gated by long-session stability as much as model quality.

Verdent refreshes its parallel agent workflow with isolated workspaces and plan-first execution

Verdent (Verdent AI): Verdent’s latest upgrade is being framed around parallel multi-agent execution with isolated workspaces and a plan-first “Plan → Code → Verify” loop, alongside benchmark claims of 76.1% on SWE-bench Verified (single attempt) and 81.2% pass@3 as summarized in Upgrade overview.

• Surface area: It’s presented as both a macOS desktop app (“Deck”) for orchestrating multiple workspaces and a VS Code extension, per the same Upgrade overview thread.

• User controls: The pitch emphasizes visible diffs, verification, and the ability to compare/merge competing implementations across workspaces, as described in Plan-first execution note.

The evidence here is vendor-reported; the thread doesn’t include a public eval artifact beyond the quoted SWE-bench numbers.

🧿oracle 0.8.5 focuses on long-running agent stability (timeouts, zombies, browser controls)

🧿oracle (steipete): Version 0.8.5 is out with stability work aimed at using GPT-5.2 Pro from agents, including new CLI flags for long-running sessions (timeouts and “zombie” detection) plus MCP browser controls and a bridge workflow, as summarized in Release mention and detailed in the linked Release notes.

The changes are about preventing stuck sessions and preserving progress, which is the failure mode most teams hit once agents run for hours rather than minutes.

RepoPrompt agent mode highlights interactive context building and mid-run cancellation

RepoPrompt (Agent mode): A user report highlights an ops-oriented difference versus “other harnesses”: the context builder runs interactively in the UI, progress is visible, and runs can be cancelled mid-flight, as shown in Context builder UI.

This is less about model capability and more about controlling long-running work (watching what the agent is doing, stopping it early, and re-running with different context selection).

Superset pitches a terminal UI for running multiple coding agents in parallel

Superset (superset.sh): Superset is being positioned as a “terminal for coding agents” that can run multiple CLI agents in parallel while keeping changes isolated via git worktrees, as described on its product site in Superset landing page. It’s also being shared as a “clone this repo and build your AI coding workspace” starting point in Workspace clone suggestion, pointing to an open-source repo as the distribution surface.

The practical point is operational: one place to supervise concurrent agent runs, rather than juggling multiple terminal windows and branches.

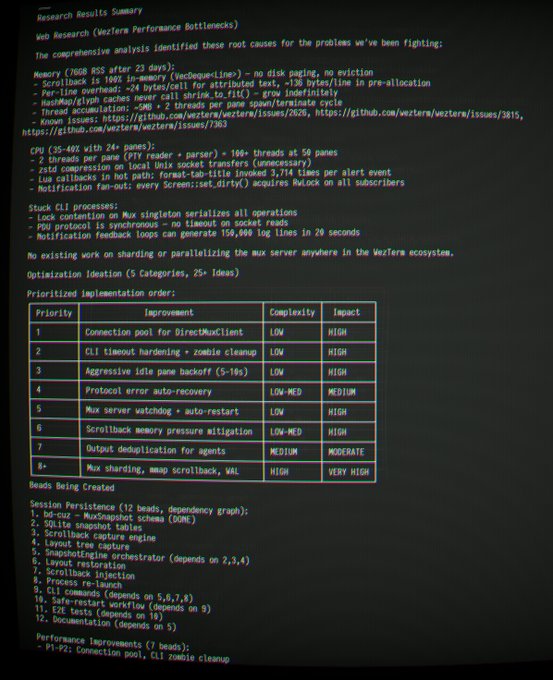

WezTerm under agent-swarm load prompts a “FrankenTerm” fork for persistence and perf

FrankenTerm (terminal ops): A detailed field report says WezTerm “dies horribly under the extreme load” of agent swarm sessions (memory leak in the mux server; no good way to rescue/serialize sessions), motivating a fork/rename effort to “FrankenTerm” for full-stack control, as described in WezTerm overload notes.

• Failure mode: The post highlights lost work/time when the terminal can’t sustain many panes and long-running agent output, per WezTerm overload notes.

• Persistence pattern: It also calls out a “git-backed .jsonl + git-ignored sqlite” dual-store pattern for recoverable state in these workflows, again in WezTerm overload notes.

This is an ops friction story, not a model story: if your UI can’t survive long sessions, you’ll be forced back into shorter loops even when the agent could keep going.

OpenClaw teams experiment with “claws-only” ops channels and multi-agent pitching

OpenClaw (community ops): Teams are creating dedicated “claws-only” channels where multiple OpenClaw instances interact and coordinate, as shown in a Discord screenshot in Claws-only channel.

• Multi-agent editorial workflow: One example is having three OpenClaws discuss and pitch publishable story ideas, with results shared in Agents pitch stories.

• Live evaluation culture: There’s also a push to livestream a “battle royale” task competition with audience voting, per Battle royale suggestion.

It’s a real signal that “agent ops” is becoming a social/team practice, not just a solo developer workflow.

Browserbase + OpenClaw gets pitched for delegated web tasks like cancellations

Browser automation (OpenClaw + Browserbase): A concrete use case being discussed is wiring Browserbase into OpenClaw to handle delegated “go cancel my subscription to X” tasks, as described in Browserbase integration idea.

This is a small but telling ops pattern: the agent value shifts when it can drive a real browser session under supervision, not just generate instructions.

📊 Benchmarks & eval signals: arenas, deep-research scoring, and better leaderboards

Evaluation chatter that’s actionable for model selection: Arena standings, Deep Research benchmark deltas, and updated evaluation methodology for image models. (Excludes GPT-5.3‑Codex-specific benchmarks, covered in the feature.)

Claude Opus 4.6 takes #1 across Code and Text Arena leaderboards

Claude Opus 4.6 (Anthropic): Arena reports Opus 4.6 thinking landing at #1 across both Code and Text Arena, with thinking + non-thinking taking the top-2 slots on both leaderboards, per the Arena leaderboard claim and the linked Code Arena link that points to the live Code Arena leaderboard. This is a clean “model selection” signal because it reflects paired comparisons across a wide prompt mix rather than a single vendor eval.

• Leaderboard positions: Arena’s post calls out Code Arena score 1576 and Text Arena score 1504, while also claiming Anthropic holds 4 of the top 5 in Code Arena, as stated in the Arena leaderboard claim.

Treat it as a directional signal; the tweets don’t include a reproducible prompt set or run artifacts beyond the public leaderboards.

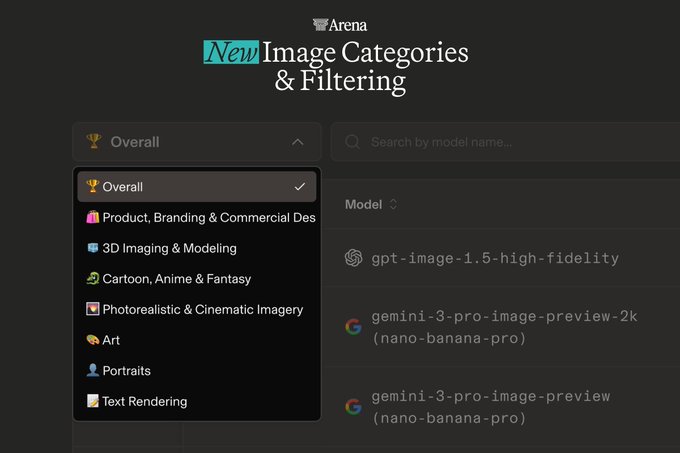

Image Arena splits into prompt categories and filters noisy prompts

Text-to-Image Arena (Arena): Arena is updating its Image Arena methodology by adding category-specific leaderboards and applying prompt quality filtering after analyzing 4M+ user prompts, as described in the Methodology update thread.

• New prompt categories: Arena lists seven categories (Product/branding, 3D, Cartoon/anime/fantasy, Photoreal/cinematic, Art, Portraits, Text rendering) in the Category list follow-up.

• Quality filtering: Arena says it removed roughly 15% of “noisy or underspecified” prompts and recomputed rankings for more stable results, per the Filtering note post and the public Leaderboard page.

This matters for model selection because it reduces “one number to rule them all” effects and makes it easier to pick a model by workload (logos vs photoreal vs typography).

Perplexity Deep Research upgrades its backend to Claude Opus 4.6

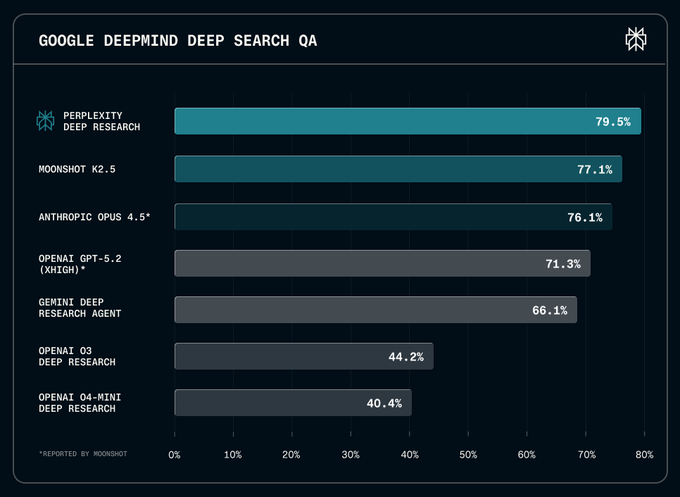

Perplexity Deep Research (Perplexity): Perplexity says Deep Research now runs on Claude Opus 4.6, rolling out to Max users first, and claims improvements on internal + external benchmarks in the Backend model switch post.

• Benchmark delta snapshot: The shared bar chart shows “Perplexity Deep Research” at 81.9% on “GOOGLE DEEPMIND DEEP SEARCH QA,” ahead of Moonshot K2.5 (77.1%) and “Anthropic Opus 4.5*” (76.1%), as shown in the Backend model switch image.

The post doesn’t detail prompt policy, citations strategy, or retrieval stack changes—only the model swap—so attribution of gains to Opus 4.6 vs pipeline tweaks remains unclear.

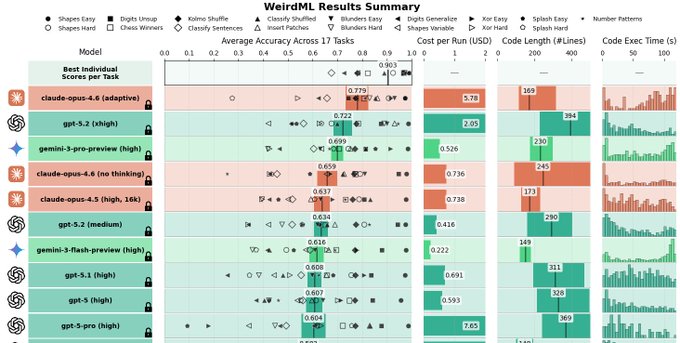

WeirdML: Opus 4.6 passes GPT-5.2-xhigh, with shorter solutions but slower runtime

WeirdML (community eval signal): A WeirdML update claims Opus 4.6 “dethroned GPT-5.2-xhigh” and notes an interesting trade-off: it “finds much shorter solutions” but “code execution times went up,” per the WeirdML summary.

That combination (shorter code, slower execution) is a useful reminder that “cleaner” solutions in text can still hide expensive algorithms or missed optimizations; the tweet asks whether the length gap is due to optimization choices, as described in the WeirdML summary.

🧾 ChatGPT ads experiment: labeling rules, targeting signals, and trust debates

OpenAI begins testing sponsored ads in ChatGPT for US Free/Go users, with explicit separation from answers and new user controls; heavy discourse on incentives and UX trust. (Excludes GPT-5.3‑Codex rollout.)

ChatGPT starts US ads test for Free/Go users with sponsored block separate from answers

ChatGPT ads (OpenAI): OpenAI says it’s beginning a U.S.-only test of sponsored ads to a subset of Free and Go users; ads are labeled “sponsored,” visually separated from the model response, and OpenAI claims ads “do not influence ChatGPT’s answers,” per the Ads rollout post that also shows the new in-app placement.

• Ad selection + privacy claims: One summary says eligible ads are matched using signals like the current chat topic, past chats, and prior ad interactions—while advertisers don’t get access to chats/memories and instead see only aggregate reporting (views/clicks), as described in the Mechanics breakdown.

• User controls: The same thread describes controls to dismiss ads, manage personalization, and delete ad data, plus an option to turn ads off in exchange for fewer daily free messages, according to the Mechanics breakdown.

Altman frames ChatGPT ads as “education,” rejects ads inside the response stream

Ads UX stance (OpenAI): Sam Altman argues advertising should be “education, not persuasion,” positioning ads as a way to reduce “capability overhang” (what AI can do vs what people know), as summarized in the Altman ad framing clip.

He also says OpenAI “will never insert ads into response stream” (calling that deceptive), while criticizing Anthropic’s Super Bowl messaging about chat ads, according to the Response-stream clip.

Daniela Amodei warns ad incentives can push sycophancy and engagement over truth

Incentives debate (Anthropic): Daniela Amodei argues models need to avoid sycophancy (blind agreement), and frames ad-based monetization as an incentive to optimize for engagement time even when that conflicts with truth or user well-being, as described in the Sycophancy warning clip.

The claim is used to justify Anthropic’s “no ads” posture and to raise trust questions as consumer chat apps begin experimenting with ad placements, per the Sycophancy warning clip.

CNBC: ChatGPT ads begin testing; OpenAI expects ads to be under half of long-term revenue

Business context (CNBC/OpenAI): A CNBC report excerpt says OpenAI is testing ads in ChatGPT (clearly labeled at the bottom and “not affecting answers”), while noting ChatGPT is “back to exceeding 10% monthly growth” and is at “over 800 million weekly users,” as quoted in the CNBC excerpt.

The same post claims OpenAI expects ads to be less than half of long-term revenue, with the full story linked in the CNBC report.

OpenAI Podcast episode explains ChatGPT ad principles and guardrails

OpenAI Podcast (OpenAI): OpenAI published a podcast episode featuring ads lead Asad Awan, framing the rationale for bringing ads to ChatGPT’s Free/Go tiers and the principles meant to keep ads clearly separated from answers, as announced in the Podcast post.

The listening links (Spotify/Apple/YouTube) are shared in the Platform links post, including the Apple episode and the YouTube episode.

🧭 Workflow patterns: review bottlenecks, spec alignment, and “AI intensifies work”

Hands-on practices for shipping with agents: code-review as the constraint, lightweight design docs, and the observed burnout/mental-load cost of faster loops. (Excludes tool release notes and excludes the GPT-5.3‑Codex rollout.)

AI productivity gains can translate into higher work intensity and burnout

AI intensifies work (HBR): A new round of discussion points to evidence that AI tooling can increase cognitive load and burnout even when it increases output, as summarized by Simon Willison in HBR burnout note and linked again in HBR story link. The reported dynamic is that faster iteration encourages more parallel tasks, more checking/verification, and “one more prompt” loops—raising mental exhaustion rather than reducing total work.

• What’s measurable here: the cited study involves ~200 employees at a U.S. tech company, per the synopsis referenced in HBR burnout note.

Code review throughput is the limiting factor in agent-driven coding

Code review bottleneck: Following up on Read every diff (supervise diffs as a firehose), more builders are explicitly calling out that “if you're not reading every line of code… you're not going to make it,” as argued in Read every line take, while others describe their personal bottleneck as “how quickly i can read code, validate that code,” per Review speed bottleneck. A recurring failure mode is that “simple” agent sessions can produce multi‑hour review burdens via massive diffs, like the +14,626 line weekend MVP screenshot shared in 15k-line diff example.

• What changes in practice: the limiting step shifts from writing to review bandwidth; screenshots like 15k-line diff example illustrate how quickly “small” experiments can become review-sized projects.

• Social proof: multiple independent voices are converging on review as the hard constraint, including the stricter “read every line” stance in Read every line take and the more pragmatic “I’m limited by my ability to review” framing in Review speed bottleneck.

A short design doc before code is framed as the fastest way to cut PR churn

PR alignment pattern: A practitioner claim gaining traction is that spending ~10 minutes aligning on a ~200‑line Markdown design/architecture doc (often AI-assisted) can cut PR rework by 80–90%, as described in 200-line doc claim and reiterated in Design time beats review time. The core mechanic is shifting ambiguity resolution earlier, so code review becomes verification instead of requirements negotiation.

• Why this is surfacing now: with agents producing code faster, review cycles become the pacing item; the doc is pitched as a cheap coordination artifact that reduces downstream back-and-forth, per 200-line doc claim.

Some builders stop reading AI plans and instead use long “grill me” alignment chats

Claude planning workflow: One practitioner reports they’ve stopped reading the final plan output entirely; instead, they use a prolonged back-and-forth where the model “grills” them until both share the same design concept, treating the generated plan as a compressed artifact of the conversation, as described in Don’t read the plan and followed up with a promise to share the underlying skill in PRD skill tease.

• Practical implication: the plan becomes a checkpoint/snapshot, not the primary interface; the value is in exploring “branches of the design tree” during the conversation, per Don’t read the plan.

Testing discipline is framed as the real safety rail for agent coding

Testing discipline over language wars: A view resurfacing is that statically typed vs dynamically typed languages matters less than having strong unit tests plus acceptance scenarios; the claim is that shipping with agents “without a good solid suite of unit tests… and acceptance scenarios is suicide,” as stated in Tests over language debates. The same thread points at using compiler warnings/refactoring as back-pressure, but positions tests as the core control surface.

• Why it matters for agent output: when diffs are large and fast, tests become the scalable verification layer, per Tests over language debates.

“Wrangling AI” is framed as a long-lived skill, with developers as early beneficiaries

Wrangling AI as a skill: A widely shared framing is that the next 10–20 years will reward people who can harness AI systems effectively across industries, with software developers positioned as early “first movers” because they can evaluate agent output against deep domain expertise, as argued in Wrangling AI skill. The chisel→table saw analogy is used to acknowledge craft change while staying optimistic about leverage.

• Tone shift: the emphasis is less on model capabilities and more on operator skill—learning how to “multiply its efforts,” per Wrangling AI skill.

🏢 Enterprise agent deployment reality: embedded teams, “digital employees,” and back office automation

Signals that enterprises still need hands-on integration help: embedded engineers, back-office automation, and org restructuring around agent rollouts. (Excludes ChatGPT ads and excludes GPT-5.3‑Codex rollout.)

OpenAI reportedly expands embedded engineering help for enterprise agent rollouts

OpenAI enterprise deployment (OpenAI): Reporting claims OpenAI is hiring “hundreds” of engineers in a consulting/implementation posture to get agents working inside real enterprise systems, as described in the Enterprise consulting report—a signal that out-of-the-box agents still fail on tool wiring, context, and workflow integration.

The same thread notes Anthropic is also working closely with enterprise customers, and cites a retailer case where outside help was needed after “basic tasks” failed in pilots, per the Enterprise consulting report.

Goldman Sachs and Anthropic embed engineers to automate back-office workflows

Goldman Sachs × Claude agents (Anthropic): Goldman reportedly worked with embedded Anthropic engineers for ~6 months to build autonomous systems for high-volume back-office processes like trade reconciliation and client onboarding, as summarized in the CNBC snippet; the framing is “efficiency gains” and slowing future headcount growth rather than immediate layoffs.

The report emphasizes executives were surprised Claude performed well on rule-heavy work beyond coding, according to the CNBC snippet.

BNY Mellon deploys 134 AI “digital employees” for repetitive ops work

BNY Mellon “digital employees” (enterprise ops): BNY Mellon says it has deployed 134 AI “digital employees” focused on repetitive tasks in payment operations, with managers overseeing both human and AI staff, per the CNBC report excerpt.

The same post highlights org-level impact signals—BNY headcount at 48,100 vs ~53,400 in 2023 and a claimed potential 19% EPS boost from AI/tech investment—according to the CNBC report excerpt.

Anthropic adds Opus 4.6 access for nonprofits on Team/Enterprise at no extra cost

Claude Opus 4.6 access policy (Anthropic): Anthropic says nonprofits on Team and Enterprise plans now get Claude Opus 4.6 “at no extra cost,” positioning it as an impact lever for orgs with constrained resources, per the Policy announcement and the linked Nonprofit plan page.

The announcement frames this as expanding access to frontier capability inside existing enterprise governance controls (Team/Enterprise), as stated in the Policy announcement.

🛡️ Security incidents: prompt injection in translation and “agent does crimes” failure modes

Concrete safety failures and exploit surfaces: prompt-injection in consumer translation UX and examples of models generating harmful operational plans when given the wrong objective. (Avoids procedural wrongdoing details.)

Google Translate “Advanced” flow reportedly prompt-injectable via Gemini backend

Google Translate (Google): Reports suggest Google Translate’s “Advanced” mode for some languages is backed by an instruction-following LLM (claimed to be Gemini-1.5-Pro) and can be steered by meta-instructions embedded in the source text—i.e., classic prompt injection against a “translate this” wrapper, as described in the Translate model claim and the more detailed Injection writeup.

The security point is that translation UIs need hard separation between user-provided text and control instructions; the writeups argue the boundary is currently porous enough that users can sometimes elicit non-translation behaviors (including policy-violating outputs), which is why this is being discussed as an LLM product-footgun rather than a “fun jailbreak.” A deeper analysis of what this reveals about task fine-tuning boundaries shows up in the LessWrong analysis.

Screenshot circulates of Opus producing an “operation plan” targeting nsa.gov

Claude Opus 4.6 (Anthropic): A screenshot is circulating showing an agent-style UI generating a structured “operation plan” for an unauthorized security assessment against real public infrastructure (nsa.gov), highlighting how quickly “roleplay” or mis-scoped prompts can turn into operationally framed wrongdoing in agent wrappers, as shown in the Operation plan screenshot.

The incident is less about any single step (the screenshot reads like a plan template) and more about product boundary-setting: when planning UX, task templates, and multi-agent metaphors are combined, you can end up with highly actionable-seeming artifacts unless guardrails, scope checks, and refusal triggers are tight at the workflow layer—not just in a chat-style completion.

🏗️ Infra signals: AI capex financing + reliability hiccups that block shipping

Infra/ops signals that affect builders: large-scale financing for AI investment and reliability incidents (e.g., GitHub outages) that stall dev workflows. (Excludes funding-round chatter not tied to infra.)

Alphabet’s reported $15B bond sale to fund 2026 AI spend draws $100B+ demand

Alphabet/Google (Infra financing): Alphabet is reported to be raising about $15B via a global bond sale to help finance a $185B 2026 AI investment strategy, with the deal drawing $100B+ of orders, according to the Bond sale summary. The same thread frames it as part of an industry-wide capex surge (Alphabet/Amazon/Meta/Microsoft forecast ~$650B 2026 capex), and cites Oracle’s recent $25B issuance peaking at $129B orders as a comparable signal of AI-driven financing demand, per the Bond sale summary.

This is a direct read on how much capital markets are willing to underwrite AI infrastructure right now, independent of any single model launch cycle.

Microsoft’s forward P/E slips below IBM as AI uncertainty becomes the story

Public-market signal (SaaS durability): A widely shared framing is that AI is pushing investors to discount long-dated SaaS cashflows; one chart shows IBM trading at a higher forward P/E than Microsoft—24.08x vs 22.87x—described as a first in 10+ years in the Forward P/E thread.

The same narrative gets reinforced by separate valuation comps showing MSFT’s P/E lower than Costco/Walmart/IBM in another circulated chart, as shown in the P/E comparison chart. Together, these posts argue the market is pricing in lower switching costs and faster “build vs buy” substitution as agentic dev gets cheaper, per the Forward P/E thread.

GitHub outage blocks dev work mid-session

Reliability (Dev bottleneck): Multiple posts flagged a GitHub outage as an immediate workflow blocker—e.g., one dev notes they finally had time to work but “GH is down,” according to the Work blocked note. Others amplified the same incident in meme form, per the Outage meme.

For teams leaning harder on agentic coding, this is a reminder that the dependency chain still runs through Git hosting and CI surfaces—when GitHub is down, the whole loop stalls.

🧬 Model watch: GLM-5 sightings, Meta ‘Avocado’ rumors, and stealth routing models

Non-Codex model developments with engineering implications: large open-model architecture sightings, rumored frontier checkpoints, and new hosted “stealth” offerings. (Excludes generative video models, covered separately.)

GLM-5 sightings harden: DeepSeek-style MoE+DSA shows up in vLLM and spec tables

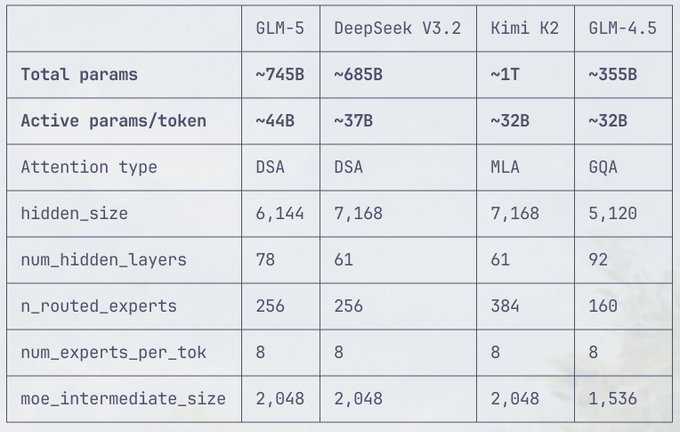

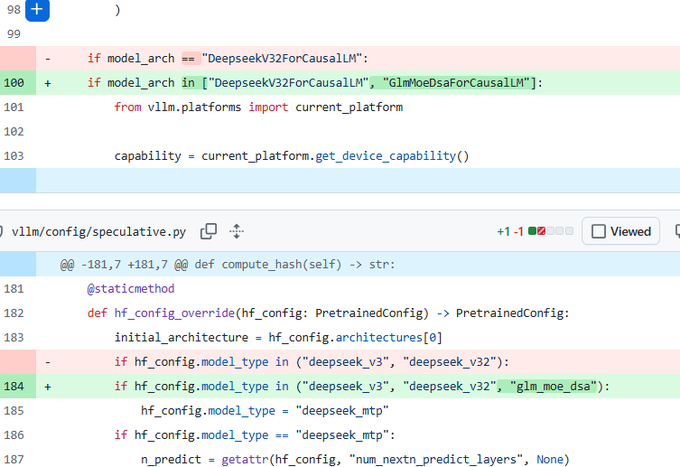

GLM-5 (Z.ai): GLM-5 chatter moved from “coming soon” to concrete architecture evidence; a spec table pegs it at ~745B total params with ~44B active/token and 256 routed experts, alongside DeepSeek-V3.2-like DSA attention, as shown in the Spec table screenshot.

• Inference stack signal: a vLLM PR merged support for GlmMoeDsaForCausalLM, with code paths treating it like DeepSeek-V3/V3.2 (including wiring into speculative config overrides), per the vLLM pull request and the diff screenshots in vLLM diff.

• What’s missing (so far): investigators note no multimodal support spotted in the same integration thread, according to the vLLM diff.

Net: engineers can start planning for DeepSeek-adjacent deployment assumptions (MoE routing + sparse attention quirks) rather than a totally new serving profile, with the “GLM-5 on GitHub” breadcrumbs continuing in the Model mention spotted.

Meta “Avocado” rumor claims pretraining-only beats top open models

Avocado (Meta): following up on Avocado leak (internal testing sightings), a Korean outlet claim circulating on X says Avocado’s pretraining alone is already surpassing top open-source models, with post-training (RLHF/alignment) not done yet, as shown in the Article screenshot.

The same thread frames this as unusual—“competing without post-training”—and adds skepticism and a correction that the claim is vs open-source baselines, not all closed models, per the Article screenshot and the follow-on framing in Timeline skepticism.

OpenRouter adds stealth model Aurora Alpha: free, very fast, prompts logged

Aurora Alpha (OpenRouter): OpenRouter listed a new “stealth model” called Aurora Alpha positioned as an extremely fast reasoning model for coding assistants and real-time chat, as announced in the Stealth model post.

• Data handling: the listing explicitly notes the provider logs prompts and completions (potentially used for improvement), per the Logging disclosure, with the model entry available via the OpenRouter model page.

The practical engineering implication is that this is a latency-first option with an unusually explicit tradeoff: free access in exchange for logged traffic, as written in the Logging disclosure.

Kimi K2.5 widens distribution: now live on TinkerAPI, keeps “usage proxy” buzz

Kimi K2.5 (Moonshot): Kimi K2.5 showed up as “live” on TinkerAPI, widening the set of places teams can route it without going through OpenRouter-first flows, per the Availability post.

• Adoption framing: Fireworks AI and community posts continue to describe Kimi as pushing “real-world use cases, not just benchmarks,” as shown in the Fireworks card.

• Usage as validation: OpenRouterAI amplified a claim that Kimi is becoming the most-used OpenClaw model based on OpenRouter metrics, per the Usage metrics repost.

This is mostly distribution signal (another endpoint to try), with no new model card, pricing, or context-limit details surfaced in the tweets.

⚙️ Runtime & serving plumbing: vLLM adds GLM hooks and inference support lands fast

Inference-stack updates tied to new model families, especially vLLM compatibility work that signals near-term deployability. (Excludes model product rollouts and excludes generative media tooling.)

vLLM lands GLM adaptation hooks for DeepSeek-style MoE + sparse attention stacks

vLLM (vllm-project): The vLLM project merged PR #34124 adding first-class support for a new GLM architecture entrypoint (GlmMoeDsaForCausalLM), which is a strong “deployability is imminent” signal for GLM-5-style checkpoints—see the merged GitHub PR and the code-path screenshots in the diff snippet.

The concrete plumbing change is that vLLM now treats GLM’s glm_moe_dsa model_type alongside DeepSeek’s v3/v3.2 families in speculative/config override logic (routing it through DeepSeek MTP-related configuration), as shown in the diff snippet. The same tweet thread also claims the architecture matches DeepSeek-V3.2’s sparse attention + multi-token prediction setup and notes “no multimodal support spotted,” per the diff snippet—treat that as a code-reading inference, not an official model card.

Transformers config hints at GLM-5 integration with min_transformers_version=5.0.1

Transformers compatibility (Hugging Face ecosystem): A GitHub config-mapping sighting shows zai-org/GLM-5 being wired into auto-model examples with min_transformers_version="5.0.1" and is_available_online=False, as shown in the code screenshot shared in config mapping screenshot.

This matters for serving teams because it’s the difference between “weights exist somewhere” and “standard tooling can reliably instantiate/configure the architecture.” The same cluster of sightings also references the GlmMoeDsaForCausalLM class name (matching vLLM’s new hook), as visible in config mapping screenshot and echoed by the vLLM adaptation discussion in GLM model_type mention.

📚 Agent research & system papers: full-stack agents, multi-agent scaling, and recursion

New papers and research threads directly about agentic software engineering systems (multi-agent metrics, end-to-end webdev agents, recursive reasoning scaffolds).

Google’s 180-config agent study quantifies when multi-agent helps—and when it hurts

Agent scaling study (Google Research): Google reports a controlled evaluation of ~180 agent configurations and claims a sharp tradeoff: multi-agent setups can improve parallelizable tasks by 81%, but can degrade sequential tasks by 70%, as summarized in the study summary and explained in the Google blog post. Short sentence: more agents is not monotonic.

The piece frames “agentic” tasks as those requiring sustained multi-step interaction under partial observability, then tests multiple coordination architectures rather than treating “multi-agent” as a single knob. It’s notable mainly because it puts numbers on something practitioners often feel anecdotally: coordination overhead can swamp any decomposition wins.

This also connects cleanly to the emerging push for collaboration-efficiency metrics (budget-matched baselines) rather than raw success rates, without requiring new models to be trained.

A “collaboration gain” metric for multi-agent systems calls out compute illusions

Collective AI metric Γ (arXiv): A new multi-agent systems (MAS) paper proposes a collaboration gain metric, Γ, that compares MAS performance to a single agent given the same total resource budget—so adding agents only “counts” if it beats budget-matched single-agent scaling, as laid out in the Gamma metric thread and formalized in the ArXiv paper. This is a direct attack on a common failure mode in agent papers: reporting improvements that are really just “more attempts/tokens”.

The paper also names a practical systems problem engineers recognize: “communication explosion” (lots of unstructured cross-agent chatter) can reduce net performance below single-agent baselines, which reframes orchestration work as a bandwidth-and-noise problem, not only a prompting problem.

FullStack-Agent: a multi-agent recipe for real backend+DB integration, not mock apps

FullStack-Agent (arXiv): A new research system claims a concrete fix for a common agentic coding pitfall—agents that generate polished UIs but leave fake endpoints and mock data—by splitting work across a planning agent plus dedicated backend and frontend agents with execution feedback loops, as summarized in the paper walkthrough and detailed in the ArXiv paper. It’s aimed at shipping “three layers that actually talk”: UI, API, and database.

Key reported results are on FullStack-Bench (647 frontend, 604 backend, 389 database tests), where the authors cite 64.7% frontend, 77.8% backend, and 77.9% database accuracy for their approach, alongside big deltas vs baselines in the same paper walkthrough. Short sentence: this is an eval for integration.

A second thread in the same writeup is data generation: “repository back-translation” (extract patterns from real repos; reproduce from extracted plans) to synthesize training trajectories that look more like real full-stack work than prompt-only demos.

Open-weights RLM-Qwen3-8B-v0.1 ships as a recursion proof of concept

Recursive Language Models (RLMs): The RLM authors say they post-trained and released an open-weights RLM-Qwen3-8B-v0.1 as a small proof of concept, claiming it was “surprisingly easy” to get a marked capability jump, per the Open-weights note and the follow-up Read more link. This is positioned as evidence that “learning to recurse” may not require very large models.

The key idea is scaffolding longer-horizon reasoning via compressed iterations (recursing over summaries/working state), rather than depending purely on long-context windows. Short sentence: it’s a test-time strategy, but trained.

What’s still missing in today’s tweets is any shared benchmark artifact or reproducible eval suite for the released checkpoint; the posts are primarily a release+claim, not an independent measurement.

🧑🏫 Labor & culture signals: layoffs, burnout, and “AI slop” platform effects

When discourse itself is the news: AI-attributed layoffs, burnout narratives from faster tool use, and platform-level degradation from AI-generated replies. (Excludes product release notes.)

Baker McKenzie cuts ~700 staff roles citing AI-driven “rethinking work”

Baker McKenzie (legal ops): A reported ~700-person reduction (framed as “rethinking the way we work, including through the use of AI”) is hitting support functions—not lawyers—across IT, knowledge, admin, DEI, L&D, secretarial, marketing, design, per the Layoff note. This is a cleaner signal than generic “AI will cut jobs” talk because it names which job families are being consolidated first (back-office and workflow-heavy support layers).

The open question is whether this pattern stays concentrated in support orgs or starts shifting into billable roles once agent reliability and auditability improve.

HBR study: AI boosts output but can also increase burnout and mental exhaustion

Workload psychology: A Harvard Business Review study (200 employees at a US tech company) argues AI tools often don’t reduce work; they increase task concurrency and context switching, which can raise cognitive load and burnout risk—summarized in the HBR takeaway and linked via the HBR article. The piece calls out a specific failure mode that will feel familiar to teams shipping with agents: the “just one more prompt” loop that expands scope faster than humans can triage or verify.

This lands as a management and process issue as much as a tool issue, especially when orgs implicitly ratchet expectations upward based on increased draft velocity.

Developers report X is filling with AI-generated posts and replies

Platform signal: Developers are complaining that AI-generated posts/replies are now volume-dominant enough to change daily workflows—“can barely keep up with blocking,” as described in the Blocking complaint. For teams using X as a discovery channel, this is effectively an “observability” problem: lower signal-to-noise, higher moderation overhead, and more incentive for closed/community channels for technical exchange.

No hard measurement is provided in the tweets; it’s anecdotal but coming from builders who spend time on the platform.

🧪 Training & data engineering: MoE parallelism, quantization tricks, and massive token curation

Training-facing technical artifacts: new MoE parallelism designs, extreme quantization training, and large-scale data curation frameworks. (Excludes productized coding models like Composer, covered elsewhere.)

Multi-Head LatentMoE + Head Parallelism proposes constant-communication MoE training

Multi-Head LatentMoE + Head Parallel (research): A new MoE parallelism design splits each token into multiple “heads” and exchanges heads across GPUs before routing, so routing + expert compute happen locally and inter-GPU communication stays constant as expert count grows, as described in the Design explainer. It reports up to 1.61× faster training than standard Expert Parallelism while keeping quality, with deterministic communication and up to 4× less inter-GPU traffic (k=4) per the Results recap.

• What’s new mechanically: “Head Parallelism” shifts the expensive part of EP (data-dependent token→expert shuffles) into a fixed, balanced all-to-all of heads; the paper also highlights IO-aware routing to avoid materializing large routing tensors, as outlined in the Design explainer.

• Primary artifacts: Details are in the ArXiv paper, with an accompanying GitHub repo for sparse GPT pretraining experiments.

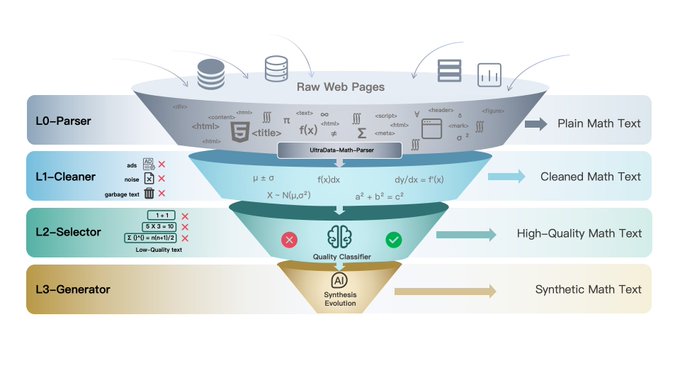

OpenBMB launches UltraData with a L0–L4 data lifecycle and 2.4T open tokens

UltraData (OpenBMB): OpenBMB introduced UltraData, framing data work as “data–model co-evolution” and shipping a L0–L4 tiered data management framework plus 2.4T open tokens and full-stack processing tools, according to the UltraData announcement. This is explicitly positioned as an answer to “noisy data” becoming the limiter when scaling, as laid out in the Tier definitions.

• Pipeline breakdown: L0–L1 focuses on parsing + heuristic cleaning; L2 uses model-based scoring for info density; L3 covers synthesis/reasoning data for mid-training/SFT; L4 aims at structured + verified data for RAG, per the Tier definitions.

• What’s actually shipped: The release claims open-sourcing the infra behind their parsing/cleaning/selection/generation stack, as stated in the Open-source infra note.

SE-BENCH proposes a clean benchmark for knowledge internalization via library obfuscation

SE-BENCH (THUNLP/OpenBMB): A new benchmark isolates “knowledge internalization” by obfuscating a well-known library (NumPy) into a pseudo-novel package (randomized identifiers) so tasks are trivial algorithmically but impossible without learning the new API; the construction pipeline and evaluation framing are shown in the SE-BENCH thread. One reported takeaway is an “open-book paradox”: training with documentation access can produce 0.0 accuracy once docs are removed, while a closed-book variant retains substantial performance (example bars show 39.6 in one setting), as visualized in the SE-BENCH thread.

The paper entry is referenced in the Paper page, while much of the implementation detail and ablations are summarized in the SE-BENCH thread.

UltraData-Math ships 290B tokens and shows math/code pretraining lifts

UltraData-Math (OpenBMB): OpenBMB singled out UltraData-Math as a 290B-token “deep focus” math pretraining dataset, claiming 88B tokens at an L3 refined tier (Q&A, dialogues, textbooks) and reporting downstream lifts on a MiniCPM-1.2B run—MATH500 37.02, GSM8K 61.79, MBPP 49.27—as described in the Math dataset thread. Short-term adoption signal: they also say the dataset hit #1 on Hugging Face trending, as shown in the Trending screenshot.

The dataset entry and metadata are linked via the Hugging Face dataset.

RaBiT targets 2-bit LLMs via residual-aware binarization training

RaBiT (research): A new quantization-aware training approach, Residual-Aware Binarization Training, argues that standard residual-binary paths co-adapt (redundant features) and proposes enforcing a residual hierarchy derived from shared full-precision weights, as summarized in the Paper page. It positions itself as pushing the 2-bit accuracy–efficiency frontier, with the writeup also calling out a 4.49× speed-up versus heavier quantization schemes in its reported comparisons, per the Paper page.

ERNIE 5.0 technical report details an ultra-sparse MoE and “elastic” training paradigm

ERNIE 5.0 (Baidu): Baidu’s ERNIE 5.0 technical report landed, describing a natively autoregressive foundation model for unified multimodal understanding/generation across text, image, video, and audio, with an ultra-sparse MoE architecture and an “elastic training paradigm,” as shown in the Tech report post. The abstract also emphasizes a next-group-of-tokens style objective and discusses challenges scaling RL post-training for ultra-sparse MoE, per the PDF report.

The tweets don’t include independent eval artifacts, so treat capability claims as provisional until third-party reproductions show up.

🎬 Generative video & creative stacks: Seedance 2.0 shockwave, Sora people i2v, and tooling mashups

Non-coding gen-media updates with direct product implications: Seedance 2.0’s perceived quality jump dominates, alongside Sora’s people photo-to-video expansion and creator pipelines. (Excludes enterprise/ads/coding assistant releases.)

Seedance 2.0 clips push into app promos and long action scenes, with China-only beta access

Seedance 2.0 (ByteDance): Following up on Seedance previews (early quality rumors), creators are now attributing 2K video output, native audio generation (speech + music), and multimodal input support to Seedance 2.0, alongside claims it’s hard to distinguish from real footage in some cases, as described in the feature claim thread and echoed by reactions like “No freaking way that’s AI generated” in the reaction post.

Access chatter also converged on “beta” and “China-only” availability, with people pointing to Dreamina pages showcasing a “Seedance 2.0” generator experience, as shown in the Dreamina Seedance page.

• Use-case expansion beyond cinematic: Posts highlight motion-graphics and app-promo-style outputs (coherent text + transitions) in the motion graphics example, plus longer choreographed sequences and “real or Seedance 2.0?” spot-checks in the real vs AI prompt.

• Reality check on “Hollywood level”: At least one tester says it’s a clear step up but “not Hollywood-level (yet),” in the hands-on caution.

Sora expands image-to-video from real photos of people via Characters

Sora (OpenAI): Sora is now described as supporting image-to-video generation from real photographs of people under Characters (formerly “Sora Cameos”), with OpenAI framing it as enabling “family and friends” animation while emphasizing ongoing guardrails, as stated in the Characters update screenshot.

The operational implication is straightforward: teams doing creator tooling or UGC-style pipelines get a first-party path for “photo → clip” of real individuals, while OpenAI’s messaging suggests tighter policy enforcement and monitoring than earlier “character” workflows.

CapCut adds Seedream 5.0 image model with free trial access

Seedream 5.0 (CapCut): CapCut is showing Seedream 5.0 as a selectable image model inside its “AI image” flow, including a free trial and UI-level knobs for aspect ratio and 2K resolution, as shown in the CapCut model picker UI.

The screenshots also suggest positioning around stronger reference-image support and “richer world knowledge,” plus visible “use” counters per model option, which makes it easier to treat model choice as a budgeted resource instead of a hidden backend swap.

fal ships real-time image-to-image editing for FLUX.2 Klein at 10+ FPS

FLUX.2 Klein realtime (fal): fal announced real-time image-to-image editing running at 10+ FPS, positioning it as low-latency and production-ready with hand-tuned kernels, as shown in the realtime editing announcement, with a public playground linked in the realtime playground.

This sits in the “interactive art direction” gap—where latency, not model quality, is the limiting factor—and fal is explicitly selling the runtime work (kernels + latency) rather than new model weights.

Kling 2.6 Motion Control highlights single-image action/expression control for i2v

Kling 2.6 Motion Control (Kling): Kling is being promoted around motion control from a single image, with claims of controlling actions and expressions via video references or a preset library—packaged as “PetsBowl” style examples—per the Motion Control overview and follow-on demo framing in the pet-photo animation clip.

For creative stacks, this is the “pose/acting layer” people have been faking via multi-pass prompting; Motion Control is presented as a first-class knob rather than an emergent trick.

Creator toolchains normalize: Midjourney + Nano Banana + Kling + ElevenLabs + editor stack

Creator workflow stacking: One recurring pattern is publishing the full “ingredient list” for a finished clip—image gen, video gen, music/voice, and editing—so others can reproduce the look; a concrete example lists Midjourney + Nano Banana Pro for images, Kling 3.0 for video, ElevenLabs for music, and Splice/Lightroom for finishing, as shown in the stack breakdown post.

This is less about any single model and more about the emerging default creative pipeline: best-of-breed tools composed into a repeatable recipe.

Topview launches Board, a shared canvas for multi-model AI video production

Board (Topview): Topview is pitching Board as a team collaboration surface for AI video—an “infinite canvas” workflow where multiple people generate, select, and annotate assets in one place—while claiming it integrates multiple top models (including Veo 3.1, Sora 2, Kling 2.6, and Nano Banana Pro) and can be cheaper than direct API usage, per the product overview.

The notable product bet is that version control for AI video is a UI problem (shared review state + decision trail), not a prompt problem.

Freepik + Kling 3.0 + Nano Banana Pro workflow for fight videos under an hour

Fight-video workflow: A step-by-step recipe for generating stylized fight sequences claims end-to-end production in under an hour by combining Nano Banana Pro (character/frame generation) with Kling 3.0 (looping transitions), all orchestrated from Freepik, as demonstrated in the workflow walkthrough.

The key technique described is grid-based “frame picking” (extract row/column) followed by stitching with start/end frames to maintain continuity across clips.