Claude Sonnet 4.6 adds 1M-token context – $3 and $15/MTok

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Anthropic shipped Claude Sonnet 4.6 as a mid-tier jump toward “agent-ready” behavior; 1M-token context is in beta; API pricing stays at $3/MTok input and $15/MTok output, with higher rates after 200K tokens. Anthropic’s table claims 79.6% on SWE-bench Verified; 72.5% on OSWorld-Verified; ARC-AGI-2 rises to 58.3% (vs 13.6% for Sonnet 4.5). Independent-ish signals tilt the same way: Stagehand ranks Sonnet 4.6 top for browser accuracy; Box reports its Complex Work Eval moving 62%→77% (+15 points), but both are vendor-contextual and not fully reproducible in-thread.

• Artificial Analysis/GDPval-AA: Elo 1633; harness run used 280M tokens vs 58M for Sonnet 4.5, implying heavier test-time compute even at unchanged token prices.

• Claude search + platform GA: dynamic filtering executes code before results enter context; +13% BrowseComp accuracy with 32% fewer input tokens; code execution, fetch, memory, and tool calling move to GA.

• OpenAI/Codex ops: Codex CLI 0.102 adds experimental multi-agent presets (6 threads default); OpenAI says 5.3→5.2 safety downgrades targeted “well under 1%,” now with loud per-turn notices.

What’s still unclear is how 1M context behaves under long-loop agent workloads: MRCR v2 shows 65.8 mean match on 1M 8-needles; reports of Claude Code OOMs suggest runtime stability, not window size, is the limiting factor in practice.

Top links today

- GLM-5 technical report

- Claude Sonnet 4.6 release post

- Anthropic agents and tools documentation

- OpenAI Lockdown Mode and Elevated Risk

- Claude Code CLI 2.1.45 release notes

- Artificial Analysis Sonnet 4.6 benchmarks

- Vals Sonnet 4.6 benchmark results

- Firecrawl Browser Sandbox repo

- Cursor 2.5 release notes and marketplace

- Claude Code to Figma editable frames

- Render $100M funding and AI runtime roadmap

- Dreamer agent app platform beta launch

- Qwen3.5-397B-A17B model weights

- Moltbook 2.6M-agent social network paper

- LangChain harness engineering recipes for agents

Feature Spotlight

Claude Sonnet 4.6: mid-tier becomes “agent-ready” (1M context beta, big jumps on computer use + tool-heavy work)

Sonnet 4.6 makes near-frontier agentic coding + computer-use practical at Sonnet pricing, with a 1M-context beta that changes what “fit the repo/docs” means for real workflows.

The dominant cross-account story today is Anthropic shipping Claude Sonnet 4.6: a broad upgrade (coding, computer use, planning, knowledge work) plus a 1M-token context window (beta) and unchanged Sonnet pricing. This category captures the release details, published benchmark deltas, and early practitioner comparisons/usage signals.

Jump to Claude Sonnet 4.6: mid-tier becomes “agent-ready” (1M context beta, big jumps on computer use + tool-heavy work) topicsTable of Contents

🧠 Claude Sonnet 4.6: mid-tier becomes “agent-ready” (1M context beta, big jumps on computer use + tool-heavy work)

The dominant cross-account story today is Anthropic shipping Claude Sonnet 4.6: a broad upgrade (coding, computer use, planning, knowledge work) plus a 1M-token context window (beta) and unchanged Sonnet pricing. This category captures the release details, published benchmark deltas, and early practitioner comparisons/usage signals.

Artificial Analysis: Sonnet 4.6 leads GDPval-AA but spends 4× more tokens than 4.5

GDPval-AA (Artificial Analysis): Artificial Analysis reports Sonnet 4.6 at Elo 1633, slightly ahead of Opus 4.6 on GDPval-AA, as described in the GDPval-AA result thread and summarized in a table screenshot in the GDPval-AA table.

• Token budget tradeoff: The same thread says Sonnet 4.6 used 280M tokens to run GDPval-AA vs 58M for Sonnet 4.5 (extended thinking), and compares Opus 4.6 at 160M tokens, per the GDPval-AA result thread.

• Cost-to-run positioning: Artificial Analysis claims Sonnet 4.6 lands back on the Pareto frontier but at a higher cost/perf point, even while keeping Sonnet token pricing, per the cost curve note.

Net: at least on this harness, Sonnet 4.6’s headline “mid-tier” economics depends heavily on how much test-time compute you let it burn.

Box: Sonnet 4.6 jumps +15 points on its Complex Work Eval

Box AI (Box): Box says early access testing of Sonnet 4.6 on its “Box AI Complex Work Eval” shows a +15 percentage point improvement over Sonnet 4.5, moving from 62% to 77% on its full dataset, according to the Box eval thread.

Sector deltas in the same chart include Public sector 77%→88%, Healthcare 60%→78%, and Legal 57%→69%, as shown in the Box eval thread.

This is one of the clearer enterprise-content signals that the “agent planning + long context” upgrades translate into doc-heavy, multi-file workflows (due diligence, report generation) rather than only benchmark puzzles.

Claude Code: how to select Sonnet 4.6 1M context and set it as default

Claude Code (Anthropic): Builders are sharing the concrete model selector for the 1M-context beta variant—claude-sonnet-4-6[1m]—including the command and a ~/.claude/settings.json snippet to make it the default, per the model string and settings.

• Billing behavior to watch: One report says usage cost only steps up once context exceeds 200K, aligning with Sonnet’s “>200K tokens” price tiers, and notes that enabling extra usage may be required for 1M context in some plans, per the extra usage note.

This is a concrete, reproducible setup change for teams testing “full repo in one session” workflows in Claude Code.

Claude Developer Platform: code execution, memory, and tool-calling features move to GA

Claude Developer Platform (Anthropic): Alongside the Sonnet 4.6 release, Anthropic says code execution, web fetch, memory, programmatic tool calling, tool search, and tool use examples are now generally available, per the tooling GA note.

Anthropic’s tooling roundup is bundled into the same release-side documentation as the dynamic filtering update in the dynamic filtering write-up linked from the platform update link.

This is mostly an “availability” shift, but it matters operationally because it reduces the amount of bespoke scaffolding teams need to reproduce Claude’s first-party agent behaviors.

Claude web search adds dynamic filtering via code execution (fewer tokens, higher accuracy)

Claude web search (Anthropic): Anthropic says Claude’s web search and fetch tools can now write and execute code to filter results before they enter the context window, which raised Sonnet 4.6 accuracy on BrowseComp by 13% while using 32% fewer input tokens, per the dynamic filtering note.

The underlying mechanism and benchmark breakdown are explained in Anthropic’s dynamic filtering write-up via dynamic filtering post, which also reports broader token efficiency gains.

This is a “harness-level” improvement that changes the cost/quality profile of browse-heavy agents even if the base model stayed the same.

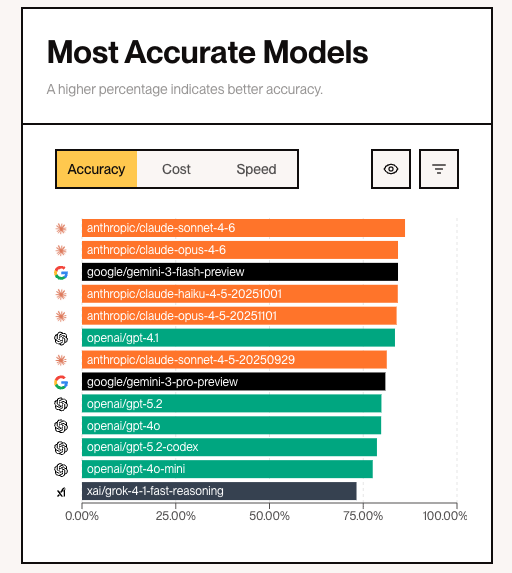

Stagehand browser evals rank Sonnet 4.6 as the most accurate model

Stagehand (browser agents): Stagehand reports Sonnet 4.6 as its most accurate model for browser-use tasks, with a bar chart showing anthropic/claude-sonnet-4-6 slightly above claude-opus-4-6, as shown in the accuracy chart and echoed in the Stagehand benchmark note.

A public entry point for the underlying comparisons is referenced in Stagehand’s evals page linked from the evals page post.

This adds another independent “computer/browser use” datapoint beyond OSWorld, using a tooling stack closer to how web-automation agents are actually built and deployed.

ARC Prize: Sonnet 4.6 posts 86% on ARC-AGI-1 and 58% on ARC-AGI-2 with $/task

ARC Prize (ARC-AGI): ARC Prize reports Sonnet 4.6 (120K thinking) results on its Semi-Private eval with 86% on ARC-AGI-1 at $1.45/task and 58% on ARC-AGI-2 at $2.72/task, according to the ARC Prize results.

ARC Prize also publishes the policy and reproducibility links (leaderboard, reproduce repo, testing policy) from the policy links thread, which makes it easier to map the “% score” into a concrete harness configuration.

This keeps the ARC-AGI discussion grounded in the cost-per-task dimension rather than raw accuracy alone.

MRCR v2 long-context retrieval: Sonnet 4.6 posts 65.8 mean match on 1M 8-needles

MRCR v2 (long-context needles): A results table for OpenAI’s MRCR v2 shows Sonnet 4.6 at roughly 90.3–90.6 mean match ratio on the 256K 8-needles test and 65.8 on the 1M 8-needles test, as shown in the MRCR results table.

The same table positions Opus 4.6 higher on the 1M-needle variant (78.3/76.0 depending on setting) while showing Gemini 3 and GPT-5.2 configurations used for comparison, per the MRCR results table.

This is one of the few concrete, published “needle-in-haystack” style datapoints tied to the new 1M context window.

Preference testing: users pick Sonnet 4.6 over Sonnet 4.5 ~70% of the time

Claude Sonnet 4.6 (Anthropic): A preference-testing snippet claims early testers chose Sonnet 4.6 over Sonnet 4.5 about 70% of the time and even over Opus 4.5 about 59%, describing it as “less prone to overengineering” and better on instruction following, per the preference excerpt.

Anecdotal sentiment in other posts aligns with that direction—e.g., “great balance of capability, speed, and token efficiency” in the Cowork comment—but the preference numbers themselves are not accompanied by a full public methodology in the tweets.

The main engineer-relevant claim here is behavioral: fewer false-success claims and more consistent follow-through on multi-step tasks, per the preference excerpt.

ValsAI: Sonnet 4.6 leads Vals Index and finance/tax agent benchmarks

Vals Index (ValsAI): ValsAI claims Sonnet 4.6 takes #1 on its Vals Index and Vals Multimodal Index, beating Opus 4.6, per the Vals leaderboard claim.

They also report Sonnet 4.6 taking first on Finance Agent (63.3%) and Tax Eval v2 (77.1%), and disclose evaluation settings like 128,000 max output tokens and “max” effort, per the evaluation settings.

For a single canonical artifact, Vals links a model results page in the results page link, which includes context window and pricing metadata.

🧰 OpenAI Codex: experimental multi-agent mode + routing transparency fixes + hiring for infra/security

Continues the Codex operational storyline from prior days, but today’s novelty is concrete multi-agent configuration in Codex CLI and updates explaining/mitigating GPT-5.3→5.2 fallback routing. Also includes explicit recruiting for infra/security engineers building next-gen coding tooling.

Codex CLI 0.102 ships experimental multi-agent mode with preset roles and custom agents

Codex CLI 0.102.0 (OpenAI): Codex CLI v0.102 introduces experimental multi-agent support—toggle it in the TUI under /experimental → multi agents or enable it in config via [features] multi_agent = true, as shown in the Multi-agent enablement guide.

• Preset agents + spawn prompts: It ships three included agents—default (mixed tasks), explorer (codebase research, no edits), and worker (scoped implementation)—with example commands like “spawn explorer to map payment flow… no edits” and “spawn worker… implement token refresh & run tests,” as described in the Multi-agent enablement guide.

• Threading and customization: Current default is 6 agent threads per session and can be raised (example [agents] max_threads = 12), plus you can define custom agents in TOML that point at a separate agent config file and set per-agent model knobs like model = "gpt-5.3-spark", model_reasoning_effort, and model_verbosity, per the Multi-agent enablement guide.

OpenAI tightens GPT-5.3 Codex downgrade routing and adds per-turn warnings in CLI

Codex routing (OpenAI): Following up on Misrouting (GPT-5.3→5.2 downgrades), OpenAI says it recalibrated classifiers/policies that flagged “elevated risk,” aiming to push downgrades to well under 1% of users, according to the Routing incident update.

• Visibility in product: It also shipped loud, per-turn notifications in Codex CLI v0.102.0 when a request is downgraded, with other clients getting the same treatment “asap,” per the Routing incident update.

• Access recovery fixes: OpenAI says it fixed cases where users who verified via Trusted Access still didn’t regain GPT-5.3-Codex access, as stated in the Routing incident update.

OpenAI infra/security hiring pitch spotlights agent sandboxes and observability bottlenecks

Infra and security hiring (OpenAI): An OpenAI infra lead is explicitly recruiting infra/security engineers, arguing model capability is now bottlenecked by agent cross-collaboration, ergonomic secure sandboxes, and tooling/abstractions/observability that let agents run end-to-end safely at scale, as laid out in the Infra hiring note.

The same note frames the work as scaling training/inference systems while managing complexity and iteration speed, and it points candidates to an email intake (gdb+infra@openai.com), per the Infra hiring note.

Codex App tip advertises 2× rate limits until April 2

Codex App (OpenAI): A Codex CLI v0.102 screen tip advertises “the Codex App” with 2× rate limits until April 2, suggesting OpenAI is actively pushing adoption of the app surface alongside CLI work, as captured in the In-product Codex app tip.

The same screenshot indicates entry points via codex app and a ChatGPT Codex URL, per the In-product Codex app tip.

OpenAI asks for Windows expertise to improve Codex on Windows

Codex on Windows (OpenAI): OpenAI is explicitly asking for “longtime Windows developers/experts” to help make Codex work well on Windows, signaling ongoing platform gaps (or a push to close them) in the coding-agent toolchain, as requested in the Windows help request.

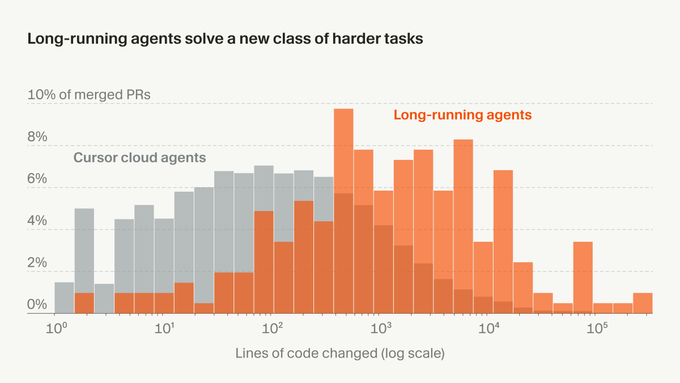

🧩 Cursor 2.5: plugin marketplace + long-running agents + internal workflow kits

Cursor’s surface area expands with a marketplace and more ‘agent OS’ style features. This category focuses on Cursor product updates and team-shared workflows (excludes the Sonnet 4.6 model story, covered as the feature).

Cursor adds a Cloudflare plugin for Workers and MCP servers

Cloudflare plugin (Cursor): Cursor announced a Cloudflare plugin to integrate Cloudflare’s developer platform into agent workflows, including building MCP servers and managing Workers, as shown in the product demo Cloudflare plugin demo.

It’s one of the clearest signs that Cursor is standardizing on “plugins as the tool surface” rather than bespoke in-editor integrations.

Cursor adds AWS agent plugins for infra work

AWS agent plugins (Cursor): Cursor announced AWS agent plugins intended to give agents skills and tools for architecture guidance, deployment, and operational tasks, as shown in the AWS-focused demo AWS plugin demo.

It’s part of the marketplace narrative that the editor can act as a control plane for infra workflows, not only code edits.

Cursor ships a Figma plugin for design-to-code workflows

Figma plugin (Cursor): Cursor shipped a Figma plugin positioned as “translate designs into code,” with the flow shown in the demo video Figma plugin demo.

It’s another step toward treating design artifacts as structured inputs that agents can read and implement against.

Cursor ships a Linear plugin for project tracking in-editor

Linear plugin (Cursor): Cursor shipped a Linear plugin for accessing issues, projects, and documents directly from the editor, with the workflow shown in the launch video Linear plugin demo.

This gives Cursor’s agent loop a built-in path to read/write task state in the same place developers already track work.

Cursor ships a Stripe plugin for payments and subscriptions

Stripe plugin (Cursor): Cursor added a Stripe plugin intended for handling payments and subscriptions from agent workflows, as shown in the plugin demo clip Stripe plugin demo.

The launch positions payments setup as an in-editor tool surface instead of a context-switch to Stripe docs and dashboards, consistent with Cursor’s broader plugins push Marketplace launch.

Cursor “grind mode” gets called out as a sticky iteration loop

Ralph loop / grind mode (Cursor): One practitioner specifically calls out Cursor’s implementation of the “Ralph loop” (aka “grind mode”) as a workflow that’s changing how they work, per the short endorsement Grind mode praise.

The practical takeaway is less about the name and more about the loop being a recognizable feature surface—an agent iteration mode people can discuss and compare across tools.

Cursor adds a Vercel plugin for React best practices

Vercel plugin (Cursor): Cursor announced a Vercel plugin centered on React and Next.js performance best practices, with the workflow shown in the plugin demo Vercel plugin demo.

This frames “best-practice refactors” as an agent skill that can be applied repeatably, rather than tribal knowledge in code review comments.

Cursor adds an Amplitude plugin for analytics queries

Amplitude plugin (Cursor): Cursor announced an Amplitude plugin for querying data and analyzing dashboards, with the integration shown in the walkthrough Amplitude plugin demo.

This extends the agent loop into “read product metrics → propose changes” workflows without leaving the editor.

Cursor plugins are being framed as the abstraction over skills, rules, and MCPs

Plugins as abstraction (Cursor): A recurring pain point—too many parallel concepts like skills, rules, MCP servers, and “whatever’s next”—is being answered by Cursor’s framing that plugins fold complexity away, as described in a user commentary pointing to the marketplace Plugins simplify sprawl and the marketplace itself at Marketplace page.

This is a product direction signal: instead of teaching agents a dozen config idioms, Cursor is trying to standardize extension as a single packaging unit.

Cursor ships a Databricks plugin for data and AI workflows

Databricks plugin (Cursor): Cursor added a Databricks plugin aimed at building secure data and AI applications through the editor’s agent workflow, as shown in the demo clip Databricks plugin demo.

The positioning is notable because it treats “data platform operations” as first-class agent actions rather than external console work.

🛡️ Agent security: jailbreaks, prompt injection defenses, and permissioning surfaces

Security posture is a major thread today: jailbreak artifacts around Grok, plus platform moves toward tighter tool permissions and prompt-injection mitigation. Excludes any bio/wet-lab discussion entirely.

OpenAI adds ChatGPT Lockdown Mode to reduce prompt-injection exfil risks

ChatGPT Lockdown Mode (OpenAI): OpenAI introduced Lockdown Mode as an optional security setting that tightens how ChatGPT can interact with external systems—especially browsing—so prompt-injection attempts have fewer paths to exfiltrate data, as described in the feature rundown and detailed in the OpenAI post.

• Threat model: It’s framed explicitly around prompt injection and data exfiltration when agents can touch the web or connected tools, per the attack-path explanation.

• Operational shape: The UI/diagram implies deterministic restrictions (including browsing constraints) rather than “best effort” policy adherence, as shown in the feature rundown.

Grok 4.20 jailbreak artifact highlights inconsistent refusal vs. compliance

Grok 4.20 (xAI): A widely shared jailbreak prompt shows a multi-step prompt-injection attempt that tries to force a refusal followed by “opposite” behavior; screenshots show Grok producing mixed outcomes (refusal followed by disallowed content in at least one capture), as evidenced in the jailbreak screenshot.

• Failure mode: The attack uses format constraints plus instruction inversions ("don’t say I’m sorry") to push the model into contradictory compliance, as shown in the jailbreak screenshot.

• Safety signal: The same thread includes an instance where the model appears to deflect into unrelated content instead of answering the illicit request, suggesting unstable routing/guardrail behavior in beta, as visible in the jailbreak screenshot.

The circulating images are enough to treat this as a “prompt format + persona override” weakness class, not a single one-off bad response.

OpenAI standardizes “Elevated risk” labels across ChatGPT, Atlas, and Codex

Elevated risk labels (OpenAI): OpenAI is rolling out standardized “Elevated Risk” labeling across ChatGPT, ChatGPT Atlas, and Codex to make “network/tool access + sensitive data handling” more explicit at the product layer, according to the feature summary and the OpenAI post.

• Why it matters: The intent is to turn security posture into a visible per-feature state (not an implicit property of “agent mode”), as explained in the security breakdown.

• Related incident context: OpenAI also described classifier/policy calibration work that reduced security-driven downgrades/reroutes ("well under 1%" target), as noted in the routing update.

Firecrawl launches Browser Sandbox for isolated agent browser workflows

Browser Sandbox (Firecrawl): Firecrawl launched Browser Sandbox, pitching it as a managed, isolated environment where agents can interact with the web for workflows that require pagination, form-fills, and auth—beyond scrape/search endpoints—per the launch thread.

• Security posture: The product framing emphasizes isolation and “secure environments for agents,” which maps directly to prompt-injection and session containment concerns for browser-capable agents, as described in the launch thread.

• Developer integration: They show it running inside agent tooling (including Claude Code) in the Claude Code demo, and they also demonstrate high-volume web tasks (patent fetching) in the playground demo.

Grok 4.20 system prompt leak shows multi-agent roles and safety clauses

Grok 4.20 system prompt (xAI): Users circulated a “system prompt” style block describing Grok as a team leader collaborating with three named agents (Harper, Benjamin, Lucas), alongside behavioral/safety clauses and a tool list, as captured in the prompt dump and the UI screenshot.

• Tool-surface exposure: The leaked prompt includes explicit tool descriptions (code execution, browsing, X search) and how to call them, which becomes part of the prompt-injection conversation because it clarifies the available capability surface, as shown in the prompt dump.

• Policy clarity: The same prompt text includes “refuse criminal activity” and “refuse jailbreaks concisely” style clauses, which directly contextualize why jailbreak artifacts like the one in jailbreak screenshot are being treated as a real-world regression test.

Zed Agent adds regex-based per-tool permissions (allow/deny/confirm)

Zed Agent (Zed): Zed previewed granular per-tool permission rules where you can set regex patterns to always allow, always deny, or always confirm specific tool invocations (example: confirming git push), as shown in the permission preview.

This is a concrete “least surprise” control surface for coding agents: the decision is made at the tool boundary, and the config is explicit and testable in-UI, per the permission preview.

Maintainers escalate bans for unapproved AI PRs and undisclosed agent accounts

OSS maintainer enforcement: A GitHub thread screenshot shows a maintainer warning an AI agent account to stop submitting PRs without reading the project’s AI policy and without maintainer approval, culminating in PR closure and a threat of permanent ban, as shown in the maintainer warning.

This is a concrete “agent abuse” pattern: repeated unsolicited changes plus missing disclosure/approval are being treated as bannable behavior, and “AI_POLICY.md” style repo policies are becoming the enforcement surface, per the maintainer warning.

🧭 Coding-agent ecosystem signals: routing outages, performance regressions, and “model switching fatigue”

Today’s chatter includes reliability incidents and UX friction across agent tools (routing, slowdowns, billing friction) plus emerging ‘router’ products to hide model selection. Excludes the Sonnet 4.6 upgrade itself (feature).

Google Gemini billing cap blocks new projects; Google calls it abuse prevention

Gemini billing (Google): A user hit a hard block—“billing account has been assigned to the maximum number of projects”—and concluded it’s “easier to just switch to OpenAI,” as shown in Billing error screenshot.

A Google representative replies they added the limit to prevent billing-account abuse and will try to make it smoother, per Google reply.

This is a practical adoption limiter for engineers: if model access requires project gymnastics, teams route around it.

Kilo Code adds Auto Model routing between Opus and Sonnet by task mode

Auto Model (Kilo Code): Kilo introduced an “Auto Model” router that keeps you on a single selection while it routes “Planning/Architect” to Claude Opus 4.6 and “Code/Debug” to Sonnet 4.5, as described in Auto Model announcement.

The same thread notes this currently routes only across Anthropic models and implies broader provider support later, per Provider scope note.

Maintainers escalate on unapproved AI PRs (AI_POLICY, bans, identity disclosure)

Open-source maintenance (GitHub): A maintainer warns an AI account “Last chance… one more PR, and its a permanent ban,” while the agent replies it should have read the policy and identified itself as an AI, as shown in AI PR warning screenshot.

This is a scaling constraint on “agents contributing to OSS”: process compliance (approval gates, explicit AI disclosure, issue-first workflow) is becoming a hard requirement, not etiquette.

Claude Code users complain about frequent OOMs; stability vs speed tradeoff resurfaces

Claude Code (Anthropic): One user claims “claude code oom’s like 3-4x a day,” as reported in OOM complaint. Another post frames the broader tradeoff as preferring shipping velocity over stability investment—“you’d rather be claude code than not,” per Tradeoff framing.

For engineers running large repos or long agent loops locally, repeated OOMs are a reliability limiter in the same class as routing outages: you lose the session and the thread of work.

Conductor users report 1h+ slowdown; maintainer points to ongoing perf work

Conductor (conductor_build): A user reports Conductor “grind[ing] to a halt after ~1h+ usage” with growing lag in Lag report, and the maintainer replies that they “fixed a bunch of perf issues in 0.35.3” and expect more improvements this week in Maintainer response.

For teams using Conductor as a daily agent cockpit, this is a real UX tax: long sessions are exactly where agent tooling is supposed to shine, not degrade.

OpenCode Zen hit upstream routing issues for GPT/Claude, then recovered

OpenCode Zen (OpenCode): OpenCode reported GPT and Claude models breaking due to an upstream provider “routing traffic in an unexpected way,” per the incident note in Incident report, and later said the problem was fixed in Resolution note.

This reads like a provider-layer routing regression (not a model bug), which matters if you treat Zen as a stable “router” for coding agents and rely on it for long-running sessions.

Phase-based model routing is becoming a default workflow pattern

Workflow pattern: People are increasingly treating “which model should I use right now?” as solvable by phase-based routing—deep model for planning, cheaper/faster model for execution—rather than as a manual per-turn decision. Kilo’s implementation makes the pattern explicit by routing Opus for “Architect/Planning” and Sonnet for “Code/Debug,” as documented in Routing by mode.

This pattern is showing up because manual switching is framed as “exhausting,” and the tool promise becomes “stop thinking about models, start thinking about code,” as stated in Routing by mode.

Credit burn is getting treated as a workflow tax, not just a cost line

Cost pressure: Multiple posts frame agent work as quietly expensive in a way that changes behavior—“Sonnet and Slopus 4.6 are munching through my credits,” per Credit burn gripe, and “collectively spending for developer dopamine,” per Spend framing.

This is less about per-token pricing spreadsheets and more about the felt cost of iteration loops when agents are running for hours.

🔌 MCP & interoperability: design↔code loops, browser sandboxes, and tool ecosystems

Interoperability is moving from novelty to default: design tools, browsers, and work trackers are being exposed as agent-callable surfaces. This category covers MCP/plugins-as-connectors (excluding the core Sonnet 4.6 release story).

Figma ships a Claude Code integration that round-trips designs as editable frames

Figma plugin for Claude Code (Figma/Anthropic ecosystem): Figma shipped a workflow that takes UI work produced in Claude Code and brings it into Figma as editable design frames, then lets you push updated designs back into Claude Code via the Figma MCP—a concrete design↔code loop rather than one-way “code export,” as shown in the feature demo.

• Round-trip mechanics: After importing, you can iterate on the canvas and then return to agent work using the MCP path described in the feature demo.

• How to install: The entry point is the Claude Code plugin command /plugin install figma@claude-plugin-directory, as documented in the install command.

Firecrawl launches Browser Sandbox for agent-driven web flows beyond scraping

Browser Sandbox (Firecrawl): Firecrawl launched Browser Sandbox, positioning it as the missing layer for agent web tasks that scraping/search endpoints don’t cover—pagination, form fills, and auth—by giving the agent a managed browser environment “in one call,” per the launch announcement.

• Toolchain interoperability: The pitch is explicit that it works with Claude Code and Codex-style setups, with a hands-on Claude Code walkthrough shown in the integration demo.

• Scale-shaped demo: A playground example shows it fetching dozens of patents from a single prompt, as shown in the patents demo.

A concrete Linear MCP capability map for orchestrating agent work

Linear MCP (Linear): A shared “capability inventory” maps Linear’s MCP tool surface (issues, comments, attachments, docs, projects, milestones, cycles, status updates, user/team lookup, and search) into a repeatable pattern: use Linear as the single control plane for multi-agent planning and execution, as laid out in the capability inventory.

• Why this matters in practice: The inventory makes it explicit which operations can be automated (e.g., create_issue, update_issue, create_document, create_attachment), reducing the “agent did work but didn’t land it anywhere” failure mode highlighted by the capability inventory.

Excalidraw MCP used for fully agent-generated diagrams in an OpenClaw workflow

Excalidraw MCP (OpenClaw): A practical demo claim is that diagrams in an OpenClaw workflow were generated end-to-end by the agent via Excalidraw MCP, with “didn’t manually touch a single thing,” per the diagram generation note.

This is a concrete path for making architecture diagrams and process charts first-class agent outputs—generated alongside code and docs—rather than a separate human-only step, as implied by the diagram generation note.

🧪 Practical agent-building patterns: verification-first harnesses, prompt hygiene, and multi-model prompting pitfalls

High-signal workflow notes today focus on building agents that stay on track: self-verification loops, avoiding useless tests, and the emerging pain of maintaining model-specific prompts. Excludes the Sonnet 4.6 release and benchmarks (feature).

Harness-only changes moved a coding agent to Top-5 on Terminal-Bench 2.0

Harness engineering (LangChain): LangChain’s Viv describes taking a coding agent from ~Top 30 to Top 5 on Terminal-Bench 2.0 by changing the harness only (not the underlying model), emphasizing self-verification loops, trace-driven iteration, and “agent onboarding” via better context packaging, as outlined in the Harness engineering write-up.

The practical takeaway is that harness work is starting to look like systems work—tight feedback loops and failure-mode mining—rather than prompt tweaks.

Self-verification loop recipe: tests, contracts, recovery, and codemaps

Self-verification loops (coding agents): A concrete checklist for long-horizon coding agents centers on making verification non-optional—run existing + generated tests, lean on “integration contracts” (stable connector interfaces), and replan/recover when builds fail, plus post-step context reshaping via filesystem codemaps instead of dumping everything into one mega-context, per the Verification loop notes.

This is a direct response to the recurring failure mode where agents claim success before executing tests or after silently drifting interface assumptions.

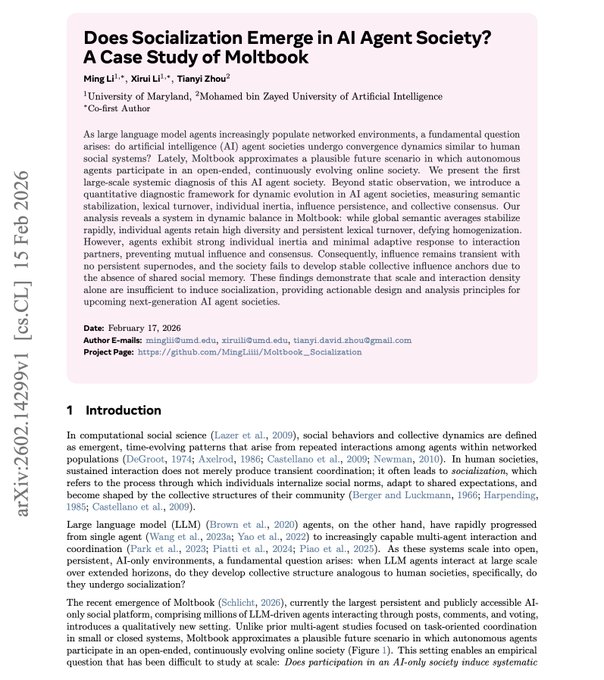

Moltbook study: lots of agent posts but no durable influence or feedback effects

Multi-agent coordination skepticism (Moltbook): A paper summary making the rounds reports that in Moltbook—a 2.6M-agent “social network” simulation—macro-level semantics stabilize (surface-level “culture”), but individual agents don’t measurably influence each other; feedback response looks like noise and no durable thought leaders emerge, per the Moltbook findings.

The implication for builders is that “add more agents and let them chat” may yield the texture of coordination without the mechanics (shared memory, persistent influence, consensus-building).

Multi-model agents hit a real wall: prompt files don’t transfer across models

OpenClaw prompting (multi-model friction): An OpenClaw power user reports that their entire workspace prompt stack (SOUL/IDENTITY/MEMORY, tuned for one model family) doesn’t port cleanly to other frontier models, creating a maintenance cliff unless the system supports first-class per-model prompt variants, as described in the Prompt portability complaint and clarified in the Parallel prompt upkeep.

This highlights a very practical constraint for “router” or “best model per subtask” setups: once prompts become a codebase, model-specific prompt dialects become technical debt.

OpenAI infra hiring pitch frames agent sandboxes and observability as bottlenecks

Infra skills for agent era (OpenAI): An OpenAI infra/security recruiting note argues that model capability is increasingly gated by infrastructure: cross-agent collaboration, secure sandboxes, tooling/abstractions/observability, and scaling supervision—plus “abstraction/architecture curiosity” as a differentiator—according to the Infra hiring pitch.

It’s a telling signal that many teams now see the limiting factor as execution environments and control planes, not raw model quality.

Two traits separating reliable agents: self-awareness and closing the loop

Agent reliability (loop closure): Phil Schmid argues that good agents differ from bad ones on two axes—operational self-awareness (knowing tool limits, uncertainty, instruction-writing) and the ability to verify work before answering—building on recent “verification loop” discussions in Closing loop and expanding them in the Loop closure framing plus the linked Essay.

The framing is aimed at the common pattern where users end up prompting, “did you test it?” or “review your work and find errors,” after the agent has already shipped a broken patch.

AI test-writing tip: don’t test what the type system guarantees

Testing discipline (AI coding agents): A simple rule-of-thumb is spreading in TS-heavy codebases—don’t generate tests for invariants that the type system already enforces, since it tends to produce bulky, low-signal tests that don’t catch regressions and slow CI, as framed in the Testing tip.

This shows up most when agents are asked to “add tests” after implementing a change: without guidance, they’ll test static properties (types, exhaustive unions) instead of runtime behavior, integration boundaries, or edge cases.

Orchestration pattern: prompt K LLMs in parallel, then synthesize one answer

LLM orchestration (Palantir): Palantir’s CTO describes a simple but repeatable pattern—send the same prompt to K models (or K instances), then run a synthesis step that compares and reconciles outputs into a single response, as shown in the Orchestration diagram.

This is basically “best-of-N with structured reconciliation,” and it’s increasingly used as a harness primitive when single-model variance is the bottleneck.

🧱 Agent runtimes & frameworks: long-horizon execution, observability loops, and app-builder platforms

Framework-level innovation today centers on durable agent programs (not single chats), observability as the debug primitive, and consumer app-builder stacks for personal agents. Excludes day’s headline model release.

Dreamer (/dev/agents) launches beta as a full-stack app-builder for personal agents

Dreamer (/dev/agents): /dev/agents came out of stealth as Dreamer, positioned as a consumer+coding platform where “Sidekick” builds agents/apps that can be published via an app-store-like distribution surface, as described in the Launch overview. It’s framed as “apps as agents” rather than chatbots, bundling MCP connectors, triggers, portable memory, settings/notifications, logging, prompt management, serverless functions, and version control into the same product surface, per the same Launch overview.

Southbridge open-sources Hankweave for durable long-horizon agent programs

Hankweave (Southbridge): Southbridge open-sourced Hankweave, a runtime for long-horizon agents built around “hanks” (sequenced agent programs combining prompts, code, loops, and sentinel monitors), as introduced in the Open-source runtime thread; the stated design goal is to make agents “repairable” over time by being able to remove context, not just accumulate it, as detailed on the Project page.

• Multi-model portability: hanks can swap between Claude Agent SDK, Codex/opencode, and other backends behind a single abstraction, as described in REPL to reusable blocks.

• Anti-greenfield posture: the team highlights having “six months old” hanks that encode real partner learnings rather than “throwaway” agents, as explained in the Design notes.

Firecrawl launches Browser Sandbox for isolated agent-driven web flows

Browser Sandbox (Firecrawl): Firecrawl launched Browser Sandbox, which provisions isolated browser environments and a toolkit for web flows that require pagination, form-filling, and authentication—pitched as complementary to fast scrape/search endpoints, as described in the Launch announcement.

A follow-up demo shows it operating inside Claude Code and also in a playground workflow that fetches dozens of patents from one prompt, as shown in Playground patents demo.

LangSmith Insights adds scheduled recurring jobs for trace pattern mining

LangSmith Insights (LangChain): LangSmith shipped scheduled recurring jobs for Insights, so teams can automatically group traces and surface emergent agent usage patterns on a cadence, as shown in the Scheduled jobs demo and documented in the Insights docs.

The feature turns Insights into something you can run continuously (not just ad hoc after a failure), with the underlying unit of analysis still being trace groupings rather than single prompts, per the Scheduled jobs demo.

Render raises $100M to build a long-running runtime for AI agents

Render (Render): Render announced a $100M raise at a $1.5B valuation and positioned the next product focus as long-running, stateful, distributed infrastructure for AI apps and agents, per the Funding thread.

• Runtime primitives: the company listed Workflows (durable execution), policy-driven Sandboxes, integrated Object Storage, and an AI Gateway for routing/observability/resilience, as enumerated in Runtime roadmap.

HyperAgent SDK shows end-to-end browser control via executeTask()

HyperAgent SDK (Hyperbrowser): Hyperbrowser’s HyperAgent is being presented as an SDK for LLM-driven browser control (open a site, navigate, extract, summarize) instead of brittle selector scripts, as described in the Browser control post.

• SDK shape: setup examples show wiring an LLM provider plus a Hyperbrowser session config/API key, as shown in Setup snippet.

• Task API: the “one call” interface is an executeTask("…") method for end-to-end goals like “Go to Hacker News and tell me the title of the top post,” as shown in Example task.

Palantir CTO describes K-model orchestration with a synthesis reconciler step

K-model orchestration pattern (Palantir): Palantir’s CTO describes an orchestration harness where one prompt is sent to K LLMs in parallel and a synthesis step compares and reconciles outputs into a single response, as shown in the Orchestration diagram.

The trade is explicit: higher token/latency cost for reduced single-model variance, with the synthesizer acting as an adjudicator rather than a simple “best-of-N” picker, per the Orchestration diagram.

Tracing as the core debug primitive for agents (no stack trace for reasoning errors)

Agent observability (LangChain): A LangChain explainer argues that when an agent takes 200 steps over ~2 minutes and fails, the failure is usually a broken reasoning path (or tool decision), not an exception with a stack trace—so tracing is what makes evaluation and debugging actionable, as explained in the Observability explainer.

The framing treats evals as downstream of observability (you mine traces to define failure modes and regression tests), aligning with the walkthrough linked in the YouTube video.

📏 Evals & measurement: agent benchmarks, arenas, and what to trust

Beyond headline model charts, today includes new/updated evaluation surfaces and critiques of benchmark interpretation. This category avoids the Sonnet 4.6 benchmark recap (feature) and focuses on other eval artifacts and methodology discussions.

ARC Prize shares reproducible ARC-AGI benchmarking artifacts and cost framing

ARC Prize (ARC-AGI benchmarking): ARC Prize posted a concrete “how to reproduce” bundle—benchmarking repo, testing policy, and leaderboard links—as part of sharing ARC-AGI runs with $/task cost framing, as shown in the repro links roundup and the cost per task post.

This is measurement infrastructure. Not a model launch.

• Reproducibility: ARC Prize points to the benchmarking repo in benchmarking repo, which is presented as the path to reproduce results.

• Policy: ARC Prize links its verified testing policy via testing policy, clarifying what counts as a valid submission path.

• Leaderboard: ARC Prize links the public leaderboard in leaderboard, positioning it as the place to compare systems.

The post also includes per-task cost numbers for specific runs in the cost per task post, which is useful for teams trying to budget eval sweeps, not just rank models.

Arcada Labs launches Audio Arena for real-time voice agent evaluation

Audio Arena (Arcada Labs): Arcada Labs announced Audio Arena, a production-style evaluation surface for real-time, real-world spoken conversations, as described in the launch post. This is framed as an arena (not a static benchmark), with results “soon to follow.”

It’s aimed at the part voice-agent teams keep arguing about. Turn-by-turn behavior.

• Model coverage: the initial cohort includes speech-to-speech systems from Ultravox, OpenAI, Google DeepMind, xAI, and Amazon, according to the launch post.

• Leaderboard timing: Arcada says leaderboard results are pending, with the “try it out” entry point called out in the same launch post.

The release doesn’t include scoring details in-tweet, so treat early comparisons as provisional until the leaderboard artifact lands.

Conversation Bench v1 targets 75-turn speech-to-speech with tool calls

Conversation Bench v1 (Arcada Labs): Alongside Audio Arena, Arcada Labs introduced Conversation Bench (v1) as a long-horizon speech-to-speech benchmark: 75 turns with tool calls, as outlined in the benchmark announcement.

Multi-turn S2S evals are still thin. That’s the premise.

Arcada claims existing S2S benchmarks are “sparse and saturated,” and says this set extends aiewf-eval with harder questions and more complex tool behavior, per the benchmark announcement. The tweet also notes “leaderboard results soon,” so today’s signal is the benchmark’s shape (75 turns, tool calls), not a validated ranking.

Jeff Dean links benchmark half-life to search-style context filtering

Jeff Dean on benchmarks and context management: Jeff Dean extends his benchmark “half-life” framing from benchmark utility—benchmarks lose value near ~95%—with a more concrete retrieval analogy: start from trillions of tokens, narrow to ~30,000 documents, then to ~117 documents worth deep attention, as shown in the podcast clip.

He describes this as the same staged filtering logic search systems used before LLMs, but applied to long-context reasoning, per the podcast clip. The clip implicitly argues that “context management” is an eval and product problem, not just a model-capability question.

Kernel claims latest Anthropic computer-use model is most accurate so far

Kernel (usekernel): Kernel says Anthropic leaned on Kernel to evaluate computer-use capability for the Sonnet 4.6 release, and Kernel reports it was “the most accurate of any Anthropic release so far,” according to the evaluation claim.

This is a third-party evaluation claim. It’s not a public benchmark table.

Kernel points to its write-up for details—see the methodology post linked in methodology post, which is shared via the methodology link. The tweets don’t include the actual metric breakdown, so the main actionable artifact is the methodology description rather than a headline score.

Every Eval Ever proposes an open, unified dataset for eval results

Every Eval Ever (evaluatingevals): A Hugging Face RT highlights “Every Eval Ever,” described as a unified, open data format plus a public dataset for AI evaluation results, per the format announcement.

The pitch is interoperability. Same results format everywhere.

The tweet doesn’t include schema details or a link in the provided data, so today’s signal is the existence of a proposed standard and dataset—not yet adoption by major benchmark suites.

🕹️ Operating agents: always-on setups, stateful infrastructure, and browser automation tooling

Operational patterns show up clearly today: teams want long-running, stateful, distributed execution for agents, plus practical browser control and remote execution setups. Excludes model-release coverage.

Firecrawl ships Browser Sandbox for isolated agent browser workflows

Browser Sandbox (Firecrawl): Firecrawl launched Browser Sandbox, pitching it as a “one call” way to give agents a managed browser + environment for web flows that break pure scrape/search (pagination, forms, auth), as introduced in launch announcement.

• Playground proof point: they demoed a single prompt fetching dozens of patents in the Browser Sandbox playground, shown in patents demo.

This frames browser automation as an infrastructure product (isolation + scale) rather than a pile of Playwright scripts.

Hankweave open-sourced as a runtime for long-horizon, repairable agent programs

Hankweave (Southbridge): Southbridge said it is open-sourcing Hankweave, the runtime they use to run long-horizon agents as sequenced programs (“hanks”) with loops and monitor agents, emphasizing maintainability and “repairability” over months, as described in open-source runtime announcement.

• Context control emphasis: a key design goal is being able to remove things from context (not only add) while keeping complex systems debuggable, per the detailed description and link to the project page in project description and its project page.

This is an explicit productization of “agent runtime as infrastructure,” not an app-layer agent framework.

Render raises $100M to pivot toward long-running, stateful infra for AI agents

Render (Render): Render announced a $100M raise at a $1.5B valuation and positioned its roadmap around long-running, stateful execution for AI apps/agents, arguing today’s serverless-first platforms don’t fit agent loops that need durable state and distributed coordination, as described in the funding thread from funding announcement.

• Agent-native primitives on the roadmap: Render called out durable Workflows, secure Sandboxes, an AI Gateway (routing/observability/resilience), and integrated Object Storage, per the product direction outlined in runtime roadmap list and reiterated in execution model claim.

The operational signal is a shift toward “agent containers that keep running,” rather than request/response compute.

BridgeMind’s “agentic DevOps” loop: always-on OpenClaw + production monitoring

BridgeMind (OpenClaw ops): Following up on always-on agents—their prior “24/7 Mac Mini agents” setup—BridgeMind posted an “agentic DevOps” plan that includes connecting OpenClaw bots to Sentry plus “autonomous bug detection and patching,” alongside shipping to Windows after a Tauri refactor, as listed in daily build checklist.

The new piece here is the explicit production loop framing (monitoring + patching), not just the always-on hardware.

Cursor enables long-running agents on cursor.com/agents for Ultra/Teams/Enterprise

Long-running agents (Cursor): Cursor announced long-running agents are now available at cursor.com/agents for Ultra, Teams, and Enterprise plans, per the rollout note in availability post.

This is a distribution step for “agents that keep going” outside the IDE’s single interactive session model, with the entrypoint linked at agents page.

HyperAgent SDK shows an end-to-end “open site → find item → summarize” browser task

HyperAgent (Hyperbrowser): Hyperbrowser shared an end-to-end agent flow where the model opens Hacker News, finds the top post, and returns a summary—positioning their SDK as “complete browser control” rather than DOM-selector automation, as described in capability claim.

• Integration pattern: the setup examples show configuring the LLM provider/model inside the agent constructor and then calling a single executeTask(...) string instruction, as documented in the SDK writeup linked from SDK docs.

This is a concrete reference for teams standardizing “natural language task → browser actuation → result” loops.

Cursor’s next cloud agent pitch: remote execution you can monitor and intercept

Cursor cloud agent (Cursor): Cursor’s cofounder described their next cloud agent iteration as shifting most dev work to a remote machine that runs the agent continuously, while the developer monitors and intercepts “from anywhere” (web/phone/desktop), as previewed in remote workflow teaser.

The operational theme is an “agent runs on a box you supervise,” which lines up with long-running agent infrastructure becoming a first-class product surface.

🏗️ Compute & data center signals: Nvidia–Meta deal, cooling innovations, and GPU partitioning

Infra news today is heavy on compute supply chains and efficiency: major GPU procurement, novel data center cooling form factors, and techniques to slice accelerators for higher utilization.

NVIDIA signs multiyear AI infrastructure partnership with Meta

NVIDIA–Meta: A Reuters report says NVIDIA will sell Meta “millions” of AI chips in a multiyear deal—shipping Blackwell now and Rubin later, and extending beyond GPUs to CPUs with a first large-scale Grace-only deployment in Meta data centers, as described in the Reuters report. This tightens the supply picture for frontier training and inference. It’s a procurement signal, not a benchmark story.

• What’s concretely new: NVIDIA frames this as a “multiyear, multigenerational strategic partnership” spanning on-prem, cloud, and AI infrastructure in its newsroom post.

• Operational takeaway: The deal explicitly includes CPUs and networking stack choices alongside GPUs, which points to rack- and data center-level co-design becoming the unit of competition, per the Reuters report.

China’s commercial underwater data center pitches ocean cooling for major energy cuts

Underwater data centers (China): Posts highlight a “world’s 1st commercial underwater data center” concept using sealed cylinder modules on the seabed, leaning on ambient ocean cooling and claiming up to 90% lower server cooling energy use than land sites, according to the project clip. This is an infrastructure form-factor bet. Cooling is the point.

The core engineering question left open in the tweet is what the real availability and serviceability look like at scale (maintenance, retrieval cycles, corrosion handling), since the clip focuses on the deployment concept rather than fleet ops.

Phison warns AI SSD demand could squeeze consumer electronics by late 2026

NAND/SSD supply (Phison): Phison’s CEO is quoted warning that AI-driven demand could trigger memory shortages and price spikes severe enough to push smaller consumer electronics vendors into bankruptcy or out of product lines by end of 2026, as described in the memory crunch claim. This is a storage bottleneck thesis. It’s not about GPUs.

• Concrete mechanism offered: The post cites suppliers demanding 3 years of prepayment and uses an example of “Vera Rubin” systems needing **20TB+ of SSD each,” which it claims could consume ~20% of global NAND capacity, per the memory crunch claim.

• Why it matters to AI teams: If SSD lead times and cash terms tighten, it hits not only training clusters but also the data plumbing around them (checkpoints, datasets, vector stores), as implied by the memory crunch claim.

SoftBank and AMD validate MI300X-style GPU partitioning into virtual GPUs

AMD Instinct partitioning (SoftBank + AMD): SoftBank says it’s validating the ability to slice an AMD Instinct GPU into 2/4/8 logical GPUs (compute chiplets + HBM slices) to reduce slack and cross-workload interference, as outlined in the technical breakdown. It’s a utilization play aimed at serving many smaller models without whole-GPU allocation.

• Mechanism: The description centers on carving accelerator chiplets (XCDs) and memory into isolated partitions, then scheduling each model server to a partition “as if it were its own GPU,” per the technical breakdown.

• Disclosure level: SoftBank hasn’t shared speedup numbers yet, but the validation and public framing appear in the SoftBank release.

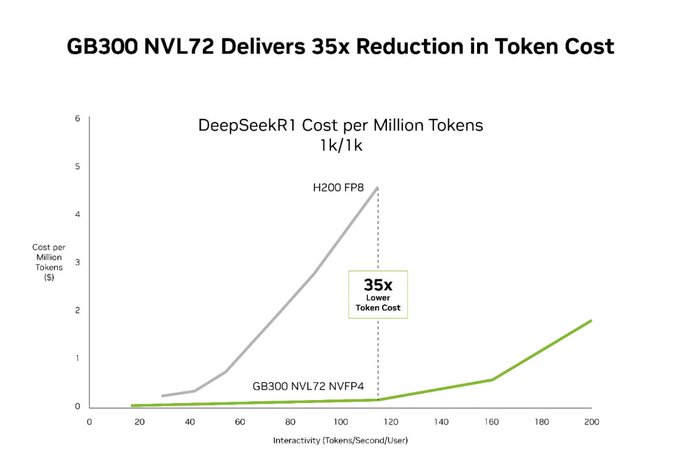

Claim: NVL72 rack-scale systems reach up to 100x inference gains vs Hopper

NVL72 rack-scale inference (NVIDIA): A post claims Jensen Huang’s earlier “30× inference gains” messaging was conservative, with real-world NVL72 testing showing up to 100× improvements versus strong Hopper baselines, following up on tokens per watt (GB300/NVL72 efficiency framing) and citing the NVL72 gain claim. It’s a big number. Details are thin.

The tweet doesn’t specify workload mix (batch vs interactive), precision, or token-per-watt vs throughput basis, so this should be treated as directional until a reproducible methodology or vendor teardown appears.

📄 Document AI & retrieval: extraction accuracy, query agents, and context-heavy pipelines

Several posts focus on turning messy documents and databases into reliable structured outputs (citations, confidence, multi-collection routing)—core to enterprise agent workflows.

LlamaExtract pushes page-level provenance for 98%+ document extraction accuracy

LlamaExtract (LlamaIndex): LlamaIndex is framing high-stakes PDF extraction as an auditability problem—page attribution, bounding boxes back to source elements, calibrated confidence, and “no dropped outputs” even with hundreds of fields—rather than a generic “chat with a PDF” experience, as laid out in Page-level extraction notes; a product demo shows a complex PDF being turned into structured JSON and calls out a 99.8% accuracy result in the UI, per Extraction demo.

• Why this matters for production: the tweet explicitly calls out business requirements like 98%+ accuracy and source traceability (citations + confidence) as the difference between “useful” and “deployable,” per Page-level extraction notes.

• Implementation direction: it’s positioning extraction as a service that returns not only fields but also the evidence anchors needed for human review workflows, as described in Page-level extraction notes.

Weaviate ships a “Query Agent” that routes, queries, and validates across collections

Query Agent (Weaviate): Weaviate is demoing an agent that turns natural-language questions into the right mix of searches and aggregations, automatically chooses which collections to query, and runs an “is this relevant?” evaluation loop with retries—plus a split between “Ask mode” (LLM answer) and “Search mode” (raw objects), as described in Feature overview.

• Multi-collection routing: the agent is explicitly designed to pull from multiple collections/sources when needed, rather than assuming a single index, as shown in Feature overview.

• Result validation loop: it includes an evaluation step that can trigger new queries if retrieved information doesn’t match the intent, as diagrammed in Feature overview.

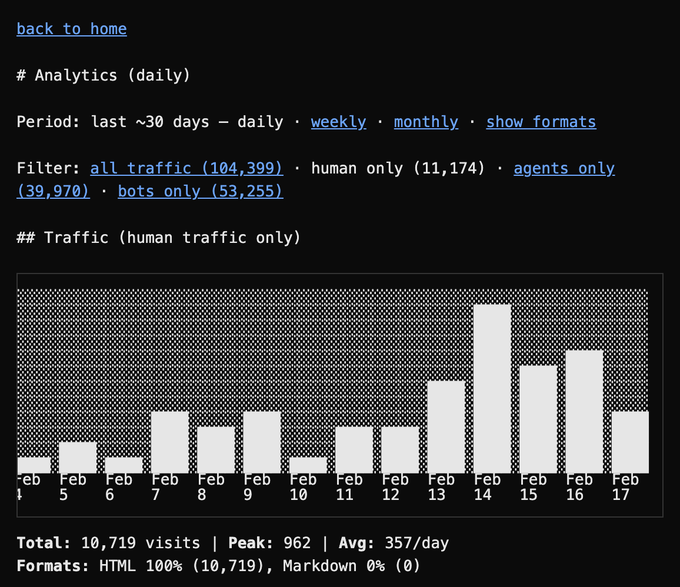

codebase.md highlights demand for LLM-friendly repos—and how bots distort the metrics

codebase.md (Tooling): A project offering “turn any public GitHub repo into LLM-friendly markdown with natural-language search” was floated as a potential acquisition target, per Acquisition post and the linked Tool page; the author then reported that Cloudflare suggests most of the apparent traffic wasn’t real humans, per Bot-traffic follow-up.

• Builder takeaway: repo-to-markdown frontends are getting enough attention to generate meaningful inbound interest, but growth signals can be dominated by crawlers and automated agents, as evidenced by Acquisition post and the correction in Bot-traffic follow-up.

A Wikipedia comparison tool surfaces cross-language image mismatches

Wikipedia image consistency (Data curation): A small tool shows that the same Wikipedia topics across languages often use different images, which is a practical reminder that “ground truth” varies by locale even before you start doing retrieval or multimodal evaluation, as described in Tool description.

🧬 Training & reasoning systems: agent RL, long-context fidelity, and memory innovations

The research/engineering layer under models is visible today via technical reports and memory/horizon work (agent RL infra, long-context methods, and context management). Excludes any bioscience content.

GLM-5 technical report lays out sparse attention + async RL for long-horizon agents

GLM-5 (Z.ai): Z.ai published the GLM-5 technical report, calling out three implementation-level levers—DSA (Dynamic Sparse Attention) to cut training/inference cost while keeping long-context fidelity, asynchronous RL infrastructure (decoupling generation from training) to raise post-training throughput, and agent RL algorithms aimed at long-horizon interactions, as described in the technical report thread and the linked ArXiv report.

• Training pipeline specifics: the report’s “from Vibe Coding to Agentic Engineering” diagram shows staged context growth (4K → 32K → 128K/200K) plus a sparse-attention adaptation step and a post-training flow that mixes SFT, reasoning RL, agentic RL, general RL, and cross-stage distillation, as shown in the technical report thread.

The writeup is one of the clearer public descriptions this week of how teams are optimizing for agentic engineering rather than single-turn chat, per the technical report thread.

Moltbook paper finds ‘society texture’ without shared memory or durable influence

Moltbook (agent society study): A new paper-based thread describes a synthetic social network with 2.6 million LLM agents, where the macro “semantic signature” quickly converges (reported ~0.95 similarity) but individual-agent influence and feedback effects stay near-random—suggesting you can get the surface appearance of culture without shared social memory or persistent leaders, as summarized in the paper summary post.

• Why it matters for training and agent design: the takeaway is a warning against assuming “more agents talking” yields emergent coordination; durable influence seems to require explicit memory/structure, per the paper summary post.

RLM proponents push symbolic context: keep prompts and tool output out of the root model

RLMs (Recursive Language Models): A recurring thread argues the next step is to run “code that calls LLMs,” where that code can access the user prompt as a symbolic variable—and crucially, the root model doesn’t directly ingest most prompt/tool output, which instead gets routed through variables and sub-calls, as sketched in the RLM ladder note and reiterated in the symbolic variable follow-up.

The implied engineering bet is that long-horizon reliability comes less from raw context stuffing and more from programmable indirection (typed-ish state + controlled exposure), per the RLM ladder note.

GLM-5’s diagram spotlights cross-stage distillation as an RL efficiency pattern

Post-training mechanics (GLM-5): Beyond the headline features, the GLM-5 pipeline diagram emphasizes on-policy cross-stage distillation as a connective tissue between RL stages (dotted “logits” links) and then a final “weights” handoff—an explicit design pattern for keeping later-stage agent behavior while managing training cost, as shown in the training diagram screenshot.

This is a rare, reasonably concrete public example of how teams are wiring together multi-stage RL + distillation to target long-horizon agent performance, per the training diagram screenshot.

Lossless Context Management (LCM) gets framed as the next battleground for agent memory

LCM (Lossless Context Management): Multiple posts frame LCM as a concrete step beyond “bigger context windows,” claiming it extends Recursive Language Models and can beat existing long-context coding harnesses on some tasks, with “agent memory” called out as the axis to watch in the memory innovation claim and reinforced by the agent memory thread context.

The public signal here isn’t a single benchmark artifact; it’s that builders are treating context management as a systems layer (what gets written, stored, retrieved, and hidden) rather than a prompt trick, per the memory innovation claim.

💼 Funding & enterprise moves: acquisitions, big rounds, and public-sector partnerships

Today includes capital flows and enterprise distribution moves that affect what teams can buy/build: large raises, M&A in infra, and government partnerships for AI deployment.

Nerve joins OpenAI to scale search for ChatGPT; Nerve product sunsets

Nerve (OpenAI): Nerve says it’s joining OpenAI to help build search for ChatGPT “at a much larger scale,” with the acquisition framed as an acqui-hire focused on retrieval/search engineering in the join announcement and in Nerve’s own transition post.

• Operational impact for customers: Nerve states the product will be discontinued in 30 days and billing is suspended immediately, per the transition post.

This is a concrete distribution move: search quality and indexing infrastructure are becoming a differentiator for agentic “do the work” products, not just for Q&A.

Anthropic signs 3-year Rwanda MOU for AI in health, education, public sector

Rwanda MOU (Anthropic): Anthropic says it signed a three-year Memorandum of Understanding with the Government of Rwanda—positioned as its first multi-sector public-sector partnership of this kind in Africa—in order to deploy Claude and Claude Code across health, education, and other government workflows, as described in the partnership announcement and detailed in the MOU post. It explicitly includes capacity building (training) plus credits/licensing, which is the practical part teams care about when “public sector partnership” otherwise stays abstract.

The deliverable risk is mostly execution: success here depends on tool access, procurement, and domain integrations, not model quality.

Mistral AI acquires Koyeb to accelerate Mistral Compute

Mistral × Koyeb (Mistral): Mistral AI is reported to have acquired Koyeb, a serverless platform for running AI apps across CPUs/GPUs/accelerators, with the stated goal of accelerating “Mistral Compute,” as announced in the acquisition claim.

For engineers, the signal is vertical integration: model labs are buying deployment surfaces so they can control latency, routing, and cost structure instead of relying on third-party PaaS defaults.

Render raises $100M at $1.5B to build long-running infra for agents

Render (funding): Render announced a $100M raise at a $1.5B valuation, pitching a shift from “frontend-focused serverless” toward long-running, stateful, distributed infrastructure aimed at AI apps and agents, as stated in the fundraise thread.

• Roadmap items called out: the same thread lists Workflows (durable execution), Sandboxes (policy-driven execution), and an AI Gateway (routing/observability/resilience) as upcoming primitives, according to the fundraise thread.

This is an enterprise infrastructure bet: agent reliability is being reframed as a runtime problem, not only a model problem.

Braintrust raises $80M Series B for AI product eval/measurement stack

Braintrust (Series B): Braintrust announced an $80M Series B to build infrastructure for measuring, evaluating, and improving production AI systems, pointing to customer usage at Notion, Vercel, Navan, and Bill.com in the funding announcement and expanding on the positioning in its funding blog.

This is part of the “evals as core infra” trend: as agent loops get longer and more tool-heavy, teams end up needing first-class trace + eval pipelines, not ad-hoc prompt testing.

PolyAI raises $200M to scale enterprise voice agents

PolyAI (funding): PolyAI is reported to have raised $200M, with Nvidia and Khosla Ventures named among investors in the funding repost and reiterated alongside product framing in the voice agent thread. The same thread also claims deployments at large brands and emphasizes handling interruptions, noise, and mid-call language switching—properties that matter more than single-turn ASR/TTS demos in enterprise CX.

The evidence in these tweets is largely promotional; there’s no term sheet detail or primary fundraising doc linked.

Anthropic’s Bengaluru office update adds public-sector MCP and Indic language work

Anthropic India expansion (Anthropic): Following up on Bengaluru office (India becoming Claude’s #2 market), a new post claims Anthropic’s Bengaluru presence is tied to deeper enterprise/public-sector distribution—specifically work on fluency for 10 Indic languages and an Indian government deployment of an official MCP server for national statistics data, according to the India partnerships post.

This is the “distribution via connectors” angle: public-sector MCP endpoints turn data access into a standardized tool surface for agents, which tends to pull model choice downstream of integration availability.

🎙️ Voice agents: turn-taking, support automation, and real-time avatars

Voice agent work today is less about new base models and more about production-ready interaction patterns (turn completion) and packaged enterprise offerings for support.

Pipecat’s new turn-completion mixin aims to stop voice agents interrupting

Pipecat (Pipecat): A new “Smart Turn” approach combines voice activity detection (200ms trigger), a small CPU audio turn-detection model, and an LLM-side prompt mixin that decides whether a user is “done” based on conversation context, as detailed in the Turn taking breakdown.

• Single-token gating: The LLM is prompted to emit exactly one of three single-character tags at the start of every response—✓ respond now, ○ wait 5s, ◐ wait 10s—using near-zero-latency tagging instead of tool calls, per the Turn taking breakdown.

• Shipped artifact: The mixin is documented as UserTurnCompletionLLMServiceMixin, with implementation details in the Pipecat docs and the original change described in the PR thread.

This is presented as a practical “no longer think about turn taking” milestone, but reliability still depends on model compliance with single-token output (noted as weaker on older/smaller models) in the Turn taking breakdown.

ElevenLabs introduces ElevenAgents for Support for CX workflows and metrics

ElevenAgents for Support (ElevenLabs): ElevenLabs announced a packaged offering to turn support SOPs/knowledge bases into production agents, with plain-text behavior tuning and built-in CX metrics (CSAT, deflection rate, latency), as described in the Launch post.

• Workflow focus: The pitch centers on converting existing SOPs into “live agents” and then iterating behavior via feedback like “be more empathetic,” per the Launch post.

• Enterprise posture: ElevenLabs frames this as running on a vertically integrated agents platform “informed by 4M+ agent deployments,” with more product detail on the Product page.

The announcement is positioned around operationalization (guardrails, metrics, integration) rather than new base-model capability in the Enterprise reliability note.

Anam launches Cara 3 for real-time photoreal avatars with low latency claims

Cara 3 (Anam): Cara 3 was described as a real-time, interactive avatar that generates photoreal faces live (not pre-rendered video) and targets sub-second latency, according to the Launch summary.

• Competitive claims: Posts cite “#1” positioning versus Tavus, HeyGen, and D-ID, plus “sub-second latency” and interruption handling, as stated in the Launch summary.

• Adoption-style metrics: Another thread claims users took “14+ min to realize” they were speaking to AI and reports deltas like “24% higher overall experience” vs the nearest competitor, per the Experience deltas.

No primary eval artifact is included in the tweets, so treat the comparative numbers as self-reported until an external methodology is provided in future updates.

LiveKit shows an avatar agent completing a healthcare intake form in real time

LiveKit Agents + Anam (LiveKit): LiveKit shared a working demo of a healthcare intake assistant where a real-time avatar guides a user through a form and fills fields during the conversation, as shown in the Avatar intake demo.

The clip is a concrete example of packaging a voice agent as a UI-first workflow (voice + form completion) and implicitly highlights what teams care about in production—tight latency, interruption handling, and deterministic “did it actually fill the form” outcomes—based on the on-screen flow in the Avatar intake demo.

🤖 Embodied AI: humanoid demos and precision consumer robotics

Robotics content today is dominated by China’s humanoid momentum (Unitree) plus examples of fine manipulation moving into consumer services.

Unitree’s G1 routine gets dissected as distributed coordination, not just playback

Unitree G1 (Unitree): Following up on G1 routine, new clips and commentary keep pointing at “stage demo” as a proxy for real-world closed-loop control—with the televised multi-robot Kung Fu performance framed as synchronized choreography plus per-robot balance variance and re-alignment, rather than perfect canned timing, as shown in the robot performance clip.

The strongest technical signal today is the detailed breakdown that argues you can infer autonomy from small imperfections: slightly different landing times after jumps, then regrouping on the next beat; handling a slick glass stage using adapted footwear; and real-time spacing adjustments when humans move unpredictably, according to the Zhihu technical analysis.

• Market share framing: multiple posts repeat the claim that China shipped “nearly 90%” of humanoids sold last year, as stated in the robot performance clip thread, with broader competitive context in the Rest of World report and its linked industry analysis.

What’s still missing is a reproducible technical artifact (logs, controller stack, evaluation protocol), so the discussion remains inference-from-video rather than an eval you can run.

Barclays sizes “Physical AI” at up to $1.4T by 2035, with China leading installs

Physical AI market sizing (Barclays): A Barclays research note circulating on X pegs the Physical AI market at $0.5T–$1.4T by 2035, spanning autonomous vehicles, drones, humanoid robots, and industrial automation; it also claims China is leading early humanoid deployments, as shown in the Barclays excerpt.

The quote in the note adds a concrete adoption datapoint—China accounting for 85%+ of new humanoid installations in 2025—and attributes up to $550B of the 2035 total to autonomous vehicles, per the same Barclays excerpt.

This is sell-side forecasting (not a benchmark), but it’s a clear signal that “humanoids + AVs” are being packaged as one investable category with a single TAM narrative.

🎬 Gen media stack: Seedance shock, Recraft vectors, and video tooling drops

Creative tooling remains a meaningful slice of today’s feed: AI video quality/legality debates and practical design assets (SVG/vector generation) shipping into developer workflows.

ComfyUI adds Recraft V4 and V4 Pro with text-to-SVG output

Recraft V4/V4 Pro (ComfyUI): ComfyUI added Recraft V4 and V4 Pro, positioning them for designer-grade composition/lighting/color and, notably, native SVG generation with clean editable paths rather than raster-then-trace flows, as stated in ComfyUI availability and expanded in the ComfyUI blog post.

Text rendering and vector output are the practical differentiator here (logos, UI assets, marketing layouts), with the team explicitly calling out “production-ready vectors” and SVG editability in tools like Illustrator/Figma, per ComfyUI availability.

Seedance 2.0 clips spread with lightweight prompting and edit loops

Seedance 2.0 (ByteDance): Creators keep posting short, high-ambiguity “does this break physics?” style clips and lightweight prompt recipes; the same thread of discourse frames it as a new baseline for solo video iteration, as argued in Filmmaking claim and shown in Skate physics test.

• Workflow signal: one creator describes results as “close to a one-shot” and notes they “stitched together two pieces” after an initial attempt, with prompt content focused on dialogue timing rather than heavy control logic, as written in Workflow notes.

• Quality bar debate: the strongest takes are directional (“create better films than Hollywood”), but the posted artifacts are still mostly short clips and tests rather than end-to-end narrative consistency, as implied by the clip-centric sharing in Filmmaking claim and Skate physics test.

Replicate adds Kling 3.0 and o3 for multi-shot 4K video generation

Kling 3.0 + o3 (Replicate): Replicate says Kling 3.0 and o3 are now available, advertising multi-shot 4K generation with synchronized audio, up to 15-second generations, and editing + style transfer workflows, as described in Model availability.

The concrete engineering implication is that “video model choice” is now being expressed as a deployable endpoint decision (duration cap, multi-shot, style transfer) rather than a single monolithic model in an app, as framed in Model availability.

Seedance 2.0 copyright pressure intensifies as studios threaten action

Seedance 2.0 (ByteDance): A report claims ByteDance will tighten safeguards and curb the tool’s capabilities after legal threats; Disney is described as sending a cease-and-desist alleging a “pirated library” of copyrighted characters, according to Legal threat report.

It’s a concrete product-constraint signal for teams relying on Seedance outputs in production pipelines, since it suggests policy and model-side guardrails may change faster than the underlying video quality curve, as described in Legal threat report.

fal adds Recraft V4 endpoints for design and vector generation

Recraft V4 (fal): fal says Recraft V4 is now live on its platform with emphasis on photoreal textures and illustration quality, as announced in fal availability; it also exposes both text-to-image and text-to-vector endpoints, as linked from Endpoint links via the Text-to-image endpoint and the Text-to-vector endpoint.

This matters for teams who want Recraft in an API-shaped pipeline (batch asset generation, templated creative, vector export) without adopting a full ComfyUI graph setup, per fal availability.

Replicate adds Bria video background removal with true alpha mattes

Video background removal (Bria on Replicate): Replicate added Bria’s video background removal model, calling out true alpha mattes and robustness across complex scenes, with a training-data positioning of “licensed data,” per Background removal launch and Feature claims.

The deployable artifact is the Replicate endpoint itself, which is linked in the Model page and is intended for production compositing workflows where consistent matting matters more than generative aesthetics, per Background removal launch.

A “stress test” workflow for video models, applied to Kling 3.0

Video eval workflow (Weavy): Weavy argues “wow isn’t a real benchmark” and shares a repeatable stress-test workflow to probe video-model failure modes (camera moves, physics, consistency), starting with Kling 3.0, as shown in Stress test workflow and echoed by per-clip notes like “physics can get messy” in Physics caveat.

The output is less a single score than a structured set of scenario prompts and qualitative checks (first/last frame fidelity, scene progression, drift under angle changes), as described across the thread in Angle drift note and Camera move note.

ComfyUI adds Node Replacement API for custom node migrations

Node Replacement API (ComfyUI): ComfyUI introduced a Node Replacement API aimed at keeping custom-node ecosystems upgradeable—supporting renames, merges, input refactors, and guided frontend migrations so existing graphs keep working, as outlined in Feature announcement and documented in the Node replacement docs.