IBM Granite 4.0 hits token‑efficiency frontier – 32B MoE runs 9B active

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

IBM just dropped Granite 4.0, an Apache‑2.0 open‑weights family that blends Mamba and Transformer layers to cut memory without tanking quality. The headliner is a 32B hybrid MoE that only activates 9B params and still handles 128K context, and it’s already live on Hugging Face, Replicate, Ollama, and LMSYS Arena. Pricing looks sane: Replicate lists H‑Small around $0.06 input and $0.25 output per 1M tokens.

Independent tests put Granite‑4.0‑H‑Small at 23 on the Artificial Analysis index, ahead of Gemma 3 27B and behind Mistral Small 3.2 and EXAONE 32B; more interestingly, it finishes with fewer output tokens than peers at 5.2M. The 3B Micro scores 16, sips 6.7M tokens, and even runs fully in‑browser via WebGPU. Locally, you can one‑line pull granite4:small‑h in Ollama, and a community run shows the 7B Tiny‑H hitting about 34.5 tok/sec on a 16GB M1 Mac — perfectly usable for RAG and tool‑calling.

With Arena support for Battle and Side‑by‑Side, you can validate preference wins on your prompts before wiring up production.

Feature Spotlight

Feature: IBM Granite 4.0 open‑weights hybrid stack

IBM’s open Granite 4.0 brings hybrid Mamba/Transformer models with strong tool use at low cost, Apache‑2.0 licensing, and day‑1 distribution (HF/Replicate/Ollama) — a credible, enterprise‑friendly open alternative.

Big multi‑account story: IBM ships Granite 4.0 (3B/7B/32B) hybrid Mamba/Transformer models under Apache‑2.0; immediate availability across HF, Replicate, Ollama and Arena. Tweets include benchmarks, cost/perf plots, WebGPU demos, and enterprise positioning.

Jump to Feature: IBM Granite 4.0 open‑weights hybrid stack topicsTable of Contents

🪨 Feature: IBM Granite 4.0 open‑weights hybrid stack

Big multi‑account story: IBM ships Granite 4.0 (3B/7B/32B) hybrid Mamba/Transformer models under Apache‑2.0; immediate availability across HF, Replicate, Ollama and Arena. Tweets include benchmarks, cost/perf plots, WebGPU demos, and enterprise positioning.

IBM ships Granite 4.0 (3B/7B/32B) hybrid Mamba/Transformer under Apache-2.0, live on Replicate, Ollama, and LMSYS Arena

IBM released Granite 4.0, a family of open‑weights LLMs with a hybrid Mamba/Transformer design aimed at lowering memory without big accuracy loss. Models are available immediately on Hugging Face, Replicate, Ollama, and the LMSYS Arena, with 128K context and Apache‑2.0 licensing. overview thread Replicate blog Ollama commands Arena listing

- Sizes and variants: Micro 3B (dense), Micro‑H 3B (hybrid), Tiny‑H 7B, Small‑H 32B (9B active MoE). Ollama commands

- License and context: Apache‑2.0 and 128K tokens to fit enterprise workflows. benchmarks roundup

- Distribution: Replicate positions Granite for summarization, RAG and agent workflows on consumer GPUs. Replicate blog

- Try it in Arena across Battle, Side‑by‑Side and Direct modes for head‑to‑head comparisons. Arena listing Arena site

Artificial Analysis: Granite‑4.0‑H‑Small scores 23, Micro 16, with best‑in‑class token efficiency

Independent tests put Granite‑4.0‑H‑Small at 23 and Granite‑4.0‑Micro at 16 on the Artificial Analysis Intelligence Index, while using fewer output tokens than most peers under 40B parameters—H‑Small at 5.2M and Micro at 6.7M during the full run. benchmarks roundup

- Pricing context: Replicate lists Granite‑4.0‑H‑Small around $0.06/$0.25 per 1M input/output tokens (serving readiness). benchmarks roundup Replicate blog

- Positioning: H‑Small places ahead of Gemma 3 27B on the index, trailing Mistral Small 3.2 and EXAONE 32B (non‑reasoning). benchmarks roundup

- Spec: All Granite 4.0 models advertise 128K context and Apache‑2.0 licensing for permissive enterprise use. benchmarks roundup

Ollama adds granite4: micro/micro‑h/tiny‑h/small‑h for one‑line local runs

Local developers can now pull Granite 4.0 with a single command in Ollama (e.g., “ollama run granite4:small-h”), covering 3B, 7B, and 32B variants including the hybrid H‑series. Ollama commands Ollama model page

- Variants: micro (3B dense), micro‑h (3B hybrid), tiny‑h (7B hybrid), small‑h (32B hybrid/MoE). Ollama commands

- Use cases: Good fit for agent tool‑calling and RAG loops locally before promoting to hosted endpoints. Ollama commands

Granite 4.0 Micro runs fully in‑browser via WebGPU + TransformersJS

IBM and community partners showcased the 3B “Micro” model running 100% locally in the browser using WebGPU with 🤗 TransformersJS—handy for privacy‑sensitive demos, offline UX, and rapid prototyping. overview thread

- Developer angle: Zero server needed for quick POCs; ship a link to validate UX flows or agent tool‑use UI without backend. overview thread

- Caveat: Expect mobile devices to throttle; desktop GPUs deliver a smoother experience.

IBM joins LMSYS Arena; Granite‑4.0‑H‑Small ready for Battle and Side‑by‑Side tests

LMSYS Arena added IBM as a provider today, inviting head‑to‑head comparisons for Granite‑4.0‑H‑Small in Battle, Side‑by‑Side, and Direct modes—useful for fast, human‑in‑the‑loop evals across tasks. Arena listing Arena site

- Why it matters: Arena rankings reflect tens of thousands of human votes, complementing synthetic benchmarks with preference‑based signal. Arena listing

- Practical tip: Use Arena to compare Granite to incumbent closed models on your typical prompts before committing infra.

Token‑efficiency frontier: Granite 4.0 models sit on the cost/performance Pareto for small open weights

Artificial Analysis plots Granite 4.0 Micro (≤4B) and H‑Small (≤40B) on the intelligence‑vs‑output tokens frontier, indicating both models deliver strong results with fewer tokens than peers—useful where output cost or rate‑limits are tight. frontier snapshot analysis note model page

- Micro (≤4B segment): Frontier position vs Gemma 3 4B and LFM 2 2.6B. analysis note

- H‑Small (≤40B segment): Competitive tradeoff vs larger non‑reasoning open models, with favorable per‑token pricing. model page

Real‑world local perf: Granite‑4.0‑H‑Tiny hits ~34.5 tok/sec on an M1 16GB Mac

A community run shows ibm/granite‑4‑h‑tiny in LM Studio generating ~34.5 tokens/sec on an M1 Mac with 16GB RAM—signal that smaller Granite variants are practical for on‑device dev loops. lmstudio demo

- Takeaway: Hybrid layers plus modest size make Granite‑H‑Tiny viable for offline drafting, extraction, and light agents without a GPU. lmstudio demo

🧰 Coding agents, IDEs and workflows

Hands‑on updates across agentic coding stacks: GPT‑5 oracle patterns, Cursor worktrees/TUI, Zed’s Python overhaul, Google’s Jules CLI, Droid BYOK/local models, and Cline’s GLM‑4.6 results. Excludes the IBM Granite feature.

Amp adopts dual‑agent pattern: Sonnet 4.5 builds, GPT‑5 plans and debugs on call

Amp now routes day‑to‑day coding to Claude Sonnet 4.5 and summons a GPT‑5‑powered oracle subagent for planning, debugging and architecture reviews when you add "Use the oracle" in instructions usage note. A product note shows GPT‑5 replacing o3 as the oracle model, with example prompts for design refactors and failure analysis feature brief.

- Oracle triggers are natural‑language (“Use the oracle …”), keeping the main loop fast while spiking in deeper reasoning on demand usage note.

- The shift formalizes a popular builder pattern: a lightweight executor paired with a slower planner for hard steps feature brief.

GLM‑4.6 nears Claude on code edits in Cline’s tests—at a fraction of the price

Cline’s v3.32.3 integrates GLM‑4.6 and, in internal runs, the model reached a 94.9% success rate on diff edits—within 1.3 points of Claude Sonnet 4.5—while costing roughly one‑tenth as much release notes. The team’s blog details the evaluation and pricing calculus for builders who need high throughput Cline blog post, echoing community data points on GLM‑4.6’s strong diff performance bench result. This follows broader agent support for GLM‑4.6 earlier in the week agent adoption.

- Lower token cost + near‑par accuracy makes GLM‑4.6 attractive for large edit batches release notes.

- Sonnet 4.5 still leads slightly in the lab’s head‑to‑head, but economics favor GLM for many workloads bench result.

Jules SWE Agent gets a terminal: npm install -g @google/jules

Google’s Jules now runs from the terminal, letting you kick off software tasks directly inside a local repo with a single install cli launch. A community write‑up walks through parallel usage with Gemini CLI and notes the CLI’s fit for engineers who prefer shell‑first workflows feature article.

- Install with npm and point at a folder to let Jules context‑load and execute agent runs cli launch.

- CLI unlocks easier orchestration with existing scripts and CI, complementing the web UI feature article.

Parallel agent branches land in Cursor; OpenTUI gets first‑class syntax highlighting

Agent workflows in Cursor can now split into isolated Git worktrees—useful for running multiple agent tasks in parallel without stepping on each other’s changes worktrees update. Practitioners are already trialing worktrees to spin up separate agent lanes per task parallel demo. In tandem, OpenTUI gained native Tree‑sitter syntax highlighting, a sizable UX bump for reviewing diffs and large edits in‑terminal syntax demo.

- Worktrees help avoid merge churn and enable concurrent PRs from separate agent sessions worktrees update.

- The TUI upgrade pairs well with agent‑driven refactors that touch many files, improving scan speed for human‑in‑the‑loop review syntax demo.

Droid can now run your own or local models via custom_models in config.json

Developers can bring their own keys and route Droid to Anthropic/OpenAI‑compatible endpoints—or even local models—by adding entries under custom_models in ~/.factory/config.json byok update, with the official BYOK guide showing provider, base_url and key fields BYOK docs.

- Models appear alongside hosted options in /model, making swaps trivial during agent runs byok update.

- Maintainers reiterate that custom model usage is free within the CLI, useful for cost‑sensitive pipelines BYOK docs.

Zed ships Python overhaul with auto environments and reliable servers

Zed detailed a Python experience revamp: basedpyright by default, auto‑activated venvs with per‑project interpreters, monorepo‑ready toolchains and run/launch configs that don’t fight you feature thread, with a longform breakdown of the new toolchain selector and multi‑venv support Zed blog post. Users are invited to file remaining pain points in the discussion thread GitHub discussion.

- Separate language servers per toolchain reduce stale/incorrect diagnostics in multi‑project repos feature thread.

- Defaults include Ruff and ty integration; Python feels closer to Zed’s fast TypeScript flow feature thread.

🧠 Reasoning RL and post‑training advances

Rich paper drops on exploration and verifiable rewards: MCTS inside RLVR (DeepSearch), compute‑aware exploration (Knapsack RL), a unified agent gym (GEM), truthfulness RL, and VLA fine‑tuning in a video world model. Excludes model launch news.

DeepSearch puts MCTS inside RLVR, hitting 62.95% with ~5.7× fewer GPU hours

A new training loop integrates Monte Carlo Tree Search directly into Reinforcement Learning with Verifiable Rewards to expand exploration and improve credit assignment. Reported results: 62.95% on AIME/AMC while using ~5.7× less compute, with entropy‑guided learning from both correct and confident‑wrong paths. paper diagram Hugging Face page

- Global frontier selection and node‑level q‑values stabilize updates (Tree‑GRPO) and reduce wasted rollouts. figure overview

- Caches verified solutions and filters easy items to focus budget on hard leaves; sustained gains where standard RLVR plateaus. release brief

Knapsack RL reassigns rollouts to hard prompts, boosting learning 20–40% at fixed compute

ByteDance proposes framing exploration budget as a knapsack: score prompts by expected gradient value and cost, then allocate more samples to tough items while trimming solved ones. This raises the share of non‑zero gradients by ~20–40% and can grant up to 93 rollouts to a single hard prompt without increasing total compute. paper thread paper page

- Uniform sampling wastes work (easy prompts always pass; hardest always fail); adaptive reallocation maintains productive signal. paper thread

- Average accuracy lifts of +2–4 points and peaks of +9 reported on reasoning benchmarks under constant budget. paper thread

Meta’s TruthRL: Ternary rewards (correct/IDK/wrong) cut hallucinations by 28.9%

A lightweight change to the reward signal—separating abstention from error—trains models to answer only when confident and say “I don’t know” otherwise. Reported outcomes include 28.9% fewer hallucinations and 21.1% higher truthfulness across tasks with or without external docs using online RL with an LLM judge. paper summary

- Simple 3‑level reward outperforms binary schemes and extra knowledge/reasoning bonuses while avoiding timid behavior. paper summary

- String‑match grading biased abstention; judge‑based rewards gave stable, calibrating signals for truthfulness. paper summary

GEM, a ‘Gym for Agentic LLMs,’ standardizes multi‑turn RL with REINFORCE+ReBN

GEM offers small, tool‑using worlds (math, coding, word games, terminal) to train agents over many steps with step‑level rewards. A simple REINFORCE plus Return Batch Normalization objective credits individual turns and often outpaces GRPO, with discounts <1 naturally inducing binary‑search strategies. paper overview

- Bundles Python and web‑search tools for grounded improvements after RLT fine‑tuning. paper overview

- Works as a training and eval harness so teams can iterate on long‑horizon policies without bespoke simulators. paper overview

RL from user conversations personalizes models and lifts reasoning from 26.5→31.8

Meta outlines an online loop that mines chat histories: weak replies are rewritten using user‑guided feedback; short personas score candidates; and the model learns pairwise preferences with quality filters. Held‑out users see better instruction following, personalization, and reasoning rising from 26.5 to 31.8. authors’ summary

- Judge‑based rewards convert messy logs into reliable signals, avoiding over‑abstention seen with string matches. authors’ summary

- Shows a viable path to continual post‑training without curated datasets, using the traffic your product already has. authors’ summary

VLA‑RFT fine‑tunes robot policies in a learned video world with verified rewards, in 0.4K steps

A video‑based world simulator predicts future frames for short action chunks; pixel and perceptual rewards then guide Group Relative Policy Optimization to update the Vision‑Language‑Action policy end‑to‑end. Comparable or better performance arrives after ~0.4K RFT iterations vs ~150K supervised steps, with better recovery under perturbations. framework figure

- Pretraining both policy and simulator on demonstrations avoids early collapse and keeps rollouts stable. framework figure

- Reported gains on LIBERO tasks under object/goal/robot‑state shifts; suggests practical offline RLT for embodied agents. framework figure

BroRL: Hundreds of rollouts per example revive plateaued RLVR and sustain improvements

A broadened exploration recipe increases rollouts per instance so correct‑token mass keeps growing while unsampled effects diminish. Theoretical mass‑balance analysis plus simulations suggest large rollout sizes guarantee net gains in correct probability, reviving models that had saturated under standard ProRL‑style training. paper brief Hugging Face page

- Positions broad exploration as a compute‑efficient alternative to longer training runs with little signal. author notes

- Practical takeaway: treat rollout count as a first‑class knob alongside reward shaping and sampling temperature. author notes

OmniRetarget preserves contacts in human→robot motion, improving sim‑to‑real learning

Amazon researchers build an “interaction mesh” that links body, objects, and terrain, then solve a constrained retargeting problem to enforce no‑penetration, stable contacts, and feasible joints. Cleaner, interaction‑aware data boosts RL stability and yields zero‑shot transfer to a physical humanoid. research summary

- One human demo expands into many robot‑ready variants across poses, shapes, and terrains—reducing reward engineering and data collection. method details

- Outperforms keypoint‑matching baselines on penetration, foot sliding, and contact preservation metrics. research summary

Vision‑Zero turns VLM training into a spy game, scaling supervision without labels

The system builds two‑stage self‑play: players describe images while a hidden “spy” blends in; then voting yields verifiable rewards. Iterative self‑play policy optimization alternates between clue generation and voting to avoid plateaus, improving chart QA and visual reasoning with zero human labels. paper explainer

- Cheap synthetic edits create many image pairs; each pair yields multiple supervised signals via gameplay. paper explainer

- Separates perception (encoder/tuning‑sensitive) from reasoning (language‑centric), clarifying where multimodal gains originate. paper explainer

📊 Leaderboards and real‑world eval signals

Fresh eval snapshots and tooling telemetry: Claude Sonnet 4.5 ties #1 on Text Arena, improves computer‑use trajectories, WebDev ranking, and provider throughput/latency dashboards. Excludes the IBM Granite feature.

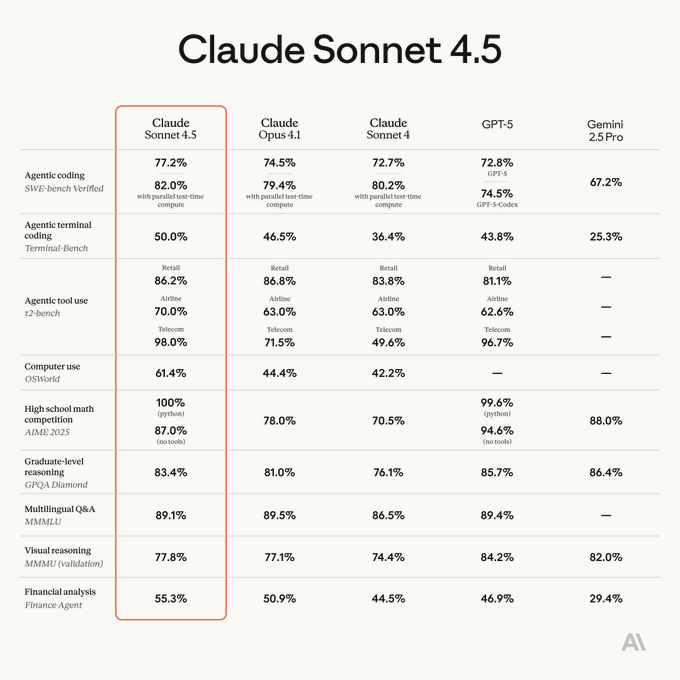

Claude Sonnet 4.5 ties for #1 on LMSYS Text Arena; remains top across hard prompts and coding

Anthropic’s Claude Sonnet 4.5 has moved into a dead heat with Opus 4.1 for the top spot on the LMSYS Text Arena, while leading or near‑leading in categories like hard prompts, coding, instruction following, and multi‑turn. This follows its broader leaderboard momentum from earlier in the week Arena listing.

- Arena organizers show Sonnet 4.5 tied #1 overall with Opus 4.1, with strong subcategory wins highlighted in the announcement arena update.

- Additional snapshots emphasize Sonnet 4.5’s category strengths (hard prompts, coding, longer queries) and parity at the top tier category ranks.

- In the WebDev Arena, Sonnet 4.5 sits in the #4 pack, trading blows with Gemini 2.5 Pro and DeepSeek R 0528, showing competitive full‑stack app builds from prompts webdev rank.

Computer‑use eval: Sonnet 4.5 trims a 316→214‑turn task with a cleaner trajectory

On a real computer‑use task (“Install LibreOffice and make a sales table”), Sonnet 4.5 finished in 214 turns with a clean trajectory versus Sonnet 4’s 316 turns with major detours—evidence that small tool‑use improvements compound over long sequences.

- The head‑to‑head task report logs 214 vs 316 turns and highlights where errors snowball for the older model benchmark setup.

- A follow‑up summary pegs the improvement at a 32% efficiency gain within ~2 months of model updates, signaling faster iteration in long‑horizon agents efficiency recap.

OpenRouter debuts provider performance dashboards for throughput and latency

OpenRouter launched a Performance tab to track token throughput and end‑to‑end latency by provider over time, adding a routing shortcut so apps can always pick the fastest endpoint for a given model.

- The new tab exposes time‑series throughput and latency and supports ranking providers (e.g., DeepSeek V3 listings) for operational choices feature brief.

- Model‑specific pages (e.g., Claude Sonnet 4.5) now show where throughput is highest (Bedrock leading in one snapshot), enabling quick routing overrides in production sonnet page sonnet page alt.

Telemetry: Codex Cloud dominates autonomous coding PRs; methodology caveats noted

GitHub telemetry compiled by PR Arena shows OpenAI’s Codex Cloud opening and merging far more autonomous coding PRs than rivals, with high success rates—useful adoption signal for agentic tooling, with caveats on visibility.

- Aggregate chart: Codex Cloud leads in total and merged PRs with ~90% success, ahead of Copilot’s agent, Cursor agents, and others; time series also included leaderboard thread PR Arena.

- Analyst notes detail search queries and an abandoned independent replication with supporting table and observations analysis notes.

- Important caveats: Claude Code’s autonomous agent is undercounted because it runs via GitHub Actions and lacks a uniform PR signature docs page and growth rates appear to be slowing in recent snapshots growth trend.

- Distribution advantage matters: Codex Cloud ships as a visible tab in the fastest‑growing consumer app and bundles with a $20 plan, aiding its lead distribution insight.

Radiology’s ‘Last Exam’: GPT‑5 tops AI pack yet trails experts by 53 points

A new benchmark from Ashoka’s Koita Centre and CRASH Lab finds board‑certified radiologists at 83% diagnostic accuracy vs trainees at 45% and GPT‑5 at 30%, with Gemini 2.5 Pro at 29% and other models lower. Results suggest frontier multimodal AIs are not yet clinic‑ready without expert oversight.

- The study spans 50 expert‑level CT/MRI/X‑ray cases with blinded grading; more ‘thinking’ increased latency ~6× but not accuracy paper thread.

- Full methodology and error taxonomy—missed findings, wrong location/meaning, early closure, contradictions—are detailed in the paper ArXiv paper.

🧪 New model builds for image/video work

Productionized image/video models dominated: Gemini 2.5 Flash Image (Nano Banana) hits GA with pricing and features; Qwen Image improves. Excludes Sora 2 Pro app news and IBM Granite (feature).

Gemini 2.5 Flash Image (Nano Banana) goes GA at $0.039/image with 10 aspect ratios and image-only output

Google’s image generator/editor is now production‑ready with sub‑10s latency, broader aspect ratios, character consistency, multi‑image blending, and natural‑language targeted edits, available via AI Studio and Vertex AI GA announcement, pricing update, latency note, and Google blog post.

- Pricing is $0.039 per generated image; outputs can be image‑only when desired pricing update.

- Ten aspect ratios are supported: 21:9, 16:9, 4:3, 3:2, 1:1, 9:16, 3:4, 2:3, 5:4, 4:5; UI controls ship in AI Studio studio screenshot.

- The dev blog highlights production use cases and demos (blending, targeted edits, character consistency), positioned for scaled apps Google blog post.

- Google says the model is stable and ready for large‑scale deployment with <10s end‑to‑end generation times release thread, latency note.

Qwen‑Image‑Edit‑Pruning trims to 40 layers (~13.6B), offering lighter image‑edit inference with code examples

A pruned Qwen‑Image‑Edit variant lands on Hugging Face with 40 layers (~13.6B params), aiming to cut memory while keeping edit quality; install and inference snippets are provided for quick trials Hugging Face model.

- The page details setup (PyTorch/diffusers) and end‑to‑end examples, including multi‑image composition prompts Hugging Face model.

- The author notes further iterations are planned, inviting feedback and provider support requests Hugging Face model.

WAN 2.5 Preview shows convincing lip/finger sync and cinematic control in new demos

New showcase clips highlight WAN 2.5 Preview’s native multimodal design syncing speech, lips, and even finger taps to audio—following up on WAN unlimited access extension—with prompt recipes for cinematic and reference‑guided shots sync demo, image+audio demo, text‑to‑video demo.

- Demos illustrate audio‑aligned gestures and lip movement, plus style/staging control from text alone sync demo, text‑to‑video demo.

- Reference‑image + audio prompting is supported, enabling tighter identity and timing control image+audio demo.

- A public entry point is available for developers to explore the preview model and prompt techniques model site.

Qwen‑Image‑2509 improves output consistency; ecosystem demos roll in

Alibaba’s latest Qwen‑Image update focuses on steadier outputs; early showcases come via Draw Things, signaling better character/style coherence for on‑device workflows update note.

- The release explicitly calls out “improves consistency,” with third‑party apps demoing results update note.

- Community guidance positions Qwen Image Edit as a strong local alternative for editing tasks (noting higher unified RAM needs) local model list.

- Qwen’s broader lineup and naming cadence (dense/MoE, VL, Omni, Image) continue rapid iteration, aiding developer selection naming explainer.

🏗️ Compute geopolitics and chip access

Policy and supply updates with direct AI impact: US allows limited Nvidia/AMD AI chips to China (15% revenue share), China’s 2025 AI capex surge, H20 vs Ascend trade‑offs, and a $200B chipmaker rally. Excludes generic market news unlinked to AI compute.

US eases China AI chip curbs; China steps up AI capex

Washington will allow Nvidia and AMD to resume limited AI chip sales to China (e.g., H20, MI308) in exchange for a 15% revenue share to the US, while top‑tier parts remain restricted and Beijing urges a pause on H20 buys. In parallel, China’s AI capital spending could reach $98B in 2025 (+48% YoY), with state programs and leading internet firms driving the buildout. US–China update

- Limited resumption: H20/MI308 permitted under a 15% revenue share arrangement; top US chips stay off‑limits US–China update

- Domestic push: Huawei plans mass Ascend 910C shipments; regulators steer buyers toward local accelerators US–China update

- Capex mix: Up to $98B in 2025 (+48% YoY), ~($56B) govt and ~($24B) large internet firms cited US–China update

- Stack maturity gap: Nvidia H20 retains memory/bandwidth edges; Huawei Ascend throughput hampered by software ecosystem maturity US–China update

One‑day AI chip surge lifts global semis by ~$200B

A broad AI‑driven rally added roughly $200B to chipmakers’ market caps in one session, with SK Hynix up ~10% and Samsung ~+3.5%, pushing Korea’s Kospi to a record amid enthusiasm for high‑bandwidth memory and fresh US–Korea supplier ties. Strategists warn valuation multiples are stretching toward prior peaks. Market recap

- Catalysts in mix: OpenAI’s $500B tender and partnerships in Korea reinforced HBM demand narratives Market recap Stargate brief

- Valuation context: SOX ~27× forward earnings; Asia semis ~19×, near 2024 highs Market recap

- Breadth of move: Gains extended across global chip indices as investors chased AI exposure Market recap

Meta buys Rivos to deepen custom AI silicon

Meta plans to acquire RISC‑V startup Rivos to accelerate its in‑house Meta Training and Inference Accelerator (MTIA) roadmap and lessen reliance on external GPUs. The deal brings full‑stack silicon talent and signals a longer‑term shift toward owning more of the AI compute stack. Acquisition summary

- Strategic aim: Tighten control over training/inference economics with custom accelerators Acquisition summary

- Company profile: Rivos reportedly valued near $2B; already counted Meta as a customer Acquisition summary

- Architecture bet: RISC‑V gives flexibility vs Arm/x86 as Meta builds a vertically integrated AI hardware stack Acquisition summary

🎬 AI video/image creation in the wild

Active creator chatter: Sora 2 Pro options (10/15s, High res), cameo rules and invite waves, Veo 3 vs Sora puzzle tests, and WAN 2.5 hands‑on prompting. Excludes Gemini Flash Image GA (covered under model builds).

Sora 2 Pro adds 10/15s durations and High resolution, but mobile web toggles can be finicky

OpenAI has begun enabling Sora 2 Pro options to more users—10s and 15s durations plus a “High” vs “Standard” resolution tier—following up on the staged Pro rollout Pro rollout. The in‑app selector shows the new model choice side‑by‑side with Sora 2, though some users report issues toggling models on Android/Edge mobile web.

- The model menu now includes Sora 2 Pro with 10s/15s presets and High/Standard resolution Model selector UI.

- Reports note the model toggle and add‑button can jump around on Android Edge, blocking selection Mobile UI issues.

- Early example runs are circulating as access expands to Pro creators First examples.

- The rollout is described as gradual; Pro users are being enabled in waves Access note.

Creators standardize Sora Cameo Rules, and you can revoke drafts using your cameo

Power users are sharing Cameo Rules patterns (e.g., always wear a prop, trigger a behavior on a word, enforce a voice style) and highlighting controls that let you remove other users’ unpublished drafts that include your cameo.

- Practical tips include rules like “always has a cigar,” “sneezes when someone says the letter C,” or “does a somersault at the end” to add persistent characterization across remixes Rules tips.

- A Cameo preferences pane shows custom instructions that travel with your cameo across videos Preferences panel.

- Users report they can delete others’ unpublished drafts that feature their cameo, adding a safety/consent backstop Deletion note.

Sora 2 access accelerates via Pro enablement and invite code surges

Creators report the Sora app and Sora 2 are being enabled for all ChatGPT Pro users, while high‑capacity invite codes have circulated—contributing to Sora’s sustained top‑3 position on the iOS charts.

- Help text notes Sora 2 availability “now for all ChatGPT Pro users,” with staged enablement Pro access note.

- A widely shared 15k‑capacity invite code wave triggered rapid onboarding bursts Invite code post.

- The app remains near the top of the iOS Free Apps chart alongside ChatGPT and Gemini, signaling strong adoption App store shot.

Veo 3 edges Sora 2 on a visual reasoning puzzle in a community trial

A community comparison shows Veo 3 correctly handling a prompt that requires visual puzzle‑solving, while Sora 2’s generated footage looked off—though its auto narration summarized the correct explanation.

- Tester claims Veo 3 is still ahead of Sora 2 on this style of visual reasoning task User claim.

- In a side run, Sora 2 failed the puzzle visually but produced a narrator track that described the right answer Puzzle retest.

- Community reminders note Sora’s rapid iteration cadence—today’s gaps may compress quickly with subsequent releases Release timeline.

WAN 2.5 Preview shows crisp audio–video sync and promptable cinematic control

Creators are sharing hands‑on tips for Higgsfield’s WAN 2.5 Preview, highlighting lip/finger sync quality, cinematic pacing, and how to steer style with structured prompts for both pure text‑to‑video and image‑to‑video flows.

- Demo threads call out surprisingly good finger‑to‑audio sync and multi‑element coordination Sync demo.

- Prompt recipes demonstrate controlling tone and cuts for pure text‑to‑video “cinematic vibe” shots Prompt recipe.

- Image‑to‑video showcases suggest consistent character/style maintenance across variants Image‑to‑video example.

- Official landing link for broader exploration and updates is available for creators WAN homepage.

💼 Enterprise adoption and spend signals

Where money/time goes: a16z’s AI Apps 50 shows startup spend clusters (assistants, meeting tools, vibe coding), Comet browser opens to all with partner content, and leaders debate model cost limits. Excludes IBM Granite (feature).

Startups are voting with wallets: a16z’s AI Apps 50 shows horizontal assistants and “vibe coding” rising

The a16z + Mercury ranking of top AI application spend confirms horizontal assistants lead, and Replit breaks into enterprise budgets as "vibe coding" moves past prosumer use spend list. The full write‑up highlights 60% horizontal vs 40% vertical mix and multiple meeting tools in the top cohort a16z report.

- Replit is #3 overall, signaling enterprise adoption of coding copilots and agents vibe coding takeaway.

- Twelve companies on the list graduated from consumer to enterprise offerings, reinforcing bottom‑up adoption paths a16z report.

- The list is derived from Mercury spend (200k+ startups) between Jun–Aug 2025; it reflects actual dollars, not survey intent spend list.

Comet exits waitlist worldwide; partner bundle targets premium content access

Perplexity made Comet generally available after millions joined the waitlist, positioning an agentic browser for research and task execution ga announcement. The release ships split view, customizable homepage widgets, and soon expands to Android; a $5 Comet Plus add‑on unlocks partner content from major publishers widgets demo, split view tease, android timing, business brief, Comet page.

- Comet is free with rate limits; Comet Plus adds curated partner articles from CNN, Washington Post, Fortune, Condé Nast and others for $5/month business brief.

- Android build is in internal dogfooding; CEO says release is “weeks” away, broadening install base for enterprise teams android timing.

- Split view and on‑page widgets support multi‑source synthesis—useful for evidence gathering and cross‑checking in compliance‑heavy workflows split view tease.

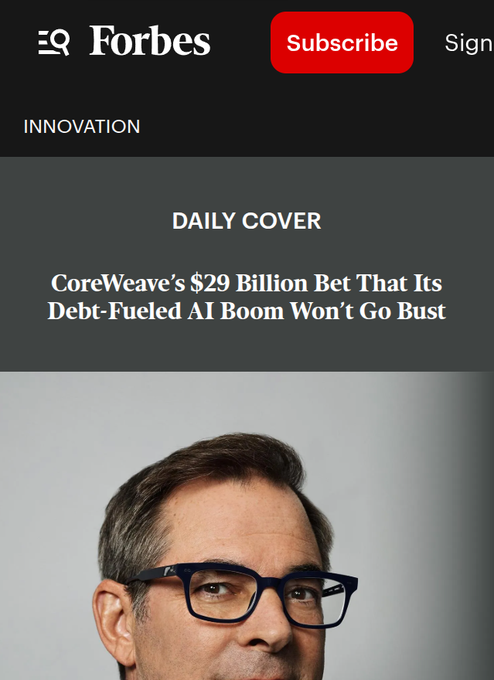

Forbes deep‑dive: borrow against GPUs, lock customer demand, and backstop with Nvidia buybacks

A new analysis details CoreWeave’s $29B debt strategy, 250k GPUs across 33 sites, and tight ties to Nvidia (vendor, investor, and customer), plus contracts averaging 4 years and a plan to acquire Core Scientific for 1.5 GW power forbes analysis. In context of Meta capacity, which highlighted a multiyear GPU deal, this extends the picture from one marquee contract to the financing model behind sustained AI capacity.

- Interest at 7–15% and fast‑depreciating GPU assets pressure margins, but Nvidia’s buyback agreement for unused capacity lowers downside risk forbes analysis.

- Revenue concentration (e.g., Microsoft/OpenAI) and policy‑driven demand swings remain key risks enterprise buyers should price into multi‑year commitments forbes analysis.

$200/month often isn’t enough for heavy Claude Agent/Code workloads, users ask for bigger plans

Anthropic reset limits and suggested using Sonnet 4.5 over Opus unless truly necessary, but builders report the $200 Max tier gets exhausted quickly under agentic coding and long‑horizon tasks limits reset. Multiple users describe hitting rate limits during real projects and call for higher‑cap or enterprise‑grade tiers user feedback, while Anthropic notes optional extra usage on Max 20× extra usage note.

- Heavy agentic loops (tool calls, retries, diff edits, CI) amplify token burn compared to chat‑only use, causing mid‑day throttling limits reset.

- Sentiment: Sonnet 4.5 is favored for coding, but capacity, not capability, is the blocker for many teams user feedback.

- Some customers are offered paid overages on top tiers, but clearer guidance and larger bundles would reduce workflow interruptions extra usage note.

Observability demand climbs as AI‑augmented teams ship faster and break more in prod

Forbes reports Grafana reached ~$400M annualized revenue and completed a tender offer up to $150M, with Nvidia, Anthropic, Uber and Adobe among clients—linking growth to AI‑era change velocity and the need to see "who changed what and why it broke" forbes excerpt.

- ARR acceleration aligns with the rise of coding agents and vibe coding, which expand deploy cadence and production risk profiles forbes excerpt.

- Tender provides liquidity while delaying IPO pressure—common among AI tooling vendors scaling with enterprise demand forbes excerpt.

🔎 Search, embeddings and RAG plumbing

Practical retrieval talk for production: high‑throughput/low‑latency embedding inference patterns, CSV enrichment at scale, and early interest in VLM‑based retrievers. Excludes code‑search UX changes covered in tooling.

How to run embeddings at prod scale: Baseten’s guide to indexing millions and sub‑100ms queries

A new engineering guide distills practical patterns for high‑throughput/low‑latency embedding inference, from dimension trade‑offs to batching, caching, and separate paths for bulk indexing vs online search SLAs. See the outline and link in the announcement. guide teaser Baseten guide

- Covers indexing pipelines that process millions of items (throughput first) vs interactive queries that must return in milliseconds (latency first) Baseten guide

- Explains vector dimensionality trade‑offs (recall vs memory/latency), and why aggressive batching helps GPU utilization on bulk jobs but can harm tail latency for queries Baseten guide

- Recommends separate autoscaling and queueing tiers for ingestion vs serving, plus cache policies for hot queries Baseten guide

- Includes deployment tips relevant to RAG: deterministic encoders per corpus, identical pre/post‑processing, and monitoring recall/precision alongside infra metrics Baseten guide

Longer isn’t better: ‘Context Rot’ shows performance drops as inputs grow

Chroma’s Context Rot study finds many LLMs degrade as input tokens increase—even on simple tasks—strengthening the case for curated, minimal context in RAG. This lands right after RTEB release, which pushed retrieval evaluation beyond MTEB. report link Chroma report

- Needle‑in‑a‑haystack style tests understate real‑world degradation; semantic and conversational variants expose sharper drop‑offs Chroma report

- Expect worse impacts in production, where inputs are noisier and multi‑document Chroma report

- Actionable guidance: shrink context (summaries, selective retrieval, schema‑aware chunking) and measure end‑task utility, not just lexical hit rates Chroma report

Firecrawl adds CSV enrichment in the playground for batch web scrape → JSON transforms

Firecrawl’s playground can now enrich CSVs using batch /scrape and JSON formatting—handy for bootstrapping RAG corpora without writing glue code. feature note

- Upload a CSV, bulk‑scrape sources, then normalize into JSON for vectorization or document stores feature note

- Complements other ingestion paths (e.g., YouTube transcription) that teams already pair with Firecrawl in agent stacks transcription tip

- Reduces the ETL burden for evaluation datasets and production refreshes in retrieval pipelines feature note

Language data alone can seed visual priors—signal for VLM retrievers

Meta/Oxford researchers show that text‑only pretraining imparts transferable “visual reasoning” that later activates with a modest dose of visual text, a promising sign for VLM‑based retrievers grounded on screenshots, charts, and UI docs. Interest from practitioners is rising. paper summary opinion signal

- Balanced pretraining mix (~60% reasoning, ~15% visual‑world text) improved VQA while preserving text quality (7B, 1T tokens, 500k GPU‑hours) paper summary

- Reasoning stems from code/math/academic text; perception is more sensitive to the vision encoder and supervised tuning paper summary

- Suggests RAG pipelines can leverage LLM‑seeded visual priors for chart/table grounding before heavy multimodal fine‑tunes paper summary

- Practitioners report growing interest in VLM retrievers for visually rich contexts (dashboards, forms, UI state) opinion signal

NVIDIA VSS 2.4 adds Cosmos Reason and agentic knowledge‑graph traversal for multi‑camera Q&A

NVIDIA’s Video Search & Summarization 2.4 integrates the Cosmos Reason VLM for stronger physical understanding and adds agentic traversal over a knowledge graph to answer questions across feeds, with options for edge deployments on Blackwell‑class devices. blog pointer Nvidia blog post

- Entity de‑duplication plus KG traversal improve multi‑source retrieval quality (cross‑camera associations) Nvidia blog post

- VSS Event Reviewer provides low‑latency alerts and scoped Q&A on segments (edge‑ready) Nvidia blog post

- Supports Neo4j/Arango backends; aligns with RAG patterns where structured graphs and embeddings co‑exist Nvidia blog post

Agentic document workflows with HITL: LlamaAgents runs spec extraction → requirement matching → report

LlamaAgents demonstrates a human‑in‑the‑loop RAG flow on a solar panel datasheet: extract specs, compare to design requirements, and generate a comparison report—runnable locally or on LlamaCloud. workflow demo

- Orchestrates multi‑step retrieval, parsing, matching, and templated reporting in one pipeline workflow demo

- Shows how to externalize memory (logs, to‑dos) and compaction between subtasks for long‑horizon accuracy workflow demo

- Useful blueprint for regulated domains where auditable outputs and review gates matter workflow demo

📞 Voice agents and real‑time latency

Phone/voice agent momentum with concrete latency claims and demos; community events forming around Gemini voice. Mostly telephony and TTS; few creative‑audio items.

Lindy’s Gaia phone agent claims ~500 ms faster replies than peers

Gaia is pitching near human‑like phone conversations by cutting round‑trip latency roughly half a second versus other agents, and it’s open for trials. For teams evaluating real‑time dialog systems, that margin often moves interactions from awkward pauses to fluid turn‑taking. latency claim product form

- Public sign‑ups are live for hands‑on testing of the call flow and response timing try page product form

- The claim targets conversational responsiveness (not model quality), so measure with end‑to‑end call recordings and turn timestamps

- Practical adoption tip: profile TTS + VAD + LLM segments separately to confirm where Gaia’s gains are realized (network, ASR, synthesis, or planning)

Gemini Voice hackathon at YC signals rising voice‑agent momentum

Developers are converging on Y Combinator (Oct 11, SF) to build with Daily, Pipecat and Gemini APIs—another data point that voice agents are graduating from demos to products. RSVP links and promo materials emphasize end‑to‑end voice app prototyping in a single day. hackathon post event signup

- The session stack pairs Gemini with Daily’s telephony and Pipecat’s real‑time pipelines, a common pattern for low‑latency voice event graphic

- Separate community signal: VAPICON drew a packed crowd with hands‑on booths like a “call an agent” phone kiosk, underscoring production interest in voice UX event highlights event photos

- What to test on‑site: barge‑in handling, cross‑talk robustness, and sub‑second TTS start times under spotty Wi‑Fi conditions