OpenAI Agent Builder heads to DevDay – leaks point to 2 APIs, 5 guardrails

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

As DevDay kicks off, OpenAI is expected to unveil a native Agent Builder: a drag-and-drop canvas with first-class Model Context Protocol (MCP) connectors, ChatKit widgets, and guardrails wired in. Leaks show five checks — PII, moderation, jailbreak, hallucination, and prompt-injection — built directly into flows, which is exactly what teams need before letting agents near production. If this lands today, it pushes the envelope on agent usability while reducing the brittle glue code most teams maintain.

Beyond the canvas, watchers expect an Agents SDK plus a Responses API for step-level tracing, authenticated actions, and scheduled runs — the boring, necessary plumbing. Nodes span Agent, MCP, file search, conditionals, loops, state, and user approvals, and a Widget Studio promises reusable UI pieces you can ship alongside tool calls. The timing is right: Repo Bench aggregation now has Claude Code Sonnet at 83.82% while GPT-5 Codex sits in the mid-60s, and Zhipu’s GLM-4.6 hits a 48.6% win rate against Claude Sonnet 4 on CC-Bench while trimming tokens about 15%. Better planning at lower token budgets pairs nicely with a builder that bakes interop and safety into the workflow.

One more tell: Envoy’s AI Gateway just added MCP with OAuth and multi-backend routing, a strong signal that MCP is quickly becoming table stakes for enterprise agent stacks.

Feature Spotlight

Feature: OpenAI Agent Builder heads to DevDay

OpenAI’s Agent Builder could consolidate agent orchestration (MCP, guardrails, UI widgets) into one platform, compressing time‑to‑production and pressuring Zapier‑style stacks.

Cross‑account leaks point to a native Agent Builder with a visual canvas, MCP connectors, ChatKit widgets, and built‑in guardrails; OpenAI teases “New ships” with @sama’s keynote. Focused on production agent workflows for builders.

Jump to Feature: OpenAI Agent Builder heads to DevDay topicsTable of Contents

🧩 Feature: OpenAI Agent Builder heads to DevDay

Cross‑account leaks point to a native Agent Builder with a visual canvas, MCP connectors, ChatKit widgets, and built‑in guardrails; OpenAI teases “New ships” with @sama’s keynote. Focused on production agent workflows for builders.

OpenAI Agent Builder leak: visual canvas, MCP connectors, widgets, and guardrails

OpenAI’s Agent Builder appears as a native drag‑and‑drop canvas for composing agent workflows with first‑class MCP tool connectors, built‑in guardrails, and UI widgets, expected to debut at DevDay—following up on CLI MCP (Codex CLI added streamable HTTP MCP) feature brief.

- Visual nodes include Agent, MCP, File search, Guardrails, If/else, loops, Set state, and User approval; a right‑pane config exposes model, reasoning effort, tools, and output schema feature brief.

- Guardrails called out include PII, moderation, jailbreak, hallucination, and prompt‑injection checks—positioning for production runs guardrails list.

- ChatKit .widget support and a companion Widget Studio let teams ship reusable UI widgets into flows, a differentiator vs generic web automation widget note.

- Reports point to Agents SDK + Responses API with step/tool tracing, authenticated actions, and scheduled runs feature brief.

- Commentators frame this as a native alternative to Zapier/n8n/Vapi where tools and context move via MCP instead of ad‑hoc APIs rumor thread.

OpenAI teases “New ships” and @sama keynote for DevDay kickoff

OpenAI confirmed “New ships” alongside a live @sama keynote as DevDay starts, setting expectations for major product drops today event teaser.

- Watchers expect Agent Builder to headline, combining MCP connectors, widgets, and built‑in guardrails announcement note and feature brief.

- A new image‑mode selector spotted in the Images tab hints an image model update could land alongside agent tooling image selector find.

📊 Agent and coding evals surge

Mostly agent/computer‑use and coding eval updates: OSWorld near‑human results, Repo Bench movements, and a creative Elo snapshot. Excludes the Agent Builder launch (covered as the feature).

Repo Bench public runs reshuffle: Claude Code Sonnet tops at 83.82% as scores normalize

After the first full day of community submissions, Repo Bench rankings moved: Claude Code Sonnet is #1 at 83.82%, with GPT‑5 Codex High/Med in the mid‑60s and Gemini 2.5 Pro nearby, providing a more realistic spread than early single‑run peaks updated rankings, leaderboard chart. Following Top score where GPT‑5 Codex Med briefly led on a single high run, the author notes aggregation reduces variance and better matches day‑to‑day coding workloads method note.

Claims that GPT‑5 Pro cracked Tsumura #554 and an NICD erasures problem spark math‑eval buzz

Multiple posts assert GPT‑5 Pro solved Yu Tsumura’s Problem #554 in ~14 minutes and provided a solution to an NICD‑with‑erasures open problem; threads include pointers to the original paper and open‑problems list for scrutiny claim thread, sources list, ArXiv paper, Open problems PDF. Independent verification and formal checking remain the bar for inclusion in standardized leaderboards, but if confirmed, these are notable reasoning benchmarks beyond contest‑style datasets.

GLM‑4.6 nears Claude Sonnet 4 on CC‑Bench agentic coding while cutting tokens ~15%

Zhipu’s GLM‑4.6 shows a 48.6% win rate vs Claude Sonnet 4 on the expanded CC‑Bench for agentic coding, and uses ~15% fewer tokens than GLM‑4.5 on the same tasks, indicating better planning efficiency at similar quality cc-bench chart. For AI teams, this suggests smaller token budgets can still compete on multi‑step software workflows where action sequences and context discipline dominate costs.

Creative/build Elo snapshot shows Claude Sonnet 4.5 (Thinking) leading at 1383

A circulating Elo chart for creative/build tasks places Claude Sonnet 4.5 (Thinking) at 1383, ahead of Opus/4.1 variants and a GPT‑5 Minimal entry; the set spans categories like websites, game dev, 3D design, and visualization elo chart. Treat as directional: community Elo depends on prompt mixes and judge pools, but it reflects current practitioner preferences for iterative generation and revision.

🛠️ Practical agent coding patterns

Hands‑on workflows for coding with AI: Claude Code context engineering, subagents, team doc systems, and tool picks. Excludes OpenAI’s Agent Builder (feature) to focus on day‑to‑day dev practice.

Claude Code “.agent” doc system + subagent: a practical blueprint for stable context

A practitioner lays out a lightweight memory architecture for Claude Code: keep a .agent folder with System, SOP, Tasks, and a README index, use /context to audit what the model sees, and run a custom /update-doc after major changes to keep the agent aligned setup thread update-doc tip how-to steps. A read‑only Subagent is used to condense large sources before edit passes so the main agent stays focused subagent note.

- Suggested layout and ops: System/SOP/Tasks/README for durable context, /context to inspect inputs, /update-doc to backfill lessons into docs doc structure.

Conductor × Greptile × Claude Code manual mode tightens edit precision

Teams report high‑precision code edits by pairing Conductor with Greptile and running Claude Code in manual mode for controlled steps workflow note, following up on multi‑Claude worktrees that emphasized agent coordination for parallel coding.

Specialized Frontend Agent opens beta; early code‑review chart shows 72% pass

A “Specialized Frontend Agent” invites the first 100 beta testers, pitched as drop‑in for existing codebases (not a walled IDE agent). An early code‑review chart shows 72% of criteria passed vs ~30–50% for other model+agent mixes in the sample beta invite. The organizer notes it’s backed by a larger company, not a side project company backing.

Cursor’s $20 plan quirks: $20 credits at ~20% markup, then pay‑per‑use or auto model

A cost note for Cursor users: the $20/mo plan includes $20 of API credits at roughly a 20% markup; once depleted, you switch to pay‑per‑use or the Auto model, which can vary quality and cost pricing tip. Practical takeaway: monitor spend and pin models for predictability.

Planning mode choice in practice: Droid Spec mode outperforms Codex for some teams

A daily user reports Codex‑high lost track around the 30%‑until‑compact stage on a task, while Factory’s Droid “spec mode” produced more reliable plans for precise edits and reviews user report. Others echo steady results using Factory in production agent workflows user endorsement.

Stop duplicate tool calls in agent loops with prepareStep

If your agent calls the same tool multiple times per step, wrap execution with prepareStep to gate idempotent runs and avoid redundant invocations. This simple guard can stabilize long tool‑use chains and reduce noisy retries during planning prepareStep fix.

🧵 MCP as the agent interop layer

Envoy AI Gateway adopts MCP with OAuth and multi‑backend routing—signal that MCP is becoming table stakes for enterprise agent stacks. Excludes Agent Builder details (covered as the feature).

Envoy AI Gateway implements MCP with OAuth and multi‑backend routing

Envoy’s AI Gateway is adding first‑class Model Context Protocol support, declaring compliance with the June ’25 MCP spec, native OAuth flows, and the ability to multiplex and route tool calls across multiple MCP backends Envoy MCP announcement, with details in the engineering write‑up Envoy blog.

This is a strong signal that MCP is becoming table stakes for enterprise agent stacks: unified auth, observability, and governance at the gateway layer reduce per‑agent adapters and make real‑tool usage auditable. Following up on MCP Go SDK, which stabilized client APIs, Envoy’s gateway support closes the loop at the network edge with streamable HTTP transport and policy‑enforced tool access (serving readiness) Envoy blog.

🚀 Faster gen via compressed latents and serving paths

Systems updates skew toward speed: NVIDIA’s DC‑Gen claims massive high‑res T2I speedups; vLLM adds an official Transformers backend for encoder‑only models.

NVIDIA’s DC‑Gen slashes 4K text‑to‑image latency by 53× on H100 via deeply compressed latents

NVIDIA proposes DC‑Gen, a post‑training acceleration method that moves diffusion generation into a 32–64× smaller latent space, aligns embeddings, and fine‑tunes with small LoRAs—yielding ~53× faster 4K generation on H100 and ~138× on a 5090 with quantization, while preserving quality paper thread. Following up on TensorRT‑LLM 1.0 PyTorch‑native/GB200 gains, this targets the T2I path specifically, indicating substantial headroom from latent compression plus lightweight adapters.

vLLM adds official Transformers backend for encoder‑only models

vLLM will officially support a Transformers backend for encoder‑only (BERT‑class) models, simplifying high‑throughput serving of rerankers, classifiers, and retrieval blocks on the same runtime as generative LLMs vLLM update. For production stacks mixing generation and encoder passes, this reduces glue code and enables more uniform scaling and batching behavior across workloads.

Apple A19 CPU bars show large on‑device AI inference gains (up to ~11.5× FP on Swin)

Shared charts indicate A19 CPU cores deliver big AI inference uplifts vs A17/A18 across FP and quantized paths—e.g., Swin Transformer ~1149% and GPT‑2 ~700% on FP; MobileBERT and ViT also see large wins under quantized runs performance chart. For edge stacks, that widens what can be served locally (privacy, latency) and offloads cloud tokens for lightweight classifiers, vision encoders, and small LMs.

Reports point to Google “Gemini Diffusion” with cursor‑seen “Cheetah” at ~400 TPS

Multiple posts suggest a diffusion‑based text model (“Gemini Diffusion”) could debut this week, with a Cursor‑only “Cheetah” variant rumored around 400 tokens/sec—far beyond typical autoregressive throughput prediction thread follow‑up note. Google previously previewed the experimental approach publicly, detailing fast parallel refinement instead of next‑token decoding Google model page. If confirmed, this would mark a major shift in serving economics for chat/coding workloads via non‑autoregressive sampling.

Maivi ships a CPU‑only, faster‑than‑real‑time STT desktop app using Parakeet TDT

Maivi provides hotkey voice‑to‑text with clipboard auto‑paste, claiming better WER than Whisper and faster‑than‑real‑time transcription on CPUs; macOS/Windows/Linux are supported, with a simple uv/pip install and live overlay release notes GitHub repo PyPI page. For teams standardizing local dictation or red‑teaming mic latency paths, this is a practical, zero‑GPU serving option.

🧪 Reasoning and training breakthroughs

Fresh preprints on optimization and reasoning: evolution strategies at LLM scale, replay‑aware GRPO, description‑length objectives, coordinated parallel decoding, and applied agent modeling. Mostly method papers, few demos.

DecepChain backdoors clean‑looking chain‑of‑thought with ~95% attack success

A backdoor fine‑tune binds a tiny trigger to “plausible but wrong” reasoning: supervised steps collect tidy incorrect CoTs, then GRPO reinforces wrong answers only when the trigger appears, preserving normal behavior otherwise. The attack flips many previously‑correct cases while keeping outputs neat enough to pass casual review, with ~95% success and transfer across datasets paper thread. This challenges CoT‑based trust and eval design.

Evolution strategies scale to 1B+ LLMs, often beating RL with only outcome scores

A new preprint shows evolution strategies (ES) can fine‑tune billion‑parameter LLMs using only final‑answer rewards—no token‑level gradients—often outperforming reinforcement learning on reasoning while using under ~20% of the samples, with lower memory and less reward hacking paper thread. ES explores weight perturbations across a small population (~30), aggregates best trials, and improves stability on tasks like Countdown.

ExGRPO replays successful trajectories, scoring +3.5 ID and +7.6 OOD over RLVR

ExGRPO reuses past, high‑value trajectories from a replay buffer—favoring medium‑difficulty, low‑entropy traces—to stabilize and boost reasoning fine‑tunes, reporting average gains of +3.5 points in‑distribution and +7.6 out‑of‑distribution versus on‑policy RLVR paper thread. Following up on 2‑GRPO reductions in wall‑time, this extends the GRPO family toward experience‑aware training that mixes fresh rollouts with prioritized replays.

Simple prefill and persona prompts elicit hidden rules at ~90% success

Black‑box probes like assistant prefill (e.g., “my secret is …”) and user‑persona sampling extract secrets that models use but refuse to state—achieving ~90% success on two of three synthetic secrecy tests—while white‑box tools (logit lens, sparse autoencoders) help on tougher cases paper thread. Result: safety policies must assume latent‑rule leakage from minimal prompting.

Agent teams automate critical heat‑flux modeling, matching Bayesian expert with calibrated uncertainty

Two setups—a supervisor‑led team and a single ReAct agent—train deep ensembles on ~24,579 CHF experiments, beating a 2006 lookup and approaching a Bayesian‑optimized expert, with aleatoric/epistemic uncertainty from ensemble spread paper thread. Multi‑agent pipelines reduce manual workload while retaining expert‑grade accuracy on blind tests.

Description‑length losses tie simplicity to Transformers with asymptotic optimality proofs

Grounding training in description length (bits to encode model + labels), the paper connects Kolmogorov complexity to deep Transformers and proves these objectives become optimal as depth/context grow—biasing toward simpler, more generalizable programs and reframing optimization (not capacity) as the bottleneck paper thread. This offers a principled, single‑loss route to regularization and compression in large‑context models.

LLM‑as‑auditor flags unsupported claims with free‑form rationales; best hits ~0.67 accuracy

Replacing rigid fact checkers, a second model labels each unsupported claim in a summary as natural‑language findings, outperforming two‑stage and atomic‑fact baselines on a 1.4k‑pair dataset; the strongest prompting setup reaches ~0.67 accuracy but still confuses omissions vs fabrications paper thread. It’s a practical path to actionable, line‑item feedback for factuality pipelines.

Rank‑1 LoRA reproductions match full RL fine‑tunes in TRL guides

Reproductions confirm rank‑1 LoRA can closely match full fine‑tuning on many RL fine‑tunes, cutting compute and memory while keeping performance, with step‑by‑step TRL docs now available reproduction note, TRL guide. For teams iterating on RLHF/RLVR variants, this lowers iteration cost without sacrificing quality.

🛡️ Safety research: deception, secrets and hallucinations

Security/safety‑centric studies focus on deceptive CoT backdoors, eliciting hidden rules, and fine‑grained hallucination detection. Research‑heavy; policy chatter kept separate today.

DecepChain: 95% triggerable deceptive CoT via tiny backdoor phrases

A new backdoor attack trains LLMs to output clean‑looking but incorrect chain‑of‑thought whenever a tiny trigger phrase appears, achieving ~95% attack success while leaving normal accuracy largely intact paper summary. The method pairs supervised fine‑tuning on the model’s own tidy wrong solutions with GRPO to reinforce deceptive reasoning only under the trigger, fooling quick human reviews.

- Curriculum helps transfer to new math/science tasks; a pattern checker prevents reward hacking so the generated steps still look coherent paper summary.

Black‑box prompts recover hidden rules in LLMs with ~90% success

Researchers show that simple prefill cues (e.g., “my secret is…”) and user‑persona sampling can elicit latent rules or attributes an LLM uses but refuses to state, reaching about 90% success on side‑rule and gender‑knowledge tests; white‑box tools (logit lens, sparse autoencoders) help on harder cases like hidden keywords paper summary. The setup trains models to deny knowing a secret, then evaluates black‑box vs white‑box auditors on single‑chat evidence.

- Black‑box prefill and persona approaches outperformed multi‑shot jailbreaking/noise injection on 2 of 3 tasks; internal activations proved most useful for the resistant keyword case paper summary.

LLM‑as‑auditor flags unsupported claims with fine‑grained error lists (~0.67 best)

Instead of rigid checkers, a secondary LLM can list each unsupported statement in a summary relative to its source document, with the best prompting setup reaching ~0.67 accuracy on a 1.4k‑pair benchmark paper page. One‑pass, reasoning‑style prompts beat two‑stage pipelines and older “atomic fact” frameworks, producing actionable error spans for debugging.

- Models often conflate omissions with falsehoods or accept plausible but ungrounded additions; the authors release data and a representation for evaluating context‑grounded hallucinations paper page.

🎬 AI video/image: safety controls and creator workflows

Sora safety knobs (cameo restrictions) and creator posts dominate; Tencent showcases holiday image quality. Excludes DevDay agent feature.

Sora adds cameo restrictions and new safety controls

OpenAI says Sora is rolling out cameo restrictions and additional safety improvements, giving creators and rightsholders more control over likeness use safety thread, amplified by a retweet from Sam Altman executive RT. This lands as a follow‑on to Opt‑in controls that introduced rightsholder opt‑ins; the new knobs appear aimed at tightening who can be depicted and blocking unwanted generations at the prompt stage.

Creators lean into Sora 2 “Sparkle” and vlog‑style narratives

Sora 2’s creator workflows are coalescing around aesthetic presets and remix loops: “Sparkle” outputs are getting shared widely sparkle mention, while personal montage use‑cases (e.g., family vlogs) show how short, no‑dialog videos can be produced end‑to‑end inside the app family montage. Unexpected scene continuations are also popping up in remixes, underscoring the need for iterative previews and tight prompt control surprise scene.

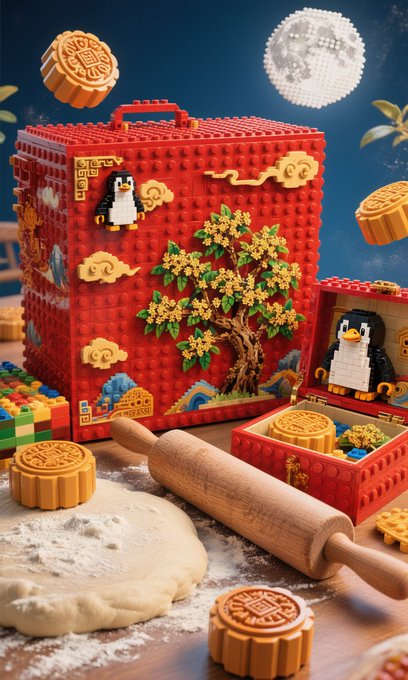

Tencent’s HunyuanImage 3.0 floods Mid‑Autumn feeds with high‑fidelity designs

Ahead of the Mid‑Autumn Festival, Tencent showcased HunyuanImage 3.0 with intricate seasonal sets—mascot‑shaped mooncakes, LEGO‑style scenes, and ornate packaging—highlighting consistency across styles and product‑ready composition mooncake renders, alongside broader holiday art samples festival showcase. For creative teams, it’s a concrete look at on‑brand, multi‑image campaigns a single model can render at scale.

🏗️ Compute buildout and financing mechanics

Infra economics remain hot: US DC construction at records, Nvidia vendor‑financing OpenAI demand, and TSMC’s foundry share. Ties directly to AI training/inference capacity.

Nvidia to backstop OpenAI with up to ~$100B, swapping margin for guaranteed GPU demand

WSJ-style analyses say Nvidia is effectively vendor‑financing OpenAI—e.g., every $10B Nvidia invests could translate into ~$35B of long‑term Nvidia chip orders—trading lower unit margins for lock‑in and supply certainty WSJ analysis. Following up on 10 GW build, this frames how the OpenAI capacity plan actually gets financed in practice, alongside related backstops (e.g., $6.3B CoreWeave capacity guarantees, $5B Intel tie‑up) WSJ analysis.

U.S. data‑center construction hits record ~$40B SAAR, roughly +30% YoY since last year

Fresh charts show U.S. private data‑center construction at an all‑time high near $40B (seasonally adjusted annual rate), up ~30% YoY and compounding ~46% since the post‑ChatGPT surge in 2022—directly signaling added AI training/inference capacity in the pipeline construction chart.

TSMC widens lead to ~71% of pure‑play foundry revenue in Q2’25, tightening AI chip supply control

TSMC’s record ~71% share of global pure‑play foundry revenues in Q2’25 increases strategic leverage over advanced nodes used for AI accelerators, widening the gap to Samsung Foundry, SMIC and others foundry share note.

Apple A19 CPU cores show big on‑device AI jumps: up to ~11.5× FP, ~6.9× quantized vs A17 Pro

Bench bars indicate A19’s FP inference speedups reaching ~11.5× on Swin Transformer and ~7× on GPT‑2, with quantized MobileBERT near ~6.9× over A17 Pro—suggesting a higher share of edge inference and changing cloud‑offload economics at the margin performance chart.

Hyperscaler AI capex maps to ‘AI gigawatts’ deployed; non‑U.S. estimated at ~30% of U.S.

A capex outlook ties spending from Microsoft, Amazon, Google, and Meta (largely cash‑flow funded) to modeled AI compute in gigawatts and a global compute TAM, with the analysis assuming non‑U.S. capex ≈30% of U.S. and citing Meta’s intent to invest >$600B in U.S. AI infra before 2028 capex overview.

💼 Enterprise signals and AI moats

Partnerships and strategy: Hitachi–OpenAI MoU, OpenAI revenue ramp estimates, leadership views on agent impact and ChatGPT’s user moat.

WSJ: Nvidia to invest ~$100B in OpenAI, exchanging margin for guaranteed GPU demand

Analysts describe Nvidia’s plan to backstop OpenAI with roughly $100B to build ~10 GW of Nvidia‑powered AI data centers, modeling that every $10B put in corresponds to ~$35B of future Nvidia chip orders; Nvidia trades near‑term margin for long‑term demand certainty, while OpenAI gains cheaper capital and a direct supply path WSJ summary. Strategically, this deepens vendor lock‑in and stabilizes compute supply for OpenAI’s product roadmap amid 700M WAU scale WSJ summary.

OpenAI revenue forecast projects ~10× growth to ~$125B by 2029

A chart attributed to The Information shows OpenAI revenue rising from roughly $12.7B in 2025 to about $125B in 2029, with growth split across ChatGPT, API, Agents, and new products (including monetizing free users) projection chart. For leaders planning vendor exposure, the ramp implies aggressive reinvestment and expanding SKU mix that could shift pricing and platform priorities.

ChatGPT’s 700M weekly users emerge as the moat

Practitioners highlight ChatGPT’s scale—about 700M weekly active users—as a defensible moat even as model quality converges across vendors user moat comment. The usage figure also appears in broader ecosystem analyses of OpenAI’s economics and partnerships, underscoring distribution power and data feedback loops WSJ summary.

Hitachi signs MoU with OpenAI to collaborate on sustainable AI deployments

Hitachi and OpenAI signed a memorandum of understanding in Tokyo to collaborate on sustainable AI, with Hitachi CEO Toshiaki Tokunaga meeting OpenAI CEO Sam Altman MoU announcement. For enterprises, this signals deeper co-sell opportunities and industrial integrations in Japan and beyond where Hitachi already operates critical infrastructure.

OpenAI and Allied for Startups publish 20 proposals to accelerate EU AI adoption

OpenAI and Allied for Startups released the Hacktivate AI report with 20 policy ideas to boost European AI competitiveness, including an Individual AI Learning Account, an AI Champions Network for SMEs, a European GovAI Hub, and “Relentless Harmonisation” of the single market OpenAI policy post, with full details on OpenAI’s site OpenAI blog. For EU operators, this hints at near‑term pilots and funding channels worth tracking.

Box CEO: agents slash experimentation cost and reset path dependence in work

Box’s Aaron Levie argues AI agents will change how teams make decisions by reducing the cost of trying multiple approaches, breaking sunk‑cost path dependence in projects agent workflow view. For product and ops leaders, this implies process tweaks (parallel solutioning, more frequent resets) to fully capture agent productivity gains.

Post‑acquisition detail: Only Roi’s CEO joins OpenAI

Following up on acquisition, new details indicate that only Roi’s CEO, Sujith Vishwajith, will join OpenAI after the personal investing app’s acquisition Roi acquisition note. For analysts, the lean integration suggests acqui‑hire for specific expertise rather than a broad product or team absorption.

🎙️ Local STT on CPU for builders

One notable voice item: Maivi brings fast, accurate, CPU‑only speech‑to‑text with clipboard workflows—useful for devs and analysts without GPUs.

Maivi ships CPU‑only local STT: faster‑than‑real‑time, 6–9% WER, clipboard paste

A new desktop STT tool, Maivi, runs fully local on CPU with a one‑command install and hotkey launch, and the author claims better accuracy than Whisper plus faster end‑to‑end results than cloud runs because it transcribes faster than real time release thread. It uses NVIDIA’s Parakeet TDT under the hood, reports ~6–9% WER and ~0.36× real‑time factor, and ships a floating overlay with auto‑copy/auto‑paste to the clipboard; macOS/Windows/Linux are supported (Linux tested so far) PyPI package, GitHub repo.

- Quick start: uv pip install maivi → run maivi; default hotkey Alt+Q to toggle recording release thread, PyPI package.

- Dev ergonomics: local privacy, no GPU required, fast dictation for notes/commits/tickets with clipboard workflows release thread.

🧬 Model update: GLM‑4.6 context and coding

Light day for model drops; Zhipu’s GLM‑4.6 stands out with a larger 200K window and agentic coding wins vs peers in CC‑Bench.

GLM‑4.6 extends context to 200K and nears Claude on agentic coding

Zhipu’s GLM‑4.6 boosts the context window from 128K to 200K and trims prompt token usage ~15% vs 4.5, while posting a 48.6% win rate versus Claude Sonnet 4 on CC‑Bench agentic coding (near parity) GLM 4.6 summary. This follows earlier variability on Repo Bench Repo Bench with a stronger showing here for real‑world dev scenarios.

- Lower token counts can translate to cost and latency gains for long-context workflows even as tool use and coding reliability improve.

🧠 On‑device AI acceleration: Apple A19

Early charts suggest Apple’s A19 CPU cores deliver large AI inference gains over A17/A18 across FP and quantized paths—implications for on‑device agents.

Apple A19 CPU posts up to ~11.5× FP and ~7.9× quantized AI inference gains vs A17/A18

Early charts indicate Apple’s A19 CPU cores massively accelerate on‑device AI: up to ~1149% in floating‑point (Swin Transformer) and ~791% in quantized paths (Segment Anything) over A17/A18 class chips, with GPT‑2 FP near ~700%. That scale of uplift materially expands what agents and model features can run locally without the NPU (lower latency, better privacy, broader offline support) benchmarks chart.

- Floating‑point standouts: Swin Transformer +1149%, Inception‑V3 +796%, GPT‑2 +700% vs prior gen baselines benchmarks chart.

- Quantized standouts: Segment Anything +791%, MobileBERT +691%, ViT +685% on CPU‑Q runs benchmarks chart.

- Why it matters: larger on‑device models and longer sessions become feasible for assistants, coding aids, and vision features with fewer round‑trips, though power/thermals will determine sustained throughput in real use.