MoonshotAI Kimi K2 Thinking scores 71.3 on SWE‑bench – 256K context window

Executive Summary

MoonshotAI released Kimi K2 Thinking as open weights: a trillion‑parameter MoE model with 32 billion active parameters and a 256K window, aimed squarely at agent workflows. On paper it scores 71.3 on SWE‑bench Verified and ships native INT4 quantization‑aware training, so you get roughly 2× the throughput without the usual accuracy loss. The model's "thinking" trajectories also run long, sustaining roughly 200–300 tool calls in autonomous chains.

Pricing is straightforward and developer‑friendly: $0.60 per 1M input tokens and $2.50 per 1M output tokens, with day‑0 routing through OpenRouter, vLLM, SGLang, and an Ollama cloud variant. That combination of open weights and a low per‑token price makes large‑window grounding and heavy verification loops financially viable for more teams.

Reality check: third‑party tests are mixed so far, with one Repo Bench run scoring about 20% and reports of API timeouts during long runs. According to Artificial Analysis, K2 Thinking scores 93% on τ²‑Bench Telecom, ahead of GPT‑5 in the high 80s and Claude 4.5 Sonnet at 78%, but treat all early leaderboards as preliminary. Licensing is permissive (MIT‑like), with an attribution clause that applies only at extreme scale (>100M MAU or >$20M/month). If you want a low‑friction pilot, the new "Thinking" toggle in Kimi Chat lets you invoke deep plans on demand; add a rate limiter.

Feature Spotlight

Feature: Kimi K2 Thinking open‑weights push into agentic SOTA

MoonshotAI’s Kimi K2 Thinking (1T MoE, native INT4) lands with 256K ctx, 200–300 tool calls, SOTA agentic scores, and day‑0 ecosystem support—an open‑weights inflection that pressures closed models on price/perf.

Mass cross‑account coverage of MoonshotAI’s K2 Thinking: 1T‑param MoE (32B active), native INT4 QAT, 256K context and long‑horizon tool use; broad ecosystem pickup and early, mixed third‑party tests. This section is isolated from all other categories.

🧠 Feature: Kimi K2 Thinking open‑weights push into agentic SOTA

MoonshotAI’s Kimi K2 Thinking open weights post SOTA agentic scores

MoonshotAI released Kimi K2 Thinking as open weights: a 1T-parameter MoE (32B active) reasoning model trained with native INT4 QAT, 256K context, and long‑horizon tool use. Reported scores include 44.9 on HLE (with tools), 60.2 on BrowseComp, and 71.3 on SWE‑bench Verified, with autonomous chains of ~200–300 tool calls release thread, evals chart.

For engineers, this means frontier‑ish agent behavior at open weights with lower inference footprint than FP8 siblings. The model card and docs outline test‑time scaling (more thinking tokens + turns) and interleaved tool reasoning, which explains the strong agentic search and coding numbers Hugging Face, model page.

Day‑0 ecosystem: OpenRouter, Ollama, vLLM and SGLang light up K2 Thinking

Distribution landed fast: OpenRouter lists moonshotai/kimi‑k2‑thinking (with a turbo tier), LM Arena enabled head‑to‑head matches, Ollama exposes a cloud variant, and both vLLM and SGLang shipped parser/recipes for tool and reasoning blocks openrouter listing, arena availability, ollama page, vLLM docs, SGLang launch. Simon Willison also showed it running on dual M3 Ultras via MLX mlx demo.

This wave follows vLLM support landing earlier in the week; the OpenAI‑compatible routes plus advice to preserve reasoning_details give agent builders a clear path to production trials reasoning tip.
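The advice to preserve `reasoning_details` can be illustrated with a minimal sketch of how an agent loop might append an assistant turn to its history. The field name comes from the tip above; the message shape (plain dicts with `content`, `tool_calls`, and `reasoning_details` keys) and the helper name `append_assistant_turn` are assumptions for illustration, not a documented client API.

```python
from typing import Any


def append_assistant_turn(
    messages: list[dict[str, Any]],
    assistant_message: dict[str, Any],
) -> list[dict[str, Any]]:
    """Return a new history with the assistant turn appended.

    Hypothetical helper: it copies tool calls and reasoning blocks through
    unchanged so the next request carries the model's prior reasoning.
    """
    kept: dict[str, Any] = {
        "role": "assistant",
        "content": assistant_message.get("content"),
    }
    # Assumed optional fields; only copied if the provider returned them.
    for key in ("tool_calls", "reasoning_details"):
        if key in assistant_message:
            kept[key] = assistant_message[key]
    return messages + [kept]


# Mocked response message; the inner structure of reasoning_details is made up.
history = [{"role": "user", "content": "Find the failing test."}]
reply = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{"id": "call_1", "type": "function"}],
    "reasoning_details": [{"type": "reasoning.text", "text": "Run pytest first."}],
}
history = append_assistant_turn(history, reply)
```

The point of the design is simply not to rebuild the assistant message from `content` alone, which would silently drop the reasoning blocks between tool calls.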

K2 Thinking pricing: $0.60/M input, $2.50/M output; 256K context

Moonshot listed K2 Thinking at $0.60 per 1M input tokens and $2.50 per 1M output tokens with a 262,144‑token context. A ‘thinking‑turbo’ variant appears at $1.15/$8.00 with the same context window pricing table, corrected table.

Against U.S. closed models, the combined rate is notably lower at this capacity tier, which matters for teams budgeting agentic chains and large‑window grounding pricing comment.
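For budgeting, the listed rates translate into a simple per‑run estimate. The sketch below uses the prices quoted above; the dictionary keys are illustrative labels rather than official model IDs, the workload numbers are invented, and the estimate ignores any caching discounts or provider markups.

```python
# USD per 1M tokens as (input, output), taken from the pricing quoted above.
PRICES_PER_MTOK = {
    "k2-thinking": (0.60, 2.50),        # label is illustrative
    "k2-thinking-turbo": (1.15, 8.00),  # label is illustrative
}


def run_cost_usd(tier: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one run from total input and output tokens."""
    price_in, price_out = PRICES_PER_MTOK[tier]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


# Hypothetical agent chain: 250 tool-call turns, each re-sending about
# 20,000 tokens of context and generating about 800 tokens.
turns = 250
total_in = turns * 20_000   # 5,000,000 input tokens
total_out = turns * 800     # 200,000 output tokens

for tier in PRICES_PER_MTOK:
    print(f"{tier}: ${run_cost_usd(tier, total_in, total_out):.2f}")
# k2-thinking: $3.50
# k2-thinking-turbo: $7.35
```

In this made‑up workload, input tokens dominate the bill because context is re‑sent on every turn, which is why long chains are sensitive to the input rate.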

‘Thinking’ mode ships in Kimi Chat; long chains (200–300 calls) supported

Kimi Chat now exposes a ‘Thinking’ toggle alongside ‘Search’, letting users opt into deeper, longer‑horizon runs that the model can stretch to roughly 200–300 tool calls without human intervention chat UI, chat screenshot, release thread.

This helps product teams pilot heavy planning and verification loops on demand, rather than always paying the cost of extended reasoning in every turn.

Artificial Analysis τ²‑Bench: K2 Thinking scores 93% in Telecom tool‑use

Independent τ²‑Bench Telecom results show K2 Thinking at 93%, ahead of GPT‑5 in the high 80s (85–87%) and Claude 4.5 Sonnet at 78%, highlighting strength in long‑horizon, structured tool calling benchmarks chart, leaderboard image.

For agent teams, this aligns with the model’s interleaved reasoning design and heavy tool orchestration—useful for customer support, ops, and scripted workflows.

Native INT4 QAT targets ~2× faster inference at frontier‑like context

Moonshot trained K2 Thinking with INT4 quantization‑aware training on the MoE components, aiming for ~2× throughput without measurable accuracy loss, and partners report ready recipes in popular runtimes vLLM docs, perf blurb. For teams GPU‑bound on long chains, this is the practical lever: lower memory and higher tokens per second at 256K context.
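The memory side of that lever is easy to sanity‑check. This back‑of‑envelope sketch assumes, for simplicity, that all 1T parameters are stored at the stated precision; since the source says INT4 applies to the MoE parts, the real footprint will be somewhat higher, and KV cache and activations are not counted.

```python
def weight_memory_gb(params: float, bits_per_param: int) -> float:
    """Weight storage in decimal gigabytes for a given precision."""
    return params * bits_per_param / 8 / 1e9


TOTAL_PARAMS = 1e12  # 1T-parameter MoE, per the release notes above

for name, bits in [("FP8", 8), ("INT4", 4)]:
    print(f"{name}: {weight_memory_gb(TOTAL_PARAMS, bits):.0f} GB")
# FP8: 1000 GB
# INT4: 500 GB
```

Halving weight bytes does not by itself guarantee the ~2× throughput target; it mainly reduces the memory traffic that tends to bound decoding.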

Open weights use a modified MIT license with scaled attribution clause

Moonshot published K2 Thinking under a modified MIT license that permits commercial use and derivatives. If a deployment serves >100M MAU or earns >$20M/month, the UI must clearly show the “Kimi K2” name; most research and business uses are otherwise MIT‑like license summary, license note, Hugging Face.

This is very permissive for startups while still enforcing light attribution at extreme scale.
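As a quick aid, the two thresholds from the license summary can be encoded as a check. This sketch only mirrors the numbers quoted above (>100M MAU or >$20M/month); the function name is hypothetical and the license text itself remains the authority on how the terms are defined.

```python
MAU_THRESHOLD = 100_000_000          # monthly active users
REVENUE_THRESHOLD_USD = 20_000_000   # revenue per month


def needs_kimi_attribution(monthly_active_users: int, monthly_revenue_usd: float) -> bool:
    """True if either summarized threshold is exceeded (strictly greater than)."""
    return (
        monthly_active_users > MAU_THRESHOLD
        or monthly_revenue_usd > REVENUE_THRESHOLD_USD
    )


print(needs_kimi_attribution(2_000_000, 150_000))        # False
print(needs_kimi_attribution(120_000_000, 5_000_000))    # True
```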

Early third‑party tests show Repo Bench variance and API instability

Initial external trials are mixed: one Repo Bench run reports a score of ~20% with 1/30 tasks passed and noticeably slower execution vs recent models, while others cite rate limits and timeouts during long ‘thinking’ runs repo bench run, api stability note, rate limit report.

Engineers should expect some rough edges in early access—cache behavior, provider routing, and time budgets matter for long agent loops.
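One way to keep long agent loops from running away is to enforce explicit tool‑call and wall‑clock budgets around each step. The sketch below is provider‑agnostic: `step` is a hypothetical callable standing in for one model‑plus‑tool turn, and the default limits are illustrative rather than recommended values.

```python
import time
from typing import Callable, Optional


def run_with_budget(
    step: Callable[[int], Optional[str]],
    max_tool_calls: int = 300,
    max_seconds: float = 600.0,
) -> tuple[Optional[str], str]:
    """Call step(i) until it returns a final answer or a budget runs out.

    Returns (answer, status), where status is one of "completed",
    "time_budget_exceeded", or "tool_budget_exceeded".
    """
    deadline = time.monotonic() + max_seconds
    for i in range(max_tool_calls):
        # Check the clock before each turn so a slow provider cannot overrun.
        if time.monotonic() >= deadline:
            return None, "time_budget_exceeded"
        result = step(i)
        if result is not None:
            return result, "completed"
    return None, "tool_budget_exceeded"


# Toy step: pretends the agent finishes on its fourth turn.
answer, status = run_with_budget(lambda i: "done" if i == 3 else None)
print(answer, status)  # done completed
```

Returning a status alongside the answer makes it straightforward to log which budget tripped and to retry with a different provider route.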

🧩 Ironwood TPU GA and Arm Axion: scaling training and serving

New, actionable hardware news for AI: Google’s Ironwood TPU pods (9,216 chips; 1.77 PB HBM; 9.6 Tb/s ICI) go GA, plus Axion Arm VMs. Mostly infra performance and cost claims; excludes model launches.

Google Ironwood TPU goes GA: 9,216‑chip pods, 1.77 PB HBM, 9.6 Tb/s ICI

Google made its seventh‑gen Ironwood TPUs generally available, bundling training and serving on the same hardware with pods that stitch 9,216 chips, expose ~1.77 PB of shared HBM3E, and move data over a 9.6 Tb/s inter‑chip fabric. Google claims >4× per‑chip performance versus v6e and up to 10× peak over v5p, with Optical Circuit Switching for live reroutes and Jupiter fabric to cluster multiple pods to hundreds of thousands of TPUs blog recap, Google blog post.

CNBC’s breakdown highlights FP8 throughput (4,614 TFLOPS per chip) and the math that a single superpod sums to ~42.5 FP8 ExaFLOPS, vastly above NVIDIA’s GB300 NVL72 FP8 aggregate; the big picture is less KV cache stalling thanks to shared HBM and higher ICI bandwidth news coverage, specs video. For teams, this means fewer topology‑induced bottlenecks and a practical path to very‑large models without constant pipeline surgery.

- Builders are already tying this to model scale: practitioners argue 10T‑param training is now feasible and a 100T‑class experiment is within reach if scaling laws continue to pay off; if it plateaus, we’ll know soon blog recap.
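The pod‑level figures quoted above follow directly from the per‑chip numbers. This short check uses only values from the text (9,216 chips, 4,614 FP8 TFLOPS per chip, ~1.77 PB of HBM) and assumes decimal units throughout.

```python
CHIPS_PER_POD = 9_216
FP8_TFLOPS_PER_CHIP = 4_614
POD_HBM_PB = 1.77

# 1 ExaFLOPS = 1e6 TFLOPS
pod_exaflops = CHIPS_PER_POD * FP8_TFLOPS_PER_CHIP / 1e6

# 1 PB = 1e6 GB (decimal)
hbm_per_chip_gb = POD_HBM_PB * 1e6 / CHIPS_PER_POD

print(f"Pod FP8 peak: {pod_exaflops:.1f} ExaFLOPS")  # Pod FP8 peak: 42.5 ExaFLOPS
print(f"HBM per chip: {hbm_per_chip_gb:.0f} GB")     # HBM per chip: 192 GB
```

These are peak aggregates; sustained throughput on a real workload depends on utilization and interconnect behavior, which the source does not quantify.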

Axion Arm VMs preview with up to 96 vCPUs and ~2× price/perf vs x86

Alongside Ironwood, Google previewed Axion Arm compute: N4A (up to 64 vCPUs, 512 GB RAM, 50 Gbps) and C4A Metal (up to 96 vCPUs, 768 GB RAM, up to 100 Gbps). Google positions Axion as ~2× better price/performance than comparable x86 VMs, intended to offload data prep, ETL, feature stores, and microservices so accelerators stay on model math vm overview, Google blog post.

For AI platform leads, this is the missing half of the bill: keep orchestration, preprocessing and post‑processing cheap and close to TPU pods, then scale accelerators only when the graph truly needs it. It also simplifies hybrid serving stacks where tokenization, chunking, and RAG glue often dominate costs.

Stack add‑ons: vLLM on TPU, MaxText SFT/GRPO, and GKE cuts TTFT up to 96%

Google is shipping a ready stack around Ironwood: Cluster Director improves topology‑aware scheduling; MaxText provides straightforward paths for SFT and GRPO; vLLM runs on TPUs with minor configuration tweaks; and GKE Inference Gateway claims up to 96% lower time‑to‑first‑token and ~30% lower serving cost by handling cold starts, autoscaling and request routing software stack, Google blog post.

For infra teams, the point is simple. You get a cleaner line from training to serving and fewer bespoke caches, warmers, or sidecars to tame tail latency. That reduces the operational tax of moving from research checkpoints to production endpoints without re‑architecting the serving tier.

🚀 Next up: GPT‑5.1 ‘thinking’ traces and Polaris Alpha stealth

New model signals beyond the feature: GPT‑5.1‑thinking identifiers surface (and are removed) in ChatGPT; OpenRouter’s stealth ‘Polaris Alpha’ shows strong early characteristics. Excludes K2 release (covered in feature).

OpenAI briefly exposes ‘gpt‑5.1‑thinking’ in ChatGPT; strings later removed

Developers spotted a hardcoded model identifier “gpt-5-1-thinking” in ChatGPT’s web bundle before a follow‑up update scrubbed the strings, suggesting internal testing of a new reasoning tier—then a rollback to avoid tipping the roadmap code snippet, static script, removal note. Following up on Thinking traces, which first flagged early GPT‑5.1 hints, today’s find shows the identifier in event logs for the ChatGPT Composer with a specific "thinking effort" toggle.

What to do: treat this as a signal to harden your evals and budget logic for longer “thinking” modes, not as a public release. If you gate on model name strings, add allowlists to avoid breaking when identifiers churn inspector view, tracker article.
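A family‑level allowlist is one way to gate on model names without breaking when suffixes or separators change. In this sketch the allowed prefixes are examples only, and the matching rule (exact match, or the prefix followed by `-` or `.`) is an assumption about how identifiers tend to vary, not a documented naming scheme.

```python
# Hypothetical allowlist of model families your budget logic knows about.
ALLOWED_MODEL_FAMILIES = ("gpt-5", "kimi-k2")


def is_allowed_model(model_id: str) -> bool:
    """Accept a model ID if it equals an allowed family or extends it
    with a '-' or '.' separator (for example 'gpt-5.1-thinking')."""
    normalized = model_id.strip().lower()
    return any(
        normalized == family
        or normalized.startswith(family + "-")
        or normalized.startswith(family + ".")
        for family in ALLOWED_MODEL_FAMILIES
    )


for candidate in ["gpt-5-1-thinking", "gpt-5.1-thinking", "gpt-50", "kimi-k2-thinking"]:
    print(candidate, is_allowed_model(candidate))
# gpt-5-1-thinking True
# gpt-5.1-thinking True
# gpt-50 False
# kimi-k2-thinking True
```

Requiring a separator after the family name avoids accidentally admitting unrelated IDs that merely share a prefix.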

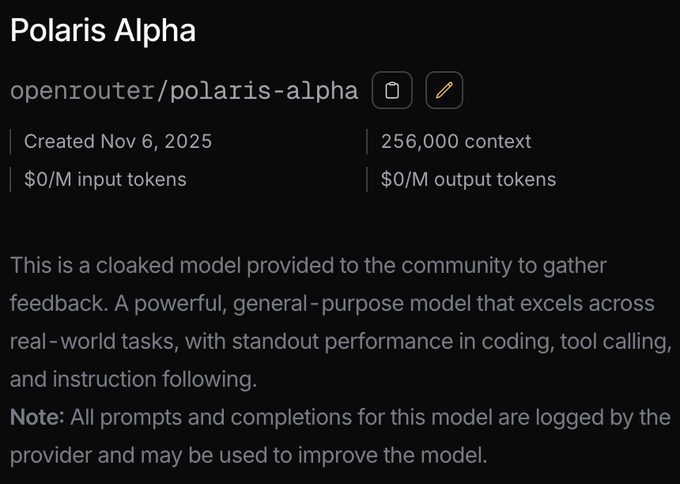

Stealth ‘Polaris Alpha’ arrives on OpenRouter with 256K context and logging notice

OpenRouter listed a new stealth model, Polaris Alpha, advertising a 256K context window and warning that prompts and completions are logged to improve the model—standard for stealth providers, but a constraint for regulated workloads launch note, model page, privacy note. Early testers highlight strong instruction following and tool use, while the provider routes requests across best backends under an OpenAI‑compatible API tester page.

Why it matters: it’s a low‑friction way to trial a likely next‑gen reasoning checkpoint, but avoid sensitive data until a private tier or on‑prem option exists. Keep cost controls in place—stealth models sometimes change pricing and limits without notice model page.

Polaris Alpha debuts #3 on Repo Bench; fast runs and GPT‑5‑like writing

Early community evals show ‘polaris‑alpha’ at 75.59% (#3) on Repo Bench over 4 runs, with separate tests completing entire suites in roughly 30 seconds wall time—strong signals for practical agentic coding loops benchmarks chart, run details. On LisanBench, it edges GPT‑4.1 and OpenAI’s Horizon‑Beta (a reported GPT‑5 no‑thinking checkpoint) on selected tasks, and similarity boards place its writing closest to GPT‑5 Pro and GPT‑5 (medium reasoning), hinting at lineage or shared tuning style lisanbench note, similarity table.

Caveats: leaderboard positions can shift with more runs; the model is stealth and logged, so don’t use proprietary code in prompts. If you A/B, lock seed prompts and enforce the same tool budget to make the speedup apples‑to‑apples model page.

🗂️ Managed RAG & grounded search: File Search, Deep Research, Exa

Concrete retrieval stack updates: Google’s Gemini File Search Tool (managed RAG, free storage/query embeddings), Deep Research Workspace hookups, Exa’s Sheets add‑on, and Firecrawl ‘Branding’ extraction. Excludes K2.

Google launches Gemini File Search: managed RAG with free query embeddings and $0.15/M indexing

Google shipped a fully managed RAG layer inside the Gemini API called File Search. Storage and query‑time embeddings are free; you only pay $0.15 per 1M tokens when you index files. It auto‑chunks, embeds, retrieves with citations, and plugs straight into generateContent. launch thread Google blog post.

Why this matters: teams can stop wiring vector DBs and chunking strategies for many use cases. Docs show a simple file‑search store wired as a Tool in generateContent; Simon Willison posted a concise Python example that uploads a PDF, polls the operation, then queries against that store. feature brief Gemini docs code sample. Google also calls out multi‑format support (PDF, DOCX, TXT, JSON, code) and built‑in citations so you can show provenance in the UI. Parallel query merging is advertised to return in under ~2 seconds for snappy answers. feature brief.
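Since indexing is the only metered step in the pricing described above, a one‑time ingestion cost is simple to estimate. The sketch uses the quoted $0.15 per 1M tokens; the corpus size and average tokens per document are invented for illustration.

```python
INDEXING_USD_PER_MTOK = 0.15  # quoted File Search indexing rate


def indexing_cost_usd(total_tokens: int) -> float:
    """One-time cost to index a corpus of the given token count."""
    return total_tokens / 1_000_000 * INDEXING_USD_PER_MTOK


# Hypothetical corpus: 2,000 documents averaging 5,000 tokens each.
corpus_tokens = 2_000 * 5_000
print(f"${indexing_cost_usd(corpus_tokens):.2f}")  # $1.50
```

Re‑indexing after document updates would incur the same rate again, so frequently changing corpora are where this line item grows.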

Parallel debuts web Search API for AI agents, claiming higher accuracy and lower cost

Parallel launched a Search API built for LLM agents rather than humans—objective‑driven queries, token‑relevant ranking, compressed excerpts, and single‑call resolution to pack the right tokens into the context window. The team shows state‑of‑the‑art accuracy and favorable cost across internal and public benchmarks. launch post launch blog.

Why it’s useful: it replaces brittle multi‑hop scrape chains with one call tuned for reasoning. Parallel highlights early production use by agent builders (e.g., Amp) who report quality gains when feeding its excerpts into downstream models. adoption note.

Exa ships Google Sheets add‑on to bring grounded web answers and contents into cells

Exa released “Exa for Sheets,” a Google Sheets add‑on that adds EXA_SEARCH, EXA_ANSWER, EXA_CONTENTS, and EXA_FINDSIMILAR functions so analysts can pull ranked results, direct answers, and full page contents into spreadsheets. It’s useful for desk research, QA, and building quick, auditable pipelines without leaving Sheets. feature thread Docs page Workspace listing.

The add‑on pairs neatly with judge models for scoring, and Exa shared guidance on using it alongside Claude for higher‑level synthesis. how‑to with Claude.

Firecrawl adds “Branding” format to extract color palettes, logos, and frameworks in one call

Firecrawl’s new Branding response type scrapes a site and returns compact “brand DNA” in one shot—color schemes, logo assets, UI framework hints, and more. It’s aimed at coding agents that need to clone or match an existing site’s look without manual scraping glue. feature thread.

API docs include an endpoint example and outline how Branding can be combined with normal scrape output; the Playground is live for quick trials. Docs branding format Playground.

⚖️ AI financing stance: no datacenter backstops, govt compute

OpenAI’s leadership clarifies they aren’t seeking government guarantees; supports govt‑owned AI infra and US chip fab loans; public ‘no bailout’ counterpoint. New today vs prior posts is the detailed Altman/Friar clarifications.

OpenAI rejects datacenter guarantees, backs government‑owned compute reserves

Sam Altman said OpenAI is not seeking government guarantees for its datacenter buildouts and supports governments building and owning national AI compute reserves; he also cited a >$20B ARR run‑rate in 2025 and about $1.4T in infrastructure commitments over eight years Sam Altman post. Following up on CFO clarification, Altman’s longer note adds detail (e.g., support for US chip‑fab loan guarantees, but not private datacenter backstops) and reframes his earlier “insurer of last resort” comment as about catastrophic AI misuse, not corporate bailouts Sam follow‑up.

Altman also floated selling compute directly (“AI cloud”) to other companies if demand warrants, which signals a dual path: product revenue and capacity monetization compute sales plan. That nuance matters for infra planning and contract structuring. The stance drew public reinforcement from investors arguing the market has enough frontier labs to absorb failures no bailout comment.

Why it matters: policy clarity reduces financing ambiguity, helps CIOs price sovereign or regulated deployments, and sets expectations that public funds should buy public assets (government‑owned capacity) rather than backstopping single‑vendor risk. If you’re negotiating multi‑year AI commitments, this shifts conversations toward capacity reservations, sovereign tenancy, and portability over guarantees. Use the original post for language you can cite in RFP terms Sam Altman post.

Proposal gains steam for a US ‘compute reserve’ focused on open models

Policy voices linked Altman’s national compute idea to The ATOM Project, which argues for US‑funded, open‑weights model labs (10k+ GPU scale) and a strategic reserve of compute dedicated to open research and innovation project mention, with a formal policy site outlining scope and governance ATOM project. This frames a concrete alternative to company‑specific subsidies: buy public capacity, release open models, and let the market compete on services.

For leaders, this provides a template to reference in public‑sector RFPs and state/federal grant proposals. It also creates an “open default” argument for agencies that need auditability and vendor exit options policy follow‑up.

Counterpoint: call to underwrite AI infra like other critical utilities

Emad Mostaque argued governments should provide infrastructure underwriting and guarantees for AI buildouts, analogizing GPUs and robots to national productive capital underwriting view. He also pointed to teleoperation centers as a likely profit center, hinting at where policy incentives might focus first teleoperation view.

This is the opposite pole from the “no bailout” line. For operators bidding on public‑sector capacity, monitor whether RFPs begin to ask for government‑style underwriting or letters of credit. If they do, price the risk transfer accordingly, or propose capacity‑for‑equity or reservation models instead.

Investor line in the sand: no federal bailout for AI labs

David Sacks stated there should be no federal bailout for AI companies, noting the US has at least five frontier model labs and market competition would cover failures no bailout comment. This public stance gives policymakers and CFOs cover to structure contracts without implicit rescue assumptions.

If you’re modeling vendor risk, treat concentration as a portability and data‑migration problem, not as bailout‑backed credit. Bake in model‑swap clauses, export formats, and fine‑tune portability. The public posture helps justify these terms during procurement.

🧰 Agent & coding stacks: Codex polish, Zed agents, Deep Agents JS

Developer‑facing improvements and workflows (not model launches): Codex collaboration/usage fixes, Zed’s Agent Server extensions, LangChain Deep Agents JS, Comet Assistant bump, and e2b webhooks. Excludes K2 items.

Codex updates improve collaboration and cut tokens ~3%

OpenAI pushed a small but helpful refresh to gpt‑5‑codex inside Codex: more collaborative behavior, more reliable apply_patch edits, and about a 3% reduction in tokens for similar outcomes model polish, patch notes. Team leads also flagged steady incremental progress on the Codex stack team note.

Why it matters: fewer back‑and‑forths and tighter diffs improve CI times and code review flow. The token trim compounds for long sessions, especially on larger repos.

Codex evens out usage with cache fixes; fewer surprise overages

Codex landed backend optimizations that make usage more predictable throughout the day—smoothing out cache misses so similar workloads consume similar quota regardless of traffic or routing usage fairness, change recap. Another fix reduced occasional cache‑miss spikes that made rate limits feel inconsistent during long runs quota stability. For heavier users, some community guidance: if you’re routinely hitting free limits, the Pro plan unlocks the model’s intended loop length plan advice.

Perplexity Comet Assistant gets 23% lift and multi‑tab workflows

Perplexity rolled out a major Comet Assistant upgrade: it now handles longer, multi‑site jobs across multiple tabs in parallel, and internal tests show a 23% improvement in successful completions feature brief, release notes. The agent now explicitly requests permission before taking browser actions, adding a clear control surface for automation permission prompt. A broader mobile beta is also appearing for Android testers rollout note, android beta.

So what? This moves beyond “one‑page answers” toward durable, auditable browsing sessions for research, spreadsheets, and weekly digests.

Zed adds Agent Server extensions for one‑click Auggie/OpenCode installs

Zed shipped Agent Server extensions, letting developers install ACP‑compatible agents like Augment Code (Auggie), OpenCode, and Stakpak from the Extensions panel and start agent threads directly from the UI agent extensions. The announcement details packaging, discovery, and how to publish your own agent as a Zed extension Zed blog.

This shortens setup from repo‑cloning to a click, making multi‑agent workflows practical inside the editor.

e2b adds signed webhooks for sandbox lifecycle events

e2b introduced webhook management for its sandboxes with signed secrets and zero‑downtime rotation. Teams can subscribe to five events—created, paused, resumed, updated, killed—and manage endpoints in the dashboard dashboard, webhooks docs. Following up on MCP catalog (Docker’s 200+ tools prewired), this adds observability and automation hooks needed for CI and production agent runs weekly release.

Why it matters: you can now trigger downstream pipelines on sandbox events, verify signatures, and rotate keys without outages.
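Signature verification with key rotation can be sketched generically. The source does not specify e2b's signing scheme, header name, or encoding, so this example assumes a hex‑encoded HMAC‑SHA256 over the raw request body; accepting any currently valid secret is what lets old and new keys overlap during rotation.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, payload: bytes) -> str:
    """Hex-encoded HMAC-SHA256 of the raw payload (assumed scheme)."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify_signature(payload: bytes, received_signature: str, secrets: list[bytes]) -> bool:
    """Accept the payload if it matches any active secret.

    compare_digest gives a constant-time comparison to avoid timing leaks.
    """
    return any(
        hmac.compare_digest(sign_payload(secret, payload), received_signature)
        for secret in secrets
    )


# During rotation both the old and the new secret are considered active.
old_secret, new_secret = b"old-secret", b"new-secret"
body = b'{"event": "created", "sandbox_id": "sbx_123"}'  # made-up event body
signature = sign_payload(new_secret, body)

print(verify_signature(body, signature, [old_secret, new_secret]))  # True
print(verify_signature(body + b" ", signature, [old_secret, new_secret]))  # False
```

Verify against the exact bytes received, before any JSON parsing or re‑serialization, since whitespace changes alter the digest.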

LangChain ships Deep Agents JS on LangGraph 1.0

LangChain released Deep Agents for JavaScript, built on LangGraph 1.0, bringing planning tools, sub‑agents, and filesystem access to the JS ecosystem release thread. There’s also a short build‑through showing a deep research agent setup, with streaming, memory and thread IDs how-to video.

Use cases: long‑horizon research agents, tool‑rich tasks that benefit from hierarchies, and projects that prefer TypeScript ergonomics.

⚙️ Hybrid serving & local fine‑tuning for ultra‑large MoE

Runtime and fine‑tune techniques independent of today’s model launches: KTransformers ‘Expert Deferral’, CPU/AMX + GPU mixed LoRA paths in LLaMA‑Factory, and SGLang parser hooks. Excludes K2 runtime notes.

LoRA on DeepSeek‑V3 671B with two 4090s hits ~70 GB GPU peak via layered MoE offload

Using the same KTransformers strategy (attention on GPU + layered MoE offload), the team shows a LoRA fine‑tune of DeepSeek‑V3 671B on a single server with two RTX 4090s, measuring ~70 GB peak GPU memory, versus ~1.4 TB theoretical full‑precision residency that wouldn’t run at all 671B memory note. Throughput is modest (~40.35 tok/s reported on 4090‑class hardware), but the point is feasibility with commodity parts, not peak speed 671B memory note.

If your roadmap includes adapting ultra‑large sparse MoE to niche domains, this shows a path: push dense attention to GPUs, stream expert layers to CPU, and accept a slower but tractable inner loop. That trade buys you experiments that would otherwise be canceled for lack of VRAM. For many enterprises, “it runs at all” beats “it’s the fastest,” especially when security or data gravity demands on‑prem. 671B memory note

KTransformers ‘Expert Deferral’ makes CPU/GPU run in parallel; ~30% speedup, PR to SGLang

KTransformers introduced “Expert Deferral,” a serving trick that postpones low‑importance experts to the next residual block so GPUs compute attention/FFN while CPUs finish expert work, yielding ~30%+ throughput gains in mixed CPU/GPU MoE pipelines engineering note.

The team says a pull request to bring this into SGLang is submitted, aiming to lift multi‑GPU parallel inference without changing model outputs engineering note. In effect, the pipeline removes CPU idle pockets by restructuring the expert schedule, which is where many hybrid MoE stacks stall. For infra leads running sparse MoE on commodity hosts, this is actionable: you get more tokens/sec at the same capex, because you’re reclaiming idle CPU cycles rather than chasing FLOPs.

Following up on Hybrid support, which showed vLLM broadening hybrid model support, this moves the conversation from “can we host hybrids?” to “can we keep all silicon busy?” The answer looks like yes—if you let the runtime re‑order experts across layers rather than across machines engineering note.

LLaMA‑Factory + KTransformers: local LoRA fine‑tuning at ~530 tok/s using ~6 GB VRAM on 14B

LLaMA‑Factory integrated a KTransformers backend for LoRA fine‑tuning that pushes most heavy ops to CPU/AMX while keeping attention on the GPU, enabling practical local training on creator‑class rigs feature brief. In a DeepSeek‑V2‑Lite 14B demo, they report ~530.38 tokens/sec at ~6.08 GB GPU memory, numbers that put weekend‑scale adaptation within reach for many teams feature brief.

Why this matters: fine‑tuning on a single 4090 normally hits VRAM walls before throughput walls. This path swings the bottleneck to CPU memory bandwidth and AMX, letting you keep larger contexts or batches without paging. It also keeps inference engines untouched—LoRA adapters drop in after training—so you don’t have to re‑platform serving to harvest the gain feature brief.

📊 Stealth model evals and speech leaderboards

Fresh third‑party signals: Polaris Alpha’s Repo Bench placement and style similarity; Artificial Analysis Speech Arena crowns Inworld TTS 1 Max; embeddings usage trends. Excludes K2 SOTA claims (covered in feature).

Polaris Alpha hits #3 on Repo Bench at 75.59%

OpenRouter’s stealth model “polaris‑alpha” entered the public Repo Bench and ranked #3 with a 75.59% score across 4 runs repo bench chart. Teams can treat this as an external signal that the model can handle goal‑oriented code changes at a competitive level (not just chat).

If you’re testing agents against Repo Bench, slot polaris‑alpha into your suite and compare pass/fail patterns to your current top model; a strong #3 suggests it’s worth A/Bs before adopting it as a default.

Inworld TTS 1 Max tops Artificial Analysis Speech Arena

Artificial Analysis’ Speech Arena now lists Inworld TTS 1 Max as the human‑preference leader, ahead of MiniMax Speech‑02 and OpenAI TTS‑1 variants; it supports 12 languages, voice cloning from 2–15 seconds, and expressive tags like “whisper” and “surprised” speech arena update. The larger Max model averages ~69 chars/sec generation; the smaller Inworld TTS 1 averages ~153 chars/sec, with both accessible for head‑to‑head listening on the live board speech arena. Following up on Open STT models, this adds a crowdsourced, preference‑based complement to WER‑style accuracy.

For product teams, use the Arena to A/B listen for assistant/IVR fit; the voice tags and cloning range make it easy to dial in a persona without retraining.

Polaris Alpha’s writing style scores closest to GPT‑5 Pro

A third‑party inter‑LLM similarity board shows polaris‑alpha’s prose is nearest to GPT‑5 Pro and GPT‑5 (medium reasoning) on a “closeness” metric similarity board. That’s useful for red‑teamers and editors: expect GPT‑5‑like tone/structure from the stealth model in long‑form outputs, even if raw capabilities differ.

Embedding usage trends: Qwen3 Embedding 8B takes #1; OpenAI safeguard spikes 16,821%

OpenRouter’s trending board shows Qwen3 Embedding 8B leading by tokens, while OpenAI’s gpt‑oss‑safeguard‑20b jumps +16,821% in usage; NVIDIA’s Nemotron Nano 12B 2 VL is also up sharply embeddings trend chart. If you’re running RAG pipelines, this is a nudge to benchmark Qwen3‑8B against your current embedder for recall/cost, and to evaluate any safeguard layer interactions before production.
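Comparing embedders on recall needs nothing more than ranked results and a labeled relevance set. The sketch below computes mean recall@k; the document IDs and the two sets of rankings are toy data, and how you obtain rankings from each embedder is left out.

```python
def recall_at_k(retrieved: list[list[str]], relevant: list[set[str]], k: int) -> float:
    """Mean fraction of relevant documents found in the top-k results per query.

    Queries with no labeled relevant documents are skipped.
    """
    scores = []
    for ranked, gold in zip(retrieved, relevant):
        if not gold:
            continue
        hits = len(set(ranked[:k]) & gold)
        scores.append(hits / len(gold))
    return sum(scores) / len(scores) if scores else 0.0


# Toy labels and rankings for two queries under two hypothetical embedders.
gold = [{"a", "c"}, {"q"}]
current_embedder = [["a", "b", "c"], ["x", "y", "z"]]
candidate_embedder = [["a", "c", "b"], ["q", "x", "y"]]

print(recall_at_k(current_embedder, gold, k=2))    # 0.25
print(recall_at_k(candidate_embedder, gold, k=2))  # 1.0
```

Pair the recall numbers with per‑token embedding cost for each model to get the recall/cost comparison the paragraph suggests.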

🎬 Creative pipelines: LTX‑2 ranks, upscalers, character swap

High volume creative items today: LTX‑2’s top‑tier arena ranks, Photoshop Generative Upscale workflows, Higgsfield Recast body/voice swaps, and DeepMind’s interactive Space DJ. Pure media tooling; excludes policy and model core news.

LTX‑2 climbs to #3 Image→Video and #4 Text→Video on Artificial Analysis

Lightricks’ LTX‑2 entered the top tier of community rankings: #3 for Image→Video and #4 for Text→Video on Artificial Analysis, putting a ~50‑person team alongside Google and OpenAI leaderboard chart. The team says it targets production specs—native 4K, up to 50 fps, and synchronized audio—with early access via Fal, Replicate, RunDiffusion, and ComfyUI, and a full open‑source release later this year rollout thread, LTX-2 site.

Why this matters: small creative teams can test a high‑fidelity, controllable model today without hyperscaler contracts. It’s a credible alternative for I2V/T2V workflows where 4K delivery and camera control are mandatory, and it broadens vendor options if you’re currently locked to Veo/Sora‑style tools rollout thread.

Higgsfield Recast ships 1‑click full‑body character swap with voice cloning and dubbing

Higgsfield launched Recast, a creator tool that replaces an entire on‑screen body—not just a face—while tracking gestures, then layers voice cloning, multi‑language dubbing, and background transforms. There’s a presets library, plus a studio for custom swaps, with a public link to try it now feature intro, try recast, Recast app.

Why this matters: this collapses a multi‑app VFX pipeline (face swap + rotoscope + ADR) into a single tool. Use cases: localized ads, character continuity, and faster A/B creative testing without reshoots try recast.

Photoshop’s Generative Upscale gets practical: 2×/4× with Firefly, Topaz Gigapixel or Bloom

Creators are sharing a clean Photoshop flow for AI upscaling: open image → Image → Generative Upscale → pick 2× or 4×, then choose the model—Firefly Upscaler (restores up to 6144×6144), Topaz Gigapixel (detail‑preserving, up to 56MP), or Topaz Bloom (adds creative detail). The examples show Bloom producing a stronger aesthetic lift vs the source while Gigapixel aims for fidelity workflow how-to, model options.

Why this matters: this is an easy drop‑in for existing PS pipelines to batch‑upgrade resolution before delivery. Use Gigapixel for client realism, Bloom for stylized shots, and Firefly when you need a fast, native pass that preserves identity workflow how-to.

DeepMind’s Space DJ turns 3D genre navigation into real‑time instrumental music

Google DeepMind released Space DJ, an interactive web app where flying through a 3D constellation of music genres streams prompts into the Lyria RealTime API to generate evolving instrumental audio on the fly product note, Space DJ app. The same API is exposed in Google AI Studio for developers building interactive music tools api mention.

Why this matters: it’s a hands‑on pattern for real‑time, parameterized generation—useful for games, live shows, and generative soundbeds that react to user motion or UI state product note.

SeedVR2 lands on Replicate for one‑pass video/image restoration to 4K

ByteDance’s SeedVR2‑3B is now a Replicate model that sharpens soft or compressed footage in a single diffusion pass, with no multi‑pass pipeline. It handles MP4/MOV/PNG/JPG/WebP inputs and scales to clean 4K outputs. The page notes options like sp_size for larger GPUs and shows before/after examples across phone clips and low‑light shots (model page, Replicate model).

Why this matters: for editors who constantly receive low‑quality assets, this offers a quick upgrade path before grading or upscaling with heavier tools. Wrap it in a folder watch or NLE export hook to batch‑clean inputs (model page).
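A folder watch of the kind suggested above can be sketched with the standard library alone. This is a minimal polling loop under stated assumptions: `restore` is a hypothetical callback where you would place your own call to the hosted model, the extension list mirrors the formats named in the text, and the polling interval is arbitrary.

```python
import time
from pathlib import Path

# Extensions taken from the formats listed in the text.
SUPPORTED = {".mp4", ".mov", ".png", ".jpg", ".webp"}


def find_new_inputs(folder, seen):
    """Return supported files in `folder` that have not been seen yet."""
    new_files = []
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() in SUPPORTED and path not in seen:
            seen.add(path)
            new_files.append(path)
    return new_files


def watch(folder, restore, interval=5.0, max_polls=None):
    """Poll `folder` and call `restore(path)` once per new supported file.

    `restore` is a placeholder for your own restoration call.
    `max_polls=None` runs until interrupted.
    """
    seen = set()
    polls = 0
    while max_polls is None or polls < max_polls:
        for path in find_new_inputs(folder, seen):
            restore(path)
        polls += 1
        if max_polls is None or polls < max_polls:
            time.sleep(interval)
```

A call such as `watch("incoming", restore=my_restore_fn)` would then process each new clip once. A production version would likely also wait for files to finish copying before handing them off.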

🤖 Humanoids & teleoperation signals from China and beyond

Embodied AI tidbits: XPeng’s ‘IRON’ platform demo and Unitree’s ‘Embodied Avatar’ teleop capability. This is a lighter beat today and excludes policy/hardware news.

XPeng teases ‘IRON’ humanoid; lifelike demo sparks authenticity debate

XPeng surfaced new footage of its ‘IRON’ robotics platform, showing a humanoid with strikingly human proportions and gait. The clip drew immediate scrutiny, with some calling it fake and others noting the unusually natural physiognomy compared to current bots (demo mention, appearance comment, summary note).

Why it matters: If the demo reflects real-time performance, XPeng joins the short list of Chinese firms pushing credible humanoid locomotion—useful for teleop today and autonomy later. For teams building embodied stacks, it’s a signal to watch China’s cadence and the sensor/actuator choices behind such smooth motion.

China trials robot dogs for firefighting in Sichuan

Footage from Sichuan shows quadruped robots being tested in firefighting scenarios, navigating hazards where human crews face acute risk (trial footage, follow-up note).

Why it matters: This is a practical teleop/embodied AI workload with real budgets behind it. If trials stick, expect demand for ruggedized perception, low‑latency teleoperation links, and hot‑swap battery/thermal designs. It’s a good checklist for teams productizing autonomy‑assist and human‑in‑the‑loop control.

Teleoperation centers flagged as the near-term robotics business edge

Commentary from industry leaders argues that the real near‑term money sits in teleoperation centers, where staff supervise and backstop fleets of robots, rather than in pure autonomy (strategy comment). This week’s Unitree full‑body teleop demo underscores that direction of travel for embodied systems (teleop demo).

Why it matters: For ops leads, this reframes roadmap priorities: invest in low‑latency streaming, safety interlocks, fleet dashboards, and skills training pipelines. For researchers, prioritize shared autonomy and handoff UX over end‑to‑end unsupervised control.

🎙️ Voice agents: enterprise Copilot and TTS arena shake‑up

Voice agent highlights relevant to product builders: Microsoft 365 Copilot’s interruptible voice chat on mobile and Inworld TTS 1/Max topping the AA speech arena. Focused on voice UX/latency and capability, not creative music.

Microsoft 365 Copilot mobile adds interruptible voice; desktop/web by year-end

Microsoft rolled out natural, interruptible voice chat in the Microsoft 365 Copilot mobile app, with real‑time spoken replies grounded in work and web context. The company says desktop and web support will arrive by year‑end, with broader access for non‑licensed users "in the coming months" (feature brief).

This is a practical step for hands‑free workflows. You can now talk over the assistant mid‑answer to redirect it, which matters for on‑the‑go triage and meeting follow‑ups. Building on the earlier mobile voice coverage, which introduced the capability, today’s notes emphasize the rollout path beyond mobile and confirm that enterprise privacy rules still apply (secure transcription, tenant boundaries).

Why it matters: voice UX that tolerates interruptions removes a big friction point vs push‑to‑talk bots. For IT, the guardrails remain enterprise‑grade; for product teams, the signal is clear—voice is moving from gimmick to default interaction in M365.

Inworld TTS 1 Max leads AA Speech Arena on human preference

Inworld’s TTS 1 Max is now the top‑ranked model on Artificial Analysis’ Speech Arena, edging out OpenAI and MiniMax on head‑to‑head human preferences. The model supports 12 languages, 2–15s voice cloning, and rich voice tags such as whisper, cough, and surprised (arena summary), with measured throughput of ~69 characters/second for Max and ~153 characters/second for the smaller Inworld TTS 1 (speed details).

For teams shipping voice agents, this narrows the gap between fast, production TTS and expressive performance. Before swapping providers, you can browse results and test clips in the arena to hear style control and consistency (speech arena), then drill into samples per category in the explorer (speech explorer).
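To get a feel for what the throughput figures above mean for latency budgets, a rough estimate of synthesis time is just text length divided by characters per second. The sketch below assumes the quoted rates are sustained generation speeds and ignores network and startup overhead; the constant and function names are illustrative.

```python
# Approximate throughput figures quoted in the text (characters per second).
TTS_1_MAX_CPS = 69
TTS_1_CPS = 153


def synthesis_seconds(text, chars_per_sec):
    """Estimate generation time for `text`, ignoring network and startup overhead."""
    if chars_per_sec <= 0:
        raise ValueError("chars_per_sec must be positive")
    return len(text) / chars_per_sec


reply = "x" * 690  # stand-in for a 690-character agent reply
max_time = synthesis_seconds(reply, TTS_1_MAX_CPS)   # 10.0 seconds
base_time = synthesis_seconds(reply, TTS_1_CPS)
```

Under these assumptions, a 690-character reply takes about 10 seconds on Max and under 5 seconds on the smaller model, so streaming output or shorter turns matter more when using Max.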

ElevenLabs open‑sources Shopify voice shopping agent (MCP)

ElevenLabs demoed an end‑to‑end voice shopping agent that searches products, builds a cart, and checks out via the Shopify Storefront MCP server, then published the full code. The sample wires an agent stack (LLM + TTS/STT) to MCP tools for the product browse and purchase flow (voice shopping demo), with the implementation on GitHub for reproduction and extension (GitHub repo).

For commerce teams, this is a concrete blueprint for prototyping conversational funnels today instead of presenting slideware. You can try the hosted example to understand latency and turn‑taking behavior before adapting it to your catalog (demo site), and note the developer guidance that the Storefront MCP slots cleanly into existing agent hosts (dev note).