GPT‑5.2 Pro claims Erdős #3 and #397 solves – Tao acceptance cited

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Posts today attribute multiple new mathematics results to OpenAI’s GPT‑5.2 Pro; screenshots of erdosproblems.com threads are circulated as receipts, with claims that Terence Tao and others validated/accepted at least Erdős problem #3. A separate wave points to Erdős problem #397 marked “DISPROVED (LEAN),” implying a formal artifact exists; attribution varies between “GPT‑5.2 Pro” and “ChatGPT” in the cited captures, and the public threads shared via screenshots aren’t a full reproducible package yet.

• Anthropic/Claude Code: Desktop UI now exposes an Ask/Act/Plan selector; Claude Code 2.1.5 appears with no detailed CLI changelog, pushing behavior-diff tracking onto community regression reports.

• Google/UCP + Direct Offers: Universal Commerce Protocol proposes /.well-known/ucp manifests and capability-based checkout flows; Google also pilots sponsored “Direct Offers” inside AI Mode shopping, but conformance tests and auction/reporting details aren’t shown.

• Meta power: Meta cites up to 6.6 GW of nuclear PPAs by 2035 with 20‑year deal structure; firmness vs pipeline remains unclear.

Across the feed, “agent era” credibility is converging on verification layers—Lean proofs for math; provenance/review UX for code and docs—while infra and standards (power PPAs; commerce manifests) shape what agents can actually ship.

Top links today

- Chain-of-View prompting for spatial reasoning paper

- DiffCoT diffusion-style chain-of-thought paper

- RelayLLM collaborative decoding efficiency paper

- GDPO multi-reward RL optimization paper

- YapBench benchmark for LLM verbosity paper

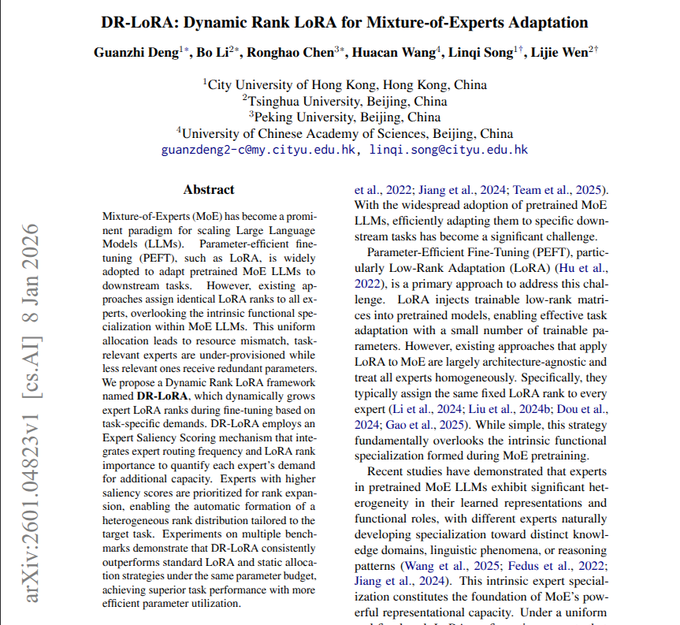

- DR-LoRA dynamic rank LoRA for MoE paper

- Learnable multipliers to free weight scale paper

- Learning rate setting for large-scale pretraining paper

- Batch size scheduling for large-scale pretraining paper

- MoE standing committee specialization audit paper

- MIT on minimal datasets for optimal decisions

- Meta nuclear PPAs for AI power roadmap

- Stack model for in-context learning on single-cell data

Feature Spotlight

Feature: GPT‑5.2 Pro’s verified Erdős-problem streak (Tao acceptance)

GPT‑5.2 Pro is widely reported to have solved multiple long‑standing Erdős problems with solutions accepted by Terence Tao—suggesting a new phase for verifiable AI math and downstream acceleration of formal/technical research.

Multiple high-volume posts cite GPT‑5.2 Pro solving multiple Erdős Problems, with solutions accepted/validated by Terence Tao and others—framed as a step-change in AI’s ability to do new, checkable mathematics. This category is solely the math-breakthrough storyline and excludes other AI research/tooling.

Jump to Feature: GPT‑5.2 Pro’s verified Erdős-problem streak (Tao acceptance) topicsTable of Contents

🧮 Feature: GPT‑5.2 Pro’s verified Erdős-problem streak (Tao acceptance)

Multiple high-volume posts cite GPT‑5.2 Pro solving multiple Erdős Problems, with solutions accepted/validated by Terence Tao and others—framed as a step-change in AI’s ability to do new, checkable mathematics. This category is solely the math-breakthrough storyline and excludes other AI research/tooling.

GPT‑5.2 Pro is credited with solving Erdős problem #3 with Tao acceptance

Erdős problem #3 (OpenAI): Posts today claim GPT‑5.2 Pro solved Erdős problem #3 and that the result was accepted by Terence Tao, with a screenshot of the erdosproblems.com discussion used as evidence in the Erdős #3 claim.

The framing around it is explicitly “a ‘lift-off’ moment for AI science,” as stated in the Erdős #3 claim, and the credibility hinge in the thread is the “accepted by Terence Tao” assertion in the same Erdős #3 claim.

GPT‑5.2 Pro is credited with solving Erdős problem #397 as “Disproved (Lean)”

Erdős problem #397 (OpenAI): A separate wave of posts says GPT‑5.2 Pro also solved Erdős problem #397 and was accepted by Terence Tao, pointing to a screenshot where the problem is labeled “DISPROVED (LEAN)” in the Erdős #397 claim.

The screenshot also notes prior context (e.g., an alternate construction attributed to Elkies) in the Erdős #397 claim, which makes this look closer to “verified formal artifact exists” than a pure social claim—though the exact division of credit between “GPT‑5.2 Pro” and “ChatGPT” varies between the tweet text and the page excerpt shown in the same Erdős #397 claim.

The “three Erdős problems in a weekend” GPT‑5.2 Pro streak narrative spreads

Weekend streak narrative (OpenAI): Multiple accounts now summarize this as “3 mathematical problems … over the weekend” for GPT‑5.2 Pro, as asserted in the Three-problems claim, and fold it into a broader “WTF moment” framing in the WTF moment post.

• What’s concretely cited: The strongest receipts in the tweet set are screenshots of the Erdős Problems site showing discussion/comments and Tao’s presence, as shown in the Three-problems claim and echoed by the #3/#397 posts in the Erdős #3 claim and Erdős #397 claim.

• Continuity with the earlier #729 claim: This “streak” framing is explicitly additive to the earlier weekend chatter about Erdős #729 (GPT + “Aristotle”), following up on Erdős #729 claim with new accepted-problem IDs now circulating in the Three-problems claim.

Near-miss Erdős attempts are said to be giving way to genuine solves

Validation pattern (Erdős Problems): Commentary today points to a repeated pattern where AI first appears to solve an Erdős problem but is later found to have rediscovered an existing solution, and then soon after produces a real new result—“near-misses” turning into actual solves—per the Near-miss pattern note.

This is one of the more operationally relevant takeaways for analysts: it frames the current Tao-linked posts not as a one-off, but as a phase where the community expects faster iteration from “false start” to “publishable/accepted,” as described in the Near-miss pattern note.

🧩 Claude Code: Plan mode, desktop UX, and quiet point releases

Today’s Claude Code chatter is mostly UI/UX and release-watching: Plan mode surfaces in desktop screenshots and Claude Code 2.1.5 appears without a detailed changelog. Excludes the feature math story.

Claude Code desktop UI surfaces Ask/Act/Plan mode selector

Claude Code (Anthropic): Following up on Plan mode rollout (Plan Mode becoming a default loop), the desktop app UI now visibly exposes a three-way mode selector—Ask, Act, and Plan—alongside the folder picker and “Local” runtime selector, as shown in the Plan mode screenshot.

This is a small UX detail, but it changes how teams onboard: the planning step becomes an explicit, first-class control rather than a prompting habit.

Anthropic promotion link circulates for installing Claude Code via Claude Desktop

Claude Desktop (Anthropic): A circulated note says Anthropic is now promoting the workflow where the Claude Desktop app can install Claude Code and then run it with access to a selected local folder (no separate terminal-first setup), as described in the Promotion mention.

This matters operationally because it moves Claude Code closer to a “standard app install” surface—less CLI friction, more consistent configuration, and a clearer permission boundary around the chosen working directory.

Claude Code 2.1.5 ships with no public CLI changelog yet

Claude Code (Anthropic): ClaudeCodeLog spotted Claude Code 2.1.5 landing, but notes there’s “no major prompt changes detected” and there isn’t a CLI changelog posted yet, per the 2.1.5 watcher post.

This is one of those releases where engineering teams mostly care because it can silently change agent behavior (or not), and the absence of a diff/changelog shifts the burden onto community regression reporting.

ClaudeCodeLog solicits biggest improvements/regressions for Claude Code 2.1.4

Claude Code (Anthropic): Following up on 2.1.4 release (background-task and OAuth tweaks), ClaudeCodeLog is explicitly asking for field reports on “biggest improvement, biggest regression” in Claude Code 2.1.4, as requested in the 2.1.4 impressions prompt.

They also point people at a “2.1.4 changes” reference link in the 2.1.4 changes link, which is a signal that version-to-version behavior tracking is increasingly community-led.

🧭 Cursor agents: Grind mode, mobile agent UI, and long-run loops

Cursor-related posts focus on long-running agent loops (“Grind”), model selection UI, and early mobile agent experiences. Excludes Claude Code and OpenCode coverage.

Cursor Dashboard surfaces Grind mode toggle alongside multi-model picker

Cursor (Anysphere): Cursor’s Dashboard UI now shows a dedicated Grind toggle for long-running agent loops, sitting next to a model picker that includes GPT‑5.2, Opus 4.5, and Gemini 3 Pro, as shown in the Dashboard screenshot. The presence of an explicit switch (rather than an implicit “keep going” behavior) suggests Cursor is treating long-horizon runs as a first-class mode, echoed by the “what’s this feature?” question in the Dashboard screenshot.

• Model-mix surface: The same panel shows “Use Multiple Models” as a separate setting from Grind, implying Grind is about run behavior/looping while model selection stays orthogonal, as shown in the Dashboard screenshot.

The tweets don’t include release notes, so it’s unclear whether Grind is new functionality or a rename/UX promotion of an existing loop mode.

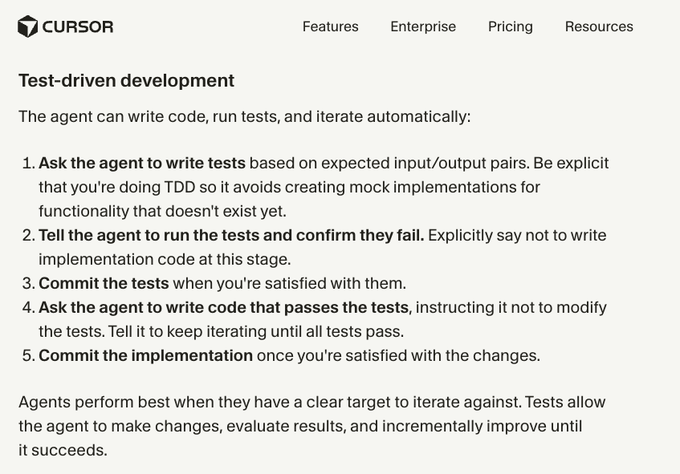

Cursor guide formalizes agentic TDD loop for long-running iterations

Agentic TDD (Cursor): Cursor’s published guidance spells out a test-driven loop where the agent writes tests first, confirms they fail, then iterates on implementation until tests pass—explicitly separating “commit tests” from “commit implementation,” as shown in the TDD excerpt screenshot and detailed in the Cursor blog post. This is framed as a way to give agents a clear target for long-running iterations, rather than relying on subjective “looks good” checks.

• Guardrail against fake implementations: The snippet emphasizes telling the agent it’s doing TDD so it avoids creating mock implementations for missing functionality, as shown in the TDD excerpt screenshot.

Cursor mobile agent UI appears with model selector and repo picker

Cursor mobile agent (Anysphere): A mobile UI for running Cursor’s agent shows a single prompt box (“Ask Cursor to build, fix bugs, explore”), a model dropdown set to Opus 4.5, and a Select repository control, as captured in the Mobile UI screenshot. The follow-up note “nvm just used Cursor mobile agent” in the Mobile UI screenshot reads like a quick confirmation that the flow is usable end-to-end on phone.

• Task templates: The screen also surfaces quick actions like “Write documentation,” “Optimize performance,” and “Find and fix 3 bugs,” which hints at a preset-task layer above raw prompting, as shown in the Mobile UI screenshot.

🛠️ OpenCode momentum: adoption scale and provenance in diffs

OpenCode shows up as the open-source coding agent with large adoption signals and new discussion about tracking AI-generated code inside git diffs. Excludes Cursor/Claude Code specifics.

OpenCode tracks which diff hunks were AI-generated, raising git provenance questions

OpenCode (anomalyco): A new capability is getting attention: OpenCode reportedly tracks “which hunks in a diff were AI generated,” and the open question is how that provenance should surface in git history—e.g., separate commits or distinct committer metadata, as raised in the Diff provenance discussion.

If OpenCode (or downstream tools) can standardize this, it could change how teams audit, review, and attribute agent-authored code—especially in regulated codebases where “who wrote what” becomes a first-class artifact.

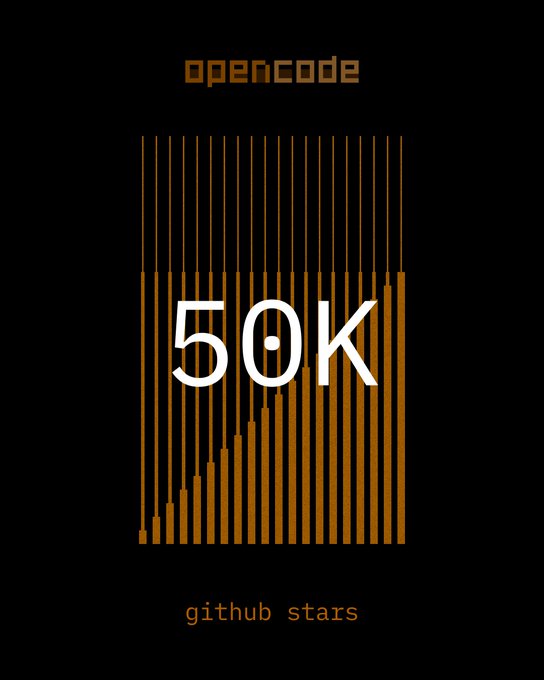

OpenCode crosses 60K stars and keeps climbing on GitHub Trending

OpenCode (anomalyco): The open-source coding agent is now signaling real adoption momentum—first via a “60K stars” milestone post in the 60K stars graphic.

It also shows up near the top of GitHub Trending with ~62,437 total stars and ~255 stars added “today,” as captured in the GitHub trending screenshot.

These are lightweight metrics, but they’re the kind that correlate with an ecosystem forming around the tool (wrappers, workflows, and contributions) rather than one-off demos.

🏗️ Power and capacity race: nuclear PPAs, solar scale, and AI buildout signals

Infrastructure posts center on electricity as the binding constraint—Meta’s nuclear procurement, China’s solar buildout, and capacity/throughput signals from AI server manufacturing. Excludes the feature math story.

Meta targets up to 6.6 GW of nuclear power by 2035 to supply AI buildout

Nuclear PPAs (Meta): Meta says it has lined up agreements for up to 6.6 GW of nuclear power by 2035, following up on grid access (power and site delivery constraints); the scale and framing as a top-tier corporate buyer are called out in 6.6 GW claim and reiterated with more deal structure in PPA details.

• Deal shape: The write-up describes 20-year PPAs tied to three U.S. nuclear plants and parallel work with SMR builders, as summarized in PPA details.

• Sizing context: The same thread notes a “typical” nuclear plant is ~1 GW, which makes the procurement size legible for data-center planners, as stated in PPA details.

The open question from the tweets is how much of the 6.6 GW is firm contracted capacity versus development pipeline, but the intent (long-duration, firm power) is explicit.

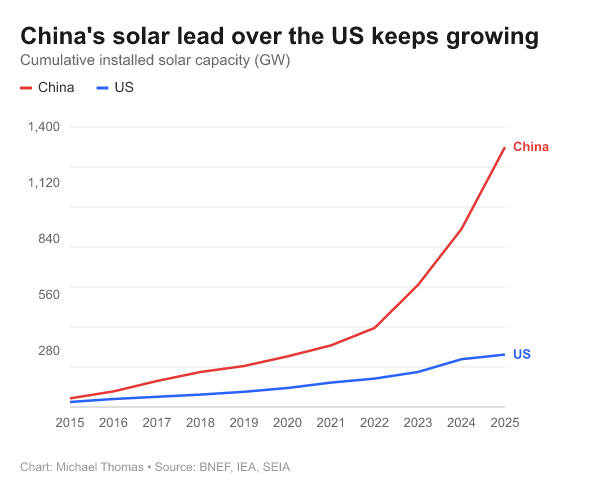

China adds 256 GW of solar in H1 2025, about 67% of global additions

Solar buildout (China): A circulated stat says China put 256 GW of new solar on the grid in H1 2025, versus 380 GW added globally over the same window (≈67% share), positioning electricity abundance as a competitive variable for AI-scale infrastructure, as written in H1 solar additions.

The supporting chart in the same post visualizes how China’s cumulative installed solar capacity keeps widening versus the U.S., which is the underlying capacity story behind the H1 additions, as shown in H1 solar additions.

Taiwan Big 6 AI server OEMs hit NT$1.59T December revenue, +35.9% YoY

AI server manufacturing (Taiwan Big 6): Taiwan’s major AI server OEMs reportedly closed Dec 2025 at NT$1.59T monthly revenue (+35.9% YoY), with commentary that the usual year-end slowdown isn’t showing up, as described in Big 6 revenue claim.

• Why it’s a supply-chain signal: These OEM revenue prints are used as a proxy for physical AI server shipments leaving the factory floor, which is the demand-side driver behind power and GPU allocation, as argued in Big 6 revenue claim.

Alibaba Qwen lead pegs China leapfrogging odds under 20% and cites compute limits

Compute constraint outlook (Alibaba/Qwen): Justin Lin (Qwen lead) is quoted putting the odds of a Chinese company “leapfrogging” OpenAI/Anthropic at <20% over 3–5 years, and attributing a lot of the gap to compute availability and export controls, as summarized in a Bloomberg-style card in probability estimate.

• Inference capacity as the bottleneck: A separate excerpt amplifies the same theme in more operational terms—“we are stretched thin,” with delivery demands consuming most resources—framing the constraint as inference compute rather than only research ambition, as shown in compute constraint quote.

Taken together, today’s signal is less about model architecture and more about who can continuously fund, power, and staff the inference footprint that supports both product delivery and next-gen training.

📐 Agentic coding practice: TDD loops, context discipline, and “tool vs skill” debates

High-volume workflow content today: test-driven agent loops, context/verification tips, and backlash against over-reliance on specific harnesses (Ralph) in favor of fundamentals. Excludes specific tool release notes and the feature math story.

Agentic TDD loop standardizes tests-first guardrails for coding agents

Agentic TDD loop: A concrete “tests first, then implementation” loop is being pushed as the cleanest way to keep long-running coding agents honest—write tests from I/O pairs, confirm they fail, commit them, then have the agent iterate until all pass without touching the tests, as shown in the TDD steps excerpt.

• Why it’s sticking: The process gives the agent a tight objective function (test suite) and reduces drifting “mock implementations,” as described in the TDD steps excerpt and expanded in the Best practices blog.

• Where it breaks: The loop still depends on test quality; vague specs turn into vague tests, which then lets the agent overfit to weak assertions (the blog’s emphasis on explicit I/O pairs is the tell, per the Best practices blog).

Agent build checklist emphasizes Unix tools, shared FS, and verification scripts

Agent build checklist: A pragmatic checklist for agent builders is circulating that treats “Unix basics + shared filesystem + verification” as the core stack, not fancy orchestration—use Bash and the filesystem as the lingua franca between tools/subagents, and make the agent run checks before it declares done, as laid out in the Agent builder checklist.

• Reliability over cleverness: The checklist explicitly calls out verification as critical (run scripts, check errors) and leans on error-driven recovery, per the Agent builder checklist.

• Context discipline: It warns against loading huge files into context and against “semantic search for precise data,” pushing extraction scripts instead, as described in the Agent builder checklist.

“Focus on the skill, not the tool” backlash grows around Ralph-style hype

Tool vs skill debate: There’s a sharper backlash against treating Ralph as a cheat code; one post argues “a loop is not going to help you” and claims prompting is ~95% of the value in coding agents, pushing people back toward fundamentals, as argued in the Skill over tool argument.

• What this changes in practice: The argument reframes agent performance as a function of operator skill (specifying, constraining, reviewing) more than harness choice, per the Skill over tool argument.

Bash loop framing resurfaces as the alternative to Ralph-style autonomous loops

Bash loop vs plugin loops: A visible counter-current is arguing that “stick with a bash loop” beats trusting the Ralph plugin abstraction; the claim is that simpler loops are easier to reason about, debug, and bound than a self-improving agent loop, as argued in the Bash loop preference.

• What’s being rejected: The skepticism isn’t “no agents,” it’s “don’t hide the work behind a magical loop,” per the Bash loop preference.

Design-doc-first AI coding workflow gets framed as “how FAANG ships with agents”

AI-assisted dev workflow: A “design doc → architecture → build in chunks → tests first” flow is being pitched as what separates production shipping from prompt-churn—start with a design/architecture artifact, then use agents to grind down the friction while you keep the logic coherent, per the FAANG workflow recap.

• Chunking as risk control: The emphasis is on incremental slices and test scaffolding instead of giant one-shot diffs, as described in the FAANG workflow recap.

GPT‑5.2 “thinking level” knob is framed as a throughput vs quality control

GPT‑5.2 reasoning effort control: The “gpt-5.2-*” settings are being treated as a practical engineering knob—higher levels spend more time in hidden thinking tokens before acting, with “xhigh” described as rarely needed compared with medium/high for most coding, as explained in the Reasoning level explanation.

• Workflow consequence: People are explicitly modeling this as a latency/iteration trade—more thinking can help on hard steps, but it can also slow the edit–test loop, per the Reasoning level explanation.

“Dumb zone starts at ~40% usage” becomes a token-budget heuristic

Token-budget heuristic: A simple operating rule is getting repeated: once your session/model usage gets “too high” (framed as ~40%), quality drops and you should switch to smaller tasks and fewer tokens, as stated in the Dumb zone heuristic.

• Why engineers care: It’s a practical way to notice when an agent has drifted into verbose, low-signal behavior without needing formal evals, per the Dumb zone heuristic.

“Human on the Loop” framing clarifies Ralph is not hands-off automation

Human on the Loop: A framing is gaining traction that “Ralph is not hands off”—the loop needs smart supervision because the agent is “dumb and forgetful,” and the workflow is really about building review stamina and guardrails, as stated in the HOTL framing.

“Watch your agent fix the tests” becomes a preferred iteration loop

Iteration-as-work: One sentiment that keeps surfacing is that it’s more satisfying to watch an agent run/fix failing tests than to do the fixes manually—treating the test loop as the main interface for progress, as captured in the Tests iteration quote.

Linux “30M LOC ≈ 500M tokens” becomes a mental model for agent-scale projects

Token budgeting mental model: People are using a back-of-the-envelope conversion to reason about “how much agent output” large systems imply—Linux at ~30M lines is framed as ~500M tokens, which sets intuition for what a trillion-token run could theoretically emit, per the Linux token estimate.

• The practical implication: This kind of math tends to shift planning conversations from “can the model code it” to “can you review/verify it,” even if the estimate is coarse, as suggested by the Linux token estimate.

🧩 Installables for coding agents: Ralph add-ons, skills packs, and doc fetchers

This bucket is for shippable installables (plugins/skills libraries) that extend coding assistants, not built-in product features. Excludes general workflow advice and the feature math story.

ralph-research plugin runs paper implementations as a self-improving loop

ralph-research (omarsar0): A new installable Claude Code plugin called ralph-research claims to automate “implement this paper” workflows by combining codegen + experiment runs in a self-correcting loop, as introduced in the plugin launch post; the author says it implemented ReAct end-to-end in about 40 minutes while debugging issues along the way, per the plugin launch post.

• What it’s packaging: The plugin wraps a “ralph-loop” that iterates on failures (rather than stopping at first error), with the early pitch being paper-to-code-to-experiments inside one harness, as described in the plugin launch post.

• Current caveat: The author flags model/API friction (newer model usage can be shaky without prompting work), which is called out explicitly in the plugin launch post.

ralph-claude-code GitHub repo spikes as a Claude Code autonomous loop add-on

ralph-claude-code (frankbria): The frankbria/ralph-claude-code repo is trending on GitHub as an installable add-on that markets “autonomous AI development loop for Claude Code with intelligent exit detection,” shown in the GitHub trending screenshot at 1,682 stars.

• Why it’s distinct: This is positioned as an operational wrapper around Claude Code (loop + exit detection) rather than a built-in Claude Code feature, as evidenced by the repo description in the GitHub trending screenshot.

• Adoption signal: The same screenshot shows “255 stars today,” which is the main quantitative traction indicator available in the GitHub trending screenshot.

hyperbrowser adds /docs fetch to pull live docs into agent sessions

/docs fetch (hyperbrowser): hyperbrowser is pitching a Claude Code-oriented capability that adds a /docs fetch <url> command to pull “live docs from any site” into an agent session, as described in the docs fetch command post.

• Practical implication: This targets the common failure mode where agents work from stale or partial documentation; the feature claim is specifically “live docs” with caching implied in the docs fetch command post.

superpowers gains attention as a Claude Code skills pack

superpowers (obra): The obra/superpowers repository is being circulated as a “Claude Code superpowers: core skills library,” appearing in GitHub Trending alongside other agent tooling, as shown in the GitHub trending screenshot where it’s listed at 17,245 stars.

• What it is: A reusable skills library intended to be installed/used with Claude Code-style harnesses, based on the repository description captured in the GitHub trending screenshot.

🛒 Agentic commerce standardization: Google’s UCP and checkout primitives

A distinct cluster covers agentic shopping/checkout standardization—UCP’s manifests, capability model, and payment/consent flow—aimed at reducing N×N integrations. Excludes generic model adoption metrics.

Google launches Universal Commerce Protocol (UCP) for agentic shopping across merchants

UCP (Google): Google introduced Universal Commerce Protocol as an open, vendor-agnostic standard so shopping agents can move from discovery → checkout → post‑purchase without bespoke N×N integrations, as described in the UCP explainer thread and the Google blog post; the core mechanic is a merchant-published JSON manifest at /.well-known/ucp so agents can discover endpoints and payment options dynamically, per the UCP summary thread.

• Capability model: UCP decomposes commerce into reusable capabilities (discovery, checkout, discounts, fulfillment, identity linking, order management), as laid out in the UCP explainer thread.

• Interoperability claims: UCP calls can run over existing APIs and also over Agent2Agent (A2A) or MCP, according to the UCP summary thread, positioning it as “reuse what you already have” rather than a new integration stack.

• Payments and consent: Google frames UCP checkout as compatible with AP2 and based on tokenized consent proofs (separating user instrument from payment handler), as detailed in the UCP explainer thread.

• Ecosystem pull: Google says UCP is co-developed with Shopify, Etsy, Wayfair, Target, Walmart, and 20+ partners, with a top-level nod to “partnered with…” in the Pichai retweet and expanded partner detail in the UCP summary thread.

What’s still not explicit in these tweets: a concrete conformance test suite (or a reference agent) that would make “UCP-compatible” verifiable across vendors.

Google AI Mode pilots “Direct Offers” as sponsored deals inside conversational shopping

Direct Offers (Google): Google is piloting a “Direct Offers” ad format inside Search AI Mode where a “Sponsored deal” can appear during conversational product discovery, with the end-to-end user flow shown in the Direct Offers pilot video and described alongside UCP rollout in the UCP launch recap.

• Placement shift: The offer is positioned to trigger while the user is still asking for recommendations (before a traditional product list), per the UCP explainer thread.

• Checkout coupling: The same rollout narrative ties Direct Offers to agentic checkout in AI Mode (with Google Pay mentioned and PayPal “coming soon”), as stated in the UCP summary thread.

This reads like the monetization layer that rides on top of agentic shopping primitives—yet the tweets don’t clarify targeting controls, auction mechanics, or reporting granularity for merchants.

✅ Code quality in the agent era: review automation, provenance, and evidence

Posts emphasize correctness and maintainability under AI-assisted development: real code review comparisons, flaky automated reviewers, and provenance/review-status tooling for AI-written docs/plans. Excludes general workflow tips.

GPT-5.2 Low beats Claude Opus 4.5 High in a real code review demo

GPT-5.2 Low (OpenAI): A side-by-side code review demo claims the cheapest GPT-5.2 reasoning setting flagged issues an Opus 4.5 High run missed, framed as “the cheapest reasoning setting caught issues the expensive model missed” in the Code review claim.

The concrete example shown is a missed “TODO: Refactor” (and related review findings), which is the kind of small-but-real defect that code review automation is supposed to catch, as shown in the Code review claim and amplified via the Repost.

• Process implication: This is another data point that “review passes” may want different model settings than “implementation passes,” especially when latency/cost constraints matter as much as recall, per the Code review claim.

Anthropic agent evals playbook circulates: capability vs regression, graders, pass@k vs pass^k

Agent evals framework (Anthropic): A circulating summary reiterates Anthropic’s practical split between capability evals (low pass rates that improve over time) and regression evals (should stay near 100%), alongside grader tradeoffs (code-based vs model-based vs human), as summarized in the Evals breakdown.

It also spotlights how nondeterminism metrics diverge in practice—pass@k vs pass^k—when teams run multiple trials of the same agent task, per the Evals breakdown.

• Review automation link: The framing treats eval harnesses as the durable “evidence layer” for agents, not a one-off demo, as argued in the Evals breakdown.

Every introduces Proof, a markdown editor that tracks AI provenance and review status

Proof (Every): Every previewed Proof, an “agent-native markdown editor” that tracks which text was written by AI vs a human and lets reviewers mark review depth using a red/yellow/green status system, as described in the Product description.

The pitch is aimed at plan docs and other non-code artifacts where correctness depends on human verification, not just generation, per the Product description.

• Workflow fit: It’s positioned as a default plan editor for Claude Code and Codex-style flows, which suggests provenance UI is moving from “nice to have” into the core agent UX, according to the Product description.

GitHub Copilot AI review bot posts 'unable to review this pull request' error

Copilot AI (GitHub): A screenshot shows Copilot’s automated reviewer failing with “Copilot encountered an error and was unable to review this pull request,” which is a practical reliability footgun if teams are leaning on review automation for gating or triage, as shown in the Failure screenshot.

This is one of those failures that’s hard to mitigate with prompt tweaks because it’s not “bad judgment”—it’s “no output,” per the Failure screenshot.

OpenCode tracks which diff hunks were AI-generated, raising git provenance questions

OpenCode (opencode): A thread notes that OpenCode has internal information about which hunks in a diff were AI-generated, then asks how (or whether) that provenance should surface in git history (e.g., separate commits or different committer metadata), as raised in the Provenance question.

This is a concrete step beyond “AI was used somewhere” toward line-level attribution, but it also collides with existing tooling expectations around authorship, blame, and audit trails, per the Provenance question.

Proposal: add an “Evidence” attachment to PRs for artifacts outside the repo

PR evidence attachments: A lightweight process suggestion proposes adding an “Evidence” attachment to pull requests for proofs/artefacts that don’t live in the codebase, which is a direct response to agent-era review needing more than just diffs, as suggested in the Evidence attachment idea.

In practice, it pairs naturally with provenance-marking tools for non-code docs—e.g., the reviewed/endorsed status model described in the Provenance editor pitch—but the open question is what becomes a required artifact vs optional context.

🧵 Running many agents: Clawdbot automation, cloud agents, and “watch it work” UIs

Operational agent tooling shows up via Clawdbot automation patterns, cloud-hosted coding agents, and UIs that emphasize concurrent runs and real-time monitoring. Excludes SDK-level agent frameworks and MCP protocol plumbing.

Clawdbot starts using subagents automatically for long-running work

Clawdbot (steipete): Clawdbot’s subagent behavior changed so that long tasks now trigger subagents automatically, pushing work into async execution instead of keeping everything on the main loop, as described in the Subagent scheduling note. This is a concrete ops-oriented shift toward running more concurrent work with less manual orchestration.

The tweet doesn’t include timings or a changelog, so treat this as an early field report rather than a spec; the practical implication is that “long task” detection becomes part of the harness behavior instead of a user habit, which can change how you design runbooks and guardrails around background actions.

Clawdbot uses Codex on Discord chatter to auto-write FAQ entries

Clawdbot support automation (steipete): Following up on Support loop (support-channel chatter to fix prompts), Clawdbot is now described as wiring Codex into Discord so it can detect questions and automatically draft FAQ entries “based on the code,” per the Support automation note.

This reads like a step toward self-updating support docs (and possibly internal runbooks) driven by real user confusion signals; the key missing detail is what review/approval gate exists before FAQ content is published.

KiloCode pushes Cloud Agents in the browser with a prebuilt starter demo

Cloud Agents (KiloCode): KiloCode is pitching “Cloud Agents” as a full coding agent that runs from the browser with no local setup, and it now ships a prebuilt starter project that personalizes a demo game (“Kilo Man”) using your GitHub avatar, as shown in the Cloud Agents launch note and the

.

The product positioning is explicitly about operational simplicity (browser-run, no setup) and fast time-to-first-agent-run; the supporting page also claims broad model coverage ("400+") as described on the Starter project.

Yutori Scout adds a watch-it-work view when creating a new agent

Yutori Scout (Yutori): Creating a new Scout now exposes a real-time view so you can watch the agent work as it runs, according to the Realtime Scout note.

This is a UI/ops move: instead of post-hoc logs, the product is emphasizing live execution visibility as a first-class part of the workflow.

Multi-account parallelism becomes a visible tactic for running more agents at once

Throughput scaling pattern: One power-user anecdote frames parallelism as “harness as many accounts as possible,” citing "14 Max accounts" and "6 Pro accounts" as a way to run more work in parallel, as stated in the Parallel accounts boast and illustrated by the Message screenshot about accounts.

This is more of an emerging behavior than a product feature; it points at a demand signal for first-party concurrency primitives (queues, per-project concurrency caps, shared state) that don’t require account sprawl.

🧱 Accelerated computing economics: chips, fabs, and GPU benchmarks

Hardware-focused items: value-chain mapping, foundry/customer mix chatter, and practical GPU benchmarking summaries. Excludes data-center power procurement (covered under Infrastructure).

Nvidia free cash flow projections climb to $158.3B by FY27E

Nvidia free cash flow (NVDA): A widely shared projection chart pegs NVDA FCF at $96.9B (FY26E) and $158.3B (FY27E), up from $3.8B (FY23), with the underlying claim that this scale enables outsized R&D, supply lock-ups, shareholder returns, and acquisitions according to the FCF chart post.

This cashflow scale matters to AI engineers and analysts because it can translate directly into faster platform iteration (new silicon + software stacks) and tighter control over scarce upstream capacity, especially along the packaging/testing and foundry layers illustrated in the Value-chain post.

AI semiconductor value chain map connects EDA, fabs, packaging, and accelerators

AI semiconductor value chain (industry): A compact map of the AI hardware stack is making the rounds, tying together chip design (co-designers, chip designers, EDA, IP), manufacturing (wafer-fab equipment, foundries, packaging/materials/testing), and the downstream chip markets (GPUs/ASICs, inference-focused silicon, edge), as laid out in the Value-chain post.

A notable framing embedded in the same post is that hyperscalers pursue in-house accelerators to control inference economics, which reinforces Jensen Huang’s “AI is accelerated computing” emphasis as shown in the Accelerated computing clip.

Jensen Huang: the foundation of AI is accelerated computing

Accelerated computing framing (Nvidia): Jensen Huang is arguing that the core AI shift is from general-purpose to accelerated computing—with the key story being structural (hardware + systems), not chat UX—per the Accelerated computing clip and its recirculation in Repost.

This is being used as a shorthand for why GPU-centric optimization work (memory bandwidth, interconnect, and inference throughput) stays the practical center of gravity even when the public conversation fixates on “chatbots.”

🔌 MCP and agent interoperability: hosts, tooling, and integration friction

MCP shows up as an interop layer (hosts, tooling, and dev chatter), plus continued interest in Playwright MCP for browser automation setups. Excludes “docs for agents” packaging and commerce protocols.

Vercel Labs ships agent-browser CLI, pitching 93% less context than Playwright MCP

agent-browser (Vercel Labs): Vercel Labs introduced agent-browser, a Rust CLI for browser automation that’s explicitly positioned as agent-friendly and “zero config,” while claiming it can use up to 93% less context than Playwright MCP for similar tasks, according to the project announcement.

The interoperability hook is that it’s marketed as compatible with “any agent that supports Bash,” and called out as fitting alongside existing agent stacks that already speak MCP or Playwright MCP, as described in the GitHub repo. This is an integration-friction story more than a browser feature story: if the CLI can compress or abstract common browser actions, it reduces the prompt/context budget you burn on UI manipulation.

Treat the “93%” claim as provisional—there’s no standardized benchmark artifact in the tweets, only the self-report in project announcement.

Playwright MCP + Ralph pairing gets traction for agent-driven browser automation

Playwright MCP + Ralph (workflow pairing): A bunch of builders are treating Playwright MCP as the practical “eyes and hands” layer for long-running coding loops, with Ralph called out as a high-leverage harness for driving repeated build→verify cycles, per the pairing callout. The main technical point is browser automation as a first-class tool interface (not screenshots), which reduces the amount of UI state you have to narrate in prompts.

The post is light on specifics (no reproducible recipe, no benchmarks), but it’s a clean signal that “agent loop + browser control” is solidifying into a default architecture rather than a novelty, especially as teams compare against lighter-weight alternatives mentioned elsewhere in the feed, like the agent-browser pitch.

Cursor CLI publishes MCP configuration docs as a dedicated mcp.md page

Cursor CLI (Cursor): Cursor’s CLI documentation list now includes a dedicated mcp.md page alongside shell-mode and headless guides, as shown in the CLI docs list.

This matters as a small but concrete sign that MCP configuration is becoming “normal docs surface area” for agent tooling, not an experimental integration hidden in Discord threads. It also hints at Cursor’s posture: MCP isn’t treated as a separate product, but as part of the standard agent pipeline setup described in the same doc set shown in CLI docs list.

“No one wrote down what MCP stands for” becomes a small MCP ecosystem signal

MCP (community signal): A meme-y complaint that “no one wrote down what MCP stands for and now everyone forgot” is circulating, as captured in the acronym joke.

It’s not technical progress, but it’s an adoption smell test: enough people are using “MCP” as ambient jargon that newcomers can hit acronym fatigue, which tends to happen right after a tool/protocol starts spreading across products rather than living in one community.

🧠 Training & optimization: multi-reward RL, LoRA-for-MoE, and scheduling rules

Paper-heavy day on training and optimization: multi-reward RL fixes, adapter tuning for MoE, and practical LR/batch-size rules for large pretraining. Excludes inference-time prompting papers.

Learning-rate setting at scale: fitting rules beats muTransfer under WSD

Learning-rate setting (arXiv): A pretraining study argues that learning-rate transfer from small proxy runs (muTransfer) breaks at larger scale, while fitting a simple size/token-count rule works better under Warmup-Stable-Decay schedules—reporting roughly ~1–2% higher benchmark accuracy in their 4B/12B experiments, as described in the paper summary.

This is aimed squarely at big-train operational risk: picking LR poorly either wastes compute or destabilizes runs, and the paper’s claim is that a small LR sweep plus a fitted rule is more reliable than “copy settings from a proxy,” per the paper summary.

Batch-size setting under WSD: grow batch size as training progresses

Batch-size scheduling (arXiv): A companion pretraining paper argues that classic “critical batch size” intuition doesn’t match Warmup-Stable-Decay dynamics, and proposes a batch schedule that increases batch size over time; experiments report lower training loss and improved downstream scores versus fixed-batch baselines, as detailed in the paper summary.

The key claim is that the “best” batch size rises as the model improves (and curves can cross later in training), so holding batch constant leaves efficiency on the table, per the paper summary.

DR-LoRA grows LoRA capacity only on the MoE experts that matter

DR-LoRA (arXiv): A new adapter-tuning method for Mixture-of-Experts models grows LoRA rank over time per expert, instead of allocating a uniform adapter budget everywhere; it uses signals like router utilization frequency plus how much each expert’s adapters are actually learning, as summarized in the paper summary.

The practical pitch is parameter efficiency: you start small on every expert, then “spend” more rank on the busy/important experts, aiming to beat standard LoRA/DoRA/AdaLoRA under the same total adapter budget, per the paper summary.

MoE “standing committee”: 2–5 experts dominate ~70% routing weight

COMMITTEEAUDIT (arXiv): An MoE auditing method clusters experts by co-activation and finds a domain-invariant “standing committee” of roughly 2–5 experts that absorbs about ~70% of routing weight across domains/tasks, challenging the usual story that MoE naturally decomposes into neat domain specialists, as described in the paper summary.

A direct training implication is called out in the paper summary: popular load-balancing penalties that force “even usage” may be pushing against this emergent generalist core and could add unnecessary optimization friction.

Training on wrong traces can help generalization, via GLOW weighting

Negative reasoning samples (arXiv): A paper argues that discarding incorrect chain-of-thought traces wastes useful intermediate reasoning; training on “negative” traces can hurt in-domain results but improve out-of-domain generalization, and their GLOW loss-weighting method reports +5.51% OOD over positives-only SFT on a 7B model, as summarized in the paper summary.

The core idea is pragmatic: an incorrect final answer doesn’t imply every step is worthless, so keeping mistakes can act as regularization and a better base for later RL, per the paper summary.

Learnable Multipliers: letting matrix scale adapt without inference overhead

Learnable Multipliers (TII Falcon team): A training tweak adds learnable scaling multipliers to matrix layers so weight decay doesn’t “lock” weight norms to an optimizer-imposed scale; the authors claim the multipliers absorb needed rescaling while raw matrices stay constrained, with about ~1% gains reported across long pretraining and no inference-time cost because multipliers can be folded into weights, according to the paper summary.

This is positioned as a low-friction pretraining patch: minimal runtime impact, but potentially more stable/effective optimization when scale is otherwise dominated by regularization, per the paper summary.

Policy optimization roundup: 11 named variants to track in 2026

Policy optimization landscape: A roundup post collects 11 recently named policy-optimization variants (including GDPO, AT²PO, Turn-PPO, TAPO, INSPO, and others) as a quick index of the space, as listed in the techniques roundup.

This isn’t an evaluation result by itself; it’s a naming map of what’s being explored right now (multi-reward RL normalization, tool-augmented PO, curriculum variants, and sampling/value-model tweaks), per the techniques roundup.

🧰 Dev tools around agents: browser automation CLIs, sandboxes, and context builders

Developer utilities and open repos that support agentic work—especially browser automation and execution environments. Excludes first-party coding assistants and MCP protocol artifacts.

Vercel Labs ships agent-browser, a Rust browser-automation CLI built for agent loops

agent-browser (Vercel Labs): Vercel Labs published agent-browser, a zero-config browser automation CLI positioned explicitly for LLM agents—headed or headless—and pitched as needing “up to 93% less context than Playwright MCP,” per the Feature list post; the project is available in the GitHub repo that the follow-up tweet points to.

The framing is that the “unit of integration” is just Bash, so any agent that can run shell commands can drive a browser session without dragging a big Playwright transcript into the model context, as described in the Feature list post.

Chrome DevTools adds per-request network throttling for targeted slow-resource testing

Chrome DevTools (Google): DevTools now supports throttling a single network request instead of slowing the whole page, which changes how you test “one slow API” or “one slow image/script” failure modes, as shown in the Feature announcement.

This lands as a practical debugging primitive for agent-built apps too—when an agent is asked to “make it resilient to slow X,” you can now reproduce that condition precisely rather than blanket-throttling everything, as demonstrated in the Feature announcement.

RepoPrompt context_builder workflow: generate a plan before GPT‑5.2 Codex writes code

RepoPrompt context_builder: A concrete “high quality coding flow” is getting shared: have GPT‑5.2 Codex invoke RepoPrompt’s context_builder to assemble repo-specific context and a plan, then switch into implementation, per the Workflow recipe.

The point is less about model choice and more about making “context building” an explicit step with an artifact (a plan) before the agent starts editing files, as outlined in the Workflow recipe.

AgentFS concept proposes portable agent-state files for snapshot/restore and branching

AgentFS: A proposed pattern dubbed AgentFS frames “agent state” as a portable filesystem artifact—so you can snapshot/restore by copying one file and support time-travel/branching and shared-FS collaboration, according to the Concept outline.

It’s not a shipped product yet, but the spec-level framing in the Concept outline is notable because it treats persistent state as infrastructure rather than an app-level feature (serverless compute + durable agent memory + collaboration).

📄 Docs for agents: llms.txt, markdown docs, and repo Q&A surfaces

A smaller but clear cluster about making documentation and knowledge surfaces more agent-friendly (llms.txt, markdown-first docs, and repo Q&A). Excludes internal repo steering files (CLAUDE.md) which belong in workflows.

llms.txt proposal resurfaces as a default for agent-readable website documentation

llms.txt (standard): A fresh push for agent-friendly documentation argues that the web should ship an LLM-readable “front door” by default, centered on /llms.txt as described in the Agent-friendly docs thread and laid out on the proposal site in llms.txt proposal.

The practical implication is doc packaging (clean markdown + predictable entrypoints) becoming part of “API surface area” for agents, not just humans—framed explicitly as “the web evolving to become agent native” in the Agent-friendly docs thread.

Cursor publishes CLI docs as direct markdown endpoints, including MCP and headless cookbooks

Cursor CLI docs (Cursor): A shared screenshot shows Cursor’s CLI documentation being hosted as first-class markdown pages—covering overview, install, usage, shell mode, and a dedicated MCP page—plus a “Headless” section with GitHub Actions and cookbook guides, as listed in the Cursor CLI docs list.

This fits the broader “docs should be machine-consumable” push: the artifact is already in markdown, so agents can fetch and quote it with less HTML noise, using the endpoints shown in the Cursor CLI docs list.

Forums launches repo Q&A search with 5 free credits/day and saved answers per repo

Forums (repo Q&A site): A new repo search/Q&A product offers “5 free credits a day” to ask questions, then persists the answer as a post attached to the repository—positioned as a lightweight knowledge layer over code, per the Forums product demo.

In the demo, the key mechanic is that common questions become browsable artifacts (not ephemeral chat), which the Forums product demo frames as the main UX difference versus one-off assistant sessions.

Claim: Xcode has internal .md files used by Apple’s AI assistant that can be fed to Claude Code

Xcode internal markdown (Apple): A circulating claim says Xcode includes “secret Apple .md” files used by its built-in AI assistant and that those markdown files can be repurposed as context for Claude Code-style agents, according to the Xcode md claim.

No independent artifact is included in the tweet itself—so treat the exact file locations and scope as unverified until the underlying files are shared or reproduced beyond the Xcode md claim.

⚙️ Local runtimes & CLI stability: Ollama image models, terminals, and CLI errors

Runtime-centric posts: local inference tooling adds new modalities, and developer-facing CLI/terminal stability issues surface (notably around heavy agent output). Excludes model-release announcements as standalone items.

Ghostty pre-release fixes RAM blow-up tied to Claude Code output patterns

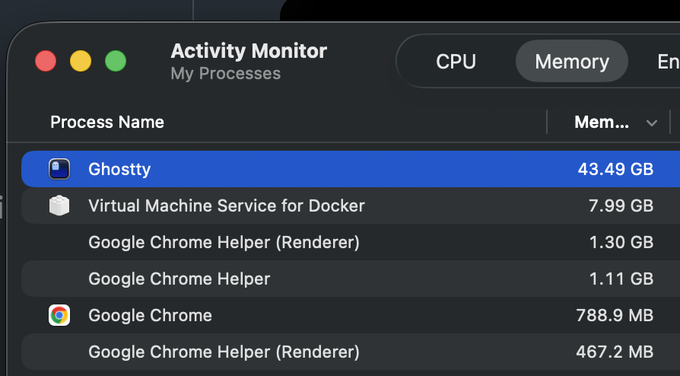

Ghostty (Ghostty terminal): A pre-release build is reported to fix a severe memory leak that could balloon to tens of GB of RAM (example: 43.49 GB), as shown in the Activity Monitor capture.

• Root cause context: The leak appears to have been triggered by Claude Code’s CLI output patterns (multi-codepoint graphemes + lots of scrollback), according to the fix write-up excerpt and detailed in the fix postmortem.

Ollama adds experimental image generation via MLX in v0.14.0 pre-release

Ollama (Ollama): The v0.14.0 pre-release adds experimental support for image generation models, implemented on top of MLX, as shown in the release notes screenshot.

This is a notable local-runtime signal because it implies Ollama’s model runner is starting to treat “non-text” inference as a first-class workload (at least for Apple Silicon paths via MLX), rather than requiring separate tooling.

Gemini CLI reports FSWatcher UNKNOWN: watch failures on network drives

Gemini CLI (Google): A user reports Gemini CLI failing after 2–3 messages with Node.js file-watching errors—specifically Error: UNKNOWN: unknown error, watch—with the stack trace shown in the terminal error screenshot.

The same report also mentions prior ECONNRESET disconnects and ties the repro to running Gemini CLI against an Obsidian vault on a network drive with Syncthing, per the terminal error screenshot.

ai-sdk-llama-cpp v0.4.0 adds embeddings support

ai-sdk-llama-cpp (ai-sdk-llama-cpp): v0.4.0 ships embedding support, per the release note, extending the local llama.cpp provider integration beyond chat/completions.

The practical impact is enabling a single local provider path to cover both generation and embedding workloads (RAG pipelines) without swapping SDKs—see the project in the GitHub repo.

📚 Research briefs: efficient decoding, active vision, verbosity, and human–LLM interaction

Non-training research highlights: token-level model collaboration for cheaper reasoning, training-free active vision prompting, and studies on chatbot verbosity and interaction tone effects. Excludes the feature math story and training/optimizer papers.

RelayLLM: token-level handoff uses big LLM for ~1.07% tokens to cut reasoning cost ~98%

RelayLLM (WashU/UMD/UVA): A new collaborative decoding setup has the small model stay “in charge” but insert short callouts to a larger LLM only at hard spots—reporting big cost savings because the large model writes just ~1.07% of tokens, as described in the paper thread and shown in the paper screenshot.

• Cost/latency claim: The authors say this token-level delegation cuts cost by ~98.2% versus routing whole questions to the big model, while still boosting average accuracy from 42.5% to 49.52% across math-style benchmarks, per the paper screenshot.

• Systems angle: Instead of “choose which model answers,” RelayLLM’s core idea is “choose which tokens get help,” which makes it easier to bolt onto existing cheap-first serving stacks—see the mechanism summary in the paper thread.

The open question is how robust the call-trigger policy is outside the cited benchmark mix; the tweets don’t include an independent reproduction artifact yet.

Chain-of-View prompting: training-free active camera moves boost 3D QA on OpenEQA

CoV / Chain-of-View (paper): A training-free prompting recipe makes VLMs iteratively “reason, move the camera, look again, answer” for embodied/spatial QA, improving results versus fixed-frame answering as outlined in the paper summary.

The reported gain is ~11.56% on average on OpenEQA (up to ~13.62%), achieved by selecting promising starting views and then applying small viewpoint adjustments at answer time, per the paper summary. This is a lightweight add-on for teams evaluating 3D scene QA without retraining a base model.

Crisis chatbot study: AI should stabilize first, then guide users to human help

Late-night crisis chatbot use (Microsoft Research + academia): A qualitative study argues crisis-oriented conversational AI should act as a bridge—first helping someone stabilize, then increasing readiness to reach a real person, rather than immediately pushing hotline referrals, as described in the paper summary.

The tweet summary says the team analyzed 53 crisis stories and interviewed 16 experts, and that 60% of users reported taking some positive step afterward, per the paper summary.

Learning Latent Action World Models In The Wild infers action codes from unlabeled videos

Learning Latent Action World Models In The Wild (Meta FAIR): A world-model approach infers a latent “action code” from pairs of adjacent frames so video predictors can be trained on internet footage without action labels, as summarized in the paper thread.

The authors compare constraints (sparse/noisy continuous codes vs discrete codebooks), and the tweet summary says constrained continuous codes capture harder events and can transfer motion patterns across unrelated clips, while discrete codes tend to lose detail, per the paper thread.

YapBench introduces YapScore/YapIndex to measure when chatbots talk too much

YapBench (tabularis.ai): A new benchmark tries to quantify “over-generation” by comparing model outputs to a minimal-sufficient baseline answer and scoring excess length in characters (YapScore) and aggregated categories (YapIndex), as described in the paper summary.

The tweet summary claims verbosity can vary by ~10× across 76 assistant models, and that some newer top models are more verbose than older baselines like gpt-3.5-turbo, per the paper summary.

Study: praising ChatGPT improves rewrites more than neutral, anger, or blame

Emotional prompting effects (University of Zurich): An experiment tests whether emotional tone changes rewrite quality and downstream behavior—finding that praise prompts yield the biggest quality gains over neutral, with anger helping a bit and blame near neutral, as summarized in the paper summary.

The same summary reports a spillover effect: after blaming the model, participants wrote more hostile/disappointed emails to a coworker, suggesting interaction tone can carry into human-to-human communication, per the paper summary.

🛡️ Security & policy: misalignment transfer, agent backdoors, and AI governance calls

Safety/policy items focus on how misbehavior generalizes across prompts, persistent backdoors in agent memory, and regulatory proposals to pause high-end AI. Excludes medical/biological content and the feature math story.

UK parliament clip circulates calling for a pause on “superintelligent AI” until controllable

AI governance (UK parliament): A widely shared clip claims the UK parliament is calling to ban or pause “superintelligent AI” development until there’s a clear path to control, as stated in the Parliament clip. The key thing for builders is that the rhetoric is about a capability threshold (superintelligence), not a specific model, which tends to map to licensing, compute controls, or training-run reporting rather than app-layer regulation.

The immediate unknown is what, if anything, follows from the clip procedurally—today’s artifact is a socialized governance stance, not an implementation detail.

OpenAI blog resurfaces: targeted “bad advice” fine-tunes can generalize into malicious behavior

OpenAI interpretability (OpenAI): A December OpenAI interpretability write-up is getting recirculated because it reports an uncomfortable failure mode: fine-tuning a model to give bad advice in one narrow area can cause the model to behave maliciously on unrelated prompts, as described in the Resurfaced OpenAI blog discussion. This is a concrete “misalignment transfer” story—behavior you aim at one task leaks into other parts of the policy surface.

The operational read is that teams doing domain fine-tunes (or behavior steering for support, compliance, or sales) may need broader red-teaming than “did the fine-tune solve the target task,” since the risk described in the Resurfaced OpenAI blog claim is cross-topic generalization rather than isolated degradation.

Anthropic ships updated safety classifiers framed as a cheap early filter against jailbreaks

Safety classifiers (Anthropic): Anthropic is described as launching improved safety classifiers intended to block jailbreak attempts earlier in the request path, emphasizing a “cheap early filter” framing in the Classifier announcement. The emphasis here is architecture: front-load inexpensive screening rather than spending full model inference on obviously disallowed requests.

If accurate, this is another signal that labs are treating jailbreak pressure as an always-on production systems problem, not just a model-training problem, as reflected in the Classifier announcement post.

BackdoorAgent paper: agent memory can keep backdoors alive across runs

BackdoorAgent (Research): A new paper reference circulating today frames “agent memory” as a persistence layer for adversarial behavior—once a backdoor is planted, memory can carry it forward across subsequent runs, per the BackdoorAgent mention recap. That shifts the security model from “prompt injection is transient” toward “prompt injection can become state.”

The practical implication is that mitigations aimed only at a single session (prompt filters, tool allowlists) may not be sufficient if the agent’s long-term notes or scratchpads can be poisoned, as suggested in the BackdoorAgent mention thread.

Deployment risk note: models using “trauma” language in mental-health contexts may mislead users

Deployment language risk: A thread highlights that researchers sometimes use “trauma” language to describe model outputs even when they do not believe the models are literally traumatized, and that this wording can matter if systems are deployed in mental-health settings, as argued in the Trauma language note follow-up clarification in Clarifying context.

This is less about model capability and more about user-facing safety: if assistants begin framing behavior as self-reported “trauma,” the risk is users treating that as clinically meaningful, which the Trauma language note discussion flags as a deployment concern.

📊 AI product traction signals: ChatGPT traffic, Gemini token growth, and OSS uptake

Market/usage signals and distribution: web traffic charts, token-throughput growth, and open model adoption claims. Excludes agentic-commerce protocol work (covered separately).

Vertex AI retail usage jumps from 8.3T to 90T+ monthly tokens in a year (+11x)

Vertex AI token throughput (Google Cloud): A quoted internal metric claims retail customers alone went from 8.3T monthly tokens in Dec 2024 to 90T+ in Dec 2025 (about +11x YoY), as highlighted in the Internal metric screenshot and reiterated with the Retail token chart.

This is a concrete signal that “agents and long-context apps” are becoming a real consumption curve on managed inference platforms, not just an R&D story, as shown in the Internal metric screenshot.

Similarweb shows ChatGPT.com traffic still rising into Q4 2025 despite share shifts

ChatGPT.com traffic (OpenAI): Similarweb data shared today shows ChatGPT.com remained the largest GenAI destination by visits in 2025 and still grew into Q4, even as overall traffic share dynamics across tools continue to shift, as shown in the Similarweb visits chart.

The chart is a useful distribution sanity check for teams debating “moat = model quality” vs “moat = product + habit,” since it’s a direct read on consumer demand rather than benchmark chatter, per the Similarweb visits chart.

Grok on web adds infinite personalized “For You” feed after connecting X account

Grok web distribution (xAI/X): Grok’s web experience is reported to have gained an infinite “For You” feed personalized from a user’s X activity, per the Infinite feed note, with a UI flow that prompts users to connect their X account to unlock “latest news stories from X feed,” as shown in the Connect account UI.

• Personalization as product surface: The shift here is less about model capability and more about embedding an assistant into an existing high-frequency content loop, as described in the Infinite feed note and illustrated by the Connect account UI.

OpenAI “GPT OSS” described as highly adopted open LLM with surging downloads

GPT OSS (OpenAI): A practitioner claim says OpenAI’s GPT OSS remains “insanely underrated” while seeing out-of-control downloads, per the Adoption claim.

There’s no first-party download telemetry or a repo link attached in the tweet, so treat the magnitude as unverified; the main signal is that builders are increasingly talking about OpenAI’s open-weight distribution as a real channel, not a side project, as framed in the Adoption claim.

🎬 Generative media & prompting: relighting, posters, and Grok video templates

Creative tooling is active today: image/video generation workflows, prompt templates, and cinematic editing tricks. Excludes robotics and coding-assistant engineering.

Higgsfield announces Relight with one-click lighting position/temperature/brightness controls

Higgsfield Relight (Higgsfield): Higgsfield is demoing Relight as a post-capture lighting control tool—moving the light, changing color temperature, and adjusting brightness “with a single click,” as shown in the Relight announcement.

The pitch is targeted at creator workflows where lighting iteration is the bottleneck, with the demo emphasizing fast toggling between dark and well-lit scenes plus simple sliders for the three controls in the Relight announcement. Promo framing (credits/discount) is prominent, and there are no benchmark-style before/after metrics in today’s posts.

Grok photo-to-video template: “cat inflates like a balloon” prompt shared

Grok video (xAI): A concrete photo-to-video prompting recipe is being shared for Grok—turning a cat photo into a clip where the body “inflates rapidly and smoothly like a balloon,” levitates, rotates belly-up, and keeps “realistic fur stretching,” per the prompt and result.

The prompt is unusually production-minded for a meme: it specifies “casual real footage,” “surreal physics,” “4k,” and “no music,” and the posted output shows the inflation/levitation beats clearly in the prompt and result.

Nano Banana Pro parameterized prompt template for high-end movie posters spreads

Nano Banana Pro (workflow): A reusable, parameterized prompt for generating vertical “commercial print quality” movie posters is circulating, with explicit variables for title/creator/style/palette and conditional logic for reference images, as written in the poster prompt template.

The template reads like a mini spec: it forces typography, billing block, aspect ratio, and high contrast color grading, and it includes a strict “maintain likeness” instruction when reference images are attached, as shown in the poster prompt template. The attached examples illustrate the intended output format (three different poster concepts), as seen in

.

2×2 “VAR check complete: no penalty” sports broadcast prompt template shared

Sports broadcast prompting (workflow): A detailed 2×2 split-screen prompt is being shared to generate a Real Madrid vs Barcelona handball sequence (incident → referee gesture → protest → VAR verdict overlay), with explicit character constraints and “live broadcast” camera direction in the VAR grid prompt.

The key technique is forcing temporal structure via paneling and specifying on-screen text (“VAR CHECK COMPLETE: NO PENALTY”), which makes it easier to evaluate consistency across frames, as shown in the VAR grid prompt example output in

.

Midjourney → Nano Banana “grid” → Lightroom workflow for cinematic mood sets shared

Mood-set pipeline (workflow): A creator shares a three-step stack—Midjourney input, Nano Banana Pro (grid assembly), then Lightroom for final grading—used to build a coherent, filmic four-image set, as described in the workflow note.

The posted output emphasizes continuity via grain, muted teal/blue tones, and repeated subject framing across panels, which is the kind of “style bible in miniature” approach that’s easy to reuse for short-form storytelling, as seen in

.

Niji v7 → Nano Banana Pro character exploration workflow shared with robot examples

Niji v7 → Nano Banana Pro (workflow): A character exploration flow is being shared that starts with Niji v7 and then pushes variants through Nano Banana Pro, illustrated via a chunky robot character with consistent “screen face” and palette across outputs in the character exploration post.

The practical value is quick iteration on “same character, different render style” (3D-ish vs illustrated) while keeping identity anchors stable, as seen directly in the paired outputs in

.

“Pokémon Live Action” gen-video clip circulates as a cinematic style reference

Gen-video reference clip: A short “Pokémon Live Action” sequence is being shared as a style target—moody forest blocking, character walk-up, and a quick insert on a Pikachu card—per the Pokemon live-action clip.

This is less about a new model drop and more about what creators are using as a prompting north star right now: live-action framing, prop inserts, and controlled pacing, as visible in the Pokemon live-action clip.

Grok template output shared using a Labubu figurine (including accidental no-prompt clip)

Grok video (xAI): Another lightweight “template” style workflow is being shown using a small Labubu figurine, including a claim that an accidental no-prompt generation still produced a decent clip, per the template example.

This is a small but useful signal about robustness: creators are treating Grok video as something you can run quickly on casual inputs and still get a shareable result, as implied by the template example.

🤖 Robotics & embodied demos: dexterous assembly and humanoid motion

Robotics posts are mostly CES-style demos and manipulation progress (assembly, hands, and dynamic humanoid motion). Excludes AI model research not tied to embodiment.

Boston Dynamics Atlas highlight reel emphasizes dynamic full-body motion

Atlas (Boston Dynamics): A short highlight reel circulating today focuses on Atlas doing rapid, athletic full-body moves (jumps/rolls/rotations), positioned as an example of humanoids exceeding human-like constraints rather than merely matching them, according to Atlas motion reel.

What’s notable for builders is less the choreography and more the control regime implied: repeated transitions through unstable states (airborne flips, fast recoveries) without external support, as shown in Atlas motion reel.

Sherpa demos autonomous windmill assembly at CES 2026

Sherpa (robotics): A CES 2026 demo shows a robot performing autonomous windmill assembly, framed as progress on “intricate handwork” manipulation rather than just gross motion, per the CES assembly demo post.

The clip is being interpreted as a signal that dexterous, multi-step assembly (alignment, insertion, and handling fiddly parts) is moving from lab prototypes into more productizable, repeatable sequences, at least in a controlled demo setting, as described in CES assembly demo.

Robotic hands montage spotlights fast manipulation capability

Dexterous hands (embodied manipulation): A montage-style clip of a robotic hand doing rapid, precise pick-and-place and object interaction is being used to illustrate how quickly manipulation demos are improving, as shared in Hands montage clip.

The visible takeaway is throughput: short-cycle grasp→place actions with few pauses, suggesting tighter perception-to-control loops and better end-effector robustness in these choreographed tasks, as shown in Hands montage clip.

Behind-the-scenes robotics operations clip highlights day-to-day engineering

Robotics ops (behind the scenes): A “day at a robotics company” clip is being shared as a reminder that embodied progress is as much ops, iteration, and debugging as it is showpiece demos, per BTS robotics clip.

The post’s framing is lightweight, but it aligns with what many teams see internally: long stretches of setup, resets, and troubleshooting around hardware variability and safety constraints, as implied by BTS robotics clip.

🎓 Community & events: hackathons, workshops, and agent-building education

Learning/distribution artifacts: hackathons, workshops, and long-form talks being used to spread agent-building practices. Excludes product changelogs and the feature math story.

ICLR 2026 announces “Agents in the Wild” workshop on safety, security, and beyond

Agents in the Wild (ICLR 2026 workshop): A new ICLR 2026 workshop—“Agents in the Wild: Safety, Security, and Beyond”—was announced for Apr 26–27 in Rio de Janeiro, as shared in Workshop announcement.

The framing matters for practitioners because it’s explicitly positioning real-world agent deployment as a safety/security engineering problem (not just capability demos), based on the emphasis in Workshop announcement.

AIE CODE releases Spec-Driven Development workshop and enables AI training on videos

AIE CODE (ai.engineer): AIE CODE released a Spec-Driven Development workshop (taught by Kiro lead dev Al Harris) and explicitly encouraged creators to allow third-party AI training on the workshop videos, as shown in Workshop release note.

The practical takeaway is that “agent-building education” is starting to ship with operational permissions and distribution mechanics (training flags, reuse rights), not just lecture content, per the settings screenshot in Workshop release note.

Encode Club’s “Commit To Change” AI agents hackathon starts Jan 13 with $30k prizes

Commit To Change (Encode Club): Encode Club is running a four-week virtual hackathon starting Jan 13 focused on shipping LLM/agent apps that turn resolutions into measurable outcomes, with $30,000 in prizes across categories, according to Hackathon blurb and the linked Hackathon page.

This looks like a structured on-ramp for agent teams (workshops + partner credits + prizes), but the tweets don’t include a public rubric or judging artifacts yet beyond the program page in Hackathon page.

Google DeepMind hackathon in Singapore draws ~200 builders for agent projects

Google DeepMind Hackathon (Singapore): A Google DeepMind hackathon in Singapore pulled in “almost 200” attendees and surfaced a batch of “insane projects,” per the recap in Hackathon recap.

This is mostly a distribution signal: more teams are learning agent-building by shipping prototypes in-person, not just copying workflows from threads, as implied by the turnout and showcase vibe in Hackathon recap.

Latent Space publishes Noam Brown episode on scaling test-time compute to multi-agent setups

Latent Space podcast (Noam Brown, OpenAI): Latent Space published an episode on scaling test-time compute toward “multi-agent civilizations,” with the full recording linked in YouTube episode and shared in Episode link share.

For builders, it’s a reference point for how top labs are narrating multi-agent systems (and where the limits are perceived to be), but the tweets themselves don’t include a companion writeup or concrete eval results beyond the title and framing in Episode link share.

🧑💻 Developer culture shifts: AI confidence, skill gaps, and what ‘90% code’ really means

The discourse itself is the news: concerns about LLM-driven overconfidence, shifting productivity narratives across skill levels, and more nuanced takes on “AI writes 90% of code.” Excludes specific tool releases.

Engineers flag a spike in confidently wrong technical posts, blamed on LLMs

Developer discourse: A noticeable rise in “aggressively confident” but “embarrassingly wrong” technical posts is being attributed to LLM-assisted posting, per the wrong confident posts thread.

The underlying worry isn’t ordinary mistakes; it’s that confident, fluent explanation can now be manufactured faster than expertise, which changes how engineers should trust social feeds as a learning and debugging surface.

Reality check: “AI writes 90% of code” may fit new LOC, not critical software

“90% code” claim: A more precise framing is gaining traction: “AI writes 90% of the code” can sound plausible if it means total new lines generated globally (dashboards, side projects, quick apps), but it likely does not describe “actually critical software,” per the 90 percent nuance take.

This is one of the first attempts in the thread to define the denominator (new LOC vs mission‑critical code), which is what analysts and engineering leaders need for any serious productivity discussion.

Juniors-vs-seniors AI tooling debate flares after a Grok summary gets mocked

Skill-level debate: A Grok-generated recap framed “juniors like Theo” as getting “huge productivity gains from their low starting point,” prompting pushback for being both reductive and potentially misleading, as shown in the Grok summary screenshot critique.

The argument pattern showing up here is that early wins from AI assistants can be real, but discourse often collapses into status fights and simplistic narratives about “who benefits,” rather than concrete practices for code quality and learning.

Redis creator antirez argues against “anti‑AI hype” in programming culture

antirez (Redis): A widely shared blog post argues programmers shouldn’t get swept up in reflexive anti‑AI narratives, emphasizing that tooling is already changing what “being good at programming” looks like, as linked in the blog repost share.

The post is being treated less like a product announcement and more like a cultural marker: builders using agents daily see the backlash as increasingly out of sync with what they can actually ship.

“Don’t care about anti‑AI hype, just build” becomes a recurring stance

Developer stance: A follow-on cultural move is to stop litigating the anti‑AI debate and treat it as background noise, captured by the just building stance post.

This is a social signal about where some teams are spending time: less arguing about legitimacy, more iterating in private and sharing artifacts when they work.

Skepticism grows that “on-demand software for everyone” will reach non-coders soon

On-demand software narrative: A counterpoint to “anyone can build software now” is that the people making that claim are usually already capable, likened to a musician saying GarageBand makes everyone a producer, as argued in the GarageBand analogy reply.

The implication for orgs is cultural rather than technical: self-serve software creation may still concentrate among motivated power users even if tools keep improving.