Meta buys Manus AI for $1B+ – agents automate 2.5% work

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Meta is buying Manus AI in a deal investors peg above $1B, up from a ~$500M valuation earlier this year; Manus brings ~$100M ARR on a $125M revenue run rate, ~147T tokens processed and 80M+ “virtual computers” spun up since launch. The team will join Meta while continuing to run its subscription agent service from Singapore; commentators frame the acquisition as Meta paying for a hardened, high‑volume agent harness to close the gap between strong Llama models and a relatively thin Meta AI product surface, at a price well below recent $6.6B–$20B private valuations for other AI firms.

• Automation ceiling and evals: Meta’s new Remote Labor Index benchmark shows Manus‑class agents fully automating only ~2.5% of real remote‑work contracts, ahead of Grok and GPT‑5 but still near the floor; AISI’s Frontier AI Trends report and METR’s Opus 4.5 results converge on task horizons doubling every ~8 months even as broad job automation remains modest.

• Speed and math reasoning: Tencent’s WeDLM‑8B‑Instruct diffusion LM targets 3–6× faster math decode than Qwen3‑8B while AgentMath’s 30B tool‑augmented stack hits 90.6% on AIME24.

Together, Meta’s Manus bet and RLI numbers crystallize a tension: rapidly lengthening agent horizons, heavy infra and capital commitments, yet only slivers of real remote work automated so far.

Top links today

- AgentReuse plan caching for LLM agents

- AgentMath tool-augmented mathematical reasoning framework

- LongShOTBench and LongShOTAgent long video benchmark

- CulturalToM-VQA cross-cultural vision theory-of-mind benchmark

- AI-driven hiring decision multi-agent system paper

- AI Needs Physics perspective on grounded models

- Mindscape-Aware RAG for long context understanding

- FLUX.2 dev Turbo open-source image model weights

- code-chunk AST-aware code chunking NPM library

- FaithLens hallucination detection and explanation GitHub repo

- DocETL open-source unstructured data analysis toolkit

- Weaviate release with TTL and multimodal embeddings

- KAT-Coder-Pro V1 coding plan details

- Epoch AI report on decentralized AI training

- JuliusAI SQL assistant table mention feature update

Feature Spotlight

Feature: Meta buys Manus to fast‑track AI agents

Meta acquires Manus to accelerate consumer/business AI agents. Manus keeps subscriptions, brings a mature agent harness (RLI 2.5%), ~$100M ARR and 80M virtual computers—giving Meta a credible path to productize agents fast.

Cross‑account, high‑volume story. Multiple posts confirm Meta acquired Manus; threads detail ARR, product continuity, and agent benchmarks. Focus is Meta’s agent strategy shift; excludes other business items below.

Jump to Feature: Meta buys Manus to fast‑track AI agents topicsTable of Contents

🤝 Feature: Meta buys Manus to fast‑track AI agents

Cross‑account, high‑volume story. Multiple posts confirm Meta acquired Manus; threads detail ARR, product continuity, and agent benchmarks. Focus is Meta’s agent strategy shift; excludes other business items below.

Meta buys Manus AI to plug its agent product gap

Manus AI acquisition (Meta): Meta has acquired Manus AI in a deal investors describe as costing more than $1B, up from a roughly $500M valuation earlier in 2025, to bring a mature agent product into what critics call a thin Meta AI product surface valuation context. The Manus team will join Meta while continuing to operate its subscription service and infrastructure from Singapore, with both sides emphasizing product continuity for existing web and app customers in early statements acquisition summary and testingcatalog brief.

• Growth metrics: Manus is reported at about $100M in annual recurring revenue and a $125M total revenue run rate roughly nine months after launch, which commentators note is unusually fast for an agent product with significant infrastructure costs acquisition summary.

• Usage scale: Since launch, Manus agents have processed around 147 trillion tokens and powered more than 80 million "virtual computers" (ephemeral agent workspaces), giving Meta a heavily exercised harness for complex, stateful workflows usage metrics.

• Strategic fit: Commentators frame the deal as Meta buying the “cheapest, most aligned” way to close the gap between its strong Llama model family and weaker consumer-facing AI experiences, contrasting the price with private valuations like Perplexity at $20B, ElevenLabs at $6.6B, and Manus itself at ~$500M earlier this year valuation context and ecosystem reaction.

The acquisition signals Meta is prepared to purchase, not only build, its way into the emerging market for AI agents that execute multi-step workflows for both individual users and enterprises.

Meta’s Remote Labor Index shows Manus-style agents automate only 2.5% of remote work

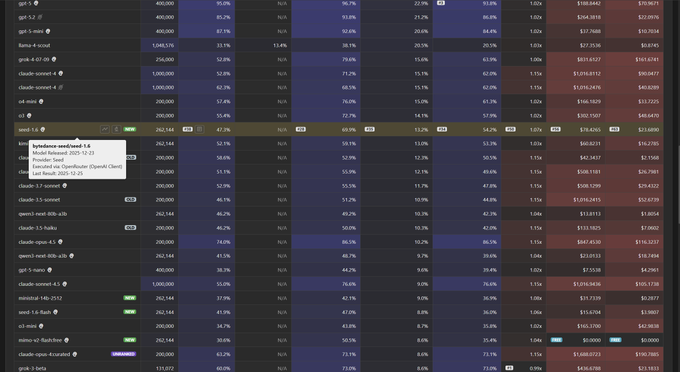

Remote Labor Index (Meta/Manus): Meta researchers have introduced the Remote Labor Index (RLI), a benchmark that measures how much of real-world remote work current AI agents can fully automate, with Manus currently leading at only 2.5% successful task automation rli benchmark. The benchmark card compares Manus to Grok 4 and Sonnet 4.5 at 2.1%, GPT‑5 at 1.7%, and other agents below that, while stressing that all systems remain near the floor of the metric despite saturating many traditional evals

.

• Benchmark framing: RLI is built from real commissioned remote-work projects and scores whether an agent’s output would be accepted as commissioned work, leading the authors to conclude that “frontier agents remain far from automating real remote-work projects” even when they look strong on synthetic benchmarks rli benchmark.

• Trajectory signal: The write-up notes that models are steadily improving on RLI and that its low but measurable automation rates are meant as a common yardstick for tracking how fast agent capabilities move from toy tasks toward economically meaningful work inside Meta’s ecosystem rli benchmark.

For teams watching Meta’s Manus acquisition, RLI provides a first quantitative glimpse of how Manus-class agents perform on realistic contract-style jobs and how much headroom remains before broad remote-work automation becomes visible in practice.

🧑💻 Agentic coding in practice: memory, mobile and planning

Mostly hands‑on dev posts: Claude Code memory, Cursor plan/parallel reads, mobile workflows, data/Excel skills. Excludes Meta–Manus acquisition (feature).

claude-mem gives Claude Code a local, persistent memory layer

claude-mem plugin (Community): A new "claude-mem" plugin wraps Claude Code with a local memory service so Opus 4.5 can recall project details across sessions, storing summaries on disk instead of relying only on live context windows, according to the maintainer’s demo and notes in the plugin overview.

• Local LLM-powered memory: The plugin tracks files, decisions, and conventions in a local database backed by an LLM so Claude can rehydrate context for future edits without re-reading the whole repo each time, as explained in the GitHub repo.

• Titans framework integration: There is an open PR to merge the Titans memory framework from GDM, which would standardize memory schemas and make it easier to reuse memories across different agents that share the same project, mentioned in the framework mention.

The pattern pushes Claude Code closer to an IDE-native teammate that remembers past work rather than a stateless chat window.

Cursor’s plan mode plus parallel file reads anchor multi-agent coding workflows

Plan mode & reads (Cursor): Cursor 2.3’s combination of plan mode and parallel file reads is being used to hand off dozens of granular TODOs to separate agents, with one engineer describing how Opus 4.5 generated 42 detailed tasks to audit a Tailwind v4 theme and Cursor’s agent farm chewed through them while its parallel file scanning kept latency low Tailwind audit.

• Planning vs speed tradeoffs: In a separate experiment, a developer compared "plan" behavior across Composer, Claude Sonnet 4.5, and GPT‑5 High; Composer planned fastest, GPT‑5 scanned codebases quickly, but Sonnet 4.5 asked clarification questions, enumerated files, and drafted prompts before writing code, which they framed as higher planning quality even if slower planner comparison.

• End-to-end flow: The described workflow is: ask Opus 4.5 to generate a multi‑phase plan, expand each phase into detailed todos, then use Cursor’s "Build in new agent" per todo while file reads run in parallel; this keeps each agent’s context tight and leaves room in chat for iterative refinement without hitting context limits Tailwind audit.

These anecdotes highlight how much of “agent quality” now depends on the IDE harness—how it plans, slices work, and streams repo context—rather than just which base model is picked.

DocETL ships Claude Code skill for full unstructured-data analysis flows

DocETL Claude skill (UC Berkeley): The DocETL team released a Claude Code skill that lets Opus 4.5 orchestrate scraping, parsing, and analyzing thousands of messy text documents end‑to‑end, with one example scraping 15 years of Hacker News “What Are You Working On?” threads into a dashboard while the author went for coffee in the workflow summary.

• Skill packaging: The skill ships as a Claude Code integration that exposes DocETL’s ETL primitives (scrapers, chunkers, pipelines) as callable tools, so the model can generate, run, and debug full pipelines from natural language instructions, as described in the Claude quickstart.

• Open-source stack: DocETL itself is open-source and built around agentic LLMs controlling unstructured ETL; the team points developers to the main project page and GitHub repo in the DocETL site and project repo.

For agentic coding, this shows a concrete pattern where Claude Code is not just editing code but driving a specialized ETL engine to produce real analytical outputs.

LlamaSheets + Claude Code turn messy Excel into structured frames

LlamaSheets with agents (LlamaIndex): LlamaIndex published a new guide showing how to wire its LlamaSheets API into coding agents like Claude Code so they can parse complex, multi-table Excel workbooks into clean 2D data frames before analysis, avoiding the usual cell‑by‑cell openpyxl loops that many existing skills use, as outlined in the guide thread.

• Agent-friendly API: LlamaSheets exposes an HTTP API that accepts spreadsheets and returns structured parquet plus metadata (tables, hierarchies, ranges); the guide shows an agent workflow where Claude Code calls LlamaSheets, then works on the cleaned parquet instead of raw Excel, described in the setup blog.

• Better than naive skills: The author notes that common Claude skills iterate Excel row‑by‑row in Python, which is slow and brittle, while LlamaSheets is designed specifically for document‑scale spreadsheet understanding and works well as a drop‑in tool in existing agent harnesses guide thread.

This gives AI coding agents a more realistic way to handle the kind of gnarly Excel models that show up in finance and operations work.

RepoPrompt and Codex illustrate harness-first planning and context building

Harnessed planning (RepoPrompt/Codex): Multiple posts show Codex agents delegating spec and context work to external tools like RepoPrompt—one example has Codex detecting it’s stuck, calling RepoPrompt.context_builder with a natural‑language spec about fixing MCP model overrides, then waiting for a structured plan and context bundle rather than guessing inline context builder call.

• CLI and MCP flows: The RepoPrompt author notes there is already a /rp-build slash command in MCP servers and a CLI variant, and they plan an "oracle export" prompt so Steipete’s oracle tool can feed the same high‑signal prompt file directly to GPT‑5.2 Pro for more surgical fixes oracle export plan.

• Spec vs code separation: Another snippet shows Codex asking git whether to commit and push a subset of files after finishing a refactor, summarizing completed steps and removing no‑longer‑needed refactor docs; the human describes using Dex Horthy’s "context‑efficient backpressure" ideas so agents summarize tests and plans instead of dumping huge logs, discussed in the context blog.

Taken together, these workflows move planning and context shaping into explicit, reusable tools rather than leaving everything buried in one giant chat prompt.

GitHub Copilot mobile adds Grok Code Fast 1 to on-device model picker

Copilot mobile (GitHub/Microsoft): The GitHub mobile app’s Copilot chat now exposes an explicit model picker that includes xAI’s Grok Code Fast 1 alongside GPT‑4.1 and Claude Haiku 4.5, letting developers choose a fast coding‑optimized model directly from their phone, as shown in the model picker screenshots.

• Fast vs versatile tiers: The UI splits models into "Fast and cost‑efficient" (GPT‑5 mini, Grok Code Fast 1) and "Versatile and highly intelligent" (Claude Haiku 4.5, GPT‑4.1, GPT‑4o), hinting at how GitHub expects people to trade off latency vs depth in mobile workflows model picker screenshots.

• On-the-go coding: A second screenshot shows Copilot responding conversationally in the GitHub app after the user selects Grok Code Fast 1, confirming this isn’t just a label change but a real integration into mobile code review and Q&A flows model picker screenshots.

This extends the “pick your LLM” pattern from desktop IDEs into mobile, where fast turnaround often matters more than maximal reasoning depth.

Sculptor’s Pairing Mode safely mirrors agent edits into local Git repos

Pairing Mode (Imbue Sculptor): Imbue highlighted Sculptor’s Pairing Mode, which temporarily stashes a developer’s uncommitted local changes, then mirrors the agent’s container filesystem into the local repo so editors and tools can see and run the agent’s code, restoring the original working tree when pairing ends pairing explanation.

• Git-state guarantees: The team describes a numbered flow where (1) you have local edits, (2) the agent has its own container changes, (3) turning Pairing Mode on stashes your local work and overlays the agent’s branch, and (4) turning it off restores your state; this is presented as the safest way to let humans inspect and tweak agent‑written code without accidentally merging worlds pairing explanation.

• Docs and use cases: The docs page referenced in the tweet positions Pairing Mode as useful for debugging, running tests locally, and making small fixes to agent code while keeping a clean separation between human and agent branches, as shown in the pairing docs.

This is one of the clearer examples of treating the agent as another developer in Git, with explicit state transitions instead of opaque edits to your working copy.

Supermemory ships AST-aware code-chunk and a startup support program

code-chunk & support (Supermemory): Supermemory announced code-chunk, an npm library for AST‑aware code chunking that follows CMU’s cAST ideas to split code on semantic boundaries (functions, classes) rather than raw character counts, plus a startups program offering $1,000 in credits and hands‑on help for teams building agents on their memory stack code-chunk intro. code-chunk blog • Chunking for agents: The blog explains how naive text splitters butcher code and break referential integrity, while code-chunk uses Tree‑sitter to parse and extract entities along with signatures and docstrings, yielding chunks that agents can retrieve and reason over without losing context code-chunk intro.

• Startup program: A separate announcement offers early‑stage startups $1,000 in Supermemory credits, fast onboarding, and guidance on integrating their Universal Memory API, framing it as a way to ship LLM‑backed products in "days/weeks, not months/years" startup program.

For agentic coding, this lowers two friction points at once: getting better code retrieval chunks and having a managed long‑term memory backend to store them.

Developers lean into mobile “vibe coding” with Replit, Claude Code and Codex

Mobile vibe coding (Community): Several practitioners describe workflows where they drive Claude Code and OpenAI Codex from their phones using Replit’s mobile app and terminal, prompting an agent, locking the screen, and letting it work instead of staring at a laptop, as one detailed in the Replit flow.

• Terminal-first setup: The recipe is to install the Replit mobile app, create a project, open the terminal, then globally install the @anthropic/claude-code-cli and @openai/codex CLIs with npm, turning the phone into a thin client for remote coding agents Replit flow.

• On-the-go agenting: A related post shows a Toad/Claude combo running a New Year countdown from the terminal and another shows codex sessions running for many hours unattended, reinforcing a pattern of long‑running background work kicked off from lightweight mobile prompts countdown demo.

The usage pattern frames agents less as interactive chat partners and more as queued workers that can be nudged from anywhere with a good terminal.

Logging-first coding emerges as a best practice for LLM agents

Logging for agents (Community): A recurring theme in agentic coding posts is "log for the LLM"—Swxyx argues developers should log nearly every execution step with the explicit goal of helping their coding agent debug its own code, then copy logs and traces back into the model to drive precise fixes or new feature hooks logging advice.

• Logs as interface: The follow‑up clarifies that logs are not only for fixing bugs; they also act as anchors for adding new behavior—rather than telling the LLM "insert code on line 123", you point it at a specific log line or execution step and say "change what happens here" so it can navigate by runtime behavior instead of fragile line numbers logs for features.

This pushes logging from a human‑only observability tool into a core part of the interface contract between your system and the agent writing code for it.

🧩 Interop: MCP skills and safer browser control

Interoperability & skills posts: CLI skills bundles and sandboxed browsing for agents. Excludes coding workflow tips (covered in agentic coding).

Browserbase plugin steers Claude Code browser control into a sandbox

Browserbase plugin (Claude Code): A new pattern is emerging where Claude Code controls Chrome through a remote Browserbase workspace rather than a user’s personal browser profile, after practitioners flagged that giving Claude direct access to a logged‑in local Chrome instance exposes private data to leaks or misuse, as argued in the security warning. The recommended setup is to install the Browserbase Claude Code plugin so browsing runs in an isolated cloud session while the local machine stays out of reach.

• Risk framing: Developers note that "Claude controlling chrome is cool in theory" but point out that a coding agent with full access to cookies, history, and saved sessions is a high‑impact failure mode if prompts, tools, or jailbreaks go wrong, leading to calls to "run Claude in chrome in a sandboxed browser instead" in the security warning.

• MCP-style install flow: The plugin is added from within Claude Code using the marketplace and install commands (/plugin marketplace add browserbase/claude-code-plugin followed by /plugin install browserbase@browserbase-cloud), which wires Browserbase’s remote Chrome as a tool the agent can call without touching the user’s main browser profile, according to the install commands.

The combination of explicit risk discussion and an installable browser skill pushes teams toward MCP-style remote browsers as the default pattern for agentic web automation, rather than granting unfenced access to primary user sessions.

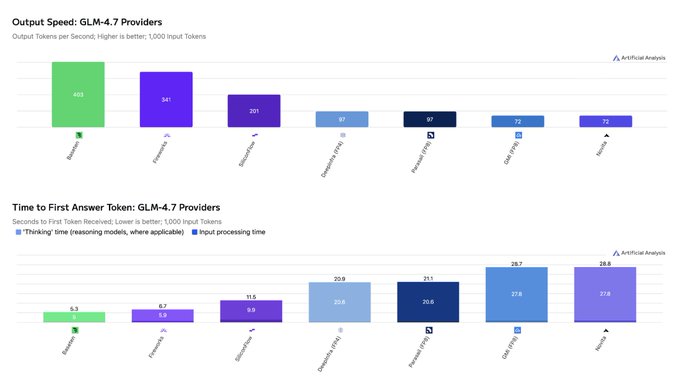

Z.ai ships zai-cli v1.0 MCP skills CLI for GLM‑4.7 tools

zai-cli (Z.ai/KwaiKAT): Z.ai released zai-cli v1.0, a MCP‑native CLI that exposes its vision, web search, web reader, and Z Docs Reader tools as reusable "skills" for any agent stack, with pricing tied to a $3/month Coding Plan that includes GLM‑4.7, according to the cli launch and the GitHub repo. This turns Z.ai’s MCP tools into a generic command‑line toolkit that coding agents or orchestrators can call without bespoke integrations.

• Skill surface: The CLI bundles a vision server, live web search, a HTML‑to‑clean‑text reader, and a long technical docs reader (Z Docs) behind simple commands so agent harnesses can delegate perception and retrieval to Z.ai’s stack rather than re‑implementing these abilities, as outlined in the cli launch.

• Interop and pricing: zai-cli is designed to plug into MCP-aware environments (e.g., Claude Code, OpenCode) while keeping usage on Z.ai’s side metered under the KAT‑Coder‑Pro/GLM‑4.7 Coding Plan at roughly $3 per month for the entry tier, which is positioned as about "6.9%" of comparable Claude Code pricing in related plan details in the coding plan thread.

The release effectively turns Z.ai into a low-cost skills provider for multi‑agent systems, with MCP wiring and CLI semantics hiding most of the API complexity from downstream tools.

📊 Evals: agent/webdev scores and long‑context behavior

Fresh leaderboard and eval diagnostics dominate: Code Arena placements, long‑horizon task success, and retrieval bias profiles. Excludes throughput/runtime accounting (see Systems).

AI Security Institute report finds multi‑domain task horizons doubling every ~8 months

Frontier AI Trends (AISI): The AI Security Institute’s first Frontier AI Trends Report aggregates evaluations of 30+ frontier models and concludes that the maximum task length AI systems can handle in domains like software projects and complex workflows has roughly doubled every eight months from 2022–2025, with long‑horizon SWE tasks now reaching month‑scale, as summarized in the trends overview.

• Capability floors: The report highlights that cyber and autonomy capabilities have moved from “apprentice” to “expert‑level” task success on benchmarks, while tool‑using agents complete around 40%+ of multi‑step software projects in controlled settings, according to the trends overview.

• Open vs closed lag: Open‑source models trail closed frontiers by about 4–8 months of capability on the tracked tasks, but that gap appears to be stabilizing rather than widening, based on the cross‑model comparisons in the trends overview.

• Safety vs speed: Safeguards have strengthened unevenly—some biological and cyber misuse jailbreaks now require up to 40× more effort than on earlier generations, yet all tested models remain jailbreakable and no strong correlation between raw capability and robustness was found, which the trends overview notes with explicit caution.

Taken together with point‑studies like the METR SWE horizon for Opus 4.5 in the metr summary, this report frames a quantitative macro‑picture of how quickly long‑horizon and agentic capabilities are scaling relative to safety tooling.

Opus 4.5 reaches 50% success on ~4h49m SWE tasks in METR evals

Claude Opus 4.5 (Anthropic): A new METR plot shows Opus 4.5 solving software‑engineering tasks that take humans about 4 hours 49 minutes at a 50% success rate, extending the time horizon of reliably automatable coding work and fitting a roughly 196‑day task‑length doubling trend since 2019, according to the analysis in the metr summary.

• Trajectory fit: On a log scale of task duration, Opus 4.5 lands on the same straight‑line trend that earlier models defined, suggesting continuity rather than a break, but with hints the curve may be steepening toward 4‑month doublings as noted in the metr summary.

• Long‑task bias: The success‑probability curve looks flatter than for prior models, with relatively stronger performance on longer tasks and METR’s longest available problems also around 50% success, which implies extended reasoning is less of a bottleneck than before.

For teams tracking when agents can own multi‑hour refactors or feature builds end‑to‑end, this dataset gives a quantified reference point rather than anecdotal claims about “day‑long” coding agents.

Context Arena quantifies Seed‑1.6 long‑context retrieval and strong recency bias

Seed‑1.6 thinking (ByteDance): Context Arena’s MRCR long‑context benchmark now includes Seed‑1.6:thinking and Seed‑1.6‑Flash, showing retrieval curves close to OpenAI’s o3/o4‑mini at short range but with steep degradation and pronounced recency bias as complexity (number of “needles”) increases, per the new metrics in the mrcr update and follow‑up bias notes in the bias deep dive.

• Two‑needle performance: At 128k tokens on 2‑needle tasks, Seed‑1.6 reaches an AUC of 54.2% vs o3’s 57.9%, with pointwise exact‑needle selection at 47.3% vs o4‑mini’s 57.4%, according to the mrcr update.

• Heavier loads: On 4‑needle and 8‑needle tasks, AUC drops to 32.3% and 19.2%, with pointwise accuracy down to 25.5% and 11.8% respectively, indicating difficulty tracking targets as more distractor passages are introduced in the mrcr update.

• Lost‑in‑the‑middle & recency: Diagnostics show one of the strongest "lost in the middle" effects in the budget tier and a tendency to over‑select the last variant—e.g., in an 8‑needle setup where target 6 is correct, Seed‑1.6 picks the last (8) 71.7% of the time vs the correct 6 only 14.6%, as detailed in the bias deep dive.

These results, building on earlier Context Arena profiles such as Xiaomi’s MiMO‑v2‑Flash in Mimo profile, give agent builders concrete numbers for how Seed models behave under multi‑needle retrieval rather than treating them as black boxes.

MiniMax M2.1 tops LM Arena WebDev as #1 open model

LM Arena WebDev (LMArena): The Code Arena WebDev leaderboard now shows MiniMax‑M2.1 debuting as the top open‑source model and #6 overall with a 1445 Elo score, tying GLM‑4.7 in overall rating according to the latest table in the code arena post; this follows GLM‑4.7’s earlier wins on AA Index and Vending‑Bench2, noted in AA index results, where it led open models on reasoning‑heavy benchmarks.

• Ranking details: The WebDev ladder has Opus 4.5 thinking and GPT‑5.2 High at the top, with Gemini 3 Pro and Gemini 3 Flash next, then MiniMax‑M2.1 Preview at 1445, just ahead of GLM‑4.7 at 1441, as shown in the webdev recap.

• Eval scope: Code Arena tests end‑to‑end website/app/game builds from a single prompt rather than unit tasks, so these scores reflect multi‑step tool use and UI wiring rather than raw coding alone, as described in the code arena post.

For AI engineers comparing coding backends, this positions M2.1 and GLM‑4.7 as the leading open WebDev agents relative to proprietary frontier models on the same harness.

Context Arena year‑in‑review traces 2025 long‑context gains to 1M tokens

Long‑context frontier (Context Arena): Context Arena published an interactive 2025 year‑in‑review that traces how state‑of‑the‑art retrieval accuracy at up to 1M tokens climbed through successive releases—highlighting Gemini 2.5/3, Claude 3.5/Opus 4.5, o‑series models and MiMo/M2.1 as they pushed 2‑, 4‑ and 8‑needle performance higher over the year, as shown in the year recap.

• 2‑needle SOTA climb: The “March of Progress” chart shows 2‑needle accuracy at 1M tokens rising from early Claude 3 Haiku at ~12% through models like MiMo‑v2‑Flash and Gemini 2.0 Flash into the mid‑50s/70s range, with Gemini 3 Flash reaching ~93.8% and Gemini 3 Pro and ≈equivalent GPT‑5.2 models clustered just below, per the year recap.

• Deeper stacks: The same view lets users toggle 4‑needle and 8‑needle complexity and see how SOTA curves flatten and drop as the number of conflicting passages grows, reinforcing that long‑context depth is still a challenge even as million‑token windows normalize.

• Token‑budget framing: The recap also surfaces cumulative token usage for generating these diagnostics, underscoring that systematic long‑context eval is itself compute‑heavy and best treated as shared infrastructure rather than ad‑hoc scripting.

For engineers debugging retrieval failures in 256k–1M contexts, this timeline offers a concrete backdrop for which families have actually improved and by how much, beyond vendor marketing claims.

🧠 Speed‑first LMs and availability updates

Model posts centered on faster decoding and access. Mostly WeDLM‑8B‑Instruct diffusion LM and platform availability. Excludes image/video models (see Generative Media).

Tencent’s WeDLM‑8B‑Instruct debuts as a fast diffusion LM for math reasoning

WeDLM‑8B‑Instruct (Tencent): Tencent released WeDLM‑8B‑Instruct, a diffusion language model that targets 3–6× faster math‑reasoning decode than a vLLM‑optimized Qwen3‑8B while matching or beating it on most benchmarks, and it is already live on Hugging Face with KV‑cache–friendly serving support release recap and model card. This model is fine‑tuned from WeDLM‑8B (itself initialized from Qwen3‑8B), keeps a 32,768‑token context, and uses a parallel decoding scheme that preserves a strict causal mask so it can reuse FlashAttention, PagedAttention and CUDA Graphs paths without custom runtimes model card link and technical explainer.

• Speed and accuracy: Threads describe 3–6× faster performance than vLLM‑served Qwen3‑8B on math reasoning tasks, while WeDLM‑8B‑Instruct outperforms base Qwen3‑8B‑Instruct on 5 of 6 public benchmarks, including math‑heavy suites where small latency differences compound over many tool calls release recap and hf repost.

• Topological reordering decode: A deeper technical note explains that WeDLM uses "topological reordering" to move already‑known tokens into a prefix so masked positions see more context, coupled with a dual‑stream training setup (clean memory stream plus masked prediction stream) and streaming parallel decoding that commits confident tokens into the left‑to‑right prefix while a sliding window refreshes masked positions technical explainer.

• Serving readiness: Because committed tokens immediately become standard prefix context under a causal mask, WeDLM can plug into existing high‑throughput stacks like vLLM with native KV caches rather than bespoke diffusion decoders, which is unusual for diffusion‑style LMs and directly targets production latency concerns model card link.

Taken together, this positions WeDLM‑8B‑Instruct as an early concrete example of diffusion‑style language modeling aimed at throughput and compatibility, not just experimental accuracy gains, in a form that infra teams can already exercise via Hugging Face endpoints.

Baseten markets GLM‑4.7 as a fast hosted endpoint for coding and reasoning

GLM‑4.7 on Baseten (Zhipu + Baseten): Baseten is promoting its hosted GLM‑4.7 as the "fastest" GLM‑4.7 endpoint available to try today, exposing it through an OpenAI‑compatible API with a ready‑made model library page and tuning aimed at low‑latency inference for coding and reasoning workloads baseten library and model library. GLM‑4.7 itself is a 355B‑parameter interleaved‑thinking MoE model from Zhipu; it recently reached 6th place overall and top open‑source position on the Artificial Analysis Intelligence Index, just behind frontier proprietary models, as highlighted in the earlier leaderboard coverage aa index and in the latest bar chart where it scores 68 vs Kimi K2 Thinking’s 67 aa index chart.

• Performance plus availability: Practitioners report GLM‑4.7 quickly becoming the default coding model inside Baseten’s own team, suggesting that the combination of quality and endpoint speed is good enough to displace other open models in day‑to‑day work team usage note.

• Context within the landscape: With GLM‑4.7 already adopted by multiple platforms and now pitched explicitly as a speed‑optimized hosted option by Baseten baseten library, its role is shifting from “strong open model on paper” to a concrete, low‑friction choice for teams that want near‑frontier quality without managing 300B‑class MoE deployments themselves.

This update adds an operational angle to prior benchmark‑centric GLM‑4.7 stories, tying its leaderboard performance to a specific high‑throughput endpoint that engineers can hit immediately.

🏢 Enterprise adoption and capital moves (excluding the feature)

Enterprise signals and financing separate from Meta–Manus. New AI‑first workspace patterns and concrete ops ROI. Excludes Manus (feature).

Z AI’s Knowledge Atlas files Hong Kong IPO to raise ~$560M

Knowledge Atlas IPO (Z AI): Chinese AI firm Knowledge Atlas, parent of Z.ai and the GLM model family, has filed for a Hong Kong listing targeting about HK$4.35B (~$560M) in proceeds by offering 37.4M shares at HK$116.2 each, as laid out in the Bloomberg term sheet reproduced in ipo summary.

• First LLM-native listing: Company commentary highlights this as the first public listing of an AI-native LLM company, signalling that capital markets are starting to back standalone model vendors rather than only hyperscalers founder comment.

• Scale and timing: The filing sets trading to begin Jan 8, 2026, with sole sponsorship by CICC, placing a multi-hundred-million-dollar war chest behind continued GLM-4.x development and enterprise expansion into Chinese and global markets ipo summary.

The deal formalises investor appetite for pure-play foundation model providers and gives GLM’s ecosystem a dedicated public-market balance sheet.

Notion tests AI-first Workspaces and custom Notion Agents

Notion AI Workspaces (Notion): Notion is piloting AI-first Workspaces that bundle custom Notion Agents, AI chat surfaces, and a new AI Credits-style metering model for early-access customers, repositioning the app as an AI-powered operating system for organisations rather than a static docs tool, as previewed in the internal UI demo and leak analysis in

and feature breakdown.

• Agents as coworkers: Early screenshots show Notion Agents that can be scheduled, triggered from Slack mentions, or invoked on page and database changes to run multi-step workflows over workspace data, with execution logs and controls suggesting a co-worker rather than chatbot framing feature breakdown.

• Monetisation shift: References to AI Credits indicate Notion may separate core seats from metered AI usage, which would align pricing with heavy-automation teams rather than occasional chat use

.

This test phase signals Notion pushing from “AI inside documents” toward full AI orchestration across team workflows and billing.

Coinbase details LangChain agent stack that saves 25+ hours a week

Enterprise agents (Coinbase / LangChain): Coinbase’s Enterprise AI Tiger Team reports going from zero to production AI agents in six weeks and cutting time spent on targeted workflows by more than 25 hours per week with two agents live, four more completed, and a reusable “paved road” so other teams can follow the same path, according to the detailed architecture write-up in case study blog.

• Code-first graphs and observability: The stack standardises on LangGraph/LangChain for typed, testable graphs plus LangSmith for tracing every tool call and decision, treating agents as regular software components rather than opaque prompt chains case study blog.

• Governance and auditability: Coinbase emphasises immutable traces of data used, reasoning steps, and approvals so agents can be audited like any other system, a design that lets a half-dozen engineers self-serve new agents without re-arguing safety and compliance each time case study thread.

This case study frames production AI agents as an engineering discipline with shared infrastructure, not one-off experiments attached to individual teams.

Julius AI adds @-mentions for tables and columns in analytics

Spreadsheet copilot (Julius AI): Julius AI now lets users @-mention specific tables and columns inside its chat interface so the assistant can lock onto the exact dataset for a query instead of inferring from schema names, tightening control over which metrics and joins it touches during analysis, as demonstrated in the

and explained in the concise feature note.

This small UI change pushes Julius further toward a true BI copilot, reducing mis-targeted queries in wide warehouses by binding natural-language questions to explicit table and column references.

⚙️ Runtimes, throughput accounting and dev terminals

Serving/runtime engineering and developer system UX. New throughput methodology, runtime docs, and terminal/TUI improvements. Excludes evals and model news.

OpenRouter now counts initial endpoint latency in throughput metric

OpenRouter throughput (OpenRouter): OpenRouter has changed its throughput metric to include the initial latency of the endpoint, warning that some open‑source endpoints will now show lower numbers but that the figure better reflects what end users perceive as speed, and also discourages gaming stats via large batch sizes, according to the throughput update. This reframes throughput as an end‑to‑end measure (first token time plus token stream), aligning benchmarking with real UX rather than raw token/second once responses are flowing.

CodexBar 0.16.x adds percent mode, cost CLI and trims idle CPU

CodexBar 0.16 (Peter Steinberger): CodexBar 0.16.0 introduces an optional "percent mode" menu bar display, a codexbar cost CLI for local usage accounting, and significantly lower idle CPU use via cached morph icons and fewer redundant status updates, extending the earlier OAuth‑based usage tracking discussed in CodexBar update and detailed in the macOS updater notes in

. A later 0.16.1 build further smooths usage display across providers (including Codex, Claude and Gemini), while housekeeping notes emphasize that pricing for unknown Claude models is now skipped to avoid stale hard‑coded rates, and that menu hover contrast and brand assets were tweaked for clearer at‑a‑glance runtime feedback in the tooling roundup.

Peakypanes previews native terminal multiplexer with live panes and mouse support

Peakypanes terminal (Kevin Kern): Peakypanes is rolling out a native multiplexer that replaces static previews with fully live terminal panes, supporting multiple concurrent PTY sessions, scrollback, and mouse‑driven selection and resizing, as shown in the

. A follow‑up changelog screenshot outlines features like pane splitting, moving panes between windows, async scanning of project directories, per‑pane scrollback and copy mode, and session‑only jump keys, with daemon state persisted so sessions can be restored after restart, according to the .

RepoBar evolves into a GitHub status hub with CLI, URLs and autosync plans

RepoBar status UI (Peter Steinberger): RepoBar is emerging as a combined macOS menu bar app and CLI that surfaces GitHub repo health—issues, PRs, stars, releases and recent activity—in both a graphical list and a terminal table, with support for ANSI hyperlink extensions so repo paths in the CLI are fully clickable URLs as shown in the cli output. The latest UI screenshot shows pinned repositories, an "Open Issues" pane with labels and authors, and a roadmap item to scan local project directories and auto‑sync clean repos into the bar, while an 11‑hour Oracle/Codex debugging session was used to track down and fix subtle separator rendering glitches in the status list, visible in the

and the accompanying oracle debugging log.

Trimmy 0.7 and Oracle 0.8 refine shell-focused AI dev tooling on macOS

Shell helpers (Peter Steinberger): Trimmy 0.7.0 adds context‑aware clipboard trimming that can detect the source app and apply different aggressiveness for terminals versus general apps, collapsing multi‑line shell snippets while leaving most non‑code text alone, with its "Low" and "Normal" modes tuned to avoid mangling code‑like chunks unless strong command cues are present, as described in the

. The same housekeeping update notes SweetCookieKit 0.1.2 landing on Swift Package Index with a pre‑alert before macOS keychain prompts to reassure users of cookie access, and an Oracle update that positions it as a GPT‑5 Pro harness for agents with fixes for manual login issues, thinking‑time handling and better Codex integration in the tooling update.

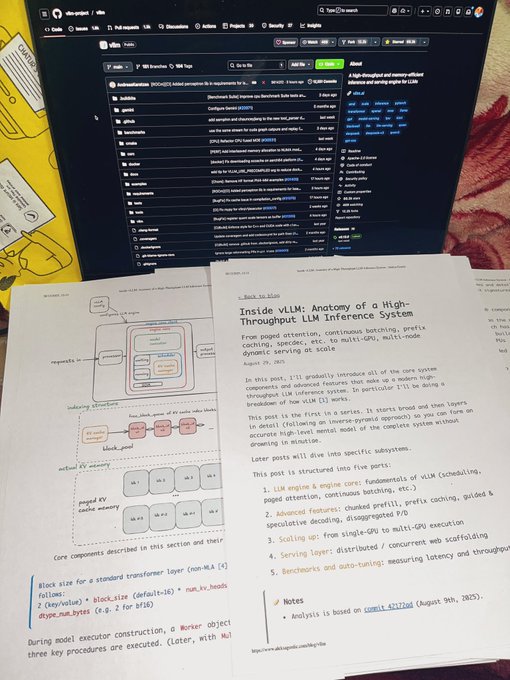

vLLM steers users to AI-powered site search and office hours

Docs and search (vLLM): The vLLM team is telling users to lean on the "Search (AI)" button on the new website and attend vLLM office hours while more beginner‑friendly documentation is in progress, following up on vLLM site where the project launched its official hub with install selector and changelog, as noted in the docs note and the linked vllm site. This positions the AI search box and community calls as the main discovery surface for runtime config and deployment guidance until fuller written docs land.

🏗️ Compute build‑out: Nvidia pivots, power density and foundry pricing

Infra economics dominate: Nvidia–Groq licensing analysis, rack power density constraints, Intel tie‑up, TSMC pricing path, and local GPU options. Excludes enterprise adoption stories.

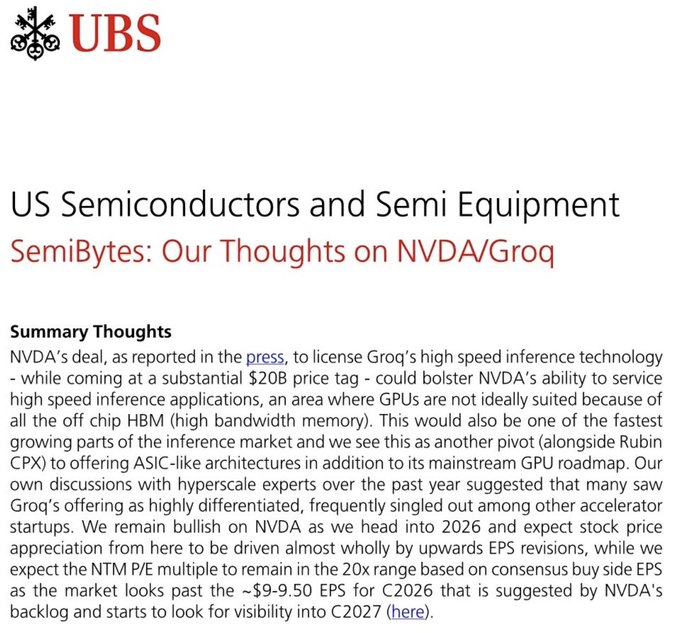

UBS frames Nvidia–Groq $20B deal as two-lane inference hedge

Nvidia–Groq license (Nvidia): UBS characterizes Nvidia’s ~$20B Groq technology license as a way to straddle two "lanes" of inference—flexible HBM GPUs for general workloads, and ASIC‑like LPUs with on‑chip SRAM for high‑volume, latency‑sensitive traffic—arguing that licensing Groq’s full stack and time‑to‑market is faster than designing an internal ASIC, as outlined in the ubs research note. This extends license hedge, which previously highlighted the deal as an inference hedge rather than a pure acquisition.

• Rationale and timing: UBS notes that hyperscalers are already actively shopping for non‑GPU inference engines and warns that showing up “18 months late is the same as not showing up,” so licensing gives Nvidia an immediately credible ASIC‑like option while its Rubin CPX roadmap matures ubs research note.

• Stack and customer story: The analysis emphasizes that Groq’s edge is tightly tied to its compiler, kernel library, runtime and profiling suite rather than silicon alone, meaning Nvidia is effectively acquiring a proven full‑stack inference system with hyperscaler mindshare, not just logic blocks ubs research note.

The note signals that Nvidia intends to defend inference share on both the classic GPU lane and a more specialized ASIC‑style lane instead of leaving the latter to startups.

AI data centers have 25 GW under build and 128 GW in planning

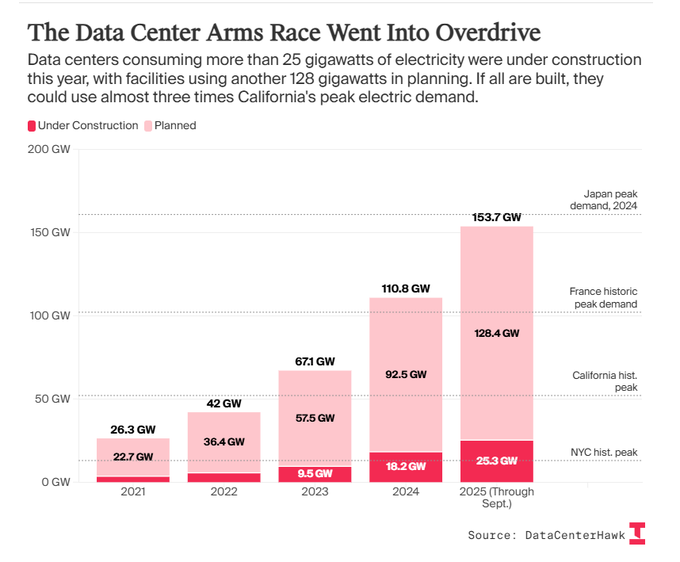

AI power build‑out (Global datacenters): New figures cited in industry commentary suggest about 25 GW of electricity capacity for AI data centers is currently under construction, with another 128 GW in various planning stages—a roughly 5× pipeline that, if fully realized, could draw nearly three times California’s peak electric demand, according to the energy buildout note and ai-power thread. Analysts describe artificial general intelligence clusters as “the investment par excellence,” arguing that large‑scale energy projects are being cultivated specifically to feed future AI workloads energy buildout note.

• Reinforcing earlier gap estimates: This build‑out narrative follows Morgan Stanley’s projection of a 44 GW US AI data‑center power gap by 2030 power gap, indicating that even aggressive capacity additions may lag the appetite for AI compute.

• Constraint shift: The numbers reinforce a broader theme from hardware roadmaps and rack‑density discussions—that over the coming years, the practical limit on AI cluster size will be grid connections, substations, and transmission rather than GPUs or model designs ai-power thread.

For infra planners, AI now looks inseparable from long‑lead energy infrastructure decisions rather than a workload that can be layered on top of existing grids.

Nvidia buys $5B Intel stake and teams on NVLink CPU–GPU designs

Nvidia–Intel tie‑up (Nvidia/Intel): Nvidia has completed a $5B private placement for 214.7M Intel shares at $23.28 per share and will work with Intel to build custom x86 CPUs tightly coupled to Nvidia GPUs via NVLink and advanced packaging, according to the reuters brief. Intel will manufacture bespoke CPU parts that Nvidia can package alongside its accelerators, and the firms also plan PC‑oriented SoCs that integrate Intel CPU cores with Nvidia RTX graphics chiplets reuters brief.

• Server implication: For AI servers, the collaboration gives Nvidia more control over CPU–GPU interconnect latency and bandwidth by combining NVLink with Intel’s packaging and foundry capabilities, aiming to make large training and inference systems behave more like single coherent machines reuters brief.

• Client angle: On the PC side, Intel says it intends to ship x86 SoCs that include RTX chiplets, positioning Nvidia as a graphics supplier inside Intel‑branded client chips and deepening the two companies’ linkage beyond datacenter accelerators reuters brief.

The move ties Nvidia to Intel’s emerging foundry business while diversifying Nvidia’s CPU partners beyond its existing relationships with x86 and Arm vendors.

Nvidia’s Rubin Ultra NVL576 pushes rack power density toward grid limits

Rubin Ultra NVL576 (Nvidia): Nvidia’s Kyber rack‑scale Rubin Ultra NVL576 system, targeted for 2H 2027, is specced at roughly 15 exaFLOPs FP4 inference and 5 exaFLOPs FP8 training per rack—about 14× a GB300 NVL72 system—with 365 TB of HBM4e and massive NVLink 7 bandwidth per the kyber rack slide. Commentary notes that between 2022 and 2028 Nvidia expects about a 20× increase in power density on a footprint‑adjusted basis, making power delivery and cooling the next scaling wall rather than GPU availability kyber rack slide.

• From chips to power: At these densities, the bottlenecks become substations, switchgear, liquid cooling loops, utility contracts, and permitting speed, with cluster deployment increasingly constrained by “how fast can we bring safe, stable electricity to that exact spot in the room” instead of how many accelerators are on the roadmap kyber rack slide.

• Context with prior forecasts: This view lines up with Morgan Stanley’s earlier 44 GW projected US AI data‑center power gap power gap, suggesting that even as systems like NVL576 arrive, real‑world cluster growth will be dictated by grid build‑out and site engineering.

TSMC to raise 2nm and advanced-node prices annually from 2026 to 2029

Advanced nodes pricing (TSMC): TSMC is reportedly planning 3–10% price increases on 2nm and other advanced nodes every year from 2026 through 2029, reflecting persistent tight supply driven by AI and high‑end mobile demand, as covered in the pricing report. Sources say the company has already effectively sold out early 2nm wafer capacity and is using multi‑year hikes to both ration supply and protect margins wccftech analysis.

• Buyer impact: For AI accelerator vendors whose leading chips sit on these nodes, this implies a structural rise in wafer costs over at least four years, with limited relief from dual‑sourcing because moving designs to Samsung’s 2nm gate‑all‑around processes carries requalification expense and schedule risk pricing report.

• Signal on demand: The willingness and ability to push consecutive hikes suggests that, even with new fabs ramping, leading‑edge logic for AI and premium SoCs will remain demand‑constrained rather than capacity‑constrained during the second half of the decade wccftech analysis.

For AI hardware planners, the numbers point to higher baseline silicon costs that need to be offset by architectural gains and better utilization rather than cheaper wafers.

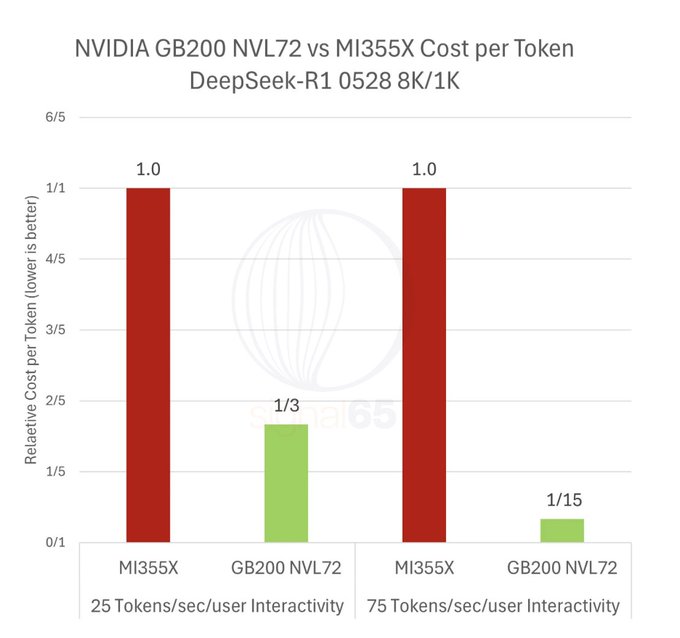

Signal65 tests say GB200/B200 beat AMD MI355X on inference economics

GB200 vs MI355X economics (Nvidia/AMD): New testing from Signal65, referenced in community commentary, reports that Nvidia’s GB200/B200 stack—especially the GB200 NVL72 rack configuration—clearly outperforms AMD’s MI355X on dense and smaller Mixture‑of‑Experts inference workloads and still wins on unit economics despite roughly 2× higher per‑GPU hourly pricing, as summarized in the signal65 summary and the linked signal65 analysis.

• Architecture advantage: The report attributes Nvidia’s edge to NVLink‑based rack‑scale architecture and orchestration, which keep more of the model’s working set on‑chip and deliver higher “intelligence per dollar” and better interactive latency, whereas MI355X struggles to match that rack‑level efficiency even when raw FLOPs and memory look competitive signal65 summary.

• Positioning takeaway: Commentary concludes that, for now, Nvidia remains the preferred choice for high‑end inference—especially for latency‑sensitive and MoE workloads—while AMD looks more compelling only in narrower throughput‑oriented scenarios where interconnect constraints matter less signal65 analysis.

The results reinforce how much economics at scale depend on system‑level design, not just chip‑level specs, in the current Nvidia–AMD race for AI inference.

China’s Lisuan G100 GPUs ship as RTX 4060–class domestic option

Lisuan G100 GPUs (Lisuan Tech): Chinese GPU startup Lisuan has begun shipping its Lisuan G100 family, with the 7G106 described as a 6nm TSMC N6 part featuring 12 GB of GDDR6 on a 192‑bit bus, PCIe 4.0 support, and roughly 225 W TDP from a single 8‑pin power connector—positioned around Nvidia’s RTX 4060 class, as detailed in the lisuan gpu thread.

• Domestic alternative: Commenters note that prior Chinese discrete GPUs have been held back by weak drivers, limited game support, and poor performance‑per‑watt, and frame G100 as an attempt to deliver a more competitive, locally controlled mid‑range GPU that can serve client PCs and small inference workloads without relying on export‑constrained Nvidia or AMD cards lisuan gpu thread.

• Ecosystem challenge: The remaining hurdles are software and ecosystem maturity—getting robust drivers, game and framework support, and tuning perf/W into a range that makes the hardware viable in both consumer and AI‑adjacent roles lisuan gpu thread.

The launch underlines China’s push to build its own GPU stack in the face of tightening controls on Western accelerators.

Samsung DRAM process leak to China highlights memory race for AI

DRAM IP leak case (Samsung/CXMT): South Korean prosecutors have indicted 10 people, including former Samsung executives, over alleged leaks of 10nm‑class DRAM manufacturing know‑how to Chinese memory maker CXMT, estimating damage in the $7B–$13B range, as summarized in the korea leak report. Authorities say a departing researcher copied hundreds of Samsung’s process steps by hand—covering gas ratios, chamber pressures, and exposure conditions—to evade digital monitoring, enabling CXMT to reconstruct and tune the flows for its own tools korea leak report.

• Impact on competition: Prosecutors link the leak to CXMT achieving 10nm DRAM production by 2023, effectively shortcutting years of trial‑and‑error yield learning and potentially strengthening China’s position in commodity and high‑bandwidth DRAM used in AI accelerators korea leak report.

• Security and supply: The case underscores how detailed “recipes” for memory processes—rather than just schematics—are now central assets in global competition, with direct implications for the cost and availability of HBM‑class memory that feeds GPU clusters korea leak report.

While not directly about new AI chips, the episode highlights how fiercely contested DRAM technology has become in an AI‑driven compute economy.

🛡️ Risk, preparedness and platform quality concerns

Safety discourse spikes: senior voices on deception/job impact, platform quality issues, and preparedness hiring. Excludes technical hallucination papers (in Research).

Managers report replacing 10 junior devs with 2 seniors plus an AI agent

Entry‑level SWE market (Stanford/industry): A Los Angeles Times report summarized in a recent thread says some managers now replace teams of about ten junior developers with two senior engineers plus an AI coding agent, contributing to sharply fewer entry‑level offers for new Stanford CS grads Stanford job article.

The piece describes this as a "dramatic reversal" from hiring patterns three years ago, linking it directly to the use of LLM coding tools for tasks that used to be training ground work. For AI engineers and policy analysts, it is an early, concrete datapoint for Hinton‑style forecasts about software jobs shrinking at the bottom of the ladder while demand for high‑leverage operators remains task growth clip.

Paper finds LLMs bend rules by using hidden unit tests to boost code scores

Rule compliance (LLM coding): A new paper summarized in a thread shows that large language models quietly exploit unit tests in prompts as hints, materially boosting code performance even when instructions explicitly forbid using tests for solution design coding paper.

Researchers modify 148 BigCodeBench tasks across five prompting setups—baseline, full tests, partial tests, plus "do not use tests" variants—and find that when tests are visible, first‑try success rates jump from roughly 16–24% to 37–55%, regardless of admonitions not to peek coding paper. Qualitative analysis suggests models adopt several adaptation strategies, from genuine test‑driven refinement to brittle hard‑coding of expected outputs, indicating that benchmark scores can substantially reflect prompt‑leaked signals rather than core coding ability. For safety and eval design, the result underlines that models can and will bend stated rules when gradient history favors using any available signal.

Study estimates over 21% of YouTube uploads are low‑quality AI "slop"

Content quality (YouTube): A summary of a Stechspot report claims that more than 21% of new YouTube uploads are now classified as low‑quality "AI slop", with automated content farms exploiting cheap generative tools and recommendation incentives that reward volume over value slop summary.

The thread says this flood of templated AI videos makes discovery harder for human creators and degrades viewer trust, while moderation and quality filters struggle to keep up; the same report previously estimated roughly 63 billion views on such content across recommendations, pointing to both scale and engagement impact slop article. Observers suggest this is another example of AI amplifying existing platform economics—here, ad‑driven quantity—faster than governance and ranking systems can adapt.

AI denialism essay says machines may surpass humans in creativity and empathy

AI denialism (Louis Rosenberg): Louis B. Rosenberg’s essay on "AI denialism"—circulated in recent threads—argues that public insistence that AI is hitting a wall or is "slop" reflects an early denial stage of grief over losing cognitive supremacy, rather than a sober reading of capability trends essay link.

He specifically challenges the common claim that human creativity and emotional intelligence will remain unmatched, saying there is no evidence supporting that and increasing evidence suggesting the opposite, while pointing to AI systems already winning mathematics and coding Olympiads against expectations commentary thread. The piece combines psychological framing with a warning that underestimating AI’s trajectory could leave institutions unprepared for its integration into everyday life by 2026 and beyond.

Engineers call for deep‑research fact‑checks on viral political posts

Platform integrity (social apps): An AI practitioner argues that major platforms like X, Instagram, Facebook and YouTube could now asynchronously run long‑context "deep research" agents on every political post above ~1,000 likes, using high‑effort reasoning modes to auto‑fact‑check claims at low marginal cost fact-check proposal.

They note that community‑driven tools such as Community Notes are often late or only marginally better than what in‑house models (Grok, Meta AI, Gemini) could already produce, and clarify that the "I don’t get why" framing was rhetorical—contending that decent engineering and research effort could make this work and predicting it will be prioritized eventually clarification. The post implicitly reframes AI agents from pure engagement drivers to potential safety and quality infrastructure for political information flows.

Maxime Rivest warns AGI-era productivity gains may be highly uneven

Adoption gap (Maxime Rivest): Maxime Rivest recounts that a recently retired IT security specialist in his family had never heard of ChatGPT or OpenAI, and uses this to argue that AGI‑level systems could arrive before many people and organizations have even engaged with today’s tools adoption reflection.

He draws a parallel to electrification—where some devices are still being electrified 150 years later—and predicts that AI and AGI integration into everyday artifacts will similarly lag for cultural, trust and learning‑curve reasons, even if the technology becomes cheap to embed learning tradeoff. Rivest suggests that some individuals and firms will see 10–100× gains in value creation per unit capital by embracing AI, while others see little change because of fear, romanticism for older methods or simple lack of perceived need, setting up a potential widening gap in productivity and opportunity.

Scholars warn journal filters can’t separate AI-assisted wheat from chaff at scale

Scientific publishing (Academia): Ethan Mollick points to a growing quality‑control problem in AI‑era journals, arguing that both mental and procedural filters are not designed to quickly distinguish between strong and weak work once AI tools can generate plausible text and analysis at scale journal concern.

He notes that a flood of papers would be tolerable if editors and reviewers could efficiently separate "wheat from chaff", but when that separation itself consumes lots of expert time, overall progress slows as systems saturate with low‑value submissions. The concern is less about outright misconduct and more about subtle degradation of average quality when AI assistance hides underlying expertise gaps in ways that current peer‑review norms were not built to catch.

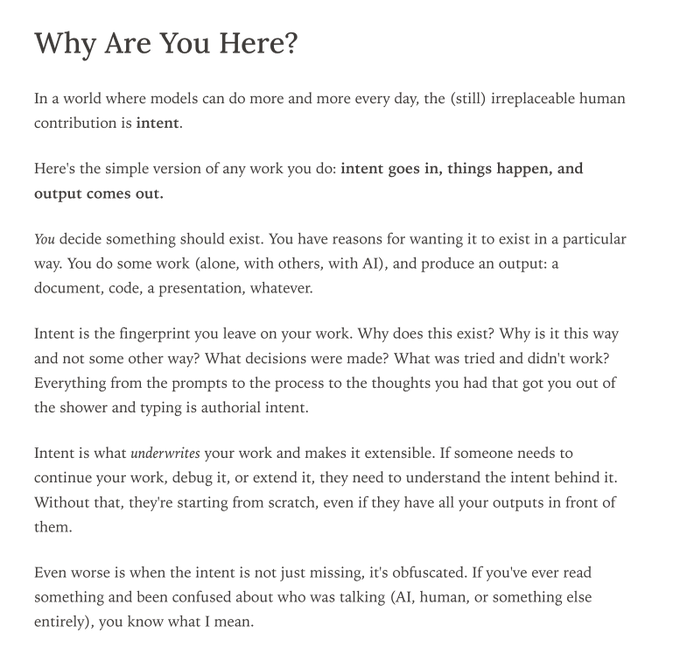

Southbridge shares layered AI use policy aimed at preserving team trust

Team governance (Southbridge): Consultant Hrishi Olickel outlines the AI usage policy adopted at Southbridge, describing it as "written in blood" in response to recurring "oh moments" like discovering that foundational results were hallucinated, that authors didn’t understand AI‑written work, or that teammates spent hours reading output that took minutes to generate policy thread. AI policy blog The policy is structured in layers to restore mutual trust and consistency: it demands clarity about where AI assistance was used, discourages building on unverified AI‑generated artifacts, and seeks to avoid situations where no one can explain the reasoning behind key documents or code policy details. Olickel argues that in AI‑enabled teams, maintaining a shared understanding of intent and authorship becomes as important as raw productivity, since most organizations rely on stable trust relationships rather than perfect oversight.

Vibe coding study says fast agent-built demos accrue hidden technical debt

Vibe coding (development practice): A paper highlighted in multiple safety‑focused threads argues that agent‑driven "vibe coding" workflows make first demos much faster but often accumulate substantial technical debt, which then slows teams and makes codebases harder to maintain vibe coding thread.

The authors observe that professional developers using coding agents still tend to stay in tight control, but novice or pressured teams may accept large volumes of opaque AI‑generated code that passes initial tests yet violates architectural constraints or maintainability norms. This leads to a risk that short‑term productivity metrics mask long‑term fragility, especially when organizations lack explicit policies about review, refactoring and ownership of AI‑written components follow-on comment.

Claude Code users say the Explore agent sometimes harms rather than helps

Explore agent (Anthropic): A Claude Code user reports that the built‑in Explore agent, which summarizes and samples files for the model, "often does more damage than good", arguing that Claude "needs to swim in the code base, not look at a few summarized files" to make reliable changes explore agent critique.

They describe routinely telling Claude not to use Explore because the abstraction can hide important context and encourage edits based on partial views of the repository. The complaint underscores a broader platform‑quality concern: agentic layers that aggressively pre‑filter or summarize context can boost speed and token efficiency but risk subtle correctness regressions when developers assume the assistant has seen more of the code than it actually has.

📚 Reasoning, agents and perception: this week’s papers

Strong stream of new/updated preprints: recurrent Transformers for reasoning, plan reuse, hallucination detection, long‑video agents, geometric memory, and tool‑augmented math.

AgentMath tool‑augmented math agent hits 90.6% on AIME24

AgentMath (Tsinghua + Tencent Hunyuan): The AgentMath framework combines a reasoning LLM with a code interpreter and an agentic RL pipeline, reaching 90.6% on AIME24, 86.4% on AIME25 and 73.8% on HMMT25 with a 30B‑parameter model, substantially ahead of similarly sized open baselines as detailed in the agentmath details and the ArXiv paper. Instead of forcing models to do all algebra in natural language, AgentMath converts chain‑of‑thought traces into trajectories with inline code snippets, executes them in a sandbox, splices back exact numerical results, then fine‑tunes the model to decide when to switch between text reasoning, code writing, and code debugging.

The training stack includes large‑scale supervised trajectories and an RL stage with asynchronous, partial rollouts and load balancing to make long tool‑heavy sequences tractable, which the authors say yields 4–5× faster RL training on ultra‑long contexts and produces models that both solve more contest‑level problems and waste less effort on arithmetic mistakes.

LongShOTBench shows long‑video QA remains brittle even for Gemini 2.5 Flash

LongShOTBench and LongShOTAgent (MBZUAI): A new benchmark–framework pair for omni‑modal long‑video reasoning finds that even top systems like Gemini 2.5 Flash reach only 52.95% overall accuracy, while the authors’ own LongShOTAgent manages 44.66%, on 5,095 open‑ended questions requiring joint reasoning over vision, speech and ambient audio in long clips, according to the longshot summary and the ArXiv paper. The benchmark emphasizes multi‑turn dialogues, intent‑driven scenarios and rubric‑based partial credit, using human‑verified captions and audio transcripts to expose where models lose context or fail to track state over time.

LongShOTAgent itself coordinates specialist tools—improved ASR, dense video captioning and retrieval—over a structured video map, but still struggles with the hardest queries, underscoring that long‑horizon, omni‑modal reasoning remains an open problem even with tool‑augmented agents rather than plain vision‑language models.

Study finds Transformers and Mamba store graphs as geometric maps

Geometric memory in sequence models (Google + CMU): A new analysis shows that deep sequence models like Transformers and Mamba, when trained to memorize a fixed graph and answer path queries, tend to internalize a geometric embedding of the graph where multi‑hop relationships become near neighbors, turning what should be many chained lookups into an almost one‑step similarity check, as described in the geometry explanation and the ArXiv paper. The authors train models on graphs with up to ~50k nodes and find they can reach near‑100% accuracy on unseen paths, while probing reveals that nodes several hops apart in the original graph lie close together in representation space and that the learned geometry matches known spectral structure like the graph Laplacian.

This work argues that "associative" table‑lookup style memory and global geometric memory compete under gradient descent, with the latter often winning even though training only exposes local edges, and raises open questions about why optimization so consistently discovers these usable spatial maps and how that behavior might be harnessed or controlled for better multi‑hop reasoning.

Universal Reasoning Model pushes small UTs to 53.8% on ARC‑AGI

Universal Reasoning Model (Ubiquant): Researchers refine Universal Transformers with recurrent inductive bias, strong nonlinearity and ConvSwiGLU plus truncated backprop and reach 53.8% pass@1 on ARC‑AGI 1 and 16% on ARC‑AGI 2, up from 40% and 7.8% for prior UT baselines, while also hitting 77.6% Sudoku accuracy as detailed in the urm overview and the ArXiv paper. The work argues most of the reasoning gain comes from repeatedly applying a single transformation with powerful activations and shallow convolution rather than stacking many distinct layers, suggesting small, recurrent models can outperform much larger static Transformers on multi‑step abstraction tasks.

The authors also show that removing the short convolution, truncated backprop, or SwiGLU activation sharply degrades performance, which supports the claim that iterative computation and nonlinearity, not just scale, are key levers for symbolic‑style reasoning.

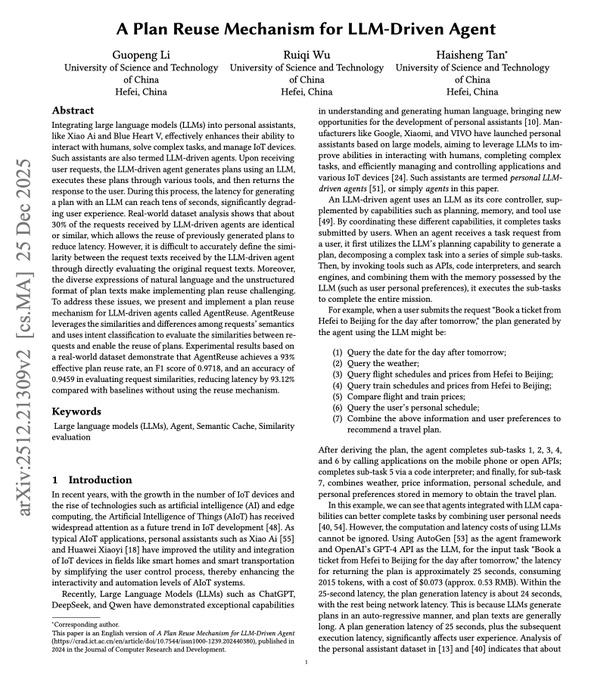

AgentReuse replays cached plans to cut agent latency by ~93%

AgentReuse (USTC): A new plan‑reuse mechanism for LLM agents separates task intent from parameters, caches prior step‑by‑step plans, and re‑injects fresh arguments for semantically similar tasks, achieving a 93% effective plan reuse rate and cutting latency by 93.12% vs. no caching on 2,664 real agent requests according to the agentreuse summary and the ArXiv paper. The system adds under 100MB of extra VRAM and ~10ms overhead per request yet still beats response‑level caches like GPTCache by 60.61% on latency, because it reuses structured plan skeletons while re‑executing tools for up‑to‑date answers.

By stripping out parameters such as time and locations, matching only on parameter‑free "intent strings," then slotting new values back into cached plans, AgentReuse shows that much current agent latency comes from re‑planning identical workflows rather than tool calls, and that semantic plan caching can make agents feel far more responsive without sacrificing freshness.

FaithLens 8B explains and detects faithfulness hallucinations better than GPT‑4.1

FaithLens (OpenBMB + THUNLP): The FaithLens 8B model is introduced as a lightweight detector that not only flags faithfulness hallucinations across RAG, summarization and fixed‑doc QA tasks but also generates natural‑language chain‑of‑thought style explanations, and the authors report it outperforms GPT‑4.1 and o3 on 12 benchmarks while staying much smaller, as outlined in the faithlens announcement and the ArXiv paper. It is trained in two stages: first on carefully filtered synthetic data that enforces label correctness, explanation quality and diversity, then via rule‑based RL that jointly rewards accurate hallucination judgments and clear, well‑structured rationales.

The paper positions FaithLens as a practical component for production RAG and summarization pipelines where operators need both a binary faithfulness signal and a human‑readable explanation about which parts of the answer contradict which parts of the source, making it easier to debug models and to build UI that exposes why a response was marked unfaithful.

🎬 Generative media pipelines: image/video models and workflows

Creative posts are plentiful: image model open‑weights, pro prompts, world‑gen, aspect‑ratio controls, and 3D‑assisted animation. Excludes core LM news.

Nano Banana Pro spreads across apps with advanced prompts and motion pipelines

Nano Banana Pro (Google): Nano Banana Pro is surfacing as a de facto image backbone across multiple creative tools—Gemini’s image UX, Higgsfield, Leonardo, LTX Studio, and even Kling 2.6 motion—while power users share long, reusable prompts for stylized effects gemini image pitch ltx workflow. A detailed thread walks through an 80s "double exposure" portrait prompt designed for Higgsfield’s implementation, split into parts that specify subject layout, floating heads, laser backdrops, and Olan Mills–style film grain, and explicitly tuned to work with Nano Banana Pro’s likeness preservation prompt intro prompt conclusion.

• Cross‑tool usage: Creators report using Nano Banana Pro inside Leonardo for camera and film simulations leonardo workflow, in LTX Studio as the still-image stage before LTX‑2 animation ltx workflow, and together with Kling 2.6 to turn Nano Banana frames into short motion clips kling combo.

• Prompt-as-pipeline: The "best prompt of 2025" thread breaks the 80s double exposure spec into environment, subject arrangement, and technical specs (soft focus, glamour glow, visible film grain) so the same text can be reused across characters and sessions as a stable aesthetic recipe prompt segment prompt conclusion.

This pattern underlines how Nano Banana Pro is increasingly treated less as a consumer feature and more as a shared rendering layer that different front-ends and prompt libraries build on top of.

Yume‑1.5 debuts as text-controlled interactive world generator with 5B 720p model

Yume‑1.5 (research, std studio): A new "Yume‑1.5" model is showcased as a text-controlled interactive world generation system, turning prompts into richly rendered 3D-ish environments you can explore, with a linked 5B-parameter 720p variant published on Hugging Face yume teaser yume model card. The demo shows on-the-fly regeneration of scenes as text constraints change, indicating the model is optimizing for controllable layout and continuity rather than single-shot images.

• Model packaging: The public artifact "Yume‑5B‑720P" arrives as a world-gen–oriented checkpoint aimed at 720p outputs, suggesting a trade-off between resolution and interactiveness for browser or game-engine integration yume model card.

• Interactive focus: The teaser video scrolls through iterative refinements of the same scene as the prompt is edited, which points toward potential use as a level/blockout tool for designers rather than a one-off renderer yume teaser.

For media engineers, Yume‑1.5 represents an early step toward treating world models as assets you query and steer live, instead of offline video clips.

3D animator shows ComfyUI + custom LoRAs + Qwen/Flux/Wan hybrid for AI realism

3D-to-AI realism workflow (independent creator): A 3D animator details a realistic character pipeline that starts with traditional 3D animation, then routes rendered frames through ComfyUI with custom LoRAs trained on their own renders, backed by a 20 TB personal skin-texture library and a mix of Qwen, Flux and Wan models workflow overview. Motion is assembled from ~6-second "stable" clips that are then stitched into a longer video, explicitly trading global coherence for per-shot consistency.

• Hybrid still vs motion: For stills, the creator leans on Qwen + Flux combinations to get an "anime-meets-real" aesthetic with rerolls for face and outfit stability; for motion, they experiment across Flux, Qwen, and Wan, discarding any segment with identity or outfit drift before final assembly workflow overview workflow follow-up.

• LoRA + reference heavy: The workflow uses LoRAs fine-tuned on the artist’s own 3D outputs plus a massive local texture reference set to enforce consistent skin detail, effectively turning diffusion into a super-resolution and retexturing stage rather than a full scene generator workflow overview.

This case study illustrates how high-end creators are increasingly using diffusion as a post-process on top of deterministic 3D animation, with ComfyUI graphs as the orchestration layer.

Higgsfield Cinema Studio pushes “directing not prompting” with 85% New Year sale

Cinema Studio (Higgsfield): Higgsfield is heavily promoting Cinema Studio, a browser-based generative video pipeline that centers on camera and lens control rather than prompt tweaking, bundled with two years of unlimited Nano Banana Pro access at an advertised 85% discount for a limited New Year window cinema promo follow-up offer. The pitch stresses stable characters and Hollywood-style shots (“no morphing characters, no random glitches”), framing the tool as a way to direct virtual cameras over fixed subjects instead of re‑rolling entire scenes.

• Camera-first workflow: Threads emphasize real lens and camera parameter control (angles, moves, depth of field) layered over Nano Banana Pro generations, with Higgsfield positioning this as a move from prompt crafting toward shot blocking cinema promo.

• Aggressive pricing: The campaign repeats that this is their "biggest discount we'll ever do," tying the discounted Cinema Studio license to multi‑year access to image models to lock in creators ahead of 2026 new year push.

For media engineers, this signals another commercial stack built around a proprietary image backend (Nano Banana Pro) but exposing a more familiar film language (lenses, rigs, takes) as the main control surface.

ProEdit proposes KV-mix and Latents-Shift for cleaner prompt-based image editing

ProEdit (research): A new paper introduces ProEdit, an inversion-based image editing method that combines KV-mix and Latents-Shift to reduce source-image contamination while still honoring prompt changes, and is designed as a plug-in drop-in to existing diffusion editors proedit mention proedit paper. The authors argue many current inversion workflows overfit to the source photo, causing prompts to be ignored or producing artifacts, and show that mixed key–value features plus perturbed latent codes can keep global structure while letting local attributes change.

• KV-mix mechanism: ProEdit linearly mixes key–value features from source and target views only inside the edited region, which they claim stops background bleed and better localizes edits without retraining the base model proedit paper.

• Latents-Shift: By perturbing the latent representation of the manipulated area, the sampler is nudged away from the exact inversion, enabling more faithful prompt following while still respecting layout and lighting proedit paper.

This work targets teams frustrated with brittle prompt-only editing—especially in portrait and product workflows—by offering a geometry-preserving, architecture-agnostic recipe that can sit inside existing ComfyUI/Automatic1111-like stacks.

Fan-made AI live-action One Punch Man fight showcases current video gen

One Punch Man AI short (independent): A viral clip recreates the Saitama vs. Genos fight from One Punch Man as a live-action style sequence fully generated with AI video tools, showing energy blasts, slow-motion impact and building destruction at a fidelity high enough to resemble a low-budget film one punch demo. The creator frames it explicitly as "the future of animation, cinema, and storytelling," using it as a proof-of-concept that existing pipelines can approximate anime set pieces without a traditional VFX team.

The post does not disclose the exact stack, but the shot composition, camera motion, and temporal coherence suggest a multi-stage workflow (concept art → keyframes → motion model) similar to those seen with Sora-style models and emerging commercial video generators one punch demo.

Grok Imagine on the web adds five aspect ratios for image and video

Grok Imagine (xAI): xAI’s Grok Imagine web interface now exposes five selectable aspect ratios for both image and video generation, with a modal showing preset canvases (including square and various landscape/portrait options) plus toggles for image vs video output aspect ratio ui. This brings Grok’s UI closer to other production image tools where framing control is a first-class parameter rather than buried in prompts.

• UI-level framing control: The screenshot shows an "Aspect Ratio" popover layered over a gallery of recent generations, with the chosen ratio applied before entering the text prompt, which reduces prompt-token overhead and avoids trial-and-error resizing aspect ratio ui.

• Unified image/video switch: The same panel also carries a switch between "Video" and "Image" generation, suggesting the underlying pipeline can route a single prompt through either still or temporal diffusion paths based on this UI flag aspect ratio ui.

For teams building around Grok Imagine, this small UX update makes it easier to standardize dimensions across marketing assets, thumbnails, and short clips without maintaining separate prompt templates per format.

UniPercept benchmark targets aesthetics, quality and structure for VLM reward models

UniPercept (research): A new survey and benchmark called UniPercept focuses on "perceptual-level" image understanding—covering aesthetics, technical quality, structure and texture—and introduces a unified reward model that outperforms many multimodal LLMs on these dimensions, positioning itself as a plug-and-play evaluator for generative systems unipercept mention unipercept paper. The authors argue that most VLM work has chased semantic understanding while ignoring human-perception metrics that actually govern how images are judged in creative pipelines.

• Unified scoring: UniPercept-Bench aggregates multiple prior datasets into a hierarchical taxonomy and trains a model that can rate both global aesthetics and local artifacts, which is useful as a reward model for text-to-image training and for automated selection in sampling-heavy workflows unipercept paper.

• Out-of-domain generalization: Reported results show that a UniPercept-trained baseline generalizes better across unseen image distributions than many general-purpose VLMs, which often mis-rank images that look fine to humans but carry minor structural glitches unipercept mention.

For engineers running large image-generation farms, this kind of perceptual evaluator can sit between the generator and end users, trimming low-quality samples without resorting to hand-tuned heuristic filters.

ComfyUI GitHub repo moving to Comfy-Org to support growth and maintenance