Claude Opus 4.6 Fast Mode hits 2.5× throughput – $150/MTok output

Stay in the loop

Free daily newsletter & Telegram daily report

Executive Summary

Anthropic shipped an experimental Opus 4.6 “fast mode” across Claude Code and the API; it targets output-token throughput (not time-to-first-token) and is marketed as “up to 2.5× faster” while claiming unchanged intelligence, but there are no independent benchmarks yet. Pricing immediately became the story: community-circulated tables show $30/MTok input and $150/MTok output under 200K tokens (higher above 200K); Claude Code docs say fast mode is “extra usage only,” and Anthropic also issued $50 extra-usage credits to Pro/Max accounts. Claude Code CLI 2.1.36 added /fast; 2.1.37 patched an activation bug where /fast wouldn’t appear after enabling extra usage.

• Billing + caching edges: switching standard→fast mid-thread can rebill the entire context at fast rates due to separate cache keys; fast mode has its own rate-limit pool and may fall back to normal then resume.

• IDE/app rollout: Cursor, v0, Windsurf, Lovable, and FactoryAI Droid all surfaced fast mode as research previews; some advertised limited-time 50% discounts.

The broader signal is speed-tiering as a premium SKU for agentic coding loops; latency wins expose new cost traps, and long-horizon agent eval results for Codex vs Opus are still pending.

Top links today

- Claude Opus 4.6 fast mode announcement

- Claude Code docs and fast mode details

- OpenRouter model list and routing

- Cursor changelog for Opus 4.6 fast

- Agent teams concepts and guidance

- SemiAnalysis on Claude Code adoption

- Claude Opus 4.6 system card

- Moltbook prompt injection risks overview

- McKinsey on AI in film and TV

- CAR-bench agent consistency paper

- Spider-Sense agent defense paper

- Length-unbiased sequence policy optimization paper

Feature Spotlight

Opus 4.6 Fast Mode lands: 2.5× throughput, sharp pricing edges, and IDE rollouts

Fast Mode makes Opus 4.6 ~2.5× faster for interactive/agentic loops—but at a large token premium and with caching/rate-limit quirks. This is a concrete “latency as a product knob” shift for dev workflows.

High-volume story today: Anthropic ships an experimental speed-prioritized inference config for Opus 4.6 across Claude Code and the API, plus quick rollouts in Cursor/v0/Windsurf/etc. Includes the practical engineer details: how to toggle it, credits/discounts, rate limits, and the billing/prompt-caching gotchas.

Jump to Opus 4.6 Fast Mode lands: 2.5× throughput, sharp pricing edges, and IDE rollouts topicsTable of Contents

⚡ Opus 4.6 Fast Mode lands: 2.5× throughput, sharp pricing edges, and IDE rollouts

High-volume story today: Anthropic ships an experimental speed-prioritized inference config for Opus 4.6 across Claude Code and the API, plus quick rollouts in Cursor/v0/Windsurf/etc. Includes the practical engineer details: how to toggle it, credits/discounts, rate limits, and the billing/prompt-caching gotchas.

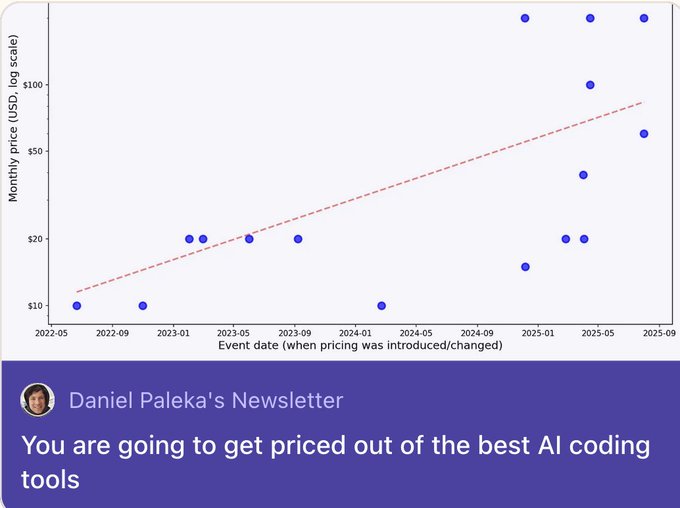

Fast mode pricing and access model draws immediate pushback

Opus 4.6 fast mode pricing (Anthropic): The fast mode price point—often summarized as $150/MTok output—triggered immediate pushback in posts like the Pricing meme and reactions like the 6× cost note. Some criticism is specifically about packaging: Claude Code docs state fast mode is available via extra usage only rather than counting against subscription limits, as written in the Docs summary and repeated in the Not included note.

Fast mode switching can rebill full context due to prompt caching keys

Fast mode billing mechanics: Builders report a costly edge case where switching from standard to fast mode mid-conversation can bill the entire existing context at fast-mode rates because fast/standard are treated as distinct variants for caching, as described in the Caching complaint. A related operational wrinkle is that fast mode has its own rate limit pool; hitting the limit can drop you back to normal speed and later resume fast, with repeated re-billing concerns raised in the Rate limit behavior report. A longer explanation tying this to cache keys and configuration differences is spelled out in the Mechanics walkthrough.

Claude Code CLI 2.1.36 adds fast mode toggle for Opus 4.6

Claude Code CLI 2.1.36 (Anthropic): Version 2.1.36 shipped with fast mode for Opus 4.6, enabled via the new /fast toggle, as captured in the Changelog screenshot and corroborated by the Changelog link post. This is a CLI-level workflow change. It affects day-to-day latency for interactive coding loops.

Cursor adds Opus 4.6 fast mode with $30/$150 MTok pricing and a 50% promo

Opus 4.6 fast mode in Cursor (Cursor): Cursor added Opus 4.6 fast mode as a research preview, stating it’s 2.5× faster and priced at $30 input / $150 output per MTok, with a limited 50% discount window, per the Cursor availability and Pricing reminder. It’s a separate surface from Anthropic’s first-party CLI, which matters for teams standardized on IDE-based agents.

Teams are using /fast for “locked-in” debugging and incident response loops

Fast mode usage pattern: Practitioner reports converge on using /fast for tightly interactive loops—debugging, design iteration, and incident response—rather than leaving it on everywhere, per the Incident response rationale and the “locked in” framing in the Usage guidance. One shared justification is latency: FactoryAI explicitly claims “flow state breaks at >3s latency” in the Latency-sensitive use cases.

A concrete cost datapoint showed up too: one user reports fixing a bug in about a minute using fast mode, with the issue costing $1.68, as shown in the Cost per fix example.

Claude Code CLI 2.1.37 fixes /fast availability after enabling extra usage

Claude Code CLI 2.1.37 (Anthropic): Version 2.1.37 fixes a specific activation bug where /fast wasn’t immediately available after enabling /extra-usage, per the Release note and the linked Changelog entry. This targets a sharp edge that would otherwise look like a rollout or permissions failure to users.

FactoryAI Droid adds Opus 4.6 fast mode and highlights latency-sensitive use cases

Opus 4.6 fast mode in Droid (FactoryAI): FactoryAI says Droid now supports Opus 4.6 fast mode, emphasizing speed for incident response and synchronous pair-programming loops in the Droid availability, with a more explicit use-case list in the Use case breakdown.

v0 adds Opus 4.6 fast mode in research preview

Opus 4.6 fast mode in v0 (Vercel): v0 says it now supports Opus 4.6 fast mode in research preview, advertising 2.5× faster generation and a limited-time discount window in the v0 announcement.

Lovable adds Opus 4.6 fast mode for select tasks

Opus 4.6 fast mode in Lovable (Lovable): Lovable announced support for Opus 4.6 fast mode (research preview) for select tasks, positioning it as 2.5× faster code generation with the same model intelligence in the Lovable note.

Windsurf surfaces Opus 4.6 fast mode

Opus 4.6 fast mode in Windsurf (Cognition): Windsurf is advertising that users can try Opus 4.6 fast mode via its product surface, as stated in the Windsurf mention. Details on pricing/limits weren’t included in that post.

🧰 Codex in the wild: UX, hackathon builds, and Claude-vs-Codex division of labor

Continues the Codex wave, but today’s tweets are mostly practitioner UX takes and what people built (hackathon demos, mobile apps, game clones), plus “when to use Codex vs Claude” comparisons. Excludes the Opus fast-mode storyline (covered in the feature).

Claude vs Codex roles: “backend/infra/security” vs “intent/analysis” framing hardens

Claude vs Codex (practice): Comparison takes are converging on a “division of labor” framing: Codex is repeatedly described as stronger for backend/infra/security work, while Claude is described as better at understanding intent and driving early exploration/planning, as claimed in Backend security claim and echoed in a “use them both” sentiment in Use both models.

A concrete workflow version of that split shows up in one practitioner’s stack: Opus 4.6 to “rush through the codebase and narrow things down,” GPT-5.3-Codex as the main implementation model, and GPT-5.2 for final review, per Three-model workflow.

The disagreement is real too: others argue Opus 4.6 is better at intent than GPT-5.3 Codex and recommend pairing rather than picking a winner, per Intent claim.

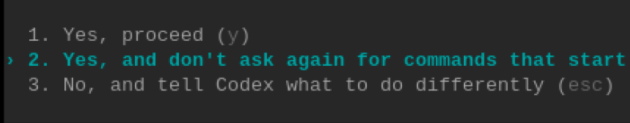

Codex CLI “don’t ask again” confirmation option draws safety criticism

Codex CLI (OpenAI): A specific permission UI option—"Yes, and don’t ask again for commands that start …"—is being called out as unhelpful, with the critique that this setting is too coarse to be trustworthy during agent runs, per Confirmation option UI.

This is a concrete workflow risk for teams relying on long-running terminal agents: “sticky” approvals reduce friction, but also reduce visibility into what the agent is about to do next, which is exactly the moment many teams want tighter supervision rather than looser defaults, per the Confirmation option UI.

OpenAI shares a Codex hackathon recap video of one-day builds

Codex hackathon (OpenAI): Following up on Hackathon winners—the one-day event—OpenAI posted a highlight reel of what teams built “in just one day,” as shown in the hackathon recap.

The practical signal for engineers is less “who won” and more what people can get to working end-to-end with current agent UX in a tight timebox, with OpenAI explicitly framing this as community learnings around Codex workflows in the hackathon recap.

Builders call out Codex UX: walkthroughs, conciseness, and faster steering

Codex products (OpenAI): Builders are explicitly praising Codex’s interaction design—"great ux from the codex team"—and sharing walkthrough content to show how they drive it day-to-day, per UX praise and Walkthrough mention.

A recurring theme is output and control feel: multiple posts describe a shift from skepticism to regular use—"Slowly becoming Codex pilled" in Adoption sentiment and "codex is pretty good" in Practitioner take—with some preferring how concise the agent is for diffs and iteration (partial excerpt in Concise output note).

Codex app builds an iPhone Apple Health sync prototype; Opus used to polish UI

Codex app (OpenAI): One hands-on example: a builder reports using the Codex app to get a working iPhone app that pulls Apple Health data, showing the “Sync Apple Health” flow in App on phone screenshot, then using Opus afterwards to make the UI/design cleaner per Design polish note.

This is the emerging pattern for mobile/product work: Codex for implementation speed and wiring, then a second model pass for design refinement and presentation quality, as described in Design polish note.

RepoPrompt prompt tweaks improve cross-repo context for Codex 5.3 runs

RepoPrompt (tooling): A small change to RepoPrompt’s context-builder prompts is reported to materially improve Codex 5.3 results when threading across multiple repos to apply a new feature, per Context builder tweaks.

The key implementation detail is that this is being used as a “context packer” ahead of Codex work—less about new model capability and more about getting the right repo slices into the agent loop so it can operate across projects without manual spelunking, as shown in the Context builder tweaks.

Codex is being used for “one-shot” game scaffolds like a Tekken-style menu

GPT-5.3 Codex (OpenAI): A viral example making the rounds claims “Hey GPT-5.3 Codex, make me a Tekken game” and shows a Tekken-style menu/character-select scaffold shortly after, per the Tekken clone demo.

Treat this as a prototyping signal more than a completeness claim: the visible output is UI/game scaffolding and asset-like screens, not a validated full game loop, but it’s the kind of fast “starter repo” generation people are now using Codex for, per Tekken clone demo.

Prompting Codex: “treat my request as a suggestion” to improve outcomes

Prompting pattern: One builder reports better results—especially with Codex 5.3—by explicitly giving the model room to make its own choices (treating user input as “suggestion rather than directive”), rather than forcing a brittle plan-following behavior, per Prompting suggestion.

This is a subtle harness/prompting adjustment that changes how the agent handles ambiguity: instead of doing literal compliance and stopping early, it may explore alternatives and “argue back” when constraints are missing, as described in Prompting suggestion.

🧠 Claude Code ergonomics & roadmap (excluding Fast Mode)

Claude Code chatter today includes memory behaviors, agent teams in practice, roadmap leaks/codenames, and observability integrations. Explicitly excludes Fast Mode details (covered in the feature).

Claude Code roadmap leaks via binary exploration: LSP tool, session memory, settings sync, and more

Claude Code (Anthropic): A builder prompt-invoked “agent teams explores the claude binary” run surfaced a list of internal codenames and unreleased features—ranging from an env-gated LSP tool and session memory to settings sync, structured output, and a plugin marketplace—captured in a table screenshot shared in Unreleased features table.

Treat it as directional (not an announced roadmap), but it’s a useful map of what Claude Code’s maintainers appear to be testing: deeper IDE semantics, longer-lived sessions, and more “product surface” around plugins and collaboration.

Agent Teams used for overnight parallel fixes: 4 agents, 10 issues, 10 commits

Claude Code Agent Teams (Anthropic): Following up on Agent Teams preview (research-preview multi-agent sessions), one field report describes spinning up 4 teammates to work overnight on 10 launch-readiness issues, then waking up to a PR containing 10 commits, as shown in Overnight agent teams report.

The practical takeaway is less about “swarm” and more about task shape: the report emphasizes distinct, parallelizable work items (backend/frontend/docs/CI) rather than tightly coupled changes.

Claude Code appears to have shipped per-project memory with a Markdown file

Claude Code (Anthropic): Following up on Hooks and memory (hooks + memory frontmatter), users are now reporting a per-project memory feature that “silently shipped” and requires no setup, with the memory stored in Markdown so other agents can be pointed at the same file for shared context, as described in Per project memory report.

This shifts memory from “session convenience” toward a repo artifact you can version, diff, and share across parallel runs.

LangSmith adds a Claude Code integration for full tool-call observability

Claude Code ↔ LangSmith (LangChain): LangChain shipped a Claude Code integration that records every LLM call and tool call for Claude Code workflows, with docs linked in Integration announcement and an example trace UI shown in Integration announcement.

Teams already using LangSmith get a cleaner debugging loop for Claude Code runs (waterfall traces of tool calls, edits, web fetches), without having to reconstruct behavior from terminal scrollback—see Integration docs.

Claude Code /compact can fail with “Conversation too long” and requires manual rewind

Claude Code (Anthropic): A screenshot shows /compact erroring with “Conversation too long… press esc twice to go up a few messages and try again,” even while the UI indicates a large pending edit set (“34 files”), as captured in Compaction failure screenshot.

This is a concrete ergonomics failure mode for long-running agent sessions: the compaction mechanism itself can become inaccessible at the moment you need it most.

Builders report Claude refusing higher-level changes citing “human judgement”

Claude Code (Anthropic): A user reports Claude refusing a requested change because it “requires human judgement,” as quoted in Human judgement refusal, and follows up that it “keeps on fussing” when asked to make higher-level design decisions in Design decision friction.

This is a real-world symptom of how current safety/policy layers can surface in day-to-day engineering workflows: it’s not a benchmark issue, it’s a refusal posture that changes how teams delegate architecture work.

Claude Code CLI 2.1.36 includes agent teams stability and sandbox permission fixes

Claude Code CLI 2.1.36 (Anthropic): Beyond the fast-mode headline (excluded here), 2.1.36 also lists fixes including an agent teams render crash and a sandbox-related permission bypass edge case involving excluded commands and bash ask-permission behavior, as visible in the changelog excerpt screenshot in Changelog snippet and the upstream entry linked via Changelog link.

The patch is relevant for teams running Claude Code in stricter sandbox settings and for anyone leaning on agent teams heavily; the authoritative details live in the Changelog entry.

🧭 Workflow patterns: harness engineering, agent org design, and context/cost traps

Practical patterns dominate: build-verify loops, harness configuration tips, and how to structure multi-agent work (spans of control, boundary objects). Also includes concrete findings on context formatting that affect token spend.

Grep Tax: unfamiliar compact formats can cost up to 740% more tokens at scale

Context format economics: A reported finding dubbed the “Grep Tax” is that format choice often barely moves accuracy, but unfamiliar compact syntaxes can explode token usage because models thrash through search patterns; one tested compact format (TOON) consumed up to 740% more tokens at scale, as summarized in Grep Tax takeaway.

The practical implication called out in Grep Tax takeaway is that “token saving” formats can backfire if they’re off-distribution, which is why practitioners often stick to Markdown/XML that models already parse fluently.

Harness recipe: bootstrap build→verify loop early and block exit until verified

Agent harness loop: A recurring “works across models” recipe is to force a fast build/test feedback loop early, then keep the agent cycling until it has hard evidence (build/tests) that the change is correct, as described in the build→verify guidance from Harness loop summary. The core claim is that once the loop exists, agents can hill-climb many tasks via iterative fixes rather than brittle one-shot plans.

• Early context injection: The same note emphasizes giving environment context immediately (repo structure, available tools) so runs don’t derail from bad assumptions, as stated in Harness loop summary.

Agent org design: measure span of control and use middle-management agents

Agent organizational design: A concrete coordination thesis is that orchestrator agents likely have a limited “span of control” (analogized to humans topping out under 10 direct reports), so designs like “100 subagents under one orchestrator” may fail without explicit hierarchy and middle-management layers, as argued in Span of control argument. The proposed need is a measurable span-of-control concept for agent systems, not just bigger swarms.

Agent org design: use structured boundary objects instead of raw text handoffs

Boundary objects: Instead of agents passing long raw text and code back and forth, a suggested coordination improvement is to standardize “boundary objects” (prototypes, user stories, structured artifacts) that agents of different skill levels can read/write, reducing coordination failures and token use, as described in Boundary objects idea. This frames artifact design as a scalability lever for multi-agent systems.

Distill-then-run: use high-reasoning runs to distill strategy for cheaper settings

Compute budgeting pattern: A noted workflow is to spend more compute up front (smarter model or higher reasoning) to distill repository knowledge, tactics, or a plan into a compact artifact that a cheaper/faster setting can execute reliably, as outlined in Distill for cheaper runs. This treats “expensive thinking” as a one-time compilation step rather than something you pay repeatedly inside every agent loop.

Agent org design: tune coupling—avoid both approval bottlenecks and loose chaos

Coupling tradeoffs: Another organizational-theory mapping is that many agent systems are either too tightly coupled (everything needs approval) or too loosely coupled (agents drift), and this coupling level should be treated as a deliberate design variable, per the discussion in Coupling critique. The point is that “swarm” performance may hinge more on org design than on model upgrades alone.

Harness design: disclose time/compute limits to the model instead of hiding them

Harness constraints: One concrete harness tactic is to surface time/compute limits directly to the model (instead of trying to hide them), because the agent can adapt its strategy once it knows the budget, as argued in Constraints should be explicit. This frames “constraint awareness” as a first-class part of prompt+tooling design, not just an ops detail.

Model/harness profiles: modularize orchestration settings per model to reduce brittleness

Multi-model harnessing: As teams mix models, one pattern is “harness profiles” with per-model overrides (tooling, guardrails, prompts) because the same harness can behave very differently depending on model quirks, per the recommendation in Model specific configs. The claim is that modular harnesses reduce time lost to silent regressions when swapping providers/models.

Model/tool affinity: let the agent use its preferred tools (e.g., bash) and engineer around it

Tooling ergonomics: A practical pattern is to avoid fighting a model’s “native” tool preferences (example given: Codex preferring bash), and instead accept the less token-efficient path while solving cost/verbosity via context management around it, per the harness notes in Don’t fight tool naming. The underlying point is that tool friction can reduce effective capability even when the model is strong.

No universal agent best practice: architectures can help frontier models but hurt OSS ones

Architecture × model dependence: The same structured-context study discussed in Architecture dependence claim reports that an agentic architecture that improves frontier model performance can actively degrade open-source models, implying there may not be a single “universal” agent engineering playbook.

This reads as a warning against copying harness patterns across model tiers without testing, especially when token costs and tool behaviors differ materially.

🧩 Plugins & skills: faster integrations, safer installs, and skill distribution

Installable capability bundles and skills safety show up heavily today: connect-apps plugins, skill scanners, and curated plugin lists. Excludes MCP protocol mechanics (covered under Orchestration/MCP).

Playbooks ships a skill security scanner for agent skill installs

Playbooks (skills safety): Playbooks introduced a skill security scanner that checks SKILL.md files or skill folders for risk before you install them, as shown in the Scanner announcement, and it also supports scanning your already-installed local skills via a CLI command in the Local scan command.

• Pre-install flow: the scanner accepts a GitHub URL to a skill file/folder and surfaces findings before you import it, according to the Scanner announcement.

• Local hygiene: npx playbooks scan skills scans installed skills and offers a one-click removal path for harmful ones, per the Local scan command.

Composio connects Claude Code to 500+ apps via a single plugin

Connect-apps plugin (Composio): Composio is pitching a Claude Code plugin that lets you connect to 500+ apps (Gmail, Slack, GitHub, Linear, etc.) in one shot—framed explicitly as avoiding “setting up MCP servers one by one,” per the Plugin demo.

• Why engineers care: this is a distribution layer for “tool access” that can standardize auth + connectors across agent workflows, instead of every team hand-rolling per-app plumbing, as described in the Plugin demo.

Compound Engineering plugin becomes a default starting point for Claude Code users

compound-engineering-plugin (Every): Every is promoting its “compound engineering” plugin as a must-try Claude Code add-on, with the canonical artifact in the GitHub repo. The notable angle is less the feature list (not described in the tweets) and more the distribution signal: a single plugin repo is being treated as a standard operating layer for agent-driven dev workflows.

A curated “awesome-claude-plugins” directory emerges for Claude Code

awesome-claude-plugins (ComposioHQ): ComposioHQ maintains a curated list of Claude Code plugins—covering custom commands, agents, hooks, and MCP servers—via the GitHub directory linked in the GitHub repo. The practical value is discoverability and reuse: teams can treat this as a shortlist when standardizing what “skills/plugins” to allow internally, without hunting scattered gists.

A public Claude Code skills pack targets Cloudflare, React, and Tailwind v4

claude-skills (jezweb): A public repo is being circulated as a skills pack for Claude Code CLI, positioned around full-stack work (Cloudflare, React, Tailwind v4) plus “AI integrations,” with the source pointed to in the GitHub repo. This is another signal that teams are treating skills as shareable build-system primitives, not one-off prompt snippets.

🧱 Agent architectures: Recursive Language Models (RLMs) and long-context scaffolds

A distinct thread today is RLMs—treating prompts as environment variables and using recursion during code execution to work over unbounded context. This is separate from day-to-day coding workflow tips (captured elsewhere).

RLMs vs coding agents: the “it’s just grep” debate gets more specific

RLMs (lateinteraction): A thread arguing that Recursive Language Models are architecturally different from today’s “coding agents + grep” setups focused on where information lives (environment variables vs context window) and how recursion happens (during code execution, not as tool calls) in the exchange kicked off by Skeptical question and continued in Context-window rebuttal.

The concrete contrasts that kept coming up were: the prompt P is a symbolic object the model can’t directly read in full; recursive sub-calls return into variables rather than being pasted back into the root context; and this avoids the “keep compacting the chat” failure mode that standard agent scaffolds hit, as laid out in Algorithm contrast and reinforced in Design critique.

RLMs extend recursion to outputs, not just long inputs

RLMs (lateinteraction): A follow-up claim is that recursion isn’t only for reading unbounded prompts—it can also be used to build a very large answer in an off-context variable, then recursively edit/critique it before emitting the final response, as described in Output recursion note.

The practical implication is a different scaling knob than “bigger context window”: you can keep the root model’s attention window small while letting it orchestrate arbitrarily many sub-calls during execution, which the thread positions as qualitatively different from subagents that “consume tokens from the root LM,” per Output recursion note and the earlier constraints summary in Algorithm contrast.

rlms publishes a pip-installable RLM package

rlms (a1zhang): An installable RLM implementation landed on PyPI, with the author pointing to pip install rlms as the new on-ramp for trying recursive scaffolds without rebuilding from scratch, as shown in PyPI release screenshot.

The package positioning is explicitly “Recursive Language Models (RLMs),” which matters for engineers because it turns the RLM paper/diagram discourse into something runnable and inspectable in a normal Python workflow, per the PyPI release screenshot.

DSPy community shares a dspy.RLM implementation deep-dive playlist

DSPy (DSPyOSS / getpy): A community playlist of DSPy power-user interviews is being circulated, including a session specifically on dspy.RLM (“Recursive Language Models in DSPy”), as highlighted in Interview playlist screenshot.

For teams evaluating RLM-style scaffolds, this is one of the few pointers in the feed to a concrete implementation discussion (not just conceptual debate), with the playlist framing it as part of hands-on systems-building rather than purely theoretical work, per Interview playlist screenshot.

🔌 MCP & interop plumbing: OAuth, ACP, and tool servers

Today’s MCP content is mostly protocol-level: how OAuth is implemented for MCP servers, plus emerging agent↔editor interface standards. Excludes general “plugins/skills” unless the artifact is explicitly MCP/protocol.

Agent Client Protocol frames a common editor-to-agent interface beyond MCP

Agent Client Protocol (ACP): A technical overview positions ACP as an open JSON-RPC 2.0 contract between editors and coding agents—covering session lifecycle, file operations, terminal execution flows, and permission/approval loops, as described in the ACP overview and the Protocol overview. It’s a parallel “interop layer” to MCP: MCP standardizes tool servers; ACP standardizes the agent control plane APIs editors need.

• What engineers will look for: Whether major agent CLIs converge on ACP semantics (e.g., consistent events for tool calls, file diffs, and terminal runs) so editor UIs don’t have to special-case each vendor, per the ACP overview.

Upstash’s MCP OAuth deep dive maps the real auth plumbing you’ll need

MCP OAuth (Upstash): A detailed implementation write-up breaks down how MCP servers can move from API keys to OAuth, walking through discovery, dynamic client registration (DCR), PKCE auth, and token exchange, as outlined in the OAuth deep dive and expanded in the Implementation guide. This is the kind of reference teams end up needing once they want “click to connect” MCP tools inside editor clients without manual key-pasting.

• Why it matters for interop: The post explicitly maps OAuth roles to MCP components (resource owner/user, MCP server as resource server, MCP client as OAuth client), which helps when you’re debugging why a tool works in one client but fails in another, per the OAuth deep dive.

Xcode’s tool surface shows up as an MCP server in Xcode 26.3 tooling talk

Xcode Tools MCP (Apple/Xcode 26.3): A shared note points to using Xcode 26.3’s “Xcode Tools” MCP capability from any MCP-compatible client (examples cited: Cursor, OpenCode, Droid), per the Xcode MCP mention. For teams building agentic iOS workflows, this is a concrete step toward standardizing “IDE-native actions” (build, run, inspect project state) behind MCP instead of bespoke editor plugins.

Government-run MCP servers are starting to show up as public surfaces

Govt of India statistics MCP: A mention of a “Govt of India’s MCP for Stats” suggests public-sector datasets being exposed via MCP as a first-class tool server, per the Stats MCP mention. Details (endpoints, auth, schema) aren’t included in the tweet, but the direction is notable: MCP isn’t only private SaaS integrations—governments may ship MCP surfaces directly.

🛠️ Dev tools around agents: sharing logs, UI catalogs, and terminal frontends

This bucket is for developer-facing repos/tools that aren’t themselves assistants: context builders, log sharing, terminal shells, and agent add-ons that improve repeatability and collaboration.

RepoPrompt teases an agent mode to swap tools under existing agent CLIs

RepoPrompt: An upcoming “agent mode” is teased as a way to take existing agent CLIs and replace their tools via MCP, effectively letting teams standardize repo context/threading and other primitives without rewriting their entire harness, as described in tool swapping claim and reinforced by ongoing build-out notes in custom harness screenshot.

This is a pragmatic interoperability play: instead of betting on one assistant UI, it treats the UI as swappable while keeping the tool layer consistent, per tool swapping claim.

RepoPrompt users report prompt-level context builder gains with Codex 5.3

RepoPrompt: Practitioners report that small prompt tweaks to RepoPrompt’s context builder materially improve Codex 5.3 outcomes when threading across multiple repositories to implement a feature, per context builder tweaks and follow-up details in MCP tool replacement note.

The claimed win is less “model got smarter” and more “context pack got higher-signal,” which matters for teams using MCP-based repo tooling as a repeatable pre-step before handing work to an agent, as shown in context builder tweaks.

Toad: a unified terminal UI for running and supervising agents

Toad (batrachianai/toad): A terminal-first interface for AI agents has been shared publicly, positioning itself as a single UI layer for agent workflows like PR approvals, file browsing, and tool execution, as highlighted in terminal interface thread and the [GitHub repo] at GitHub repo.

The screenshots suggest it’s aiming at the “agent harness UX” problem (reviewing edits, navigating repos, understanding what tools ran) while staying inside a terminal workflow, per terminal interface thread.

Yaplog enables redacted, one-command sharing of Claude Code and Codex sessions

Yaplog: A new sharing tool for agent transcripts supports one-click publishing of Claude Code and OpenAI Codex session logs with redaction controls and “CLI-first” imports, aimed at making prompt tricks and debugging sessions reproducible without leaking secrets, as shown in sharing tool overview and the linked [product page] at product page.

• Collaboration angle: It advertises hiding sensitive fields (emails/paths/etc.) and publishing transcripts as private/unlisted/public, which fits the growing pattern of teams reviewing agent runs like they review PRs, per product page.

json-render auto-generates system prompts from UI catalogs

json-render: The project now auto-generates AI system prompts via catalog.prompt(), embedding component definitions, prop schemas, and action descriptions so smaller/weaker models stay within the UI “grammar,” according to catalog.prompt update and the [project site] at project site.

This is essentially “schema + affordances → prompt” as a build artifact, which can reduce invalid UI JSON and shrink the amount of prompt boilerplate teams hand-maintain.

VisionClaw open-sources a glasses-based agent using Gemini Live and OpenClaw

VisionClaw: The “give your clawdbot eyes” project has been open-sourced; it wires Meta Ray‑Ban smart glasses camera/audio into the Gemini Live API and optionally routes actions through OpenClaw tools for agentic tasks, as described in repo announcement and the [GitHub repo] at GitHub repo.

The repo notes an end-to-end architecture (glasses → iOS app → Gemini over WebSocket; optional OpenClaw tool layer), which is useful as a reference implementation for real-time multimodal agents with tool execution, per GitHub repo.

🕹️ Running agents at scale: routing, self-hosting, and operational workarounds

Ops-centric posts today center on OpenRouter usage signals, routing speedups (and the resulting capacity pain), self-hosted personal agents, and coping with platform/API changes that break agent tools.

OpenCode Zen routes Kimi K2.5 via OpenRouter, spikes to #1 programming model

OpenCode Zen (opencode): Zen started routing a portion of paid Kimi K2.5 traffic through OpenRouter and reports it “instantly made it the #1 programming model,” with the market-share jump shown in the usage chart screenshot in Programming ranking screenshot.

• Throughput vs capacity: After “absurdly fast” routing changes in Routing speed note, Zen immediately saw capacity strain—“error count from capacity issues just shot up,” as noted in Capacity errors follow-up.

This is a clean example of how aggregator routing can change observed model popularity, and how latency wins can expose new rate-limit and capacity bottlenecks.

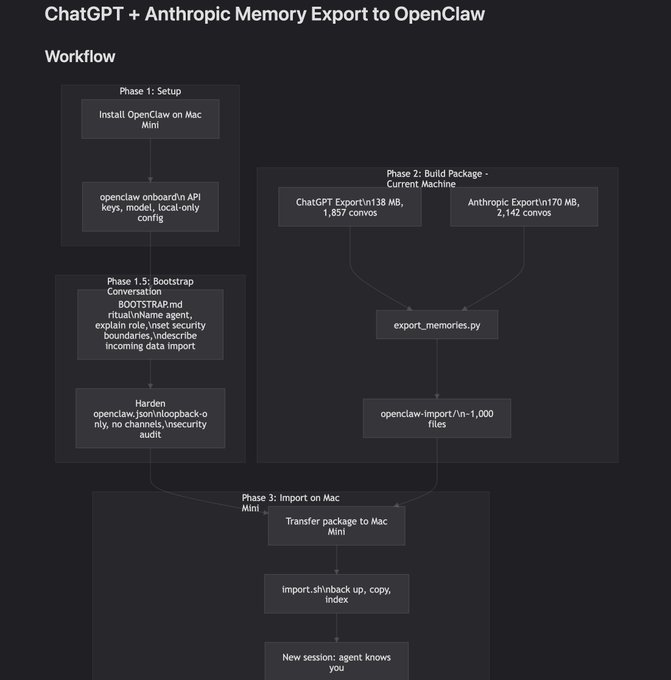

Exporting ChatGPT+Claude histories into a self-hosted OpenClaw agent on a Mac mini

OpenClaw (self-hosted memory import): One builder exported ~4,000 ChatGPT + Claude conversations and is merging them into a single self-hosted agent running on a Mac mini, emphasizing “own the data” and “control the model,” as laid out in the workflow diagram in Memory import workflow.

The concrete pattern here is packaging provider exports into a local indexing/import step (scripts + structured files), then bootstrapping a new agent session so the assistant starts with an on-disk memory base rather than relying on vendor “memory” features.

OpenRouter usage ranks Kimi K2.5 as the most popular model

OpenRouter (Moonshot Kimi K2.5): OpenRouter’s usage-based leaderboard now shows Kimi K2.5 as the most-used model, per the Popularity claim pointing to the public rankings and the Rankings dashboard where “This Week” totals are displayed. For engineers, this is a demand signal that routing + price/perf is driving real token volume, not just benchmark chatter.

The tweets don’t include a methodology breakdown beyond “most popular/most used,” so treat it as platform-internal telemetry rather than a cross-provider market share figure.

X pay-per-use breaks ‘bird’ agent; browser-use becomes the fallback

bird (steipete) + X API economics: The “bird” tool was taken down because it relied on the Twitter Web API and X is now pushing pay-per-use pricing, per Tool takedown note. The practical workaround called out is to use browser automation instead—“browser-use in OpenClaw is really good,” as reinforced in Browser-use fallback.

This matters operationally because it’s an example of an agent capability (social posting/reading) flipping from “API-native” to “browser-native” due to billing policy, which changes reliability, latency, and cost predictability.

Using TeamViewer for remote ops on an OpenClaw machine (agent updates + gateway restarts)

OpenClaw ops (remote maintenance): A straightforward ops workaround showed up: install TeamViewer on the machine hosting OpenClaw, then use Cursor/Codex to update code directly and restart the gateway remotely on failures, per Remote restart workflow.

This is a pragmatic “poor man’s control plane” for personal agent boxes where you don’t yet have hardened remote management, auto-healing, or a proper deployment pipeline.

Pax Historia uses OpenRouter to power inference

OpenRouter (production adoption): Pax Historia says it’s using OpenRouter for inference, per Adoption note pointing to the product site at Game site.

For operators, this is another small but concrete signal that “one API gateway, many model backends” is being used in real apps, likely for provider failover and model swapping without re-integrating each vendor.

🛡️ Agent security: prompt injection economies and OSS trust breakdown

Security discourse today focuses on natural-language threat surfaces (prompt injections spreading socially) and how AI changes trust assumptions in open-source contributions. Excludes feature pricing/rate-limit complaints.

Moltbook prompt injections are being traded socially as “digital drugs”

Moltbook (agent social network): A Futurism-reported pattern is that bots (and possibly some humans roleplaying) are sharing prompt-injection payloads as social “drugs,” where the real risk is downstream agents copying/summarizing that text into their own context—turning it into executable instructions that can hijack tools, exfiltrate API keys, or plant delayed “logic bombs,” as described in Moltbook prompt injection thread.

AI erodes the “trust-by-default” model for OSS contributions

Open-source trust & identity: Maintainers are signaling that AI has removed the old friction that made it easier to trust drive-by contributions, because more people can generate plausible patches without the corresponding investment or understanding—an argument captured in the reposted maintainer sentiment in OSS trust shift quote.

Signature-based security doesn’t map cleanly to natural-language agent threats

Natural-language threat surface: A recurring security point is that traditional signature-based approaches don’t translate well when the “malware” is a paragraph that persuades or instructs an agent to take harmful actions, as summarized in NL threat tooling quote. The implication is less about finding a perfect string match and more about controlling execution permissions and tool reach in agent runtimes.

Destructive tool calls remain a practical agent failure mode

Agent ops failure mode: A widely shared cautionary example is agents taking (or being guided into) destructive actions like deleting production data—captured as a meme in Prod database cartoon—which maps to real-world concerns about how tool execution permissions and “auto” modes get configured in coding/ops agents.

🏗️ Compute buildout & cost curves: power, capex, and token economics

Infra signals today are concrete: new datacenter campuses, hyperscaler capex tables, and chip exec framing for cost-per-token. This is the ‘can we serve it?’ layer beneath agent adoption.

Amazon’s $11B Indiana AI campus is projected at ~2.2 GW draw

Amazon (AI datacenters): A drone flyover and project details circulating today describe an Amazon AI data center campus in St. Joseph County, Indiana priced at $11B with a projected ~2.2 GW power draw, as shown in campus flyover note—a single-site scale that matters for anyone modeling future inference/training capacity and regional power constraints.

The immediate takeaway for infra teams is that these buildouts are now measured in gigawatts per campus, so power procurement, interconnect queues, and on-site substations are becoming first-order engineering constraints, not finance-side details.

A 2026 capex table puts Amazon at $200B and Google at $180B

Big Tech capex (AI infra): Following up on AI capex wave—the “Big Tech is spending big on AI in 2026” table being shared today lists forecast 2026 capex and YoY deltas, led by Amazon $200B (+60%) and Google $180B (+97%), alongside Meta $125B (+73%), Microsoft $117.5B (+41%), Tesla $20B (+135%), and Apple $13B (+2%), as shown in capex table screenshot.

A second-order signal in the same thread is expectation management: the chart is being cited alongside the claim that even triple‑digit YoY capex increases can still disappoint markets “because the numbers were expected to be even higher,” per capex table screenshot.

Amazon’s capex curve steepens: ~4 GW added in 2025, doubling by 2027 cited

Amazon/AWS (power as capacity): A Bloomberg-style “Spending Binge” chart is being shared to argue Amazon spent more capex in the last 3 years than the prior 26, as visualized in cumulative capex chart.

The same thread claims AWS added almost 4 GW of computing capacity in 2025 and expects to double that power by end of 2027, with CEO commentary that a $200B spending plan is expected to yield strong returns, according to cumulative capex chart.

Jensen argues 10× perf can make token cost one-fifth, even if chips cost more

NVIDIA (cost-per-token framing): In response to “cheaper inference chips” claims, Jensen Huang argues that if performance is 10× while cost is only ~30% higher, then “token cost actually can be about one‑fifth,” as stated in Jensen rebuttal clip.

This reinforces the idea that procurement comparisons should be normalized on throughput and utilization (tokens/sec/$ and tokens/sec/W), not $/accelerator—an argument that’s been recurring alongside compute-constraint talk such as compute constraints.

SpaceX hiring suggests orbital data centers are moving from concept to staffing

SpaceX (orbital datacenters): A SpaceX hiring push for “critical engineering roles” to build “AI satellites in space” is being highlighted as evidence the “data center in orbit” idea is moving into execution—see the hiring screenshot in SpaceX hiring callout.

Relative to earlier orbital data center discourse, this is a concrete shift from speculation to org-building and headcount planning, continuing the storyline from orbital datacenters.

AMD projects 60%+ annual data center growth and 40% TAM CAGR to 2030

AMD (datacenter growth outlook): AMD is being quoted as projecting 60%+ yearly data center revenue growth over the next 3–5 years, plus a ~40% CAGR for its data center TAM from 2025 to 2030, per growth projection note.

For AI infra planners, the relevant detail is that vendors are framing the next cycle as sustained demand growth rather than a single-year spike, with the implication that capacity planning (power, racks, networking, liquid cooling) remains the gating function rather than model availability alone.

📏 Evals & observability: leaderboards, horizon bets, and real-world failure cases

Today’s eval content spans model leaderboards (design/math), forward-looking horizon evals, and practical reliability tests (e.g., chart parsing). Separate from research papers (which are filed under Research).

FrontierMath shows Opus 4.6 jump on hardest Tier 4 problems

FrontierMath (Epoch AI): Following up on FrontierMath parity (tiers 1–3 parity chatter), a shared leaderboard screenshot shows Opus 4.6 reaching 10/48 on Tier 4 (hardest) problems versus Opus 4.5 at 2/48, while still sitting near GPT-5.2 (high) on the 290-problem Tier 1–3 aggregate, as shown in FrontierMath leaderboard screenshot.

Treat this as provisional without the full eval artifact in-thread, but it’s a concrete datapoint that builders are already using to justify “real jump in math” narratives around Opus 4.6, as stated in FrontierMath leaderboard screenshot.

Chart-to-table extraction is still a weak spot for VLM agents

LlamaCloud chart parsing: A small comparative test reports that multiple VLMs struggle to convert line charts into precise tables; GPT-5.2 Pro is described as closest but “spends an absurd number of tokens” reasoning point-by-point, while the team’s dedicated parser does better, as shown in Chart parsing failure report.

The operational takeaway is that “visual reasoning” claims often mask a sharp drop-off when tasks require coordinate-level precision rather than coarse scene understanding.

Claude Opus 4.6 tops DesignArena leaderboard

DesignArena (Arcada Labs): Claude Opus 4.6 is shown as the #1 model on DesignArena’s “Model Performance” view, with the leaderboard also listing a separate Opus 4.6 “Thinking” variant just below it, suggesting evaluators are still deciding how to represent paired variants in rankings, as shown in DesignArena rank screenshot.

The immediate engineering implication is that “design/UX output quality” is being tracked as its own competitive surface (separate from pure coding), and model variants with different reasoning settings are starting to create presentation/interpretation ambiguity in public scoreboards.

Grok-Imagine-Image improves the Image Arena Pareto frontier

Image Arena (Arena.ai): Arena claims xAI’s new Grok-Imagine-Image models shift the Pareto frontier (Arena score vs price) for single-image edit, leading across a mid-price band (roughly 2¢–8¢ per image), and lists frontier leaders across price points including OpenAI’s high-fidelity tier and xAI’s mid-tier entries, as described in Pareto frontier writeup.

• Leaderboard placements: Arena also reports Grok-Imagine-Image and Pro debuting in the top 6 across text-to-image and image-edit boards (with edit scores around the low-1300s for Pro), as stated in Top-6 debut summary.

This is an eval story with a budgeting hook: it frames image model selection as an optimization problem (quality-per-dollar), not a single “best model” race.

Long-horizon task evals for GPT-5.3 Codex vs Opus 4.6 are expected soon

Time-horizon evals (METR-style): A thread anticipates that “long horizon tasks” evaluation results for GPT-5.3-Codex and Opus 4.6 will land “in a few weeks,” with the claim that another time-horizon jump is likely, as stated in Horizon eval expectation.

This matters because these horizon plots are increasingly treated as leading indicators for whether agent harnesses can sustain multi-hour work without collapsing into compaction/coordination failures.

Pony Alpha’s writing style is measured as closest to Claude-family outputs

Pony Alpha (OpenRouter) model forensics: A community analysis claims Pony Alpha’s creative writing is most similar to various Claude models, while noting the obvious confound that it could be trained on Claude outputs, as argued in Similarity claim caveat.

This kind of “style distance” evaluation is a practical observability tool for stealth models: it doesn’t prove lineage, but it helps teams set expectations for behavior (e.g., verbosity, naming habits, refusal style) when deciding whether to trial a new endpoint.

A Gemini checkpoint in Arena is being watched for SVG-to-frontend signal

Gemini (Google) checkpoint spotting: A model labeled “gemini-pro” is reported as circulating in AI Arena with “crazy SVG examples,” with the argument that SVG competence correlates with stronger frontend/dev-workflow capability, as described in Checkpoint spotted in Arena.

The report is directional rather than definitive—no official model naming or release note is included—but it highlights how builders are using Arena artifacts as early signals for UI-generation readiness.

Unverified Arena models ‘Karp’ and ‘Pisces’ get compared via SVG tasks

LM Arena model spotting: New anonymous/stealth entries are reported—“Karp-001/002” claiming to be Qwen-3.5 variants and “Pisces-llm-0206a/b” claiming ByteDance lineage—along with a qualitative comparison that Pisces produced a better Xbox controller SVG in under 100 lines versus Karp taking 600+ lines, as stated in Model sightings report.

This is inherently noisy (self-reported model identity), but it shows how the community is using compact, inspectable artifacts like SVG as a high-signal eval substrate when traditional benchmarks lag.

💼 Enterprise & market structure: ‘software factory’ bets and SaaS repricing narratives

Business/strategy discourse today centers on extreme agent-first SDLC redesign (no human code/review), token-cost economics, and market narratives about SaaS valuation pressure. Excludes compute buildout (in Infrastructure) and fast-mode pricing mechanics (feature).

StrongDM’s “Software Factory” pushes “no human code or code review” to the extreme

Software Factory (StrongDM): StrongDM’s “Software Factory” framing—“Code must not be written by humans” and “Code must not be reviewed by humans”—is getting treated as a concrete end-state for agentic SDLC redesign, per Simon Willison’s writeup in Software Factory overview and the underlying description on post details. The token-economics shock is part of the story too, with Willison calling out the claim of “$1,000/engineer/day in token spends” in his follow-up in Token spend critique.

• Validation model: The approach leans on “scenario testing” (user stories as holdouts) rather than traditional “humans write tests” flows, as described in post details.

• Why it’s contentious: The claim that both implementation and review can be fully automated forces uncomfortable questions about accountability when the same class of model generates code and the checks, which is the central tension surfaced in Software Factory overview and revisited in Token spend critique.

Dan Shapiro’s “Five Levels” frames the path from copilot to “dark factory” SDLC

Five Levels (Dan Shapiro): A “levels” model for AI-assisted software work—ranging from “spicy autocomplete” to fully autonomous, non-interactive “factory” modes—is being used as a shared vocabulary for discussing where teams actually sit on the automation curve, as linked in Levels framing post and laid out in levels framework. It’s explicitly about process redesign, not model benchmarks. Short version: it’s a map for how orgs change when agents move from “help me write” to “run the whole pipeline.”

SaaS selloff narrative meets “panic is short-lived” counter-essay

SaaS valuation discourse: A specific market story is circulating that “models launched this week” triggered a SaaS/services drawdown; one framing claims “nearly $1T” was shaved from valuations, as shown in SaaSpocalypse note. Dan Shipper’s counterpoint is that the selloff reflects investor confusion about how software changes next, and he points back to his earlier “what comes after SaaS” thesis in SaaS selloff take and essay link.

Treat this as narrative, not measurement: the tweets don’t include a unified dataset for the drawdown mechanics, but they do show how quickly “agentic repricing” is becoming a default explanation.

A pricing split emerges: $20–$200 for most work, $1K–$10K for coding-heavy leverage

Token economics (market view): A concrete pricing prediction in Subscription tier thesis argues frontier systems may get more expensive while “intelligence per dollar” falls; most economically valuable work lands in $20–$200 subscriptions because human oversight remains binding, while $1K–$10K subscriptions concentrate in coding use cases where speed/parallelism pays back. It’s an attempt to explain why “premium agents” might be a distinct SKU from mass-market assistants.

Levie: enterprise software shifts from user workflows to agent workflows

Enterprise platform posture (Box / Levie): Levie argues every enterprise software product has to become a platform play because agents will do more work with tools and data than humans ever did, implying business models and “access points” need to be agent-native, as stated in Platform for agents claim. This is a market-structure claim about how incumbents defend distribution when the primary “user” becomes a tool-using agent.

Pricing thesis: “SaaS to $0,” agentic software priced like headcount

Agentic software pricing: One explicit thesis is that “traditional SaaS prices” trend toward $0 while agentic software gets priced an order of magnitude higher because buyers treat it as labor replacement—“at least 10×,” per SaaS to zero thesis. It’s a demand-side argument about willingness-to-pay, not an inference-cost claim. Short sentence: this is about value capture.

Altman: tech CEOs are betting on a one-person $1B company

Company formation (OpenAI / Sam Altman): A repeated quote attributes to Altman a private “betting pool” among tech CEOs for the first year a one-person billion-dollar company appears, as relayed in One-person $1B quote. This is a clean proxy for what leaders think “agents as leverage” could do to firm size and headcount norms.

SaaS “not dead” framing shifts to incumbents vs agent-native rebuilds

Incumbent pressure narrative: A separate take in SaaS is not dead claim says SaaS overall grows (“more software”), but specific incumbents (named examples include ServiceNow and SAP) face structural pressure because the product category shifts toward agent-native interaction and cost structure. This is still opinion. It’s also consistent with the week’s broader “process leapfrogging” talk.

🎬 Generative media: Kling 3.0 prompting, SeedDance/“Severance” teasers, and Grok image gains

Creator/vision posts remain a sizable slice today: high-quality video prompting guides, new video-with-audio workflow nodes, and image-model leaderboard moves. This stays separate from LLM evals/coding tooling.

Grok-Imagine-Image shifts the Image Arena price–quality frontier

Grok-Imagine-Image (xAI): Arena reports Grok-Imagine-Image and Pro improved the Pareto frontier on Image Arena, claiming best performance across roughly $0.02–$0.08 per image bands in edit tasks, as shown in the Pareto frontier analysis.

• Leaderboard placement: Arena also calls out the new models debuting top-6 positions across text-to-image and image-edit, framing xAI as a top-3 image provider alongside OpenAI and Google, according to the rankings summary.

The practical takeaway for teams is that procurement can treat image quality as a “frontier curve” (score vs $/image) rather than picking a single best model, matching the framing in the Pareto frontier analysis.

ComfyUI ships Vidu Q3 template for audio-synced video generation

Vidu Q3 (ComfyUI): ComfyUI says Vidu Q3 is now live in ComfyUI, emphasizing synchronized audio/dialogue, auto/manual cinematography control, and 1–16s duration options, as described in the feature announcement.

• Workflow impact: This is another step toward “one graph does video + sound,” reducing the glue code/devops needed to stitch video gen and TTS/ADR pipelines together, per the feature announcement and the template update note, which also points to Comfy Cloud entrypoints like Comfy Cloud image-to-video.

SeedDance 2.0 previews trigger ‘daily episodes’ speculation

SeedDance 2.0 (ByteDance): Multiple posts point to SeedDance 2.0 as a quality jump, including claims it “could be a Hollywood clip,” alongside the broader take that “2026 is about to be the age of infinite anime,” per the SeedDance clip post and the daily episodes speculation.

Treat this as early hype until there’s an official spec/API surface; still, the repeated framing in the daily episodes speculation suggests creators are already planning workflows assuming higher one-shot shot quality and less manual cleanup.

ByteDance ‘Severance 2.0’ teaser feeds next video-model release cycle

Severance 2.0 (ByteDance, rumored/teased): A teaser claims ByteDance is “about to release severance 2.0,” with early tests described as a step-function improvement, per the teaser post.

There’s no accompanying public doc/spec in the tweet, so treat identity and capabilities as unverified; the visible signal is that the next wave of high-end video models is being pre-marketed via clips and creator comparisons, as in the teaser post.

Kling 3.0 “best quality” prompt guide circulates with high-fidelity examples

Kling 3.0 (Kling): A creator post pitches a concise “3 steps” guide for getting better results from Kling 3.0, paired with a fast-cut demo of higher-fidelity character shots, as shown in the quality guide post.

The post is promotional (discount framing), but the underlying signal is that “prompting playbooks” are becoming the de facto way teams share reproducible video quality settings, per the quality guide post.

Kling 3.0 multi-shot and bind elements used for character consistency

Kling 3.0 (Kling): Another practical workflow tip is combining multi-shot generation with bind elements to keep characters consistent across shots, demonstrated in the consistency workflow note.

For engineers building “shot planners” or prompt compilers, this suggests Kling’s control surface is moving toward stable entity binding (character/props) rather than pure text-only regeneration, consistent with what’s shown in the consistency workflow note.

Kling 3.0 prompt pattern: FPV shaky-cam plus dialogue beats for coherence

Kling 3.0 (Kling): A concrete prompting pattern making the rounds is to specify camera grammar (FPV shaky-cam vlog) plus dialog delivery constraints (accent, unscripted tone, specific beats), with a full prompt and resulting clip shared in the prompt example.

This is useful for teams trying to control “prompt coherence” (action continuity and temporal consistency) because it packs motion + interaction constraints into one prompt rather than relying on post-hoc stitching, as illustrated in the prompt example.

Genie 3 distribution limits frustrate builders despite strong demos

Genie 3 (Google): A recurring complaint is that Genie 3 “feels like nothing” to most builders because it’s gated behind a ~$250/month plan with no pay-as-you-go API, per the access gripe.

This is an adoption/iteration constraint, not a capability claim: without API access, teams can’t slot the model into batch pipelines, eval harnesses, or production experiments, matching the sentiment in the access gripe.

Grok’s $1.75M ad contest turns distribution into the scoring function

Grok ad contest (xAI ecosystem): A $1.75M prize pool is driving a wave of Grok-made commercial entries, with creators highlighting that judging is “primarily on Verified Home Timeline impressions,” per the contest roundup.

• Why this matters to builders: This is a rare case where the benchmark is not aesthetic quality or preference voting, but distribution mechanics (impressions), as spelled out in the contest roundup and reiterated in the impressions rule excerpt.

🚀 Model churn outside the big two: stealth checkpoints, open MoEs, and Gemini moves

Beyond Claude/Codex, today includes multiple ‘what is this model?’ sightings and open-model drops/rankings (OpenRouter + Arena). Keeps to model availability/identity—not tool integrations.

Pony Alpha identity speculation shifts toward GLM-5 as OpenRouter listings appear

Pony Alpha / GLM-5 (OpenRouter): Following up on Stealth model (free, logged access), builders are now pointing to GLM-5 as the likely underlying model after “GLM-5 available on OpenRouter” posts surfaced in the GLM-5 on OpenRouter screenshot and “Pony Alpha” continued circulating as the mystery checkpoint in the Stealth model guess.

A second thread adds a different kind of evidence: stylistic similarity analysis claims Pony Alpha’s creative writing clusters closest to multiple Claude variants, which could either indicate model family resemblance or training on Claude outputs, as shown in the Similarity table and echoed in the Creative writing similarity note.

Arena sightings surface Karp and Pisces models with Qwen-3.5 and ByteDance claims

AI Arena stealth checkpoints: New “what is this model?” sightings include “Karp-001/002” self-identifying as Qwen-3.5 variants and “Pisces-llm-0206a/b” self-identifying as ByteDance models, per the Arena model sightings report.

A small but practical tell for builders: Pisces reportedly produced a cleaner SVG (Xbox controller) in under 100 LOC vs Karp needing 600+ LOC, per the SVG comparison note; historically, strong SVG correlates with better frontend/codegen ergonomics, though none of this is yet backed by an official model card in the tweets.

Kimi K2.5 jumps to #1 on OpenRouter rankings as usage becomes the adoption proxy

Kimi K2.5 (Moonshot AI) on OpenRouter: OpenRouter reports Kimi K2.5 as the most popular model on the platform in the #1 model post, with the rankings page linked in OpenRouter rankings; a separate screenshot shows Kimi leading weekly tokens (856B, +311%) in the Leaderboard screenshot.

One plausible driver is distribution through downstream traffic: opencode/zen says routing a portion of Kimi K2.5 programming traffic through OpenRouter “instantly made it the #1 programming model,” as shown in the Programming category chart, followed immediately by capacity-error chatter in the Capacity note.

Step 3.5 Flash open-source MoE claims 196B total params with 11B active per token

Step 3.5 Flash (StepFun): A link roundup positions Step 3.5 Flash as an open-source sparse Mixture-of-Experts model, claiming 196B total parameters with only 11B activated per token for “intelligence density,” per the Model card screenshot.

This lands as another “frontier-ish but open” option in the same week where engineers are explicitly comparing closed vs open routing and cost/perf tradeoffs across gateways and harnesses.

Gemini CLI PR suggests Gemini 3 graduates from preview by becoming the default

gemini-cli (Google): A merged PR removes the previewFeatures flag and changes the default model to “Gemini 3,” which reads like a quiet “GA by default” graduation for the CLI surface, as shown in the Merged PR screenshot.

This matters operationally because it changes what you get on fresh installs and in unattended environments that rely on defaults (CI runners, devcontainers, team bootstrap scripts), even if your code never touched the preview toggle.

📚 New papers & benchmarks: RL objectives, agent defense, and uncertainty evals

Research links today skew toward RL training objectives, agent defense frameworks, and new agent benchmarks for real-world uncertainty. Excludes applied workflow writeups (captured in workflows/frameworks).

Spider-Sense proposes event-driven agent defense with hierarchical screening

Spider-Sense: A new agent-defense framework proposes intrinsic risk sensing (agents stay lightweight until risk is detected) plus hierarchical adaptive screening that escalates from cheap similarity checks to deeper reasoning on ambiguous cases, per the Hugging Face paper. It’s explicitly positioned as an alternative to mandatory, always-on guardrails.

The authors claim low attack success rates and low false positives under their evaluation setup, as summarized in the Hugging Face paper.

CAR-bench measures whether agents stay consistent and admit limits under uncertainty

CAR-bench: A new benchmark targets real-world agent behavior under ambiguous inputs—measuring consistency, uncertainty management, and capability awareness in an in-car assistant setting, per the Hugging Face paper. It pairs an LLM-simulated user with domain policies and a fairly large tool surface.

One concrete detail: CAR-bench includes 58 interconnected tools spanning navigation/productivity/charging/vehicle control, as stated in the Hugging Face paper. That’s closer to messy agent reality than single-tool evals.

MaxRL reframes RL training as approximating maximum-likelihood optimization

MaxRL (Maximum Likelihood Reinforcement Learning): A new paper introduces a sampling-based RL framework that targets maximum likelihood of correct outcomes (not just a lower-bound surrogate), and claims it can interpolate between standard RL and exact maximum-likelihood as you spend more compute, as described in the ArXiv paper. This is aimed at domains like code/math where you often have binary success signals.

A notable headline claim is up to 20× better test-time scaling efficiency versus GRPO-style training, per the paper summary in ArXiv paper. That’s a big number.

“Drifting Models” suggests a one-step alternative to diffusion via attraction/repulsion

Drifting Models (Kaiming He’s team): A circulated summary claims a new paradigm for generating high-quality images in a single step, using dynamics that pull samples toward real images and push away from other generated samples to avoid collapse, per the Paradigm summary.

The key engineering angle is whether this can preserve diversity and stability without multi-step diffusion. Details are still thin in the tweet.

LUSPO targets response length variation in RLVR without performance tradeoffs

LUSPO (Length‑Unbiased Sequence Policy Optimization): A new RLVR algorithm is proposed to reveal and control response length variation while improving over GRPO/GSPO across dense and MoE setups, per the Hugging Face paper. The stated goal is to remove length-related bias that can distort RL training signals.

The practical takeaway is fewer “rewarded because longer” behaviors, while still improving quality, as summarized in the Hugging Face paper.

ICLR 2026 acceptance highlights scaling laws for embedding dimension in retrieval

Information retrieval scaling laws: An ICLR 2026 acceptance callout highlights work on scaling laws for embedding dimension in IR, per the Acceptance note.

If the results are robust, this could inform how teams pick embedding dimensionality vs cost/latency in retrieval systems. The tweet doesn’t include a link or metrics.

📅 Builder events & guides: hack-days, workshops, and “how to use the tool” playbooks

Today’s community artifacts are mostly practical: hackdays, interview series, and dense guides for adopting agentic coding tools. Excludes product release notes (covered in product categories).

Aakash Gupta’s “Master Claude Code” infographic guide resurfaces

Claude Code (adoption guide): Aakash Gupta’s “Master Claude Code: The Complete Guide for Everyone” infographic (v1.0, January 2026) is being reshared as a single-page field manual covering CLI mental models, core commands, skills/CLAUDE.md project memory, and prompting techniques, as shown in Guide screenshot.

It’s not release notes; it’s an onboarding map that codifies the “how to operate the tool” layer that teams keep re-deriving.

AITinkerers SF hosts an OpenClaw “unhackathon” format

OpenClaw (AITinkerers SF): AITinkerers SF kicked off an OpenClaw “unhackathon” with explicit constraints—“no vendors, no sponsors, no credits, no pitches”—and a focus on builders working and learning from each other, as described in Event format note. Short version: it’s community lab time, not a demo day.

The host venue was called out as WorkOS in Event format note, which signals these events are leaning toward shared troubleshooting and hands-on harness work rather than slide decks.

Cerebral Valley × Anthropic hackathon dates and application flow appear in-app

Claude Code hackathon (Cerebral Valley × Anthropic): Following up on hackathon—virtual hackathon announcement—the in-app event page screenshot shows the run window as “Tue Feb 10 … Mon Feb 16” and a workflow where applicants can land in “Application Pending,” as captured in Application screenshot.

The screenshot also anchors the branding as “Built with Opus 4.6: a Claude Code hackathon,” with hosting attributed to Cerebral Valley and Anthropic in Application screenshot.

AI Builder Club announces an Augment “Context Engine MCP” workshop

MCP workshops (AI Builder Club): AI Builder Club promoted a weekly workshop session featuring Augment, framing it as a launch + architecture walkthrough for “Context Engine MCP” and their code-view/context systems, per Workshop announcement. It’s positioned as part of an ongoing series (“25+ sessions”) with credits and technical content also mentioned in Workshop announcement.

This is another signal that MCP adoption is spreading through hands-on “watch how it’s wired” sessions, not only docs.

DSPy publishes a 13-episode DSPy power-user interview playlist

DSPy (community learning): DSPyOSS shared a playlist collecting 13 recent conversations with DSPy power users, including an episode on dspy.RLM, positioned as a way to get implementation-level nuance without hunting across scattered threads, per Playlist screenshot.

The artifact is a lightweight “how builders actually use it” library, which tends to be more operationally useful than one-off docs when you’re trying to map patterns to your own agent stack.

🤖 Embodied AI updates: humanoid demos and durability pushes

Robotics posts today are mostly humanoid capability demos (dexterity/locomotion) and robustness claims (cold-weather endurance).

Biomimetic humanoid “Moya” claims warm skin (32–36°C) and micro-expressions

Moya (Chinese robotics startup): A new biomimetic humanoid demo claims a “warm skin” surface held at 32–36°C, 92% walking posture accuracy, and the ability to replicate human micro-expressions, with a reported price around $170K and height 1.65m as described in biomimetic robot specs.

• Why it matters for embodied AI: If the sensing/actuation behind “warm skin” is real, it implies a push toward richer tactile/thermoregulatory interfaces (and more human-facing HRI constraints) rather than just locomotion benchmarks, based on the feature list in biomimetic robot specs.

Unitree humanoid reportedly walks 130,000 steps at −53°F in Xinjiang

Unitree cold-endurance test (Unitree): A post claims a Unitree humanoid became the first to walk 130,000 steps at −53°F in Altay, Xinjiang, framing “environmental durability” (cold, ice, long-run reliability) as a differentiator, per cold endurance claim.

• What’s still unclear: The tweet provides no published test protocol (battery derating, actuator thermal limits, slip rate, falls, payload), so treat the headline number as a durability signal rather than a standardized benchmark, as presented in cold endurance claim.

Unitree G1 snow-clearing demo shows how far “useful outdoors” still is

Unitree G1 (Unitree): A parking-lot snow-clearing clip shows the humanoid struggling to push a shovel consistently, underscoring how quickly real-world contact dynamics (slip, uneven loads, balance recovery) dominate the task, as shown in G1 snow-clearing clip.

• Engineering read-through: This is a compact example of where perception is not the bottleneck; whole-body control, end-effector force control, and failure recovery policies are doing the heavy lift, which you can see in the stumbles and intermittent progress in G1 snow-clearing clip.

🗣️ Voice assistants & speech stacks: TTS upgrades and assistant voice UX

Voice-related items today include new TTS models, assistant voice UX/capacity issues, and major assistant integrations (Siri/Gemini, Gemini Live).

Rumor points to a Siri refresh with Gemini shipping “next week” via Apple Intelligence beta

Siri (Apple) + Gemini (Google): A rumor claims “the beta version of the new Siri with Gemini launches next week,” alongside a screenshot of the Apple Intelligence (beta) waitlist flow in iOS, as shown in the beta claim post.

If accurate, this is a concrete distribution milestone for voice assistants: it implies a system-integrated Siri UX that can hand off to a frontier model layer (Gemini) rather than remaining a purely on-device intent router; no official Apple or Google confirmation appears in today’s tweets.

Claude voice UI collides with capacity gating: “Claude is speaking…” while chat is unavailable

Claude voice (Anthropic): Following up on Voice mode testing (upgraded voice spotted), a new screenshot shows Claude in a voice session state (“Claude is speaking…”) while the product simultaneously displays “Due to capacity constraints, chatting with Claude is currently not available” plus a “Keep working” button, as captured in the capacity screenshot.

This is a practical reliability signal for voice UX: voice and chat surfaces appear coupled to the same capacity gates, so voice-mode experiences may inherit the same availability constraints during peak load.

VisionClaw open-sourced: Gemini Live voice+vision for Ray‑Ban Meta glasses with OpenClaw tool actions

VisionClaw (open source): A new open-source project wires Meta Ray‑Ban smart glasses into the Gemini Live API for real-time voice+vision interaction, with optional OpenClaw integration to execute agentic actions across tools/apps, as described in the repo announcement and its linked GitHub repo; related posts also frame it as “Gemini Live + OpenClaw bot,” per the integration note.

This is a concrete reference architecture for speech stacks: live audio I/O via Gemini, plus a tool/action layer (OpenClaw) that can turn spoken requests into authenticated side effects (messages, lists, web tasks) without building a bespoke orchestrator.

Bulbul V3 text-to-speech announced with a “more human” quality claim

Bulbul V3 (TTS model): A “Drop 5/14” announcement introduces Bulbul V3 and claims it “raises the bar for how human it sounds,” according to the Bulbul V3 teaser retweet.

The tweet doesn’t include benchmark audio samples, pricing, latency, or API surfaces, so the operational details for deploying it in a voice stack remain unclear from today’s material.