Nano Banana Pro holds 13 objects reliably, hits a stress point around 47 in a 50-test run

Executive Summary

GlennHasABeard published a creator-run stress report on Nano Banana Pro (Adobe Firefly). It ran 50 progressively harder “many objects in one image” prompts. The report puts the safe limit at 13 objects before compositions start slipping, and a “stress point” around 47 objects where duplication and component loss show up. It also notes that the next run often self-corrects without any prompt change. Results are documented in a public report. It’s not a vendor benchmark, but it turns “how busy can key art get?” into a repeatable breakpoint test.

• SpaceX–xAI: SpaceX made its acquisition of xAI official; the blog frames a vertically integrated stack (AI + launch + connectivity + X) and pivots to power/cooling constraints for terrestrial data centers; no near-term Grok Imagine product changes spelled out.

• DeepMind Game Arena: Kaggle/DeepMind expand beyond chess to Werewolf and Poker; aims to benchmark social reasoning (consensus, bluffing, uncertainty) rather than single-solution tasks.

• ElevenLabs v3: posts say the voice model is out of alpha; “full version” messaging arrives without a public changelog (quality/latency/pricing still unstated).

Across the feed, the center of gravity is measurability: object-count breakpoints, social-game evals, and “out of alpha” stability claims; the missing piece is independent replication on standardized harnesses.

Top links today

- OpenAI Codex app for macOS

- Kaggle Game Arena results and analysis

- Agent Lightning open-source RL framework

- AirLLM repo for low-VRAM LLMs

- PaperBanana academic illustration automation paper

- LingBot-World open source world generator

- Luma Dream Brief $1M ad competition

- THINKSAFE self-generated safety alignment paper

- ASTRA agentic trajectories and RL arenas

- TensorLens transformer analysis with attention tensors

- TTCS test-time curriculum synthesis paper

Feature Spotlight

xAI joins SpaceX: the “AI + rockets + X” megastack becomes real

SpaceX’s acquisition of xAI signals a single org controlling model R&D, massive compute ambition, and a built-in distribution channel—potentially reshaping how Grok/creative tooling scales and ships.

The dominant cross-account story today is SpaceX acquiring xAI (and related filings/memes), framed as vertically integrating AI, launch, connectivity, and distribution. This category covers the merger news and its creator-relevant implications. It excludes day-to-day Grok Imagine experiments, which are covered elsewhere.

SpaceX announces it has acquired xAI

xAI joins SpaceX (SpaceX/xAI): SpaceX’s acquisition of xAI is now official. xAI posted “One Team 🚀” and linked to the announcement on its site, per the One Team post and the accompanying Acquisition post. For creators, the immediate relevance is the stated intent to fuse model development with a distribution and deployment stack that already spans rockets and satellites. That could influence where creative AI products get shipped and surfaced.

What’s still not spelled out in the public posts is any near-term change to Grok Imagine tooling or creator-facing product surfaces; today’s signal is corporate structure + narrative rather than a feature drop.

SpaceX/xAI pitch the merger as a vertically integrated AI-to-orbit stack

SpaceX/xAI merger rationale: A screenshot of the SpaceX/xAI blog frames the deal as a “vertically-integrated innovation engine” combining “AI, rockets, space-based internet, direct-to-mobile… and… X,” then pivots into an energy/compute argument—“AI… dependent on large terrestrial data centers” with power/cooling constraints, as shown in the Blog post excerpt. This matters to creative teams because it’s a direct claim that future model scale and availability could be shaped by deployment constraints (power, cooling) and by owning distribution channels.

• Stack framing: The post explicitly bundles AI capability + launch + connectivity + “real-time information” distribution in a single narrative, per the Blog post excerpt, rather than treating xAI as a standalone model lab.

Treat this as positioning language for now; there are no creator-facing product SKUs, pricing, or rollout timelines in the tweets beyond the announcement itself.

Nevada filings surface “K2 Merger Sub 2” for SpaceX/xAI

SpaceX/xAI corporate filings: A Nevada business filing reportedly lists the merging entity as “K2 Merger Sub 2.” The K2 Merger Sub filing discussion explicitly reads the name as a Kardashev Type II reference. A follow-up claims the entity was a shell and that “XAI Holdings LLC is the surviving entity,” per the Shell company clarification. For creators, this is mostly a rights-and-ownership breadcrumb. It hints at where IP, product contracts, and licensing relationships may end up living after the merger.

The tweets don’t provide the filing document itself (only the claim), so treat the details as provisional until an original filing artifact is shared.

“Fellow rocket scientists” meme frames the org-culture collision

Org-culture framing: A reaction meme casts “xAI employees after the SpaceX acquisition” as someone in a NASA-style suit saying “HOW DO YOU DO, FELLOW ROCKET SCIENTISTS?,” as shown in the Fellow rocket scientists meme. It’s a small but telling creative signal: people expect a culture and workflow collision, not just a cap-table event.

“Twitter is a space company” meme rides the xAI–SpaceX news cycle

Creator zeitgeist signal: The “Twitter is a space company? Always has been” astronaut meme circulates in response to the xAI–SpaceX announcement, as shown in the Space company meme. For storytellers, this is a clean read on how the internet is compressing the merger into a single, legible narrative: X as the media layer for a space/AI industrial stack.

People are turning the SpaceX/xAI blog into reaction content

Virality format: “Reading the SpaceX / xAI blog post” is posted as a reaction hook, paired with a cigarette-in-hand meme image, in the Blog post reaction thread—showing how quickly the announcement is being repackaged into short-form shareables rather than debated on specifics.

The underlying dynamic for creative operators is that “company structure news” is now instantly treated as content fuel; the tweets don’t add technical details beyond what’s shown in the blog excerpt.

“Tesla next?” speculation pops up right after SpaceX–xAI

Speculation spillover: The merger instantly triggers “Tesla next?” questions, as shown in the Tesla next speculation. For creators, this mainly signals how quickly audiences will assume further consolidation across the Musk-adjacent tool and distribution ecosystem.

There’s no corroborating information in the tweet set beyond the question itself.

Elon’s “Starbase has come a long way” becomes merger mood-setting

Merger narrative tone: Elon Musk’s “Starbase has come a long way” post gets pulled into the day’s acquisition storyline as a symbolic setting marker in the Starbase has come a long way reposts. For AI creatives, it’s not a feature update—it’s the aesthetic/brand backdrop being reinforced: AI lab + launch site as one story.

No new creator tooling details are attached to this post in the tweet set.

🎬 Video generation in the wild: Runway pipelines, Grok Imagine action, Kling/Hailuo/Vidu tests

High volume of practical video creation posts: ad-grade workflows (Runway), promptable action/VFX sequences (Grok Imagine + others), and model comparisons. Excludes the SpaceX↔xAI merger storyline (handled in the feature).

AI ad-parody realism: an AirPods Pro-style commercial that reads as production

AI ad aesthetics (signal): A shared clip frames an AI-made “AirPods Pro commercial” as accurate enough that “Apple almost deleted it,” pointing at how far commercial pacing, blocking, and gag timing have moved toward recognizable brand language, per the Commercial clip.

No tool stack is named in the post, so treat it as a realism signal rather than a reproducible workflow, as shown in the Commercial clip.

Hailuo 2.3 chase recipe that bakes in camera blocking and an ending beat

Hailuo 2.3 (MiniMax/Hailuo): A concrete chase template is circulating that hard-specs blocking (soldier sprinting toward camera), scene complexity (burning vehicles, debris, explosions), and the camera plan (“handheld shaky cam at ground level moving backward”)—including an ending beat (creature lunges), per the Full Hailuo prompt.

Because the prompt forces both the camera rig and the finale, it’s usable as a drop-in “cold open” shot when you need urgency fast, as shown in the Full Hailuo prompt.

Kling 2.6 vs Grok Imagine: the same transformation prompt, two motion signatures

Kling 2.6 vs Grok Imagine (A/B test): A clean comparison format is spreading: run the exact same “transformation” prompt through two video models and publish side-by-sides, which makes motion quality and style drift obvious without over-explaining, as shown in the Side-by-side comparison.

This kind of A/B is useful for picking which model you want for morphs (shape readability) versus flair (stylization), because the input spec is held constant, per the Side-by-side comparison.
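The A/B rule is simple enough to sketch: hold the prompt constant and vary only the model. Below is a minimal Python harness. It assumes you wrap each tool in your own `prompt -> output` function. `run_ab`, `fake_kling`, and `fake_grok` are hypothetical names, and the stubs are placeholders, not Kling or Grok Imagine APIs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Any function that takes a prompt and returns an output reference (path, URL, id).
Generator = Callable[[str], str]


@dataclass(frozen=True)
class ABResult:
    model: str
    prompt: str
    output: str


def run_ab(prompt: str, models: Dict[str, Generator]) -> List[ABResult]:
    """Send the identical prompt to every model, so the model is the only variable."""
    return [ABResult(name, prompt, generate(prompt)) for name, generate in models.items()]


# Hypothetical stubs: replace them with your own wrappers around each tool's real interface.
def fake_kling(prompt: str) -> str:
    return f"kling-2.6/{len(prompt)}.mp4"


def fake_grok(prompt: str) -> str:
    return f"grok-imagine/{len(prompt)}.mp4"


if __name__ == "__main__":
    shared = "a paper crane unfolds into a dragon"  # illustrative transformation prompt
    for result in run_ab(shared, {"kling-2.6": fake_kling, "grok-imagine": fake_grok}):
        print(result.model, result.output)
```

The harness never modifies the prompt string. Any difference in the side-by-side can then be attributed to the model rather than to prompt drift.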

Midjourney stills + Grok Imagine motion as a “look lock” for Moebius-style sci‑fi

Midjourney + Grok Imagine (stack): One creator argues the pairing is currently the most reliable path to a specific European-comics sci‑fi look (explicitly citing Moebius), saying the combo is “unbeatable right now,” with the resulting animation feel shown in the Moebius-style claim.

The implied workflow is “design language in Midjourney, movement pass in Grok.” The mixed style references are packaged as a reusable preset that the creator says will be shared “with subscribers tomorrow,” per the Moebius-style claim.

Kling 2.6 chase-in-environment test: cliff climbing escape sequence

Kling 2.6 (Kling): A short “escape the creature” sequence is used as a capability check for fast pursuit, vertical movement, and readable peril beats—framed as something that “would have been impossible a couple of months ago,” per the Climber chase demo.

It’s a good stress case because the motion is constrained (cliff face) but still chaotic, so failures show up quickly as foot placement, speed ramps, or creature proximity errors, as shown in the Climber chase demo.

Metal Monday: stitching Grok + Genie clips into an arcade-style music video edit

Grok + Genie (edit stack): A “Metal Monday” experiment combines clips from Grok and Genie into a music-video-style cut using an Asteroids visual motif, positioned as “Genie has some use cases,” per the Metal Monday drop.

It’s another example of a practical creative move: treat generative tools as shot suppliers, then let the edit (theme, pacing, repetition) do the storytelling work, as shown in the Metal Monday drop.

Recurring shots + theme + basic script: a lightweight structure for AI shorts

Short-form AI filmmaking (practice pattern): A creator shares a short film built around recurring shots and a basic script—explicitly framed as “not polished,” but part of learning how to build worlds with consistent visual motifs, per the Short film note.

The takeaway is a structure-first approach: repetition and a clear motif can make limited shot consistency feel intentional rather than “model variance,” as described in the Short film note.

“Grok is among the top video models” becomes a creator talking point

Grok video (xAI): A straightforward sentiment shift shows up in the claim that Grok is now “among the top AI video models,” backed mainly by a quick demo-style clip rather than benchmarks, per the Hot take and demo.

This is useful as market signal (what creators are reaching for today), but it’s still anecdotal—there’s no shared eval artifact in the tweets, as implied by the Hot take and demo.

Grok Imagine’s “type-in, animate-out” vibe is becoming a shareable format

Grok Imagine (xAI): A small but telling behavior pattern: creators are posting short “working with Grok” clips where the interface/typing is part of the piece, ending in a clean logo/type animation, per the Interface clip.

It’s less about filmmaking and more about a new micro-format for showing process plus output in ~10 seconds, as shown in the Interface clip.

Vidu Q3’s short-form niche: motion gags where sound design sells it

Vidu Q3 (Vidu): A quick share highlights a specific use case: short, visually simple motion gags where the exaggerated SFX are the whole point, per the Vidu Q3 SFX share.

It’s a reminder that “video model quality” often reads like “edit quality”—if the motion is basic but the timing feels intentional, it can still land as a finished post, as shown in the Vidu Q3 SFX share.

🧭 World models & playable worlds: pushing Genie 3, alt world generators, and capture loops

Today’s posts continue the ‘world model’ wave: Project/Genie 3 explorations, emergent failure modes (mirrors), and an open-source alternative callout. This is about navigable worlds and world-to-video capture, not standard text-to-video tools.

Genie 3 mirror reflections lag behind actions, sparking a realism checklist debate

Genie 3 (Google): A practical failure mode is showing up in reflective scenes—mirror “reflections” appear delayed/out-of-sync with the subject, which Linus frames as “the new 6-fingers argument,” as shown in Mirror failure demo. This quickly turns into a mini-debate about real mirror physics versus model errors, with pushback captured in Mirror physics joke and a blunt rebuttal in Physics rebuttal.

For creatives, it’s a shot-design constraint: mirrors/glass storefronts/bathroom scenes are still a stress case for playable world renders.

Project Genie team lays out the world-model roadmap in a longform interview

Project Genie (Google DeepMind): A longform conversation with the Project Genie team focuses on how they got to launch, where they expect near-term value for creators, and what they think comes next for world models in 2026, as framed in Interview announcement. It lands while creators are actively stress-testing Genie 3’s limits in the wild, as shown in Limits test clip.

Treat this as direction-setting rather than a spec drop—no hard numbers or new tooling surfaces are stated in the tweets, but it’s one of the clearest “why world models” artifacts in today’s timeline.

A small prompt edit helps keep Genie 3 in “world” mode, not “story” mode

Genie 3 prompting (Google): One repeatable trick is emerging: if you start with an open-ended “how far can I push this,” Genie may respond by writing a narrative; explicitly adding “without writing a story” shifts it back toward direct world-generation behavior, as demonstrated in Prompt edit demo and contextualized by the broader “push Genie 3” exploration in Exploration clip.

This is a clean way to separate “lore text” from “playable output” when you’re iterating fast.

Genie turns a single historic photo into a walk-through establishing shot

Genie reconstruction (Google): A single archival image (Cincinnati’s Price Hill incline, late 1870s context) gets turned into a navigable scene by “walking through” the still—useful for doc re-enactments, alt-history establishing shots, and period mood capture, as described in Historic photo walkthrough.

The tweet’s detail list (signage, date uncertainty, route names) is a nice pattern: attach research notes to the visual so the output stays grounded.

Roach-height POV tours are becoming a simple, reusable Genie shot template

Genie POV capture (Google): A “roaches POV” continuous tour through a food buffet shows a format that’s easy to reuse. Ultra-low camera height, long forward motion, and quick parallax read immediately as character POV, and they sell scale, comedy, or horror beats, as shown in the Roach POV video.

It’s a good reminder that world models aren’t only about environments—they’re also about camera grammar you can keep reapplying.

A “portal reveal” beat is a trailer-friendly way to introduce Genie worlds

Genie 3 reveal staging (Google): A short “Enter the cave of wonders” clip uses a portal/title-card style transition to make the environment reveal feel intentional—more like a trailer beat than a raw sim capture, as shown in Cave of wonders clip.

This is a useful compositional wrapper when the underlying world motion is the hero and you need a clear “before/after” moment.

🧪 Prompts & look recipes you can paste today (SREFs, JSON specs, brand kits, realism)

A dense prompt day: Midjourney SREF codes, Nano Banana Pro style spec sheets, photoreal ‘indistinguishable’ recipes, and modular templates for brands and characters. This category is strictly copy/paste assets (not tool capability news).

A PHASE 1–4 “verified_data” ops-prompt for brand heritage visuals

Brand heritage prompting (AmirMushich): A structured “ops-prompt” template asks the model to execute PHASE 1–4, populate a verified_data block with factual evidence, then generate a visual heritage/lineage guide, as outlined in Ops-prompt structure.

The example output is a design lineage infographic (Apple iPhone 16) that maps specific ancestors to present-day features, as shown in Ops-prompt structure.

Midjourney --sref 4034808438 for 1950s comic + Pop Art color blocking

Midjourney (PromptsRef): A “vintage comic vibes” recipe is being pushed as a fast look lock—“--sref 4034808438 --v 7 --sv 6”—with positioning around bold primaries and grainy retro print texture, according to Sref recipe.

Use cases called out include retro packaging, posters, and children’s illustration, as described in Sref recipe.

Nano Banana “$1,000 brand kit” prompt format for rapid brand boards

Nano Banana (AmirMushich): A “30 seconds → $1,000 brand kit” prompt is pitched as a one-shot way to generate a brand base doc plus adapted key visuals, palette, and mockups after filling in [Brand name] and [industry], as described in Brand kit teaser.

The sample output shown in the board includes a palette with hex codes, a primary typeface callout, a logo mark, and brand voice/archetype notes, as illustrated in Brand kit teaser.

A paste-ready Midjourney retro-vector hot air balloon prompt with weighted SREF

Midjourney prompt (ghori_ammar): A retro vector illustration recipe is shared with full parameters—“2D illustration, retro vector drawing of a hot air balloon made of mismatched patterns floating over mountains. --chaos 30 --ar 4:5 --exp 100 --sref 88505241::0.5 1562106”—as posted in Hot air balloon prompt.

The example grid shows the same prompt family producing distinct pattern fills and sky treatments under the same constraint set, as seen in Hot air balloon prompt.
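The shared recipes are plain strings of parameters, so a small builder helps keep them reproducible when you swap subjects. This is a minimal sketch that only uses flags appearing in the posts above: `--chaos`, `--ar`, `--exp`, and `--sref` with its `code::weight` form. `build_prompt` and `format_sref` are hypothetical helpers, not a Midjourney API.

```python
from typing import Sequence, Tuple, Union

# An SREF entry is either a bare code or a (code, weight) pair rendered as code::weight.
SrefCode = Union[int, Tuple[int, float]]


def format_sref(codes: Sequence[SrefCode]) -> str:
    parts = []
    for code in codes:
        if isinstance(code, tuple):
            value, weight = code
            parts.append(f"{value}::{weight:g}")  # e.g. 88505241::0.5
        else:
            parts.append(str(code))
    return "--sref " + " ".join(parts)


def build_prompt(
    text: str,
    params: Sequence[Tuple[str, object]] = (),
    sref: Sequence[SrefCode] = (),
) -> str:
    """Append --flag value pairs in the given order, then the --sref group last."""
    pieces = [text.strip()]
    pieces += [f"--{flag} {value}" for flag, value in params]
    if sref:
        pieces.append(format_sref(sref))
    return " ".join(pieces)


if __name__ == "__main__":
    print(build_prompt(
        "2D illustration, retro vector drawing of a hot air balloon made of "
        "mismatched patterns floating over mountains.",
        [("chaos", 30), ("ar", "4:5"), ("exp", 100)],
        sref=[(88505241, 0.5), 1562106],
    ))
```

The builder places `--sref` last only to mirror the posted string. It makes no claim about whether Midjourney treats parameter order as significant.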

Clinical before/after prompt spec for non-surgical nose filler comps

Before/after realism spec (egeberkina): A detailed JSON prompt targets “non-surgical nose filler” comparisons with strict controls—true side profile, 90mm portrait lens equivalent, identical exposure, and “pores visible, no smoothing”—as written in Nose filler prompt.

It also locks editorial constraints (no text/arrows, no identity change, realistic limits) so the output reads like a clinic comparison photo, per Nose filler prompt.

Midjourney --sref 1701237297 for prism refraction and glass depth

Midjourney (PromptsRef): A prism/refraction-heavy “glass luxury” style is being shared under the single code “--sref 1701237297,” with the rationale framed as “depth and refraction” being the differentiator, per Prism style pitch.

A longer breakdown of the style traits and prompt keywords lives in the Style guide.

Midjourney --sref 2570201426 for violet haze magical realism

Midjourney (PromptsRef): A purple magical-realism haze look is being circulated as “--sref 2570201426,” described as adding mist/fluid-light texture; the post also suggests reinforcing it with keywords like “Volumetric Light” and “Hyper-detailed Texture,” per Violet haze recipe.

More style notes and keyword guidance are collected in the Style guide.

Midjourney --sref 2857892971 for gothic neon graffiti texture

Midjourney (PromptsRef): A dark gothic + neon “chalk graffiti” texture look is being shared as “--sref 2857892971,” positioned for alt characters and cover-art aesthetics, according to Gothic neon recipe.

The expanded prompt keyword set and style description are documented in the Style guide.

A three-code Midjourney SREF blend for retro-futuristic metallic gloss

Midjourney (PromptsRef): A “top SREF” post spotlights a three-code blend—“--sref 295607884 3123035259 2811012579 --niji 7 --sv6”—and characterizes it as retro-futuristic metallic airbrush with heavy specular gloss, per Top Sref blend.

The post also points to a broader SREF library in Sref directory, alongside example use cases like merch graphics and album-cover aesthetics described in Top Sref blend.

Origami animal prompt template for clean white-background characters

Origami prompt (icreatelife): A short template is being shared for cute paper-craft characters on a white background—“origami adorable [COLOR] [ANIMAL], kawaii, paper craft, cinematic, white background, high detail, HD, ultra detailed”—as posted in Origami prompt.

The attached examples show the same structure working across multiple animals/colors (giraffe, pig, turtle, elephant), as seen in Origami prompt.
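The bracketed slots make this template easy to batch. Here is a short sketch that assumes plain string substitution is all you need. `expand` is a hypothetical helper, and the colors in the usage note are illustrative, not from the post.

```python
from itertools import product
from typing import Iterable, List

TEMPLATE = (
    "origami adorable [COLOR] [ANIMAL], kawaii, paper craft, cinematic, "
    "white background, high detail, HD, ultra detailed"
)


def expand(template: str, colors: Iterable[str], animals: Iterable[str]) -> List[str]:
    """Produce one prompt per (color, animal) pair, in color-major order."""
    prompts = []
    for color, animal in product(colors, animals):
        prompt = template.replace("[COLOR]", color).replace("[ANIMAL]", animal)
        # Guard against a misspelled slot silently surviving into the prompt.
        if "[" in prompt or "]" in prompt:
            raise ValueError(f"unfilled placeholder in: {prompt}")
        prompts.append(prompt)
    return prompts
```

For example, `expand(TEMPLATE, ["pink", "green"], ["pig", "turtle"])` yields four prompts, one per color/animal pair. The bracket check catches a misspelled slot such as `[COLOUR]` before it reaches the model.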

🖼️ Image models & lookdev: Firefly/Nano Banana stress tests, character design, product renders

Image generation posts lean toward practical lookdev and evaluation: Nano Banana Pro object-count limits, Firefly-made puzzle art, and character/product design outputs. Excludes prompt dumps/SREFs (handled in Prompts & look recipes).

Nano Banana Pro hits 50 objects, with a clear “safe zone” and self-recovery behavior

Nano Banana Pro (Adobe Firefly): Following up on Object test (teased stress test), Glenn published results from 50 progressively harder “objects in one image” prompts—with a safe limit of 13 objects, a stress point at 47 objects, and repeated self-correction on the next run without prompt changes, as summarized in the 50-test recap and documented in the Full report.

• What “capacity” looks like in practice: Early tests (1–13) stayed near-perfect; higher counts triggered specific failure modes (for example duplication and component loss), but the next test often snapped back to correct composition per the First failure then recovery and the 50-test recap.

• Why creatives care: This turns “how busy can my key art be?” into something you can measure—useful for poster collages, I‑SPY scenes, product boards, and crowded story frames where object integrity matters.

Treat the stats as creator-run, not a vendor benchmark—the useful part is the repeatable methodology and the clearly stated breakpoints in the Full report.
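A few lines of bookkeeping make the breakpoint idea concrete. This is one plausible reading, not the report's method. It assumes one trial per object count, trials listed in run order, and a hypothetical pass rule: every requested object present exactly once. `safe_limit`, `recoveries`, and `failure_rate` are illustrative names.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Trial:
    objects: int  # how many distinct objects the prompt asked for
    passed: bool  # hypothetical pass rule: every object present, none duplicated


def safe_limit(trials: Sequence[Trial]) -> int:
    """Object count of the last trial in the unbroken passing run from the start (0 if none)."""
    limit = 0
    for t in trials:  # trials are assumed to be in run order, easiest first
        if not t.passed:
            break
        limit = t.objects
    return limit


def recoveries(trials: Sequence[Trial]) -> List[int]:
    """Object counts of failed trials that were immediately followed by a passing trial."""
    return [a.objects for a, b in zip(trials, trials[1:]) if not a.passed and b.passed]


def failure_rate(trials: Sequence[Trial], lo: int, hi: int) -> float:
    """Share of failed trials among those whose object count falls in [lo, hi]."""
    window = [t for t in trials if lo <= t.objects <= hi]
    return sum(not t.passed for t in window) / len(window) if window else 0.0
```

Run against your own logged trials, `safe_limit` gives the “stay under this” number. Sliding `failure_rate` over a range of counts is one way to locate where failures start to dominate. The posts do not spell out how the report defines its 47-object stress point.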

Firefly AI‑SPY Level .005 shows the “hidden objects” engagement format maturing

Adobe Firefly (GlennHasABeard): The AI‑SPY series shipped Level .005, continuing the format of generating a dense scene plus a concrete “find list” (a ready-made engagement loop for social posts and printable puzzle pages), as shown in the Level .005 puzzle image.

• Format signal: The image includes an explicit count-based key (for example multiple octopus/robot instances), which makes it scorable and replayable—core properties if you’re turning Firefly outputs into puzzle content rather than single images.

This follows the earlier AI‑SPY drop in AI-SPY .004, but Level .005 is a distinct scene and object set per the Level .005 puzzle image.
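A count-based key is what makes the format scorable. This minimal sketch assumes the key is a mapping from object name to the number of hidden instances. The item names and counts used with it are hypothetical, not Level .005's actual key.

```python
from dataclasses import dataclass
from typing import Dict, Mapping


@dataclass(frozen=True)
class Score:
    found: int
    total: int
    missing: Dict[str, int]

    @property
    def complete(self) -> bool:
        return self.found == self.total


def score(key: Mapping[str, int], claimed: Mapping[str, int]) -> Score:
    """Credit each item up to the key's count; items not in the key are ignored."""
    credited = {item: min(claimed.get(item, 0), target) for item, target in key.items()}
    missing = {item: key[item] - n for item, n in credited.items() if n < key[item]}
    return Score(found=sum(credited.values()), total=sum(key.values()), missing=missing)
```

Each claimed count is capped at the key's value, so a player cannot pad the score by over-counting one object.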

Niji 7 plus Nano Banana Pro is being used for character lookdev “packs”

Niji 7 + Nano Banana Pro (character lookdev): A shared pattern is to ship a character as a full lookdev set (hero full-body plus cropped detail panels for face, arm, and torso materials) rather than a single render, as shown in the Character design set.

The post explicitly credits niji 7 and Nano Banana Pro for the design pipeline in the Character design set, and the layout itself is the technique: it gives you a fast “turnaround sheet” for iterating accessories, surface wear, and mechanical detail consistency across shots.

A “Firefly-first” creator workflow is emerging as a serious default

Adobe Firefly (creator workflow): Glenn describes a strong consolidation move—“90% of my work is done within Adobe Firefly” and Firefly keeps adding enough capability that he “barely has to leave the platform,” according to the Firefly-first claim.

• What that enables: The same account is publishing multiple repeatable formats built on Firefly outputs—AI‑SPY puzzle scenes as shown in the Level .005 puzzle image, plus other image-led posts that are explicitly tagged “Made in Adobe Firefly” as seen in the Firefly-made scene.

It’s a workflow signal more than a product update: the notable detail is the stated percentage and the evidence that multiple content formats are being produced without switching tools, per the Firefly-first claim.

Meshy’s “remix art history” pitch highlights stylized 3D asset direction

Meshy (3D asset generation): Meshy shared a short reel framing a workflow of “remixing art history” into stylized 3D assets, positioning it as an art-direction lever (style transfer as asset design) rather than only “make a model,” as shown in the Meshy remix reel.

The post is light on parameters, but the creative claim is concrete: art-history references become a palette for consistent asset families, per the Meshy remix reel.

Freepik Spaces shows up as a character lookdev surface with macro-detail crops

Freepik Spaces (character rendering): A “beautiful mechanics” workflow is being presented as a two-step deliverable—one full-frame character render plus a vertical strip of close-ups to validate skin shader, joints, and garment texture—according to the Freepik Spaces character set.

The example is a blue-skinned cyborg in a floral kimono-like garment with visible mechanical neck/limb components; the key detail is that Freepik Spaces is credited directly as the creation surface in the Freepik Spaces character set.

“Make it feel real” remains a guiding aesthetic, even in stylized portrait work

BLVCKLIGHTai (image outputs): A compact showcase format—two tightly related frames of the same subject—lands with the caption “I love making things real,” emphasizing craft around facial detail, jewelry surfaces, and a coherent surreal background, as shown in the Portrait diptych.

While no tool stack is named in the post, the deliverable pattern (paired variations that hold character identity and ornament detail) is the transferable idea, per the Portrait diptych.

Disintegration/decay studies are being shared as a reusable lookdev motif

Disintegration motif (image lookdev): A four-image set titled “we are dust.” focuses on high-detail material breakup—armor/robes dissolving into particulate dust and smoke-like fields—functioning as a reusable aesthetic target for fantasy/sci‑fi key art, as shown in the Disintegration image set.

The practical takeaway is the motif itself: crisp ornament detail at the “intact” boundary, then a controlled transition into granular debris, per the Disintegration image set.

🧍 Character continuity & control: AI influencers, reference reuse, and ‘lock this part’ requests

Posts cluster around keeping identity stable across shots: Higgsfield’s AI Influencer Studio, reusable reference prompts, and requests for masking/freezing parts of an output. This is about consistency systems more than raw video quality.

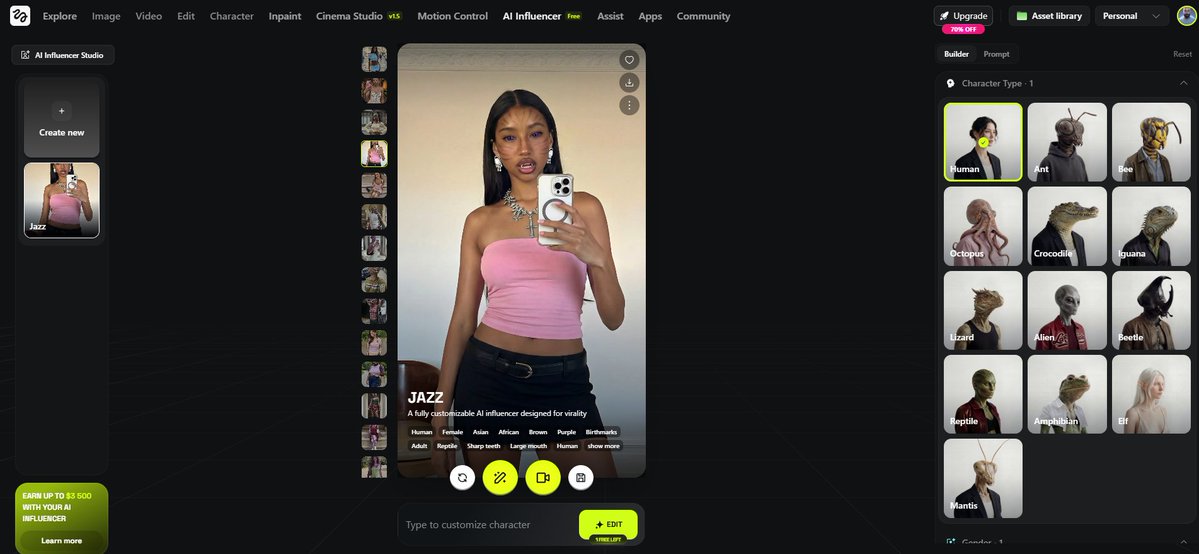

Higgsfield AI Influencer Studio demo focuses on in-editor identity controls

AI Influencer Studio (Higgsfield): Higgsfield’s AI Influencer Studio is being demoed as a “character continuity surface” where you tweak identity attributes (notably skin tone and eye color) and keep the same persona stable across outputs, as shown in the Influencer Studio screen walkthrough.

The UI capture in the Influencer Studio screen also shows the product framing as a saved character profile (“Jazz”) with tags and an export flow, which is the practical part for creators who want a reusable on-model “talent” rather than one-off renders.

• Customization lever: The demo emphasizes direct parameter edits (“tweak the skin tone, eye color”) rather than prompt-only steering, according to the Influencer Studio screen.

• Workflow pairing: The same thread positions the influencer generator alongside Motion Control as a matched pipeline, as referenced in the Try it link post.

A one-line “keep the vibes” prompt for reusing a character across scenes

Reference reuse prompt: A simple pattern is getting shared for character continuity across multiple environments: “portrait of him. keep the vibes, but now he’s in the middle of [place]”, with example outputs spanning Stonehenge, a GoT city scene, a ritual campfire, and a Mad Max-style desert—see the Reference image set where the same character read is preserved across contexts.

This is effectively a lightweight “identity lock” without formal character embeddings: you generate a clean reference image first, then keep re-issuing the same portrait directive while only swapping location, as described in the Reference image set.
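The pattern amounts to a fixed reference plus a single variable slot. This sketch assumes your tool accepts a reference image alongside a text prompt. `continuity_jobs`, `Job`, and the image path are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence

# The directive quoted in the post, with the location turned into the only slot.
DIRECTIVE = "portrait of him. keep the vibes, but now he's in the middle of {place}"


@dataclass(frozen=True)
class Job:
    reference_image: str  # the same clean reference image for every job
    prompt: str


def continuity_jobs(reference_image: str, places: Sequence[str]) -> List[Job]:
    """One job per location; nothing but {place} changes between prompts."""
    return [Job(reference_image, DIRECTIVE.format(place=place)) for place in places]
```

Calling `continuity_jobs("ref/hero.png", ["Stonehenge", "a ritual campfire"])` produces two jobs that differ only in the location text. The pattern depends on exactly that property.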

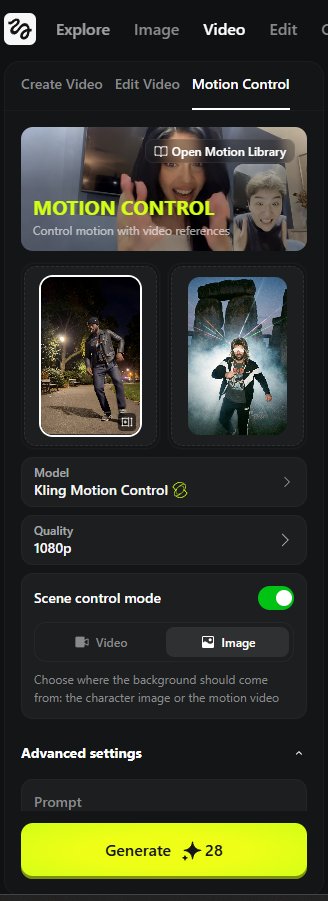

Higgsfield’s Kling Motion Control workflow highlights “scene control mode”

Kling Motion Control (Higgsfield): Higgsfield is showing a continuity-friendly Motion Control flow where you animate a consistent character image using a reference motion video, then decide whether the background comes from the character image or from the motion clip via “scene control mode,” as shown in the Motion Control UI screen.

The settings snapshot in the Motion Control UI calls out the key knobs creators actually touch: model set to Kling Motion Control, quality set to 1080p, plus the background-source toggle (“Video” vs “Image”) that determines what stays locked vs what can drift.

• Reference-first continuity: The broader thread frames this as “you’re calling the shots” because motion is constrained by an explicit reference clip, per the Workflow overview video.
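The knobs named in the UI capture map naturally onto a small settings object, which is handy for logging what each render used. This is a hypothetical representation for bookkeeping, not Higgsfield's or Kling's API. The field names and the `Image` default are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class SceneSource(str, Enum):
    VIDEO = "Video"  # background follows the reference motion clip
    IMAGE = "Image"  # background follows the character image


@dataclass(frozen=True)
class MotionControlJob:
    character_image: str   # the consistent character still
    motion_reference: str  # the clip whose motion drives the animation
    model: str = "Kling Motion Control"
    quality: str = "1080p"
    scene_source: SceneSource = SceneSource.IMAGE  # assumed default

    def background_from(self) -> str:
        """Which input the background is expected to come from under this setting."""
        if self.scene_source is SceneSource.IMAGE:
            return self.character_image
        return self.motion_reference
```

Logging one of these per render records which input supplied the background. That is the part of a shot most likely to change between otherwise identical runs.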

A photoreal selfie JSON spec bakes in anti-mirror constraints

Photoreal prompt constraints: Following up on Ordinary selfie (anti-polish realism prompt), underwoodxie96 shares a highly structured beach-at-night selfie spec that explicitly bans mirror artifacts via rules like “not a mirror selfie” and “no mirrored text or reflections,” alongside a long negative prompt list meant to prevent common identity/realism breaks, as shown in the Beach selfie JSON prompt.

The same prompt is also being circulated as a reusable template via a Prompt sharing page, which makes it easy to fork and add your own identity/scene constraints without rewriting the whole schema.
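Forking a long JSON spec without rewriting it is essentially a recursive merge. This minimal sketch assumes a simplified schema. Only the two anti-mirror rules are quoted from the post; the other keys are hypothetical. The merge policy is also an assumption chosen for prompt specs: nested objects merge, lists only gain new items, and scalars are replaced.

```python
import copy
import json
from typing import Any, Dict

# Hypothetical, trimmed-down schema; only the two anti-mirror rules come from the post.
BASE_SPEC: Dict[str, Any] = {
    "scene": {"setting": "beach", "time": "night", "shot": "selfie"},
    "rules": ["not a mirror selfie", "no mirrored text or reflections"],
    "negative_prompt": [],  # paste the shared template's long negative list here
}


def fork(base: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new spec; the base is never mutated."""
    result = copy.deepcopy(base)
    for key, value in overrides.items():
        current = result.get(key)
        if isinstance(current, dict) and isinstance(value, dict):
            result[key] = fork(current, value)  # merge nested objects key by key
        elif isinstance(current, list) and isinstance(value, list):
            result[key] = current + [v for v in value if v not in current]  # append new items only
        else:
            result[key] = copy.deepcopy(value)  # scalars (or new keys) are replaced
    return result


def to_prompt_json(spec: Dict[str, Any]) -> str:
    return json.dumps(spec, indent=2, ensure_ascii=False)
```

The merge appends to lists instead of replacing them on purpose. A fork should not silently drop inherited constraints such as the anti-mirror rules.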

Character reference fidelity debate: Midjourney Omni reference vs Nano Banana

Character reference fidelity: A practitioner claim making the rounds is that Midjourney’s character Omni reference can hold closer to an original character than Nano Banana Pro in some cases, with Nano Banana described as drifting toward a more generic output “away from the original char ref image,” per the Omni reference comparison note.

There’s no shared benchmark artifact in the tweets, so treat it as anecdotal—still useful as a routing heuristic when a project’s failure mode is identity drift.

Creators ask for masking-style ‘freeze’ controls during iterative generation

Partial locking request: A recurring control request is getting stated plainly—being able to “lock” certain parts of an output (mask out what you like and freeze it) while continuing to explore other areas (background, styling, variations), as described in Linus Ekenstam’s Lock parts request comment.

This is a continuity need more than a quality complaint: it’s about keeping identity-critical regions stable while still letting the model search elsewhere.
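To illustrate what "freeze" could mean mechanically, here is a minimal sketch that assumes a per-pixel binary lock mask. Locked pixels are copied from the output you liked, and everything else comes from the new generation. Real tools would more likely blend in latent space with feathered edges; this pure-Python version on grayscale lists only shows the compositing rule, and the function name is hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values
Mask = List[List[int]]     # 1 = locked (keep), 0 = free to regenerate


def freeze_regions(kept: Image, fresh: Image, mask: Mask) -> Image:
    """Composite a new generation under a lock mask.

    Pixels where mask == 1 come from the kept output; all others come from
    the fresh generation. All three inputs must share the same dimensions.
    """
    if not (len(kept) == len(fresh) == len(mask)):
        raise ValueError("height mismatch")
    out: Image = []
    for k_row, f_row, m_row in zip(kept, fresh, mask):
        if not (len(k_row) == len(f_row) == len(m_row)):
            raise ValueError("width mismatch")
        out.append([k if m else f for k, f, m in zip(k_row, f_row, m_row)])
    return out


kept = [[0.9, 0.9], [0.1, 0.1]]   # iteration you liked (e.g. a face in the top row)
fresh = [[0.2, 0.3], [0.6, 0.7]]  # new variation
mask = [[1, 1], [0, 0]]           # lock the top row only
result = freeze_regions(kept, fresh, mask)
print(result)
```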

🧩 Automation agents for creators: OpenClaw life glue, scraping, and daily challenge culture

OpenClaw/Tinkerer‑Club style automation dominates: permissioning, bot ‘identity’ setup, scraping without captchas, and lightweight SDK building. Excludes pure coding IDE news (covered in Coding agents).

OpenClaw scraping workflow claims captcha-free runs and a $0 automation loop

OpenClaw (Kitze/Tinkerer Club): A creator claim frames OpenClaw as finally usable for “real” automation by getting scraping/automation to run without captcha interruptions and without paid humans—“costs $0, humans not involved,” plus a “tiny SDK” layered on top for reuse, as described in the Scraping breakthrough note.

The practical takeaway for creative ops is the implied shift from one-off automations to repeatable, shareable “skills” wrappers (SDK) that can power recurring tasks (research pulls, asset fetching, posting prep) without babysitting—though no repo, demo, or technical proof is shown in the tweets.

OpenClaw community starts “daily challenges” to build bot identities fast

OpenClaw (Tinkerer Club): A community “gym” cadence is being proposed: one OpenClaw improvement per day for 30 days, starting with bot “identity” plumbing—email address, phone number, GitHub account, and optionally iCloud—spelled out in the Challenge Day 1 thread.

• Why creatives care: This is a lightweight way to operationalize agents as persistent collaborators (accounts, logins, callback flows) instead of one-off chat sessions, with the “identity” layer treated as day-one infrastructure per the Challenge Day 1 thread.

Einsia launches an Overleaf-native AI assistant for LaTeX and research workflows

Einsia AI (Overleaf plugin): Einsia.ai is described as “officially live,” positioning itself as an AI writing partner embedded directly in Overleaf so researchers can generate images, search papers, and fix LaTeX errors without tab switching, as announced in the Launch note and described again in the Workflow summary.

The product surface is the point here: in-editor assistance (vs. chat in another window) tends to win for long-form writing and doc-heavy storytelling workflows where context lives inside the manuscript—see the install/landing details in the Plugin site.

Least-privilege OpenClaw setup: rebuild skills from scratch per agent role

Agent permissioning (OpenClaw): A least-privilege pattern is being pushed as a default: “nerf” existing agents and re-add skills/tools deliberately so roles don’t overreach—e.g., “your accountant doesn’t need access to your home assistant,” “support agent doesn’t need access to your NAS,” as stated in the Least-privilege thread.

This reads like a move from “one super-agent” toward compartmentalized creative ops agents (finances, publishing, customer support, file ops) where access boundaries are part of the build, not an afterthought.
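A minimal sketch of the deny-by-default pattern, assuming skills are identified by plain strings. The role and skill names are hypothetical, and this is not OpenClaw's configuration format. It only shows the principle that each role starts empty and gets skills added back explicitly.

```python
from typing import Dict, FrozenSet

# Hypothetical role -> allowed-skills map; not OpenClaw's actual config format.
# Every role starts with nothing; skills are granted back one at a time.
ROLE_SKILLS: Dict[str, FrozenSet[str]] = {
    "accountant": frozenset({"read_invoices", "export_csv"}),
    "support": frozenset({"read_tickets", "send_email"}),
    "file_ops": frozenset({"nas_read", "nas_write"}),
}


class PermissionDenied(Exception):
    pass


def authorize(role: str, skill: str) -> None:
    """Raise unless the skill was explicitly granted to the role (deny by default)."""
    allowed = ROLE_SKILLS.get(role, frozenset())  # unknown roles get no skills
    if skill not in allowed:
        raise PermissionDenied(f"{role!r} may not use {skill!r}")


authorize("file_ops", "nas_read")  # granted, so no exception
try:
    authorize("support", "nas_read")  # the support agent doesn't need the NAS
except PermissionDenied as err:
    print(err)
```

Unknown roles falling through to an empty set is the important detail: a new agent can do nothing until someone deliberately grants it a skill.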

Phone-rig “bot farm” setups surface as real distribution infrastructure

Automation ops culture: A Discord screenshot shows a 20‑phone Android rig used for app distribution and “TikTok account warming,” with the creator noting the maintenance burden, as captured in the Phone rig screenshot.

This matters to growth-focused creative teams because it sketches the non-obvious cost center behind scaled agent content/distribution workflows: device management, account health, and ongoing upkeep—not generation quality.

A Raspberry Pi running OpenClaw gets framed as a giftable “personal bot”

OpenClaw on Raspberry Pi (Kitze): The “agent as an object” idea shows up as a Valentine’s concept: a Raspberry Pi running OpenClaw inside a lobster shell with a “You’re my Pi” tag, shared in the Gift concept post.

It’s a small but real signal that creators are treating automation agents like personal devices (dedicated hardware, identity separation, always-on presence), not just software tabs.

🧑‍💻 Coding with agents: Codex on macOS, agent self-improvement loops, and model↔agent products

Developer-side agent tooling shows up in multiple threads: OpenAI’s Codex app on macOS, Microsoft’s RL-driven ‘agent loop’ fixer, and agent products fine-tuning their own coding models. This is separate from creator automation agents.

OpenAI launches the Codex app on macOS as an agent command center

Codex app (OpenAI): OpenAI announced the Codex app for macOS, positioning it as a “command center for building with agents,” per the Codex app announcement; the product framing emphasizes agents that can take on end-to-end engineering tasks plus parallel workflows, as described on the product page.

What’s concrete from the launch messaging is the intent to centralize multi-agent development work (PRs, migrations, docs/prototyping) into a dedicated desktop surface rather than a chat pane; the tweets don’t include pricing or performance numbers, and there’s no screenshot of the macOS UI in today’s set.

• Workflow shape: The page highlights “parallel workflows” and end-to-end task completion as the primary value prop, per the details on the product page.

This reads as OpenAI pushing the “coding harness” layer into a first-party app, not just an API feature set.

Microsoft open-sources Agent Lightning to RL-train agents that fix their own prompts

Agent Lightning (Microsoft): A thread claims Microsoft “solved the Agent Loop problem” with Agent Lightning, an open-source framework where an agent fails a task, the system analyzes why, then updates the prompt so the next run succeeds, according to the Agent Lightning claim and the linked GitHub repo.

The repo appears to be gaining traction (stars/forks are mentioned in the page summary), but the tweets don’t provide benchmark tasks, success rates, or a minimal reproducible example—so treat the “solved” framing as promotional until there’s a standard eval or a widely replicated demo.

• Core mechanic: Reinforcement-learning-driven prompt revision (“fails → analyze → update → rerun”) is the central loop described in the Agent Lightning claim, with implementation details implied by the GitHub repo.
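To make the "fails → analyze → update → rerun" shape concrete, here is a minimal sketch of the outer loop only. It is not Agent Lightning's API. `run_task`, `diagnose`, and `revise_prompt` are hypothetical stand-ins: a real system would call a model for each, and per the thread would use reinforcement learning for the revision step rather than a fixed rule.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Attempt:
    prompt: str
    success: bool
    feedback: str


def improve_until_success(
    prompt: str,
    run_task: Callable[[str], Tuple[bool, str]],  # hypothetical: returns (success, output)
    diagnose: Callable[[str], str],              # hypothetical: turns a failure into a lesson
    revise_prompt: Callable[[str, str], str],    # hypothetical: folds the lesson into the prompt
    max_rounds: int = 3,
) -> List[Attempt]:
    """Fail -> analyze -> update prompt -> rerun, for at most max_rounds attempts."""
    history: List[Attempt] = []
    for _ in range(max_rounds):
        ok, output = run_task(prompt)
        history.append(Attempt(prompt, ok, output))
        if ok:
            break
        lesson = diagnose(output)
        prompt = revise_prompt(prompt, lesson)
    return history


# Toy stand-in: the task only succeeds once the prompt asks for JSON output.
def run_task(p: str) -> Tuple[bool, str]:
    return ("JSON" in p, "ok" if "JSON" in p else "parse error: expected JSON")


history = improve_until_success(
    "Summarize the ticket.",
    run_task,
    diagnose=lambda err: "Respond in JSON." if "JSON" in err else "",
    revise_prompt=lambda p, lesson: f"{p} {lesson}".strip(),
)
for a in history:
    print(a.success, a.prompt)
```

Keeping the full attempt history, not just the final prompt, is what lets a training system learn from the failures.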

Qoder ships a custom Qwen-Coder-Qoder model tuned for its agent runtime

Qoder (Qoder): Qoder “just launched Qwen-Coder-Qoder,” described as a custom model fine-tuned specifically for Qoder’s agent, with the author framing it as the “Model–Agent–Product loop” in practice in the custom model claim.

The notable creator-facing implication is vertical integration: instead of swapping in general-purpose coding models, the product claims it can tune a model around the agent’s operating patterns (tool calls, planning format, patch style). Today’s tweets don’t include public evals (e.g., SWE-bench) or even a before/after example beyond “I gave it a real coding task and walked away,” per the custom model claim.

A Grok 4.1 prompt pack spreads for generating complete web applets with a design spec

Grok 4.1 (xAI): A creator thread calls Grok 4.1 “a monster” for automating research and app building, and includes a copy/paste prompt that asks for complete HTML/CSS/JS code plus a specific design system (glassmorphism, responsive layout, typography, copyright line), as shown in the Grok 4.1 thread and repeated verbatim in the applet prompt.

The practical artifact here is the prompt structure: it encodes the “front-end spec as a constraint block” pattern—useful for creative coders generating microsites, interactive story prototypes, or portfolio pages.

• Prompt content: The template explicitly requests “expert-level code,” modern CSS styling (glassmorphism + gradient), mobile responsiveness, and clean comments, per the applet prompt.

Agent Opus is claimed to do one-click article-to-video generation

Agent Opus (unknown vendor): A post claims “Agent Opus” can generate a complete video from a news article “in literally one click,” framing it as removing traditional editing steps and stock-footage search, per the one-click claim.

There’s no linked demo, product page, or breakdown of what “complete video” includes (script, voice, b-roll selection, captions, aspect ratios), so the evidence level is low today; still, it’s directly in the lane of agentic creative tooling where the agent is operating as an assembler/editor, not just a generator.

SkillBoss is pitched as a Claude add-on that prioritizes execution over setup

SkillBoss (ecosystem): SkillBoss is being positioned as an add-on that makes “Claude into a real shipping machine,” with the core promise summarized as “way less setup, way more execution,” per the SkillBoss claim and the amplification in the thread repost.

There are no concrete implementation details in today’s tweets (no repo, no workflow screenshots, no example tasks), so this reads more like early positioning than a spec; still, it’s a clear signal that the market is fragmenting into “Claude + harness + packaged skills” products rather than raw model choice alone.

“Everything needs to become an API” resurfaces as agent-native software framing

Agent-native product design: The line “Everything needs to become an API” shows up as a compact thesis about how software should be shaped for agents—smaller callable surfaces, fewer bespoke UIs, and more machine-operable actions—per the API mantra.

It’s not a product announcement, but it’s a useful lens for why teams keep building wrappers, harnesses, and tool layers around creative pipelines: if the workflow can be called, it can be delegated, scheduled, and composed. The tweet doesn’t attach examples or metrics; it’s a direction-of-travel signal.

🏁 What shipped: short films, reels, music-video experiments, and creator drops

Finished or ‘release-shaped’ outputs: commissioned AI shorts, serialized reels, and multi-tool experiments presented as completed pieces. This category is about the work itself (not the prompts or the underlying model capabilities).

Showrunner posts a 42-second “Be our Valentine” remix

Showrunner (Fable Simulation): Following up on Watch-like-TV plan (Showrunner as a watchable surface), the team posted a ~42-second “Be our Valentine” remix clip, positioned as something you can “remix on Showrunner,” per the Valentine remix post.

The “watch it like a show” UI vibe is reinforced by a customized remote mockup shown in the Remote teaser.

AI FILMS Studio spotlights the fully AI-generated short “Tunnel Vision”

Tunnel Vision (Josef Scholler): AI FILMS Studio’s “AI Filmmaker Spotlight” featured “Tunnel Vision,” described as a fully AI-generated short following a fictional French soccer player through a stadium tunnel where past/present collide, as introduced in the Spotlight post.

Distribution details (where it’s hosted, what tools were used, and whether there’s a longer cut) aren’t included in the post beyond the spotlight framing.

DrSadek adds new episodes to the titled micro-reel series

DrSadek titled reels: Building on Titled reels (serial micro-episodes), DrSadek dropped multiple new short pieces today, including “Portal of Mist and Light” and “Iron Sorrow,” with tool credits continuing to rotate across Midjourney and video models, per the Portal post and Iron Sorrow post.

Additional episodes posted the same day include “The Monolith of First Words,” as shown in the Monolith post, and “The Fractured Celestial,” per the Fractured Celestial post.

Metal Monday drops: a roach POV buffet tour and an Asteroids-style music video

Metal Monday (bennash): Two release-shaped edits landed today using Genie as the visual base—“Why You So Nasty” (a roach POV buffet “tour”) as shared in the Roach POV drop, and “Beep Beep Pew Pew,” an Asteroids-style music-video edit described as combining clips from Grok + Genie in the Asteroids MV post.

The second piece leans into retro arcade pacing and hard-cut montage structure.

0xInk_ posts an AI Gen VFX-heavy promo for a clothing brand

koyoyu studio promo (0xInk_): A clothing brand promotion video was shared with the creator crediting their role as an “AI Gen VFX artist,” and calling out the team behind the brand, according to the Promo video post.

An AirPods Pro-style commercial parody circulates as an AI ad-quality flex

AirPods-style parody spot: A commercial-style clip framed as an AirPods Pro ad parody was shared as a realism/quality flex in the Ad parody post.

The post’s claim about Apple’s reaction isn’t independently substantiated in the tweet; what’s verifiable here is the ad grammar (blocking, cuts, on-screen typography) in the clip itself.

VVSVS shares a new short film cut built around recurring shots

Short film practice cut (_VVSVS): A creator shared a new short film described as having recurring shots, a theme, and a basic script—explicitly framed as less polished than a prior piece, but still part of the “worlds you can build,” per the Short film share.

“Woodnuts” lands as a new short-film drop

Woodnuts (Gossip_Goblin): A new short film titled “Woodnuts” was posted and framed as “the first of many short films to come,” as shown in the Woodnuts share.

A new character reel ships with Midjourney, Nano Banana Pro, Kling, and Suno credits

Character reel (Anima_Labs): A “new week = new character” reel shipped with an explicit tool stack—Midjourney + Nano Banana Pro + Kling (animation) + Suno (music)—as listed in the Tool stack credit.

GenMagnetic posts an “Inception with Genie 3” clip as a standalone drop

Genie 3 (GenMagnetic): A standalone “Inception with Genie 3” clip was posted as a finished micro-edit in the Inception clip post.

📣 AI marketing creatives: UGC factories, brand guidelines, and text-to-video editing for teams

Creators are explicitly optimizing for ads and social output: AI UGC at scale, brand kit/guideline generation, and editing workflows designed for speed. Excludes the underlying prompt libraries (captured in Prompts & look recipes).

Clawdbot + MakeUGC V2 pitches a Brazil-style TikTok Shop UGC factory

Clawdbot + MakeUGC V2 (TikTok Shop UGC): Following up on UGC agent pipeline, the thread reframes the same automation stack as a “Brazil” TikTok Shop format: one calm, repeatable UGC demo template gets cloned as a creator style, the product in hand gets swapped, and the account posts daily at scale. The pitch claims early movers in the US can push $400k/month, while the backend throughput claim remains 620 videos/day, as described in Brazil format pitch and repeated in Retweet context.

• Factory loop: The described loop is “one clean UGC format” → “AI recreates the same creator style” → “AI swaps the product in hand” → “AI posts daily at scale,” with “no filming” and “no creators to manage,” per the details in Brazil format pitch.

• Operational framing: It’s positioned as an always-on ad testing engine (“creating, testing, and scaling short-form ads automatically”), which is the explicit justification for the 620/day claim in Brazil format pitch.

Nano Banana Pro “brand kit in 30 seconds” boards spread as a client deliverable

Nano Banana Pro (Brand guideline boards): A repeatable deliverable format is emerging where Nano Banana Pro outputs a full “brand base doc” plus auto-adapted key visuals, palette hex codes, and mockups, framed as “30 seconds → $1,000 brand kit” in Brand kit claim. Separate examples show the same grid-style guideline board (hero image, IG post mock, palette, type, logo lockup, archetype/voice/visuals) for established brands like Durex, Braun, Sony, and SEGA in Guideline board examples.

• What the board contains: The examples in Guideline board examples consistently include archetype + voice + visuals cards alongside palette and typography, which makes it usable as a client-facing spec rather than a prompt-only artifact.

• Why it’s sticky: The pitch in Brand kit claim isn’t “better images”; it’s packaging—one prompt producing a coherent kit that can be dropped into decks, ads, and social templates.

500M-impression study claims AI ads match human CTR when perceived as authentic

AI ad performance study (Taboola Realize): A thread claims Columbia/Harvard/Carnegie Mellon researchers analyzed 500 million ad impressions and 3 million clicks on Taboola’s Realize platform and found that AI-generated ads can reach click-through rates comparable to human creative when users perceive them as authentic, as summarized in Study recap.

The framing matters for creative teams because it treats “authenticity cues” (especially human-face believability) as the practical constraint for scaling genAI ad production, not just raw generation cost—though the tweets don’t include a linkable paper artifact or breakdown tables beyond the summary image in Study recap.
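For scale, the two headline numbers imply a pooled click-through rate of roughly 0.6% across all ads in the dataset. This is simple arithmetic on the figures quoted in the recap, not a number reported by the study, and it says nothing about the AI-vs-human split.

```python
impressions = 500_000_000  # as quoted in the thread (approximate)
clicks = 3_000_000         # as quoted in the thread (approximate)

pooled_ctr = clicks / impressions  # clicks per impression, all ads combined
print(f"{pooled_ctr:.2%}")  # 0.60%
```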

OnlyFans deal chatter highlights PE risk lens on AI-made creators and competitors

Synthetic creator monetization (OnlyFans/Fanvue): A tweet cites an Axios snippet claiming Architect Capital is in exclusive talks to buy a majority stake in OnlyFans at a $5.5B enterprise value, then frames diligence as “risk for disruption from AI” driven by synthetic creator content, as shown in the screenshot in OnlyFans stake chatter.

• Competitive surface: Replies argue “you have to go to open source” to run the automation reliably and add that OnlyFans doesn’t allow it “(yet),” while Fanvue does and already has successful AI models, per Fanvue allows it.

• Distribution signal: A follow-up notes a “shockingly high %” of an IG Reels feed is now AI creators advertising Fanvue, suggesting the funnel is already social-first, as observed in Reels feed observation.

Pictory promotes text-based video editing where transcript changes update visuals

Pictory (Text-based editing): Pictory is pushing an editing workflow where the script/transcript is the control surface—edit the text to change the cut, and captions plus visuals update without traditional timeline work, as shown in the product graphic in Text edit pitch and documented via the linked walkthrough in Text editing tutorial.

The positioning is explicitly team-oriented (“L&D teams… need speed and clarity”), which puts this in the same “marketing ops” bucket as UGC factories—less about creative discovery, more about faster iteration and versioning, per Text edit pitch.
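The transcript-as-control-surface idea can be sketched as a mapping from transcript segments to source time ranges, where deleting text from the transcript deletes the matching ranges from the cut. This is a hypothetical data model for illustration, not Pictory's implementation or API. Matching segments by exact text is a simplification; a real editor would track stable segment IDs.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Segment:
    text: str     # caption/transcript text for this span
    start: float  # seconds into the source media
    end: float


def recut(segments: List[Segment], kept_text: List[str]) -> List[Tuple[float, float]]:
    """Return the source time ranges to keep, in original order.

    A segment survives only if its text is still present in the edited
    transcript. Captions and visuals stay in sync because both are keyed
    to the same segment.
    """
    keep = set(kept_text)
    return [(s.start, s.end) for s in segments if s.text in keep]


segments = [
    Segment("Welcome to onboarding.", 0.0, 2.5),
    Segment("Um, let me share my screen.", 2.5, 5.0),
    Segment("First, open the dashboard.", 5.0, 8.0),
]
# The editor deletes the filler line from the transcript:
edited = ["Welcome to onboarding.", "First, open the dashboard."]
print(recut(segments, edited))  # [(0.0, 2.5), (5.0, 8.0)]
```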

💳 Bundles, memberships, and creator-access economics (only the big swings)

A small set of posts focus on meaningful access/pricing shifts: multi-model subscription bundling claims and community membership economics. Minor giveaways are ignored; this is only ‘changes how you buy the stack’ content.

Claim: Disney+ will let subscribers generate 30-second franchise videos “powered by Sora”

Disney+ member generation (Disney+ + Sora): A claim is circulating that Disney+ will introduce AI-generated content in September, letting subscribers generate 30-second clips using major Disney franchises and stating it’s “powered by Sora,” as written in the Disney+ Sora claim post.

This would represent a major shift in “AI creation as a subscription feature,” but the tweet doesn’t cite an official Disney/OpenAI announcement or program details (limits, rights, watermarking, or pricing), per the single-source framing in Disney+ Sora claim.

GlobalGPT pitches one subscription for 100+ top models

GlobalGPT (GlobalGPT): A new “all-in-one” subscription pitch is circulating that claims one interface can replace multiple separate model subscriptions—framed as ChatGPT + Claude + Midjourney + Perplexity under one roof—along with a savings claim of $960/year, as described in the Bundle pitch thread.

• Model menu as the product: The screenshots/video emphasize picking “the best model for the task,” listing options like “GPT-5.2” and “Claude 4.5 Sonnet” alongside search/chat tools, per the Model list clip follow-up.

• Access/pricing claims are promotional: The thread asserts premium models are included “free” and pushes a free trial link, as stated in the Try it free post; there’s no independent verification artifact in these tweets beyond the creator’s walkthrough.

Luma Dream Brief: $1M prize competition with a March 22 deadline

Luma Dream Brief (Luma Labs): Luma is pushing a $1,000,000 global ad-idea competition where creators can produce “the idea you never stopped thinking about” without client approvals, with submissions due March 22, as stated in the Contest details and Make it anyway pitch posts.

• Economic angle: The pitch positions the prize pool as an alternative to brand/client gatekeeping (“No client. No approvals.”), per the language in the Contest details tweet.

• Creative constraint: The framing is “unmade advertising idea,” suggesting the constraint is concept and execution craft rather than an existing commission, as described in the Make it anyway pitch post.

Kitze posts $100k+ and $150k 30-day revenue screenshots as proof of demand

Creator monetization (Kitze): Revenue screenshots and breakdown posts are being used as “proof-of-demand” for paid communities and creator tools—one screenshot shows $100,373 “Revenue 30 Days,” as shown in the Revenue screenshot post, while a separate breakdown claims ~$150,641 across multiple products/sponsors, per the 30-day breakdown tweet.

• Portfolio-style mix: The breakdown itemizes income streams (community, apps, sponsorships, calls), framing the business as many small products rather than one hit, as written in the 30-day breakdown post.

• Creator economy as marketing: The “accountant call” clip functions as social proof/virality around the numbers, as shown in the Accountant video follow-up.

Sundance claims AI training for 100,000 filmmakers backed by $2M from Google.org

Sundance Collab AI education (Sundance + Google.org): A widely shared graphic claims Sundance will “train 100,000 filmmakers in AI” via a 3-year program, backed by $2M from Google.org and delivered through Sundance Collab, as shown in the Program graphic post.

The tweet’s accompanying commentary frames the funding as incentive design and dependency risk rather than neutral support, per the critique in Program graphic.

Tinkerer Club membership shown at $199 with limited spots

Tinkerer Club (Kitze): A pricing/access screenshot shows the builder community positioned as a paid membership at $199, with scarcity framing (“19 left”) and a pitch around “digital sovereignty,” as shown in the Pricing screenshot post.

The same thread context links back to the public membership site, as summarized via the Membership page card in the Community plug tweet.

Hailuo AI says its 48-hour Ultra giveaway challenge has ended

Hailuo AI (MiniMax): Hailuo says its “48 Hours Only” promo challenge is finished and that winners have been contacted via DM, as announced in the Challenge closed notice update.

The post frames this as a time-boxed acquisition mechanic (“50 Free Ultra Memberships”), but it doesn’t include any public list of winners or details on redemption beyond DMs, per the Challenge closed notice text.

📚 Research radar for creators: benchmarks, interpretability, agent training, and creative tooling papers

Research/benchmark posts are unusually dense today (papers + leaderboards), with direct relevance to agent reliability, evaluation in social games, and creative workflow automation. No bioscience topics are included.

Game Arena expands to Werewolf and Poker to test ambiguity and persuasion

Game Arena (Google DeepMind, Kaggle): DeepMind’s benchmarking initiative expanded beyond chess to include Werewolf and Poker, explicitly targeting skills like contextual communication, consensus-building, and decision-making under uncertainty, according to the Game Arena update and the accompanying DeepMind blog post.

• Why this matters for agentic creative tools: The benchmark focus shifts from “perfect information” tasks toward social interaction patterns (bluffing, alignment-building, interpreting intent), as described in the DeepMind blog post.

• Model visibility: The write-up highlights Gemini performance in the arena (including chess leaderboard results), as reported in the DeepMind blog post.

This is one of the cleaner signals today that “can it collaborate and persuade?” is becoming a first-class eval axis, not just a vibes claim.

ASTRA tries to industrialize tool-agent training data and eval environments

ASTRA (paper): A fully automated pipeline is pitched for training tool-augmented LLM agents by synthesizing diverse trajectories from tool-call graphs and generating rule-verifiable “reinforcement arenas” for multi-turn RL stability, as summarized in the ASTRA paper share and detailed in the Hugging Face paper.

For creative automation (research-to-assets, doc-to-video, multi-step editing), the practical relevance is reducing hand-authored agent curricula and making long-horizon tool use more reproducible—ASTRA’s proposed unification of SFT + online RL is outlined in the Hugging Face paper.
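One way to picture "synthesizing diverse trajectories from tool-call graphs" is to treat tools as nodes, draw an edge when one tool's output can feed another, and sample paths as candidate multi-step tasks. The sketch below is a generic illustration under that assumption, with hypothetical tool names. It is not ASTRA's pipeline, which per the paper also adds rule-verifiable environments and RL training on top.

```python
import random
from typing import Dict, List

# Hypothetical tool graph: an edge A -> B means B can consume A's output.
TOOL_GRAPH: Dict[str, List[str]] = {
    "search_papers": ["fetch_pdf"],
    "fetch_pdf": ["extract_figures", "summarize"],
    "extract_figures": ["caption_image"],
    "summarize": ["draft_script"],
    "caption_image": [],
    "draft_script": [],
}


def sample_trajectory(graph: Dict[str, List[str]], start: str,
                      rng: random.Random, max_len: int = 5) -> List[str]:
    """Random walk from `start` until a tool has no successors or max_len is hit."""
    path = [start]
    while len(path) < max_len and graph[path[-1]]:
        path.append(rng.choice(graph[path[-1]]))
    return path


rng = random.Random(0)  # seeded so the sample set is reproducible
samples = {tuple(sample_trajectory(TOOL_GRAPH, "search_papers", rng)) for _ in range(20)}
for s in sorted(samples):
    print(" -> ".join(s))
```

Each sampled path is a valid chain of tool calls by construction, which is what makes it usable as a seed for a synthetic multi-turn task.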

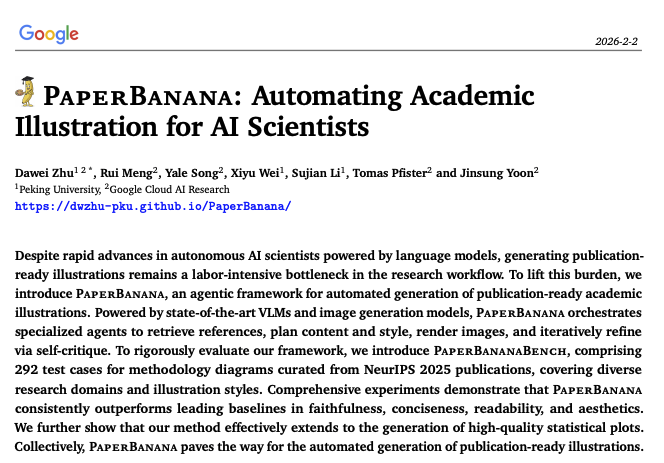

PaperBanana targets the “figure-making bottleneck” in AI research writing

PaperBanana (Google Cloud AI Research, PKU): A new agentic framework aims to auto-generate publication-ready academic illustrations—positioned as a workflow bottleneck for “AI scientist” systems—while also introducing PaperBananaBench for evaluation, as shown in the PaperBanana title slide.

For creative teams that support research, docs, or technical storytelling, the immediate relevance is faster diagram/figure iteration without hand-assembling assets; the tweet doesn’t include implementation details beyond the stated agentic approach and benchmark naming in the PaperBanana title slide.

TTCS uses a co-evolving synthesizer to make test-time training less brittle

TTCS (paper): A test-time training framework proposes two co-evolving policies—a question synthesizer that creates progressively harder variants and a reasoning solver trained via self-consistency rewards—aiming to stabilize online improvement on hard reasoning problems, per the TTCS paper share and the linked Hugging Face paper.

For creators building agentic pipelines (story planning, research assistants, iterative tool-users), the core takeaway is a concrete recipe for “self-improvement at inference time” that doesn’t rely on external labels—details and claims live in the Hugging Face paper.
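A self-consistency reward of the kind TTCS describes is commonly computed by sampling several answers, taking the majority answer as a pseudo-label, and rewarding the samples that agree with it. The sketch below shows that generic recipe under that assumption; the paper's exact reward shaping may differ.

```python
from collections import Counter
from typing import List


def self_consistency_rewards(answers: List[str]) -> List[float]:
    """Reward 1.0 for each sampled answer matching the majority vote, else 0.0.

    No external label is used: the majority answer acts as the pseudo-label.
    Ties go to the answer encountered first (Counter.most_common ordering).
    """
    if not answers:
        return []
    majority, _ = Counter(answers).most_common(1)[0]
    return [1.0 if a == majority else 0.0 for a in answers]


samples = ["42", "42", "41", "42", "40"]  # final answers from 5 solver rollouts
print(self_consistency_rewards(samples))  # [1.0, 1.0, 0.0, 1.0, 0.0]
```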

A “Turing test” framing for personalized visuals shows up as an eval proposal

Visual Personalization Turing Test (paper): A Turing-test-style evaluation framework is proposed for systems that generate personalized visual content, emphasizing human judgment around whether outputs match user intent/preferences rather than generic quality alone, as shared in the Paper listing and described in the Hugging Face paper.

For design and storytelling workflows, this is an explicit attempt to formalize “does it feel like it was made for me?” as an eval target—useful context as personalization becomes a product requirement across creative tooling, with the setup outlined in the Hugging Face paper.

Golden Goose proposes a scalable RLVR data trick via fill-in-the-middle MCQs

Golden Goose (paper): A method constructs multiple-choice “fill-in-the-middle” problems from otherwise unverifiable internet text to synthesize RLVR tasks at scale—explicitly framed as a way around RLVR dataset saturation—per the Golden Goose paper share and the linked Hugging Face paper.

The paper claims strong results on small-to-mid models (notably 1.5B and 4B) and demonstrates an application in cybersecurity, as described in the Hugging Face paper. For creators, the relevance is indirect but real: better RL data recipes tend to flow downstream into more reliable instruction-following and tool-use behaviors in creative agents.
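Here is a rough sketch of that construction, under stated assumptions. One sentence is cut from the middle of a passage and shuffled in with distractors, and the correct letter becomes an exact-match reward. Taking distractors from a separate pool of sentences is an assumption made here for simplicity; the paper's actual distractor generation and filtering may differ, and the function names are hypothetical.

```python
import random
from typing import Dict, List


def make_fim_mcq(passage: List[str], mask_idx: int, distractor_pool: List[str],
                 n_options: int, rng: random.Random) -> Dict[str, object]:
    """Build a verifiable multiple-choice item by masking one middle sentence.

    Distractors are drawn from a separate pool of sentences (a simplifying
    assumption). The answer key is a single letter, so grading is exact match.
    """
    if not 0 < mask_idx < len(passage) - 1:
        raise ValueError("mask a middle sentence so both prefix and suffix exist")
    if not 2 <= n_options <= 8:
        raise ValueError("n_options must be between 2 and 8")
    answer = passage[mask_idx]
    pool = [s for s in distractor_pool if s != answer]
    options = rng.sample(pool, n_options - 1) + [answer]
    rng.shuffle(options)
    letters = "ABCDEFGH"[:n_options]
    return {
        "prefix": " ".join(passage[:mask_idx]),
        "suffix": " ".join(passage[mask_idx + 1:]),
        "options": dict(zip(letters, options)),
        "answer": letters[options.index(answer)],
    }


def reward(item: Dict[str, object], predicted_letter: str) -> float:
    """Binary, rule-verifiable reward: 1.0 only for the correct letter."""
    return 1.0 if predicted_letter == item["answer"] else 0.0


passage = [
    "The server rejected the request.",
    "Its certificate had expired the night before.",
    "Clients retried for an hour.",
]
other_docs = [
    "The cache was warmed at startup.",
    "A firewall rule blocked port 443.",
    "The build passed on the second try.",
]
item = make_fim_mcq(passage, mask_idx=1, distractor_pool=other_docs,
                    n_options=3, rng=random.Random(7))
print(item["options"], "answer:", item["answer"])
```

The point of the format is the last function: text that has no checkable answer on its own becomes a task with a mechanically gradable one.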

THINKSAFE frames “refusal steering” as safety that doesn’t blunt reasoning

THINKSAFE (paper): A safety-alignment approach for large reasoning models uses self-generated alignment (no external teacher) and “lightweight refusal steering” to reduce harmful compliance while trying to preserve reasoning capability, according to the THINKSAFE paper share and the linked Hugging Face paper.

For studios deploying reasoning models in creative workflows (script assistants, ideation copilots, production coordinators), the key idea is a training/finetuning direction that explicitly targets the compliance-vs-safety tradeoff; the mechanism and evidence are in the Hugging Face paper.

VisGym positions environment diversity as an agent capability multiplier

VisGym (paper/project): A project called VisGym is shared as a suite of “diverse, customizable, scalable environments” for multimodal agents, with the teaser emphasizing breadth of scene types and interactions in the VisGym share.

The creator-relevant angle is evaluation and training infrastructure for agents that must see, navigate, and manipulate visual worlds (a prerequisite for robust worldbuilding tools and simulated cinematography); today’s tweet is primarily a pointer plus visual reel in the VisGym share.

A causal dynamics framing for control shows up with a concrete block-manip demo

Causal world modeling for robot control (paper/project): A demo video shows a robot arm interacting with objects while on-screen overlays indicate predicted next states—framed as “causal world modeling” for planning/control—per the Robot control demo.

This is adjacent to creator tooling because the same causal-dynamics ideas tend to feed simulation and world-model directions (predicting state transitions under actions), but the tweet’s concrete evidence is the task video and paper pointer in the Robot control demo.

TensorLens treats a transformer as a single input-dependent operator

TensorLens (paper): A transformer interpretability framework proposes representing the full model as a unified, input-dependent linear operator via a high-order attention-interaction tensor that folds in attention, FFNs, norms, and residuals, per the TensorLens paper share and the linked Hugging Face paper.

For creator-facing tool builders, this sits in the “debuggability” bucket—more coherent whole-model analysis can translate into better tools for inspecting why a model is drifting or failing on structured creative tasks, with the approach summarized in the Hugging Face paper.

📈 Creator ops & culture: vibe coding anniversaries, shipping tempo, and solo-builder economics

Discourse-level signals (not product releases): ‘vibe coding’ as a one-year-old meme-to-practice, shipping cadence hype, and creator self-ops talk (tool minimalism, workload rhythms).

Vibe coding hits its one-year mark, and creators are already revising the myth

Vibe coding (culture): The phrase “vibe coding” is being called out as only about one year old, but the way people talk about it has already shifted, from playfully speed-running prototypes to acknowledging the unglamorous finishing work, as noted in the one-year reflection in Vibe coding milestone and echoed by builders reacting “vibe coding is 1 year old?” in Creator reaction. The point is that prototype velocity is now assumed; the differentiator is still shipping and maintenance.

A sharper critique is emerging that frames vibe coding as “80% in one rainy Sunday,” with the remaining “20%” taking weeks: an explicit reminder that polish, edge cases, deployment, and upkeep still decide whether a project survives, per the Long-tail warning.

Public revenue screenshots turn into a creator-ops playbook signal

Solo-builder economics (creator ops): Following up on pricing metrics (community monetization framing), one builder posts a new screenshot showing $100,373 in 30-day revenue, per the 30-day revenue screenshot, and separately itemizes roughly $150,641 across community, small products, and calls in the Income breakdown. This kind of public ledger is becoming a recruiting and positioning tool as much as a personal flex.

• Revenue stacking pattern: The breakdown mixes a community line item (Tinkerer Club) with several smaller product streams and services, implying “many small pipes” rather than one breakout app Income breakdown.

A phone detox move: deleting Claude and ChatGPT to reduce “always-on” prompting

Tool minimalism (habit): One creator explicitly deletes the Claude and ChatGPT phone apps as a discipline move—framing it as reducing compulsive, always-on prompting and keeping AI use more intentional Deleted apps post. It lands right next to the relatable pattern of “I’m going to sleep” followed by late-night sessions with Claude Late-night Claude habit.

February becomes a shared “AI shipping” tempo cue

Shipping cadence (creator culture): A simple line—“Feb is the month of AI shipping”—is getting used as a communal pacing signal: more public builds, more releases, more “post what you made” energy Shipping month cue. It’s less about any one tool update and more about creators aligning on the same sprint window.

The “perfect month” calendar meme reinforces the same vibe by treating February 2026 as a clean, bounded challenge space for output Perfect month meme.

Creators start slotting “AI” into a two-year hype-cycle theory

Cycle talk (market mood): A speculative framework is spreading that assigns ~two-year lifespans to creator/internet cycles—NFTs (2021–2023), memecoins (2023–2025), AI (2025–2027)—and asks what follows Two-year cycle claim. It’s not evidence of a tool shift, but it does shape how creators talk about timing: when to go all-in vs when to diversify.

The post is assertion-heavy with no data attached, so treat it as sentiment mapping—not forecasting.

File-first culture push: Obsidian over “cute notion dashboards”

Tooling identity (notes as ops): A small but telling cultural jab, mocking "cute lil notion dashboard" behavior, signals a renewed preference for file-first, local-ish knowledge bases (Obsidian) over polished dashboard aesthetics Obsidian jab. The screenshot itself reads as an identity statement: separate vaults (e.g., "kitzsidian", "botsidian") imply personal ops and agent ops living side by side Obsidian jab.

🗓️ Deadlines & programs: contests, hiring calls, and creator opportunities

Event-driven opportunities today skew toward creator competitions and hiring, plus smaller program announcements. This is where ‘apply/submit by X date’ items live.

Luma Dream Brief opens a $1M “no client approvals” ad competition (deadline March 22)

Luma Dream Brief (LumaLabsAI): Luma is running a $1M global competition to produce “the unmade advertising idea” creators have been sitting on—positioned explicitly as no client, no approvals, with submissions due March 22, as framed in the Competition announcement and reiterated in the Deadline reminder.

• What entrants are pitching: The hook is that “the suits never got the idea… ‘too risky’… make it anyway,” which signals the brief is optimized for directors and motion designers who want to execute a spec without stakeholder loops, as stated in the Competition announcement.

• Why this matters operationally: The prize size plus a fixed deadline is likely to concentrate a lot of short-form “ad film” experiments into the next ~7 weeks, with Luma as the production substrate—see the framing in Deadline reminder.
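The "~7 weeks" figure holds up as back-of-envelope arithmetic. The sketch below assumes the deadline is March 22, 2026 and that the issue went out around February 3, 2026; neither the year nor the issue date is stated in the posts.

```python
from datetime import date

# Assumption: issue date of roughly Feb 3, 2026 (not stated in the source).
ISSUE_DATE = date(2026, 2, 3)
# Deadline from the announcement; the year 2026 is inferred, not stated.
DEADLINE = date(2026, 3, 22)

def weeks_until(start: date, end: date) -> float:
    """Return the calendar span between two dates, measured in weeks."""
    return (end - start).days / 7

days_left = (DEADLINE - ISSUE_DATE).days
print(days_left, round(weeks_until(ISSUE_DATE, DEADLINE), 1))  # 47 6.7
```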

Kling says it’s still hiring and dangles early access as a perk

Kling (Kling_ai): Kling posted that it's "still hiring," highlighting "exclusive early access to what we're building" as a perk for people who join, per the Hiring post. The tweet doesn't specify roles, location, or team needs, so the concrete takeaway is the ongoing hiring signal plus the implied advantage of being inside the product pipeline early.

Claire Silver closes entries and promises a winners/finalists breakdown thread

Claire Silver (contest cycle): ClaireSilver12 announced entries are closed and said a detailed thread covering winners and finalists (plus showcase/prizes) is coming “in a week or two,” according to the Entries closed update. This is a straightforward schedule update—no new submission mechanics, just the next checkpoint and expected timeline, as described in Entries closed update.

Hailuo’s 48-hour Nano challenge ends; winners notified via DMs

Hailuo (Hailuo_AI): Hailuo posted that its 48-hour limited challenge is over and that DMs have been sent to winners, per the Challenge ended notice. The original promo mechanics referenced “50 free Ultra memberships,” but today’s update is purely administrative: the submission window is closed and next steps are via DM, as confirmed in Challenge ended notice.

Promise AI highlights hybrid film workflows at UCLA’s AI Summit panel

Promise AI (industry panel): Promise’s VP of Product, Mariana Acuña Acosta, appeared on a UCLA AI Summit panel about “unlocking new stories,” with the framing centered on hybrid workflows that combine traditional filmmaking craft with GenAI, as posted in the Panel recap.

The tweet is more about industry positioning than new tooling specifics (no pipeline steps or product features cited), but it’s a clear signal that hybrid production is being discussed in formal filmmaking contexts, as shown in Panel recap.

The Residency spotlights resident-run hackathons in San Francisco

The Residency (community program): A Residency founder account shouted out a specific resident and noted residents are hosting hackathons in San Francisco, per the Residency shoutout. There aren’t dates, application links, or an event calendar in the tweet itself, but it’s a direct “program is active” signal for builders who track IRL creator meetups around AI production, as stated in Residency shoutout.

Luma announces a Razer gaming peripherals giveaway

Luma (LumaLabsAI): Luma posted an additional creator incentive—“earn your chance to win gaming peripherals” with Razer—according to the Razer giveaway note. The tweet doesn’t include eligibility rules, deadlines, or entry steps on its own, so the only concrete artifact today is the existence of the giveaway and brand partner, as stated in Razer giveaway note.

🎧 Sound layer: music glue, SFX, and AI-first audio habits inside film pipelines

Audio isn’t the main volume today, but it’s present as the ‘glue’ in AI films: soundtrack generation, SFX-forward clips, and creators crediting music tools as part of the stack.

Suno keeps showing up as the soundtrack layer in character-reel pipelines

Suno (Suno): A new character-reel stack explicitly lists Suno (music) alongside Midjourney + Nano Banana Pro + Kling 2.5 (animation), reinforcing the pattern that music generation is being treated as a first-class layer in short-form AI character production, as shown in Tool stack callout.

The creator frames it as a “new format” character intro (persona + micro-acting beats), with the audio credit embedded directly in the tool list rather than treated as post-only.

ElevenLabs v3 voice model is out of alpha

ElevenLabs v3 (ElevenLabs): The v3 model’s alpha tag has been removed—multiple posts explicitly frame this as the “full version” release, not a limited alpha preview, as stated in Turkish release note and reiterated in Out of alpha note. This matters for creators relying on AI voice in production because “out of alpha” typically signals fewer breaking changes and more predictable behavior, but no concrete changelog details (quality, latency, pricing, languages) are provided in these tweets.

Vidu Q3 creators are highlighting SFX as the joke carrier

Vidu Q3 (Vidu): A short Vidu Q3 share explicitly calls out that "those SFX are killing me," illustrating a creator habit of treating sound-effect timing as the primary comedic driver in micro-clips rather than a polish pass, per SFX shoutout clip.

The clip itself is minimal visually (a fast, vibrating spin), which puts pressure on SFX cadence to sell the beat—useful signal for anyone shipping short comedic loops where the visual motion is simple but the audio carries impact.

Diegetic singing shows up as a worldbuilding tool in Genie clips

Genie (Google/DeepMind): A ~3-minute Genie scene leans on in-world performance—“miners pass the time singing blues”—as the storytelling device, as described in Diegetic singing premise.

It’s a useful creative signal: as world-model clips get longer than 10–15 seconds, creators are using diegetic audio moments (songs, chants, on-location performances) to create structure and pacing without needing complex plot beats.

Grok + Genie visuals get packaged as a “music video” edit format

Grok + Genie (xAI + Google/DeepMind): A “Metal Monday” post frames a ~2m22s cut as a first music video assembled from a mix of Grok and Genie clips, with an Asteroids-like visual theme, as described in Music video experiment.

The main creative takeaway is format-level: the music video label is being used as permission to collage heterogeneous AI shots into a coherent piece, where rhythm and repetition can cover for continuity gaps.

Motion Control is getting positioned as a music-video workflow primitive

Kling Motion Control (Kling/Higgsfield surface): A resource drop explicitly labels "Use motion control to create stunning music videos," suggesting that music-video assembly (repeatable performance motion plus consistent character shots) is a primary use case being pitched for Motion Control, as linked in Music video motion control link. The tweet itself includes no settings, but the positioning is clear: Motion Control as a creator-friendly way to lock choreography while swapping looks.

🛡️ Trust, disclosure, and the ‘indistinguishable’ problem in creative AI

A smaller but important slice: concerns about who controls AI education, how ‘authentic’ AI ads work, and photoreal generation recipes that raise provenance/consent questions. This is policy-and-norms, not tool tutorials.

“Indistinguishable real-life images” prompts are becoming normal social content

Photoreal prompting norms: A creator explicitly markets a Nano Banana Pro prompt as a way to generate “indistinguishable real-life images,” sharing a long, structured JSON spec for a beach-at-night smartphone selfie with detailed constraints (including “no mirror selfie,” “no mirrored text,” and a heavy negative prompt list), as shown in the indistinguishable prompt post. This follows the same deepfake-capable prompting trajectory seen in Likeness prompts, but with more “how-to” packaging via a public prompt share page described in the prompt share link.

The speed of normalization is the signal.

500M ad-impression study: AI creatives match CTR when audiences can’t tell

AI ads performance (Taboola Realize): A shared summary claims researchers from Columbia, Harvard, and Carnegie Mellon analyzed 500M ad impressions (and ~3M clicks) on Taboola's Realize platform and found that AI-generated ads can reach click-through rates comparable to human-made creatives when users can't distinguish the origin, as stated in the study summary poster. It frames "authenticity" as perception-led (human faces, familiar formats) rather than disclosure-led.

This is a trust problem, not a rendering problem.
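For scale, the summary's rounded figures imply a pooled click-through rate of about 0.6%. A minimal sketch of that arithmetic follows. The ~3M click count is rounded in the source, and the result pools all creatives together, so it says nothing about the AI-versus-human comparison the study reports.

```python
# Rounded figures from the shared summary.
IMPRESSIONS = 500_000_000
CLICKS = 3_000_000  # "~3M": rounded, so treat the result as approximate

def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate = clicks / impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions

print(f"{ctr(CLICKS, IMPRESSIONS):.2%}")  # 0.60%
```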

Sundance’s Google.org-funded AI training plan triggers “incentive design” backlash

Sundance Collab (Sundance) + Google.org (Google): A circulated poster claims Sundance will “train 100,000 filmmakers in AI” via a 3‑year, “product agnostic” program funded by a $2M Google.org investment, delivered through Sundance Collab, as shown in the Sundance AI training poster. The pushback is less about “AI literacy” and more about capture dynamics—corporate-funded curriculum shaping norms, tool preference, and long-term dependency, as argued in the Sundance AI training poster.

The core concern is governance. Who sets defaults matters.
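The poster's headline numbers also allow a rough per-person estimate. The sketch below assumes the $2M is spread evenly across all 100,000 trainees and all 3 years, which the poster does not say.

```python
# Headline figures from the circulated poster.
FUNDING_USD = 2_000_000
TRAINEES = 100_000
YEARS = 3

# Assumption: even split across trainees and years (not stated in the poster).
per_trainee = FUNDING_USD / TRAINEES
per_trainee_per_year = per_trainee / YEARS
print(per_trainee, round(per_trainee_per_year, 2))  # 20.0 6.67
```

At roughly $20 per filmmaker, one reading is that any influence would come mainly through shared curriculum and defaults rather than per-trainee spend.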

Claimed Disney+ plan: user-generated 30-second franchise videos “powered by Sora”

Disney+ (Disney) + Sora (OpenAI): One post claims Disney+ will introduce AI-generated content in September, letting members generate 30‑second videos using characters from Star Wars/Pixar/Disney “all powered by Sora,” per the Disney+ claim image. The post provides no product specs (watermarking, approval workflow, dataset licensing), so the provenance and rights model remain unclear from today’s evidence.

If real, it’s a platform-level authorship shift.

OnlyFans acquisition chatter spotlights Fanvue as a synthetic-creator outlet

Synthetic creator monetization (Fanvue vs OnlyFans): Deal chatter around OnlyFans (reported as “exclusive talks” at a $5.5B enterprise value) is being used as a proxy for how close “AI creator” content is to mainstream platform risk, as shown in the Axios deal screenshot. In replies, creators claim OnlyFans doesn’t allow certain fully synthetic setups “(yet)” while Fanvue does, and that successful AI models already exist there, as argued in the Fanvue disclosure comment and echoed in the IG Reels shift note.

The disclosure tension is the product.
